Assessment of Accuracy in Unmanned Aerial Vehicle (UAV) Pose Estimation with the REAL-Time Kinematic (RTK) Method on the Example of DJI Matrice 300 RTK

Abstract

1. Introduction

- Uncertainty in vehicle dynamics and limited precision in following commands,

- Uncertainty in knowledge of the environment, including obstacles in it,

- Unpredictability of factors in the operating environment,

- Uncertainty of position information.

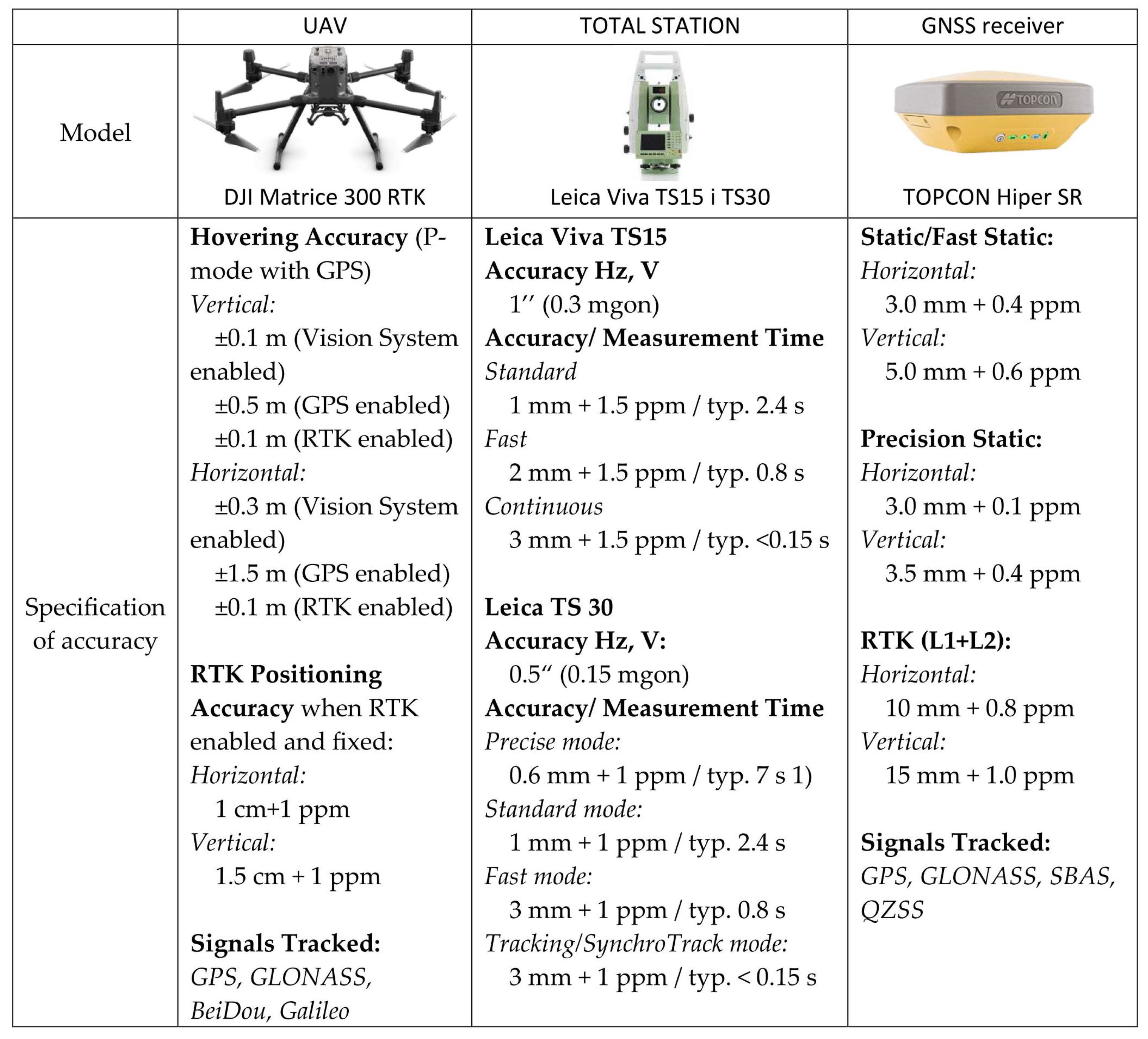

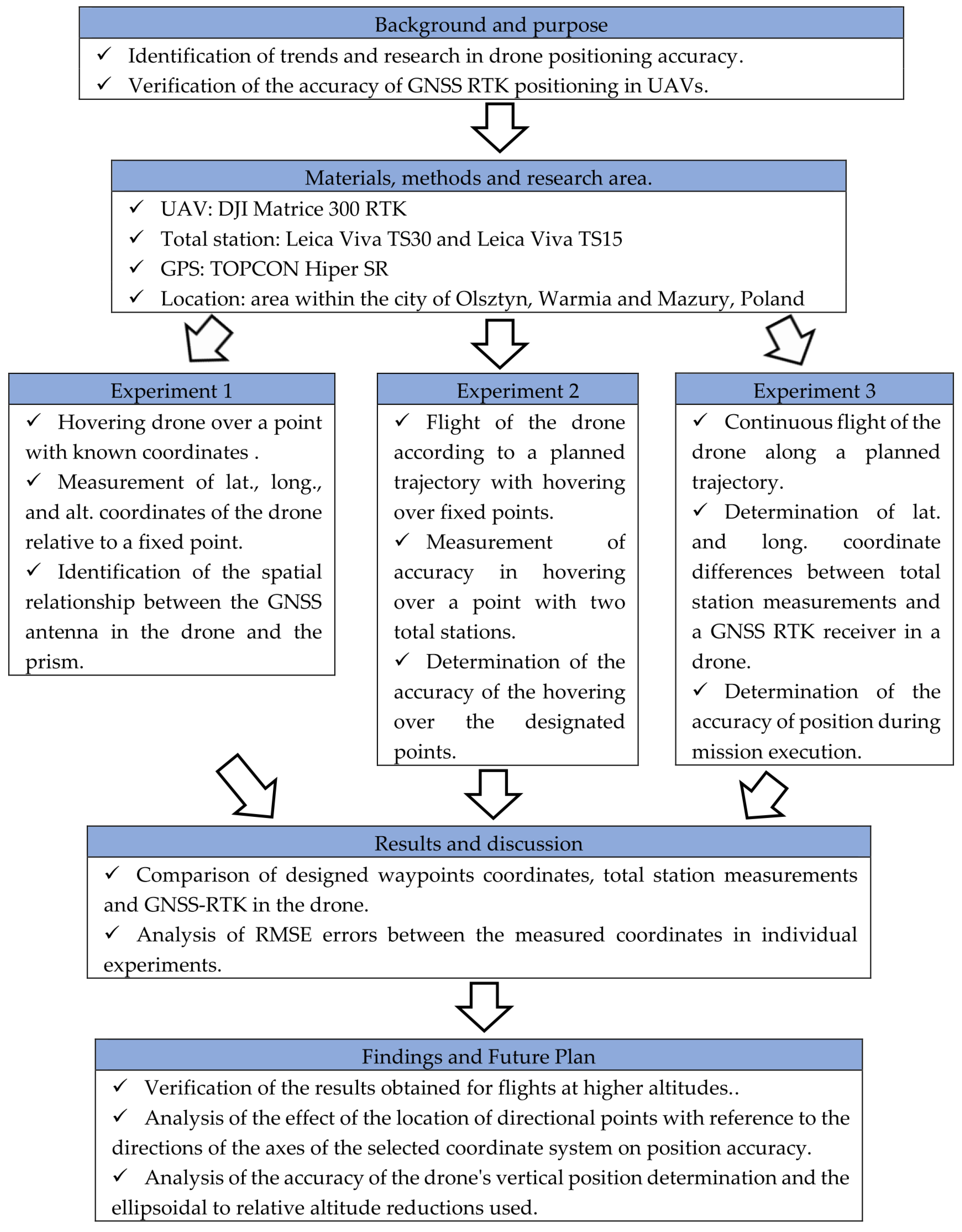

2. Materials and Methods

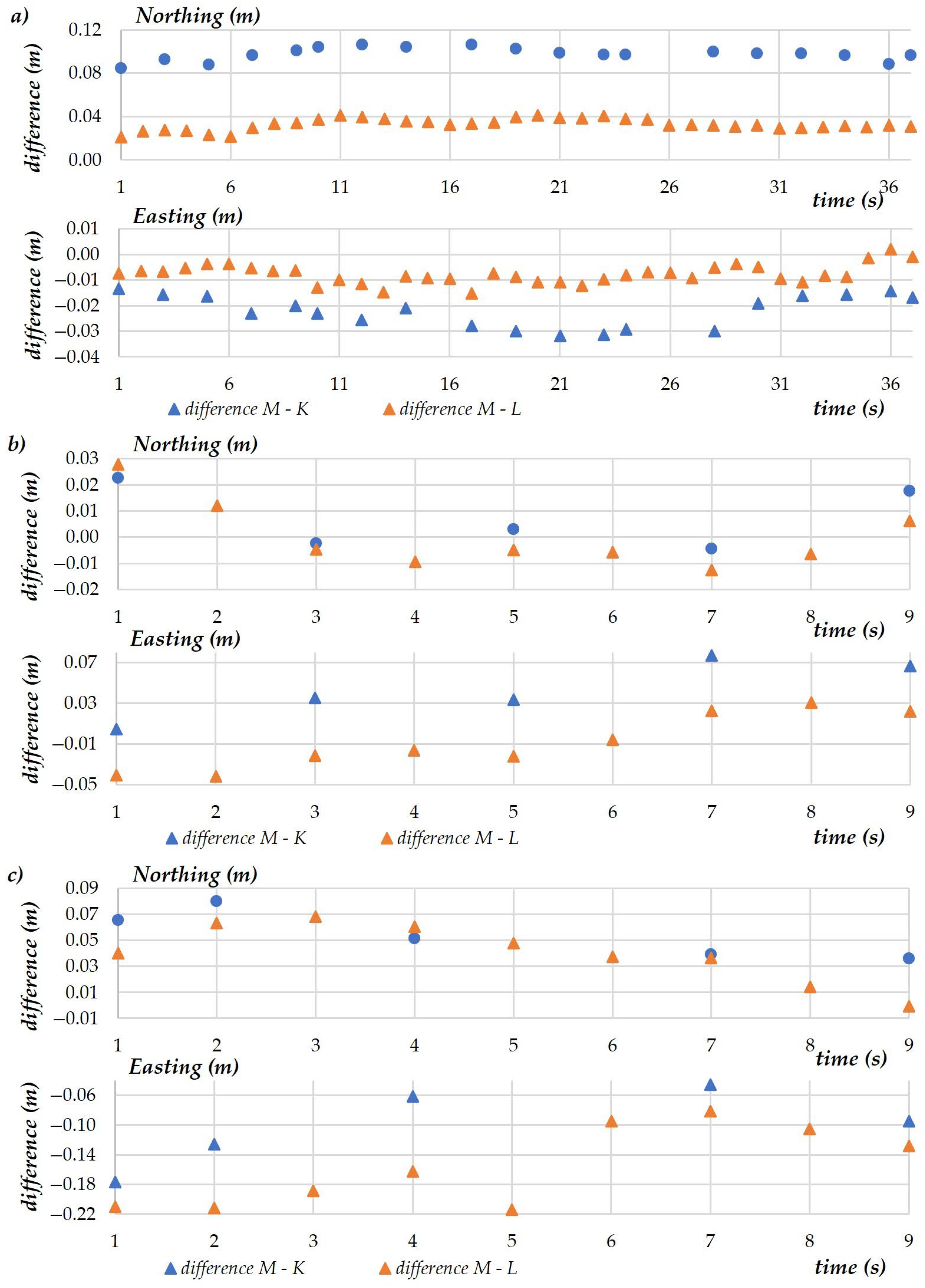

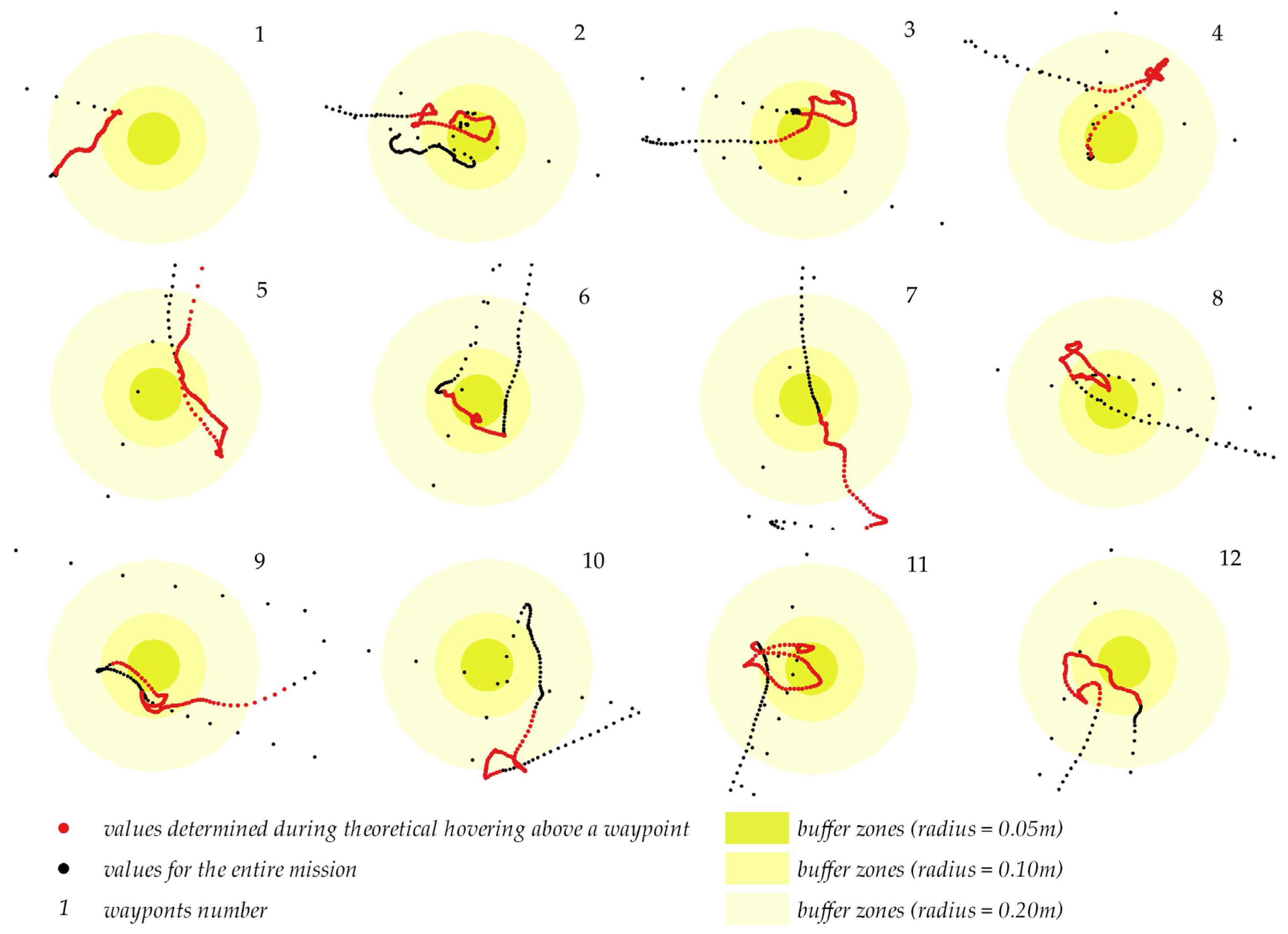

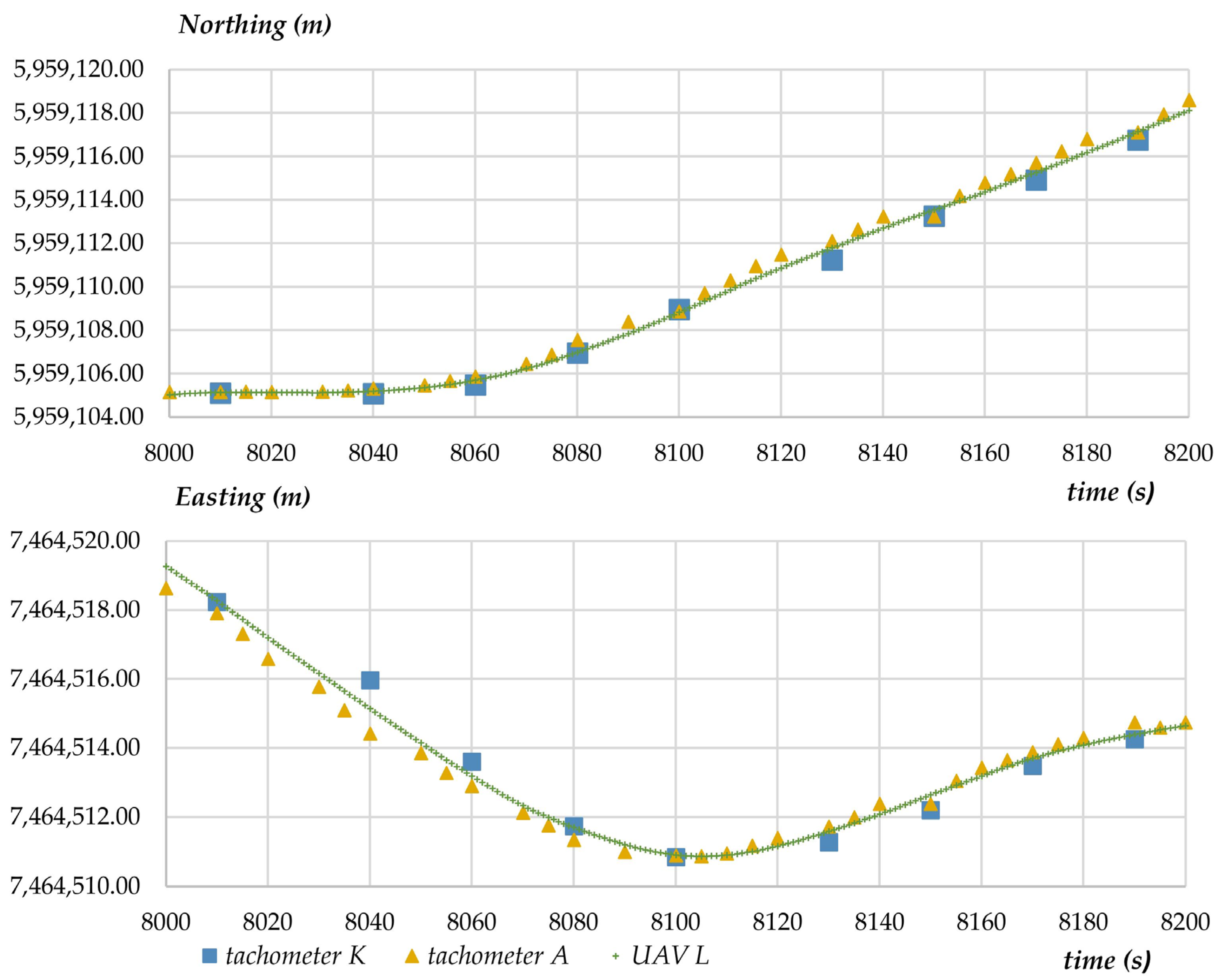

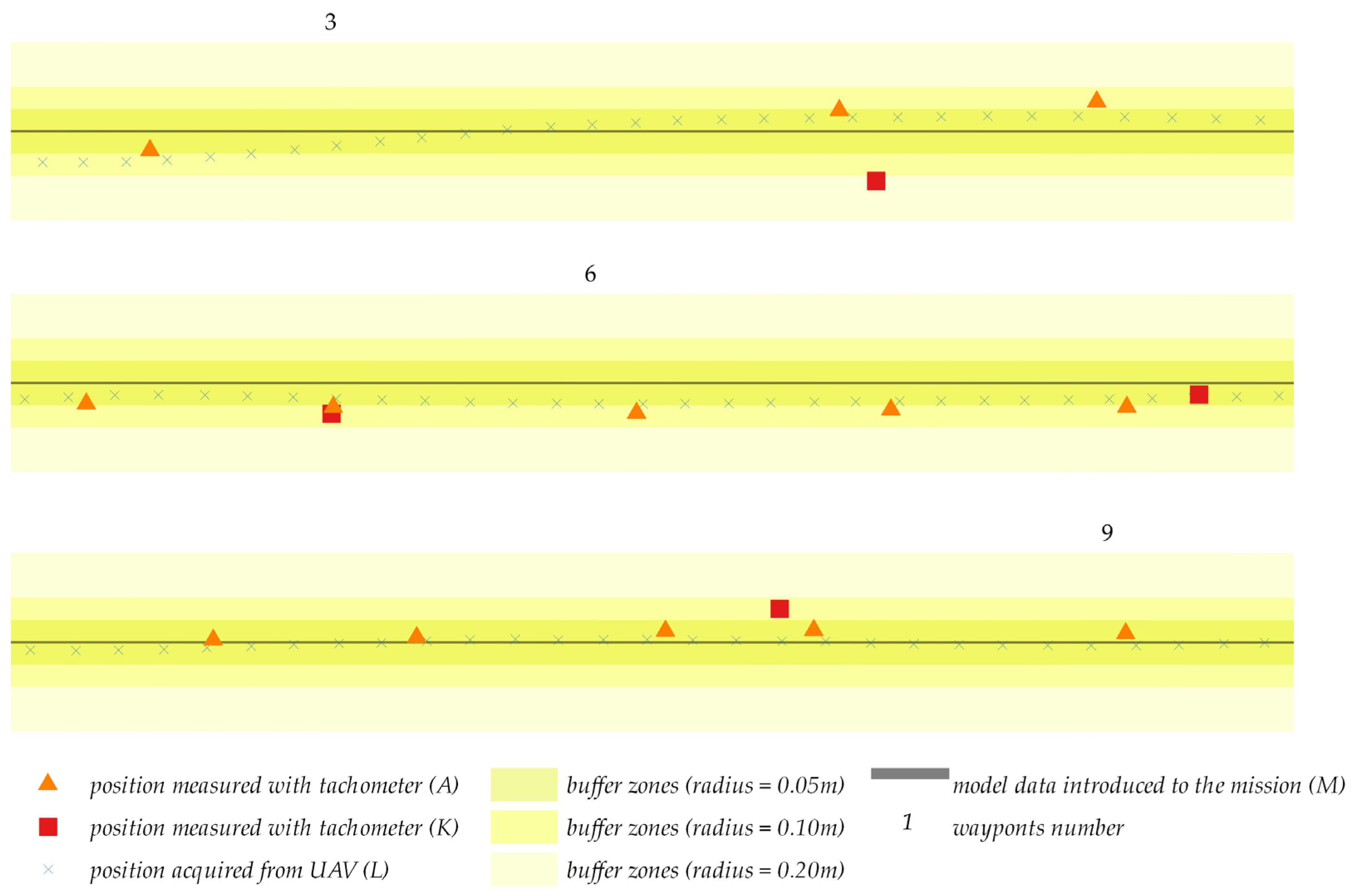

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Tacheometer K | | UAV L | | Differences K−L (m) | |
|---|---|---|---|---|---|---|
| | Northing (m) | Easting (m) | Northing (m) | Easting (m) | Northing | Easting |
| Minimum | 5,959,078.56 | 7,464,512.84 | 5,959,078.63 | 7,464,512.83 | −0.06 | 0.02 |
| Maximum | 5,959,078.59 | 7,464,512.86 | 5,959,078.65 | 7,464,512.85 | −0.07 | 0.02 |
| Mean | 5,959,078.57 | 7,464,512.85 | 5,959,078.64 | 7,464,512.84 | −0.06 | 0.01 |
| Std deviation | 0.01 | 0.01 | 0.01 | 0.00 | − | − |
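The statistics in the coordinate tables can be reproduced from paired tacheometer (K) and UAV (L) readings. The sketch below is a minimal illustration, assuming each K reading is paired with one L reading of the same epoch; the coordinate lists and the helper names (`summarize`, `k_minus_l`, `extremes_by_magnitude`) are hypothetical and are not the observations behind the tables. In the tables the listed minimum and maximum K−L differences appear to be ranked by absolute value (for example, −0.06 is listed as the minimum and −0.07 as the maximum northing difference), so the sketch ranks differences the same way. Whether the sample (n − 1) or population standard deviation was used is not stated; the sketch assumes the sample form.

```python
import statistics

# Hypothetical paired epochs in metres, (northing, easting). Illustrative only:
# these are NOT the observations behind the tables.
TACHEOMETER_K = [(5959078.56, 7464512.84), (5959078.58, 7464512.85), (5959078.59, 7464512.86)]
UAV_L = [(5959078.63, 7464512.83), (5959078.64, 7464512.84), (5959078.65, 7464512.84)]


def summarize(values):
    """Minimum, maximum, mean and sample (n - 1) standard deviation (assumed form)."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": statistics.mean(values),
        "std": statistics.stdev(values),
    }


def k_minus_l(k_points, l_points):
    """Per-epoch K - L differences in northing and easting."""
    d_north = [k[0] - l[0] for k, l in zip(k_points, l_points)]
    d_east = [k[1] - l[1] for k, l in zip(k_points, l_points)]
    return d_north, d_east


def extremes_by_magnitude(diffs):
    """Smallest and largest difference by absolute value, sign preserved."""
    return min(diffs, key=abs), max(diffs, key=abs)


d_north, d_east = k_minus_l(TACHEOMETER_K, UAV_L)
north_stats = summarize([p[0] for p in TACHEOMETER_K])
mean_north = statistics.mean(d_north)
small_north, large_north = extremes_by_magnitude(d_north)

# Per-coordinate statistics for each instrument, rounded to centimetres.
for label, series in (("K northing", [p[0] for p in TACHEOMETER_K]),
                      ("K easting", [p[1] for p in TACHEOMETER_K]),
                      ("L northing", [p[0] for p in UAV_L]),
                      ("L easting", [p[1] for p in UAV_L])):
    s = summarize(series)
    print(f"{label}: min {s['min']:.2f} max {s['max']:.2f} "
          f"mean {s['mean']:.2f} std {s['std']:.2f}")

print(f"K-L northing: min {small_north:.2f} max {large_near:.2f} mean {mean_north:.2f}"
      if False else
      f"K-L northing: min {small_north:.2f} max {large_north:.2f} mean {mean_north:.2f}")
```

Because the mean difference is computed from unrounded per-epoch values, it need not equal the difference of the two rounded means printed in a table, which may explain small mismatches of about 0.01 m between the "Mean" columns and the K−L column.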

| | Tacheometer K | | UAV L | | Differences K−L (m) | |
|---|---|---|---|---|---|---|
| | Northing (m) | Easting (m) | Northing (m) | Easting (m) | Northing | Easting |
| Minimum | 5,959,078.59 | 7,464,512.81 | 5,959,078.64 | 7,464,512.80 | −0.05 | 0.01 |
| Maximum | 5,959,078.64 | 7,464,512.83 | 5,959,078.71 | 7,464,512.84 | −0.07 | −0.01 |
| Mean | 5,959,078.61 | 7,464,512.82 | 5,959,078.68 | 7,464,512.83 | −0.06 | −0.01 |
| Std deviation | 0.05 | 0.01 | 0.03 | 0.01 | − | − |

| | Tacheometer K | | UAV L | | Differences K−L (m) | |
|---|---|---|---|---|---|---|
| | Northing (m) | Easting (m) | Northing (m) | Easting (m) | Northing | Easting |
| Minimum | 5,959,078.72 | 7,464,512.75 | 5,959,078.75 | 7,464,512.76 | −0.04 | −0.01 |
| Maximum | 5,959,078.85 | 7,464,512.79 | 5,959,078.88 | 7,464,512.83 | −0.04 | −0.04 |
| Mean | 5,959,078.77 | 7,464,512.78 | 5,959,078.83 | 7,464,512.79 | −0.05 | −0.01 |
| Std deviation | 0.05 | 0.02 | 0.05 | 0.02 | − | − |
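The coordinate tables report northing and easting differences separately. One common way to condense a pair of differences into a single figure is the horizontal offset √(ΔN² + ΔE²); the tables do not report this quantity, so the sketch below is illustrative only. It takes the "Mean" K−L values from the three coordinate tables, which, judging by their standard deviations, appear to correspond to the 1.5 m, 5 m and 10 m altitudes of the summary table. The dictionary keys and the helper `horizontal_offset` are hypothetical names.

```python
import math

# Mean K - L differences (northing, easting) in metres, copied from the
# "Mean" rows of the three coordinate tables, in the order they appear.
MEAN_DIFFERENCES = {
    "table 1": (-0.06, 0.01),
    "table 2": (-0.06, -0.01),
    "table 3": (-0.05, -0.01),
}


def horizontal_offset(d_north, d_east):
    """Planar distance between the K and L positions: sqrt(dN^2 + dE^2)."""
    return math.hypot(d_north, d_east)


offsets = {name: horizontal_offset(*d) for name, d in MEAN_DIFFERENCES.items()}
for name, value in offsets.items():
    print(f"{name}: {value:.3f} m")
```

Because the northing term dominates in every case, the horizontal offset stays at roughly 5–6 cm, which suggests that the consistently negative northing difference, rather than the easting difference, drives the horizontal disagreement between the two instruments.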

| | UAV (L) | | Tacheometer (K) | |
|---|---|---|---|---|
| | Northing (m) | Easting (m) | Northing (m) | Easting (m) |
| Altitude: 1.5 m | | | | |
| Difference (m) | 0.02 | 0.02 | 0.02 | 0.02 |
| Standard deviation (m) | 0.01 | 0.01 | 0.01 | 0.00 |
| Altitude: 5 m | | | | |
| Difference (m) | 0.04 | 0.03 | 0.07 | 0.04 |
| Standard deviation (m) | 0.05 | 0.01 | 0.03 | 0.01 |
| Altitude: 10 m | | | | |
| Difference (m) | 0.13 | 0.04 | 0.13 | 0.07 |
| Standard deviation (m) | 0.05 | 0.02 | 0.05 | 0.02 |

| | Experiment 1 | | Experiment 2 | | Experiment 3 | |
|---|---|---|---|---|---|---|
| | Northing (m) | Easting (m) | Northing (m) | Easting (m) | Northing (m) | Easting (m) |
| Min difference (m) | −0.050 | 0.010 | −0.090 | −0.057 | 0.010 | 0.010 |
| Max difference (m) | −0.070 | 0.010 | 0.181 | 0.165 | 1.130 | 1.347 |
| Standard deviation (m) | 0.05 | 0.03 | 0.040 | 0.070 | 0.313 | 0.357 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Czyża, S.; Szuniewicz, K.; Kowalczyk, K.; Dumalski, A.; Ogrodniczak, M.; Zieleniewicz, Ł. Assessment of Accuracy in Unmanned Aerial Vehicle (UAV) Pose Estimation with the REAL-Time Kinematic (RTK) Method on the Example of DJI Matrice 300 RTK. Sensors 2023, 23, 2092. https://doi.org/10.3390/s23042092


Chicago/Turabian Style: Czyża, Szymon, Karol Szuniewicz, Kamil Kowalczyk, Andrzej Dumalski, Michał Ogrodniczak, and Łukasz Zieleniewicz. 2023. "Assessment of Accuracy in Unmanned Aerial Vehicle (UAV) Pose Estimation with the REAL-Time Kinematic (RTK) Method on the Example of DJI Matrice 300 RTK" Sensors 23, no. 4: 2092. https://doi.org/10.3390/s23042092
