A YOLOX-Based Automatic Monitoring Approach of Broken Wires in Prestressed Concrete Cylinder Pipe Using Fiber-Optic Distributed Acoustic Sensors

Abstract

1. Introduction

2. Fundamentals and Methods

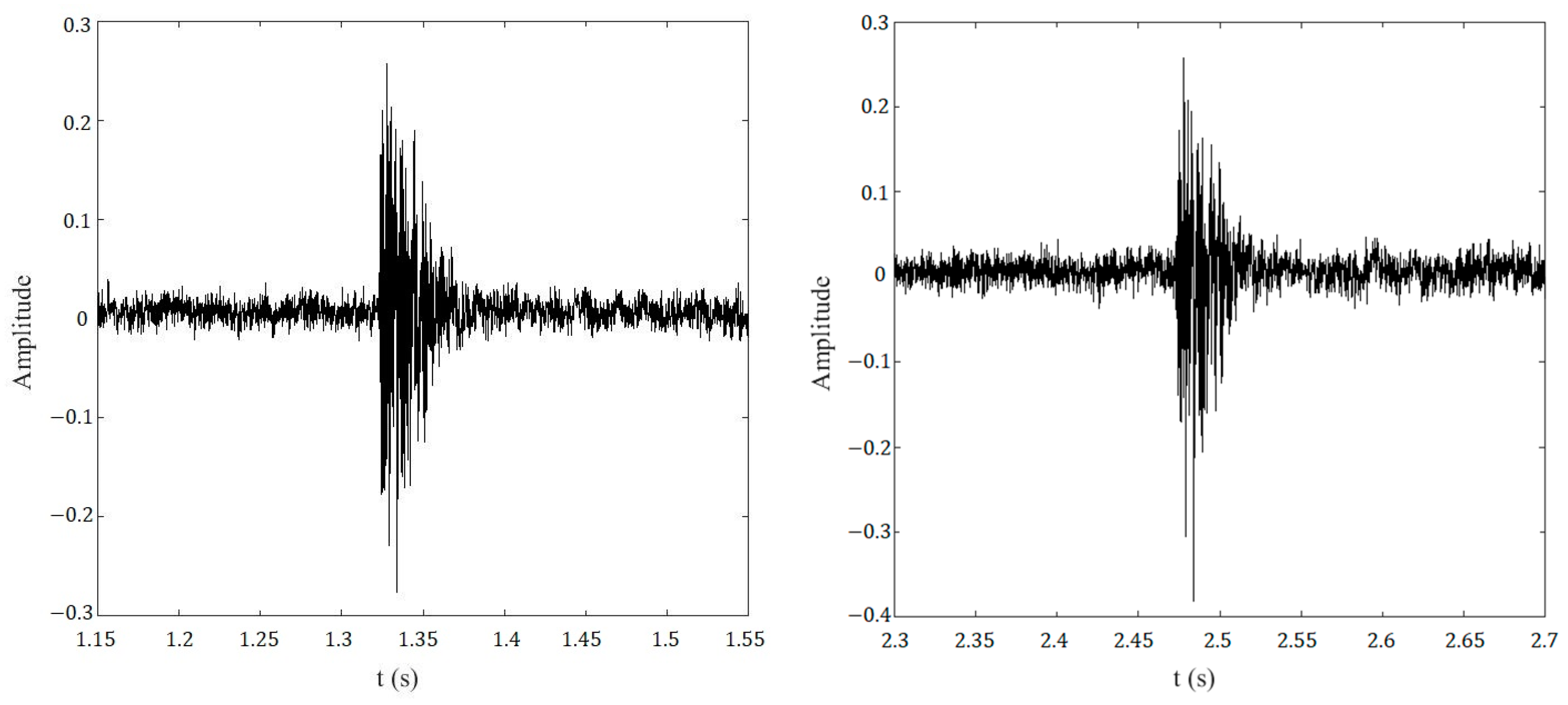

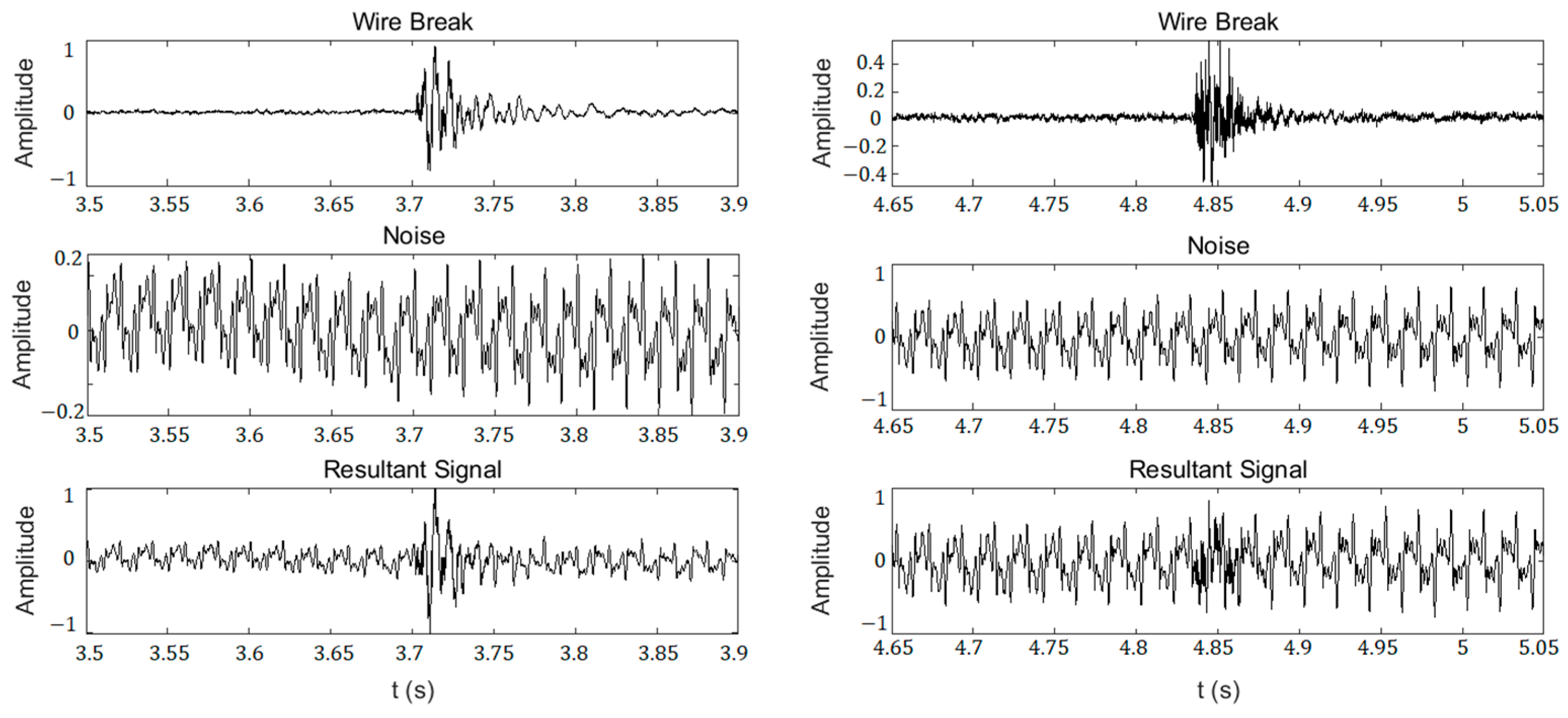

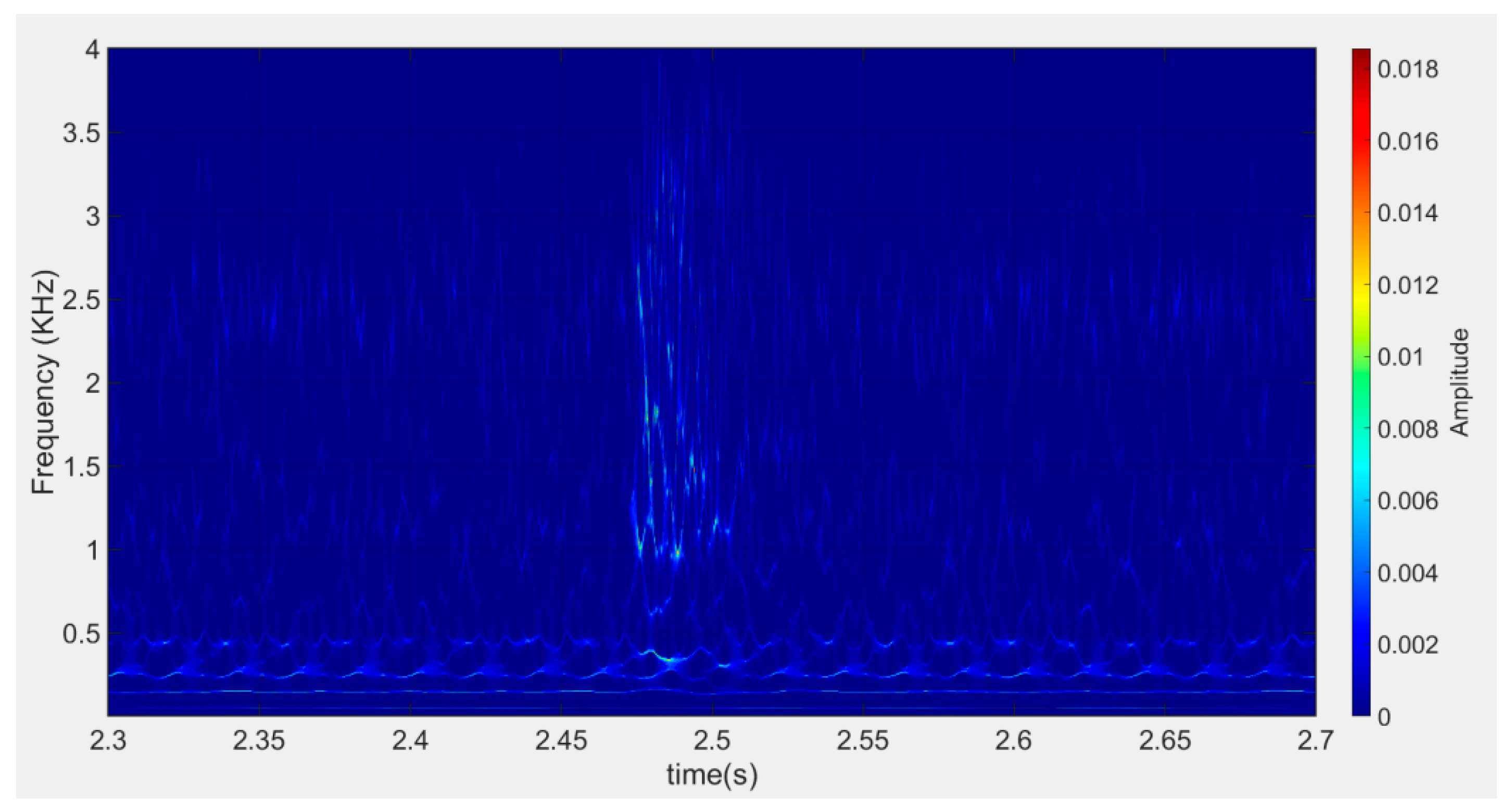

2.1. Acoustic Signal Processing for Wire Breaks

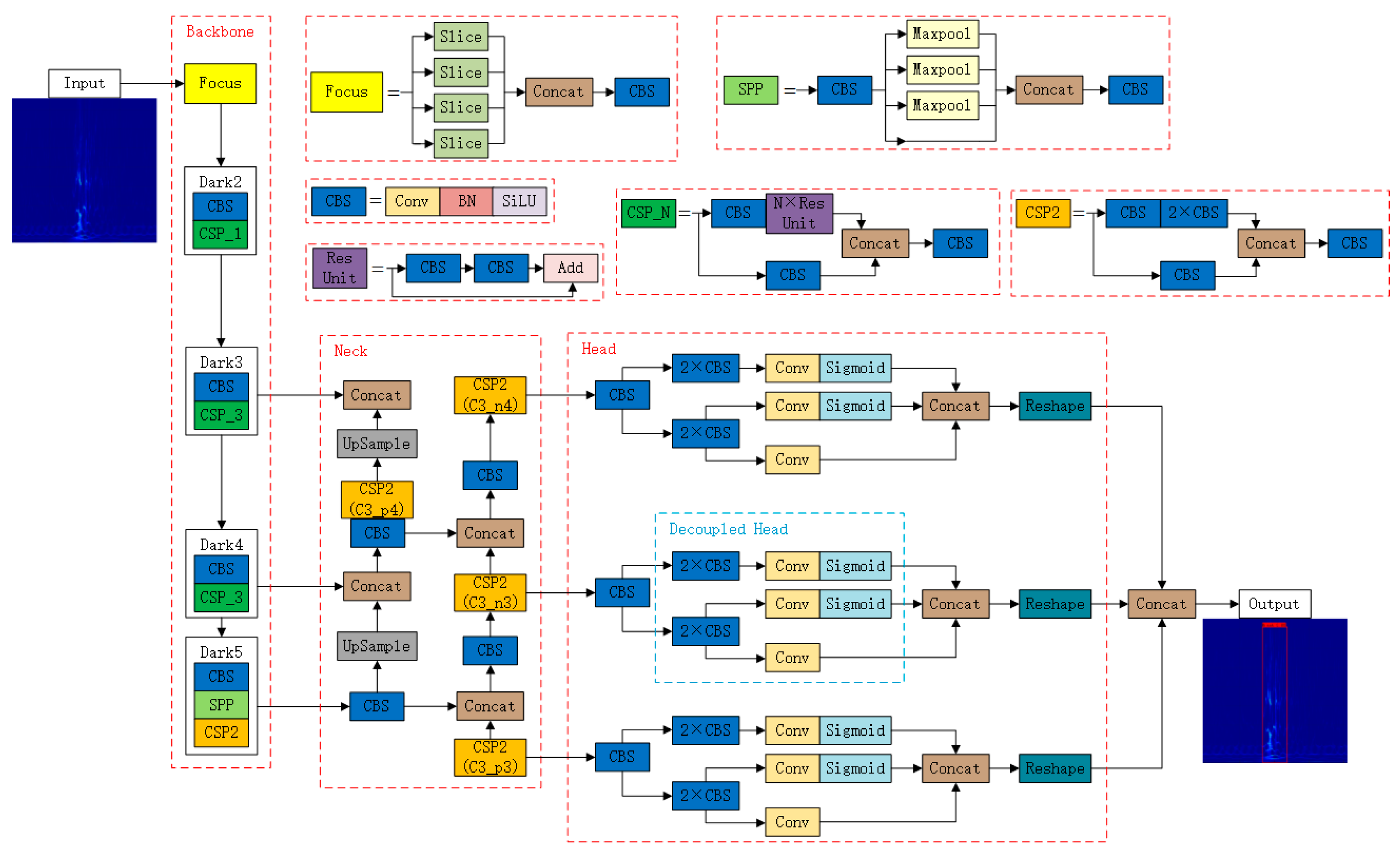

2.2. Neural Network Architecture

2.3. Pruning Algorithm for YOLOXs Model

2.4. Fusing Convolution Layers with BN Layers
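At inference time, batch normalization (BN) applies a fixed per-channel affine map using its stored running mean and variance. A BN layer that directly follows a convolution can therefore be folded into that convolution's weights and bias. The pure-Python sketch below shows this standard folding, based on the inference-mode BN formula of Ioffe and Szegedy in the reference list. It is not taken from the authors' code. The function names and the random test data are hypothetical, and a real deployment would apply the same per-channel arithmetic to framework tensors.

```python
import math
import random


def fuse_conv_bn(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold inference-mode batch normalization into the preceding convolution.

    weights[c] is the flattened kernel of output channel c and bias[c] its bias
    (use 0.0 if the conv has no bias). For each channel c:
        scale_c = gamma_c / sqrt(var_c + eps)
        W'_c    = scale_c * W_c
        b'_c    = scale_c * (b_c - mean_c) + beta_c
    """
    fused_w, fused_b = [], []
    for c in range(len(weights)):
        scale = gamma[c] / math.sqrt(var[c] + eps)
        fused_w.append([scale * w for w in weights[c]])
        fused_b.append(scale * (bias[c] - mean[c]) + beta[c])
    return fused_w, fused_b


def conv_at(weights, bias, patch):
    """Conv output of every channel at one position: kernel-patch dot product plus bias."""
    return [sum(w * x for w, x in zip(w_c, patch)) + b_c for w_c, b_c in zip(weights, bias)]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BN using stored running mean and variance."""
    return [g * (v - m) / math.sqrt(s + eps) + b
            for v, g, b, m, s in zip(y, gamma, beta, mean, var)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical layer: 4 filters over a 3x3 kernel with 3 input channels.
    channels, patch_len = 4, 3 * 3 * 3
    weights = [[rng.uniform(-1, 1) for _ in range(patch_len)] for _ in range(channels)]
    bias = [rng.uniform(-1, 1) for _ in range(channels)]
    gamma = [rng.uniform(0.5, 1.5) for _ in range(channels)]
    beta = [rng.uniform(-0.5, 0.5) for _ in range(channels)]
    mean = [rng.uniform(-0.5, 0.5) for _ in range(channels)]
    var = [rng.uniform(0.1, 2.0) for _ in range(channels)]
    patch = [rng.uniform(-1, 1) for _ in range(patch_len)]

    # Conv followed by BN versus the single fused conv.
    reference = batch_norm(conv_at(weights, bias, patch), gamma, beta, mean, var)
    fw, fb = fuse_conv_bn(weights, bias, gamma, beta, mean, var)
    fused = conv_at(fw, fb, patch)
    print(max(abs(a - b) for a, b in zip(reference, fused)))  # only floating-point round-off
```

The fused layer produces the same outputs as the convolution followed by BN, up to floating-point round-off. The BN operation can therefore be dropped from the inference graph.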

3. Brief Test Summary

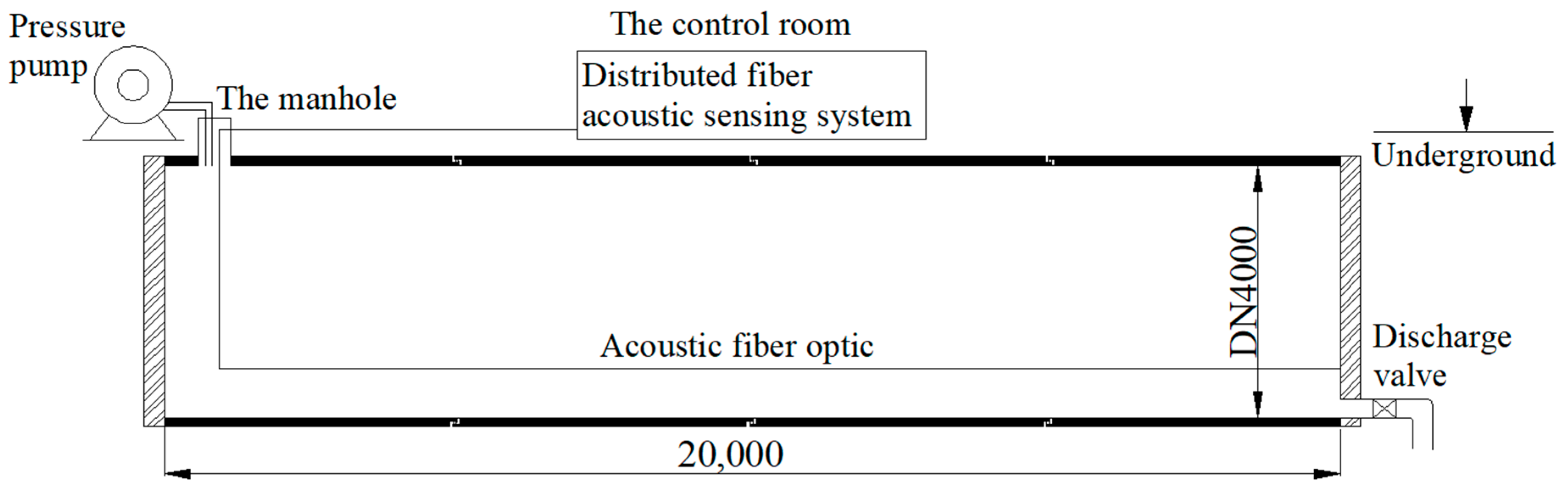

3.1. Wire Break Monitoring Test

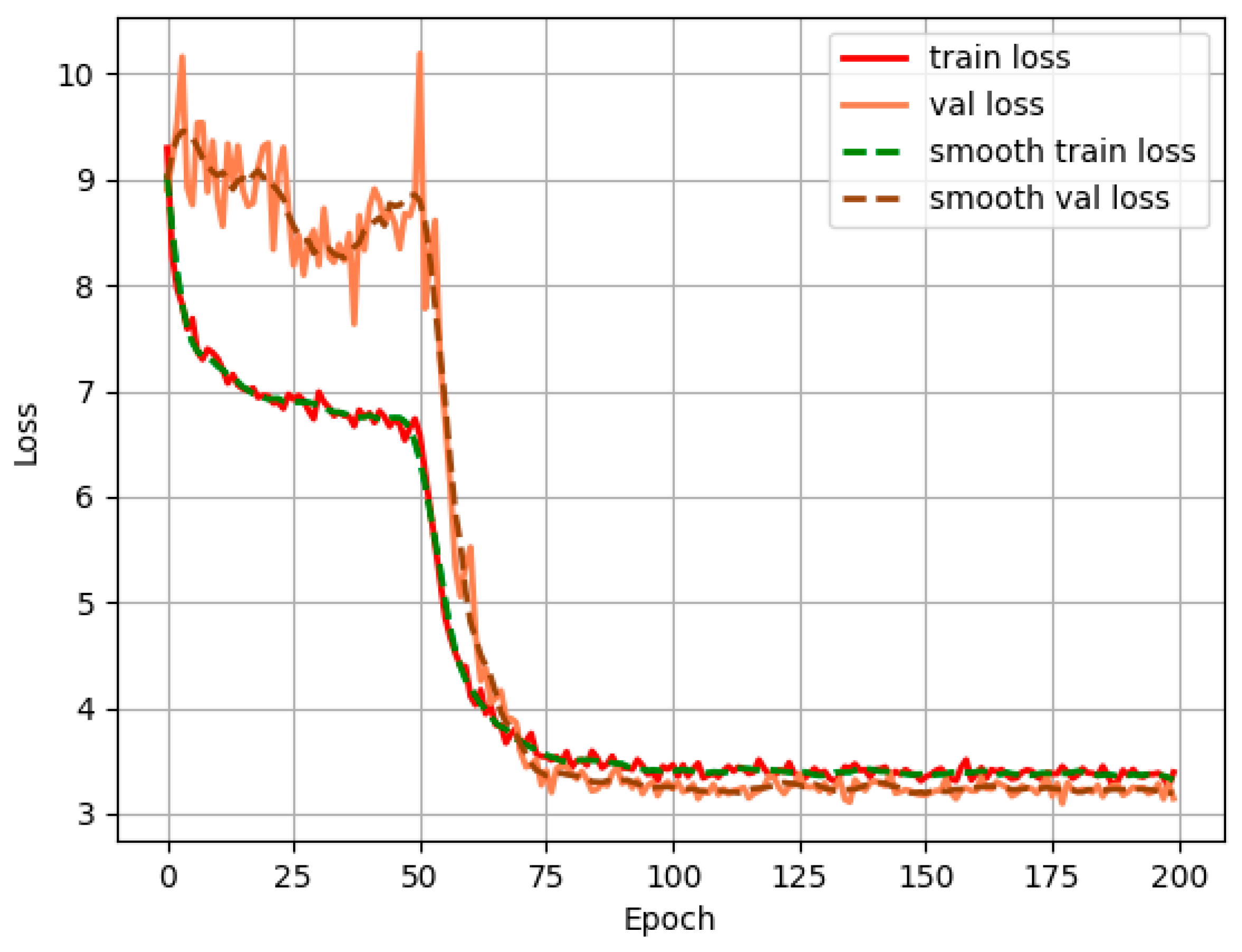

3.2. Network Training

3.2.1. Training Platform

3.2.2. Training Dataset

3.2.3. Fine Tuning YOLOXs

3.2.4. Pruning the YOLOXs Model

4. Results and Discussion

4.1. Evaluation Criteria

4.2. Results

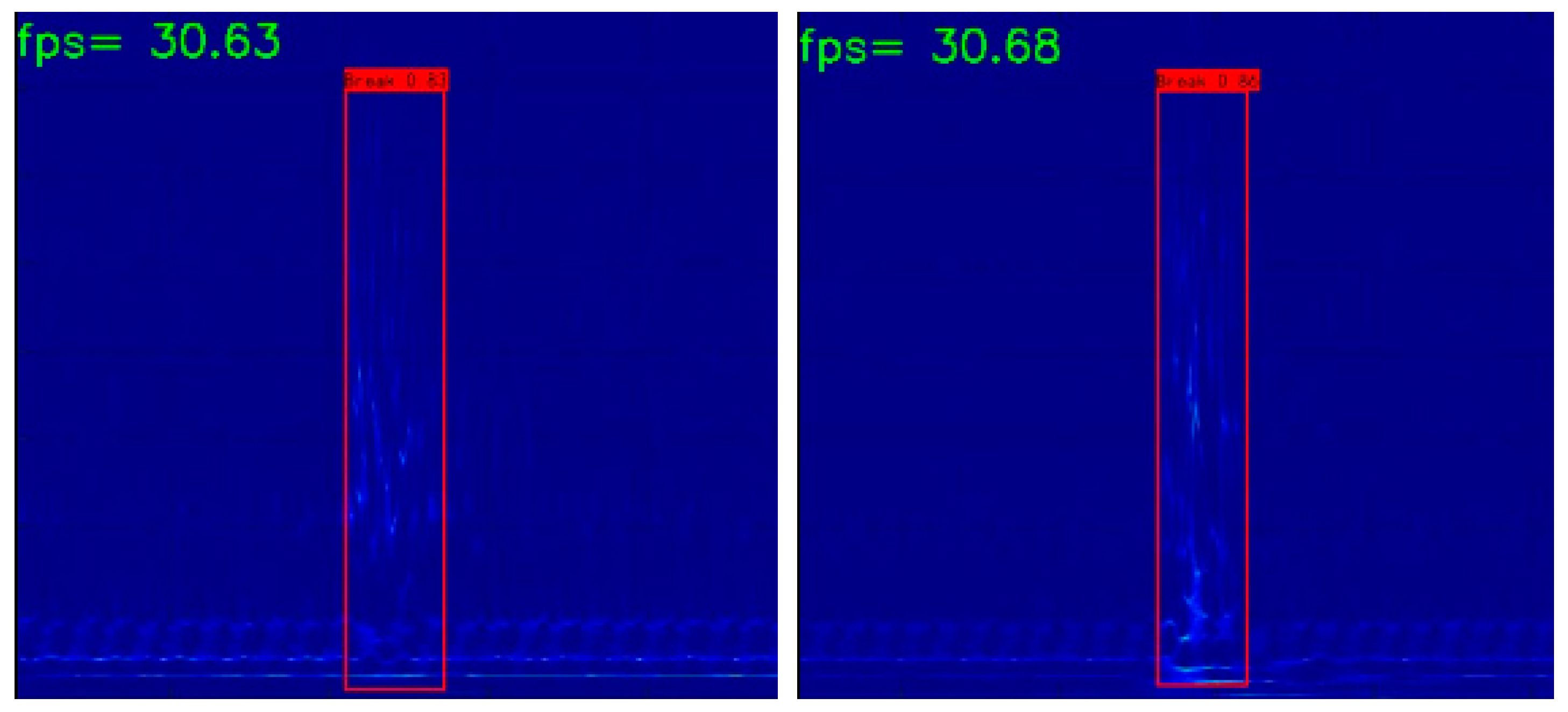

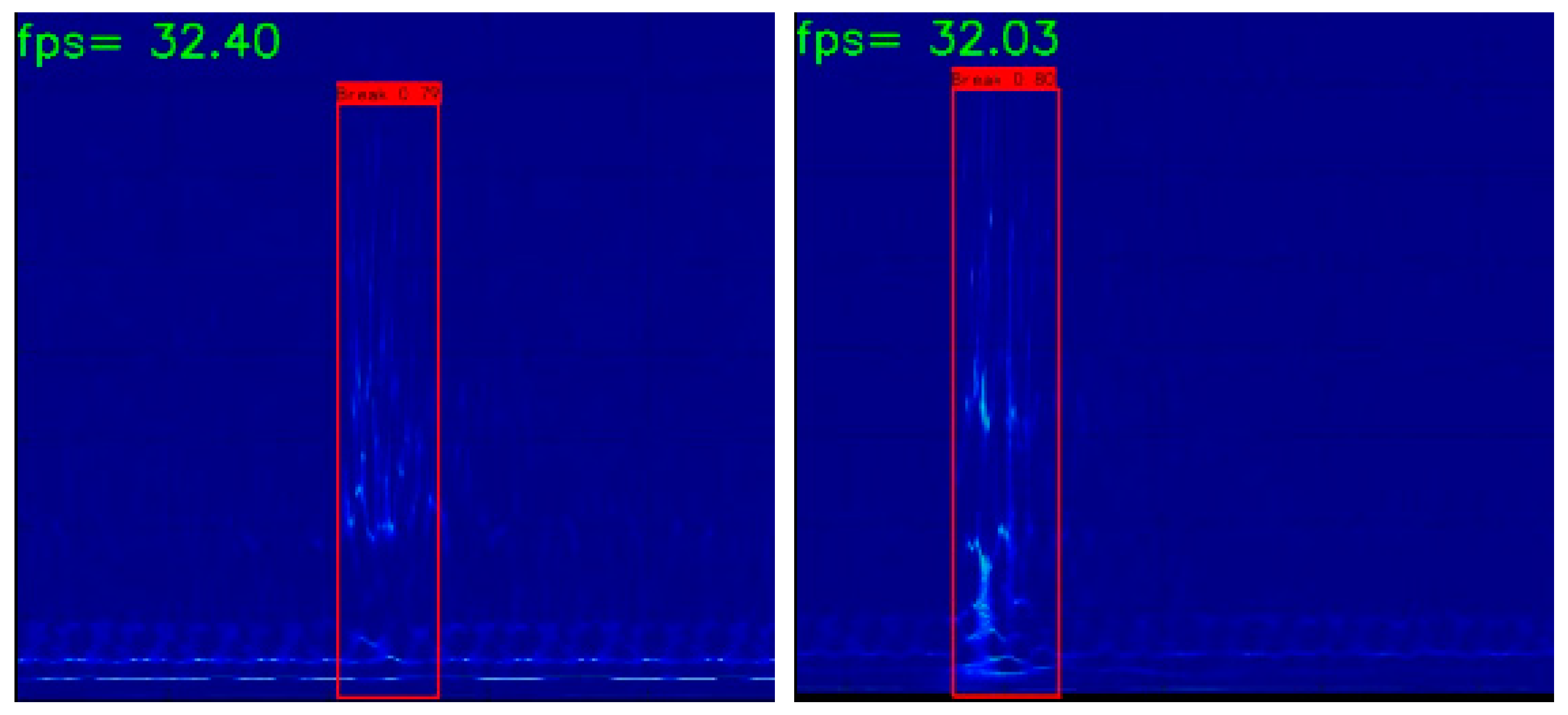

4.2.1. Wire Break Detection Results of the Well-Tuned YOLOXs Model

4.2.2. Wire Break Detection Results for the Pruned YOLOXs Model

4.3. Discussion

4.3.1. Comparison before and after Pruning

4.3.2. Fusing BN and Convolution Layers to Accelerate Inference

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Holley, M.; Diaz, R.; Giovanniello, M. Acoustic monitoring of prestressed concrete cylinder pipe: A case history. In Pipelines 2001: Advances in Pipelines Engineering and Construction; ASCE: Reston, VA, USA, 2001; pp. 1–9.

- Feng, X.; Li, H.; Chen, B.; Zhao, L.; Zhou, J. Numerical investigations into the failure mode of buried prestressed concrete cylinder pipes under differential settlement. Eng. Fail. Anal. 2020, 111, 104492.

- Wang, X.; Hu, S.; Li, W.; Qi, H.; Xue, X. Use of numerical methods for identifying the number of wire breaks in prestressed concrete cylinder pipe by piezoelectric sensing technology. Constr. Build. Mater. 2021, 268, 121207.

- Dong, X.; Dou, T.; Dong, P.; Wang, Z.; Li, Y.; Ning, J.; Wei, J.; Li, K.; Cheng, B. Failure experiment and calculation model for prestressed concrete cylinder pipe under three-edge bearing test using distributed fiber optic sensors. Tunn. Undergr. Space Technol. 2022, 129, 104682.

- Huang, J.; Zhou, Z.; Zhang, D.; Yao, X.; Li, L. Online monitoring of wire breaks in prestressed concrete cylinder pipe utilising fiber Bragg grating sensors. Measurement 2016, 79, 112–118.

- Dong, X.; Dou, T.; Cheng, B.; Zhao, L. Failure analysis of a prestressed concrete cylinder pipe under clustered broken wires by FEM. Structures 2021, 33, 3284–3297.

- Li, K.; Li, Y.; Dong, P.; Wang, Z.; Dou, T.; Ning, J.; Dong, X.; Si, Z.; Wang, J. Mechanical properties of prestressed concrete cylinder pipe with broken wires using distributed fiber optic sensors. Eng. Fail. Anal. 2022, 141, 106635.

- Hajali, M.; Alavinasab, A.; Shdid, C.A. Effect of the location of broken wire wraps on the failure pressure of prestressed concrete cylinder pipes. Struct. Concr. 2015, 16, 297–303.

- Ge, S.; Sinha, S. Failure analysis, condition assessment technologies, and performance prediction of prestressed-concrete cylinder pipe: State-of-the-art literature review. J. Perform. Constr. Facil. 2014, 28, 618–628.

- Zhai, K.; Fang, H.; Fu, B.; Wang, F.; Hu, B. Mechanical response of externally bonded CFRP on repair of PCCPs with broken wires under internal water pressure. Constr. Build. Mater. 2020, 239, 117878.

- Zhai, K.; Fang, H.; Guo, C.; Ni, P.; Wu, H.; Wang, F. Full-scale experiment and numerical simulation of prestressed concrete cylinder pipe with broken wires strengthened by prestressed CFRP. Tunn. Undergr. Space Technol. 2021, 115, 104021.

- Zarghamee, M.S.; Eggers, D.W.; Ojdrovic, R.P. Finite-element modeling of failure of PCCP with broken wires subjected to combined loads. In Pipelines 2002: Beneath Our Feet: Challenges and Solutions; ASCE: Reston, VA, USA, 2002; pp. 1–17.

- You, R.; Gong, H.B. Failure analysis of PCCP with broken wires. In Applied Mechanics and Materials; Trans Tech Publications Ltd.: Bäch, Switzerland, 2012; pp. 855–858.

- Hajali, M.; Alavinasab, A.; Abi Shdid, C. Structural performance of buried prestressed concrete cylinder pipes with harnessed joints interaction using numerical modeling. Tunn. Undergr. Space Technol. 2016, 51, 11–19.

- Hu, B.; Fang, H.; Wang, F.; Zhai, K. Full-scale test and numerical simulation study on load-carrying capacity of prestressed concrete cylinder pipe (PCCP) with broken wires under internal water pressure. Eng. Fail. Anal. 2019, 104, 513–530.

- Wang, D.Y.; Zhu, H.H.; Wang, B.J.; Shi, B. Performance evaluation of buried pipe under loading using fiber Bragg grating and particle image velocimetry techniques. Measurement 2021, 186, 110086.

- Elfergani, H.A.; Pullin, R.; Holford, K.M. Damage assessment of corrosion in prestressed concrete by acoustic emission. Constr. Build. Mater. 2013, 40, 925–933.

- Tennyson, R.C.; Morison, W.D.; Miesner, T. Pipeline integrity assessment using fiber optic sensors. In Pipelines 2005: Optimizing Pipeline Design, Operations, and Maintenance in Today’s Economy; ASCE: Reston, VA, USA, 2005; pp. 803–817.

- Higgins, M.S.; Paulson, P.O. Fiber optic sensors for acoustic monitoring of PCCP. In Pipelines 2006: Service to the Owner; ASCE: Reston, VA, USA, 2006; pp. 1–8.

- Bell, G.E.C.; Paulson, P. Measurement and analysis of PCCP wire breaks, slips, and delaminations. In Pipelines 2010: Climbing New Peaks to Infrastructure Reliability: Renew, Rehab, and Reinvest; ASCE: Reston, VA, USA, 2010; pp. 1016–1024.

- Habel, W.R.; Krebber, K. Fiber-optic sensor applications in civil and geotechnical engineering. Photonic Sens. 2011, 1, 268–280.

- Galleher, J.J., Jr.; Holley, M.; Shenkiryk, M. Acoustic Fiber Optic Monitoring: How It Is Changing the Remaining Service Life of the Water Authority’s Pipelines. In Pipelines 2009: Infrastructure’s Hidden Assets; Holley, M., Ed.; ASCE: Reston, VA, USA, 2009; pp. 21–29.

- He, Z.; Liu, Q. Optical fiber distributed acoustic sensors: A review. J. Light. Technol. 2021, 39, 3671–3686.

- Shiloh, L.; Eyal, A.; Giryes, R. Efficient processing of distributed acoustic sensing data using a deep learning approach. J. Light. Technol. 2019, 37, 4755–4762.

- Liu, H.; Ma, J.; Xu, T.; Yan, W.; Ma, L.; Zhang, X. Vehicle detection and classification using distributed fiber optic acoustic sensing. IEEE Trans. Veh. Technol. 2019, 69, 1363–1374.

- Liu, H.; Ma, J.; Yan, W.; Liu, W.; Zhang, X.; Li, C. Traffic flow detection using distributed fiber optic acoustic sensing. IEEE Access 2018, 6, 68968–68980.

- Daley, T.M.; Freifeld, B.M.; Ajo-Franklin, J.; Dou, S.; Pevzner, R.; Shulakova, V.; Kashikar, S.; Miller, D.; Goetz, J.; Henninges, J.; et al. Field testing of fiber-optic distributed acoustic sensing (DAS) for subsurface seismic monitoring. Lead. Edge 2013, 32, 699–706.

- Jousset, P.; Currenti, G.; Schwarz, B.; Chalari, A.; Tilmann, F.; Reinsch, T.; Zuccarello, L.; Privitera, E.; Krawczyk, C.M. Fiber optic distributed acoustic sensing of volcanic events. Nat. Commun. 2022, 13, 1–16.

- Bakhoum, E.G.; Zhang, C.; Cheng, M.H. Real time measurement of airplane flutter via distributed acoustic sensing. Aerospace 2020, 7, 125.

- Li, Y.; Sun, K.; Si, Z.; Chen, F.; Tao, L.; Li, K.; Zhou, H. Monitoring and identification of wire breaks in prestressed concrete cylinder pipe based on distributed fiber optic acoustic sensing. J. Civ. Struct. Health Monit. 2022, 1–12.

- Tang, Y.; Zhu, M.; Chen, Z.; Wu, C.; Chen, B.; Li, C.; Li, L. Seismic performance evaluation of recycled aggregate concrete-filled steel tubular columns with field strain detected via a novel mark-free vision method. Structures 2022, 37, 426–441.

- Tang, Y.; Huang, Z.; Chen, Z.; Chen, M.; Zhou, H.; Zhang, H.; Sun, J. Novel visual crack width measurement based on backbone double-scale features for improved detection automation. Eng. Struct. 2023, 274, 115158.

- Piczak, K.J. Environmental sound classification with convolutional neural networks. In Proceedings of the 2015 IEEE 25th International Workshop on Machine Learning for Signal Processing (MLSP), Boston, MA, USA, 17–20 September 2015; pp. 1–6.

- Boddapati, V.; Petef, A.; Rasmusson, J.; Lundberg, L. Classifying environmental sounds using image recognition networks. Procedia Comput. Sci. 2017, 112, 2048–2056.

- Zhang, H.; McLoughlin, I.; Song, Y. Robust sound event recognition using convolutional neural networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 559–563.

- Mushtaq, Z.; Su, S.F.; Tran, Q.V. Spectral images based environmental sound classification using CNN with meaningful data augmentation. Appl. Acoust. 2021, 172, 107581.

- Peng, Z.; Jian, J.; Wen, H.; Gribok, A.; Wang, M.; Liu, H.; Huang, S.; Mao, Z.H.; Chen, K.P. Distributed fiber sensor and machine learning data analytics for pipeline protection against extrinsic intrusions and intrinsic corrosions. Opt. Express 2020, 28, 27277–27292.

- Jakkampudi, S.; Shen, J.; Li, W.; Dev, A.; Zhu, T.; Martin, E. Footstep detection in urban seismic data with a convolutional neural network. Lead. Edge 2020, 39, 654–660.

- Huot, F.; Biondi, B. Machine learning algorithms for automated seismic ambient noise processing applied to DAS acquisition. In Proceedings of the 2018 SEG International Exposition and Annual Meeting, Anaheim, CA, USA, 14–19 October 2018.

- KC, S. Enhanced pothole detection system using YOLOX algorithm. Auton. Intell. Syst. 2022, 2, 1–16.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Stork, A.L.; Baird, A.F.; Horne, S.A.; Naldrett, G.; Lapins, S.; Kendall, J.M.; Wookey, J.; Verdon, J.P.; Clarke, A.; Williams, A. Application of machine learning to microseismic event detection in distributed acoustic sensing data. Geophysics 2020, 85, KS149–KS160.

- Luo, Q.; Wang, J.; Gao, M.; Lin, H.; Zhou, H.; Miao, Q. G-YOLOX: A Lightweight Network for Detecting Vehicle Types. J. Sens. 2022, 2022, 4488400.

- Zhang, Y.; Xu, W.; Yang, S.; Xu, Y.; Yu, X. Improved YOLOX detection algorithm for contraband in X-ray images. Appl. Opt. 2022, 61, 6297–6310.

- Mushtaq, Z.; Su, S.F. Efficient classification of environmental sounds through multiple features aggregation and data enhancement techniques for spectrogram images. Symmetry 2020, 12, 1822.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

- Daubechies, I.; Lu, J.; Wu, H.T. Synchrosqueezed wavelet transforms: An empirical mode decomposition-like tool. Appl. Comput. Harmon. Anal. 2011, 30, 243–261.

- Daubechies, I. Ten Lectures on Wavelets; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 1992.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Li, H.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H.P. Pruning filters for efficient convnets. arXiv 2016, arXiv:1608.08710.

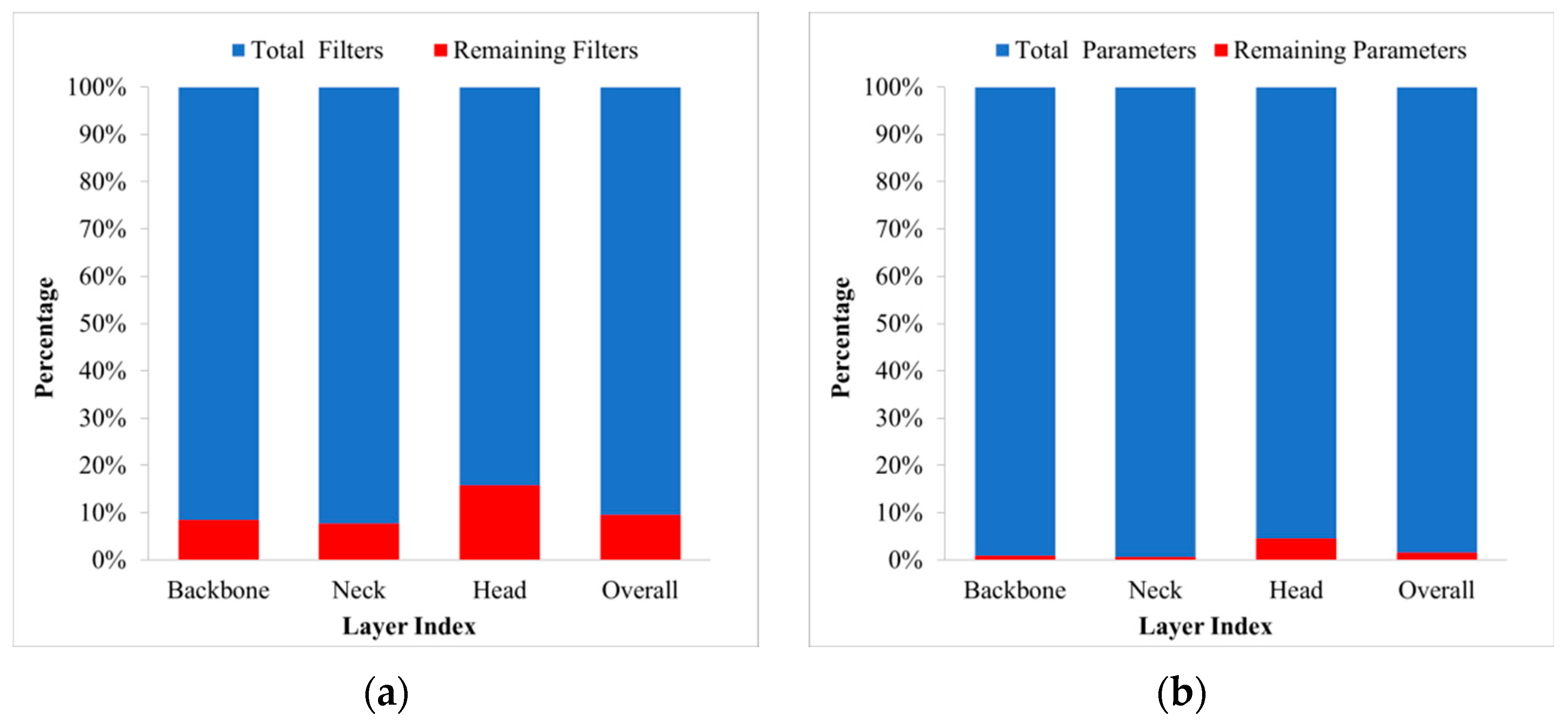

| Layer | Filters in YOLOXs | Filters in Pruned YOLOXs | Pruning Rate |
|---|---|---|---|
| Backbone | 5408 | 502 | 0.91 |
| Neck | 4224 | 348 | 0.92 |
| Head | 1938 | 363 | 0.81 |
| Overall | 11,570 | 1213 | 0.90 |
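The pruning rates in the filter-count table are consistent with defining the pruning rate as the fraction of filters removed, 1 - (pruned filters / original filters). The overall rate is computed from the summed counts. The short Python sketch below reproduces the table under that assumed definition. The function names are hypothetical and do not come from the authors' code.

```python
# Filter counts per network part, taken from the table:
# (filters in YOLOXs, filters in pruned YOLOXs)
FILTER_COUNTS = {
    "Backbone": (5408, 502),
    "Neck": (4224, 348),
    "Head": (1938, 363),
}


def pruning_rate(original: int, pruned: int) -> float:
    """Fraction of filters removed (assumed definition): 1 - pruned / original."""
    return 1.0 - pruned / original


def pruning_summary(counts: dict) -> tuple:
    """Per-part rates plus an 'Overall' rate computed from the summed filter counts."""
    rates = {part: pruning_rate(orig, kept) for part, (orig, kept) in counts.items()}
    total_orig = sum(orig for orig, _ in counts.values())
    total_kept = sum(kept for _, kept in counts.values())
    rates["Overall"] = pruning_rate(total_orig, total_kept)
    return total_orig, total_kept, rates


if __name__ == "__main__":
    total_orig, total_kept, rates = pruning_summary(FILTER_COUNTS)
    print(f"Total filters: {total_orig} -> {total_kept}")
    for part, rate in rates.items():
        print(f"{part}: {rate:.2f}")
```

Running it prints totals of 11570 and 1213 filters, per-part rates of 0.91, 0.92 and 0.81, and an overall rate of 0.90, which matches the table. The overall rate is not the mean of the three per-part rates, because the parts have very different filter counts.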

| Model | Number of Parameters | Number of Filters | Model Size | F1 Score | Inference Speed |
|---|---|---|---|---|---|
| YOLOXs | 8,619,648 | 11,570 | 34.3 MB | 1 | 30 fps |
| Pruned YOLOXs | 133,629 | 1213 | 732 KB | 1 | 32 fps |
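The model comparison table mixes units (MB and KB) and mixes quantities that shrink with one that grows (fps). It is therefore easier to read as ratios. The sketch below computes them. The binary prefix convention (1 MB = 1024 KB) is an assumption, because the table does not state which convention is used. The names `MODELS` and `compare` are hypothetical.

```python
# Figures copied from the comparison table. Sizes are normalized to KB,
# ASSUMING binary prefixes (1 MB = 1024 KB); the table does not say.
KB_PER_MB = 1024

MODELS = {
    "YOLOXs": {"params": 8_619_648, "filters": 11_570, "size_kb": 34.3 * KB_PER_MB, "fps": 30},
    "Pruned YOLOXs": {"params": 133_629, "filters": 1_213, "size_kb": 732, "fps": 32},
}


def compare(baseline: dict, pruned: dict) -> dict:
    """Baseline/pruned ratios for size-like metrics; pruned/baseline for speed."""
    return {
        "param_reduction": baseline["params"] / pruned["params"],
        "filter_reduction": baseline["filters"] / pruned["filters"],
        "size_reduction": baseline["size_kb"] / pruned["size_kb"],
        "params_removed_fraction": 1 - pruned["params"] / baseline["params"],
        "speedup": pruned["fps"] / baseline["fps"],
    }


if __name__ == "__main__":
    ratios = compare(MODELS["YOLOXs"], MODELS["Pruned YOLOXs"])
    for name, value in ratios.items():
        print(f"{name}: {value:.3f}")
```

With these figures, the parameter count shrinks by a factor of about 64.5 (roughly 98.4% of parameters removed). The stored model shrinks by a factor of about 48, or about 47 if 1 MB = 1000 KB. The frame rate, by contrast, rises only from 30 to 32 fps (about 1.07x).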

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, B.; Gao, R.; Zhang, J.; Zhu, X. A YOLOX-Based Automatic Monitoring Approach of Broken Wires in Prestressed Concrete Cylinder Pipe Using Fiber-Optic Distributed Acoustic Sensors. Sensors 2023, 23, 2090. https://doi.org/10.3390/s23042090


Chicago/Turabian Style: Ma, Baolong, Ruizhen Gao, Jingjun Zhang, and Xinmin Zhu. 2023. "A YOLOX-Based Automatic Monitoring Approach of Broken Wires in Prestressed Concrete Cylinder Pipe Using Fiber-Optic Distributed Acoustic Sensors" Sensors 23, no. 4: 2090. https://doi.org/10.3390/s23042090
