WLiT: Windows and Linear Transformer for Video Action Recognition

Abstract

1. Introduction

- (1) We propose a complementary framework of Windows and Linear Transformer (WLiT), which preserves the model's ability to capture global information while achieving efficient action recognition.

- (2) We present the Spatial-Windows attention module, which divides the feature maps only along the spatial dimensions and thereby further reduces the computational complexity.

- (3) We analyze and discuss the computational complexity of the attention mechanism in detail and give a theoretical justification for our method.

- (4) We conduct extensive experiments to verify our method. On the SSV2 dataset, our method achieves higher accuracy than state-of-the-art methods at a lower computational cost.
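Contribution (2) describes partitioning the feature maps only along the spatial dimensions. A minimal NumPy sketch of such a partition follows. It assumes a `(T, H, W, C)` layout, a square window of side `window` that divides both `H` and `W`, and that every window keeps all `T` frames. The function name, the layout, and this reading of "spatial only" are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def spatial_window_partition(x, window):
    """Split a (T, H, W, C) feature map into non-overlapping spatial windows.

    Assumption: the temporal axis is never split, so each window holds
    T * window * window tokens. Returns an array of shape
    (num_windows, T * window * window, C).
    """
    T, H, W, C = x.shape
    if H % window or W % window:
        raise ValueError("window must divide both H and W")
    # Expose the window grid: (T, H/window, window, W/window, window, C).
    x = x.reshape(T, H // window, window, W // window, window, C)
    # Bring the two grid axes to the front, keep time inside each window.
    x = x.transpose(1, 3, 0, 2, 4, 5)
    return x.reshape(-1, T * window * window, C)


# Toy feature map: 2 frames of 4 x 4 positions with a single channel.
feature_map = np.arange(2 * 4 * 4).reshape(2, 4, 4, 1)
windows = spatial_window_partition(feature_map, window=2)
```

In this toy case the 32 tokens are grouped into 4 windows of 8 tokens each, so attention restricted to a window compares 8 tokens with each other rather than all 32.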

2. Related Works

2.1. Convolution-Based Action Recognition Methods

2.2. Transformer-Based Action Recognition Methods

3. Method

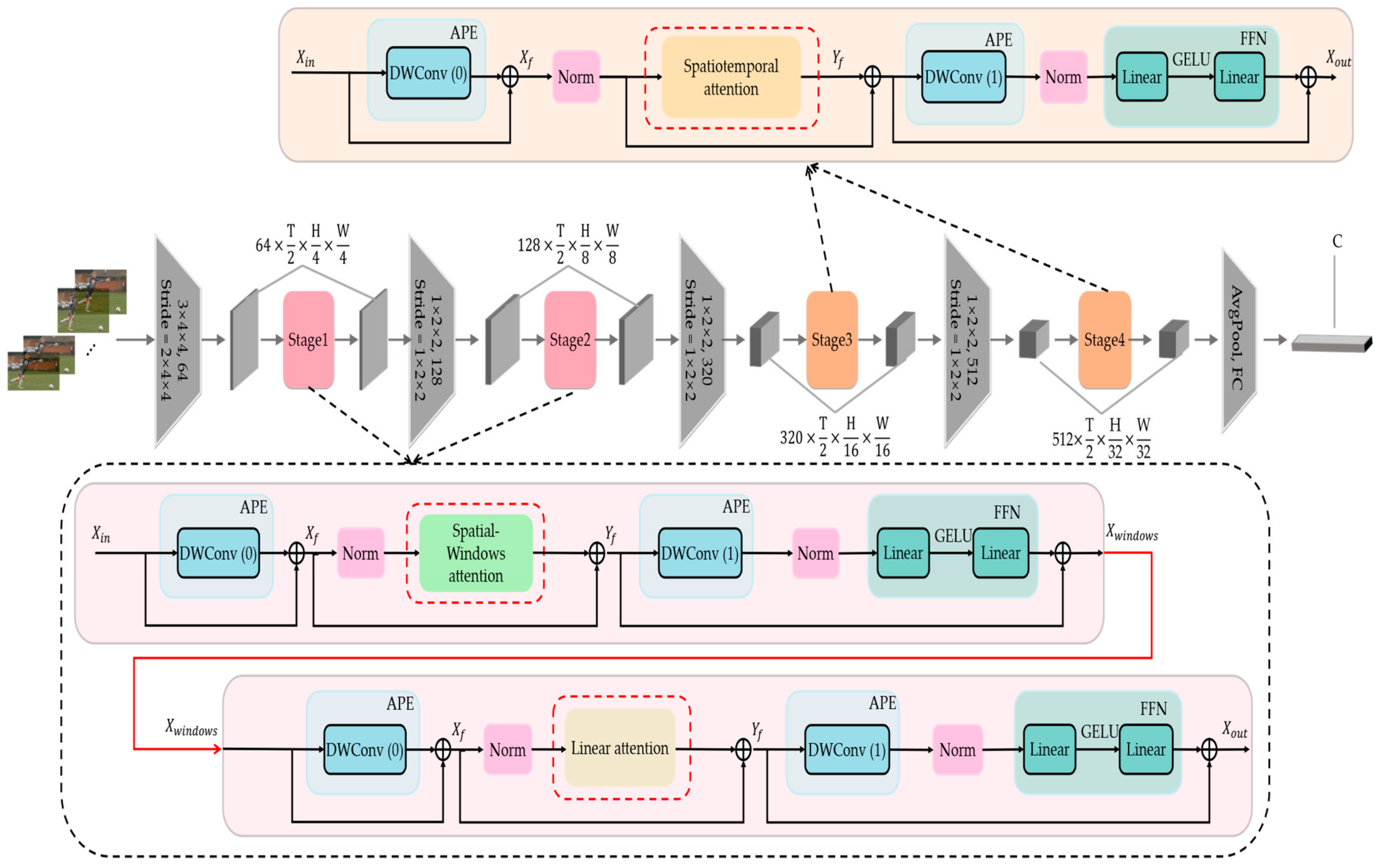

3.1. Overview of WLiT Architecture

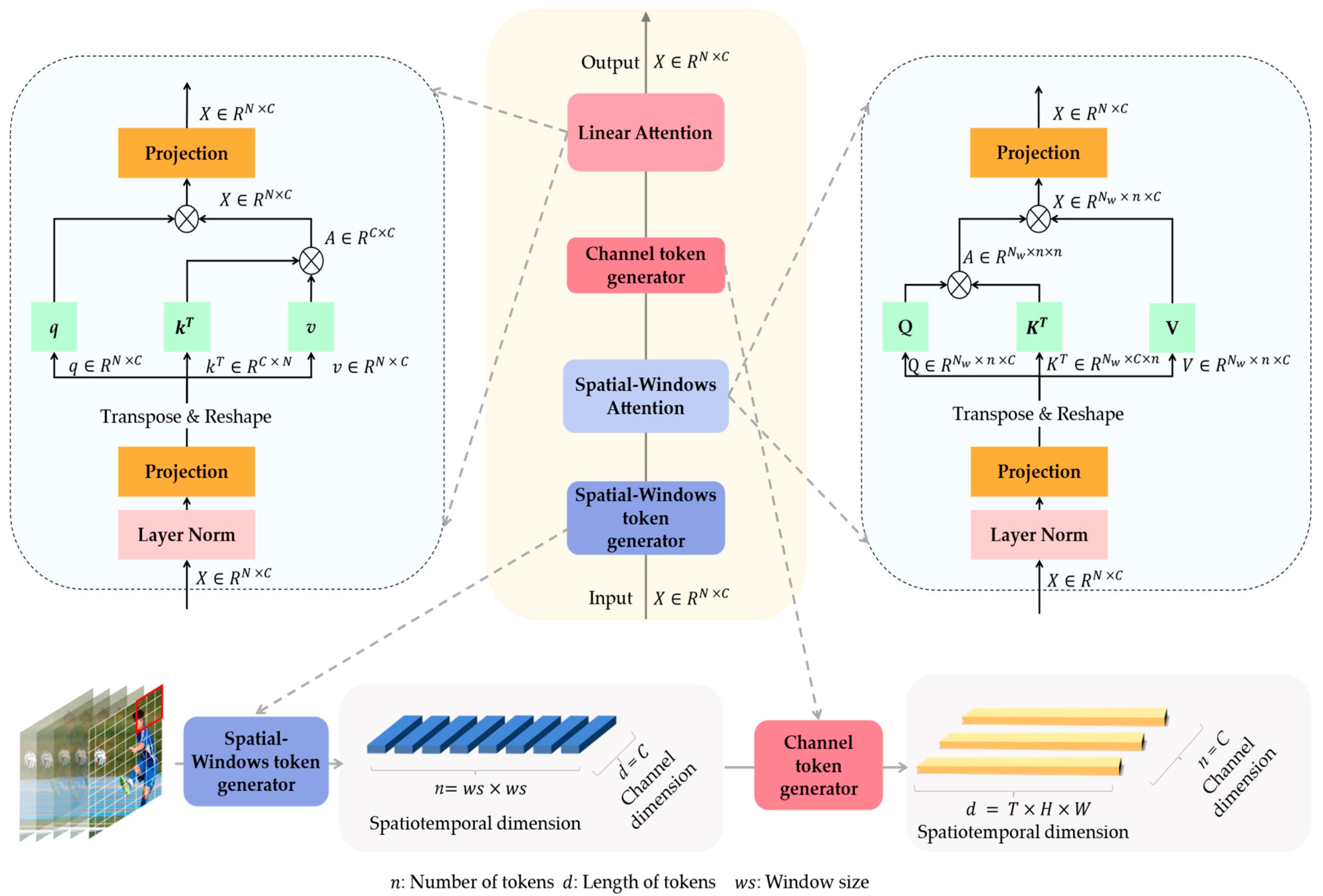

3.2. Spatial-Windows Attention

3.3. Channel-Based Linear Attention
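As a rough illustration of attention whose cost grows linearly with the number of tokens, the sketch below forms a `C x C` channel map from the keys and values before applying it to the queries. The single-head form, the softmax placement, the `1/sqrt(N)` scaling, and the function names are assumptions made for this example; they are not taken from the paper.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def channel_linear_attention(q, k, v):
    """Single-head channel attention for q, k, v of shape (N, C).

    The (C, C) context is built first, so both matrix products cost
    O(N * C^2), which is linear in the token count N.
    """
    n, _ = q.shape
    # Channel-to-channel affinities; the 1/sqrt(N) scale is an assumption.
    context = softmax(k.T @ v / np.sqrt(n), axis=-1)  # shape (C, C)
    return q @ context  # shape (N, C)


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((50, 8)) for _ in range(3))
out = channel_linear_attention(q, k, v)
```

No `N x N` matrix is ever materialized here, which is what keeps the cost linear in the number of tokens.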

3.4. Adaptive Position Encoding

3.5. Computational Complexity Analysis and Model Structure Design
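For orientation, the standard multiply-accumulate counts for the three attention variants named in this paper can be written as follows. The counts follow the usual convention of including the four linear projections and ignoring the softmax. The symbols are assumptions for this sketch: $N = THW$ tokens, $C$ channels, spatial window side $M$, one head, and windows that keep all $T$ frames. This is not the authors' derivation.

```latex
\begin{align}
\Omega(\text{global attention})  &= 4NC^{2} + 2N^{2}C, \\
\Omega(\text{spatial windows})   &= 4NC^{2} + \frac{HW}{M^{2}} \cdot 2\,(TM^{2})^{2}C
                                  = 4NC^{2} + 2\,(TM^{2})\,NC, \\
\Omega(\text{channel attention}) &= 4NC^{2} + 2NC^{2}.
\end{align}
```

Under these assumptions only the global form is quadratic in $N$; the windowed form is linear in $N$ for a fixed window size $TM^{2}$, and the channel form is linear in $N$ and quadratic in $C$.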

4. Experiments

4.1. Setup

4.1.1. Datasets

4.1.2. Implementation Details

4.2. Comparison with the State of the Art

4.2.1. Kinetics400

4.2.2. Something-Something V2

4.2.3. UCF101 and HMDB51

4.3. Ablation Study

4.3.1. The Performance of the Linear Attention

4.3.2. The Performance of the Extra FFN for the Linear Attention

4.3.3. The Performance of the Adaptive Position Encoding

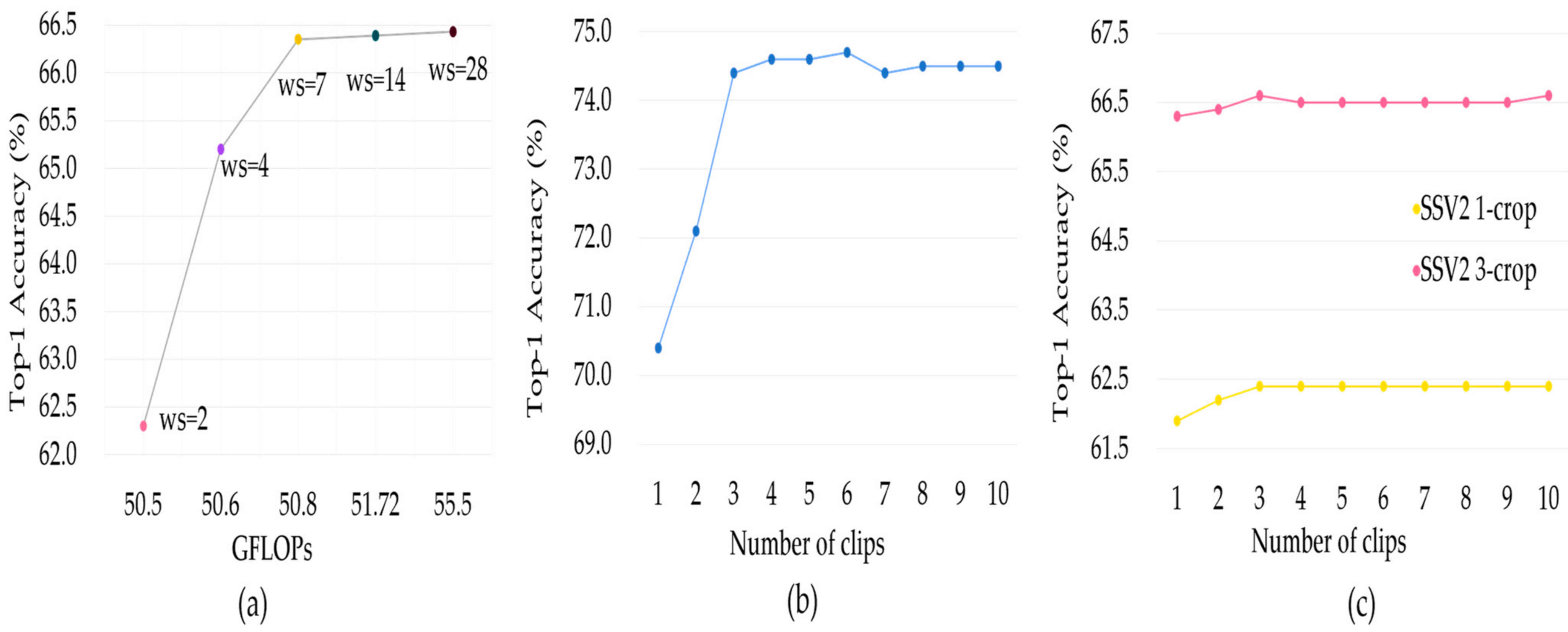

4.3.4. Empirical Investigation on Model Settings

4.4. Visualization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhu, Y.; Li, X.; Liu, C.; Zolfaghari, M.; Xiong, Y.; Wu, C.; Zhang, Z.; Tighe, J.; Manmatha, R.; Li, M. A comprehensive study of deep video action recognition. arXiv 2020, arXiv:2012.06567.

- Ulhaq, A.; Akhtar, N.; Pogrebna, G.; Mian, A. Vision Transformers for Action Recognition: A Survey. arXiv 2022, arXiv:2209.05700.

- Bertasius, G.; Wang, H.; Torresani, L. Is space-time attention all you need for video understanding? In Proceedings of the International Conference on Machine Learning, Online, 18–24 July 2021; Volume 2, No. 3, p. 4.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. NIPS 2017, 30, 1–5, 7.

- Luo, W.; Li, Y.; Urtasun, R.; Zemel, R. Understanding the effective receptive field in deep convolutional neural networks. NIPS 2016, 29, 4898–4906.

- Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 11976–11986.

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–12 December 2015; pp. 4489–4497.

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? a new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

- Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. Slowfast networks for video recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6202–6211.

- Chen, M.; Radford, A.; Child, R.; Wu, J.; Jun, H.; Luan, D.; Sutskever, I. Generative pretraining from pixels. In Proceedings of the International Conference on Machine Learning (ICML), Online, 13–18 July 2020; pp. 1691–1703.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Sennrich, R.; Haddow, B.; Birch, A. Neural machine translation of rare words with subword units. arXiv 2015, arXiv:1508.07909.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Online, 11–17 October 2021; pp. 10012–10022.

- Vaswani, A.; Ramachandran, P.; Srinivas, A.; Parmar, N.; Hechtman, B.; Shlens, J. Scaling local self-attention for parameter efficient visual backbones. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Online, 19–25 June 2021; pp. 12894–12904.

- Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Lin, H.; Zhang, Z.; Sun, Y.; He, T.; Mueller, J.; Manmatha, R. Resnest: Split-attention networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 2736–2746.

- Ding, M.; Xiao, B.; Codella, N.; Luo, P.; Wang, J.; Yuan, L. DaViT: Dual Attention Vision Transformers. arXiv 2022, arXiv:2204.03645.

- Wu, H.; Xiao, B.; Codella, N.; Liu, M.; Dai, X.; Yuan, L.; Zhang, L. Cvt: Introducing convolutions to vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Online, 11–17 October 2021; pp. 22–31.

- Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pvt v2: Improved baselines with pyramid vision transformer. Comput. Vis. Media 2022, 8, 415–424.

- Li, R.; Su, J.; Duan, C.; Zheng, S. Linear attention mechanism: An efficient attention for semantic segmentation. arXiv 2020, arXiv:2007.14902.

- Hu, P.; Perazzi, F.; Heilbron, F.C.; Wang, O.; Lin, Z.; Saenko, K.; Sclaroff, S. Real-time semantic segmentation with fast attention. IEEE Robot. Autom. Lett. 2020, 6, 263–270.

- Schlag, I.; Irie, K.; Schmidhuber, J. Linear transformers are secretly fast weight programmers. In Proceedings of the International Conference on Machine Learning (ICML), Online, 18–24 July 2021; pp. 9355–9366.

- Liu, Z.; Ning, J.; Cao, Y.; Wei, Y.; Zhang, Z.; Lin, S.; Hu, H. Video swin transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 3202–3211.

- Klaser, A.; Marszałek, M.; Schmid, C. A spatio-temporal descriptor based on 3d-gradients. In Proceedings of the BMVC 2008-19th British Machine Vision Conference, Leeds, UK, 1–4 September 2008; British Machine Vision Association: Durham, UK, 2008; Volume 275, pp. 1–10.

- Laptev, I.; Lindeberg, T. On space-time interest points. Int. J. Comput. Vis. 2005, 64, 107–123.

- Wang, H.; Kläser, A.; Schmid, C.; Liu, C.-L. Dense trajectories and motion boundary descriptors for action recognition. Int. J. Comput. Vis. 2013, 103, 60–79.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Fei-Fei, L. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014; pp. 1725–1732.

- Yue-Hei Ng, J.; Hausknecht, M.; Vijayanarasimhan, S.; Vinyals, O.; Monga, R.; Toderici, G. Beyond short snippets: Deep networks for video classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–10 June 2015; pp. 4694–4702.

- Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. arXiv 2014, arXiv:1406.2199v2.

- Feichtenhofer, C.; Pinz, A.; Wildes, R.P. Spatiotemporal residual networks for video action recognition. Adv. Neural Inf. Process. Syst. 2016, 3, 3468–3476.

- Feichtenhofer, C. X3d: Expanding architectures for efficient video recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 203–213.

- Sun, L.; Jia, K.; Yeung, D.-Y.; Shi, B.E. Human action recognition using factorized spatio-temporal convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–12 December 2015; pp. 4597–4605.

- Tran, D.; Wang, H.; Torresani, L.; Ray, J.; LeCun, Y.; Paluri, M. A closer look at spatiotemporal convolutions for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake, UT, USA, 18–22 June 2018; pp. 6450–6459.

- Xie, S.; Sun, C.; Huang, J.; Tu, Z.; Murphy, K. Rethinking spatiotemporal feature learning: Speed-accuracy trade-offs in video classification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 305–321.

- Qiu, Z.; Yao, T.; Mei, T. Learning spatio-temporal representation with pseudo-3d residual networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5533–5541.

- Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks: Towards good practices for deep action recognition. In Proceedings of the European Conference on Computer Vision, Cham, Switzerland, 11–14 October 2016; pp. 20–36.

- Lin, J.; Gan, C.; Han, S. Tsm: Temporal shift module for efficient video understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7083–7093.

- Li, Y.; Ji, B.; Shi, X.; Zhang, J.; Kang, B.; Wang, L. Tea: Temporal excitation and aggregation for action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 909–918.

- Wang, L.; Tong, Z.; Ji, B.; Wu, G. Tdn: Temporal difference networks for efficient action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 1895–1904.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41.

- Wang, X.; Xiong, X.; Neumann, M.; Piergiovanni, A.; Ryoo, M.S.; Angelova, A.; Kitani, K.M.; Hua, W. Attentionnas: Spatiotemporal attention cell search for video classification. In Proceedings of the European Conference on Computer Vision, Online, 23–28 August 2020; pp. 449–465.

- Sharir, G.; Noy, A.; Zelnik-Manor, L. An image is worth 16x16 words, what is a video worth? arXiv 2021, arXiv:2103.13915.

- Neimark, D.; Bar, O.; Zohar, M.; Asselmann, D. Video transformer network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 11–17 October 2021; pp. 3163–3172.

- Arnab, A.; Dehghani, M.; Heigold, G.; Sun, C.; Lučić, M.; Schmid, C. Vivit: A video vision transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 11–17 October 2021; pp. 6836–6846.

- Fan, H.; Xiong, B.; Mangalam, K.; Li, Y.; Yan, Z.; Malik, J.; Feichtenhofer, C. Multiscale vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 11–17 October 2021; pp. 6824–6835.

- Katharopoulos, A.; Vyas, A.; Pappas, N.; Fleuret, F. Transformers are rnns: Fast autoregressive transformers with linear attention. In Proceedings of the International Conference on Machine Learning, Online, 13–18 July 2020; pp. 5156–5165.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Shaw, P.; Uszkoreit, J.; Vaswani, A. Self-attention with relative position representations. arXiv 2018, arXiv:1803.02155.

- Islam, M.A.; Jia, S.; Bruce, N.D. How much position information do convolutional neural networks encode? arXiv 2020, arXiv:2001.08248.

- Li, K.; Wang, Y.; Gao, P.; Song, G.; Liu, Y.; Li, H.; Qiao, Y. Uniformer: Unified transformer for efficient spatiotemporal representation learning. arXiv 2022, arXiv:2201.04676.

- Goyal, R.; Ebrahimi Kahou, S.; Michalski, V.; Materzynska, J.; Westphal, S.; Kim, H.; Haenel, V.; Fruend, I.; Yianilos, P.; Mueller-Freitag, M. The "something something" video database for learning and evaluating visual common sense. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5842–5850.

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv 2012, arXiv:1212.0402.

- Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A large video database for human motion recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2556–2563.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

- Fan, Q.; Chen, C.-F.R.; Kuehne, H.; Pistoia, M.; Cox, D. More is less: Learning efficient video representations by big-little network and depthwise temporal aggregation. arXiv 2019, arXiv:1912.00869.

- Jiang, B.; Wang, M.; Gan, W.; Wu, W.; Yan, J. Stm: Spatiotemporal and motion encoding for action recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2000–2009.

- Kwon, H.; Kim, M.; Kwak, S.; Cho, M. Motionsqueeze: Neural motion feature learning for video understanding. In Proceedings of the European Conference on Computer Vision, Online, 23–28 August 2020; pp. 345–362.

- Li, K.; Li, X.; Wang, Y.; Wang, J.; Qiao, Y. CT-net: Channel tensorization network for video classification. arXiv 2021, arXiv:2106.01603.

- Bulat, A.; Perez Rua, J.M.; Sudhakaran, S.; Martinez, B.; Tzimiropoulos, G. Space-time mixing attention for video transformer. Adv. Neural Inf. Process. Syst. 2021, 34, 19594–19607.

- Alfasly, S.; Chui, C.K.; Jiang, Q.; Lu, J.; Xu, C. An effective video transformer with synchronized spatiotemporal and spatial self-attention for action recognition. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–14.

- Wang, L.; Li, W.; Li, W.; Van Gool, L. Appearance-and-relation networks for video classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake, UT, USA, 18–22 June 2018; pp. 1430–1439.

- Stroud, J.; Ross, D.; Sun, C.; Deng, J.; Sukthankar, R. D3d: Distilled 3d networks for video action recognition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 2–5 May 2020; pp. 625–634.

- Zhu, L.; Tran, D.; Sevilla-Lara, L.; Yang, Y.; Feiszli, M.; Wang, H. Faster recurrent networks for efficient video classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Hilton New York Midtown, NY, USA, 7–12 February 2020; pp. 13098–13105.

- Zhang, Y. MEST: An Action Recognition Network with Motion Encoder and Spatio-Temporal Module. Sensors 2022, 22, 6595.

| Stage | Operators | Output Sizes |
|---|---|---|
| Pre-processing | Sampling | |
| Patch embedding | Kernel Stride | |
| Stage 1 | | |
| Stage 2 | | |
| Stage 3 | | |
| Stage 4 | | |

| Dataset | Categories | Samples (Train) | Samples (Test) |
|---|---|---|---|
| Kinetics400 [8] | 400 | 240,436 | 19,787 |
| Something-Something V2 [52] | 174 | 168,913 | 24,777 |
| UCF101 [53] | 101 | 9537 | 3734 |
| HMDB51 [54] | 51 | 3570 | 1530 |

| Method | Pre-Train | Frame | GFLOPs | Param. (M) | Top-1 (%) | Top-5 (%) |
|---|---|---|---|---|---|---|
| Two-Stream I3D [8] | ImageNet | 64 | - | 25.0 | 71.6 | 90.0 |
| R(2+1)D [34] | - | 32 | | 61.8 | 72.0 | 90.0 |
| bLVNet-TAM-24 × 2 [56] | Kinetics400 | 24 | | 25.0 | 73.5 | 91.2 |
| TSM [38] | ImageNet | 8 | | 24.3 | 74.1 | 91.2 |
| STM [57] | ImageNet | 16 | - | - | 73.7 | 91.6 |
| ViT-B [46] | - | 16 | | 87.2 | 68.5 | 86.9 |
| ViT-B-VTN [44] | ImageNet | 250 | | 114.0 | 78.6 | 93.7 |
| ViViT [45] | ImageNet21K | 32 | | 86.7 | 75.8 | - |
| TimeSFormer [3] | ImageNet | 8 | | 121.4 | 75.8 | - |
| MViT-S (our baseline) [46] | - | 8 | | 26.1 | 76.0 | 92.1 |
| WLiT (Ours) | - | 8 | | 21.9 | 74.6 | 92.0 |

| Method | Pre-Train | Frame | GFLOPs | Param. (M) | Top-1 (%) | Top-5 (%) |
|---|---|---|---|---|---|---|
| bLVNet-TAM-32 × 2 [56] | - | 32 | | 40.2 | 65.2 | 90.3 |
| MSNet-R50 [58] | - | 16 | | 24.6 | 64.7 | 89.4 |
| Slow-Fast R101 [9] | K400 | 8 | | 53.3 | 63.1 | 87.6 |
| TSM [38] | K400 | 16 | | 24.3 | 64.3 | 89.6 |
| STM [57] | ImageNet | 16 | - | - | 63.5 | 89.6 |
| TEA [39] | ImageNet21K | 16 | | - | 65.1 | 89.9 |
| TDN [40] | ImageNet | 16 | | - | 65.3 | 89.5 |
| CTNet [59] | ImageNet | 16 | | - | 65.9 | 90.1 |
| X-ViT [60] | ImageNet21K | 32 | | - | 66.2 | 90.6 |
| ViViT-L [45] | K400 | 32 | | 86.7 | 65.9 | 89.9 |
| SSTSA-L [61] | ImageNet21K | 32 | | 181.6 | 66.2 | - |
| TimeSFormer [3] | ImageNet21K | 16 | | - | 62.5 | - |
| MViT-B (our baseline) [46] | K400 | 16 | | 36.6 | 64.7 | 89.2 |
| MViT-B [46] | K600 | 16 | | 36.6 | 66.2 | 90.2 |
| WLiT (Ours) | K400 | 16 | | 21.9 | 66.3 | 91.5 |

| Method | Pre-Train | UCF101 Top-1 (%) | HMDB51 Top-1 (%) |
|---|---|---|---|
| TSN [37] | ImageNet | 94.0 | 68.5 |
| P3D [36] | ImageNet | 88.6 | - |
| ARTNet [62] | K400 | 94.3 | 70.9 |
| TSM [38] | K400 | 95.9 | 70.7 |
| D3D [63] | K600 | 97.1 | 79.3 |
| FASTER32 [64] | K400 | 96.9 | 75.7 |
| Two-stream I3D [8] | K400 | 93.4 | 80.9 |
| MEST [65] | ImageNet | 96.8 | 73.4 |
| WLiT (Ours) | K400 | 97.3 | 83.7 |

| Model | GFLOPs | Param. (M) | Top-1 (%) | Top-5 (%) |
|---|---|---|---|---|
| Spatial-Windows attention | 46.6 | 21.4 | 61.6 | 88.7 |
| Linear attention | 46.7 | 21.4 | 62.2 | 89.1 |
| Linear → Windows | 50.8 | 21.9 | 65.9 | 91.1 |
| Windows → Linear (WLiT) | 50.8 | 21.9 | 66.3 | 91.5 |

| FFN in Spatial-Windows Attention | FFN in Linear Attention | GFLOPs | Param. (M) | SSV2 Top-1 (%) | SSV2 Top-5 (%) |
|---|---|---|---|---|---|
| ✗ | ✗ | 45.8 | 21.3 | 63.5 | 90.1 |
| ✓ | ✗ | 48.3 | 21.6 | 65.1 | 90.5 |
| ✗ | ✓ | 48.3 | 21.6 | 65.3 | 90.7 |
| ✓ | ✓ | 50.8 | 21.9 | 66.3 | 91.5 |

| APE [0] | APE [1] | GFLOPs | Param. (M) | SSV2 Top-1 (%) | SSV2 Top-5 (%) |
|---|---|---|---|---|---|
| ✗ | ✗ | 50.5 | 21.7 | 64.1 | 90.2 |
| ✓ | ✗ | 50.7 | 21.8 | 66.0 | 91.3 |
| ✗ | ✓ | 50.7 | 21.8 | 65.8 | 91.4 |
| ✓ | ✓ | 50.8 | 21.9 | 66.3 | 91.5 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, R.; Zhang, T.; Wan, Y.; Zhang, F.; Wei, J. WLiT: Windows and Linear Transformer for Video Action Recognition. Sensors 2023, 23, 1616. https://doi.org/10.3390/s23031616