Abstract

Accurate location information offers substantial commercial and social value and has become a key research topic. Acoustic-based positioning achieves high accuracy, but it produces occasional outliers that degrade positioning performance. Inertia-assisted positioning is autonomous, but its localization errors accumulate over time. To address these issues, we propose a novel positioning and navigation system that integrates acoustic estimation and dead reckoning with a novel step-length model. First, six features are extracted from the collected motion data: the acceleration peak-to-valley amplitude difference, walking frequency, acceleration variance, mean acceleration, peak median, and valley median. The lengths of the previous three steps and the maximum and minimum acceleration values at the current step are used to predict a coarse step length. Then, a LASSO regularization constraint over the extracted features refines this prediction into an accurate step length. The acoustic estimate is determined by a hybrid CHAN–Taylor algorithm. Finally, the location is determined using an extended Kalman filter (EKF) that fuses the improved pedestrian dead reckoning (PDR) estimate with the acoustic estimate. We conducted comparative experiments in two different scenarios using two heterogeneous devices. The experimental results show that the proposed fusion positioning and navigation method achieves a localization accuracy of 8–56.28 cm. The proposed method significantly mitigates the cumulative error of PDR and provides highly robust localization under different experimental conditions.

1. Introduction

Location-based services (LBS) are involved in every aspect of people’s lives, such as finding products in a shopping mall, searching for a vehicle in an underground parking lot, and monitoring the location of a patient in a hospital. At present, the global navigation satellite system (GNSS) can meet LBS requirements in outdoor environments in all weather conditions and at all times [1,2]. Indoors, however, interference and obstacles block satellite signals, so the GNSS cannot support location estimation [3]. Moreover, related studies have revealed that people currently spend more than 80% of their time in indoor environments. The study of LBS for indoor navigation is therefore of great research significance [4].

There are many common indoor localization methods, based on vision, Bluetooth, Wi-Fi, and acoustic signals. Vision-based positioning technology has good adaptability, but it raises privacy concerns [5]. Bluetooth positioning is low-cost and simple to implement, but it is only suitable for positioning in a small range [6]. Wi-Fi positioning has a large coverage area and is widely deployed, but it is susceptible to environmental interference [7]. Smartphones are now equipped with speakers and microphones, so they can send and receive acoustic signals without additional infrastructure [8]. In addition, acoustic positioning has good security and does not leak personal privacy information. Furthermore, acoustic signal positioning has the advantages of high accuracy and good compatibility [9,10]. However, acoustic signals are susceptible to environmental disturbances, including inherent background noise, thermal noise from electronic components, reflection, interference, and diffraction of the acoustic signal, and pedestrian movement and conversations in the indoor environment.

Acoustic-based localization and navigation have become hot topics in current LBS research. Lopes, S.I., et al. [8] designed a passive time difference of arrival (TDOA) positioning system that is compatible with smartphones, which yielded a protocol for synchronizing acoustic beacons. Murakami, H., et al. [11] described a method for three-dimensional positioning using a smartphone with only an external speaker. Zhou, R.H., et al. [12] proposed a hybrid CHAN–Taylor algorithm. In this method, CHAN localization estimation is used as the initial value for the iteration of the Taylor algorithm. The Taylor iteration is interrupted when the error is below a preset threshold. The simulation results demonstrated that the hybrid CHAN–Taylor algorithm has better localization accuracy, convergence speed, and self-adaptability than the CHAN algorithm. Wang, X., et al. [13] used the combined CHAN–Taylor algorithm to effectively suppress non-line-of-sight (NLOS) errors for target localization in 3D indoor scenes. Yang, H.B., et al. [14] employed the hybrid CHAN–Taylor algorithm for underwater localization with a high accuracy of time-delay estimation. It was verified that the hybrid CHAN–Taylor algorithm can suppress the error of the CHAN algorithm.

Inertial measurement unit (IMU) navigation estimation includes the inertial navigation system (INS) and pedestrian dead reckoning (PDR) [15]. The INS estimates the current position from the angular velocity observed by the gyroscope and the specific force observed by the accelerometer. The INS is not limited by application scenarios and is, in principle, an ideal navigation method. However, when low-performance micro-electromechanical system (MEMS) devices are used, the INS cannot provide reliable navigation results. PDR can provide long-term and stable relative positioning results by accurately detecting steps and estimating the step length and walking direction from the movement characteristics of the pedestrian [16,17,18]. It is simple to implement. Compared with the INS, PDR places lower accuracy requirements on the sensors and achieves better localization at limited cost. It has been widely used in the field of pedestrian navigation.

Many methods have been proposed to reduce the cumulative error of PDR. Yotsuya, K., et al. [19] improved the accuracy of trajectories using a large amount of pedestrian trajectory data. Guo, S.L., et al. [20] presented a gait-detection method based on dual-frequency Butterworth filtering and a linear combination of multiple features: the step frequency, the amplitude of acceleration, the mean of acceleration, and the variance of acceleration. Im, C., et al. [21] presented a multi-modal PDR system based on recurrent neural networks with a long short-term memory (LSTM) algorithm to extract potential features from sensor data. Yao, Y.B., et al. [22] proposed a method of identifying the step length based on the features extracted at each step, with a step-length error of approximately 3%. Zhang, M., et al. [23] used adaptive step-length estimation based on time windows and dynamic thresholds. Vathsangam, H., et al. [24] used Gaussian process-based regression (GPR) to estimate walking speeds and compared its performance with the Bayesian linear regression (BLR) and least squares regression methods. Zihajehzadeh, S., et al. [25] applied a linear model to estimate walking speed. Yan et al. [26] proposed an improved PDR method that uses the previous three steps to predict the current step length. The experimental results indicate that this method obtains a more accurate step estimation.

Scholars have made some achievements in acoustic-based localization research. However, reflections and diffraction from walls in indoor environments and environmental noise can degrade the accuracy of acoustic-based localization and can even produce outliers. PDR not only has a low computational complexity but can also output accurate and reliable location information over a short period of time without relying on any external infrastructure [27]. Nevertheless, cumulative errors in PDR grow over time, which can have an extremely detrimental effect on the localization results [28,29].

To address the problems mentioned above, we propose a positioning and navigation system. To effectively mitigate the cumulative error of PDR, a novel step-length model constrained by LASSO regression [30,31] is proposed. This improved step-length model uses more relevant information to predict the current step length than the state-of-the-art methods. The EKF is adopted to determine the target location by integrating acoustic-based localization with the improved PDR. The main contributions of this paper are summarized below:

- A novel weighted step-length model: To improve the accuracy of the step length, we propose a novel weighted step-length model with a LASSO regression constraint. First, the coarse current step length is predicted by combining the previous three steps, inspired by the Weinberg model. Then, LASSO regression is used to correct the step estimation by combining the acceleration peak-to-valley amplitude difference, the walking frequency, the variance of acceleration, the mean acceleration, the peak median, and the valley median. The experimental results demonstrate that the proposed step-length model performs better than the state-of-the-art methods.

- A fusion positioning and navigation framework: An EKF-based fusion positioning and navigation framework is presented. In this framework, the hybrid CHAN–Taylor method is used to estimate the location in acoustic-based positioning, and the improved PDR adopts the weighted LASSO-based step-length model. The improved PDR is used as the state model and the acoustic estimation is used as the measurement model. The experiments show that the proposed positioning and navigation method achieves better localization performance for different users, different devices, and different scenarios than existing methods, which indicates that the framework is highly robust.

2. Related Works

Fusion positioning technology has become a research hotspot in the field of indoor positioning. Song, X.Y., et al. [32] presented a method to validate the plausibility of PDR results using acoustic constraints between the acoustic source and the image source. Wang, M., et al. [33] proposed a method that combines Hamming distance-based acoustic estimation with PDR. Yan proposed a CHAN–IPDR–ILS method in reference [34], which combines the CHAN algorithm and the PDR algorithm. Al Mamun et al. [35] presented a lightweight fusion technique combining the PDR algorithm with the RSSI fingerprinting method, in which landmarks are adopted to decrease the cumulative error. The experiment showed that the median positioning error can reach 0.73 m. Poulose, A., et al. [36] proposed a fusion framework based on Wi-Fi and the PDR algorithm. The average localization accuracy of the combined position estimation algorithm was improved by 1.6 m compared with that of the separate algorithms. Lee, G.T., et al. [37] proposed a fusion algorithm based on the Kalman filter (KF) for UWB localization and UWB-assisted PDR (U-PDR). The experimental results showed better performance than UWB localization or the PDR algorithm alone. Wu, J., et al. [38] proposed a text map-based indoor localization method that integrated RFID and the PDR method in a narrow corridor.

The EKF is a recursive algorithm that can be used for nonlinear systems and has a wide range of applications in the fields of navigation, positioning, and information fusion. Tian, X., et al. [39] used a two-step EKF iterative process to perform a state estimation of all the anchors in indoor environments. Yang, C.Y., et al. [40] constructed a 5G/geomagnetic/visual inertial odometry (VIO) positioning system based on an error-state EKF. Liu, W., et al. [41] proposed an autonomous navigation method combining the EKF and a rapidly exploring random tree (RRT) for four-wheel-steering vehicles to improve the accuracy of autonomous vehicle navigation in indoor environments. Mendoza, L.R., et al. [42] proposed a wearable ultrawideband indoor positioning system based on a periodic EKF. Pak, J.M., et al. [43] proposed a switched extended Kalman filter bank (SEKFB) algorithm to overcome the problem of unstable noise covariance generated by isokinetic motion models for indoor localization.

Inspired by the existing positioning algorithms, we propose an indoor positioning method based on EKF fusion of improved PDR and acoustic-based positioning. Specifically, in acoustic-based localization, a hybrid CHAN–Taylor algorithm is utilized to obtain the position. In PDR estimation, we propose a weighted fusion step improvement model based on LASSO. The step-length estimate is obtained from the previous three steps and the Weinberg model. LASSO is then used to correct the predicted step length, which brings the predicted value as close as possible to the true value.

3. Methodology

In this section, we describe the EKF-based fusion localization architecture that integrates the acoustic-based and improved PDR position estimates. An overview of the proposed method is given in Section 3.1. The acoustic-based positioning method is described in Section 3.2. Step-count detection is presented in Section 3.3. The improved step model based on LASSO is proposed in Section 3.4. Section 3.5 describes the heading direction calculation, and Section 3.6 analyzes the fusion method based on the EKF.

3.1. Overview

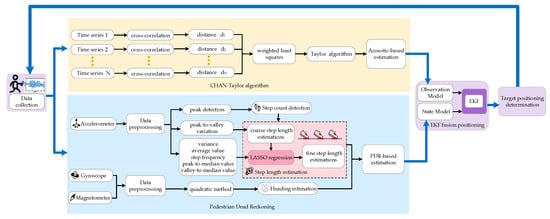

The methodological framework of the proposed positioning and navigation system is presented in Figure 1. The framework is divided into four parts: data collection, acoustic-based estimation, PDR-based estimation, and EKF-based fusion positioning.

Figure 1.

The methodological framework of the proposed positioning and navigation system.

In data collection, acceleration, gyroscope, magnetometer, and ultrasonic signals are sampled and saved in *.txt format on a smartphone. The collected data are intermittently uploaded to the server terminal. After the collected data are preprocessed, the acoustic-based estimation is solved by the hybrid CHAN–Taylor algorithm. Then, the peaks and valleys of the acceleration are detected and the step frequency is determined. The coarse step-length estimate is obtained from the previous three steps and the maximum and minimum values of the acceleration at the current step. We then combine the LASSO regularization constraint with the acceleration peak-to-valley amplitude difference, walking frequency, acceleration variance, mean acceleration, peak median, and valley median to achieve a fine step-length estimate. The heading direction is obtained by the quaternion method. In the target location estimation, outliers in the acoustic-based estimation are detected first. Then, the EKF is used to fuse the two estimates: the dead reckoning estimation is taken as the state vector, and the acoustic-based estimation is taken as the observation vector. Finally, the target location is obtained from the EKF output.

3.2. Acoustic-Based Estimation

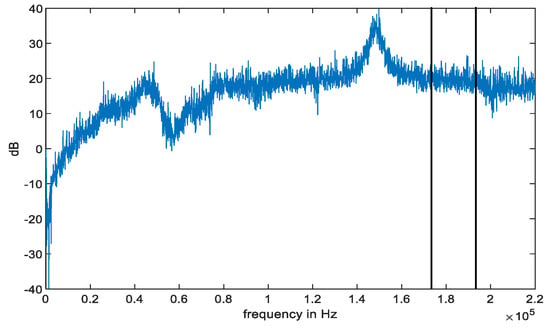

Linear frequency modulation signals increase the transmission bandwidth of the signal by modulating the carrier frequency and are pulse-compressed during reception. Additionally, linear frequency modulation signals have high resolution, can distinguish interference from targets at a distance, and can greatly simplify the signal processing system. A chirp is a typical nonstationary signal widely applied in sonar, radar, and other fields. In this paper, we use a chirp as the transmitted acoustic signal. To validate the characteristics of the acoustic signals, we collect the acoustic signal using a Vivo X30 (Guangdong, China) smartphone. Collected signals are preprocessed with a finite impulse response (FIR) bandpass filter, which removes most of the interference, such as inherent indoor noise and electronic component noise. In the final filtering stage, the adaptive minimum mean square error method is used to fuse the nonlinear approximation linearization, which further alleviates the impact of noise. Figure 2 shows the strength fluctuation of the acoustic signal after filtering. From the figure, the acoustic signal is stable at 8–14 kHz and 17.5–19.5 kHz. Considering the interference of speech signals on positioning, pseudo-ultrasound in the 17.5–19.5 kHz band is selected as the acoustic localization source because the human ear is not sensitive to it. The acoustic-based location estimation is solved using a cross-correlation function. Because the data come from the same sending and receiving device every time, device heterogeneity has little effect on acoustic-based localization performance.

Figure 2.

Spectrogram of the acoustic signal using a Vivo X30 smartphone.
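The role of the cross-correlation function can be illustrated with a minimal sketch. It is not the authors' implementation: the helper names `make_chirp` and `estimate_delay`, the normalized chirp frequencies, and the 5-sample delay are all hypothetical values chosen for the example. The sketch slides a known chirp template over a received sequence and returns the lag with the largest correlation, which is the basic operation behind time-delay estimation.

```python
import math

def make_chirp(n_samples, f_start, f_end):
    """Linear chirp; frequencies are in cycles per sample (assumed values)."""
    rate = (f_end - f_start) / n_samples
    return [math.sin(2.0 * math.pi * (f_start * n + 0.5 * rate * n * n))
            for n in range(n_samples)]

def estimate_delay(reference, received):
    """Return the lag (in samples) that maximizes the cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(received) - len(reference) + 1):
        # Correlation of the template with the window starting at `lag`.
        score = sum(r * received[lag + k] for k, r in enumerate(reference))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

chirp = make_chirp(64, 0.05, 0.25)
# Synthetic received signal: the chirp arrives 5 samples late.
received = [0.0] * 5 + chirp + [0.0] * 20
delay_samples = estimate_delay(chirp, received)
```

Dividing the lag by the sampling rate gives the delay in seconds. In a real recording the peak would be searched in the band-pass filtered signal, and noise and multipath can shift or split the peak.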

The CHAN algorithm is a non-iterative method with an analytic solution. Its advantages are high localization accuracy and low computation, but its accuracy is easily affected by complex indoor obstacles. The Taylor algorithm is a recursive algorithm that requires an initial position estimate. At each recursion, it solves for the local least squares solution of the measurement error and updates the estimate. The Taylor algorithm is robust and suitable for complex environments, but it depends heavily on the initial value. A hybrid of the two combines the low computation of the CHAN algorithm with the good robustness of the Taylor algorithm. Therefore, for acoustic-based estimation, we chose the hybrid CHAN–Taylor algorithm in this paper.
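The Taylor refinement stage can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `tdoa_taylor`, the anchor coordinates, and the starting point are hypothetical, and a rough initial guess stands in for the CHAN output. With three anchors in 2D, the linearized system is square, so the weighted least squares step reduces to solving a 2x2 system and the weighting matrix drops out.

```python
import math

def tdoa_taylor(anchors, range_diffs, initial, tol=1e-9, max_iter=50):
    """Refine a 2D position from range differences r_i - r_1 (i = 2, 3).

    anchors[0] is the reference anchor. Does not guard against the
    estimate landing exactly on an anchor (division by zero).
    """
    x, y = initial
    for _ in range(max_iter):
        d = [math.hypot(x - ax, y - ay) for ax, ay in anchors]
        rows, resid = [], []
        for i in (1, 2):
            # Gradient of (r_i - r_1) with respect to (x, y).
            gx = (x - anchors[i][0]) / d[i] - (x - anchors[0][0]) / d[0]
            gy = (y - anchors[i][1]) / d[i] - (y - anchors[0][1]) / d[0]
            rows.append((gx, gy))
            # Measured minus predicted range difference.
            resid.append(range_diffs[i - 1] - (d[i] - d[0]))
        # Solve the 2x2 linearized system with Cramer's rule.
        (a, b), (c, e) = rows
        det = a * e - b * c
        dx = (resid[0] * e - b * resid[1]) / det
        dy = (a * resid[1] - c * resid[0]) / det
        x, y = x + dx, y + dy
        if abs(dx) + abs(dy) < tol:  # stop once the update is negligible
            break
    return x, y

anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]  # hypothetical layout
target = (3.0, 4.0)
dist = [math.hypot(target[0] - ax, target[1] - ay) for ax, ay in anchors]
range_diffs = [dist[1] - dist[0], dist[2] - dist[0]]  # noise-free TDOA ranges
estimate = tdoa_taylor(anchors, range_diffs, initial=(5.0, 5.0))
```

With noisy measurements or more than three anchors, the 2x2 solve would be replaced by a weighted least squares step using the measurement covariance.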

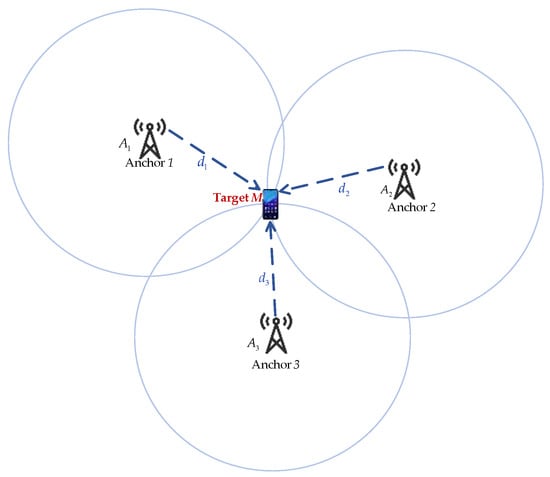

The spatial geometric distribution of the three anchors and the target location is shown in Figure 3. Assuming the target location is $M(x, y)$, the three anchors are $A_i(x_i, y_i)$, $i = 1, 2, 3$.

Figure 3.

Spatial geometry distribution with three anchors and the target M.

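The distance between the target and the anchor is

$$r_i = \sqrt{(x_i - x)^2 + (y_i - y)^2} \tag{1}$$

where $r_i$ denotes the distance from the i-th anchor $A_i(x_i, y_i)$ to the target $M(x, y)$.

Expanding Equation (1), we can obtain

$$r_i^2 = K_i - 2x_i x - 2y_i y + x^2 + y^2 \tag{2}$$

where $K_i = x_i^2 + y_i^2$.

Using anchor $A_1$ as the reference anchor, the range difference between the i-th anchor and anchor $A_1$ can be derived:

$$r_{i,1} = r_i - r_1 \tag{3}$$

where $r_1$ denotes the distance from the first anchor to the target $M$.

Then,

$$r_i^2 = (r_{i,1} + r_1)^2 \tag{4}$$

Letting $x_{i,1} = x_i - x_1$, $y_{i,1} = y_i - y_1$,

$$r_{i,1}^2 + 2 r_{i,1} r_1 = K_i - K_1 - 2 x_{i,1} x - 2 y_{i,1} y \tag{5}$$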

Suppose

Equation (5) can be expressed with matrix as follows:

Considering the measurement error, the error vector is depicted as

where is the value without Gaussian noise.

Its covariance matrix is

where , and is the covariance matrix of the measurement errors.

The weighted least squares estimate of can be derived:

After obtaining the first estimate, the weighted least squares method is applied again to calculate the second estimate. The error variance can be expressed as

with the constraint:

where are the estimation errors. , , .

Then, the estimation is

where

The location of the target is

Then, the estimate is used as the initial value for the Taylor iteration. Specifically, the function is assumed to represent the constraint relationship between the anchor and the target position. is expanded in a Taylor series at , ignoring terms of second order and higher to obtain the following equation:

By defining and , the following can be obtained:

According to Equation (3), can be represented as

where is the distance between the coordinate and the anchor .

Converting Equation (17) into matrix form is as follows:

where is the error vector and is the difference matrix between the real and measured values. is the estimation error, as follows:

The weighted least squares solution is computed as

In the next recursive operation, the iterative computation is performed after updating the coordinate values of the target estimate.

where is the updated estimate calculated at each iteration. and are also constantly updated. The above process is repeated until the error satisfies the stopping condition:

where η is the error threshold.

Finally, the localization of the target M is determined as

3.3. Step Count Detection

The collected data always include noise. If the original data are used directly, peak and valley detection suffers from inaccurate step counts, pseudo-peaks and pseudo-valleys, or missed detections. Therefore, the collected data must be denoised.

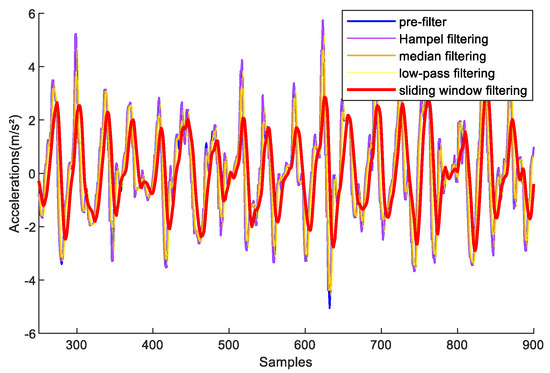

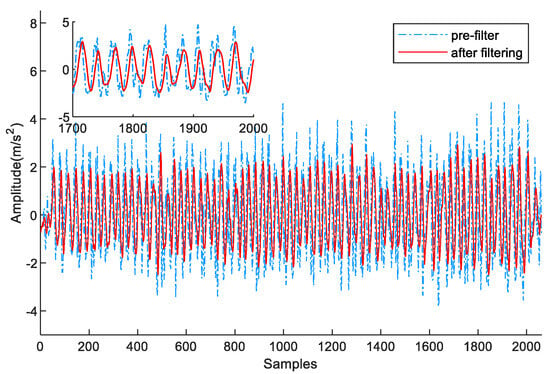

Sliding-window filtering, low-pass filtering, median filtering, and Hampel filtering are common denoising methods. To compare their performance, we recruited one volunteer to sample acceleration data along the experimental path at a stable speed. Figure 4 shows the acceleration results after filtering. The experiments demonstrate that sliding-window filtering yields smoother acceleration data than the other three methods and therefore has the best filtering performance.

Figure 4.

Comparison results among sliding-window filtering, low-pass filtering, median filtering, and Hampel filtering on acceleration processing.

Therefore, we adopt sliding-window filtering to preprocess the original data. The window width is set to 10 samples. In Figure 5, the original acceleration data are denoted by the blue dashed line, and the acceleration data after filtering are denoted by the red solid line. Compared with the original data, the filtered acceleration values fluctuate less, which is favorable for step detection.

Figure 5.

Acceleration data preprocessed by sliding-window filtering.
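As a minimal sketch of the preprocessing step, the code below assumes that sliding-window filtering means a centered moving average, which the text does not state explicitly. The function name `sliding_window_filter` and the edge handling (repeating the first and last samples) are choices made for the example. The default width of 10 samples follows the text.

```python
def sliding_window_filter(samples, width=10):
    """Centered moving average; edges are padded by repeating end values.

    Expects a non-empty sequence of acceleration samples.
    """
    left = width // 2
    right = width - 1 - left
    padded = [samples[0]] * left + list(samples) + [samples[-1]] * right
    # Running sum keeps the cost linear in the number of samples.
    window_sum = sum(padded[:width])
    out = [window_sum / width]
    for k in range(1, len(samples)):
        window_sum += padded[k + width - 1] - padded[k - 1]
        out.append(window_sum / width)
    return out

# Synthetic example: a constant 9.8 m/s^2 signal with alternating +/-1 noise.
noisy = [9.8 + (1.0 if k % 2 == 0 else -1.0) for k in range(40)]
smoothed = sliding_window_filter(noisy, width=10)
```

A wider window smooths more strongly but also flattens the acceleration peaks and valleys that the step detector relies on, so the width trades noise suppression against peak fidelity.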

The peaks and valleys of the acceleration values are used to determine the step count. This mainly includes the following steps:

- (1) Setting the acceleration threshold. Different pedestrians have different motion patterns. Depending on the motion pattern, the acceleration threshold is set differently. When the acceleration value is greater than the preset threshold, this is determined as a candidate peak or a candidate valley.

- (2) Setting the recognition sequence. Acceleration exhibits a distinct regularity with successive peak–valley pairs. When one peak is recognized in the acceleration data, the valley will be judged in the next interval of data.

- (3) Setting the time interval threshold. The current candidate peak or candidate valley is valid only if the time interval between two neighboring peaks or valleys exceeds the preset time interval threshold.
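The three rules above can be sketched as a small state machine. This is an illustrative reading rather than the authors' code: the function name `count_steps`, the use of separate peak and valley thresholds, the gravity-removed input, and all numeric values are assumptions made for the example.

```python
import math

def count_steps(acc, fs, peak_thr, valley_thr, min_interval):
    """Count steps as validated peak-valley pairs.

    acc: filtered acceleration with gravity removed (assumption)
    fs: sampling rate in Hz; min_interval: seconds between like extrema
    """
    steps = 0
    expect_peak = True  # rule (2): a peak must be followed by a valley
    last_peak_t = last_valley_t = -math.inf
    for k in range(1, len(acc) - 1):
        t = k / fs
        is_peak = acc[k - 1] < acc[k] >= acc[k + 1]
        is_valley = acc[k - 1] > acc[k] <= acc[k + 1]
        if expect_peak:
            # rule (1): amplitude threshold; rule (3): time interval threshold
            if is_peak and acc[k] > peak_thr and t - last_peak_t >= min_interval:
                last_peak_t = t
                expect_peak = False
        elif is_valley and acc[k] < valley_thr and t - last_valley_t >= min_interval:
            last_valley_t = t
            expect_peak = True
            steps += 1  # one complete peak-valley pair is one step
    return steps

# Synthetic walk: 2 steps per second for 5 s, sampled at 50 Hz.
fs = 50
acc = [math.sin(2.0 * math.pi * 2.0 * k / fs) for k in range(5 * fs)]
steps = count_steps(acc, fs, peak_thr=0.5, valley_thr=-0.5, min_interval=0.3)
```

Small ripples that never cross the amplitude thresholds, or that follow a valid extremum too closely in time, are ignored, which is how pseudo-peaks and pseudo-valleys are rejected in this sketch.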

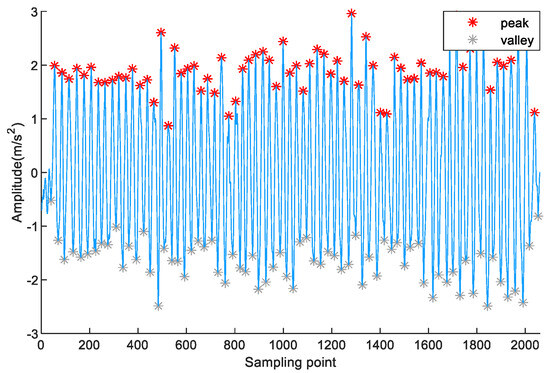

To validate the above step detection method, a volunteer holding a Vivo X30 phone collected acceleration data on a 42 m experimental path. Figure 6 shows the maximum and minimum pedestrian accelerations for each step on this path. The maximum value of each step is marked with a red star, and the minimum value with a gray star. The step counts are accurately detected because the above step detection method effectively rejects pseudo-peaks and pseudo-valleys.

Figure 6.

Peak and valley detection results on a 42 m experimental path.

3.4. Step Length Prediction

Step-length prediction plays an important role in PDR localization. Step-length models are either linear or nonlinear. A linear model only considers the relationship between step length and step frequency, which is not very accurate. A nonlinear model, which describes the correlation between the step length and motion parameters more accurately, is often used instead. The Scarlet model [44], Kim model [45], and Weinberg model [46] are typical nonlinear models. These three models are established on the basis of the relationship between the peak and valley of pedestrian acceleration and step length. However, pedestrian step length is related not only to the peak-to-valley amplitude difference in acceleration but also to multiple other potential characteristics. Therefore, better performance can be achieved when multiple characteristics are used to estimate the step length. However, too many characteristics lead to overfitting and increased model complexity.

Considering the continuity of adjacent steps and inspired by reference [26], the current step length is estimated by the weighted fusion of the previous three step lengths. In addition, to avoid overfitting, a regularization term constraining multiple characteristics is adopted to correct the step length. LASSO regression and ridge regression are commonly used regression methods with regularization terms. Ridge regression incorporates an L2 regularization term. LASSO regression incorporates an L1 regularization term and, compared with ridge regression, additionally performs variable selection [47]. LASSO can therefore not only prevent overfitting but also reduce the model complexity. For these reasons, LASSO regression is chosen to handle the feature variables related to step length in this paper.

To address the above problems, we propose a novel step-length model. The coarse predicted value of the current step length at time i is obtained from the weighted lengths of the previous three steps and the maximum and minimum acceleration of the current step, based on the Weinberg model.

The coarse predicted step length is described below:

with the LASSO constraint:

where , , and are the lengths of the previous three steps. , , and are the weight factors. K is an empirical constant. are the maximum and minimum of the pedestrian accelerations for step i. denotes the step number and N represents the number of features. is the six features of the acceleration values. denotes the regression coefficient, and is the penalty coefficient, which is chosen based on 10-fold cross-validation.

First, we obtain the coarse step length from Equation (28); is used as the dependent variable of the model. The peak-to-valley amplitude difference, walking frequency, acceleration variance, acceleration mean, peak median, and valley median are extracted from the collected acceleration data. and the six motion features are used as the independent variables of the model. Then, we find the optimal value from Equation (29).

Equation (29) minimizes the loss function. The first part represents the squared loss function, and the second part represents the L1 regularization term. in Equation (29) adjusts the size of the regression coefficient .

Expanding Equation (29), we can obtain the following:

where denotes the i-th sample value of the j-th feature variable.

To achieve better performance, the loss function in Equation (29) chooses the minimum value. Therefore, the first derivative of the regularization term in Equation (32) is expressed as follows:

Then, the first derivative of Equation (27) is obtained:

In the multidimensional derivative, the fixed values can be described as follows:

Assuming that

Equation (34) can be simplified as follows:

Then, is

Finally, all regression coefficients are calculated. The final estimates of the step length are obtained:

where denotes the matrix of constants corresponding to the regression coefficients.
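The coordinate-wise derivative leads to a soft-thresholding update for each regression coefficient, which can be sketched as below. This is a generic coordinate descent for LASSO, not the authors' code. It assumes the objective $\tfrac{1}{2}\sum_i (y_i - \sum_j x_{ij}\beta_j)^2 + \lambda \sum_j |\beta_j|$, whose scaling may differ from Equation (29), and the function names and the toy two-feature data are hypothetical.

```python
def soft_threshold(value, lam):
    """Shrink value toward zero by lam; values within [-lam, lam] become 0."""
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0

def lasso_coordinate_descent(X, y, lam, sweeps=200):
    """Minimize 0.5 * sum((y - X beta)^2) + lam * sum(|beta|).

    Assumes no feature column is identically zero.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(sweeps):
        for j in range(p):
            rho = 0.0
            for i in range(n):
                # Prediction from all features except j.
                partial = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - partial)
            z = sum(X[i][j] ** 2 for i in range(n))
            beta[j] = soft_threshold(rho, lam) / z
    return beta

# Toy data: the target depends only on the first feature (y = 2 * x1).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
y = [2.0, 0.0, 2.0, 4.0]
beta_ols = lasso_coordinate_descent(X, y, lam=0.0)
beta_lasso = lasso_coordinate_descent(X, y, lam=3.0)
```

With `lam=0.0` the update reduces to ordinary least squares. A positive penalty shrinks the useful coefficient and sets the irrelevant one exactly to zero, which is the variable-selection behavior that motivates LASSO here. In practice the penalty would be chosen by cross-validation, as the text describes.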

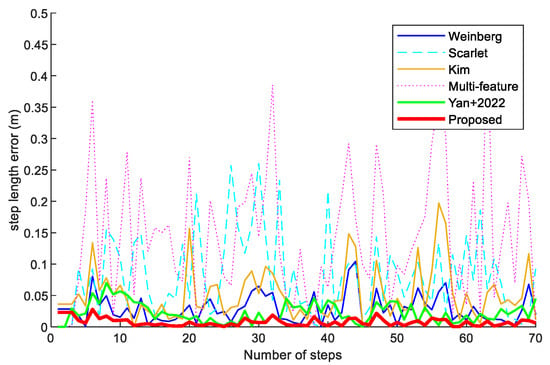

To validate the weighted fusion step improvement model based on LASSO, a volunteer holding a Vivo X30 smartphone collected acceleration data along a 42 m experimental path. Figure 7 shows the step error of the Weinberg, Scarlet, Kim, Multi-feature, Yan+ 2022 [26], and proposed step models. The step improvement model proposed in this paper has the smallest average step-length error among the compared models. Therefore, the proposed step improvement method is effective and yields a more accurate step length.

Figure 7.

The step length error of the Weinberg, Scarlet, Kim, Multi-feature, Yan+ 2022 [26], and proposed step models.

3.5. Heading Direction Calculation

Heading direction estimation is also an important factor in PDR and determines the direction of the entire track deflection [48]. The measured angular velocity of the gyroscope sensors , the angular velocity of the earth coordinate system relative to the inertial coordinate system , the angular velocity of the navigation coordinate system relative to the earth coordinate system and the angular velocity of the body coordinate system relative to the navigation coordinate system satisfy the following relation:

where is the transfer matrix between earth coordinate system and navigation coordinate system. is the angular velocity of earth coordinate system. is the angular velocity of navigation coordinate system relative to earth coordinate system.

The attitude angular velocity equation can be expressed in matrix form as

where , , and are the transfer matrix vectors of earth coordinate system to navigation coordinate system, is the transfer matrix from navigation coordinate system to body coordinate system.

From Equation (42), we can obtain the angular velocity , and then we will continue to find the quaternion elements through the differential equation below:

where , are real numbers, and , , are mutually orthogonal unit vectors. is called a normalized quaternion.

Expanding Equation (43) into matrix form gives

Once the vector () is determined, the attitude matrix can be depicted as follows:

To simplify Equation (45), can be expressed as:

The attitude directions are
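The quaternion route to the heading can be illustrated with a minimal sketch. It is not the authors' implementation and makes several assumptions: the quaternion is ordered as (q0, q1, q2, q3) with q0 the scalar part, the gyroscope rates are body rates in rad/s, the update is a first-order integration followed by renormalization, and the yaw angle follows the common Z-Y-X Euler convention. Earth rotation and navigation-frame rotation are neglected, and the sign and axis conventions of the paper may differ.

```python
import math

def integrate_gyro(q, omega, dt):
    """One first-order step of dq/dt = 0.5 * q (x) (0, omega), renormalized."""
    q0, q1, q2, q3 = q
    wx, wy, wz = omega
    dq0 = 0.5 * (-q1 * wx - q2 * wy - q3 * wz)
    dq1 = 0.5 * (q0 * wx + q2 * wz - q3 * wy)
    dq2 = 0.5 * (q0 * wy - q1 * wz + q3 * wx)
    dq3 = 0.5 * (q0 * wz + q1 * wy - q2 * wx)
    updated = (q0 + dq0 * dt, q1 + dq1 * dt, q2 + dq2 * dt, q3 + dq3 * dt)
    norm = math.sqrt(sum(c * c for c in updated))
    return tuple(c / norm for c in updated)  # keep the quaternion normalized

def yaw_from_quaternion(q):
    """Heading (yaw) in radians under the Z-Y-X Euler convention."""
    q0, q1, q2, q3 = q
    return math.atan2(2.0 * (q0 * q3 + q1 * q2),
                      1.0 - 2.0 * (q2 * q2 + q3 * q3))

# Synthetic turn: constant yaw rate of 90 degrees per second for 1 s.
q = (1.0, 0.0, 0.0, 0.0)
for _ in range(1000):
    q = integrate_gyro(q, (0.0, 0.0, math.pi / 2.0), dt=0.001)
yaw = yaw_from_quaternion(q)
```

The first-order update slightly under-rotates at each step, so a real system would use a small time step or a higher-order integrator and would correct gyroscope drift with other sensors.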

3.6. EKF-Based Fusion Positioning

In fusion positioning, the acoustic-based estimation is set as the initial location of the target. To avoid the outliers, we set a threshold to detect anomalies in the estimation. At time i − 1, the acoustic-based estimation is , and the estimation of the proposed dead reckoning method is .

Case 1: If the distance between the acoustic-based estimation and the localization is greater than the preset threshold , the acoustic-based estimation is discarded as an outlier. Then, the estimation at time i − 1 is used for localization, where .

Case 2: When the distance between the acoustic-based estimation and the localization is less than the preset threshold , is determined by EKF-based fusion positioning.

In our localization scheme, the PDR estimation is set as the state variable and the acoustic-based estimation is set as the observation variable. The state and observation vectors are expressed as follows:

where is the pedestrian step length and is the heading direction of the target. is the PDR estimation, and is the acoustic-based estimation.

In fusion localization, the observation equation and state equation of the EKF algorithm are described as follows:

where , is the pedestrian target position to be estimated, which is the state vector of the Kalman filter. is the measurement vector, representing the acoustic estimate. is the process noise. is the measurement noise, which satisfies a Gaussian distribution. and are the nonlinear state and observation functions, respectively.

State vectors , measurement vectors and noise signals , satisfy the following statistical properties:

where and are

where are the errors in PDR positioning. are the errors in acoustic positioning. are the number of steps and direction angle of the PDR, respectively.

To estimate accurate pedestrian target location information, the nonlinear function needs to be linearized. The local linearization and of nonlinear functions and are expressed as follows:

where

The linearization of Equation (50) is described as follows:

Equation (60) can then be used to achieve fused localization using Kalman filtering. Thus, the fusion localization objective in this paper becomes the design of a suitable optimized filter for the system.
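One fusion step, including the outlier gate on the acoustic estimate, can be sketched as follows. This is a simplified illustration, not the authors' filter. It assumes a position-only state, a direct position observation (so both Jacobians reduce to the identity and the update is a linear Kalman update), a heading measured clockwise from the y axis, and a gate applied to the distance between the acoustic estimate and the PDR prediction. The name `fuse_step`, the noise matrices, and the gate value are hypothetical.

```python
import math

def mat_add(A, B):
    return [[A[i][j] + B[i][j] for j in range(2)] for i in range(2)]

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def mat_inv(A):
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [[A[1][1] / det, -A[0][1] / det],
            [-A[1][0] / det, A[0][0] / det]]

def fuse_step(pos, P, step_len, heading, acoustic, Q, R, gate):
    """Predict with PDR, then update with the acoustic fix if it passes the gate."""
    # Prediction: advance one step along the heading (assumed convention).
    pred = [pos[0] + step_len * math.sin(heading),
            pos[1] + step_len * math.cos(heading)]
    P_pred = mat_add(P, Q)
    # Gate: discard the acoustic estimate when it is too far from the prediction.
    if acoustic is None or math.dist(pred, acoustic) > gate:
        return pred, P_pred
    # Update: gain K = P_pred (P_pred + R)^-1 for an identity observation model.
    K = mat_mul(P_pred, mat_inv(mat_add(P_pred, R)))
    innov = [acoustic[0] - pred[0], acoustic[1] - pred[1]]
    new_pos = [pred[i] + K[i][0] * innov[0] + K[i][1] * innov[1]
               for i in range(2)]
    I_minus_K = [[(1.0 if i == j else 0.0) - K[i][j] for j in range(2)]
                 for i in range(2)]
    return new_pos, mat_mul(I_minus_K, P_pred)

I2 = [[1.0, 0.0], [0.0, 1.0]]
Z2 = [[0.0, 0.0], [0.0, 0.0]]
fused, P_fused = fuse_step([0.0, 0.0], I2, 1.0, 0.0, [1.0, 1.0], Z2, I2, gate=3.0)
gated, P_gated = fuse_step([0.0, 0.0], I2, 1.0, 0.0, [10.0, 10.0], Z2, I2, gate=3.0)
```

In the first call the prediction and the acoustic fix are weighted equally, so the fused position lies halfway between them. In the second call the acoustic fix is rejected as an outlier and the PDR prediction is returned unchanged.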

The Kalman recursive filter is designed in the following form:

where is the filtering gain at moment . is the state estimate at moment i with initial value . is the one-step state vector prediction at moment i.

In fusion localization, calculating the gain of the Kalman filter often requires calculating the inverse of a high-dimensional matrix, which increases the computational complexity. Therefore, it is necessary to consider suboptimal filters. To facilitate the analytical derivation of the suboptimization problem, the following two theorems are introduced.

Theorem 1. For matrices A and B of appropriate dimensions, the matrix trace satisfies:

Theorem 2.

The filter in Equation (61) is unbiased; that is, the expected estimation error is zero at every moment i.

Proof. Combining Equations (60) and (61), the estimate of the state vector at time i is

Then the expectation of the state vector is expressed as

In the fusion localization process, the mean at time i = 0 is used as the initial estimated mean, . According to Equation (51),

When , . Thus, the filter (53) is proved to be unbiased. □

For the fusion localization in this paper, we need to solve the recursive filtering suboptimization problem.

According to Equation (61), the estimation error is

The mean square error of prediction is

The measurement noise is uncorrelated with the one-step prediction error , resulting in

Equation (66) can be expressed as

Thus, the suboptimal problem for Equation (61) becomes minimizing the mean-square error in Equation (69), which is equivalent to differentiating the matrix trace of Equation (69) with respect to the filter gain.

According to Theorem 1, the derivative of the matrix trace of Equation (69) is

Setting this derivative to zero to obtain min (), we obtain

The filter gain is

Lemma 1.

is the position of the target to be estimated, which is the state vector of the extended Kalman filter. is the one-step predicted value of the target, and is the process noise obeying a Gaussian distribution. is an approximate linear state function. The mean square error of the one-step prediction is a linear function of the mean square error at the previous moment.

Proof. According to Equation (60), the mean square error of the one-step prediction estimate is

According to Equation (65), we obtain

Compute the second and third terms of Equation (73), respectively.

Based on Equations (52) and (54) of the previous fusion localization model, the following can be obtained:

The one-step prediction mean square error is

Thus, the expression for the one-step prediction mean square error is proved. □

Substituting Equation (79) into (72), the filter gain at moment i can be derived based on the minimum mean square error. The minimum mean square error under suboptimal filtering is obtained by substituting the obtained from the projection into Equation (69). Thus, the suboptimal estimation problem of fusion localization is solved.

The filter gain design in Equation (72) does not require inverting a very high-dimensional matrix. A fusion localization scheme is established based on Theorems 1 and 2 and Lemma 1. In this paper, the focus is on the transient characteristics, where the filtered mean-square error is obtained at each sampling instant . The appropriate gain is designed to make the fusion localization sub-optimal.
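For orientation, the gain derivation in this section follows the pattern of the textbook minimum-trace argument. The block below is a sketch of that standard argument, not a reproduction of the numbered equations of this paper: the linearized matrices $F_i$ and $H_i$, the noise covariances $Q_i$ and $R_i$, and the covariance symbols $P_{i|i-1}$ and $P_i$ are assumed notation, and the gain shown is the standard Kalman gain rather than the specific suboptimal gain of Equation (72).

```latex
% Assumed linearized model: x_i = F_i x_{i-1} + w_i,  z_i = H_i x_i + v_i,
% with Cov(w_i) = Q_i and Cov(v_i) = R_i.
\begin{align}
P_{i|i-1} &= F_i P_{i-1} F_i^{T} + Q_i, \\
\hat{x}_i &= \hat{x}_{i|i-1} + K_i \left( z_i - H_i \hat{x}_{i|i-1} \right), \\
P_i &= (I - K_i H_i) P_{i|i-1} (I - K_i H_i)^{T} + K_i R_i K_i^{T}.
\end{align}
% Standard trace derivative identities (B symmetric in the second one):
\begin{equation}
\frac{\partial\, \mathrm{tr}(AB)}{\partial A} = B^{T}, \qquad
\frac{\partial\, \mathrm{tr}(A B A^{T})}{\partial A} = 2AB .
\end{equation}
% Setting the derivative of tr(P_i) with respect to K_i to zero:
\begin{align}
\frac{\partial\, \mathrm{tr}(P_i)}{\partial K_i}
  &= -2 (I - K_i H_i) P_{i|i-1} H_i^{T} + 2 K_i R_i = 0, \\
K_i &= P_{i|i-1} H_i^{T} \left( H_i P_{i|i-1} H_i^{T} + R_i \right)^{-1}.
\end{align}
```

The first line has the same shape as the statement of Lemma 1: the one-step prediction mean square error is linear in the mean square error of the previous moment. If the acoustic observation is a planar position, which is an assumption here, the matrix inverted in the last line is only 2 × 2.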

4. Results

This section is organized as follows. The experimental setup is described in Section 4.1. The accuracy of the LASSO-based weighted fusion step-length model is analyzed in Section 4.2. Section 4.3 reports the CDF positioning performance of the EKF-based fusion of PDR and acoustic estimation, and Section 4.4 discusses its mean and RMS error performance.

4.1. Experimental Setup

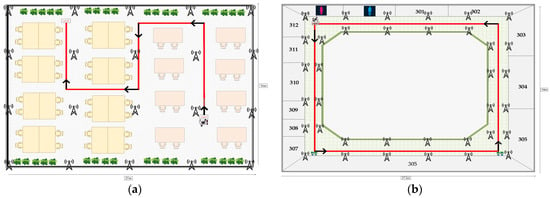

In this paper, we conducted experiments in two indoor environments. Scene 1, with dimensions of 27 × 16 × 3 m³, is a reading room similar to a seminar room. It contains many tables, some air conditioners, and potted plants. Two sides of the room are glass windows and the other sides are walls, which may affect signal reflection and absorption. Scene 2, with dimensions of 34 × 17.3 × 3 m³, is a large closed corridor that forms an indoor loop. This scene is more open than the first. The anchor distribution is shown in Figure 8. Twenty-five beacons are deployed in scene 1 and thirty-six in scene 2. The red solid line denotes the pedestrian trajectory, and the black solid arrows indicate the direction of movement. In Figure 8a, the experimental path runs along the desks in the reading room, and the start and end points differ. In Figure 8b, the experimental path is a closed rectangle with the same start and end point.

Figure 8.

Floor plan of the experimental site: (a) scene 1, (b) scene 2.

In this experiment, we invited a female volunteer (#1), 160 cm in height, and a male volunteer (#2), 181 cm in height, to collect acoustic signals and IMU data. Holding Vivo X30 and OPPO K5 smartphones, the two volunteers each walked along the test path several times at about 0.6 m per step.

4.2. Improved Step Length Performance

To assess the performance of the LASSO-based weighted fusion step-length estimation model, we compared it with the Scarlet model, Kim model, Weinberg model, multi-feature model, and Yan+ 2022 [26] model.
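For readers unfamiliar with the classical baselines, the sketch below gives the forms in which the Weinberg, Kim, and Scarlet models are commonly written, each as a function of the acceleration samples within one step. The gain `k` and the function names are illustrative assumptions; the calibration used in the experiments is not reproduced here.

```python
def weinberg_step_length(acc, k=0.5):
    """Weinberg form: k times the fourth root of (a_max - a_min)."""
    return k * (max(acc) - min(acc)) ** 0.25


def kim_step_length(acc, k=0.5):
    """Kim form: k times the cube root of the mean absolute acceleration."""
    mean_abs = sum(abs(a) for a in acc) / len(acc)
    return k * mean_abs ** (1.0 / 3.0)


def scarlet_step_length(acc, k=0.5):
    """Scarlet form: k * (mean|a| - a_min) / (a_max - a_min)."""
    a_max, a_min = max(acc), min(acc)
    if a_max == a_min:
        return 0.0  # flat signal: no usable peak-to-valley range
    mean_abs = sum(abs(a) for a in acc) / len(acc)
    return k * (mean_abs - a_min) / (a_max - a_min)
```

Each of these models relies on one or two statistics of a single step, which is why a multi-feature model has more information to work with.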

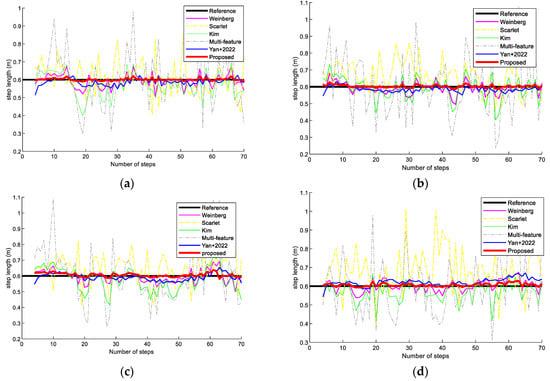

Figure 9 shows the step-length results in scene 1 for the two volunteers using a Vivo X30 and an OPPO K5 smartphone (Guangdong, China). For both volunteers and both devices, the proposed step-length estimation model is more accurate and closer to the real step length than the state-of-the-art step-length models. This is because the proposed model considers not only the previous three steps but also the acceleration peak-to-valley amplitude difference, walk frequency, variance of acceleration, mean acceleration, peak median, and valley median. The method therefore has more features available to predict the step length and can effectively mitigate the errors in the approximate symmetry.

Figure 9.

Step-length estimation comparison on different methods at scene 1. (a) Volunteer #1 using an OPPO K5 smartphone. (b) Volunteer #1 using a Vivo X30 smartphone. (c) Volunteer #2 using an OPPO K5 smartphone. (d) Volunteer #2 using a Vivo X30 smartphone.
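The six motion features named in the discussion of Figure 9 can be computed from a window of acceleration samples. The following is a minimal sketch; the peak detector (strict local extrema), the rule that one peak counts as one step, and the function names are simplifying assumptions rather than the paper's processing chain.

```python
from statistics import mean, median, pvariance


def local_extrema(acc):
    """Return (peaks, valleys): sample values at strict local maxima and minima."""
    peaks, valleys = [], []
    for prev, cur, nxt in zip(acc, acc[1:], acc[2:]):
        if cur > prev and cur > nxt:
            peaks.append(cur)
        elif cur < prev and cur < nxt:
            valleys.append(cur)
    return peaks, valleys


def step_features(acc, fs):
    """Compute six motion features over a window of acceleration samples.

    acc: acceleration magnitudes (m/s^2); fs: sampling rate (Hz).
    Each detected peak is counted as one step (assumption).
    """
    peaks, valleys = local_extrema(acc)
    if not peaks or not valleys:
        raise ValueError("window must contain at least one peak and one valley")
    duration = len(acc) / fs  # window length in seconds
    return {
        "peak_valley_diff": max(acc) - min(acc),
        "walk_frequency": len(peaks) / duration,
        "variance": pvariance(acc),
        "mean": mean(acc),
        "peak_median": median(peaks),
        "valley_median": median(valleys),
    }
```

In practice the raw signal would usually be low-pass filtered first, since strict local extrema are sensitive to sensor noise.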

Figure 10 presents the step-length results in scene 2 for the two volunteers holding the Vivo X30 and OPPO K5 smartphones. The proposed step-length model again performs better than the state-of-the-art step-length models. This is because the proposed model is more robust and is less affected by differences between pedestrians and devices.

Figure 10.

Step-length comparison on different methods in scene 2. (a) Volunteer #1 using an OPPO K5 smartphone. (b) Volunteer #1 using a Vivo X30 smartphone. (c) Volunteer #2 using an OPPO K5 smartphone. (d) Volunteer #2 using a Vivo X30 smartphone.

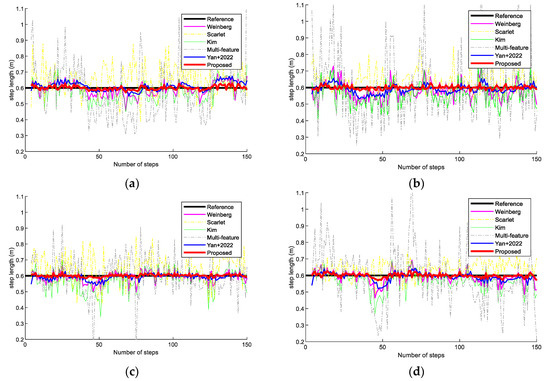

In addition, we compared the step errors of the Weinberg, Scarlet, Kim, Multi-feature, Yan+ 2022 [26], and proposed step models in scene 1. In Figure 11, it can be observed that the step errors of the step-length model proposed in this paper are smaller than those of the other models. The reason is that the proposed step-length estimation model combines various influencing features to estimate the step length in a more comprehensive way.

Figure 11.

Step-length errors of the Weinberg, Scarlet, Kim, Multi-feature, Yan+ 2022 [26], and proposed step models in scene 1. (a) Volunteer #1 using an OPPO K5 smartphone. (b) Volunteer #1 using a Vivo X30 smartphone. (c) Volunteer #2 using an OPPO K5 smartphone. (d) Volunteer #2 using a Vivo X30 smartphone.

Figure 12 illustrates the step-length errors of the Weinberg, Scarlet, Kim, Multi-feature, Yan+ 2022 [26], and proposed step models in scene 2. On longer paths, the proposed step-length model has a smaller average step error and can therefore achieve higher target localization accuracy. This is because the LASSO-constrained model estimation can draw on more features for a fine estimation.

Figure 12.

Step-length errors of the Weinberg, Scarlet, Kim, Multi-feature, Yan+ 2022 [26], and proposed step models in scene 2. (a) Volunteer #1 using an OPPO K5 smartphone. (b) Volunteer #1 using a Vivo X30 smartphone. (c) Volunteer #2 using an OPPO K5 smartphone. (d) Volunteer #2 using a Vivo X30 smartphone.
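To make the role of LASSO concrete, the sketch below fits a plain LASSO regression by coordinate descent, minimizing (1/2n)·||y − Xw||² + λ·||w||₁. It is a generic illustration under stated assumptions: no intercept, no feature scaling, and none of the spatial constraints or weighting of the proposed model; `lasso_fit` and its parameters are hypothetical names.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam; values within [-lam, lam] become 0."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_fit(X, y, lam, n_iter=200):
    """Coordinate-descent LASSO for rows X (lists of features) and targets y."""
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            if z == 0.0:
                continue  # an all-zero feature carries no information
            rho = 0.0
            for i in range(n):
                # Prediction using every feature except feature j.
                partial = sum(X[i][k] * w[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - partial)
            w[j] = soft_threshold(rho / n, lam) / z
    return w
```

The L1 penalty drives the weights of weakly informative features exactly to zero, so the fitted model keeps only the features that help predict the step length.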

Table 1 and Table 2 show the average step-length results of the Scarlet model, Kim model, Weinberg model, Multi-feature model, Yan+ 2022 [26] model, and proposed model for Volunteer #1 holding the OPPO K5 and Vivo X30 smartphones in the two scenes. The proposed model achieves better step-length estimation performance across the different scenes and devices.

Table 1.

Estimation of the average step length in scene 1 by Volunteer #1 using the OPPO K5 and Vivo X30 smartphone.

Table 2.

Estimation of the average step length in scene 2 by Volunteer #1 using the OPPO K5 and Vivo X30 smartphone.

Table 3 and Table 4 show the average step-length estimation results of the Scarlet model, Kim model, Weinberg model, Multi-feature model, Yan+ 2022 [26] model, and the proposed model for Volunteer #2 holding the OPPO K5 and Vivo X30 smartphones in the two scenes. The results demonstrate that the proposed model is robust to differences in pedestrian height and generalizes better.

Table 3.

Estimation of the average step length in scene 1 by Volunteer #2 using the OPPO K5 and Vivo X30 smartphone.

Table 4.

Estimation of the average step length in scene 2 by Volunteer #2 using the OPPO K5 and Vivo X30 smartphone.

4.3. CDF Positioning Performance

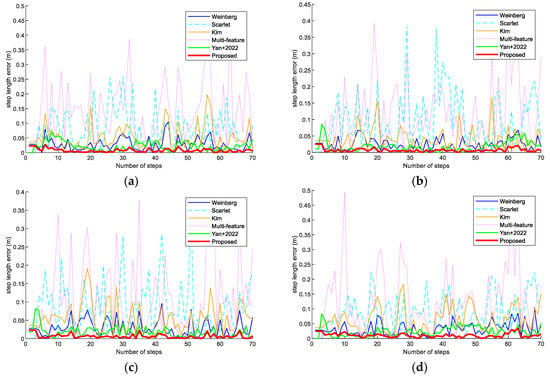

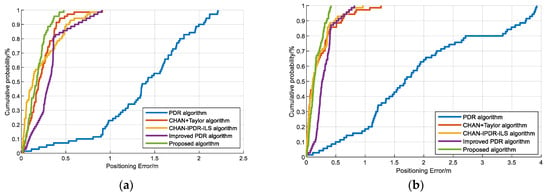

To verify the positioning performance, we carried out multiple experiments on the PDR algorithm, CHAN–Taylor hybrid algorithm, CHAN–IPDR–ILS [34], improved PDR algorithm, and proposed algorithm using different phones in different scenarios. Figure 13 presents the localization performance of the above-mentioned algorithms for two volunteers using the Vivo X30 and OPPO K5 mobile phones in scene 1. The experiments show that the proposed algorithm has smaller positioning errors than the state-of-the-art algorithms. This method not only uses the weighted fusion step estimation model based on LASSO to improve the step accuracy of PDR but also combines it with acoustic estimation to reduce the cumulative error of PDR.

Figure 13.

CDFs of the positioning errors on the different algorithms at the first scene: (a) Volunteer #1 using an OPPO K5 smartphone. (b) Volunteer #1 using a Vivo X30 smartphone. (c) Volunteer #2 using an OPPO K5 smartphone. (d) Volunteer #2 using a Vivo X30 smartphone.
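The CDF curves used in this comparison are empirical distributions of the per-point positioning errors. A minimal sketch of how such a curve and a percentile error can be computed is given below; the function names are illustrative assumptions.

```python
def empirical_cdf(errors):
    """Return sorted errors and the cumulative probabilities P(error <= x)."""
    if not errors:
        raise ValueError("errors must not be empty")
    xs = sorted(errors)
    n = len(xs)
    return xs, [(i + 1) / n for i in range(n)]


def error_at_percentile(errors, q):
    """Smallest error x such that at least a fraction q of samples are <= x."""
    xs, ps = empirical_cdf(errors)
    for x, p in zip(xs, ps):
        if p >= q:
            return x
    return xs[-1]
```

A curve that rises faster, reaching a given probability at a smaller error, indicates better positioning performance.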

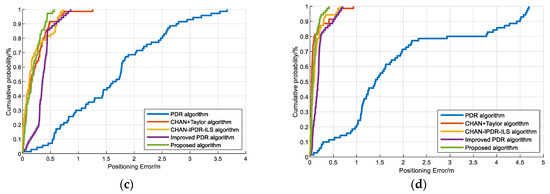

Figure 14 presents the positioning performance of the PDR algorithm, CHAN–Taylor hybrid algorithm, CHAN–IPDR–ILS, improved PDR algorithm, and our algorithm for the two volunteers using Vivo X30 and OPPO K5 smartphones in the second scene. The proposed algorithm has a smaller localization error over long movement times in similar scenes. The experiments demonstrate that the improved PDR algorithm in this paper significantly improves positioning performance in similar scenarios. The EKF fusion of the proposed positioning algorithm has the best positioning performance among these algorithms and reconciles high positioning accuracy with low cost. The main reason is that this method can extract accurate features for step-length prediction in the dead reckoning. In addition, outliers are handled during the fusion positioning process, and the EKF achieves good nonlinear filtering.

Figure 14.

CDFs of the positioning errors on the different algorithms at the second scene: (a) Volunteer #1 using an OPPO K5 smartphone. (b) Volunteer #1 using a Vivo X30 smartphone. (c) Volunteer #2 using an OPPO K5 smartphone. (d) Volunteer #2 using a Vivo X30 smartphone.

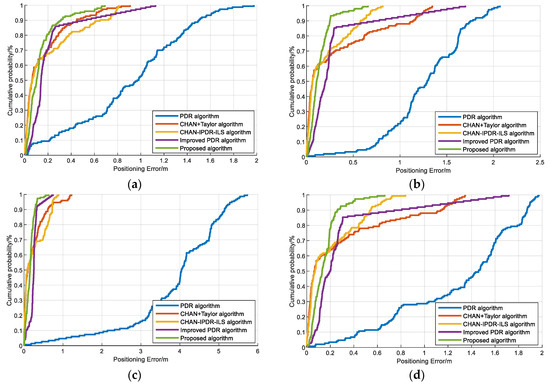

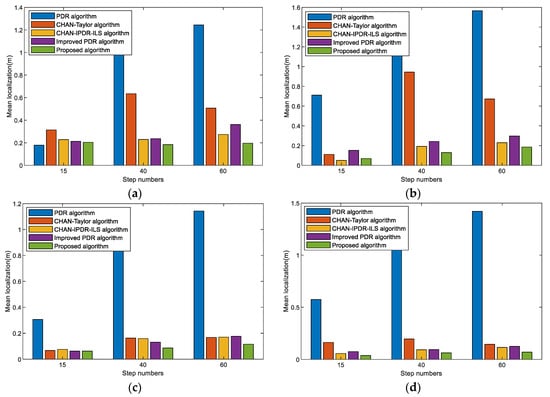

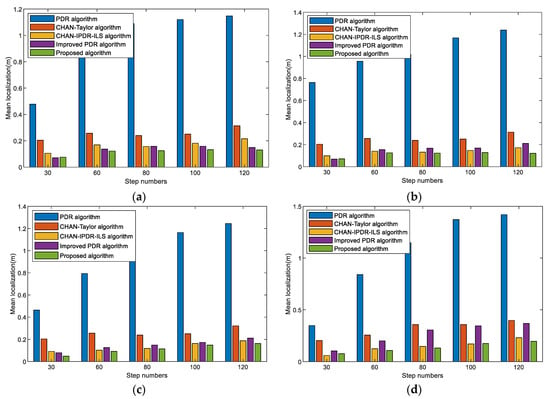

The mean localization errors of different step numbers for the PDR algorithm, CHAN–Taylor algorithm, CHAN–IPDR–ILS algorithm, improved PDR algorithm, and our algorithm in the first scene are shown in Figure 15. The proposed algorithm yields the smallest positioning errors for paths of different lengths. This is because the method attenuates both the cumulative error of the PDR algorithm over long movement times and the occasional errors of the acoustic-based estimation.

Figure 15.

Mean localization errors of different step numbers on different algorithms in the first scene. (a) Volunteer #1 using an OPPO K5 smartphone. (b) Volunteer #1 using a Vivo X30 smartphone. (c) Volunteer #2 using an OPPO K5 smartphone. (d) Volunteer #2 using a Vivo X30 smartphone.

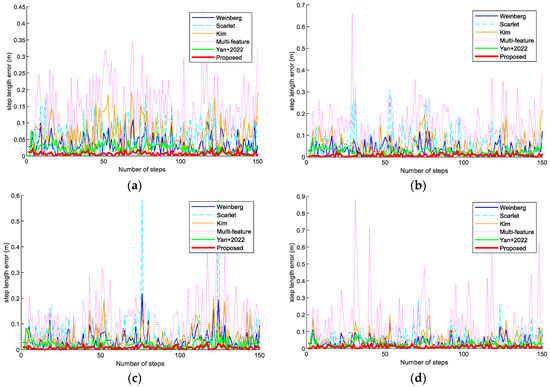

Figure 16 shows the mean localization errors for paths of different lengths for the different algorithms, smartphones, and pedestrians in the second scene. The results reveal that the positioning errors of the proposed algorithm increase slightly as the number of steps increases. However, the overall positioning performance remains stable, and the accumulated errors are effectively reduced. The improved PDR also accumulates less error. The proposed system exhibits good positioning performance on paths of different lengths, as well as good robustness and universality. This is because the effects of device heterogeneity and pedestrian step differences on step-length prediction are effectively reduced, and pedestrian motion features are accurately extracted. In addition, the impact of the environment on acoustic signal localization is addressed.

Figure 16.

Mean localization errors of different step numbers on the different algorithms at the second scene. (a) Volunteer #1 using an OPPO K5 smartphone. (b) Volunteer #1 using a Vivo X30 smartphone. (c) Volunteer #2 using an OPPO K5 smartphone. (d) Volunteer #2 using a Vivo X30 smartphone.

4.4. Mean and RMS Error Performance

Table 5 and Table 6 present the mean and RMS errors of the different algorithms in scene 1. The improved PDR algorithm in this paper is robust across different pedestrians. Because of device heterogeneity, the errors of the proposed algorithm differ slightly between devices, by less than 10 cm. In this open symmetric scene, the proposed positioning system performs better than the other algorithms. This is because the improved PDR algorithm effectively addresses the accumulated errors, and EKF fusion reduces the nonlinearity effect.

Table 5.

Mean and RMS error comparison among different algorithms for Volunteer #1 holding the OPPO K5 and Vivo X30 in scene 1 (m).

Table 6.

Mean and RMS error comparison among different algorithms for Volunteer #2 holding the OPPO K5 and Vivo X30 in scene 1 (m).
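The two summary statistics reported in these tables can be computed from paired estimated and ground-truth positions as sketched below. The planar Euclidean error and the function names are assumptions made for illustration.

```python
import math


def position_errors(estimates, truths):
    """Euclidean distance between each estimated and true (x, y) position."""
    return [math.hypot(ex - tx, ey - ty)
            for (ex, ey), (tx, ty) in zip(estimates, truths)]


def mean_error(errors):
    """Arithmetic mean of the positioning errors."""
    return sum(errors) / len(errors)


def rms_error(errors):
    """Root mean square of the positioning errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))
```

The RMS error is never smaller than the mean error and weights large deviations more heavily, so a large gap between the two points to occasional outliers.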

Table 7 and Table 8 give the mean and RMS errors of the different algorithms in scene 2. The proposed algorithm again performs better than the others. These results indicate that the proposed EKF-based fusion of PDR and acoustic estimation achieves better localization accuracy and more robust localization performance under the different experimental conditions tested. Its main strength is the LASSO-based step-length estimation model, which enables more accurate localization.

Table 7.

Mean and RMS error comparison among different algorithms for Volunteer #1 holding the OPPO K5 and Vivo X30 in scene 2 (m).

Table 8.

Mean and RMS error comparison among different algorithms for Volunteer #2 holding the OPPO K5 and Vivo X30 in scene 2 (m).

5. Conclusions

In this paper, we present a localization method that uses the EKF to fuse acoustic estimation and improved PDR. The acoustic estimation is implemented using a hybrid CHAN–Taylor algorithm. For the dead reckoning, we propose a novel weighted step-length model with a LASSO constraint. In this model, the peak and valley of the current step and the previous three steps are used to obtain a coarse step estimate; then, the acceleration peak-to-valley amplitude difference, walk frequency, variance of acceleration, mean acceleration, peak median, and valley median are extracted from the collected data. We then combine the extracted motion features and LASSO regularization spatial constraints to obtain an accurate step length. The model uses LASSO regression to combine multiple features to predict the step length and improve the step estimation accuracy of PDR. Finally, the improved PDR is used as the state model and the acoustic estimation is used as the observation model, and the target location is determined by EKF fusion.

To demonstrate the localization accuracy of the proposed method, we conducted extensive experiments on different experimental paths in two scenes. Scene 1 is a reading room with an area of approximately 432 m², and scene 2 is a corridor with an area of approximately 584.8 m². Two volunteers of different heights holding Vivo X30 and OPPO K5 smartphones were recruited to collect the data. The experimental results demonstrate that the proposed step-length method is more accurate than the Weinberg, Scarlet, Kim, Multi-feature, and Yan+ 2022 [26] models, which validates that our method can extract more accurate information to achieve high performance. We then fuse the acoustic positioning with dead reckoning to obtain high positioning performance at low cost. Experiments with different pedestrians and devices were carried out in the two scenes. The results show that the proposed positioning system achieves more accurate localization for different users and different devices. The localization method can effectively mitigate the cumulative error of PDR and improve the accuracy and stability of indoor positioning. Although we conducted the experiments in different scenes, neither scene contained many obstacles. Therefore, an underground parking lot is an interesting test scenario for future work.

Author Contributions

Conceptualization, S.Y., X.L. and R.W.; methodology, S.Y., X.X. and R.W.; software, X.L., Y.J. and R.W.; validation, S.Y., X.X. and J.X.; formal analysis, X.L., Y.J. and R.W.; resources, J.X. and Y.J.; data curation, S.Y. and X.X.; writing—original draft preparation, S.Y. and X.X.; writing—review and editing, S.Y., X.X. and J.X.; funding acquisition, X.L., J.X. and Y.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by Guangxi Science and Technology Project: Grant AB21196041, Grant AB22035074, Grant AD22080061, Grant AB23026120, and Grant AA20302022; National Natural Science Foundation of China: Grant 62061010, Grant 62161007, Grant U23A20280, Grant 61936002, Grant 62033001, and Grant 6202780103; National Key Research and Development Program (2018AA100305); Guangxi Bagui Scholar Project; Guilin Science and Technology Project: Grant 20210222-1; Guangxi Key Laboratory of Precision Navigation Technology and Application; Innovation Project of Guang Xi Graduate Education: YCSW2022291; Innovation Project of GUET Graduate Education: 2023YCXS024.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All test data mentioned in this paper will be made available on request to the corresponding author’s email with appropriate justification.

Conflicts of Interest

Author Y.J. is an expert at GUET-Nanning E-Tech Research Institute Co., Ltd. The remaining authors declare that the research was conducted without any commercial or financial relationships that could cause a conflict of interest.

References

- Ge, H.B.; Li, B.F.; Jia, S.; Nie, L.W.; Wu, T.H.; Yang, Z.; Shang, J.Z.; Zheng, Y.N.; Ge, M.R. LEO Enhanced Global Navigation Satellite System (LeGNSS): Progress, opportunities, and challenges. Geo-Spat. Inf. Sci. 2022, 25, 1–13.

- Morales-Ferre, R.; Richter, P.; Falletti, E.; de la Fuente, A.; Lohan, E.S. A Survey on Coping With Intentional Interference in Satellite Navigation for Manned and Unmanned Aircraft. IEEE Commun. Surv. Tutor. 2020, 22, 249–291.

- Shu, Y.M.; Xu, P.L.; Niu, X.J.; Chen, Q.J.; Qiao, L.L.; Liu, J.N. High-Rate Attitude Determination of Moving Vehicles With GNSS: GPS, BDS, GLONASS, and Galileo. IEEE Trans. Instrum. Meas. 2022, 71.

- Bi, J.X.; Zhao, M.Q.; Yao, G.B.; Cao, H.J.; Feng, Y.G.; Jiang, H.; Chai, D.S. PSOSVRPos: WiFi indoor positioning using SVR optimized by PSO. Expert Syst. Appl. 2023, 222, 119778.

- Mandia, S.; Kumar, A.; Verma, K.; Deegwal, J.K. Vision-Based Assistive Systems for Visually Impaired People: A Review. In Proceedings of the 5th International Conference on Optical and Wireless Technologies (OWT), Electr Network, Jaipur, India, 9–10 October 2021; pp. 163–172.

- Zhuang, Y.; Zhang, C.Y.; Huai, J.Z.; Li, Y.; Chen, L.; Chen, R.Z. Bluetooth Localization Technology: Principles, Applications, and Future Trends. IEEE Internet Things J. 2022, 9, 23506–23524.

- Jia, M.; Khattak, S.B.; Guo, Q.; Gu, X.M.; Lin, Y. Access Point Optimization for Reliable Indoor Localization Systems. IEEE Trans. Reliab. 2020, 69, 1424–1436.

- Lopes, S.I.; Vieira, J.M.N.; Reis, J.; Albuquerque, D.; Carvalho, N.B. Accurate smartphone indoor positioning using a WSN infrastructure and non-invasive audio for TDoA estimation. Pervasive Mob. Comput. 2015, 20, 29–46.

- Chen, X.; Chen, Y.H.; Cao, S.; Zhang, L.; Zhang, X.; Chen, X. Acoustic Indoor Localization System Integrating TDMA plus FDMA Transmission Scheme and Positioning Correction Technique. Sensors 2019, 19, 2353.

- Filonenko, V.; Cullen, C.; Carswell, J.D. Indoor Positioning for Smartphones Using Asynchronous Ultrasound Trilateration. ISPRS Int. Geo-Inf. 2013, 2, 598–620.

- Murakami, H.; Nakamura, M.; Hashizume, H.; Sugimoto, M. 3-D Localization for Smartphones using a Single Speaker. In Proceedings of the 10th International Conference on Indoor Positioning and Indoor Navigation (IPIN), Pisa, Italy, 30 September–3 October 2019; IEEE: Piscataway, NJ, USA, 2019.

- Zhou, R.H.; Sun, H.M.; Li, H.; Luo, W.L. Time-difference-of-arrival Location Method of UAV Swarms Based on Chan-Taylor. In Proceedings of the 3rd International Conference on Unmanned Systems (ICUS), Harbin, China, 27–28 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1161–1166.

- Wang, X.; Huang, Z.H.; Zheng, F.Q.; Tian, X.C. The Research of Indoor Three-Dimensional Positioning Algorithm Based on Ultra-Wideband Technology. In Proceedings of the 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; pp. 5144–5149.

- Yang, H.B.; Gao, X.J.; Huang, H.W.; Li, B.S.; Xiao, B. An LBL positioning algorithm based on an EMD-ML hybrid method. EURASIP J. Adv. Signal Process. 2022, 2022, 38.

- Feng, T.Y.; Zhang, Z.X.; Wong, W.C.; Sun, S.M.; Sikdar, B. A Privacy-Preserving Pedestrian Dead Reckoning Framework Based on Differential Privacy. In Proceedings of the 32nd IEEE Annual International Symposium on Personal, Indoor and Mobile Radio Communications (IEEE PIMRC), Electr Network, Helsinki, Finland, 13–16 September 2021; IEEE: Piscataway, NJ, USA, 2021.

- Zhang, R.; Bannoura, A.; Hoflinger, F.; Reindl, L.M.; Schindelhauer, C. Indoor Localization Using A Smart PhoneAC. In Proceedings of the 8th IEEE Sensors Applications Symposium (SAS), Galveston, TX, USA, 19–21 February 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 38–42.

- Ehrlich, C.R.; Blankenbach, J. Indoor localization for pedestrians with real-time capability using multi-sensor smartphones. Geo-Spat. Inf. Sci. 2019, 22, 73–88.

- Diez, L.E.; Bahillo, A.; Otegui, J.; Otim, T. Step Length Estimation Methods Based on Inertial Sensors: A Review. IEEE Sens. J. 2018, 18, 6908–6926.

- Yotsuya, K.; Ito, N.; Naito, K.; Chujo, N.; Mizuno, T.; Kaji, K. Method to Improve Accuracy of Indoor PDR Trajectories Using a Large Amount of Trajectories. In Proceedings of the 11th International Conference on Mobile Computing and Ubiquitous Network (ICMU), Auckland, New Zealand, 5–8 October 2018; IEEE: Piscataway, NJ, USA, 2018.

- Guo, S.L.; Zhang, Y.T.; Gui, X.Z.; Han, L.N. An Improved PDR/UWB Integrated System for Indoor Navigation Applications. IEEE Sens. J. 2020, 20, 8046–8061.

- Im, C.; Eom, C.; Lee, H.; Jang, S.; Lee, C. Deep LSTM-Based Multimode Pedestrian Dead Reckoning System for Indoor Localization. In Proceedings of the International Conference on Electronics, Information, and Communication (ICEIC), Jeju, Republic of Korea, 6–9 February 2022; IEEE: Piscataway, NJ, USA, 2022.

- Yao, Y.B.; Pan, L.; Fen, W.; Xu, X.R.; Liang, X.S.; Xu, X. A Robust Step Detection and Stride Length Estimation for Pedestrian Dead Reckoning Using a Smartphone. IEEE Sens. J. 2020, 20, 9685–9697.

- Zhang, M.; Shen, W.B.; Yao, Z.; Zhu, J.H. Multiple information fusion indoor location algorithm based on WIFI and improved PDR. In Proceedings of the 35th Chinese Control Conference (CCC), Chengdu, China, 27–29 July 2016; pp. 5086–5092.

- Vathsangam, H.; Emken, A.; Spruijt-Metz, D.; Sukhatme, G.S. Toward free-living walking speed estimation using Gaussian Process-based Regression with on-body accelerometers and gyroscopes. In Proceedings of the 2010 4th International Conference on Pervasive Computing Technologies for Healthcare, Munich, Germany, 22–25 March 2010; pp. 1–8.

- Zihajehzadeh, S.; Park, E.J. Experimental Evaluation of Regression Model-Based Walking Speed Estimation Using Lower Body-Mounted IMU. In Proceedings of the 38th Annual International Conference of the IEEE-Engineering-in-Medicine-and-Biology-Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 243–246.

- Yan, S.Q.; Wu, C.P.; Deng, H.G.; Luo, X.N.; Ji, Y.F.; Xiao, J.M. A Low-Cost and Efficient Indoor Fusion Localization Method. Sensors 2022, 22, 5505.

- Naser, R.S.; Lam, M.C.; Qamar, F.; Zaidan, B.B. Smartphone-Based Indoor Localization Systems: A Systematic Literature Review. Electronics 2023, 12, 1814.

- Lu, Y.L.; Luo, S.Q.; Yao, Z.X.; Zhou, J.F.; Lu, S.C.A.; Li, J.W. Optimization of Kalman Filter Indoor Positioning Method Fusing WiFi and PDR. In Proceedings of the 7th International Conference on Human Centered Computing (HCC), Electr Network, Virtual, 9–11 December 2021; pp. 196–207.

- Hou, X.Y.; Bergmann, J. Pedestrian Dead Reckoning With Wearable Sensors: A Systematic Review. IEEE Sens. J. 2021, 21, 143–152.

- Duan, J.B.; Soussen, C.; Brie, D.; Idier, J.; Wan, M.X.; Wang, Y.P. Generalized LASSO with under-determined regularization matrices. Signal Process. 2016, 127, 239–246.

- Arbet, J.; McGue, M.; Chatterjee, S.; Basu, S. Resampling-based tests for Lasso in genome-wide association studies. BMC Genet. 2017, 18, 15.

- Song, X.Y.; Wang, M.; Qiu, H.B.; Luo, L.Y. Indoor Pedestrian Self-Positioning Based on Image Acoustic Source Impulse Using a Sensor-Rich Smartphone. Sensors 2018, 18, 4143.

- Wang, M.; Duan, N.; Zhou, Z.; Zheng, F.; Qiu, H.B.; Li, X.P.; Zhang, G.L. Indoor PDR Positioning Assisted by Acoustic Source Localization, and Pedestrian Movement Behavior Recognition, Using a Dual-Microphone Smartphone. Wirel. Commun. Mob. Comput. 2021, 2021, 9981802.

- Yan, S.Q.; Wu, C.P.; Luo, X.A.; Ji, Y.F.; Xiao, J.M. Multi-Information Fusion Indoor Localization Using Smartphones. Appl. Sci. 2023, 13, 3270.

- Al Mamun, M.A.; Yuce, M.R. Map-Aided Fusion of IMU PDR and RSSI Fingerprinting for Improved Indoor Positioning. In Proceedings of the 20th IEEE Sensors Conference, Electr Network, Virtual, 31 October–4 November 2021; IEEE: Piscataway, NJ, USA, 2021.

- Poulose, A.; Eyobu, O.S.; Han, D.S. A Combined PDR and Wi-Fi Trilateration Algorithm for Indoor Localization. In Proceedings of the 1st International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Okinawa, Japan, 11–13 February 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 72–77.

- Lee, G.T.; Seo, S.B.; Jeon, W.S. Indoor Localization by Kalman Filter based Combining of UWB-Positioning and PDR. In Proceedings of the IEEE 18th Annual Consumer Communications and Networking Conference (CCNC), Electr Network, Las Vegas, NV, USA, 9–13 January 2021; IEEE: Piscataway, NJ, USA, 2021.

- Wu, J.; Zhu, M.H.; Xiao, B.; Qiu, Y.Z. Graph-Based Indoor Localization with the Fusion of PDR and RFID Technologies. In Proceedings of the 18th International Conference on Algorithms and Architectures for Parallel Processing (ICA3PP), Guangzhou, China, 15–17 November 2018; pp. 630–639.

- Tian, X.; Wei, G.L.; Zhou, J. Calibration method of anchor position in indoor environment based on two-step extended Kalman filter. Multidimens. Syst. Signal Process. 2021, 32, 1141–1158.

- Yang, C.Y.; Cheng, Z.H.; Jia, X.X.; Zhang, L.T.; Li, L.Y.; Zhao, D.Q. A Novel Deep Learning Approach to 5G CSI/Geomagnetism/VIO Fused Indoor Localization. Sensors 2023, 23, 1311.

- Liu, W.; Jing, C.; Wan, P.; Ma, Y.H.; Cheng, J. Combining extended Kalman filtering and rapidly-exploring random tree: An improved autonomous navigation strategy for four-wheel steering vehicle in narrow indoor environments. Proc. Inst. Mech. Eng. Part I–J Syst Control Eng. 2022, 236, 883–896.

- Mendoza, L.R.; O’Keefe, K. Periodic Extended Kalman Filter to Estimate Rowing Motion Indoors Using a Wearable Ultra-Wideband Ranging Positioning System. In Proceedings of the 11th International Conference on Indoor Positioning and Indoor Navigation (IPIN), Univ Oberta Catalunya, Lloret de Mar, Spain, 29 November–2 December 2021; IEEE: Piscataway, NJ, USA, 2021.

- Pak, J.M. Switching Extended Kalman Filter Bank for Indoor Localization Using Wireless Sensor Networks. Electronics 2021, 10, 718.

- Scarlett, J. Enhancing the Performance of Pedometers Using a Single Accelerometer; Analog Devices: Wilmington, MA, USA, 2009.

- Kim, J.W.; Jang, H.J.; Hwang, D.-H. A Step, Stride and Heading Determination for the Pedestrian Navigation System. J. Glob. Position. Syst. 2004, 3, 273–279.

- Weinberg, H. Using the ADXL202 in Pedometer and Personal Navigation Applications; Analog Devices: Wilmington, MA, USA, 2009.

- Reddy, M.S.K.; Sumathi, R.; Reddy, N.V.K.; Revanth, N.; Bhavani, S. Analysis of Various Regressions for Stock Data Prediction. In Proceedings of the 2022 2nd International Conference on Technological Advancements in Computational Sciences (ICTACS), Tashkent, Uzbekistan, 10–12 October 2022; pp. 538–542.

- Chen, D.Z.; Zhang, W.B.; Zhang, Z.Z. Indoor Positioning with Sensors in a Smartphone and a Fabricated High-Precision Gyroscope. In Proceedings of the 7th International Conference on Communications, Signal Processing, and Systems (CSPS), Dalian, China, 14–16 July 2018; pp. 1126–1134.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).