Mathematical Camera Array Optimization for Face 3D Modeling Application

Abstract

1. Introduction

- Sufficient overlap percentage among an acceptable number of captured images.

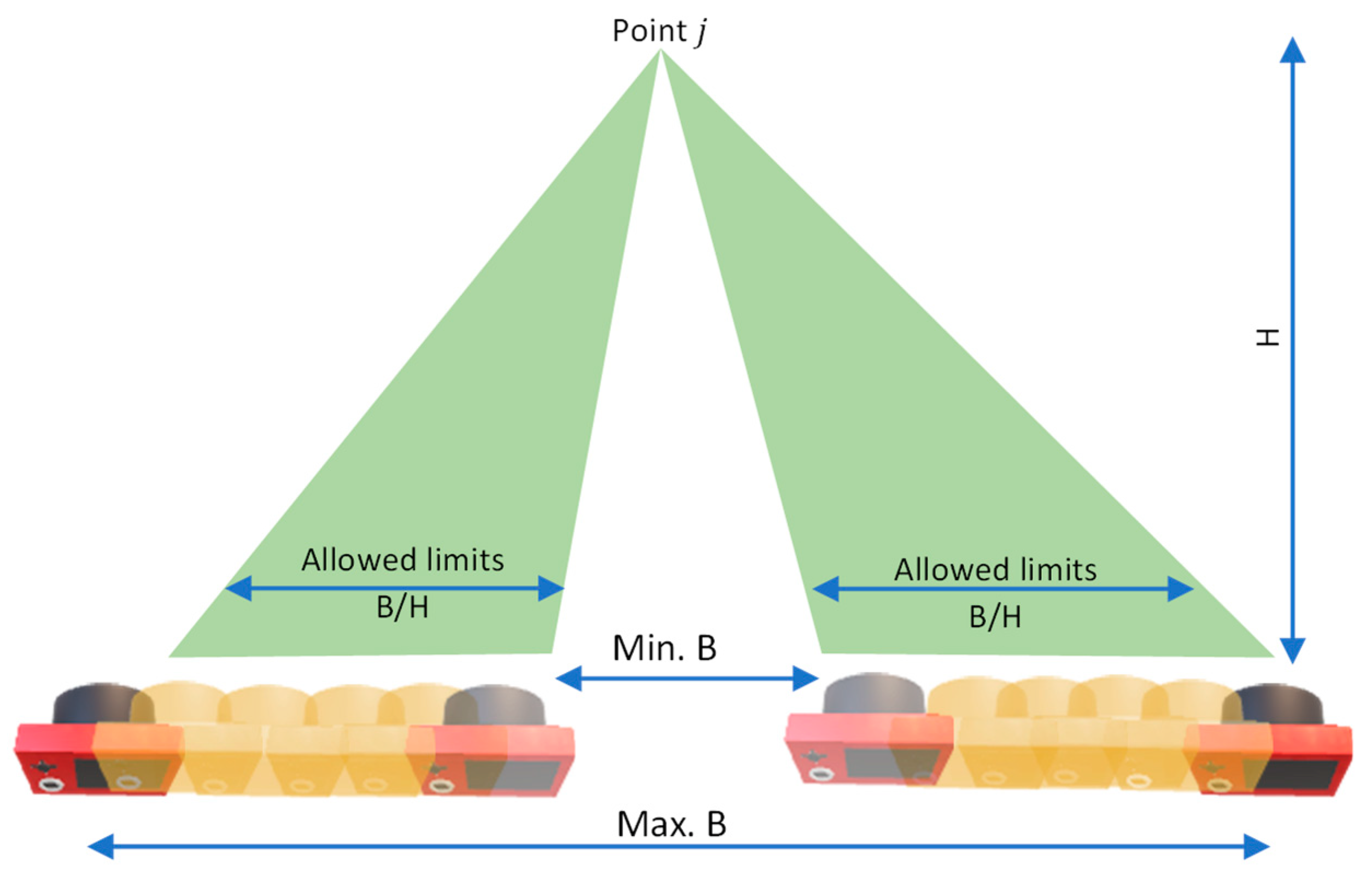

- Suitable ray intersection geometry of the images, defined by the base/height (B/H) ratio: the ratio between the base distance B separating two cameras and the distance H from the cameras to the object.

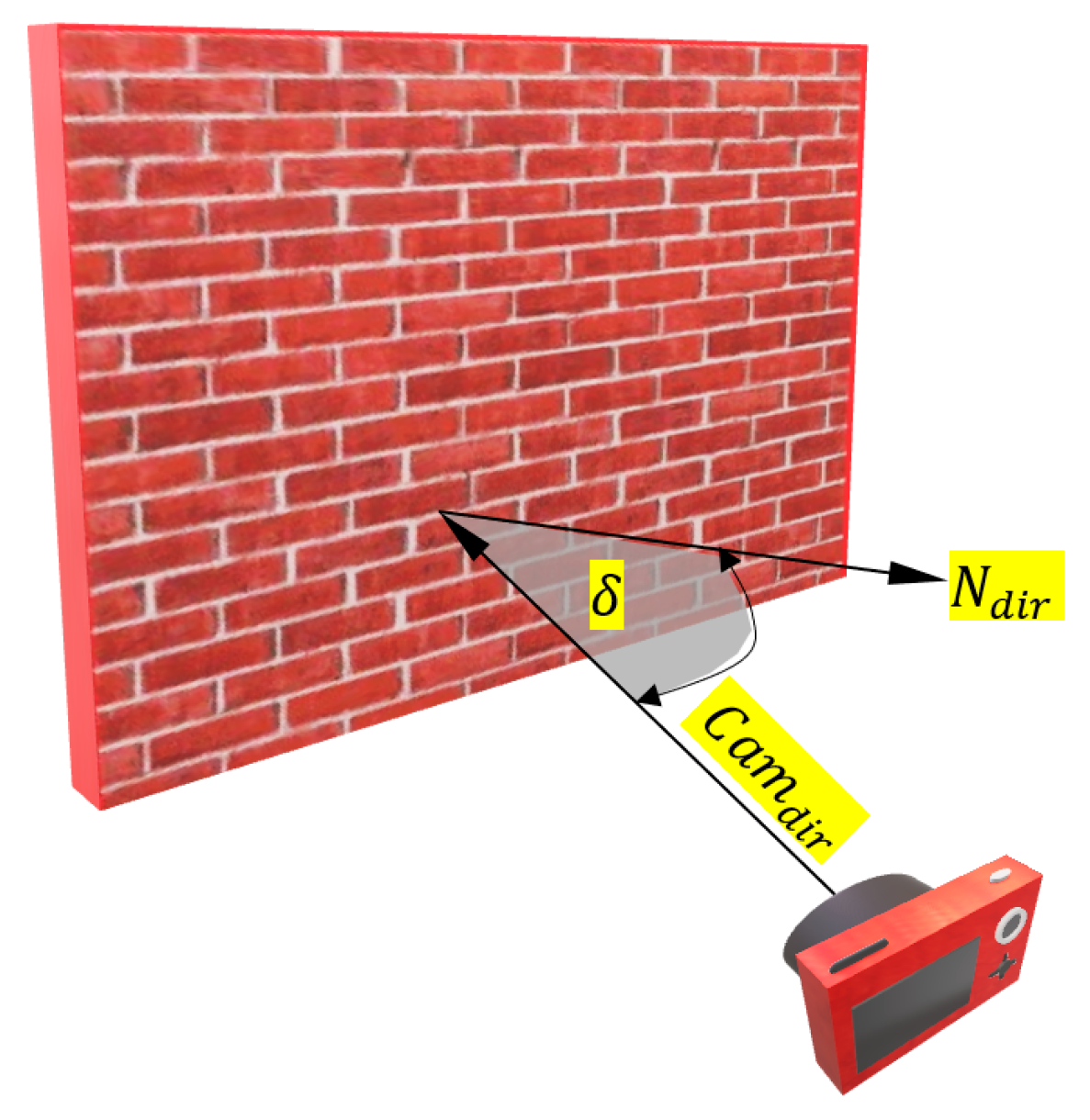

- Acceptable angles of incidence between the image rays and the object features.

- A pre-calibrated camera, or interior camera parameters identified beforehand.

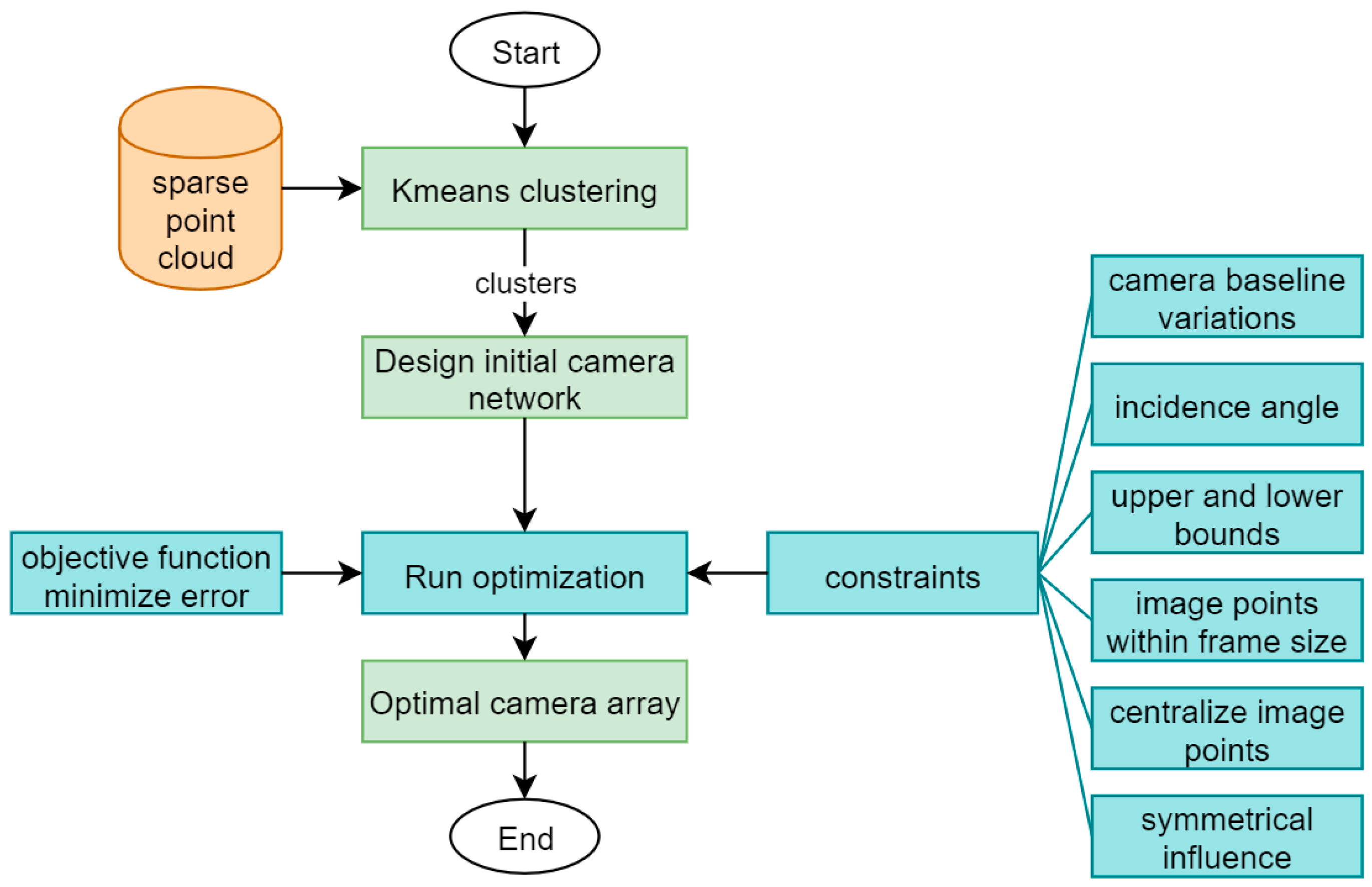
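The B/H ratio and the angle of incidence can be computed directly from 3D coordinates. The sketch below is illustrative and makes two assumptions. First, the text does not fix how H is measured, so here it is taken as the mean distance from the two camera centres to the object point. Second, the angle of incidence is measured between the ray from the object point to the camera and the surface normal at that point. The function names are hypothetical.

```python
import numpy as np


def base_to_height(cam_a, cam_b, point):
    """B/H for one camera pair and one object point.

    B is the distance between the two camera centres. H is assumed to be the
    mean distance from the two camera centres to the object point.
    """
    cam_a, cam_b, point = (np.asarray(v, dtype=float) for v in (cam_a, cam_b, point))
    base = np.linalg.norm(cam_b - cam_a)
    height = 0.5 * (np.linalg.norm(point - cam_a) + np.linalg.norm(point - cam_b))
    return base / height


def incidence_angle_deg(camera, point, normal):
    """Angle in degrees between the ray point -> camera and the surface normal."""
    ray = np.asarray(camera, dtype=float) - np.asarray(point, dtype=float)
    n = np.asarray(normal, dtype=float)
    cos_a = ray @ n / (np.linalg.norm(ray) * np.linalg.norm(n))
    return np.degrees(np.arccos(np.clip(cos_a, -1.0, 1.0)))


# Two cameras 2 m apart, 10 m in front of a wall point whose normal faces them (-Y).
point = [0.0, 0.0, 0.0]
normal = [0.0, -1.0, 0.0]
cam_left, cam_right = [-1.0, -10.0, 0.0], [1.0, -10.0, 0.0]
print(round(base_to_height(cam_left, cam_right, point), 3))  # 0.199
print(round(incidence_angle_deg(cam_left, point, normal), 2))  # 5.71
```

A 2 m base at roughly 10 m distance gives B/H ≈ 0.2. This is the minimum ratio used as a constraint in Section 2.5.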

2. Methodology

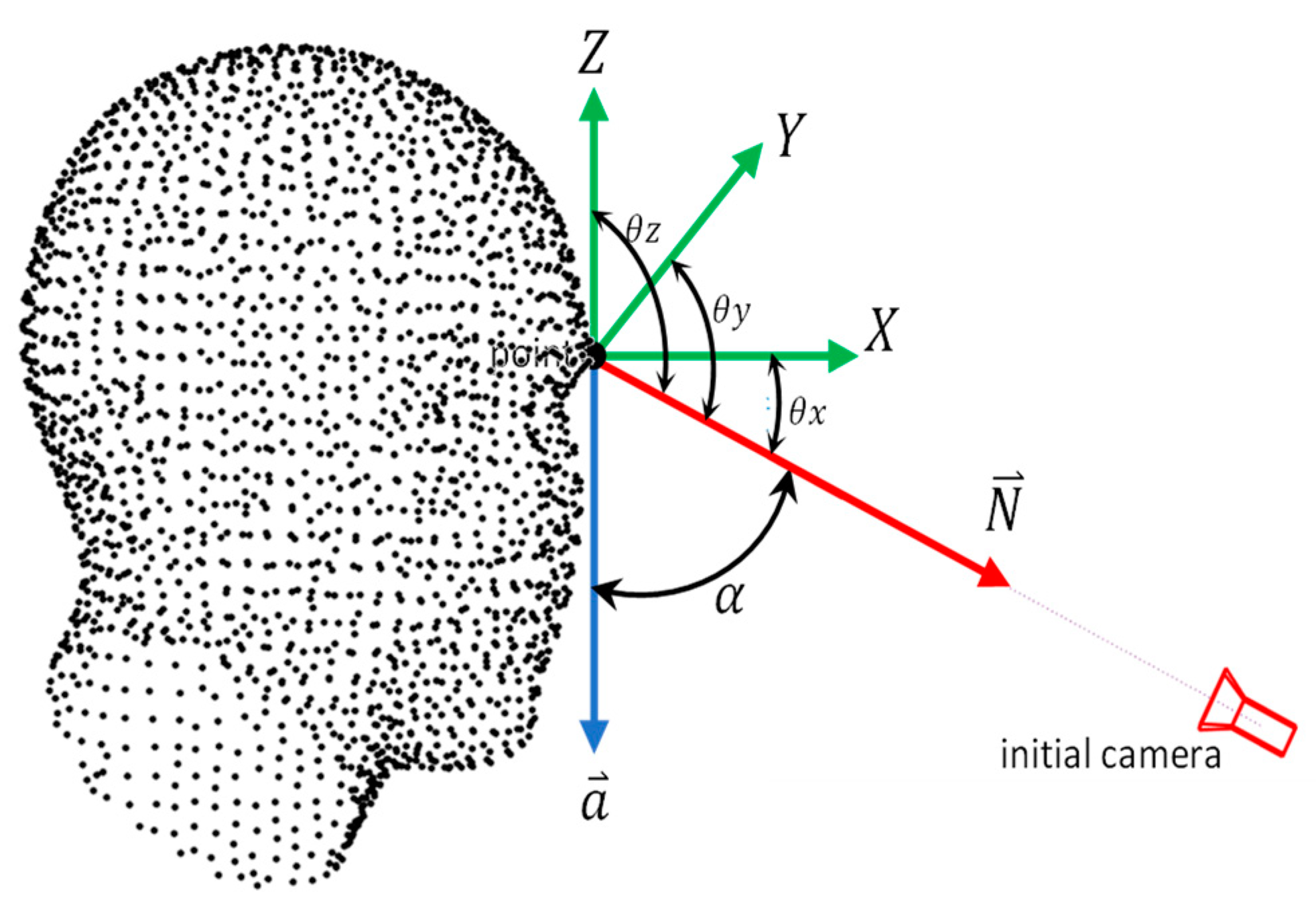

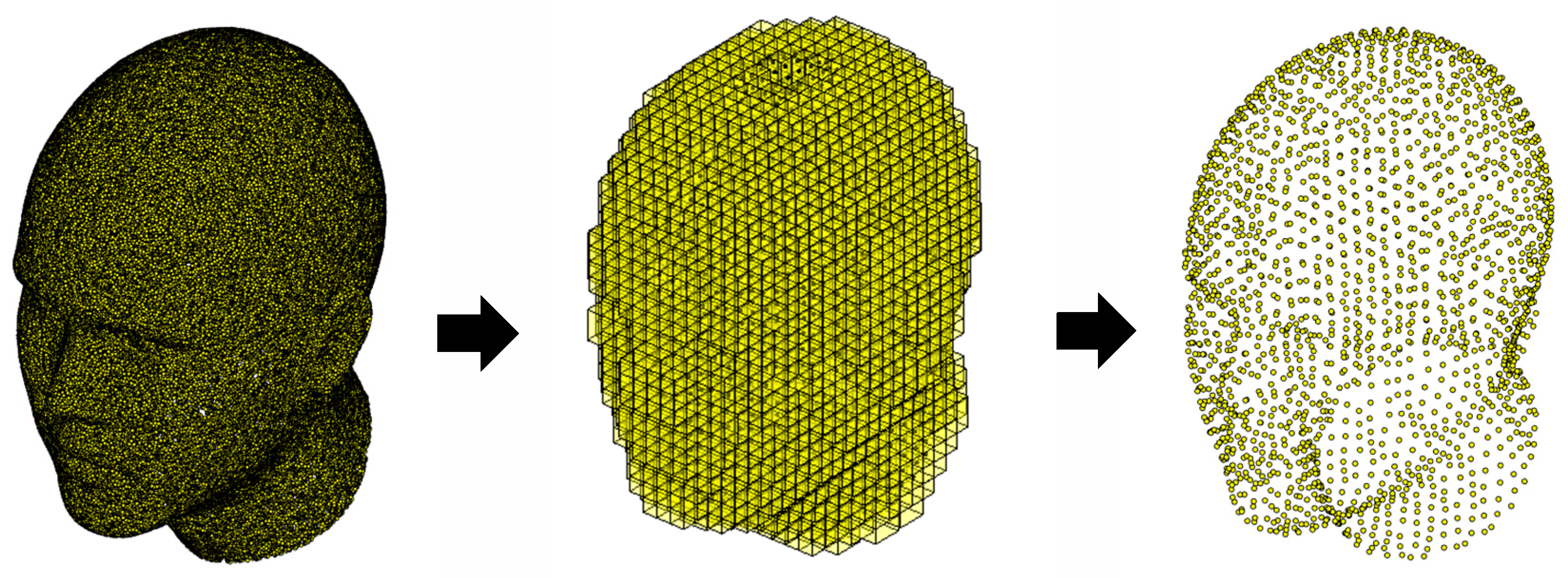

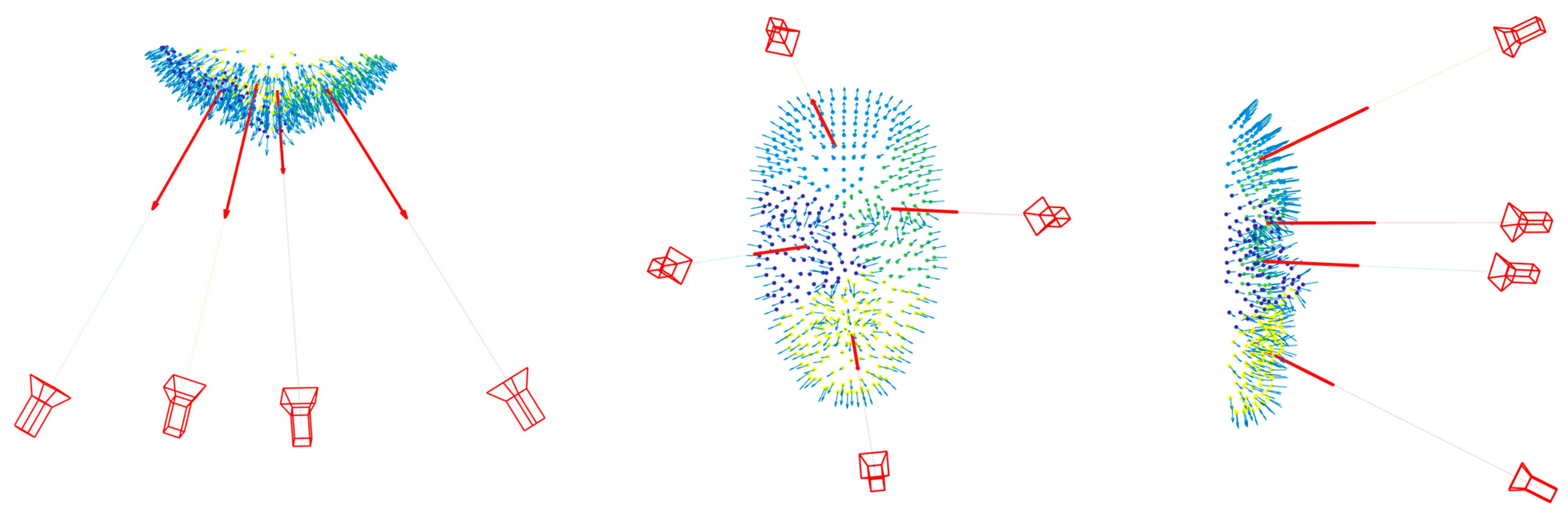

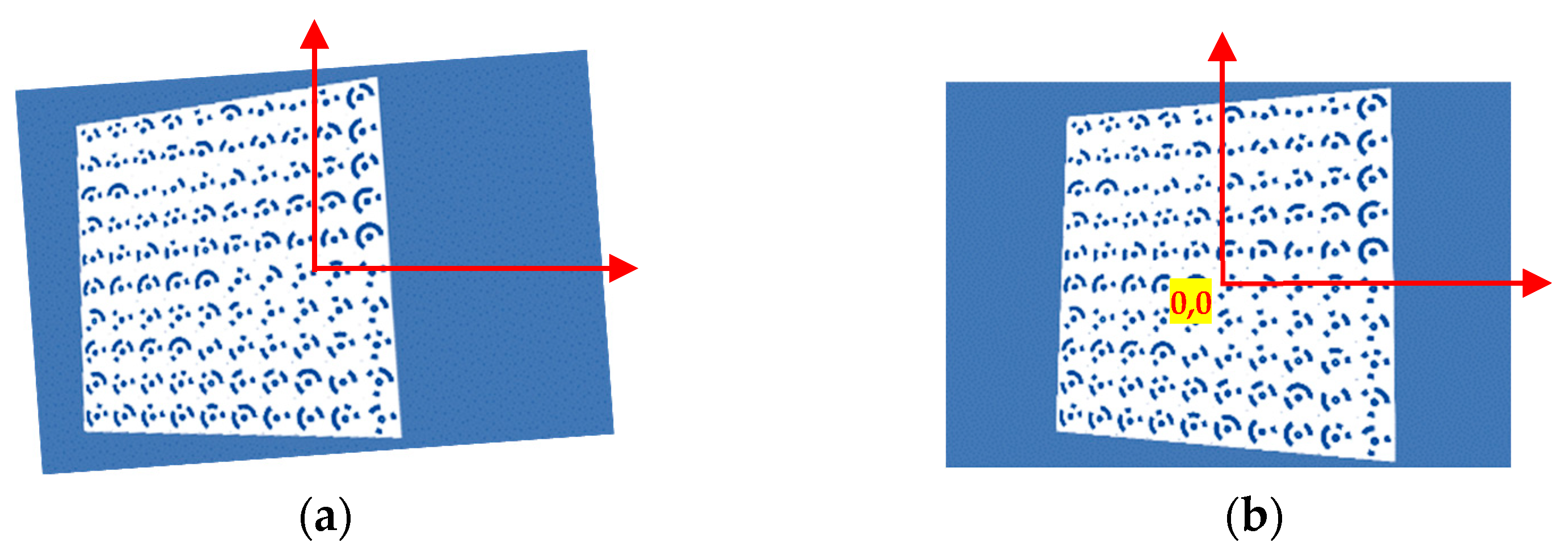

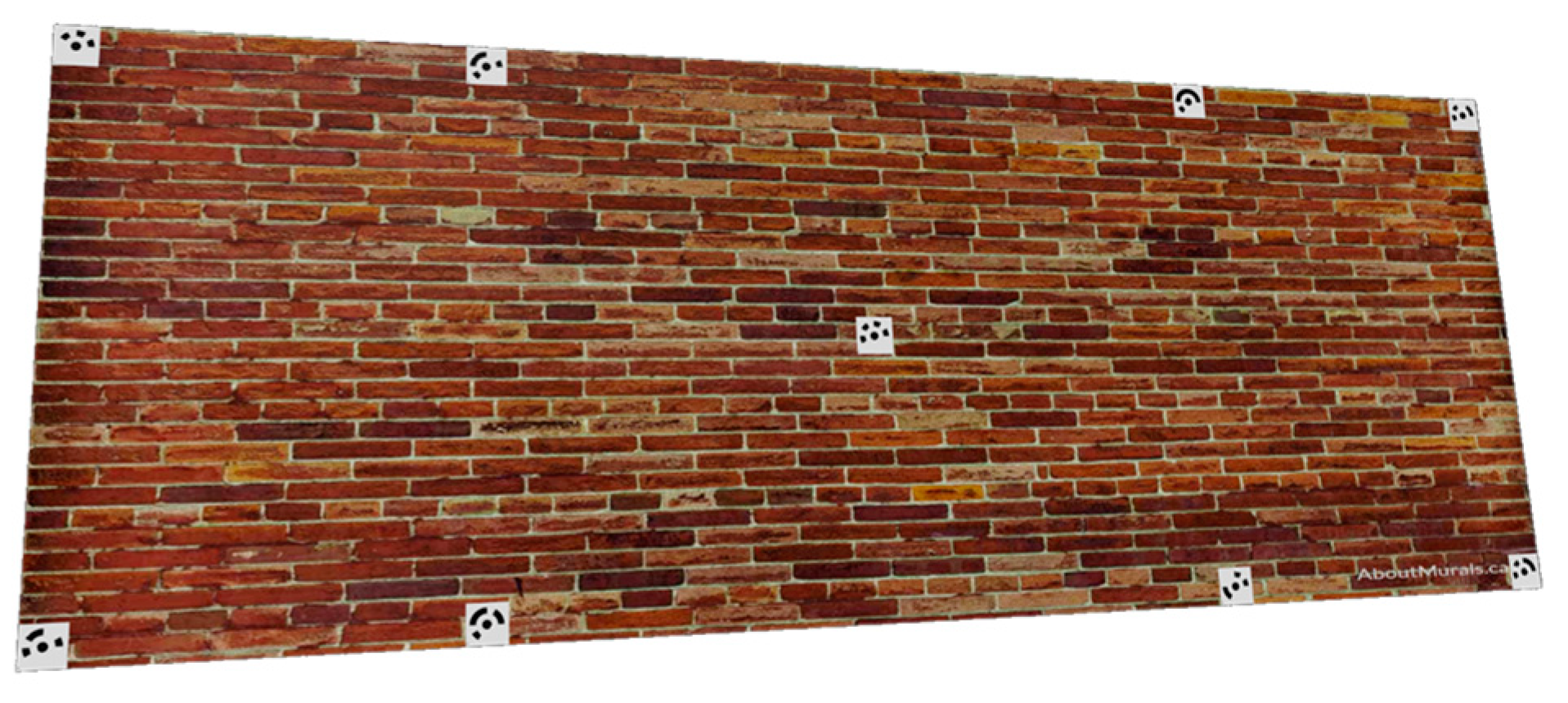

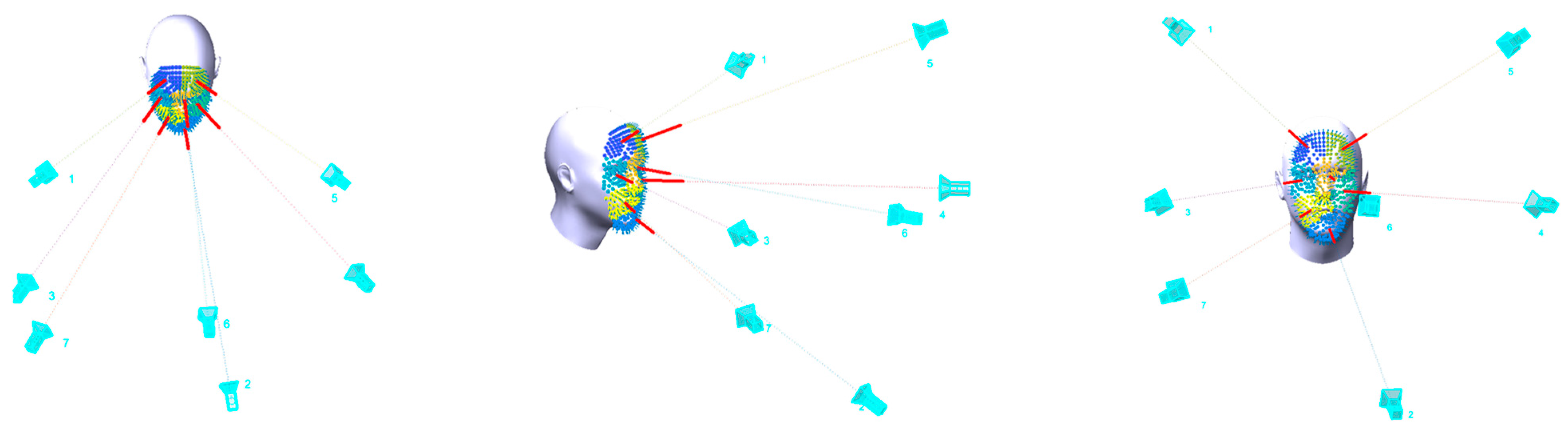

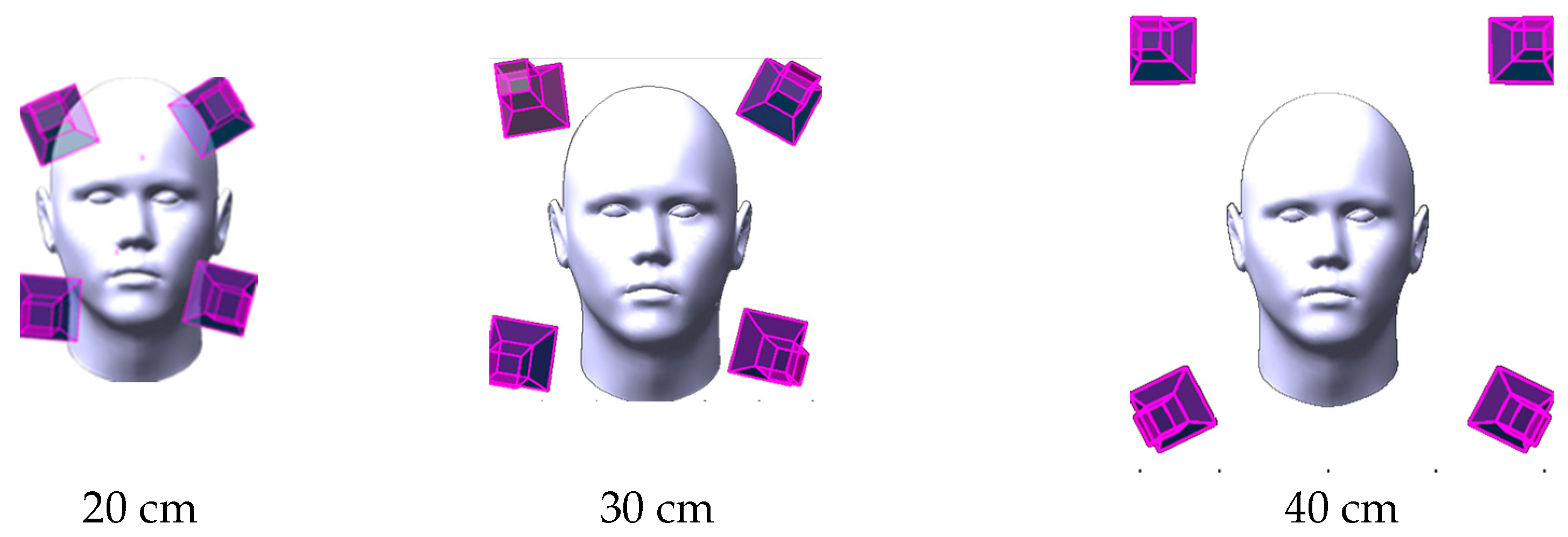

2.1. Automated Initial Camera Network Design

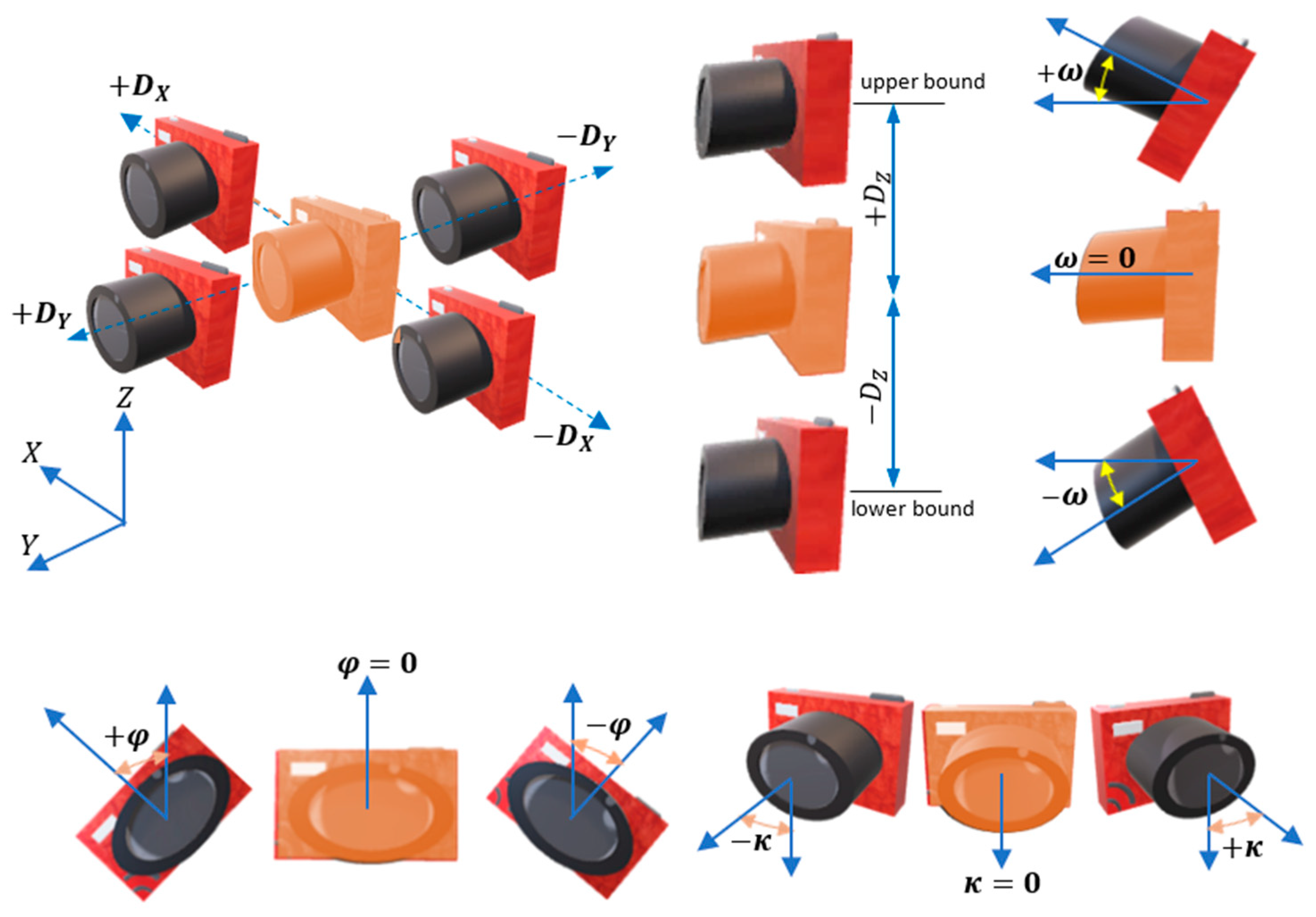

2.2. Elements of the Mathematical Optimization

2.3. The Formulation of the Camera Network Optimization Problem

2.3.1. Cost Function

2.3.2. Network Design Constraints

2.4. Pseudocode

| Algorithm 1: Main program. Reads the input, calls the optimization functions (cost function and nonlinear constraints), and outputs the result. |
|---|
| Input: |
| – object points |
| – camera parameters: focal length, frame size, pixel size, lens distortion |
| – initial camera orientation of each camera (1 to number of cameras) |
| call Algorithm 2 (cost function) |
| call Algorithm 3 (nonlinear constraints) |
| run nonlinear constrained minimization using the interior-point method |
| Output: optimal camera orientation |
| print results |
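The following sketch shows how Algorithm 1 could be wired up in Python. SciPy stands in here for whatever solver the authors used. With `method="trust-constr"`, `scipy.optimize.minimize` switches to a trust-region interior-point algorithm when inequality constraints are present. The `[c, ceq]` convention is kept: inequality constraints hold when c(x) ≤ 0, and equality constraints hold when ceq(x) = 0. The helper `optimize_network` and the toy cost and constraint are hypothetical placeholders for Algorithms 2 and 3.

```python
import numpy as np
from scipy.optimize import Bounds, NonlinearConstraint, minimize


def optimize_network(x0, cost_fn, ineq_fn, lower, upper, eq_fn=None):
    """Minimise cost_fn(x) s.t. ineq_fn(x) <= 0, eq_fn(x) == 0, lower <= x <= upper.

    x0 stacks the exterior orientation of all cameras (layout chosen by the caller).
    """
    constraints = [NonlinearConstraint(ineq_fn, -np.inf, 0.0)]
    if eq_fn is not None:
        constraints.append(NonlinearConstraint(eq_fn, 0.0, 0.0))
    return minimize(
        cost_fn,
        np.asarray(x0, dtype=float),
        method="trust-constr",  # uses an interior-point variant when inequalities exist
        bounds=Bounds(lower, upper),
        constraints=constraints,
    )


# Hypothetical stand-ins for Algorithms 2 and 3: a quadratic cost and a disc constraint.
def toy_cost(x):
    return (x[0] - 3.0) ** 2 + (x[1] + 1.0) ** 2


def toy_ineq(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 4.0])  # feasible inside a circle of radius 2


res = optimize_network([0.0, 0.0], toy_cost, toy_ineq, lower=[-5.0, -5.0], upper=[5.0, 5.0])
print(res.x)  # analytic optimum is (6, -2) / sqrt(10), about (1.897, -0.632)
```

Gradients and constraint Jacobians are approximated by finite differences here. For a real camera network, analytic derivatives of the collinearity equations would normally be cheaper and more robust.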

| Algorithm 2: Compute the cost function, which minimizes the covariance matrix Q of the object points. |
|---|
| Input: initial camera orientation and parameters, object points P and their normal directions |
| Output: cost function F, computed from the eigenvalues of the covariance matrix Q (to be minimized) |
| for j = 1 to number of points P |
| for i = 1 to number of cameras |
| compute rotation matrix M |
| compute image coordinates |
| end for |
| check visibility of P_j in camera i |
| compute covariance matrix Q_j |
| end for |
| cost function F = \|eig(Q)\| |
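The steps of Algorithm 2 can be sketched in Python/NumPy as follows. This illustrates the technique under stated assumptions and is not the authors' code:

- The rotation matrix uses the common photogrammetric order M = R_κ R_φ R_ω.
- Visibility is simplified to "in front of the camera and inside the frame".
- The Jacobian of the collinearity equations is taken by central finite differences.
- Q is treated as block-diagonal in the per-point matrices Q_j, so F = |eig(Q)| becomes the norm of all Q_j eigenvalues.

The image-measurement standard deviation `sigma` only scales the cost.

```python
import numpy as np


def rotation_matrix(omega, phi, kappa):
    """M = R_kappa @ R_phi @ R_omega, angles in radians (assumed convention)."""
    co, so = np.cos(omega), np.sin(omega)
    cp, sp = np.cos(phi), np.sin(phi)
    ck, sk = np.cos(kappa), np.sin(kappa)
    r_omega = np.array([[1, 0, 0], [0, co, so], [0, -so, co]])
    r_phi = np.array([[cp, 0, -sp], [0, 1, 0], [sp, 0, cp]])
    r_kappa = np.array([[ck, sk, 0], [-sk, ck, 0], [0, 0, 1]])
    return r_kappa @ r_phi @ r_omega


def image_coords(point, cam, f):
    """Collinearity equations for cam = (omega, phi, kappa, Xc, Yc, Zc).

    Returns the image coordinates (unit of f) and u_z, which is negative when
    the point lies in front of the camera.
    """
    u = rotation_matrix(*cam[:3]) @ (np.asarray(point, float) - np.asarray(cam[3:], float))
    return -f * u[:2] / u[2], u[2]


def is_visible(point, cam, f, half_frame):
    """Simplified visibility: in front of the camera and inside the image frame."""
    xy, uz = image_coords(point, cam, f)
    return uz < 0 and bool(np.all(np.abs(xy) <= half_frame))


def point_covariance(point, cams, f, half_frame, sigma=1.0, h=1e-6):
    """Q_j = sigma^2 (A^T A)^-1, A = d(x, y)/d(X, Y, Z) over the cameras that see P_j."""
    point = np.asarray(point, float)
    blocks = []
    for cam in cams:
        if not is_visible(point, cam, f, half_frame):
            continue
        jac = np.empty((2, 3))
        for k in range(3):  # central finite differences in X, Y, Z
            step = np.zeros(3)
            step[k] = h
            jac[:, k] = (image_coords(point + step, cam, f)[0]
                         - image_coords(point - step, cam, f)[0]) / (2 * h)
        blocks.append(jac)
    if len(blocks) < 2:
        raise ValueError("object point must be visible in at least two cameras")
    a = np.vstack(blocks)
    return sigma**2 * np.linalg.inv(a.T @ a)


def network_cost(points, cams, f, half_frame):
    """F = |eig(Q)|, assuming Q is block-diagonal in the per-point Q_j."""
    eigs = [np.linalg.eigvalsh(point_covariance(p, cams, f, half_frame)) for p in points]
    return float(np.linalg.norm(np.concatenate(eigs)))


# Hypothetical test field: 3 x 3 targets on the wall Y = 0 and two cameras at
# Y = -10 m looking along +Y (omega = 90 deg). f and frame in mm, object in m.
f, half_frame = 35.0, np.array([18.0, 12.0])
points = [[x, 0.0, z] for x in (-2.0, 0.0, 2.0) for z in (-1.0, 0.0, 1.0)]


def camera_pair(base):
    """Two cameras at X = -base/2 and X = +base/2."""
    return [(np.pi / 2, 0.0, 0.0, s * base / 2, -10.0, 0.0) for s in (-1, 1)]


print(network_cost(points, camera_pair(1.0), f, half_frame))  # narrow base
print(network_cost(points, camera_pair(6.0), f, half_frame))  # wide base: smaller F
```

Widening the base from 1 m to 6 m lowers F. For this symmetric setup, the depth variance of the central target is proportional to (H/B)².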

| Algorithm 3: Compute the nonlinear constraints of the camera network design. |
|---|
| Input: initial camera orientation and parameters, object points P and their normal directions |
| Output: nonlinear constraints [c, ceq] |
| for j = 1 to number of points P |
| for i = 1 to number of cameras |
| compute rotation matrix M |
| compute angle of incidence between the ray of camera i and point j |
| compute image coordinates |
| end for |
| check visibility of P_j in camera i |
| end for |
| for h = 1 to number of cameras |
| compute B/H ratio |
| inequality constraints |
| equality constraints |
| optional equality constraint: ceq = mean(T) − median(T) = 0 |
| optional equality constraint: ceq = mean(edge_length) − design distance = 0 |
| inequality constraints |
| end for |
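The constraint vector of Algorithm 3 could be assembled as shown below, keeping the [c, ceq] convention (c ≤ 0, ceq = 0). To stay short, the function takes image coordinates that have already been computed with the collinearity equations instead of recomputing them. The sketch makes four assumptions:

- H is the mean camera distance to the target centroid.
- The 30° limit applies to the mean angle of incidence.
- The image-coordinate equality constraint is read as "the image coordinates of each camera sum to zero". The sum row of the results table suggests this reading.
- The optional mean(T) − median(T) and edge-length constraints are omitted.

The frame size in the example is hypothetical.

```python
import itertools

import numpy as np


def network_constraints(image_xy, centres, points, normals, half_frame,
                        max_mean_incidence=30.0, min_bh=0.2, min_mean_bh=0.6):
    """Return (c, ceq); the design is acceptable when c <= 0 and ceq == 0.

    image_xy: (n_cams, n_pts, 2) image coordinates; centres: (n_cams, 3) camera
    centres; points, normals: (n_pts, 3). Default thresholds follow Section 2.5.
    """
    image_xy, centres = np.asarray(image_xy, float), np.asarray(centres, float)
    points, normals = np.asarray(points, float), np.asarray(normals, float)

    # Inequality 1: every image point inside the frame, |x| - half_width <= 0.
    c_frame = (np.abs(image_xy) - half_frame).ravel()

    # Inequality 2: mean angle between rays (point -> camera) and surface normals.
    rays = centres[:, None, :] - points[None, :, :]
    cos_a = np.sum(rays * normals[None], axis=2) / (
        np.linalg.norm(rays, axis=2) * np.linalg.norm(normals, axis=1)[None])
    angles = np.degrees(np.arccos(np.clip(cos_a, -1.0, 1.0)))
    c_angle = np.array([angles.mean() - max_mean_incidence])

    # Inequality 3: minimum and mean B/H over all camera pairs.
    # Assumption: H = mean distance of the two cameras to the target centroid.
    dist = np.linalg.norm(centres - points.mean(axis=0), axis=1)
    bh = np.array([np.linalg.norm(centres[i] - centres[k]) / (0.5 * (dist[i] + dist[k]))
                   for i, k in itertools.combinations(range(len(centres)), 2)])
    c_bh = np.array([min_bh - bh.min(), min_mean_bh - bh.mean()])

    # Equality (assumed reading): image coordinates of each camera sum to zero.
    ceq = image_xy.sum(axis=1).ravel()
    return np.concatenate([c_frame, c_angle, c_bh]), ceq


# Example: two cameras 10 m in front of three targets on the wall Y = 0, looking
# along +Y (omega = 90 deg, phi = kappa = 0), so x = f dX/dY and y = f dZ/dY.
f, half_frame = 35.0, np.array([18.0, 12.0])  # mm; hypothetical frame size
points = np.array([[-1.5, 0.0, 0.0], [0.0, 0.0, 1.0], [1.5, 0.0, -1.0]])
normals = np.tile([0.0, -1.0, 0.0], (3, 1))
centres = np.array([[-3.5, -10.0, 0.0], [3.5, -10.0, 0.0]])
d = points[None] - centres[:, None]
image_xy = np.stack([f * d[..., 0] / d[..., 1], f * d[..., 2] / d[..., 1]], axis=-1)
c, ceq = network_constraints(image_xy, centres, points, normals, half_frame)
print(np.all(c <= 0), ceq)
```

In this example all inequality constraints are satisfied. However, `ceq` is non-zero because neither camera is turned towards the targets. Rotating the cameras (changing φ) is what the optimizer would do to drive it to zero.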

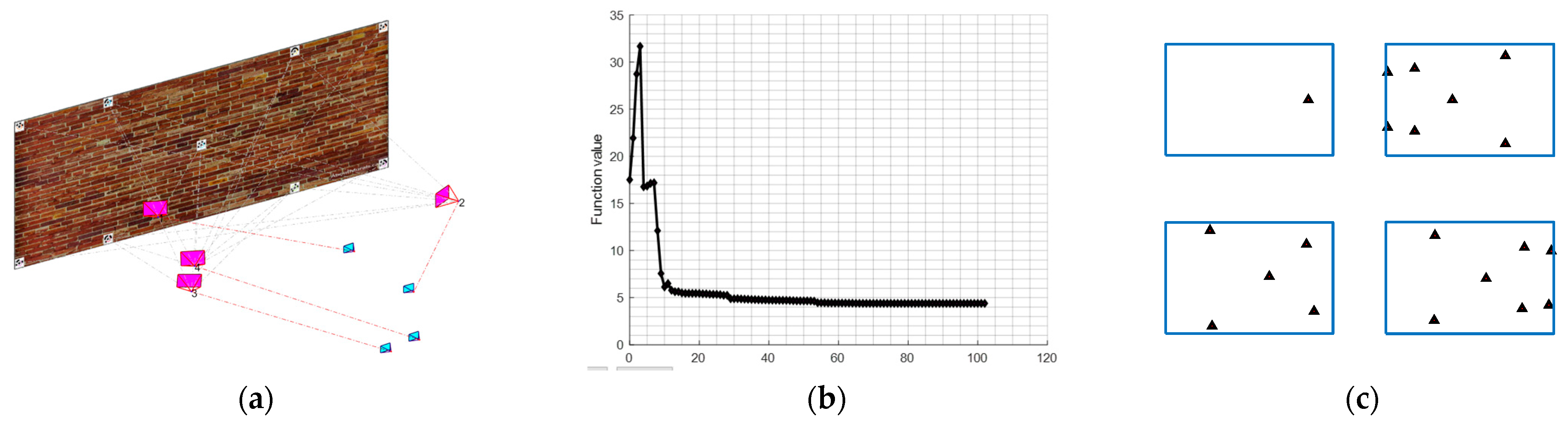

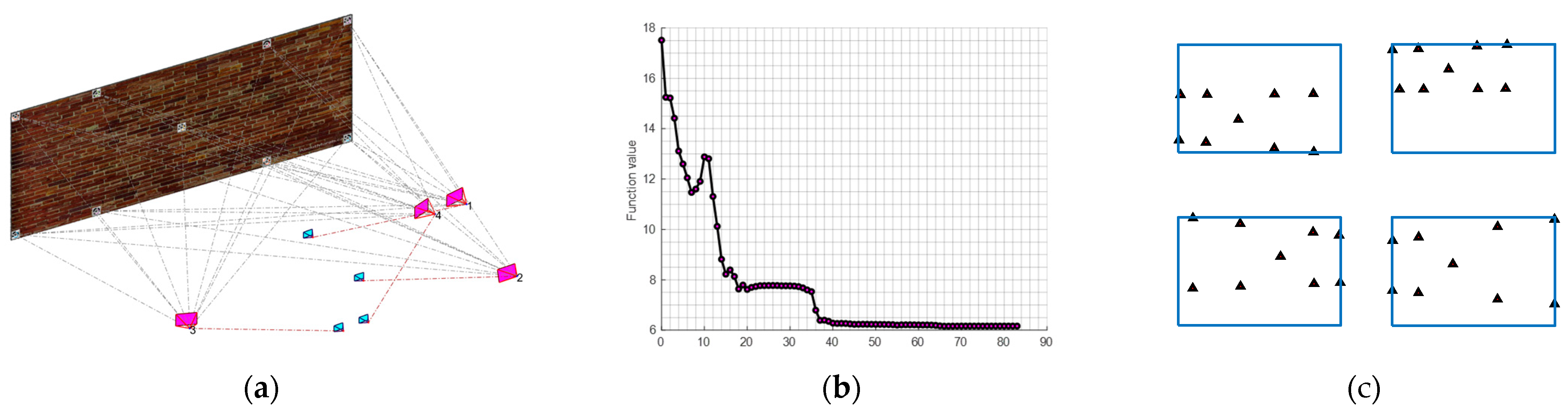

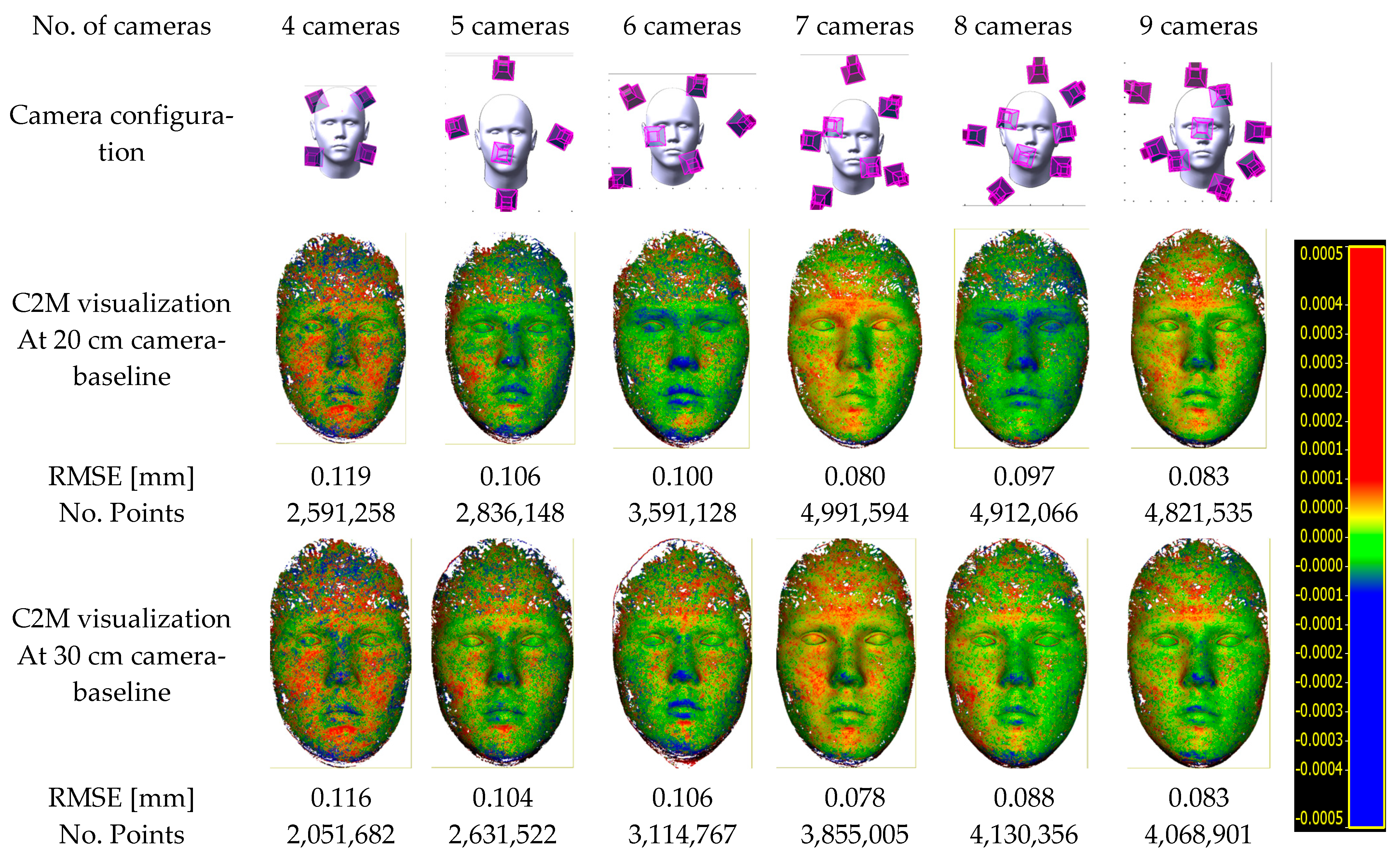

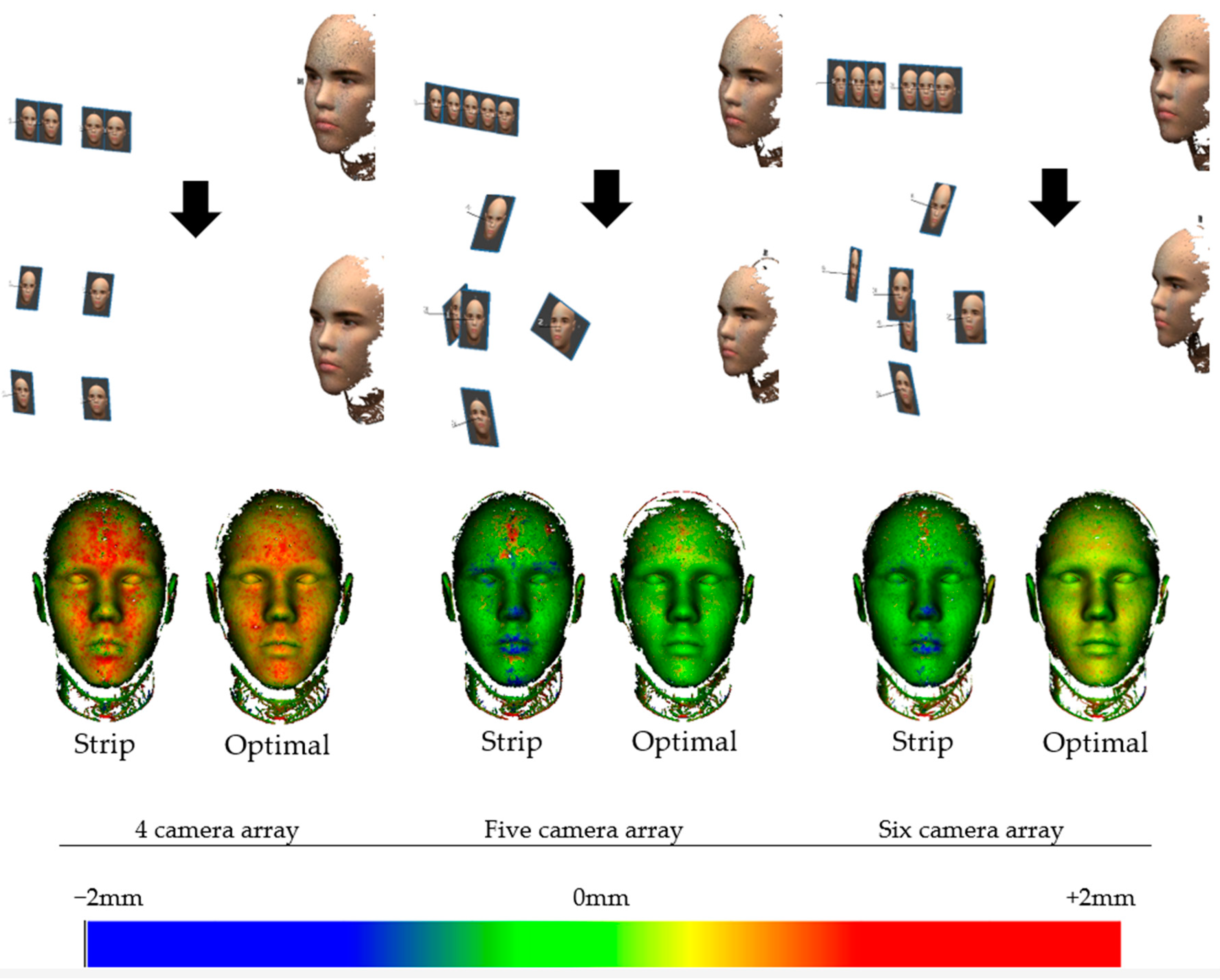

2.5. Evaluation of the Optimization Algorithm

1. Inequality constraint of the image coordinates (Equation (11)).
2. Equality constraint of the image coordinates (Equation (12)).
3. Average B/H ≥ 0.6 and minimum B/H ≥ 0.2.
4. Average angle of incidence ≤ 30°.
5. The rotation angles ω and φ may vary within ±45° of their initial values, and the translations Tx and Ty within ±15 m of their initial values.
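The search bounds of the evaluation can be written as a box around the initial orientation. The sketch below checks that box against the initial and optimal camera orientations reported in the results table. It makes two assumptions. The two bounded angles are taken to be ω and φ, because κ is 0.00 in both tables and is treated as fixed. Z has no range given in the text, so it is left unbounded.

```python
import numpy as np

# Rows cam 1..4; columns omega [deg], phi [deg], kappa [deg], X [m], Y [m], Z [m].
initial = np.array([
    [90.12, 5.51, 0.00, 1.00, -24.23, 4.82],
    [91.00, 0.48, 0.00, 0.40, -35.74, 5.80],
    [90.49, 5.22, 0.00, -0.82, -33.74, -0.85],
    [90.16, 4.98, 0.00, 0.58, -36.45, 1.15],
])
optimal = np.array([
    [81.21, -21.09, 0.00, -14.00, -28.64, 5.82],
    [79.12, 23.49, 0.00, 15.40, -27.69, 7.93],
    [102.99, -24.20, 0.00, -15.82, -27.25, -0.44],
    [99.15, 23.85, 0.00, 15.58, -27.66, 1.32],
])

# Half-widths of the box: +-45 deg for omega and phi, +-15 m for X and Y.
# kappa is held fixed (width 0) and Z is unbounded (assumptions, see text).
half_width = np.array([45.0, 45.0, 0.0, 15.0, 15.0, np.inf])
lower, upper = initial - half_width, initial + half_width

inside = (optimal >= lower - 1e-9) & (optimal <= upper + 1e-9)
print(inside.all())                          # every parameter within its bound
print(np.abs(optimal - initial)[:, 3])       # change of X per camera [m]
```

In the tabulated values every parameter stays inside its box. The X coordinate of each of the four cameras ends 15 m from its initial value, which means the X bounds are active at the reported optimum.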

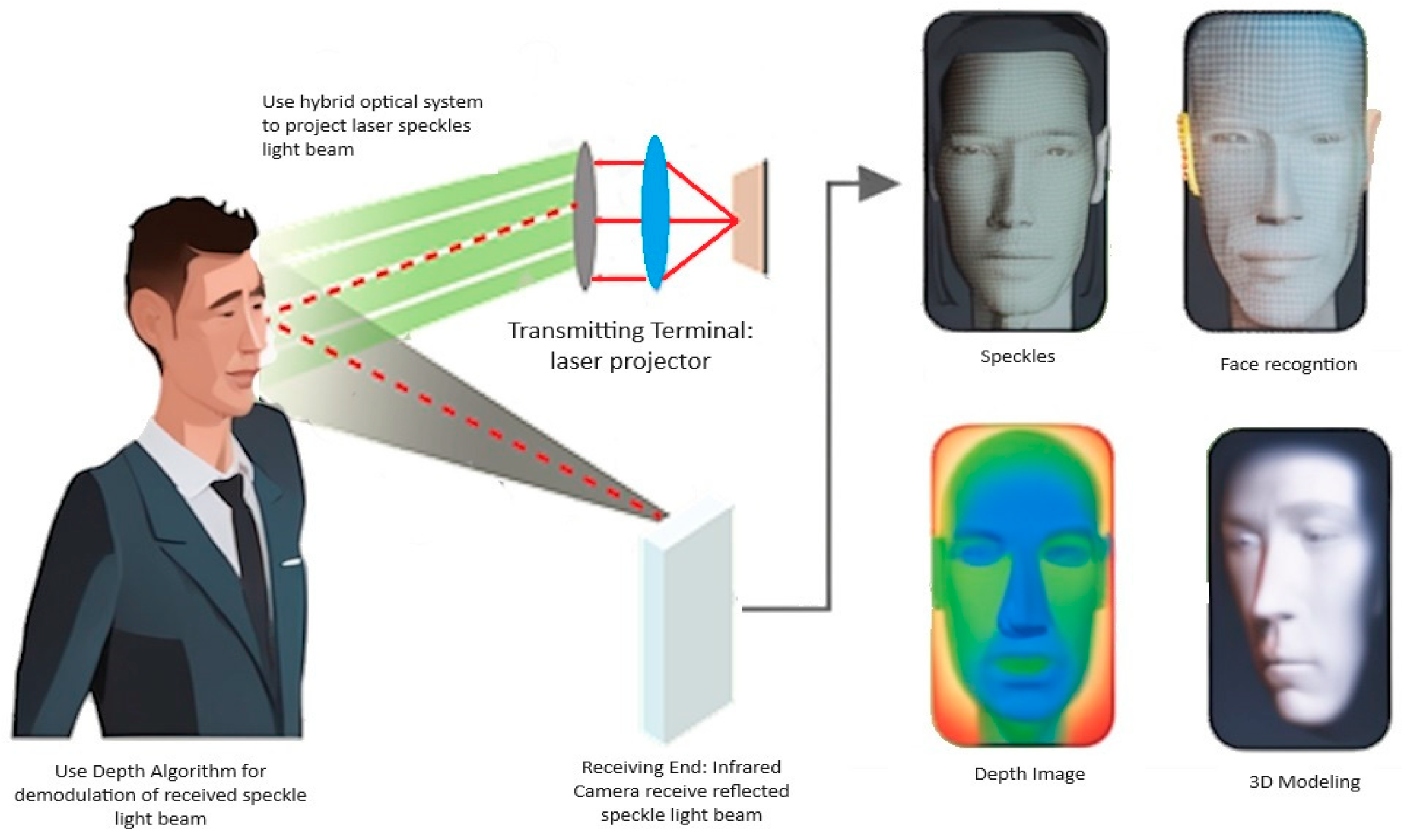

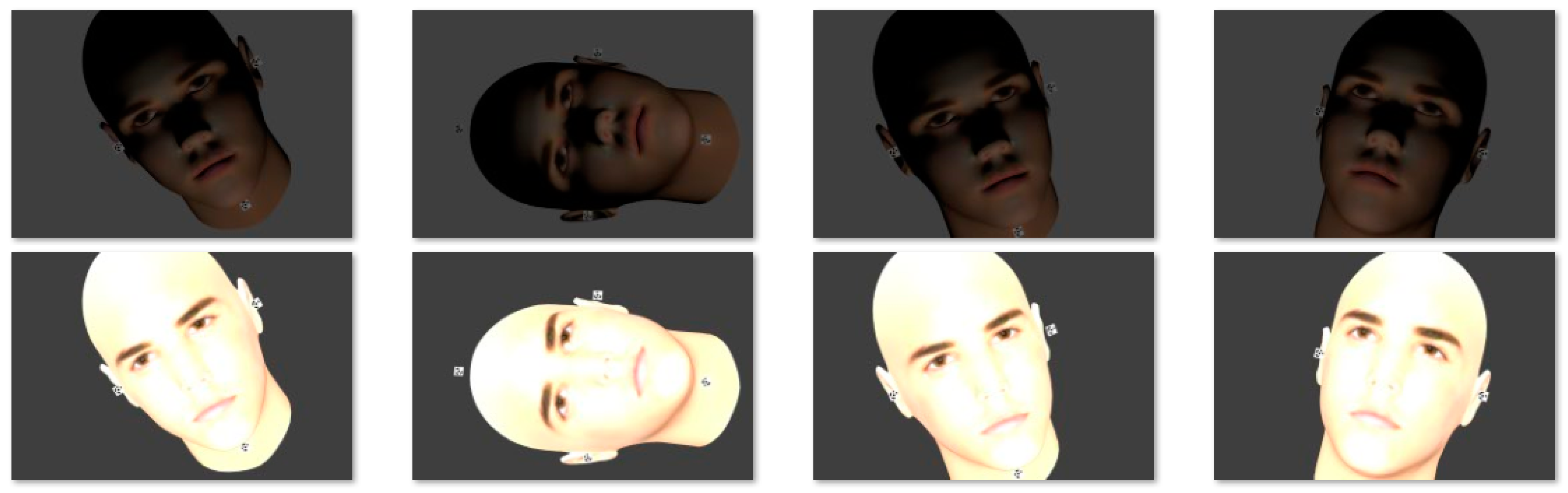

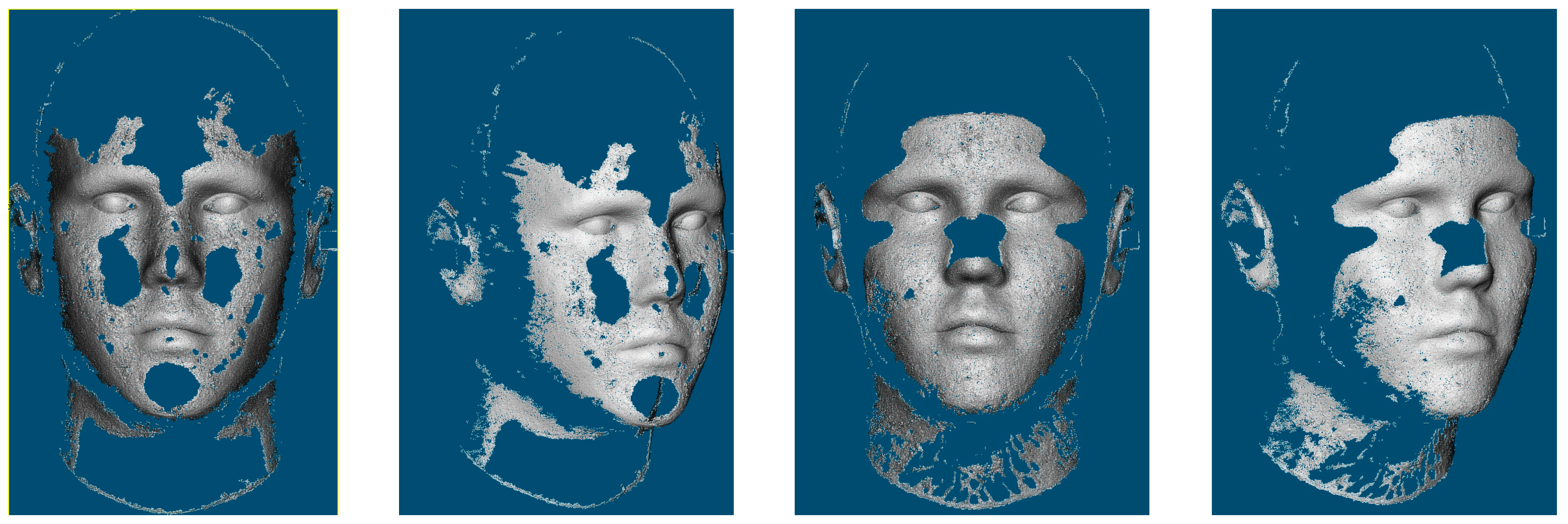

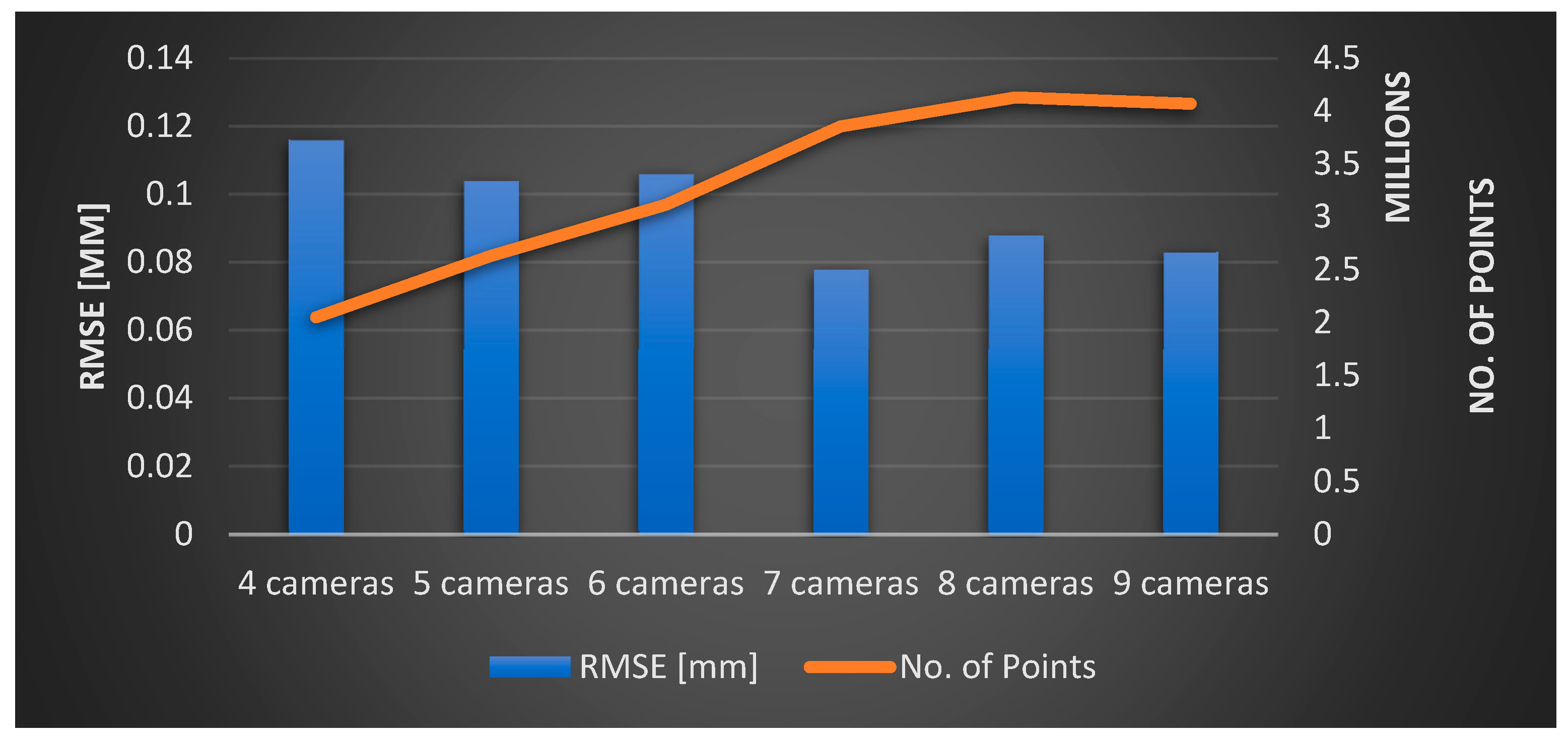

3. Face 3D Modeling for Recognition

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kakadiaris, I.A.; Passalis, G.; Toderici, G.; Perakis, T.; Theoharis, T. Face Recognition, 3D-Based. In Encyclopedia of Biometrics; Li, S.Z., Jain, A., Eds.; Springer: Boston, MA, USA, 2009; pp. 329–338.
2. Katkoria, D.V.; Arjona, A.C. 3-D Facial Recognition System. 2020. Available online: https://www.nxp.com/docs/en/brochure/3DFacialRecognition.pdf (accessed on 10 October 2023).
3. ZKTECO. Introduction of 3D Structured Light Facial Recognition. 2021. Available online: https://www.zkteco.me/solution/3Dstructuredlightfacialrecognition.pdf (accessed on 10 October 2023).
4. Meers, S.; Ward, K. Face Recognition Using a Time-of-Flight Camera. In Proceedings of the 2009 Sixth International Conference on Computer Graphics, Imaging and Visualization, Tianjin, China, 11–14 August 2009; pp. 377–382.
5. Bauer, S.; Wasza, J.; Müller, K.; Hornegger, J. 4D Photogeometric face recognition with time-of-flight sensors. In Proceedings of the 2011 IEEE Workshop on Applications of Computer Vision (WACV), Kona, HI, USA, 5–7 January 2011; pp. 196–203.
6. Manterola, I.N. Evaluating the Feasibility and Effectiveness of Multi-Perspective Stereoscopy for 3D Face Reconstruction. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2021.
7. Dou, P.; Wu, Y.; Shah, S.K.; Kakadiaris, I.A. Monocular 3D facial shape reconstruction from a single 2D image with coupled-dictionary learning and sparse coding. Pattern Recognit. 2018, 81, 515–527.
8. Wu, J.; Yin, D.; Chen, J.; Wu, Y.; Si, H.; Lin, K. A Survey on Monocular 3D Object Detection Algorithms Based on Deep Learning. J. Phys. Conf. Ser. 2020, 1518, 012049.
9. Castellani, U.; Bicego, M.; Iacono, G.; Murino, V. 3D Face Recognition Using Stereoscopic Vision. In Advanced Studies in Biometrics, Proceedings of the Summer School on Biometrics, Alghero, Italy, 2–6 June 2003; Tistarelli, M., Bigun, J., Grosso, E., Eds.; Revised Selected Lectures and Papers; Springer: Berlin/Heidelberg, Germany, 2005; pp. 126–137.
10. Uchida, N.; Shibahara, T.; Aoki, T.; Nakajima, H.; Kobayashi, K. 3D face recognition using passive stereo vision. In Proceedings of the IEEE International Conference on Image Processing 2005, Genoa, Italy, 14 September 2005; p. II-950.
11. Hayasaka, A.; Shibahara, T.; Ito, K.; Aoki, T.; Nakajima, H.; Kobayashi, K. A 3D Face Recognition System Using Passive Stereo Vision and Its Performance Evaluation. In Proceedings of the 2006 International Symposium on Intelligent Signal Processing and Communications, Yonago, Japan, 12–15 December 2006; pp. 379–382.
12. Dawi, M.; Al-Alaoui, M.A.; Baydoun, M. 3D face recognition using stereo images. In Proceedings of the MELECON 2014—2014 17th IEEE Mediterranean Electrotechnical Conference, Beirut, Lebanon, 13–16 April 2014; pp. 247–251.
13. Li, Y.; Li, Y.; Xiao, B. A Physical-World Adversarial Attack against 3D Face Recognition. arXiv 2022, arXiv:2205.13412.
14. Tsalakanidou, F.; Malassiotis, S. Real-time 2D+3D facial action and expression recognition. Pattern Recognit. 2010, 43, 1763–1775.
15. Vázquez, M.A.; Cuevas, F.J. A 3D Facial Recognition System Using Structured Light Projection. In Lecture Notes in Computer Science, Proceedings of the HAIS 2014: Hybrid Artificial Intelligence Systems, Salamanca, Spain, 11–13 June 2014; Springer: Cham, Switzerland, 2014; pp. 241–253.
16. Bergh, M.V.D.; Gool, L.V. Combining RGB and ToF cameras for real-time 3D hand gesture interaction. In Proceedings of the 2011 IEEE Workshop on Applications of Computer Vision (WACV), Kona, HI, USA, 5–7 January 2011; pp. 66–72.
17. Kim, J.; Park, S.; Kim, S.; Lee, S. Registration method between ToF and color cameras for face recognition. In Proceedings of the 2011 6th IEEE Conference on Industrial Electronics and Applications, Beijing, China, 21–23 June 2011; pp. 1977–1980.
18. Min, R.; Choi, J.; Medioni, G.; Dugelay, J.-L. Real-time 3D face identification from a depth camera. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 1739–1742.
19. Berretti, S.; Pala, P.; Bimbo, A.D. Face Recognition by Super-Resolved 3D Models From Consumer Depth Cameras. IEEE Trans. Inf. Forensics Secur. 2014, 9, 1436–1449.
20. Hsu, G.S.; Liu, Y.L.; Peng, H.C.; Chung, S.L. RGB-D Based Face Reconstruction and Recognition. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 339–344.
21. Bondi, E.; Pala, P.; Berretti, S.; Bimbo, A.D. Reconstructing High-Resolution Face Models From Kinect Depth Sequences. IEEE Trans. Inf. Forensics Secur. 2016, 11, 2843–2853.
22. LLC, F. Multi Camera Systems with GPU Image Processing. Available online: https://www.fastcompression.com/solutions/multi-camera-systems.htm (accessed on 3 October 2023).
23. 3DCOPYSYSTEMS. Big ALICE, High Resolution 3D Studio. Available online: https://3dcopysystems.com/ (accessed on 3 October 2021).
24. Koch, T.; Körner, M.; Fraundorfer, F. Automatic and Semantically-Aware 3D UAV Flight Planning for Image-Based 3D Reconstruction. Remote Sens. 2019, 11, 1550.
25. Fraser, C.S. Network Design Considerations for Non-Topographic Photogrammetry. Photogramm. Eng. Remote Sens. 1984, 50, 1115–1126.
26. Alsadik, B.; Gerke, M.; Vosselman, G. Automated camera network design for 3D modeling of cultural heritage objects. J. Cult. Herit. 2013, 14, 515–526.
27. Bogaerts, B.; Sels, S.; Vanlanduit, S.; Penne, R. Interactive Camera Network Design Using a Virtual Reality Interface. Sensors 2019, 19, 1003.
28. Vasquez-Gomez, J.I.; Sucar, L.E.; Murrieta-Cid, R.; Lopez-Damian, E. Volumetric Next-best-view Planning for 3D Object Reconstruction with Positioning Error. Int. J. Adv. Robot. Syst. 2014, 11, 159.
29. Mendoza, M.; Vasquez-Gomez, J.; Taud, H. NBV-Net: A 3D Convolutional Neural Network for Predicting the Next-Best-View. Master’s Thesis, Instituto Politécnico Nacional, Mexico City, Mexico, 2018.
30. Alsadik, B.; Gerke, M.; Vosselman, G.; Daham, A.; Jasim, L. Minimal Camera Networks for 3D Image Based Modeling of Cultural Heritage Objects. Sensors 2014, 14, 5785–5804.
31. Hullo, J.F.; Grussenmeyer, P.; Fares, S.C. Photogrammetry and Dense Stereo Matching Approach Applied to the Documentation of the Cultural Heritage Site of Kilwa (Saudi Arabia). In Proceedings of the 22nd CIPA Symposium, Kyoto, Japan, 11–15 October 2009.
32. Waldhäusl, P.; Ogleby, C. 3 × 3 Rules for Simple Photogrammetry Documentation of Architecture. 1994. Available online: https://www.cipaheritagedocumentation.org/wp-content/uploads/2017/02/Waldh%C3%A4usl-Ogleby-3x3-rules-for-simple-photogrammetric-documentation-of-architecture.pdf (accessed on 10 October 2023).
33. Rodrigues, O. Des lois géométriques qui régissent les déplacements d’un système solide dans l’espace, et de la variation des coordonnées provenant de ces déplacements considérés indépendants des causes qui peuvent les produire. J. Math. Pures Appl. 1840, 5, 380–440.
34. Alsadik, B. Adjustment Models in 3D Geomatics and Computational Geophysics: With MATLAB Examples; Elsevier: Edinburgh, UK, 2019.
35. Madsen, K.; Nielsen, H.B.; Tingleff, O. Methods for Non-Linear Least Squares Problems, 2nd ed.; Informatics and Mathematical Modelling, Technical University of Denmark, DTU: Kongens Lyngby, Denmark, 2004.
36. Rao, S.S. Engineering Optimization—Theory and Practice, 4th ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2009.
37. Waltz, R.A.; Morales, J.L.; Nocedal, J.; Orban, D. An interior algorithm for nonlinear optimization that combines line search and trust region steps. Math. Program. 2006, 107, 391–408.
38. Pearson, J.W.; Gondzio, J. Fast interior point solution of quadratic programming problems arising from PDE-constrained optimization. Numer. Math. 2017, 137, 959–999.
39. Curtis, F.E.; Huber, J.; Schenk, O.; Wächter, A. A note on the implementation of an interior-point algorithm for nonlinear optimization with inexact step computations. Math. Program. 2012, 136, 209–227.
40. Curtis, F.E.; Schenk, O.; Wächter, A. An Interior-Point Algorithm for Large-Scale Nonlinear Optimization with Inexact Step Computations. SIAM J. Sci. Comput. 2010, 32, 3447–3475.
41. Förstner, W.; Wrobel, B.P. Bundle Adjustment. In Photogrammetric Computer Vision: Statistics, Geometry, Orientation and Reconstruction; Springer International Publishing: Cham, Switzerland, 2016; pp. 643–725.
42. Models, I.D.D. Bieber. Available online: https://www.turbosquid.com/ (accessed on 1 February 2020).
43. Agisoft. AgiSoft Metashape. Available online: http://www.agisoft.com/downloads/installer/ (accessed on 22 July 2020).
44. Spreeuwers, L. Fast and Accurate 3D Face Recognition. Int. J. Comput. Vis. 2011, 93, 389–414.

| | Stereo Vision [9,10,11,12] | Structured Light [13,14,15] | Time-of-Flight (ToF) [4,16,17] | Depth Cameras [18,19,20,21] |
|---|---|---|---|---|
| Advantages | | | | |
| Disadvantages | | | | |

Initial camera orientation

| Camera | omega [deg] | phi [deg] | kappa [deg] | X [m] | Y [m] | Z [m] |
|---|---|---|---|---|---|---|
| cam 1 | 90.12 | 5.51 | 0.00 | 1.00 | −24.23 | 4.82 |
| cam 2 | 91.00 | 0.48 | 0.00 | 0.40 | −35.74 | 5.80 |
| cam 3 | 90.49 | 5.22 | 0.00 | −0.82 | −33.74 | −0.85 |
| cam 4 | 90.16 | 4.98 | 0.00 | 0.58 | −36.45 | 1.15 |

Given target coordinates

| Target | X [m] | Y [m] | Z [m] |
|---|---|---|---|
| point 1 | −19.50 | 1.20 | 12.00 |
| point 2 | 19.50 | 1.20 | 12.00 |
| point 3 | 19.50 | 1.20 | −2.00 |
| point 4 | −19.50 | 1.20 | −2.00 |
| point 5 | 0.00 | 1.20 | 5.00 |
| point 6 | −10.00 | 1.20 | 12.00 |
| point 7 | 10.00 | 1.20 | 12.00 |
| point 8 | 10.00 | 1.20 | −2.00 |
| point 9 | −10.00 | 1.20 | −2.00 |

Computed image coordinates [mm]

| Coded target | x, cam 1 | x, cam 2 | x, cam 3 | x, cam 4 | y, cam 1 | y, cam 2 | y, cam 3 | y, cam 4 |
|---|---|---|---|---|---|---|---|---|
| point 1 | −11.15 | −9.42 | −10.82 | −8.49 | 4.79 | 3.01 | 4.80 | 3.05 |
| point 2 | 9.51 | 11.15 | 8.27 | 10.90 | 3.08 | 4.94 | 3.00 | 4.89 |
| point 3 | 8.72 | 10.85 | 9.45 | 11.15 | −3.10 | −4.81 | −2.96 | −5.00 |
| point 4 | −10.84 | −8.43 | −11.15 | −9.33 | −4.70 | −3.04 | −4.95 | −3.02 |
| point 5 | 1.18 | −1.29 | 1.32 | −1.32 | −0.11 | −0.13 | 0.15 | 0.11 |
| point 6 | −4.25 | −6.05 | −4.33 | −5.25 | 4.22 | 3.32 | 4.19 | 3.36 |
| point 7 | 5.97 | 4.03 | 5.07 | 4.30 | 3.37 | 4.27 | 3.30 | 4.26 |
| point 8 | 5.29 | 4.33 | 6.13 | 4.04 | −3.38 | −4.21 | −3.28 | −4.31 |
| point 9 | −4.43 | −5.17 | −3.94 | −5.99 | −4.18 | −3.34 | −4.26 | −3.35 |
| sum | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

Computed angular deviation [deg]

| Camera | point 1 | point 2 | point 3 | point 4 | point 5 | point 6 | point 7 | point 8 | point 9 |
|---|---|---|---|---|---|---|---|---|---|
| cam 1 | 21.78 | 21.78 | 21.78 | 21.78 | 21.78 | 21.78 | 21.78 | 21.78 | 21.78 |
| cam 2 | 24.58 | 24.58 | 24.58 | 24.58 | 24.58 | 24.58 | 24.58 | 24.58 | 24.58 |
| cam 3 | 24.54 | 24.54 | 24.54 | 24.54 | 24.54 | 24.54 | 24.54 | 24.54 | 24.54 |
| cam 4 | 23.90 | 23.90 | 23.90 | 23.90 | 23.90 | 23.90 | 23.90 | 23.90 | 23.90 |

max(Ab) = 24 deg < 30 deg

Computed optimal orientation

| Camera | omega [deg] | phi [deg] | kappa [deg] | X [m] | Y [m] | Z [m] |
|---|---|---|---|---|---|---|
| cam 1 | 81.21 | −21.09 | 0.00 | −14.00 | −28.64 | 5.82 |
| cam 2 | 79.12 | 23.49 | 0.00 | 15.40 | −27.69 | 7.93 |
| cam 3 | 102.99 | −24.20 | 0.00 | −15.82 | −27.25 | −0.44 |
| cam 4 | 99.15 | 23.85 | 0.00 | 15.58 | −27.66 | 1.32 |

Results

B/H values (constraint: min(B/H) ≥ 0.2): 0.88, 0.20, 0.90, 0.97, 0.20, 0.95; mean B/H = 0.69
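The reported B/H values can be roughly recomputed from the optimal camera centres and the target coordinates in the tables above. The table does not state two things, so the sketch below assumes them. First, the six values are taken to belong to the camera pairs in the order (1,2), (1,3), (1,4), (2,3), (2,4), (3,4). Second, H is taken as the mean distance of the two cameras to the target centroid.

```python
import itertools

import numpy as np

# Optimal camera centres (X, Y, Z) [m], cam 1..4, and the nine targets [m].
centres = np.array([
    [-14.00, -28.64, 5.82],
    [15.40, -27.69, 7.93],
    [-15.82, -27.25, -0.44],
    [15.58, -27.66, 1.32],
])
xs = [-19.5, 19.5, 19.5, -19.5, 0.0, -10.0, 10.0, 10.0, -10.0]
zs = [12.0, 12.0, -2.0, -2.0, 5.0, 12.0, 12.0, -2.0, -2.0]
targets = np.array([[x, 1.2, z] for x, z in zip(xs, zs)])

# Assumption: H = mean distance of the two cameras to the target centroid.
centroid = targets.mean(axis=0)
dist = np.linalg.norm(centres - centroid, axis=1)
bh = np.array([
    np.linalg.norm(centres[i] - centres[k]) / (0.5 * (dist[i] + dist[k]))
    for i, k in itertools.combinations(range(4), 2)  # (1,2), (1,3), ..., (3,4)
])
print(np.round(bh, 3))
print(round(bh.mean(), 2), round(bh.min(), 3))
```

Under these assumptions, each ratio lies within about 0.02 of the tabulated value. The minimum is just above 0.2 and the mean is about 0.69. The small differences suggest that the authors measure H in a slightly different way.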

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alsadik, B.; Spreeuwers, L.; Dadrass Javan, F.; Manterola, N. Mathematical Camera Array Optimization for Face 3D Modeling Application. Sensors 2023, 23, 9776. https://doi.org/10.3390/s23249776
