Abstract

Accurate and timely monitoring of biomass in breeding nurseries is essential for evaluating plant performance and selecting superior genotypes. Traditional methods for phenotyping above-ground biomass in field conditions require significant time, cost, and labor. Unmanned Aerial Vehicles (UAVs) offer a rapid and non-destructive approach for phenotyping multiple field plots at a low cost. While Vegetation Indices (VIs) extracted from remote sensing imagery have been widely employed for biomass estimation, they mainly capture spectral information and disregard the 3D canopy structure and the spatial relationships between pixels. To address these limitations, this study, conducted in 2020 and 2021, explored the potential of integrating canopy spectral, structural, and textural features derived from UAV multispectral imagery with machine learning algorithms for accurate oat biomass estimation. Six oat genotypes planted at two seeding rates were evaluated at two South Dakota locations at multiple growth stages. Plot-level canopy spectral, structural, and textural features were extracted from the multispectral imagery and used as input variables for three machine learning models: Partial Least Squares Regression (PLSR), Support Vector Regression (SVR), and Random Forest Regression (RFR). The results showed that (1) in addition to canopy spectral features, canopy structural and textural features are important indicators for oat biomass estimation; (2) combining spectral, structural, and textural features significantly improved biomass estimation accuracy over using a single feature type; and (3) the machine learning algorithms showed good predictive ability, with RFR achieving slightly better estimation accuracy (R² = 0.926 and relative root mean square error (RMSE%) = 15.97%).
This study demonstrated the benefits of UAV imagery-based multi-feature fusion using machine learning for above-ground biomass estimation in oat breeding nurseries and holds promise for improving the efficiency of oat breeding and crop management through UAV-based phenotyping.

1. Introduction

Oat (Avena sativa L.) is among the most widely cultivated small grains and is primarily grown for forage and feed grain. It is considered a superior forage crop because of its fine stems [1], high dry matter content, and digestible fibers in the leaves, which contribute to high palatability [2,3]. Compared with perennial forage crops such as alfalfa, oat, as an annual crop, provides a quick supply of high-quality forage; it is fairly easy to establish and harvest and has low production and management costs [4]. Improving the forage yield and quality of oat varieties is a key objective of oat breeding programs across the US. Forage yield, which corresponds to above-ground biomass, is a complex trait controlled by multiple genes [5] and is affected by the environment to varying degrees. Therefore, large-scale multi-environment trials are often conducted in breeding programs to evaluate trait stability across environments.

Conventional phenotyping methods for quantifying above-ground biomass often require manual measurement of biomass by cutting, weighing, and drying a subsample for moisture estimation. This process is tedious, labor-intensive, costly, and destructive [6,7], and it limits the extent of phenotyping because it is not operationally feasible for a large number of genotypes across multiple environments [8]. However, large-scale multi-environment trials are crucial for measuring the extent of genotype-by-environment interactions. Remote sensing technologies, including satellites, aircraft, Unmanned Aerial Vehicles (UAVs), Unmanned Ground Vehicles (UGVs), and handheld instruments and sensors, have been used as important tools for crop monitoring and phenotyping. Aircraft- and satellite-based observations cover large areas, but the data often suffer from coarse spatial resolution, cloud interference, and low temporal resolution [9,10]. Handheld and UGV-based phenotyping is often inefficient and time-consuming and may damage crops and fields, limiting its applicability at a large scale [11]. In contrast, UAV-based remote sensing is gaining popularity in plant phenotyping, especially for crop biomass estimation, owing to its cost-effectiveness and its efficient, non-destructive data collection [12]. Additionally, UAVs enable flexible, high-resolution image acquisition [13], are less affected by cloud cover, and are well suited to extracting plot-level information from large fields. They can be equipped with different sensors, making them an effective field phenotyping tool [14,15]. RGB [16,17], multispectral [18], and hyperspectral images acquired from UAVs provide improved spectral, spatial, and temporal resolution compared with images acquired from satellite and airborne platforms. High-throughput phenotyping using UAVs has been reported for the estimation of ground cover [19,20], nitrogen concentration [21,22], and grain yield [23,24,25].
Similarly, UAVs have also been employed for plant biomass estimation in alfalfa [5], grass swards [26], tomato [27], winter wheat [7], sorghum [28], black oat [29], soybean [6], and barley [30].

Canopy spectral, structural, textural, and thermal features extracted from UAV-based imagery can be used to estimate plant biomass. Spectral features, such as Vegetation Indices (VIs), have been commonly employed to estimate biomass in various crops, including winter wheat [20], barley [31], rice [32], and maize [33]. However, spectral features have limitations [34]. For example, the widely used Normalized Difference Vegetation Index (NDVI) tends to saturate asymptotically once the canopy reaches a certain density [12,34]. VIs are also easily affected by soil background and atmospheric effects [35,36]. Moreover, canopy spectral features such as VIs cannot capture the complex three-dimensional (3D) structure of the canopy.
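To make the saturation argument concrete, write $\rho_{NIR}$ and $\rho_{R}$ for NIR and red reflectance (notation introduced here for illustration). From the NDVI definition, the remaining headroom below the upper bound of 1 and the sensitivity of NDVI to NIR reflectance follow directly:

```latex
\mathrm{NDVI} = \frac{\rho_{NIR} - \rho_{R}}{\rho_{NIR} + \rho_{R}}, \qquad
1 - \mathrm{NDVI} = \frac{2\rho_{R}}{\rho_{NIR} + \rho_{R}}, \qquad
\frac{\partial\,\mathrm{NDVI}}{\partial \rho_{NIR}} = \frac{2\rho_{R}}{(\rho_{NIR} + \rho_{R})^{2}}
```

As the canopy densifies, red reflectance approaches a small floor (chlorophyll absorption saturates) while NIR reflectance keeps growing, so both the headroom and the NIR sensitivity shrink and additional biomass changes NDVI very little. This is a simplified algebraic illustration, not a radiative-transfer model of the canopy.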

Canopy structural features, such as canopy height and vegetation fraction, better represent the 3D canopy structure and geometric properties. Differences in canopy height reflect crop health and vigor; thus, canopy height features have been found to be strongly correlated with biomass, whether used separately or in conjunction with spectral features. Many studies have used 3D canopy structural features to estimate biomass in a variety of agricultural crops, such as soybean [6], black oat [29], maize [37,38], winter wheat [7,39], and barley [30]. Canopy height can often be derived from photogrammetry-based or Light Detection and Ranging (LiDAR) point clouds. Bendig et al. [30] reported a strong correlation between barley biomass and canopy height derived from photogrammetry-based point clouds. Combining canopy spectral and structural features has also shown great potential for biomass estimation in many crops [7,26,40].

Canopy height and density vary as plants mature; thus, characterizing spatial changes in the plant canopy is very helpful. One limitation of spectral indices is that they fail to capture the spatial variability of pixel intensity between neighboring pixels within an image [41]. Canopy textural features, on the other hand, offer valuable insights into the spatial distribution and patterns of pixel intensities in an image. They quantify changes in pixel values among neighboring pixels within a defined analysis window [42,43]. Canopy textural features are often used to smooth differences in canopy structure and geometric features [12] and to reduce background interference [43]. These features have been widely applied in image classification [44,45] and forest biomass estimation [46,47], yet their potential for agricultural crop biomass estimation is less explored. Few studies have examined textural features and their combination with VIs for biomass estimation in agricultural crops [48,49]. Liu et al. [49] found that incorporating textural features derived from multispectral imagery reduced the RMSE by 7.3–15.7% when estimating winter oilseed biomass. Similar results were reported by Zheng et al. [48] for rice biomass, where adding textural features to multispectral VIs improved the estimates.

Combining canopy structural and textural features with spectral features derived from UAV images has the potential to deliver better estimates of biomass and crop grain yield than using a single feature type [12,49,50,51]. To the best of our knowledge, no prior study has explored combining canopy textural features with multispectral VIs and canopy height features for oat biomass estimation. In recent years, many studies have evaluated statistical and machine learning (ML)-based regression techniques for estimating biophysical traits in a variety of crops, such as winter wheat [7,52], potato [16,50], and maize [38]. Biomass growth typically follows a complex, non-linear pattern and is therefore hard to model effectively with linear statistical regression techniques [8,53]. ML algorithms such as Random Forest, Support Vector Machine, Gradient Boosting, and Neural Networks are increasingly popular in remote sensing-based biomass estimation because they can model complex non-linear relationships between crop biomass and remote sensing variables [41,54]. Partial Least Squares Regression (PLSR), Random Forest Regression (RFR), and Support Vector Regression (SVR) are widely used for crop biomass estimation. Fu et al. [55] highlighted the flexibility and efficiency of PLSR-based modelling for predicting winter wheat biomass. Similarly, Wang et al. [36] reported that PLS and RF regressions performed well in estimating winter wheat biomass at multiple growth stages. SVR has been found to adapt well to complex data and can be used effectively in biomass estimation studies [56]. Sharma et al. [57] also emphasized the benefits of PLS, SVR, and RF regression for oat biomass estimation. However, the combination of canopy spectral, structural, and textural features derived from UAV-based multispectral imagery to predict oat biomass using ML algorithms has not been explored.
This study aimed to examine the effectiveness of combining canopy textural and structural features with multispectral VIs for estimating oat biomass. The specific objectives were to (i) evaluate the contributions of canopy structural and textural features to biomass estimation; (ii) examine whether fusing multiple features (canopy spectral, structural, and textural) improves the accuracy of oat biomass estimation models; and (iii) compare the performance of different ML models for oat biomass estimation.

2. Materials and Methods

2.1. Test Site and Field Data Acquisition

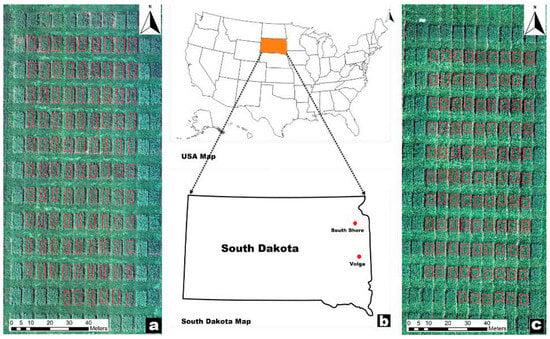

A forage trial was planted at two sites in South Dakota (Volga and South Shore) in 2020 and 2021 (Figure 1). The trial included six oat genotypes (Jerry, Rushmore, Warrior, SD150081, SD120665, and SD150012) planted at two seeding rates (approximately 150 and 300 seeds/m²) at a depth of approximately 0.038 m. Each plot was 1.524 m by 1.219 m. The trials were managed using recommended agronomic practices.

Figure 1.

Locations of the testing sites (b) and imagery of the experimental plots at each site in 2021 ((a) South Shore and (c) Volga).

The experimental design was a randomized complete block design with eight replications. Replications 1, 2, and 3 were harvested at booting, replications 4, 5, and 6 at heading, and replications 7 and 8 at the milk stage (Table 1). Harvesting at multiple growth stages and planting at two seeding rates ensured a wider range of biomass values.
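As a consistency check, the factorial structure described above can be enumerated. Assuming every genotype × seeding rate × replication combination at each site-year contributed one plot-level sample, the count reproduces the n = 384 data points used for modelling in Section 2.4.2. This sketch only lists treatment combinations and the replication-to-stage mapping; it does not reproduce the randomization of plots within blocks.

```python
from itertools import product

# Factor levels as described in Section 2.1.
years = [2020, 2021]
locations = ["Volga", "South Shore"]
genotypes = ["Jerry", "Rushmore", "Warrior", "SD150081", "SD120665", "SD150012"]
seeding_rates = [150, 300]  # approximate seeds per m^2
replications = range(1, 9)

def harvest_stage(rep):
    """Replications 1-3 -> booting, 4-6 -> heading, 7-8 -> milk."""
    if rep <= 3:
        return "booting"
    if rep <= 6:
        return "heading"
    return "milk"

# One record per plot: every combination of site-year, genotype, seeding rate, and replication.
plots = [
    {"year": yr, "location": loc, "genotype": g, "seeding_rate": s,
     "rep": rep, "stage": harvest_stage(rep)}
    for yr, loc, g, s, rep in product(years, locations, genotypes, seeding_rates, replications)
]

print(len(plots))  # 2 * 2 * 6 * 2 * 8 = 384
for stage in ("booting", "heading", "milk"):
    print(stage, sum(1 for p in plots if p["stage"] == stage))
```

Each harvest stage receives 48 plots per replication (2 years × 2 locations × 6 genotypes × 2 seeding rates), which is why the milk stage, with two replications, contributes fewer samples than the other two stages.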

Table 1.

Planting and harvesting dates for the forage oat variety trial conducted at South Shore and Volga in 2020 and 2021.

Oat growth was monitored throughout the growing season. Canopy height was measured and biomass was sampled in the field on each harvest day. Canopy height was measured on a representative plant selected to reflect the average height of all plants within a plot. This measurement, taken just before harvesting, recorded the distance from the soil surface to the tip of the chosen plant’s panicle.

To obtain above-ground fresh biomass, the plots were cut close to the ground using a Jari mower. The border rows on both sides of each plot were excluded from harvest to ensure precise biomass sampling. A small subsample of the harvested biomass was collected, weighed, and dried in an oven at 75 °C until a constant weight was reached. Dry matter content (%) was calculated by dividing the dry weight of the subsample by its fresh weight and multiplying by 100. Dry biomass yield was calculated by multiplying the dry matter content by the fresh weight of the harvested biomass and was expressed in kilograms per hectare (kg/ha).
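The dry matter and yield calculations reduce to two short formulas. In this sketch the subsample and plot weights are hypothetical, and the `harvested_area_m2` argument is an assumed input: the actual harvested area (plot area minus the excluded border rows) is not reported here, so 1.0 m² is used purely for illustration.

```python
def dry_matter_percent(sub_fresh_g, sub_dry_g):
    """Dry matter content (%) = dry subsample weight / fresh subsample weight * 100."""
    return sub_dry_g / sub_fresh_g * 100.0

def dry_biomass_kg_per_ha(plot_fresh_kg, dm_percent, harvested_area_m2):
    """Convert plot fresh weight to dry biomass per hectare (1 ha = 10,000 m^2)."""
    dry_kg = plot_fresh_kg * dm_percent / 100.0
    return dry_kg * 10_000.0 / harvested_area_m2

# Hypothetical example values, not measurements from this study.
dm = dry_matter_percent(sub_fresh_g=500.0, sub_dry_g=125.0)
yield_kg_ha = dry_biomass_kg_per_ha(plot_fresh_kg=4.0, dm_percent=dm, harvested_area_m2=1.0)
print(dm, yield_kg_ha)  # 25.0 10000.0
```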

2.2. UAV Data Acquisition and Image Preprocessing

Aerial images were collected on each biomass harvest day with a DJI Phantom 4 Pro UAV (SZ DJI Technology Co., Shenzhen, China) (Figure 2c) equipped with a Double 4K multispectral camera (Sentera Inc., Minneapolis, MN, USA) (Figure 2b). The camera has a 12.3-megapixel BSI CMOS Sony Exmor R™ IMX377 sensor and captures five spectral bands: blue, green, red, red-edge, and near-infrared (NIR). The central wavelength and full width at half maximum (FWHM) bandwidth of each spectral band are presented in Table 2.

Figure 2.

UAV systems and their setup in the field. (a) DJI Phantom 4 Pro with the attached Sentera Double 4K sensor; (b) Sentera Double 4K sensor (zoomed view); (c) DJI Phantom 4 Pro (top view); (d) MicaSense calibrated reflectance panel; (e) white poly tarps used as ground control points (GCPs).

Table 2.

Center wavelength and full width at half maximum (FWHM) bandwidth of each spectral band of Sentera Double 4K multispectral sensor.

The UAV flights were conducted on sunny, cloud-free days with minimal shadows and low wind speeds (gusts below 12 miles per hour). Flights were performed at an altitude of 25 m with 80% front and side overlap. Four white woven polypropylene bags (0.35 m by 0.66 m), fixed in the ground at the corners of the fields throughout the growing season, were used as Ground Control Points (GCPs) for georeferencing (Figure 2e). The geographic coordinates of these GCPs were measured using Mesa® Rugged Tablets (Juniper Systems, Logan, UT, USA). Additionally, a calibration panel (MicaSense Inc., Seattle, WA, USA) with known reflectance factors was used for radiometric correction (Figure 2d).

Digital Surface Models (DSMs) and orthomosaic images were generated using Pix4Dmapper v4.8.0 (Pix4D S.A., Prilly, Switzerland). The process involved three main steps: initial processing for key point computation and image matching; radiometric calibration to convert raw digital numbers to reflectance values; and DSM generation to represent surface elevation. Georeferencing was performed in QGIS v3.20 (QGIS Development Team, Open Source Geospatial Foundation) using the GNSS coordinates recorded for the four GCPs. The georeferenced images were saved in TIFF format and exported to ArcMap to create a five-band orthomosaic, extract spectral indices, and generate the Digital Terrain Model (DTM).
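Pix4Dmapper performs radiometric calibration internally. The basic idea of panel-based correction can be illustrated with a one-point, zero-offset empirical line: the ratio of the panel's known reflectance to its mean digital number (DN) gives a gain that converts every DN in a band to reflectance. This is a simplified sketch under stated assumptions (linear sensor response, no dark offset, constant illumination between panel and field images), not Pix4Dmapper's actual algorithm; `panel_calibrate` and the example values are hypothetical.

```python
import numpy as np

def panel_calibrate(dn_band, panel_dn_mean, panel_reflectance):
    """Convert raw DNs to reflectance with a one-point, zero-offset empirical line.

    Assumes DN is proportional to reflectance and that illumination did not
    change between the panel image and the field images.
    """
    gain = panel_reflectance / panel_dn_mean  # reflectance per DN
    # Clip to the physically meaningful reflectance range [0, 1].
    return np.clip(dn_band.astype(float) * gain, 0.0, 1.0)

# Hypothetical values: a 50% reflectance panel imaged at a mean DN of 20,000.
dn = np.array([[4000, 8000], [16000, 30000]])
refl = panel_calibrate(dn, panel_dn_mean=20000.0, panel_reflectance=0.50)
print(refl)
```

A two-point (dark and bright target) empirical line would additionally estimate an offset; the single-panel version here is the minimal case.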

2.3. Features Extraction

After image preprocessing, several types of features were extracted as input variables for oat biomass estimation: canopy spectral features (VIs), canopy structural features (canopy height metrics), and canopy textural features. A rectangular region of interest (ROI) was defined for each plot on the images, and a polygon shapefile was created in ArcMap (Esri, Redlands, CA, USA). Plot-level statistics were obtained by averaging the pixel values of the spectral, structural, and textural raster layers within each ROI using the zonal statistics tool.
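The study used ArcMap's zonal statistics tool; the same plot-level averaging can be sketched in NumPy. This minimal sketch assumes the plot polygons have already been rasterized into an integer array of plot IDs aligned pixel-for-pixel with the feature raster (0 marking pixels outside any plot). The rasterization step and the `zonal_mean` helper are hypothetical.

```python
import numpy as np

def zonal_mean(raster, zones):
    """Mean raster value per zone ID, skipping zone 0 (background) and NaN pixels."""
    stats = {}
    for zone_id in np.unique(zones):
        if zone_id == 0:
            continue  # pixels outside any plot ROI
        values = raster[(zones == zone_id) & ~np.isnan(raster)]
        stats[int(zone_id)] = float(values.mean()) if values.size else float("nan")
    return stats

# Hypothetical NDVI raster and rasterized plot IDs (two plots).
ndvi = np.array([[0.2, 0.4, 0.6, 0.8],
                 [0.3, 0.5, 0.7, np.nan],
                 [0.1, 0.1, 0.9, 0.9]])
plot_ids = np.array([[1, 1, 2, 2],
                     [1, 1, 2, 2],
                     [0, 0, 2, 2]])
print(zonal_mean(ndvi, plot_ids))
```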

2.3.1. Spectral Features

Ten VIs were calculated from the reflectance bands (blue, green, red, red-edge, and NIR) of the multispectral sensor (Table 3): seven multispectral VIs (1–7 in Table 3) and three true-color VIs (8–10 in Table 3). These VIs were selected because previous studies reported that they correlate well with biomass and are sensitive to changes in greenness and vegetation vigor [20,57,58,59].
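Table 3 lists the full set of ten VIs; only NDVI, NDRE, and GLI are named in the text, so this sketch computes those three from per-pixel reflectance using their commonly published formulas. The exact formulations in Table 3 may differ, and the helper names are hypothetical.

```python
import numpy as np

def normalized_ratio(num, den):
    """Element-wise num / den, returning NaN where the denominator is zero."""
    out = np.full(num.shape, np.nan)
    np.divide(num, den, out=out, where=den != 0)
    return out

def vegetation_indices(blue, green, red, red_edge, nir):
    """NDVI, NDRE, and GLI from reflectance arrays of identical shape."""
    blue, green, red, red_edge, nir = (
        np.asarray(b, dtype=float) for b in (blue, green, red, red_edge, nir)
    )
    return {
        "NDVI": normalized_ratio(nir - red, nir + red),
        "NDRE": normalized_ratio(nir - red_edge, nir + red_edge),
        "GLI": normalized_ratio(2 * green - red - blue, 2 * green + red + blue),
    }

# Hypothetical reflectance of a single vegetated pixel.
vis = vegetation_indices(blue=[0.04], green=[0.08], red=[0.05], red_edge=[0.20], nir=[0.40])
print({k: round(float(v[0]), 3) for k, v in vis.items()})
```

Because the same functions operate element-wise, they can be applied to whole band rasters before the plot-level averaging step.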

Table 3.

Details of spectral features used in this study to predict biomass in oats.

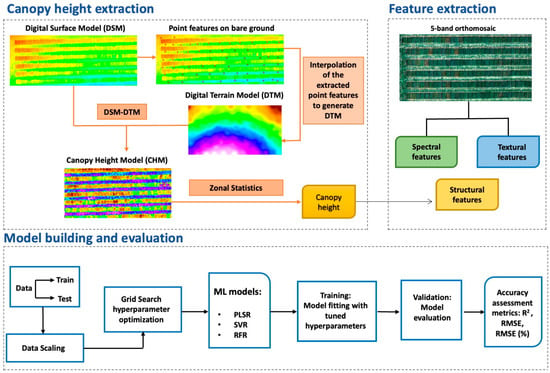

2.3.2. Structural Features

Height features were derived from digital photogrammetry-based point clouds. The Digital Surface Model (DSM) generated in Pix4Dmapper was imported into ArcGIS. The DSM represents the elevation of the uppermost surface, including both bare ground and canopy. Because preplant bare-soil UAV imagery was not available to generate a Digital Terrain Model (DTM), which represents the elevation of the soil surface, bare-ground points were selected from the DSM and interpolated using the Inverse Distance Weighting (IDW) method to produce a raster layer of ground surface elevation. The Canopy Height Model (CHM), representing vegetation height, was then obtained by subtracting the DTM from the DSM (CHM = DSM − DTM). Figure 3 illustrates the canopy height extraction process within the overall workflow.
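The DTM-from-DSM step can be sketched with a plain IDW interpolator followed by the CHM subtraction. This is a minimal sketch assuming the bare-ground pixels have already been identified (here, hand-picked row/column indices), square pixels, and a power parameter of 2, a common default. The ArcGIS IDW settings used in the study (power, search radius, number of neighbours) are not reported, so both helpers are hypothetical.

```python
import numpy as np

def idw_interpolate(xy_known, z_known, xy_query, power=2.0):
    """Inverse Distance Weighting: each estimate is a weighted mean with weights 1 / d**power."""
    xy_known = np.asarray(xy_known, dtype=float)
    z_known = np.asarray(z_known, dtype=float)
    xy_query = np.asarray(xy_query, dtype=float)
    # Pairwise distances between query and known points, shape (n_query, n_known).
    d = np.linalg.norm(xy_query[:, None, :] - xy_known[None, :, :], axis=-1)
    z_out = np.empty(len(xy_query))
    for q in range(len(xy_query)):
        hit = d[q] == 0
        if hit.any():
            z_out[q] = z_known[hit][0]  # query falls exactly on a ground point
        else:
            w = 1.0 / d[q] ** power
            z_out[q] = np.sum(w * z_known) / np.sum(w)
    return z_out

def canopy_height_model(dsm, ground_rows, ground_cols, power=2.0):
    """Interpolate a DTM from bare-ground DSM pixels; return (CHM, DTM) with CHM = DSM - DTM."""
    rows, cols = np.indices(dsm.shape)
    grid_xy = np.column_stack([rows.ravel(), cols.ravel()])  # pixel coordinates
    ground_xy = np.column_stack([ground_rows, ground_cols])
    ground_z = dsm[ground_rows, ground_cols]
    dtm = idw_interpolate(ground_xy, ground_z, grid_xy, power).reshape(dsm.shape)
    return dsm - dtm, dtm

# Toy 5 x 5 DSM (m): flat ground at 100 m with a 0.8 m tall canopy patch in the middle.
dsm = np.full((5, 5), 100.0)
dsm[1:4, 1:4] = 100.8
ground_rows = np.array([0, 0, 4, 4])
ground_cols = np.array([0, 4, 0, 4])
chm, dtm = canopy_height_model(dsm, ground_rows, ground_cols)
print(np.round(chm, 2))
```

Errors in the interpolated DTM propagate directly into the CHM: wherever the DTM overestimates the ground elevation, CHM values become negative, which is consistent with the negative minimum heights discussed in Section 3.2.1.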

Figure 3.

Workflow of feature extraction and model development for biomass estimation in this study.

The CHM and the plot shapefile were then used in ArcGIS to calculate height features for each plot, including mean height (Hmean), maximum height (Hmax), minimum height (Hmin), median height (Hmedian), height standard deviation (Hstd), and the 90th, 93rd, and 95th height percentiles (Hp90, Hp93, and Hp95) (Table 4).
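The plot-level height features listed above can be sketched from the CHM pixels falling inside one plot ROI. This sketch assumes NumPy's default linear-interpolation percentile and the population standard deviation (ddof = 0); ArcGIS may use different percentile and standard deviation definitions, and the CHM values are hypothetical.

```python
import numpy as np

def height_features(chm_pixels):
    """Plot-level canopy height statistics from the CHM pixels inside one plot ROI."""
    h = np.asarray(chm_pixels, dtype=float)
    h = h[~np.isnan(h)]  # drop no-data pixels
    return {
        "Hmean": h.mean(),
        "Hmax": h.max(),
        "Hmin": h.min(),
        "Hmedian": np.median(h),
        "Hstd": h.std(),  # population standard deviation (ddof=0)
        "Hp90": np.percentile(h, 90),
        "Hp93": np.percentile(h, 93),
        "Hp95": np.percentile(h, 95),
    }

plot_chm = np.append(np.linspace(0.0, 1.0, 11), np.nan)  # hypothetical CHM values (m)
feats = height_features(plot_chm)
print({k: round(float(v), 3) for k, v in feats.items()})
```

Upper percentiles such as Hp90 are less sensitive than Hmax to isolated noisy pixels, which is one reason they are commonly used as canopy height summaries.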

Table 4.

Digital photogrammetry-based point cloud-derived canopy structural features used in this study to predict biomass in oats.

2.3.3. Textural Features

Textural features for the plots in each image were computed using the Gray Level Co-occurrence Matrix (GLCM) texture algorithm introduced by Haralick et al. [70]. The GLCM describes the spatial relationship of pixel pairs in an image and is one of the most widely used image texture measures in remote sensing. Eight GLCM textural features were calculated in ENVI 5.6.1 for each of the five bands: variance (VAR), mean (ME), homogeneity (HOM), dissimilarity (DIS), contrast (CON), entropy (ENT), angular second moment (ASE), and correlation (COR). A 3 × 3 moving window was used for the calculations. Further details about these textural features can be found in [71]. Table 5 lists the GLCM textural features used in this study. Each texture name is prefixed with “R-”, “G-”, “B-”, “NIR-”, or “Red edge-” to indicate the band on which it was computed (e.g., R-ME denotes the GLCM mean of the red band).
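The GLCM computation can be sketched for a single analysis window: quantize reflectance to gray levels, count co-occurring pixel pairs at one offset, normalize, and derive the eight measures. This is a minimal sketch, not ENVI's implementation; the gray-level count, the single horizontal offset, the natural-log entropy, and the helper names are assumptions. A moving-window version would evaluate this at every pixel to produce a texture raster, which is then averaged per plot.

```python
import numpy as np

def quantize(band, levels):
    """Rescale a reflectance window to integer gray levels 0..levels-1."""
    lo, hi = float(np.nanmin(band)), float(np.nanmax(band))
    if hi == lo:
        return np.zeros(band.shape, dtype=int)
    scaled = (band - lo) / (hi - lo)
    return np.minimum((scaled * levels).astype(int), levels - 1)

def glcm(window, levels, dx=1, dy=0):
    """Symmetric, normalized GLCM of a quantized window for one pixel offset (dx, dy)."""
    P = np.zeros((levels, levels))
    rows, cols = window.shape
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = window[r, c], window[r2, c2]
                P[i, j] += 1
                P[j, i] += 1  # count each pair in both directions (symmetric GLCM)
    return P / P.sum()

def glcm_features(P):
    """Haralick-style texture measures of a normalized, symmetric GLCM."""
    i, j = np.indices(P.shape)
    mean = np.sum(i * P)
    var = np.sum(P * (i - mean) ** 2)
    nonzero = P[P > 0]
    return {
        "ME": mean,
        "VAR": var,
        "HOM": np.sum(P / (1.0 + (i - j) ** 2)),
        "CON": np.sum(P * (i - j) ** 2),
        "DIS": np.sum(P * np.abs(i - j)),
        "ENT": -np.sum(nonzero * np.log(nonzero)),
        "ASE": np.sum(P ** 2),
        # Correlation is undefined for a constant window (zero variance).
        "COR": np.sum(P * (i - mean) * (j - mean)) / var if var > 0 else np.nan,
    }

# Hypothetical 3 x 3 NIR reflectance window.
window = np.array([[0.31, 0.35, 0.30],
                   [0.36, 0.29, 0.37],
                   [0.30, 0.38, 0.31]])
print(glcm_features(glcm(quantize(window, levels=8), levels=8)))
```

For a symmetric GLCM the row and column marginals are identical, so a single mean and variance suffice for the correlation term.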

Table 5.

The grey level co-occurrence matrix (GLCM) textural features and their definitions used in this study to predict biomass in oats.

2.4. Statistical Analysis

2.4.1. Data Preprocessing and Feature Selection

All data points, including UAV imagery features and the corresponding ground-truth biomass values from both years and both locations, were combined for further analysis and modeling (Table 6). The dataset included ground-truth biomass and UAV imagery features from all three harvests: the first three replications from the booting stage harvest, the next three from the heading stage harvest, and the final two from the milk stage harvest. The dataset was first screened for outliers with a preliminary statistical test. Then, the correlations between the canopy height features obtained from the CHM and the manually measured canopy height (Href) were calculated to assess the accuracy and quality of the UAV-based canopy height data. In addition, the correlations of the spectral, structural, and textural features with biomass were determined and used for feature selection.
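The correlation-based screening can be sketched with SciPy's `pearsonr`. The text does not state the exact selection rule, so this sketch assumes a feature is retained when its Pearson correlation with biomass is significant at α = 0.05; the data and the `select_by_correlation` helper are hypothetical. The same call can compare UAV-derived height features against Href.

```python
import numpy as np
from scipy.stats import pearsonr

def select_by_correlation(features, target, alpha=0.05):
    """Keep features whose Pearson correlation with the target is significant (p < alpha)."""
    selected = {}
    for name, values in features.items():
        r, p = pearsonr(values, target)
        if p < alpha:
            selected[name] = float(r)
    return selected

# Hypothetical plot-level data, not from the study.
biomass = np.arange(20, dtype=float)
pattern = np.tile([1.0, -1.0, -1.0, 1.0], 5)  # zero covariance with biomass by construction
features = {
    "Hp90": 0.05 * biomass + 0.1 * pattern,
    "NIR-COR": -0.5 * biomass + pattern,
    "noise": pattern,
}
print(select_by_correlation(features, biomass))
```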

Table 6.

Statistics of ground-truth oat biomass data (kg/ha) at different oat growth stages.

2.4.2. Biomass Estimation Modelling

Machine Learning Models

Three machine learning (ML) algorithms, Partial Least Squares Regression (PLSR), Random Forest Regression (RFR), and Support Vector Regression (SVR), were used to develop predictive models for oat biomass yield. The ML methods were implemented with the scikit-learn library [72] in Python 3.9.7.

Model Building and Evaluation

All data points (n = 384) were randomly split into a training dataset (70%) for model calibration and a testing dataset (30%) for model testing, and 10-fold cross-validation was performed on the training data. Features were scaled before model fitting. The best set of hyperparameters for each model was found by grid search cross-validation.

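To evaluate and compare model performance, the coefficient of determination (R²), root mean square error (RMSE), and relative RMSE (RMSE%) were calculated as follows:

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2}$$

$$\mathrm{RMSE\%} = \frac{\mathrm{RMSE}}{\bar{y}} \times 100\%$$

where $y_i$ and $\hat{y}_i$ are the measured and predicted biomass yield of sample i, respectively; $\bar{y}$ is the mean of the measured biomass yield; and n is the total number of samples in the validation set. A detailed workflow showing feature extraction (spectral, structural, and textural) and modelling using traditional ML algorithms for biomass estimation of oats is presented in Figure 3.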
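The three evaluation metrics can be computed directly from their definitions. This sketch assumes the usual form R² = 1 − SS_res / SS_tot and uses hypothetical measured and predicted values.

```python
from math import sqrt

def r2(measured, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(measured) / len(measured)
    ss_res = sum((y - p) ** 2 for y, p in zip(measured, predicted))
    ss_tot = sum((y - mean_y) ** 2 for y in measured)
    return 1.0 - ss_res / ss_tot

def rmse(measured, predicted):
    """Root mean square error, in the units of the measurements."""
    n = len(measured)
    return sqrt(sum((y - p) ** 2 for y, p in zip(measured, predicted)) / n)

def rmse_percent(measured, predicted):
    """RMSE relative to the mean measured value, in percent."""
    return rmse(measured, predicted) / (sum(measured) / len(measured)) * 100.0

measured = [4000.0, 6000.0, 8000.0]  # hypothetical biomass, kg/ha
predicted = [4000.0, 6000.0, 10000.0]
print(r2(measured, predicted), rmse(measured, predicted), rmse_percent(measured, predicted))
```

Because RMSE% divides by the mean measured biomass, it allows errors to be compared across datasets whose biomass levels differ, such as the two years in this study.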

3. Results and Discussions

3.1. Statistical Analysis of Biomass Data

Genotype, seeding rate, year, location, and growth stage all affected biomass yield. Growing conditions in 2020 were more favorable than in 2021 at both locations; as a result, biomass production was higher in 2020 than in 2021 (Table 6). In 2021, drought stress in June reduced mean biomass production by 63.37%. Location (Volga vs. South Shore) also affected biomass production. In 2020, South Shore produced the highest biomass (Table 6). Severe crown rust (Puccinia coronata f. sp. avenae) infections observed on susceptible cultivars at Volga that year likely contributed to the lower biomass production at that location compared with South Shore. In 2021, however, Volga produced higher biomass than South Shore (Table 6), likely because drought stress was more severe at South Shore. Finally, as expected, biomass production consistently increased at later growth stages: it increased by 56% between the booting and milk stages in 2020 and by 112% between those stages in 2021. Overall, a wide range of biomass was obtained in this study, from 2201.4 to 20,415.8 kg/ha (Table 6).

3.2. Correlation Analysis

3.2.1. Relationships between Manually Measured and UAV-Estimated Canopy Height

Pearson’s correlation coefficient (r) was calculated between the manually measured and UAV-estimated canopy height. Among the height features, Hp90, Hp93, and Hp95 showed the strongest correlation (r = 0.77) with the manually measured canopy height (Href) (Table 7). A similar relationship between the 90th percentile of UAV-estimated canopy height and manually measured canopy height was reported in a study predicting sorghum biomass [58]. In our study, some negative values were obtained for the minimum canopy height estimated from UAV imagery. These could be attributed to errors introduced during the interpolation step of DTM extraction, which added noise. Although deriving canopy height from the DSM alone is a low-cost solution, it is prone to error because it requires large bare buffer zones, which are not always available [16], and a high-resolution DTM, which is also difficult to obtain from multispectral imagery. Alternatively, a DTM can be generated from images of the bare ground captured before plant emergence. This approach would be more practical and would provide more reliable canopy height estimates, provided that an adequate number of ground control points is available.

Table 7.

Pearson correlation coefficient (r) of manually measured canopy height with UAV-derived height features.

3.2.2. Relationships between UAV Imagery-Extracted Features and Biomass

Pearson’s correlation coefficient (r) was calculated between biomass and the spectral, structural, and textural features extracted from UAV imagery (Tables 8 and 9). Among the three feature types, structural features were the most strongly correlated with biomass. All spectral and structural features (except Hmin) showed significant positive correlations with biomass (Table 8).

Table 8.

Pearson correlation coefficient (r) of oat biomass with VIs and canopy height features.

Table 9.

Pearson correlation coefficient (r) of oat biomass with textural features.

Among the spectral features, GLI showed the strongest correlation with biomass (r = 0.63). Previous studies have highlighted the usefulness of GLI for predicting biomass and detecting green vegetation. Taugourdeau et al. [73] found GLI to be among the most important variables for estimating herbaceous above-ground biomass in Sahelian rangelands, and another study found GLI to be highly sensitive in detecting green vegetation [74]. However, other studies reported GLI to be a weak indicator for biomass estimation [75,76]. Among the NIR-based VIs, NDRE showed the strongest correlation with biomass (r = 0.49) (Table 8). NDRE is based on the red-edge band, which is less prone to optical saturation than the red band and therefore remains sensitive at higher plant densities [34].

Among the structural features, Hp90 showed the strongest correlation with biomass (r = 0.74) (Table 8). The correlation between biomass and manually measured canopy height was 0.88, higher than the correlations between biomass and the height features derived from UAV multispectral imagery. Many studies have reported reliable UAV-derived canopy height estimates (r ≥ 0.8) [12,58] and, consequently, strong correlations between biomass and UAV-derived canopy height. In our study, despite the lower agreement between UAV-derived and manually measured heights (r = 0.77), most canopy height features still showed moderate to strong correlations with biomass. These results suggest that canopy height is an important indicator of biomass, as it directly reflects plant growth, and can be used to quickly estimate oat biomass.

Not all textural features were significantly correlated with biomass (Table 9), and those with significant correlations were all negatively correlated with biomass (r = −0.1 to −0.7). The GLCM textural feature with the strongest negative correlation with biomass yield was the correlation (COR) computed on the NIR band (r = −0.7) (Table 9). In general, the COR features showed stronger negative correlations with biomass than the other texture measures (Table 9). The GLCM correlation measures the linear dependence of gray levels between neighboring pixel pairs. Liu et al. [50] also reported strong correlations between potato biomass and canopy textural features (COR, CON, HOM) calculated on RGB bands, suggesting that canopy textural features are suitable for predicting biomass.

3.3. Oat Biomass Estimation Analysis

The PLSR, SVR, and RFR models were used to predict oat biomass from the spectral, structural, and textural features derived from UAV multispectral imagery, both individually and in combination. Ten VIs were calculated from the five spectral bands, and the eight texture measures computed for each of the five bands yielded 40 textural features, of which 24 were selected based on their correlation with biomass. In total, 44 features (10 VIs, 10 canopy height features, and 24 textural features) were used for modelling. The model testing statistics for biomass estimation are presented in Table 10.

Table 10.

Validation statistics of oat biomass yield estimation using three machine learning methods.

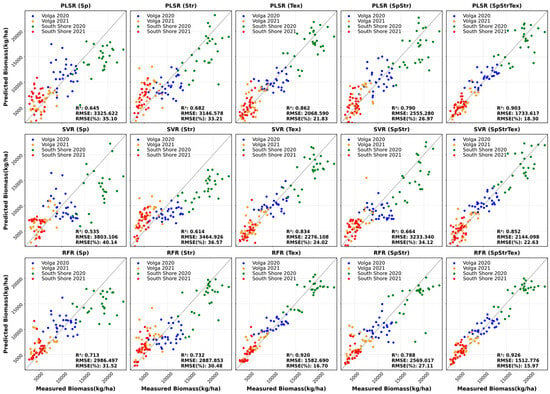

3.3.1. Spectral Feature-Based Biomass Estimation

Variations in seeding rate, genotype, and growing environment (two locations and 2 years) led to variation in oat canopy growth, which in turn led to differences in canopy spectral reflectance. Biomass estimation models were built with the PLSR, SVR, and RFR methods using the 10 VIs as input variables. The R² values of the three models ranged from 0.53 to 0.71 (Table 10). RFR achieved the highest estimation accuracy (R² = 0.71) and the lowest estimation error (RMSE% = 35.12%). The better fit of the RFR model compared with the other two ML models is also visible in the scatter plots, where the data points converge more closely toward the bisector (black dashed line) (Figure 4). SVR yielded the lowest accuracy (R² = 0.53) and the highest error (RMSE% = 40.14%). The VIs were significantly correlated with biomass (Table 8), suggesting that spectral features derived from UAV-based multispectral imagery are important indicators for oat biomass estimation. Biomass estimation using canopy spectral features has been reported extensively in the literature, and many studies have shown the usefulness of multiple VIs for biomass estimation; our results agree with these previous studies.

Figure 4.

Scatter plots of measured vs. predicted oat biomass yield using different models and input features.

Notably, all three models based on spectral features underestimated biomass at higher values (Figure 4). One possible reason is the optical saturation of the VIs. Similar results were observed in winter cover crop biomass estimation [20] and in soybean biomass and LAI estimation [77]. VIs based on the NIR and red bands (e.g., NDVI) tend to saturate in dense canopies [34,78], which results in poor performance of predictive models. Prabhakara et al. [20] reported that NDVI showed asymptotic saturation at higher rye biomass (>1500 kg/ha). VIs are also environment- and sensor-specific [79], and they often do not reflect 3D canopy structure and geometric patterns.

3.3.2. Structural Feature-Based Biomass Estimation

Structural features such as canopy height metrics were also used to estimate oat biomass. In this study, canopy structural features yielded better estimates than spectral features (Table 10): models based on structural features achieved noticeably higher accuracies (R² from 0.61 to 0.73 and RMSE% from 30.48 to 36.57%) than models based on canopy spectral features. RFR again exhibited the highest estimation accuracy (R² = 0.73, RMSE% = 30.48%). The higher R² and lower RMSE% show that structural features improved oat biomass estimation, which is also reflected in the closer convergence of the points toward the bisector (Figure 4). Nonetheless, the SVR model still underestimated higher biomass values.

A possible explanation for the better performance of models based on structural features is that canopy structural features provide three-dimensional canopy information and can better reflect canopy growth and biomass. In addition, unlike spectral indices, canopy structural features do not suffer from asymptotic saturation [77]. Many studies have validated the potential of structural features for biomass estimation. Bendig et al. [30] reported that canopy height derived from a Crop Surface Model (CSM) is a suitable indicator of barley biomass; they tested five different models based on canopy height and predicted biomass with an R² of 0.8. Similarly, a study on rice [80] showed good biomass estimates from canopy height (R² = 0.68–0.81). Acorsi et al. [29] also successfully estimated fresh and dry biomass of black oats using structural features (R² from 0.69 to 0.81).

Many studies have highlighted the benefit of combining canopy height with VIs rather than using them separately, which resulted in robust and improved estimates of biomass yield [7,31,40,81]. Consistent with these studies, our results show that combining spectral and structural features yielded higher estimation accuracy than using spectral or structural features alone, with R2 ranging from 0.66 to 0.79 and RMSE% ranging from 26.97 to 34.12% (Table 10). Canopy structural features provide information about canopy architecture that spectral features do not, and can, to some extent, overcome the saturation problem of spectral features. This was also observed in our study: combining spectral and structural features partly reduced the underestimation of samples with high biomass values, as shown by the scatter plots of measured vs. predicted oat biomass yield (Figure 4).

3.3.3. Textural Feature-Based Biomass Estimation

Textural features have been widely used for image classification and forest biomass estimation, and in recent years a few studies have also tested them for crop biomass estimation. In these studies, textural features are often used alone [71] or in conjunction with spectral features [48,49,52]. In our study, biomass estimation using textural features alone achieved higher accuracy than using either spectral or structural features, with R2 ranging from 0.834 to 0.92 and RMSE% from 16.7 to 24.02% (Table 10). The data points also converge more closely towards the bisector, which indicates the improved performance of texture-based biomass estimation (Figure 4). Because textural features are derived from spectral bands, they show some collinearity with the spectral features. However, unlike spectral features, textural features can characterize canopy architecture and structural patterns to some extent [82], as well as mitigate saturation and suppress the soil background effect. This could explain the superior performance of textural features over spectral features, which agrees with previous findings on forest biomass estimation [71,83].
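
Gray-level co-occurrence matrix (GLCM) textures [70] describe how often pairs of gray levels occur at a given pixel offset. The NumPy sketch below quantizes a single-band plot image, builds a symmetric, normalized GLCM for one offset, and derives four Haralick-style statistics. The number of gray levels, the offset, the helper names, and the chosen statistics are illustrative assumptions and may differ from the texture configuration used in this study.

```python
import numpy as np

def quantize(band, levels=8):
    """Linearly rescale a band to integer gray levels 0..levels-1."""
    band = np.asarray(band, dtype=float)
    lo, hi = band.min(), band.max()
    if hi == lo:
        return np.zeros(band.shape, dtype=int)
    q = np.floor((band - lo) / (hi - lo) * levels).astype(int)
    return np.clip(q, 0, levels - 1)

def glcm(q, levels, dx=1, dy=0):
    """Symmetric, normalized GLCM for a non-negative offset (dy, dx)."""
    rows, cols = q.shape
    a = q[: rows - dy, : cols - dx]  # reference pixels
    b = q[dy:, dx:]                  # neighbor pixels at the offset
    m = np.zeros((levels, levels), dtype=float)
    np.add.at(m, (a.ravel(), b.ravel()), 1.0)
    m = m + m.T                      # count each pair in both directions
    return m / m.sum()

def texture_features(p):
    """Haralick-style statistics from a normalized GLCM p."""
    i, j = np.indices(p.shape)
    nz = p[p > 0]
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
        # energy = sqrt(angular second moment), the convention used by scikit-image
        "energy": float(np.sqrt(np.sum(p ** 2))),
        "entropy": float(-np.sum(nz * np.log2(nz))),
    }

# Hypothetical single-band reflectance patch for one plot.
rng = np.random.default_rng(0)
patch = rng.uniform(0.2, 0.6, size=(32, 32))
q = quantize(patch, levels=8)
print(texture_features(glcm(q, levels=8)))
```

In practice, such statistics are often computed for several offsets or directions and averaged to reduce directional bias; scikit-image's `graycomatrix` and `graycoprops` offer a library alternative.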

Several studies have also combined canopy spectral and textural features to improve crop biomass estimation. Wengert et al. [84] found that GLCM-based textural features improved the estimation of barley dry biomass and leaf area index. Similar results were reported for above-ground biomass estimation of legume-grass mixtures [41], rice [48], and winter wheat [52]. Notably, in this study, using only textural features provided higher estimation accuracy than combining spectral and structural features. A possible explanation is that textural features take into account the spatial variation among pixels and provide additional information about the physical structure of the canopy, canopy edges, and overall canopy architecture [85,86].
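
The argument that texture captures spatial information absent from per-pixel spectral values can be checked on a toy case: two hypothetical patches containing exactly the same pixel values (hence the same plot mean) but arranged differently. The sketch computes GLCM contrast for a horizontal one-pixel offset, written directly as the mean squared gray-level difference between neighbors, which equals the sum of p(i, j)(i − j)² over the normalized GLCM. The patches and helper name are illustrative assumptions.

```python
import numpy as np

def horizontal_contrast(img):
    """GLCM contrast for a horizontal 1-pixel offset.

    Equivalent to sum(p(i, j) * (i - j)**2) over the normalized GLCM,
    i.e. the mean squared gray-level difference between neighbors.
    """
    img = np.asarray(img)
    a, b = img[:, :-1].ravel(), img[:, 1:].ravel()
    return float(np.mean((a - b) ** 2))

# Hypothetical 4 x 4 patches of quantized gray levels (0 = soil, 3 = dense leaf).
clumped = np.array([
    [0, 0, 3, 3],
    [0, 0, 3, 3],
    [0, 0, 3, 3],
    [0, 0, 3, 3],
])
alternating = np.array([
    [0, 3, 0, 3],
    [3, 0, 3, 0],
    [0, 3, 0, 3],
    [3, 0, 3, 0],
])

for name, img in [("clumped", clumped), ("alternating", alternating)]:
    print(name, "mean =", img.mean(), "contrast =", horizontal_contrast(img))
```

Both patches have the same mean, so a plot-mean spectral feature cannot tell them apart, whereas their contrast differs threefold.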

3.3.4. Data Fusion and Biomass Estimation

Adding textural features to the spectral and structural features provided the most accurate estimates of oat biomass. For all three regression models, fusing all three feature types yielded better estimates than using a single feature type or combining two types, with R2 varying from 0.85 to 0.92 and RMSE% ranging from 15.97% to 22.63%. However, combining all three feature types did not substantially improve estimation accuracy compared with using textural features alone (Table 10). It is also noteworthy that PLSR with textural features alone achieved slightly higher accuracy than SVR with all three feature types combined. This could be associated with information overlap and redundancy among canopy spectral, structural, and textural features [77].
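
Feature-level fusion of this kind amounts to concatenating the per-plot feature groups column-wise before modelling. The sketch below builds the five input scenarios compared in this section (spectral, structural, textural, spectral + structural, and all three) from hypothetical feature matrices; the group sizes, scenario labels, and helper names are assumptions for illustration.

```python
import numpy as np

def build_scenarios(spectral, structural, textural):
    """Return the five input-feature scenarios as {name: (n_plots, n_features)}."""
    return {
        "Spe": spectral,
        "Str": structural,
        "Tex": textural,
        "Spe+Str": np.hstack([spectral, structural]),
        "Spe+Str+Tex": np.hstack([spectral, structural, textural]),
    }

def standardize(x):
    """Z-score each column so features with different units share one scale."""
    mu = x.mean(axis=0)
    sd = x.std(axis=0)
    sd[sd == 0] = 1.0  # leave constant columns at zero instead of dividing by 0
    return (x - mu) / sd

rng = np.random.default_rng(42)
n_plots = 96                                 # hypothetical number of observations
spectral = rng.normal(size=(n_plots, 10))    # e.g., VIs and band reflectances
structural = rng.normal(size=(n_plots, 6))   # e.g., canopy height metrics
textural = rng.normal(size=(n_plots, 32))    # e.g., GLCM statistics per band

scenarios = build_scenarios(spectral, structural, textural)
for name, x in scenarios.items():
    print(name, standardize(x).shape)
```

In a real pipeline, the scaling statistics should be estimated on the training folds only, so that information from the test plots does not leak into the model.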

The improved performance of the three-feature combination can also be seen in the scatter plots of measured vs. predicted biomass (Figure 4): the data points converge more closely towards the bisector (black dashed line). However, all three models consistently underestimated the higher biomass values to some extent, which is likely attributable to optical saturation. Overall, compared with spectral features alone, combining structural with spectral features increased R2 by 5–14%, whereas combining all three feature types increased R2 by roughly 28–56%, depending on the model.
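
The percentage gains in R2 quoted above are relative increases. Assuming they are computed against the spectral-only model as (R2_fused − R2_base) / R2_base × 100, a minimal helper is shown below; the helper name and the R2 values in the example are hypothetical, not the values in Table 10.

```python
def relative_gain(r2_base, r2_new):
    """Relative increase in R2 (%) of a fused-feature model over a baseline."""
    if r2_base <= 0:
        raise ValueError("baseline R2 must be positive for a relative gain")
    return 100.0 * (r2_new - r2_base) / r2_base

# Hypothetical R2 values for one model.
r2_spectral = 0.60
r2_fused = 0.84
print(f"{relative_gain(r2_spectral, r2_fused):.1f}% increase in R2")
```

Here an absolute difference of 0.24 in R2 corresponds to a 40% relative gain, so relative and absolute conventions should not be mixed when comparing studies.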

Several studies have combined multiple types of information, integrating non-spectral (3D, thermal) or textural features with spectral features, to estimate biomass in grasslands [26,87,88] and cultivated crops. Many studies have combined spectral and textural features [48,49] or spectral and structural features [40,89] to improve biomass estimation accuracy in agricultural crops. A soybean study [12] highlighted the benefits of fusing multiple features and multi-source information for grain yield estimation (R2 = 0.72). Future research should further evaluate the benefits of feature fusion (spectral, structural, thermal, and textural features) and multi-source data fusion (RGB, hyperspectral, LiDAR) for oat biomass estimation.

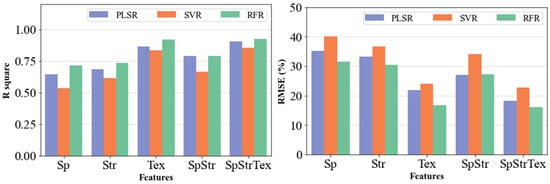

3.4. Performance of Different ML Models

Figure 5 shows the performance of each model in estimating biomass with five different input feature combinations. Based on R2 and RMSE%, the RFR model outperformed the other two models for oat biomass estimation, irrespective of the input features. In all five combinations, SVR yielded the lowest R2 and highest RMSE%, with its poorest estimates obtained when only spectral features were used as independent variables (Figure 4 and Table 10). Across all five input feature scenarios, RFR and PLSR performed very similarly, with RFR slightly better. The best performance was obtained with the RFR model using all three feature types (R2 = 0.92, RMSE% = 15.97%).

Figure 5.

Oat biomass yield estimation performance of the different models with various input feature types and numbers.

Random forest is a tree-based ensemble learning method that grows many decision trees, each on a bootstrap sample of the training data, and averages their predictions, which allows it to model complex non-linear relationships [90]. It has gained considerable attention in crop biomass modelling because of its short training time, high accuracy, stability, and robustness. Our results are consistent with many other studies that have demonstrated the superiority of RFR in modelling biomass and yield-related variables [26,41,84,91].
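
The ensemble idea can be made concrete with scikit-learn's `RandomForestRegressor`, whose prediction is the average of the predictions of its fitted trees (exposed as `estimators_`). The sketch below checks this on synthetic data; the data and settings are illustrative only.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(1)
X = rng.uniform(0, 1, size=(200, 5))
y = np.sin(4 * X[:, 0]) + X[:, 1] ** 2 + rng.normal(scale=0.05, size=200)

# Each tree is grown on a bootstrap sample; max_features limits the features
# considered at each split, which decorrelates the trees.
rf = RandomForestRegressor(n_estimators=100, max_features=0.6, random_state=0)
rf.fit(X, y)

X_new = rng.uniform(0, 1, size=(10, 5))
tree_preds = np.stack([tree.predict(X_new) for tree in rf.estimators_])
manual_mean = tree_preds.mean(axis=0)

print("forest output equals tree average:", np.allclose(manual_mean, rf.predict(X_new)))
print("spread across trees:", tree_preds.std(axis=0).round(3))
```

The spread across trees shows how individual trees disagree; averaging them is what gives the forest its stability relative to a single tree.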

3.5. Limitations and Future Work

The digital terrain model (DTM) used in our study has notable limitations. Because no bare-ground imagery was acquired before crop emergence, we generated the DTM using Inverse Distance Weighting (IDW) interpolation. A DTM generated this way may contain inherent errors related to shadows, interpolation, and the discrimination of ground from off-ground surfaces, potentially resulting in underestimated canopy heights [92]. Therefore, we recommend acquiring bare-ground imagery before crop emergence to improve the reliability of the DTM.
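
Inverse distance weighting estimates the terrain elevation of each grid cell as a weighted average of ground-point elevations, with weights 1/d^p. The NumPy sketch below interpolates a small DTM from hypothetical ground points; the power p = 2, the use of all points (no search radius or neighbor limit), the helper name, and the coordinates are assumptions, not the settings used in this study.

```python
import numpy as np

def idw_grid(points_xy, z, grid_x, grid_y, power=2.0):
    """Interpolate elevations z at scattered points onto a regular grid by IDW."""
    points_xy = np.asarray(points_xy, dtype=float)
    z = np.asarray(z, dtype=float)
    gx, gy = np.meshgrid(grid_x, grid_y)  # rows follow y, columns follow x
    cells = np.column_stack([gx.ravel(), gy.ravel()])
    # Pairwise distances, shape (n_cells, n_points).
    d = np.linalg.norm(cells[:, None, :] - points_xy[None, :, :], axis=2)
    out = np.empty(len(cells))
    exact = d == 0
    hit = exact.any(axis=1)
    # A cell that coincides with a ground point takes that point's elevation.
    out[hit] = z[np.argmax(exact[hit], axis=1)]
    w = 1.0 / d[~hit] ** power
    out[~hit] = (w @ z) / w.sum(axis=1)
    return out.reshape(gx.shape)

# Hypothetical ground points (x, y in m) sampled between plots; elevation in m.
ground_xy = [(0, 0), (10, 0), (0, 10), (10, 10)]
ground_z = [500.0, 500.4, 500.2, 500.6]
dtm = idw_grid(ground_xy, ground_z, np.linspace(0, 10, 5), np.linspace(0, 10, 5))
print(dtm.round(3))
```

Because IDW only averages the supplied ground points, every interpolated value lies between their minimum and maximum, so terrain undulations between sampled points cannot be recovered; this is one reason a pre-emergence bare-ground survey is preferable.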

For future work, we propose several directions. First, transfer learning techniques hold significant potential for improving model generalizability, especially when predicting biomass across different years and locations [93]. A valuable approach is to use one location for training and another for testing, or likewise one year for training and another for testing. This allows the spatial and temporal transferability of models to be assessed, which would be of substantial value for future research. In addition, factors beyond the imagery itself, such as soil properties, rainfall, irrigation, weather conditions, and solar elevation angle, play a significant role in biomass estimation. Integrating weather, soil, and crop management information could help improve the precision and reliability of oat biomass estimates.
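
The cross-location and cross-year evaluation proposed above corresponds to leave-one-group-out validation: each group (a location or a year) is held out in turn while the model is trained on the remaining groups. A dependency-free sketch of the split logic follows; the group labels and helper name are hypothetical, and scikit-learn's `LeaveOneGroupOut` provides equivalent splits.

```python
import numpy as np

def leave_one_group_out(groups):
    """Yield (held_out_group, train_idx, test_idx) for each distinct group."""
    groups = np.asarray(groups)
    for g in np.unique(groups):
        test = np.flatnonzero(groups == g)
        train = np.flatnonzero(groups != g)
        yield g, train, test

# Hypothetical plot labels: two locations observed in two years.
location = np.array(["Loc1", "Loc1", "Loc2", "Loc2", "Loc1", "Loc2"])
year = np.array([2020, 2021, 2020, 2021, 2021, 2020])

for g, train, test in leave_one_group_out(location):
    print(f"test on {g}: train={train.tolist()}, test={test.tolist()}")
for g, train, test in leave_one_group_out(year):
    print(f"test on {g}: train={train.tolist()}, test={test.tolist()}")
```

Unlike random k-fold splits, these splits never place observations from the held-out location or year in the training set, so the resulting accuracy reflects transferability rather than interpolation within the same environment.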

4. Conclusions

This study investigated the potential of UAV-derived spectral, structural, and textural features, and their combinations, for predicting oat biomass yield. The results show that combining multiple feature types can outperform spectral features alone for predicting oat biomass. The importance of canopy structural features for estimating plant biomass was highlighted by the strong relationships observed between biomass and UAV-derived canopy height. Canopy textural features also proved to be an important indicator of oat biomass, as models using textural features achieved higher estimation accuracy than models using spectral or structural features alone. Canopy structural and textural features likely characterize canopy architecture more accurately than spectral features and may also help overcome the saturation issues associated with spectral features. All three machine learning algorithms used in this study performed well in oat biomass estimation, with RFR producing slightly higher estimation accuracies.

These findings indicate that integrating multiple feature types, including spectral, structural, and textural features, offers a promising avenue for accurate oat biomass estimation. The underestimation of higher biomass values points to the need for further research on the optical saturation issue. Future investigations should also explore the advantages of incorporating additional variables, such as soil properties, weather conditions, and crop management information, to improve the robustness and precision of oat biomass predictions. This study contributes to the growing body of research on precision agriculture and remote sensing applications and paves the way for more comprehensive and reliable oat biomass estimation techniques.

Author Contributions

Conceptualization, M.C.; methodology, M.C., M.M., J.C. and R.D.; formal analysis, M.C., M.M. and R.D.; investigation, M.C., J.C. and R.D.; data curation, R.D., M.M. and M.C.; writing—original draft preparation, R.D.; writing—review and editing, M.C., M.M., J.C. and R.D.; supervision, J.C., M.M. and M.C.; funding acquisition, M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the South Dakota Crop Improvement Association, the South Dakota Agricultural Experiment Station, and USDA NIFA under Hatch project SD00H529-14.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in the study will be available from the corresponding author upon request.

Acknowledgments

The authors appreciate the support of the members of SDSU’s oat breeding lab, especially Nicholas Hall, Paul Okello, and Krishna Ghimire, for their technical support, and of the SDSU Department of Agronomy, Horticulture and Plant Science for providing some of the research resources.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bibi, H.; Hameed, S.; Iqbal, M.; Al-Barty, A.; Darwish, H.; Khan, A.; Anwar, S.; Mian, I.A.; Ali, M.; Zia, A. Evaluation of exotic oat (Avena sativa L.) varieties for forage and grain yield in response to different levels of nitrogen and phosphorous. PeerJ 2021, 9, e12112.

- McCartney, D.; Fraser, J.G.C.; Ohama, A. Annual cool season crops for grazing by beef cattle. A Canadian Review. Can. J. Anim. Sci. 2008, 88, 517–533.

- Kim, K.S.; Tinker, N.A.; Newell, M.A. Improvement of oat as a winter forage crop in the Southern United States. Crop Sci. 2014, 54, 1336–1346.

- Beck, P.; Jennings, J.; Rogers, J. Management of pastures in the upper south: The I-30 and I-40 Corridors. In Management Strategies for Sustainable Cattle Production in Southern Pastures; Elsevier: Amsterdam, The Netherlands, 2020; pp. 189–226.

- Tang, Z.; Parajuli, A.; Chen, C.J.; Hu, Y.; Revolinski, S.; Medina, C.A.; Lin, S.; Zhang, Z.; Yu, L.-X. Validation of UAV-based alfalfa biomass predictability using photogrammetry with fully automatic plot segmentation. Sci. Rep. 2021, 11, 3336.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation index weighted canopy volume model (CVMVI) for soybean biomass estimation from unmanned aerial system-based RGB imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41.

- Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708.

- Wang, T.; Liu, Y.; Wang, M.; Fan, Q.; Tian, H.; Qiao, X.; Li, Y. Applications of UAS in Crop Biomass Monitoring: A Review. Front. Plant Sci. 2021, 12, 595.

- Matese, A.; Toscano, P.; Di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of UAV, aircraft and satellite remote sensing platforms for precision viticulture. Remote Sens. 2015, 7, 2971–2990.

- Zaman-Allah, M.; Vergara, O.; Araus, J.; Tarekegne, A.; Magorokosho, C.; Zarco-Tejada, P.; Hornero, A.; Albà, A.H.; Das, B.; Craufurd, P. Unmanned aerial platform-based multi-spectral imaging for field phenotyping of maize. Plant Methods 2015, 11, 1–10.

- Xie, C.; Yang, C. A review on plant high-throughput phenotyping traits using UAV-based sensors. Comput. Electron. Agric. 2020, 178, 105731.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

- Sozzi, M.; Kayad, A.; Gobbo, S.; Cogato, A.; Sartori, L.; Marinello, F. Economic comparison of satellite, plane and UAV-acquired NDVI images for site-specific nitrogen application: Observations from Italy. Agronomy 2021, 11, 2098.

- Bhandari, M.; Ibrahim, A.M.; Xue, Q.; Jung, J.; Chang, A.; Rudd, J.C.; Maeda, M.; Rajan, N.; Neely, H.; Landivar, J. Assessing winter wheat foliage disease severity using aerial imagery acquired from small Unmanned Aerial Vehicle (UAV). Comput. Electron. Agric. 2020, 176, 105665.

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Netw. 2020, 172, 107148.

- Li, B.; Xu, X.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Liu, J.; Jin, L. Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172.

- Sanches, G.M.; Duft, D.G.; Kölln, O.T.; Luciano, A.C.d.S.; De Castro, S.G.Q.; Okuno, F.M.; Franco, H.C.J. The potential for RGB images obtained using unmanned aerial vehicle to assess and predict yield in sugarcane fields. Int. J. Remote Sens. 2018, 39, 5402–5414.

- Théau, J.; Lauzier-Hudon, É.; Aubé, L.; Devillers, N. Estimation of forage biomass and vegetation cover in grasslands using UAV imagery. PLoS ONE 2021, 16, e0245784.

- Duan, T.; Zheng, B.; Guo, W.; Ninomiya, S.; Guo, Y.; Chapman, S.C. Comparison of ground cover estimates from experiment plots in cotton, sorghum and sugarcane based on images and ortho-mosaics captured by UAV. Funct. Plant Biol. 2017, 44, 169–183.

- Prabhakara, K.; Hively, W.D.; McCarty, G.W. Evaluating the relationship between biomass, percent groundcover and remote sensing indices across six winter cover crop fields in Maryland, United States. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 88–102.

- Walsh, O.S.; Shafian, S.; Marshall, J.M.; Jackson, C.; McClintick-Chess, J.R.; Blanscet, S.M.; Swoboda, K.; Thompson, C.; Belmont, K.M.; Walsh, W.L. Assessment of UAV based vegetation indices for nitrogen concentration estimation in spring wheat. Adv. Remote Sens. 2018, 7, 71–90.

- Caturegli, L.; Corniglia, M.; Gaetani, M.; Grossi, N.; Magni, S.; Migliazzi, M.; Angelini, L.; Mazzoncini, M.; Silvestri, N.; Fontanelli, M. Unmanned aerial vehicle to estimate nitrogen status of turfgrasses. PLoS ONE 2016, 11, e0158268.

- Zhou, X.; Zheng, H.; Xu, X.; He, J.; Ge, X.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.

- Duan, B.; Fang, S.; Zhu, R.; Wu, X.; Wang, S.; Gong, Y.; Peng, Y. Remote Estimation of Rice Yield With Unmanned Aerial Vehicle (UAV) Data and Spectral Mixture Analysis. Front. Plant Sci. 2019, 10, 204.

- Song, Y.; Wang, J.; Shan, B. Estimation of Winter Wheat Yield from UAV-Based Multi-Temporal Imagery Using Crop Allometric Relationship and SAFY Model. Drones 2021, 5, 78.

- Viljanen, N.; Honkavaara, E.; Näsi, R.; Hakala, T.; Niemeläinen, O.; Kaivosoja, J. A novel machine learning method for estimating biomass of grass swards using a photogrammetric canopy height model, images and vegetation indices captured by a drone. Agriculture 2018, 8, 70.

- Johansen, K.; Morton, M.J.L.; Malbeteau, Y.; Aragon, B.; Al-Mashharawi, S.; Ziliani, M.G.; Angel, Y.; Fiene, G.; Negrão, S.; Mousa, M.A.A.; et al. Predicting Biomass and Yield in a Tomato Phenotyping Experiment Using UAV Imagery and Random Forest. Front. Artif. Intell. 2020, 3, 28.

- Masjedi, A.; Zhao, J.; Thompson, A.M.; Yang, K.-W.; Flatt, J.E.; Crawford, M.M.; Ebert, D.S.; Tuinstra, M.R.; Hammer, G.; Chapman, S. Sorghum biomass prediction using UAV-based remote sensing data and crop model simulation. In Proceedings of the IGARSS 2018 - 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 7719–7722.

- Acorsi, M.G.; das Dores Abati Miranda, F.; Martello, M.; Smaniotto, D.A.; Sartor, L.R. Estimating Biomass of Black Oat Using UAV-Based RGB Imaging. Agronomy 2019, 9, 344.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging. Remote Sens. 2014, 6, 10395–10412.

- Tilly, N.; Aasen, H.; Bareth, G. Fusion of plant height and vegetation indices for the estimation of barley biomass. Remote Sens. 2015, 7, 11449–11480.

- Swain, K.C.; Thomson, S.J.; Jayasuriya, H.P. Adoption of an unmanned helicopter for low-altitude remote sensing to estimate yield and total biomass of a rice crop. Trans. ASABE 2010, 53, 21–27.

- Calou, V.B.; Teixeira, A.d.S.; Moreira, L.C.; Rocha, O.C.d.; Silva, J.A.d. Estimation of maize biomass using Unmanned Aerial Vehicles. Eng. Agrícola 2019, 39, 744–752.

- Mutanga, O.; Skidmore, A.K. Narrow band vegetation indices overcome the saturation problem in biomass estimation. Int. J. Remote Sens. 2004, 25, 3999–4014.

- Wu, B.; Zhang, M.; Zeng, H.; Tian, F.; Potgieter, A.B.; Qin, X.; Yan, N.; Chang, S.; Zhao, Y.; Dong, Q. Challenges and opportunities in remote sensing-based crop monitoring: A review. Natl. Sci. Rev. 2023, 10, nwac290.

- Wang, F.; Yang, M.; Ma, L.; Zhang, T.; Qin, W.; Li, W.; Zhang, Y.; Sun, Z.; Wang, Z.; Li, F. Estimation of above-ground biomass of winter wheat based on consumer-grade multi-spectral UAV. Remote Sens. 2022, 14, 1251.

- Li, W.; Niu, Z.; Wang, C.; Huang, W.; Chen, H.; Gao, S.; Li, D.; Muhammad, S. Combined use of airborne LiDAR and satellite GF-1 data to estimate leaf area index, height, and aboveground biomass of maize during peak growing season. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4489–4501.

- Wang, C.; Nie, S.; Xi, X.; Luo, S.; Sun, X. Estimating the biomass of maize with hyperspectral and LiDAR data. Remote Sens. 2017, 9, 11.

- Yuan, W.; Li, J.; Bhatta, M.; Shi, Y.; Baenziger, P.S.; Ge, Y. Wheat height estimation using LiDAR in comparison to ultrasonic sensor and UAS. Sensors 2018, 18, 3731.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

- Grüner, E.; Wachendorf, M.; Astor, T. The potential of UAV-borne spectral and textural information for predicting aboveground biomass and N fixation in legume-grass mixtures. PLoS ONE 2020, 15, e0234703.

- Li, S.; Yuan, F.; Ata-Ul-Karim, S.T.; Zheng, H.; Cheng, T.; Liu, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cao, Q. Combining Color Indices and Textures of UAV-Based Digital Imagery for Rice LAI Estimation. Remote Sens. 2019, 11, 1763.

- Lu, J.; Eitel, J.U.; Engels, M.; Zhu, J.; Ma, Y.; Liao, F.; Zheng, H.; Wang, X.; Yao, X.; Cheng, T. Improving unmanned aerial vehicle (UAV) remote sensing of rice plant potassium accumulation by fusing spectral and textural information. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102592.

- Ciriza, R.; Sola, I.; Albizua, L.; Álvarez-Mozos, J.; González-Audícana, M. Automatic detection of uprooted orchards based on orthophoto texture analysis. Remote Sens. 2017, 9, 492.

- Murray, H.; Lucieer, A.; Williams, R. Texture-based classification of sub-Antarctic vegetation communities on Heard Island. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, 138–149.

- Sarker, L.R.; Nichol, J.E. Improved forest biomass estimates using ALOS AVNIR-2 texture indices. Remote Sens. Environ. 2011, 115, 968–977.

- Dube, T.; Mutanga, O. Investigating the robustness of the new Landsat-8 Operational Land Imager derived texture metrics in estimating plantation forest aboveground biomass in resource constrained areas. ISPRS J. Photogramm. Remote Sens. 2015, 108, 12–32.

- Zheng, H.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2019, 20, 611–629.

- Liu, Y.; Liu, S.; Li, J.; Guo, X.; Wang, S.; Lu, J. Estimating biomass of winter oilseed rape using vegetation indices and texture metrics derived from UAV multispectral images. Comput. Electron. Agric. 2019, 166, 105026.

- Liu, Y.; Feng, H.; Yue, J.; Li, Z.; Yang, G.; Song, X.; Yang, X.; Zhao, Y. Remote-sensing estimation of potato above-ground biomass based on spectral and spatial features extracted from high-definition digital camera images. Comput. Electron. Agric. 2022, 198, 107089.

- Liu, Y.; Feng, H.; Yue, J.; Fan, Y.; Bian, M.; Ma, Y.; Jin, X.; Song, X.; Yang, G. Estimating potato above-ground biomass by using integrated unmanned aerial system-based optical, structural, and textural canopy measurements. Comput. Electron. Agric. 2023, 213, 108229.

- Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

- Zha, H.; Miao, Y.; Wang, T.; Li, Y.; Zhang, J.; Sun, W.; Feng, Z.; Kusnierek, K. Improving unmanned aerial vehicle remote sensing-based rice nitrogen nutrition index prediction with machine learning. Remote Sens. 2020, 12, 215.

- Yoosefzadeh-Najafabadi, M.; Earl, H.J.; Tulpan, D.; Sulik, J.; Eskandari, M. Application of machine learning algorithms in plant breeding: Predicting yield from hyperspectral reflectance in soybean. Front. Plant Sci. 2021, 11, 2169.

- Fu, Y.; Yang, G.; Wang, J.; Song, X.; Feng, H. Winter wheat biomass estimation based on spectral indices, band depth analysis and partial least squares regression using hyperspectral measurements. Comput. Electron. Agric. 2014, 100, 51–59.

- Duan, B.; Liu, Y.; Gong, Y.; Peng, Y.; Wu, X.; Zhu, R.; Fang, S. Remote estimation of rice LAI based on Fourier spectrum texture from UAV image. Plant Methods 2019, 15, 124.

- Sharma, P.; Leigh, L.; Chang, J.; Maimaitijiang, M.; Caffé, M. Above-Ground Biomass Estimation in Oats Using UAV Remote Sensing and Machine Learning. Sensors 2022, 22, 601.

- Li, J.; Shi, Y.; Veeranampalayam-Sivakumar, A.-N.; Schachtman, D.P. Elucidating sorghum biomass, nitrogen and chlorophyll contents with spectral and morphological traits derived from unmanned aircraft system. Front. Plant Sci. 2018, 9, 1406.

- Devia, C.A.; Rojas, J.P.; Petro, E.; Martinez, C.; Mondragon, I.F.; Patiño, D.; Rebolledo, M.C.; Colorado, J. High-throughput biomass estimation in rice crops using UAV multispectral imagery. J. Intell. Robot. Syst. 2019, 96, 573–589.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

- Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

- Gitelson, A.A.; Merzlyak, M.N. Remote estimation of chlorophyll content in higher plant leaves. Int. J. Remote Sens. 1997, 18, 2691–2697.

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Richardson, A.J.; Wiegand, C. Distinguishing vegetation from soil background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Jordan, C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.

- Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto Int. 2001, 16, 65–70.

- Neto, J.C. A Combined Statistical-Soft Computing Approach for Classification and Mapping Weed Species in Minimum-Tillage Systems; The University of Nebraska-Lincoln: Lincoln, NE, USA, 2004.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. Syst. 1973, SMC-3, 610–621.

- Nichol, J.E.; Sarker, M.L.R. Improved biomass estimation using the texture parameters of two high-resolution optical sensors. IEEE Trans. Geosci. Remote Sens. 2010, 49, 930–948.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Taugourdeau, S.; Diedhiou, A.; Fassinou, C.; Bossoukpe, M.; Diatta, O.; N’Goran, A.; Auderbert, A.; Ndiaye, O.; Diouf, A.A.; Tagesson, T. Estimating herbaceous aboveground biomass in Sahelian rangelands using Structure from Motion data collected on the ground and by UAV. Ecol. Evol. 2022, 12, e8867.

- Eng, L.S.; Ismail, R.; Hashim, W.; Baharum, A. The use of VARI, GLI, and VIgreen formulas in detecting vegetation in aerial images. Int. J. Technol. 2019, 10, 1385–1394.

- Lussem, U.; Bolten, A.; Gnyp, M.; Jasper, J.; Bareth, G. Evaluation of RGB-based vegetation indices from UAV imagery to estimate forage yield in grassland. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 1215–1219.

- Mao, P.; Qin, L.; Hao, M.; Zhao, W.; Luo, J.; Qiu, X.; Xu, L.; Xiong, Y.; Ran, Y.; Yan, C. An improved approach to estimate above-ground volume and biomass of desert shrub communities based on UAV RGB images. Ecol. Indic. 2021, 125, 107494.

- Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58.

- Heege, H.; Reusch, S.; Thiessen, E. Prospects and results for optical systems for site-specific on-the-go control of nitrogen-top-dressing in Germany. Precis. Agric. 2008, 9, 115–131.

- Huang, Z.; Turner, B.J.; Dury, S.J.; Wallis, I.R.; Foley, W.J. Estimating foliage nitrogen concentration from HYMAP data using continuum removal analysis. Remote Sens. Environ. 2004, 93, 18–29.

- Willkomm, M.; Bolten, A.; Bareth, G. Non-Destructive Monitoring of Rice by Hyperspectral in-Field Spectrometry and UAV-Based Remote Sensing: Case Study of Field-Grown Rice in North Rhine-Westphalia, Germany. In Proceedings of the 2016 XXIII ISPRS Congress, Prague, Czech Republic, 12–19 July 2016.

- Possoch, M.; Bieker, S.; Hoffmeister, D.; Bolten, A.; Schellberg, J.; Bareth, G. Multi-temporal crop surface models combined with the RGB vegetation index from UAV-based images for forage monitoring in grassland. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 991. [Google Scholar] [CrossRef]

- Zhang, J.; Cheng, T.; Shi, L.; Wang, W.; Niu, Z.; Guo, W.; Ma, X. Combining spectral and texture features of UAV hyperspectral images for leaf nitrogen content monitoring in winter wheat. Int. J. Remote Sens. 2022, 43, 2335–2356. [Google Scholar] [CrossRef]

- Schumacher, P.; Mislimshoeva, B.; Brenning, A.; Zandler, H.; Brandt, M.; Samimi, C.; Koellner, T. Do red edge and texture attributes from high-resolution satellite data improve wood volume estimation in a semi-arid mountainous region? Remote Sens. 2016, 8, 540.

- Wengert, M.; Piepho, H.-P.; Astor, T.; Graß, R.; Wijesingha, J.; Wachendorf, M. Assessing spatial variability of barley whole crop biomass yield and leaf area index in silvoarable agroforestry systems using UAV-borne remote sensing. Remote Sens. 2021, 13, 2751.

- Huang, X.; Zhang, L.; Wang, L. Evaluation of morphological texture features for mangrove forest mapping and species discrimination using multispectral IKONOS imagery. IEEE Geosci. Remote Sens. Lett. 2009, 6, 393–397.

- Colombo, R.; Bellingeri, D.; Fasolini, D.; Marino, C.M. Retrieval of leaf area index in different vegetation types using high resolution satellite data. Remote Sens. Environ. 2003, 86, 120–131.

- Näsi, R.; Viljanen, N.; Kaivosoja, J.; Alhonoja, K.; Hakala, T.; Markelin, L.; Honkavaara, E. Estimating biomass and nitrogen amount of barley and grass using UAV and aircraft based spectral and photogrammetric 3D features. Remote Sens. 2018, 10, 1082.

- Moeckel, T.; Safari, H.; Reddersen, B.; Fricke, T.; Wachendorf, M. Fusion of ultrasonic and spectral sensor data for improving the estimation of biomass in grasslands with heterogeneous sward structure. Remote Sens. 2017, 9, 98.

- Lu, N.; Zhou, J.; Han, Z.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 2019, 15, 17.

- Freeman, E.A.; Moisen, G.G.; Coulston, J.W.; Wilson, B.T. Random forests and stochastic gradient boosting for predicting tree canopy cover: Comparing tuning processes and model performance. Can. J. For. Res. 2016, 46, 323–339.

- Zhou, X.; Zhu, X.; Dong, Z.; Guo, W. Estimation of biomass in wheat using random forest regression algorithm and remote sensing data. Crop J. 2016, 4, 212–219.

- Gevaert, C.; Persello, C.; Nex, F.; Vosselman, G. A deep learning approach to DTM extraction from imagery using rule-based training labels. ISPRS J. Photogramm. Remote Sens. 2018, 142, 106–123.

- Wang, T.; Crawford, M.M.; Tuinstra, M.R. A novel transfer learning framework for sorghum biomass prediction using UAV-based remote sensing data and genetic markers. Front. Plant Sci. 2023, 14, 1138479.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).