1. Introduction

Many multi-robot systems, including swarm robotics, are inspired by collective behaviors observed in nature. The topic has emerged as a promising concept with numerous applications across various domains [

1]. The development of key elements underlying self-organization, namely, collaboration, cooperation, and robustness, has enabled breakthroughs in many challenging and time-consuming tasks [

2,

3,

4].

Multi-robot systems demonstrate their efficiency by sharing tasks among robots. For instance, they can rapidly cover a designated geographic area or perform search and tracking, particularly in the context of rescue missions [

5,

6,

7]. Along the same lines, data exchange (including images and positions) can prevent collisions and assist the group in navigating complex environments, as is required for inspecting critical infrastructures [

8].

Other advantages of these systems are their flexibility, adaptability, and robustness. Thanks to a decentralized approach facilitated by communication among group members, robots can reorganize themselves in response to dynamic and often unpredictable changes even when a few robots become inoperative. These properties prevent system failure, making multi-robot systems desirable for long-lasting tasks such as environmental monitoring, where individual robots may take over for others due to failure or lack of energy [

9].

Behind these few examples, thousands of scientific papers and books have been published over the last few decades on the topic of swarm robotics (over 15,000 hits in google scholar for the last decade, and even more for multi-robot systems). These works range from purely theoretical studies to real experiments conducted in harsh environmental conditions. Researchers have explored centralized and decentralized methods as well as hybrid approaches, studied a wide array of robotic platforms, including aerial, ground, and underwater robots, and proposed applications spanning numerous domains. In addition, many surveys have been written in an attempt to classify the problems, approaches, methodologies, experimental platforms, and results.

The work presented in this paper focuses on multi-UAV systems, which are groups of identical Unmanned Aerial Vehicles (UAVs), sometimes referred as drones. While many works have been published in this field [

10,

11,

12], the present paper specifically addresses the problem of pattern formation. In the context of this study, the goal consists of positioning a set of drones evenly along a predetermined planar shape, such as a circle, polygon, butterfly, etc. The term ‘evenly’ can be interpreted as maintaining equal distances between the drones or, alternatively, as arranging them at regular angular intervals from a fixed reference point along the shape. In this work, we investigate the latter option. Additionally, unlike several previous works described in [

3], the shape itself is defined before the mission by a human operator. Thus, it does not result from interactions among the robots, as is usually the case for swarm robotics.

The original motivation for this work stemmed from an industrial accident that occurred in Normandy in 2019 (

https://www.francetvinfo.fr/faits-divers/incendie/incendie-d-un-site-seveso-a-rouen/ (French television news), accessed on 29 November 2023). The Lubrizol petrochemical factory and several neighboring warehouses caught fire, and the toxic and dense cloud quickly spread high into the sky close to the city of Rouen. Due to its size and the proximity of houses, firefighters encountered great difficulty in controlling the fire. Later on, the debrief of this accident sparked discussions about possible future strategies for improving the conditions of action. One part of the discussion focused on the possibility of deploying a set of drones for observational purposes. The idea was to enable a multi-UAV system equipped with specific sensors [

13] to surround the cloud at a relevant distance, with each drone pointing its camera towards the source of the fire. The current paper presents a decentralized and flexible solution for achieving this goal. The implementation of such a solution faces several problems.

Information should arrive continuously from the multi-UAV system; thus, due to battery capacity limitations, the composition of the group may change during the mission. This constraint eliminates solutions relying on a leader or any form of centralization, such as the one proposed by Raja and his colleagues [

14]. Identifying the area of interest for observation and defining the shape delimiting this area is another issue. According to the situation, environment, and physical constraints, the area of interest may vary widely, invalidating all solutions targeting a specific set of shapes. In 2021, Huang and his colleagues [

15] performed 3D representation with a UAV fleet using graph theory. For

N UAVs, each 3D model is meshed by

N nodes at specific coordinates. When an event transition is triggered, the fleet of UAVs moves from one shape to another by solving a task assignment optimization problem and planning a collision-free trajectory. An artificial potential field is used to manage obstacle avoidance in real-time. This work shows a concrete realization of a robust system with six UAVs; however, the model for each desired shape is meshed by a central machine beforehand, and the number of drones is fixed.

In order to define a wide variety of shapes delimiting potential areas of interest, we propose the use of Fourier Descriptors (FDs) [

16,

17,

18]. When coupled with the Fourier Transform (FT), FDs allow a planar formation shape to be defined as a virtual rigid-body structure. Methods based on FDs ensure the representation and retrieval of a shape. They derive from a shape signature (in general, a 1D function) representing 2D areas or boundaries. Zhang and Lu [

19] compared several methods based on FDs on a set of complex shapes. This approach provides results for the centroid distance with better robustness, convergence speed, and computation complexity, and is able to handle open curves. The FT approximates the shape signature with a finite number of harmonics in order to transform an unknown function into a low-computational sum of sinusoidal functions. To cope with the reactivity and flexibility constraints of decentralized networks, we decided to use a composition of virtual actions [

20,

21,

22] to ensure collision avoidance. Each UAV adjusts its velocity according to the presence of static or dynamic obstacles in a defined range.

In the

Section 2, the FD and FT formulation used to depict the desired formation is described. The decentralized allocation of the bearing angle between robots when the number of UAVs is known prior to the mission and when this number changes during the mission is described and explained in

Section 3.

Section 4 discusses modeling and control, along with obstacle avoidance management. The simulation protocols and experiments are presented in

Section 5, followed by the results and commentary in

Section 5.3.

2. Designing Planar Shapes with Fourier Descriptors

2.1. Problem Statement

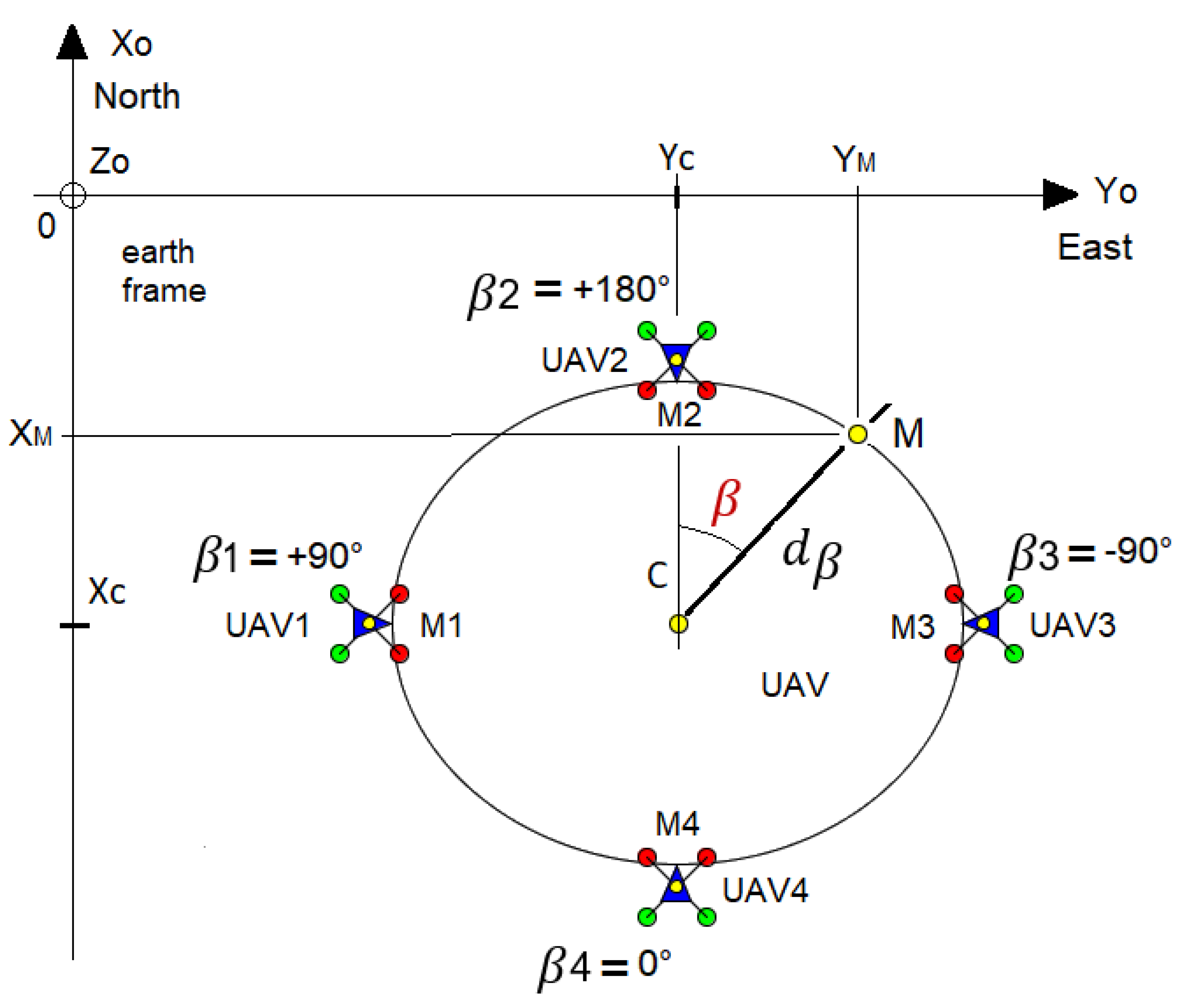

It is desirable to have a way to control the formation regardless of the number of UAVs. The formation should be maintained when the number of UAVs varies over time. For certain applications, conditions such as the number of deployed UAVs, target movement, objectives, etc., may vary during the execution of the mission. These variations may entail a change in the formation, such as its size, position, orientation, etc. Thus, it is appropriate to seek a way of describing the pattern irrespective of its absolute position, orientation, and size.

One way to ensure this is to use a combination of Fourier Descriptors (FDs) and the Fourier Transform (FT). This method allows shapes to be described through modifications while maintaining formation coherence using three parameters: amplitude, phase, and reference point.

In this study, we chose to equally distribute the UAVs (index i) around the shape with bearing angles (

) computed according to the number of available UAVs. It is worth noting that the number of UAVs can be unknown a priori; the decentralized method enabling the determination and allocation of the bearing angles between UAVs is described in

Section 3. Note that the term ‘equally’ (or ‘evenly’) refers to the angular distance from a UAV to a fixed reference point, not the Euclidean distance to that point or between UAVs. From now on, we consider that each UAV knows the bearing angle to be reached, which is implemented in the high-level control as a direction instruction.

Here, we want the UAV to find the coordinates of the point to be reached () while only knowing the reference point of the shape. Therefore, we have designed our functions to fit a real cyclic period of by describing them in polar coordinates from this point. These functions do not need to be continuous; they can be sampled or piecewise continuous. To allow the planar shape to rotate in 3D, a rotational quaternion is provided to all UAVs alongside the harmonics from the FT.

Each UAV has to regulate their distance errors from the point towards zero in order to reach (

). At all times, they must point towards the reference point, which is the opposite of the direction

(

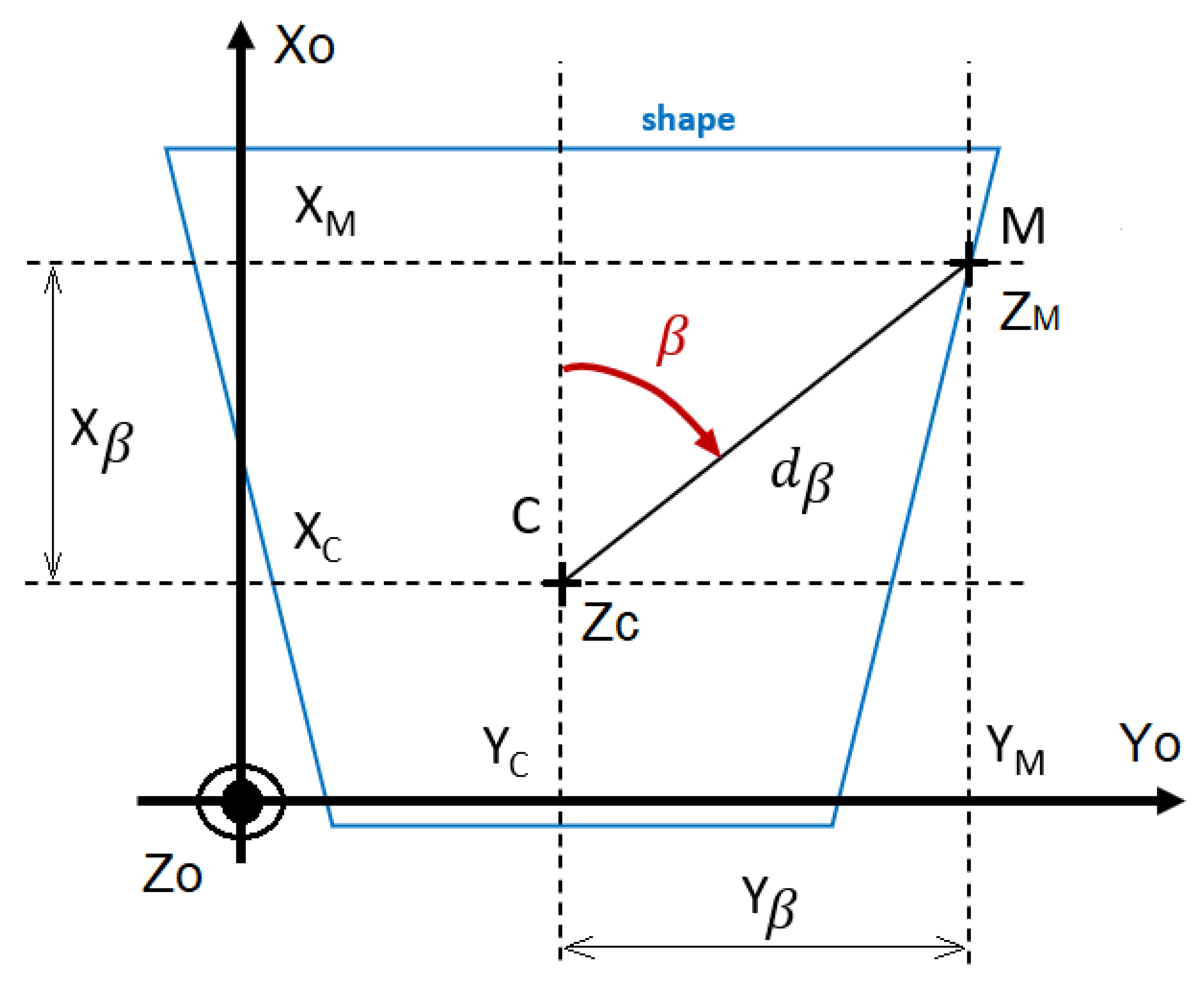

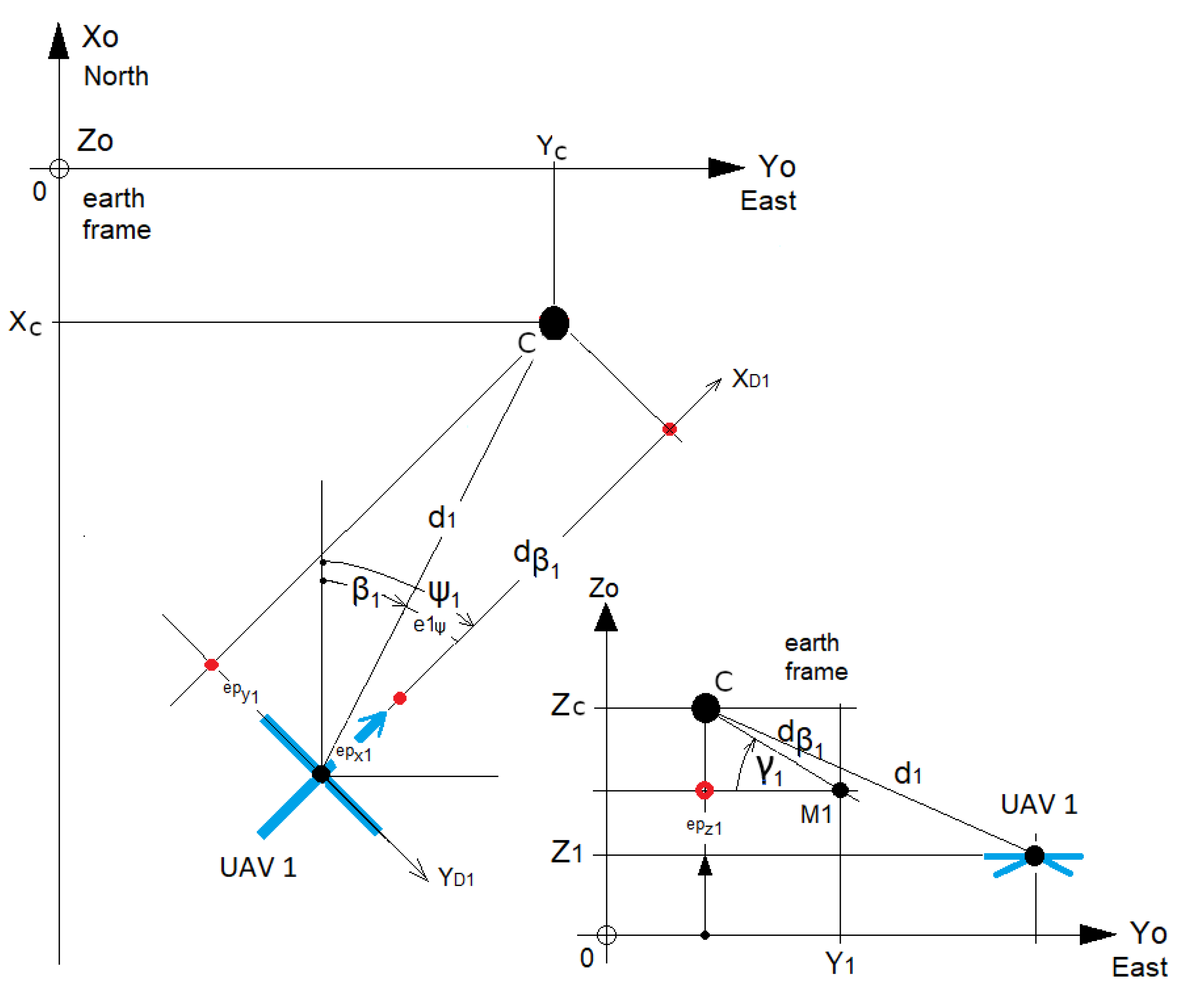

Figure 1).

2.2. Fourier Descriptor

Fourier descriptors [

16,

17,

19] describe a planar function

with a single variable

from a reference point

(

Figure 2). This one-dimensional function

is called the shape signature, and must be periodic.

In this study, we use the centroid distance to describe the signature shape

of the desired curve (

Figure 1 and

Figure 2), as it captures and retrieves complex patterns with the most accuracy [

19]. Additionally, it matches the polar description used to retrieve the desired position. It is expressed by the distance of the boundary points (

M) from the centroid (

C) of the shape. To match our problem statement, the reference point

C can be any point as long as one angle provides one possible distance.

Discrete Fourier Transformation (DFT) is applied to retrieve the harmonics (

1). Their number

depends on the sampling frequency

of the continuous function of the shape signature with respect the Nyquist–Shannon condition (

2);

N denotes the number of samples generated, and

is the associated angle of the sample

t parameter of the signature function.

The Fourier coefficients are determined using the DFT. The distance

from the reference point is computed with the bearing angle

and the coefficients:

: phase of the nth harmonic

: distance from the reference point C to the point M

: angle between the X (north) axis and the direction defined by point M and the reference point C

: distance along the X axis (north) of point M from the reference point C

: distance along the Y axis (east) of point M from the reference point C

2.3. Spatial Rotations

At this state, the position that is reached is determined by the altitude of the reference point

, and is purely planar (

Figure 2). Thus, a quaternion is defined to provide the third dimension of the planar shape, allowing 3D rotations. This only requires a revolutionary axis coupled with an angle.

Let

q the initial quaternion resulting from DF and let FT be defined as follows:

The rotational quaternion

is interpreted from the rotational angle

and the normalized vector

:

The new normalized position vector

, which is the result of the rotation of

q around

, can be expressed as

Next, the normalized position vector

is multiplied by the distance

to retrieve the desired position of point

M.

To conclude, the management of the formation involves leading each UAV i to their point defined around the 3D shape () while avoiding obstacles and collisions.

In summary, the method allows each drone to obtain its destination position belonging to the shape using four main information: the reference point of the shape, the harmonics, magnetic north, and the bearing angle. In the context of this work, we additionally want this whole process to be decentralized in order to guarantee its flexibility and robustness. Flexibility means that before the mission any drone might be replaced by another one without the need to assign it a unique identifier. Robustness means that drones may leave the formation during the mission, while others can arrive and insert themselves into the shape. Regarding the necessary information for performing the mission, only one parameter has to be determined in a decentralized and online way for the dynamic version. Notably, the reference point and harmonics are predefined mission parameters that remain constant throughout the mission. As far as the UAVs are concerned, magnetic north is assumed to be common knowledge. Thus, the only information that needs to be computed is the bearing angle. Allocating the bearing angles to the drones prior to the mission as part of their parameters is a possibility; however, this choice would make the method less flexible and less robust. In such a case, the bearing angles would have to be individually transmitted to each drone by a central device, which implies a unique identifier for each drone, which contradicts the core principles of decentralized algorithms. Furthermore, such an approach reduces robustness, as the number of drones cannot change during the mission. The next section addresses these issues by presenting the algorithms used to ensure decentralization.

3. Decentralized Allocation of Bearing Angles

In this section, we present algorithms that enable drones to self-determine their bearing angles through message exchanges. These algorithms are executed asynchronously by each drone. In all cases, the drones do not have identifiers. Thus, all broadcast messages are anonymous. Three cases are investigated:

Static and reliable communication: the size of the group (number of drones) is known by every drone and no messages are lost during the exchanges.

Static and unreliable communication: the number of UAVs is known and messages may be lost during communication.

Dynamic and reliable communication: the number of drones is unknown and may change during the mission, meaning that drones may leave the formation and new drones may join it, while no message are lost during communication.

In all scenarios, the drones communicate by broadcasting messages and the communication topology is a fully connected graph. Thus, no routing is needed. All of the drones have a compass, enabling every drone to consider magnetic north as the reference null angle. The drones have to be equally distributed over the shape; thus, computing an angle is equivalent to each of them choosing a number between 0 and , where N refers to the number of drones. These numbers are called positions, and two drones cannot have the same position. Note that for the 3 (dynamic-reliable-com) case N can change during the mission. In this study, the term consensus refers to the situation where each position has been chosen by one and only one drone. The algorithms presented in the sequelae are designed such that consensus is reached through a decentralized process.

3.1. Known Constant Number of UAVs

For this first case, we assume that several conditions are fulfilled. We suppose that the drones communicate by broadcasting messages and that each broadcast message is received by the other drones. We suppose that the number of drones initially equals N and that no drone leaves or joins the group during the mission.

The principle lies in the use of an array of Boolean values. In this array, cell

i is

true when position

i has been chosen by a drone and is

false when no drone has chosen this value. When necessary, drones broadcast both their array of Booleans and their chosen position. If two drones have chosen the same position, there is a conflict. Thus, upon reception of such a message, the drone compares its position and the received one; in the case of conflict, it performs a new random choice of position such that its corresponding cell in the array contains

false. The method is formally written out in Algorithm A1 (and see

Appendix A.2).

To measure the performance of the algorithm, the asynchronous execution of the algorithm by each drone was obtained through a multi-threaded simulation. Each drone was assigned to an independent thread that executed the algorithm. Two scenarios were considered. In the first scenario, message loss was not considered, while in the second scenario a substantial percentage of broadcast messages were lost.

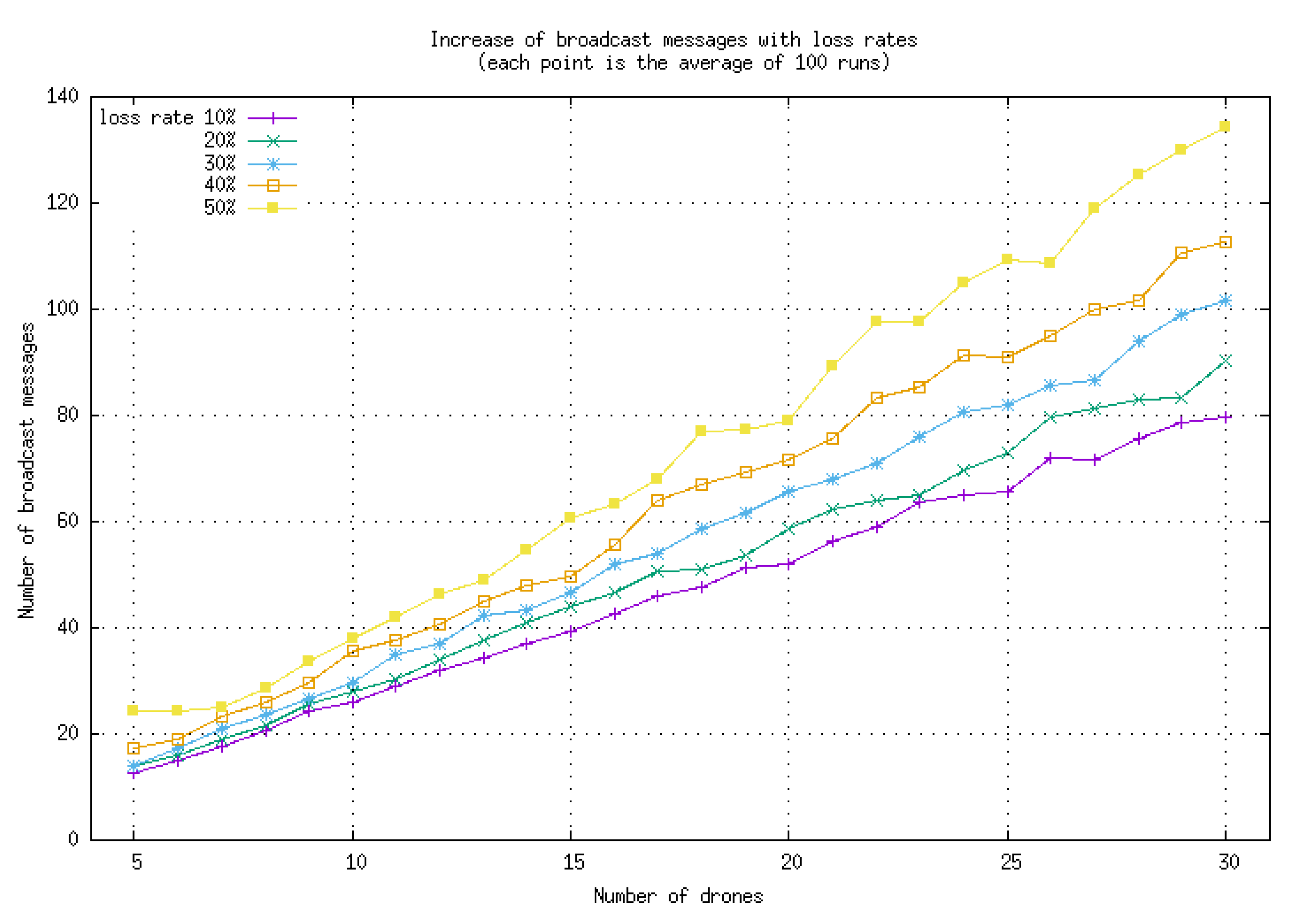

The algorithm is highly efficient in the scenario without message loss, with an average of only 2.5 broadcast messages per drone required to achieve consensus. In this process, each drone selects a unique number from

such that each number is associated with one and only one drone. The experimental results are reported in

Figure 3.

In the second scenario, broadcast messages loss may lead to a deadlock. In case of a conflict, the two involved drones have to choose another number and broadcast their new choice. If one of the two messages is lost, the other members of the group receive one message from the other drone with a cell containing “false”; as the other message is never received, the drones may wait forever. To cope with this issue, a timeout mechanism is introduced into the algorithm. If a drone does not receive any message after a given period of time and continues to have cells containing “false” within its array, it repeats broadcasting of its position array and its own position. This new broadcast triggers allows the correct positions to be marked “false” in the received array. Thus, the condition in line 5 of Algorithm A1 becomes: if change timeout then.

As reported in

Figure 4, the number of broadcast messages increases with the percentage of lost messages. However, the method remains very robust, as large loss percentages (up to 50%) do not prevent the algorithm from reaching consensus. Furthermore, the number of additional messages needed to cope with the lost messages grows linearly with the number of drones (with a factor close to 4.5 for a 50% loss rate), making communications congestion unlikely.

3.2. Dynamically Changing Number of UAVs

For this last scenario, the composition of the group is dynamic. Two opposite events may occur: a drone joins the group or a drone leaves the group. We assume several conditions to be true:

Communication is assumed to be reliable, i.e., no messages are lost.

There is enough time between two events (join or leave) for the group to reach a consensus on each drone’s respective chosen position.

The topology of the communication network is a fully connected graph; thus, no routing is needed.

It is assumed that messages are received in the same chronological order in which they are emitted. Specifically, it is expected that a message sent at time t by drone is received by all other drones before any message broadcast at a later time by drone . This assumption can be considered generally valid in the context of a fully connected communication topology.

The drones process their messages in First-In First-Out (FIFO) order.

Because of these changes, unlike the previous scenario the allocation of bearing angles is a never-ending process. The method lies in the broadcast messages. Each message contains three fields: the position chosen by the drone, its array of positions (Boolean values), and the type of the message.

| My position | | MSG_TYPE |

Three types of messages are considered:

JOIN: when a drone wants to join the group, it broadcasts a JOIN-type message with its position (0 by default) and an array of only one cell containing true.

LEAVE: when a drone leaves the group, it broadcasts a LEAVE-type message with its current position and the corresponding array with false at its own position.

UPDATE: several situations may lead to the emitting of an UPDATE-type message:

When a drone changes its position (caused by a conflict), it broadcasts a new UPDATE-type message with its newly chosen position and the new array with the Boolean false in the cell of its former position.

When a drone receives a JOIN-type or LEAVE-type message, it modifies its array of positions according to the situation and broadcasts an UPDATE-type message.

For newly arriving drones, a drone updates the size of its array after receiving an UPDATE-type message.

As an example, consider a drone D that has chosen a position j. Its array of positions contains true at index i (≠j) if and only if it receives a message from another drone claiming i as its own position. If D receives a message with false at index j in the array, it broadcasts a new UPDATE message claiming j as its position with true at index j in the array.

After a first consensus has been found (i.e., the drones have all chosen their respective positions), the decentralized method positions any newly arriving drone at the last position. When a drone leaves the group, only those drones with a larger position value change it, decreasing its value by 1. This ensures minimal distance changes for all drones in both cases and reduces the likelihood of collisions.

The algorithm is composed of two processes: a reception process that gathers received messages into a FIFO structure (mailbox in the Algorithms) and the main process described by Algorithms A2 and A3 (provided in

Appendix A).

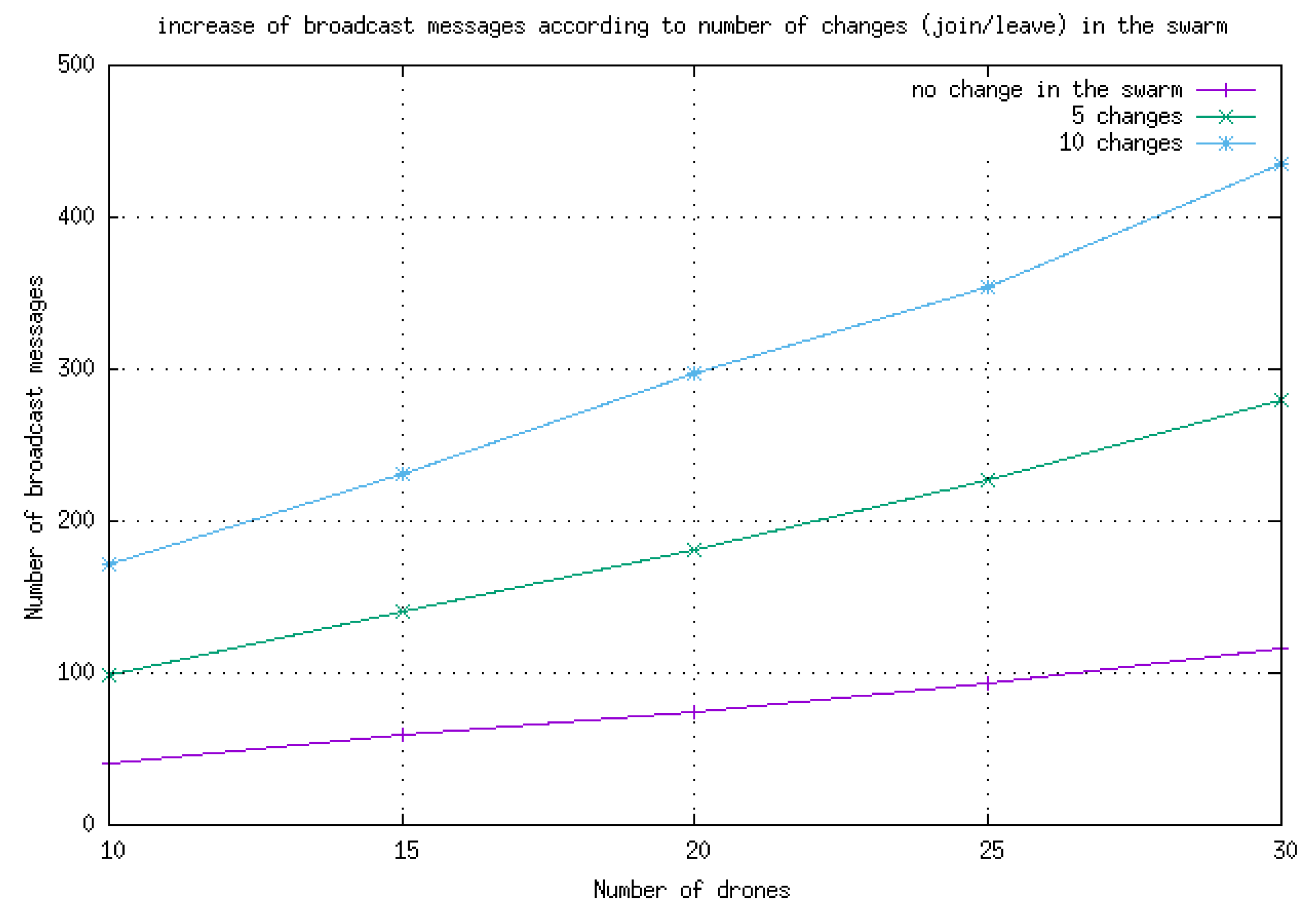

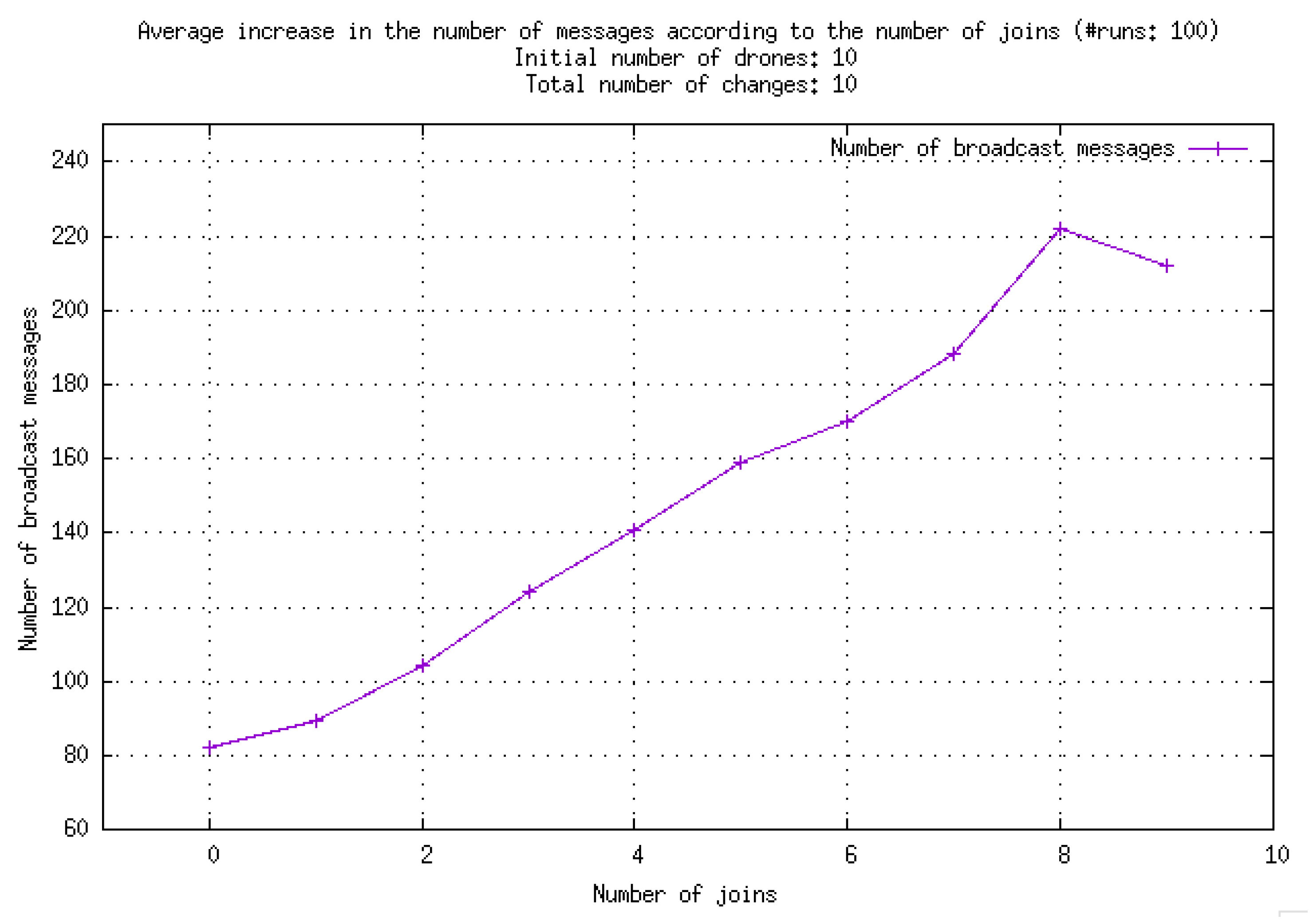

In

Figure 5, it can be observed that the number of broadcast messages increases linearly with the number of changes occurring within the group. However, this increase is mainly due to drones joining the group, as illustrated by

Figure 6.

4. Control Framework

The formation of UAVs (marked with an index

i) must surround a predefined shape computed using Fourier descriptors. The UAVs orient themselves towards the desired direction

(

Figure 1), defined as the angle between the

X axis and the direction of the UAV in the North/East/Down (NED) reference. The UAVs must reach their target position

while avoiding each other to prevent collisions. The GPS coordinates of the reference point (

C) are known. The Fourier descriptor returns the distance

and altitude angle

from point

C for a given angle

and a rotational quaternion (see

Section 2,

Figure 7). Each UAV has to regulate their distance errors with respect to the point to be reached (

) toward zero while pointing towards it according to the desired direction

(

Figure 1). Double exponential smoothing [

23,

24,

25] is performed in real time to compute a filtered estimation and predict the distance

and bearing angle

.

4.1. Control Law

The main goal of the proposed cascade controller is to regulate the position of the UAV compared to the fixed target point while maintaining good safety conditions. For this, two different controllers have been designed and implemented. The speed controller (inner loop) takes into account the speed errors according to the x, y, and z axes and their respective variations as functions of time. To ensure safe behavior, a first saturation function (f) is implemented to limit the pitch/yaw angles and the vertical acceleration when the sum of the speed errors (weighted by three parameters that have to be tuned) exceeds a given speed. Below this limit, the UAV starts to regulate its speed; otherwise, it moves at constant pitch/yaw angles and vertical acceleration. The speed controller outputs are the reference inputs (attitude and vertical acceleration) of the flight controller of the UAV. The three parameters of the speed controller have to be set first in order to obtain a closed loop behavior for the the speed control (inner loop) that is significantly faster than the position control (outer loop). The position controller (outer loop) takes into account the position errors according to the x, y, and z axes and their variations as functions of time. To ensure safe behavior, a second saturation function (f) is implemented to limit the speed according to x, y, and z when the sum of the position errors (weighted by three coefficients that have to be tuned) exceeds a given distance. Below this limit, the UAV starts to regulate its position compared to the target point; otherwise, it moves at a maximum constant speed. The outputs of the position controller are the reference inputs of the speed controller.

The attitude control was designed by Squadrone Systems [

26]; the control inputs of the UAVs, namely, the roll (

), pitch (

), yaw (

), and vertical acceleration (

), are available in the C++ API. Below, we describe the control law for

.

4.1.1. Velocity Regulation

The filtered velocity errors

of

are as follows (

Figure 7):

For

, the velocity control objective is as follows (

8):

To ensure the control objective (

8), the following velocity control law (

9) has been implemented:

, , : the coefficients to be customized,

= (, , ): the command vector of ,

, , : the maximal angular positions and vertical acceleration, respectively, reached when the velocity errors are higher or equal to the velocities , , ,

g is the gravity acceleration in m/s.

Note that the full command vector of is = (, , , )

4.1.2. Position Regulation

To reach the desired orientation

provided by the Fourier descriptors, we only have to obtain

as the reference value

of the yaw control:

The filtered position errors

of

are as follows (

Figure 7):

: the bearing angle of

computed by the Fourier descriptors (

Figure 2),

: the desired distance of

computed by the Fourier descriptors (

Figure 2),

: the altitude angle between the target point

and reference point

C computed by the Fourier descriptors and the quaternion (

Figure 2),

: the filtered distance between and the reference point C,

: the filtered bearing angle (yaw) of ,

, : the respective altitudes of the reference point C and .

For

, the position control objective is as follows (

12):

To ensure the control objective (

12), the following position control law (

13) has been implemented:

, , : the coefficients to be customized,

, , : the linear velocity vector of ,

, , : the maximal linear velocities reached when the position errors are respectively higher or equal to the distance , , .

The saturation function

f is defined as follows:

Note that the manufacturer [

26] provided us with the dynamic closed loop model of the UAV, which corresponds to the attitude and vertical acceleration control. We used this dynamic model in Matlab/Simulink to first tune the three parameters of the speed controller and then the three parameters of the position controller in order to obtain the shortest response time for each loop (pole placement) without static errors, damping, or oscillations.

4.1.3. Stability Analysis

For the speed controller (inner loop), we defined three positive gains corresponding to the slopes (max/lim) of the saturation function in the intervals [

…+

], [

…+

], and [

…+

]:

Consider the following (positive) candidate Lyapunov function:

Its time derivative can be written as

In conclusion, it is apparent that

, as

,

, and

are all positive gains.

For the position controller (outer loop), we define three positive gains corresponding to the slopes (max/lim) of the saturation function in the intervals [

…+

], [

…+

], and [

…+

]:

Let us consider the following (positive) candidate Lyapunov function:

Its time derivative can be written as

In conclusion, it is apparent that

, as

,

, and

are all positive gains.

4.1.4. Collision Avoidance

The proposed collision avoidance method can be used with either static obstacles (poles, etc.,) or dynamic obstacles (other UAVs) if their GPS coordinates are known or sent in real time. Let

, the velocity of

, be the subtraction of a repulsive speed

from an attractive one

(

10);

is the repulsive effect applied by the neighboring

on

. When

crosses the defined protected circular area of

(i.e.,

is below a threshold

),

is subject to a repulsive effect homogeneous to a velocity with an intensity

that changes according to the inter-UAV distance

(

Figure 8). Each UAV computes its distance

, altitude angle

, and bearing angle

with respect to its neighbor

j using the Haversine function based on its GPS coordinates emitted in real time over the communications network. When including the collision avoidance method, Equation (

14) becomes

with

The intensity of the repulsive effect (

Figure 8) applies when

5. Simulations and Experiments

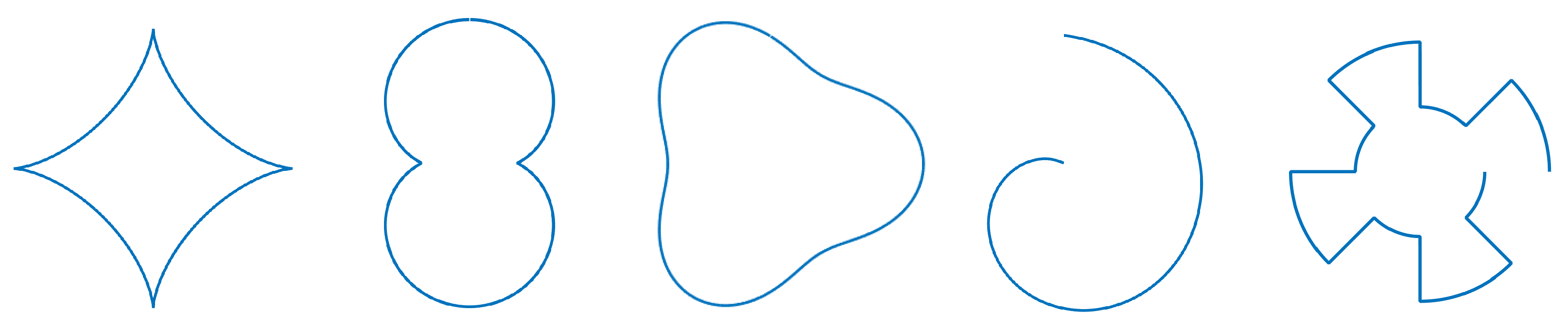

To demonstrate the decentralized coordination efficiency using FD and FT, several simulations were conducted on different shapes.

5.1. Simulations Description

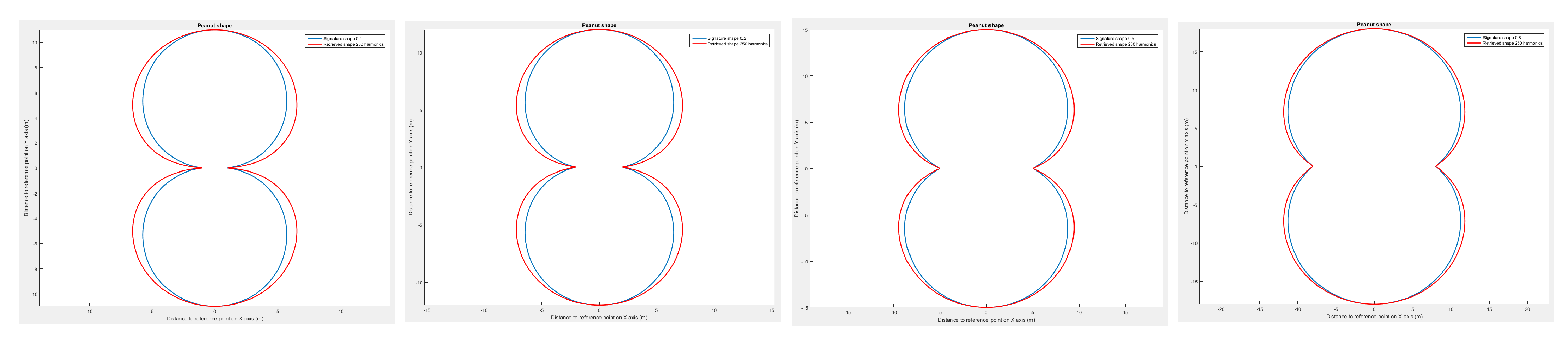

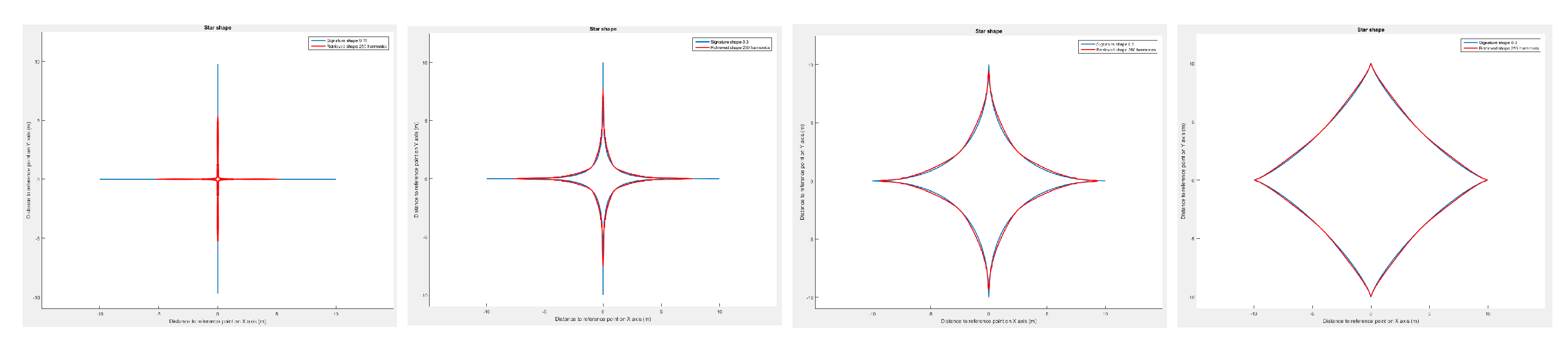

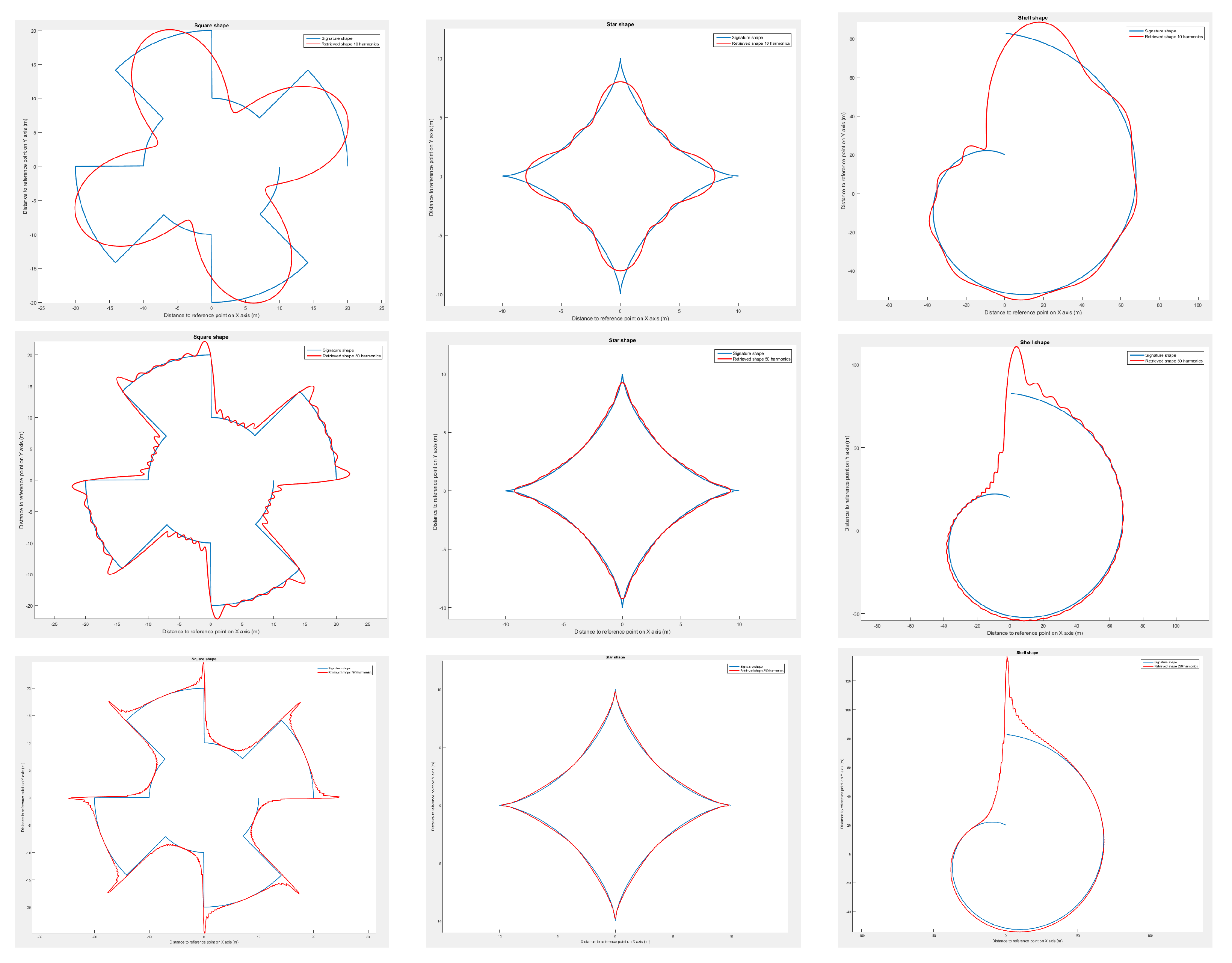

In keeping with the previous explanations, the shapes were restricted to be polar, continuous, and

periodical. This allows drones to compute their desired position from only the reference point and each drone’s desired bearing angle. Thus, we selected astroidal (star), peanut, pear, shell, and square signal shapes (see Equation (

19) and

Figure 9). This set was used to test the method’s robustness on asymmetrical, discontinuous, convex, and concave shapes.

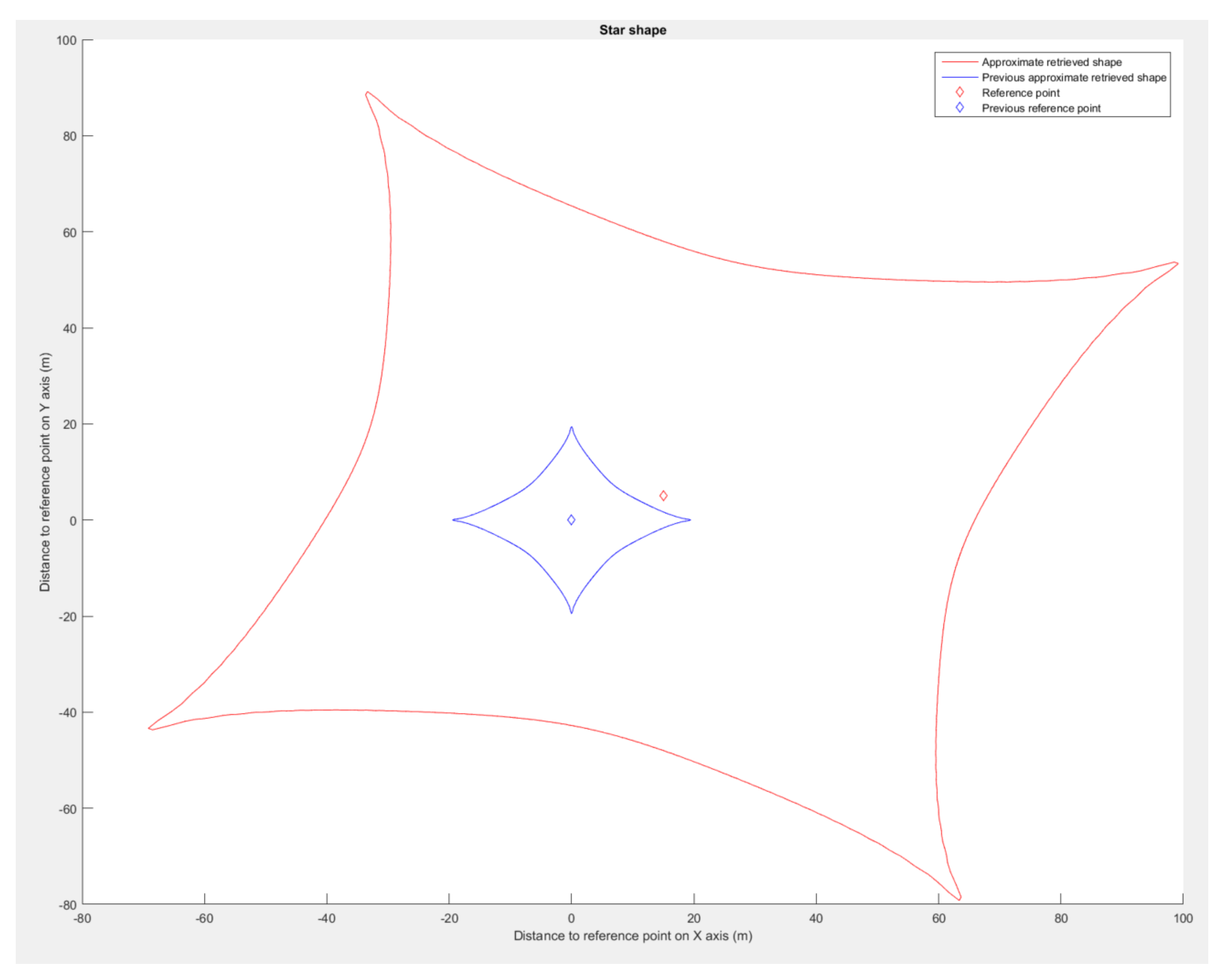

Each signature shape was sampled from 0 to by 1000 points, allowing up to 500 harmonics without folding effects (i.e., the Nyquist–Shannon criteria). For all simulations, we used 250 harmonics to retrieve the described shape. For each simulation, the parameters were set to a scale factor of 20, no rotation, and as the reference point. This was the setup used by default unless otherwise specified.

First, we evaluated our method for retrieving shapes to demonstrate its accuracy on the set of shapes. On the astroidal and the peanut formations, the n function parameter was varied to make the initial description more or less concave and convex, respectively.

In addition, these simulations were used to illustrate the impact of the number of harmonics used during the shape retrieval.

Transformations were applied to validate the non-variation of shape design in 3D space through modifications.

In the simulations, starting from their origin, the UAVs were required to equally distribute themselves angularly along the shape’s border while facing the reference point. Then, to depict the total shape and ensure shape continuity, we carried out runs in which one drone needed to follow the shape border by continuously incrementing its desired bearing angle ( per second).

Finally, a drone was deployed to reproduce the pear shape. A second drone was used to validate collision avoidance with both virtual and real obstacles.

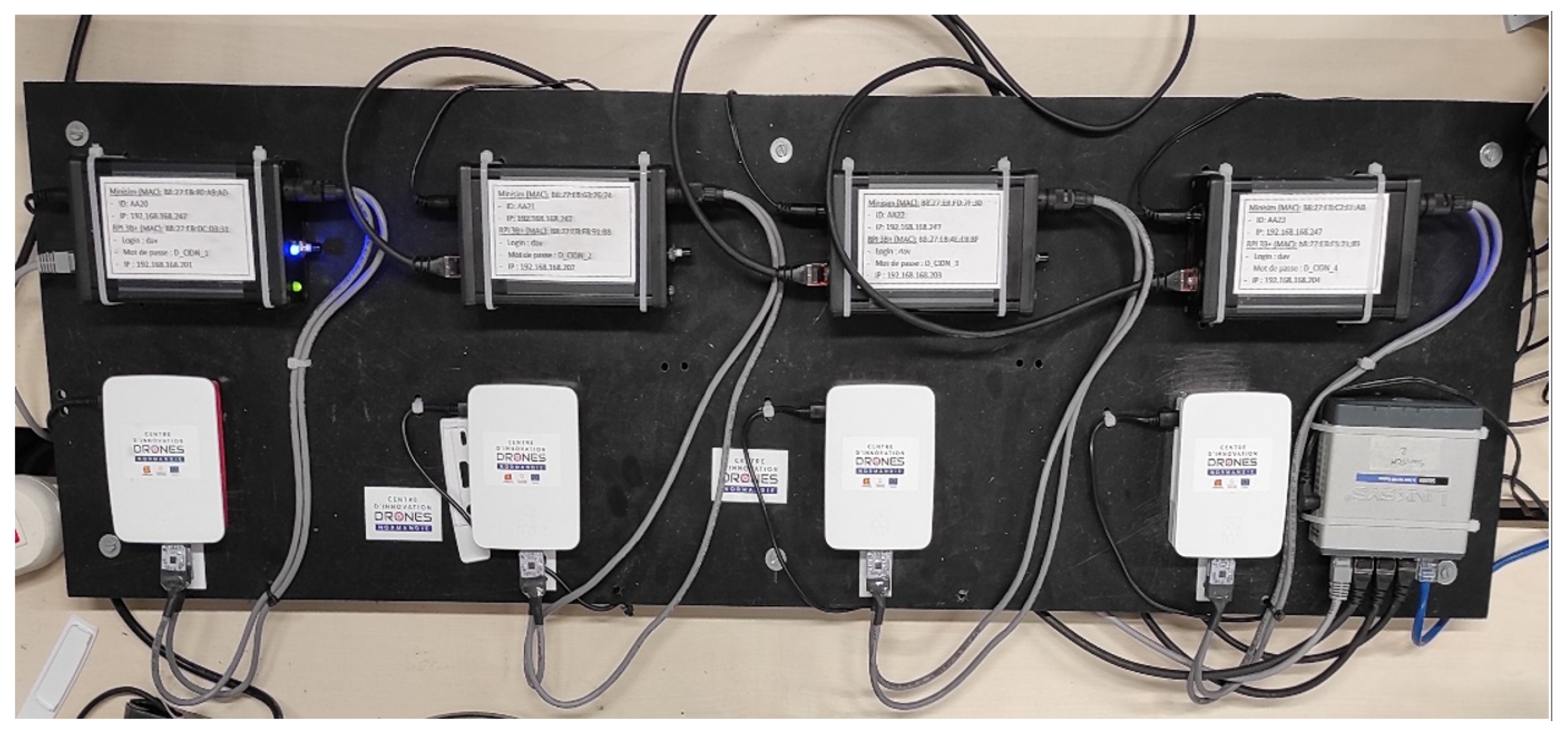

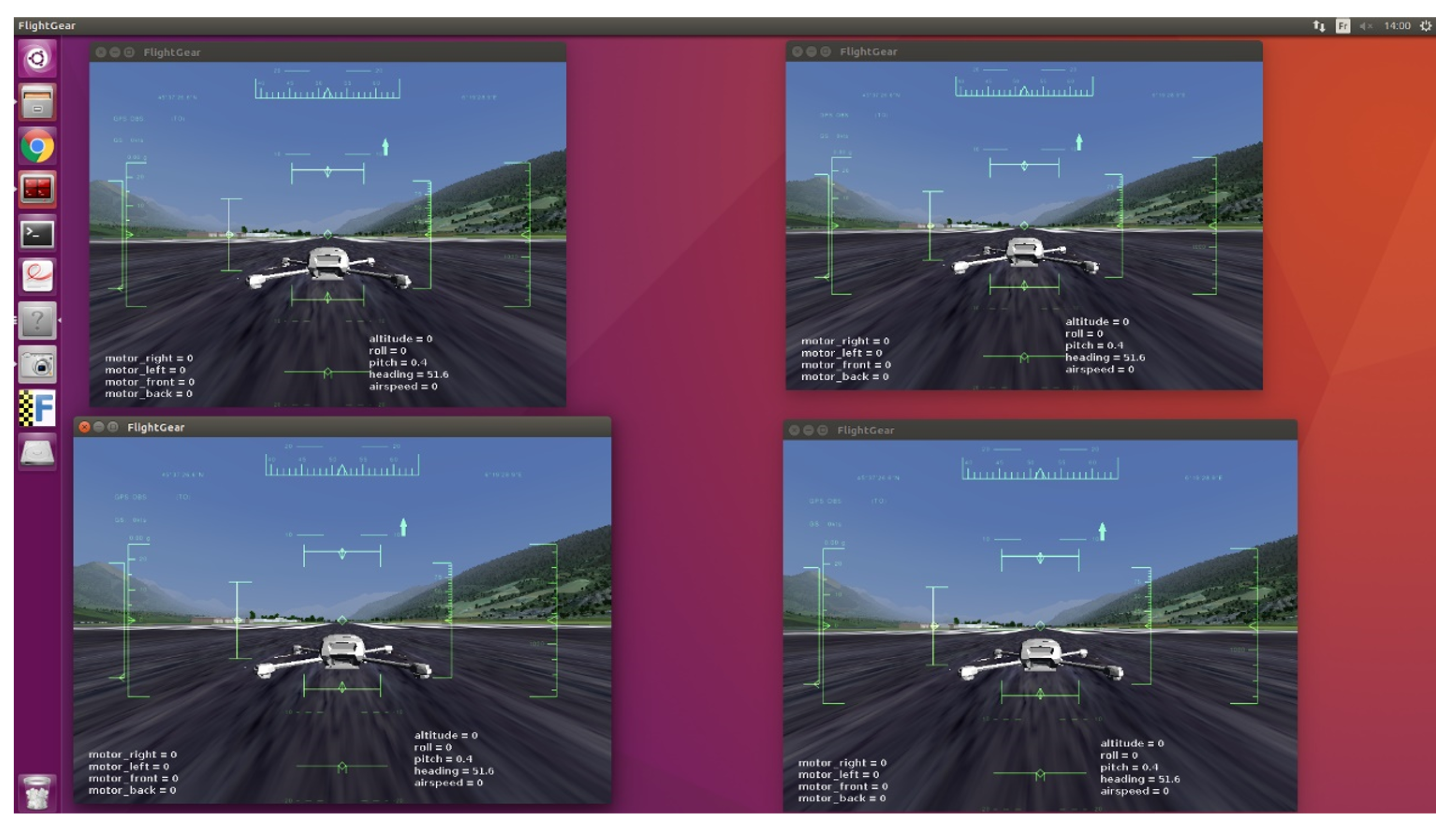

5.2. Flight Simulations

The first flight simulations were conducted on Processing [

27] to simulate any number of drones. The second set of runs were conducted on a system composed of the following:

Four “MiniSim” simulators (designed by Squadrone Systems [

26]) of the “hardware in the loop” (HITL) type, reproducing the dynamic behaviour of the UAVs (

Figure 10);

Four embedded RaspBerry PI 3B+ companion computers, on which our algorithms were implemented in C++. These were the same embedded computers installed on the real UAVs.

An operator computer to connect the four embedded computers together for programming and executing the algorithms.

A flight simulator on a separate computer on the “MiniSim” simulators linked via rooter. Open-source FlightGear (v. 2020.3) [

28] software was used to visualize the flight of the drones. This setup allowed several sessions to be run simultaneously in order to observe the flight of the group (one UAV per window, as illustrated on

Figure 11).

This whole system emulates the workings and behavior of real drones in a field test without being subject to communication hazards.

5.3. Simulations Results

We used Matlab 2015B [

29] to evaluate our shape retrieval method on the set of formations presented in

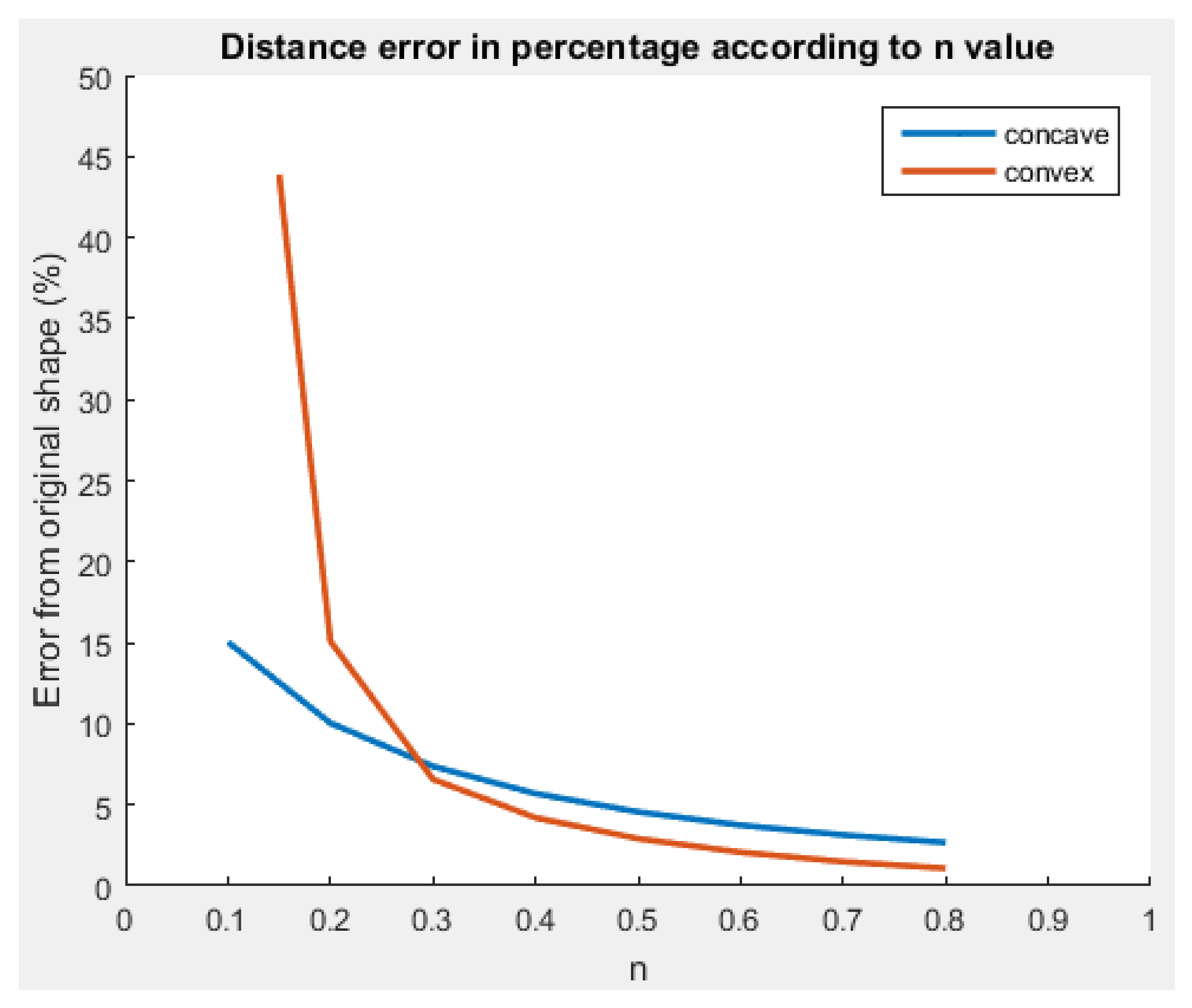

Figure 9. On the concave (peanut) and convex (star) shapes, we modified the curve factor

n in Equation (

19) to increase the given shape test panel. As a result, the more curvy the shape in

Figure 12, the more the retrieval accuracy decreases. When the star shape is almost a straight line in each direction, the method transcription falls drastically to an error of almost

.

It seems that the method using a polar FD lacks efficiency and robustness on shapes with high variations. We noticed the same phenomenon on the square signal and shell shapes even with large number of harmonics (see

Figure A3 in

Appendix A). Nonetheless, the non-continuity of the derivative seems to be handled flawlessly.

When varying the number of harmonics, the shape retrieval method does not behave the same on the whole set of shapes. In

Figure 13, the only shape for which the error continuously increases with the reduction of harmonics is the shell. For the other shapes, an increase or decrease in the number of steps reduces the retrieval accuracy.

The increase in the error at certain specific steps provides clear evidence of the presence of null harmonics for certain shapes. Depending on the ease with which the shape can be interpreted with fewer harmonics, it is possible to reduce the number of harmonics in order to limit the amount of information required by the drones.

As an example, we deleted all harmonics with a radius lower than

prior to depiction by the FD. The resulting retrieval error is illustrated in

Table 1.

Even when reducing the number of harmonics, the proposed method performs as if there were all 250 harmonics. Interestingly, the pear shape had only 2 harmonics after reduction. This means that only six values ( (frequency, amplitude, phase)) need to be sent to the drones in order for them to have the full shape depiction. Instead of sending a precomputed (x, y, z) coordinate each time the drone needs to move along the shape, it now requires only a bearing angle () after having sent the harmonics. Thus, for the pear shape, if a drone needs to move around it more than twice, the amount of data that needs to be exchanged is lower with our method.

Next, we visualized the shape retrieval process with different transformations applied (translation, rotation, and resizing). As a result, we were able to dynamically change the shapes without deformation or loss of continuity (

Figure 14).

In this way, dynamic formation control can be performed without recomputing the harmonics or resampling the initial set of points fed to the DFT. The results prove that our method saves computation time when applying transformations on the same shape compared to standard method of point control of each drone, which needs to compute new coordinate for the drones each time.

Using the proposed method, light shows using drones to depict moving objects no longer require multiple computations to animate the represented shape.

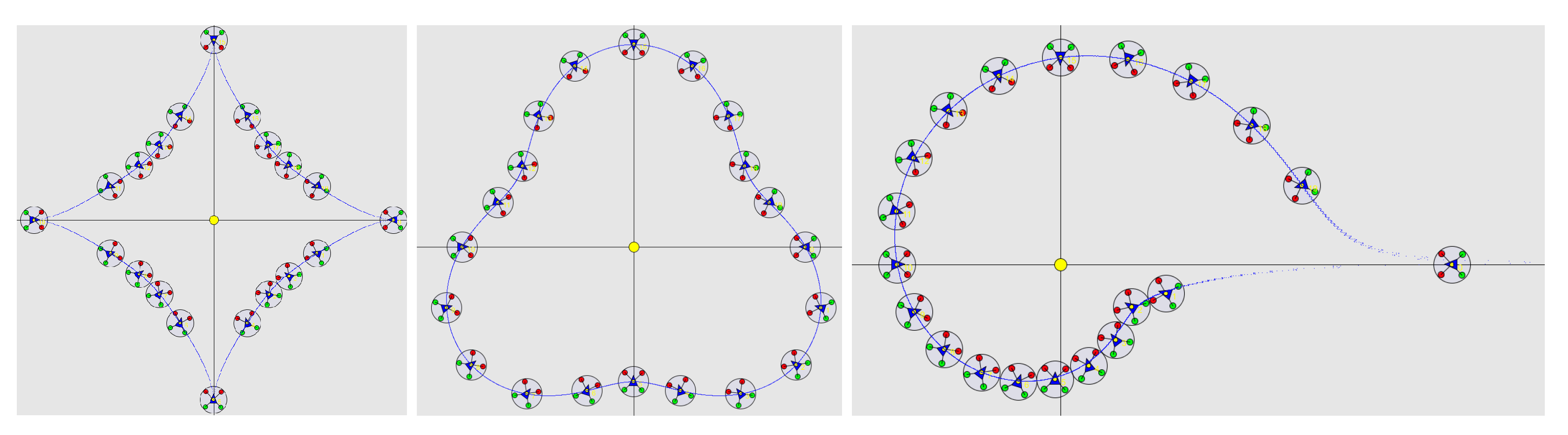

We used Processing [

27] to simulate the formation control of twenty drones on the astroidal, pear, and shell shapes (

Figure 15). UAVs were able to navigate to their self-computed positions without collision. They were able to form an angular distribution around the shape with only the shape’s harmonics. The angular distribution seems less relevant in case of asymmetrical and non-centered formations, for which a notion of curvilinear distance should be used instead.

When we removed eight drones from the simulation, the drones were able to autonomously manage the resulting rearrangement (

Figure 16).

Using the presented flight simulation setup, we made one drone navigate around the astroidal and pear shape. Examination of the data collected during the simulation (

Figure 17 and

Figure 18) validates the method.

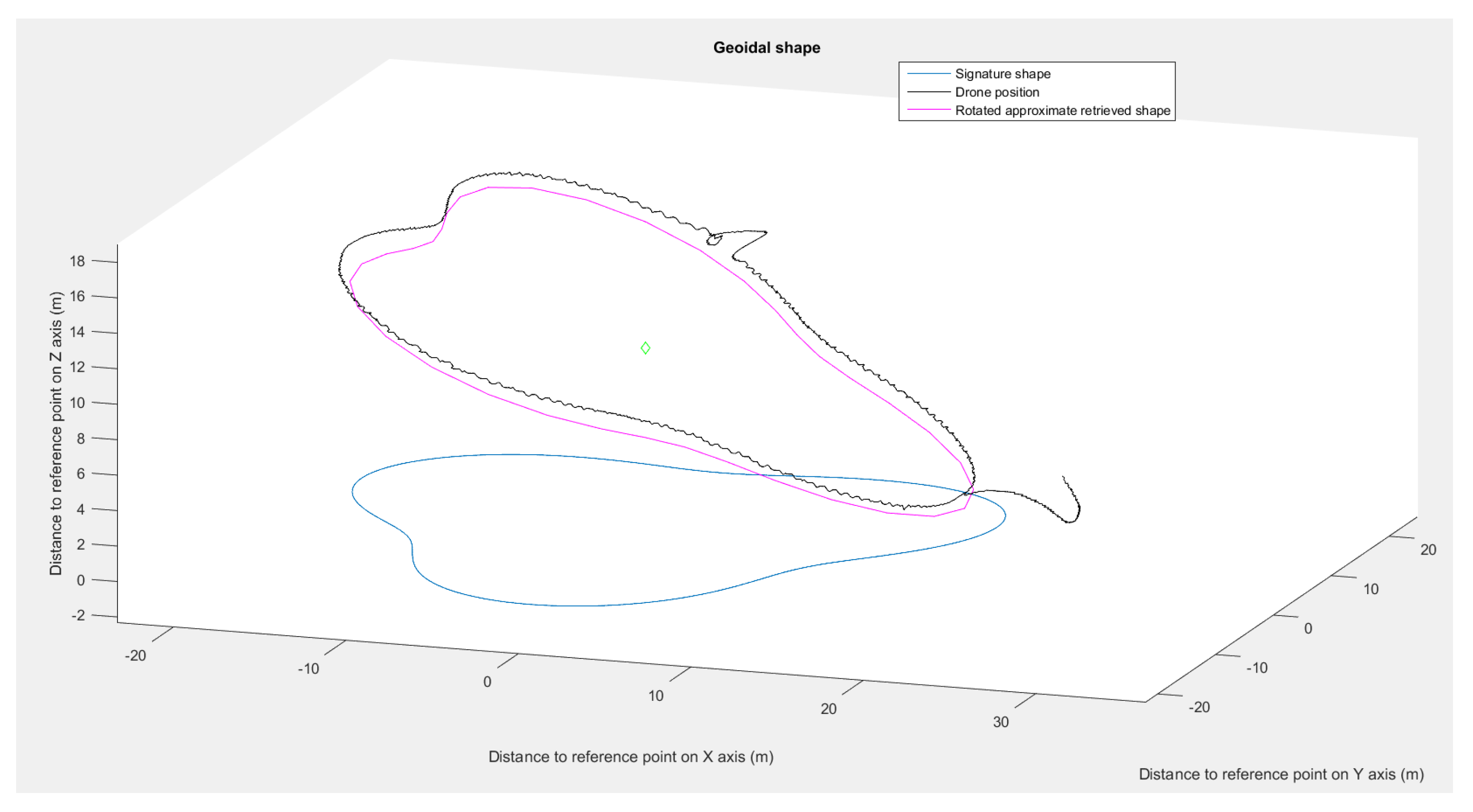

The drone maintained satisfying placements even with spatial rotations (

Figure 19), with positional deviations from the approximated shape of less than

. The deviations were caused by the control law used by the drone and inaccuracy of the simulated GPS. For the star shape (

Figure 18), the drone showed signs of delay in following the bearing angle instructions in the cardinal directions, which entailed an increase in the positioning error. The retrieval method returned an approximated shape with a deviation lower than 2% in each run. These results validate the shape interpretation by the simulated drone even in with spatial rotations.

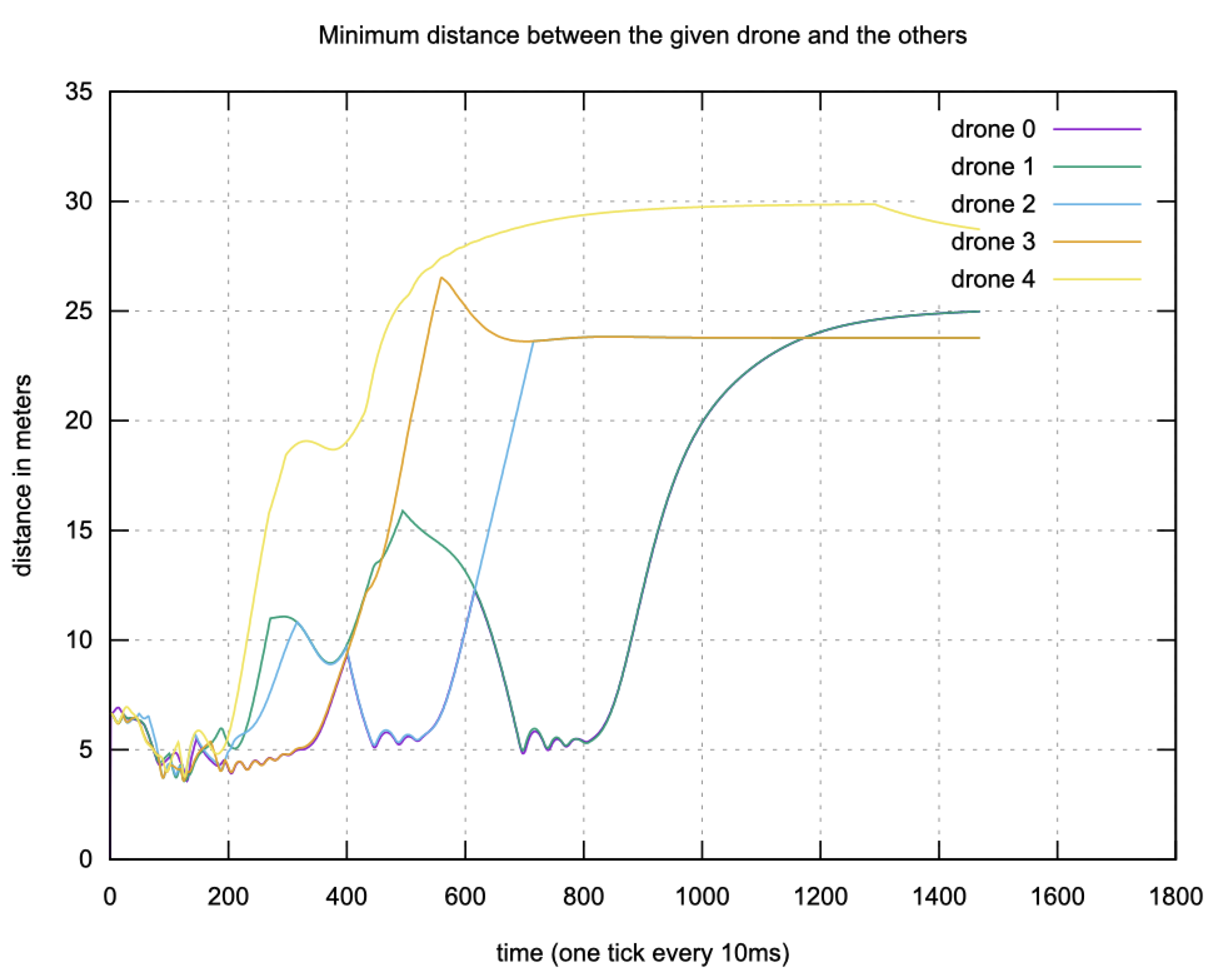

In addition, the drones were able to avoid collisions during the simulations.

Figure 20 shows the inter-drone distances during a run with five drones. With the distance threshold for avoidance activation (

) set to 6 m, the drones maintained a distance of

m even in the worst case.

5.4. Experiments

Experiments were been carried out using our home made drones (

Figure 21), based on the frame kit F450 proposed by DJI (the well-known drone manufacturer) and equipped with a flight controller and an API designed by Squadrone Systems [

26].

The flight controller and API were identical to those used in the Minisim Hardware-in-the-Loop simulators (

Figure 10). The Fourier descriptors and control laws were implemented in C++ language on the Raspberry PI 3B+ embedded computers. Following our successful simulations, it was possible to download the same C++ code into the UAVs. The UAVs communicated with the ground station via MicroHard

GHz radio modules.

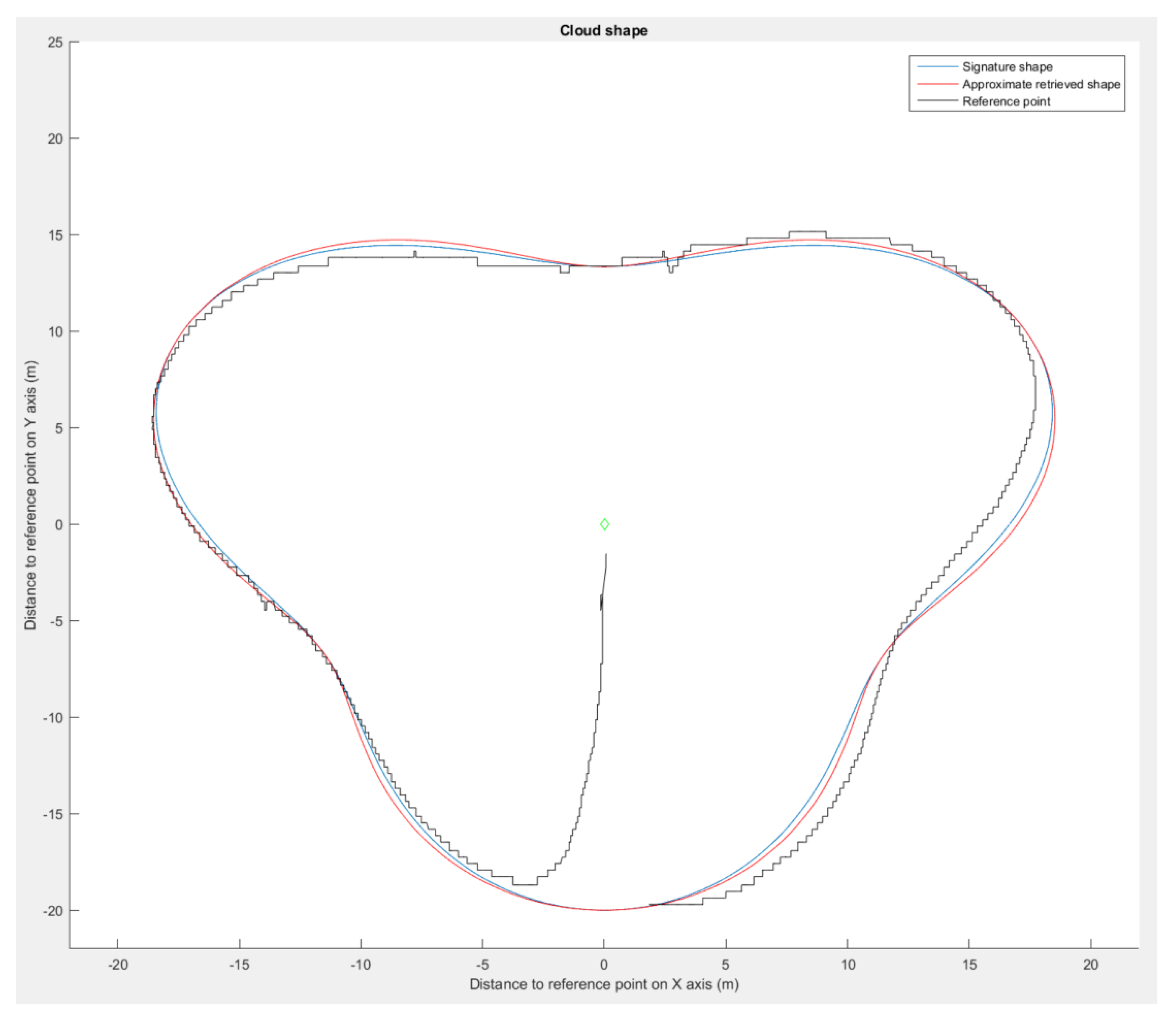

The goal of the experiments was to check whether one UAV was able to follow the contours of a particular shape described by means of Fourier descriptors. The experimental procedure consisted of choosing a shape and its characteristics (dimensions, inclination, etc.) and programming the increase of

between 0 and

in small steps of about 2

/s. Calculation of the values of

and

was carried out using the Fourier descriptors method. An inclined pear shape (

, 50 m) was chosen for the experiments. We registered the GPS coordinates during the flight in order to represent the trajectory followed by the UAV (

Figure 22).

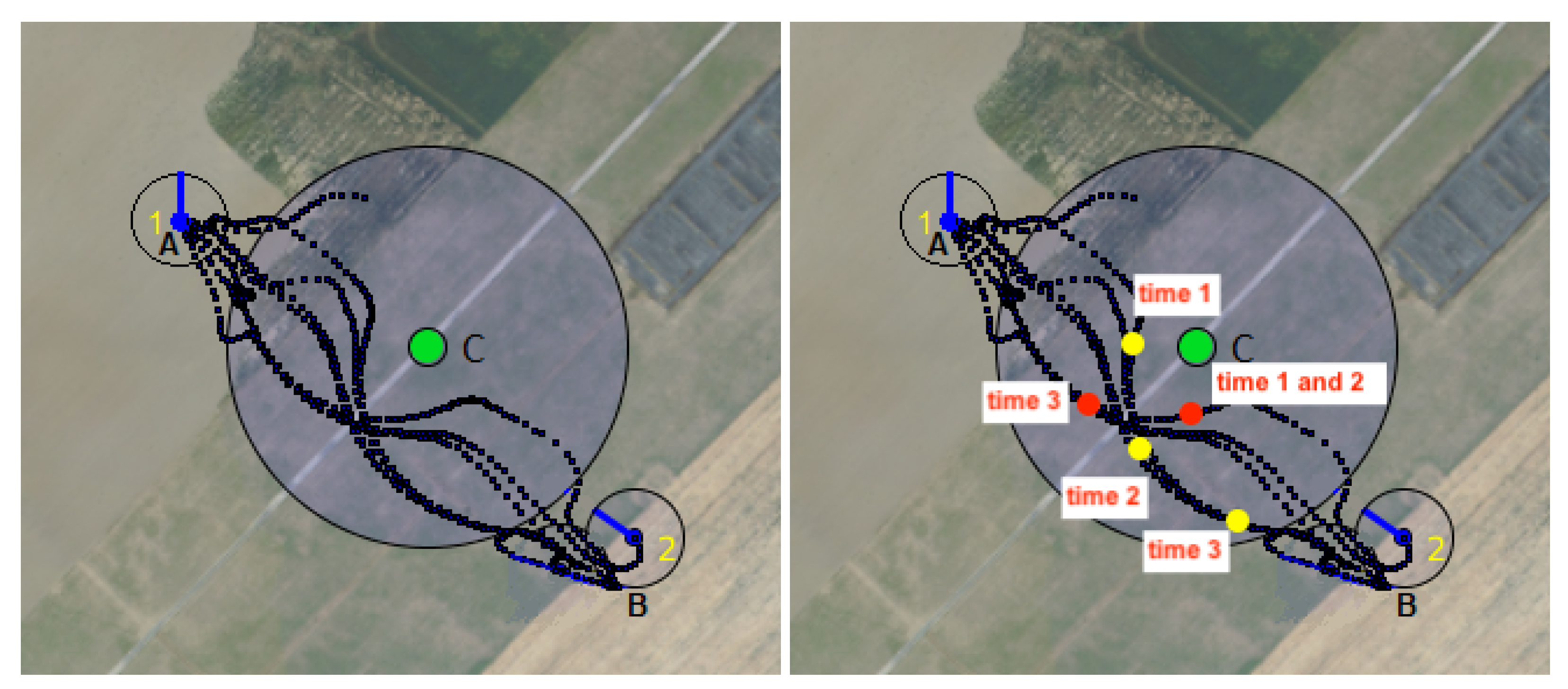

To validate the collision avoidance method, we carried out an experiment in which two UAVs flew to two opposite points (A and B) while avoiding a central point (C). In this experiment, the first UAV is initially at point A and the second is at point B. The two UAVs repeat this operation in a loop until the operator sends them the order to land. Taking disturbances (wind, etc.) into account, the UAVs can avoid each other by moving right, left, up, or down. Both UAVs are able to send their information (GPS coordinates, velocities, etc.) through the communication network via UDP multicast protocol and threads. We registered the GPS coordinates during the flight in order to represent the trajectory followed by the UAVs (

Figure 23 (left image)).

In the right-hand image, the red and yellow dots illustrate the way in which the drones were able to avoid collisions with static obstacles and with other drones, respectively. At time stamp 1, the drones avoid collision with the obstacle and with each other. The first drone (the red dot) randomly changes its position while moving toward its destination (time 2). Its movement opsens up the space and allows the other drone (the yellow dot) to move towards its own destination (time 3).

6. Conclusions and Perspectives

In the aftermath of the industrial accident at Lubrizol in Normandy, France, which occurred in 2019, numerous questions were raised regarding the potential assistance drones could have provided in supporting the efforts of the firefighting teams. It became evident that a multi-UAV system could potentially provide a comprehensive 360 degree view of the operational theater, provided that a way could be found to offer such coverage. To achieve this objective, considering the source of the fire’s origin, several challenges must be overcome: (i) precisely describing the area to of interest; (ii) allowing changes in the scale and orientation of the shape surrounding this area; and (iii) ensuring continuous 360 degree vision by allowing the group’s composition to be modified as drones depart from the formation and new drones arrive.

In this context, we have proposed a method for multi-UAV formation control. This method relies on Fourier descriptors and transform along with decentralized algorithms for allocating the positions of drones within the group. The decentralized algorithms for allocating bearing angles have only been tested through simulation. However, the restricted number of exchanged messages (with respect to the number of drones) required to reach a consensus provides optimism for their performance in real-world settings. For the description of the surrounding shape, polar signature functions were tested as formations and several transformations have been applied for the astroidal shape. In addition, continuous rotation around the shape border was tested for a UAV. The proposed method demonstrates great accuracy in terms of shape reproduction even with transformations. The simulated multi-UAV system further validates the method, showing satisfying placements. Further experiments and developments could be achieved by using virtual actions to smooth the UAVs’ movements and prevent deadlock situations. An improvement in the robustness of shape retrieval should be made to prevent border effects caused by discontinuous signature functions. Finally, this system could be used to approximately model objects by referencing points one-by-one at its border.