A Deep Anomaly Detection System for IoT-Based Smart Buildings

Abstract

1. Introduction

1.1. Contribution

- Design and development of a neural model for identifying anomalies in large data streams from IoT sensors. Specifically, we employ a neural architecture inspired by the Sparse U-Net, which has been used in other contexts such as cybersecurity applications [10]. In essence, it can be viewed as an autoencoder (AE) that embeds several skip connections to ease the learning process and some sparse dense layers to make the AE more robust to noise. The approach is unsupervised and lightweight, making it suitable for deployment at the network’s edge.

- An extensive experimental evaluation was conducted on the test case generated using the abovementioned strategy. Numerical results demonstrate the effectiveness and efficiency of our approach.

1.2. Organization of the Paper

2. Related Works

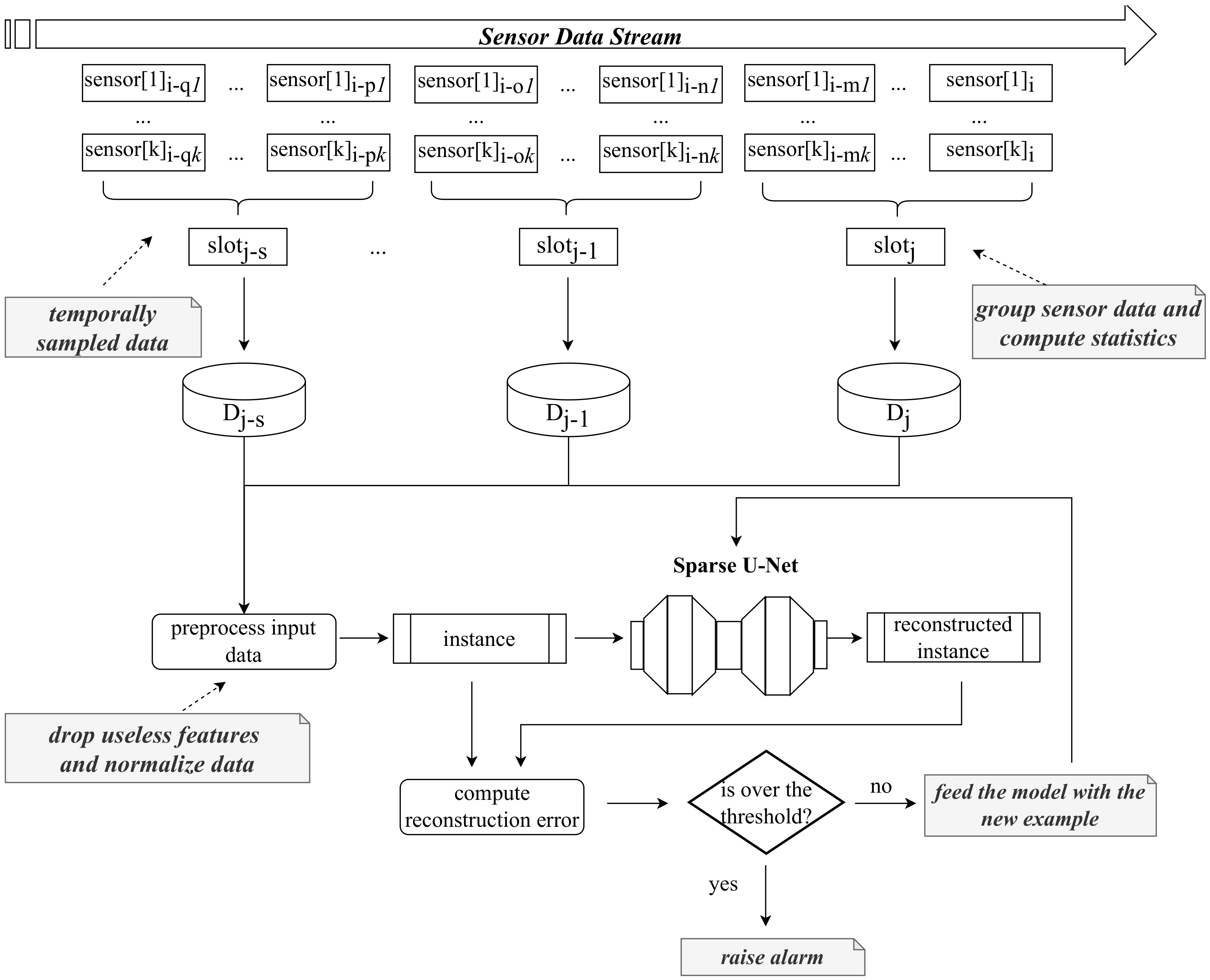

3. Proposed Approach

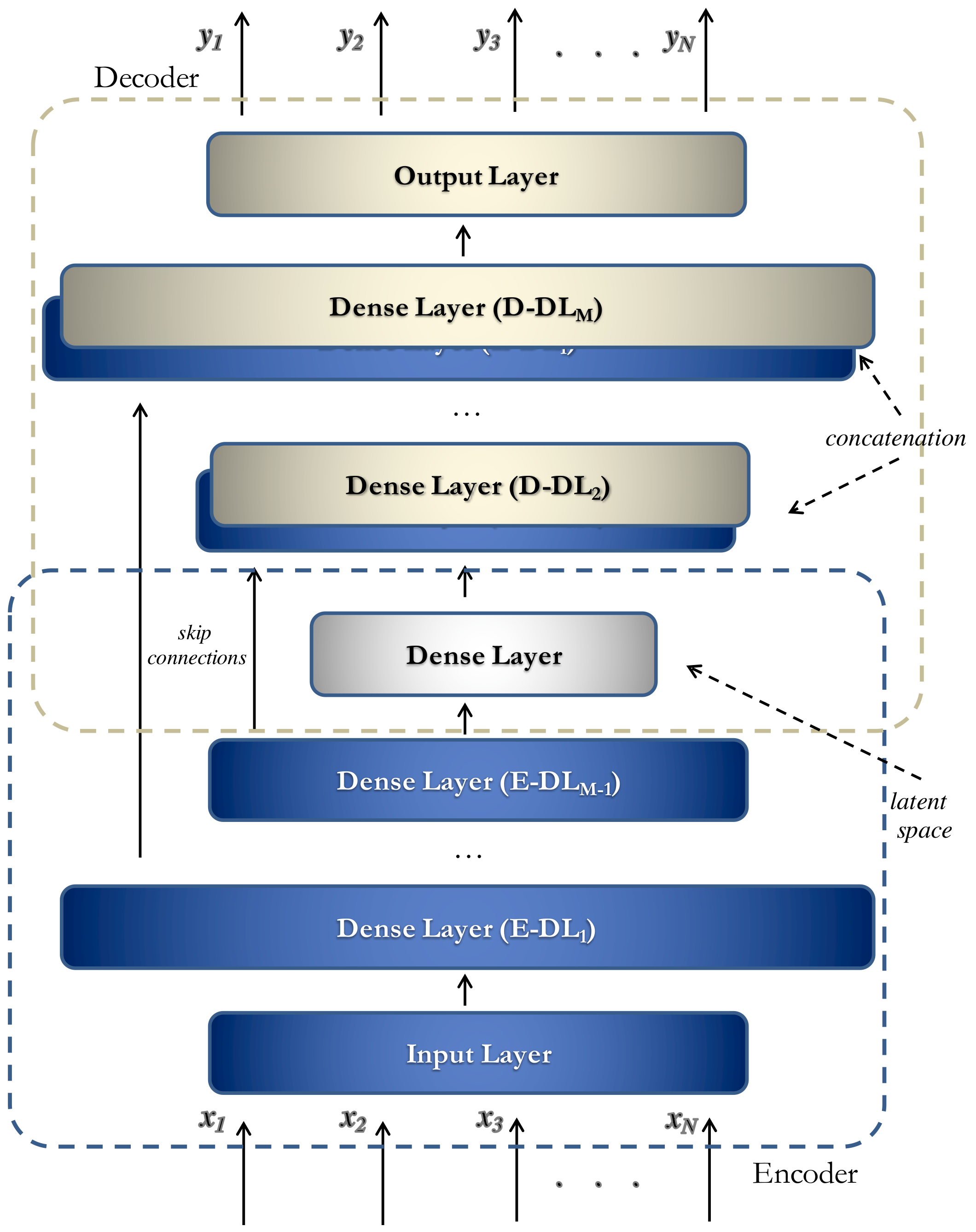

3.1. Neural Detector Architecture

- Skip Connections: These connections link non-adjacent layers, so that information flows directly from one layer to a later one, bypassing one or more intermediate layers; in this way, both information and gradients are preserved [24]. Skip connections make it possible to train much deeper neural networks while mitigating the vanishing-gradient and degradation problems that otherwise hamper training. This matters because deeper networks can capture more complex data representations. Moreover, skip connections let the network learn residual differences between its input and its predicted output. Hence, they can improve the model’s predictive performance and reduce the number of iterations the learning algorithm needs to converge.

- Hybrid Approach: The architecture incorporates “Sparse Dense Layers” to make the autoencoder more robust to noise, which matters because the anomalies to be identified often differ only slightly from normal behavior. The Sparse Dense Layers used in our solution fall within the Sparse-AE framework. In this framework, a Sparse Dense Layer is essentially a dense layer with significantly more neurons than the size of its input. What makes it “sparse” is that the learning process actively encourages sparsity in its activations: for a given input, only a subset of neurons is encouraged to be active (i.e., to have non-zero activations). The primary purpose of this design is to reduce the complexity of the representations learned by the network. By promoting sparse activations, the Sparse Dense Layer learns a more concise and efficient representation of the input data, which is particularly advantageous when dimensionality reduction or feature selection is desired. In the architecture, the Sparse Dense Layers are the first layer of the encoder and the last layer of the decoder. Both the encoder and the decoder consist of M hidden layers, resulting in a symmetrical architecture.
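To make the two ingredients above concrete, the following dependency-free Python sketch runs a forward pass through a small Sparse U-Net-like autoencoder and computes a training loss. Several details are assumptions, not the authors’ configuration: the layer widths (a 32-unit sparse layer for the 7 input features of the datasets, then 16- and 8-unit hidden layers), ReLU activations, additive skip connections (the original U-Net concatenates feature maps instead), random initialization, and an L1 penalty on the sparse-layer activations as the sparsity mechanism. Training by backpropagation is omitted.

```python
import random

# Hypothetical sketch of a Sparse U-Net-like autoencoder (forward pass only).
# Widths, ReLU, additive skips, init, and the L1 penalty are all assumptions.


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases for a fully connected layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer, relu=True):
    """Compute W x + b, optionally followed by a ReLU."""
    weights, bias = layer
    out = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
    return [max(0.0, v) for v in out] if relu else out


def build_model(n_features, widths, seed=0):
    """widths[0] is the wide sparse layer; the decoder mirrors the encoder."""
    rng = random.Random(seed)
    enc_dims = [n_features] + widths          # e.g. [7, 32, 16, 8]
    dec_dims = enc_dims[::-1]                 # e.g. [8, 16, 32, 7]
    encoder = [init_layer(a, b, rng) for a, b in zip(enc_dims, enc_dims[1:])]
    decoder = [init_layer(a, b, rng) for a, b in zip(dec_dims, dec_dims[1:])]
    return encoder, decoder


def forward(x, encoder, decoder):
    """Return (reconstruction, activations of the first, sparse encoder layer)."""
    skips, h = [], x
    for layer in encoder:
        h = dense(h, layer)
        skips.append(h)
    sparse_code = skips[0]
    skips.pop()                               # the bottleneck has no mirror partner
    for i, layer in enumerate(decoder):
        is_output = i == len(decoder) - 1
        h = dense(h, layer, relu=not is_output)   # linear output layer
        if not is_output:
            # Additive skip connection from the encoder layer of the same width.
            h = [a + b for a, b in zip(h, skips.pop())]
    return h, sparse_code


def loss(x, encoder, decoder, l1=1e-3):
    """MSE reconstruction error plus an L1 penalty that pushes activations to zero."""
    recon, code = forward(x, encoder, decoder)
    mse = sum((a - b) ** 2 for a, b in zip(x, recon)) / len(x)
    return mse + l1 * sum(abs(v) for v in code)


encoder, decoder = build_model(n_features=7, widths=[32, 16, 8])
x = [0.2, 0.5, 0.1, 0.3, 0.9, 0.4, 0.0]
recon, code = forward(x, encoder, decoder)
print(len(recon), len(code))  # 7 32
```

In a framework such as Keras, the same sparsity pressure is commonly obtained by passing `activity_regularizer=tf.keras.regularizers.l1(...)` to a `Dense` layer, and additive skips by `tf.keras.layers.Add`; whether the authors did exactly this is not stated here.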

3.2. Detection Protocol

4. Case Study

- A microcontroller for preprocessing the data.

- A ZigBee radio connected to the microcontroller, which collects data from the sensors and transmits it to a recording station.

- A digital camera to determine room occupancy.

Injecting Synthetic Anomalies

- Peak Anomalies. In this case, we replace the actual value with . The anomaly is computed using the following formula:where is a real number sampled from the interval , is the mean value of the feature i, and represents its variance.

- Sensor Fault Anomalies. The value of the affected feature is set to zero to simulate the breakdown of the corresponding sensor. Each fault is assumed to span a 15-min window in which the sensor detects no measurements, i.e., it consistently records a null value.

- Expert-Induced Anomalies. These anomalies are deliberately added by a domain expert to simulate three different scenarios: (i) a fire, (ii) a window left open in the room, and (iii) people staying in the room at night. Since they simulate a real event in the environment, these anomalies involve simultaneous changes in several features (e.g., in the case of a fire, the CO2 level increases dramatically along with the temperature, while the humidity decreases; in the case of an open window, the CO2 level slowly decreases along with the temperature, while the humidity increases).

- 100 peak anomalies (corresponding to 100 modified tuples);

- 25 sensor fault anomalies (i.e., modified tuples);

- 10 expert-induced anomalies (equal to 160 modified tuples).
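The injection strategy above can be sketched in Python as follows. Only the sensor-fault rule is fully specified in the text (the feature is zeroed over a 15-min window); the sketch assumes one tuple per minute, so the window spans 15 consecutive tuples. The peak formula used here (feature mean plus k times its standard deviation), the interval from which k is drawn, the column layout, and the magnitudes of the fire-like ramp are hypothetical stand-ins, not the paper’s values. The 16-tuple window of the expert-style event simply mirrors the ratio of 160 modified tuples to 10 expert-induced anomalies reported above. Each helper returns the indices of the modified tuples, which serve as ground-truth labels.

```python
import random
import statistics

COLS = {"temperature": 0, "humidity": 1, "co2": 2}  # hypothetical column layout
FAULT_WINDOW = 15  # assumes one tuple per minute, i.e., 15 tuples = 15 minutes


def inject_sensor_fault(rows, start, feature, window=FAULT_WINDOW):
    """Zero one feature over a contiguous window to mimic a broken sensor."""
    end = min(start + window, len(rows))
    for r in range(start, end):
        rows[r][feature] = 0.0
    return set(range(start, end))


def inject_peak(rows, row, feature, k):
    """Hypothetical peak: replace the value with mean + k * std of the feature."""
    column = [r[feature] for r in rows]
    rows[row][feature] = statistics.mean(column) + k * statistics.pstdev(column)
    return {row}


def inject_fire(rows, start, cols, window=16, temp_rise=5.0, co2_rise=800.0, hum_drop=5.0):
    """Hypothetical fire-like event: temperature and CO2 ramp up, humidity ramps down."""
    end = min(start + window, len(rows))
    for step, r in enumerate(range(start, end), start=1):
        frac = step / window  # linear ramp reaching the full change at the window's end
        rows[r][cols["temperature"]] += temp_rise * frac
        rows[r][cols["co2"]] += co2_rise * frac
        rows[r][cols["humidity"]] -= hum_drop * frac
    return set(range(start, end))


rng = random.Random(42)
data = [[21.0 + rng.random(), 27.0 + rng.random(), 600.0 + 10 * rng.random()]
        for _ in range(200)]
labels = set()
labels |= inject_sensor_fault(data, start=10, feature=COLS["co2"])
labels |= inject_peak(data, row=60, feature=COLS["temperature"], k=rng.uniform(3.0, 5.0))
labels |= inject_fire(data, start=100, cols=COLS)
print(len(labels))  # 32
```

Because an expert-style event shifts several correlated features at once, no single feature needs to leave its normal range for the tuple to look anomalous, which is what makes these cases harder than isolated peaks.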

5. Experimental Section

5.1. Parameter Settings and Evaluation Metrics

- 98th percentile. The threshold is the 98th percentile of the reconstruction errors computed on the training set;

- max value. The threshold is the maximum reconstruction error observed on the training set;

- max + tolerance. It is computed according to the following formula:

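- Accuracy: the fraction of correctly classified cases, i.e., $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$, where anomalies are the positive class and $TP$, $TN$, $FP$, and $FN$ denote true positives, true negatives, false positives, and false negatives, respectively;

- Precision and Recall: metrics for assessing a system’s ability to detect anomalies, as together they measure how accurately anomalies are identified while keeping false alarms low. Specifically, $\mathrm{Precision} = \frac{TP}{TP + FP}$ and $\mathrm{Recall} = \frac{TP}{TP + FN}$;

- F-Measure: summarizes the model performance and is computed as the harmonic mean of Precision and Recall, i.e., $F = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$.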
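The threshold strategies and the metrics above combine into a simple evaluation loop: a tuple is flagged as anomalous when its reconstruction error exceeds a threshold derived from the errors on the (normal) training data, and the flags are then scored. The sketch below makes several assumptions: the percentile uses linear interpolation between sorted values, the toy error values and labels are invented, and because the exact max + tolerance formula is not reproduced here, `max_value * (1 + tolerance)` with a hypothetical `tolerance=0.1` is only a placeholder.

```python
import math


def percentile(values, q):
    """q-th percentile with linear interpolation between sorted values."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def thresholds(train_errors, tolerance=0.1):
    """Threshold strategies; the tolerance rule is a hypothetical placeholder."""
    max_value = max(train_errors)
    return {
        "98th percentile": percentile(train_errors, 98),
        "max value": max_value,
        "max + tolerance": max_value * (1 + tolerance),
    }


def f_measure(precision, recall):
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def evaluate(y_true, y_pred):
    """Accuracy, Precision, Recall, F-Measure with anomalies (True) as positives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)
    tn = sum(1 for t, p in pairs if not t and not p)
    fp = sum(1 for t, p in pairs if not t and p)
    fn = sum(1 for t, p in pairs if t and not p)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall, f_measure(precision, recall)


# Toy per-tuple reconstruction errors (e.g., MSE between a tuple and its reconstruction).
train_errors = [i / 100 for i in range(1, 101)]   # 0.01 ... 1.00, all normal
test_errors = [0.50, 0.99, 1.05, 0.20, 1.30]
y_true = [False, False, True, False, True]        # ground-truth anomaly labels

for name, th in thresholds(train_errors).items():
    y_pred = [e > th for e in test_errors]        # flag errors above the threshold
    print(name, evaluate(y_true, y_pred))

print(round(f_measure(0.801, 0.996), 3))  # 0.888
```

On this toy data, raising the threshold trades recall for precision, the same trend visible in the result tables, where the max value and max + tolerance rows reach precision close to 1.000 at the cost of recall. The last line checks the F-Measure formula against the baseline’s 98th-percentile row in the first results table (Precision 0.801, Recall 0.996, F-Measure 0.888).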

5.2. Quantitative Evaluation: Comparison with the Baseline and Sensitivity Analysis

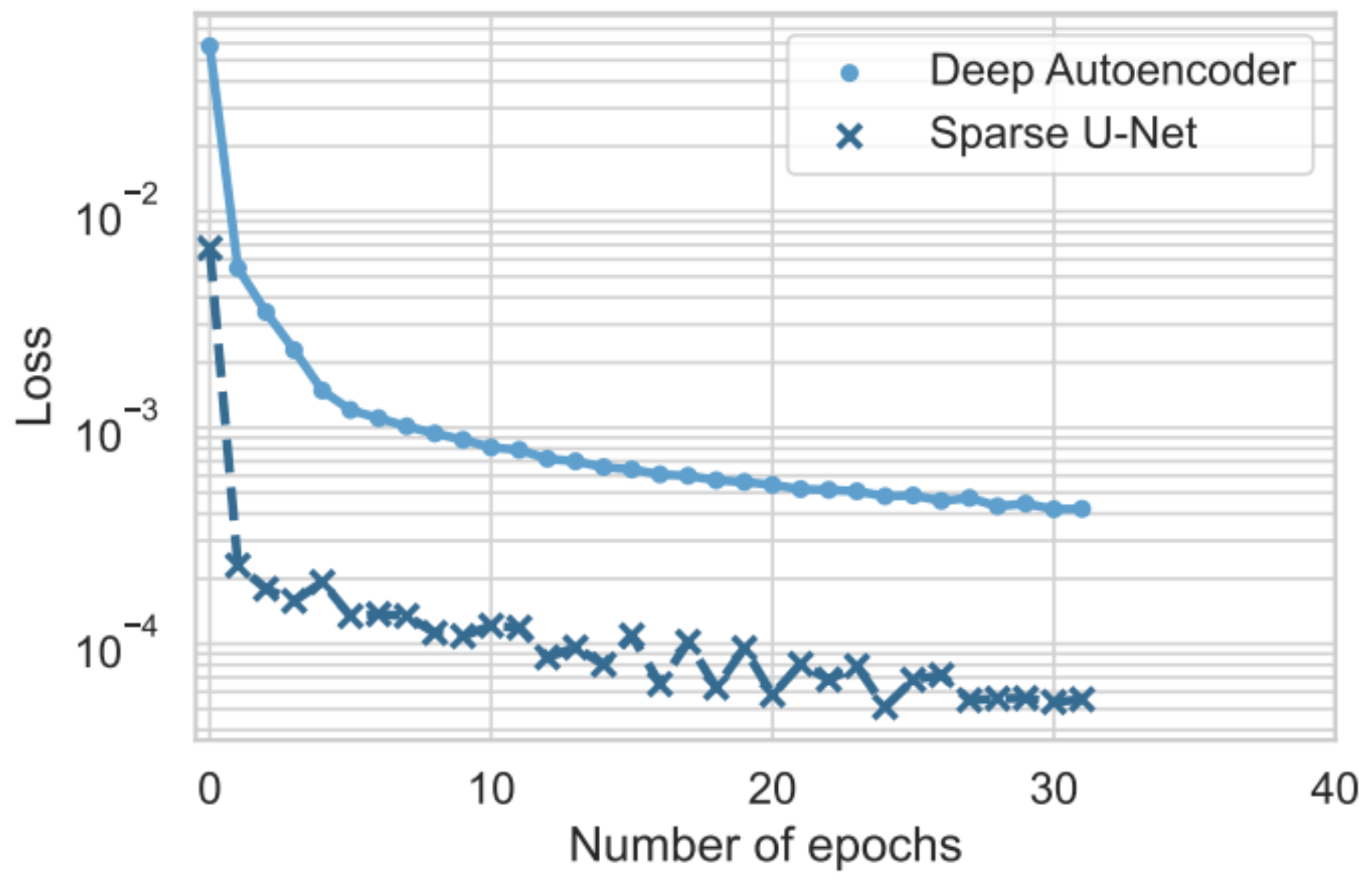

5.3. Convergence

6. Conclusions

Challenges and Opportunities

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Atzori, L.; Iera, A.; Morabito, G. The internet of things: A survey. Comput. Netw. 2010, 54, 2787–2805.

2. Syed, A.S.; Sierra-Sosa, D.; Kumar, A.; Elmaghraby, A. IoT in Smart Cities: A Survey of Technologies, Practices and Challenges. Smart Cities 2021, 4, 429–475.

3. Jia, M.; Komeily, A.; Wang, Y.; Srinivasan, R.S. Adopting Internet of Things for the development of smart buildings: A review of enabling technologies and applications. Autom. Constr. 2019, 101, 111–126.

4. Daissaoui, A.; Boulmakoul, A.; Karim, L.; Lbath, A. IoT and Big Data Analytics for Smart Buildings: A Survey. Procedia Comput. Sci. 2020, 170, 161–168.

5. Wu, X.; Zhu, X.; Wu, G.Q.; Ding, W. Data mining with big data. IEEE Trans. Knowl. Data Eng. 2014, 26, 97–107.

6. Ditzler, G.; Roveri, M.; Alippi, C.; Polikar, R. Learning in Nonstationary Environments: A Survey. IEEE Comput. Intell. Mag. 2015, 10, 12–25.

7. Alanne, K.; Sierla, S. An overview of machine learning applications for smart buildings. Sustain. Cities Soc. 2022, 76, 103445.

8. Aguilar, J.; Garces-Jimenez, A.; R-Moreno, M.; García, R. A systematic literature review on the use of artificial intelligence in energy self-management in smart buildings. Renew. Sustain. Energy Rev. 2021, 151, 111530.

9. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

10. Cassavia, N.; Folino, F.; Guarascio, M. Detecting DoS and DDoS Attacks through Sparse U-Net-like Autoencoders. In Proceedings of the 2022 IEEE 34th International Conference on Tools with Artificial Intelligence (ICTAI), Macao, China, 31 October–2 November 2022; pp. 1342–1346.

11. Shahraki, A.; Taherkordi, A.; Haugen, O. TONTA: Trend-based Online Network Traffic Analysis in ad-hoc IoT networks. Comput. Netw. 2021, 194, 108125.

12. Zhu, K.; Chen, Z.; Peng, Y.; Zhang, L. Mobile Edge Assisted Literal Multi-Dimensional Anomaly Detection of In-Vehicle Network Using LSTM. IEEE Trans. Veh. Technol. 2019, 68, 4275–4284.

13. Gao, H.; Qiu, B.; Barroso, R.J.D.; Hussain, W.; Xu, Y.; Wang, X. TSMAE: A Novel Anomaly Detection Approach for Internet of Things Time Series Data Using Memory-Augmented Autoencoder. IEEE Trans. Netw. Sci. Eng. 2023, 10, 2978–2990.

14. Weston, J.; Chopra, S.; Bordes, A. Memory Networks. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

15. Sater, R.A.; Hamza, A.B. A Federated Learning Approach to Anomaly Detection in Smart Buildings. ACM Trans. Internet Things 2021, 2, 1–23.

16. Li, S.; Cheng, Y.; Liu, Y.; Wang, W.; Chen, T. Abnormal Client Behavior Detection in Federated Learning. arXiv 2019, arXiv:1910.09933.

17. Folino, F.; Guarascio, M.; Pontieri, L. Context-Aware Predictions on Business Processes: An Ensemble-Based Solution. In Proceedings of the New Frontiers in Mining Complex Patterns—First International Workshop, NFMCP 2012, Held in Conjunction with ECML/PKDD 2012, Bristol, UK, 24 September 2012; Revised Selected Papers; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2012; Volume 7765, pp. 215–229.

18. Khan, W.Z.; Ahmed, E.; Hakak, S.; Yaqoob, I.; Ahmed, A. Edge computing: A survey. Future Gener. Comput. Syst. 2019, 97, 219–235.

19. Yahyaoui, A.; Abdellatif, T.; Yangui, S.; Attia, R. READ-IoT: Reliable Event and Anomaly Detection Framework for the Internet of Things. IEEE Access 2021, 9, 24168–24186.

20. Lydia, E.L.; Jovith, A.A.; Devaraj, A.F.S.; Seo, C.; Joshi, G.P. Green Energy Efficient Routing with Deep Learning Based Anomaly Detection for Internet of Things (IoT) Communications. Mathematics 2021, 9, 500.

21. Hinton, G.; Salakhutdinov, R. Reducing the Dimensionality of Data with Neural Networks. Science 2006, 313, 504–507.

22. Bengio, Y.; Lamblin, P.; Popovici, D.; Larochelle, H. Greedy Layer-Wise Training of Deep Networks. In Advances in Neural Information Processing Systems (NeurIPS); MIT Press: Cambridge, MA, USA, 2007; Volume 19, pp. 153–160.

23. Rosasco, L.; De Vito, E.; Caponnetto, A.; Piana, M.; Verri, A. Are Loss Functions All the Same? Neural Comput. 2004, 16, 1063–1076.

24. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

25. Candanedo, L.M.; Feldheim, V. Accurate occupancy detection of an office room from light, temperature, humidity and CO2 measurements using statistical learning models. Energy Build. 2016, 112, 28–39.

26. Nair, V.; Hinton, G.E. Rectified Linear Units Improve Restricted Boltzmann Machines. In Proceedings of the 27th International Conference on International Conference on Machine Learning (ICML’10), Madison, WI, USA, 21–24 June 2010; pp. 807–814.

27. Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2017, arXiv:1609.04747.

28. Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

29. Rajagopal, S.M.; Supriya, M.; Buyya, R. FedSDM: Federated learning based smart decision making module for ECG data in IoT integrated Edge–Fog–Cloud computing environments. Internet Things 2023, 22, 100784.

30. Khan, I.; Delicato, F.; Greco, E.; Guarascio, M.; Guerrieri, A.; Spezzano, G. Occupancy Prediction in Multi-Occupant IoT Environments leveraging Federated Learning. In Proceedings of the 2023 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), to Appear, Abu Dhabi, United Arab Emirates, 13–17 November 2023; pp. 1–7.

31. Xia, Q.; Ye, W.; Tao, Z.; Wu, J.; Li, Q. A survey of federated learning for edge computing: Research problems and solutions. High-Confid. Comput. 2021, 1, 100008.

32. Caviglione, L.; Comito, C.; Guarascio, M.; Manco, G. Emerging challenges and perspectives in Deep Learning model security: A brief survey. Syst. Soft Comput. 2023, 5, 200050.

33. Williams, P.; Dutta, I.K.; Daoud, H.; Bayoumi, M. A survey on security in internet of things with a focus on the impact of emerging technologies. Internet Things 2022, 19, 100564.

| Dataset | Features | Number of Tuples | Further information |
|---|---|---|---|
| Training | 7 | 8143 | Measurements mainly obtained with the closed door while the room is occupied |
| Testing_1 | 7 | 2665 | Measurements mainly obtained with the closed door while the room is occupied |
| Testing_2 | 7 | 9752 | Measurements mainly obtained with the opened door while the room is occupied |

| Dataset | Features | Number of Tuples | Further information |
|---|---|---|---|
| Training_1_plus | 7 | 13,019 | Measurements obtained both with the closed and the opened door while the room is occupied |
| Testing_1 | 7 | 2665 | Measurements mainly obtained with the closed door while the room is occupied |
| Testing_2_sampled | 7 | 4876 | Measurements mainly obtained with the opened door while the room is occupied |

| Parameters | Values |
|---|---|
| batch_size | 16 |
| num_epoch | 32 |
| optimizer | adam |
| loss | mse |

| Neural Model | Threshold | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| Deep Autoencoder (baseline) | 98th percentile | 0.956 | 0.801 | 0.996 | 0.888 |
| | max value | 0.889 | 1.000 | 0.359 | 0.529 |
| | max + tolerance | 0.868 | 1.000 | 0.242 | 0.390 |
| Sparse U-Net (Proposed Model) | 98th percentile | 0.960 | 0.814 | 0.996 | 0.896 |
| | max value | 0.943 | 0.984 | 0.682 | 0.806 |
| | max + tolerance | 0.926 | 1.000 | 0.571 | 0.727 |

| Neural Model | Threshold | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| Deep Autoencoder (baseline) | 98th percentile | 0.969 | 0.851 | 1.000 | 0.919 |
| | max value | 0.903 | 1.000 | 0.458 | 0.628 |
| | max + tolerance | 0.885 | 1.000 | 0.352 | 0.521 |
| Sparse U-Net (Proposed Model) | 98th percentile | 0.948 | 0.850 | 0.859 | 0.854 |
| | max value | 0.940 | 0.985 | 0.675 | 0.801 |
| | max + tolerance | 0.893 | 1.000 | 0.399 | 0.570 |

| Neural Model | Threshold | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| Deep Autoencoder (baseline) | 98th percentile | 0.944 | 0.586 | 0.850 | 0.694 |
| | max value | 0.959 | 1.000 | 0.450 | 0.621 |
| | max + tolerance | 0.948 | 1.000 | 0.305 | 0.467 |
| Sparse U-Net (Proposed Model) | 98th percentile | 0.955 | 0.645 | 0.900 | 0.752 |
| | max value | 0.967 | 0.959 | 0.590 | 0.731 |
| | max + tolerance | 0.959 | 1.000 | 0.460 | 0.630 |

| Neural Model | Threshold | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| Deep Autoencoder (baseline) | 98th percentile | 0.852 | 0.395 | 0.973 | 0.562 |
| | max value | 0.945 | 0.986 | 0.449 | 0.617 |
| | max + tolerance | 0.927 | 1.000 | 0.249 | 0.399 |
| Sparse U-Net (Proposed Model) | 98th percentile | 0.875 | 0.438 | 0.966 | 0.603 |
| | max value | 0.968 | 0.952 | 0.711 | 0.814 |
| | max + tolerance | 0.961 | 1.000 | 0.604 | 0.753 |

| Neural Model | Threshold | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| Deep Autoencoder (baseline) | 98th percentile | 0.846 | 0.381 | 0.928 | 0.541 |
| | max value | 0.947 | 0.987 | 0.463 | 0.630 |
| | max + tolerance | 0.939 | 1.000 | 0.371 | 0.541 |
| Sparse U-Net (Proposed Model) | 98th percentile | 0.864 | 0.405 | 0.838 | 0.546 |
| | max value | 0.965 | 0.966 | 0.667 | 0.790 |
| | max + tolerance | 0.943 | 1.000 | 0.415 | 0.586 |

| Neural Model | Threshold | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| Deep Autoencoder (baseline) | 98th percentile | 0.831 | 0.185 | 0.915 | 0.308 |
| | max value | 0.979 | 0.971 | 0.510 | 0.669 |
| | max + tolerance | 0.974 | 1.000 | 0.370 | 0.540 |
| Sparse U-Net (Proposed Model) | 98th percentile | 0.862 | 0.225 | 0.960 | 0.364 |
| | max value | 0.985 | 0.893 | 0.710 | 0.791 |
| | max + tolerance | 0.980 | 1.000 | 0.510 | 0.675 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cicero, S.; Guarascio, M.; Guerrieri, A.; Mungari, S. A Deep Anomaly Detection System for IoT-Based Smart Buildings. Sensors 2023, 23, 9331. https://doi.org/10.3390/s23239331
