Abstract

Shape from focus enables microscopic 3D sensing by combining it with a microscope system. However, edge bleeding artifacts of estimated depth easily occur in this environment. Therefore, this study analyzed artifacts and proposed a method to reduce edge bleeding artifacts. As a result of the analysis, the artifact factors are the depth of field of the lens, object texture, brightness difference between layers, and the slope of the object. Additionally, to reduce artifacts, a weighted focus measure value method was proposed based on the asymmetry of local brightness in artifacts. The proposed reduction method was evaluated through simulation and implementation. Edge bleeding artifact reduction rates of up to 60% were shown in various focus measure operators. The proposed method can be used with postprocessing algorithms and reduces edge bleeding artifacts.

1. Introduction

Three-dimensional sensing computes a depth map with data obtained by sensing an object and restoring the object in a 3D image close to the real object based on the obtained depth map. Image depth estimation methods for 3D sensing include structured light methods, infrared light or laser methods, stereoscopy, and shape-from-focus (SFF). The method using structured light estimates the depth using the shape transformation of captured structured light by projecting structured light onto an object [1,2,3]. Infrared or laser light methods estimate the depth from the differences in the angle or phase of reflected light [4,5]. Stereoscopy is a method of estimating depth using the shape difference between the images captured by two cameras at different positions [6,7,8]. SFF estimates depth using the degree to which each image is in the focus of the image sequences captured with different focal distances with a single camera [9,10,11]. The focal distance means the distance between the lens and the focus position in the object plane. Unlike other methods, SFF has a simple structure because it does not require additional devices other than a camera. Furthermore, SFF can easily realize μm-scale 3D sensing by combining it with a microscope system [12,13].

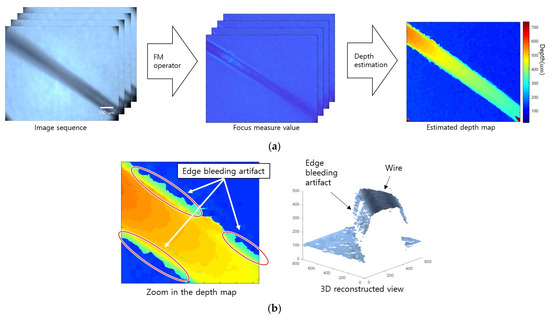

Figure 1a presents a depth estimation method using SFF. First, sequence images with different focal distances in the same scene are obtained. The focal distance could be changed using the lens focus adjustment ring or by changing the distance between the lens and the object. Second, the focus measure (FM) operator is applied to obtain local focus information and measure the degree of focus of each image. The FM operators are most important in the quality of SFF results. Operators based on Laplacian, wavelet, gradient, and variance are commonly used and have different advantages and disadvantages depending on the characteristics of the sensing environment [14]. In addition, the FM value can be corrected according to the purpose. Next, the distance corresponding to the index with the largest FM value is estimated as the depth for the corresponding pixel of the object by comparing the FM value according to the focal distance in the FM value volume. The estimated depth map is noisy in low-light images or low-texture objects, so it requires postprocessing.

Figure 1.

Example of shape from focus and edge bleeding artifact: (a) traditional method of shape from focus and (b) examples of edge bleeding artifact. The estimated depth is bleeding at the wire edge.

When blurred by defocus, components, such as contrast, gradient, variance, and high frequency of the image, are reduced. Various FM operators have been proposed to measure the degree of focus using the image sharpness based on these characteristics. Gradient-based operators calculate the first derivative or gradient of images [15,16]. This assumes that the defocused image is blurrier than the in-focused image. Laplacian-based operators compute the second derivative or Laplacian of images [17]. This operator has the same assumptions as gradient-based operators. Wavelet-based operators calculate the frequency component of images using a discrete wavelet transform [18,19]. The defocused image has fewer high-frequency components than the in-focus image. Statistics-based operators compute some statistical values, such as the variance [20,21]. This assumes that the defocused image has a smaller variance component than the in-focused image.

When SFF is performed in a microscopic environment, depth blurring occurs more often at the edges than in a general environment. This artifact is called an edge bleeding artifact, in which the depth estimated from the object edge spreads into continuous values. Figure 1b shows that depth is blurred at the edge between the wire and the background. In microscopic images, the depth of field (DoF) is very short, so defocus blurring affects a wide area [22,23]. When an object is blurred, the gradient component in the area around the edge increases, which is measured by the FM operator. Nevertheless, the FM value around the edge becomes larger than the FM value of the object if the texture is weak, which causes edge bleeding artifacts, regardless of whether the texture of the object is strong. This artifact has the characteristic that postprocessing algorithms do not easily remove it because it forms a continuous value with the depth of the surrounding area.

In general, postprocessing methods remove discontinuous depths and interpolate to nearby depths [24,25,26]. It is effective in removing noise in low-texture areas, but sometimes, the boundaries of objects become unclear, and edge bleeding artifacts cannot be removed. The FM operator’s adaptive window method was proposed to compensate for this disadvantage [27]. A large window is used in the low-texture area, and a small window is used at the edge of the object to make the boundary of the object clear. However, the edge bleeding artifact cannot be removed because it is not related to the window size. As another method, a correlation-based guide filtering method was proposed [28]. The boundary of the object is made clear by estimating a depth map with a high correlation between the image and FM value. This method also does not significantly remove edge bleeding artifacts.

In some applications, such as bonding wire inspection, edge bleeding artifacts affect the inspection adversely, so there is a need to eliminate them as much as possible. Therefore, this study analyzed the edge bleeding artifact, which was only known empirically, using a formula based on the gradient at the edges. The edge bleeding artifacts were analyzed about the cause of the artifact and the factors affecting the artifact with microscope images. Various FM operators are compared and verified through simulations because the analysis may vary depending on the type of FM operator. Furthermore, based on the analyzed content, this paper proposes an edge bleeding artifact reduction method to alleviate bleeding artifacts. Lastly, this study compared and verified the performance of the proposed edge bleeding artifact reduction method using microscopy images.

2. Analysis of Edge Bleeding Artifact

2.1. Principles of Blur Effect by Defocus

General optical principles of SFF are introduced before analyzing edge bleeding artifacts. SFF estimates the depth using focal information. Focal information indicates the degree of focus and defocus of the image. Focal information cannot be obtained if the image does not defocus when the focal distance changes. Therefore, the focusing range must be narrowed to increase the depth resolution. The allowable focal range of the camera is the depth of field (DoF) expressed as Equation (1) [29].

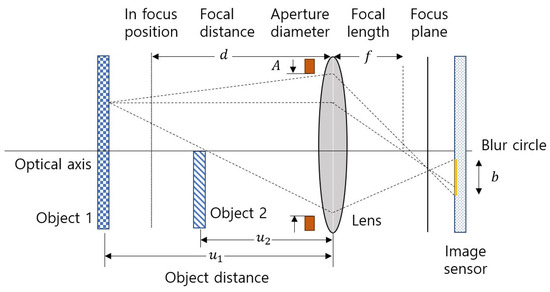

where is the focal length; is the focal distance, which is the distance to the focus position; and is the circle of confusion. is the f-number, , and a parameter that is proportional to the DoF. is the aperture diameter. Equation development was simplified by approximating the DoF using the thin-lens formula [30]. The effect of the defocus blur occurring at the object edge, except for the effect by the object texture, was checked by placing objects 1 and 2 at object distance and , respectively, as shown in Figure 2. Assume that the image of object 1 in focus is a single-tone image and the image of object 2 in focus is a single-tone image . Here, is the unit step function, and domain and are coordinates in the image sensor plane. Because object 1 is masked by object 2, the defocus image at focal distance taken by applying the transmittance map, , of the object 2 layer, as shown in Equation (2).

where is the point spread function (PSF) at focal distance . The defocused image is obtained by convolution of the object image and the PSF corresponding to object distance . The defocused image of object 1 is masked by element-wise product () with the defocused transmittance map of object 2. The PSF depends on the structure of the camera lens and the shape of the aperture but can be approximated in the form of a Gaussian function [31].

where is the blur parameter of the PSF, which is simplified by the object distance and camera parameters when applying the thin-lens formula, as expressed in Equation (4) [32].

Figure 2.

Defocus formation using the lens model.

For the focus plane to exist, the focal distance must satisfy the condition . The blur parameter is inversely proportional to the f-number . Therefore, the blur parameter increases when the f-number is reduced to shorten the DoF, and the blur caused by defocus becomes stronger.

2.2. Occurrence Conditions for Edge Bleeding Artifact

This study analyzed the conditions for the occurrence of edge bleeding artifacts. To analyze why edge bleeding artifact occurs, it is necessary to know the maximum value of the image plane gradient according to the focal distance in the image sequence in the near edge area and the corresponding focal distance. First, the image gradient is calculated by defocus using the Euclidean norm of the gradient, as shown in Equation (5).

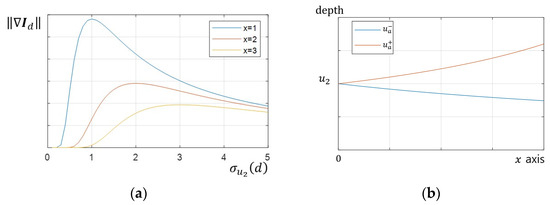

The gradient increases close to the edge and when the difference in brightness between two objects is large. The depth estimation method in SFF finds the focus distance when the FM value is a maximum. Therefore, the gradient according to the focus distance is important. Figure 3a shows the graph to see the change according to blur parameter of the gradient . The gradient had a single peak and gradually decreased after passing the peak. In addition, the value and position of the peak of the gradient changed when the distance from the edge changed. This maximum value and position were calculated by a partial derivative of the gradient using the parameter .

Figure 3.

Tendency of variables: (a) blur parameter vs. gradient graph, gradient has a single maximum; (b) depth of edge bleeding artifact depending on position.

Here, has a maximum value, as shown in Equation (7), when .

Ignoring the texture of the object, a formula was developed to determine the maximum value and position of the gradient because of the differences in brightness. Object 2 was in focus when and increased as the focal distance changed, according to Equation (4). The maximum gradient decreased gradually in proportion to . In addition, the focal distance when the gradient was maximum at position was the depth of the edge bleeding artifact and was calculated as an inverse function of Equation (4).

was calculated with two solutions except for , as shown in Figure 3b.

In general, the FM operator detected the edge blur component and the object texture component together because the SFF is performed in an environment with the object texture. Equation (9) expresses the FM value calculated using the FM operator for the image taken at the focal distance .

where is a function of the FM operator, which outputs the FM value when image is input. The depth was estimated as the distance at which the FM value has the maximum value for the , points of the image. Therefore, the conditions under which edge bleeding artifacts occur are as follows.

Edge bleeding artifacts occur when the depth is estimated instead of object depth because the FM value at is greater than the FM value .

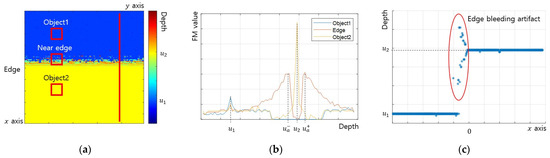

Figure 4 presents the results of performing SFF in an environment where the texture of object 1 is weak and the texture of object 2 is strong. The edge bleeding artifact occurred near the edge toward object 1, where the texture was weak (Figure 4a). Figure 4b shows the FM values in the three areas marked with squares in Figure 4a. At the object 1 point, the FM value was maximized at depth , and the object 2 point, the FM value was maximized at depth , so the depth was correctly estimated. On the other hand, at the near edge point, the FM value was maximized at depth or , so the depth was misestimated. Figure 4c presents the depth for the vertical line marked in Figure 4a and clearly shows the edge bleeding artifact.

Figure 4.

Example of SFF results: (a) estimated depth map; (b) FM value at points; (c) estimated depth along x axis.

2.3. Factors Causing Edge Bleeding Artifact

The factors that cause edge bleeding artifacts by defocus include the DoF, object texture intensity, the brightness difference between objects, and object slope. First, the DoF affects the blur parameter by defocus. The f-number is reduced when the DoF is shortened, the blur parameter is increased, and the area where the edge bleeding artifact occurs is widened.

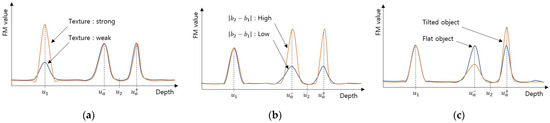

Second, the object texture intensity affects the FM value of the object, as shown in Figure 5a. An edge bleeding artifact occurs when the FM value by edge blur is greater than the FM value of the object, as expressed in Equation (10). Therefore, the less the object texture, the smaller the FM value of the object, resulting in more edge bleeding artifacts.

Figure 5.

The FM value according to factors causing edge bleeding artifact: (a) comparison according to object texture; (b) comparison according to ; (c) comparison according to object slope.

Third, the difference in brightness between the two objects is proportional to the maximum value of the gradient , as expressed in Equation (7). Therefore, the FM value at the near edge area increases as the difference in brightness increases, as shown in Figure 5b, resulting in more edge bleeding artifacts.

Finally, when the object is tilted, the distance changes depending on the position, and the blur parameter also changes. The blur gradient at the near edge area becomes stronger when the object is tilted in such a way that the object distance increases as it moves away from the object edge. The blur gradient at the near edge area is weakened when the slope is reversed. Therefore, the FM value at the near edge tilted to one side, resulting in a unidirectional edge bleeding artifact, as shown in Figure 5c.

3. Method to Reduce Edge Bleeding Artifact

The FM value in the near edge area includes the gradient component by defocus blur. As a result of the analysis in Section 2.2, an edge bleeding artifact occurs when the FM value of the gradient component becomes larger than that of the object texture component. Therefore, this paper proposes a method that reduces the edge bleeding artifacts by reducing the FM value of the defocus blur component. The image brightness changes rapidly in the depth of the edge bleeding artifact because the edges are blurred by defocus. The image brightness calculated from Equation (2) is as follows.

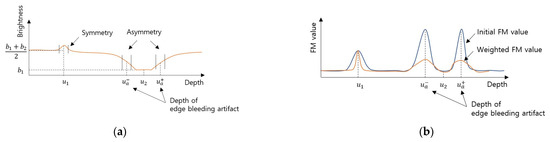

where is the Gaussian error function and is expressed as . Image brightness according to depth at a position near the edge is shown in Figure 6a. The local brightness was the same as the brightness of the object when in focus in the near edge area. On the other hand, when defocused, the local brightness was intermediate between the brightness of the two objects because of the blur effect. The local brightness was asymmetric based on the depth at which the edge bleeding artifact occurred. Nevertheless, the local average brightness between in-focus and defocus did not change significantly at locations other than the edge. In addition, the local brightness is symmetrical based on the in-focus because defocus in the direction close to the lens and defocus in the direction far away from the focal distance have a similar degree of blur effect. Therefore, the edge bleeding artifact reduction method uses measurements of the symmetry and asymmetry of the local brightness.

Figure 6.

Local brightness and FM value near the edge: (a) Depth vs. local brightness. Asymmetry in the depth of the edge bleeding artifacts. (b) Comparison of initial FM value and weighted FM value.

The window size for calculating local average brightness uses the same size as the FM operator. The equation for calculating the local average brightness in the image sequence is as follows.

where is half the window size and is calculated as . The symmetry and asymmetry of the reference point in the depth domain were calculated. For the offset invariant, subtracting the offset from each element of the reference point gives . Here, the even component is , and the odd component is . Therefore, the symmetric degree and asymmetric degree in the range were calculated using Equation (13).

The local average brightness is asymmetric where the edge bleeding artifact occurs and symmetric at the in-focus point on the object. Therefore, the FM value of the points where the local average brightness is asymmetric should be reduced to reduce the edge bleeding artifact. FM value weight for reducing edge bleeding artifacts is as follows.

where is the symmetry sensitivity coefficient and is adjusted so that is calculated as symmetry when the local average brightness is flat. The range of is [0, 1]. In the case of complete even symmetry, , and in the case of asymmetry, is close to zero. The weighted FM value is calculated by multiplying the FM value obtained with the FM operator by the edge bleeding artifact reduction weight .

Figure 6b shows the weighted FM value. Finally, the depth map is estimated as the index with the maximum weighted FM value.

4. Results

4.1. Simulation

Simulations were performed to verify the method analyzed and proposed in this paper. First, the degree of edge bleeding artifact was compared according to the FM operator type. The factors affecting the edge bleeding artifact were then verified. Lastly, the proposed edge bleeding artifact reduction method was verified.

The FM operators to be compared and verified are the gradient-based operator (GRA) [16], Laplacian-based operator (LAP) [17], statistic-based operator (STA) [20], and Wavelet-based operator (WAV) [18]. Table 1 lists the simulation parameters. The size of the object and the real image are the same because the focal distance is twice the focal length. Therefore, one image pixel corresponds to 1 μm of the object plane because the image sensor cell size is 1 μm. In addition, the calculated DoF was 20 μm when the circle of confusion value was substituted into the image sensor cell size.

Table 1.

Simulation parameter.

The evaluation measurements for comparing the performance of simulation results include the root mean square error (RMSE) and the width of the edge bleeding artifact. The RMSE measures the error between the ground truth and the estimated depth map. The RMSE measurement was performed around the edge as a region of interest to increase the impact of the edge bleeding artifact. When the size of the region of interest is , the ground truth is , and the estimated depth map is , the RMSE is as follows.

The edge bleeding artifact occurs when the RSME is larger. On the other hand, the width of the edge bleeding artifact was also measured as a measurement independent of the depth difference because the RMSE is dependent on the depth difference between the two objects. The width of the edge bleeding artifact is the average width of the edge bleeding artifact in the direction perpendicular to the object edge. The average width was calculated by excluding the top and bottom 20% to remove outlier data.

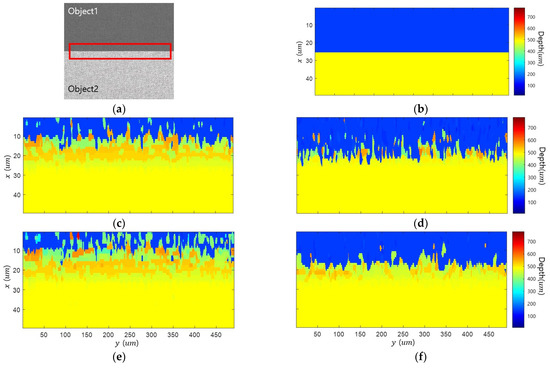

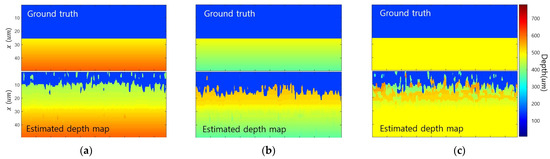

Figure 7 shows the edge bleeding artifact according to FM operators. The area corresponding to the red box shown in Figure 7a was enlarged in all result figures to check edge bleeding artifacts. Results were expressed in the same color scale. The edge bleeding artifact occurs in all types of FM operators, even though there are differences in the degree. Table 2 presents a quantitative evaluation of the performance. Blurring affects the gradient and statistic-based FM operators, resulting in many edge bleeding artifacts. Wavelet-based FM operator detects high-frequency components and is indirectly affected by blur, resulting in weak edge bleeding artifacts. The Laplacian-based FM operator is the second derivative and is most robust against edge bleeding artifacts as it is affected by the amount of gradient change caused by blur.

Figure 7.

Simulation results of various FM operators: (a) all-in-focus image; (b) ground truth; (c) gradient-based FM operator; (d) Laplacian-based FM operator; (e) statistic-based FM operator; (f) wavelet-based FM operator.

Table 2.

Evaluations of various FM operators.

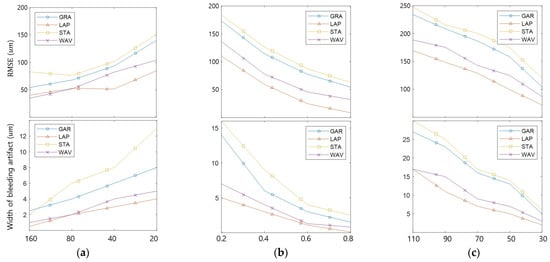

Figure 8 compares the edge bleeding artifacts according to the DoF, texture, and brightness differences between objects. The DoF was adjusted by modifying the f-number, and the texture was adjusted by changing the contrast of object 1. The texture strength ratio is the ratio of the contrast of object 2 and that of object 1. Figure 8a compares the DoF. As the DoF becomes shorter, the blur widens, and the width of the bleeding artifact widens. The longer DoF reduces edge bleeding artifacts but reduces depth resolution and increases noise. Therefore, RMSE decreases as the DoF lengthens as the edge bleeding artifact decreases but does not decrease continuously due to noise. Figure 8b compares the edge bleeding artifacts according to texture. As the texture weakens, the edge bleeding artifact becomes stronger in all FM operators. Figure 8c shows the differences in brightness between the objects. The edge bleeding artifact becomes stronger in all FM operators as the brightness difference increases.

Figure 8.

Comparison of edge bleeding artifact occurrence tendencies according to factors: (a) lens DoF; (b) object texture strength; (c) brightness difference between objects.

Figure 9 shows the edge bleeding artifact according to the slope of object 2. Edge bleeding artifacts were compared for the following: a positive slope, where the object distance decreases as the distance from the edge increases; a negative slope, where the object distance increases; and a flat, where the object distance is constant. When the object was tilted, the defocus gradient in one direction became stronger, and the defocus gradient in the other direction became weaker. Therefore, in the case of flat, the edge bleeding artifact was a mixture of cases where depth increases and decreases. On the other hand, with a positive slope, only edge bleeding artifacts occurred in the direction that the depth decreases. In contrast, with negative slopes, only edge bleeding artifacts occurred in the direction that the depth increased.

Figure 9.

Comparison of edge bleeding artifact according to the slope of object: (a) positive slope; (b) negative slope; (c) flat.

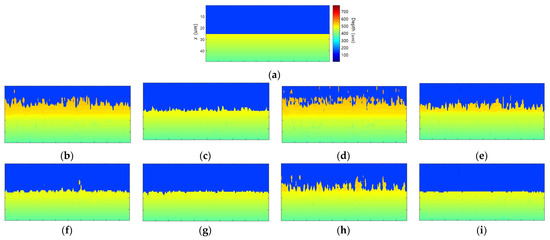

Figure 10 shows the results of the proposed edge bleeding artifact reduction method. Table 3 compares the performance of the proposed method. When the proposed method was applied, the edge bleeding artifact was reduced in all FM operators. In particular, this shows excellent performance in Gradient-based and Wavelet-based FM operators. RMSE and width decreased by 68.0% and 83.2% in GRA and by 65.8% and 85.3% in WAV. In STA, it decreased by 39.5% and 50.9%, but edge bleeding artifacts still remain. Compared to other FMOs, STA has a larger difference between the FM value of the object and the FM value of the edge. Even if the proposed method was applied, the FM value of the edge was not sufficiently reduced, so the reduction rate of the edge bleeding artifact was low. LAP had the lowest reduction rate, but there was almost no edge bleeding artifact, even in the initial depth map. Because each FM operator has a different sensitivity to object texture and edge blur, the performance of the proposed method differs for each FM operator.

Figure 10.

Simulation results of proposed edge bleeding artifact reduction method: (a) ground truth; (b–e) initial depth map of (b) GRA, (c) LAP, (d) STA, and (e) WAV; (f–i) depth map estimated with the proposed weighted FM value of each FM operator.

Table 3.

Performance evaluations of the proposed edge bleeding artifact reduction method.

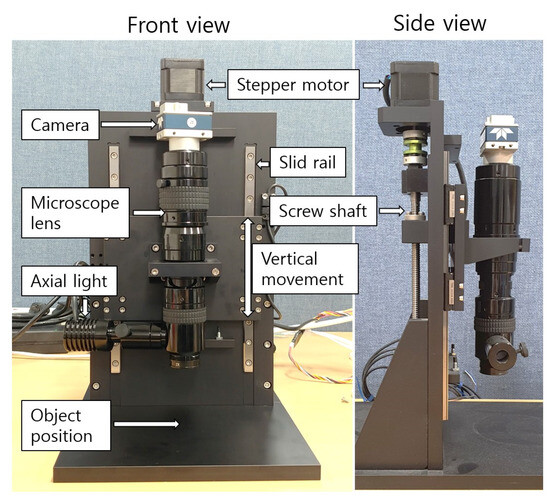

4.2. Implementation in Microscopic System

An experimental environment was configured to verify the edge bleeding artifact reduction method proposed in this paper in a microscope environment, as shown in Figure 11. The microscope lens used was SZ6CHIF3-B5 from Sunny Optical. The DoF was 20 µm, and the lateral resolution was approximately 1 µm. The camera used was Teledyne DALSA’s G3-GM10-M1280. The image resolution was 1280 × 1024, and the sensor size was 1/2 inch. A method of adjusting the actual distance between the object and the lens was adopted without using a focal distance adjustment ring to ensure linearity of depth according to the image sequence. The stepper motor moves the microscope vertically by moving the screw shaft. The moving distance per step was 2.5 µm. A gradient-based operator was used as the FM operator, and the window size was 21. The compared methods are adaptive window size [27] and guided filtering [28]. The proposed method was used together with a postprocessing algorithm. The resulting quality of the compared method and the proposed method may differ. Therefore, when looking at the results, attention should be paid to the edge bleeding artifact, which is the subject of this study.

Figure 11.

Microscopic device used in the experiment.

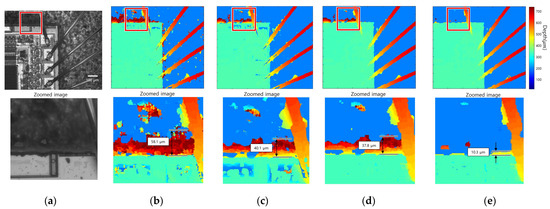

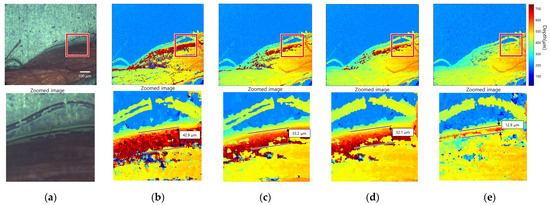

Figure 12 presents the result of 3D imaging of the bonding wire connecting the semiconductor and lead of the IC. The above line figures are the all-in-focus image and the result of applying each FM operator, and the bottom line figures are enlarged images of the red boxes. Figure 12a,b show a focus image and initial depth map performed by SFF using a gradient-based FM operator. The width of the edge bleeding artifact in the initial map is 58.1 µm. Figure 12c shows the results of the adaptive window size method, and the width of the artifact is measured to be 40.1 µm, which has a 30.9% reduction performance. Figure 12d shows the results of the guided filtering method, and the width of the artifact is measured to be 37.8 µm, resulting in a 34.9% reduction performance. Figure 12e shows the results of the proposed method, and the width of the artifact is measured to be 10.3 µm, resulting in a reduction performance of 82.2%. A closer look at the all-in-focus image showed that the semiconductor, bottom layer, and bonding wire have strong textures. On the other hand, the shaded area of the bottom layer had a weak texture. As a result of SFF, the depth of the wire bonded to the semiconductor was calculated. Nevertheless, noise occurred due to an incorrect depth estimation in some low-contrast areas. Furthermore, edge bleeding artifacts occurred in locations where the brightness difference between the objects was large, and the texture was weak. The positions marked with red squares were enlarged and shown below in the original results image. Many edge bleeding artifacts occurred between the semiconductor and the bottom layer. In addition, an edge bleeding artifact occurred between the wire and the bottom layer. The noise was reduced in the results of the adaptive window size method and guided filtering method, but edge bleeding artifacts remained. The edge bleeding artifact was greatly reduced after the proposed edge bleeding artifact reduction method.

Figure 12.

Bonding wire connecting the semiconductor and lead of the IC: (a) all-in-focus image; (b) initial depth map; (c) adaptive window size method; (d) guided filtering method; (e) proposed method.

Figure 13 presents the result of 3D imaging of nylon thread. The above line figures are the all-in-focus image and the result of applying each FM operator, and the bottom line figures are enlarged images of the red boxes. The width of the measured edge bleeding artifact is 42.9 µm for the initial method, 33. 2 µm for the adaptive window method, 32.1 µm for the guided filtering method, and 12.9 µm for the proposed method. The reduction percentage of the compared methods is about 22%, while the reduction percentage of the proposed method is 70%. In the focus image, Figure 13a, the bottom layer has a strong texture, but some areas of the nylon thread have a weak texture. Furthermore, there was a large difference in brightness between the nylon thread and the bottom layer. In this case, edge bleeding artifacts occurred in the direction of the nylon thread with a weak texture. Figure 13b shows that considerable noise occurred in the weak-texture area because SFF was performed using the gradient-based FM operator. Figure 13c,d show that some noise was removed in compared methods, but edge bleeding artifacts remained. Figure 13e shows that the edge bleeding artifact was reduced noticeably after applying the edge bleeding artifact reduction method.

Figure 13.

Nylon thread: (a) all-in-focus image; (b) initial depth map; (c) adaptive window size method; (d) guided filtering method; (e) proposed method.

5. Conclusions

Edge bleeding artifacts, where depths near the object edge are bleeding, frequently occur when performing SFF on microscopic images. This study analyzed the causes and influencing factors of edge bleeding artifacts. The gradient component due to the difference in brightness between objects during defocus was calculated after excluding the effect of the object texture. As a result of the calculation, an edge bleeding artifact occurred when the FM value of the defocus gradient was greater than that of the object texture. Furthermore, factors that cause edge bleeding artifacts include the camera’s short DoF, weak object texture, large brightness difference between two objects, and object tilt. The analysis results were verified through simulation. In addition, the degree of the edge bleeding artifact was evaluated in various FM operators.

Second, this paper proposed a method that alleviates edge bleeding artifacts based on the previous analysis. Local brightness is symmetry based on the in-focus depth and asymmetry based on the depth where edge bleeding artifacts occur. Therefore, the weight of the FM value was calculated using the symmetry and asymmetry of local brightness. The proposed method reduces the FM value at the depth where edge bleeding artifacts occur. Therefore, the FM value of the object texture becomes larger than the FM value of the defocus gradient, thereby relieving the edge bleeding artifacts. The proposed method was verified through simulation and implementation. As a result of the simulation, the edge bleeding artifacts were reduced regardless of the type of FM operator. In particular, RMSE decreased by more than 60% in the gradient-based FM operator and wavelet-based FM operator. In the implementation, a qualitative evaluation was performed because there was no ground truth information. The analysis showed that edge bleeding artifacts occurred in areas where the object texture was weak and the brightness difference was large. A significant amount of edge bleeding artifacts remained even when applying the existing compared algorithms, but the edge bleeding artifact was reduced after applying the proposed method.

A limitation of the proposed method is that the depth estimation accuracy in the weak-texture areas was lowered. The FM value decreased slightly because the local brightness was not perfectly symmetrical based on the focus. This phenomenon does not affect the depth estimation in general texture areas but causes an inaccurate depth estimation in weak-texture areas because of the slight decrease in FM value. Therefore, the depth estimation noise increased when the proposed method was used alone. Hence, it is recommended to use it together with a postprocessing algorithm.

The depth estimation problem in low-contrast and weak-texture areas is a challenge in most SFF-related papers. The method proposed in this paper also showed poor performance in the weak-texture area. On the other hand, the deterioration of the depth estimation performance can be prevented if the proposed method is combined with information, such as reliability [33] or FM value entropy [24]. Future work will examine ways of improving the edge bleeding artifact reduction method using weak-texture area estimations to overcome the limitations of the proposed method.

Author Contributions

S.-H.P., G.-R.P. and K.-R.B. participated in the study design and data collection, performed statistical analyses, contributed to the interpretation of the results, wrote the manuscript, and approved the final manuscript as presented. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ikura, M.; Pathak, S.; Louhi Kasahara, J.Y.; Yamashita, A.; Asama, H. AdjustSense: Adaptive 3D Sensing System with Adjustable Spatio-Temporal Resolution and Measurement Range Using High-Speed Omnidirectional Camera and Direct Drive Motor. Sensors 2021, 21, 6975. [Google Scholar] [CrossRef] [PubMed]

- Li, B.; An, T.; Cappelleri, D.; Xu, J.; Zhang, S. High-accuracy, high-speed 3D structured light imaging techniques and potential applications to intelligent robotics. Int. J. Intell. Robot. Appl. 2017, 1, 86–103. [Google Scholar] [CrossRef]

- Zhang, S. High-speed 3D shape measurement with structured light methods: A review. Opt. Laser Eng. 2018, 106, 119–131. [Google Scholar] [CrossRef]

- Sarbolandi, H.; Lefloch, D.; Kolb, A. Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Comput. Vis. Image Underst. 2015, 139, 1–20. [Google Scholar] [CrossRef]

- Zhang, R.; Cao, S. 3D imaging millimeter wave circular synthetic aperture radar. Sensors 2017, 17, 1419. [Google Scholar] [CrossRef] [PubMed]

- Murry, D.; Little, J.J. Using Real-Time Stereo Vision for Mobile Robot Navigation. Auton. Robots 2000, 8, 161–171. [Google Scholar] [CrossRef]

- Fleischmann, P.; Berns, K. A Stereo Vision Based Obstacle Detection System for Agricultural Applications. In Field and Service Robotics; Springer Tracts in Advanced Robotics; Wettergreen, D., Barfoot, T., Eds.; Springer: Cham, Switzerland, 2016; Volume 113, pp. 217–231. [Google Scholar]

- Perek, P.; Mielczarek, A.; Makowski, D. High-performance image acquisition and processing for stereoscopic diagnostic systems with the application of graphical processing units. Sensors 2022, 22, 471. [Google Scholar] [CrossRef]

- Billiot, B.; Cointault, F.; Journaux, L.; Simon, J.C.; Gouton, P. 3D image acquisition system based on shape from focus technique. Sensors 2013, 13, 5040–5053. [Google Scholar] [CrossRef]

- Hou, L.; Zou, J.; Zhang, W.; Chen, Y.; Shao, W.; Li, Y.; Chen, S. An Improved Shape from Focus Method for Measurement of Three-Dimensional Features of Fuel Nozzles. Sensors 2022, 23, 265. [Google Scholar] [CrossRef]

- Gladines, J.; Sels, S.; Blom, J.; Vanlanduit, S. A fast shape-from-focus-based surface topography measurement method. Sensors 2021, 21, 2574. [Google Scholar] [CrossRef]

- Muhammad, M.; Choi, T.S. Sampling for shape from focus in optical microscopy. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 564–573. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Jia, H.; Jia, P.; Chen, K.; Zhang, X. A novel algorithm for three-dimensional shape reconstruction for microscopic objects based on shape from focus. Opt. Laser Technol. 2023, 168, 109931. [Google Scholar] [CrossRef]

- Pertuz, S.; Puig, D.; Garcia, M.A. Analysis of focus measure operators for shape-from-focus. Pattern Recognit. 2013, 46, 1415–1432. [Google Scholar] [CrossRef]

- Huang, W.; Jing, Z. Evaluation of focus measures in multi-focus image fusion. Pattern Recognit. Lett. 2007, 28, 493–500. [Google Scholar] [CrossRef]

- Choi, T.S.; Yun, J. Three-dimensional shape recovery from the focused-image surface. Opt. Eng. 2000, 39, 1321–1326. [Google Scholar] [CrossRef]

- Nayar, S.K.; Nakagawa, Y. Shape from focus. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 824–831. [Google Scholar] [CrossRef]

- Yang, G.; Nelson, B.J. Wavelet-based autofocusing and unsupervised segmentation of microscopic images. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 27 October–1 November 2003; pp. 2143–2148. [Google Scholar]

- Pauly, M.; Gross, M.; Kobbelt, L.P. Efficient simplification of point-sampled surfaces. In Proceedings of the VIS 2002 IEEE Visualization, Boston, MA, USA, 27 October–1 November 2002; pp. 163–170. [Google Scholar]

- Malik, A.S.; Choi, T.S. A novel algorithm for estimation of depth map using image focus for 3D shape recovery in the presence of noise. Pattern Recognit. 2008, 41, 2200–2225. [Google Scholar] [CrossRef]

- Humayun, J.; Malik, A.S. Real-time processing for shape-from-focus techniques. J. Real-Time Image Process. 2016, 11, 49–62. [Google Scholar] [CrossRef]

- Pentland, A.P. A new sense for depth of field. IEEE Trans. Pattern Anal. Mach. Intell. 1987, 4, 523–531. [Google Scholar] [CrossRef]

- Sheppard, C.J.R.; Wilson, T. Depth of field in the scanning microscope. Optics letters 1978, 3, 115–117. [Google Scholar] [CrossRef]

- Tseng, C.Y.; Wang, S.J. Shape-from-focus depth reconstruction with a spatial consistency model. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 2063–2076. [Google Scholar] [CrossRef]

- Mahmood, M.T.; Choi, T.S. Nonlinear approach for enhancement of image focus volume in shape from focus. IEEE Trans. Image Process. 2012, 21, 2866–2873. [Google Scholar] [CrossRef] [PubMed]

- Gaganov, V.; Ignatenko, A. Robust shape from focus via Markov random fields. In Proceedings of the Graphicon Conference, Moscow, Russia, 5–9 October 2009; pp. 74–80. [Google Scholar]

- Li, L.; Pan, Z.; Cui, H.; Liu, J.; Yang, S.; Liu, L.; Tian, Y.; Wang, W. Adaptive window iteration algorithm for enhancing 3D shape recovery from image focus. Chin. Opt. Lett. 2019, 17, 061001. [Google Scholar] [CrossRef]

- Ali, U.; Mahmood, M.T. Depth enhancement through correlation-based guided filtering in shape from focus. Microsc. Res. Tech. 2021, 84, 1368–1374. [Google Scholar] [CrossRef]

- Allen, E.; Triantaphillidou, S. The Manual of Photography, 10th ed.; Routledge: London, UK, 2011; p. 111. [Google Scholar]

- Jenkins, F.A.; White, H.E. Fundamentals of Optics, 4th ed.; McGraw-Hill: New York, NY, USA, 2001; pp. 60–62. [Google Scholar]

- Tao, Q.C.; He, X.H.; Deng, H.B.; Liu, Y.; Zhao, J. Wavelet transform based Gaussian point spread function estimation. In Advances in Visual Computing: First International Symposium, Proceedings of the ISVC 2005, NV, USA, 5–7 December 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 396–405. [Google Scholar]

- Ghita, O.; Whelan, P.F. A video-rate range sensor based on depth from defocus. Opt. Laser Technol. 2001, 33, 167–176. [Google Scholar] [CrossRef]

- Pertuz, S.; Puig, D.; Garcia, M.A. Reliability measure for shape-from-focus. Image Vis. Comput. 2013, 31, 725–734. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).