Integral Imaging Display System Based on Human Visual Distance Perception Model

Abstract

1. Introduction

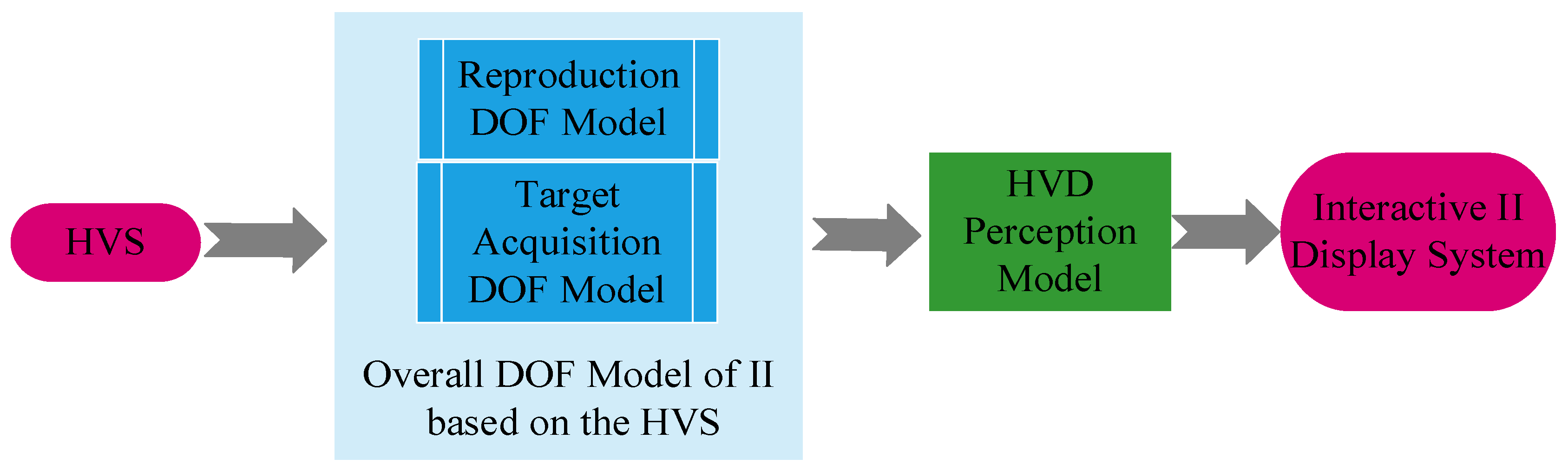

2. Overall DOF Model of II System Based on the Human Visual System

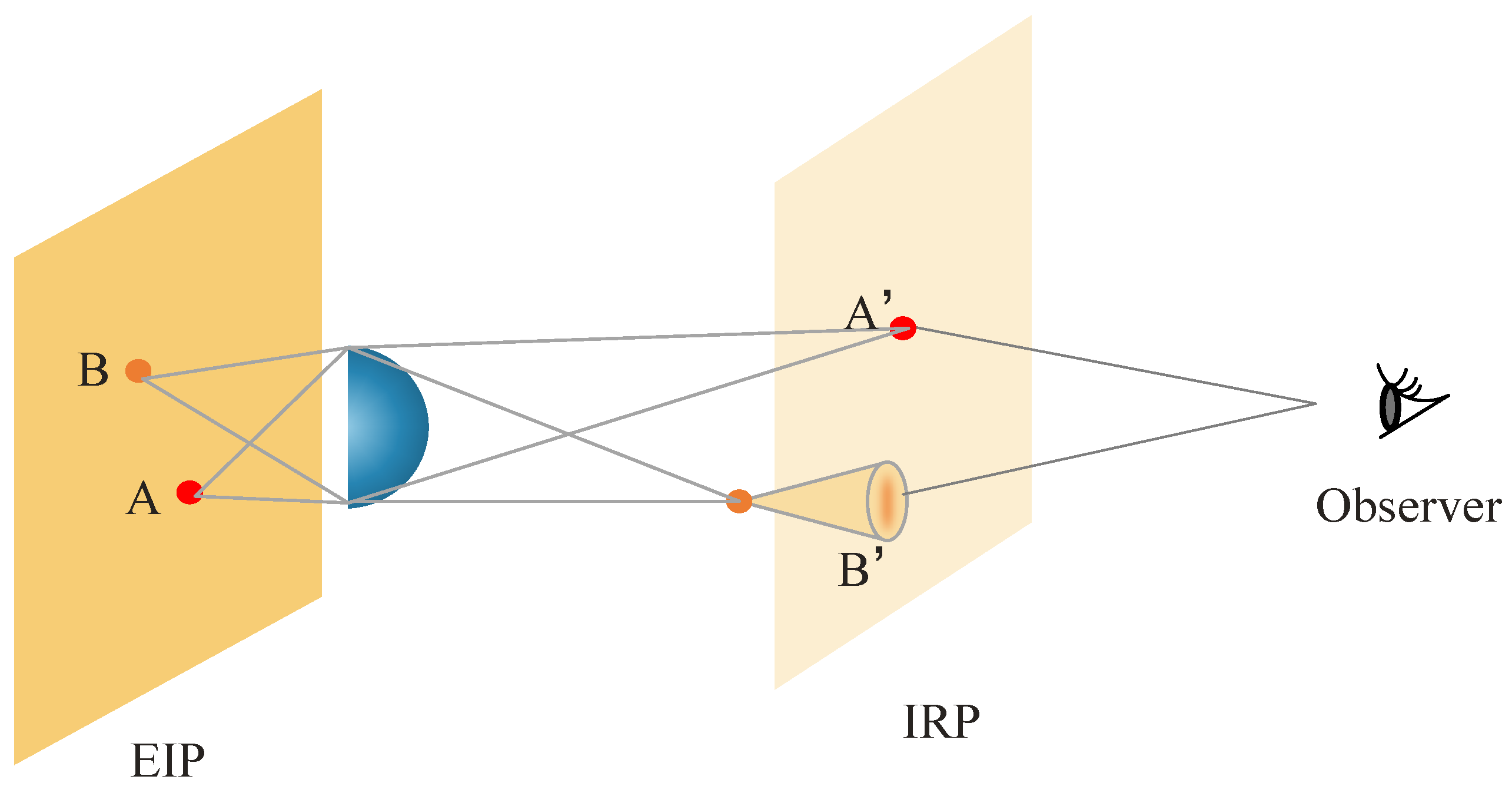

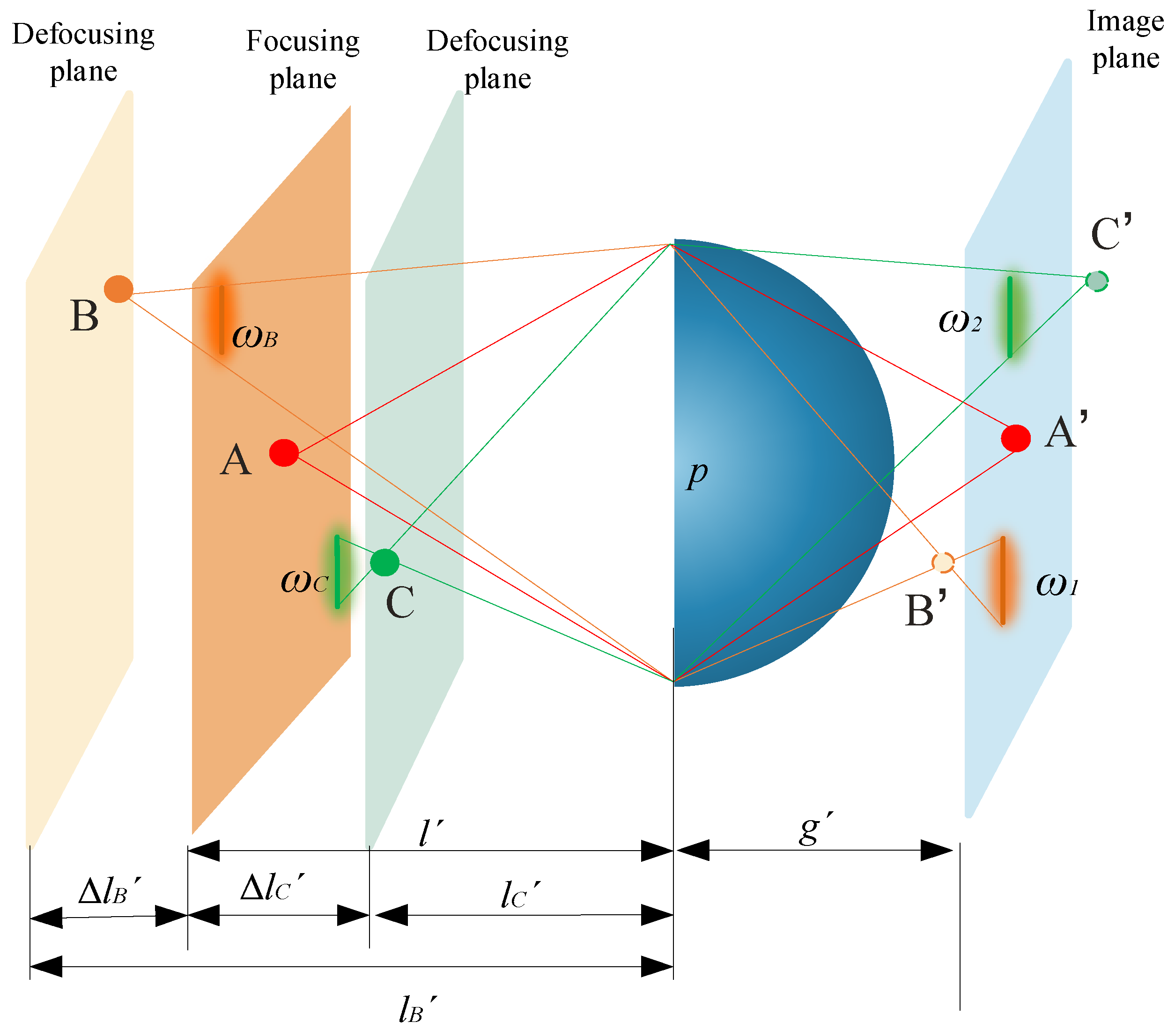

2.1. Reproduction DOF Model

2.2. Target Acquisition DOF Model

3. Research of II System Based on the Human Visual Distance (HVD) Perception Model

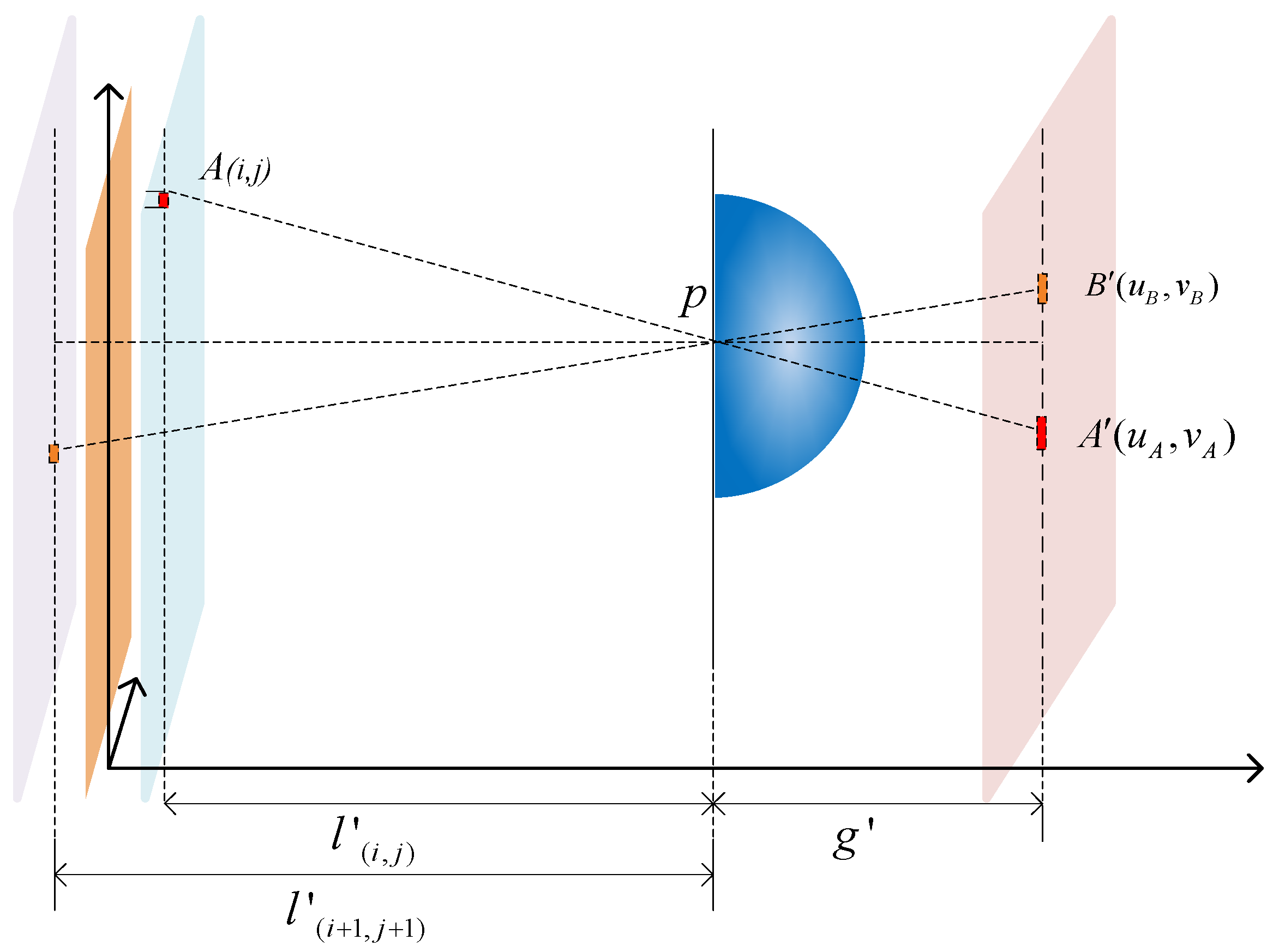

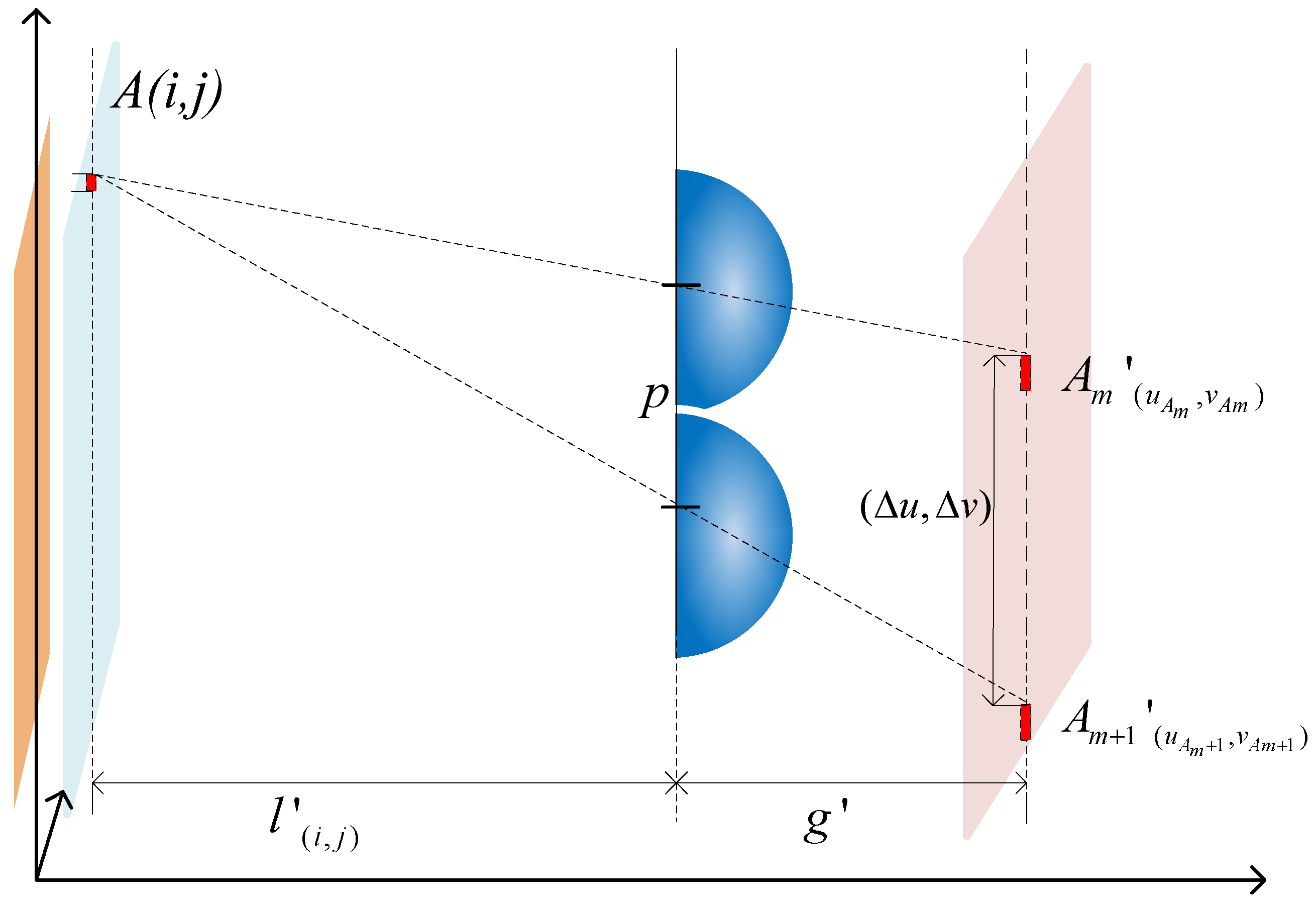

3.1. Research on Generating EIA Based on the HVD Perception

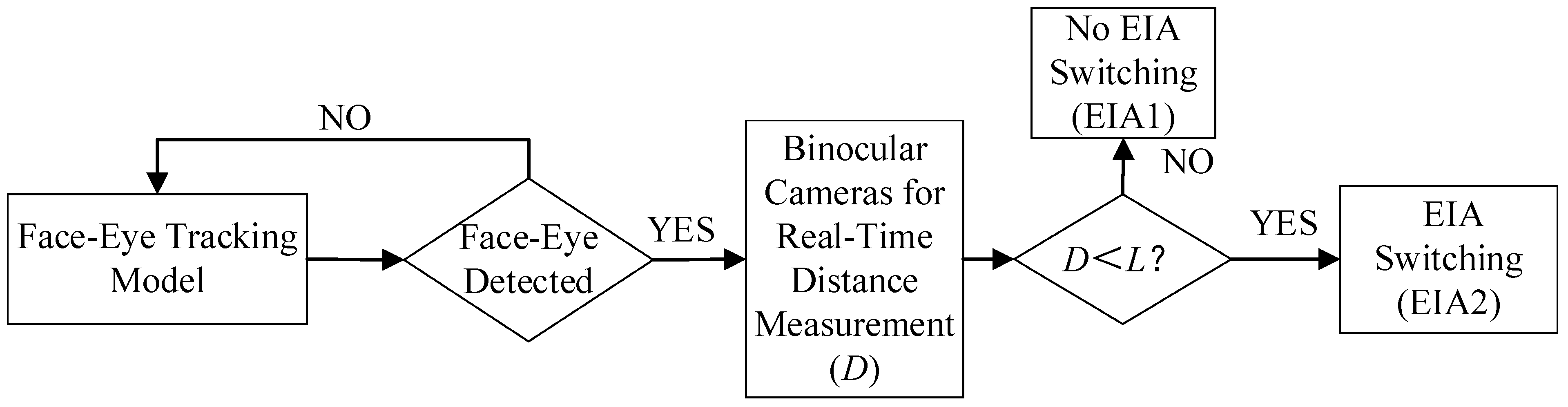

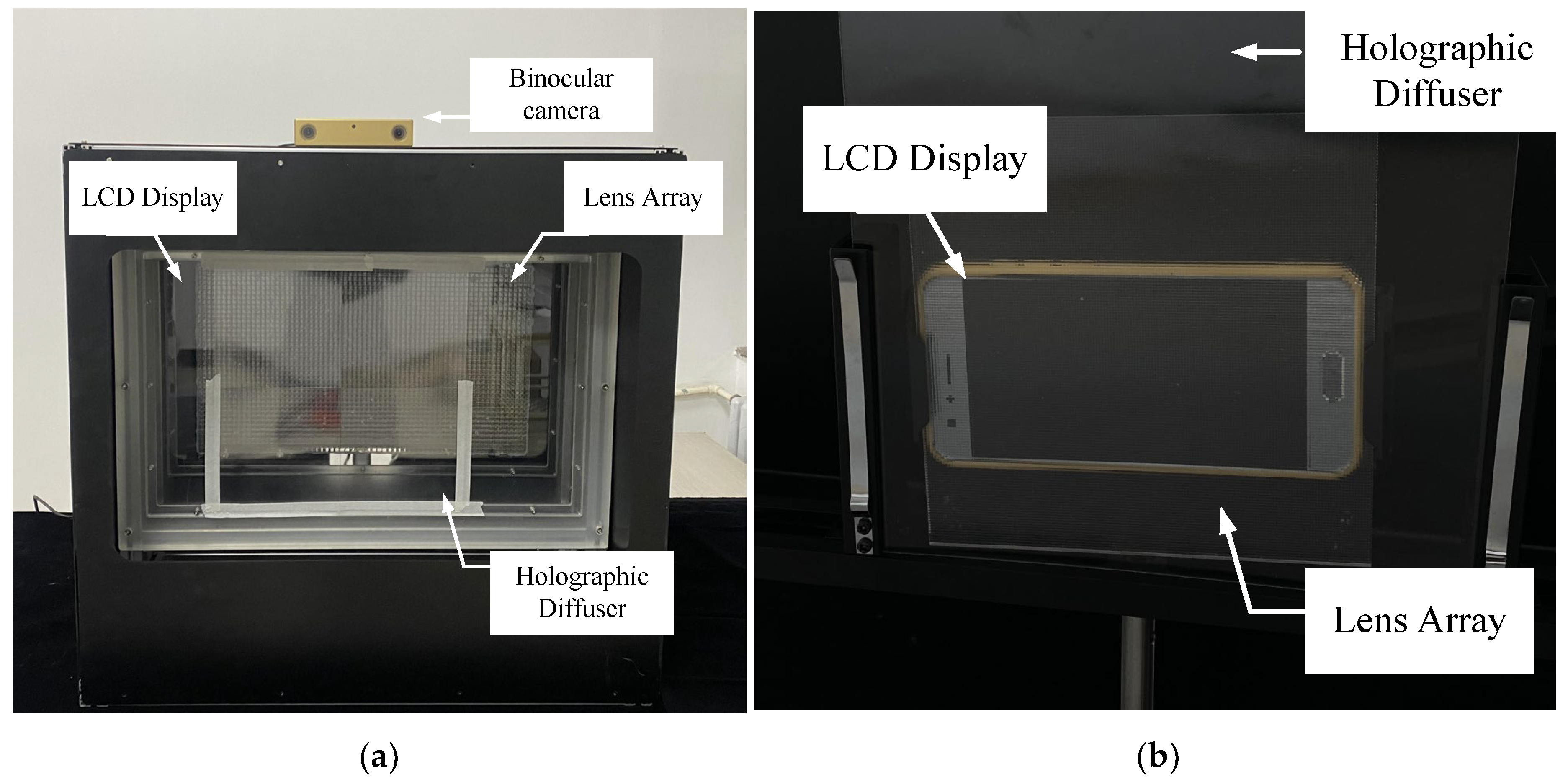

3.2. Design of Interactive II Display System

4. Experimental Results

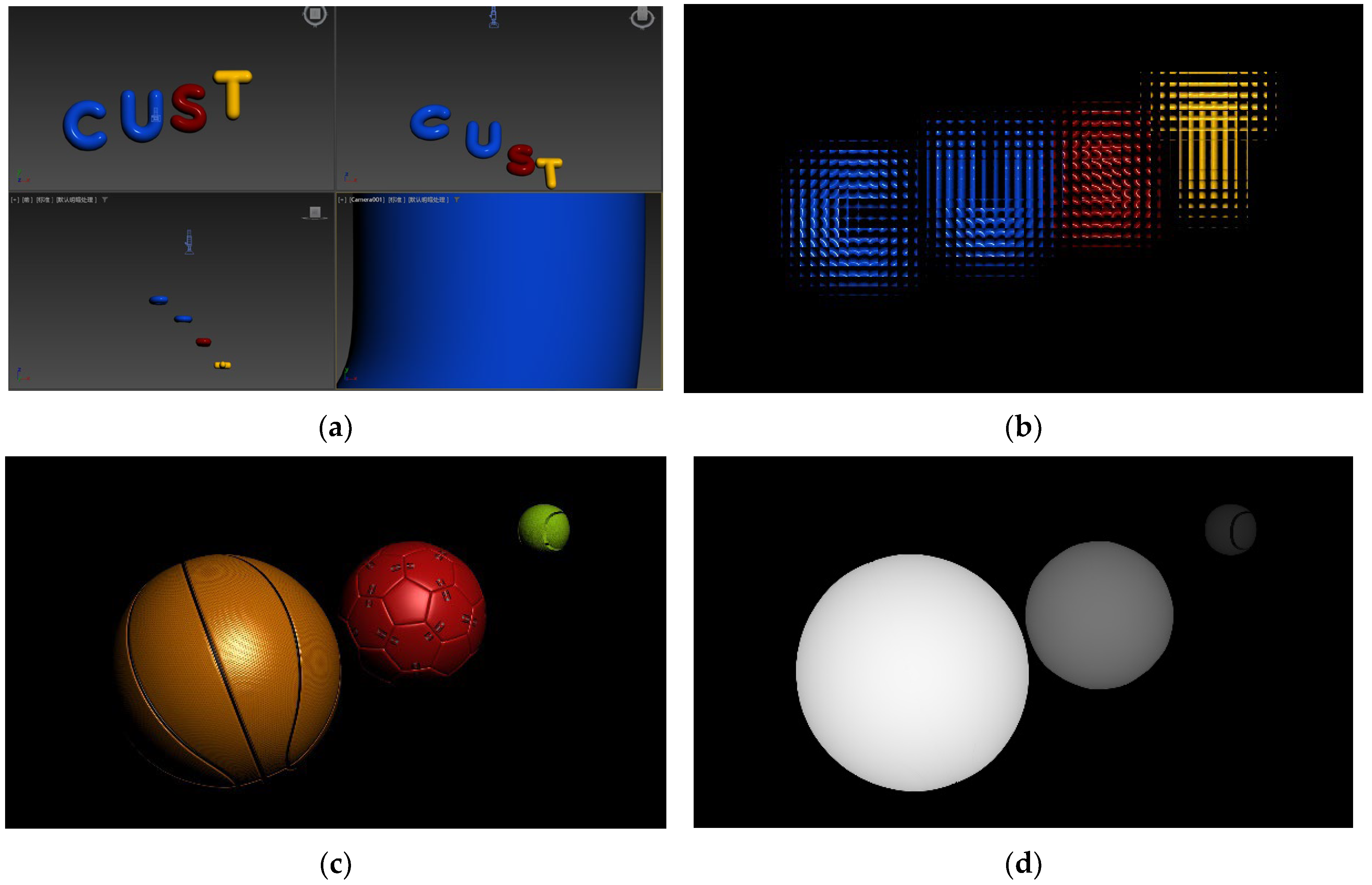

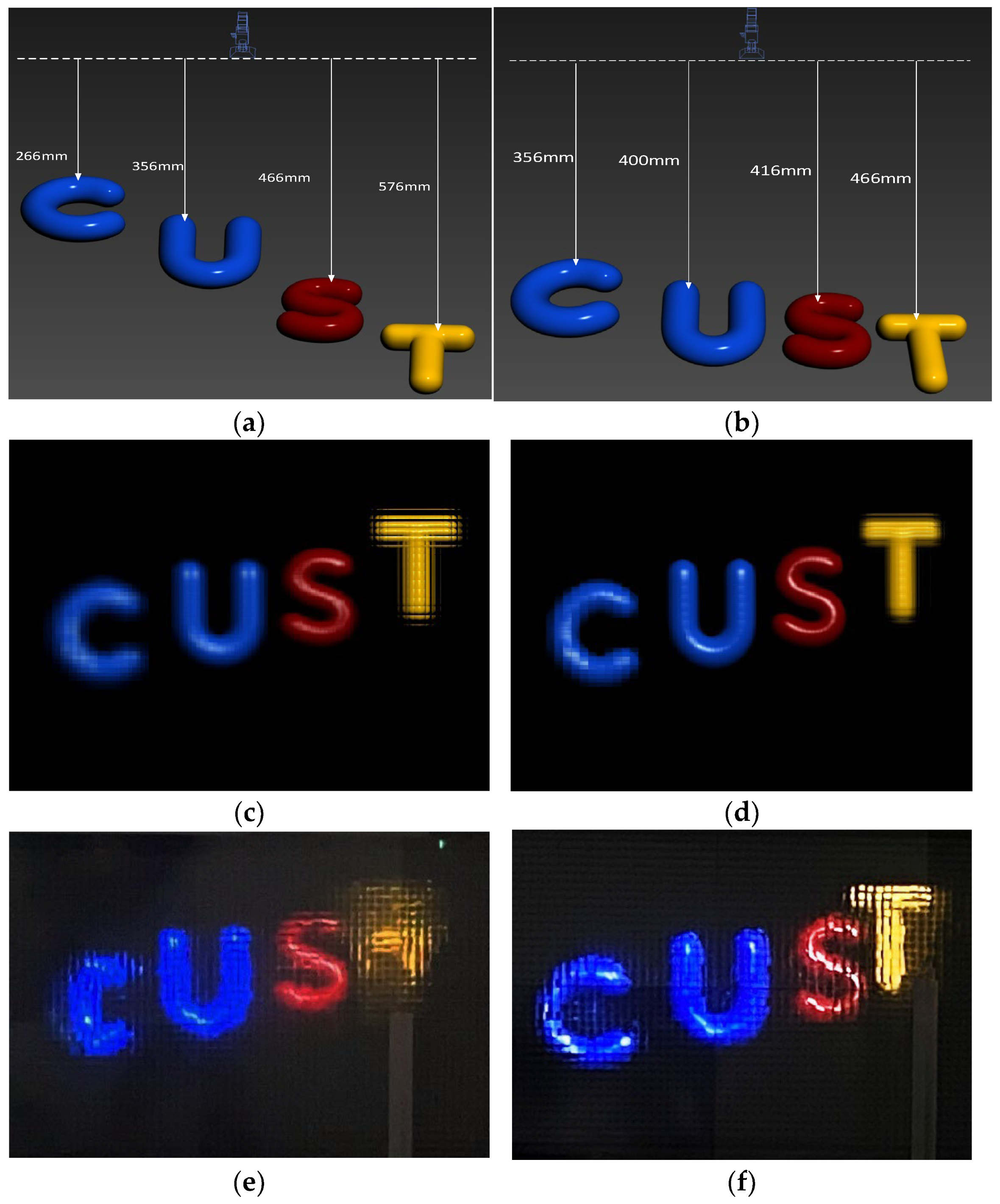

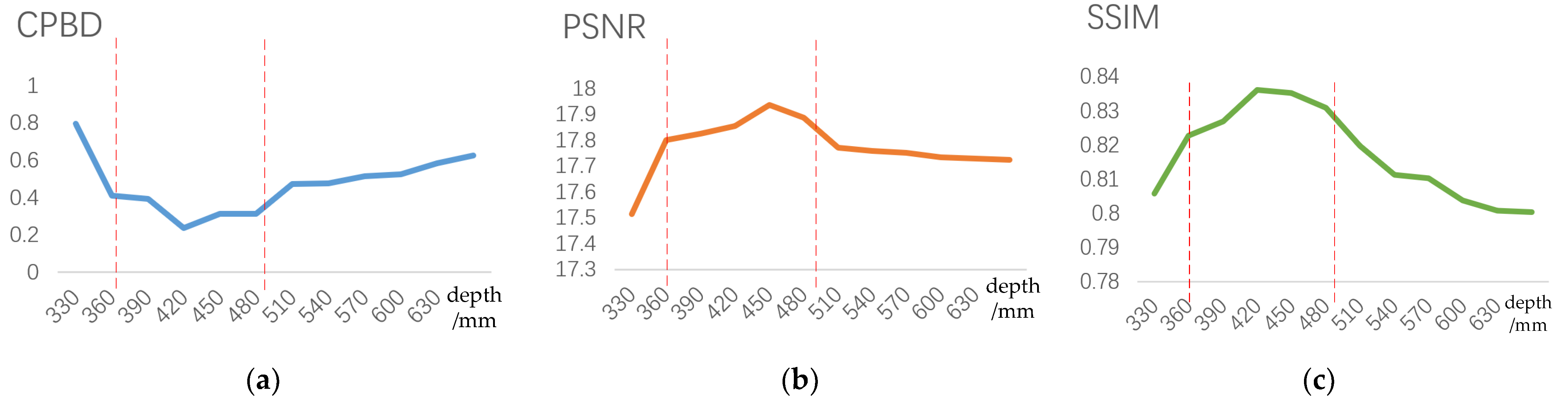

4.1. Experimental Validation of the II Overall DOF Model Based on HVS

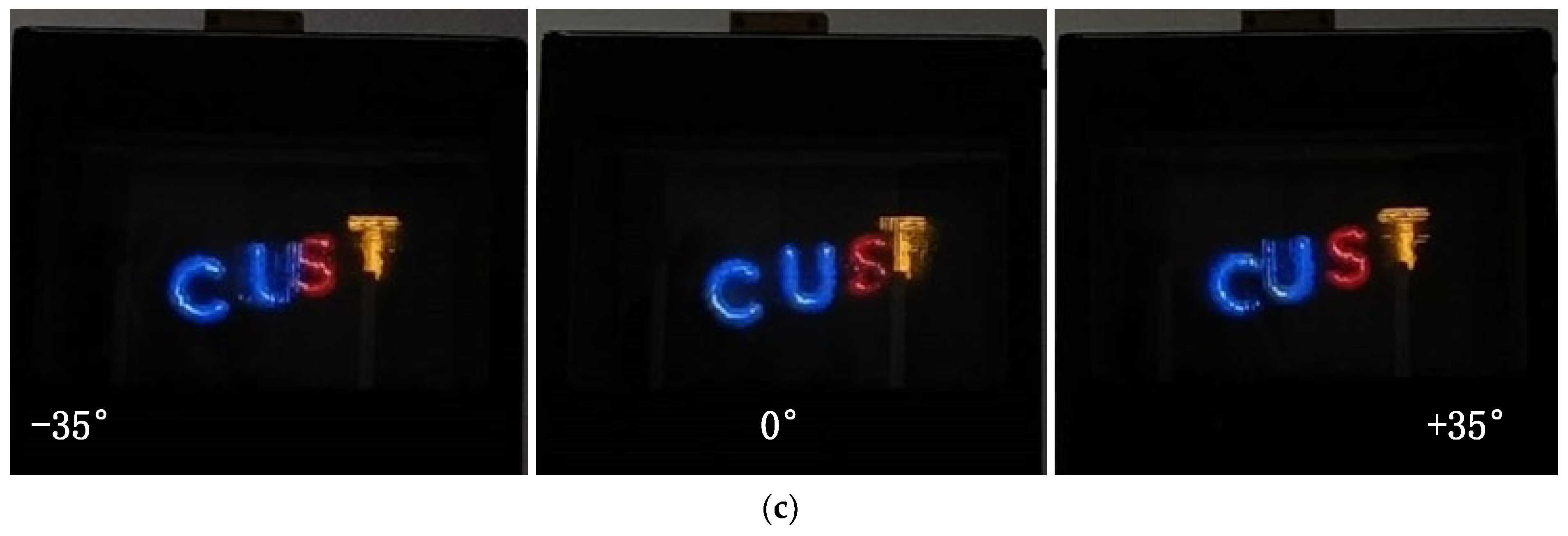

4.2. Verification Experiment of the HVD Perception Model

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Son, J.Y.; Lee, H.; Lee, B.R.; Lee, K.H. Holographic and light-field imaging as future 3-D displays. Proc. IEEE 2017, 105, 789–804.

2. Luo, C.G.; Wang, Q.H.; Deng, H.; Gong, X.X.; Li, L.; Wang, F.N. Depth calculation method of integral imaging based on Gaussian beam distribution model. J. Disp. Technol. 2012, 8, 112–116.

3. Deng, H.; Wang, Q.H.; Li, L.; Li, D.H. An integral-imaging three-dimensional display with wide viewing angle. J. Soc. Inf. Disp. 2011, 19, 679–684.

4. Algorri, J.F.; Urruchi, V.; Bennis, N.; Morawiak, P.; Sánchez-Pena, J.M.; Otón, J.M. Integral imaging capture system with tunable field of view based on liquid crystal microlenses. IEEE Photonics Technol. Lett. 2016, 28, 1854–1857.

5. Shen, X.; Javidi, B. Large depth of focus dynamic micro integral imaging for optical see-through augmented reality display using a focus-tunable lens. Appl. Opt. 2018, 57, B184–B189.

6. Guo, M.; Si, Y.; Lyu, Y.; Wang, S.; Jin, F. Elemental image array generation based on discrete viewpoint pickup and window interception in integral imaging. Appl. Opt. 2015, 54, 876–884.

7. Yang, S.; Sang, X.; Gao, X.; Yu, X.; Yan, B.; Yuan, J.; Wang, K. Influences of the pickup process on the depth of field of integral imaging display. Opt. Commun. 2017, 386, 22–26.

8. Jiao, X.X.; Zhang, L.; Sun, Y.; Zhou, L.Q.; Zhao, X. Three-dimensional reconstruction for a large scene of integral imaging based on color-position characteristics. Optoelectron. Lett. 2015, 11, 268–272.

9. Yang, C.; Wang, J.; Stern, A.; Gao, S.; Gurev, V.; Javidi, B. Three-dimensional super resolution reconstruction by integral imaging. J. Disp. Technol. 2015, 11, 947–952.

10. Kim, C.J.; Chang, M.; Lee, M.; Kim, J.; Won, Y.H. Depth plane adaptive integral imaging using a varifocal liquid lens array. Appl. Opt. 2015, 54, 2565–2571.

11. Lee, J.; Cho, M. Three-dimensional integral imaging with enhanced lateral and longitudinal resolutions using multiple pickup positions. Sensors 2022, 22, 9199.

12. Erdenebat, M.U.; Amgalan, T.; Khuderchuluun, A.; Nam, O.S.; Jeon, S.H.; Kwon, K.C.; Kim, N. Comprehensive high-quality three-dimensional display system based on a simplified light-field image acquisition method and a full-connected deep neural network. Sensors 2023, 23, 6245.

13. Piao, Y.; Zhang, M.; Wang, X.; Li, P. Extended depth of field integral imaging using multi-focus fusion. Opt. Commun. 2018, 411, 8–14.

14. Xing, Y.; Wang, Q.H.; Ren, H.; Luo, L.; Deng, H.; Li, D.H. Optical arbitrary-depth refocusing for large-depth scene in integral imaging display based on reprojected parallax image. Opt. Commun. 2019, 433, 209–214.

15. Peng, Y.; Zhou, X.; Zhang, Y.; Guo, T. Fabrication of a micro-lens array for improving depth-of-field of integral imaging 3D display. Appl. Opt. 2020, 59, 9104–9107.

16. Yun, H.; Llavador, A.; Saavedra, G.; Cho, M. Three-dimensional imaging system with both improved lateral resolution and depth of field considering non-uniform system parameters. Appl. Opt. 2018, 57, 9423–9431.

17. Ma, S.; Lou, Y.; Hu, J.; Wu, F. Enhancing integral imaging performance using time-multiplexed convergent backlight. Appl. Opt. 2020, 59, 3165–3173.

18. Hong, J.; Kim, Y.; Choi, H.J.; Hahn, J.; Park, J.H.; Kim, H.; Lee, B. Three-dimensional display technologies of recent interest: Principles, status, and issues. Appl. Opt. 2011, 50, H87–H115.

19. Martínez-Cuenca, R.; Saavedra, G.; Pons, A.; Javidi, B.; Martínez-Corral, M. Facet braiding: A fundamental problem in integral imaging. Opt. Lett. 2007, 32, 1078–1080.

20. Navarro, H.; Martínez-Cuenca, R.; Molina-Martín, A.; Martínez-Corral, M.; Saavedra, G.; Javidi, B. Method to remedy image degradations due to facet braiding in 3D integral-imaging monitors. J. Disp. Technol. 2010, 6, 404–411.

21. Deng, L.; Piao, Y.; Gu, Y. Naked-eye 3D display with undistorted imaging system based on human visual system. Jpn. J. Appl. Phys. 2020, 59, 092006.

22. Luo, C.G.; Xiao, X.; Martínez-Corral, M.; Chen, C.W.; Javidi, B.; Wang, Q.H. Analysis of the depth of field of integral imaging displays based on wave optics. Opt. Express 2013, 21, 31263–31273.

23. Yi, F.; Lee, J.; Moon, I. Simultaneous reconstruction of multiple depth images without off-focus points in integral imaging using a graphics processing unit. Appl. Opt. 2014, 53, 2777–2786.

24. Frintrop, S.; Rome, E.; Christensen, H.I. Computational visual attention systems and their cognitive foundations: A survey. ACM Trans. Appl. Percept. 2010, 7, 1–39.

25. Qi, D.; Tan, W.; Yao, Q.; Liu, J. YOLO5Face: Why reinventing a face detector. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022.

26. Cho, M.; Javidi, B. Computational reconstruction of three-dimensional integral imaging by rearrangement of elemental image pixels. J. Disp. Technol. 2009, 5, 61–65.

27. Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

28. Li, G.; Kwon, K.C.; Shin, G.H.; Jeong, J.S.; Yoo, K.H.; Kim, N. Simplified integral imaging pickup method for real objects using a depth camera. J. Opt. Soc. Korea 2012, 16, 381–385.

29. Gu, Y.J.N.; Piao, Y. Elemental image generation algorithm using reverse iteration along optical path. J. Russ. Laser Res. 2019, 40, 559–570.

30. Zhang, J.; Piao, Y.; Deng, L.J.; Wang, Q. Integral imaging reconstruction system based on the human eye viewing mechanism. Opt. Express 2023, 31, 9981–9995.

| Parameters | Optical Experimental Platform 1 | Optical Experimental Platform 2 |
|---|---|---|
| Lens focal length (f) | 36.76 mm | 3.00 mm |
| Lens diameter (p) | 8.50 mm | 1.00 mm |
| Lens array (m × n) | 70 × 39 | 112 × 63 |
| Distance between lens and display (g) | 40.44 mm | 3.60 mm |
| Distance between lens and CDP (D) | 403.96 mm | 18.00 mm |
| EIA resolution (pixels) | 3818 × 2127 | 1904 × 1071 |
| Resolution of display (pixels) | 3840 × 2160 | 1920 × 1080 |
| Pixel size of display (PD) | 0.1558 mm | 0.0588 mm |
| Optimal viewing distance (Lbest) | 3.89 m | 0.92 m |
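
The platform parameters above appear to be mutually consistent under two simple relations, neither of which is stated in the table itself, so both are assumptions here: the thin-lens imaging relation 1/g + 1/D = 1/f, and an EIA resolution equal to the lens-array extent divided by the display pixel size, rounded down. The following minimal Python sketch checks the tabulated values against those assumed relations; the function and dictionary names are hypothetical.

```python
import math

# Values copied from the platform parameter table (lengths in mm).
PLATFORMS = {
    "Platform 1": dict(f=36.76, p=8.50, m=70, n=39, g=40.44, pd=0.1558),
    "Platform 2": dict(f=3.00, p=1.00, m=112, n=63, g=3.60, pd=0.0588),
}

def cdp_distance(f, g):
    """Lens-to-CDP distance D from the ASSUMED thin-lens relation 1/g + 1/D = 1/f."""
    if g <= f:
        raise ValueError("a real image plane requires g > f")
    return f * g / (g - f)

def eia_resolution(m, n, p, pd):
    """EIA size in pixels, ASSUMING lens-array extent / pixel size, rounded down."""
    return math.floor(m * p / pd), math.floor(n * p / pd)

for name, v in PLATFORMS.items():
    d = cdp_distance(v["f"], v["g"])
    width, height = eia_resolution(v["m"], v["n"], v["p"], v["pd"])
    print(f"{name}: D = {d:.2f} mm, EIA = {width} x {height} px")
```

Under these assumptions the computed D and EIA resolution agree with the tabulated entries for both platforms, which suggests the display gap g was chosen to place the CDP at the listed distance.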

| Parameters | Optical Experimental Platform 1 | Optical Experimental Platform 2 |
|---|---|---|
| Focal Plane (l′) | 403.96 mm | 18.00 mm |
| Captured Depth Range (Δl′) | 109.54 mm | 11.30 mm |
| Captured Distance Range (l′(i,j)) | 356.48–466.02 mm | 13.70–25.00 mm |
| Real Depth Range Related to Captured Distance (Z(i,j)) | 361.01–471.95 mm | 14.20–25.47 mm |

| Methods | CPBD | PSNR | SSIM |
|---|---|---|---|
| RODC algorithm [28] | 0.7904 | 19.79 | 0.2313 |
| RIOP algorithm [29] | 0.6700 | 20.32 | 0.6186 |
| LFR algorithm [30] | 0.4619 | 29.93 | 0.7726 |
| Our method | 0.3873 | 31.12 | 0.8656 |
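
For readers unfamiliar with the PSNR column, the sketch below shows the standard peak signal-to-noise ratio definition in plain Python. The table does not state the peak value, color handling, or reference images used for the reported figures, so the 8-bit peak of 255 and the nested-list image format here are assumptions, and the function name is hypothetical.

```python
import math

def psnr(reference, test, peak=255.0):
    """Standard PSNR in dB between two equally sized grayscale images.

    Images are nested lists of pixel values; peak=255 ASSUMES 8-bit data.
    """
    ref = [v for row in reference for v in row]
    tst = [v for row in test for v in row]
    if len(ref) != len(tst):
        raise ValueError("images must have the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(ref, tst)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)

# Toy example: a small perturbation scores higher than a large one.
original = [[100, 100], [100, 100]]
print(psnr(original, [[101, 99], [100, 100]]))
print(psnr(original, [[110, 90], [100, 100]]))
```

Higher PSNR means a smaller mean squared error relative to the reference image.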

| Parameters | Optical Experimental Platform 1 |
|---|---|
| Horizontal distance offset of the 3D object from the center O of the micro-lens array (u) | (−25 mm, +25 mm) |
| Vertical distance offset of the 3D object from the center O of the micro-lens array (v) | (−16.6 mm, +16.66 mm) |
| Visual perception range (L) | L ≥ 2.74 m |
| The threshold of visual line resolution of the human eye () | 4.18 × 10⁻³ mm⁻¹ |
| Collection distance range when L ≥ 2.74 m (l1′(i,j)) | 356.48–466.02 mm |
| Adjustment range of collection distance when L < 2.74 m (l″(i,j)) | −0.91 to −1.10 mm |
| Collection distance range when L < 2.74 m (l2′(i,j)) | 355.57–464.92 mm |
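
The last three rows of the table above are related by simple arithmetic: adding the adjustment l″ to the collection range l1′ gives the range l2′ used when the viewer is closer than 2.74 m. The Python sketch below reproduces that arithmetic. Pairing the −0.91 mm adjustment with the near limit and −1.10 mm with the far limit is an assumption read off the tabulated numbers, and the function names are hypothetical.

```python
# Values copied from the table (collection distances in mm, viewing distance in m).
L_THRESHOLD_M = 2.74
L1_RANGE_MM = (356.48, 466.02)     # collection range when L >= 2.74 m
ADJUSTMENT_MM = (-0.91, -1.10)     # ASSUMED pairing: (near limit, far limit)

def adjusted_range(base, delta):
    """Shift each limit of the collection range by its adjustment."""
    return tuple(round(b + d, 2) for b, d in zip(base, delta))

def collection_range(viewing_distance_m):
    """Pick the collection range for a given viewing distance L."""
    if viewing_distance_m >= L_THRESHOLD_M:
        return L1_RANGE_MM
    return adjusted_range(L1_RANGE_MM, ADJUSTMENT_MM)

print(collection_range(3.0))  # unadjusted range
print(collection_range(2.0))  # adjusted range for a closer viewer
```

With these inputs the adjusted limits match the tabulated 355.57–464.92 mm row.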

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Deng, L.; Li, Z.; Gu, Y.; Wang, Q. Integral Imaging Display System Based on Human Visual Distance Perception Model. Sensors 2023, 23, 9011. https://doi.org/10.3390/s23219011
