1. Introduction

The implementation guidelines for Articles 9 and 10 of the WHO Framework Convention on Tobacco Control (FCTC) require manufacturers and importers of tobacco products to disclose the contents of their products to government authorities, including the types of tobacco shred used and the blending ratio of each type. Tobacco manufacturers must also have equipment and methods for detecting and measuring tobacco shred components [1,2]. According to the National Bureau of Statistics, China's cumulative cigarette production in 2021 reached 2418.24 billion cigarettes, an increase of 1.3%. By May 2022, China's cigarette production stood at 188.25 billion, up 10.4% year-on-year. At present, building tobacco information technology is an important path to industry transformation and industrial upgrading [3]. The relative proportions of the tobacco shred types (tobacco silk, cut stem, expanded tobacco silk, and reconstituted tobacco shred) affect the smoke characteristics, physical indicators, and sensory quality of cigarettes [4,5]. Therefore, efficient and accurate determination of the tobacco shred types and the unbroken tobacco shred rate is essential for intelligent information reform, process quality assurance, and production consistency in the tobacco industry.

Machine vision and deep learning methods have been studied in depth for tobacco component detection. Niu et al. [2] constructed a four-type single tobacco shred dataset by photographing single tobacco shreds and applying a threshold preprocessing algorithm. They improved the Resnet model by changing the block parameters and adding multi-scale fusion and a focal loss function, reaching a classification accuracy of 96.56%. Zhong et al. [6] photographed single tobacco shreds of four types (tobacco silk, cut stem, expanded tobacco silk, and reconstituted tobacco shred) to construct a dataset and improved the Resnet model with weight loading to classify the four types; both the accuracy and the recall rate of the model exceeded 96%. Niu et al. [7] constructed a single tobacco shred dataset by photographing single tobacco shreds of each of the four types and applying an image preprocessing algorithm. They improved the VGG model by adding a residual module and replacing the fully connected layer with a global pooling layer; the improved model reduced the number of parameters by 96.5% and achieved an accuracy of 95.5%. Liu et al. [8] also constructed a single tobacco shred dataset by photographing four types of tobacco shreds and proposed an improved Resnet model with an efficient channel attention mechanism, multi-scale fusion, and an optimized activation function; the final classification accuracy was 97.23%. Wang et al. [9] constructed a single-target, 24-type overlapped tobacco shred dataset by photographing randomly overlapped tobacco shreds of two types. They improved the Mask-RCNN network with DenseNet121, U-FPN, and optimized anchor parameters, and proposed the COT algorithm to calculate the overlapped area of tobacco shreds. The final segmentation accuracy and recall rate were 89.1% and 73.2%, respectively, and the average area detection rate was 90%.

The studies above addressed either the identification of non-overlapped tobacco shreds of different types or the identification of overlapped tobacco shreds of different types, in both cases with only a single target per tobacco shred image. It is therefore difficult to apply their methods and conclusions to real cigarette quality inspection in the field. In the actual cigarette quality inspection of a tobacco factory, tobacco shred images taken online contain complex tobacco shred forms within a single image, including both non-overlapped and overlapped tobacco shreds. Not only must the different types of these blended tobacco shreds be identified, but the component ratio of each type, the unbroken tobacco shred rate, and other index parameters must also be calculated in real time to judge cigarette quality. Moreover, the speed and accuracy requirements are extremely high, making it a major challenge to complete all of these tasks efficiently and accurately. At present, few studies address rapid multi-object detection of blended tobacco shreds for practical field application.

With the development of deep learning algorithms, the single-stage YOLO detection algorithm has been widely used in agriculture [10] and other fields [11,12,13,14] to meet the demand for fast detection of multiple targets in a single image. Chen et al. [15] proposed an improved YOLOv7 model for fast citrus detection in unmanned citrus picking. The model achieved multi-scale feature fusion and a lighter weight by adding a small object detection layer, GhostConv, and the CBAM attention module; the final average accuracy reached 97.29% with a prediction time of 69.38 ms. Sun et al. [16] proposed an improved YOLOv5 model for automatic pear picking when fruits are against a disordered background or shaded by other objects. The CBS modules of the backbone and the fourth stage's CBS were replaced with the shuffle block and the inverted shuffle block, respectively; the final average detection precision was 97.6%, and the model parameters were compressed by 59.4%. He et al. [17] proposed a yield estimation method based on the number of pods for soybean plants bearing multiple pods. The YOLOv5 model was improved by embedding a CA attention mechanism and modifying the bounding box regression loss function, and the actual pod weight was predicted with a pod weight estimation algorithm; the average accuracy reached 91.7%. Lai et al. [18] proposed an improved YOLOv7 model to identify pineapples of different maturity levels in complex field environments. The model added SimAM to improve feature extraction, improved MPConv to reduce feature loss, and replaced NMS with soft-NMS; the accuracy and recall rate were 95.82% and 89.83%, respectively. Cai et al. [19] proposed an improved YOLOv7 model for detecting banana pseudostems under complex growth conditions. A focal loss function was adopted to address category imbalance and hard-to-classify samples, and Mixup data augmentation was used to expand the dataset and further improve accuracy; the accuracy and prediction time were 81.45% and 8 ms, respectively. Based on an improved YOLOv8 model, Li et al. [20] achieved efficient multi-target detection under different target sizes, occlusion, and illumination conditions. The Bi-PAN-FPN idea was introduced to improve the neck of YOLOv8-s and enhance feature fusion, and the proposed aerial image detection model showed clear advantages. Lou et al. [21] proposed an improved YOLOv8 model for small-target detection in special scenarios, including a new downsampling method that better preserves contextual feature information; on all three authoritative public datasets used in the experiments, performance improved by more than 0.5%.

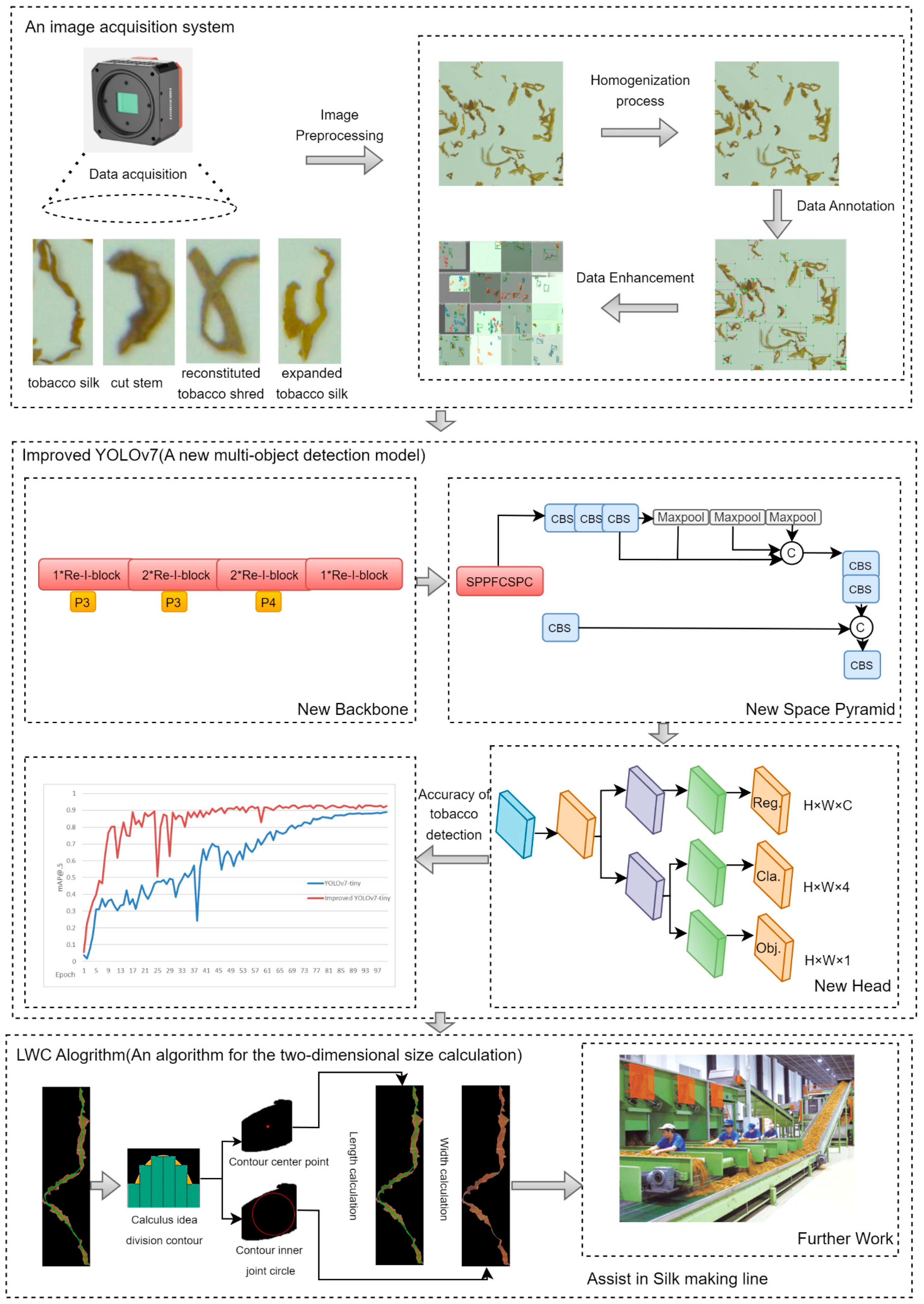

To enable rapid multi-object detection of blended tobacco shreds in practical field applications, this study proposes an overall solution that combines an improved YOLOv7 multi-object detection model with an algorithm for the two-dimensional size calculation of blended tobacco shreds, in order to identify the blended tobacco shred types and calculate the size of each shred. The focus is on identifying blended tobacco shred types, because our goal is the best method for determining tobacco shred components and the unbroken tobacco shred rate on real quality inspection lines.

For this paper, the main contributions are as follows:

- (1)

Establishing two types of original blended tobacco shred image datasets: 4000 non-overlapped tobacco shreds consisting of images captured from four tobacco shred varieties and 5300 blended tobacco shreds consisting of images captured four non-overlapped tobacco shred varieties and seven types of overlapped tobacco shreds. Dataset 1 was established as the training base model and dataset 2 was used to mimic the actual field and increase the robustness of the model.

- (2)

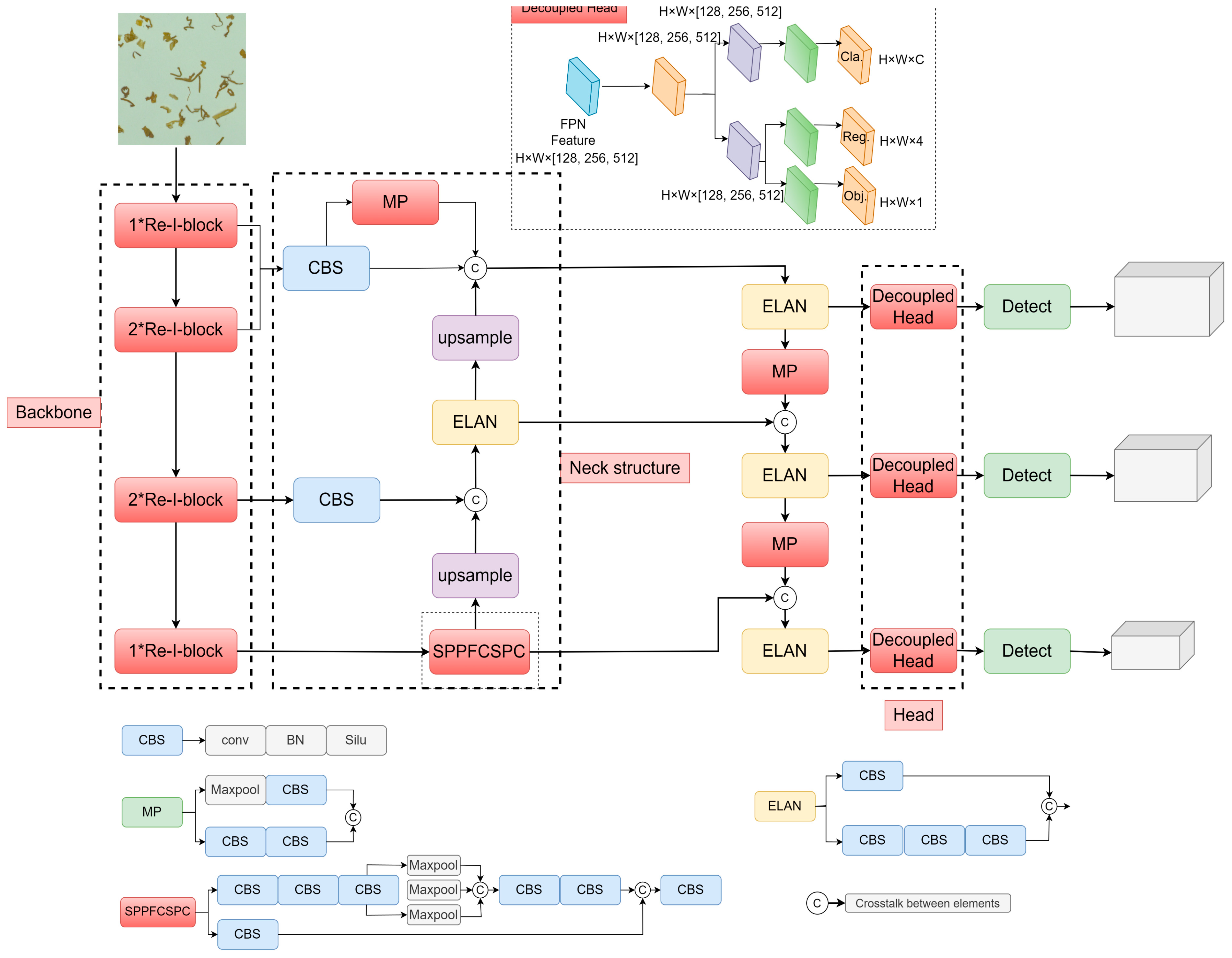

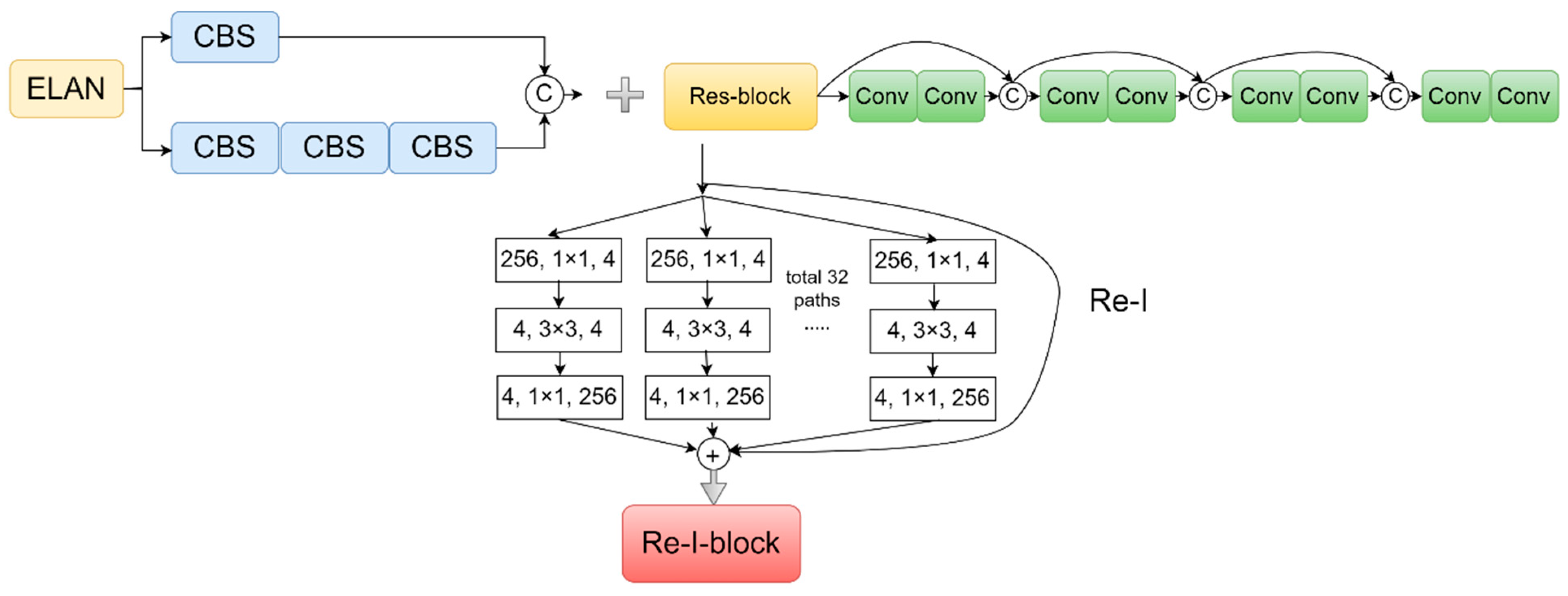

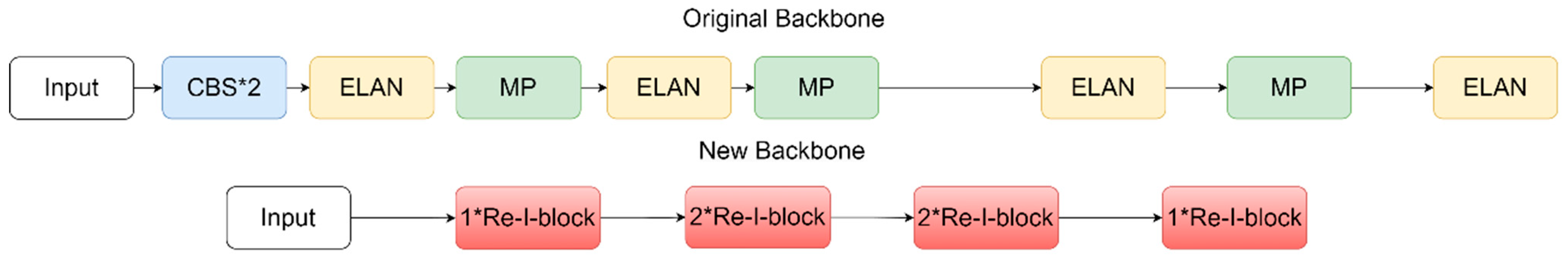

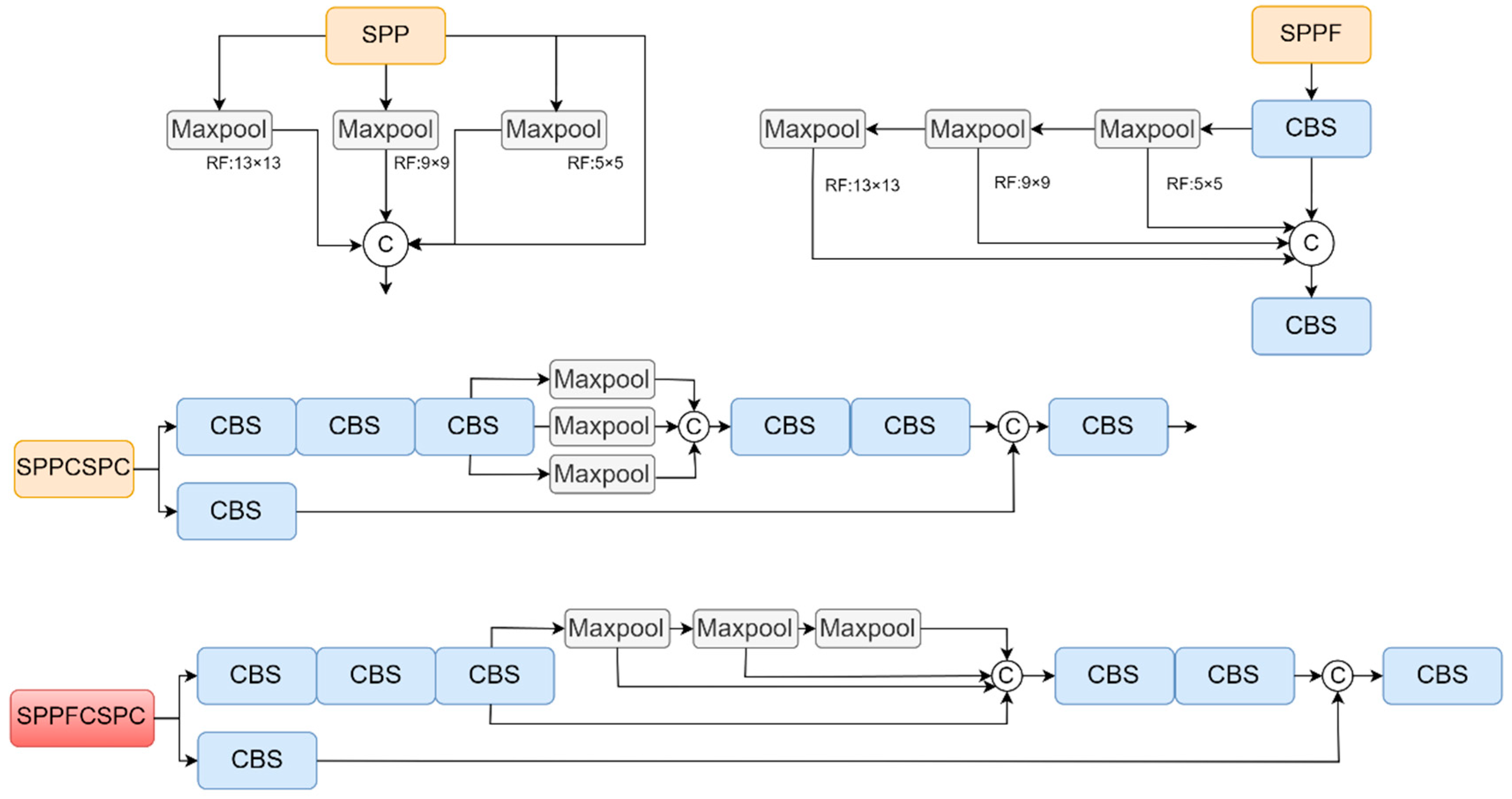

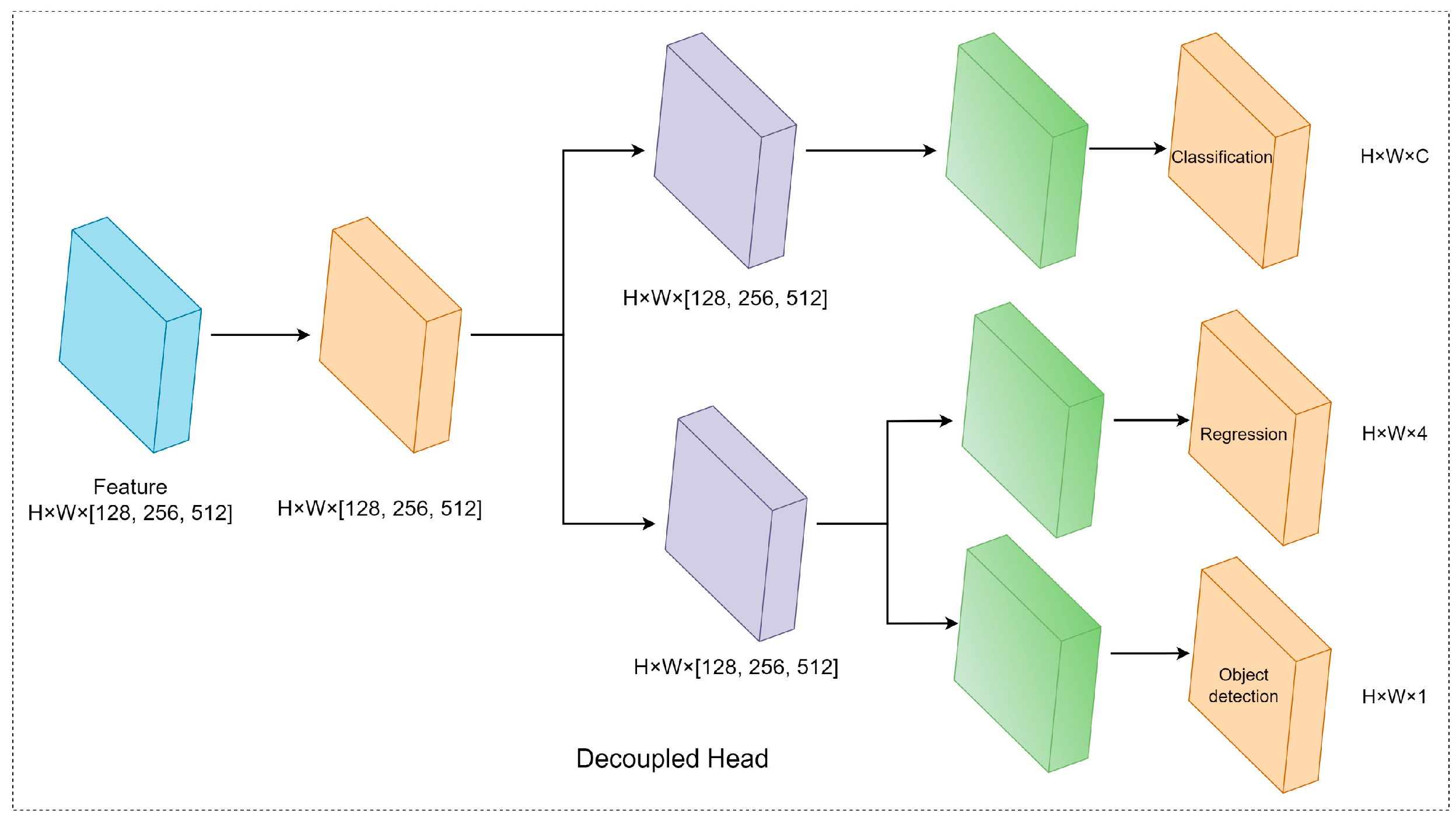

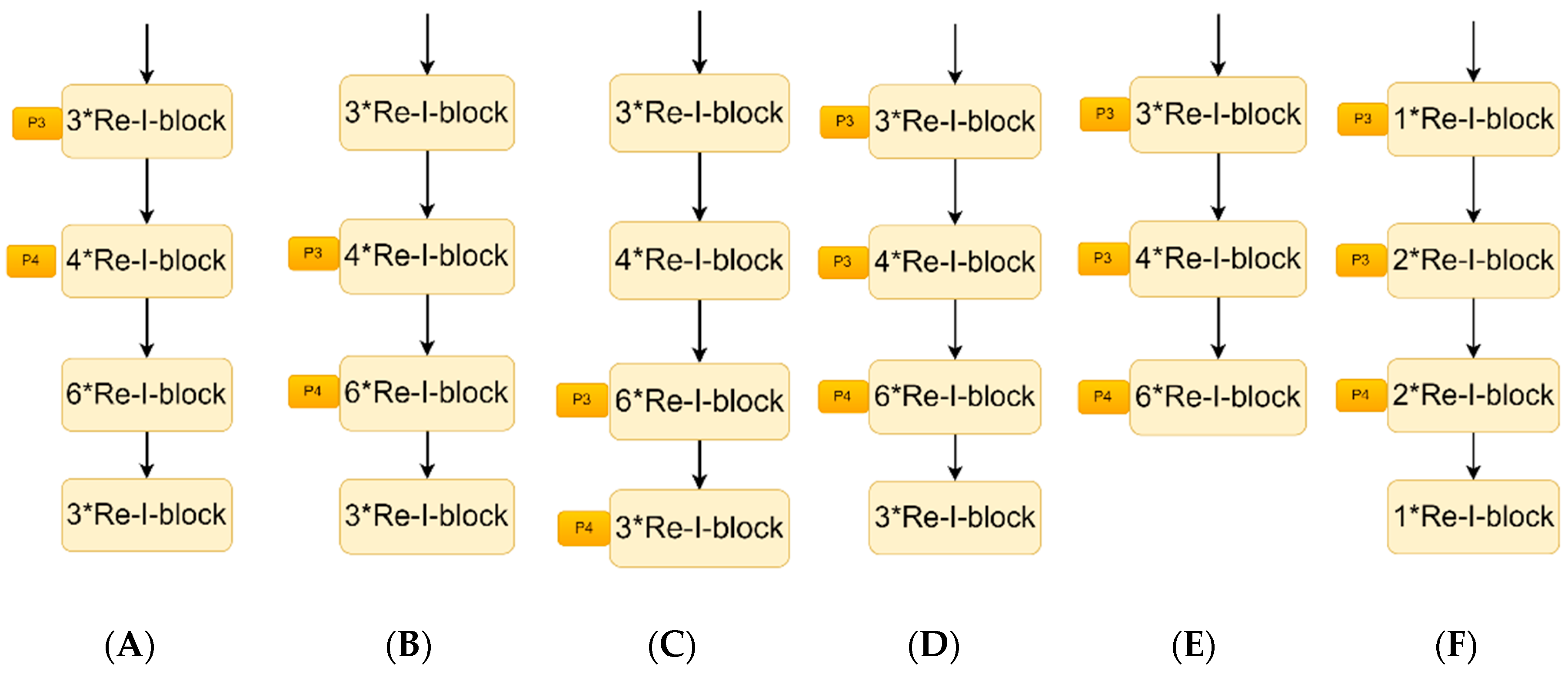

Developing an accurate YOLOv7-tiny model to achieve multi-object blended tobacco shred detection utilizing digital images. Detection models are developed and compared using Faster RCNN, RetinaNet, and SSD architectures with the chosen datasets. The performances of different tobacco shred detection methods with Resnet50, Light-VGG, MS-Resnet, Ince-Resnet, and Mask RCNN are also compared. The constructed improved YOLOv7 network (ResNetxt19, SPPFCSPC, decoupled head) demonstrated the highest detection accuracy. It provided good detection capability for blended tobacco shred images with different sizes and types, outperforming other similar detection models.

- (3)

Proposing a tobacco shred two-dimensional size calculation algorithm (LWC) to be first applied to not only single tobacco shreds, but also to overlapped tobacco shreds. This algorithm accurately detects and calculates the length and width in images of blended tobacco shreds.

- (4)

Providing a new implementation method for the identification of tobacco shred type and two-dimensional size calculation of blended tobacco shreds and a new approach for other similar muti-object image detection tasks.

2. Data Collection

The experimental tobacco shreds were blended tobacco shreds of four varieties (tobacco silk, cut stem, expanded tobacco silk, and reconstituted tobacco shred) from a single batch of one brand, collected on the blending line. Before image acquisition, the tobacco shreds were stored in a constant temperature and humidity chamber at 25 °C to reduce the effect of external factors on the image data [9].

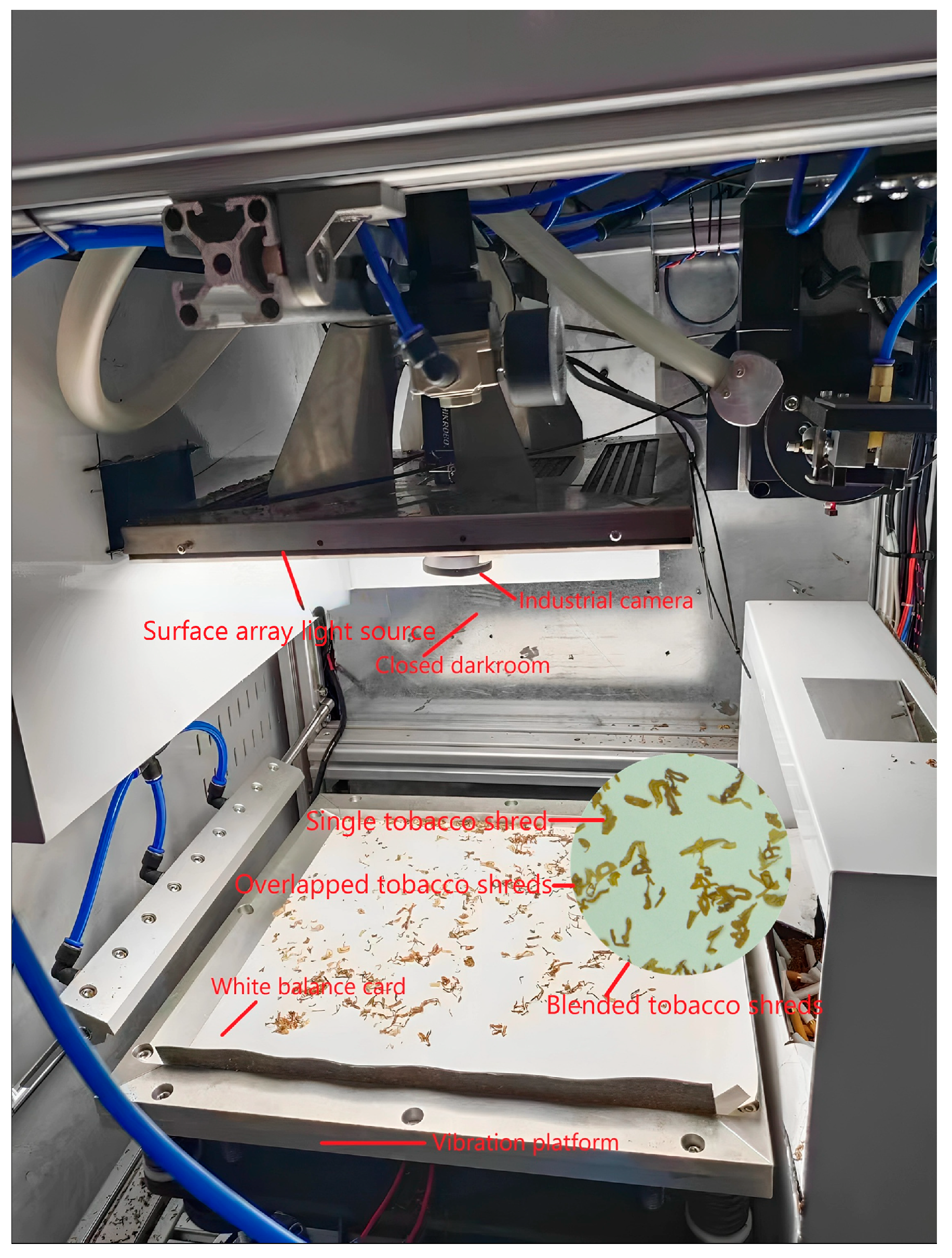

To acquire blended tobacco shred images rapidly and efficiently, an image acquisition device was designed. It consisted of an industrial camera, a surface array light source, a vibration platform, a closed darkroom, and a white balance card. The industrial camera (MV-CH250-90TC-C-NF) has a resolution of 25 megapixels and captures high-quality tobacco shred images even when collecting tobacco shreds over a large area. The surface array light source (MV-LBES-H-250-250-W) keeps the brightness of the field of view more uniform than other light sources and reduces the influence of tobacco shred shadows. A 320 mm × 320 mm laboratory vibration platform was used to spread out the tobacco shreds from a single 0.6 g cigarette and avoid poor dispersion. The white balance card was an 18-degree calibration exposure white board, which met the exposure and color balance needs of the benchmark image. The closed darkroom, built from four aluminum plates, prevented external light from affecting image acquisition. The overall blended tobacco shred image acquisition device is shown in Figure 1.

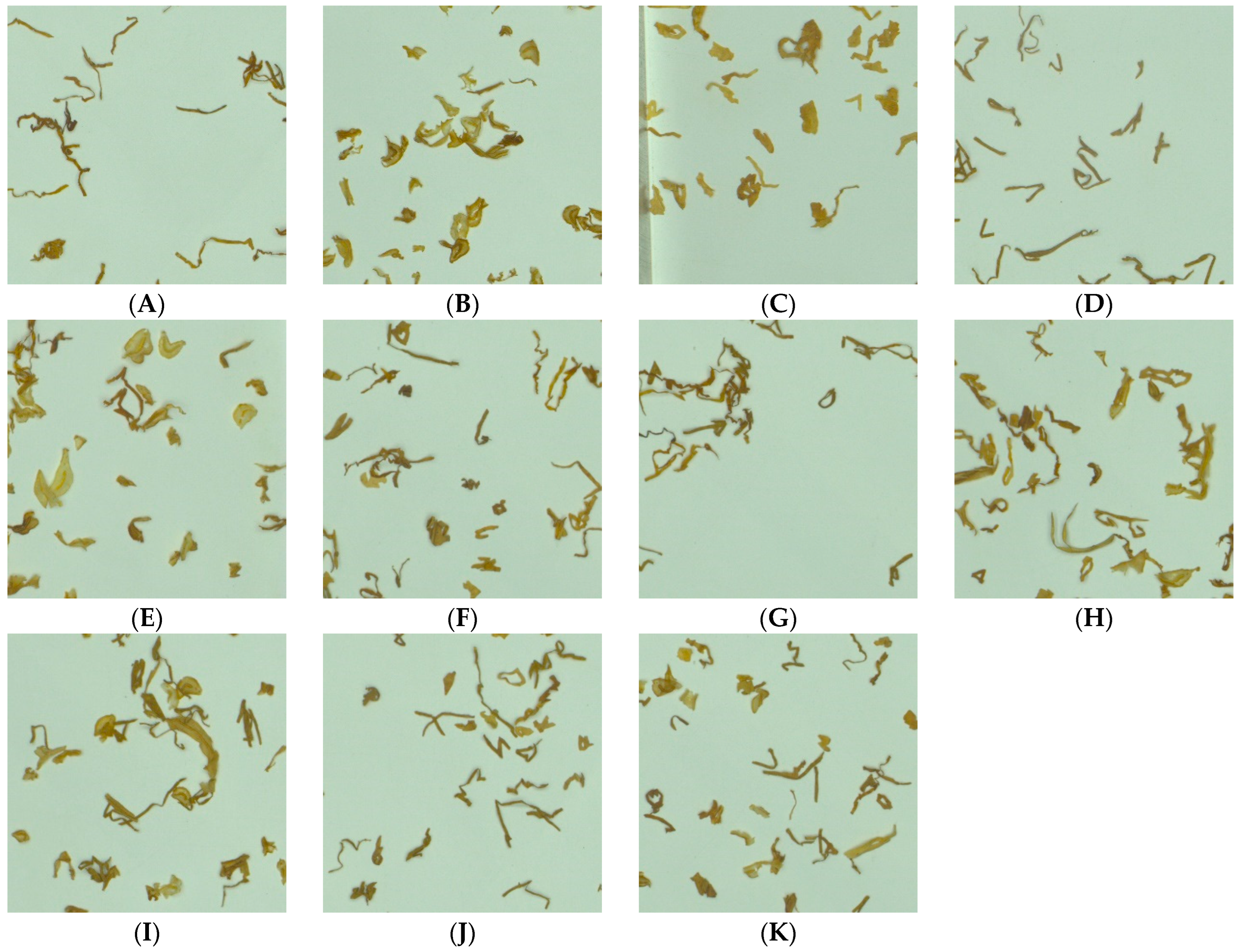

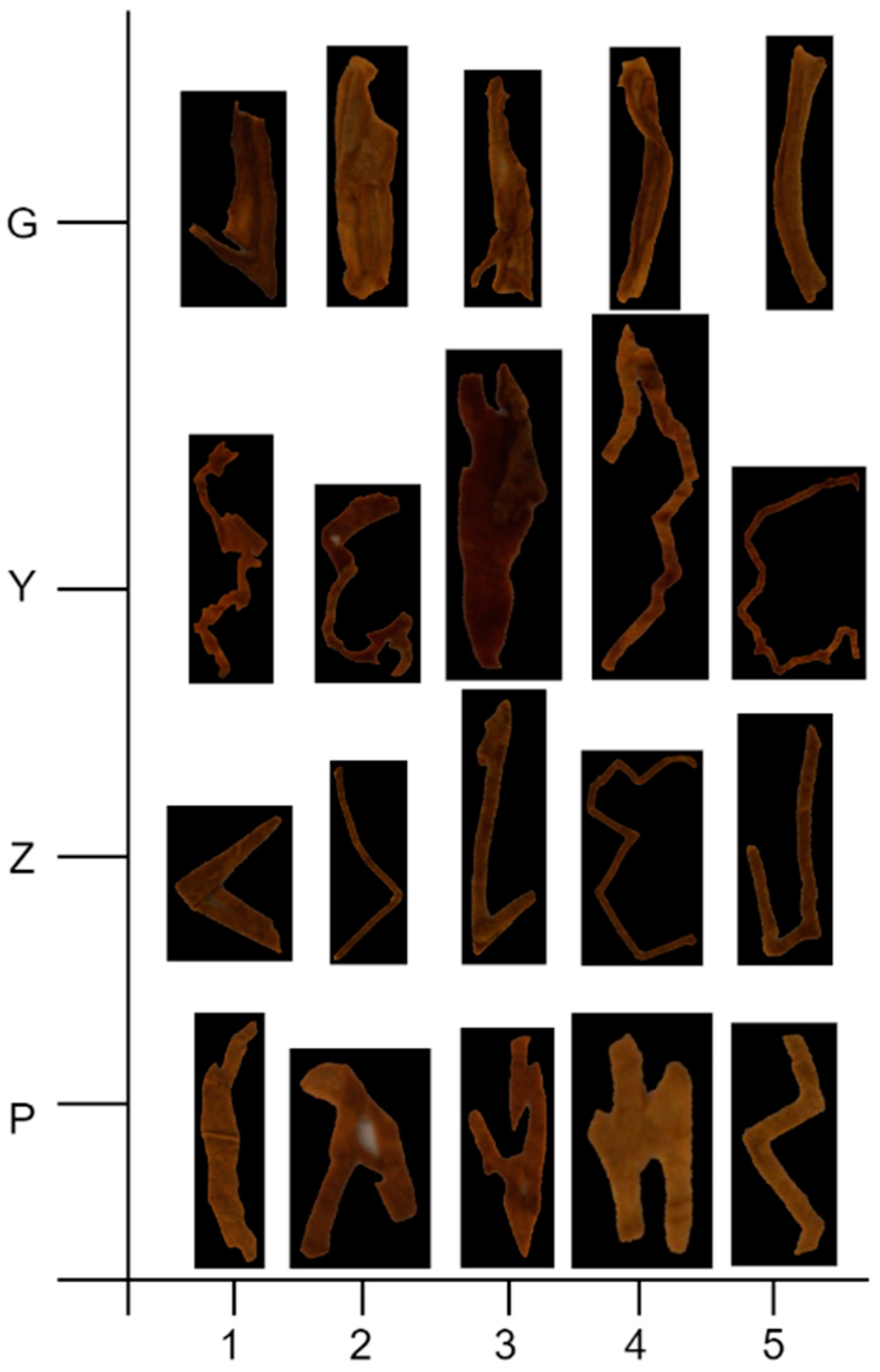

To ensure the diversity and complexity of the datasets, 11 types of tobacco shred images were captured: pure tobacco silk, pure cut stem, pure expanded tobacco silk, pure reconstituted tobacco shred, tobacco silk-cut stem, tobacco silk-expanded tobacco silk, tobacco silk-reconstituted tobacco shred, cut stem-expanded tobacco silk, cut stem-reconstituted tobacco shred, expanded tobacco silk-reconstituted tobacco shred, and tobacco silk-cut stem-expanded tobacco silk-reconstituted tobacco shred. The four pure tobacco shred types were captured to support later feature learning, the six two-variety blends were captured to increase the diversity and complexity of the datasets, and the final four-variety blend was captured to simulate the tobacco shred distribution in the real field. The dataset construction sources, quantities, and sample images of the 11 types of tobacco shred samples are shown in Table 1 and Figure 2.

Based on the different captured objects, two datasets were constructed in this study. Dataset 1 contained only the four types of pure tobacco shred images (Y, pure tobacco silk; G, pure cut stem; P, pure expanded tobacco silk; Z, pure reconstituted tobacco shred), 4000 images in total, and was used to train the baseline model. Dataset 2 contained all of the samples in dataset 1 plus seven additional types of overlapped tobacco shred samples (tobacco silk-cut stem, tobacco silk-expanded tobacco silk, tobacco silk-reconstituted tobacco shred, cut stem-expanded tobacco silk, cut stem-reconstituted tobacco shred, expanded tobacco silk-reconstituted tobacco shred, and tobacco silk-cut stem-expanded tobacco silk-reconstituted tobacco shred), 6800 samples in total, to enhance the generalization capability of the network model and to cope with overlapped tobacco shreds in real applications. Datasets 1 and 2 were each divided into training and testing sets at a ratio of 7:3: dataset 1 provided 2800 training and 1200 testing samples (see Table 2), and dataset 2 provided 4760 training and 2040 testing samples (see Table 3). In Table 3, blended Y, blended G, blended P, and blended Z denote the blended tobacco silk, blended cut stem, blended expanded tobacco silk, and blended reconstituted tobacco shred image datasets, respectively.
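A 7:3 split of the kind described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' procedure: the helper names `split_train_test` and `stratified_split`, the fixed random seed, and splitting each tobacco shred class separately (so every class keeps the 7:3 ratio) are all hypothetical choices.

```python
import random


def split_train_test(samples, train_ratio=0.7, seed=0):
    """Shuffle one class's samples reproducibly and cut them at train_ratio."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train_ratio)
    return items[:n_train], items[n_train:]


def stratified_split(samples_by_class, train_ratio=0.7, seed=0):
    """Split every class separately (hypothetical stratification choice)."""
    train, test = {}, {}
    for name, samples in samples_by_class.items():
        train[name], test[name] = split_train_test(samples, train_ratio, seed)
    return train, test
```

With, for example, 1000 hypothetical images in each of four classes, this yields 700/300 per class and 2800/1200 overall, matching the dataset 1 totals quoted above.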

2.1. Data Preprocessing

2.1.1. Background Shadow Elimination Algorithm

Although the image acquisition system ensured efficient and accurate acquisition of blended tobacco shred images to a large extent, the captured images still suffered from problems such as inconsistent backgrounds and tobacco shred shadows. Therefore, this paper proposes a tobacco shred image background shadow elimination algorithm, implemented with OpenCV, to optimize image quality, strengthen the foreground features, and homogenize the image background. The algorithm flowchart is shown in Figure 3, and the specific steps are as follows:

Step 1: Grayscale processing and dilation. Convert the image to grayscale and apply dilation (expansion) to expand the foreground information and weaken the background information while preventing the loss of foreground information.

Step 2: Median filtering. A 3 × 3 kernel is used to suppress the foreground information and enhance the shadows in the background information.

Step 3: Background differencing between the original and processed images. Calculate the difference between the original and processed images; pixels that are identical in both become black, while the tobacco shreds appear light white. This difference completely eliminates the shadows in the background.

Step 4: Normalization of the background difference. Normalize the value range of the difference image to 0–255.

Step 5: Adaptive thresholding and filtering enhancement. Adjust the filter window according to the size of the content in the image, and enhance the output image.
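The five steps above follow a common dilate, median-filter, and difference pattern for removing uneven background illumination. The sketch below is a dependency-light NumPy illustration of that pattern, not the authors' OpenCV code. It assumes dark shreds on a lighter background, and the 7 × 7 dilation kernel, 11 × 11 threshold block, and `offset` value are hypothetical; `offset` plays the role of a negated `C` in OpenCV's mean adaptive threshold. In an OpenCV pipeline the corresponding calls would be `cv2.dilate`, `cv2.medianBlur`, `cv2.absdiff`, `cv2.normalize`, and `cv2.adaptiveThreshold`.

```python
import numpy as np


def _neighbourhoods(img, k):
    """Stack every k x k neighbourhood (edge-replicated border) on a new last axis."""
    r = k // 2
    padded = np.pad(img, r, mode="edge")
    h, w = img.shape
    return np.stack(
        [padded[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)], axis=-1
    )


def to_gray(rgb):
    """Step 1a: luminance-weighted grayscale of an RGB image (ITU-R BT.601 weights)."""
    return rgb[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])


def remove_background_shadow(gray, dilate_k=7, median_k=3):
    """Steps 1b-4: dilate, median-filter, difference, normalise to 0-255."""
    gray = gray.astype(np.float64)
    # Step 1b: grey-level dilation (local max) wipes out thin dark shreds,
    # leaving an estimate of the possibly shadowed background.
    dilated = _neighbourhoods(gray, dilate_k).max(axis=-1)
    # Step 2: median filter smooths the background estimate.
    background = np.median(_neighbourhoods(dilated, median_k), axis=-1)
    # Step 3: pixels matching the background go to ~0 (black); shreds become bright.
    diff = np.abs(gray - background)
    # Step 4: stretch the difference to the full 0-255 range.
    lo, hi = diff.min(), diff.max()
    if hi == lo:
        return np.zeros(diff.shape, dtype=np.uint8)
    return np.round((diff - lo) / (hi - lo) * 255).astype(np.uint8)


def adaptive_threshold(img, block=11, offset=10):
    """Step 5: keep pixels brighter than their local mean plus an offset."""
    local_mean = _neighbourhoods(img.astype(np.float64), block).mean(axis=-1)
    return np.where(img > local_mean + offset, 255, 0).astype(np.uint8)
```

Stacking neighbourhoods keeps the sketch short but is memory-hungry; for 25-megapixel images, the OpenCV functions named above would be the practical choice.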

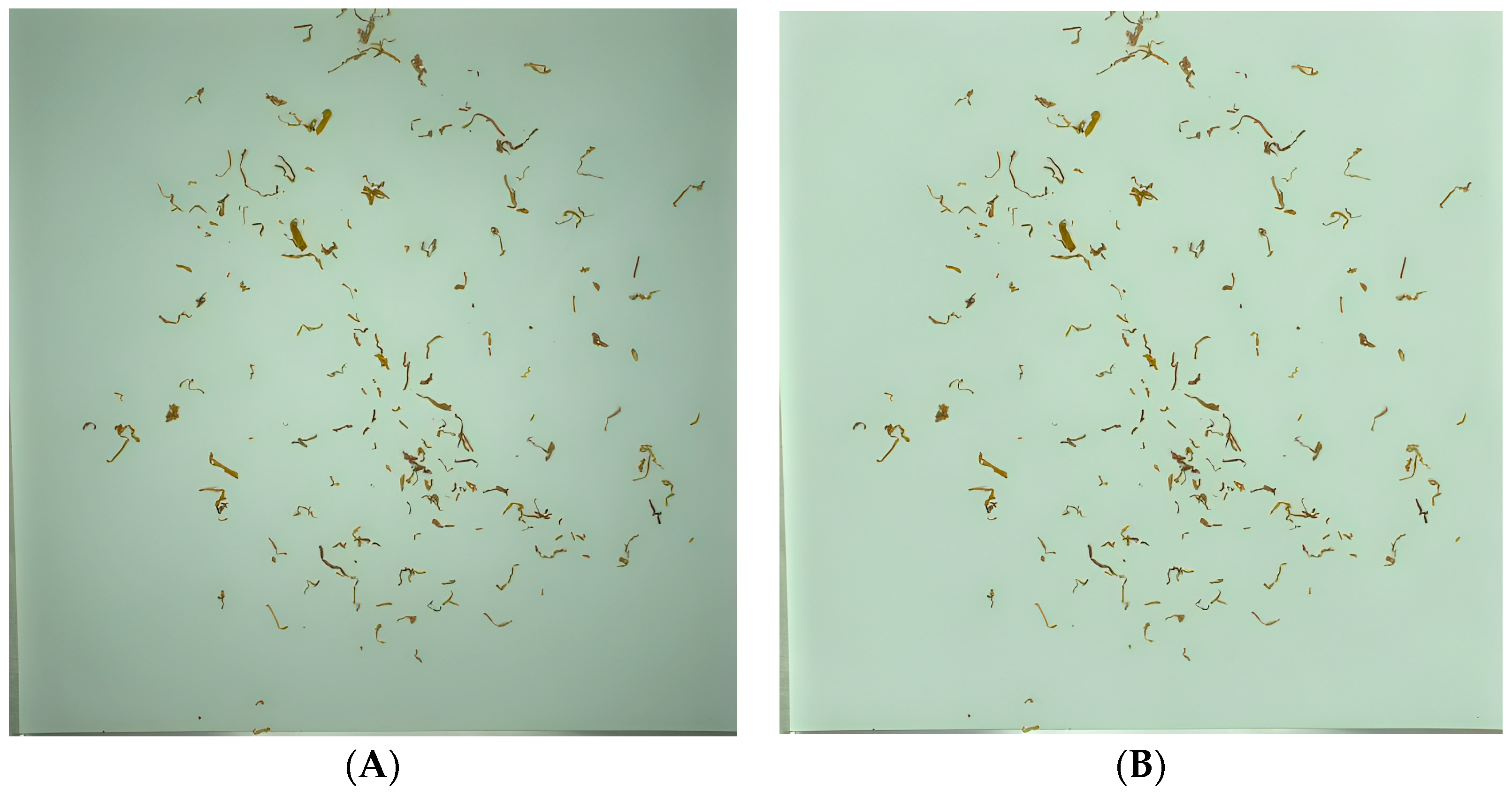

Figure 4 compares the preprocessing results for one blended tobacco shred sample image: Figure 4A is the original unprocessed sample image, and Figure 4B is the processed sample image. As Figure 4 shows, the proposed background shadow elimination algorithm efficiently removes shadow interference from the background while leaving the texture, color, and morphological information of the tobacco shreds undistorted.

2.1.2. Data Enhancement

Considering the different morphologies of the four types of tobacco shreds and the complexity of overlap types in blended tobacco shreds, data augmentation was performed in seven ways (hsv, translate, scale, fliplr, mosaic, mixup, and paste_in [22]) to expand the dataset. First, each image was randomly augmented in HSV space, followed by translation, scaling, and left–right flipping. Finally, multiple images were combined through random mosaic stitching and mixup. The specific hyperparameters are shown in Table 4.
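Two of these augmentations change the labels as well as the pixels, which is easy to get wrong. The sketch below shows left–right flipping and mixup for YOLO-format labels `(cls, x_center, y_center, w, h)` normalised to [0, 1]. It is an illustration under stated assumptions, not the YOLOv7 source: the function names are hypothetical, the images are assumed to be HWC arrays of equal size, and the mixup blend ratio is passed in explicitly, whereas YOLO-style training code commonly samples it from a Beta distribution.

```python
import numpy as np


def fliplr(image, labels):
    """Mirror an HWC image left-right and update YOLO labels (cls, xc, yc, w, h)."""
    flipped = image[:, ::-1].copy()
    new_labels = labels.copy()
    new_labels[:, 1] = 1.0 - new_labels[:, 1]  # only the normalised x-centre changes
    return flipped, new_labels


def mixup(image1, labels1, image2, labels2, ratio):
    """Blend two same-sized images pixel-wise; the labels of both images are kept."""
    blended = image1.astype(np.float64) * ratio + image2.astype(np.float64) * (1.0 - ratio)
    return blended.astype(np.uint8), np.concatenate([labels1, labels2], axis=0)


def random_fliplr(image, labels, rng, p=0.5):
    """Apply fliplr with probability p (p mirrors the role of the fliplr hyperparameter)."""
    if rng.random() < p:
        return fliplr(image, labels)
    return image, labels
```

Box widths and heights are unchanged by a horizontal flip, so only the x-centre column is rewritten; mixup keeps every box from both source images because both shreds remain visible in the blend.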

2.1.3. Data Annotation

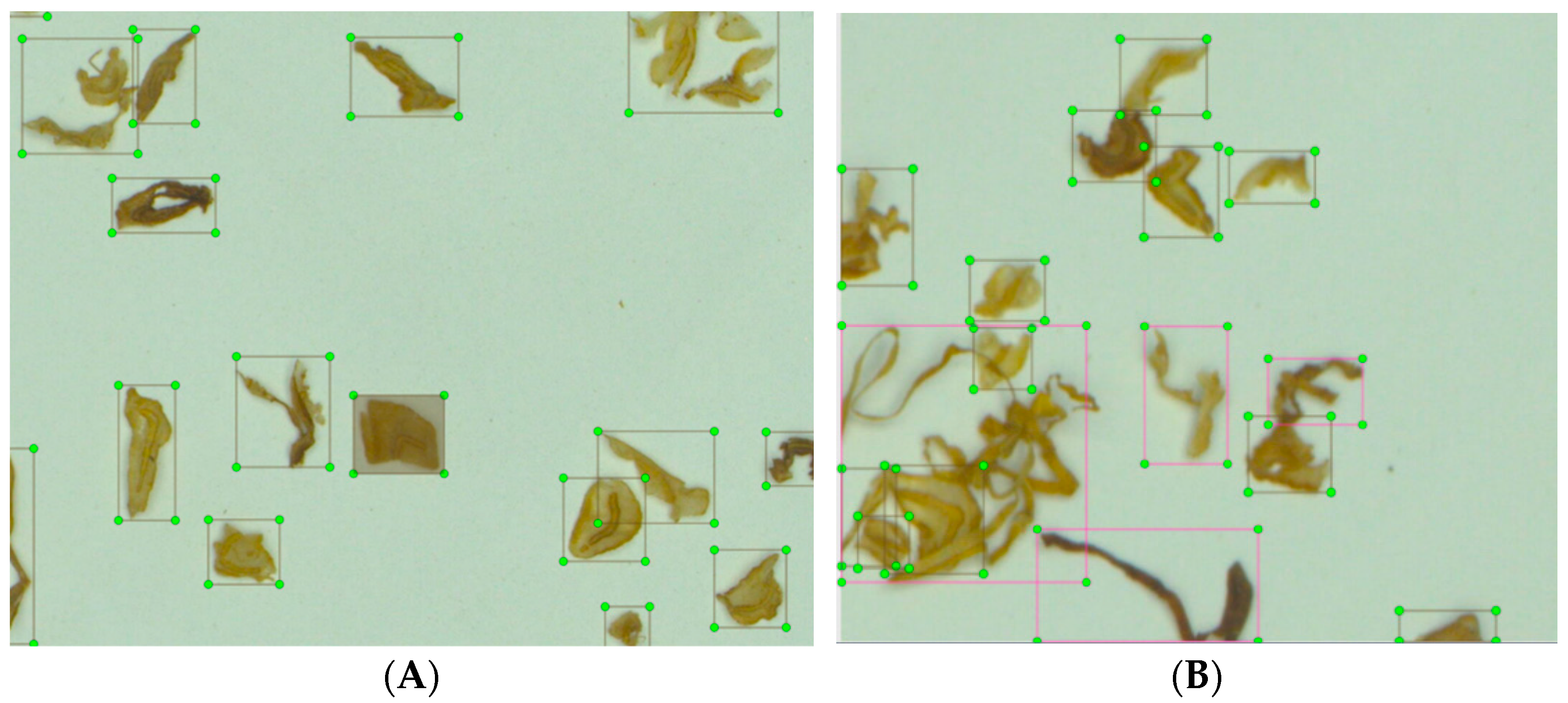

The preprocessed blended tobacco shred images were labeled with the image annotation tool LabelImg. Four label types were used, named Y, G, P, and Z (tobacco silk, cut stem, expanded tobacco silk, and reconstituted tobacco shred, respectively). Non-overlapped tobacco shreds were labeled normally, as shown for pure cut stem in Figure 5A. Overlapped tobacco shreds required repeated multi-labeling, with each shred in the overlap labeled separately, as shown for tobacco silk-cut stem in Figure 5B.
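LabelImg can save annotations either as Pascal VOC XML or as YOLO text files. The sketch below shows what the YOLO conversion amounts to for an overlapped pair: each shred keeps its own line even though the boxes intersect. The class-index order in `CLASS_IDS`, the helper names, and the example box coordinates are hypothetical and chosen only for illustration.

```python
# Hypothetical mapping from the paper's label names to YOLO class indices.
CLASS_IDS = {"Y": 0, "G": 1, "P": 2, "Z": 3}


def to_yolo_line(label, box, img_w, img_h):
    """Convert one pixel box (xmin, ymin, xmax, ymax) to a normalised YOLO line."""
    xmin, ymin, xmax, ymax = box
    x_center = (xmin + xmax) / 2 / img_w
    y_center = (ymin + ymax) / 2 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return f"{CLASS_IDS[label]} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def to_yolo_file(annotations, img_w, img_h):
    """One line per shred; overlapped shreds simply contribute one line each."""
    return "\n".join(to_yolo_line(lbl, box, img_w, img_h) for lbl, box in annotations)
```

For a 1000 × 500 image with a tobacco silk box `(100, 100, 300, 200)` overlapping a cut stem box `(250, 120, 400, 180)`, this produces the two lines `0 0.200000 0.300000 0.200000 0.200000` and `1 0.325000 0.300000 0.150000 0.120000`.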

5. Summary

Practical cigarette quality inspection lines pose significant difficulties for rapid and accurate multi-target detection of blended tobacco shreds and for calculating the unbroken tobacco shred rate. This study develops an improved YOLOv7-tiny multi-object detection model together with an LWC algorithm to identify, in a single pass, both tiny single tobacco shreds and various overlapped tobacco shreds with complex morphological characteristics, that is, to perform fast multi-target blended tobacco shred detection and unbroken tobacco shred rate calculation. The model was successfully applied to these tasks to meet practical field needs. The following innovations were achieved:

A data collection platform for blended tobacco shreds was built to mimic the actual inspection environment. Datasets of four conventional pure tobacco shred types and of blended tobacco shreds were created and preprocessed for homogenization to resolve background inconsistencies, with the aim of accurately identifying blended tobacco shreds in the actual production process. Taking into account the different complexity characteristics of blended tobacco shreds, a 5300-sample dataset was augmented with hsv, translate, scale, fliplr, mosaic, mixup, and paste_in, which effectively avoided overfitting and ensured suitability for actual field use.

An improved YOLOv7 model is proposed that incorporates multi-scale convolution, feature reuse, different PANet connections, and compression. First, Resnet19 is constructed as the new backbone of YOLOv7-tiny to increase the depth and width of the model's feature maps without significantly increasing the number of network parameters. Second, SPPCSPC in the neck is replaced with SPPFCSPC to increase inference speed without increasing the number of parameters. Finally, the head is replaced with a decoupled head, which uses separate convolution branches for the detection subtasks to improve multi-object detection performance. In comparisons with other object detection models and other blended tobacco shred detection algorithms, Improved YOLOv7 performed best and quickly and accurately completed multi-object detection of blended tobacco shreds in the field.
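The speed gain from SPPF-style pooling comes from a known identity, as used in YOLOv5's SPPF module: three stride-1 5 × 5 max-pools applied in series give exactly the same maps as parallel 5 × 5, 9 × 9, and 13 × 13 pools, while each pool after the first reuses the previous result. The NumPy sketch below checks that identity on a single channel. It covers only the pooling part; the convolution and CSP branches of SPPFCSPC are omitted, and the helper names are hypothetical.

```python
import numpy as np


def maxpool_same(x, k):
    """Stride-1 k x k max pooling with -inf padding, so the output keeps x's shape."""
    r = k // 2
    padded = np.pad(x, r, mode="constant", constant_values=-np.inf)
    h, w = x.shape
    out = np.full((h, w), -np.inf)
    for dy in range(k):
        for dx in range(k):
            out = np.maximum(out, padded[dy:dy + h, dx:dx + w])
    return out


def spp_pools(x):
    """SPP style: three independent pools with growing kernels."""
    return [maxpool_same(x, k) for k in (5, 9, 13)]


def sppf_pools(x):
    """SPPF style: one 5 x 5 pool applied three times in series."""
    y1 = maxpool_same(x, 5)
    y2 = maxpool_same(y1, 5)
    y3 = maxpool_same(y2, 5)
    return [y1, y2, y3]
```

Because the max of a max over two 5 × 5 windows covers exactly a 9 × 9 window (and three cover 13 × 13), the outputs are identical; in both variants the pooled maps are then concatenated with the input, so the parameter count does not change.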

An LWC algorithm is proposed to obtain the unbroken tobacco shred rate of blended tobacco shreds. Its accuracy error was −1.7% for length calculation and 13.1% for width calculation, and it significantly improves the accuracy of calculating the overall size of blended tobacco shreds.
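This section does not define the accuracy error. Assuming it is a signed relative error, with $\hat{L}, \hat{W}$ the LWC-calculated length and width and $L, W$ the reference (for example, manually measured) values, it would read:

```latex
e_L = \frac{\hat{L} - L}{L} \times 100\%, \qquad
e_W = \frac{\hat{W} - W}{W} \times 100\%
```

Under this reading, $e_L = -1.7\%$ means calculated lengths are on average 1.7% shorter than the reference, and $e_W = 13.1\%$ means calculated widths are on average 13.1% larger.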

The proposed Improved YOLOv7 achieved a precision of 0.883, an mAP@.5 of 0.932, 20,572,002 parameters, and a prediction time of 4.12 ms, meeting the accuracy and timeliness requirements of cigarette inspection in the field. The proposed system is therefore promising for multi-object tobacco shred detection and unbroken tobacco shred rate calculation in actual production processes.

However, this study has several limitations: some blended tobacco shreds are missed during detection, and the accuracy of tobacco shred detection and of the LWC algorithm's width calculation needs further improvement.

Follow-up work should consider the aspects below:

- (1)

Further optimize the model to reduce missed detections of blended tobacco shreds.

- (2)

Optimize the LWC algorithm to minimize the width measurement error of tobacco shreds and overcome the effects of severe curvature.

- (3)

Install and apply the proposed scheme on an actual tobacco quality inspection line to further optimize and verify the model and the LWC algorithm.