Machine Learning-Based Human Posture Identification from Point Cloud Data Acquisitioned by FMCW Millimetre-Wave Radar

Abstract

1. Introduction

- (1) This paper applies FMCW millimetre-wave radar to the measurement of characteristic data for multiple human body postures. The experiments show that the radar measurements effectively reflect the posture characteristics of the human body.

- (2) To remove reflection points of no interest and to group the remaining points of the generated point cloud into objects, a clustering technique (the DBSCAN algorithm) is introduced. It traverses all points in the space and groups them according to the density of the point cloud distribution.

- (3) To rank feature importance, a Gini index-based random forest algorithm is used to obtain the normalized contribution of each feature, and the features are then sorted by the size of their contribution.

- (4)

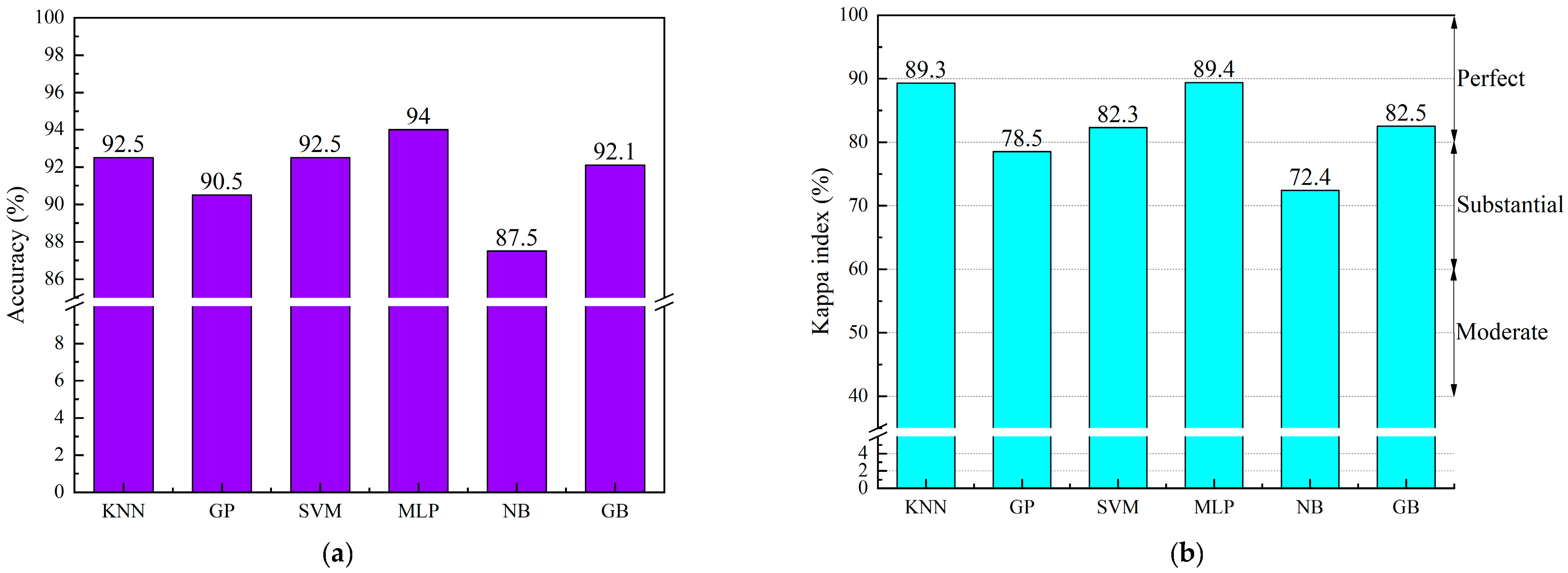

- To avoid the side effects from the uneven number of samples and compare the classification performance of different machine learning models, the Kappa Index is included along with other traditional evaluation criteria to evaluate the classification performance based on the proposed signal processing methods.
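
The clustering step in contribution (2) can be sketched with scikit-learn's `DBSCAN`. This is a minimal illustration rather than the authors' pipeline: the `eps` and `min_samples` values, the helper names, and the synthetic frame are all assumptions chosen for the example.

```python
import numpy as np
from sklearn.cluster import DBSCAN


def cluster_point_cloud(points, eps=0.3, min_samples=5):
    """Label each 3D point with a cluster id; DBSCAN marks noise as -1.

    eps and min_samples are illustrative values, not taken from the paper.
    """
    return DBSCAN(eps=eps, min_samples=min_samples).fit_predict(points)


def largest_cluster(points, labels):
    """Return the points of the most populated non-noise cluster."""
    valid = labels[labels >= 0]
    if valid.size == 0:
        return points[:0]  # no cluster found: empty array, same columns
    target = np.bincount(valid).argmax()
    return points[labels == target]


# Synthetic frame: a dense blob standing in for a person, plus sparse clutter.
rng = np.random.default_rng(0)
person = rng.normal(loc=[0.0, 2.0, 1.0], scale=0.1, size=(60, 3))
clutter = rng.uniform(low=-3.0, high=3.0, size=(10, 3))
frame = np.vstack([person, clutter])

labels = cluster_point_cloud(frame)
body = largest_cluster(frame, labels)
print(f"{len(body)} of {len(frame)} points kept, "
      f"{int((labels == -1).sum())} flagged as noise")
```

Points labelled `-1` are the low-density reflections that DBSCAN treats as noise; keeping only the largest cluster is one simple way to isolate a single target.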

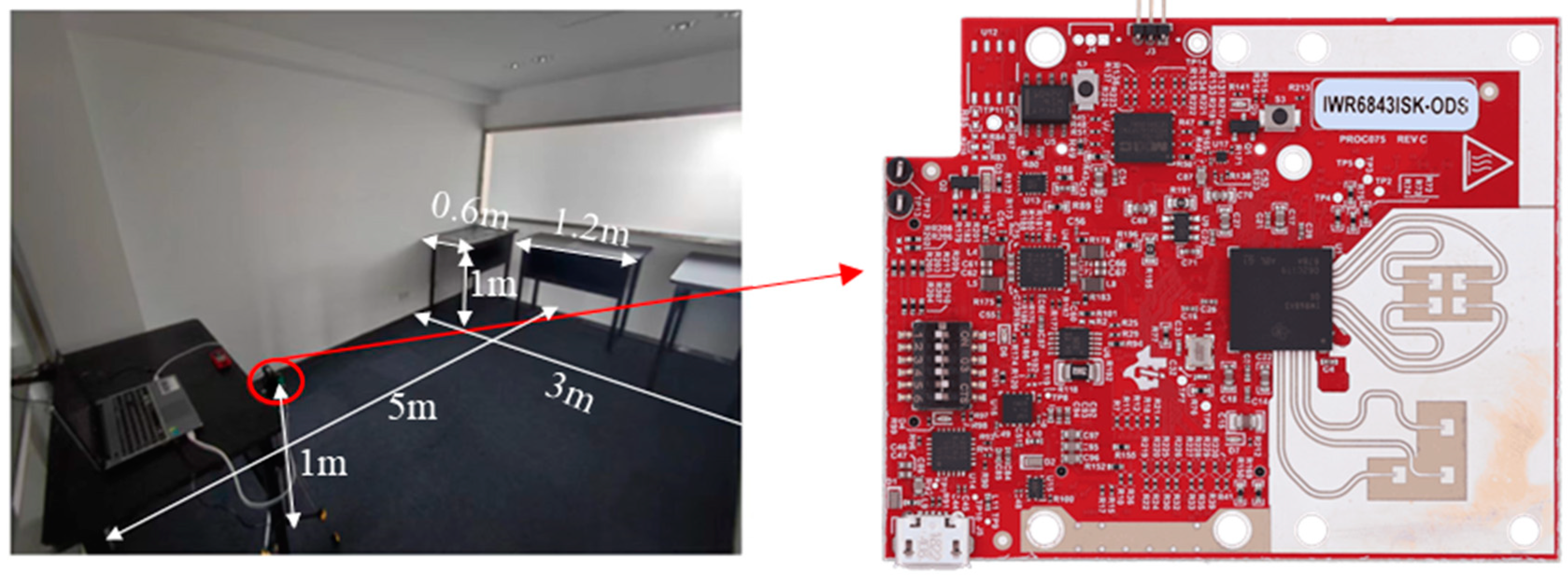
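
The feature ranking in contribution (3) can be illustrated with scikit-learn's `RandomForestClassifier`, whose `feature_importances_` attribute holds normalized impurity-based (Gini) importances. The sketch below uses synthetic data in which only two columns carry class information; the forest size, the seed, and the data are assumptions, and the feature symbols are borrowed from the paper's feature table.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Feature symbols F0..F11 as listed in the feature table.
FEATURE_NAMES = ["L", "W", "H", "Xmean", "Ymean", "Zmean",
                 "Xsd", "Ysd", "Zsd", "Xc", "Yc", "Zc"]


def rank_features(X, y, names, n_estimators=200, seed=0):
    """Fit a Gini-criterion forest and sort features by importance."""
    forest = RandomForestClassifier(
        n_estimators=n_estimators, criterion="gini", random_state=seed
    )
    forest.fit(X, y)
    importances = forest.feature_importances_  # non-negative, sums to 1
    order = np.argsort(importances)[::-1]      # largest contribution first
    return [(names[i], float(importances[i])) for i in order]


# Synthetic data (hypothetical): only H and Zmean depend on the class.
rng = np.random.default_rng(0)
y = rng.integers(0, 3, size=300)
X = rng.normal(size=(300, 12))
X[:, 2] += 2.0 * y   # column 2 = H
X[:, 5] += 1.5 * y   # column 5 = Zmean

ranking = rank_features(X, y, FEATURE_NAMES)
for name, score in ranking[:4]:
    print(f"{name}: {score:.3f}")
```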

2. Data Collection and Processing

2.1. Data Collection

2.2. Data Processing

3. The Proposed Classification Research Method

3.1. Target Detection

3.2. Feature Extraction

3.3. Machine Learning Model

- (1) KNN: K-nearest neighbor is a non-parametric learning method. When a new sample is input, the algorithm finds the K training samples that are most similar to it and assigns the class that is most common among them, so K is the main adjustable parameter of KNN. The Euclidean or Manhattan distance between samples serves as the dissimilarity index.

- (2) GP: The Gaussian process is a probabilistic, non-parametric model for regression and classification problems. It is based on Bayesian inference: the outputs are modelled as jointly Gaussian random variables, and kernel functions describe the correlations between input data points. Predictions are made by Bayesian inference and therefore come with a measure of confidence.

- (3) SVM: The support vector machine is a classic supervised learning algorithm built around the concept of the “margin”: a hyperplane separates two data classes, one on either side. SVM is therefore a binary classification algorithm, but a multi-class problem can be solved by constructing multiple binary classification problems. Because of its robustness across many application types, it is regarded as a must-try method [38].

- (4) MLP: The multi-layer perceptron is a feedforward artificial neural network consisting of an input layer, a hidden layer, and an output layer. Feature data are passed from the input layer to the hidden layer, which applies a nonlinear mapping to the input space, and the output layer performs the classification. Notably, features can be classified even with only one hidden layer, provided that the hidden layer contains enough units.

- (5) NB: The Naive Bayes classification algorithm rests on Bayes' theorem and on the assumption that the features are conditionally independent given the class. The idea is to use the prior probability to calculate the posterior probability that a sample belongs to a certain category. The algorithm is also a type of supervised learning.

- (6) GB: Gradient boosting is an efficient ensemble learning algorithm based on the boosting principle. It iteratively adds weak prediction models, in the form of decision trees, each trained to reduce the error left by the previous round, and in this way builds a strong prediction model [39]. It can handle large datasets with high accuracy but is slower to train because the trees must be built sequentially.
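
To make the KNN description in item (1) concrete, the following is a minimal NumPy sketch of majority voting among the K nearest training samples, with either Euclidean or Manhattan distance as the dissimilarity index. The function name and the toy two-feature data are hypothetical and serve only as an illustration.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, x_new, k=5, metric="euclidean"):
    """Classify x_new by majority vote among its k nearest training samples."""
    diff = X_train - x_new
    if metric == "euclidean":
        dist = np.sqrt((diff ** 2).sum(axis=1))
    elif metric == "manhattan":
        dist = np.abs(diff).sum(axis=1)
    else:
        raise ValueError(f"unknown metric: {metric}")
    nearest = np.argsort(dist, kind="stable")[:k]   # indices of the k closest
    votes = Counter(y_train[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Toy training set with two made-up features per sample.
X_train = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
                    [1.0, 1.0], [1.1, 0.9], [0.9, 1.2]])
y_train = np.array(["lying down"] * 3 + ["standing"] * 3)

query = np.array([0.95, 1.0])
print(knn_predict(X_train, y_train, query, k=3))
print(knn_predict(X_train, y_train, query, k=3, metric="manhattan"))
```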

3.4. Multi-Class Evaluation Index

4. Results and Discussions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Li, X.Y.; Wang, S.; Liu, B.; Chen, W.; Fan, W.Q.; Tian, Z.J. Improved YOLOv4 network using infrared images for personnel detection in coal mines. J. Electron. Imaging 2022, 31, 13017.
2. Batistela, R.A.; Oates, A.; Moraes, R. Haptic information and cognitive-visual task reduce postural sway in faller and non-faller older adults. Hum. Mov. Sci. 2018, 60, 150–161.
3. Arshad, M.H.; Bilal, M.; Gani, A. Human Activity Recognition: Review, Taxonomy and Open Challenges. Sensors 2022, 22, 6463.
4. Alanazi, M.A.; Alhazmi, A.K.; Alsattam, O.; Gnau, K.; Brown, M.; Thiel, S.; Jackson, K.; Chodavarapu, V.P. Towards a Low-Cost Solution for Gait Analysis Using Millimeter Wave Sensor and Machine Learning. Sensors 2022, 22, 5470.
5. Hussein, F.; Mughaid, A.; AlZu’bi, S.; El-Salhi, S.M.; Abuhaija, B.; Abualigah, L.; Gandomi, A.H. Hybrid CLAHE-CNN Deep Neural Networks for Classifying Lung Diseases from X-ray Acquisitions. Electronics 2022, 11, 3075.
6. Koo, J.H.; Cho, S.W.; Baek, N.R.; Kim, M.C.; Park, K.R. CNN-Based Multimodal Human Recognition in Surveillance Environments. Sensors 2018, 18, 3040.
7. Milon, I.M.; Sheikh, N.; Fakhri, K.; Ghulam, M. Multi-level feature fusion for multimodal human activity recognition in Internet of Healthcare Things. Inf. Fusion 2023, 94, 17–31.
8. Kim, J.; Jeong, H.; Lee, S. Simultaneous Target Classification and Moving Direction Estimation in Millimeter-Wave Radar System. Sensors 2021, 21, 5228–5241.
9. Wang, X.Y.; Wang, X.S.; Zhou, Z.Q. A high-accuracy calibration method for fusion systems of millimeter-wave radar and camera. Meas. Sci. Technol. 2022, 34, 15103.
10. Guo, P.; Wu, F.; Tang, S.; Jiang, C.; Liu, C. Implementation Method of Automotive Video SAR (ViSAR) Based on Sub-Aperture Spectrum Fusion. Remote Sens. 2023, 15, 476.
11. Park, J.; Park, S.; Kim, D.; Park, S. Leakage mitigation in heterodyne FMCW radar for small drone detection with stationary point concentration technique. IEEE Trans. Microw. Theory Tech. 2019, 67, 1221–1232.
12. Marshall, H.; Koh, G. FMCW radars for snow research. Cold Reg. Sci. Technol. 2008, 52, 118–131.
13. Mutschler, M.A.; Scharf, P.A.; Rippl, P.; Gessler, T.; Walter, T.; Waldschmidt, C. River Surface Analysis and Characterization Using FMCW Radar. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 2493–2502.
14. Lang, S.N.; Cui, X.B.; Zhao, Y.K.; Xu, B.; Liu, X.J.; Cai, Y.H.; Wu, Q. A Novel Range Processing Method of Surface-Based FMCW Ice-Sounding Radar for Accurately Mapping the Internal Reflecting Horizons in Antarctica. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3633–3643.
15. Yan, J.M.; Zhang, G.P.; Hong, H.; Chu, H.; Li, C.Z.; Zhu, X.H. Phase-based human target 2-D identification with a mobile FMCW radar platform. IEEE Trans. Microw. Theory Tech. 2019, 67, 5348–5359.
16. Li, Y.; Feng, B.; Zhang, W. Mutual Interference Mitigation of Millimeter-Wave Radar Based on Variational Mode Decomposition and Signal Reconstruction. Remote Sens. 2023, 15, 557.
17. Wu, J.M.; Wang, J.; Gao, Q.H.; Pan, M.; Zhang, H.X. Path-independent device-free gait recognition using mmwave signals. IEEE Trans. Veh. Technol. 2021, 70, 11582–11592.
18. He, J.; Terashima, S.; Yamada, H.; Kidera, S. Diffraction Signal-Based Human Recognition in Non-Line-of-Sight (NLOS) Situation for Millimeter Wave Radar. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4370–4380.
19. Zhang, Z.L.; Meng, W.G.; Song, M.Q.; Liu, Y.H.; Zhao, Y.N.; Feng, X.; Li, F.C. Application of multi-angle millimeter-wave radar detection in human motion behavior and micro-action recognition. Meas. Sci. Technol. 2022, 33, 105107.
20. Shrestha, A.; Li, H.B.; Le Kernec, J.; Fioranelli, F. Continuous human activity classification from FMCW radar with Bi-LSTM networks. IEEE Sens. J. 2020, 20, 13607–13619.
21. Liang, T.X.; Xu, H.T. A posture recognition-based fall detection system using a 24GHz CMOS FMCW radar SoC. In Proceedings of the 2021 IEEE MTT-S International Wireless Symposium (IWS), Nanjing, China, 23–26 May 2021.
22. Zhou, T.; Xia, Z.Y.; Wang, X.F.; Xu, F. Human sleep posture recognition based on millimeter-wave radar. In Proceedings of the 2021 Signal Processing Symposium (SPSympo), Lodz, Poland, 20–23 September 2021.
23. Huang, Y.; Da, F.P. Three-dimensional face point cloud hole-filling algorithm based on binocular stereo matching and a B-spline. Front. Inf. Technol. Electron. Eng. 2022, 23, 398–408.
24. Wang, J.L.; Zhang, H.Y.; Gao, J.X.; Xiao, D. Dust Removal from 3D Point Cloud Data in Mine Plane Areas Based on Orthogonal Total Least Squares Fitting and GA-TELM. Comput. Intell. Neurosci. 2021, 2021, 9927982.
25. Poux, F.; Mattes, C.; Kobbelt, L. Unsupervised Segmentation of Indoor 3D Point Cloud: Application to Object-Based Classification. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 44, 111–118.
26. Zhao, P.J.; Lu, C.X.X.; Wang, J.N.; Chen, C.H.; Wang, W.; Trigoni, N.; Markham, A. Human tracking and identification through a millimeter wave radar. Ad Hoc Netw. 2021, 116, 102475.
27. Meng, Z.; Fu, S.; Yan, J.; Liang, H.Y.; Zhou, A.; Zhu, S.L.; Ma, H.D.; Liu, J.H.; Yang, N. Gait recognition for co-existing multiple people using millimeter wave sensing. Proc. AAAI Conf. Artif. Intell. 2020, 34, 849–856.
28. Alujaim, I.; Park, I.; Kim, Y. Human motion detection using planar array FMCW Radar through 3D point clouds. In Proceedings of the 2020 14th European Conference on Antennas and Propagation (EuCAP), Copenhagen, Denmark, 15–20 March 2020.
29. Fang, Q.; Ibarra-Castanedo, C.; Maldague, X. Automatic Defects Segmentation and Identification by Deep Learning Algorithm with Pulsed Thermography: Synthetic and Experimental Data. Big Data Cogn. Comput. 2021, 5, 9.
30. Massaro, A.; Dipierro, G.; Cannella, E.; Galiano, A.M. Comparative Analysis among Discrete Fourier Transform, K-Means and Artificial Neural Networks Image Processing Techniques Oriented on Quality Control of Assembled Tires. Information 2020, 11, 257.
31. Wang, L.; Xu, X.; Gui, R.; Yang, R.; Pu, F. Learning Rotation Domain Deep Mutual Information Using Convolutional LSTM for Unsupervised PolSAR Image Classification. Remote Sens. 2020, 12, 4075.
32. Diraco, G.; Leone, A.; Siciliano, P. Human posture recognition with a time-of-flight 3D sensor for in-home applications. Expert Syst. Appl. 2013, 40, 744–751.
33. Werghi, N.; Xiao, Y.J. Recognition of human body posture from a cloud of 3D data points using wavelet transform coefficients. In Proceedings of the Fifth IEEE International Conference on Automatic Face Gesture Recognition, Washington, DC, USA, 20–21 May 2002.
34. Zhang, G.C.; Geng, X.Y.; Lin, Y.J. Comprehensive mpoint: A method for 3D point cloud generation of human bodies utilizing FMCW MIMO mm-wave radar. Sensors 2021, 21, 6455.
35. Cao, Z.H.; Fang, W.W.; Song, Y.Y.; He, L.; Song, C.Y.; Xu, Z.W. DNN-based peak sequence classification CFAR detection algorithm for high-resolution FMCW radar. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–15.
36. Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X.W. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, KDD, Portland, OR, USA, 2–4 August 1996; Volume 96, pp. 226–231.
37. Song, J.H.; Wang, Y.; Fang, Z.C.; Peng, L.; Hong, H.Y. Potential of ensemble learning to improve tree-based classifiers for landslide susceptibility mapping. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4642–4662.
38. Kotsiantis, S.B.; Zaharakis, I.; Pintelas, P. Supervised machine learning: A review of classification techniques. Emerg. Artif. Intell. Appl. Comput. Eng. 2007, 160, 3–24.
39. Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.
40. Rehman, A.U.; Lie, T.T.; Vallès, B.; Tito, S.R. Comparative evaluation of machine learning models and input feature space for non-intrusive load monitoring. J. Mod. Power Syst. Clean Energy 2021, 9, 1161–1171.
41. Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174.
42. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

| Parameter | Value |
|---|---|
| Start frequency | 60 GHz |
| Bandwidth | 3.92 GHz |
| Sampling frequency | 2200 ksps |
| Frequency slope | 98 MHz/μs |
| Frame rate | 5 fps |
| ADC samples | 64 |
| Number of chirps per frame | 200 |
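
As a rough cross-check of these settings, the sketch below derives a few quantities from the table using the standard FMCW relations (ramp time = bandwidth / slope, range resolution = c / 2B). It assumes that "Bandwidth" is the total swept bandwidth of one chirp and that the 64 ADC samples are taken at 2200 ksps within that chirp; the variable names are illustrative and the derived values are not stated in the paper.

```python
# Derived FMCW quantities from the radar parameter table (illustrative only).
C = 299_792_458.0                 # speed of light, m/s

bandwidth_hz = 3.92e9             # table: Bandwidth
slope_hz_per_s = 98e6 / 1e-6      # table: 98 MHz/us expressed in Hz/s
sample_rate_hz = 2200e3           # table: Sampling frequency
adc_samples = 64                  # table: ADC samples
frame_rate_hz = 5.0               # table: Frame rate

# Time for the chirp to sweep the full bandwidth.
ramp_time_s = bandwidth_hz / slope_hz_per_s

# Portion of the sweep that is actually digitized (assumption: all 64
# samples fall inside a single ramp).
sampling_window_s = adc_samples / sample_rate_hz
sampled_bandwidth_hz = slope_hz_per_s * sampling_window_s

# Range resolution for the full sweep and for the sampled part of it.
range_res_full_m = C / (2 * bandwidth_hz)
range_res_sampled_m = C / (2 * sampled_bandwidth_hz)

frame_period_s = 1.0 / frame_rate_hz

print(f"ramp time           : {ramp_time_s * 1e6:.1f} us")
print(f"sampling window     : {sampling_window_s * 1e6:.1f} us")
print(f"range res. (full)   : {range_res_full_m * 100:.2f} cm")
print(f"range res. (sampled): {range_res_sampled_m * 100:.2f} cm")
print(f"frame period        : {frame_period_s * 1e3:.0f} ms")
```

Under these assumptions the sampling window is shorter than the ramp, so the effective resolution is set by the sampled part of the sweep rather than by the full bandwidth.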

| Serial Number | Symbol | Explanation |
|---|---|---|
| F0 | L | The length of human 3D point clouds |
| F1 | W | The width of human 3D point clouds |
| F2 | H | The height of human 3D point clouds |
| F3 | Xmean | The mean value of human 3D point clouds in the length direction |
| F4 | Ymean | The mean value of human 3D point clouds in the width direction |
| F5 | Zmean | The mean value of human 3D point clouds in the height direction |
| F6 | Xsd | The standard deviation of human 3D point clouds in the length direction |
| F7 | Ysd | The standard deviation of human 3D point clouds in the width direction |
| F8 | Zsd | The standard deviation of human 3D point clouds in the height direction |
| F9 | Xc | The center coordinate of the reflection intensity of human 3D point clouds in the length direction |
| F10 | Yc | The center coordinate of the reflection intensity of human 3D point clouds in the width direction |
| F11 | Zc | The center coordinate of the reflection intensity of human 3D point clouds in the height direction |
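
One plausible way to compute the twelve features F0 to F11 from a clustered point cloud is sketched below. Several choices here are assumptions, not the authors' definitions: x, y and z are taken as the length, width and height directions; L, W and H are read as the extent (maximum minus minimum) along each axis; the standard deviation is the population form; and the "center coordinate of the reflection intensity" is interpreted as the intensity-weighted centroid.

```python
import numpy as np

FEATURE_NAMES = ["L", "W", "H", "Xmean", "Ymean", "Zmean",
                 "Xsd", "Ysd", "Zsd", "Xc", "Yc", "Zc"]


def extract_features(points):
    """Compute F0..F11 from an (N, 4) array of x, y, z, intensity rows.

    Assumes positive intensities so the weighted centroid is well defined.
    """
    points = np.asarray(points, dtype=float)
    xyz = points[:, :3]
    intensity = points[:, 3]

    extent = xyz.max(axis=0) - xyz.min(axis=0)   # L, W, H
    mean = xyz.mean(axis=0)                      # Xmean, Ymean, Zmean
    sd = xyz.std(axis=0)                         # Xsd, Ysd, Zsd (ddof=0)
    # Intensity-weighted centroid: Xc, Yc, Zc.
    centre = (xyz * intensity[:, None]).sum(axis=0) / intensity.sum()

    return np.concatenate([extent, mean, sd, centre])


# Tiny hand-made cloud: four points with x, y, z, intensity.
cloud = np.array([[0.0, 0.0, 0.0, 1.0],
                  [2.0, 0.0, 0.0, 1.0],
                  [0.0, 4.0, 0.0, 1.0],
                  [0.0, 0.0, 6.0, 3.0]])

features = extract_features(cloud)
for name, value in zip(FEATURE_NAMES, features):
    print(f"{name}: {value:.3f}")
```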

| Kappa Index (%) | Label |
|---|---|
| Less than 0 | Poor |
| 0–20 | Slight |
| 21–40 | Fair |
| 41–60 | Moderate |
| 61–80 | Substantial |
| 81–100 | Nearly perfect |
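
For reference, Cohen's kappa compares the observed agreement p_o with the agreement p_e expected by chance, kappa = (p_o - p_e) / (1 - p_e), which is why it is less sensitive to uneven class sizes than plain accuracy. The sketch below computes it from a confusion matrix and maps the result to the labels in the table; the function names and the example matrix are hypothetical.

```python
import numpy as np


def cohen_kappa(confusion):
    """Cohen's kappa from a square confusion matrix (rows: true, cols: predicted)."""
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()
    p_o = np.trace(confusion) / total                      # observed agreement
    p_e = (confusion.sum(axis=0) * confusion.sum(axis=1)).sum() / total ** 2
    return (p_o - p_e) / (1.0 - p_e)


def kappa_label(kappa):
    """Map a kappa value (as a fraction) to the agreement labels of the table."""
    percent = 100.0 * kappa
    if percent < 0:
        return "Poor"
    for upper, label in [(20, "Slight"), (40, "Fair"),
                         (60, "Moderate"), (80, "Substantial")]:
        if percent <= upper:
            return label
    return "Nearly perfect"


# Made-up three-class confusion matrix with 100 samples.
example = [[30, 2, 1],
           [3, 28, 2],
           [1, 2, 31]]

kappa = cohen_kappa(example)
print(f"kappa = {kappa:.3f} ({kappa_label(kappa)})")
```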

| ML Model | Parameter Detail |
|---|---|
| KNN | n_neighbors = 5, weights = 'uniform', algorithm = 'auto' |
| GP | kernel = 1.0 * RBF(1.0), random_state = 0 |
| SVM | C = 33, kernel = 'rbf' |
| MLP | hidden_layer_sizes = (175,), activation = 'relu', solver = 'lbfgs' |
| NB | priors = None |
| GB | loss = 'deviance', learning_rate = 0.1, n_estimators = 100 |
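
These settings map naturally onto scikit-learn estimators. The sketch below shows one way to instantiate them; it is an assumption that these are the exact classes used, in particular that NB corresponds to `GaussianNB`. The `loss` argument of the gradient boosting model is left at its default because recent scikit-learn releases renamed `'deviance'` to `'log_loss'`, which is the default. The synthetic data exist only to show that the models fit.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.gaussian_process import GaussianProcessClassifier
from sklearn.gaussian_process.kernels import RBF
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC


def build_models():
    """Instantiate the six classifiers with the parameters from the table."""
    return {
        "KNN": KNeighborsClassifier(n_neighbors=5, weights="uniform",
                                    algorithm="auto"),
        "GP": GaussianProcessClassifier(kernel=1.0 * RBF(1.0), random_state=0),
        "SVM": SVC(C=33, kernel="rbf"),
        "MLP": MLPClassifier(hidden_layer_sizes=(175,), activation="relu",
                             solver="lbfgs"),
        # Assumption: the table's NB entry refers to Gaussian Naive Bayes.
        "NB": GaussianNB(priors=None),
        # loss is left at the default ('log_loss', formerly 'deviance').
        "GB": GradientBoostingClassifier(learning_rate=0.1, n_estimators=100),
    }


# Synthetic stand-in for the 12 features and 6 posture classes.
X, y = make_classification(n_samples=120, n_features=12, n_informative=6,
                           n_redundant=0, n_classes=6,
                           n_clusters_per_class=1, random_state=0)

models = build_models()
for name, model in models.items():
    model.fit(X, y)
    print(f"{name}: training accuracy {model.score(X, y):.2f}")
```

Training accuracy on synthetic data says nothing about posture recognition; a held-out split of real radar features would be needed for a meaningful comparison.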

| Posture | KNN P (%) | KNN R (%) | KNN F1 (%) | GP P (%) | GP R (%) | GP F1 (%) | SVM P (%) | SVM R (%) | SVM F1 (%) | MLP P (%) | MLP R (%) | MLP F1 (%) | NB P (%) | NB R (%) | NB F1 (%) | GB P (%) | GB R (%) | GB F1 (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| hands up | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 96 | 100 | 98 | 100 | 91 | 95 | 100 | 96 | 98 |
| horse stance | 88 | 100 | 93 | 78 | 100 | 88 | 76 | 93 | 84 | 100 | 86 | 92 | 85 | 79 | 81 | 92 | 86 | 89 |
| lunge | 95 | 95 | 95 | 94 | 84 | 89 | 94 | 89 | 92 | 100 | 100 | 100 | 89 | 89 | 89 | 95 | 95 | 95 |
| lying down | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 95 | 100 | 98 | 100 | 100 | 100 |
| sitting | 96 | 89 | 93 | 92 | 85 | 88 | 96 | 89 | 92 | 93 | 96 | 95 | 89 | 89 | 89 | 89 | 93 | 91 |
| standing | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 89 | 100 | 94 | 94 | 100 | 97 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, G.; Li, S.; Zhang, K.; Lin, Y.-J. Machine Learning-Based Human Posture Identification from Point Cloud Data Acquisitioned by FMCW Millimetre-Wave Radar. Sensors 2023, 23, 7208. https://doi.org/10.3390/s23167208
