Cellular Network Fault Diagnosis Method Based on a Graph Convolutional Neural Network

Abstract

1. Introduction

- Bayesian Networks: Bayesian networks are probabilistic graphical models that represent variables and their dependencies as directed acyclic graphs. They can model network faults and the relationships among them, which supports diagnosis and prediction of faults from observed data. This paper uses GCNs instead because GCNs can exploit parallel processing and optimization techniques, which makes them scalable and efficient for large-scale network analysis. They can handle graphs with millions of nodes and edges, which is crucial for real-world network fault diagnosis. Bayesian networks, by contrast, can be computationally expensive on large, complex networks and may scale poorly.

- Support Vector Machines (SVMs): SVMs are supervised learning models that classify data into categories. They can be trained on labeled network data to detect and diagnose faults from patterns and features extracted from the network. We chose GCNs because they learn end-to-end representations of the network data, automatically extracting relevant features directly from the graph structure. This is advantageous for fault diagnosis because it avoids manual feature engineering. SVMs, on the other hand, typically rely on handcrafted features for classification or anomaly detection, which can require domain expertise and time-consuming feature engineering.

- Construction of Graph Data: Our method constructs graph data by leveraging the similarity between nodes, capturing the intricate relationships within the network.

- Utilization of GCN: The constructed graph data is then fed into a GCN, which performs network fault diagnosis through graph-based learning.

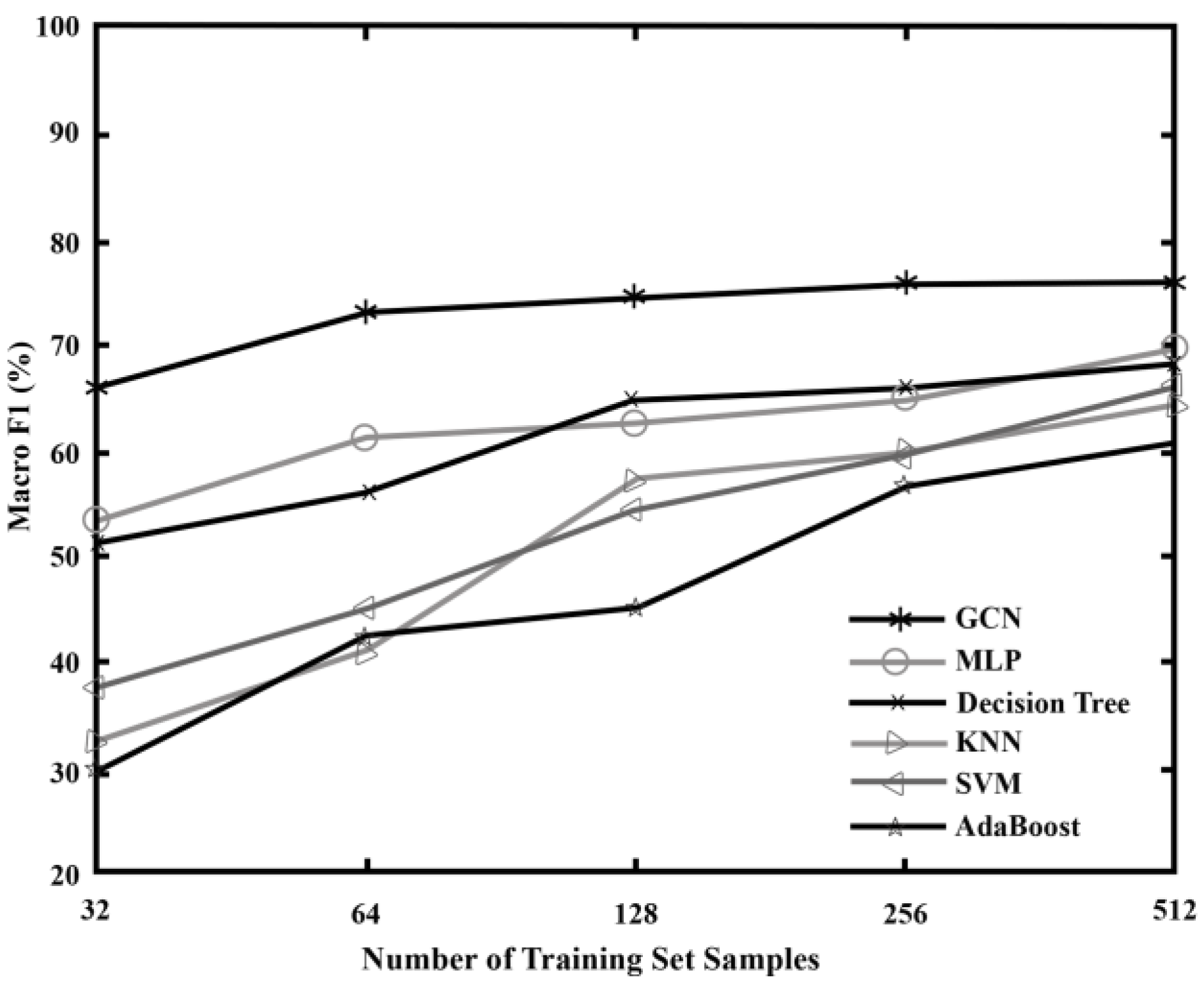

- High Diagnostic Accuracy: Experimental simulations on the drive test dataset demonstrate the effectiveness of our proposed algorithm. Even with a reduced number of training samples, our method achieves high diagnostic accuracy, outperforming traditional algorithms.

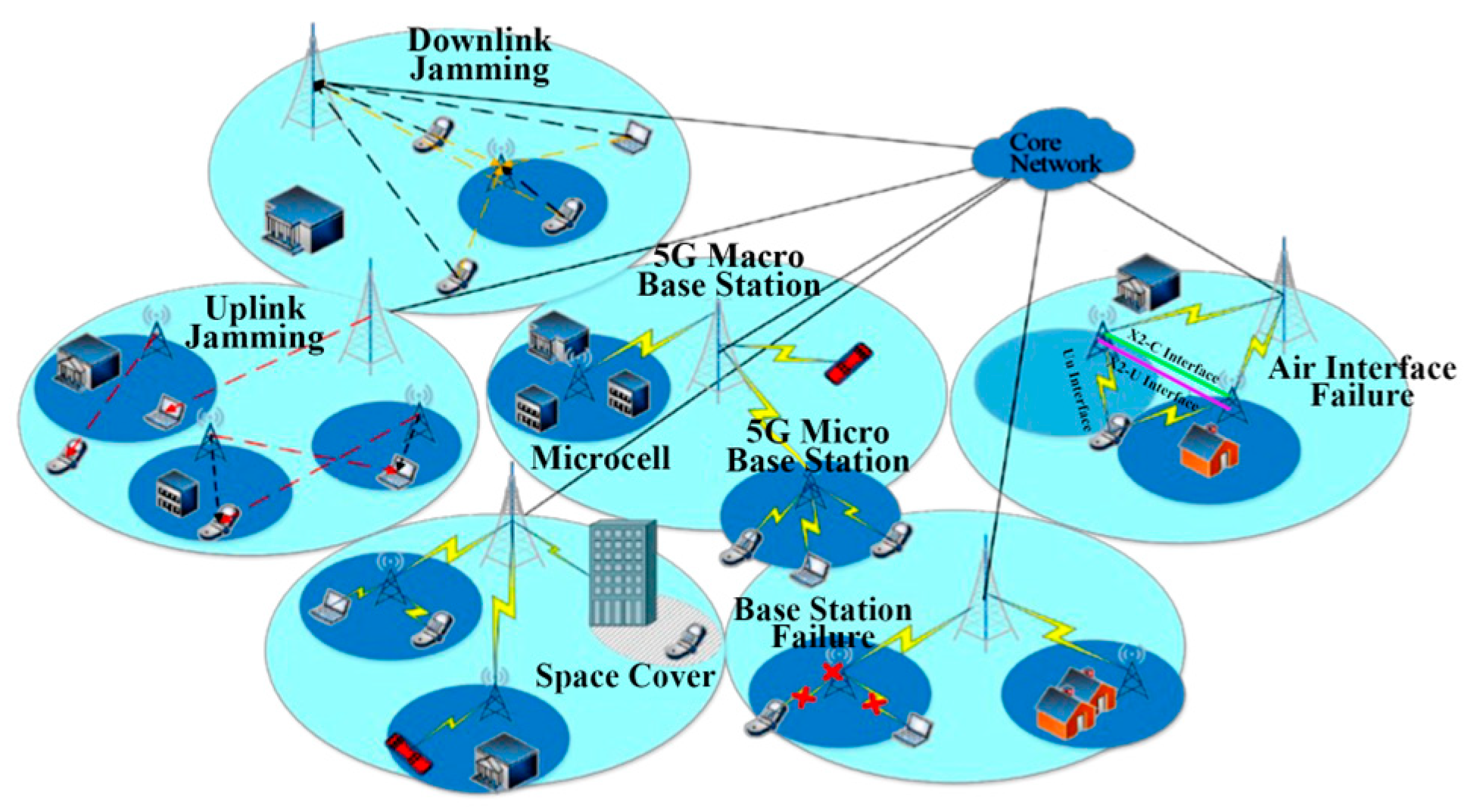

2. System Parameters

3. Feature Parameter Selection

4. Fault Diagnosis Method Based on Graph Convolutional Neural Network

4.1. Generation of Graph Data

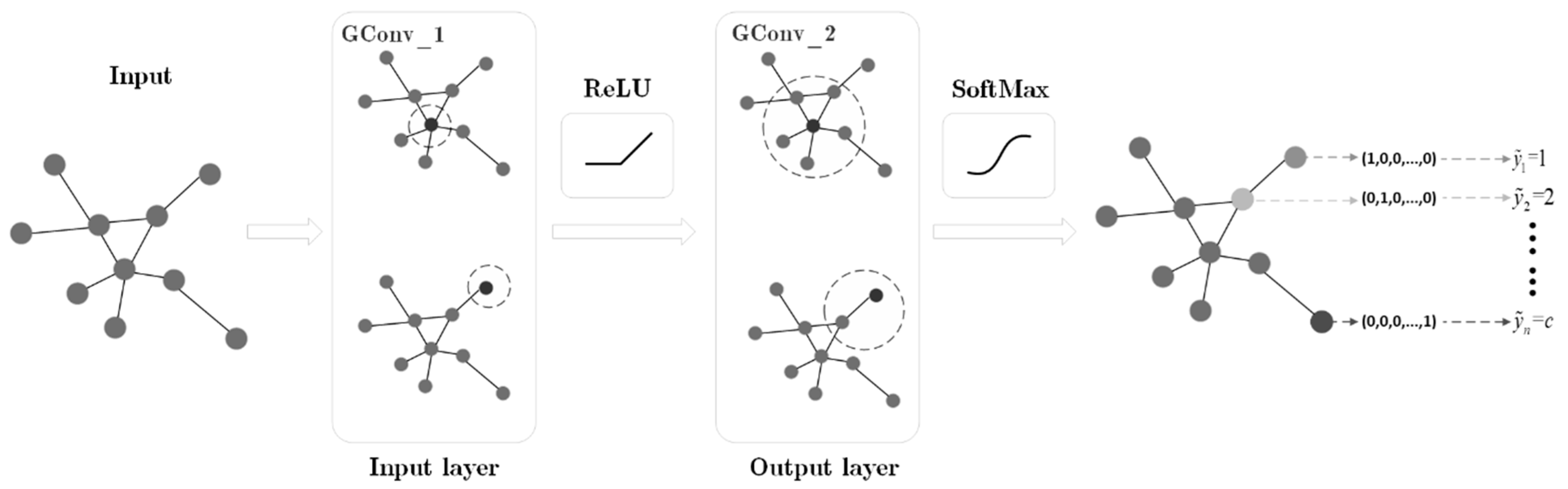

4.2. Graph Convolutional Neural Networks

4.3. Building a Fault Diagnosis Model Based on Graph Convolutional Neural Network

4.4. GCN Fault Diagnosis Process

Algorithm 1. Network Fault Diagnosis Algorithm Based on Graph Convolutional Neural Network

A. Graph data construction

Input: network failure dataset after dimensionality reduction, Gaussian bandwidth parameter, threshold α.

Output: feature matrix X, label matrix Y, and adjacency matrix A.

1. Take the dimensionality-reduced network failure dataset $\{(x_1, y_1), \ldots, (x_l, y_l), (x_{l+1}, 0), \ldots, (x_n, 0)\}$ and stack the feature vectors of the samples into the feature matrix X.
2. Apply one-hot encoding to the c predefined common network faults and fill the label matrix Y of the network fault dataset according to the corresponding codes:
   - for i = 1 to n, for j = 1 to c: if $x_i$ is labeled and $y_i = j$, then $Y_{i,j} = 1$; else $Y_{i,j} = 0$.
3. Construct the adjacency matrix A of the graph:
   - for i = 1 to n, for j = 1 to n: (1) compute the Gaussian-kernel similarity between $x_i$ and $x_j$; (2) if the similarity exceeds the threshold α, then $A_{i,j} = 1$; else $A_{i,j} = 0$.
4. Output the feature matrix X, the label matrix Y, and the adjacency matrix A.

B. Network fault diagnosis based on graph convolutional neural networks

Input: feature matrix X, label matrix Y, and adjacency matrix A.

Output: set Z of network failures.

… CE loss; error backpropagation updates the parameters of the filter matrix in each graph convolutional layer of the GCN. end for
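Part A of Algorithm 1 can be illustrated with a short NumPy sketch. The exact similarity formula and comparison rule are not legible in the algorithm text, so the code assumes the common Gaussian kernel $s_{ij} = \exp(-\lVert x_i - x_j \rVert^2 / (2\sigma^2))$, a strict comparison against the threshold, and no self-loops. The names `build_adjacency`, `sigma`, and `alpha` are hypothetical and chosen only for illustration.

```python
import numpy as np

def build_adjacency(X, sigma, alpha):
    """Threshold a Gaussian-kernel similarity matrix into a 0/1 adjacency matrix.

    X     : (n, d) feature matrix, one row per sample
    sigma : Gaussian bandwidth parameter (assumed kernel form, see below)
    alpha : similarity threshold
    """
    # Squared Euclidean distance between every pair of rows of X.
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (X @ X.T)
    sq_dists = np.maximum(sq_dists, 0.0)  # clip tiny negatives from round-off

    # Assumption: s_ij = exp(-||x_i - x_j||^2 / (2 * sigma^2)).
    S = np.exp(-sq_dists / (2.0 * sigma ** 2))

    # Assumption: samples i and j are connected when s_ij exceeds alpha.
    A = (S > alpha).astype(np.int8)
    np.fill_diagonal(A, 0)  # assumption: no self-loops at this stage
    return A

# Toy data: the first two samples are close together, the third is far away.
X = np.array([[0.0, 0.0], [0.1, 0.0], [3.0, 3.0]])
A = build_adjacency(X, sigma=1.0, alpha=0.8)
```

In this toy case only the first two samples end up connected. Raising `alpha` can only remove edges, which matches the trend of fewer connected edges at larger α values.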

5. Experimental Simulation and Performance Evaluation

5.1. Dataset Description

5.2. Feature Filtering

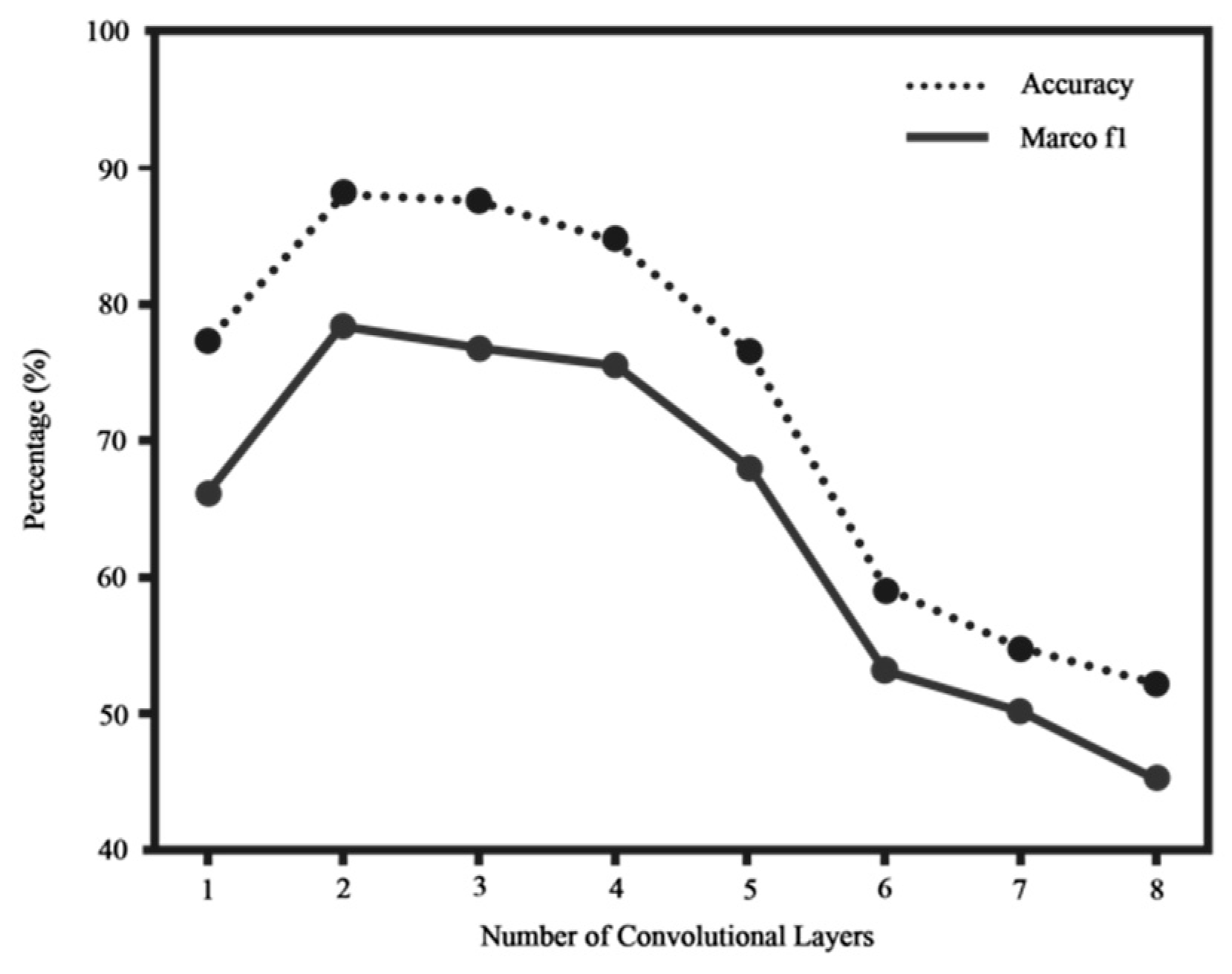

5.3. GCN Parameter Settings

5.4. Comparative Experimental Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| KPI | Short Name |
|---|---|
| Reference Signal Received Power | RSRP |
| Reference Signal Received Quality | RSRQ |
| Packet Drop_Uplink | PD_UL |
| Packet Drop_Downlink | PD_DL |
| Signal-to-Noise Ratio_Uplink | SNR_UL |
| Signal-to-Noise Ratio_Downlink | SNR_DL |
| Radio Resource Control | RRC |
| Evolved Radio Access Bearer | E-RAB |
| Dropped Call Rate | DCR |
| Handover Success Rate | HO |
| Throughput_Uplink | Throughput_UL |
| Throughput_Downlink | Throughput_DL |
| Link Throughput (Out) | LT (out) |
| Link Throughput (In) | LT (in) |
| Handover Delay | HO_d |
| Link Bit Error Rate | LER |

| Category Label | Type of Network Failure | Coding |
|---|---|---|
| 1 | Normal Conditions | 1 0 0 0 0 0 |
| 2 | Uplink Interference | 0 1 0 0 0 0 |
| 3 | Downlink Interference | 0 0 1 0 0 0 |
| 4 | Space Coverage | 0 0 0 1 0 0 |
| 5 | Air Interface Failure | 0 0 0 0 1 0 |
| 6 | Base Station Failure | 0 0 0 0 0 1 |
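The coding column above is a one-hot encoding of the six category labels. A minimal sketch of building the label matrix Y follows, assuming (as in Algorithm 1) that unlabeled samples carry the label 0 and therefore receive an all-zero row. The function name `one_hot_labels` is hypothetical.

```python
import numpy as np

def one_hot_labels(y, num_classes):
    """Return Y where row i is the one-hot code of label y[i].

    Labels run from 1 to num_classes; a label of 0 marks an unlabeled
    sample and yields an all-zero row.
    """
    y = np.asarray(y)
    Y = np.zeros((len(y), num_classes), dtype=np.int8)
    labeled = y > 0
    # Label k maps to column k - 1 because columns are 0-indexed.
    Y[np.nonzero(labeled)[0], y[labeled] - 1] = 1
    return Y

# Normal, category 4, an unlabeled sample, and base station failure.
Y = one_hot_labels([1, 4, 0, 6], num_classes=6)
```

Keeping unlabeled rows at zero lets the same matrix double as a mask: a row sum of 1 identifies the samples that can contribute to a supervised loss.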

| Serial Number | Fault Type | Quantity |
|---|---|---|
| 1 | Normal Conditions | 1420 |
| 2 | Uplink Interference | 175 |
| 3 | Downlink Interference | 175 |
| 4 | Coverage Holes | 496 |
| 5 | Air Interface Failure | 496 |
| 6 | Base Station Failure | 496 |
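As a quick arithmetic check on the quantities above, the snippet below sums the class counts and computes each class's share of the dataset. The dictionary keys are shortened labels used only for this illustration.

```python
# Sample counts per class, copied from the table above.
counts = {
    "normal": 1420,
    "uplink_interference": 175,
    "downlink_interference": 175,
    "coverage": 496,
    "air_interface_failure": 496,
    "base_station_failure": 496,
}

total = sum(counts.values())
shares = {name: count / total for name, count in counts.items()}
largest = max(shares, key=shares.get)
smallest_share = min(shares.values())
```

The total is 3258 samples, which matches the row dimension in the layer table. The normal class accounts for about 43.6% of the samples and each interference class for about 5.4%, so the classes are imbalanced; this is one plausible reason to report a macro-averaged F1 score alongside accuracy.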

| Layers | Layer (Type) | Output Feature Size |
|---|---|---|
| 1 | Input Layer | 3258 × 11 |
| 2 | Dropout Layer 1 (rate = 0.25) | 3258 × 11 |
| 3 | Graph Convolutional Layer 1 | 3258 × 7 |
| 4 | Dropout Layer 2 (rate = 0.25) | 3258 × 7 |
| 5 | Graph Convolutional Layer 2 | 3258 × 6 |
| 6 | SoftMax Layer | 3258 × 6 |
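The layer sizes above (11 input features, 7 hidden units, 6 output classes) can be traced with a small NumPy forward pass. This is a sketch under stated assumptions rather than the authors' implementation: it assumes the widely used symmetric normalization $D^{-1/2}(A + I)D^{-1/2}$, a ReLU after the first graph convolution, and a cross-entropy loss evaluated on labeled nodes only. Dropout is omitted because it is inactive at inference time. All function names are hypothetical, and a graph of 8 nodes stands in for the 3258 samples.

```python
import numpy as np

def normalize_adjacency(A):
    """Assumed normalization: D^{-1/2} (A + I) D^{-1/2}."""
    A_hat = A + np.eye(A.shape[0])          # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def softmax(Z):
    Z = Z - Z.max(axis=1, keepdims=True)    # subtract row max for stability
    E = np.exp(Z)
    return E / E.sum(axis=1, keepdims=True)

def gcn_forward(A_norm, X, W1, W2):
    """Two graph convolutions: n x 11 -> n x 7 -> n x 6 class probabilities."""
    H = np.maximum(A_norm @ X @ W1, 0.0)    # layer 1, ReLU assumed
    return softmax(A_norm @ H @ W2)         # layer 2 followed by softmax

def masked_cross_entropy(P, Y):
    """Mean cross-entropy over labeled rows (rows of Y that are not all zero)."""
    labeled = Y.sum(axis=1) > 0
    return -np.mean(np.sum(Y[labeled] * np.log(P[labeled] + 1e-12), axis=1))

rng = np.random.default_rng(0)
n = 8                                        # small stand-in for 3258 nodes
A = (rng.random((n, n)) > 0.6).astype(float)
A = np.maximum(A, A.T)                       # make the graph undirected
np.fill_diagonal(A, 0.0)
X = rng.normal(size=(n, 11))
W1 = rng.normal(scale=0.1, size=(11, 7))     # random, untrained weights
W2 = rng.normal(scale=0.1, size=(7, 6))

P = gcn_forward(normalize_adjacency(A), X, W1, W2)
Y = np.zeros((n, 6))
Y[0, 0] = 1                                  # node 0 labeled as class 1
Y[1, 3] = 1                                  # node 1 labeled as class 4
loss = masked_cross_entropy(P, Y)
```

Because every node has a self-loop after normalization, no degree is zero and the division is safe. In training, the gradient of `loss` with respect to `W1` and `W2` would drive the backpropagation update mentioned in Algorithm 1.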

| α Value | Number of Connected Edges in the Graph | Accuracy | Macro F1 |
|---|---|---|---|
| 0.95 | 4495 | 73.09% | 66.12% |
| 0.90 | 64,203 | 84.46% | 68.32% |
| 0.85 | 223,448 | 87.84% | 76.77% |
| 0.80 | 403,692 | 88.06% | 78.80% |
| 0.75 | 556,621 | 86.26% | 75.49% |
| 0.70 | 793,262 | 78.72% | 68.09% |
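The two metrics in the table can be computed as in the sketch below, assuming the standard definitions: accuracy is the fraction of correct predictions, and macro F1 is the unweighted mean of the per-class F1 scores. The function names and the toy labels are illustrative only and are not taken from the experiments.

```python
import numpy as np

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the true class."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))

def macro_f1(y_true, y_pred, num_classes):
    """Unweighted mean of per-class F1 scores (classes indexed from 0)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    f1_scores = []
    for c in range(num_classes):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        f1_scores.append(2 * tp / denom if denom > 0 else 0.0)
    return float(np.mean(f1_scores))

# Toy example with three classes and one dominant class.
y_true = [0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 0, 1, 0, 2, 1]
acc = accuracy(y_true, y_pred)
mf1 = macro_f1(y_true, y_pred, num_classes=3)
```

In this toy case the accuracy is 0.75 while the macro F1 is lower, because the two smaller classes are predicted less reliably than the dominant one. The same effect would explain why the macro F1 column sits below the accuracy column throughout the table.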


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Amuah, E.A.; Wu, M.; Zhu, X. Cellular Network Fault Diagnosis Method Based on a Graph Convolutional Neural Network. Sensors 2023, 23, 7042. https://doi.org/10.3390/s23167042
