Railway Track Fault Detection Using Selective MFCC Features from Acoustic Data

Abstract

1. Introduction

2. Related Work

3. Proposed Methodology

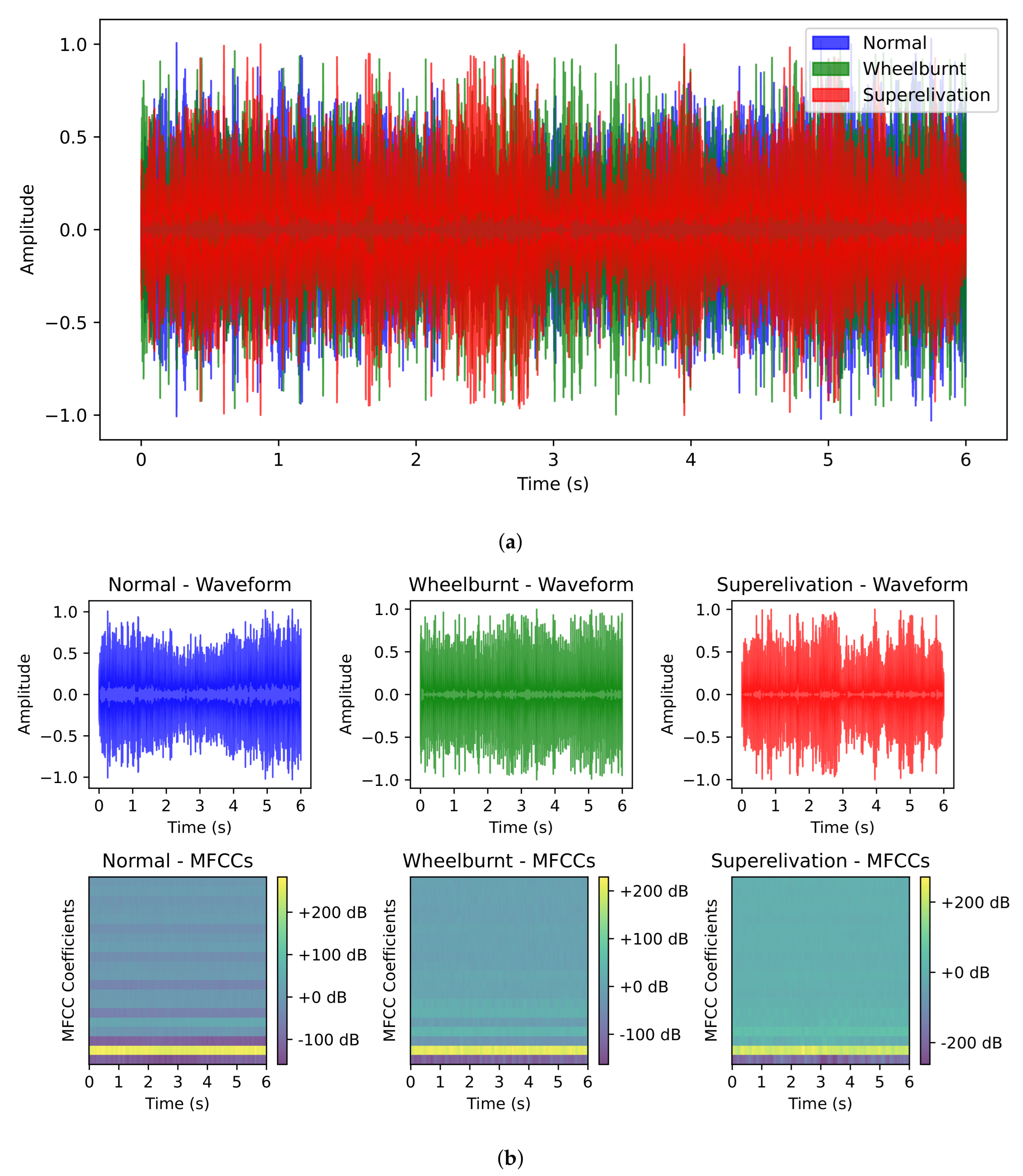

3.1. Dataset

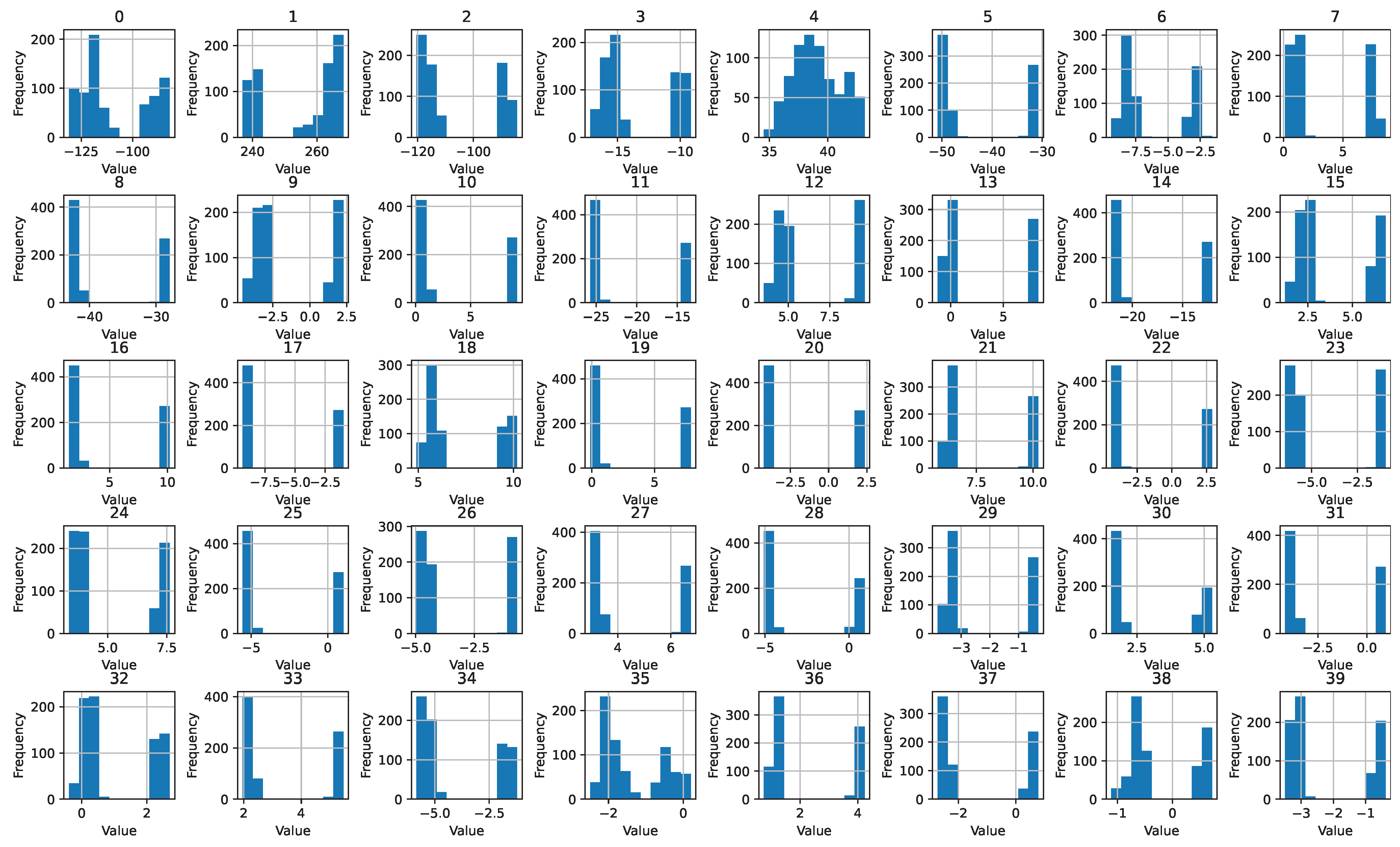

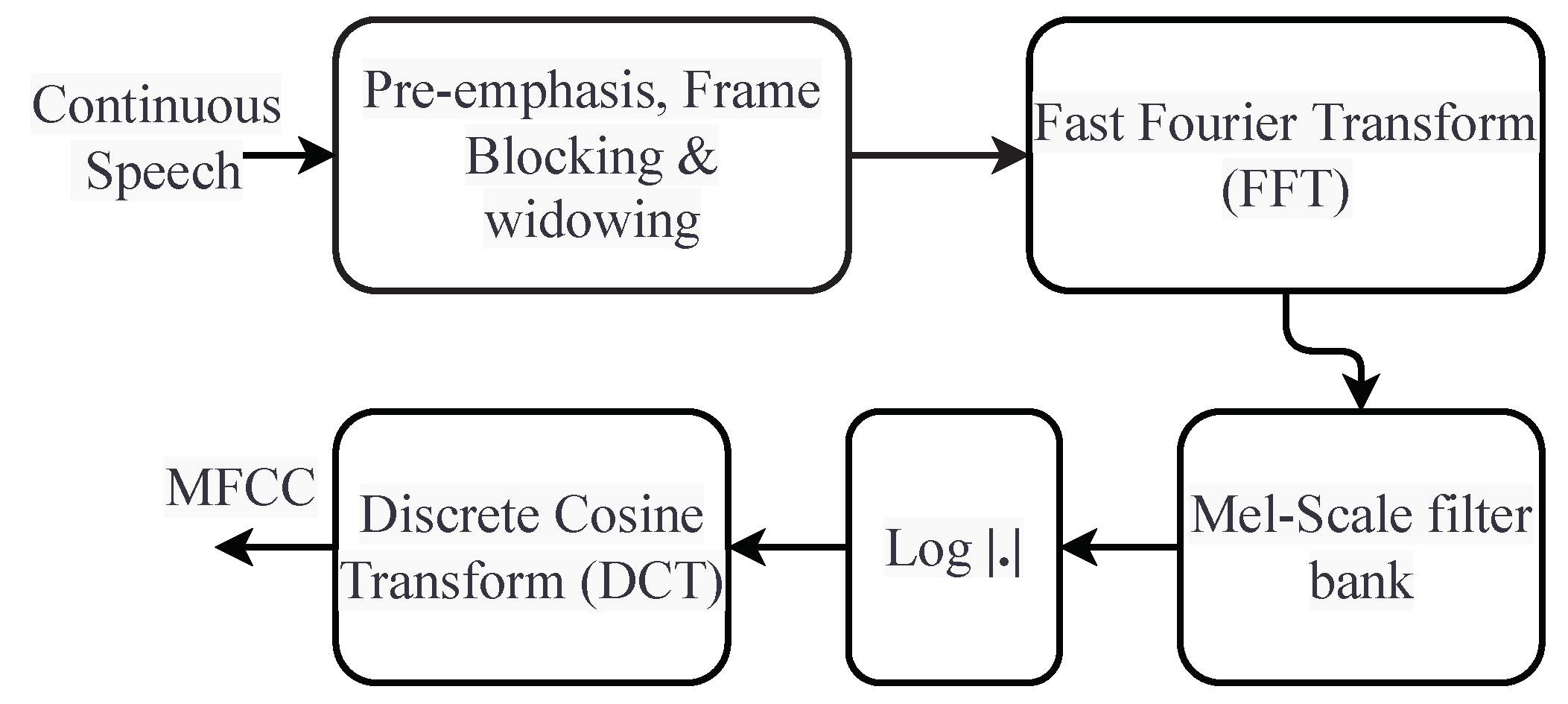

3.2. MFCC (Mel Frequency Cepstral Coefficients)

- Divide the signal into short frames.

- For each frame, calculate the periodogram estimate of the power spectrum.

- Apply the mel filter bank to the power spectrum of each frame.

- Sum the energy in each filter to obtain the filter bank energies.

- Take the DCT of the log filter bank energies.

- Keep the first 40 DCT coefficients and discard the rest.
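The steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the pipeline used in this study: the frame length, hop size, FFT size, Hamming window, number of mel filters, and the function names (`mel_filter_bank`, `dct_ii`, `mfcc`) are all assumptions chosen for the example; only the final count of 40 coefficients comes from the list above.

```python
import numpy as np


def hz_to_mel(freq_hz):
    return 2595.0 * np.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filter_bank(n_filters, n_fft, sample_rate):
    """Triangular filters spaced evenly on the mel scale (assumed design)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    bank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, centre, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, centre):      # rising slope
            bank[i - 1, k] = (k - left) / (centre - left)
        for k in range(centre, right):     # falling slope
            bank[i - 1, k] = (right - k) / (right - centre)
    return bank


def dct_ii(x, n_coeffs):
    """Unnormalised DCT-II along the last axis, keeping the first n_coeffs terms."""
    n = x.shape[-1]
    k = np.arange(n_coeffs)[:, None]
    m = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * m + 1) / (2 * n))
    return x @ basis.T


def mfcc(signal, sample_rate, frame_len=400, hop=160, n_fft=512,
         n_filters=40, n_coeffs=40):
    # 1. Divide the signal into short, overlapping frames.
    n_frames = 1 + (len(signal) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)
    # 2. Periodogram estimate of the power spectrum of each frame.
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    # 3-4. Apply the mel filter bank and sum the energy in each filter.
    energies = power @ mel_filter_bank(n_filters, n_fft, sample_rate).T
    # 5. Log of the filter bank energies (floored to avoid log(0)), then DCT.
    log_energies = np.log(np.maximum(energies, 1e-10))
    # 6. Keep only the first n_coeffs DCT coefficients.
    return dct_ii(log_energies, n_coeffs)


# Example: one second of a synthetic 440 Hz tone sampled at 16 kHz.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440.0 * t)
features = mfcc(tone, sample_rate)
print(features.shape)  # (number of frames, 40)
```

The result has one row of 40 coefficients per frame. Averaging over frames would give a single fixed-length vector per recording, although whether this study aggregates frames that way is not stated here.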

3.3. Chi Square

3.4. Machine Learning Models

3.4.1. Logistic Regression

3.4.2. Random Forest

3.4.3. K-Nearest Neighbor

3.4.4. Support Vector Machine

3.4.5. Adaboost Classifier

3.4.6. Extra Tree Classifier

3.4.7. Gradient Boosting Classifier

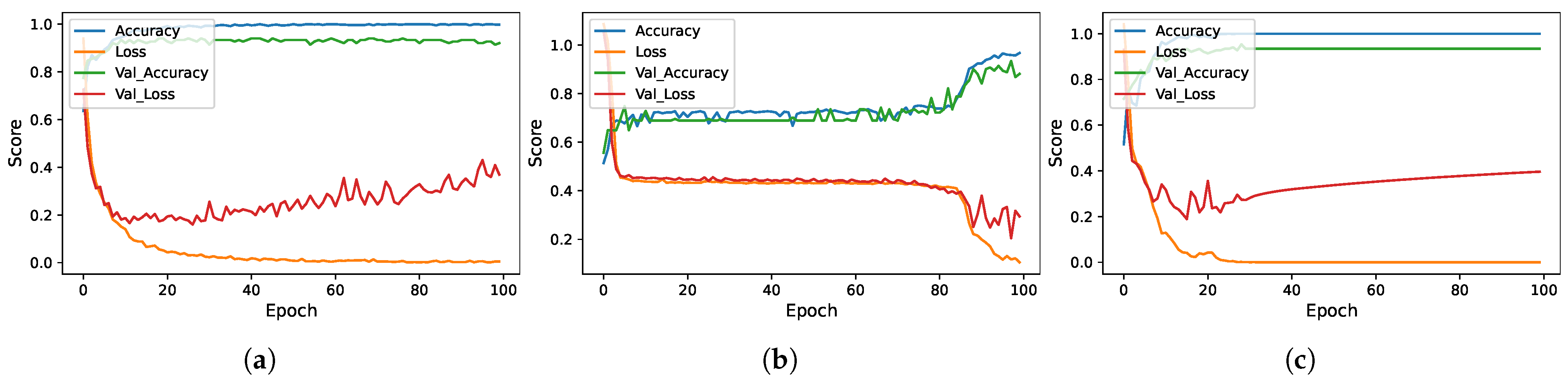

3.5. Deep Learning Models

3.5.1. Convolutional Neural Network

3.5.2. Long Short-Term Memory

3.5.3. CNN-LSTM Ensemble

4. Results and Discussion

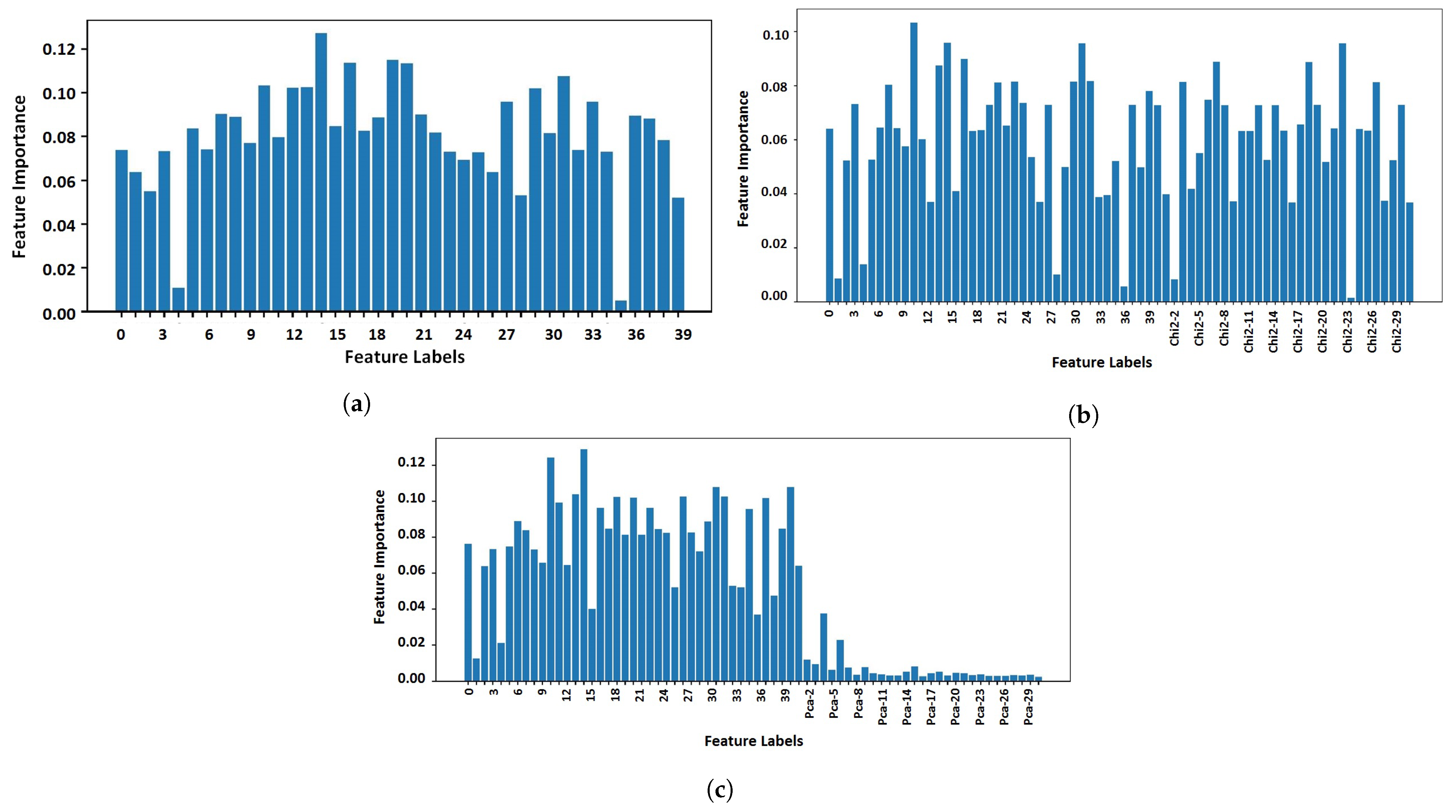

4.1. Experiments Using Original Features

4.2. Impact of Number of Features

4.3. Analysis of Feature Space

4.4. Computational Complexity of Models

4.5. Comparison with Previous Studies

4.6. Statistical t-Test Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cannon, D.; Edel, K.O.; Grassie, S.; Sawley, K. Rail defects: An overview. Fatigue Fract. Eng. Mater. Struct. 2003, 26, 865–886.

- Beeck, F. Track Circuit Monitoring Tool: Standardization and Deployment at CTA; Technical Report; The National Academies of Sciences: Washington, DC, USA, 2017.

- Rail Defects Handbook. Available online: https://extranet.artc.com.au/docs/eng/track-civil/guidelines/rail/RC2400.pdf (accessed on 5 May 2022).

- Ji, A.; Woo, W.L.; Wong, E.W.L.; Quek, Y.T. Rail track condition monitoring: A review on deep learning approaches. Intell. Robot. 2021, 1, 151–175.

- British Broadcasting Corporation. Pakistan Train Fire: Are Accidents at a Record High? Available online: https://www.bbc.com/news/world-asia-50252409 (accessed on 5 May 2022).

- Statista. Number of Rail Accidents and Incidents in the United States from 2013 to 2020. Available online: https://www.statista.com/statistics/204569/rail-accidents-in-the-us/ (accessed on 5 May 2022).

- Mustafa, A.; Rasheed, O.; Rehman, S.; Ullah, F.; Ahmed, S. Sensor Based Smart Railway Accident Detection and Prevention System for Smart Cities Using Real Time Mobile Communication. Wirel. Pers. Commun. 2023, 128, 1133–1152.

- Pakistan Railways Achieves Record Income in 2018–2019. Available online: https://www.railjournal.com/news/pakistan-railways-achieves-record-income-in-2018-19/ (accessed on 5 May 2022).

- Auditor General of Pakistan. Audit Report on the Accounts of Pakistan Railways Audit Year 2019–2020; Ministry of Railways Govt of Pakistan: Lahore, Pakistan, 2020. Available online: https://agp.gov.pk/SiteImage/Policy/Audit%20Report%202019-20%20Railways..pdf (accessed on 5 May 2022).

- Outrage over train crash that killed 20 in Pakistan. Available online: https://gulfnews.com/world/asia/pakistan/outrage-over-train-crash-that-killed-20-in-pakistan-1.70052037 (accessed on 5 May 2022).

- Asber, J. A Machine Learning-Based Approach for Fault Detection of Railway Track and Its Components. Master’s Thesis, Luleå University of Technology, Operation, Maintenance and Acoustics, Luleå, Sweden, 2020.

- Zhuang, L.; Wang, L.; Zhang, Z.; Tsui, K.L. Automated vision inspection of rail surface cracks: A double-layer data-driven framework. Transp. Res. Part C Emerg. Technol. 2018, 92, 258–277.

- Kuutti, S.; Bowden, R.; Jin, Y.; Barber, P.; Fallah, S. A survey of deep learning applications to autonomous vehicle control. IEEE Trans. Intell. Transp. Syst. 2020, 22, 712–733.

- Kaewunruen, S.; Osman, M.H. Dealing with disruptions in railway track inspection using risk-based machine learning. Sci. Rep. 2023, 13, 2141.

- James, A.; Jie, W.; Xulei, Y.; Chenghao, Y.; Ngan, N.B.; Yuxin, L.; Yi, S.; Chandrasekhar, V.; Zeng, Z. Tracknet-a deep learning-based fault detection for railway track inspection. In Proceedings of the 2018 International Conference on Intelligent Rail Transportation (ICIRT), Singapore, 12–14 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–5.

- Wei, X.; Wei, D.; Suo, D.; Jia, L.; Li, Y. Multi-target defect identification for railway track line based on image processing and improved YOLOv3 model. IEEE Access 2020, 8, 61973–61988.

- Shafique, R.; Siddiqui, H.U.R.; Rustam, F.; Ullah, S.; Siddique, M.A.; Lee, E.; Ashraf, I.; Dudley, S. A novel approach to railway track faults detection using acoustic analysis. Sensors 2021, 21, 6221.

- Mendieta, M.; Neff, C.; Lingerfelt, D.; Beam, C.; George, A.; Rogers, S.; Ravindran, A.; Tabkhi, H. A Novel Application/Infrastructure Co-design Approach for Real-time Edge Video Analytics. In Proceedings of the 2019 SoutheastCon, Huntsville, AL, USA, 11–14 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–7.

- George, A.; Ravindran, A. Distributed middleware for edge vision systems. In Proceedings of the 2019 IEEE 16th International Conference on Smart Cities: Improving Quality of Life Using ICT & IoT and AI (HONET-ICT), Charlotte, NC, USA, 6–9 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 193–194.

- Rustam, F.; Ashraf, I.; Mehmood, A.; Ullah, S.; Choi, G.S. Tweets classification on the base of sentiments for US airline companies. Entropy 2019, 21, 1078.

- Rustam, F.; Reshi, A.A.; Aljedaani, W.; Alhossan, A.; Ishaq, A.; Shafi, S.; Lee, E.; Alrabiah, Z.; Alsuwailem, H.; Ahmad, A.; et al. Vector mosquito image classification using novel RIFS feature selection and machine learning models for disease epidemiology. Saudi J. Biol. Sci. 2022, 29, 583–594.

- George, A.; Ravindran, A.; Mendieta, M.; Tabkhi, H. Mez: An adaptive messaging system for latency-sensitive multi-camera machine vision at the iot edge. IEEE Access 2021, 9, 21457–21473.

- Bhushan, M.; Sujay, S.; Tushar, B.; Chitra, P. Automated vehicle for railway track fault detection. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2017; Volume 263, p. 052045.

- Hashmi, M.S.A.; Ibrahim, M.; Bajwa, I.S.; Siddiqui, H.U.R.; Rustam, F.; Lee, E.; Ashraf, I. Railway track inspection using deep learning based on audio to spectrogram conversion: An on-the-fly approach. Sensors 2022, 22, 1983.

- Ritika, S.; Rao, D. Data Augmentation of Railway Images for Track Inspection. arXiv 2018, arXiv:1802.01286.

- Faghih-Roohi, S.; Hajizadeh, S.; Núñez, A.; Babuska, R.; De Schutter, B. Deep convolutional neural networks for detection of rail surface defects. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 2584–2589.

- Manikandan, R.; Balasubramanian, M.; Palanivel, S. An efficient framework to detect cracks in rail tracks using neural network classifier. Am.-Eurasian J. Sci. Res. 2017, 12, 218–222.

- Santur, Y.; Karaköse, M.; Akin, E. Random forest-based diagnosis approach for rail fault inspection in railways. In Proceedings of the 2016 National Conference on Electrical, Electronics and Biomedical Engineering (ELECO), Bursa, Turkey, 1–3 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 745–750.

- Tastimur, C.; Yetis, H.; Karaköse, M.; Akin, E. Rail defect detection and classification with real time image processing technique. Int. J. Comput. Sci. Softw. Eng. 2016, 5, 283.

- Chen, Z.; Wang, Q.; Yang, K.; Yu, T.; Yao, J.; Liu, Y.; Wang, P.; He, Q. Deep learning for the detection and recognition of rail defects in ultrasound B-scan images. Transp. Res. Rec. 2021, 2675, 888–901.

- Li, H.; Wang, F.; Liu, J.; Song, H.; Hou, Z.; Dai, P. Ensemble model for rail surface defects detection. PLoS ONE 2022, 17, e0268518.

- Nandhini, S.; Mohammed Saif, K.V.P.S.E. Robust Automatic Railway Track Crack Detection Using Unsupervised Multi-Scale CNN. Available online: https://ijarsct.co.in/Paper2423.pdf (accessed on 5 May 2022).

- Chauhan, P.M.; Desai, N.P. Mel frequency cepstral coefficients (MFCC) based speaker identification in noisy environment using wiener filter. In Proceedings of the 2014 International Conference on Green Computing Communication and Electrical Engineering (ICGCCEE), Coimbatore, India, 6–8 March 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–5.

- Alim, S.A.; Rashid, N.K.A. Some Commonly Used Speech Feature Extraction Algorithms; IntechOpen: London, UK, 2018.

- Tiwari, V. MFCC and its applications in speaker recognition. Int. J. Emerg. Technol. 2010, 1, 19–22.

- Zhai, Y.; Song, W.; Liu, X.; Liu, L.; Zhao, X. A chi-square statistics based feature selection method in text classification. In Proceedings of the 2018 IEEE 9th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 23–25 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 160–163.

- ML|Extra Tree Classifier for Feature Selection. Available online: https://www.geeksforgeeks.org/ml-extra-tree-classifier-for-feature-selection/ (accessed on 5 November 2022).

- Al Omari, M.; Al-Hajj, M.; Hammami, N.; Sabra, A. Sentiment classifier: Logistic regression for arabic services’ reviews in lebanon. In Proceedings of the 2019 International Conference on Computer and Information Sciences (ICCIS), Sakaka, Saudi Arabia, 3–4 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–5.

- Gasso, G. Logistic Regression. 2019. Available online: https://moodle.insa-rouen.fr/pluginfile.php/7984/mod_resource/content/6/Parties_1_et_3_DM/RegLog_Eng.pdf (accessed on 5 November 2022).

- Ranjan, S.; Sood, S. Social network investor sentiments for predicting stock price trends. Int. J. Sci. Res. Rev. 2019, 7, 90–97.

- Kaiser, K.R.; Kaiser, D.M.; Kaiser, R.M.; Rackham, A.M. Using social media to understand and guide the treatment of racist ideology. Glob. J. Guid. Couns. Sch. Curr. Perspect. 2018, 8, 38–49.

- Peterson, L.E. K-nearest neighbor. Scholarpedia 2009, 4, 1883.

- Rupapara, V.; Rustam, F.; Shahzad, H.F.; Mehmood, A.; Ashraf, I.; Choi, G.S. Impact of SMOTE on imbalanced text features for toxic comments classification using RVVC model. IEEE Access 2021, 9, 78621–78634.

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42.

- Rustam, F.; Mehmood, A.; Ullah, S.; Ahmad, M.; Khan, D.M.; Choi, G.S.; On, B.W. Predicting pulsar stars using a random tree boosting voting classifier (RTB-VC). Astron. Comput. 2020, 32, 100404.

- Hadji, I.; Wildes, R.P. What do we understand about convolutional networks? arXiv 2018, arXiv:1803.08834.

- Graves, A. Generating sequences with recurrent neural networks. arXiv 2013, arXiv:1308.0850.

- Ishaq, A.; Umer, M.; Mushtaq, M.F.; Medaglia, C.; Siddiqui, H.U.R.; Mehmood, A.; Choi, G.S. Extensive hotel reviews classification using long short term memory. J. Ambient Intell. Humaniz. Comput. 2021, 12, 9375–9385.

| Ref. | Results | Models | Dataset | Limitation |

|---|---|---|---|---|

| [17] | 97% accuracy RF and DT | SVM, LR, RF, DT, MLP, CNN | Self-made | Simple state-of-the-art approach |

| [25] | 97.5% precision SunKink | SunKink, inception3 | Self-made | High computational cost |

| [23] | 94.1% accuracy | Sensors and GSM module | Self-made | High computational cost and poor performance in terms of accuracy. |

| [24] | 99.7% accuracy LSTM | CONV1D, CONV2D, RNN and LSTM | Shafique et al. [17] | High computational cost because of deep learning approach and spectral features |

| [26] | 92% accuracy DCNN-Large | DCNN-small, DCNN-medium, DCNN-large | Self-made | High computational cost and poor performance |

| [27] | 94.9% Accuracy FFBP | SVM with PCA, Radial NN, FFBP | Self-made | Poor performance in terms of accuracy |

| [28] | 98% accuracy RF with HM features | RF with PCA, RF with KPCA, RF with SVD, RF with HM | Self-made | High computational cost because of vision-based approach |

| [32] | 89% accuracy Multi-scale CNN | Bayes weighted vector, SVM (LDA, PCA), CNN | Kaggle | High computational cost because of deep learning approach |

| [29] | 94.73% accuracy for defect detection, 87% for defect classification | AdaBoost | Self-made | High computational cost because of image processing approach |

| [30] | 87.41% precision | YOLOV3, improved YOLOV3 | Self-made | Poor performance despite using a complex model |

| [31] | 0.75 mAP, WBDA | MBDA, YOLOV5S, YOLOV5S6, YOLOV5m, Faster RCNN R50, Faster RCNN R101 | National Academy of Railway Sciences test centre dataset | Poor performance, as the model achieved a score of only approximately 0.75 |

| 1 | 2 | 3 | … | 40 | Label |

|---|---|---|---|---|---|

| −1.4621756 | 1.3114967 | −2.4462814 | … | −3.2169747 | 1 |

| −0.51381445 | 4.131112 | 0.76316893 | … | −0.70693094 | 2 |

| −2.1898634 | 1.3600227 | −2.3395789 | … | −3.2751813 | 3 |

| Model | Hyperparameters | Hyperparameters Values Range |

|---|---|---|

| LR | solver = ‘saga’, multi_class = ‘multinomial’, C = 3.0 | solver = {liblinear, sag, saga}, multi_class = ‘multinomial’, C = {1.0 to 10.0} |

| SVM | kernel = ‘linear’, C = 3.0 | kernel = {linear, sigmoid, poly}, C = {1.0 to 10.0} |

| RF | n_estimators = 200, max_depth = 200, random_state = 2 | n_estimators = {10 to 500}, max_depth = {10 to 500}, random_state = {0 to 100} |

| GBM | n_estimators = 200, max_depth = 200, learning_rate = 0.2 | n_estimators = {10 to 500}, max_depth = {10 to 500}, learning_rate = {0.1 to 0.9} |

| ADA | n_estimators = 200, max_depth = 200, learning_rate = 0.2 | n_estimators = {10 to 500}, max_depth = {10 to 500}, learning_rate = {0.1 to 0.9} |

| ETC | n_estimators = 200, max_depth = 200, random_state = 2 | n_estimators = {10 to 500}, max_depth = {10 to 500}, random_state = {0 to 100} |

| KNN | n_neighbors = 3 | n_neighbors = {1 to 5} |
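As a rough illustration, the selected hyperparameter values in the table above could be expressed with scikit-learn as follows. The choice of scikit-learn, the `models` dictionary, and the variable names are assumptions; the library used in this study is not named in this section. Two adaptations are also assumptions: the multinomial setting for LR is left to the library default (which already uses a multinomial loss for multiclass problems with the `saga` solver), and the AdaBoost depth is applied to a decision tree base estimator because `AdaBoostClassifier` has no depth parameter of its own.

```python
from sklearn.ensemble import (
    AdaBoostClassifier,
    ExtraTreesClassifier,
    GradientBoostingClassifier,
    RandomForestClassifier,
)
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Selected values taken from the hyperparameter table; everything else is
# left at the scikit-learn defaults (an assumption).
models = {
    "LR": LogisticRegression(solver="saga", C=3.0),
    "SVM": SVC(kernel="linear", C=3.0),
    "RF": RandomForestClassifier(n_estimators=200, max_depth=200, random_state=2),
    "GBM": GradientBoostingClassifier(n_estimators=200, max_depth=200, learning_rate=0.2),
    # The base estimator is passed positionally so the call does not depend on
    # the keyword name, which changed between scikit-learn releases.
    "ADA": AdaBoostClassifier(
        DecisionTreeClassifier(max_depth=200),
        n_estimators=200,
        learning_rate=0.2,
    ),
    "ETC": ExtraTreesClassifier(n_estimators=200, max_depth=200, random_state=2),
    "KNN": KNeighborsClassifier(n_neighbors=3),
}

for name, model in models.items():
    print(name, type(model).__name__)
```

Each entry can then be trained with `model.fit(X_train, y_train)` on the MFCC feature matrix and evaluated with `model.score(X_test, y_test)`, where `X_train`, `y_train`, `X_test`, and `y_test` are hypothetical arrays.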

| CNN | LSTM | CNN-LSTM |

|---|---|---|

| Embedding(1000, 100), Dropout(0.5), Conv1D(64, 3, activation = ‘relu’), MaxPooling1D(pool_size = 3), Flatten(), Dense(16), Dense(3, activation = ‘softmax’) | Embedding(1000, 100), Dropout(0.5), LSTM(64), Dense(32), Dense(3, activation = ‘softmax’) | Embedding(1000, 100), Dropout(0.5), Conv1D(64, 3, activation = ‘relu’), MaxPooling1D(pool_size = 3), LSTM(32), Dense(16), Dense(3, activation = ‘softmax’) |

| All models: loss = ‘categorical_crossentropy’, optimizer = ‘adam’, epochs = 100 | | |

| Model | Accuracy | Precision | Recall | F1 Score |

|---|---|---|---|---|

| RF | 0.99 | 0.99 | 0.99 | 0.99 |

| GBM | 0.95 | 0.95 | 0.95 | 0.95 |

| ADA | 0.99 | 0.99 | 0.99 | 0.99 |

| LR | 0.89 | 0.89 | 0.89 | 0.89 |

| SVM | 0.95 | 0.95 | 0.95 | 0.95 |

| ETC | 0.99 | 0.99 | 0.99 | 0.99 |

| KNN | 0.99 | 0.99 | 0.99 | 0.99 |

| LSTM | 0.88 | 0.88 | 0.88 | 0.88 |

| CNN | 0.92 | 0.92 | 0.92 | 0.92 |

| CNN-LSTM | 0.93 | 0.93 | 0.93 | 0.93 |

| Model | Accuracy | SD |

|---|---|---|

| RF | 0.99 | ±0.01 |

| GBM | 0.98 | ±0.02 |

| ADA | 0.99 | ±0.01 |

| LR | 0.87 | ±0.07 |

| SVM | 0.94 | ±0.04 |

| ETC | 0.99 | ±0.01 |

| KNN | 0.96 | ±0.04 |

| LSTM | 0.74 | ±0.02 |

| CNN | 0.92 | ±0.01 |

| CNN-LSTM | 0.91 | ±0.01 |

| Model | Accuracy | Precision | Recall | F1 Score |

|---|---|---|---|---|

| RF | 1.00 | 1.00 | 1.00 | 1.00 |

| GBM | 1.00 | 1.00 | 1.00 | 1.00 |

| ADA | 0.99 | 0.99 | 0.99 | 0.99 |

| LR | 0.85 | 0.85 | 0.85 | 0.85 |

| SVM | 0.97 | 0.96 | 0.96 | 0.96 |

| ETC | 1.00 | 1.00 | 1.00 | 1.00 |

| KNN | 1.00 | 1.00 | 1.00 | 1.00 |

| LSTM | 0.88 | 0.87 | 0.87 | 0.87 |

| CNN | 0.94 | 0.94 | 0.94 | 0.94 |

| CNN-LSTM | 0.94 | 0.94 | 0.94 | 0.94 |

| Model | Accuracy | SD |

|---|---|---|

| RF | 0.99 | ±0.01 |

| GBM | 0.98 | ±0.02 |

| ADA | 0.99 | ±0.01 |

| LR | 0.86 | ±0.07 |

| SVM | 0.95 | ±0.03 |

| ETC | 0.99 | ±0.01 |

| KNN | 0.95 | ±0.04 |

| LSTM | 0.87 | ±0.02 |

| CNN | 0.94 | ±0.01 |

| CNN-LSTM | 0.93 | ±0.01 |

| Model | Accuracy | Precision | Recall | F1 Score |

|---|---|---|---|---|

| RF | 1.00 | 1.00 | 1.00 | 1.00 |

| GBM | 0.99 | 0.99 | 0.99 | 0.99 |

| ADA | 1.00 | 1.00 | 1.00 | 1.00 |

| LR | 0.89 | 0.89 | 0.89 | 0.89 |

| SVM | 0.97 | 0.97 | 0.97 | 0.97 |

| ETC | 1.00 | 1.00 | 1.00 | 1.00 |

| KNN | 1.00 | 1.00 | 1.00 | 1.00 |

| LSTM | 0.88 | 0.87 | 0.87 | 0.87 |

| CNN | 0.92 | 0.92 | 0.92 | 0.92 |

| CNN-LSTM | 0.96 | 0.95 | 0.95 | 0.95 |

| Model | Accuracy | SD |

|---|---|---|

| RF | 0.99 | ±0.01 |

| GBM | 0.98 | ±0.02 |

| ADA | 0.99 | ±0.01 |

| LR | 0.86 | ±0.06 |

| SVM | 0.91 | ±0.03 |

| ETC | 1.00 | ±0.01 |

| KNN | 0.95 | ±0.04 |

| LSTM | 0.87 | ±0.02 |

| CNN | 0.93 | ±0.01 |

| CNN-LSTM | 0.95 | ±0.01 |

| Model | Accuracy | Precision | Recall | F1 Score |

|---|---|---|---|---|

| RF | 0.99 | 0.99 | 0.99 | 0.99 |

| GBM | 0.97 | 0.97 | 0.97 | 0.97 |

| ADA | 1.00 | 1.00 | 1.00 | 1.00 |

| LR | 0.88 | 0.88 | 0.88 | 0.88 |

| SVM | 0.92 | 0.92 | 0.92 | 0.92 |

| ETC | 1.00 | 1.00 | 1.00 | 1.00 |

| KNN | 1.00 | 1.00 | 1.00 | 1.00 |

| LSTM | 0.87 | 0.87 | 0.87 | 0.87 |

| CNN | 0.91 | 0.91 | 0.91 | 0.91 |

| CNN-LSTM | 0.93 | 0.93 | 0.93 | 0.93 |

| Model | Accuracy | SD |

|---|---|---|

| RF | 0.99 | ±0.01 |

| GBM | 0.97 | ±0.01 |

| ADA | 0.99 | ±0.01 |

| LR | 0.87 | ±0.03 |

| SVM | 0.90 | ±0.02 |

| ETC | 1.00 | ±0.01 |

| KNN | 0.99 | ±0.01 |

| LSTM | 0.87 | ±0.02 |

| CNN | 0.91 | ±0.01 |

| CNN-LSTM | 0.92 | ±0.01 |

| Model | 40 Features | 50 Features | 60 Features | 70 Features |

|---|---|---|---|---|

| RF | 1.04 | 1.47 | 2.01 | 2.11 |

| GBM | 2.82 | 3.47 | 3.59 | 4.01 |

| ADA | 2.03 | 2.39 | 2.51 | 2.48 |

| LR | 0.18 | 0.22 | 0.49 | 0.48 |

| SVM | 0.31 | 0.34 | 1.11 | 1.17 |

| ETC | 0.61 | 0.59 | 1.36 | 1.41 |

| KNN | 0.08 | 0.09 | 0.17 | 0.19 |

| LSTM | 121.66 | 116.91 | 145.22 | 148.21 |

| CNN | 90.07 | 123.70 | 127.02 | 111.87 |

| CNN-LSTM | 102.81 | 158.38 | 211.47 | 215.01 |

| Reference | Model | Accuracy | Precision | Recall | F1 Score |

|---|---|---|---|---|---|

| [17] | RF | 0.97 | 0.97 | 0.97 | 0.97 |

| [24] | LSTM | 0.997 | 0.995 | 0.995 | 0.995 |

| This Study | ETC | 1.00 | 1.00 | 1.00 | 1.00 |

| This Study | ADA | 1.00 | 1.00 | 1.00 | 1.00 |

| This Study | KNN | 1.00 | 1.00 | 1.00 | 1.00 |

| This Study | RF | 1.00 | 1.00 | 1.00 | 1.00 |

| Case | T-Score | Critical Value (CV) | Null Hypothesis |

|---|---|---|---|

| ML using Original Features vs. ML using 60 Features | 6.23 | 6.63 | Reject |

| ML using 50 Features vs. ML using 60 Features | 1.7 | 6.63 | Reject |

| ML using 70 Features vs. ML using 60 Features | 1.7 | 6.63 | Reject |
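For readers unfamiliar with the statistic, a t-score for two sets of model accuracies can be computed as in the sketch below. It assumes a paired test over the same models, which is not confirmed by this document; the function name `paired_t_score` and the accuracy values are hypothetical and are not the data behind the table above.

```python
import math
import statistics


def paired_t_score(scores_a, scores_b):
    """Paired t-score: mean of the differences divided by its standard error."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equally sized samples with at least 2 values")
    diffs = [b - a for a, b in zip(scores_a, scores_b)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd == 0:
        raise ValueError("all differences are identical; t-score is undefined")
    return statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))


# Hypothetical accuracies of the same five models under two feature settings.
setting_a = [0.95, 0.89, 0.95, 0.88, 0.92]
setting_b = [0.99, 0.89, 0.97, 0.88, 0.96]
print(round(paired_t_score(setting_a, setting_b), 3))
```

The resulting score is then compared against a critical value for the chosen significance level and degrees of freedom to decide whether the null hypothesis of equal performance is rejected.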

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rustam, F.; Ishaq, A.; Hashmi, M.S.A.; Siddiqui, H.U.R.; López, L.A.D.; Galán, J.C.; Ashraf, I. Railway Track Fault Detection Using Selective MFCC Features from Acoustic Data. Sensors 2023, 23, 7018. https://doi.org/10.3390/s23167018
