The hyperparameter settings of the proposed CNN-based sound classification model are shown in Table 2. Each epoch represents one complete pass over the training set. The model was configured to train for up to 500 epochs, with Early Stopping [31] applied during training. We first set aside part of the training set as a validation set. At the end of each epoch, the accuracy on the validation set was calculated. If the performance on the validation set was getting worse after the pre-set thresholds had been exceeded (accuracy > 0.5 and epoch > 50), overfitting may have occurred, and the training process was terminated. The model uses categorical_crossentropy as the loss function for the final classification result, the optimizer is Adam, and the learning rate is set to 0.001.
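The stopping rule described above can be sketched in a few lines of framework-independent Python. This is only an illustration under stated assumptions, not the authors' implementation: the function name `early_stop_epoch` and the `patience` parameter are hypothetical, and "getting worse" is interpreted here as the validation accuracy failing to beat its best value so far.

```python
def early_stop_epoch(val_acc, min_acc=0.5, min_epoch=50, patience=1):
    """Return the 1-based epoch at which training would stop, or None.

    val_acc   : validation accuracy recorded at the end of each epoch.
    min_acc   : threshold from the text (accuracy > 0.5).
    min_epoch : threshold from the text (epoch > 50).
    patience  : ASSUMPTION (not stated in the text): number of consecutive
                non-improving epochs tolerated before stopping.
    """
    best = float("-inf")
    bad_epochs = 0
    for epoch, acc in enumerate(val_acc, start=1):
        if acc > best:
            # Validation accuracy improved: remember it and reset the counter.
            best = acc
            bad_epochs = 0
        else:
            # Validation accuracy did not improve in this epoch.
            bad_epochs += 1
        # Stop only once both pre-set thresholds have been exceeded.
        if epoch > min_epoch and acc > min_acc and bad_epochs >= patience:
            return epoch
    return None


# Hypothetical history: accuracy rises for 60 epochs, then drops.
history = [0.30 + 0.01 * i for i in range(60)] + [0.85, 0.84]
stop_at = early_stop_epoch(history)
```

With this rule, a drop in validation accuracy during the first 50 epochs never ends training, which matches the role of the epoch threshold in the text.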

The confusion matrix is a method used to evaluate the performance of a classification model [32]. For a given class, true positive (TP) indicates that a sample predicted as belonging to the class actually belongs to it, and true negative (TN) indicates that a sample predicted as not belonging to the class indeed does not belong to it. False positive (FP) indicates that a sample is predicted as belonging to the class although it actually does not, and false negative (FN) indicates that a sample is predicted as not belonging to the class although it actually does. Based on the results of the confusion matrix, the accuracy, precision, recall, and F1 score are discussed separately to evaluate the proposed model’s classification performance.
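As a minimal sketch of how these four indicators follow from a multi-class confusion matrix, the snippet below derives TP, FP, FN, and TN for each class in a one-vs-rest fashion and applies the standard formulas. The function name `per_class_metrics` and the example matrix are hypothetical, and the one-vs-rest definition of per-class accuracy is an assumption that may differ from the convention used for the figures in this paper.

```python
def per_class_metrics(cm):
    """Compute one-vs-rest metrics for each class.

    cm[i][j] = number of samples whose true class is i and predicted class is j.
    Returns a list of dicts, one per class.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for k in range(n):
        tp = cm[k][k]                                # predicted k, actually k
        fn = sum(cm[k]) - tp                         # actually k, predicted other
        fp = sum(cm[i][k] for i in range(n)) - tp    # predicted k, actually other
        tn = total - tp - fn - fp                    # neither predicted nor actually k
        accuracy = (tp + tn) / total
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if (precision + recall) else 0.0)
        results.append({"accuracy": accuracy, "precision": precision,
                        "recall": recall, "f1": f1})
    return results


# Hypothetical 2-class confusion matrix (rows: true class, columns: predicted).
example_cm = [[8, 2],
              [1, 9]]
example_metrics = per_class_metrics(example_cm)
```

Averaging each indicator over all classes gives the macro-averaged values; whether the reported averages are macro-averages is likewise an assumption.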

4.1. Experimental Results Based on the ESC-50 Dataset

The ESC-50 [29] dataset consists of 5 s-long recordings organized into 50 semantic classes (with 40 examples per class) arranged into 5 major categories, as shown in Table 3. We divided the dataset into three parts: the training set, the validation set, and the testing set. We assigned 81% of the data to the training set, which was used to train the model and adjust its parameters. Additionally, 9% of the data were allocated to the validation set, which was used to tune the model’s hyperparameters and to evaluate its performance during training. The validation set plays a crucial role in selecting the best-performing model based on data not used for parameter updates. Finally, the remaining 10% of the data were set aside for the testing set and kept completely separate from the model development process. This subset was reserved for evaluating the final performance of the trained model and providing an unbiased estimate of its generalization capability on unseen data.
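The 81%/9%/10% proportions are what one obtains by holding out 10% of the data for testing and then holding out 10% of the remainder for validation (0.9 × 0.9 = 0.81). The sketch below illustrates this for the 2000 ESC-50 clips (50 classes × 40 examples). The two-stage procedure, the function name `split_indices`, and the random seed are assumptions for illustration; the text does not state how the split was drawn or whether it was stratified by class.

```python
import random


def split_indices(n, test_frac=0.10, val_frac_of_rest=0.10, seed=0):
    """Shuffle indices 0..n-1 and split them into train/validation/test lists.

    test_frac        : fraction of all samples held out for testing.
    val_frac_of_rest : fraction of the remaining samples used for validation.
    seed             : ASSUMPTION, fixed only to make the sketch reproducible.
    """
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_frac)
    n_val = round((n - n_test) * val_frac_of_rest)
    test = idx[:n_test]
    val = idx[n_test:n_test + n_val]
    train = idx[n_test + n_val:]
    return train, val, test


# ESC-50: 50 classes x 40 clips = 2000 clips.
train, val, test = split_indices(50 * 40)
print(len(train), len(val), len(test))  # 1620 180 200
```

The three index lists are disjoint, so no test clip can leak into training or validation.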

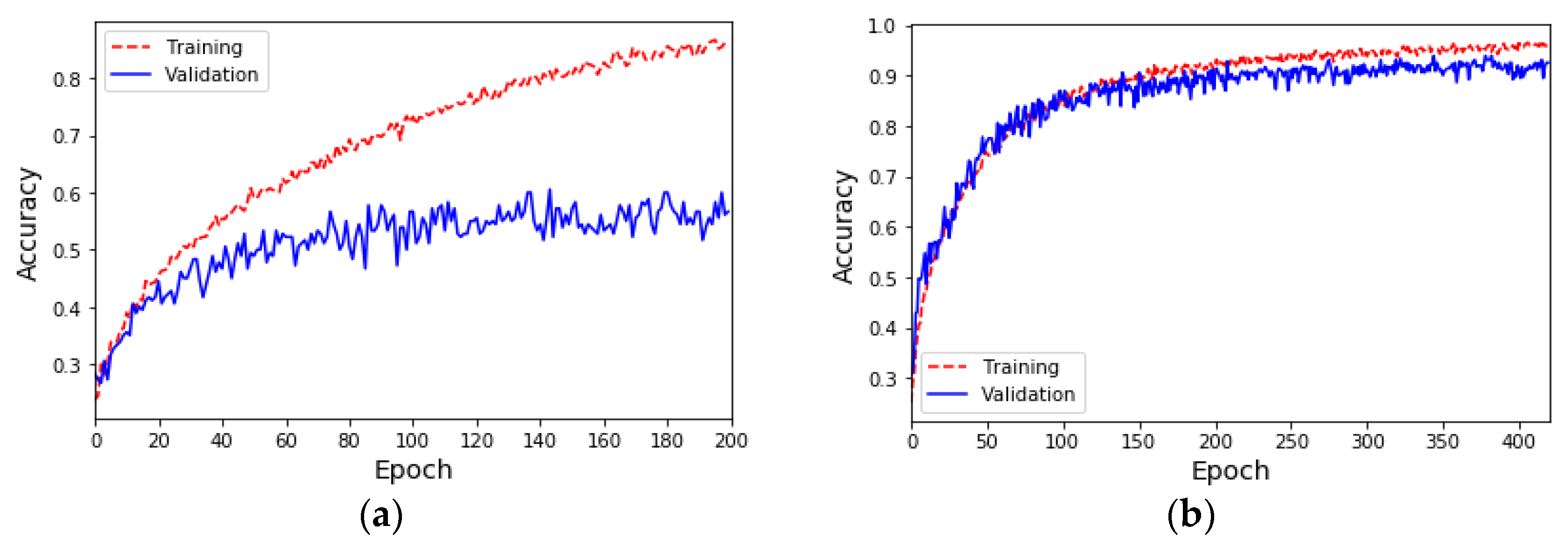

Due to the insufficient amount of data in the ESC-50 dataset, the results of the sound classification model for the five major categories using only the original dataset (i.e.,

K = 1) are shown in

Figure 3a. During the 200th training epoch, the Early Stopping mechanism was triggered to avoid overfitting problems and problems where training cannot converge. The results show that although the accuracy of the training is close to 90%, the accuracy of the validation is only about 62%. Furthermore, the results of the sound classification model for the five major categories after doubling the original data (i.e.,

K = 2) using the proposed data augmentation method are shown in

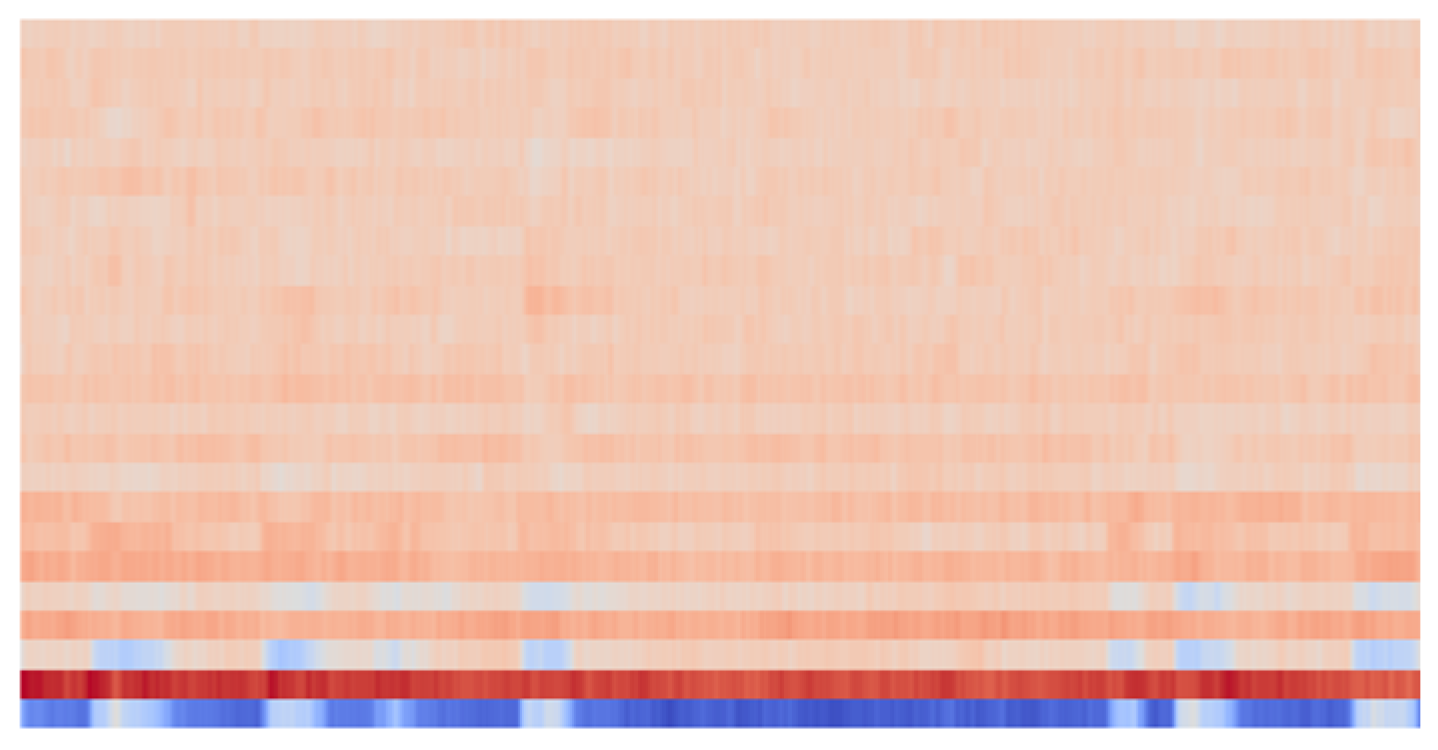

Figure 3b. During the 420th training epoch, the Early Stopping mechanism was triggered to avoid overfitting problems and problems where training cannot converge. The results show that the accuracy of both the training set and the validation set is 90%. The confusion matrix and evaluation indicators for each category of sound classification using the original dataset and data augmentation are shown in

Table 4 and

Table 5, respectively. The results show that the data augmentation method can effectively improve the performance by 30% compared with the original dataset, meaning that the average accuracy, average precision, and average recall of classification can reach 90%, and the F1 score can reach 89%.

The 5 major categories of the ESC-50 dataset can be further subdivided into 50 semantic classes. Data augmentation by K times, where K = 1, 2, …, 5, was used to demonstrate the accuracy, precision, recall, and F1 score performance of the sound classification model on these classes, as shown in Figure 4, Figure 5, Figure 6, and Figure 7. In Figure 4, when K = 1 (data not augmented), the overall average accuracy is only 63%. The accuracy of class numbers 7, 10, 11, 17, 18, 22, 30, 31, 37, 38, 41, 47, and 48 is less than 50%. Among them, the accuracy of class numbers 10, 11, 31, and 41 is zero, showing that they cannot be classified at all. When K = 2, the data are doubled, and the overall average accuracy increases to 80%. Only the accuracy of class number 9 is low, at 40%. As K increases to 3, 4, and 5, the overall average accuracy increases to 87%, 94%, and 97%, respectively, showing that the accuracy of each class is excellent.

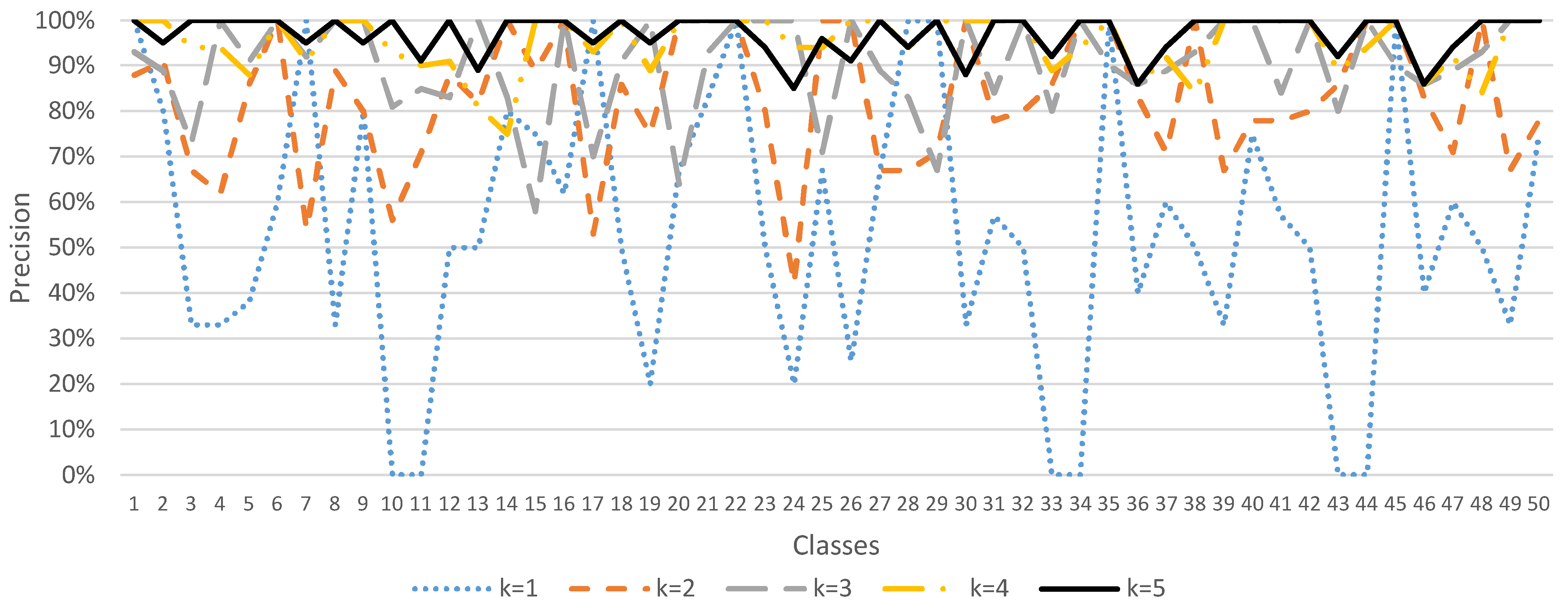

In Figure 5, when K = 1 (data not augmented), the overall average precision is 54%. The precision of class numbers 3, 4, 5, 8, 10, 11, 19, 24, 33, 34, 36, 39, 43, 44, 46, and 49 is less than 50%. When K = 2, the data are doubled, and the overall average precision increases to 83%. As K increases to 3, 4, and 5, the overall average precision increases to 90%, 95%, and 97%, respectively, showing that the precision of each class is excellent.

Similarly, in Figure 6, when K = 1 (data not augmented), the overall average recall is 59%. A number of classes (class numbers 7, 10, 11, 17, 18, 24, 30, 33, 34, 36, 47, and 48) have low recall, indicating poor classification performance. However, as K increases to 2, 3, 4, and 5, the overall average recall increases to 80%, 88%, 95%, and 96%, respectively, showing that the recall of each class is excellent.

In Figure 7, when K = 1 (data not augmented), the overall average F1 score is 53%. A number of classes (class numbers 3, 7, 8, 10, 11, 17, 18, 19, 24, 26, 30, 33, 34, 36, 39, 44, 47, and 48) have a low F1 score, indicating poor classification performance. However, as K increases to 2, 3, 4, and 5, the overall average F1 score increases to 78%, 87%, 95%, and 96%, respectively, showing that the F1 score of each class is excellent.

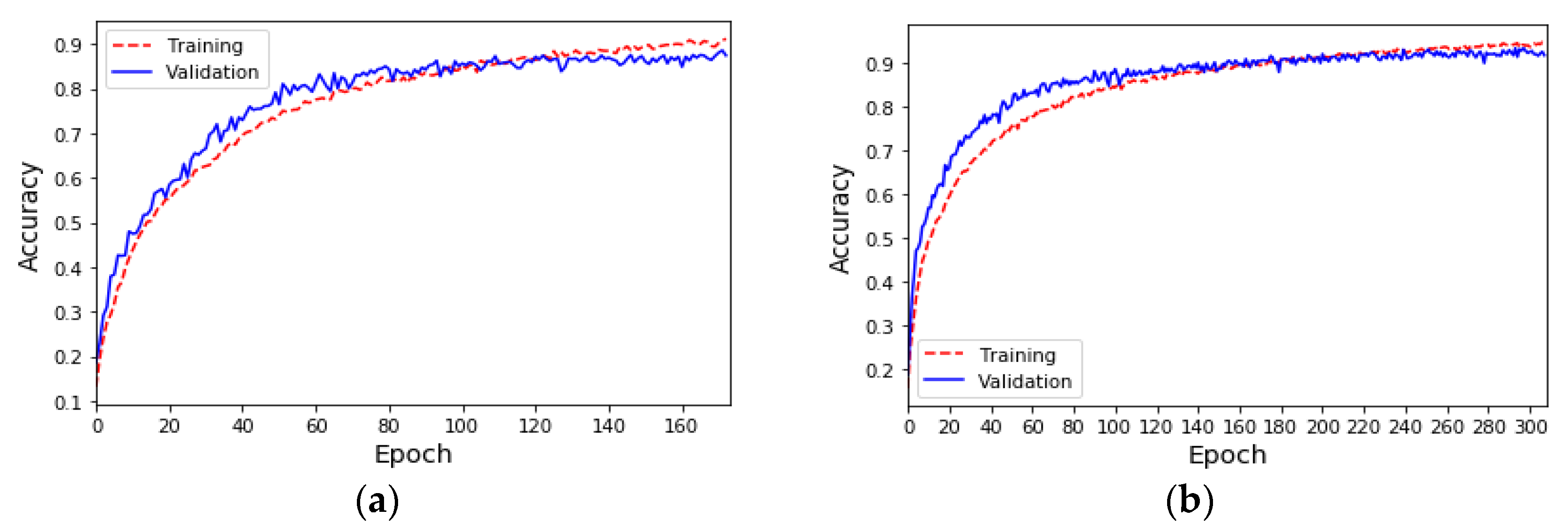

4.2. Experimental Results Based on the UrbanSound8K Dataset

The UrbanSound8K [30] dataset contains 8732 labeled sound excerpts of urban sounds from 10 classes: air_conditioner, car_horn, children_playing, dog_bark, drilling, engine_idling, gun_shot, jackhammer, siren, and street_music. The length of each sound excerpt is 5 s. The amount of data in each of the 10 classes is shown in Table 6. Because the UrbanSound8K dataset contains a sufficient amount of data, we first report the results of the 10-class sound classification model using only the original dataset (K = 1), as shown in Figure 8a. Out of the 500 configured epochs, the Early Stopping mechanism stopped training at the 173rd epoch, and the training and validation accuracies were very similar, both reaching 90%. The results of the sound classification model for the 10 classes after doubling the original data (K = 2) using the proposed data augmentation method are shown in Figure 8b. At the 308th training epoch, the Early Stopping mechanism was triggered to stop training. The results show that when the original dataset already has sufficient data, the data augmentation method increases the classification accuracy only slightly, by 2%, compared with the original dataset. The evaluation indicators for each class of sound classification using the original dataset and data augmentation are shown in Table 7. The average accuracy, average precision, average recall, and average F1 score of classification can reach 92%.

Half of the data for each class were extracted from the UrbanSound8K dataset (that is, 4366 excerpts), and the data augmentation mechanism (K = 2) was used to generate a new training set with a data size of 8732. The sound classification results using this new training set are shown in Figure 9. At the 272nd training epoch, the Early Stopping mechanism was triggered to stop training. The training and validation accuracies were very similar, both reaching 91%. The evaluation indicators for each class of sound classification using the new dataset are shown in Table 8. The average classification accuracy and F1 score were 91%, the average precision was 92%, and the average recall was 90%. The experimental results show that, when the original dataset is sufficiently large, an excellent sound classification model can be established by using only half of the training data together with the proposed data augmentation mechanism.

A comparison of the proposed sound classification method with the state of the art is shown in Table 9. Based on the ESC-50 dataset, when the proposed method does not use data augmentation (K = 1), the classification accuracy is only 63%, which is much lower than the accuracies of the other three methods (88.65%, 83.8%, and 87.1%). This is because the ESC-50 dataset has many classes and the amount of data for each class is insufficient, which lowers the classification performance of the proposed method when only this dataset is used. When the proposed method uses data augmentation (K = 4 or 5), the accuracy is greatly improved and reaches 94% or higher. Based on the UrbanSound8K dataset, when the proposed method does not use data augmentation (K = 1), the classification accuracy already reaches 90%, which is better than the accuracies of the other two methods (80.3% and 84.45%). This is because the amount of data in each class of the UrbanSound8K dataset is sufficient, making the classification performance of the proposed method excellent. If data augmentation (K = 2) is used, the accuracy of the proposed method increases slightly to 92%.