BSN-ESC: A Big–Small Network-Based Environmental Sound Classification Method for AIoT Applications

Abstract

1. Introduction

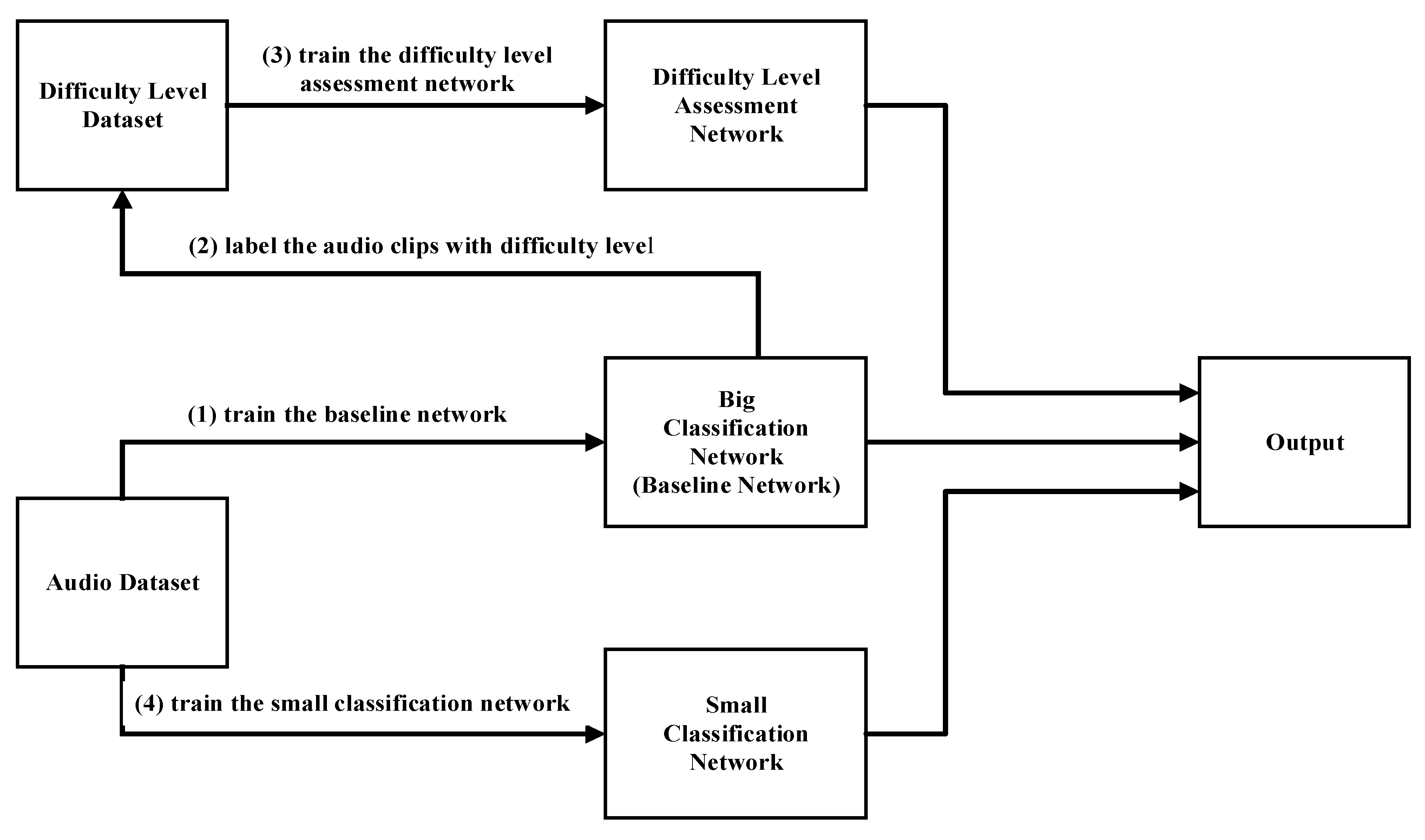

- A big–small network-based ESC model is proposed that reduces computation while maintaining high classification accuracy: it assesses the classification difficulty of each input audio clip and adaptively activates either a big or a small network to classify it.

- A pre-classification processing technique with log-mel spectrogram refining (PPTLSR) is proposed to further improve classification accuracy.

- The proposed BSN-ESC model is implemented on FPGA hardware to evaluate its processing time on AIoT devices.

- The proposed BSN-ESC method is evaluated on the widely used ESC-50 dataset. Compared with several state-of-the-art methods, it reduces the number of FLOPs by up to 2309 times while achieving a high classification accuracy of 89.25%.

The rest of this paper is organized as follows. Section 2 reviews the related work. Section 3 presents the details of the proposed BSN-ESC method. Section 4 presents the hardware implementation of the BSN-ESC on the FPGA. Section 5 shows and discusses the experimental results, and Section 6 concludes the paper.
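
The big–small idea in the first contribution amounts to a cheap gate followed by exactly one classifier. The sketch below is illustrative only and is not the authors' code: `bsn_classify`, `expected_flops`, the 0.5 threshold, and the toy `toy_assess`/`toy_small`/`toy_big` stand-ins are all hypothetical. It assumes that the assessor's sigmoid output is read as the probability that a clip is hard, and that the assessor runs on every clip.

```python
from typing import Callable, Sequence, Tuple

Clip = Sequence[float]  # hypothetical stand-in for a log-mel spectrogram


def bsn_classify(
    clip: Clip,
    assess: Callable[[Clip], float],
    small: Callable[[Clip], int],
    big: Callable[[Clip], int],
    threshold: float = 0.5,
) -> Tuple[int, str]:
    """Gate a clip with the cheap assessor, then run exactly one classifier."""
    p_hard = assess(clip)  # assumed: sigmoid output read as P(clip is hard)
    if p_hard >= threshold:
        return big(clip), "big"
    return small(clip), "small"


def expected_flops(f_assess: float, f_small: float, f_big: float,
                   hard_fraction: float) -> float:
    """Average per-clip cost, assuming the assessor runs on every clip."""
    return f_assess + hard_fraction * f_big + (1.0 - hard_fraction) * f_small


# Toy, hypothetical stand-ins: a clip counts as "hard" if it has a loud peak.
def toy_assess(clip: Clip) -> float:
    return 0.9 if max(clip) > 1.0 else 0.1


def toy_small(clip: Clip) -> int:
    return 0  # always predicts class 0


def toy_big(clip: Clip) -> int:
    return 1  # always predicts class 1


print(bsn_classify([0.2, 0.4], toy_assess, toy_small, toy_big))  # (0, 'small')
print(bsn_classify([0.2, 3.0], toy_assess, toy_small, toy_big))  # (1, 'big')
print(expected_flops(10, 50, 400, 0.25))  # 147.5 (made-up MFLOP figures)
```

Under this cost model, the average cost lies between always using the small network and always using the big one (each plus the assessor's overhead). It approaches the small-network cost as fewer clips are judged hard. The MFLOP figures in the last call are invented for illustration.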

2. Related Work

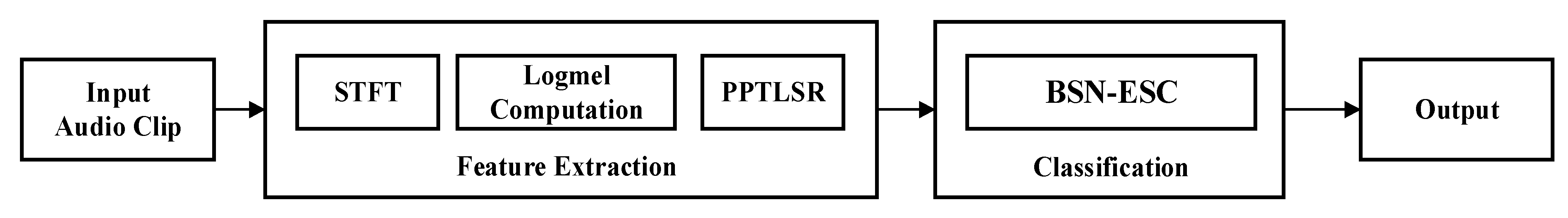

3. Proposed ESC Method

3.1. Proposed BSN-ESC Model

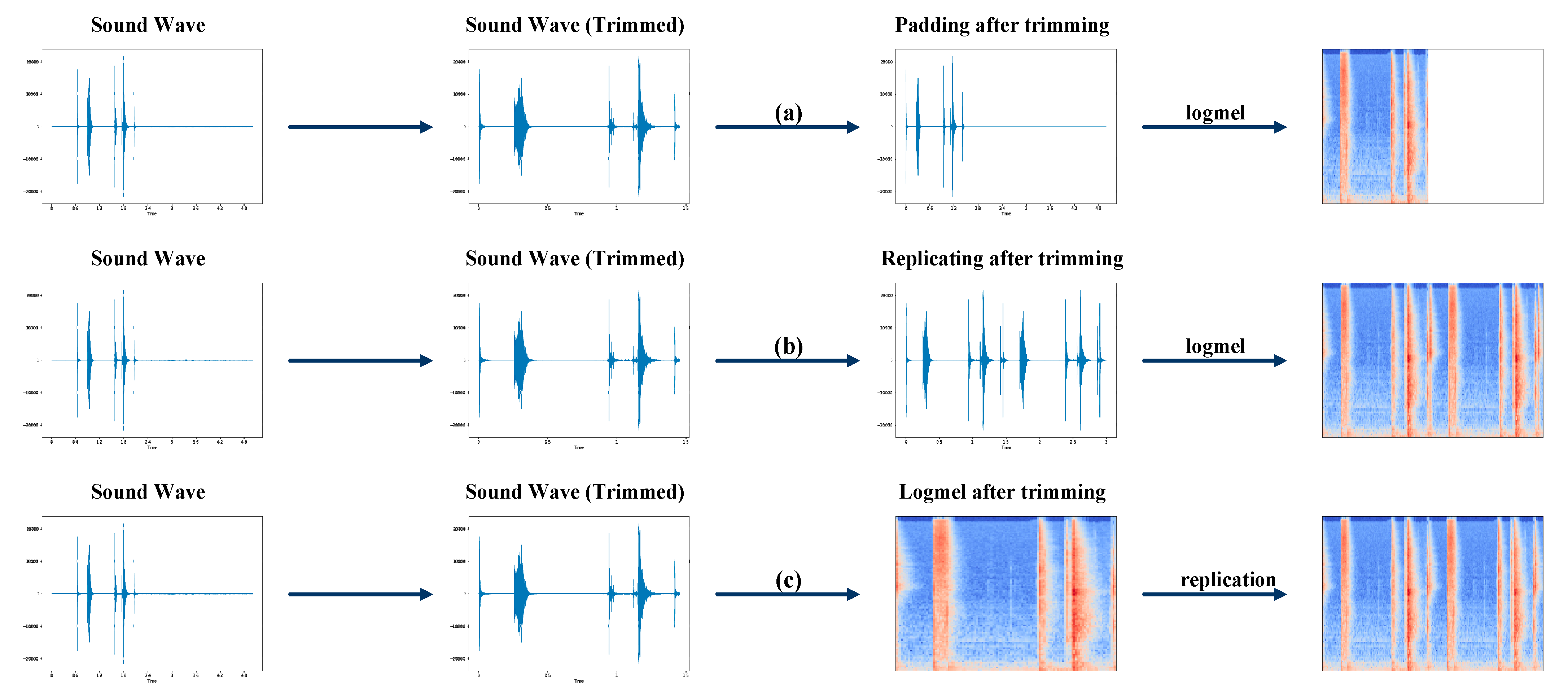

3.2. Proposed PPTLSR Technique

4. FPGA-Based BSN-ESC Hardware Implementation

5. Experiments and Discussion

5.1. Dataset

5.2. Pre-Processing and Data Augmentation

5.3. Training and Testing Method

5.4. Results and Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Han, X.; Lv, T.; Wu, S.; Li, Y.; He, B. A remote human activity detection system based on partial-fiber LDV and PTZ camera. Opt. Laser Technol. 2019, 111, 575–584.

2. Lv, T.; Zhang, H.Y.; Yan, C.H. Double mode surveillance system based on remote audio/video signals acquisition. Appl. Acoust. 2018, 129, 316–321.

3. Weninger, F.; Schuller, B. Audio recognition in the wild: Static and dynamic classification on a real-world database of animal vocalizations. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 337–340.

4. Vacher, M.; Serignat, J.-F.; Chaillol, S. Sound classification in a smart room environment: An approach using GMM and HMM methods. Proc. Conf. Speech Technol. Hum.-Comput. Dialogue 2007, 1, 135–146.

5. Anil, S.; Sanjit, K. Two-stage supervised learning-based method to detect screams and cries in urban environments. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 7, 290–299.

6. Harte, C.; Sandler, M.; Gasser, M. Detecting Harmonic Change in Musical Audio. In Proceedings of the AMCMM’06: The 14th ACM International Conference on Multimedia 2006, Santa Barbara, CA, USA, 23–27 October 2006; pp. 21–25.

7. Holdsworth, J.; Nimmo-Smith, I. Implementing a gammatone filter bank. SVOS Final. Rep. Part. A Audit. Filter. Bank. 1988, 1, 1–5.

8. Zhang, Z.; Xu, S.; Cao, S.; Zhang, S. Deep convolutional neural network with mixup for environmental sound classification. Chin. Conf. Pattern Recognit. Comput. Vis. (PRCV) 2018, 2, 356–367.

9. Li, J.; Dai, W.; Metze, F.; Qu, S.; Das, S. A comparison of deep learning methods for environmental sound detection. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 126–130.

10. Lavner, Y.; Ruinskiy, D. A decision-tree-based algorithm for speech/music classification and segmentation. EURASIP J. Audio Speech Music. Process. 2009, 2009, 239892.

11. Jia-Ching, W.; Jhing-Fa, W.; Wai, H.K.; Cheng-Shu, H. Environmental sound classification using hybrid SVM/KNN classifier and MPEG-7 audio low-level descriptor. In The 2006 IEEE International Joint Conference on Neural Network Proceedings, Vancouver, BC, Canada, 16–21 July 2006; pp. 1731–1735.

12. Bonet-Sola, D.; Alsina-Pages, R.M. A comparative survey of feature extraction and machine learning methods in diverse acoustic environments. Sensors 2021, 21, 1274.

13. Piczak, K.J. Environmental sound classification with convolutional neural networks. In Proceedings of the 2015 IEEE 25th International Workshop on Machine Learning for Signal Processing (MLSP), Boston, MA, USA, 17–20 September 2015; pp. 1–6.

14. Boddapati, V.; Petef, A.; Rasmusson, J.; Lundberg, L. Classifying environmental sounds using image recognition networks. Procedia Comput. Sci. 2017, 112, 2048–2056.

15. Tokozume, Y.; Ushiku, Y.; Harada, T. Learning from between-class examples for deep sound recognition. arXiv 2017, arXiv:1711.10282.

16. Salamon, J.; Bello, J.P. Deep convolutional neural networks and data augmentation for environmental sound classification. IEEE Signal. Process. Lett. 2017, 24, 279–283.

17. Xinyu, L.; Venkata, C.; Katrin, K. Multi-stream network with temporal attention for environmental sound classification. In Interspeech 2019; ISCA: New York, NY, USA, 2019; pp. 3604–3608.

18. Zhang, Z.; Xu, S.; Zhang, S.; Qiao, T.; Cao, S. Learning attentive representations for environmental sound classification. IEEE Access 2019, 7, 130327–130339.

19. Nasiri, A.; Hu, J. SoundCLR: Contrastive learning of representations for improved environmental sound classification. arXiv 2021, arXiv:2103.01929.

20. Guzhov, A.; Raue, F.; Hees, J.; Dengel, A. ESResNet: Environmental sound classification based on visual domain models. arXiv 2021, arXiv:2004.07301.

21. Guo, J.; Li, C.; Sun, Z.; Li, J.; Wang, P. A Deep Attention Model for Environmental Sound Classification from Multi-Feature Data. Appl. Sci. 2022, 12, 5988.

22. Wang, Y.; Sun, Y.; Xu, G. Environmental Sound Classification Based on Continual Learning. SSRN. 2023. Available online: https://ssrn.com/abstract=4418615 (accessed on 14 April 2023).

23. Fei, H.; Wu, W.; Li, P.; Cao, Y. Acoustic scene classification method based on Mel-spectrogram separation and LSCNet. J. Harbin Inst. Technol. 2022, 54, 124.

24. Park, H.; Yoo, C.D. CNN-based learnable gammatone filterbank and equal loudness normalization for environmental sound classification. IEEE Signal. Process. Lett. 2020, 27, 411–415.

25. Verbitskiy, S.; Berikov, V.; Vyshegorodtsev, V. ERANNs: Efficient residual audio neural networks for audio pattern recognition. Pattern Recognit. Lett. 2022, 161, 38–44.

26. Cerutti, G.; Prasad, R.; Brutti, A.; Farella, E. Neural network distillation on IoT platforms for sound event detection. In Interspeech 2019; ISCA: New York, NY, USA, 2019; pp. 3609–3613.

27. Yang, M.; Peng, L.; Liu, L.; Wang, Y.; Zhang, Z.; Yuan, Z.; Zhou, J. LCSED: A low complexity CNN based SED model for IoT devices. Neurocomputing 2022, 485, 155–165.

28. Peng, L.; Yang, J.; Xiao, J.; Yang, M.; Wang, Y.; Qin, H.; Zhou, J. ULSED: An ultra-lightweight SED model for IoT devices. J. Parallel Distrib. Comput. 2022, 166, 104–110.

29. Piczak, K.J. ESC: Dataset for environmental sound classification. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015; pp. 1015–1018.

30. Park, D.S.; Chan, W.; Zhang, Y.; Chiu, C.-C.; Zoph, B.; Cubuk, E.D.; Le, Q.V. SpecAugment: A simple data augmentation method for automatic speech recognition. arXiv 2019, arXiv:1904.08779.

31. Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. Mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

| Difficulty Level Assessment Network | Big Classification Network | Small Classification Network |
|---|---|---|
| 1 × 5 Standard Conv1 | 3 × 5 × 32 Standard Conv1 | 3 × 5 × 32 Separable Conv1 |
| 3 × 3 Maxpooling1 | 3 × 5 × 32 Separable Conv2 | 4 × 3 Maxpooling1 |
| (easy/hard) FC-sigmoid | 4 × 3 Maxpooling1 | 3 × 1 × 64 Standard Conv2 |
| | 3 × 1 × 64 Standard Conv3 | 4 × 1 Maxpooling2 |
| | 3 × 1 × 64 Separable Conv4 | 1 × 5 × 128 Separable Conv3 |
| | 4 × 1 Maxpooling2 | 1 × 3 Maxpooling3 |
| | 1 × 5 × 128 Standard Conv5 | 3 × 3 × 256 Separable Conv4 |
| | 1 × 5 × 128 Standard Conv6 | 2 × 2 Maxpooling4 |
| | 1 × 3 Maxpooling3 | (# of classes) FC-softmax |
| | (# of classes) FC-softmax | |
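
The layer lists above can be turned into a rough size comparison of the two classifiers. The following sketch counts convolution weights only and rests on several assumptions. It takes the input to be a single-channel log-mel spectrogram and ignores bias terms. It reads "Separable" as a depthwise-separable convolution, meaning a per-channel kh × kw filter followed by a 1 × 1 pointwise projection. Pooling layers carry no weights, and the FC layers are left out because their size depends on an input shape that is not given here. The `Conv` class and `conv_weights` helper are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Conv:
    """One convolution row from the layer table: kernel kh x kw, out_ch filters."""
    kh: int
    kw: int
    out_ch: int
    separable: bool

    def weights(self, in_ch: int) -> int:
        """Weight count for this layer (biases ignored) given in_ch input channels."""
        if self.separable:
            # Assumed depthwise-separable: one kh x kw filter per input channel,
            # then a 1 x 1 pointwise convolution mapping in_ch -> out_ch.
            return self.kh * self.kw * in_ch + in_ch * self.out_ch
        # Standard convolution: every output channel sees every input channel.
        return self.kh * self.kw * in_ch * self.out_ch


# Convolution layers in table order; pooling and FC layers are omitted.
BIG: List[Conv] = [
    Conv(3, 5, 32, separable=False),   # Standard Conv1
    Conv(3, 5, 32, separable=True),    # Separable Conv2
    Conv(3, 1, 64, separable=False),   # Standard Conv3
    Conv(3, 1, 64, separable=True),    # Separable Conv4
    Conv(1, 5, 128, separable=False),  # Standard Conv5
    Conv(1, 5, 128, separable=False),  # Standard Conv6
]
SMALL: List[Conv] = [
    Conv(3, 5, 32, separable=True),    # Separable Conv1
    Conv(3, 1, 64, separable=False),   # Standard Conv2
    Conv(1, 5, 128, separable=True),   # Separable Conv3
    Conv(3, 3, 256, separable=True),   # Separable Conv4
]


def conv_weights(layers: List[Conv], in_ch: int = 1) -> int:
    """Sum layer weights, feeding each layer's out_ch into the next (in_ch=1 assumed)."""
    total = 0
    for layer in layers:
        total += layer.weights(in_ch)
        in_ch = layer.out_ch
    return total


print(conv_weights(BIG))    # 135296
print(conv_weights(SMALL))  # 48623
```

Under these assumptions, the big network's convolutions hold 135,296 weights and the small network's hold 48,623, roughly a 2.8× gap. Most of the big network's weights sit in its two standard 1 × 5 × 128 layers, which is where a separable variant would save the most.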

| Methods | Processing Time (CPU) | Processing Time (FPGA) | Computational Complexity (No. of FLOPs) |
|---|---|---|---|
| Proposed PPTLSR + Baseline Network | 30.66 s | 3.36 s | 0.417 G |
| Proposed PPTLSR + Proposed BSN-ESC Network | 14.66 s | 2.35 s | 0.123 G |
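
The relative gains in the processing-time table can be computed directly. This short sketch divides each baseline figure by the corresponding BSN-ESC figure. The dictionary keys and the `reduction` helper are illustrative names.

```python
# Figures copied from the processing-time table (times in seconds, FLOPs in G).
baseline = {"cpu_s": 30.66, "fpga_s": 3.36, "gflops": 0.417}
bsn_esc = {"cpu_s": 14.66, "fpga_s": 2.35, "gflops": 0.123}


def reduction(before: dict, after: dict) -> dict:
    """Ratio before/after for each shared metric (>1 means BSN-ESC is cheaper)."""
    return {key: before[key] / after[key] for key in before}


for metric, factor in reduction(baseline, bsn_esc).items():
    print(f"{metric}: {factor:.2f}x")
# cpu_s: 2.09x
# fpga_s: 1.43x
# gflops: 3.39x
```

FLOPs fall by about 3.39×, while CPU time falls by about 2.09× and FPGA time by about 1.43×, so wall-clock time does not shrink in proportion to FLOPs. The table does not say which processing stages the timings cover, so the remaining time cannot be attributed from these numbers alone.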

| Methods | Classification Accuracy (ESC-50 Dataset) | Computational Complexity (No. of FLOPs) |
|---|---|---|
| Multi-Stream CNN [17] 2019 | 84.0% | 284.060 G |
| ZhangCNN [18] 2019 | 86.5% | 0.485 G |
| SoundCLR [19] 2021 | 92.9% | 258.74 G |
| ESResNet [20] 2021 | 91.5% | 183.36 G |
| LGTFB [24] 2020 | 86.2% | 0.812 G |
| LCSED [27] 2022 | 83.0% | 2.640 G |
| ULSED [28] 2022 | 88.3% | 0.249 G |
| Multi-Feature CNN [21] 2022 | 89.0% | 1.82 G |
| ERANN [25] 2022 | 89.2% | 10.01 G |
| LSCNet [23] 2022 | 88.0% | 0.65 G |
| FGR-ES [22] 2023 | 82.08% | 1.82 G |
| Ours | 89.25% | 0.123 G |
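
The "up to 2309 times" figure in the contributions list follows from this table. It is the ratio between the most expensive method, Multi-Stream CNN (284.060 G FLOPs), and BSN-ESC (0.123 G FLOPs). The sketch below recomputes that ratio for every listed method and also lists the methods that report higher accuracy. The variable names are illustrative.

```python
# (ESC-50 accuracy in %, FLOPs in G) copied from the comparison table.
RESULTS = {
    "Multi-Stream CNN": (84.0, 284.060),
    "ZhangCNN": (86.5, 0.485),
    "SoundCLR": (92.9, 258.74),
    "ESResNet": (91.5, 183.36),
    "LGTFB": (86.2, 0.812),
    "LCSED": (83.0, 2.640),
    "ULSED": (88.3, 0.249),
    "Multi-Feature CNN": (89.0, 1.82),
    "ERANN": (89.2, 10.01),
    "LSCNet": (88.0, 0.65),
    "FGR-ES": (82.08, 1.82),
}
OURS_ACC, OURS_GFLOPS = 89.25, 0.123

# How many times more FLOPs each method needs than BSN-ESC.
ratios = {name: gflops / OURS_GFLOPS for name, (_, gflops) in RESULTS.items()}
costliest = max(ratios, key=ratios.get)
cheapest = min(ratios, key=ratios.get)
print(f"largest reduction: {ratios[costliest]:.0f}x vs {costliest}")  # 2309x vs Multi-Stream CNN
print(f"smallest reduction: {ratios[cheapest]:.1f}x vs {cheapest}")   # 2.0x vs ULSED

# Methods that report higher accuracy than BSN-ESC.
more_accurate = sorted(name for name, (acc, _) in RESULTS.items() if acc > OURS_ACC)
print("more accurate:", more_accurate)  # ['ESResNet', 'SoundCLR']
```

Among the listed methods, only SoundCLR and ESResNet report higher ESC-50 accuracy than BSN-ESC, and both require well over 1000 times more FLOPs. The closest method in cost, ULSED, needs about twice as many.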

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Peng, L.; Yang, J.; Yan, L.; Chen, Z.; Xiao, J.; Zhou, L.; Zhou, J. BSN-ESC: A Big–Small Network-Based Environmental Sound Classification Method for AIoT Applications. Sensors 2023, 23, 6767. https://doi.org/10.3390/s23156767
