Augmented Reality (AR) for Surgical Robotic and Autonomous Systems: State of the Art, Challenges, and Solutions

Abstract

1. Introduction

1.1. Current Knowledge of XR, AR, and VR Platforms

1.2. Definition and Scope of Augmented Reality in Surgery

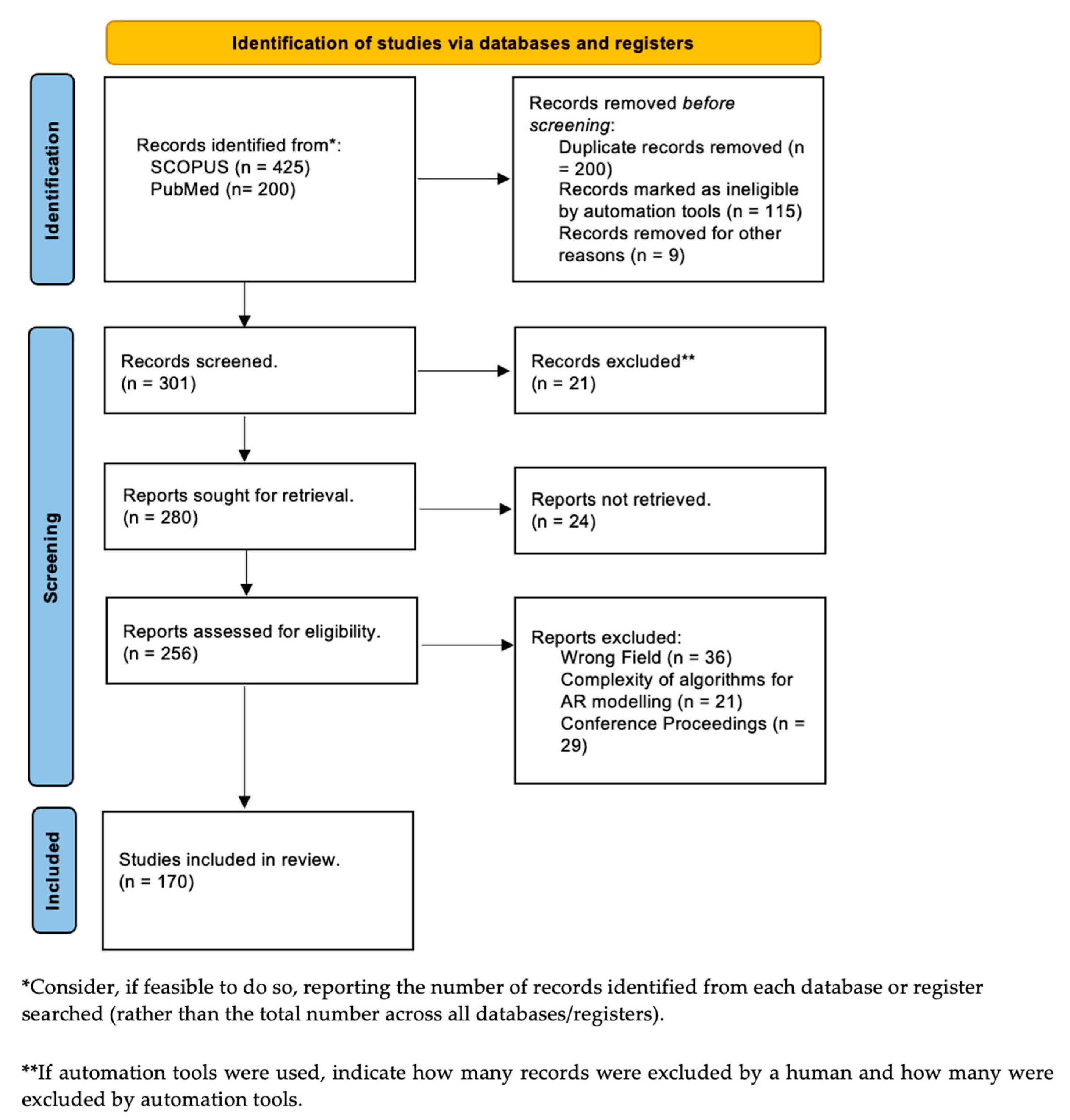

2. Research Background

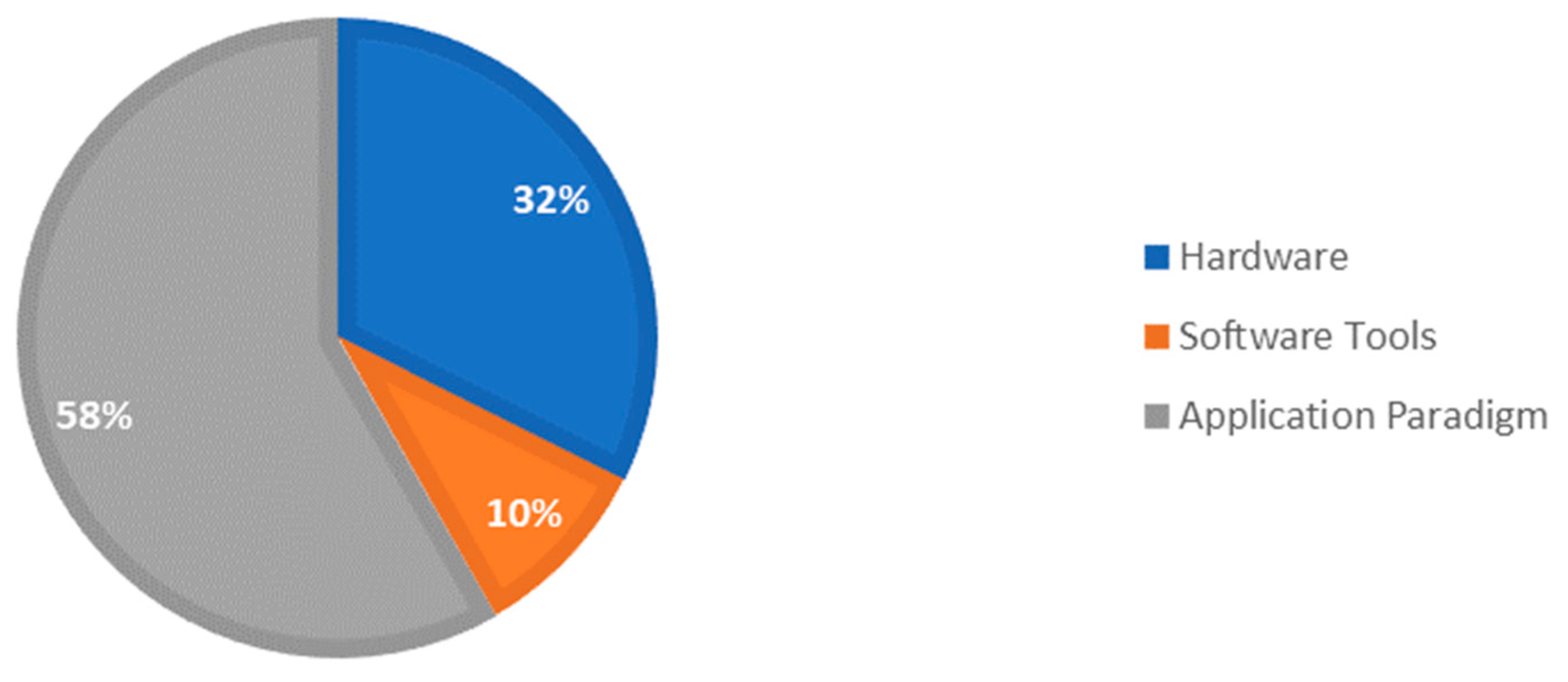

2.1. Classification of AR–RAS Collaboration in Meta-Analysis Study

2.2. Review of Commercial Robots and Proof-of-Concept Systems

- What is the current state-of-the-art research in integrating AR technologies with surgical robotics?

- What are the various hardware and software components used in the development of AR-assisted surgical robots and how are they intertwined?

- Which current application paradigms have enhanced these robotic platforms? How can the research gaps in previous literature reviews be addressed to improve the speed and accuracy of image reconstruction and to encourage surgical robots with a high level of autonomy (LoA) using computer vision methods?

3. Hardware Components

3.1. Patient-to-Image Registration Devices

- (i) Electromagnetic tracking systems (EMTs)

- (ii) Optical tracking systems (OTSs)

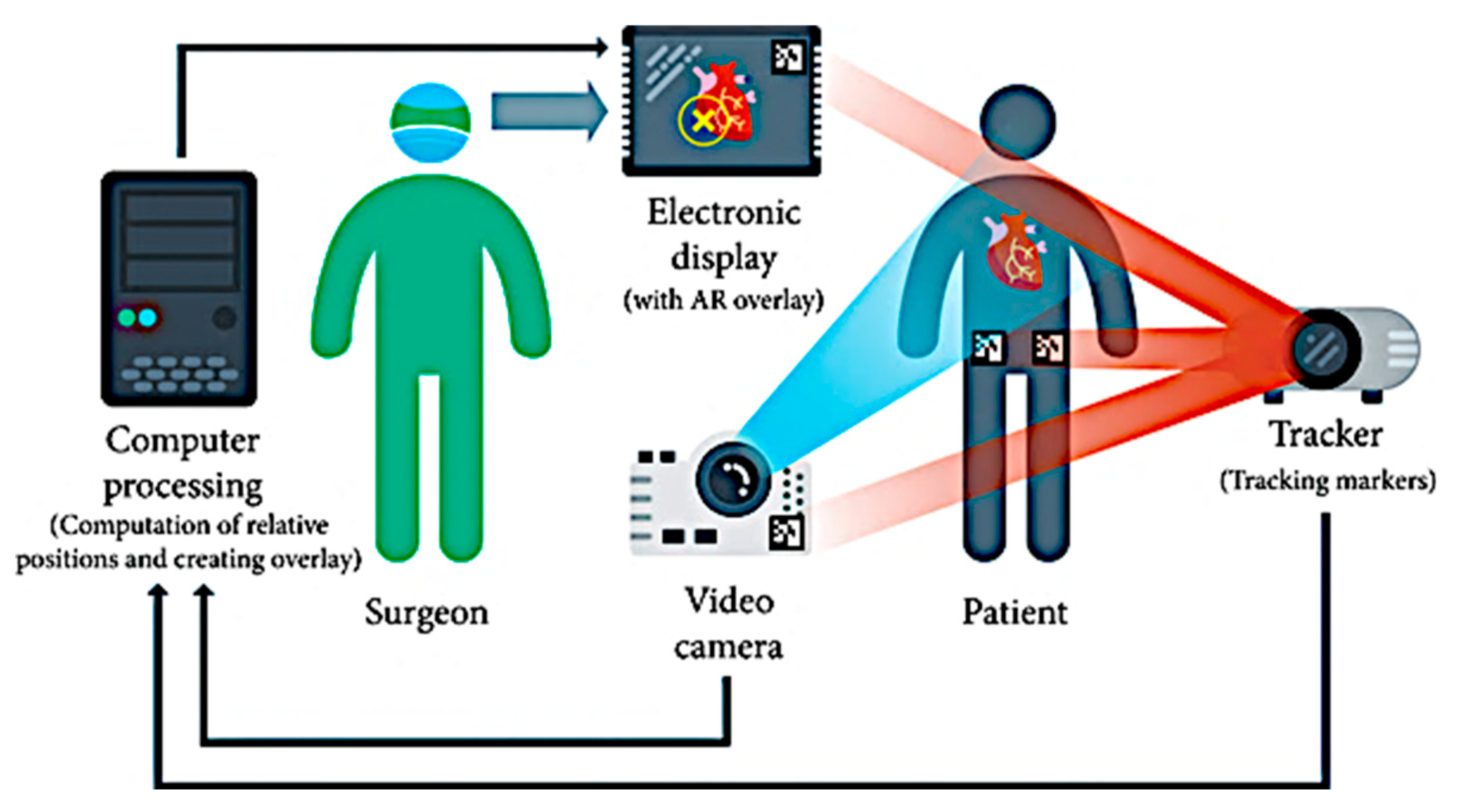

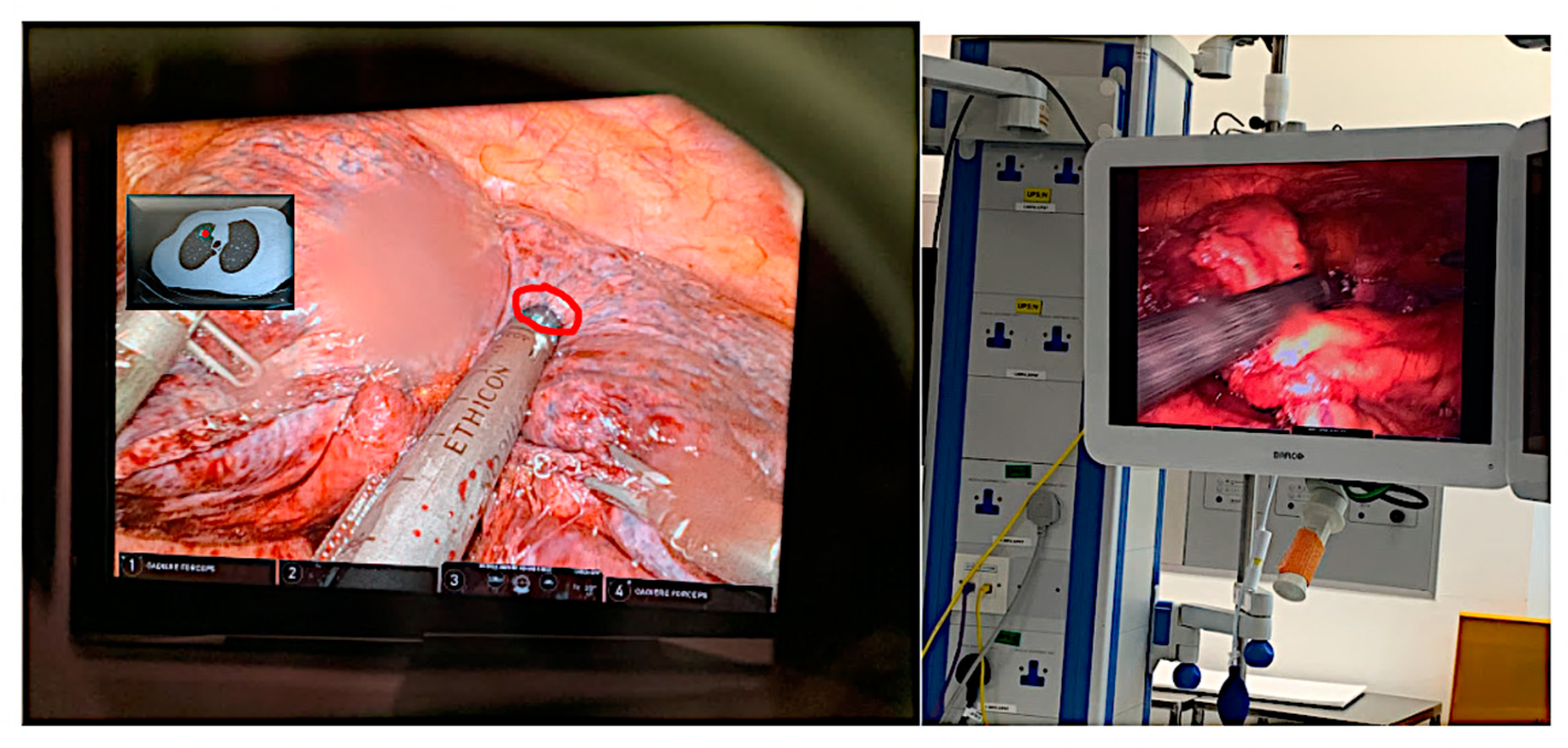

3.2. Object Detection and AR Alignment for Robotic Surgery

3.2.1. Intraoperative Planning for Surgical Robots

- (i) Marker-based AR

- (ii) Markerless AR

- (iii) HMD-based AR for surgery and rehabilitation

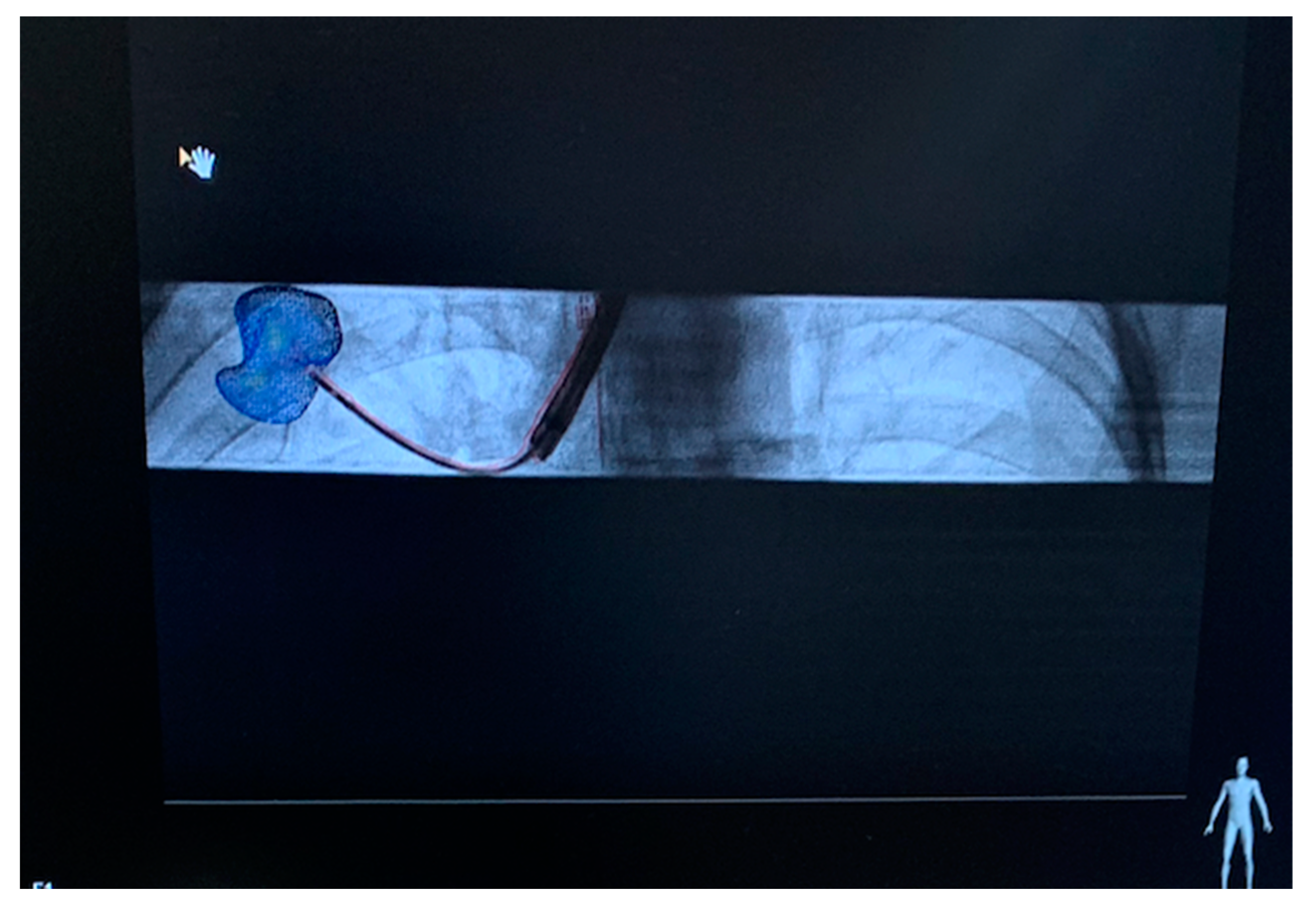

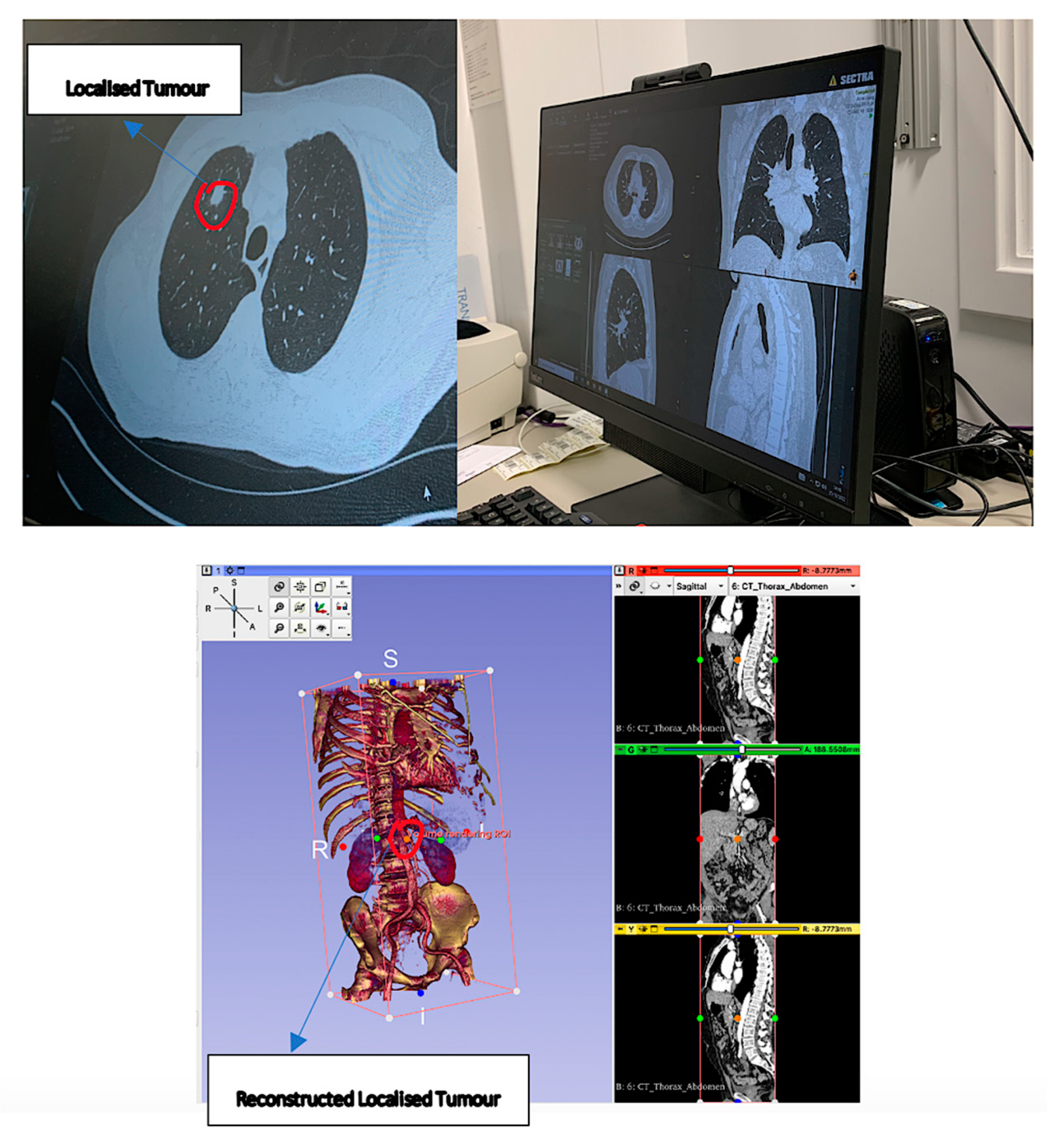

3.2.2. Preoperative Planning for Surgical Robots

- (i) Superimposition-based AR

- (ii) Projection-based AR

- (iii) HMD-based AR

4. Software Integration

4.1. Patient-to-Image Registration

4.2. Camera Calibration for Optimal Alignment

4.3. 3D Visualization Using Direct Volume Rendering

4.4. Surface Rendering after Segmentation of Pre-Processed Data

4.5. Path Computational Framework for Navigation and Planning

5. Applications of Computer Vision in Surgical Robot Operation (DL-Based)

5.1. Medical Image Registration

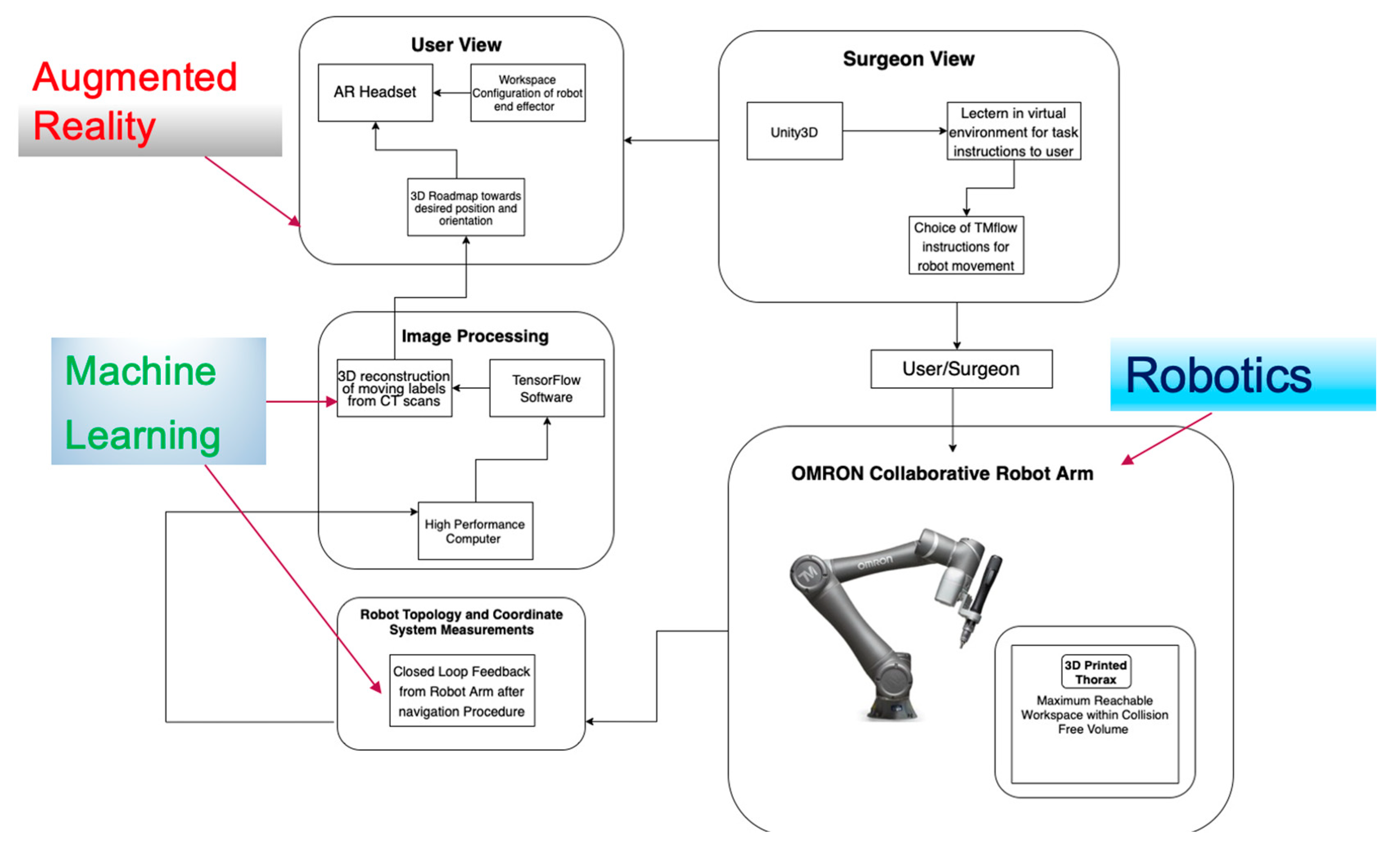

5.2. Increased Optimization of Robot Orientation Using Motion Planning and Camera Projection

5.3. Collision Detection during Surgical End-Effector Motion

5.4. Reconfiguration and Workspace Visualization of Surgical Robots

5.5. Increased Haptic Feedback for Virtual Scene Guidance

5.6. Improved Communication and Patient Safety

5.7. Digital Twins (DT) to Guide End-Effectors

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Appendix A. State of the Art and Proof of Concept in AR-Based Surgical Robots from Existing Literature

| Author/Company | Name of Device | Parameters Studied | AR Interface | Type of AR Display | Operating Principle | Surgical Specialization | CE Marking |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Mazor Robotics Inc., Caesarea, Israel | SpineAssist [172] | CT-scan-based image reconstruction, path planning of screw placement, and needle tracking. | Graphical user interface for fluoroscopy guidance using fiducial markers. | Marker-based | The system is fixed to the spine, attached to a frame triangulated by percutaneously placed guidewires. | Transpedicular screw placement (orthopedic); brain surgery | Yes (2011) |
| Mazor Robotics Inc., Caesarea, Israel | Renaissance [173] | 3D reconstruction of spine with selection of desired vertebral segments. | Hologram generation for localization of screw placement. | Superposition-based | Ten times faster software processing for target localization due to DL algorithms. | Thoracolumbar screw placement (orthopedic) | Yes (2011) |
| Zimmer Biomet, Warsaw, IN, USA | ROSA Spine [174] | Image reconstruction, path planning of screw placement, and needle tracking. | 3D intraoperative planning software for robotic arm control. | Superposition-based | Robotic arm with floor-flexible base, which can readjust its orientation. | Transpedicular screw placement (orthopedic); brain surgery | Yes (2015) |
| MedRobotics, Raynham, MA, USA | MazorX [175] | Image reconstruction, 3D volumetric assay of the surgical field. | 3D intraoperative planning software for robotic arm control and execution. | Superposition-based | Matching preoperative and intraoperative fluoroscopy to reconstruct inner anatomy. | General spine and brain surgery | Yes (2017) |
| MedRobotics, Raynham, MA, USA | Flex Robotic System [176] | Intraoperative visualization to give surgeons a clear view of the area of interest. | Built-in AR software with magnified HD for viewing of anatomy. | Superposition-based | Can navigate around paths at 180 degrees to reach deeper areas of interest in the body by a steering instrument, i.e., joystick. Use of two working channels. | Transoral robotic surgery (TORS), transoral laser microsurgery (TLM), and Flex® procedures | Yes (2014) |
| Novarad®, Pasig, Philippines | VisAR [5] | Instrument tracking and navigation guidance, submillimeter accuracy. | Reconstructs patient imaging data into 3D holograms superimposed onto patient. | Superposition-based | Hands-free voice recognition for facilitated robot control. Voice User Interface (VUI). Automatic data uploading to the system. | Neurosurgery | Yes (May 2022) |
| Medacta, Castel San Pietro, Switzerland | NextAR [177] | Instrument tracking and 3D navigation guidance, submillimeter accuracy. | Use of smart glasses to deliver an immersive experience to surgeons. | Superposition/marker-based | Overlays 3D reconstructed models adapted to the patient’s anatomy and biomechanics. | CT-based knee ligament balance and other hip, shoulder, and joint arthroplasty interventions. | Yes (2021) |
| IMRIS Inc., Winnipeg, MB, Canada | NeuroARM [178] | MRI-based image-guided navigation, force feedback from controllers for tumor localization and resection. | AR-based immersive environment for recreation of haptic, olfactory, and touch stimuli. | Marker-based | Image-guided robotic interventions inside an MRI, with sensory stimulus from workstation to guide the end-effector. | Brain surgery | Yes (2016) |
| Ma et al., Chinese University of Hong Kong | 6-DoF robotic stereo flexible endoscope (RSFE) [179] | Denavit–Hartenberg derivations of Jacobian, servo control, and head tracking for wider angle view, user evaluation, task load comparison. | HoloLens-based tracking using HMD for image-guided endoscopic tracking. | Marker-based | Use of head-tracking HoloLens for camera calibration and visualization of tool placement of flexible endoscope. | Cardiothoracic | No |
| Fotouhi et al., Johns Hopkins University | KUKA robot-based reflective AR [125] | User evaluation, camera-to-joint reference frame Euclidean distance compared for no AR, reflective mirror AR, and single-view AR, joint error calculation. | HMD-based robotic arm guidance and positioning using reflective mirrors. | Marker-based | Digital twin with ghost robot for mapping of virtual-to-real robot linkages from a reference point. | Cardiothoracic | No |
| Forte et al., Max Planck Institute for Intelligent Systems | Robotic dry-lab lymphadenectomy [180] | Distance computation for Euclidean arm measurements, user evaluation of AR alignment accuracy. | Stereo-view capture of medical images acquired by robot and HD visualization. | Marker-based | AR-based HMD used to visualize the motion of surgical tip in an image-guided procedure. Image processing of CT scans to locate pixels of virtual marker placed in virtual scene. | Custom laparoscopic box trainer containing a piece of simulated tissue | No |
| Qian et al., Johns Hopkins University | Augmented reality assistance for minimally invasive surgery [181] | Point cloud generation for localization of markers, system evaluation using accuracy parameters such as frame rate, peg transfer experiment. | Overlay of point clouds on test anatomy. | Superposition/rigid marker-based | AR-based experimental setup for guiding of a surgical tool to a defect in anatomy. | General surgery | No |

Appendix B. Types of Neural Networks Used in Image Registration for AR Reconstruction in Surgery

| Authors | Model | Performance Metrics | Purpose | Accuracy | Optimization Algorithm | Equipment |
| --- | --- | --- | --- | --- | --- | --- |
| Von Atzigen et al. [80] | Stereo neural networks (adapted from YOLO) | Bending parameters such as axial displacement, reorientation, bending time, frame rate. | Markerless navigation and localization of pedicle screw heads. | 67.26% to 76.51% | Perspective-n-point algorithm and random sample consensus (RANSAC), SLAM. | Head-mounted AR device (HoloLens) with C++ |
| Doughty et al. [182] | SurgeonAssistNet composed of EfficientNet-Lite-B0 for feature extraction and gated recurrent unit RNN | Parameters of the GRU cell and dense layer, model size, inference time, accuracy, precision, and recall. | Evaluating the online performance of the HoloLens during virtual augmentation of anatomical landmarks. | 5.2× decrease in CPU inference time. | 7.4× fewer model parameters, 10.2× fewer FLOPs, and 3× less time for inference with respect to SV-RCNet. | Optical see-through head-mounted displays |
| Tanzi et al. [118] | CNN-based architectures such as UNet, ResNet, MobileNet for semantic segmentation of data | Intersection over union (IoU), Euclidean distance between points of interest, geodesic distance, number of iterations per second (it/s). | Semantic segmentation of intraoperative prostatectomy, for 3D reconstruction of virtual models to preserve nerves of the prostate. | IoU = 0.894 (σ = 0.076) compared to 0.339 (σ = 0.195). | CNN with encoder–decoder structure for real-time image segmentation and training of a dataset in Keras and TensorFlow. | In vivo robot-assisted radical prostatectomy using da Vinci surgical console |
| Brunet et al. [183] | Adapted UNet architecture for simulation of preoperative organs | Image registration frequency, latency between data acquisition, input displacements, stochastic gradients, target registration error (TRE). | Use of an artificial neural network to learn and predict mesh deformation in human anatomical boundaries. | Mean target registration error = 2.9 mm, 100× faster. | Immersed boundary methods (FEM, MJED, Multiplicative Jacobian Energy Decomposition) for discretization of non-linear material on mesh. | RGB-D cameras |
| Marahrens et al. [184] | Visual deep learning algorithms such as UNet, DC-Net | For autonomous robotic ultrasound using deep-learning-based control, for better kinematic sensing and orientation of the US probe with respect to the organ surface. | Semantic segmentation of vessel scans for organ deformation analysis using a dVRK and Philips L15-7io probe. | Final model Dice score of 0.887 as compared to 0.982 in [179]. | DC-Net with images in the propagation direction feed through, binary classification task, IMU-fused kinematics for trajectory comparison. | Philips L15-7io probe driven by US machine, dVRK software |
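The accuracy figures in the table above rest on a few standard metrics: intersection over union (IoU), the Dice score, and target registration error (TRE). The following is a minimal sketch of how they are commonly computed, assuming binary segmentation masks flattened into sequences of 0/1 values and 3D target points given in millimetres. The function names and sample values are illustrative only and are not taken from the cited studies.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in millimetres (assumed unit)


def _check_same_length(a: Sequence, b: Sequence) -> None:
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")


def iou(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Intersection over union of two flattened binary masks."""
    _check_same_length(pred, truth)
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    union = sum(1 for p, t in zip(pred, truth) if p or t)
    # Two empty masks agree perfectly by convention.
    return inter / union if union else 1.0


def dice(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice score: twice the overlap divided by the total foreground size."""
    _check_same_length(pred, truth)
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    return 2 * inter / total if total else 1.0


def target_registration_error(
    registered: Sequence[Point], reference: Sequence[Point]
) -> float:
    """Mean Euclidean distance between registered and reference targets."""
    _check_same_length(registered, reference)
    if not registered:
        raise ValueError("at least one target point is required")
    distances = [math.dist(a, b) for a, b in zip(registered, reference)]
    return sum(distances) / len(distances)


if __name__ == "__main__":
    # Hypothetical toy data, for illustration only.
    predicted_mask = [1, 1, 0, 0, 1, 0]
    ground_truth = [1, 0, 0, 1, 1, 0]
    print("IoU :", iou(predicted_mask, ground_truth))
    print("Dice:", dice(predicted_mask, ground_truth))
    print("TRE :", target_registration_error(
        [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
        [(0.0, 0.0, 3.0), (1.0, 4.0, 0.0)],
    ))
```

IoU and Dice both measure mask overlap but are not interchangeable: for the same pair of masks, Dice is never lower than IoU, so values reported under the two metrics should not be compared directly.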

References

- Chen, B.; Marvin, S.; While, A. Containing COVID-19 in China: AI and the robotic restructuring of future cities. Dialogues Hum. Geogr. 2020, 10, 238–241.

- Raje, S.; Reddy, N.; Jerbi, H.; Randhawa, P.; Tsaramirsis, G.; Shrivas, N.V.; Pavlopoulou, A.; Stojmenović, M.; Piromalis, D. Applications of Healthcare Robots in Combating the COVID-19 Pandemic. Appl. Bionics Biomech. 2021, 2021, 7099510.

- Leal Ghezzi, T.; Campos Corleta, O. 30 years of robotic surgery. World J. Surg. 2016, 40, 2550–2557.

- Wörn, H.; Mühling, J. Computer- and robot-based operation theatre of the future in cranio-facial surgery. Int. Congr. Ser. 2001, 1230, 753–759.

- VisAR: Augmented Reality Surgical Navigation. Available online: https://www.novarad.net/visar (accessed on 6 March 2022).

- Proximie: Saving Lives by Sharing the World’s Best Clinical Practice. Available online: https://www.proximie.com/ (accessed on 6 March 2022).

- Haidegger, T. Autonomy for Surgical Robots: Concepts and Paradigms. IEEE Trans. Med. Robot. Bionics 2019, 1, 65–76.

- Attanasio, A.; Scaglioni, B.; De Momi, E.; Fiorini, P.; Valdastri, P. Autonomy in surgical robotics. Annu. Rev. Control Robot. Auton. Syst. 2021, 4, 651–679.

- Ryu, J.; Joo, H.; Woo, J. The safety design concept for surgical robot employing degree of autonomy. In Proceedings of the 2017 17th International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 18–21 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1918–1921.

- IEC 80601-2-77:2019. Medical Electrical Equipment—Part 2-77: Particular Requirements for the Basic Safety and Essential Performance of Robotically Assisted Surgical Equipment. Available online: https://www.iso.org/standard/68473.html (accessed on 20 March 2023).

- IEC 60601-1-11:2015. Medical Electrical Equipment—Part 1-11: General Requirements for Basic Safety and Essential Performance—Collateral Standard: Requirements for Medical Electrical Equipment and Medical Electrical Systems Used in the Home Healthcare Environment. Available online: https://www.iso.org/standard/65529.html (accessed on 20 March 2023).

- Simaan, N.; Yasin, R.M.; Wang, L. Medical technologies and challenges of robot-assisted minimally invasive intervention and diagnostics. Annu. Rev. Control Robot. Auton. Syst. 2018, 1, 465–490.

- Hoeckelmann, M.; Rudas, I.J.; Fiorini, P.; Kirchner, F.; Haidegger, T. Current capabilities and development potential in surgical robotics. Int. J. Adv. Robot. Syst. 2015, 12, 61.

- Van Krevelen, D.; Poelman, R. Augmented Reality: Technologies, Applications, and Limitations; Department of Computer Sciences, Vrije University Amsterdam: Amsterdam, The Netherlands, 2007.

- Microsoft. HoloLens 2; Microsoft: Redmond, WA, USA, 2019; Available online: https://www.microsoft.com/en-us/hololens (accessed on 10 March 2022).

- Peugnet, F.; Dubois, P.; Rouland, J.F. Virtual reality versus conventional training in retinal photocoagulation: A first clinical assessment. Comput. Aided Surg. 1998, 3, 20–26.

- Khor, W.S.; Baker, B.; Amin, K.; Chan, A.; Patel, K.; Wong, J. Augmented and virtual reality in surgery-the digital surgical environment: Applications, limitations and legal pitfalls. Ann. Transl. Med. 2016, 4, 454.

- Oculus.com. Oculus Rift S: PC-Powered VR Gaming Headset|Oculus. 2022. Available online: https://www.oculus.com/rift-s/?locale=en_GB (accessed on 6 April 2022).

- MetaQuest. Available online: https://www.oculus.com/experiences/quest/?locale=en_GB (accessed on 10 March 2022).

- Limmer, M.; Forster, J.; Baudach, D.; Schüle, F.; Schweiger, R.; Lensch, H.P.A. Robust Deep-Learning-Based Road-Prediction for Augmented Reality Navigation Systems at Night. In Proceedings of the IEEE 19th International Conference on Intelligent Transportation Systems, Rio de Janeiro, Brazil, 1–4 November 2016; pp. 1888–1895.

- Chen, C.; Zhu, H.; Li, M.; You, S. A Review of Visual-Inertial Simultaneous Localization and Mapping from Filtering-Based and Optimization-Based Perspectives. Robotics 2018, 7, 45.

- Venkatesan, M.; Mohan, H.; Ryan, J.R.; Schürch, C.M.; Nolan, G.P.; Frakes, D.H.; Coskun, A.F. Virtual and augmented reality for biomedical applications. Cell Rep. Med. 2021, 2, 100348.

- Nilsson, N.J. The Quest for Artificial Intelligence: A History of Ideas and Achievements; Cambridge University Press: Cambridge, UK, 2009.

- Kerr, B.; O’Leary, J. The training of the surgeon: Dr. Halsted’s greatest legacy. Am. Surg. 1999, 65, 1101–1102.

- Milgram, P.; Takemura, H.; Utsumi, A.; Kishino, F. Augmented Reality: A class of displays on the reality-virtuality continuum. In Proceedings of the Photonics for Industrial Applications, Boston, MA, USA, 31 October–4 November 1994.

- Azuma, R.T. A survey of augmented reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385.

- Kress, B.C.; Cummings, W.J. Optical architecture of HoloLens mixed reality headset. In Digital Optical Technologies; SPIE: Bellingham, WA, USA, 2017; Volume 10335, pp. 124–133.

- Kendoul, F. Towards a Unified Framework for UAS Autonomy and Technology Readiness Assessment (ATRA). In Autonomous Control Systems and Vehicles; Nonami, K., Kartidjo, M., Yoon, K.J., Budiyono, A., Eds.; Springer: Tokyo, Japan, 2013; pp. 55–71.

- Pott, P.P.; Scharf, H.P.; Schwarz, M.L. Today’s state of the art in surgical robotics. Comput. Aided Surg. 2005, 10, 101–132.

- Tsuda, S.; Kudsi, O.Y. Robotic Assisted Minimally Invasive Surgery; Springer: Cham, Switzerland, 2018.

- Barcali, E.; Iadanza, E.; Manetti, L.; Francia, P.; Nardi, C.; Bocchi, L. Augmented Reality in Surgery: A Scoping Review. Appl. Sci. 2022, 12, 6890.

- Brito, P.Q.; Stoyanova, J. Marker versus markerless augmented reality. Which has more impact on users? Int. J. Hum. Comput. Interact. 2018, 34, 819–833.

- Estrada, J.; Paheding, S.; Yang, X.; Niyaz, Q. Deep-Learning-Incorporated Augmented Reality Application for Engineering Lab Training. Appl. Sci. 2022, 12, 5159.

- Rothberg, J.M.; Ralston, T.S.; Rothberg, A.G.; Martin, J.; Zahorian, J.S.; Alie, S.A.; Sanchez, N.J.; Chen, K.; Chen, C.; Thiele, K.; et al. Ultrasound-on-chip platform for medical imaging, analysis, and collective intelligence. Proc. Natl. Acad. Sci. USA 2021, 118, e2019339118.

- Alam, M.S.; Gunawan, T.; Morshidi, M.; Olanrewaju, R. Pose estimation algorithm for mobile augmented reality based on inertial sensor fusion. Int. J. Electr. Comput. Eng. 2022, 12, 3620–3631.

- Attivissimo, F.; Lanzolla, A.M.L.; Carlone, S.; Larizza, P.; Brunetti, G. A novel electromagnetic tracking system for surgery navigation. Comput. Assist. Surg. 2018, 23, 42–52.

- Lee, D.; Yu, H.W.; Kim, S.; Yoon, J.; Lee, K.; Chai, Y.J.; Choi, Y.J.; Koong, H.-J.; Lee, K.E.; Cho, H.S.; et al. Vision-based tracking system for augmented reality to localize recurrent laryngeal nerve during robotic thyroid surgery. Sci. Rep. 2020, 10, 8437.

- Scaradozzi, D.; Zingaretti, S.; Ferrari, A.J.S.C. Simultaneous localization and mapping (SLAM) robotics techniques: A possible application in surgery. Shanghai Chest 2018, 2, 5.

- Konolige, K.; Bowman, J.; Chen, J.D.; Mihelich, P.; Calonder, M.; Lepetit, V.; Fua, P. View-based maps. Int. J. Robot. Res. 2010, 29, 941–957.

- Cheein, F.A.; Lopez, N.; Soria, C.M.; di Sciascio, F.A.; Lobo Pereira, F.; Carelli, R. SLAM algorithm applied to robotics assistance for navigation in unknown environments. J. Neuroeng. Rehabil. 2010, 7, 10.

- Geist, E.; Shimada, K. Position error reduction in a mechanical tracking linkage for arthroscopic hip surgery. Int. J. Comput. Assist. Radiol. Surg. 2011, 6, 693–698.

- Bucknor, B.; Lopez, C.; Woods, M.J.; Aly, H.; Palmer, J.W.; Rynk, E.F. Electromagnetic Tracking with Augmented Reality Systems. U.S. Patent and Trademark Office. U.S. Patent No. US10948721B2, 16 March 2021. Available online: https://patents.google.com/patent/US10948721B2/en (accessed on 23 March 2023).

- Pagador, J.B.; Sánchez, L.F.; Sánchez, J.A.; Bustos, P.; Moreno, J.; Sánchez-Margallo, F.M. Augmented reality haptic (ARH): An approach of electromagnetic tracking in minimally invasive surgery. Int. J. Comput. Assist. Radiol. Surg. 2011, 6, 257–263.

- Liu, S.; Feng, Y. Real-time fast-moving object tracking in severely degraded videos captured by unmanned aerial vehicle. Int. J. Adv. Robot. Syst. 2018, 15, 1–10.

- Diaz, R.; Yoon, J.; Chen, R.; Quinones-Hinojosa, A.; Wharen, R.; Komotar, R. Real-time video-streaming to surgical loupe mounted head-up display for navigated meningioma resection. Turk. Neurosurg. 2017, 28, 682–688.

- Zhu, S.; Morin, L.; Pressigout, M.; Moreau, G.; Servières, M. Video/GIS registration system based on skyline matching method. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, VIC, Australia, 15–18 September 2013; pp. 3632–3636.

- Amarillo, A.; Oñativia, J.; Sanchez, E. RoboTracker: Collaborative robotic assistant device with electromechanical patient tracking for spinal surgery. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 1312–1317.

- Zhou, Z.; Wu, B.; Duan, J.; Zhang, X.; Zhang, N.; Liang, Z. Optical surgical instrument tracking system based on the principle of stereo vision. J. Biomed. Opt. 2017, 22, 65005.

- Sorriento, A.; Porfido, M.B.; Mazzoleni, S.; Calvosa, G.; Tenucci, M.; Ciuti, G.; Dario, P. Optical and Electromagnetic Tracking Systems for Biomedical Applications: A Critical Review on Potentialities and Limitations. IEEE Rev. Biomed. Eng. 2020, 13, 212–232.

- Sirokai, B.; Kiss, M.; Kovács, L.; Benyó, B.I.; Benyó, Z.; Haidegger, T. Best Practices in Electromagnetic Tracking System Assessment. 2012. Available online: https://repozitorium.omikk.bme.hu/bitstream/handle/10890/4783/137019.pdf?sequence=1 (accessed on 22 March 2023).

- Pfister, S.T. Algorithms for Mobile Robot Localization and Mapping, Incorporating Detailed Noise Modeling and Multi-Scale Feature Extraction. Ph.D. Thesis, California Institute of Technology, Pasadena, CA, USA, 2006.

- Komorowski, J.; Rokita, P. Camera Pose Estimation from Sequence of Calibrated Images. arXiv 2018, arXiv:1809.11066.

- Ghasemi, Y.; Jeong, H.; Choi, S.H.; Park, K.B.; Lee, J.Y. Deep learning-based object detection in augmented reality: A systematic review. Comput. Ind. 2022, 139, 103661.

- Lee, T.; Jung, C.; Lee, K.; Seo, S. A study on recognizing multi-real world object and estimating 3D position in augmented reality. J. Supercomput. 2022, 78, 7509–7528.

- Portalés, C.; Gimeno, J.; Salvador, A.; García-Fadrique, A.; Casas-Yrurzum, S. Mixed Reality Annotation of Robotic-Assisted Surgery videos with real-time tracking and stereo matching. Comput. Graph. 2023, 110, 125–140.

- Yavas, G.; Caliskan, K.E.; Cagli, M.S. Three-dimensional-printed marker-based augmented reality neuronavigation: A new neuronavigation technique. Neurosurg. Focus 2021, 51, E20.

- Van Duren, B.H.; Sugand, K.; Wescott, R.; Carrington, R.; Hart, A. Augmented reality fluoroscopy simulation of the guide-wire insertion in DHS surgery: A proof of concept study. Med. Eng. Phys. 2018, 55, 52–59.

- Luciano, C.J.; Banerjee, P.P.; Bellotte, B.; Oh, G.M.; Lemole, M., Jr.; Charbel, F.T.; Roitberg, B. Learning retention of thoracic pedicle screw placement using a high-resolution augmented reality simulator with haptic feedback. Neurosurgery 2011, 69 (Suppl. Operative), ons14–ons19, discussion ons19.

- Virtual Reality Simulations in Healthcare. Available online: https://www.forbes.com/sites/forbestechcouncil/2022/01/24/virtual-reality-simulations-in-healthcare/?sh=46cb0870382a (accessed on 2 May 2022).

- Hou, L.; Dong, X.; Li, K.; Yang, C.; Yu, Y.; Jin, X.; Shang, S. Comparison of Augmented Reality-assisted and Instructor-assisted Cardiopulmonary Resuscitation: A Simulated Randomized Controlled Pilot Trial. Clin. Simul. Nurs. 2022, 68, 9–18.

- Liu, W.P.; Richmon, J.D.; Sorger, J.M.; Azizian, M.; Taylor, R.H. Augmented reality and cone beam CT guidance for transoral robotic surgery. J. Robotic Surg. 2015, 9, 223–233.

- Taha, M.; Sayed, M.; Zayed, H. Digital Vein Mapping Using Augmented Reality. Int. J. Intell. Eng. Syst. 2020, 13, 512–521.

- Kuzhagaliyev, T.; Clancy, N.T.; Janatka, M.; Tchaka, K.; Vasconcelos, F.; Clarkson, M.J.; Gurusamy, K.; Hawkes, D.J.; Davidson, B.; Stoyanov, D. Augmented Reality Needle Ablation Guidance Tool for Irreversible Electroporation in the Pancreas. In Medical Imaging 2018: Image-Guided Procedures, Robotic Interventions, and Modeling; Proc. SPIE: Houston, TX, USA, 2018; Volume 10576.

- AccuVein® Vein Visualization: The Future of Healthcare Is Here. Available online: https://www.accuvein.com/why-accuvein/ar/ (accessed on 1 May 2022).

- NextVein. Available online: https://nextvein.com (accessed on 1 May 2022).

- Ai, D.; Yang, J.; Fan, J.; Zhao, Y.; Song, X.; Shen, J.; Shao, L.; Wang, Y. Augmented reality based real-time subcutaneous vein imaging system. Biomed. Opt. Express 2016, 7, 2565–2585.

- Kästner, L.; Frasineanu, V.; Lambrecht, J. A 3D-Deep-Learning-based Augmented Reality Calibration Method for Robotic Environments using Depth Sensor Data. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 1135–1141.

- Von Atzigen, M.; Liebmann, F.; Hoch, A.; Miguel Spirig, J.; Farshad, M.; Snedeker, J.; Fürnstahl, P. Marker-free surgical navigation of rod bending using a stereo neural network and augmented reality in spinal fusion. Med. Image Anal. 2022, 77, 102365.

- Pratt, P.; Ives, M.; Lawton, G.; Simmons, J.; Radev, N.; Spyropoulou, L.; Amiras, D. Through the HoloLens™ looking glass: Augmented reality for extremity reconstruction surgery using 3D vascular models with perforating vessels. Eur. Radiol. Exp. 2018, 2, 2.

- Thøgersen, M.; Andoh, J.; Milde, C.; Graven-Nielsen, T.; Flor, H.; Petrini, L. Individualized augmented reality training reduces phantom pain and cortical reorganization in amputees: A proof of concept study. J. Pain 2020, 21, 1257–1269.

- Rothgangel, A.; Bekrater-Bodmann, R. Mirror therapy versus augmented/virtual reality applications: Towards a tailored mechanism-based treatment for phantom limb pain. Pain Manag. 2019, 9, 151–159.

- Mischkowski, R.A.; Zinser, M.J.; Kubler, A.C.; Krug, B.; Seifert, U.; Zoller, J.E. Application of an augmented reality tool for maxillary positioning in orthognathic surgery: A feasibility study. J. Craniomaxillofac. Surg. 2006, 34, 478–483.

- Wang, J.; Suenaga, H.; Hoshi, K.; Yang, L.; Kobayashi, E.; Sakuma, I.; Liao, H. Augmented reality navigation with automatic marker-free image registration using 3-D image overlay for dental surgery. IEEE Trans. Bio Med. Eng. 2014, 61, 1295–1304.

- Liu, K.; Gao, Y.; Abdelrehem, A.; Zhang, L.; Chen, X.; Xie, L.; Wang, X. Augmented reality navigation method for recontouring surgery of craniofacial fibrous dysplasia. Sci. Rep. 2021, 11, 10043.

- Pfefferle, M.; Shahub, S.; Shahedi, M.; Gahan, J.; Johnson, B.; Le, P.; Vargas, J.; Judson, B.O.; Alshara, Y.; Li, O.; et al. Renal biopsy under augmented reality guidance. In Proceedings of the SPIE Medical Imaging, Houston, TX, USA, 16 March 2020.

- Nicolau, S.; Soler, L.; Mutter, D.; Marescaux, J. Augmented reality in laparoscopic surgical oncology. Surg. Oncol. 2011, 20, 189–201.

- Salah, Z.; Preim, B.; Elolf, E.; Franke, J.; Rose, G. Improved navigated spine surgery utilizing augmented reality visualization. In Bildverarbeitung für die Medizin; Springer: Berlin/Heidelberg, Germany, 2011; pp. 319–323.

- Pessaux, P.; Diana, M.; Soler, L.; Piardi, T.; Mutter, D.; Marescaux, J. Towards cybernetic surgery: Robotic and augmented reality-assisted liver segmentectomy. Langenbecks Arch. Surg. 2015, 400, 381–385.

- Hussain, R.; Lalande, A.; Marroquin, R.; Guigou, C.; Grayeli, A.B. Video-based augmented reality combining CT-scan and instrument position data to microscope view in middle ear surgery. Sci. Rep. 2020, 10, 6767.

- MURAB Project. Available online: https://www.murabproject.eu (accessed on 30 April 2022).

- Zeng, F.; Wei, F. Hole filling algorithm based on contours information. In Proceedings of the 2nd International Conference on Information Science and Engineering, Hangzhou, China, 4–6 December 2010.

- Chen, X.; Xu, L.; Wang, Y.; Wang, H.; Wang, F.; Zeng, X.; Wang, Q.; Egger, J. Development of a surgical navigation system based on augmented reality using an optical see-through head-mounted display. J. Biomed. Inf. 2015, 55, 124–131.

- Ma, L.; Zhao, Z.; Chen, F.; Zhang, B.; Fu, L.; Liao, H. Augmented reality surgical navigation with ultrasound-assisted registration for pedicle screw placement: A pilot study. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 2205–2215.

- Hajek, J.; Unberath, M.; Fotouhi, J.; Bier, B.; Lee, S.C.; Osgood, G.; Maier, A.; Navab, N. Closing the Calibration Loop: An Inside-out-tracking Paradigm for Augmented Reality in Orthopedic Surgery. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2018; Frangi, A., Schnabel, J., Davatzikos, C., Alberola-López, C., Fichtinger, G., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 11073.

- Elmi-Terander, A.; Nachabe, R.; Skulason, H.; Pedersen, K.; Soderman, M.; Racadio, J.; Babic, D.; Gerdhem, P.; Edstrom, E. Feasibility and accuracy of thoracolumbar minimally invasive pedicle screw placement with augmented reality navigation technology. Spine 2018, 43, 1018–1023.

- Dickey, R.M.; Srikishen, N.; Lipshultz, L.I.; Spiess, P.E.; Carrion, R.E.; Hakky, T.S. Augmented reality assisted surgery: A urologic training tool. Asian J. Androl. 2016, 18, 732–734.

- Wu, J.R.; Wang, M.L.; Liu, K.C.; Hu, M.H.; Lee, P.Y. Real-time advanced spinal surgery via visible patient model and augmented reality system. Comput. Methods Programs Biomed. 2014, 113, 869–881.

- Wen, R.; Yang, L.; Chui, C.K.; Lim, K.B.; Chang, S. Intraoperative Visual Guidance and Control Interface for Augmented Reality Robotic Surgery; IEEE: Piscataway, NJ, USA, 2010; pp. 947–952.

- Simoes, M.; Cao, C.G. Leonardo: A first step towards an interactive decision aid for port-placement in robotic surgery. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics, Manchester, UK, 13–16 October 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 491–496.

- Burström, G.; Nachabe, R.; Persson, O.; Edström, E.; Elmi Terander, A. Augmented and Virtual Reality Instrument Tracking for Minimally Invasive Spine Surgery: A Feasibility and Accuracy Study. Spine 2019, 44, 1097–1104.

- Lee, D.; Kong, H.J.; Kim, D.; Yi, J.W.; Chai, Y.J.; Lee, K.E.; Kim, H.C. Preliminary study on application of augmented reality visualization in robotic thyroid surgery. Ann. Surg. Treat Res. 2018, 95, 297–302.

- Agten, C.A.; Dennler, C.; Rosskopf, A.B.; Jaberg, L.; Pfirrmann, C.W.A.; Farshad, M. Augmented Reality-Guided Lumbar Facet Joint Injections. Investig. Radiol. 2018, 53, 495–498.

- Ghaednia, H.; Fourman, M.S.; Lans, A.; Detels, K.; Dijkstra, H.; Lloyd, S.; Sweeney, A.; Oosterhoff, J.H.; Schwab, J.H. Augmented and virtual reality in spine surgery, current applications and future potentials. Spine J. 2021, 21, 1617–1625.

- Nachabe, R.; Strauss, K.; Schueler, B.; Bydon, M. Radiation dose and image quality comparison during spine surgery with two different, intraoperative 3D imaging navigation systems. J. Appl. Clin. Med. Phys. 2019, 20, 136–145.

- Londoño, M.C.; Danger, R.; Giral, M.; Soulillou, J.P.; Sánchez-Fueyo, A.; Brouard, S. A need for biomarkers of operational tolerance in liver and kidney transplantation. Am. J. Transplant. 2012, 12, 1370–1377.

- Georgi, M.; Patel, S.; Tandon, D.; Gupta, A.; Light, A.; Nathan, A. How is the Digital Surgical Environment Evolving? The Role of Augmented Reality in Surgery and Surgical Training. Preprints.org 2021, 2021100048.

- Calhoun, V.D.; Adali, T.; Giuliani, N.R.; Pekar, J.J.; Kiehl, K.A.; Pearlson, G.D. Method for multimodal analysis of independent source differences in schizophrenia: Combining gray matter structural and auditory oddball functional data. Hum. Brain Mapp. 2006, 27, 47–62.

- Kronman, A.; Joskowicz, L. Image segmentation errors correction by mesh segmentation and deformation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2013; pp. 206–213.

- Tamadazte, B.; Voros, S.; Boschet, C.; Cinquin, P.; Fouard, C. Augmented 3-d view for laparoscopy surgery. In Workshop on Augmented Environments for Computer-Assisted Interventions; Springer: Berlin/Heidelberg, Germany, 2012; pp. 117–131.

- Wang, A.; Wang, Z.; Lv, D.; Fang, Z. Research on a novel non-rigid registration for medical image based on SURF and APSO. In Proceedings of the 2010 3rd International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010; IEEE: Piscataway, NJ, USA, 2010; Volume 6, pp. 2628–2633.

- Pandey, P.; Guy, P.; Hodgson, A.J.; Abugharbieh, R. Fast and automatic bone segmentation and registration of 3D ultrasound to CT for the full pelvic anatomy: A comparative study. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 1515–1524. [Google Scholar] [CrossRef] [PubMed]

- Hacihaliloglu, I. Ultrasound imaging and segmentation of bone surfaces: A review. Technology 2017, 5, 74–80. [Google Scholar] [CrossRef]

- El-Hariri, H.; Pandey, P.; Hodgson, A.J.; Garbi, R. Augmented reality visualisation for orthopaedic surgical guidance with pre-and intra-operative multimodal image data fusion. Healthc. Technol. Lett. 2018, 5, 189–193. [Google Scholar] [CrossRef]

- Wittmann, W.; Wenger, T.; Zaminer, B.; Lueth, T.C. Automatic correction of registration errors in surgical navigation systems. IEEE Trans. Biomed. Eng. 2011, 58, 2922–2930. [Google Scholar] [CrossRef]

- Zhang, Y.; Wang, K.; Jiang, J.; Tan, Q. Research on intraoperative organ motion tracking method based on fusion of inertial and electromagnetic navigation. IEEE Access 2021, 9, 49069–49081. [Google Scholar] [CrossRef]

- Jiang, Z.; Gao, Z.; Chen, X.; Sun, W. Remote Haptic Collaboration for Virtual Training of Lumbar Puncture. J. Comput. 2013, 8, 3103–3110. [Google Scholar] [CrossRef]

- Wu, C.; Wan, J.W. Multigrid methods with newton-gauss-seidel smoothing and constraint preserving interpolation for obstacle problems. Numer. Math. Theory Methods Appl. 2015, 8, 199–219. [Google Scholar] [CrossRef]

- Livyatan, H.; Yaniv, Z.; Joskowicz, L. Gradient-based 2-D/3-D rigid registration of fluoroscopic X-ray to CT. IEEE Trans. Med. Imaging 2003, 22, 1395–1406. [Google Scholar] [CrossRef] [PubMed]

- Martínez, H.; Skournetou, D.; Hyppölä, J.; Laukkanen, S.; Heikkilä, A. Drivers and Bottlenecks in the Adoption of Augmented Reality Applications. J. Multimed. Theory Appl. 2014, 2. [Google Scholar]

- Govers, F.X. Artificial Intelligence for Robotics: Build Intelligent Robots that Perform Human Tasks Using AI Techniques; Packt Publishing Limited: Birmingham, UK, 2018. [Google Scholar]

- Conti, A.; Guerra, M.; Dardari, D.; Decarli, N.; Win, M.Z. Network experimentation for cooperative localization. IEEE J. Sel. Areas Commun. 2012, 30, 467–475. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, J.; Wang, T.; Ji, X.; Shen, Y.; Sun, Z.; Zhang, X. A markerless automatic deformable registration framework for augmented reality navigation of laparoscopy partial nephrectomy. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1285–1294. [Google Scholar] [CrossRef] [PubMed]

- Garon, M.; Lalonde, J.F. Deep 6-DOF tracking. IEEE Trans. Vis. Comput. Graph. 2017, 23, 2410–2418. [Google Scholar] [CrossRef] [PubMed]

- Abu Alhaija, H.; Mustikovela, S.K.; Mescheder, L.; Geiger, A.; Rother, C. Augmented reality meets deep learning for car instance segmentation in urban scenes. In Proceedings of the BMVC 2017 and Workshops, London, UK, 4–7 September 2017. [Google Scholar]

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660. [Google Scholar]

- Canalini, L.; Klein, J.; Miller, D.; Kikinis, R. Segmentation-based registration of ultrasound volumes for glioma resection in image-guided neurosurgery. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1697–1713. [Google Scholar] [CrossRef]

- Doughty, M.; Singh, K.; Ghugre, N.R. SurgeonAssist-Net: Towards Context-Aware Head-Mounted Display-Based Augmented Reality for Surgical Guidance. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2021; pp. 667–677. [Google Scholar]

- Tanzi, L.; Piazzolla, P.; Porpiglia, F.; Vezzetti, E. Real-time deep learning semantic segmentation during intra-operative surgery for 3D augmented reality assistance. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1435–1445. [Google Scholar] [CrossRef]

- Klein, G.; Murray, D. Parallel Tracking and Mapping for Small AR Workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Montiel, J.M.; Tardós, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar] [CrossRef]

- Adhami, L.; Maniere, E.C. Optimal Planning for Minimally Invasive Surgical Robots. IEEE Trans. Robot. Autom. 2003, 19, 854–863. [Google Scholar] [CrossRef]

- Gonzalez-Barbosa, J.J.; Garcia-Ramirez, T.; Salas, J.; Hurtado-Ramos, J.B. Optimal camera placement for total coverage. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 844–848. [Google Scholar]

- Yildiz, E.; Akkaya, K.; Sisikoglu, E.; Sir, M.Y. Optimal camera placement for providing angular coverage in wireless video sensor networks. IEEE Trans. Comput. 2013, 63, 1812–1825. [Google Scholar] [CrossRef]

- Gadre, S.Y.; Rosen, E.; Chien, G.; Phillips, E.; Tellex, S.; Konidaris, G. End-User Robot Programming Using Mixed Reality. In Proceedings of the International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 2707–2713. [Google Scholar]

- Fotouhi, J.; Song, T.; Mehrfard, A.; Taylor, G.; Wang, Q.; Xian, F.; Martin-Gomez, A.; Fuerst, B.; Armand, M.; Unberath, M.; et al. Reflective-ar display: An interaction methodology for virtual-to-real alignment in medical robotics. IEEE Robot. Autom. Lett. 2020, 5, 2722–2729. [Google Scholar] [CrossRef]

- Fang, H.C.; Ong, S.K.; Nee, A.Y.C. Orientation planning of robot end-effector using augmented reality. Int. J. Adv. Manuf. Technol. 2013, 67, 2033–2049. [Google Scholar] [CrossRef]

- Bade, A.; Devadas, S.; Daman, D.; Suaib, N.M. Modeling and Simulation of Collision Response between Deformable Objects. Available online: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=9d62cae770ce2e6e5d76013782cee973a3be87e7 (accessed on 22 March 2023).

- Sun, L.W.; Yeung, C.K. Port placement and pose selection of the da Vinci surgical system for collision-free intervention based on performance optimization. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 1951–1956. [Google Scholar]

- Lee, I.; Lee, K.K.; Sim, O.; Woo, K.S.; Buyoun, C.; Oh, J.H. Collision detection system for the practical use of the humanoid robot. In Proceedings of the IEEE-RAS 15th International Conference on Humanoid Robots, Seoul, Republic of Korea, 3–5 November 2015; pp. 972–976. [Google Scholar]

- Zhang, Z.; Xin, Y.; Liu, B.; Li, W.X.; Lee, K.H.; Ng, C.F.; Stoyanov, D.; Cheung, R.C.C.; Kwok, K.-W. FPGA-Based High-Performance Collision Detection: An Enabling Technique for Image-Guided Robotic Surgery. Front. Robot. AI 2016, 3, 51. [Google Scholar] [CrossRef]

- Coste-Manière, È.; Olender, D.; Kilby, W.; Schulz, R.A. Robotic whole body stereotactic radiosurgery: Clinical advantages of the CyberKnife® integrated system. Int. J. Med. Robot. Comput. Assist. Surg. 2005, 1, 28–39. [Google Scholar] [CrossRef]

- Weede, O.; Mehrwald, M.; Wörn, H. Knowledge-based system for port placement and robot setup optimization in minimally invasive surgery. IFAC Proc. Vol. 2012, 45, 722–728. [Google Scholar] [CrossRef]

- Gao, S.; Lv, Z.; Fang, H. Robot-assisted and conventional freehand pedicle screw placement: A systematic review and meta-analysis of randomized controlled trials. Eur. Spine J. 2018, 27, 921–930. [Google Scholar] [CrossRef]

- Wang, L. Collaborative robot monitoring and control for enhanced sustainability. Int. J. Adv. Manuf. Technol. 2015, 81, 1433–1445. [Google Scholar] [CrossRef]

- Du, G.; Long, S.; Li, F.; Huang, X. Active Collision Avoidance for Human-Robot Interaction with UKF, Expert System, and Artificial Potential Field Method. Front. Robot. AI 2018, 5, 125. [Google Scholar] [CrossRef]

- Hongzhong, Z.; Fujimoto, H. Suppression of current quantization effects for precise current control of SPMSM using dithering techniques and Kalman filter. IEEE Trans. Ind. Inform. 2014, 10, 1361–1371. [Google Scholar] [CrossRef]

- Das, N.; Yip, M. Learning-based proxy collision detection for robot motion planning applications. IEEE Trans. Robot. 2020, 36, 1096–1114. [Google Scholar] [CrossRef]

- Torres, L.G.; Kuntz, A.; Gilbert, H.B.; Swaney, P.J.; Hendrick, R.J.; Webster, R.J.; Alterovitz, R. A motion planning approach to automatic obstacle avoidance during concentric tube robot teleoperation. In Proceedings of the IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 2361–2367. [Google Scholar]

- Killian, L.; Backhaus, J. Utilizing the RRT*-Algorithm for Collision Avoidance in UAV Photogrammetry Missions. 2021. Available online: https://arxiv.org/abs/2108.03863 (accessed on 22 March 2023).

- Ranne, A.; Clark, A.B.; Rojas, N. Augmented Reality-Assisted Reconfiguration and Workspace Visualization of Malleable Robots: Workspace Modification Through Holographic Guidance. IEEE Robot. Autom. Mag. 2022, 29, 10–21. [Google Scholar] [CrossRef]

- Lipton, J.I.; Fay, A.J.; Rus, D. Baxter’s homunculus: Virtual reality spaces for teleoperation in manufacturing. IEEE Robot. Autom. Lett. 2017, 3, 179–186. [Google Scholar] [CrossRef]

- Bolano, G.; Fu, Y.; Roennau, A.; Dillmann, R. Deploying Multi-Modal Communication Using Augmented Reality in a Shared Workspace. In Proceedings of the 2021 18th International Conference on Ubiquitous Robots (UR), Gangneung, Republic of Korea, 12–14 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 302–307. [Google Scholar]

- Da Col, T.; Caccianiga, G.; Catellani, M.; Mariani, A.; Ferro, M.; Cordima, G.; De Momi, E.; Ferrigno, G.; de Cobelli, O. Automating Endoscope Motion in Robotic Surgery: A Usability Study on da Vinci-Assisted Ex Vivo Neobladder Reconstruction. Front. Robot. AI 2021, 8, 707704. [Google Scholar] [CrossRef]

- Gao, W.; Tang, Q.; Yao, J.; Yang, Y. Automatic motion planning for complex welding problems by considering angular redundancy. Robot. Comput. Integr. Manuf. 2020, 62, 101862. [Google Scholar] [CrossRef]

- Zhang, Z.; Munawar, A.; Fischer, G.S. Implementation of a motion planning framework for the davinci surgical system research kit. In Proceedings of the Hamlyn Symposium on Medical Robotics, London, UK, 12–15 July 2014. [Google Scholar]

- Moon, H.C.; Park, S.J.; Kim, Y.D.; Kim, K.M.; Kang, H.; Lee, E.J.; Kim, M.-S.; Kim, J.W.; Kim, Y.H.; Park, C.-K.; et al. Navigation of frameless fixation for gamma knife radiosurgery using fixed augmented reality. Sci. Rep. 2022, 12, 4486. [Google Scholar] [CrossRef]

- Srinivasan, M.A.; Beauregard, G.L.; Brock, D.L. The impact of visual information on the haptic perception of stiffness in virtual environments. Proc. ASME Dyn. Syst. Control Div. 1996, 58, 555–559. [Google Scholar]

- Basdogan, C.; Ho, C.; Srinivasan, M.A.; Small, S.; Dawson, S. Force interactions in laparoscopic simulations: Haptic rendering of soft tissues. In Medicine Meets Virtual Reality; IOS Press: Amsterdam, The Netherlands, 1998; pp. 385–391. [Google Scholar]

- Latimer, C.W. Haptic Interaction with Rigid Objects Using Real-Time Dynamic Simulation. Ph.D. Thesis, Massachusetts Institute of Technology, Boston, MA, USA, 1997. [Google Scholar]

- Balaniuk, R.; Costa, I.; Salisbury, J. Long Elements Method for Simulation of Deformable Objects. US Patent 2003/0088389 A1, 8 May 2003. [Google Scholar]

- Okamura, A.M. Methods for haptic feedback in teleoperated robot-assisted surgery. Ind. Rob. 2004, 31, 499–508. [Google Scholar] [CrossRef]

- Westebring-Van Der Putten, E.; Goossens, R.; Jakimowicz, J.; Dankelman, J. Haptics in minimally invasive surgery—A review. Minim. Invasive Ther. Allied Technol. 2008, 17, 3–16. [Google Scholar] [CrossRef]

- Wurdemann, H.A.; Secco, E.L.; Nanayakkara, T.; Althoefer, K.; Mucha, L.; Rohr, K. Mapping tactile information of a soft manipulator to a haptic sleeve in RMIS. In Proceedings of the 3rd Joint Workshop on New Technologies for Computer and Robot Assisted Surgery, Verona, Italy, 11–13 September 2013; pp. 140–141. [Google Scholar]

- Li, M.; Konstantinova, J.; Secco, E.L.; Jiang, A.; Liu, H.; Nanayakkara, T.; Seneviratne, L.D.; Dasgupta, P.; Althoefer, K.; Wurdemann, H.A. Using visual cues to enhance haptic feedback for palpation on virtual model of soft tissue. Med. Biol. Eng. Comput. 2015, 53, 1177–1186. [Google Scholar] [CrossRef] [PubMed]

- Tsai, M.D.; Hsieh, M.S. Computer-based system for simulating spine surgery. In Proceedings of the 22nd IEEE International Symposium on Computer-Based Medical Systems, Albuquerque, NM, USA, 2–5 August 2009; pp. 1–8. [Google Scholar]

- Schendel, S.; Montgomery, K.; Sorokin, A.; Lionetti, G. A surgical simulator for planning and performing repair of cleft lips. J. Cranio-Maxillofac. Surg. 2005, 33, 223–228. [Google Scholar] [CrossRef] [PubMed]

- Olsson, P.; Nysjö, F.; Hirsch, J.M.; Carlbom, I.B. A haptics-assisted cranio-maxillofacial surgery planning system for restoring skeletal anatomy in complex trauma cases. Int. J. Comput. Assist. Radiol. Surg. 2013, 8, 887–894. [Google Scholar] [CrossRef] [PubMed]

- Richter, F.; Zhang, Y.; Zhi, Y.; Orosco, R.K.; Yip, M.C. Augmented reality predictive displays to help mitigate the effects of delayed telesurgery. In Proceedings of the International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 444–450. [Google Scholar]

- Ye, M.; Zhang, L.; Giannarou, S.; Yang, G.Z. Real-time 3D tracking of articulated tools for robotic surgery. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2016; pp. 386–394. [Google Scholar]

- Marohn, M.R.; Hanly, E.J. Twenty-first century surgery using twenty-first century technology: Surgical robotics. Curr. Surg. 2004, 61, 466–473. [Google Scholar] [CrossRef]

- Dake, D.K.; Ofosu, B.A. 5G enabled technologies for smart education. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 201–206. [Google Scholar] [CrossRef]

- Grieco, M.; Elmore, U.; Vignali, A.; Caristo, M.E.; Persiani, R. Surgical Training for Transanal Total Mesorectal Excision in a Live Animal Model: A Preliminary Experience. J. Laparoendosc. Adv. Surg. Tech. 2022, 32, 866–870. [Google Scholar] [CrossRef]

- Takahashi, Y.; Hakamada, K.; Morohashi, H.; Akasaka, H.; Ebihara, Y.; Oki, E.; Hirano, S.; Mori, M. Verification of delay time and image compression thresholds for telesurgery. Asian J. Endosc. Surg. 2022, 16, 255–261. [Google Scholar] [CrossRef]

- Sun, T.; He, X.; Li, Z. Digital twin in healthcare: Recent updates and challenges. Digit. Health 2023, 9, 20552076221149651. [Google Scholar] [CrossRef]

- Niederer, S.A.; Plank, G.; Chinchapatnam, P.; Ginks, M.; Lamata, P.; Rhode, K.S.; Rinaldi, C.A.; Razavi, R.; Smith, N.P. Length-dependent tension in the failing heart and the efficacy of cardiac resynchronization therapy. Cardiovasc. Res. 2011, 89, 336–343. [Google Scholar] [CrossRef]

- Lebras, A.; Boustia, F.; Janot, K.; Lepabic, E.; Ouvrard, M.; Fougerou-Leurent, C.; Ferre, J.-C.; Gauvrit, J.-Y.; Eugene, F. Rehearsals using patient-specific 3D-printed aneurysm models for simulation of endovascular embolization of complex intracranial aneurysms: 3D SIM study. J. Neuroradiol. 2021, 50, 86–92. [Google Scholar] [CrossRef]

- Hernigou, P.; Safar, A.; Hernigou, J.; Ferre, B. Subtalar axis determined by combining digital twins and artificial intelligence: Influence of the orientation of this axis for hindfoot compensation of varus and valgus knees. Int. Orthop. 2022, 46, 999–1007. [Google Scholar] [CrossRef]

- Diachenko, D.; Partyshev, A.; Pizzagalli, S.L.; Bondarenko, Y.; Otto, T.; Kuts, V. Industrial collaborative robot Digital Twin integration and control using Robot Operating System. J. Mach. Eng. 2022, 22, 57–67. [Google Scholar] [CrossRef]

- Riedel, P.; Riesner, M.; Wendt, K.; Aßmann, U. Data-Driven Digital Twins in Surgery utilizing Augmented Reality and Machine Learning. In Proceedings of the 2022 IEEE International Conference on Communications Workshops (ICC Workshops), Seoul, Republic of Korea, 16–20 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 580–585. [Google Scholar]

- Qian, L.; Wu, J.Y.; DiMaio, S.P.; Navab, N.; Kazanzides, P. A review of augmented reality in robotic-assisted surgery. IEEE Trans. Med. Robot. Bionics 2019, 2, 1–16. [Google Scholar] [CrossRef]

- Yang, Z.; Shang, J.; Liu, C.; Zhang, J.; Liang, Y. Identification of oral cancer in OCT images based on an optical attenuation model. Lasers Med. Sci. 2020, 35, 1999–2007. [Google Scholar] [CrossRef] [PubMed]

- SpineAssist: Robotic Guidance System for Assisting in Spine Surgery. Available online: https://www.summitspine.com/spineassist-robotic-guidance-system-for-assisting-in-spine-surgery-2/ (accessed on 12 April 2022).

- Renaissance. Available online: https://neurosurgicalassociatespc.com/mazor-robotics-renaissance-guidance-system/patient-information-about-renaissance/ (accessed on 12 April 2022).

- ROSA Spine. Available online: https://www.zimmerbiomet.lat/en/medical-professionals/robotic-solutions/rosa-spine.html (accessed on 12 April 2022).

- MAZOR X STEALTH EDITION: Robotic Guidance System for Spinal Surgery. Available online: https://www.medtronic.com/us-en/healthcare-professionals/products/spinal-orthopaedic/spine-robotics/mazor-x-stealth-edition.html (accessed on 12 April 2022).

- Flex Robotic System. Available online: https://novusarge.com/en/medical-products/flex-robotic-system/ (accessed on 12 April 2022).

- Medacta Announces First Surgeries in Japan with NextAR Augmented Reality Surgical Platform. Available online: https://www.surgicalroboticstechnology.com/news/medacta-announces-first-surgeries-in-japan-with-nextar-augmented-reality-surgical-platform/ (accessed on 10 June 2022).

- Sutherland, G.R.; McBeth, P.B.; Louw, D.F. NeuroArm: An MR compatible robot for microsurgery. In International Congress Series; Elsevier: Amsterdam, The Netherlands, 2003; Volume 1256, pp. 504–508. [Google Scholar]

- Ma, X.; Song, C.; Qian, L.; Liu, W.; Chiu, P.W.; Li, Z. Augmented reality-assisted autonomous view adjustment of a 6-DOF robotic stereo flexible endoscope. IEEE Trans. Med. Robot. Bionics 2022, 4, 356–367. [Google Scholar] [CrossRef]

- Forte, M.P.; Gourishetti, R.; Javot, B.; Engler, T.; Gomez, E.D.; Kuchenbecker, K.J. Design of interactive augmented reality functions for robotic surgery and evaluation in dry-lab lymphadenectomy. Int. J. Med. Robot. Comput. Assist. Surg. 2022, 18, e2351. [Google Scholar] [CrossRef]

- Qian, L.; Zhang, X.; Deguet, A.; Kazanzides, P. Aramis: Augmented reality assistance for minimally invasive surgery using a head-mounted display. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2019: 22nd International Conference, Shenzhen, China, 13–17 October 2019, Proceedings, Part V 22; Springer International Publishing: New York, NY, USA, 2019; pp. 74–82. [Google Scholar]

- Doughty, M.; Ghugre, N.R.; Wright, G.A. Augmenting performance: A systematic review of optical see-through head-mounted displays in surgery. J. Imaging 2022, 8, 203. [Google Scholar] [CrossRef]

- Brunet, J.N.; Mendizabal, A.; Petit, A.; Golse, N.; Vibert, E.; Cotin, S. Physics-based deep neural network for augmented reality during liver surgery. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2019; Springer: Cham, Switzerland, 2019; pp. 137–145. [Google Scholar]

- Marahrens, N.; Scaglioni, B.; Jones, D.; Prasad, R.; Biyani, C.S.; Valdastri, P. Towards Autonomous Robotic Minimally Invasive Ultrasound Scanning and Vessel Reconstruction on Non-Planar Surfaces. Front. Robot. AI 2022, 9, 178. [Google Scholar] [CrossRef] [PubMed]

| Technical Bottlenecks | Description |
|---|---|
| Compatibility with social practices | Wearable devices such as Google Glass may create privacy issues. |
| Complexity (user-friendliness or learning) | AR is easy for novice surgeons to learn and can improve their learning curve. |
| Lack of accuracy in alignment | Modern DL algorithms such as deep transfer learning and supervised and unsupervised learning are used to tackle the issues in real-to-virtual world mapping. Lighting conditions can be adjusted for better alignment. |
| Trialability to general public | Easily deployed but may be expensive to test in several regions simultaneously. |

| Author(s) | Collision Avoidance Technique | Learning Method | Accuracy |
|---|---|---|---|
| Wang [134] | Zero robot programming for vision-based human–robot interactions, linking two Kinect sensors for retrieval of robot pose in 3D from a robot mesh model. | Wise-ShopFloor framework is used to determine initial and final pose. | N/A |
| Du et al. [135] | Fast path planning using virtual potential fields, representing obstacles and targets, as well as Kinect sensors. | Human tracking using unscented Kalman filter, for mean and variance determination of a set of sigma points. | Lower avoidance time (>689.41 Hz). |
| Hongzhong and Fujimoto [136] | Preliminary filtering of mesh models to reduce the number of cuboids in experiment. Virtual fixtures known as active constraints used in generating resistive force. Automatic cube tessellation used for 3D point detection and collision avoidance. | Use of oriented bounding boxes (OBBs) and filtering algorithms: Separating Axis Test and Sweep and Prune. Use of field-programmable gate arrays to design a faster GPU system. | Frame rates of 17.5 k OBBs using a bit width of 20, update rate of 25 Hz compared to 1 kHz. |
| Das and Yip [137] | OMPL motion planning using standard geometric collision checkers such as proxy collision detectors. | Learning-based Fastron algorithm used to generate robot motion in complex obstacle-prone surroundings. | 100-times faster collision detection than C-space modeling. |
| Torres et al. [138] | Concentric tube robot teleoperation using automatic collision-avoidance roadmaps. | Rapidly exploring random graph (RRG) algorithm aids roadmap construction in maximum reachable insertion workspace. | Tip error between 0.18 mm and 0.21 mm of tip width. |
| Killian and Backhaus [139] | Multicopter collision avoidance by redirecting a drone onto a planned path; connects random nodes within a search space on a virtual line. | Use of the probabilistic RRT algorithm for collision detection. | Speed of up to 6 m/s. |
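
The virtual potential field idea listed in the table can be illustrated with a minimal 2D sketch. This is not the implementation from any of the cited works: it omits the Kinect sensing, unscented Kalman filter, and expert system components, and the function names (`potential_field_step`, `plan`), the gains `k_att` and `k_rep`, the influence radius `d0`, and the step size are all hypothetical values chosen for illustration. The goal exerts a linear attractive force, and each obstacle exerts a repulsive force only inside its influence radius.

```python
import math


def potential_field_step(pos, goal, obstacles,
                         k_att=1.0, k_rep=0.5, d0=1.0, step=0.05):
    """Take one gradient-descent step on an artificial potential field (2D)."""
    # Attractive force: pulls linearly toward the goal.
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        # Repulsive force: active only inside the influence radius d0.
        # Derived from U_rep = 0.5 * k_rep * (1/d - 1/d0)^2.
        if 0.0 < d < d0:
            mag = k_rep * (1.0 / d - 1.0 / d0) / (d * d)
            fx += mag * dx / d
            fy += mag * dy / d
    return (pos[0] + step * fx, pos[1] + step * fy)


def plan(start, goal, obstacles, max_iters=2000, tol=0.05):
    """Follow the field from start until within tol of the goal."""
    path = [start]
    pos = start
    for _ in range(max_iters):
        if math.dist(pos, goal) < tol:
            break
        pos = potential_field_step(pos, goal, obstacles)
        path.append(pos)
    return path


if __name__ == "__main__":
    # Hypothetical scene: one point obstacle slightly off the straight line.
    path = plan((0.0, 0.0), (2.0, 0.0), [(1.0, 0.3)])
    print(f"{len(path)} waypoints, final position {path[-1]}")
```

A known limitation of this family of methods is that the field can contain local minima, for example when an obstacle lies exactly on the line between the current position and the goal, so practical planners usually combine it with a sampling-based or roadmap method such as those in the other table rows.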

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Seetohul, J.; Shafiee, M.; Sirlantzis, K. Augmented Reality (AR) for Surgical Robotic and Autonomous Systems: State of the Art, Challenges, and Solutions. Sensors 2023, 23, 6202. https://doi.org/10.3390/s23136202