Considering that our approach was applied in two different scenarios—indoor short term and outdoor long term—we split this section into two subsections, one for each scenario.

3.1. Indoors Experiment

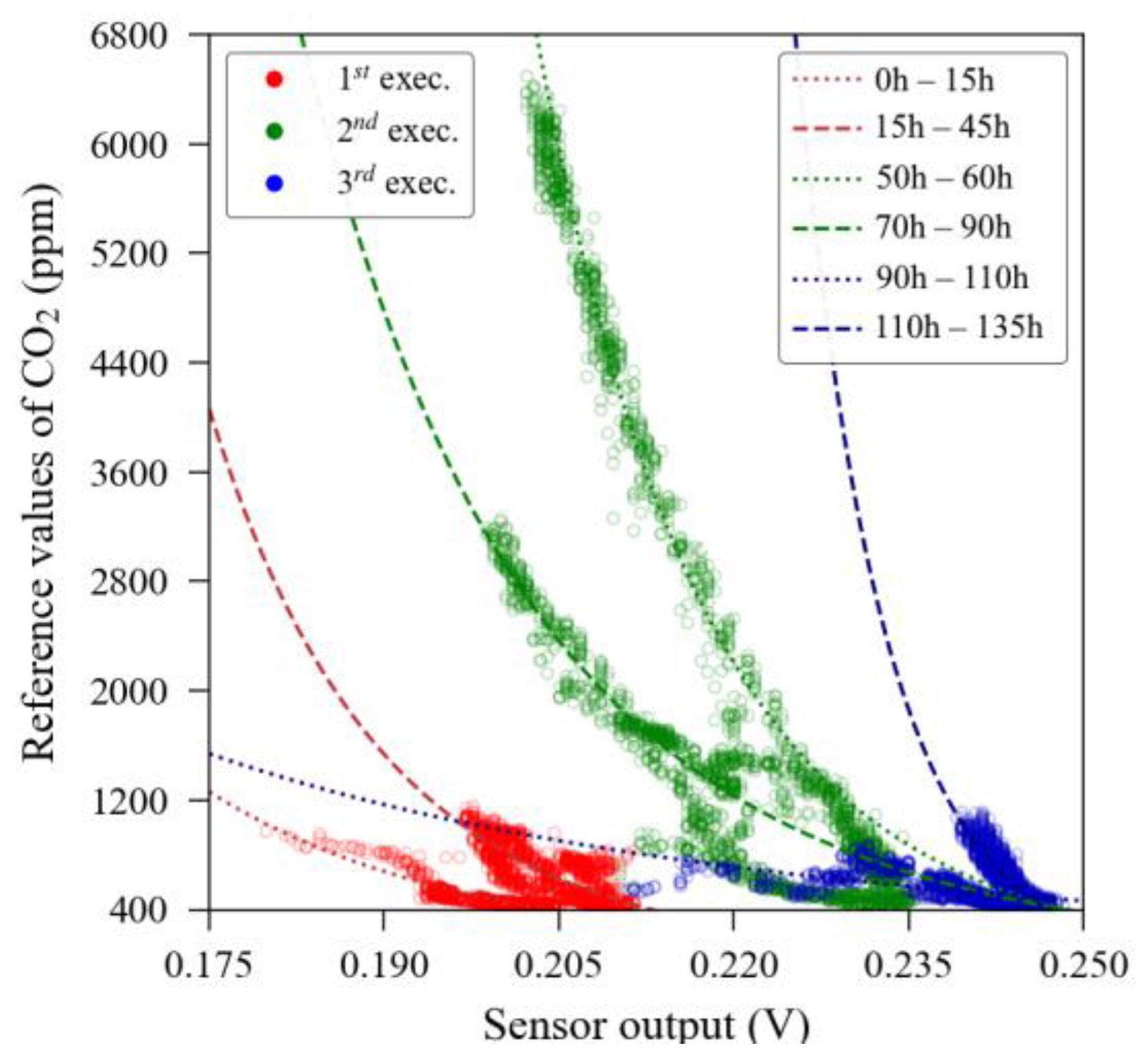

After joining the raw data from the indoor exposure experiments, the k-fold split method was used to randomly extract points from each sensor dataset and create modelling subsets. Each subset for modelling the curve equation was formed with 20% of the respective sensor dataset, sampled randomly along the time series. We did not fix a random seed in the split process, so that each sensor unit received a different random sample and an occasional unbalanced draw would not be repeated across all sensor units.
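The unseeded 20% sampling described above can be sketched as follows. This is a minimal illustration rather than the authors’ code: it assumes scikit-learn’s `KFold` as the k-fold implementation, and the function name `modelling_subsets` is hypothetical. With k = 5, the smaller part of each split (the fold normally reserved for testing) is taken as the modelling subset.

```python
import numpy as np
from sklearn.model_selection import KFold


def modelling_subsets(n_samples, k=5):
    """Yield (modelling_idx, remaining_idx) pairs for one sensor dataset.

    Assumption: the 20% modelling subset is the smaller part of each
    k-fold split, i.e. the fold scikit-learn normally reserves for testing.
    """
    # shuffle=True with no random_state: every run (and every sensor)
    # draws a different random sample along the time series.
    splitter = KFold(n_splits=k, shuffle=True)
    for larger_part, smaller_part in splitter.split(np.arange(n_samples)):
        yield np.sort(smaller_part), np.sort(larger_part)


# Example with 1000 readings: each modelling subset holds 200 points.
subsets = list(modelling_subsets(1000))
```

Because the five folds are disjoint, every reading is used for modelling exactly once across the five repetitions.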

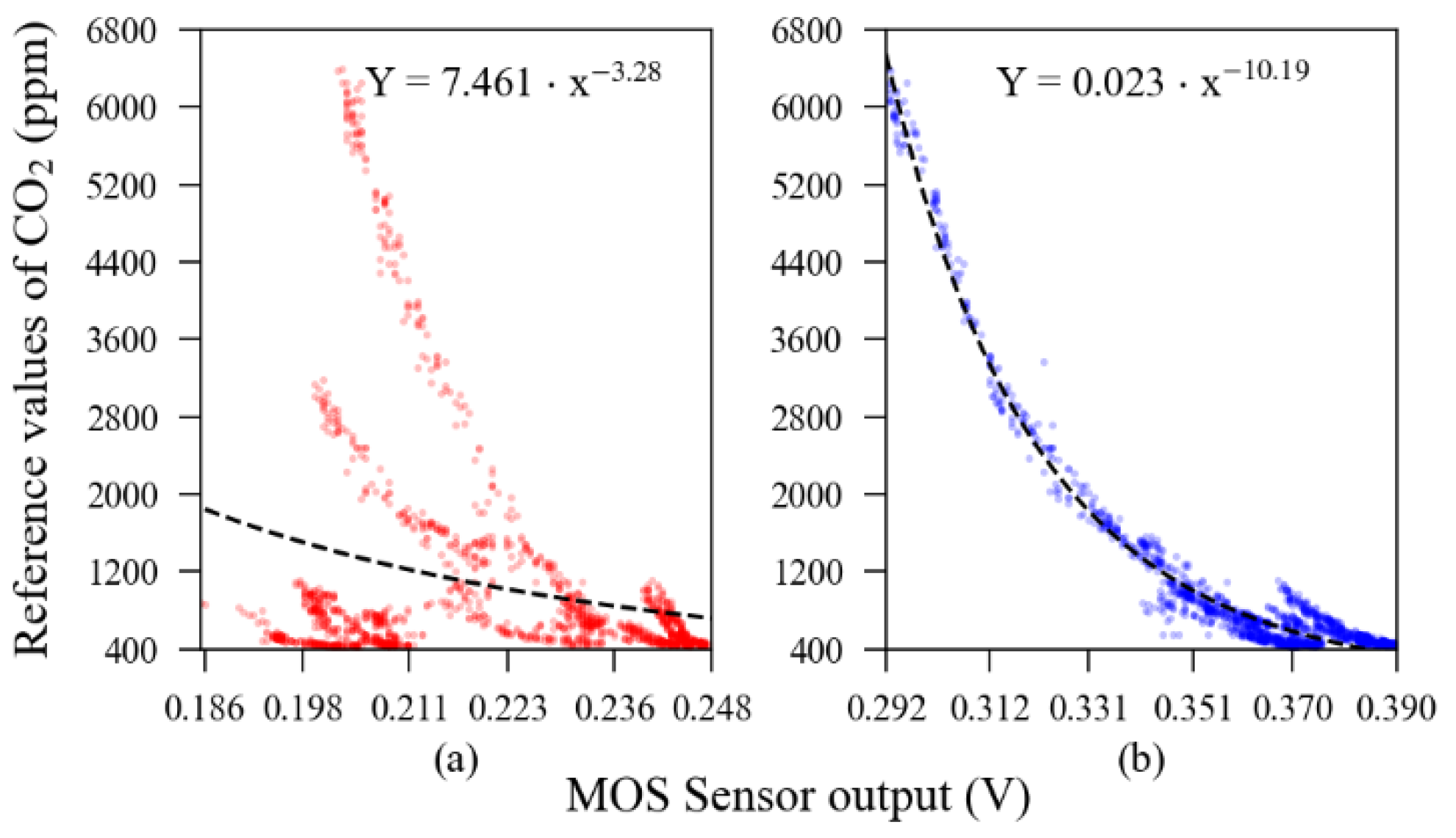

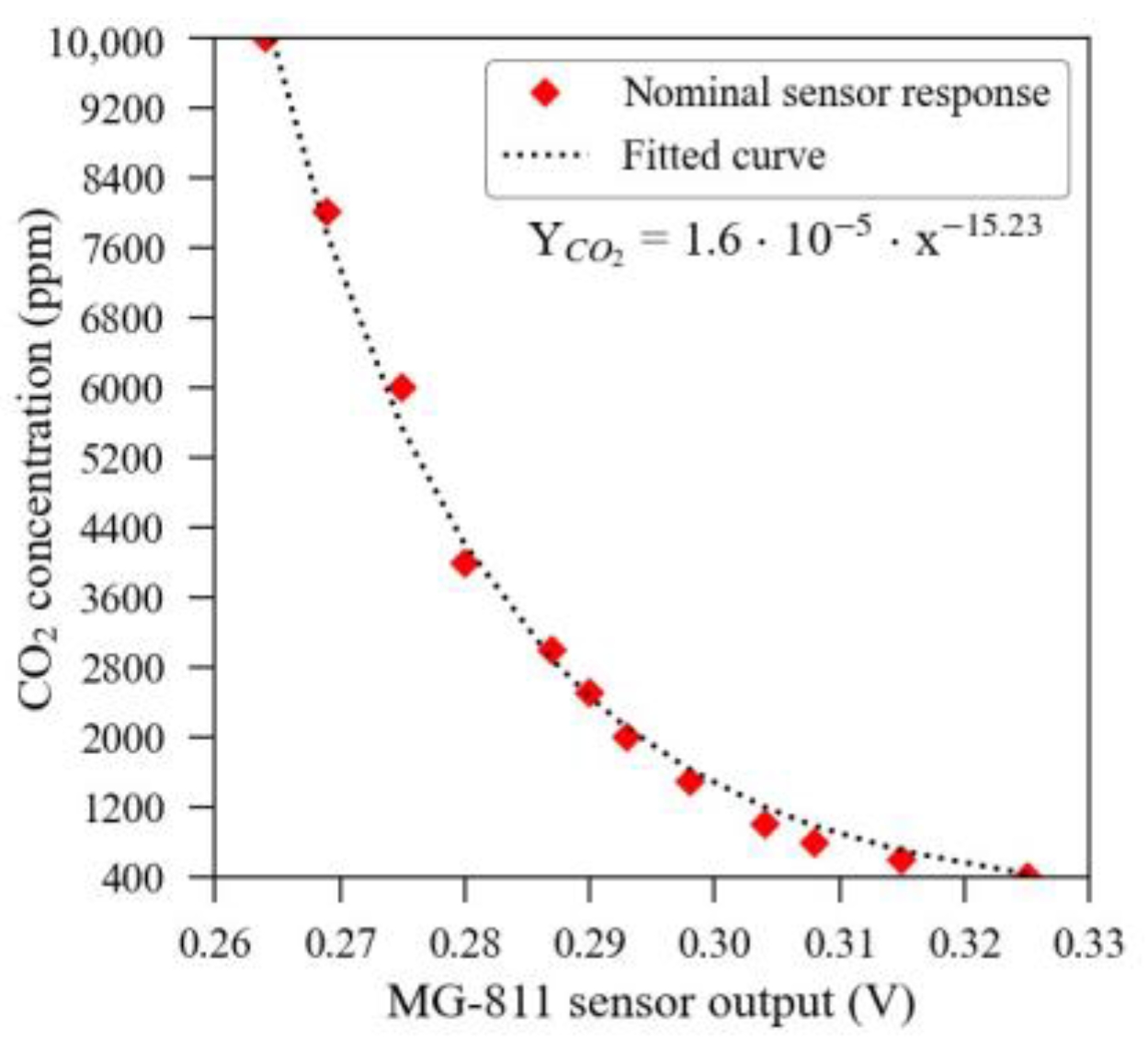

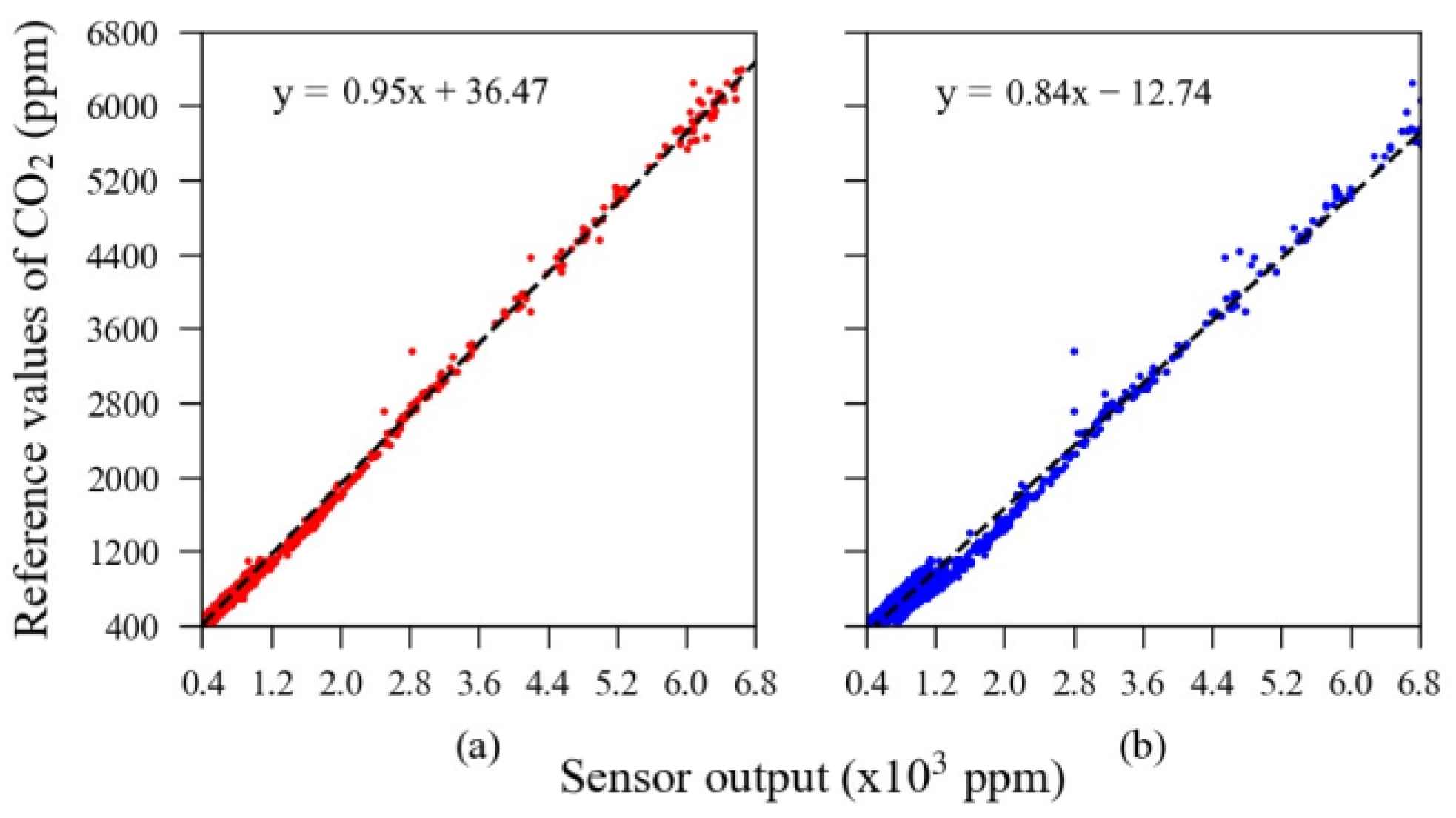

The MOS sensors’ response and curve-fitting model are presented in Figure 5, whilst the theoretical curve, obtained from the manufacturer datasheet, is illustrated in Figure 6. In these figures, one can notice a significant discrepancy between the theoretical response and the experimentally observed output, which prevented these sensors from being analysed in a raw condition.

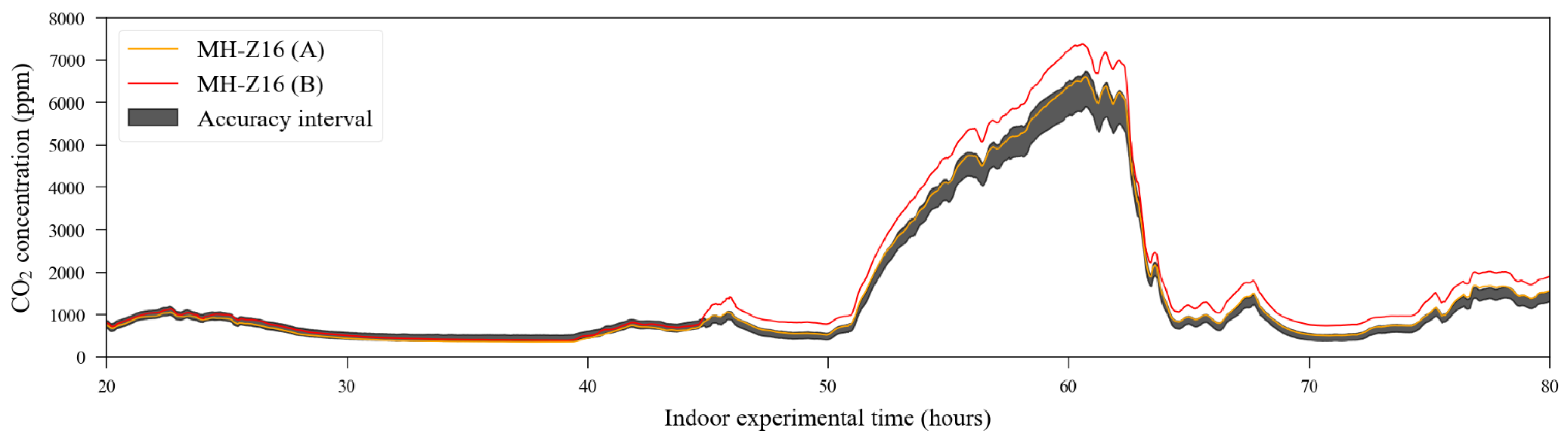

The NDIR sensors, which were previously calibrated using their automatic one-point calibration feature, used in fresh air to detect the 400 ppm level, have their raw response curve (also using 20% of the dataset, randomly chosen by the algorithm) depicted in

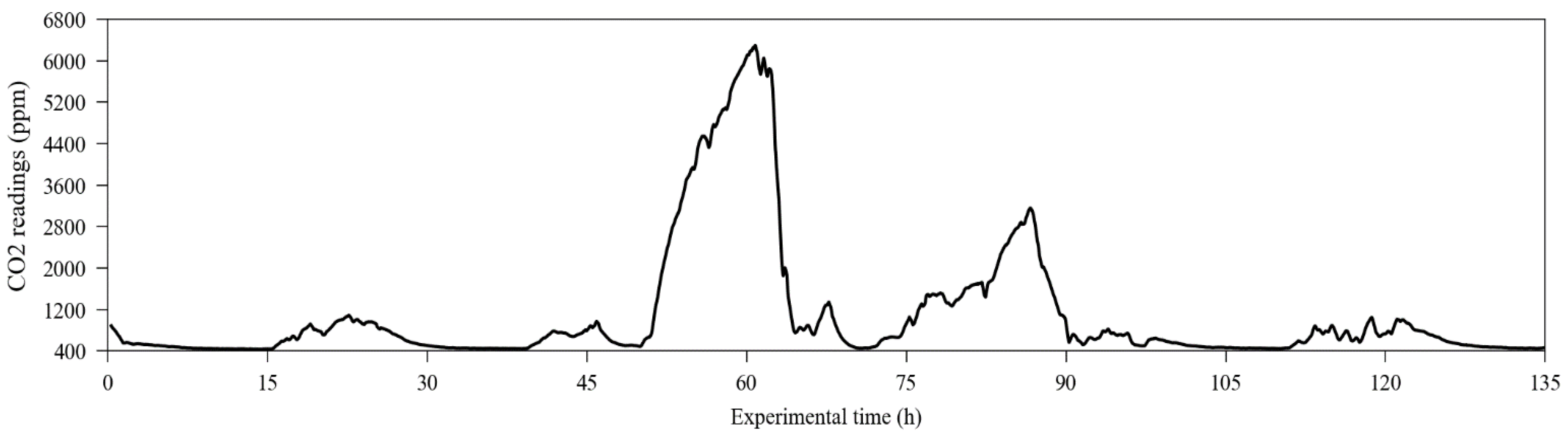

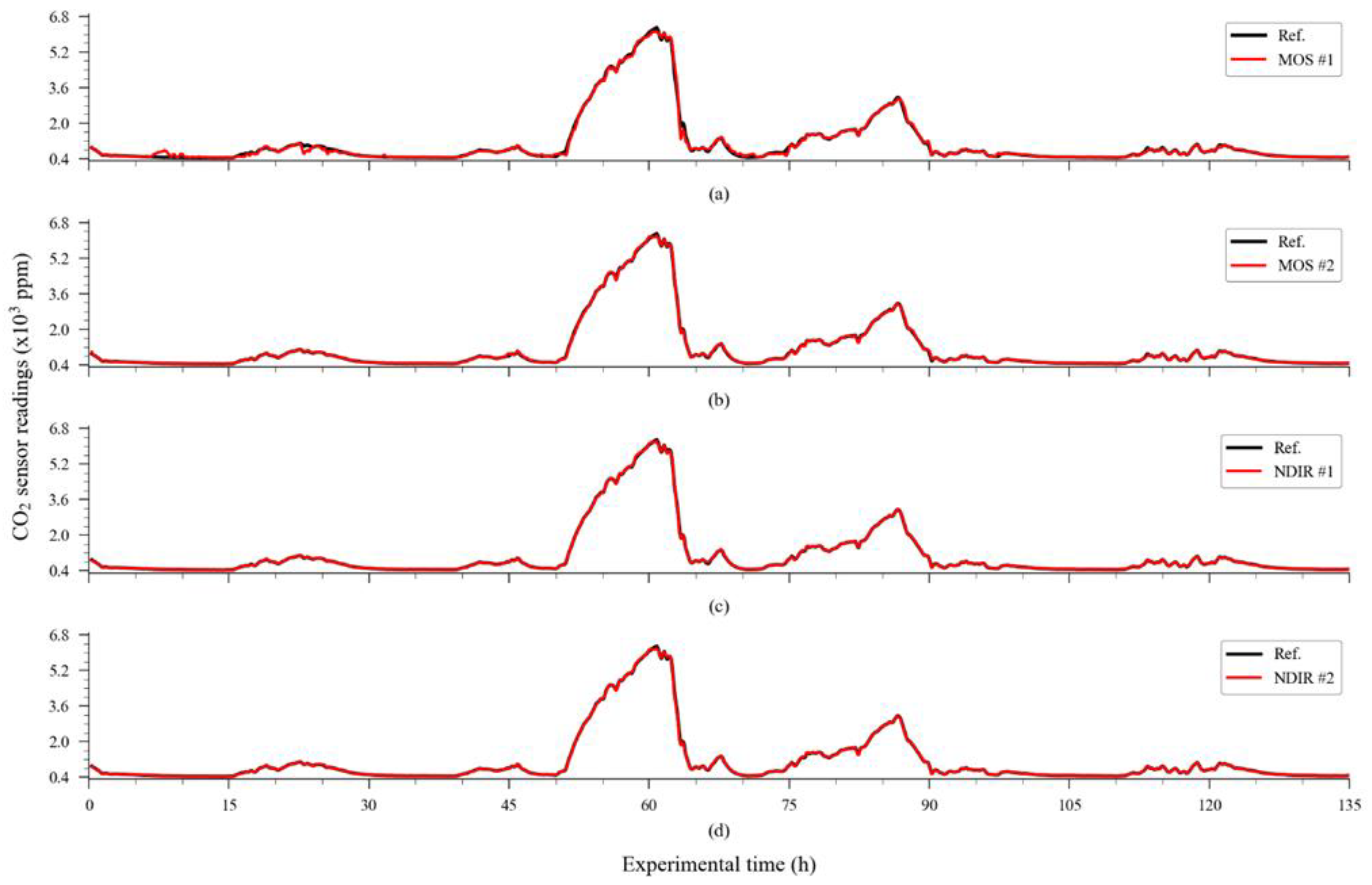

Figure 7. The time series containing the carbon dioxide readings from the reference instrument, used as the target dataset, is presented in

Figure 8.

From this information, it was possible to compare the performance of the raw readings of the sensors with the performance obtained through calibrating the dataset using the response curve obtained from the modelling subsets. The numerical results of this comparison are presented in

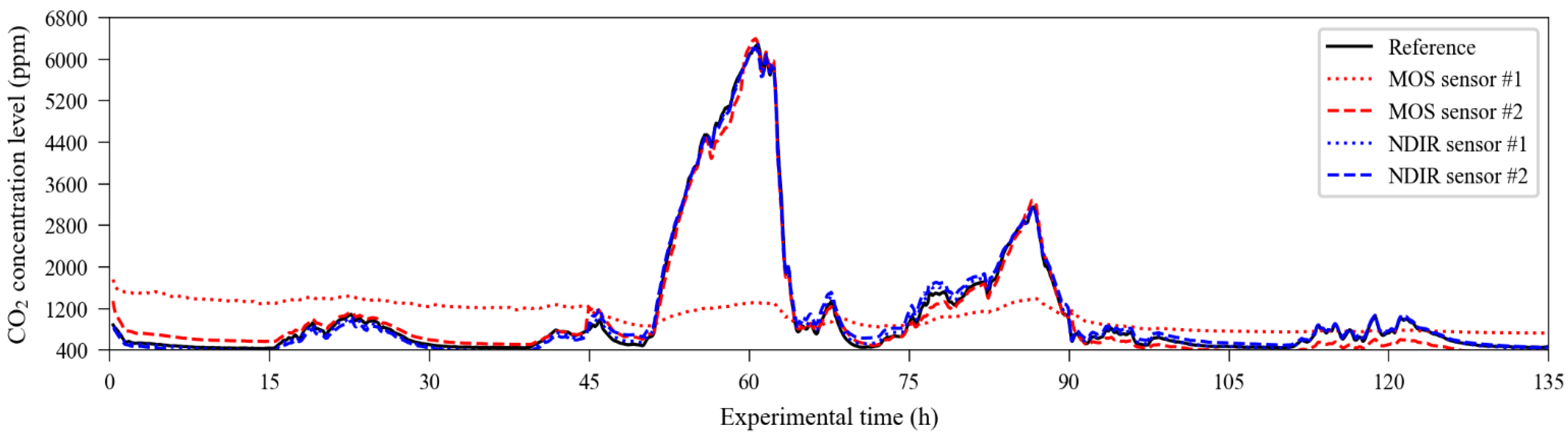

Table 1. In terms of accuracy, direct calibration achieved good results for NDIR unit 1, moderate results for NDIR unit 2 and MOS unit 2, and poor results for MOS unit 1, which presented a hysteresis-like response. The time series containing the sensor readings adjusted using the extrapolation of the conversion equations to the entire dataset are presented in

Figure 9.

Although one-dimensional direct calibration could make most sensors achieve moderate to good accuracy, this technique may be insufficient to meet the requirements of some applications. In the case of MOS sensor unit #1, the direct calibration curve was insufficient to even extract any useful information from its raw readings.

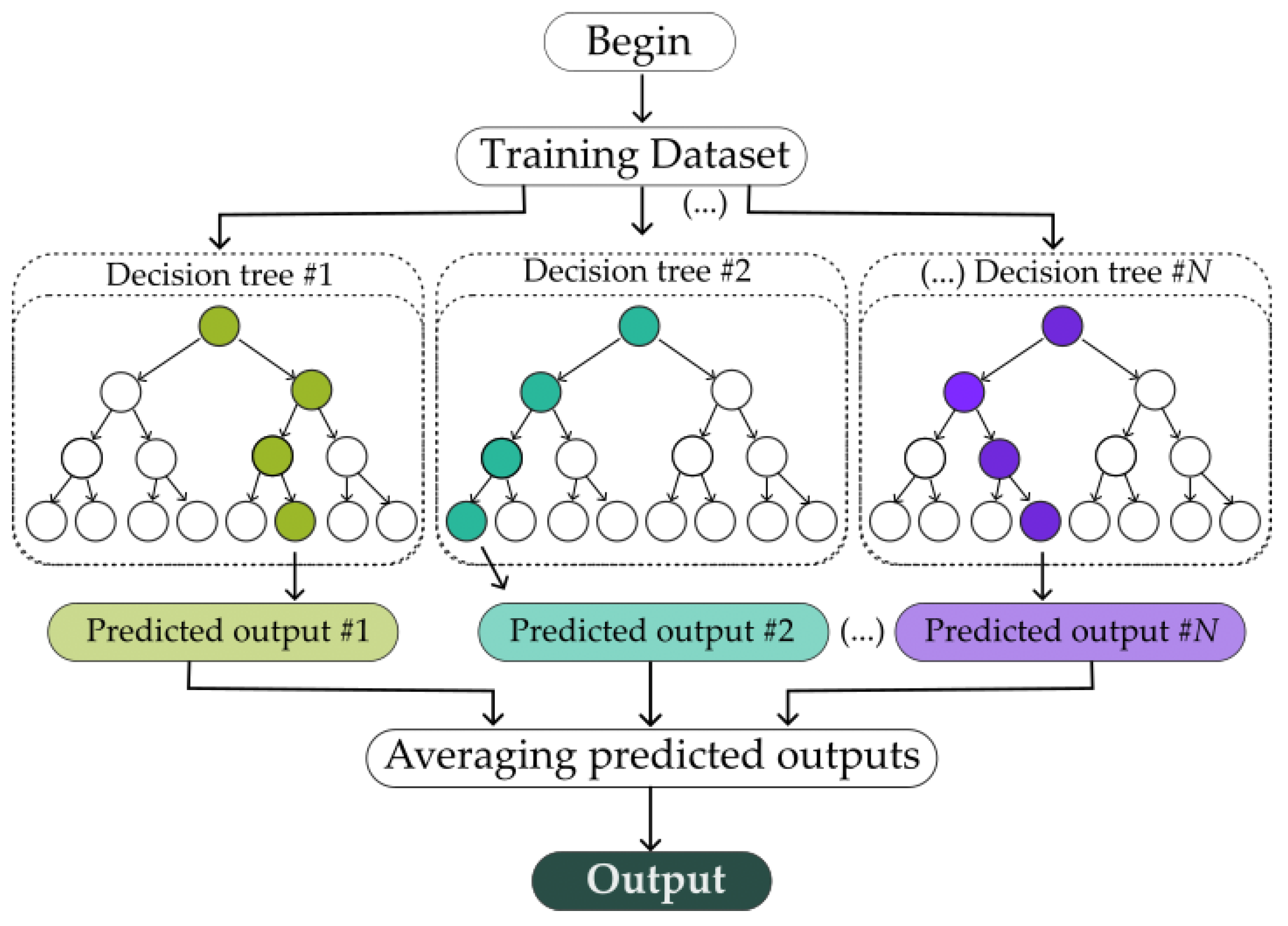

As with the direct calibration and nominal conversion curves, we trained the ExtRa-Trees algorithm on 20% of the dataset, using k-fold with k = 5 and picking the smallest part as the training subset. The algorithm is repeated k times, each time using a new randomly picked subset. In each execution, we calculated the statistical parameters between the reference and the validation subset and stored them to calculate the average. The sensor performance obtained using the machine learning model is presented in Table 2.
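A minimal sketch of this training loop is given below, assuming scikit-learn’s `ExtraTreesRegressor` with default hyperparameters and SciPy’s `pearsonr`. The function name `cross_validated_metrics` and the synthetic data are hypothetical stand-ins for the sensor and reference datasets; the choice of MAE, RMSE, and Pearson r as the stored statistics is also an assumption.

```python
import numpy as np
from scipy.stats import pearsonr
from sklearn.ensemble import ExtraTreesRegressor
from sklearn.metrics import mean_absolute_error, mean_squared_error
from sklearn.model_selection import KFold


def cross_validated_metrics(features, reference, k=5):
    """Train on the small fold (20%), validate on the rest, average over k runs."""
    runs = []
    for large_idx, small_idx in KFold(n_splits=k, shuffle=True).split(features):
        model = ExtraTreesRegressor()  # default hyperparameters
        model.fit(features[small_idx], reference[small_idx])
        predicted = model.predict(features[large_idx])
        truth = reference[large_idx]
        runs.append((
            mean_absolute_error(truth, predicted),
            np.sqrt(mean_squared_error(truth, predicted)),
            pearsonr(truth, predicted)[0],
        ))
    mae, rmse, r = np.mean(runs, axis=0)
    return {"MAE": mae, "RMSE": rmse, "r": r}


# Synthetic stand-in data (hypothetical): raw sensor output, temperature, humidity.
rng = np.random.default_rng(0)
features = rng.uniform([300, 15, 30], [2000, 30, 70], size=(500, 3))
reference = 0.8 * features[:, 0] + 5.0 * (features[:, 1] - 20) + rng.normal(0, 10, 500)
metrics = cross_validated_metrics(features, reference)
```

Swapping the roles of the two index arrays returned by `KFold.split` is what turns the usual 80/20 split into the 20/80 split used here.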

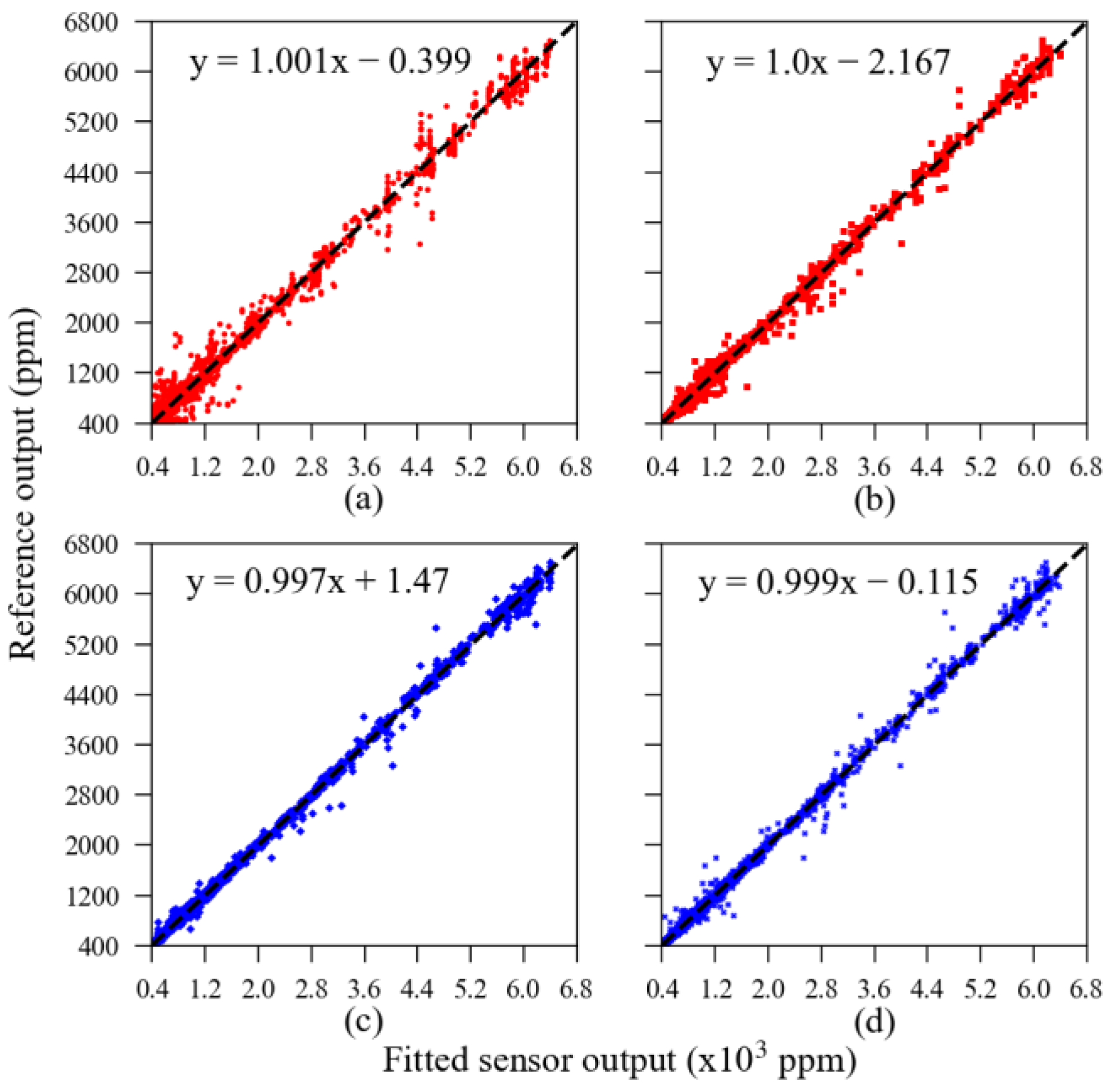

The linear relationships between each sensor unit’s validation subset calibrated using the machine learning algorithm and the reference output are presented in Figure 10. All sensors presented very high linearity after calibration. The residual error scattered around the dashed dark line is a visualization of the error parameters presented in Table 2. For example, in Figure 10, one can note that the red dots, representing the MOS sensors, are more scattered than the blue dots, representing the NDIR sensors; that is, the overall error observed in the MOS sensors’ output was higher than in the NDIR sensors’ output.

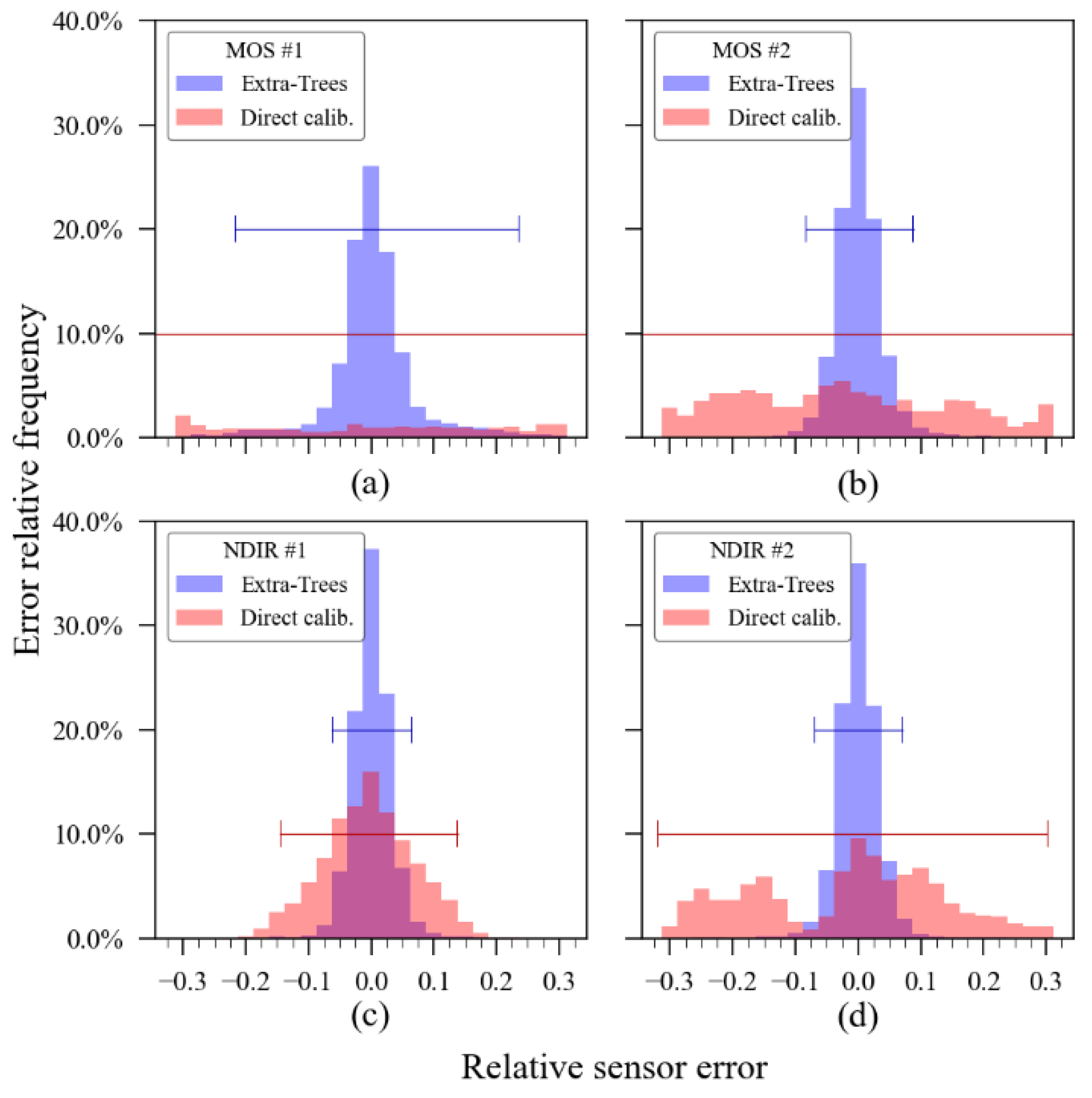

Figure 11, in turn, shows a histogram comparison of the performance achieved using the ExtRa-Trees algorithm versus the performance achieved using the unidimensional curve calibration model. The graphs were built using the relative error of each sensor and each bin has its width corresponding to 2.5% of the relative sensor error. The horizontal lines annotated on the graph correspond to the 95% confidence interval. Note that the MOS sensors with direct calibration showed a confidence interval higher than the axis limits, which means that the unidimensional calibration (annotated as “direct calibration”) for these sensors was ineffective in terms of accuracy enhancement.
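The quantities behind such a histogram can be sketched as follows. The text does not state how the 95% interval was computed, so this example assumes it is the symmetric band around zero that contains 95% of the relative errors; the function name `relative_error_summary` and the axis limit of ±50% are likewise hypothetical.

```python
import numpy as np


def relative_error_summary(calibrated, reference, bin_width=0.025, limit=0.5):
    """Return histogram counts, bin edges, and the 95% half-width of the relative error."""
    relative_error = (calibrated - reference) / reference
    n_bins = int(round(2 * limit / bin_width))
    edges = np.linspace(-limit, limit, n_bins + 1)
    counts, _ = np.histogram(relative_error, bins=edges)
    # Assumption: "95% interval" = symmetric band +/-h around zero
    # that contains 95% of the relative errors.
    half_width = np.percentile(np.abs(relative_error), 95)
    return counts, edges, half_width


# Hypothetical data: relative errors spread evenly between -10% and +10%.
reference = np.full(1000, 500.0)  # ppm
calibrated = reference * (1 + np.linspace(-0.1, 0.1, 1000))
counts, edges, half_width = relative_error_summary(calibrated, reference)
```

If `half_width` exceeds `limit`, the interval falls outside the plotted axis, which is the situation described for the MOS sensors under direct calibration.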

From the data presented so far, it is evident that the most critical performance was observed in the MOS sensor unit #1 data, which showed evidence of significant hysteresis in its readings, making its recovery much slower than the behaviour observed in MOS sensor unit #2. However, despite the anomalous pattern in the sensor response, the machine learning model was able to extract useful information from the sensor readings, whilst the single-dimension direct calibration was not.

Figure 12 shows the feature importance plot, that is, the relative impact of each input parameter on the algorithm result. One can observe that, to calibrate MOS sensor unit #1, the algorithm relied much more on the relative humidity data than on the output of the sensor itself. This can be an indicator of failure in some internal structure of the sensor, since the temperature also showed significant relevance for this sensor (about 12% of relative importance), whilst for the other sensors it had no explanatory role (<5%). The relative humidity also mildly affected MOS sensor unit #2, for which this feature had about 27% of relative importance, and had a lesser explanatory role for the NDIR sensors, with a relative importance of around 13% and 15%. Tracking this plot across periodic recalibrations in long-term use might help to monitor sensor health: the history of feature importances would provide an “expected pattern” for each sensor unit and, consequently, deviations from that pattern could signal ageing or incoming failure (e.g., a decrease in sensor output importance over time, such as that observed in MOS sensor unit #1).
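As an illustration of how such relative importances can be read from a fitted model, the sketch below uses the `feature_importances_` attribute of scikit-learn’s `ExtraTreesRegressor`. The data are synthetic and hypothetical: the target is deliberately built to depend mostly on humidity, loosely mimicking the pattern described for MOS sensor unit #1.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesRegressor

feature_names = ["sensor_output", "temperature", "relative_humidity"]

# Synthetic stand-in data (hypothetical): the target depends mostly on humidity.
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(600, 3))
y = 0.2 * X[:, 0] + 0.1 * X[:, 1] + 1.0 * X[:, 2] + rng.normal(0, 0.01, 600)

model = ExtraTreesRegressor(n_estimators=100, random_state=0).fit(X, y)

# feature_importances_ is normalised so that the values sum to 1.
importance = dict(zip(feature_names, model.feature_importances_))
dominant = max(importance, key=importance.get)
```

Storing the `importance` dictionary at each recalibration would be one simple way to build the per-sensor history mentioned above.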

The accuracy enhancement obtained using the ExtRa-Trees ensemble regressor can be visualized in detail in Figure 13, which shows the time series obtained by extrapolating the model trained on the training subset to the entire dataset of each evaluated sensor.

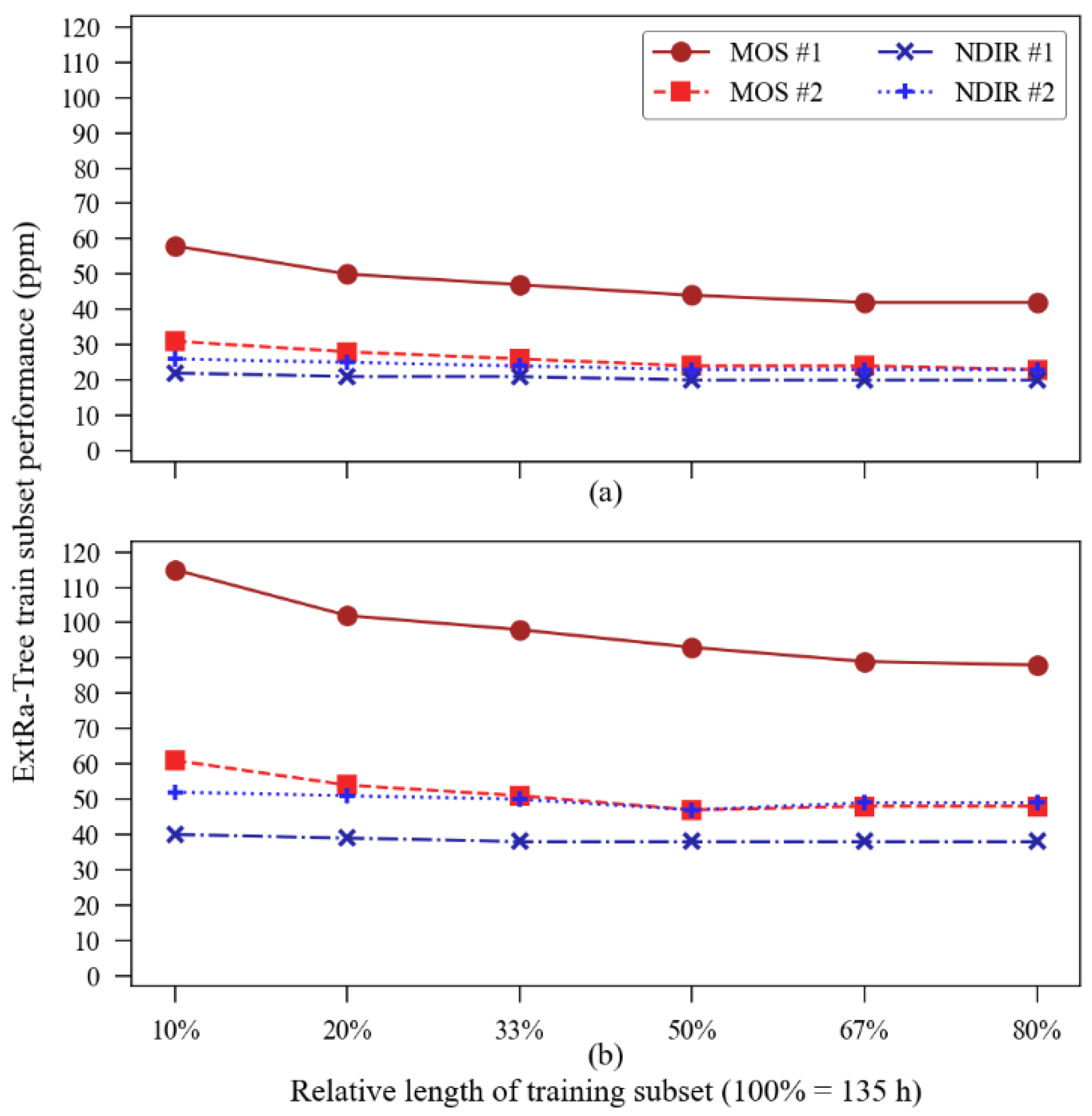

The satisfactory results presented so far were obtained from a training subset containing about 27 h of data (20% of 135 h in total), randomly picked from the original dataset, and tested on a 108 h dataset built with the points left out of training. We also wanted to know how the accuracy depends on the training subset size, so we conducted an additional analysis to verify how much better (or worse) the results could be with different training subset sizes. The influence of the training subset size on the accuracy metrics is summarized in Figure 14.

The MOS sensors presented the most pronounced improvement in accuracy with a longer training subset: MOS sensor unit #1 reduced its MAE from 58 ppm at 10% of subset length to 42 ppm at 80% of subset length and reduced its RMSE from 115 ppm to 88 ppm, while MOS unit #2 reduced its MAE from 31 ppm to 23 ppm and its RMSE from 61 ppm to 48 ppm within the same interval. The calibration of the NDIR sensors proved less influenced by the size of the training subset, since the highest variation observed in MAE was with sensor unit #2, whose value fell from 26 ppm to 23 ppm.

Figure 14 also suggests that there is a saturation point in the “training subset size vs. accuracy improvement” relationship, a phenomenon noted by the authors in [41,42]. Direct calibration through the regression function showed no influence of training subset size variations on its accuracy metrics.
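A training-size analysis of this kind can be sketched as a simple sweep. The example assumes scikit-learn’s `train_test_split` and `ExtraTreesRegressor`. The intermediate fractions, the fixed seed (used here only to make the sketch reproducible), the function name `sweep_training_size`, and the synthetic data are all hypothetical.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesRegressor
from sklearn.metrics import mean_absolute_error, mean_squared_error
from sklearn.model_selection import train_test_split


def sweep_training_size(X, y, fractions=(0.1, 0.2, 0.5, 0.8), seed=0):
    """Return {fraction: (MAE, RMSE)} for randomly drawn training subsets."""
    results = {}
    for fraction in fractions:
        X_train, X_test, y_train, y_test = train_test_split(
            X, y, train_size=fraction, random_state=seed
        )
        model = ExtraTreesRegressor(random_state=seed).fit(X_train, y_train)
        predicted = model.predict(X_test)
        results[fraction] = (
            mean_absolute_error(y_test, predicted),
            np.sqrt(mean_squared_error(y_test, predicted)),
        )
    return results


# Synthetic stand-in data (hypothetical): a non-linear response with little noise.
rng = np.random.default_rng(5)
X = rng.uniform(0.0, 1.0, size=(600, 3))
y = 400 + 300 * np.sin(3 * X[:, 0]) + 100 * X[:, 1] * X[:, 2] + rng.normal(0, 2, 600)
results = sweep_training_size(X, y)
```

Plotting the MAE and RMSE in `results` against the training fraction gives the kind of curve on which a saturation point can be looked for.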

3.2. Outdoors Experiment

In the dataset resulting from the outdoor exposure experiment, the outputs from the fourteen carbon dioxide sensors were already synchronized with the reference outputs. However, gaps in data continuity were observed for all sensors and, for this reason, it was necessary to intersect the reference station data with each sensor’s data to ensure that the machine learning model would receive only valid readings in both its input and target data. Moreover, a new feature was added to each sensor’s dataset, derived from its timestamps: the total exposure time of the sensor, in fractions of a day. Its goal is to verify whether the algorithm can identify and compensate for the ageing drift of the sensors, considering that the reference was periodically recalibrated and, thus, was not influenced by ageing.
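The intersection and the exposure-time feature can be sketched with pandas as follows. The column names and the function name `align_with_reference` are hypothetical, and the sketch assumes that exposure time is counted from the sensor’s first valid reading; the text does not specify the exact starting point.

```python
import pandas as pd


def align_with_reference(sensor, reference):
    """Keep only timestamps where both sensor and reference have valid readings."""
    valid_sensor = sensor.dropna()
    joined = valid_sensor.join(reference.dropna(), how="inner")
    # Assumption: exposure time is counted from the sensor's first valid
    # reading and expressed in fractional days.
    elapsed = joined.index - valid_sensor.index[0]
    joined["exposure_days"] = elapsed.total_seconds().to_numpy() / 86400.0
    return joined


# Hypothetical 12-hourly data with one missing sensor reading and offset coverage.
step = pd.Timedelta(hours=12)
sensor = pd.DataFrame(
    {
        "co2_raw": [410.0, 420.0, None, 450.0, 430.0, 440.0],
        "temperature": [10.0, 11.0, 12.0, 13.0, 12.0, 11.0],
        "relative_humidity": [60.0, 62.0, 61.0, 59.0, 58.0, 60.0],
    },
    index=pd.date_range("2021-01-01 00:00", periods=6, freq=step),
)
reference = pd.DataFrame(
    {"co2_reference": [405.0, 415.0, 425.0, 435.0, 445.0, 455.0]},
    index=pd.date_range("2021-01-01 12:00", periods=6, freq=step),
)
aligned = align_with_reference(sensor, reference)
```

The inner join drops every timestamp that lacks either an input or a target value, so the model never sees a row with one of them missing.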

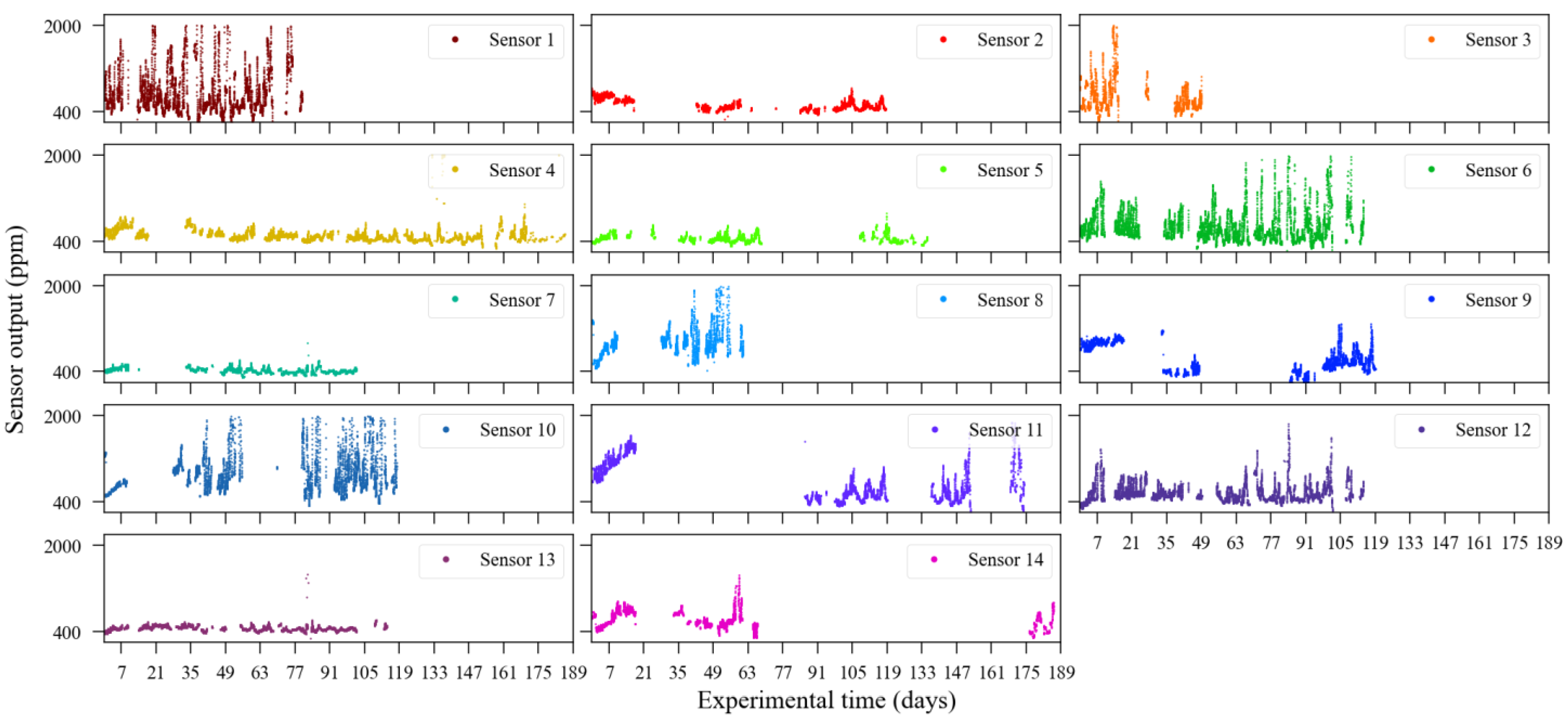

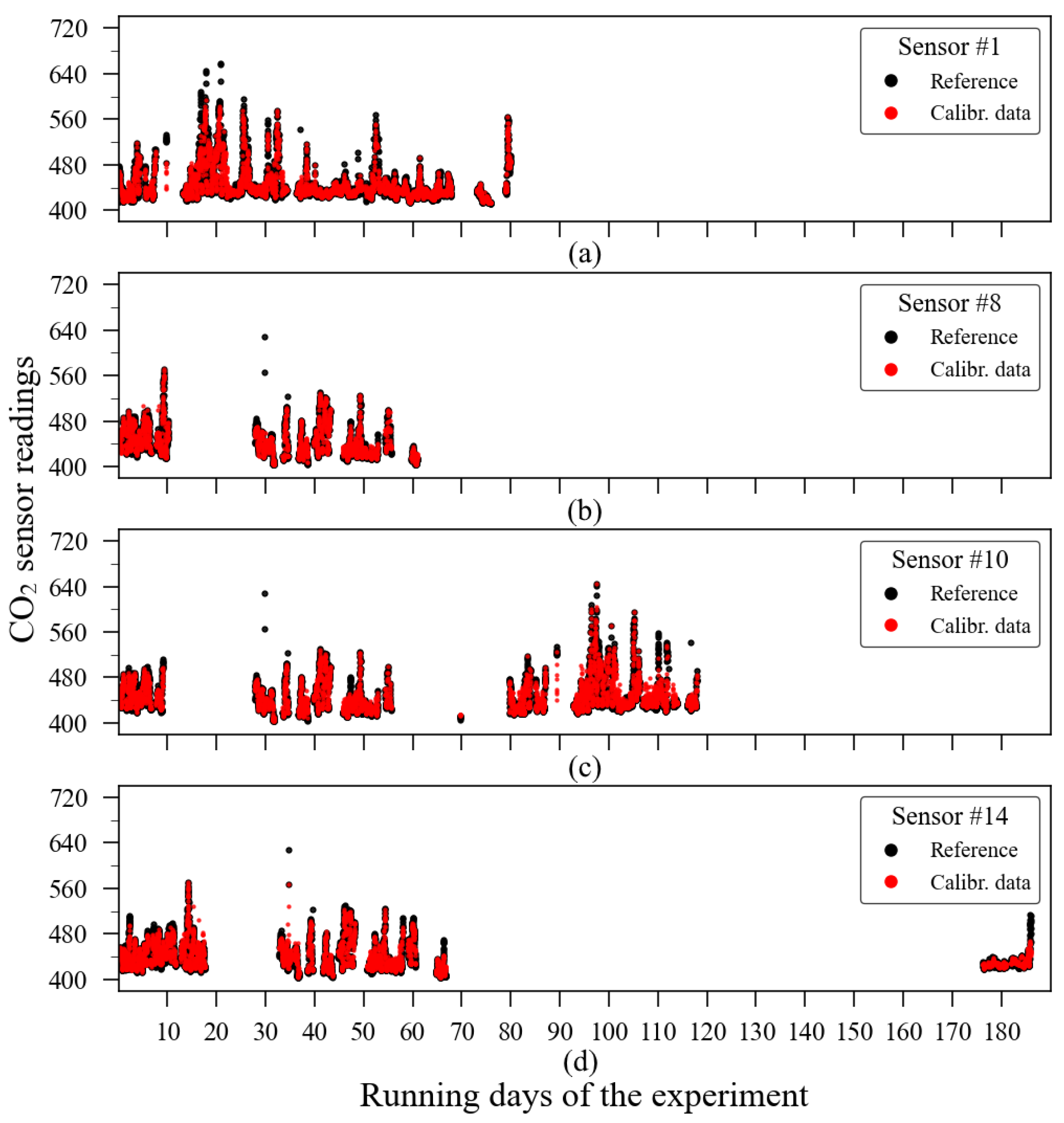

The reference sensor output is illustrated in Figure 15. As the experiment was conducted outdoors, carbon dioxide sources have relatively less impact on the gas concentration level in the air, unlike what happens in the indoor scenario. Thus, during the observation period (approximately 186 days), and according to the reference data, CO₂ concentration levels remained between 400 ppm and 700 ppm. The individual outputs from the 14 CO₂ sensors are shown in Figure 16. In both Figure 15 and Figure 16, it is possible to identify discontinuities in the sensor readings. The biggest data gaps in each sensor time series were caused by the rotation of sensor deployment during the monitoring campaign, since not all sensors were collocated at the same time.

A simple visual analysis of Figure 16 shows that not all evaluated sensors provided readings in agreement with the values observed by the reference instrument (Figure 15). For example, sensor units 1, 6, 8, and 10 presented readings reaching 2000 ppm, the sensor’s maximum range.

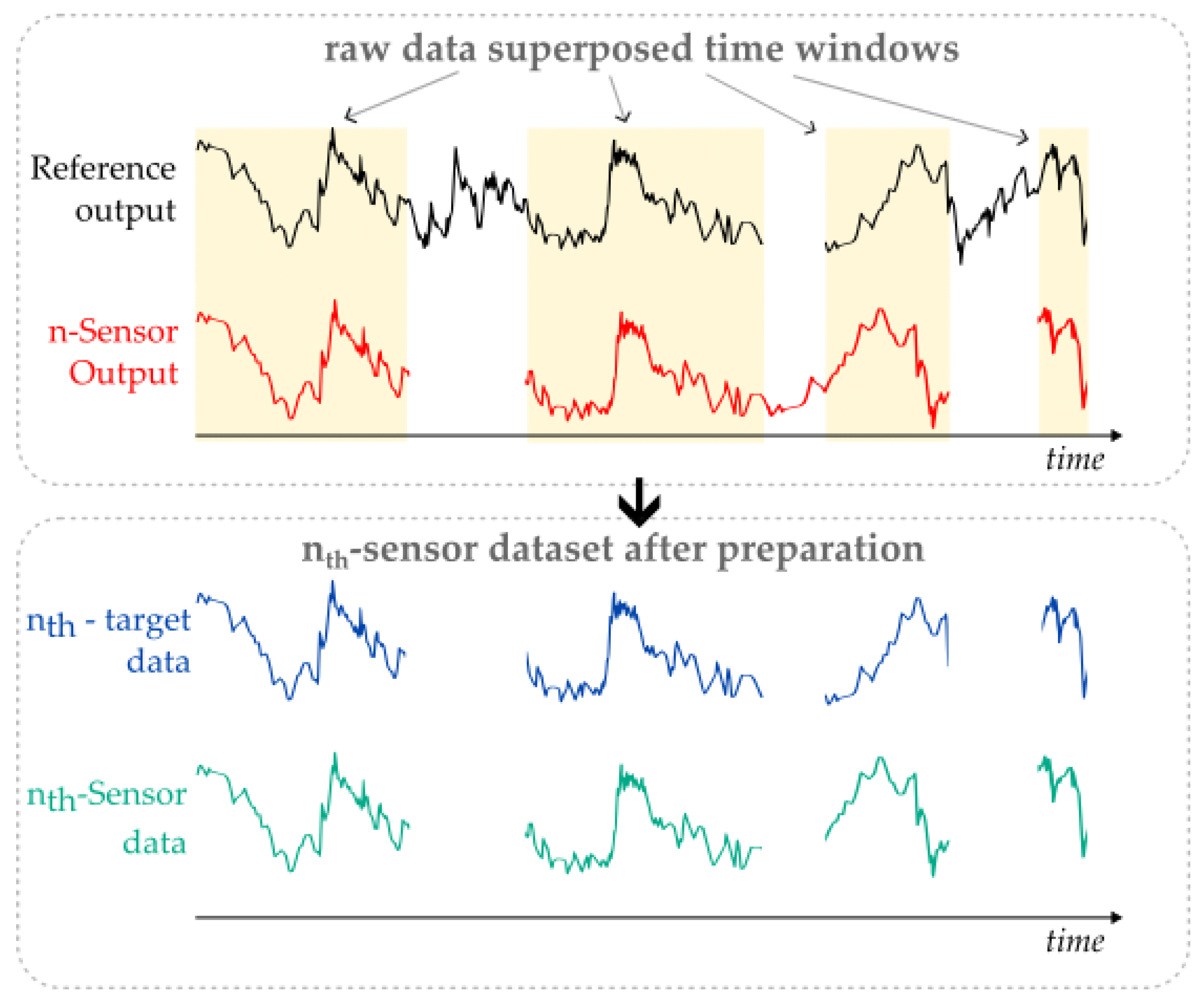

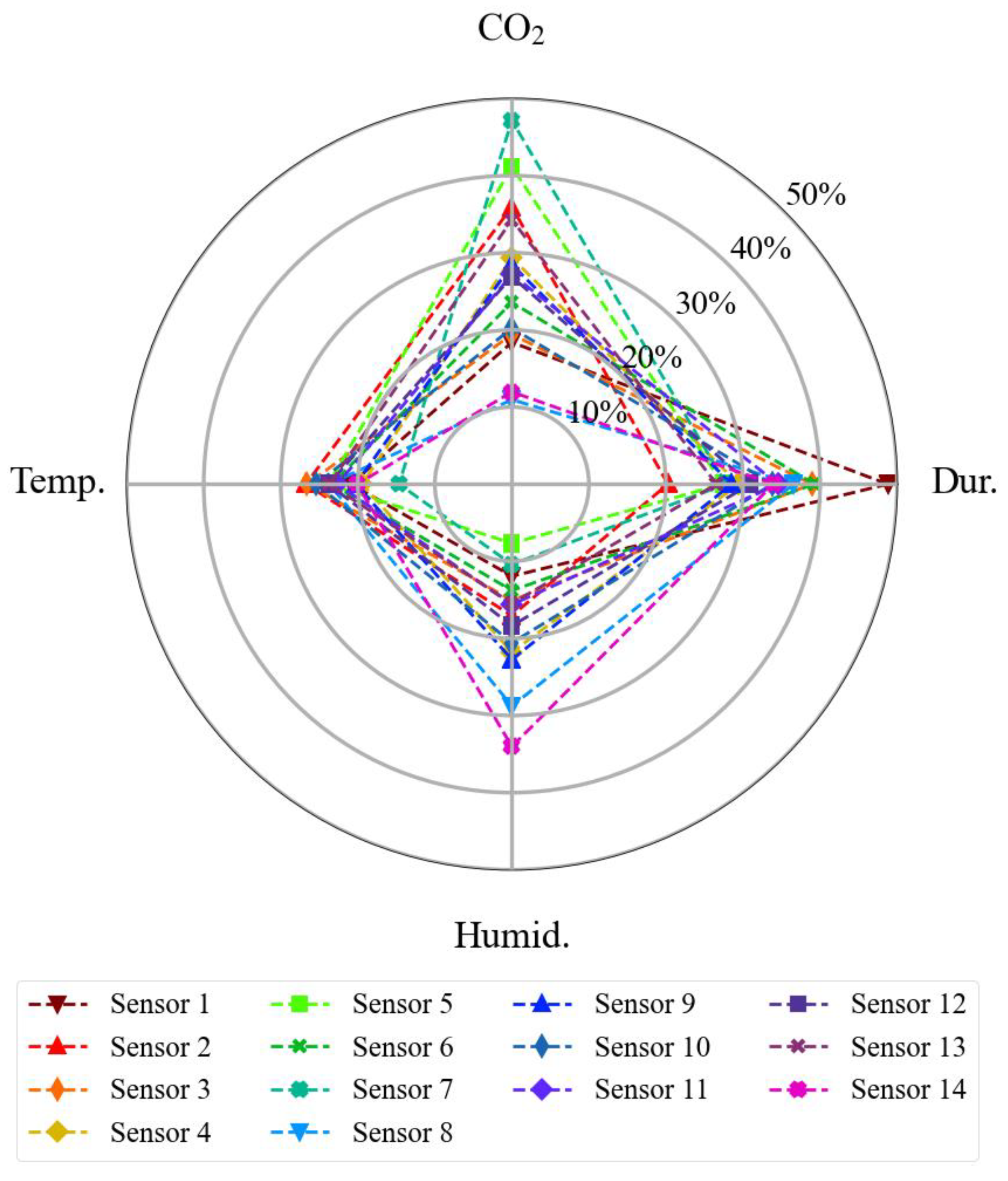

To prepare each sensor dataset to be used by the algorithm, it was necessary to overlay the data availability of each sensor unit with the reference sensor through its timestamps, avoiding using input data without target data, or vice versa (see Figure 3). As the SST CO2S-A sensor also provides temperature and relative humidity readings together with the carbon dioxide readings, it was not necessary to take any additional action towards these features regarding data preparation. All the temperature and relative humidity readings of the fourteen sensors are summarized in Figure 17. Some sensors were exposed to higher environmental variations than others, for example, sensor unit 4 versus sensor unit 8 in terms of temperature, or sensor unit 4 versus sensor unit 12 in terms of relative humidity.

To allow a comparison of sensor performance before and after the use of the ExtRa-Trees algorithm, the accuracy metrics of these sensors were calculated both in raw conditions and after a linear regression calibration obtained with 20% of each sensor dataset, randomly chosen using the split process described later in the machine learning paragraph. The numerical results of this comparison are given in Table 3.

Although the linear regression was able to numerically reduce the mean absolute error (MAE) and the root mean squared error (RMSE), this fact is not satisfactory per se in terms of accuracy enhancement, because both the Pearson and Spearman’s rank correlation coefficients were low for all sensors, suggesting that linear regression will not suffice for reaching higher accuracy with these sensors. Some sensors demonstrated a very low degree of correlation with the reference instrument, with the most critical cases being observed in sensor units 8 and 11, which had Pearson correlation coefficients of 0.160 and 0.151, respectively. This suggests that the reduction of the error parameters of these sensors could also be achieved by simply averaging their readings and correcting the bias. Moreover, except for sensor units 5 and 7, all sensor units also presented a low degree of correlation (r < 0.35) even after the linear regression adjustment. An important finding of this analysis is that a single-dimensional direct calibration, such as linear regression, was not efficient enough to achieve higher accuracy using this low-cost sensor model in outdoor exposure.
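The behaviour described here, lower MAE without a better correlation, can be reproduced on synthetic data. The sketch below is hypothetical (invented data, hypothetical function name `linear_calibration`) and relies on a general property of the Pearson coefficient rather than on anything stated in the text: a linear transform of the readings can remove bias and rescale them, but it leaves the magnitude of r unchanged.

```python
import numpy as np
from scipy.stats import pearsonr, spearmanr


def linear_calibration(raw_train, ref_train, raw_test):
    """Fit reference = slope * raw + intercept and apply it to unseen readings."""
    slope, intercept = np.polyfit(raw_train, ref_train, deg=1)
    return slope * raw_test + intercept


# Synthetic stand-in data (hypothetical): a biased, noisy, weakly correlated sensor.
rng = np.random.default_rng(2)
reference = rng.uniform(400, 700, 1000)
raw = 700 + 0.3 * (reference - 550) + rng.normal(0, 150, 1000)

# Random 20% of the points for fitting, the remaining 80% for evaluation.
order = rng.permutation(1000)
train, test = order[:200], order[200:]
calibrated = linear_calibration(raw[train], reference[train], raw[test])

mae_raw = np.mean(np.abs(raw[test] - reference[test]))
mae_cal = np.mean(np.abs(calibrated - reference[test]))
r_raw = pearsonr(raw[test], reference[test])[0]
r_cal = pearsonr(calibrated, reference[test])[0]
rho_cal = spearmanr(calibrated, reference[test])[0]
```

In this sketch `mae_cal` is smaller than `mae_raw` while `abs(r_cal)` equals `abs(r_raw)`, which is why the correlation coefficients are reported alongside the error metrics.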

Regarding the machine learning algorithm, the data split process that separates the datasets into training and validation subsets differs from the usual approach. Although the experimental data are limited in time, these sensors were exposed outdoors for a significant period and we noted that the input parameters were not homogeneously distributed over the observation time. We assume the uncalibrated sensor error is a function of four variables: carbon dioxide, air temperature, relative humidity, and exposure time. Training the model on a continuous range of data may therefore miss some of the combinations of climatic variables that exist throughout the dataset (e.g., imagine a calibration model being trained with data collected during winter and being tested over data gathered during spring). To circumvent this heterogeneous distribution of input parameters, the points used for training the calibration models were drawn randomly from the entire dataset. Aware of the risk of implying a dependency between training and test data, we reduced the size of the training dataset to 20% of the total to avoid creating two correlated datasets.

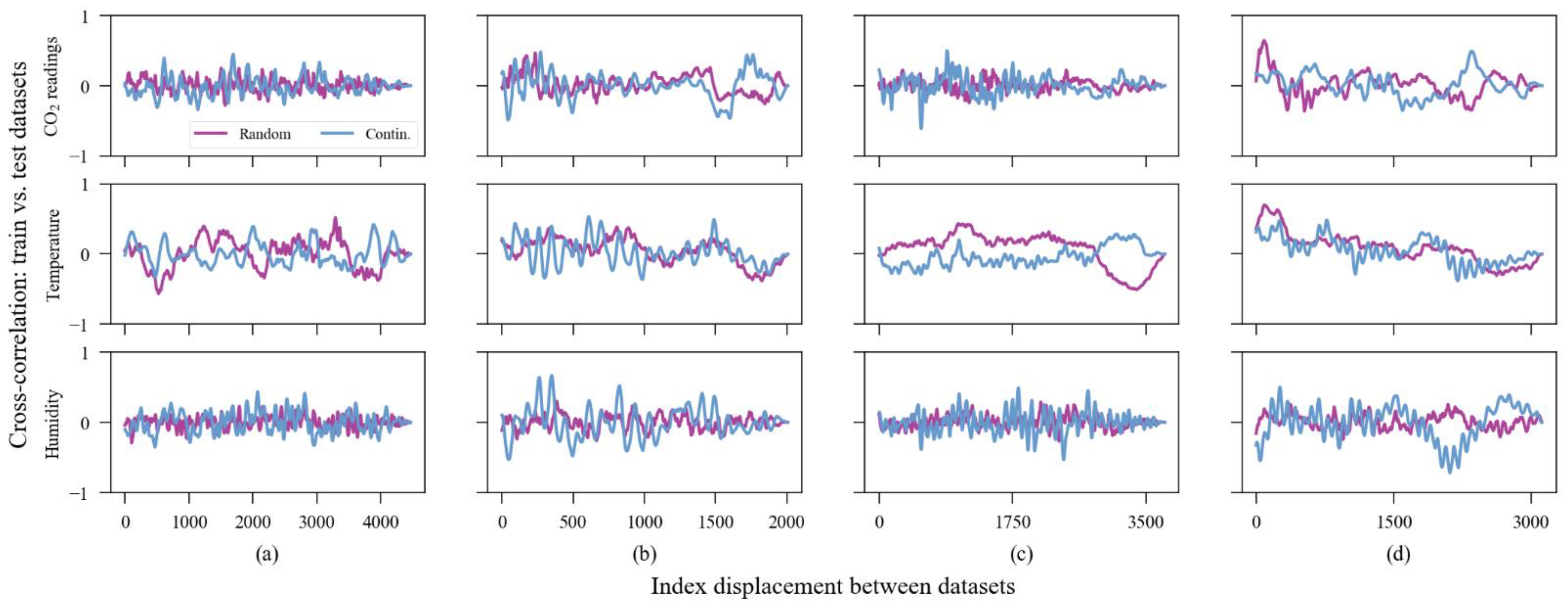

Figure 18 shows the cross-correlation between training and test subsets in two situations: when the training dataset was created with 20% of random points and when it was created with 80% of continuous points from the beginning of the time series. Considering that there was no consistent strong correlation between the datasets, we assume that we created an independent dataset for testing the model. However, some spurious correlations appear occasionally. We assume that this occurred due to the periodicity of the weather.

Moreover, from this point on, we have chosen to present only the data of four sensor units to keep data visualization clearer, reducing the number of subplots while still allowing a closer look into the graphics. The sensors chosen for visualization were units 1, 8, 10, and 14. They were chosen randomly among those that presented the highest MAE values in raw conditions (>100 ppm), that is, the sensors with the most critical accuracy issues.

Given the described split method, we used k-fold with k = 5 to test five different combinations. Considering that each sensor has a different continuous exposure time, the training subset size, in absolute time, may differ between sensor units. The algorithm is repeated k times, each time using new data points for training, and the presented metrics are an average of these executions. As mentioned in the indoor experiment analysis, we performed no additional fine-tuning of the algorithm hyperparameters, since the results obtained with its default configuration could be considered satisfactory. However, we do not rule out that a deeper investigation of this topic could enhance, even slightly, the accuracy metrics.

Figure 19 contains the scatter plot between all sensors in all conditions versus the reference: raw, linear regression, and ML-calibrated. Table 4, in turn, presents the metrics achieved with the ExtRa-Trees algorithm using k = 5 in the k-Fold split process.

Sensor units 8 and 11 in the uncalibrated (raw) dataset presented the lowest correlation degrees with the reference, with r-values of 0.16 and 0.15, respectively. However, after the calibration with the machine learning model, these sensor units reached the r values of 0.913 and 0.948, respectively. In simple terms, the algorithm was efficient enough to transform the poor-quality and nearly uncorrelated readings of these sensors into meaningful information with a very strong degree of correlation with the reference instrument. The highest Pearson correlation after the machine learning calibration was observed in sensor unit 7, with an r-value of 0.96. When uncalibrated, the same sensor presented an r-value of 0.525.

Although the numerical parameters presented in

Table 4 together with the information contained in

Figure 19 can transmit a good idea about the sensor accuracy, a graphic dedicated to visualizing the distribution of the relative errors after ML calibration along with the measurements is necessary for enhanced analysis. The relative error plot of these sensors is presented in

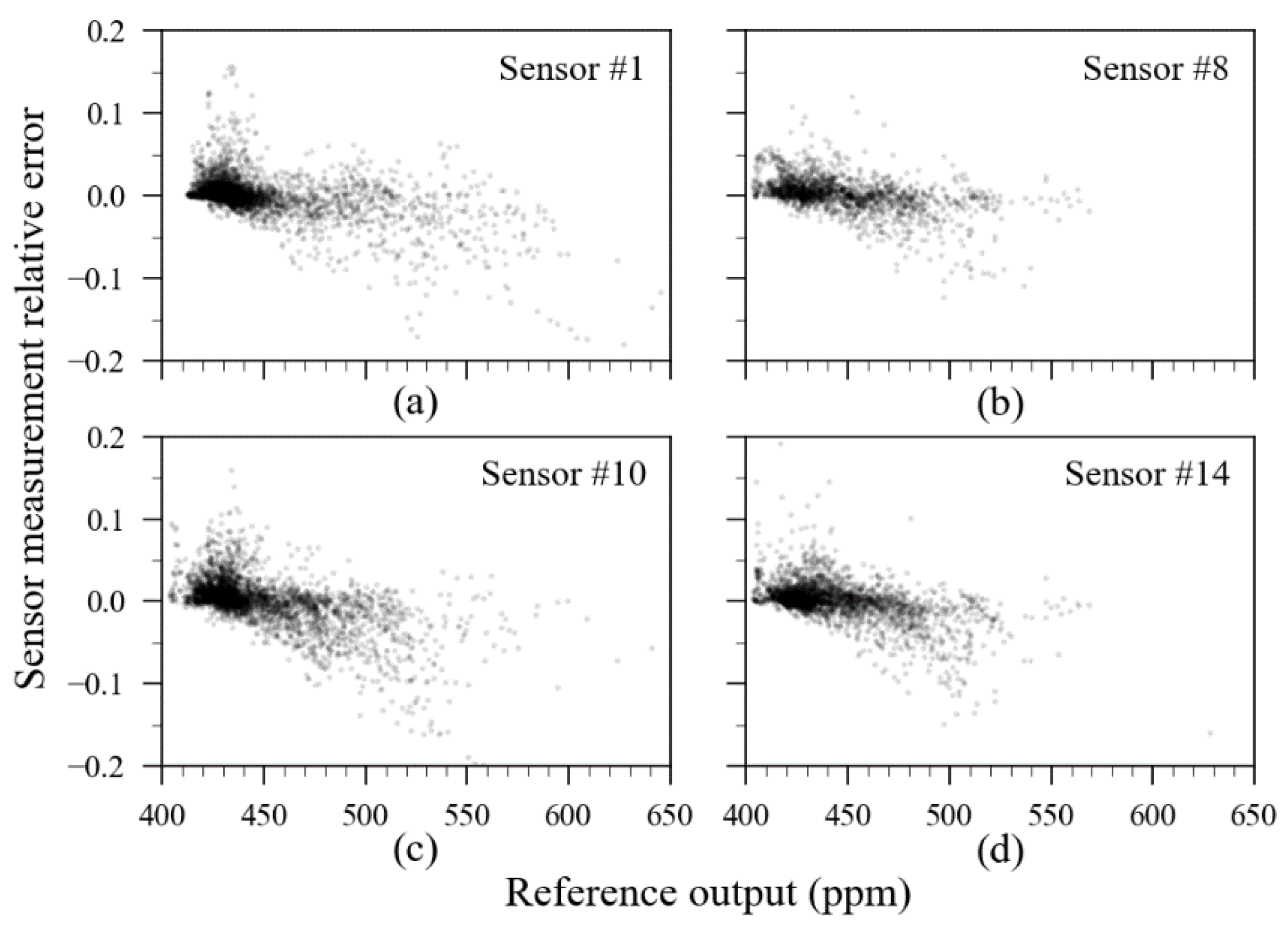

Figure 20.

Transparency was applied to the data points presented in Figure 20 to create a density-like plot, in which a darker region means more readings. The highest density of readings is noticeably contained in the 400 to 500 ppm range. Relative errors beyond the ±5% range are rare; however, they become visually more noticeable as CO₂ concentration levels increase.

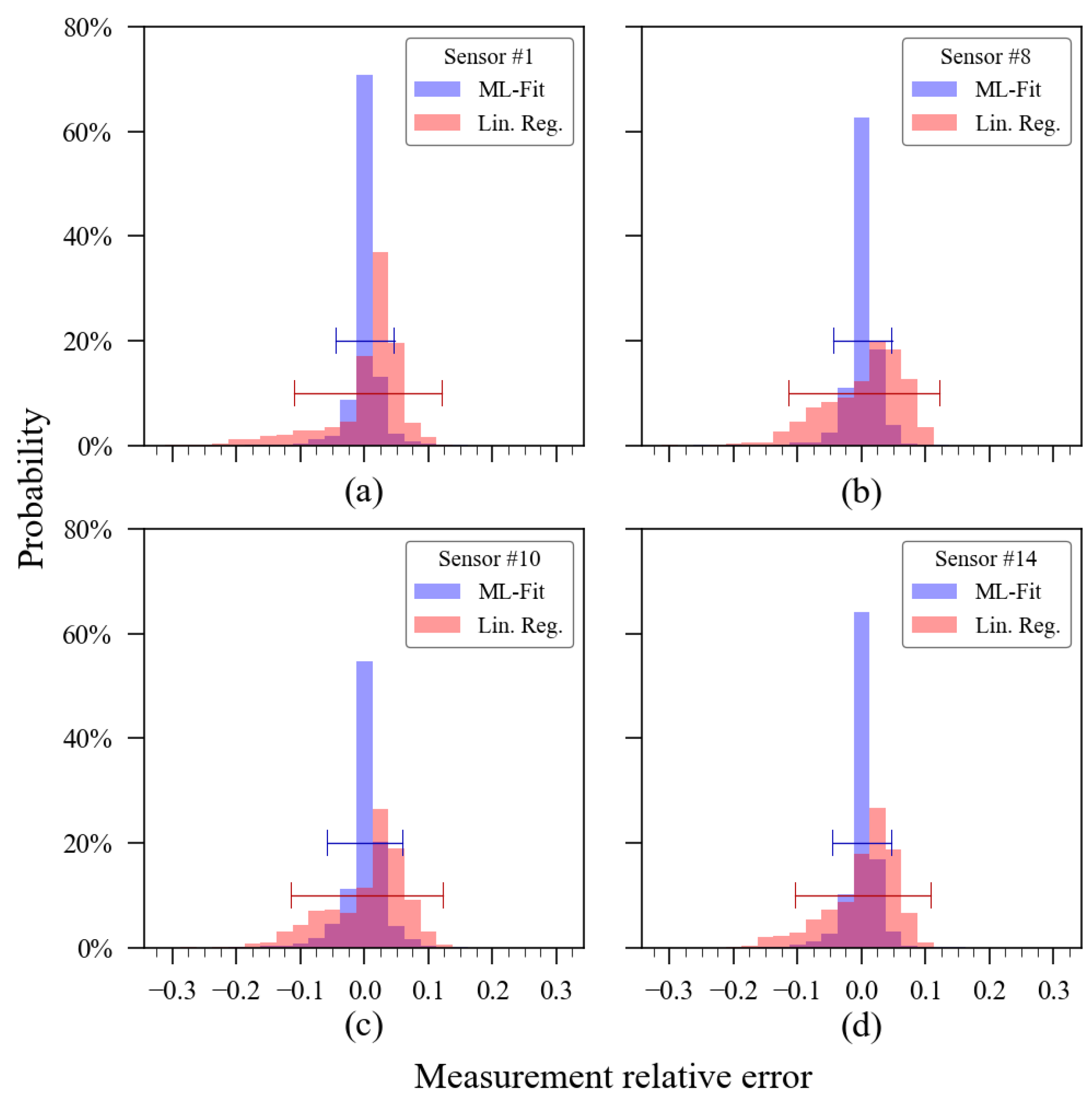

Figure 21 presents histograms of the relative errors extracted from the validation dataset of these sensor units. For comparison purposes, the relative error histogram of the validation dataset obtained via linear regression for the same sensor units was also added to the plots.

In the relative error histogram (

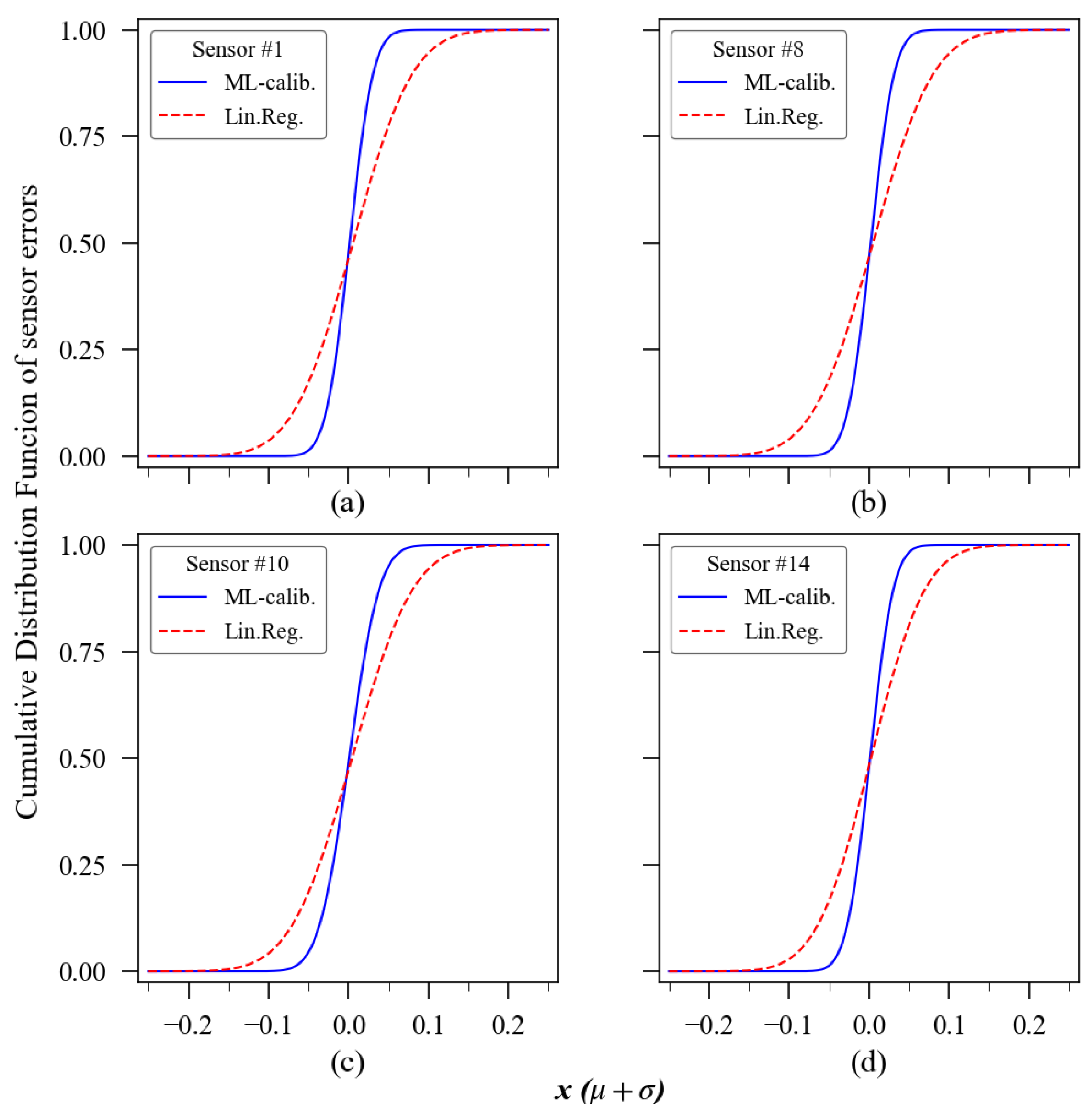

Figure 19), each bin has a width of 0.025 (2.5% of measurement relative error). Comparing the shape formed by the red and blue bars on it, one can have a better understanding of the accuracy enhancement achieved using the ExtRa-Trees model individually trained for each sensor unit. Choosing sensor 1 to exemplify, when using linear regression, 95% of its relative errors were contained in the ±12.5% margin, whilst after ML calibration, the 95% interval was delimited between the ±4.6% range of relative error. The other sensors’ confidence interval (95%) of the relative measurement error also showed satisfactory results. For sensor units 2 to 14, the 95% confidence interval was, respectively, ±4.4%, ±2.4%, ±5.1%, ±4.6%, ±5.2%, ±3.9%, ±4.6%, ±4.8%, ±5.8%, ±4.2%, ±3.9%, ±3.6%, and ±4.6%. The theoretical cumulative distribution function (CDF) of the relative error, complementing this histogram, is presented in

Figure 22, which also includes the parameters from the linear regression calibration to compare the performance with the machine learning model results. The ideal result for such a graphic would be a step-like curve, with the transition nearly vertical.
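To illustrate why a steeper CDF indicates a better calibration, the sketch below evaluates a normal CDF parameterised by the sample mean and standard deviation of the relative errors. The text does not name the distribution behind the “theoretical” CDF, so the normal model is an assumption, and the function name `theoretical_cdf` and the two synthetic error samples are hypothetical.

```python
import numpy as np
from scipy.stats import norm


def theoretical_cdf(relative_error, grid):
    """Normal CDF evaluated on `grid`, using the sample mean and standard deviation."""
    mu = np.mean(relative_error)
    sigma = np.std(relative_error, ddof=1)
    return norm.cdf(grid, loc=mu, scale=sigma)


grid = np.linspace(-0.3, 0.3, 601)  # relative error axis, step 0.001
rng = np.random.default_rng(3)
errors_narrow = rng.normal(0.0, 0.02, 5000)  # hypothetical: small error spread
errors_wide = rng.normal(0.0, 0.06, 5000)    # hypothetical: large error spread
cdf_narrow = theoretical_cdf(errors_narrow, grid)
cdf_wide = theoretical_cdf(errors_wide, grid)
```

The narrower the error spread, the faster the curve rises from 0 to 1 around zero error, approaching the step-like shape described as ideal.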

The time series of the calibration model on the example sensors are presented in Figure 23. This image allows one to identify exactly where and when the discrepancies between the reference and the calibrated data happened. It is important to emphasize that this image contains only data points from when both the reference and the evaluated sensors’ readings were available at the same time.

The ExtRa-Trees calibration model is an ensemble regressor that does not perform its prediction via a weighted equation using multiple input parameters but rather using estimators and decision nodes based on each input parameter value. Given this, it is possible to visualize the relative relevance of each input parameter for sensor error reduction. Figure 24 presents a boxplot created through the aggregation of all features’ importance extracted from the individual calibration models.

All input features presented a relevant explanatory role. The parameter that showed the highest variation in its relevance between the models was the CO₂ output, whilst the least variation was observed in the temperature parameter. In general terms, the average relative relevance was 32.5% for exposure time (minimum of 21% in sensor #2; maximum of 47.9% in sensor #1); 27.1% for CO₂ sensor output, in ppm (minimum of 11.1% in sensor #8; maximum of 47.7% in sensor #7); 22.2% for temperature readings (minimum of 13.7% in sensor #7; maximum of 26.7% in sensor #2); and 18.2% for humidity (minimum of 7.9% in sensor #5; maximum of 34.3% in sensor #14). A composition of all features’ relative importance resulting from all calibration models is illustrated in Figure 25.

Additional verification of the algorithm’s performance must be performed to check whether the importance of the features was a false positive. A feature with falsely high importance is common in data with high cardinality [43]. Of the input parameters used, the exposure time is the feature with the highest cardinality, since all values are unique (each value is a fraction of a day). The most efficient way to perform this check is to repeat the algorithm’s training process without the sensor exposure time as an input variable and then recalculate the accuracy metrics to compare them with those achieved using the algorithm trained with the exposure time included. If the performance metrics (MAE, RMSE, and r) degrade significantly, it can be concluded that the variable in question was truly significant in reducing the error. It was observed that the exposure time was, in fact, relevant for the algorithm to optimize error reduction, considering that the removal of this variable from the input features degraded the numerical performance parameters calculated from the same validation subset, as illustrated in Table 5 (in comparison to the values presented previously in Table 4). That means the algorithm could identify the sensor ageing within the given period. This influence, in turn, was more accentuated in some sensor units than in others.
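The check described above amounts to an ablation: train once with and once without the exposure-time column and compare the validation error. A minimal sketch follows, using a hypothetical synthetic sensor whose reading drifts by 1 ppm per day; the function name `validation_mae`, the fixed seed, and all data are illustrative assumptions.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(4)
n = 1500
exposure_days = rng.uniform(0, 180, n)
temperature = rng.uniform(0, 30, n)
humidity = rng.uniform(30, 90, n)
reference = rng.uniform(400, 700, n)
# Hypothetical drifting sensor: the reading gains 1 ppm per day of exposure.
raw = reference + 1.0 * exposure_days + rng.normal(0, 5, n)

X_full = np.column_stack([raw, temperature, humidity, exposure_days])
X_no_time = X_full[:, :3]  # same inputs without the exposure-time column


def validation_mae(X, y, seed=0):
    """Train on a random 20% and return the MAE on the remaining 80%."""
    X_tr, X_va, y_tr, y_va = train_test_split(X, y, train_size=0.2, random_state=seed)
    model = ExtraTreesRegressor(random_state=seed).fit(X_tr, y_tr)
    return mean_absolute_error(y_va, model.predict(X_va))


mae_with_time = validation_mae(X_full, reference)
mae_without_time = validation_mae(X_no_time, reference)
increase_percent = 100 * (mae_without_time - mae_with_time) / mae_with_time
```

Using the same seed for both runs keeps the training and validation points identical, so any difference in MAE comes from the removed variable alone.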

Regarding the mean absolute error, the sensors presented an increase of up to 100% (minimum of 33% in sensor unit 12; maximum of 100% in sensor units 1, 3, and 7). In terms of the root mean squared error, the maximum increase observed was 75% in sensor unit 1, whilst the minimum increase occurred in sensor unit 10 (33%). Sensor units 10 and 12 seemed to suffer less influence from ageing than the other units. Another appropriate analysis to consolidate the importance of using the continuous exposure time of the sensors in the algorithm’s training stage is to compare the confidence interval of the relative error obtained in the test subset in both situations: with and without the use of this variable. Table 6 shows the confidence interval (95%) of relative errors of the sensors’ dataset calibrated using the ExtRa-Trees model with (as seen in Figure 20) and without sensor exposure time as an input parameter, as well as the confidence interval achieved via the linear-regression calibration.

The information presented in Table 6 suggests that the continuous exposure time of the sensor might be an important input parameter in machine learning algorithms for error reduction in long-term measurements.

Although the achieved results can be considered satisfactory, they were calculated using a training/testing dataset split ratio of 1:4 (20%/80%). As each sensor has its own exposure time, the absolute sizes of the test subsets also differ. To check whether the heterogeneity of the sensors’ exposure time interfered with the performance metrics of the algorithm, we calculated the correlation between the total exposure time of the sensors in hours (see Table 3) and the root mean squared error of the sensors (see Table 4). As a result, we identified a p-value greater than 0.4 (r = 0.1987, N = 14), indicating no statistically significant relationship between these variables. Since the total exposure time of the sensors presented no relation with the obtained accuracy metrics, further analyses were made to assess the influence of the relative size of the training datasets on the model accuracy. Other “training vs. test” subset size ratios were tested, as follows: 10/90, 33/67, 50/50, 67/33 and 80/20. To summarize this analysis, the MAE and RMSE were the only parameters considered. The influence of the training vs. testing ratio on accuracy metrics for the evaluated sensors is presented in Figure 26.
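The reported significance level can be checked from r and N alone. The sketch below assumes the standard two-sided t-test for a Pearson correlation coefficient, which the text does not explicitly name, and uses SciPy’s Student t distribution.

```python
import math
from scipy.stats import t as student_t

r, n = 0.1987, 14  # values reported in the text
dof = n - 2

# Test statistic for H0: no linear correlation, t = r * sqrt((N - 2) / (1 - r^2)).
t_stat = r * math.sqrt(dof / (1 - r**2))
p_value = 2 * student_t.sf(abs(t_stat), dof)  # two-sided p-value
```

Under this assumption the p-value comes out above 0.4, consistent with the conclusion that exposure time and RMSE are not significantly related.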

Once the training subset’s relative size reaches 50% or more, the error reduction tends to level off for both MAE and RMSE. This saturation at larger training datasets might be explained by an overfitting-like effect, since the validation dataset is smaller. This phenomenon was not observed in the indoor experiment due to its short duration and, perhaps, due to the more stable environmental parameters, which could be satisfactorily sampled with fewer data points, thus reaching the optimal error reduction.