A Procedure for Taking a Remotely Controlled Elevator with an Autonomous Mobile Robot Based on 2D LIDAR

Abstract

1. Introduction

New Contribution

2. Materials and Methods

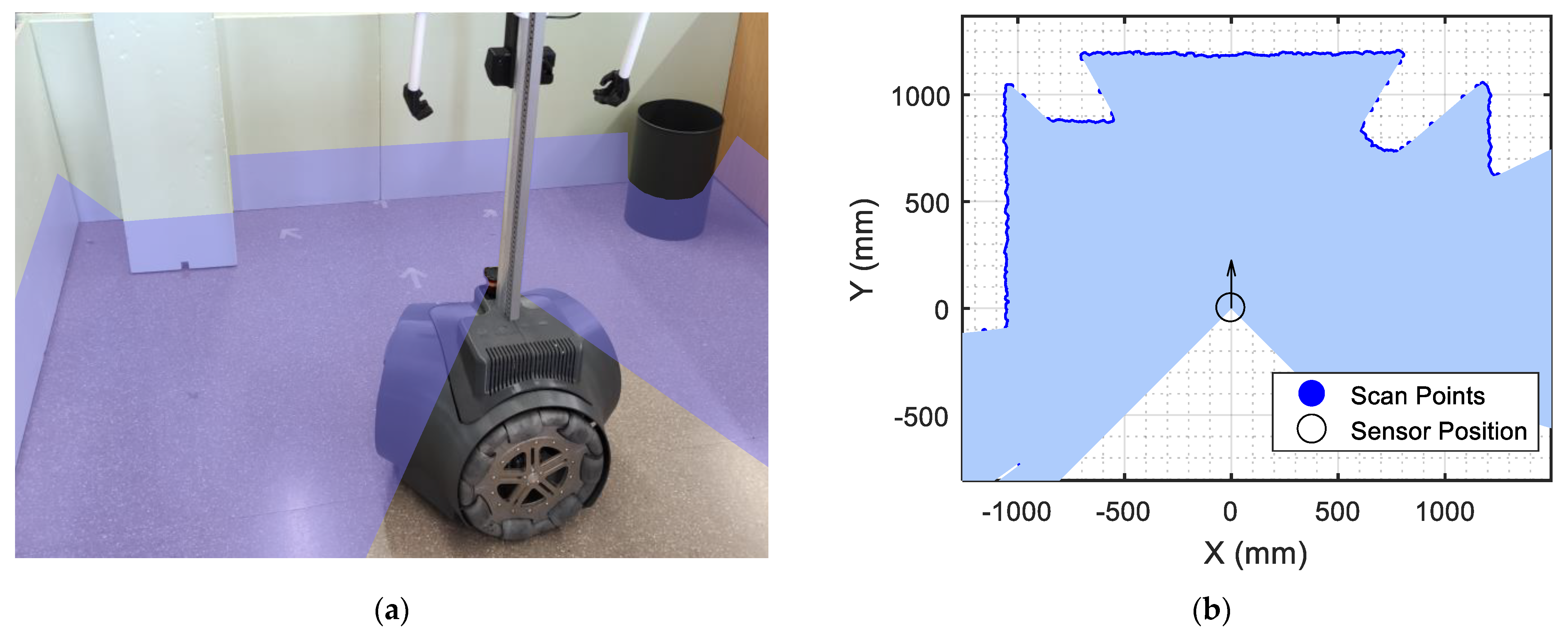

2.1. APR-02 Mobile Robot

2.2. Mobile Robot Self-Localization Based on 2D LIDAR

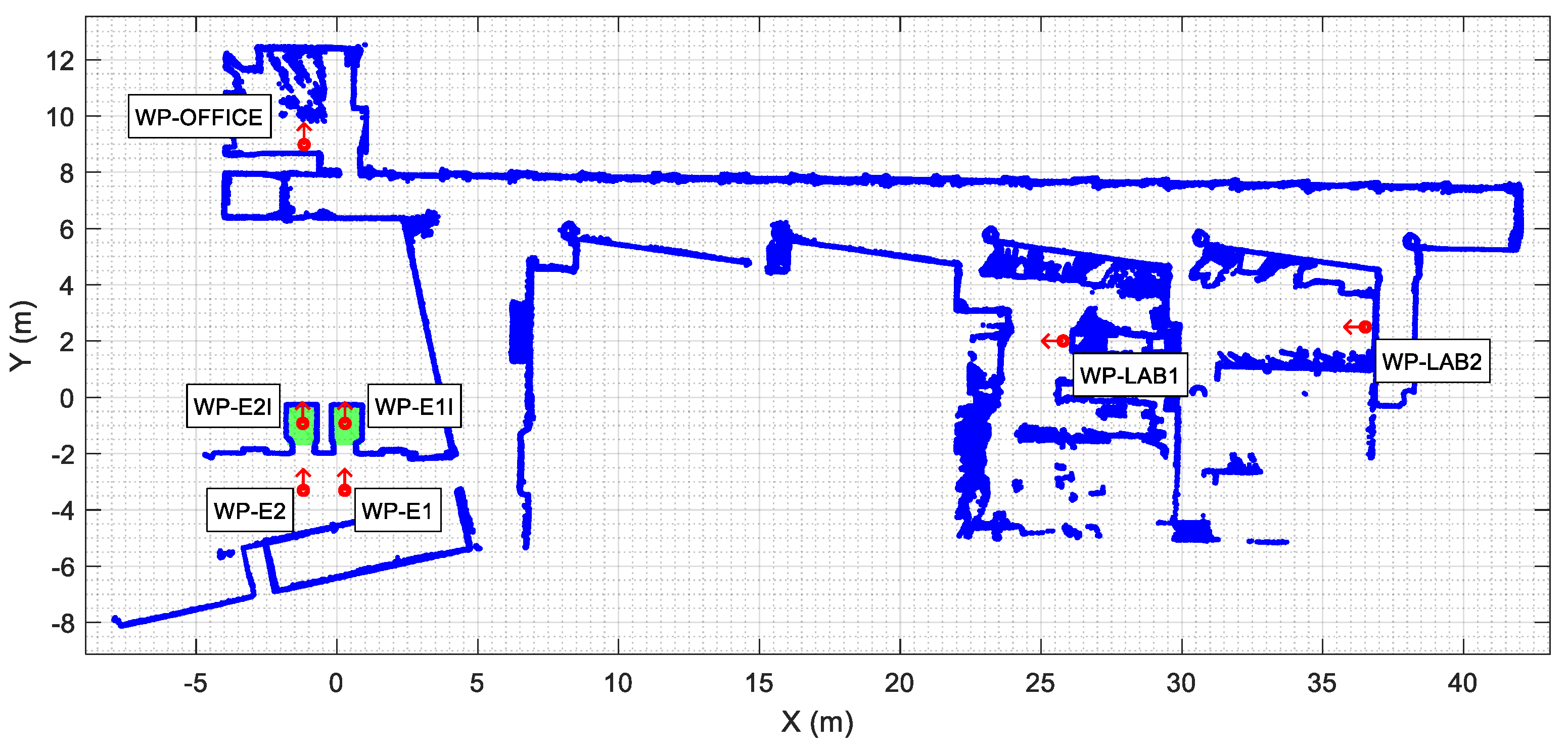

2.3. Map of the Different Floors of the Building

2.4. Remotely Controlled Elevators

3. Procedure to Take the Elevator

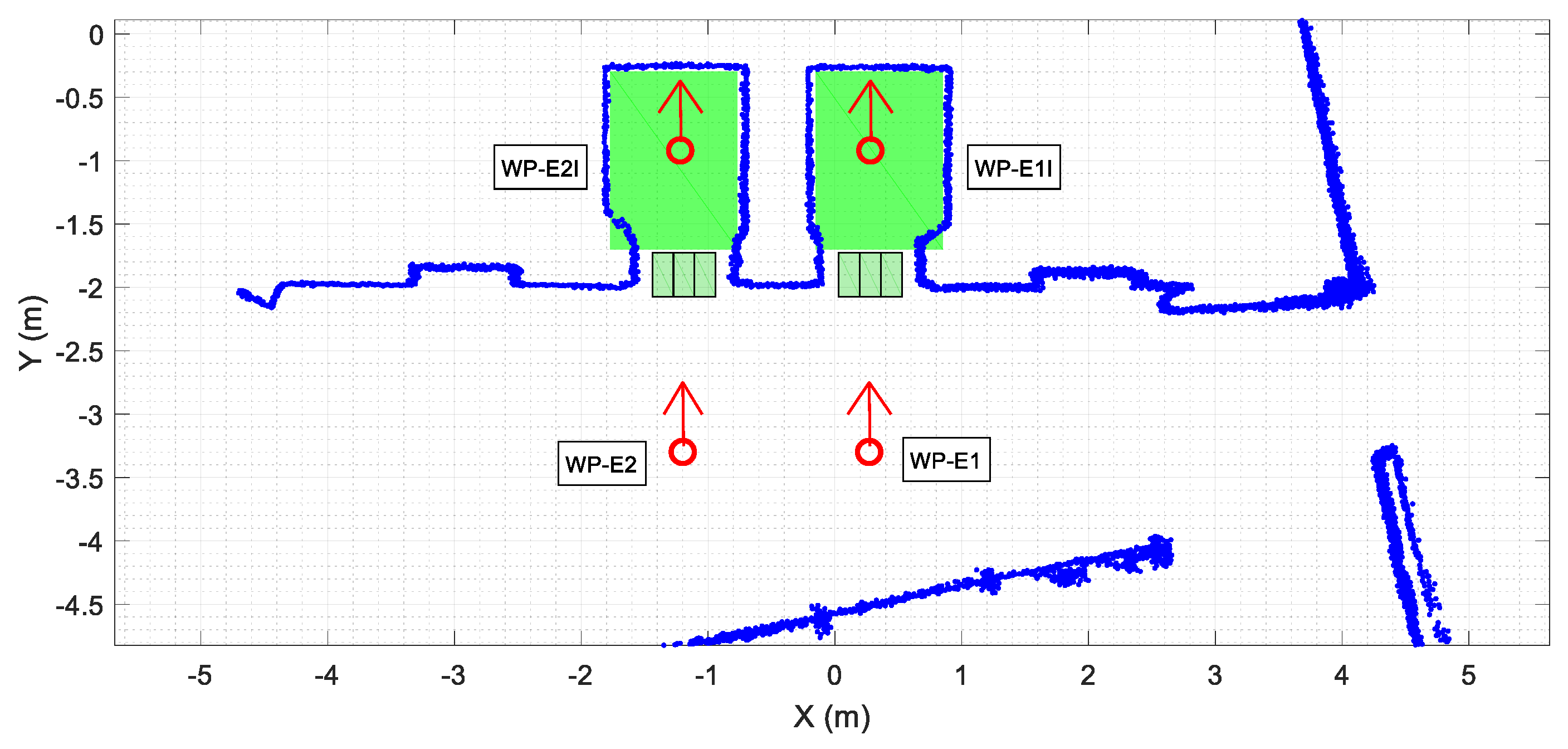

3.1. Entering the Elevator

3.2. Exiting from Inside the Elevator

3.3. Taking the Elevator

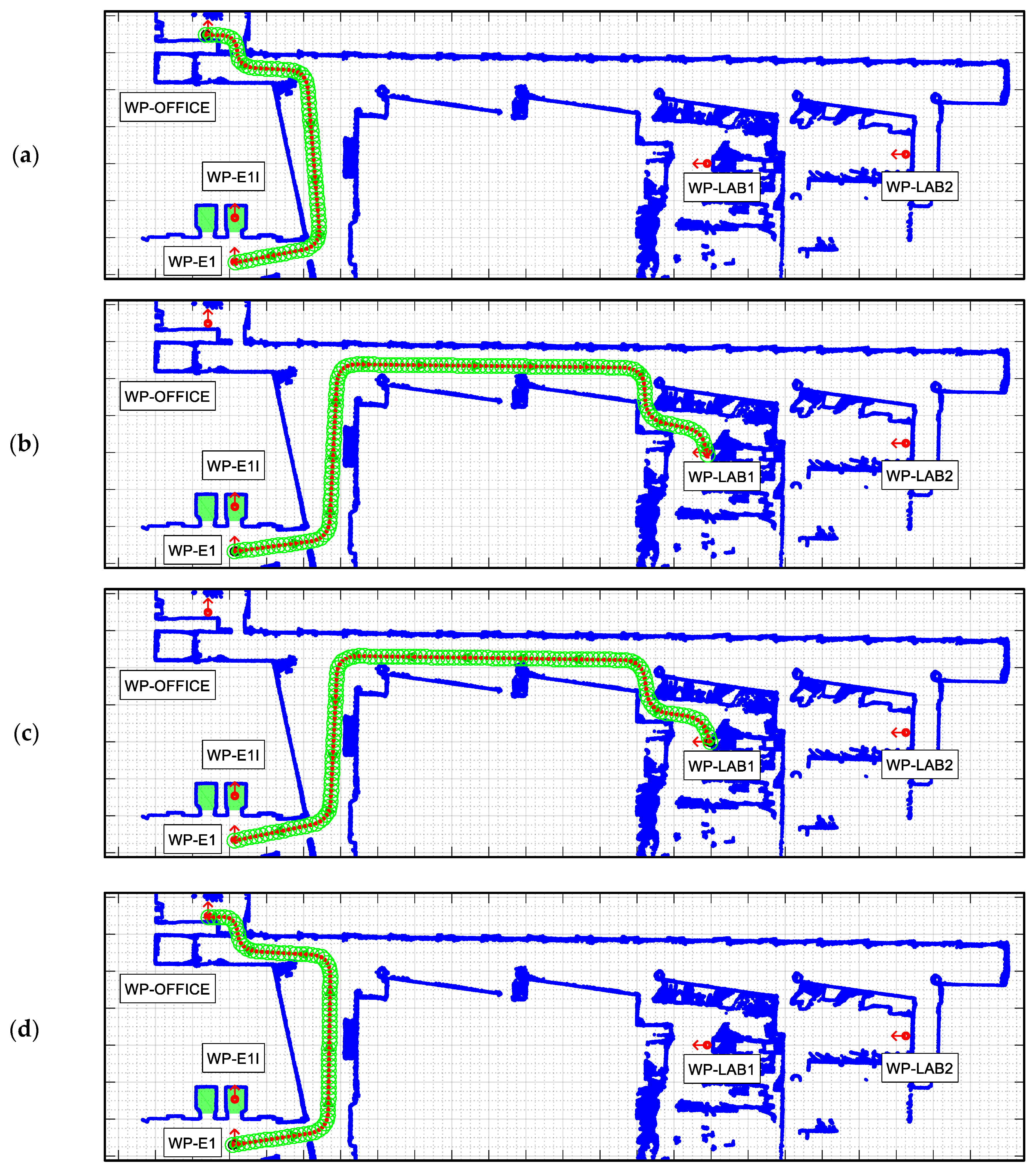

3.4. Path Planning including Navigation between Floors

4. Results

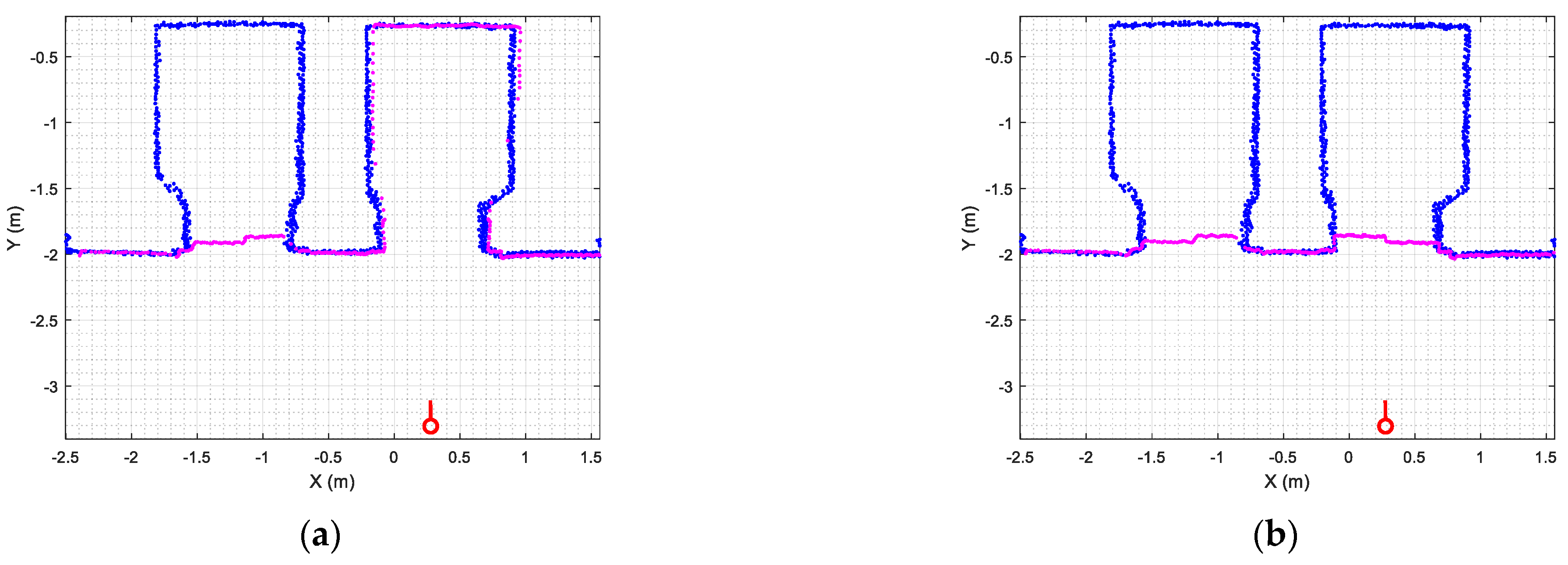

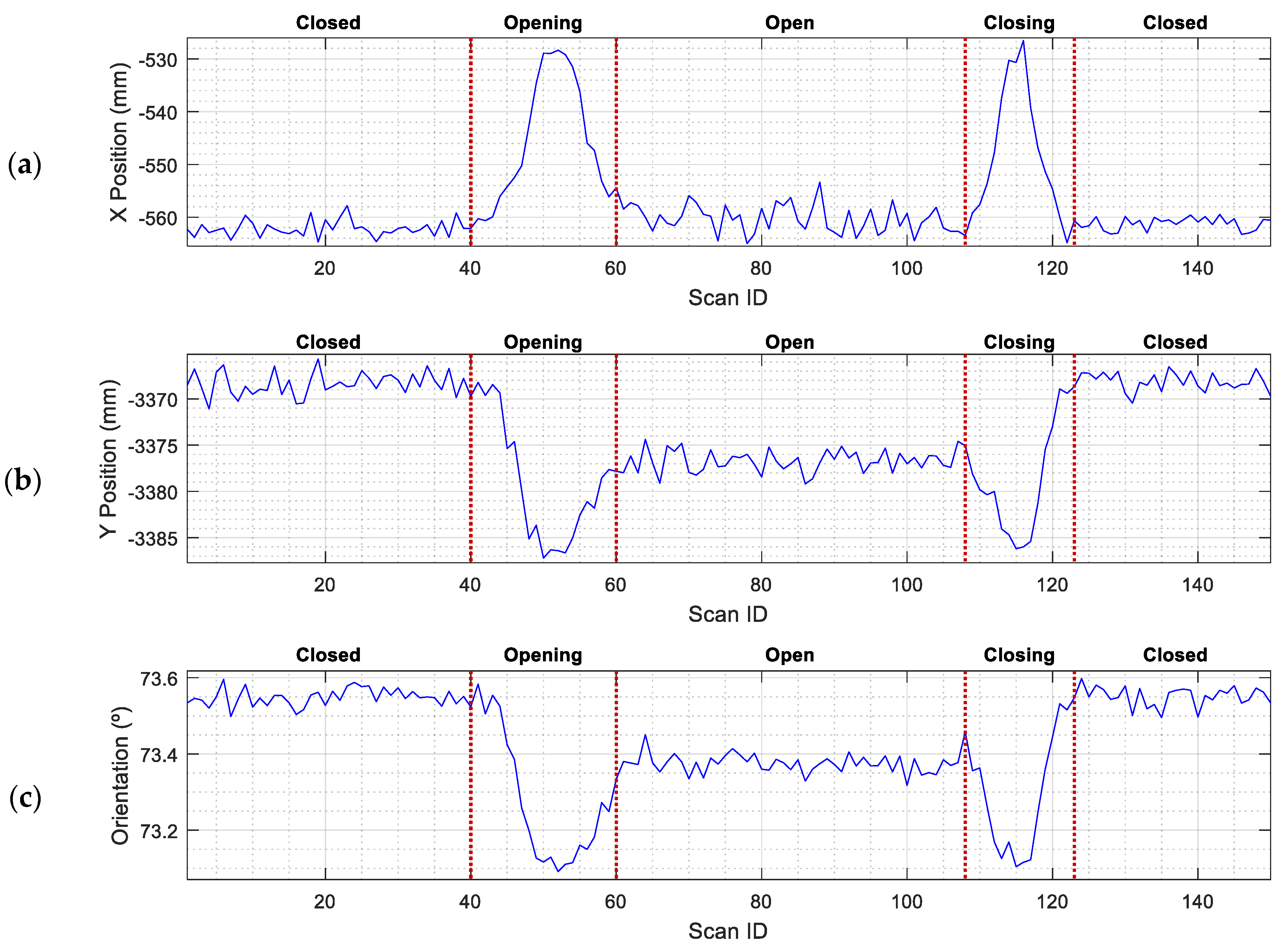

4.1. Self-Localization Next to the Elevators

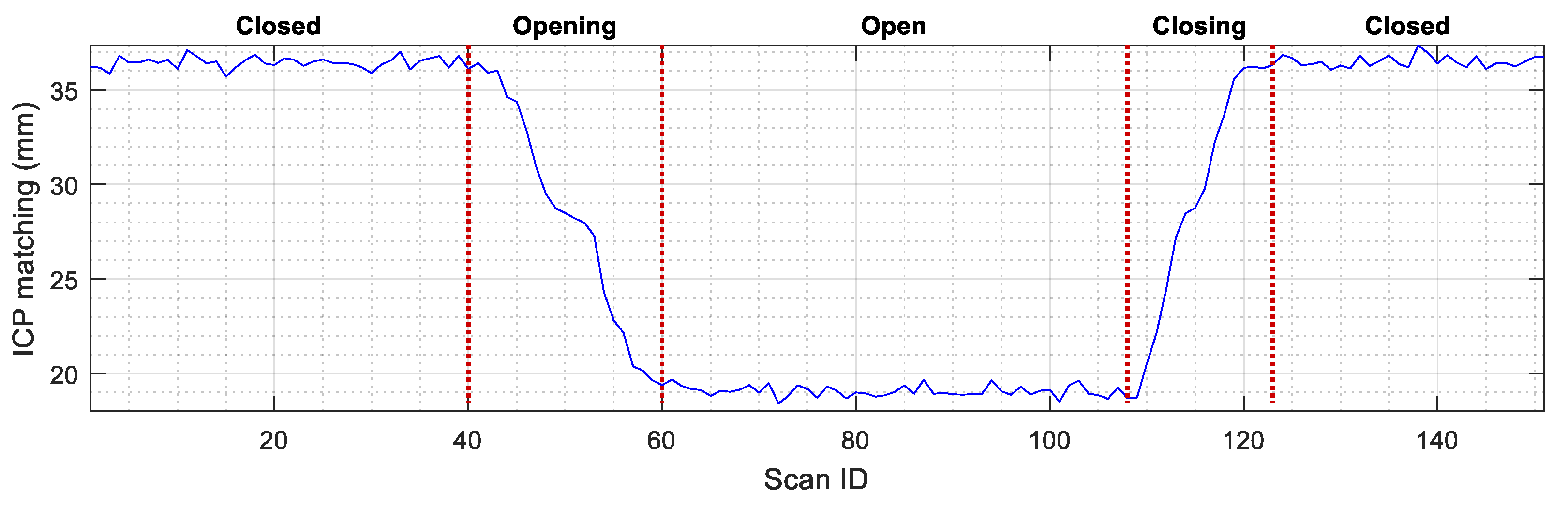

4.2. Elevator Door Status Detection

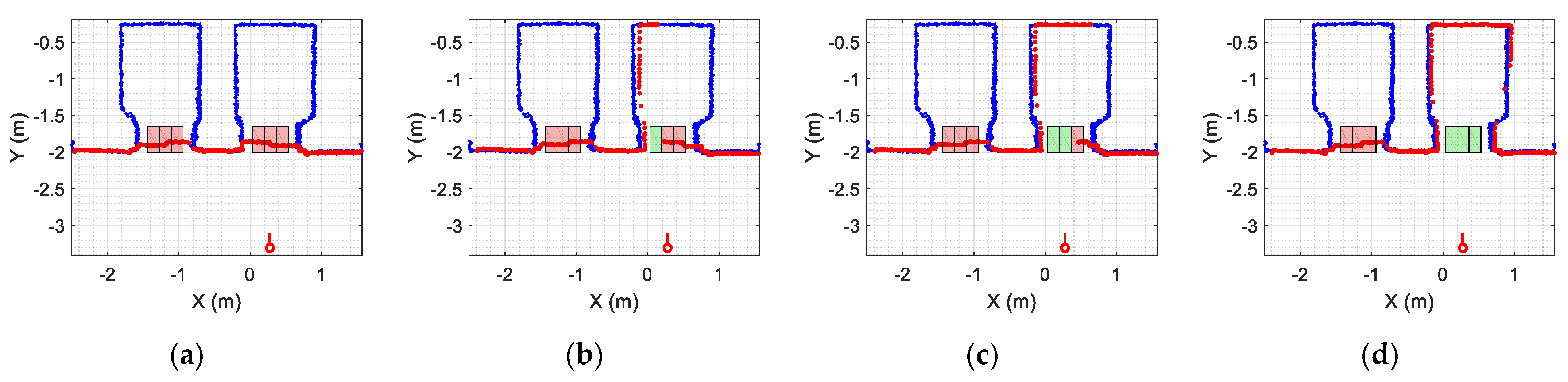

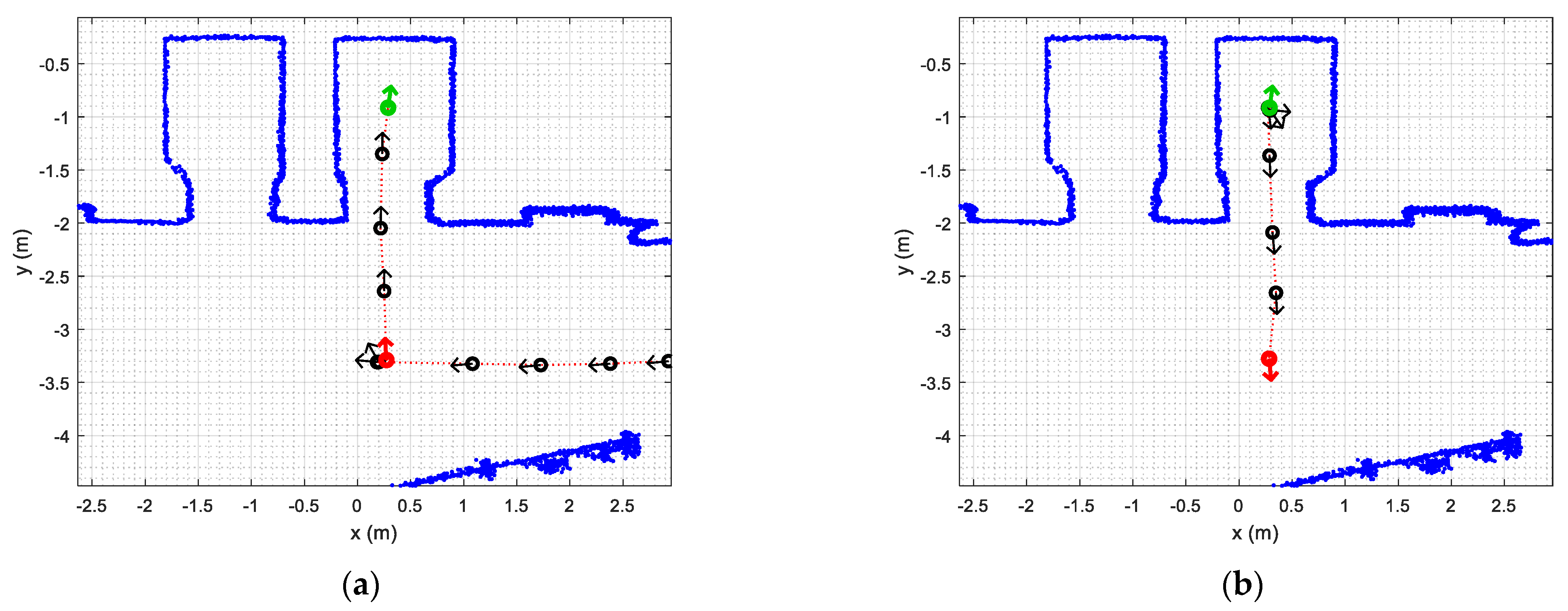

4.3. Path Tracking When Entering and Exiting the Elevator

4.4. Taking the Elevator

5. Discussions and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Function | Description |
|---|---|
| send_elevator (ID, FLOOR) | ID: identifier of the elevator. FLOOR: destination floor of the elevator. In this work, the valid floors are −1, 0, 1, 2 and 3. |
| send_elevator (ID, ACTION) | ID: identifier of the elevator. ACTION: an action implemented in the original button panel of the elevator. In this work, the valid actions are: KeepOpen, which keeps the door-open button pressed; Close, which releases the door-open button; and Alarm, which presses the alarm button of the elevator. |
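The send_elevator function is overloaded: its second argument is either a destination floor or a button-panel action. The Python sketch below shows one way such a call could be validated before it reaches the elevator controller. It is not the authors' implementation: the `ElevatorCommand` record, the `transport` callback and the integer encoding of floors are hypothetical; only the valid floors (−1 to 3) and actions (KeepOpen, Close, Alarm) come from the table.

```python
from dataclasses import dataclass
from typing import Callable, Union

# Values listed for this work in the send_elevator table.
VALID_FLOORS = {-1, 0, 1, 2, 3}
VALID_ACTIONS = {"KeepOpen", "Close", "Alarm"}


@dataclass(frozen=True)
class ElevatorCommand:
    """Hypothetical message sent to the remote elevator controller."""
    elevator_id: str
    kind: str   # "floor" or "action"
    value: str


def send_elevator(elevator_id: str,
                  target: Union[int, str],
                  transport: Callable[[ElevatorCommand], None]) -> ElevatorCommand:
    """Validate a FLOOR (int) or ACTION (str) argument and pass it to `transport`.

    `transport` stands in for the unspecified link to the elevator controller.
    """
    # bool is a subclass of int, so exclude it explicitly (True is not floor 1).
    if isinstance(target, int) and not isinstance(target, bool):
        if target not in VALID_FLOORS:
            raise ValueError(f"invalid floor: {target}")
        cmd = ElevatorCommand(elevator_id, "floor", str(target))
    elif isinstance(target, str):
        if target not in VALID_ACTIONS:
            raise ValueError(f"invalid action: {target}")
        cmd = ElevatorCommand(elevator_id, "action", target)
    else:
        raise TypeError("target must be a floor (int) or an action (str)")
    transport(cmd)
    return cmd


# Usage: record the commands instead of sending them over a real link.
sent = []
send_elevator("E1", 2, sent.append)            # call elevator 1 to floor 2
send_elevator("E1", "KeepOpen", sent.append)   # hold the door open
```

Rejecting invalid arguments before transmission means a typo in a mission script fails locally instead of producing an unexpected elevator movement.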

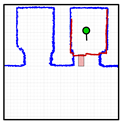

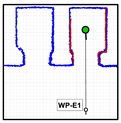

| Sequence: Function | Description | Map (Blue) + LIDAR (Red) |
|---|---|---|
| START of the procedure to enter elevator 1 (E1) | | |
| 1: navigate_to (WP-E1) | The mobile robot navigates to the waypoint located in front of elevator 1 (WP-E1). | |
| The mobile robot reaches the waypoint located in front of elevator 1 (WP-E1) and faces the door. | | |
| 2: send_elevator (E1, Floor2) | The mobile robot calls elevator 1 (E1) to floor 2, where the mobile robot currently is. | |
| 3: waitfor_door_open (E1) | The mobile robot waits until it detects that the sliding door of the elevator is starting to open. | |
| The sliding door of elevator 1 is detected as opening (green area without scan points). | | |
| 4: send_elevator (E1, KeepOpen) | The mobile robot sends elevator 1 the order to keep the door open to prevent an unexpected door closure. | |
| 5: waitfor_door_fullyopen (E1) | The mobile robot waits until the door of the elevator is fully open. | |
| The mobile robot detects the full opening of the sliding door (all three green areas in the door area without scan points). | | |
| 6: navigate_to (WP-E1I) | The mobile robot navigates to the waypoint inside the elevator (WP-E1I). | |
| The mobile robot reaches the waypoint located inside elevator 1 (WP-E1I). | | |
| The mobile robot is inside elevator 1 (E1). END of this partial sequence. | | |
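The sequence above infers the door state from the LIDAR scan: the door is opening when a green door area contains no scan points, and fully open when all three areas are empty. A minimal sketch of that test follows, assuming the scan points have already been transformed into map coordinates using the robot's self-localized pose and that each area is an axis-aligned rectangle. The `Zone` class, the `min_points` threshold and the scan-iterator form of `waitfor_door` are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List, Sequence, Tuple

# A LIDAR point (x, y) in metres, already transformed to map coordinates.
Point = Tuple[float, float]


@dataclass(frozen=True)
class Zone:
    """Axis-aligned rectangle covering part of the door opening (assumed shape)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def count(self, points: Iterable[Point]) -> int:
        """Number of scan points that fall inside this zone."""
        return sum(1 for x, y in points
                   if self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max)


class DoorState(Enum):
    CLOSED = "closed"          # every zone contains scan points
    OPENING = "opening"        # at least one zone is free of scan points
    FULLY_OPEN = "fully_open"  # all zones are free of scan points


def door_state(points: Sequence[Point], zones: List[Zone], min_points: int = 1) -> DoorState:
    """Classify the sliding door from the scan points in each door zone.

    A zone is considered occupied by the door leaf when it holds at least
    `min_points` points (hypothetical tuning threshold).
    """
    empty = [zone.count(points) < min_points for zone in zones]
    if all(empty):
        return DoorState.FULLY_OPEN
    if any(empty):
        return DoorState.OPENING
    return DoorState.CLOSED


def waitfor_door(scans: Iterable[Sequence[Point]], zones: List[Zone],
                 wanted: DoorState) -> int:
    """Consume scans until the door reaches `wanted`; return that scan's index.

    Waiting for OPENING is also satisfied by a door that is already fully open.
    """
    for i, scan in enumerate(scans):
        state = door_state(scan, zones)
        if state is wanted or (wanted is DoorState.OPENING
                               and state is DoorState.FULLY_OPEN):
            return i
    raise TimeoutError("scan stream ended before the door reached the wanted state")


zones = [Zone(0.0, 0.3, 1.0, 1.1), Zone(0.3, 0.6, 1.0, 1.1), Zone(0.6, 0.9, 1.0, 1.1)]
closed = [(0.1, 1.05), (0.45, 1.05), (0.8, 1.05)]
opening = [(0.1, 1.05), (0.8, 1.05)]     # middle zone already free
print(waitfor_door([closed, closed, opening], zones, DoorState.OPENING))  # 2
```

In this simplified form an occupied zone only means "something is in the door area", so a person standing in the doorway would also read as a closed door.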

| Sequence: Function | Description | Map (Blue) + LIDAR (Red) |
|---|---|---|
| START of this partial sequence | | |
| 7: send_elevator (E1, Floor1) | Sends elevator 1 (E1) the destination floor. | |
| 8: send_elevator (E1, Close) | Allows the automatic closing of the elevator door. | |
| 9: rotate (180°) | The mobile robot rotates 180° in preparation for exiting. | |
| The mobile robot completes the rotation. | | |
| 10: waitfor_door_closed (E1) | The mobile robot waits until the door of the elevator is fully closed. | |
| The mobile robot detects the full closure of the sliding door of the elevator. | | |
| 11: waitfor_door_open (E1) | The mobile robot waits for the full opening of the door of the elevator. | |
| The mobile robot detects the full opening of the sliding door. | | |
| 12: send_elevator (E1, KeepOpen) | Sends the elevator the order to keep the door open. | |
| 13: navigate_to (WP-E1) | The mobile robot navigates to the waypoint outside the elevator (WP-E1). | |
| The mobile robot reaches the waypoint outside the elevator (WP-E1). | | |
| 14: send_elevator (E1, Close) | Allows the automatic closing of the door. | |
| END of the procedure to take elevator 1. CONTINUE navigation. | | |
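Read together, the two partial sequences define a fixed ordering of 14 commands. The sketch below replays that ordering against a hypothetical `Robot` interface whose method names mirror the functions in the tables. Blocking behaviour, error handling and timeouts of the real system are not modelled, and the waypoint naming rule (`WP-E1`, `WP-E1I`) and the `Floor<n>` strings are assumptions generalised from the tables. A recording test double lets the order be checked without hardware.

```python
from typing import List, Protocol, Tuple


class Robot(Protocol):
    """Hypothetical interface; method names mirror the functions in the tables."""
    def navigate_to(self, waypoint: str) -> None: ...
    def rotate(self, degrees: float) -> None: ...
    def send_elevator(self, elevator: str, arg: str) -> None: ...
    def waitfor_door_open(self, elevator: str) -> None: ...
    def waitfor_door_fullyopen(self, elevator: str) -> None: ...
    def waitfor_door_closed(self, elevator: str) -> None: ...


def take_elevator(robot: Robot, elevator: str, origin: int, destination: int) -> None:
    """Issue steps 1-14 in table order (each call is assumed to block until done)."""
    outside, inside = f"WP-{elevator}", f"WP-{elevator}I"
    # Entering the elevator (steps 1-6)
    robot.navigate_to(outside)                              # 1
    robot.send_elevator(elevator, f"Floor{origin}")         # 2: call the car here
    robot.waitfor_door_open(elevator)                       # 3: door starts opening
    robot.send_elevator(elevator, "KeepOpen")               # 4: avoid unexpected closing
    robot.waitfor_door_fullyopen(elevator)                  # 5
    robot.navigate_to(inside)                               # 6
    # Riding and exiting (steps 7-14)
    robot.send_elevator(elevator, f"Floor{destination}")    # 7: destination floor
    robot.send_elevator(elevator, "Close")                  # 8: allow automatic closing
    robot.rotate(180)                                       # 9
    robot.waitfor_door_closed(elevator)                     # 10
    robot.waitfor_door_open(elevator)                       # 11: door opens at destination
    robot.send_elevator(elevator, "KeepOpen")               # 12
    robot.navigate_to(outside)                              # 13
    robot.send_elevator(elevator, "Close")                  # 14


class RecordingRobot:
    """Test double that logs each call instead of moving a real robot."""
    def __init__(self) -> None:
        self.log: List[Tuple[object, ...]] = []

    def navigate_to(self, waypoint: str) -> None:
        self.log.append(("navigate_to", waypoint))

    def rotate(self, degrees: float) -> None:
        self.log.append(("rotate", degrees))

    def send_elevator(self, elevator: str, arg: str) -> None:
        self.log.append(("send_elevator", elevator, arg))

    def waitfor_door_open(self, elevator: str) -> None:
        self.log.append(("waitfor_door_open", elevator))

    def waitfor_door_fullyopen(self, elevator: str) -> None:
        self.log.append(("waitfor_door_fullyopen", elevator))

    def waitfor_door_closed(self, elevator: str) -> None:
        self.log.append(("waitfor_door_closed", elevator))


robot = RecordingRobot()
take_elevator(robot, "E1", origin=2, destination=1)   # the Floor2 -> Floor1 example
for entry in robot.log:
    print(entry)
```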

| Function | Description |
|---|---|
| goto_floor (FLOOR) | FLOOR: destination floor of the mobile robot. In this work, the valid floors are −1, 0, 1, 2 and 3. This macro function sets the external waypoint of elevator 1 (WP-E1) as the new destination of the mobile robot trajectory, enters and exits the elevator, and ends with the mobile robot located at WP-E1 on the specified destination FLOOR (sequences in Table 2 and Table 3). |

| Single-Floor Mission Sequence: Function | Dual-Floor Mission Sequence: Function |
|---|---|
| SP1: start_at (Floor2, WP-OFFICE) | DP1: start_at (Floor2, WP-OFFICE) |
| SP2: move_to (WP-LAB1) | DP2: goto_floor (Floor1) |
| SP3: move_to (WP-OFFICE) | DP3: move_to (WP-LAB1) |
| | DP4: goto_floor (Floor2) |
| | DP5: move_to (WP-OFFICE) |
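The dual-floor mission differs from the single-floor one only by its goto_floor macros. The sketch below expands each goto_floor into hypothetical primitive steps (move to WP-E1, then ride elevator 1) and replays the mission to track the robot's (floor, waypoint) state. Floors are encoded as integers (Floor2 becomes 2), the `take_elevator` step name is an assumption, and the check that the robot is at WP-E1 before riding reflects the macro description rather than a documented guard.

```python
from typing import List, Optional, Tuple

Step = Tuple  # e.g. ("start_at", 2, "WP-OFFICE"), ("goto_floor", 1)
State = Tuple[int, str]  # (floor, waypoint)

VALID_FLOORS = {-1, 0, 1, 2, 3}
ELEVATOR = "E1"
ELEVATOR_WAYPOINT = "WP-E1"  # external waypoint of elevator 1


def expand(mission: List[Step]) -> List[Step]:
    """Replace each goto_floor macro by hypothetical primitive steps."""
    out: List[Step] = []
    for step in mission:
        if step[0] == "goto_floor":
            out.append(("move_to", ELEVATOR_WAYPOINT))        # reach WP-E1 on this floor
            out.append(("take_elevator", ELEVATOR, step[1]))  # enter, ride, exit
        else:
            out.append(step)
    return out


def run_mission(mission: List[Step]) -> List[State]:
    """Replay an expanded mission and return the (floor, waypoint) after each step."""
    trace: List[State] = []
    floor: Optional[int] = None
    waypoint: Optional[str] = None
    for step in mission:
        op = step[0]
        if op == "start_at":
            floor, waypoint = step[1], step[2]
        elif floor is None:
            raise ValueError("a mission must begin with start_at")
        elif op == "move_to":
            waypoint = step[1]                  # navigation within the current floor
        elif op == "take_elevator":
            if step[2] not in VALID_FLOORS:
                raise ValueError(f"invalid floor: {step[2]}")
            if waypoint != ELEVATOR_WAYPOINT:
                raise ValueError("the elevator is only taken from its external waypoint")
            floor = step[2]                     # the robot ends at WP-E1 on the new floor
        else:
            raise ValueError(f"unknown step: {op}")
        trace.append((floor, waypoint))
    return trace


single = [("start_at", 2, "WP-OFFICE"), ("move_to", "WP-LAB1"), ("move_to", "WP-OFFICE")]
dual = [("start_at", 2, "WP-OFFICE"), ("goto_floor", 1), ("move_to", "WP-LAB1"),
        ("goto_floor", 2), ("move_to", "WP-OFFICE")]
print(run_mission(expand(single)))
print(run_mission(expand(dual)))
```

Keeping goto_floor as a macro leaves the same-floor path planner unchanged: it only ever sees move_to targets, while floor changes are handled by the elevator procedure.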

| Experiment | Starting Floor | Destination Floor | Navigation Problem | Arrival Floor |
|---|---|---|---|---|
| 1 | 2 | 1 | No | 1 |
| 2 | 1 | 2 | No | 2 |
| 3 | 2 | 3 | No | 3 |
| 4 | 3 | −1 | No | 0 ¹ |
| 5 | 0 | 2 | No | 2 |
| 6 | 2 | 0 | No | 0 |
| 7 | 0 | 3 | No | 3 |
| 8 | 1 | 0 | No | 0 |
| 9 | 0 | 3 | No | 2 ¹ |
| 10 | 2 | −1 | No | −1 |
| 11 | −1 | 0 | No | 0 |
| 12 | 0 | 2 | No | 2 |
| 13 | 2 | 1 | No | 1 |
| 14 | 1 | 3 | No | 3 |
| 15 | 3 | 2 | No | 2 |

| Concept | Number of Experiments | Successful Experiments | Failed Experiments | Success Rate |
|---|---|---|---|---|
| Entering the elevator | 15 | 15 | 0 | 100% |
| Exiting the elevator | 15 | 15 | 0 | 100% |
| Arriving at the planned destination floor | 15 | 13 | 2 ¹ | 86.7% |
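The arrival success rate can be recomputed directly from the experiment table: an experiment counts as a failure when the arrival floor differs from the destination floor. A short Python check, with the table rows copied as integer tuples:

```python
from typing import List, Tuple

# (experiment, starting floor, destination floor, arrival floor), copied from the table
EXPERIMENTS: List[Tuple[int, int, int, int]] = [
    (1, 2, 1, 1), (2, 1, 2, 2), (3, 2, 3, 3), (4, 3, -1, 0), (5, 0, 2, 2),
    (6, 2, 0, 0), (7, 0, 3, 3), (8, 1, 0, 0), (9, 0, 3, 2), (10, 2, -1, -1),
    (11, -1, 0, 0), (12, 0, 2, 2), (13, 2, 1, 1), (14, 1, 3, 3), (15, 3, 2, 2),
]


def arrival_summary(rows: List[Tuple[int, int, int, int]]) -> Tuple[List[int], float]:
    """Return the failed experiment numbers and the arrival success rate in percent."""
    failed = [exp for exp, _start, dest, arrival in rows if arrival != dest]
    rate = 100.0 * (len(rows) - len(failed)) / len(rows)
    return failed, rate


failed, rate = arrival_summary(EXPERIMENTS)
print(failed, f"{rate:.1f}%")  # [4, 9] 86.7%
```

This identifies experiments 4 and 9 as the two floor-arrival failures, giving 13/15 ≈ 86.7%.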

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Palacín, J.; Bitriá, R.; Rubies, E.; Clotet, E. A Procedure for Taking a Remotely Controlled Elevator with an Autonomous Mobile Robot Based on 2D LIDAR. Sensors 2023, 23, 6089. https://doi.org/10.3390/s23136089
