An Automated Sitting Posture Recognition System Utilizing Pressure Sensors

Abstract

1. Introduction

2. Related Work

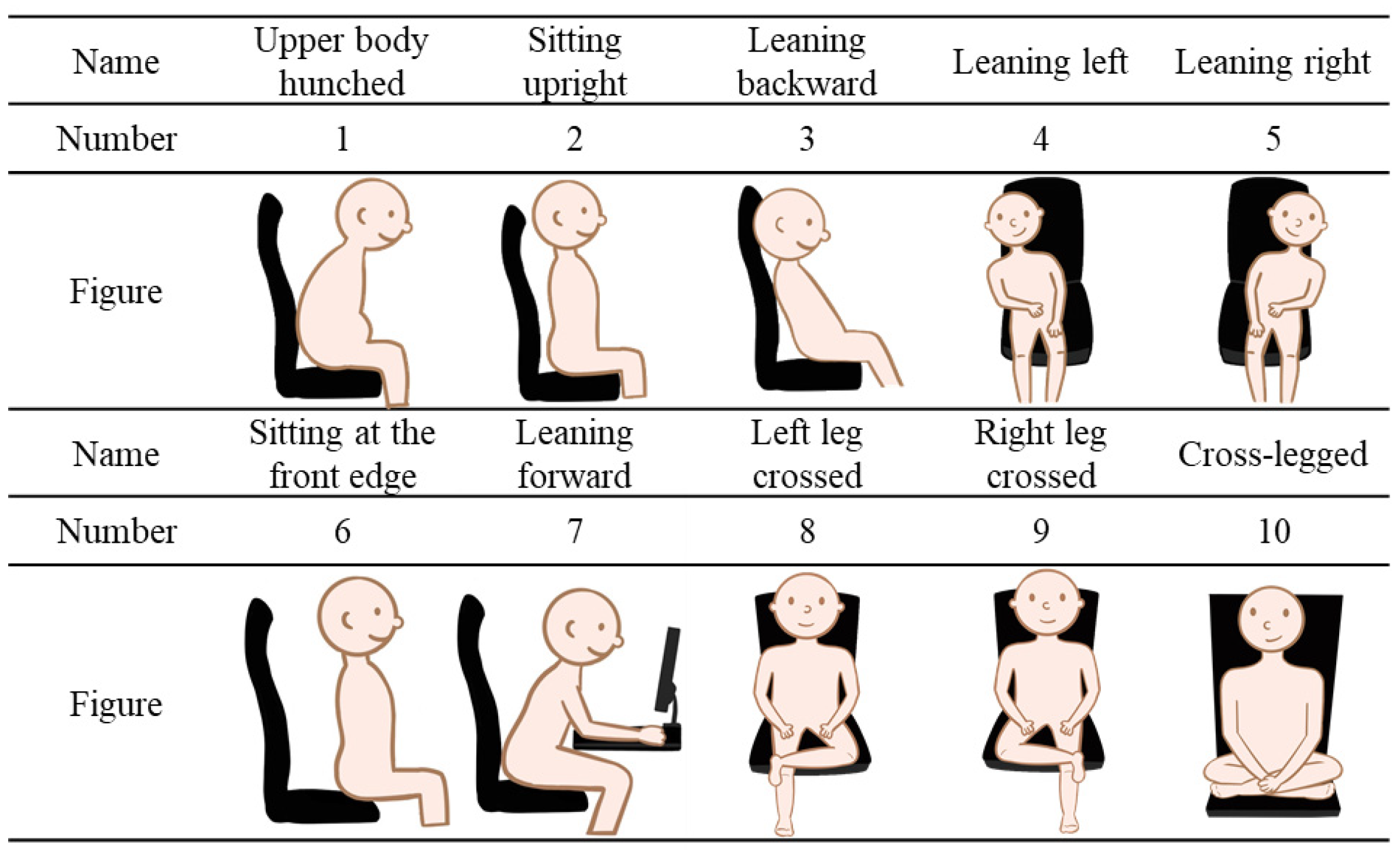

3. Design and Implementation of the Sitting Posture Recognition System

3.1. Design Requirements and Challenges

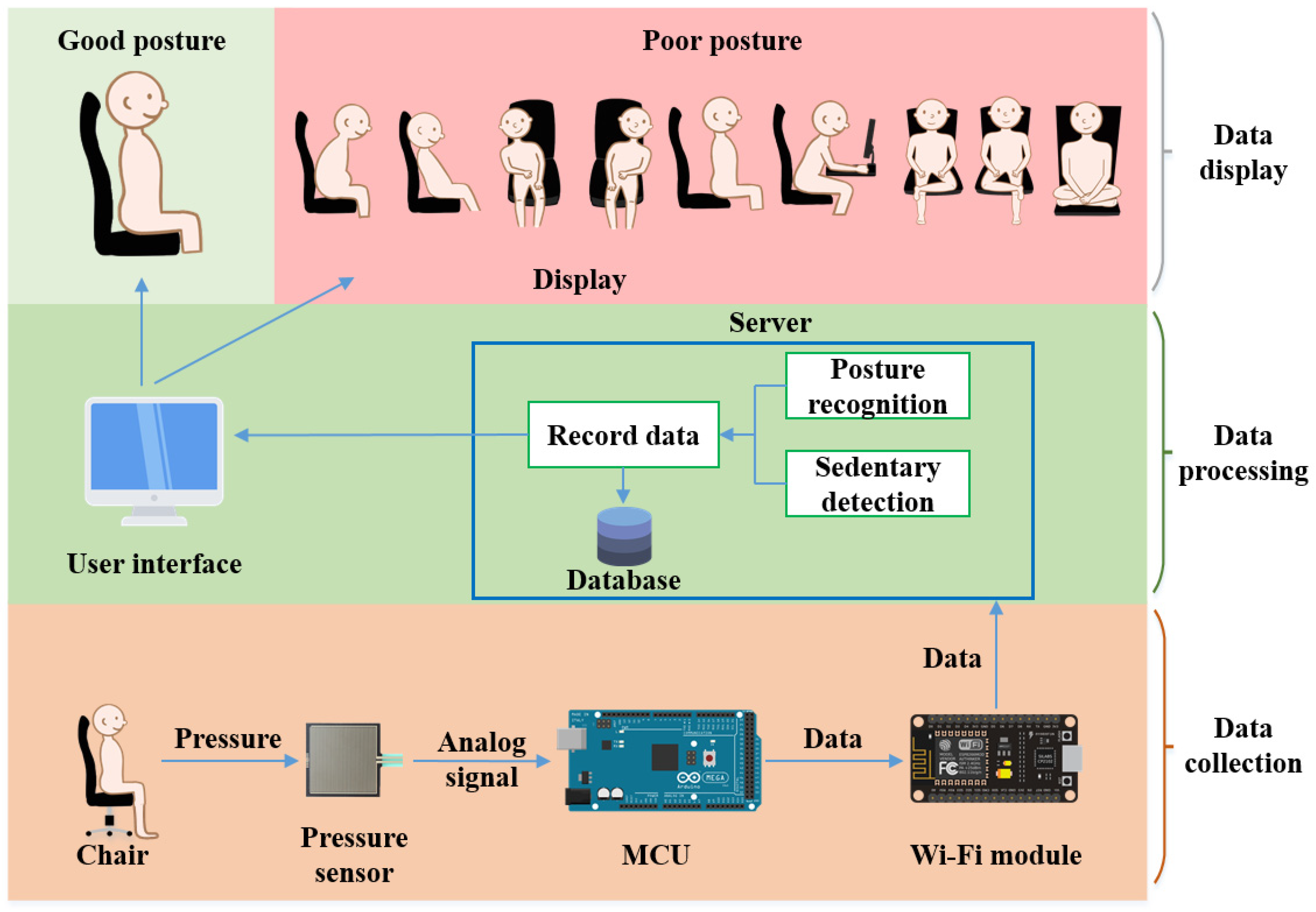

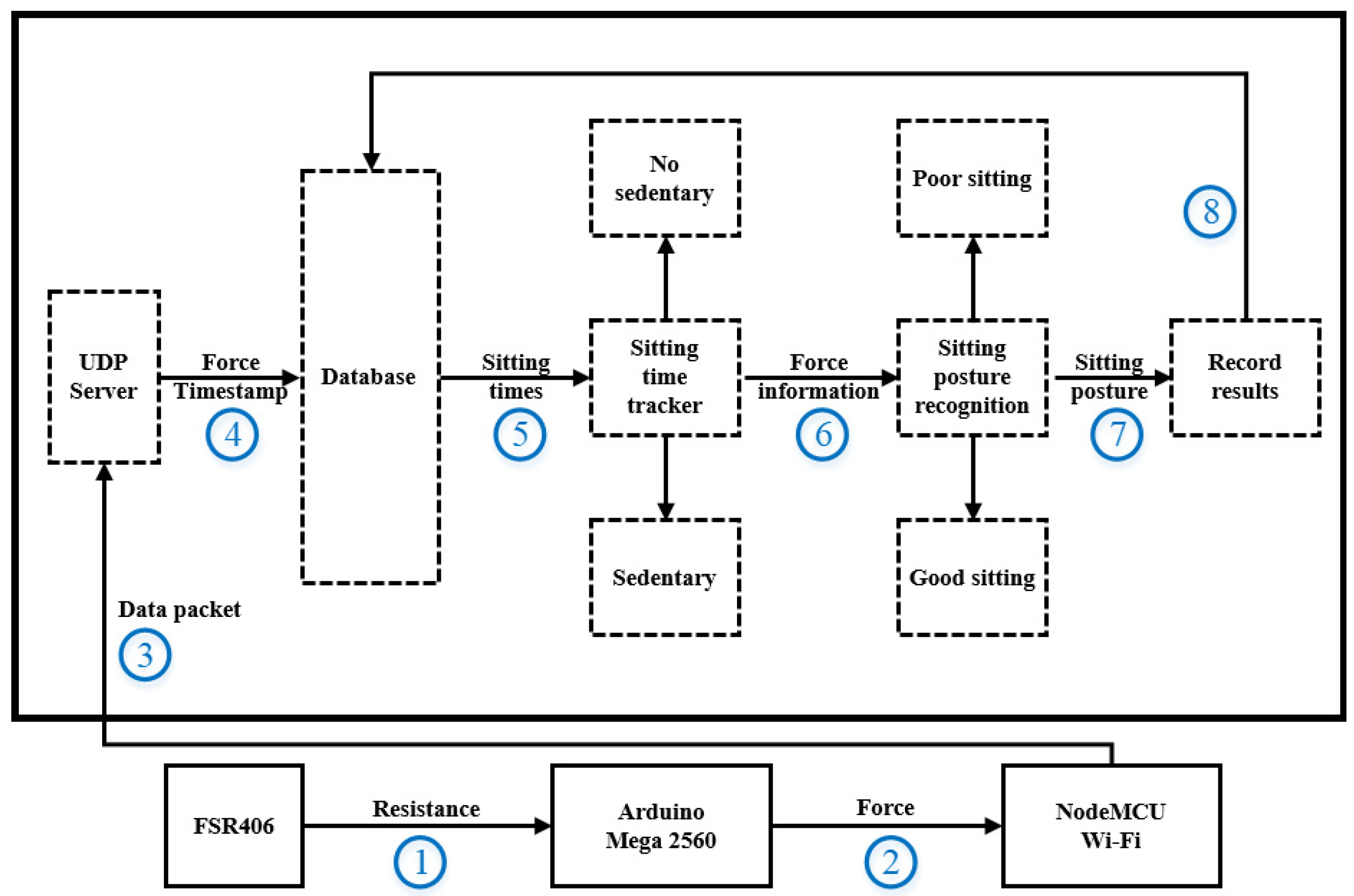

3.2. SPRS Overview

3.3. Smart Cushion Hardware

3.4. Posture Recognition Methods

3.4.1. Z-Score Standardization

3.4.2. Support Vector Machine (SVM)

3.4.3. K-Nearest Neighbor (KNN)

3.4.4. Decision Tree

3.4.5. Random Forest

3.4.6. Logistic Regression

3.5. Posture Recognition Application Software

3.5.1. Software Architecture

3.5.2. Software Interface

4. Experimental Results and Discussion

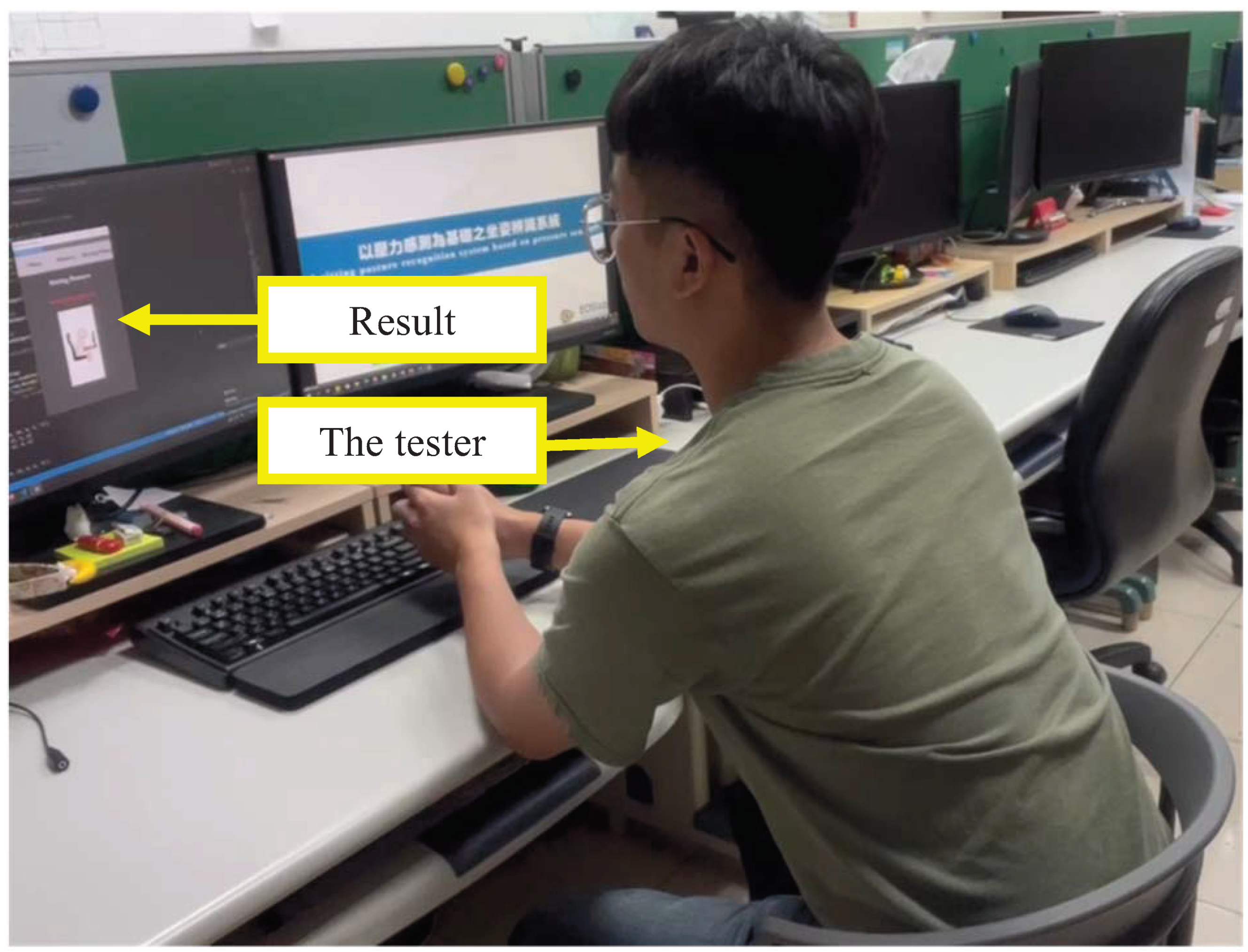

4.1. Experimental Design

4.1.1. Stage One: Model Establishment

4.1.2. Stage Two: Accuracy Validation

4.2. SVM Accuracy

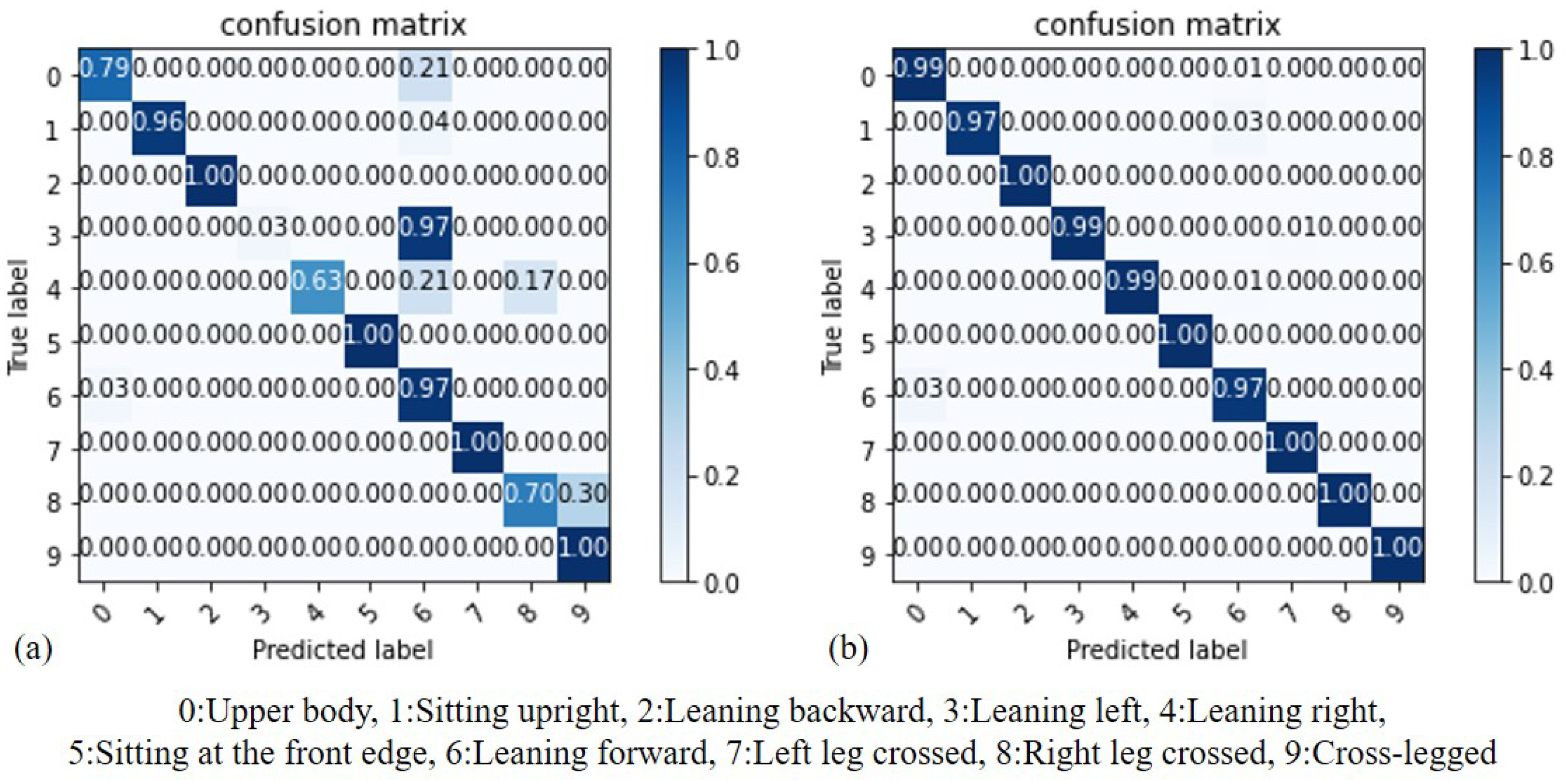

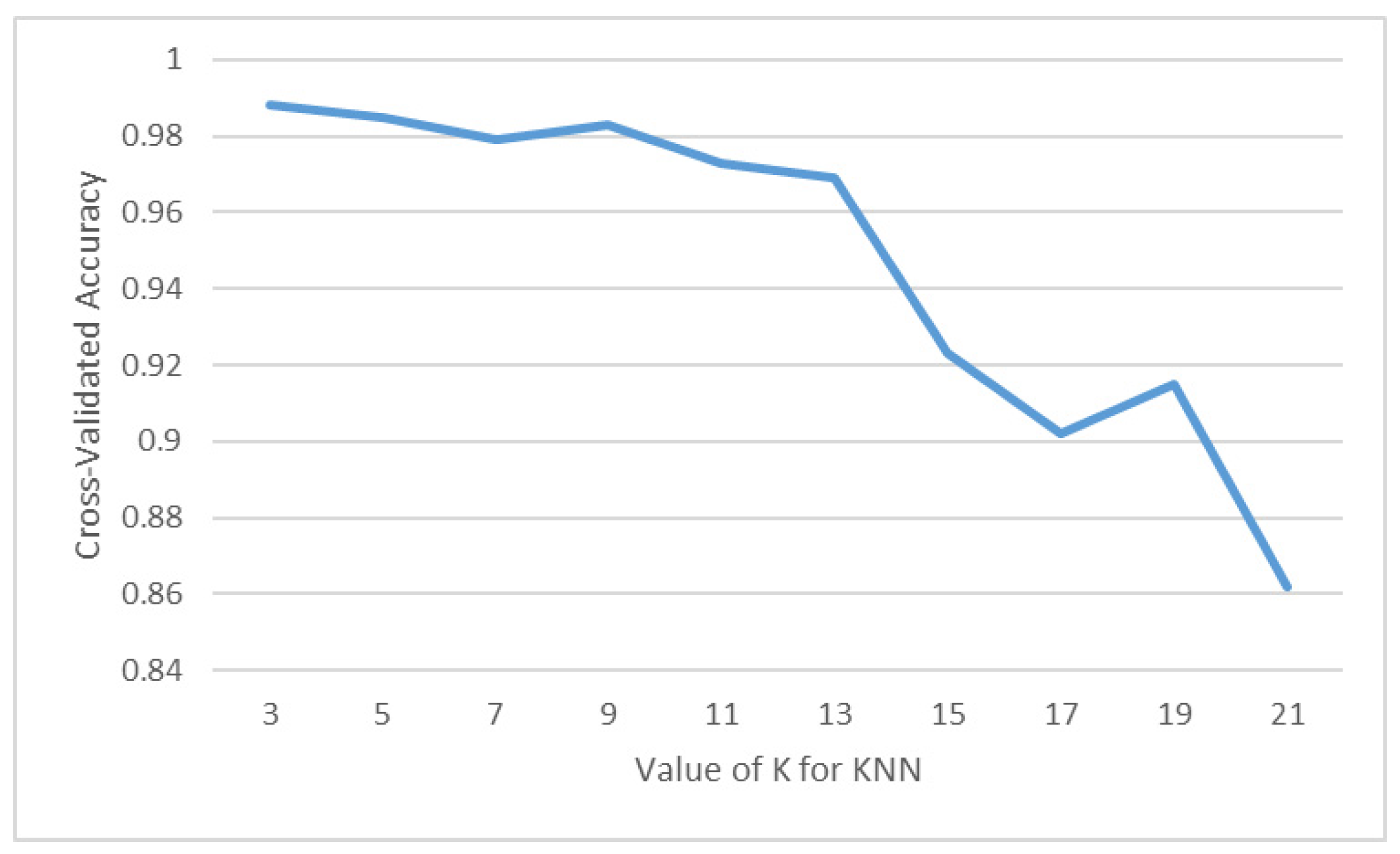

4.3. KNN Accuracy

4.4. Decision Tree Accuracy

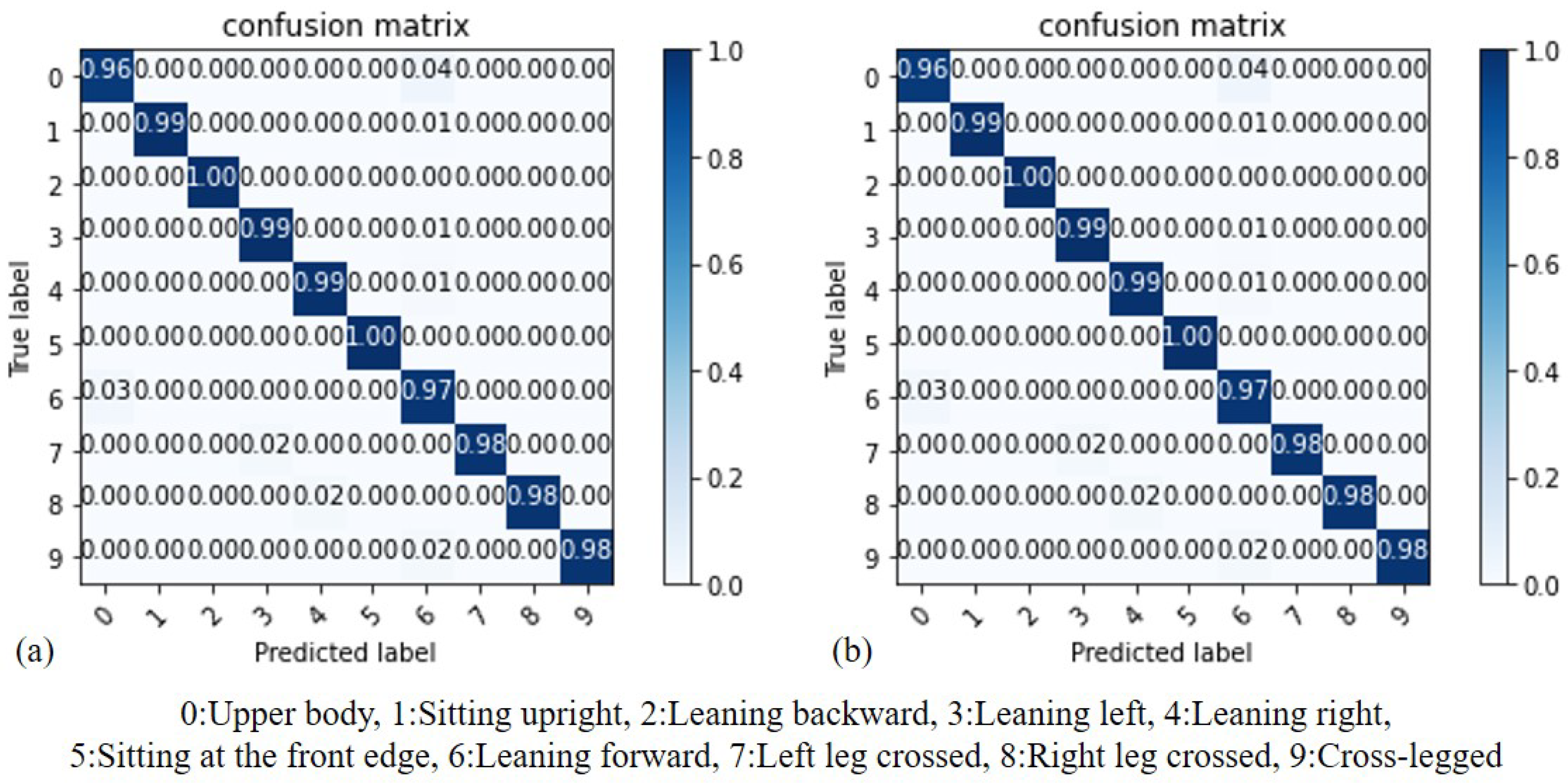

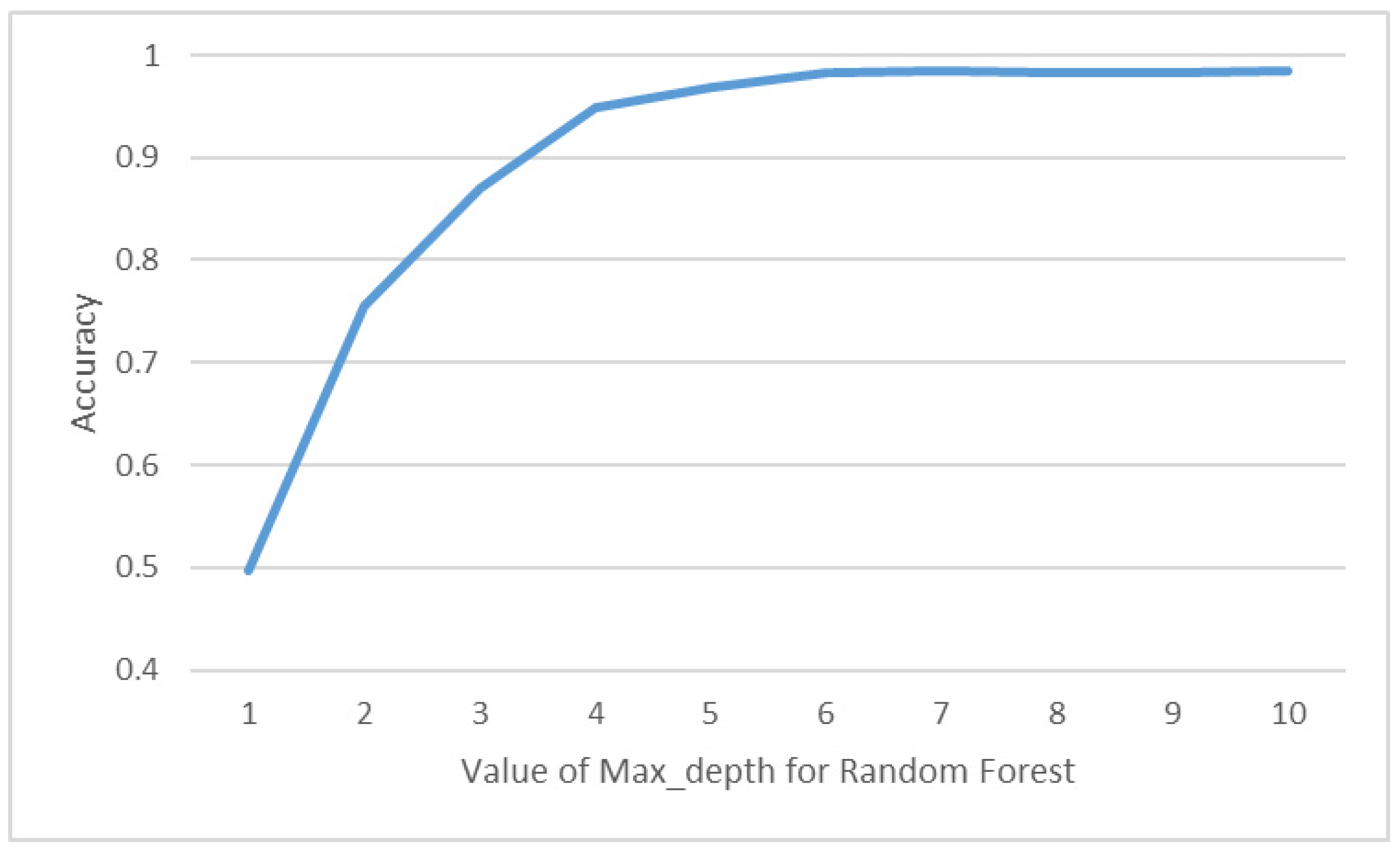

4.5. Random Forest Accuracy

4.6. Logistic Regression Accuracy

4.7. Comparison of Algorithms

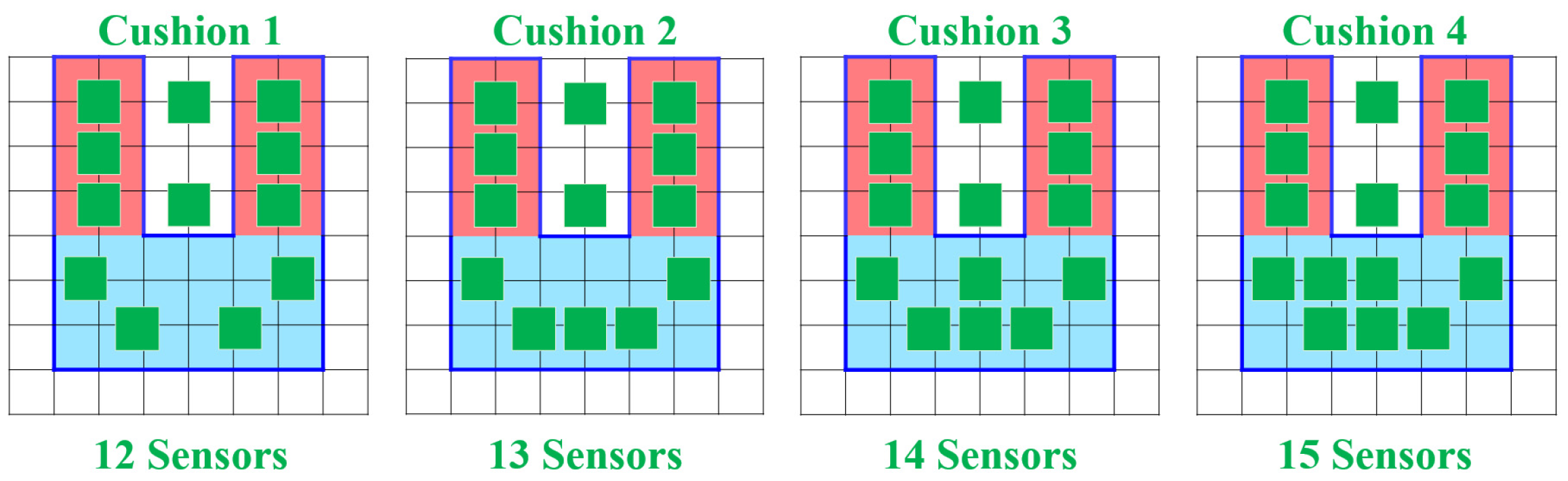

4.8. Influence of Sensor Placement on Accuracy

4.9. System Response Time

4.10. Usability Analysis

4.11. User Interface Satisfaction

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Faucett, J.; Rempel, D. VDT-related musculoskeletal symptoms: Interactions between work posture and psychosocial work factors. Am. J. Ind. Med. 1994, 26, 597–612.
2. Ortiz-Hernandez, L.; Tamez-Gonzalez, S.; Martinez-Alcantara, S.; Mendez-Ramirez, I. Computer use increases the risk of musculoskeletal disorders among newspaper office workers. Arch. Med. Res. 2003, 34, 331–342.
3. Callaghan, J.P.; McGill, S.M. Low back joint loading and kinematics during standing and unsupported sitting. Ergonomics 2001, 44, 280–294.
4. Waongenngarm, P.; van der Beek, A.J.; Akkarakittichoke, N.; Janwantanakul, P. Perceived musculoskeletal discomfort and its association with postural shifts during 4-h prolonged sitting in office workers. Appl. Ergon. 2020, 89, 103225.
5. Kim, T.; Chen, S.; Lach, J. Detecting and Preventing Forward Head Posture with Wireless Inertial Body Sensor Networks. In Proceedings of the 2011 International Conference on Body Sensor Networks, Dallas, TX, USA, 23–25 May 2011; pp. 125–126.
6. Estrada, J.E.; Vea, L.A. Real-time human sitting posture detection using mobile devices. In Proceedings of the 2016 IEEE Region 10 Symposium (TENSYMP), Bali, Indonesia, 9–11 May 2016; pp. 140–144.
7. Gupta, R.; Gupta, S.H.; Agarwal, A.; Choudhary, P.; Bansal, N.; Sen, S. A Wearable Multisensor Posture Detection System. In Proceedings of the 2020 4th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 13–15 May 2020; pp. 818–822.
8. Qian, Z.; Bowden, A.E.; Zhang, D.; Wan, J.; Liu, W.; Li, X.; Baradoy, D.; Fullwood, D.T. Inverse Piezoresistive Nanocomposite Sensors for Identifying Human Sitting Posture. Sensors 2018, 18, 1745.
9. Feng, L.; Li, Z.; Liu, C.; Chen, X.; Yin, X.; Fang, D. SitR: Sitting Posture Recognition Using RF Signals. IEEE Internet Things J. 2020, 7, 11492–11504.
10. Ma, S.; Cho, W.H.; Quan, C.H.; Lee, S. A sitting posture recognition system based on 3 axis accelerometer. In Proceedings of the 2016 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Chiang Mai, Thailand, 5–7 October 2016; pp. 1–3.
11. Chin, L.; Eu, K.S.; Tay, T.T.; Teoh, C.Y.; Yap, K.M. A Posture Recognition Model Dedicated for Differentiating between Proper and Improper Sitting Posture with Kinect Sensor. In Proceedings of the 2019 IEEE International Symposium on Haptic, Audio and Visual Environments and Games (HAVE), Subang Jaya, Malaysia, 3–4 October 2019; pp. 1–5.
12. Sun, H.; Zhu, G.a.; Cui, X.; Wang, J.X. Kinect-based intelligent monitoring and warning of students’ sitting posture. In Proceedings of the 2021 6th International Conference on Automation, Control and Robotics Engineering (CACRE), Dalian, China, 15–17 July 2021; pp. 338–342.
13. Ho, E.S.; Chan, J.C.; Chan, D.C.; Shum, H.P.; Cheung, Y.m.; Yuen, P.C. Improving posture classification accuracy for depth sensor-based human activity monitoring in smart environments. Comput. Vis. Image Underst. 2016, 148, 97–110.
14. Yao, L.; Min, W.; Cui, H. A New Kinect Approach to Judge Unhealthy Sitting Posture Based on Neck Angle and Torso Angle. In Image and Graphics: 9th International Conference, ICIG 2017, Shanghai, China, 13–15 September 2017; Springer International Publishing: Cham, Switzerland, 2017; pp. 340–350.
15. Min, W.; Cui, H.; Han, Q.; Zou, F. A Scene Recognition and Semantic Analysis Approach to Unhealthy Sitting Posture Detection during Screen-Reading. Sensors 2018, 18, 3119.
16. Paliyawan, P.; Nukoolkit, C.; Mongkolnam, P. Prolonged sitting detection for office workers syndrome prevention using kinect. In Proceedings of the 2014 11th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), Nakhon Ratchasima, Thailand, 14–17 May 2014; pp. 1–6.
17. Mu, L.; Li, K.; Wu, C. A sitting posture surveillance system based on image processing technology. In Proceedings of the 2010 2nd International Conference on Computer Engineering and Technology, Bali Island, Indonesia, 26–29 March 2010; Volume 1, pp. V1-692–V1-695.
18. Cho, H.; Choi, H.J.; Lee, C.E.; Sir, C.W. Sitting Posture Prediction and Correction System using Arduino-Based Chair and Deep Learning Model. In Proceedings of the 2019 IEEE 12th Conference on Service-Oriented Computing and Applications (SOCA), Kaohsiung, Taiwan, 18–21 November 2019; pp. 98–102.
19. Hu, Q.; Tang, X.; Tang, W. A Smart Chair Sitting Posture Recognition System Using Flex Sensors and FPGA Implemented Artificial Neural Network. IEEE Sens. J. 2020, 20, 8007–8016.
20. Xu, W.; Huang, M.C.; Amini, N.; He, L.; Sarrafzadeh, M. eCushion: A Textile Pressure Sensor Array Design and Calibration for Sitting Posture Analysis. IEEE Sens. J. 2013, 13, 3926–3934.
21. Wang, J.; Hafidh, B.; Dong, H.; Saddik, A.E. Sitting Posture Recognition Using a Spiking Neural Network. IEEE Sens. J. 2021, 21, 1779–1786.
22. Fan, Z.; Hu, X.; Chen, W.M.; Zhang, D.W.; Ma, X. A deep learning based 2-dimensional hip pressure signals analysis method for sitting posture recognition. Biomed. Signal Process. Control 2022, 73, 103432.
23. Wan, Q.; Zhao, H.; Li, J.; Xu, P. Hip Positioning and Sitting Posture Recognition Based on Human Sitting Pressure Image. Sensors 2021, 21, 426.
24. Ran, X.; Wang, C.; Xiao, Y.; Xuliang, G.; Zhu, Z.; Chen, B. A Portable Sitting Posture Monitoring System Based on A Pressure Sensor Array and Machine Learning. Sens. Actuators A Phys. 2021, 331, 112900.
25. Jeong, H.; Park, W. Developing and Evaluating a Mixed Sensor Smart Chair System for Real-Time Posture Classification: Combining Pressure and Distance Sensors. IEEE J. Biomed. Health Inform. 2021, 25, 1805–1813.
26. EOSLAB. A Sitting Posture Recognition System Based on Pressure Sensors. 2022. Available online: https://youtu.be/ICw2KKHE1Rc (accessed on 29 July 2022).
27. Abdullah, N.F.; Rashid, N.; Othman, K.A.; Musirin, I. Vehicles classification using Z-score and modelling neural network for forward scattering radar. In Proceedings of the 2014 15th International Radar Symposium (IRS), Gdansk, Poland, 16–18 June 2014; pp. 1–4.
28. Arenas-Garcia, J.; Perez-Cruz, F. Multi-class support vector machines: A new approach. In Proceedings of the 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP ’03), Hong Kong, China, 6–10 April 2003; Volume 2, pp. II-781.
29. Zhang, Z. Introduction to machine learning: K-nearest neighbors. Ann. Transl. Med. 2016, 4, 218.
30. Wu, K.; Zheng, Z.; Tang, S. BVDT: A Boosted Vector Decision Tree Algorithm for Multi-Class Classification Problems. Int. J. Pattern Recognit. Artif. Intell. 2017, 31, 1750016.
31. Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.
32. Wooff, D. Logistic Regression: A Self-learning Text. J. R. Stat. Soc. Ser. A (Stat. Soc.) 2004, 167, 192–194.
33. Brooke, J. SUS: A quick and dirty usability scale. In Usability Evaluation In Industry; CRC Press: Boca Raton, FL, USA, 1995; Volume 189.
34. Chin, J.; Diehl, V.; Norman, K. Development of an Instrument Measuring User Satisfaction of the Human-Computer Interface. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Washington, DC, USA, 15–19 May 1988.
35. Kett, A.R.; Sichting, F.; Milani, T.L. The Effect of Sitting Posture and Postural Activity on Low Back Muscle Stiffness. Biomechanics 2021, 1, 214–224.

| Reference | Sensor | Position of Sensor | Method | Accuracy Rate | Sitting Postures Recognized (IDs in Figure 1) |
|---|---|---|---|---|---|
| Cho et al. [18] 2019 | Pressure and ultrasonic sensors | Hip and back | LBCNet | 96% | 1, 2, 3, 4, 5, 8, 9 |
| Wang et al. [21] 2021 | Pressure sensor | Hip and back | SNN | 88.50% | 1, 2, 3, 4, 5, 6, 8, 9 |
| Hu et al. [19] 2020 | Pressure sensor | Hip, armrests and back | ANN | 97.70% | 2, 3, 4, 5, 8, 9 |
| Fan et al. [22] 2022 | Large pressure pad | Hip | CNN | 99.80% | 1, 2, 3, 4, 5 |
| Wan et al. [23] 2021 | Pressure pad | Hip | SVM | 89.60% | 2, 3, 4, 5 |
| Ran et al. [24] 2021 | Large pressure pad | Hip | RF | 96.20% | 1, 2, 3, 4, 5, 8, 9 |
| Jeong et al. [25] 2021 | Pressure and distance sensors | Hip and back | KNN | 92% | 1, 2, 3, 4, 5, 6 |
| SPRS (This work) | Pressure sensor | Hip | SVM | 99.18% | ALL |

| Sensor ID | Weight |
|---|---|
| 16 | 0.15 |
| 20 | 0.12 |
| 22 | 0.10 |
| 24 | 0.10 |
| 13 | 0.08 |
| 11 | 0.08 |
| 15 | 0.07 |
| 10 | 0.07 |
| 1 | 0.05 |
| 3 | 0.04 |
| 5 | 0.03 |
| 6 | 0.03 |
| 23 | 0.02 |
| 18 | 0.02 |
| 17 | 0.01 |
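The sensor weights are listed in descending order. As a quick arithmetic check on how concentrated they are, the sketch below accumulates them in that order. It uses only the values in the table; the source does not state at this point how the weights were derived, so the sketch makes no assumption about their origin.

```python
from itertools import accumulate

# Sensor IDs and weights exactly as listed in the table, in descending order of weight.
SENSOR_WEIGHTS = [
    (16, 0.15), (20, 0.12), (22, 0.10), (24, 0.10), (13, 0.08),
    (11, 0.08), (15, 0.07), (10, 0.07), (1, 0.05), (3, 0.04),
    (5, 0.03), (6, 0.03), (23, 0.02), (18, 0.02), (17, 0.01),
]


def cumulative_weights(pairs):
    """Return (sensor_id, running total of weight) for each sensor, in list order."""
    ids = [sid for sid, _ in pairs]
    totals = accumulate(w for _, w in pairs)
    # Round to two decimals to match the table's precision and hide float noise.
    return [(sid, round(t, 2)) for sid, t in zip(ids, totals)]


for rank, (sid, total) in enumerate(cumulative_weights(SENSOR_WEIGHTS), start=1):
    print(f"top {rank:2d} (adds sensor {sid:2d}): cumulative weight {total:.2f}")
```

With the listed values, the five highest-weighted sensors (16, 20, 22, 24 and 13) account for 0.55 of the weight, the top ten for 0.86, and all fifteen listed sensors for 0.97.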

| Kernel Function | Accuracy |
|---|---|
| Linear | 99.1% |
| Sigmoid | 98.5% |
| RBF | 98.4% |
| Polynomial | 99.0% |
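The kernel table compares four SVM kernel functions. For reference, the sketch below writes out their standard forms as parameterized in LIBSVM and scikit-learn (γ as `gamma`, r as `coef0`, d as `degree`). The default parameter values here are illustrative assumptions only; the source does not report which values were used.

```python
import math


def dot(x, y):
    """Inner product <x, y> of two equal-length feature vectors."""
    return sum(a * b for a, b in zip(x, y))


def linear_kernel(x, y):
    # K(x, y) = <x, y>
    return dot(x, y)


def polynomial_kernel(x, y, gamma=1.0, coef0=1.0, degree=3):
    # K(x, y) = (gamma * <x, y> + r) ** d
    return (gamma * dot(x, y) + coef0) ** degree


def rbf_kernel(x, y, gamma=1.0):
    # K(x, y) = exp(-gamma * ||x - y||^2)
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def sigmoid_kernel(x, y, gamma=1.0, coef0=0.0):
    # K(x, y) = tanh(gamma * <x, y> + r)
    return math.tanh(gamma * dot(x, y) + coef0)


x, y = [1.0, 2.0], [3.0, 4.0]
print(linear_kernel(x, y))                                    # 11.0
print(polynomial_kernel(x, y, gamma=1.0, coef0=1.0, degree=2))  # 144.0
print(rbf_kernel(x, y, gamma=0.5))
print(sigmoid_kernel(x, y, gamma=0.1))
```

The linear kernel has no free parameters, whereas the other three each require γ (and, for polynomial and sigmoid, r and d) to be tuned.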

| Algorithms | Parameter | Accuracy |
|---|---|---|
| SVM | Linear | 99.18% |
| KNN | K = 3 | 98.86% |
| Decision Tree | Max_depth = 10 | 97.83% |
| Random Forest | Max_depth = 7 | 98.41% |
| Logistic Regression | – | 98.19% |
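As an illustration of the pipeline suggested by Sections 3.4.1 and 3.4.3, the following minimal pure-Python sketch applies z-score standardization to pressure readings and then classifies with a K = 3 nearest-neighbor vote, matching the K in the table above. Euclidean distance, majority voting, and estimating the mean and standard deviation on training data only are assumptions; the sample readings and the labels `posture_a`/`posture_b` are hypothetical, not data from the study.

```python
import math
from collections import Counter
from statistics import mean, pstdev


def fit_zscore(rows):
    """Per-sensor mean and population standard deviation from training rows."""
    columns = list(zip(*rows))
    mus = [mean(col) for col in columns]
    # A constant sensor has zero spread; fall back to 1.0 to avoid dividing by zero.
    sds = [pstdev(col) or 1.0 for col in columns]
    return mus, sds


def zscore(row, mus, sds):
    """Standardize one reading: z = (x - mu) / sigma for each sensor."""
    return [(x - m) / s for x, m, s in zip(row, mus, sds)]


def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k training samples nearest to query (Euclidean)."""
    nearest = sorted(
        ((math.dist(x, query), y) for x, y in zip(train_x, train_y)),
        key=lambda pair: pair[0],
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Hypothetical 3-sensor readings and posture labels, for illustration only.
raw_train = [
    [500, 480, 510], [520, 470, 505], [510, 490, 500],  # posture_a
    [200, 700, 300], [210, 690, 320], [190, 710, 310],  # posture_b
]
labels = ["posture_a"] * 3 + ["posture_b"] * 3

mus, sds = fit_zscore(raw_train)
train_z = [zscore(r, mus, sds) for r in raw_train]
query_z = zscore([505, 485, 507], mus, sds)
print(knn_predict(train_z, labels, query_z, k=3))  # posture_a
```

Standardizing first keeps a sensor with a large raw pressure range from dominating the distance computation; the same stored `mus` and `sds` must be reused for every new reading at prediction time.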

| Algorithms | Cushion 1 | Cushion 2 | Cushion 3 | Cushion 4 |
|---|---|---|---|---|
| SVM | 96.03% | 99.18% | 99.23% | 99.23% |
| KNN | 95.65% | 98.86% | 98.91% | 98.91% |
| DT | 94.25% | 97.83% | 97.89% | 97.89% |
| RF | 95.42% | 98.41% | 98.64% | 98.64% |
| LR | 94.89% | 98.19% | 98.53% | 98.53% |
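To make the effect across cushion configurations explicit, the sketch below computes the percentage-point change in accuracy between consecutive cushions for each algorithm. It uses only the values in the table above and makes no assumption about how the cushions differ.

```python
# Accuracies (%) copied from the table; list positions are Cushion 1..4.
ACCURACY = {
    "SVM": [96.03, 99.18, 99.23, 99.23],
    "KNN": [95.65, 98.86, 98.91, 98.91],
    "DT": [94.25, 97.83, 97.89, 97.89],
    "RF": [95.42, 98.41, 98.64, 98.64],
    "LR": [94.89, 98.19, 98.53, 98.53],
}


def stepwise_gains(values):
    """Percentage-point change between consecutive cushion configurations."""
    # Round to two decimals to match the table and suppress float noise.
    return [round(b - a, 2) for a, b in zip(values, values[1:])]


for name, acc in ACCURACY.items():
    print(name, stepwise_gains(acc))
```

Every algorithm gains roughly 3.0 to 3.6 percentage points from Cushion 1 to Cushion 2 (SVM 3.15, KNN 3.21, DT 3.58, RF 2.99, LR 3.30), at most 0.34 points from Cushion 2 to Cushion 3, and nothing from Cushion 3 to Cushion 4.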

| Question | Average | Standard Deviation | Likert 1 (%) | Likert 2 (%) | Likert 3 (%) | Likert 4 (%) | Likert 5 (%) |
|---|---|---|---|---|---|---|---|
| Q1 | 4.44 | 0.73 | 0.0 | 0.0 | 15.0 | 30.0 | 55.0 |
| Q2 | 1.22 | 0.44 | 80.0 | 20.0 | 0.0 | 0.0 | 0.0 |
| Q3 | 4.44 | 0.73 | 0.0 | 0.0 | 5.0 | 40.0 | 55.0 |
| Q4 | 1.67 | 0.71 | 45.0 | 40.0 | 15.0 | 0.0 | 0.0 |
| Q5 | 4.78 | 0.44 | 0.0 | 0.0 | 5.0 | 30.0 | 65.0 |
| Q6 | 1.44 | 0.53 | 40.0 | 55.0 | 5.0 | 0.0 | 0.0 |
| Q7 | 4.56 | 0.53 | 0.0 | 0.0 | 0.0 | 45.0 | 55.0 |
| Q8 | 1.44 | 0.53 | 50.0 | 45.0 | 5.0 | 0.0 | 0.0 |
| Q9 | 4.22 | 0.83 | 0.0 | 0.0 | 10.0 | 40.0 | 50.0 |
| Q10 | 1.78 | 0.67 | 35.0 | 40.0 | 20.0 | 5.0 | 0.0 |
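The ten questions above follow the System Usability Scale (SUS) of Brooke [33], whose standard scoring maps the ten 1–5 responses to a single 0–100 score: odd-numbered (positively worded) items contribute score − 1, even-numbered (negatively worded) items contribute 5 − score, and the sum is multiplied by 2.5. The sketch below applies this rule to the Average column. Because the scoring is linear, applying it to item averages gives the same value as averaging individual respondents' SUS scores, provided all item averages are computed over the same respondents.

```python
# Item averages from the "Average" column of the SUS table (Q1..Q10).
SUS_ITEM_MEANS = [4.44, 1.22, 4.44, 1.67, 4.78, 1.44, 4.56, 1.44, 4.22, 1.78]


def sus_score(item_scores):
    """Standard SUS scoring: odd items contribute (score - 1), even items
    contribute (5 - score); the sum (0-40) is scaled by 2.5 to 0-100."""
    if len(item_scores) != 10:
        raise ValueError("SUS has exactly 10 items")
    total = 0.0
    for i, score in enumerate(item_scores, start=1):
        total += (score - 1) if i % 2 == 1 else (5 - score)
    return total * 2.5


print(f"{sus_score(SUS_ITEM_MEANS):.1f}")  # 87.2
```

With the reported item averages, the contributions sum to 34.89, which scales to a SUS score of about 87.2.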

| Aspects | Question | Average | Average Score of Questions | Standard Deviation | Point 0 (%) | Point 1 (%) | Point 2 (%) | Point 3 (%) | Point 4 (%) | Point 5 (%) | Point 6 (%) | Point 7 (%) | Point 8 (%) | Point 9 (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Overall reactions to the software | Q1 | 7.75 | 7.53 | 0.91 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 10.0 | 25.0 | 45.0 | 20.0 |
| | Q2 | 7.45 | | 1.93 | 0.0 | 5.0 | 0.0 | 0.0 | 0.0 | 5.0 | 10.0 | 20.0 | 25.0 | 35.0 |
| | Q3 | 7.10 | | 1.48 | 0.0 | 0.0 | 5.0 | 0.0 | 0.0 | 0.0 | 20.0 | 25.0 | 45.0 | 5.0 |
| | Q4 | 7.75 | | 1.37 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 0.0 | 10.0 | 25.0 | 20.0 | 40.0 |
| | Q5 | 7.60 | | 1.23 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 15.0 | 25.0 | 25.0 | 30.0 |
| Screen | Q6 | 8.15 | 7.76 | 1.04 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 10.0 | 15.0 | 25.0 | 50.0 |
| | Q7 | 7.70 | | 0.98 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 15.0 | 20.0 | 45.0 | 20.0 |
| | Q8 | 7.45 | | 1.05 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 15.0 | 20.0 | 50.0 | 10.0 |
| | Q9 | 7.65 | | 0.93 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 10.0 | 35.0 | 35.0 | 20.0 |
| Terminology and system information | Q10 | 7.25 | 7.73 | 1.21 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 25.0 | 30.0 | 20.0 | 20.0 |
| | Q11 | 7.75 | | 1.12 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 5.0 | 30.0 | 30.0 | 30.0 |
| | Q12 | 8.20 | | 0.89 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 15.0 | 35.0 | 45.0 |
| | Q13 | 7.80 | | 1.06 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 10.0 | 35.0 | 20.0 | 35.0 |
| | Q14 | 8.05 | | 1.19 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 10.0 | 5.0 | 35.0 | 45.0 |
| | Q15 | 7.35 | | 1.57 | 0.0 | 0.0 | 0.0 | 5.0 | 0.0 | 5.0 | 15.0 | 20.0 | 30.0 | 25.0 |
| Learning | Q16 | 8.05 | 7.77 | 1.1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 0.0 | 25.0 | 25.0 | 45.0 |
| | Q17 | 7.45 | | 1.23 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 10.0 | 10.0 | 25.0 | 35.0 | 20.0 |
| | Q18 | 7.65 | | 1.04 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 15.0 | 30.0 | 30.0 | 25.0 |
| | Q19 | 7.80 | | 1.01 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 10.0 | 30.0 | 30.0 | 30.0 |
| | Q20 | 7.95 | | 1.1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 10.0 | 30.0 | 15.0 | 45.0 |
| | Q21 | 7.70 | | 0.98 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 15.0 | 20.0 | 45.0 | 20.0 |
| System capabilities | Q22 | 7.50 | 7.53 | 0.95 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 15.0 | 35.0 | 35.0 | 15.0 |
| | Q23 | 7.85 | | 1.14 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 5.0 | 25.0 | 30.0 | 35.0 |
| | Q24 | 7.40 | | 1.19 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 20.0 | 25.0 | 30.0 | 20.0 |
| | Q25 | 7.40 | | 1.6 | 0.0 | 0.0 | 0.0 | 5.0 | 0.0 | 0.0 | 25.0 | 20.0 | 15.0 | 35.0 |
| Usability and user interface | Q26 | 7.55 | 7.76 | 1.36 | 0.0 | 0.0 | 0.0 | 5.0 | 0.0 | 0.0 | 5.0 | 30.0 | 40.0 | 20.0 |
| | Q27 | 7.75 | | 0.91 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 15.0 | 10.0 | 60.0 | 15.0 |
| | Q28 | 7.85 | | 1.23 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 10.0 | 20.0 | 25.0 | 40.0 |
| | Q29 | 7.70 | | 0.98 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 0.0 | 35.0 | 40.0 | 20.0 |
| | Q30 | 7.95 | | 1.19 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 10.0 | 10.0 | 35.0 | 40.0 |
| ALL | | 7.68 | | 1.17 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 3.0 | 12.0 | 24.0 | 32.0 | 28.0 |
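The questionnaire above groups its 30 questions (after Chin et al. [34]) into six aspects, each with an aspect-level score in the "Average Score of Questions" column. Assuming that column is the unweighted mean of the per-question averages within each aspect (the source does not state this explicitly), it can be recomputed from the table as sketched below. Under that assumption, recomputed values may differ from the reported ones in the second decimal place.

```python
from statistics import mean

# Per-question averages from the questionnaire table, grouped by aspect (Q1..Q30).
QUIS_AVERAGES = {
    "Overall reactions to the software": [7.75, 7.45, 7.10, 7.75, 7.60],
    "Screen": [8.15, 7.70, 7.45, 7.65],
    "Terminology and system information": [7.25, 7.75, 8.20, 7.80, 8.05, 7.35],
    "Learning": [8.05, 7.45, 7.65, 7.80, 7.95, 7.70],
    "System capabilities": [7.50, 7.85, 7.40, 7.40],
    "Usability and user interface": [7.55, 7.75, 7.85, 7.70, 7.95],
}


def aspect_means(groups):
    """Unweighted mean of the question averages within each aspect."""
    return {aspect: mean(values) for aspect, values in groups.items()}


def overall_mean(groups):
    """Unweighted mean over all questions in all aspects."""
    return mean(v for values in groups.values() for v in values)


for aspect, m in aspect_means(QUIS_AVERAGES).items():
    print(f"{aspect}: {m:.2f}")
print(f"ALL: {overall_mean(QUIS_AVERAGES):.3f}")
```

The overall mean of the 30 question averages is 7.685, consistent with the 7.68 reported in the ALL row.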

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tsai, M.-C.; Chu, E.T.-H.; Lee, C.-R. An Automated Sitting Posture Recognition System Utilizing Pressure Sensors. Sensors 2023, 23, 5894. https://doi.org/10.3390/s23135894
