Challenges in Developing a Real-Time Bee-Counting Radar

Abstract

1. Introduction

2. Materials and Methods

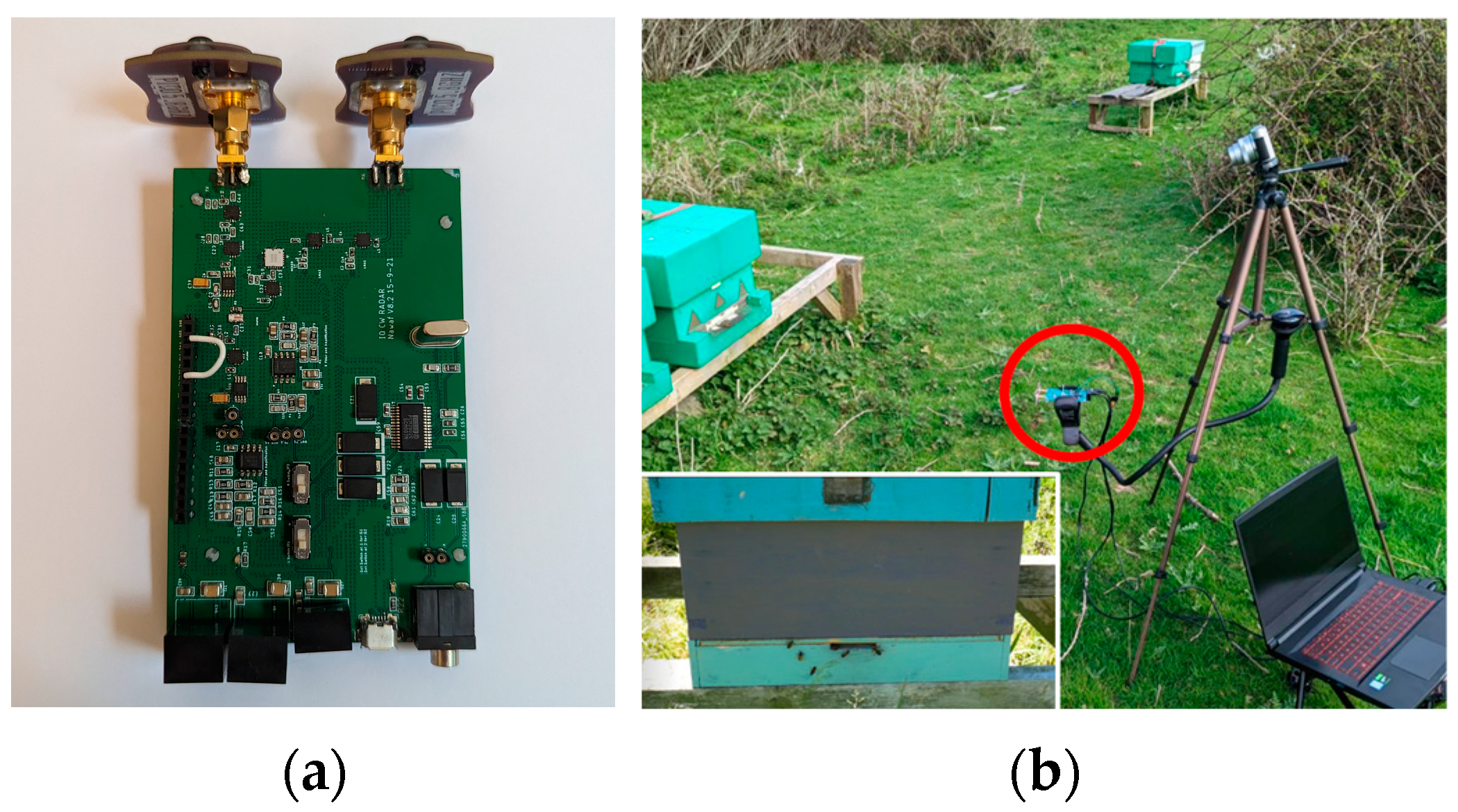

2.1. Radar Receiver and Modelling Approach

2.2. The Processing Equipment

- It removed the need to modify the hive; avoiding such modification is advisable given that the system may be used on wild bees.

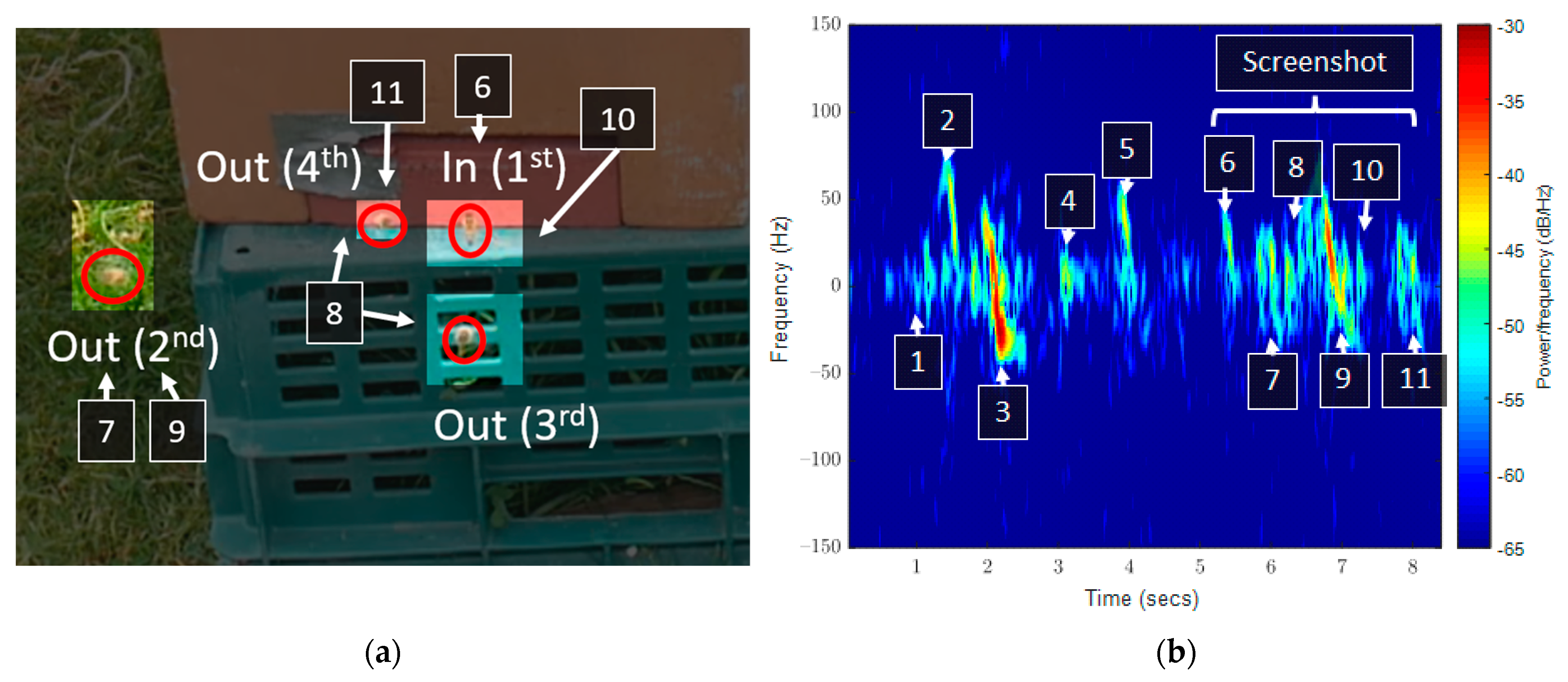

- Bees crawl at the entrance and may cover either antenna, as in Figure 2.

- The antennas have a radiation pattern that may cause flights to be lost from the detection cone if, for example, bees walk to the edge of the hive before takeoff.

- While offering some protection against hovering bees, a surface-mounted radar may still be obscured, albeit less frequently.
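Losing flights from the detection cone is a geometric effect. The minimal sketch below treats the beam as an ideal cone and tests whether a takeoff point falls inside it. The 30-degree half-angle, the antenna placement, and the takeoff coordinates are all hypothetical. Real radiation patterns roll off gradually and have side lobes, so a hard cone boundary is only a first approximation.

```python
import math


def in_detection_cone(point, apex, axis, half_angle_deg):
    """Return True if `point` lies inside an idealised conical beam.

    The cone has its apex at the antenna and points along `axis` (which
    need not be unit length). This is a geometric simplification of a
    real radiation pattern.
    """
    v = [p - a for p, a in zip(point, apex)]
    v_norm = math.sqrt(sum(c * c for c in v))
    if v_norm == 0.0:
        return True  # a point at the apex is trivially inside
    a_norm = math.sqrt(sum(c * c for c in axis))
    cos_angle = sum(vc * ac for vc, ac in zip(v, axis)) / (v_norm * a_norm)
    return cos_angle >= math.cos(math.radians(half_angle_deg))


# Hypothetical geometry in metres: antenna at the origin looking along +x.
ANTENNA = (0.0, 0.0, 0.0)
BORESIGHT = (1.0, 0.0, 0.0)
takeoff_centre = (0.20, 0.00, 0.05)  # about 14 degrees off boresight
takeoff_edge = (0.10, 0.15, 0.00)    # about 56 degrees off boresight

print(in_detection_cone(takeoff_centre, ANTENNA, BORESIGHT, 30.0))  # True
print(in_detection_cone(takeoff_edge, ANTENNA, BORESIGHT, 30.0))    # False
```

Under these assumptions, a bee that walks towards the edge of the hive before taking off can start its flight outside the beam, even though it is close to the radar.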

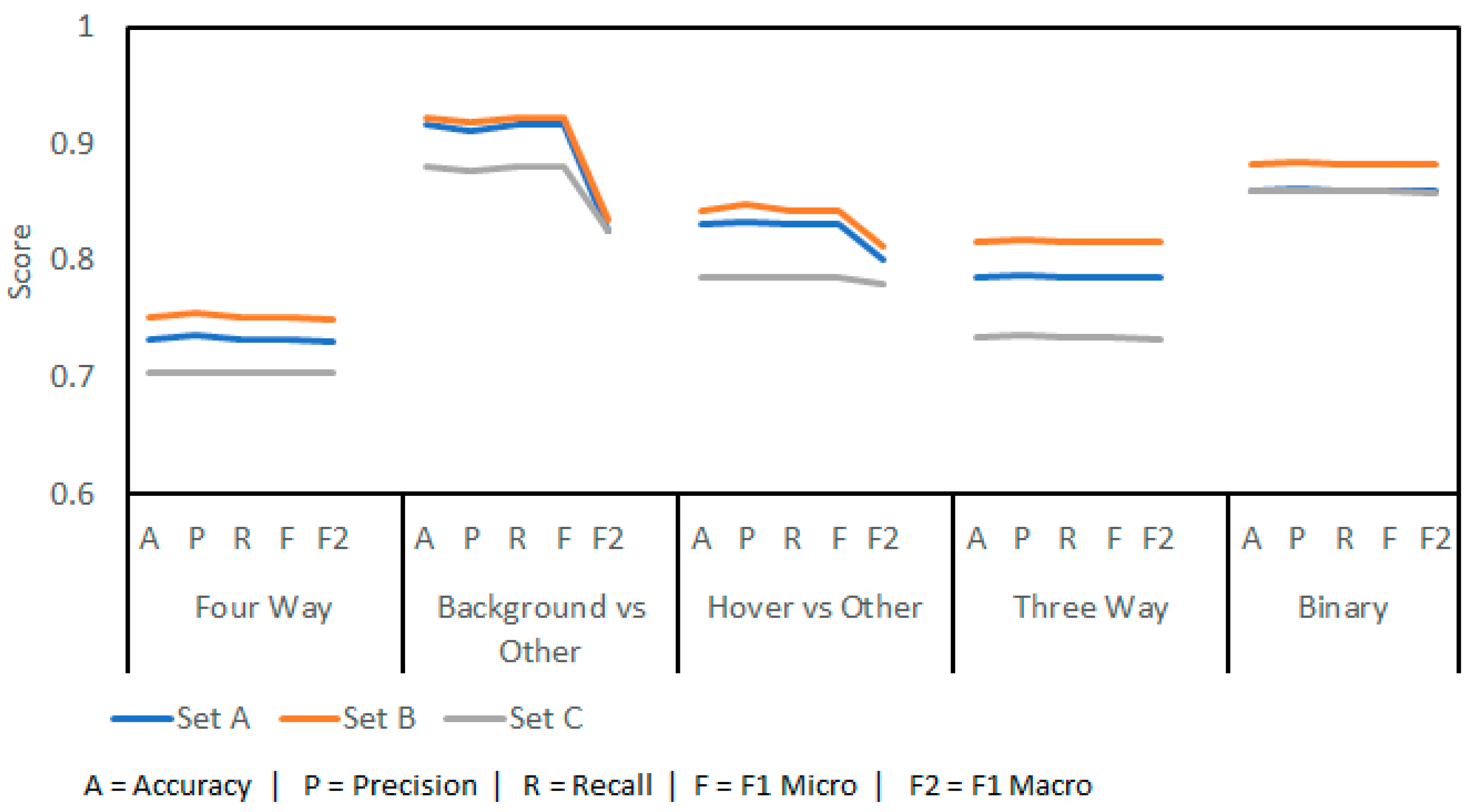

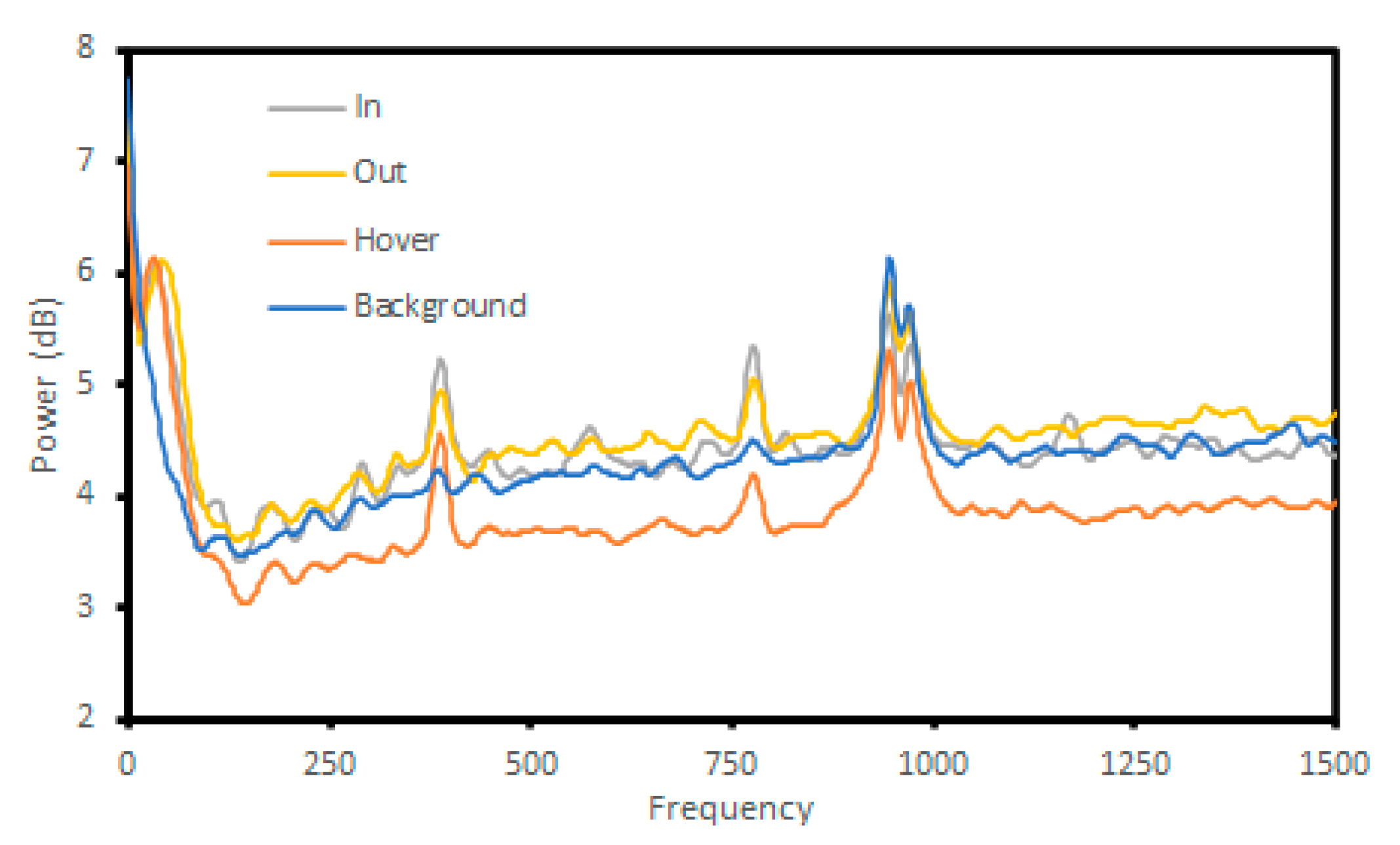

- Four-way classification (background, hover, in, and out).

- Background samples versus all others.

- Hover samples versus in and out.

- Three-way classification (hover, in, and out).

- Binary classification (in and out).
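Each scheme listed above can be viewed as a relabelling of a single four-class dataset. The minimal sketch below derives each task's labels from the four-way labels. The label strings, the scheme names, and the choice to drop samples that fall outside a task's classes (rather than merging them) are assumptions made for illustration.

```python
FOUR_WAY = ("background", "hover", "in", "out")  # hypothetical label strings
SCHEMES = ("four_way", "background_vs_rest", "hover_vs_flight",
           "three_way", "binary")


def relabel(labels, scheme):
    """Derive one classification task from four-way labels.

    Returns (index, new_label) pairs. Samples outside the task's classes
    (e.g. background samples in the three-way task) are dropped.
    """
    if scheme not in SCHEMES:
        raise ValueError(f"unknown scheme: {scheme!r}")
    pairs = []
    for i, lab in enumerate(labels):
        if lab not in FOUR_WAY:
            raise ValueError(f"unexpected label: {lab!r}")
        if scheme == "four_way":
            pairs.append((i, lab))
        elif scheme == "background_vs_rest":
            pairs.append((i, "background" if lab == "background" else "other"))
        elif scheme == "hover_vs_flight":  # hover versus in and out
            if lab != "background":
                pairs.append((i, "hover" if lab == "hover" else "flight"))
        elif scheme == "three_way":  # hover, in, and out
            if lab != "background":
                pairs.append((i, lab))
        elif lab in ("in", "out"):  # binary: in and out only
            pairs.append((i, lab))
    return pairs


labels = ["background", "hover", "in", "out", "in"]
print(relabel(labels, "hover_vs_flight"))
# [(1, 'hover'), (2, 'flight'), (3, 'flight'), (4, 'flight')]
print(relabel(labels, "binary"))
# [(2, 'in'), (3, 'out'), (4, 'in')]
```

Keeping the index alongside each new label makes it easy to chain the binary tasks into a cascade (background versus rest, then hover versus in/out, then in versus out), if that is how the schemes are meant to be combined.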

3. Results

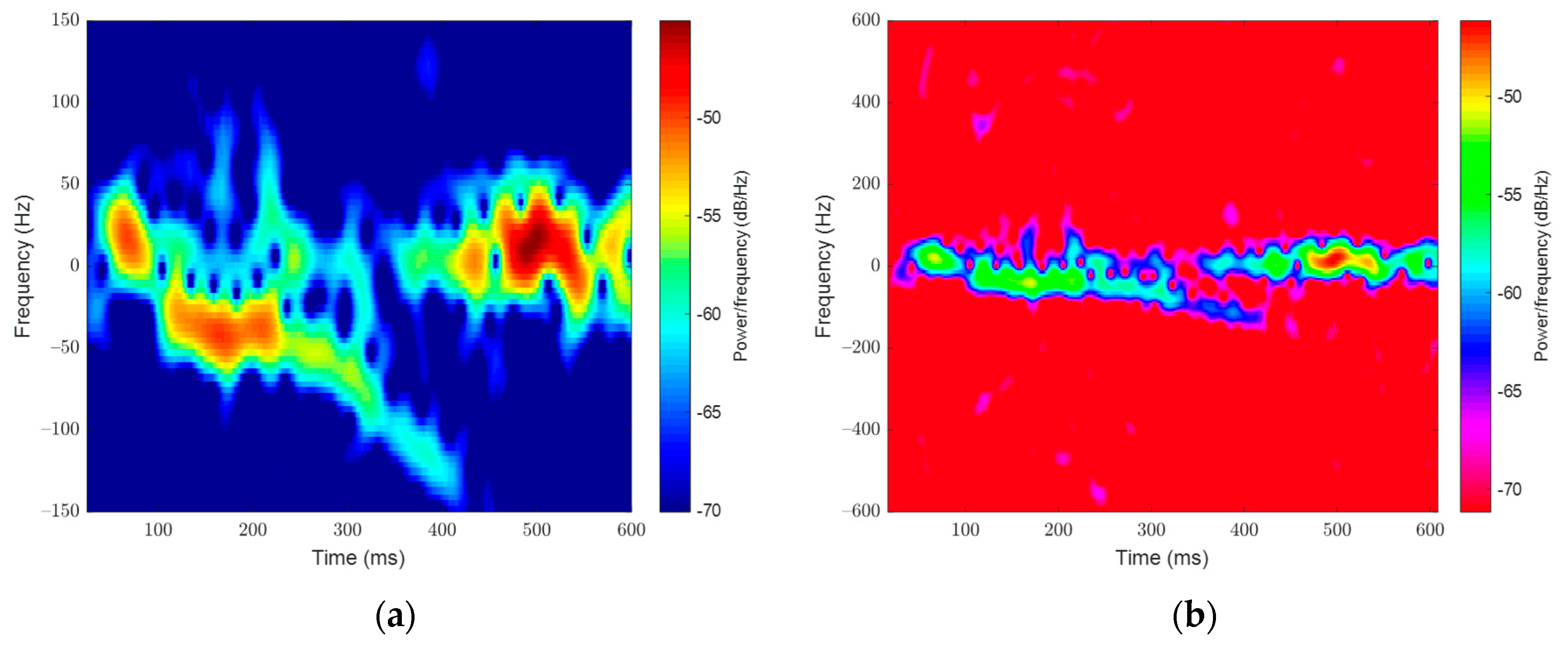

3.1. Preliminary Results

- Set A: the single-channel, manually gathered Doppler data from the radar.

- Set B: the dual-channel, manually gathered IQ data from the radar.

- Set C: the complete dual-channel IQ dataset, including both the manual set and the full recording breakdown dataset.
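Sets B and C differ from Set A because they carry both in-phase (I) and quadrature (Q) channels. The minimal sketch below shows why this matters for in/out counting. When I and Q are combined into a complex signal, an approaching target and a receding target produce Doppler peaks of opposite sign. A single real channel produces a symmetric spectrum, so only the magnitude of the shift can be recovered. Several details are illustrative assumptions: the 200 Hz shift, the pure-sinusoid echo, and the convention that an approaching target gives a positive shift. The actual sign depends on how the receiver's mixer outputs are wired.

```python
import numpy as np

FS = 3500.0  # sample rate (Hz), one of the rates used in Section 3.3
F_D = 200.0  # hypothetical Doppler shift (Hz) of a flying bee
N = 3500     # one second of samples, giving 1 Hz FFT bins


def dominant_freq(x, fs):
    """Signed frequency (Hz) of the strongest FFT bin."""
    spectrum = np.fft.fftshift(np.fft.fft(x))
    freqs = np.fft.fftshift(np.fft.fftfreq(len(x), d=1.0 / fs))
    return freqs[np.argmax(np.abs(spectrum))]


def simulate(direction):
    """direction = +1 or -1: assumed sign of the Doppler shift."""
    t = np.arange(N) / FS
    i_ch = np.cos(2 * np.pi * direction * F_D * t)
    q_ch = np.sin(2 * np.pi * direction * F_D * t)
    iq_peak = dominant_freq(i_ch + 1j * q_ch, FS)  # dual channel (complex)
    real_peak = dominant_freq(i_ch, FS)            # single channel (real)
    return iq_peak, real_peak


for d in (+1, -1):
    iq_peak, real_peak = simulate(d)
    # The real spectrum is symmetric, so only |real_peak| is meaningful.
    print(f"direction {d:+d}: IQ peak {iq_peak:.1f} Hz, "
          f"single-channel |peak| {abs(real_peak):.1f} Hz")
```

The IQ peak flips sign with the direction of motion, while the single-channel peak magnitude is the same in both cases. This suggests why direction-dependent classes such as "in" and "out" could be harder to separate using Set A alone.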

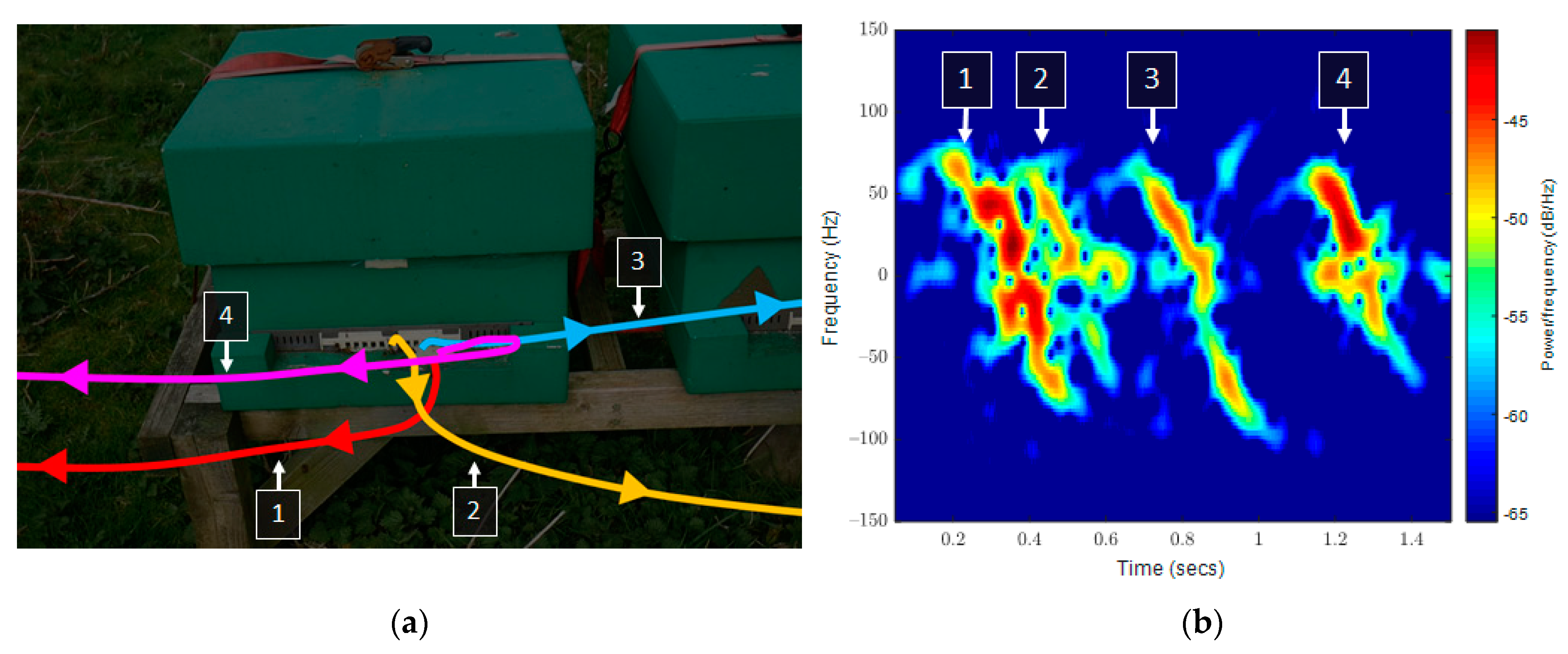

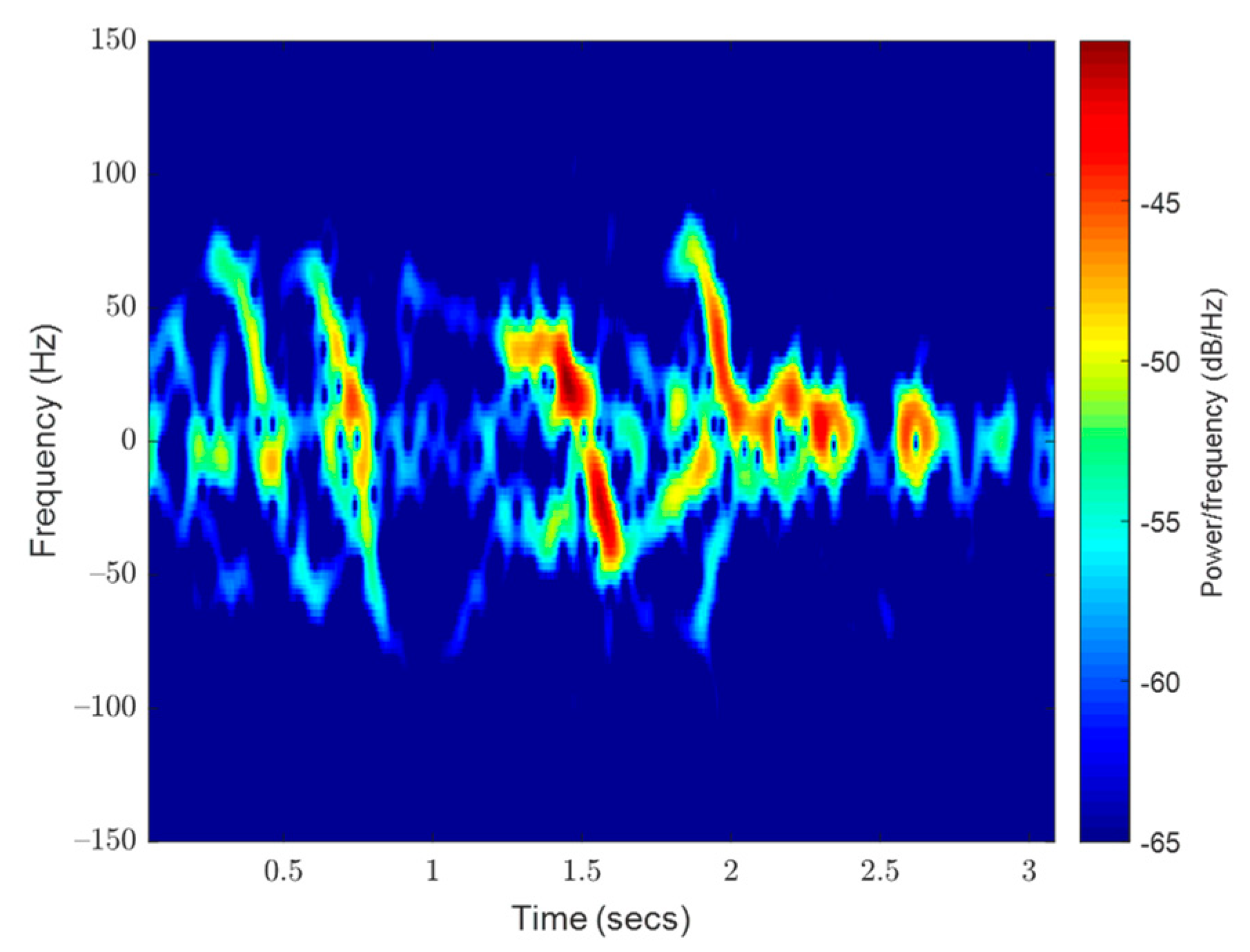

3.2. Exploring the Weaker Results

- Takeoff for a single bee.

- Flight of the first bee to the right of and behind the radar.

- A hovering bee emerges from under the radar and flies off-screen to the left.

- Vertical takeoff of two bees; one does not approach the radar.

- The second of the two bees loops, increasing speed, and exits the frame.

- The inward bee from the screenshot appears.

- The first of the three bees in the screenshot takes off.

- Two more bees take off after the first.

- Closest approach of the exiting bees.

- Inward bee enters the hive.

- The last view of the exiting bees, flying away from the radar to both the left and the right.

3.3. Testing Stage

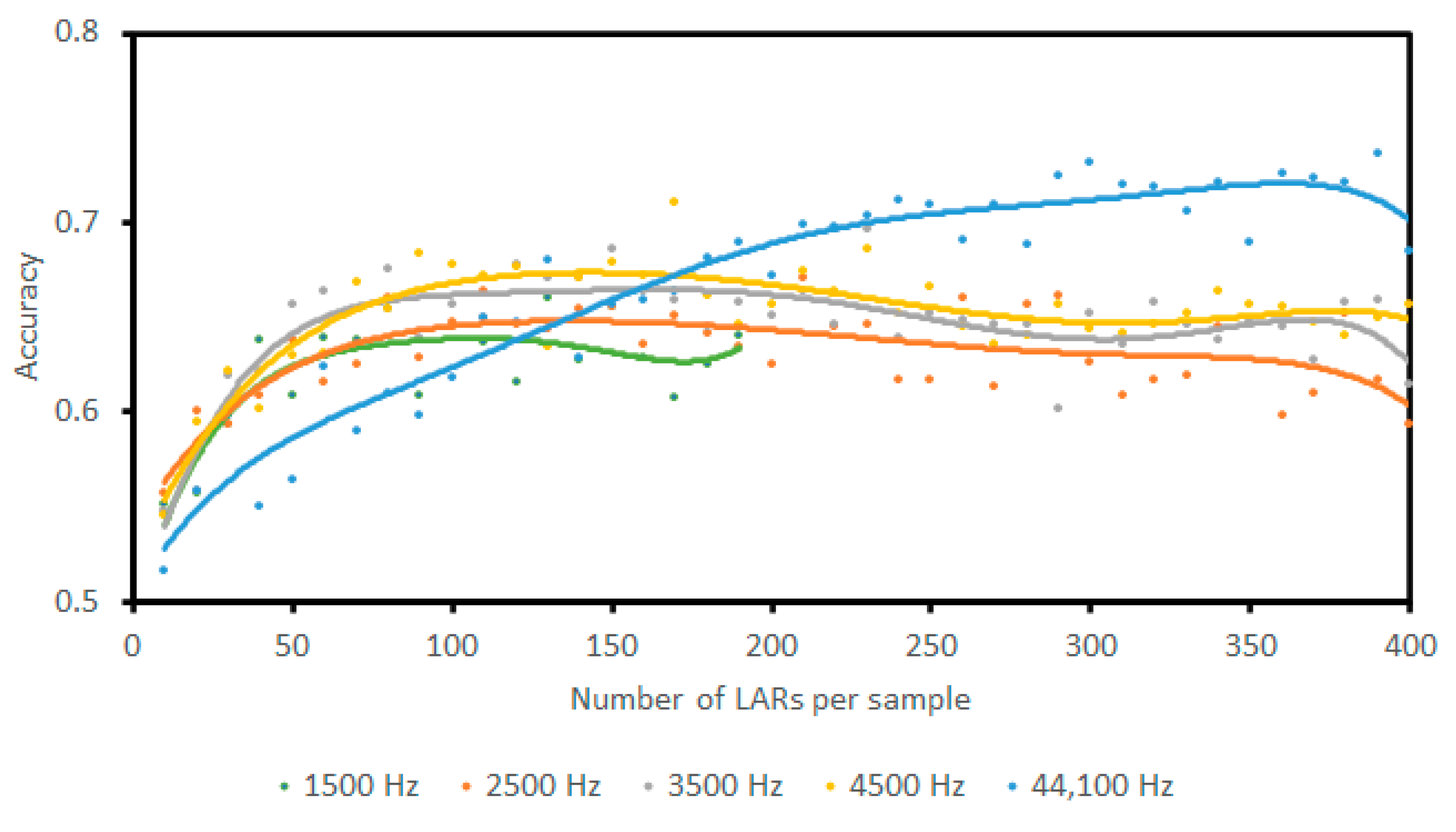

- Set A: the complete training dataset was used, sampled at 44.1 kHz with 240 LARs.

- Set B: the complete training dataset was used, sampled at 3.5 kHz with 100 LARs.

- Set C: the smaller, manually extracted dataset with higher training accuracy was used, sampled at 44.1 kHz with 240 LARs.

- Set D: the smaller, manually extracted dataset with higher training accuracy was used, sampled at 3.5 kHz with 100 LARs.
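The continuous-wave Doppler relation f_d = 2 v f_c / c puts the two sampling rates in context. The sketch below assumes a 5.8 GHz carrier, which is not stated in this section; substitute the radar's actual operating frequency. For each rate, it reports the largest radial speed whose Doppler shift stays below the Nyquist frequency fs/2. It also reports the shift produced by an illustrative 5 m/s target.

```python
C = 299_792_458.0   # speed of light (m/s)
CARRIER_HZ = 5.8e9  # ASSUMED carrier frequency; not given in this section


def doppler_shift_hz(radial_speed_mps, carrier_hz=CARRIER_HZ):
    """CW radar Doppler shift f_d = 2 v f_c / c."""
    return 2.0 * radial_speed_mps * carrier_hz / C


def max_unambiguous_speed_mps(sample_rate_hz, carrier_hz=CARRIER_HZ):
    """Radial speed whose Doppler shift equals the Nyquist frequency fs / 2."""
    return (sample_rate_hz / 2.0) * C / (2.0 * carrier_hz)


for fs in (44_100.0, 3_500.0):
    print(f"fs = {fs / 1e3:.1f} kHz: |v| up to "
          f"{max_unambiguous_speed_mps(fs):.1f} m/s")
print(f"5 m/s target: f_d = {doppler_shift_hz(5.0):.1f} Hz")
```

Under this carrier assumption, even 3.5 kHz leaves an unambiguous range of roughly 45 m/s, far above plausible bee body speeds. The lower rate therefore mainly reduces the data volume per window. The sketch does not address whether the lower rate discards useful micro-Doppler content, such as returns from wing motion.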

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sub-Window Size | Encoding Limit | Total Number of Features per Channel | Time Required |
|---|---|---|---|
| 40 ms | 76 | 760 | 350 ms |
| 50 ms | 84 | 672 | 348 ms |
| 80 ms | 96 | 480 | 349 ms |
| 200 ms | 110 | 220 | 352 ms |
| 400 ms (full window) | 240 | 240 | 351 ms |
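Every row of the table satisfies total features = (400 ms / sub-window size) × encoding limit. For example, ten 40 ms sub-windows × 76 = 760. The sketch below reproduces that bookkeeping under two assumptions that this section does not confirm. First, it treats the encoding limit as the number of log area ratios (LARs) computed per sub-window. Second, it computes LARs in the standard way: reflection coefficients k_i come from a Levinson-Durbin recursion on the frame's autocorrelation, and each LAR is g_i = log((1 + k_i)/(1 - k_i)). Some texts use the reciprocal ratio, which only flips the sign. The sketch uses white noise at 44.1 kHz as a stand-in for radar data and is not the authors' implementation.

```python
import numpy as np


def reflection_coeffs(frame, order):
    """Reflection (PARCOR) coefficients k_1..k_order via Levinson-Durbin."""
    x = np.asarray(frame, dtype=float)
    n = len(x)
    # Biased autocorrelation r[0..order]; keeps the recursion stable.
    r = np.array([np.dot(x[: n - lag], x[lag:]) for lag in range(order + 1)])
    a = np.zeros(order + 1)  # predictor polynomial 1 + a1 z^-1 + ...
    a[0] = 1.0
    err = r[0]
    k = np.zeros(order)
    for i in range(1, order + 1):
        acc = r[i] + np.dot(a[1:i], r[i - 1:0:-1])
        k_i = -acc / err
        k[i - 1] = k_i
        a_prev = a.copy()
        a[1:i] = a_prev[1:i] + k_i * a_prev[i - 1:0:-1]
        a[i] = k_i
        err *= 1.0 - k_i * k_i  # prediction-error power shrinks each step
    return k


def log_area_ratios(k, eps=1e-6):
    """LAR g_i = log((1 + k_i) / (1 - k_i)), clipped away from |k| = 1."""
    k = np.clip(k, -1.0 + eps, 1.0 - eps)
    return np.log((1.0 + k) / (1.0 - k))


def lar_features(window, fs, sub_ms, order):
    """Concatenate `order` LARs from each `sub_ms` sub-window of `window`."""
    sub_len = int(round(fs * sub_ms / 1000.0))
    n_sub = len(window) // sub_len
    feats = []
    for s in range(n_sub):
        frame = window[s * sub_len:(s + 1) * sub_len]
        feats.append(log_area_ratios(reflection_coeffs(frame, order)))
    return np.concatenate(feats)


FS = 44_100  # Hz
window = np.random.default_rng(0).standard_normal(FS * 400 // 1000)  # 400 ms
ROWS = [(40, 76), (50, 84), (80, 96), (200, 110), (400, 240)]  # from table
for sub_ms, limit in ROWS:
    print(sub_ms, "ms ->", lar_features(window, FS, sub_ms, limit).size)
```

Shorter sub-windows trade spectral detail per frame for finer time resolution. The feature count also grows quickly, which may help explain why the encoding limit is lowered as the sub-window shrinks.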

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Williams, S.M.; Aldabashi, N.; Cross, P.; Palego, C. Challenges in Developing a Real-Time Bee-Counting Radar. Sensors 2023, 23, 5250. https://doi.org/10.3390/s23115250
