Application of Multitemporal Change Detection in Radar Satellite Imagery Using REACTIV-Based Method for Geospatial Intelligence

Abstract

1. Introduction

1.1. Related Works—Change Detection

- Change from one class of land cover into another;

- Change of shape: spreading, shrinkage, or transformation;

- Change of position;

- Division or merging of neighbouring areas.

- Short-term change;

- Repeatable (seasonal) change;

- Directional change (e.g., urban development);

- Multidirectional change (e.g., simultaneous deforestation and reclamation);

- Sudden events (e.g., a natural disaster).

1.2. Research Purpose

- How can a single image show the changes that occur across a long SAR image time series?

- How can such a visualisation be used effectively in the intelligence process to support situational awareness, in particular for reconnaissance of air base infrastructure and equipment?

2. Materials and Methods

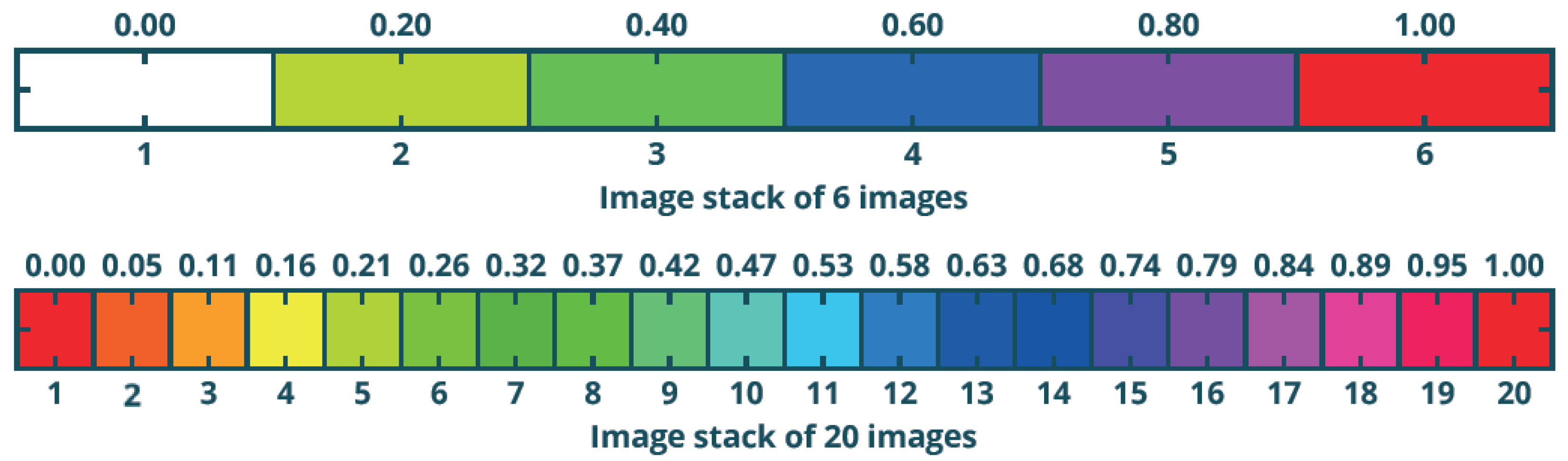

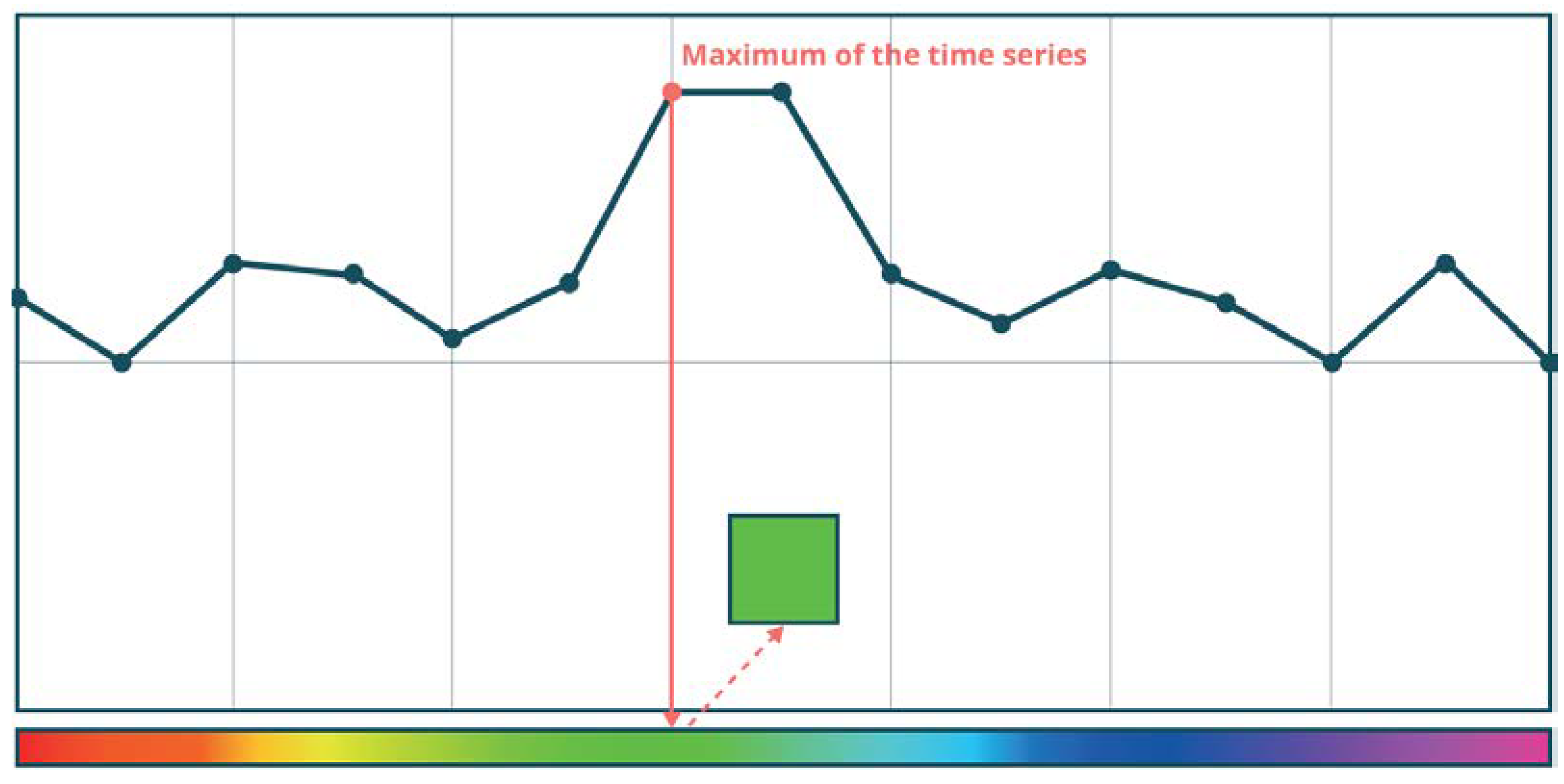

2.1. A Hue Component: The Temporal Dimension

2.2. Saturation Component: Change in Intensity

2.3. Value Component: Intensity of SAR Signal
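
As a rough illustration of how the three components in Sections 2.1 to 2.3 combine, the sketch below colours a single pixel from its amplitude time series. It assumes the commonly described REACTIV formulation: hue from the acquisition time of the maximum amplitude, saturation from the temporal coefficient of variation, and value from the maximum amplitude. The function name `reactiv_pixel`, the thresholds `cv_low` and `cv_high`, the `hue_span` of 0.9 and the normalisation by `value_max` are illustrative assumptions, not the authors' parameters.

```python
import colorsys
from statistics import mean, pstdev


def clip01(x):
    """Clamp a number to the interval [0, 1]."""
    return min(max(x, 0.0), 1.0)


def reactiv_pixel(amplitudes, cv_low=0.2, cv_high=1.0, value_max=1.0, hue_span=0.9):
    """Map one pixel's SAR amplitude time series to an (r, g, b) triple.

    All default parameters are hypothetical values chosen for illustration.
    """
    n = len(amplitudes)
    if n < 2:
        raise ValueError("need at least two acquisitions")

    peak = max(amplitudes)

    # Hue: relative position in time of the strongest return.
    # hue_span < 1 keeps the first and last dates from sharing the same red.
    hue = hue_span * amplitudes.index(peak) / (n - 1)

    # Saturation: temporal coefficient of variation, linearly rescaled
    # between two assumed thresholds and clipped to [0, 1].
    mu = mean(amplitudes)
    cv = pstdev(amplitudes) / mu if mu > 0 else 0.0
    saturation = clip01((cv - cv_low) / (cv_high - cv_low))

    # Value: maximum amplitude, normalised by an assumed upper bound.
    value = clip01(peak / value_max)

    return colorsys.hsv_to_rgb(hue, saturation, value)


if __name__ == "__main__":
    stable = [0.5, 0.5, 0.5, 0.5, 0.5]      # no change over time
    late_event = [0.1, 0.1, 0.1, 0.1, 0.9]  # strong return on the last date
    print(reactiv_pixel(stable))
    print(reactiv_pixel(late_event))
```

Under these assumptions a temporally stable pixel has zero saturation and renders as grey, while a pixel with one strong, isolated return is fully saturated and takes the colour of the date on which that return occurred.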

2.4. Sentinel-1 Data

2.5. GEE Platform

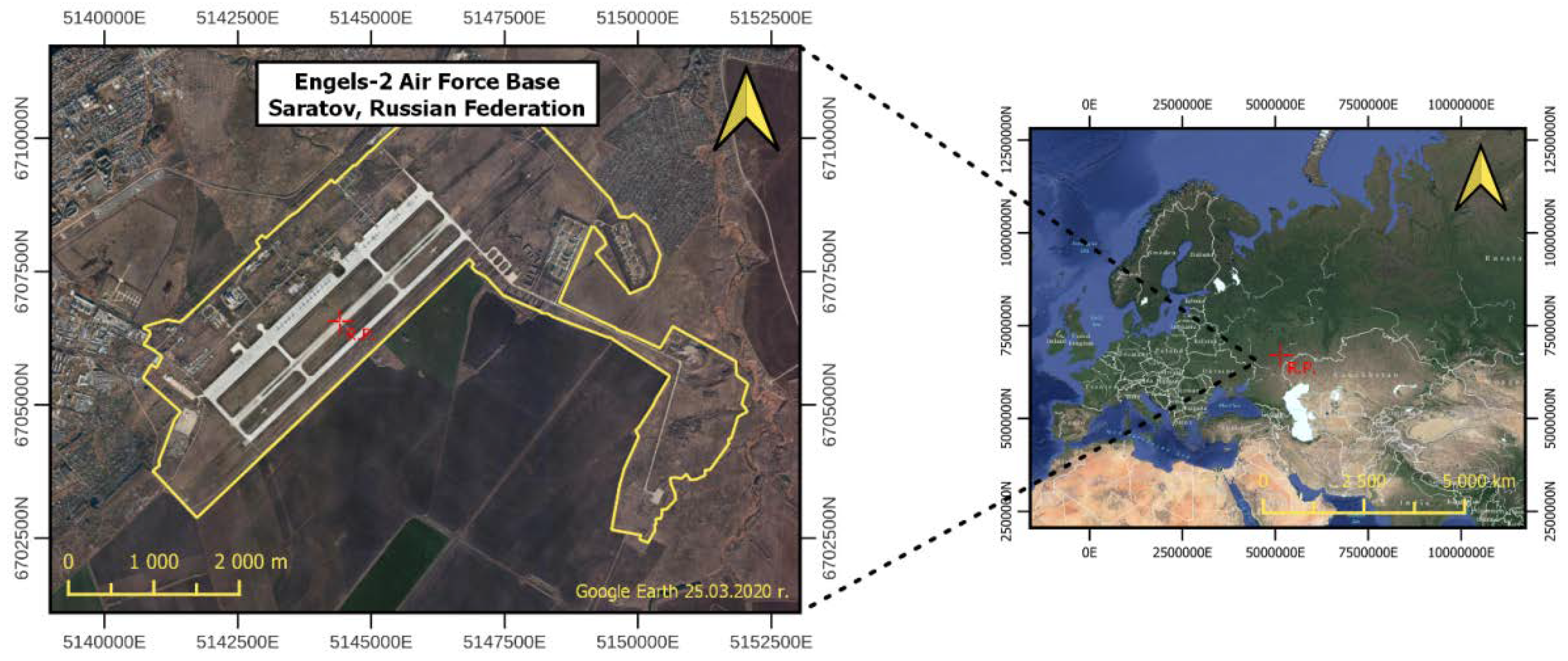

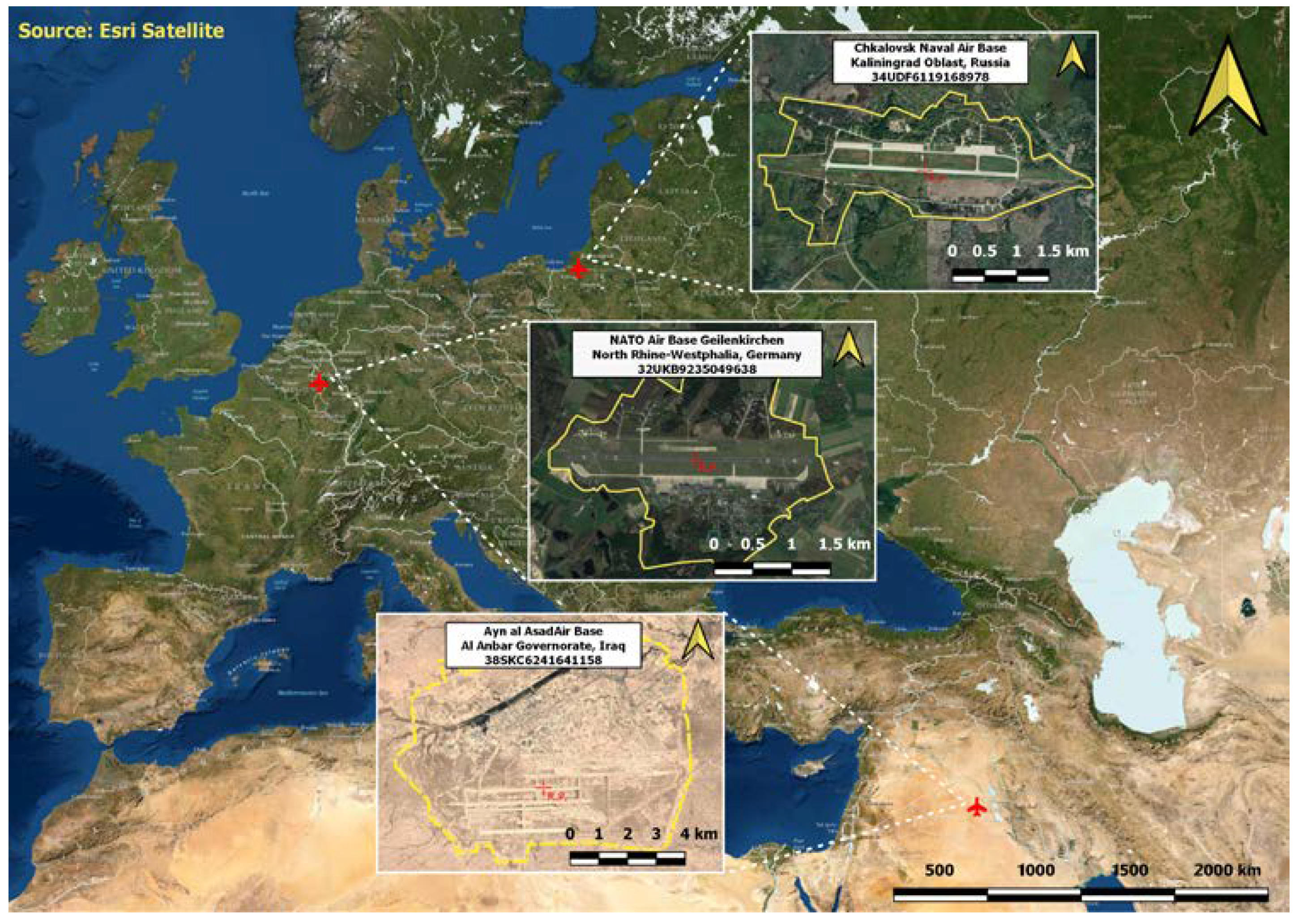

2.6. Test Area

2.7. The Proposed Methodology

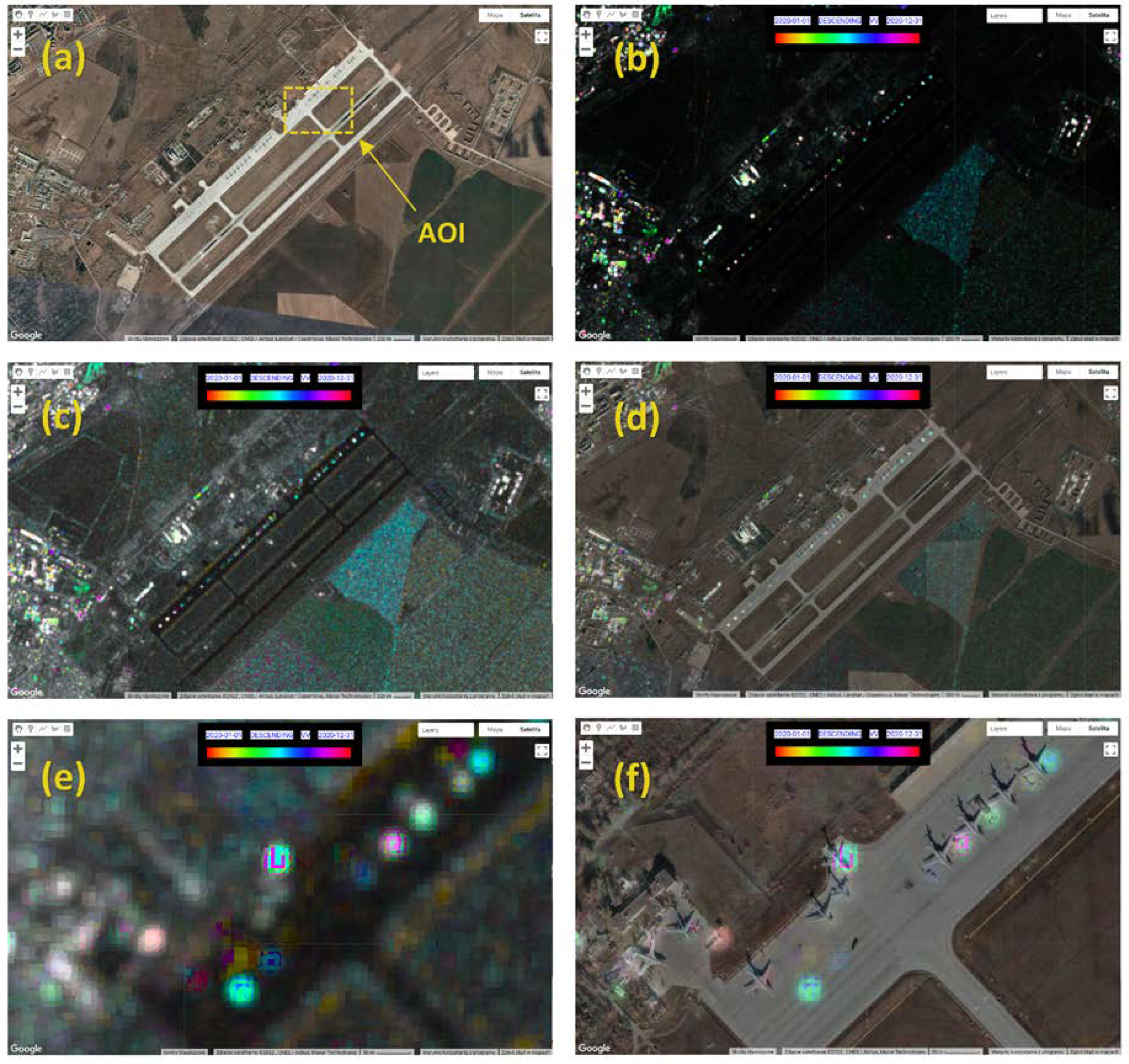

3. Results

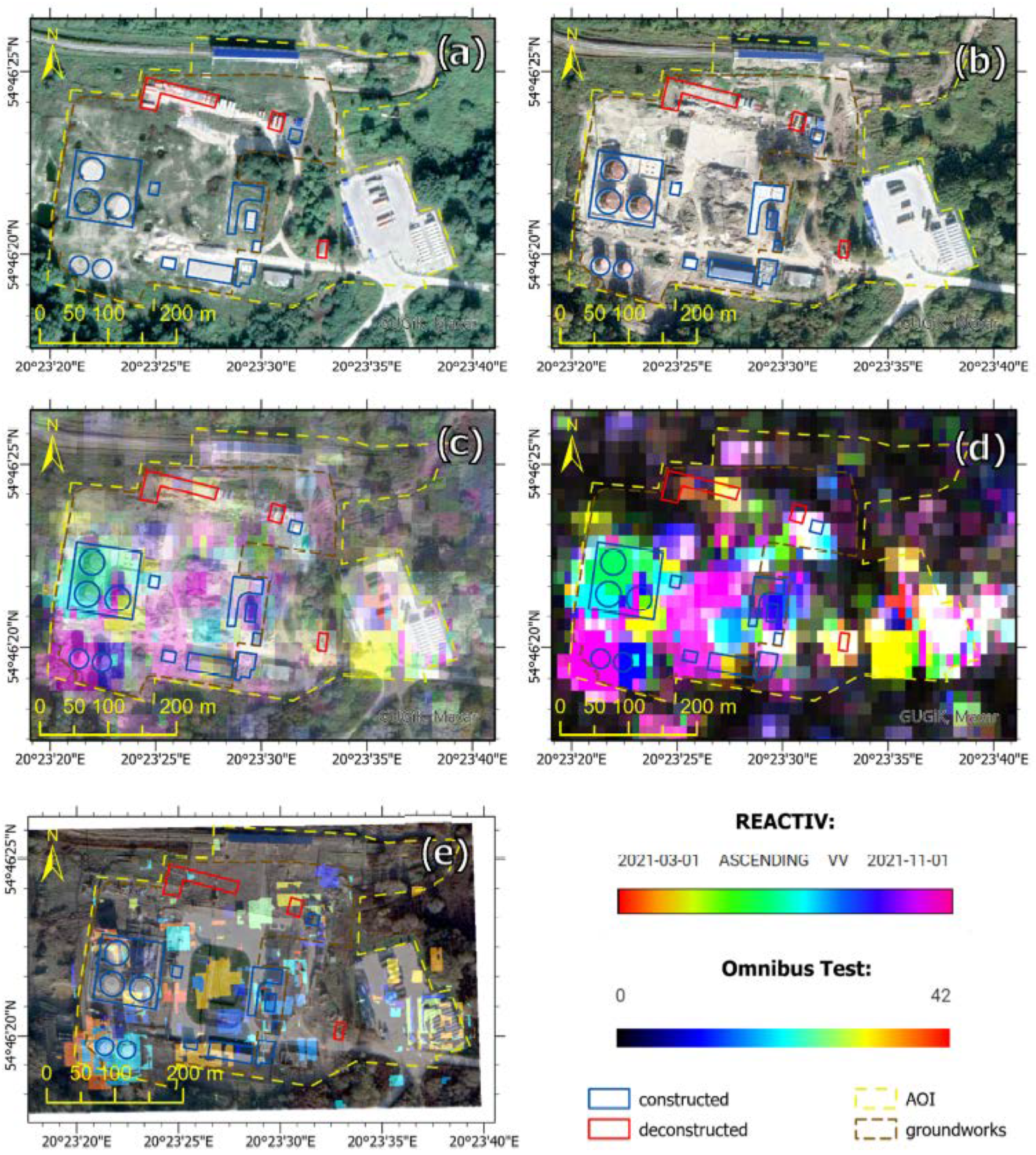

3.1. Application of the REACTIV Method in the Detection of Changes in the Infrastructure of the Airport in Chkalovsk

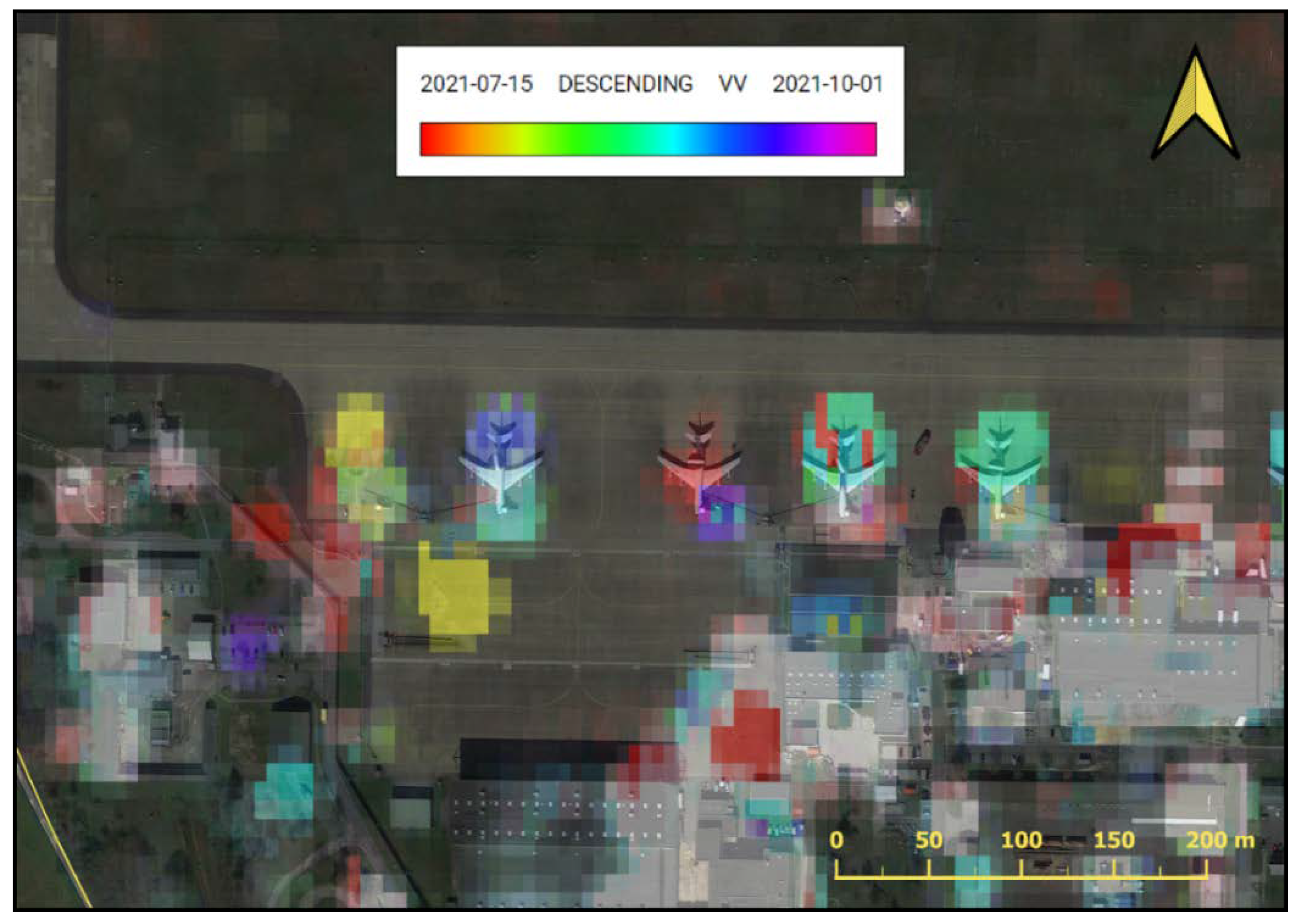

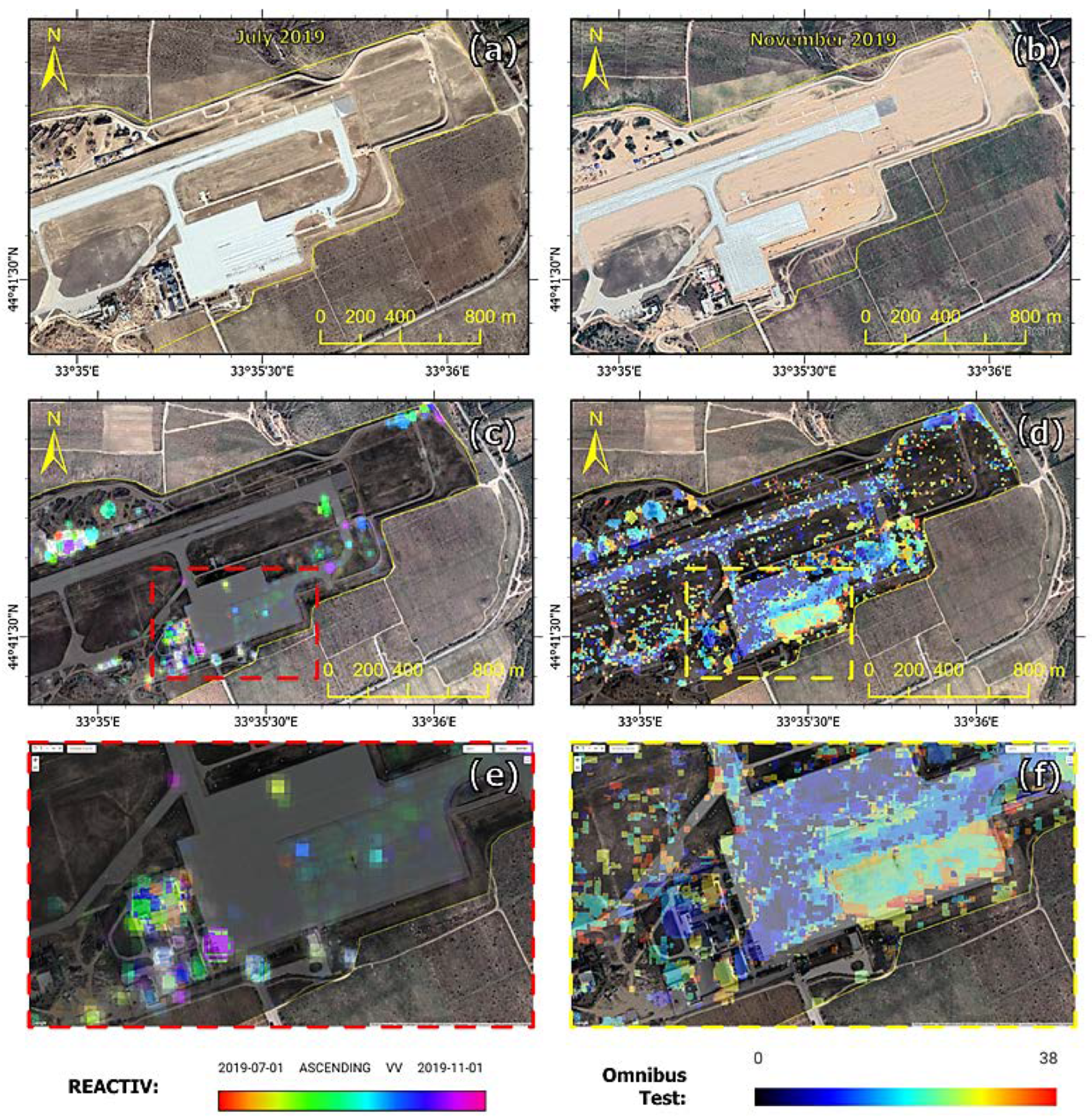

3.2. Application of the REACTIV Method in Monitoring the Activity of Equipment, Based on the Example of the NATO Air Force Base in Geilenkirchen

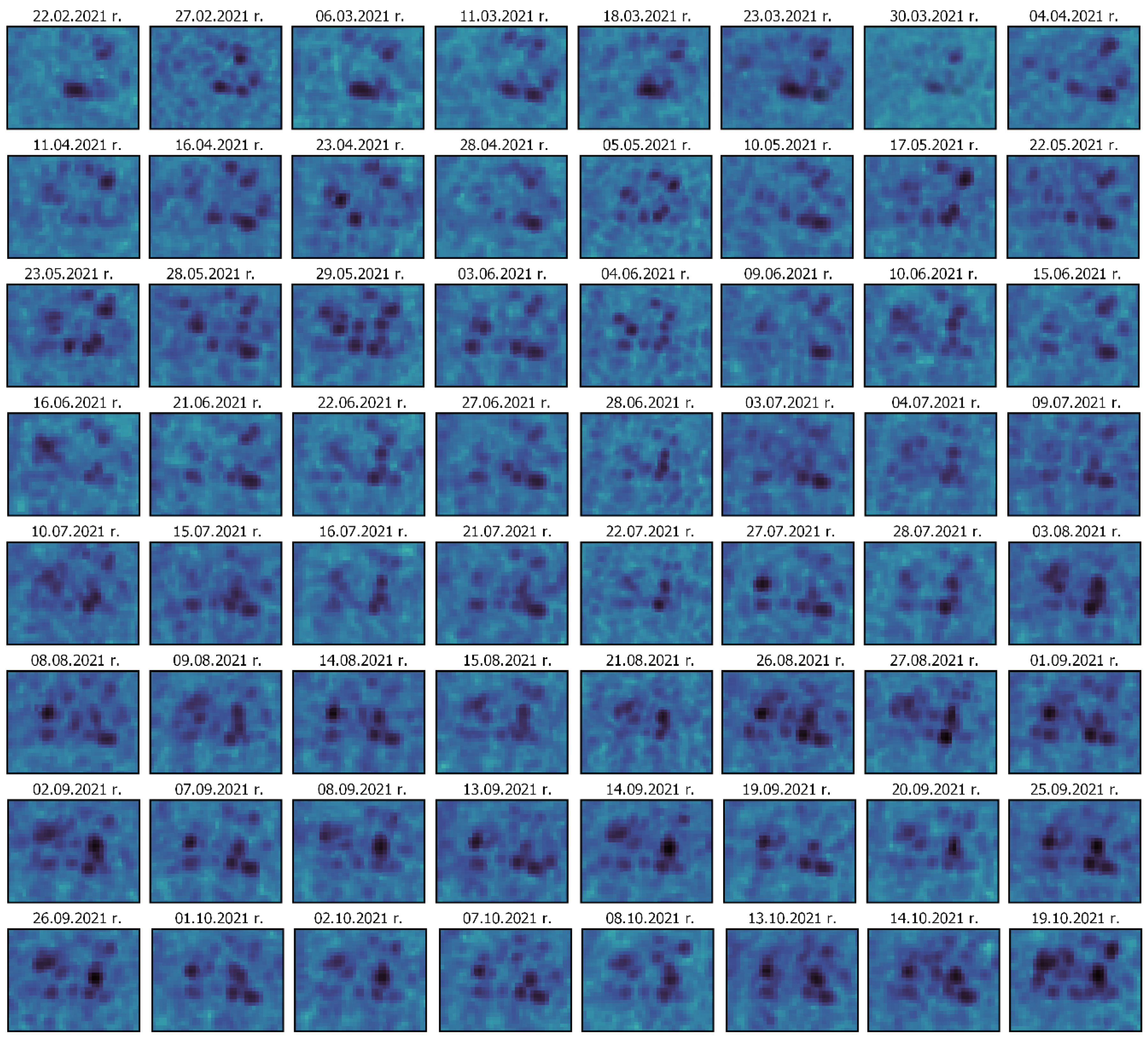

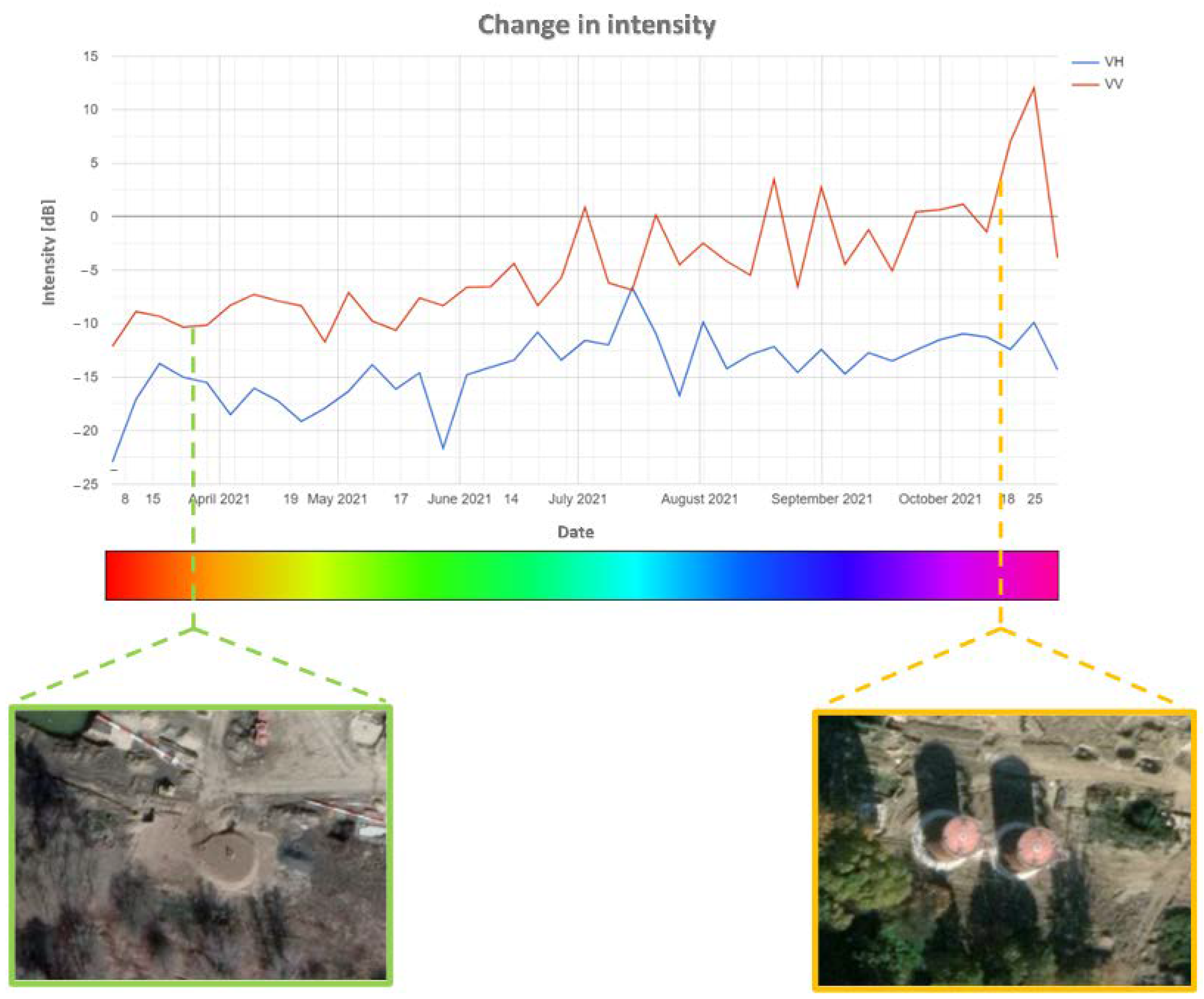

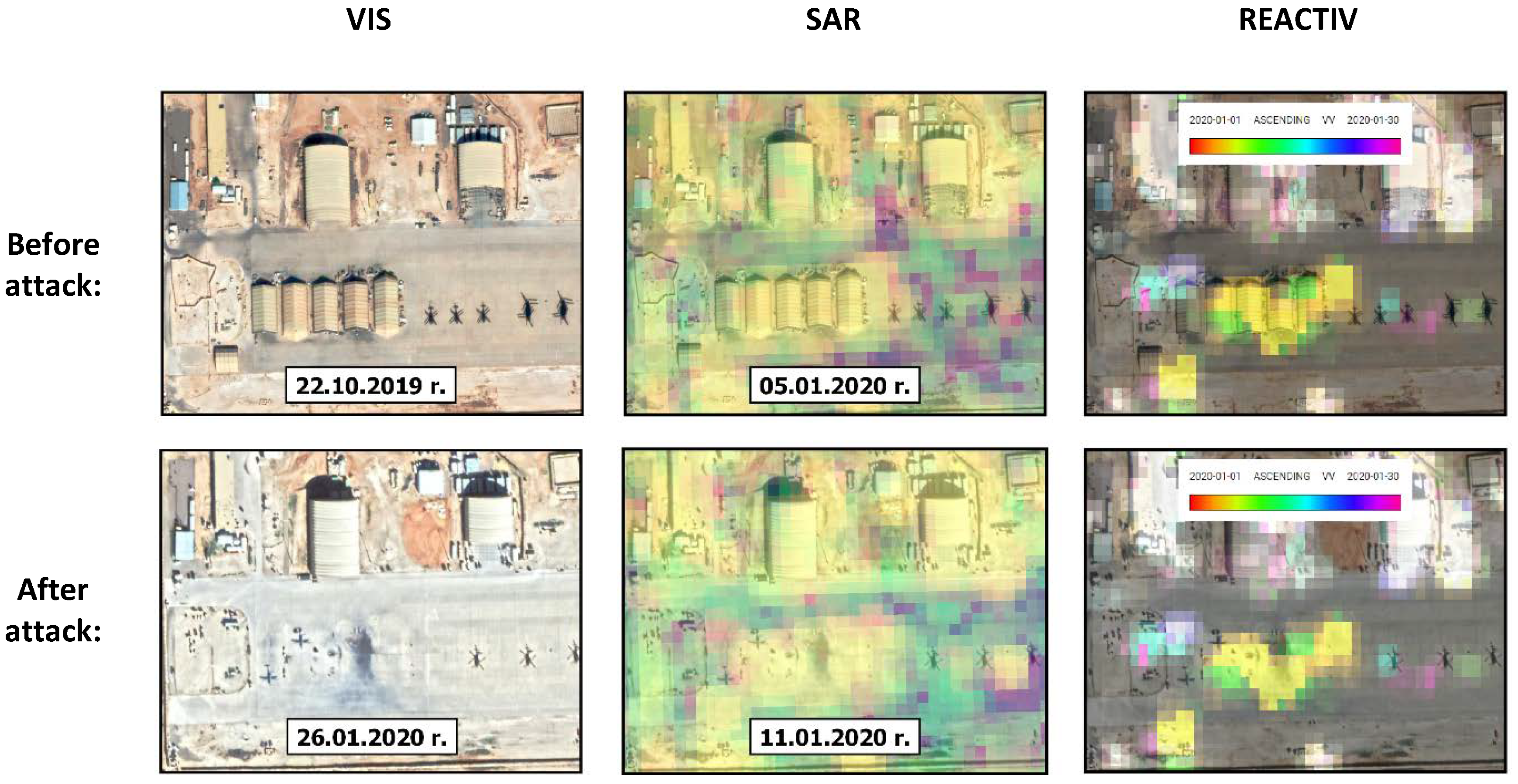

3.3. Application of the REACTIV Method in the Detection and Evaluation of the Effects of Impact, Based on the Example of the Ayn al-Asad Base

3.4. Validation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Specifications |
|---|---|
| Date of launch | Sentinel-1A: 3 April 2014; Sentinel-1B: 25 April 2016 |
| Orbit | Sun-synchronous polar orbit |
| Altitude | 693 km |
| Sensor | C-band SAR, centre frequency 5.405 GHz |
| Pixel spacing (acquisition mode: Interferometric Wide Swath, IW) | 10 × 10 m (range × azimuth) for High Resolution class Level-1 GRD; 40 × 40 m (range × azimuth) for Medium Resolution class Level-1 GRD |
| Polarization | HH + HV or VV + VH (SM, IW, EW modes); HH or VV (WV mode) |
| Repeat ground track | 12 days (1 satellite); 2–6 days (2 satellites) |

| Source | Area | Constructed: True Positive | Constructed: False Positive | Constructed: False Negative | Deconstructed: True Positive | Deconstructed: False Positive | Deconstructed: False Negative | Sum (both areas) |
|---|---|---|---|---|---|---|---|---|
| REACTIV | Area 1 | 13 | 2 | 3 | - | - | 3 | 59 |
| REACTIV | Area 2 | 26 | 3 | 6 | 2 | - | 1 | |
| Omnibus | Area 1 | 10 | - | 6 | 1 | 1 | 2 | 58 |
| Omnibus | Area 2 | 32 | 1 | 2 | 2 | - | 1 | |
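
The counts in the validation table above can be pooled into summary scores. The sketch below is one way to do that and is not a computation reported by the authors: it treats each "-" cell as zero (an assumption), sums the constructed and deconstructed counts over both areas, and derives precision, recall and F1. The names `Counts`, `pooled` and `VALIDATION` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """True positive, false positive and false negative counts."""
    tp: int = 0
    fp: int = 0
    fn: int = 0

    def __add__(self, other):
        return Counts(self.tp + other.tp, self.fp + other.fp, self.fn + other.fn)

    @property
    def total(self):
        return self.tp + self.fp + self.fn

    @property
    def precision(self):
        return self.tp / (self.tp + self.fp)

    @property
    def recall(self):
        return self.tp / (self.tp + self.fn)

    @property
    def f1(self):
        return 2 * self.tp / (2 * self.tp + self.fp + self.fn)


def pooled(rows):
    """Sum a list of Counts into one Counts."""
    result = Counts()
    for row in rows:
        result = result + row
    return result


# Row order: Area 1 constructed, Area 1 deconstructed,
#            Area 2 constructed, Area 2 deconstructed.
# "-" cells in the table are entered as 0 (assumption).
VALIDATION = {
    "REACTIV": [Counts(13, 2, 3), Counts(0, 0, 3), Counts(26, 3, 6), Counts(2, 0, 1)],
    "Omnibus": [Counts(10, 0, 6), Counts(1, 1, 2), Counts(32, 1, 2), Counts(2, 0, 1)],
}

if __name__ == "__main__":
    for method, rows in VALIDATION.items():
        c = pooled(rows)
        print(f"{method}: n={c.total} precision={c.precision:.3f} "
              f"recall={c.recall:.3f} F1={c.f1:.3f}")
```

The pooled totals reproduce the "Sum" column (59 for REACTIV, 58 for Omnibus), which is a useful check that the counts were transcribed correctly. Pooling hides per-area differences, so per-row scores may be more informative where the two areas behave differently.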


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Slesinski, J.; Wierzbicki, D.; Kedzierski, M. Application of Multitemporal Change Detection in Radar Satellite Imagery Using REACTIV-Based Method for Geospatial Intelligence. Sensors 2023, 23, 4922. https://doi.org/10.3390/s23104922
