Efficient Autonomous Exploration and Mapping in Unknown Environments

Abstract

1. Introduction

- We analyze the impact of regional legacy issues on exploration efficiency and propose the LAGS algorithm, which resolves these issues during exploration and thereby improves exploration efficiency.

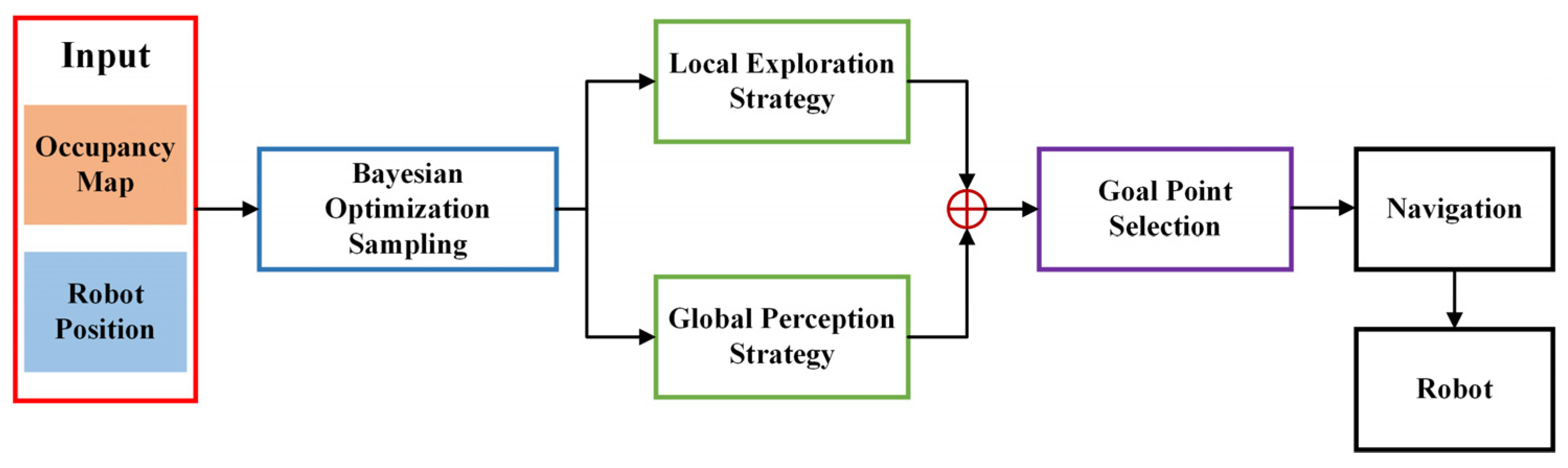

- We combine a local exploration strategy with a global perception strategy, addressing the difficulty a single exploration strategy has in balancing optimal exploration paths against environmental robustness.

- We use Gaussian process regression (GPR) and Bayesian optimization (BO) to sample candidate action points for the robot. Compared with classical frontier-based candidate point selection methods, our approach ensures that each candidate action point is safe and has a higher mutual information (MI) gain.

- Extensive experimental results on maps with different layouts and sizes show that the proposed method achieves shorter paths and higher exploration efficiency than other heuristic-based and learning-based methods.
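The GPR and BO sampling idea in the contributions above can be illustrated with a minimal sketch. It assumes a zero-mean Gaussian process with a Matérn kernel (smoothness 2.5, unit length scale) and an upper-confidence-bound acquisition with tradeoff `kappa = 5.0`; reading the "covariance smoothness coefficient" and "BO tradeoff coefficient" hyperparameters this way is an assumption, not something the text confirms. The function names (`matern52`, `solve`, `gp_posterior`, `select_candidate`) are hypothetical, and the safety check on candidate points is omitted.

```python
import math

def matern52(a, b, length_scale=1.0):
    """Matern kernel with smoothness nu = 2.5 between two 2-D points."""
    s = math.sqrt(5.0) * math.dist(a, b) / length_scale
    return (1.0 + s + s * s / 3.0) * math.exp(-s)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - tail) / M[r][r]
    return x

def gp_posterior(train_x, train_y, query, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP at `query`."""
    K = [[matern52(p, q) + (noise if i == j else 0.0)
          for j, q in enumerate(train_x)]
         for i, p in enumerate(train_x)]
    k_star = [matern52(p, query) for p in train_x]
    alpha = solve(K, train_y)   # K^-1 y
    v = solve(K, k_star)        # K^-1 k_star
    mean = sum(k * a for k, a in zip(k_star, alpha))
    var = matern52(query, query) - sum(k * w for k, w in zip(k_star, v))
    return mean, math.sqrt(max(var, 0.0))

def select_candidate(train_x, train_y, candidates, kappa=5.0):
    """Pick the candidate maximizing the UCB score mean + kappa * std."""
    def ucb(c):
        mean, std = gp_posterior(train_x, train_y, c)
        return mean + kappa * std
    return max(candidates, key=ucb)

# Hypothetical MI gains already evaluated at three action points.
observed_points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
observed_gains = [1.0, 2.0, 0.5]
best = select_candidate(observed_points, observed_gains,
                        [(1.0, 0.0), (5.0, 5.0)])
```

With a large `kappa` the acquisition favors poorly observed regions, while `kappa = 0` reduces to picking the highest predicted gain; the GP lets the robot score many candidates without evaluating the MI gain at each one.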

2. Related Works

3. System Overview and Problem Formulation

3.1. System Overview

3.2. Problem Formulation

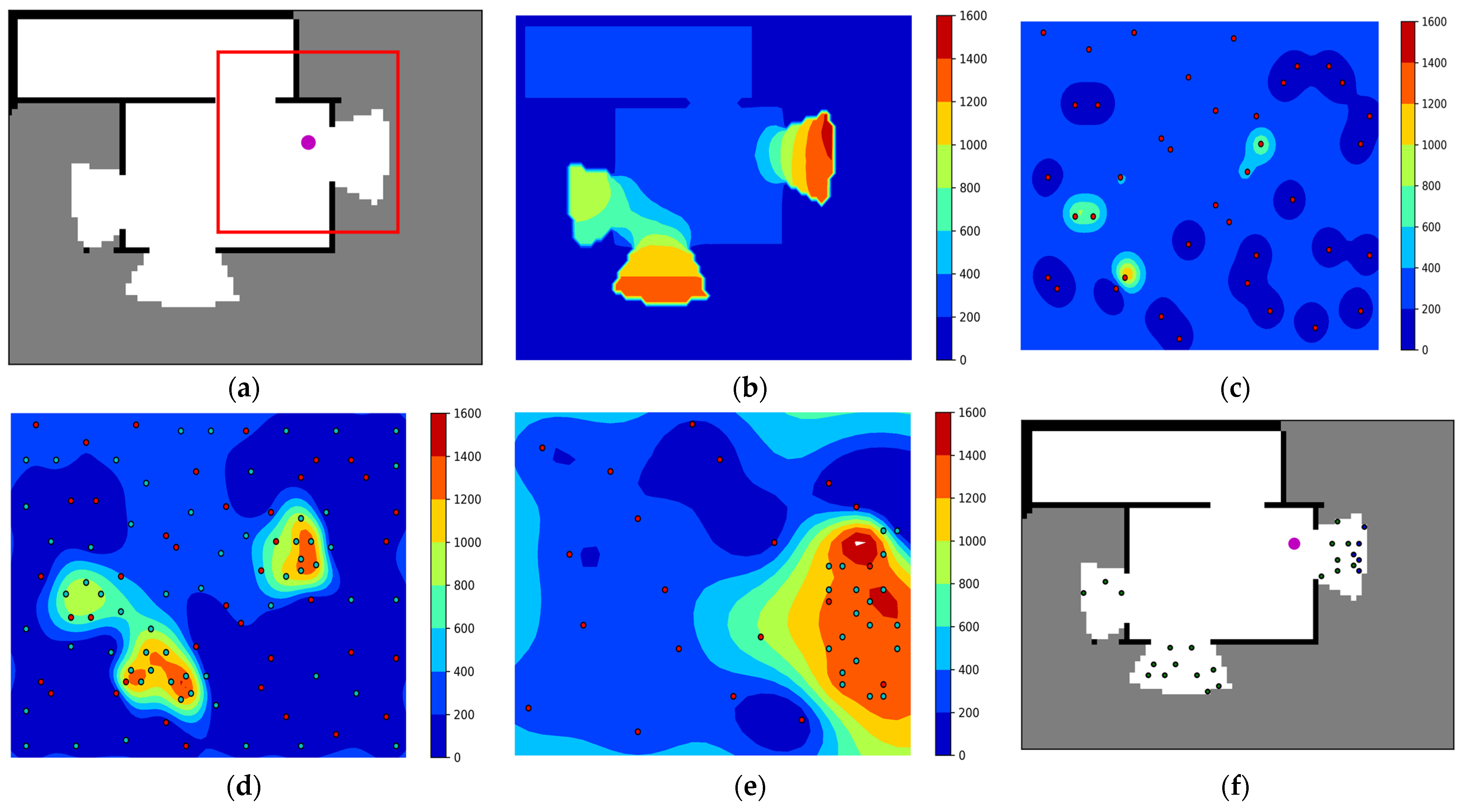

4. Bayesian Optimization Based Sampling

4.1. Mutual Information Gain

4.2. Gaussian Process Regression

4.3. Bayesian Optimal Sampling

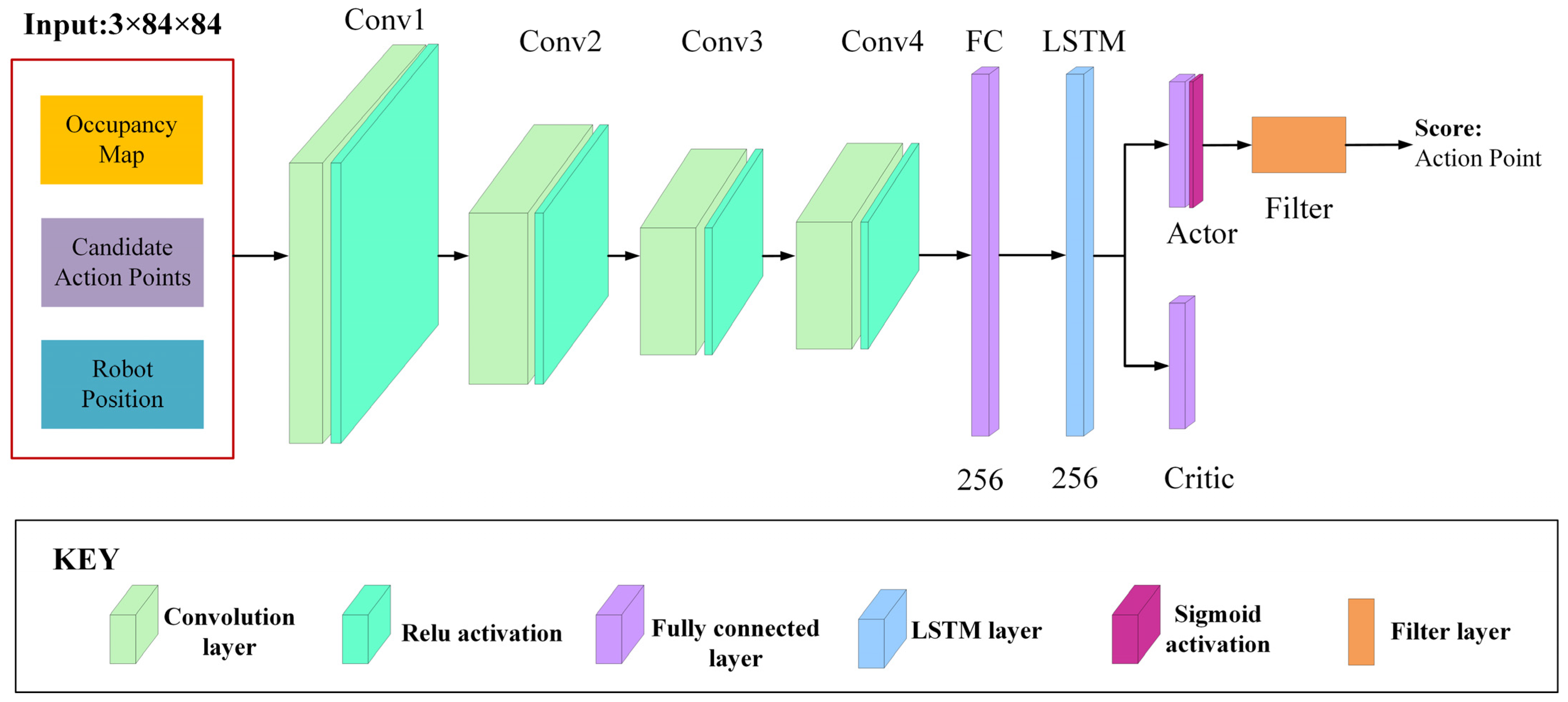

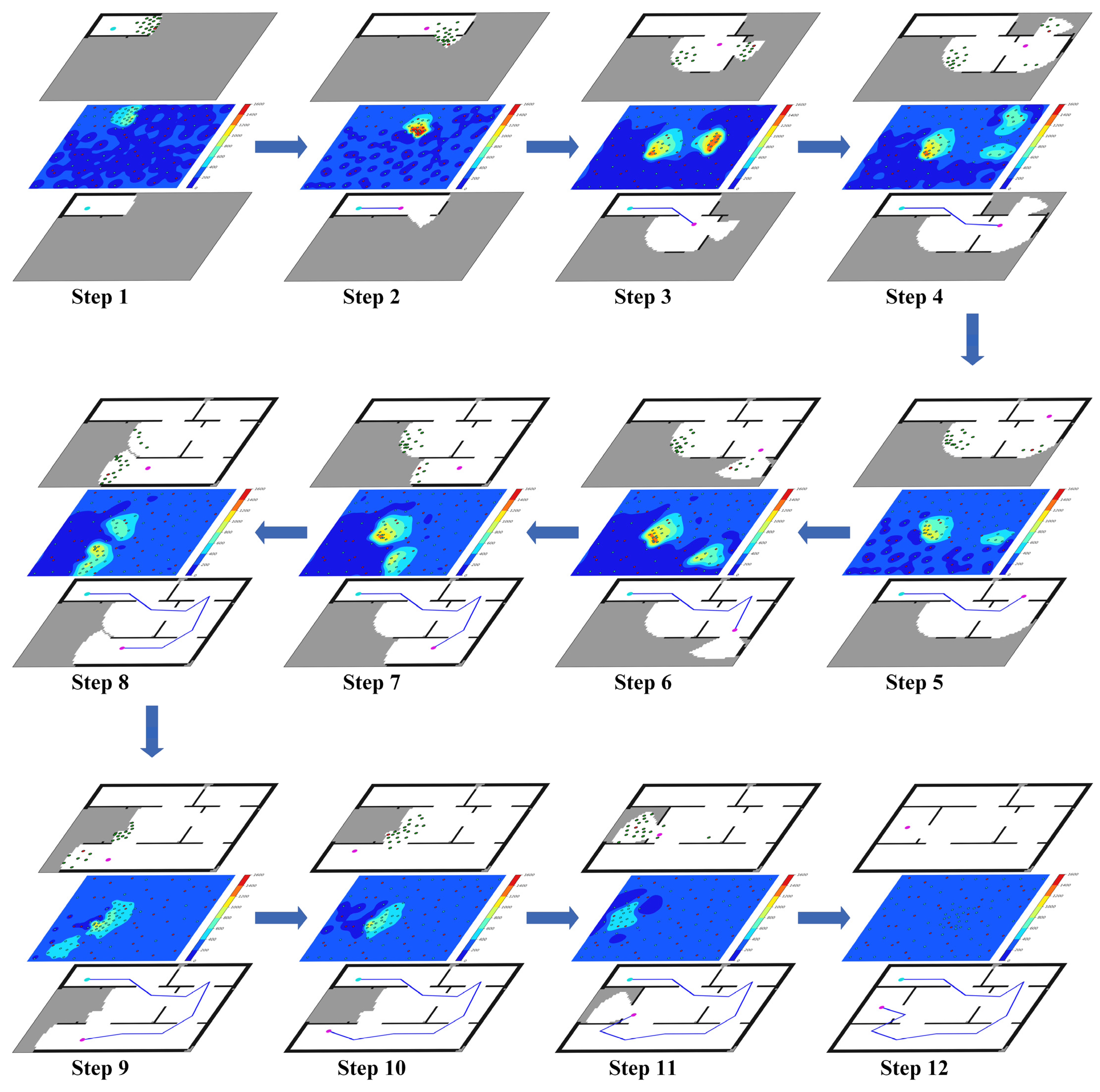

5. DRL-Based Decision Method

5.1. Local Exploration and Global Perception

5.1.1. Local Exploration

5.1.2. Global Perception

5.2. Network Structure

5.3. Asynchronous Advantage Actor–Critic Algorithm

6. Experiments

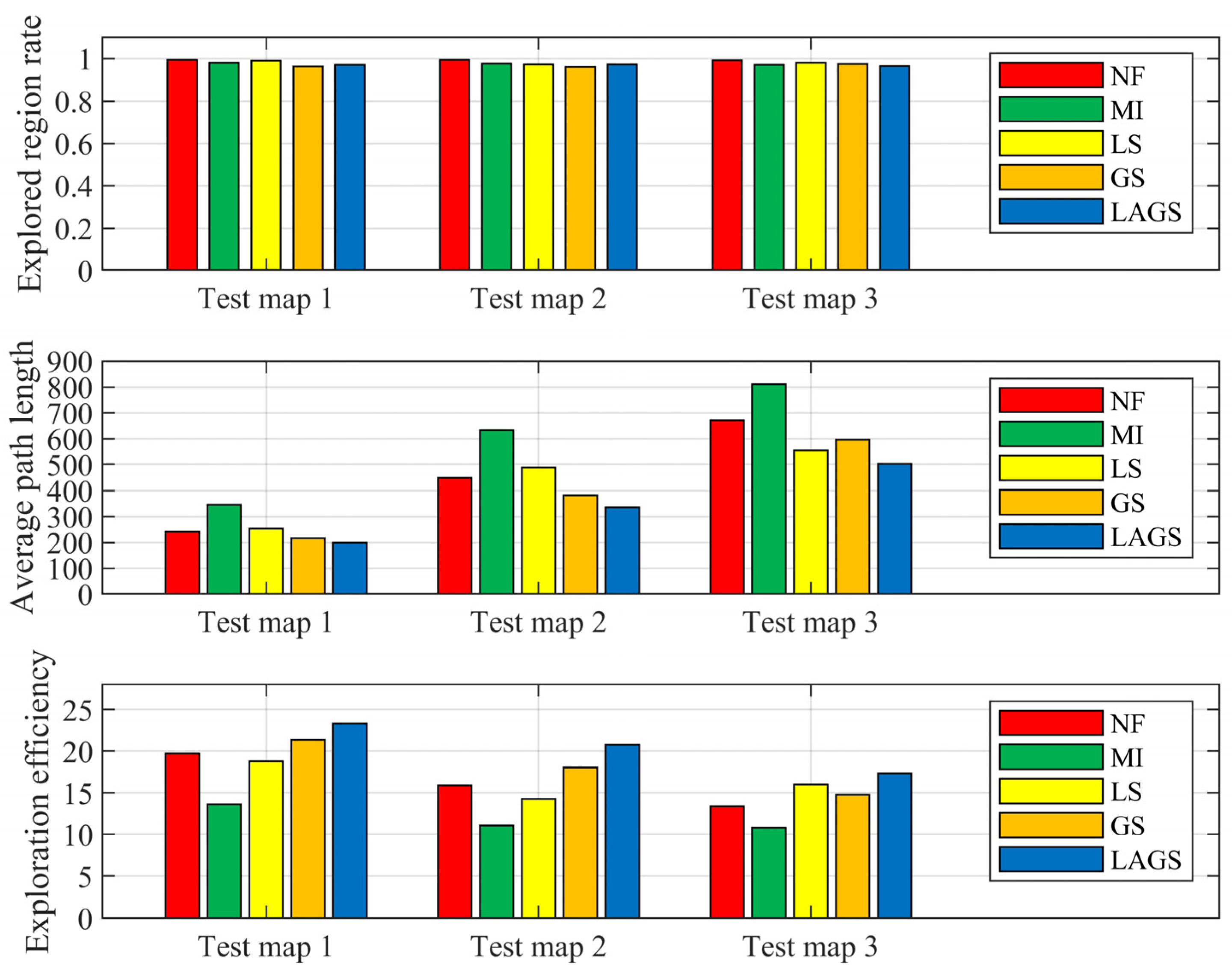

6.1. Evaluation Indicators

- Explored region rate: This metric evaluates the completeness of the map built by the robot during exploration, and it is defined as

- Average path length: This metric evaluates the average path length taken by the robot over the total set of trials, and it is defined as

- Exploration efficiency: This metric evaluates the average entropy reduction achieved per unit distance traveled by the robot over the total set of trials, and it is defined as
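As a rough illustration of the three indicators, the sketch below computes them from per-trial data. The exact definitions are not reproduced in this text, so the formulas are assumptions based on the verbal descriptions: the explored region rate is taken as explored cells over total explorable cells, map entropy is the Shannon entropy (in bits) of per-cell occupancy probabilities, and exploration efficiency is the per-trial entropy reduction divided by path length, averaged over trials. All function names are hypothetical.

```python
import math

def map_entropy(occupancy_probs):
    """Shannon entropy (bits) of a list of per-cell occupancy probabilities."""
    total = 0.0
    for p in occupancy_probs:
        for q in (p, 1.0 - p):
            if q > 0.0:  # 0 * log(0) is treated as 0
                total -= q * math.log2(q)
    return total

def explored_region_rate(explored_cells, total_cells):
    """Fraction of the explorable map that has been observed."""
    return explored_cells / total_cells

def average_path_length(path_lengths):
    """Mean path length over all trials."""
    return sum(path_lengths) / len(path_lengths)

def exploration_efficiency(entropy_reductions, path_lengths):
    """Mean entropy reduction per unit distance, averaged over trials."""
    ratios = [h / d for h, d in zip(entropy_reductions, path_lengths)]
    return sum(ratios) / len(ratios)

# Two hypothetical trials.
lengths = [100.0, 200.0]
reductions = [1000.0, 3000.0]
rate = explored_region_rate(95, 100)
mean_length = average_path_length(lengths)
efficiency = exploration_efficiency(reductions, lengths)
```

An alternative reading would divide the total entropy reduction by the total distance across trials; the two agree only when every trial has the same path length.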

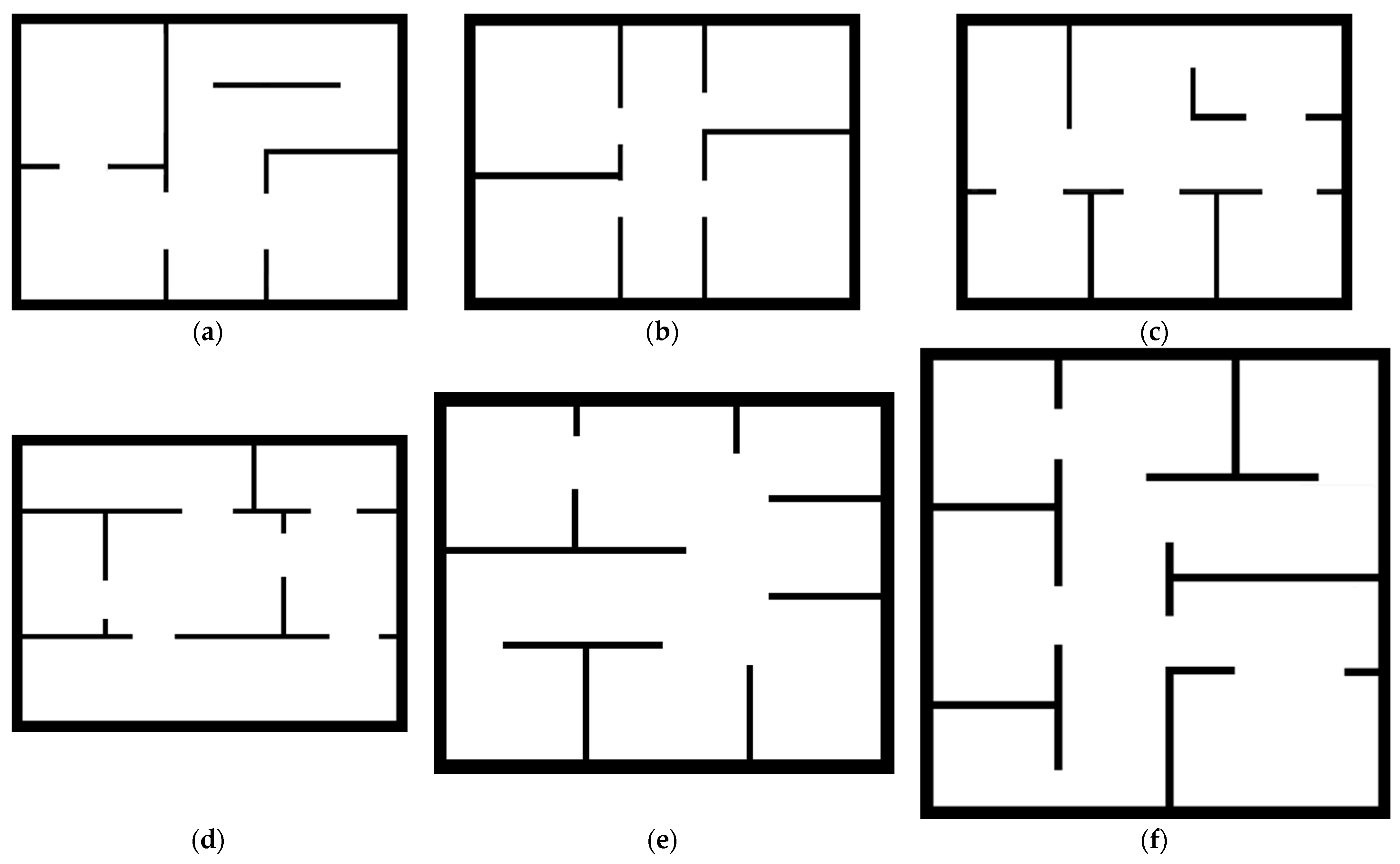

6.2. Simulation Setup

6.3. Training Setup

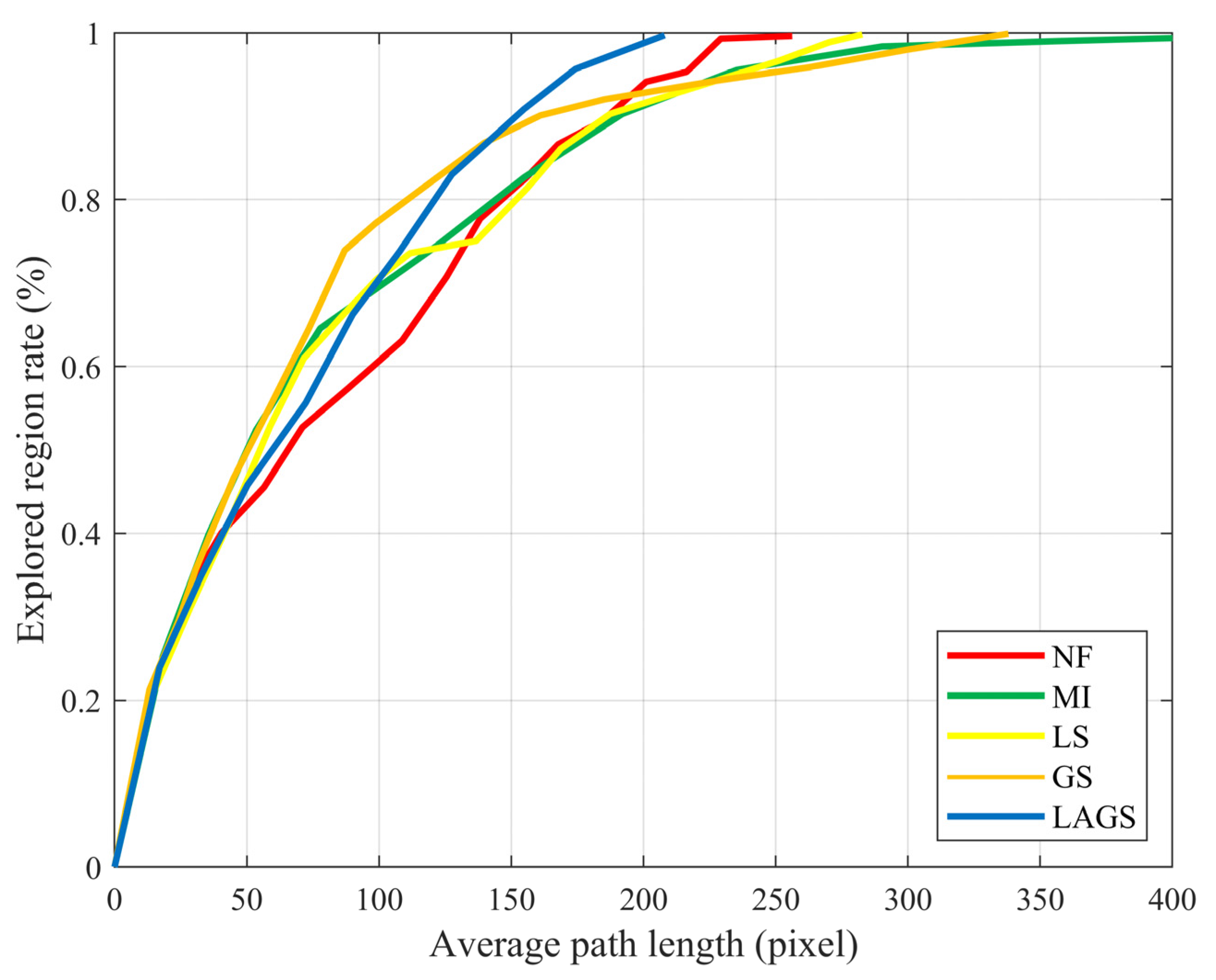

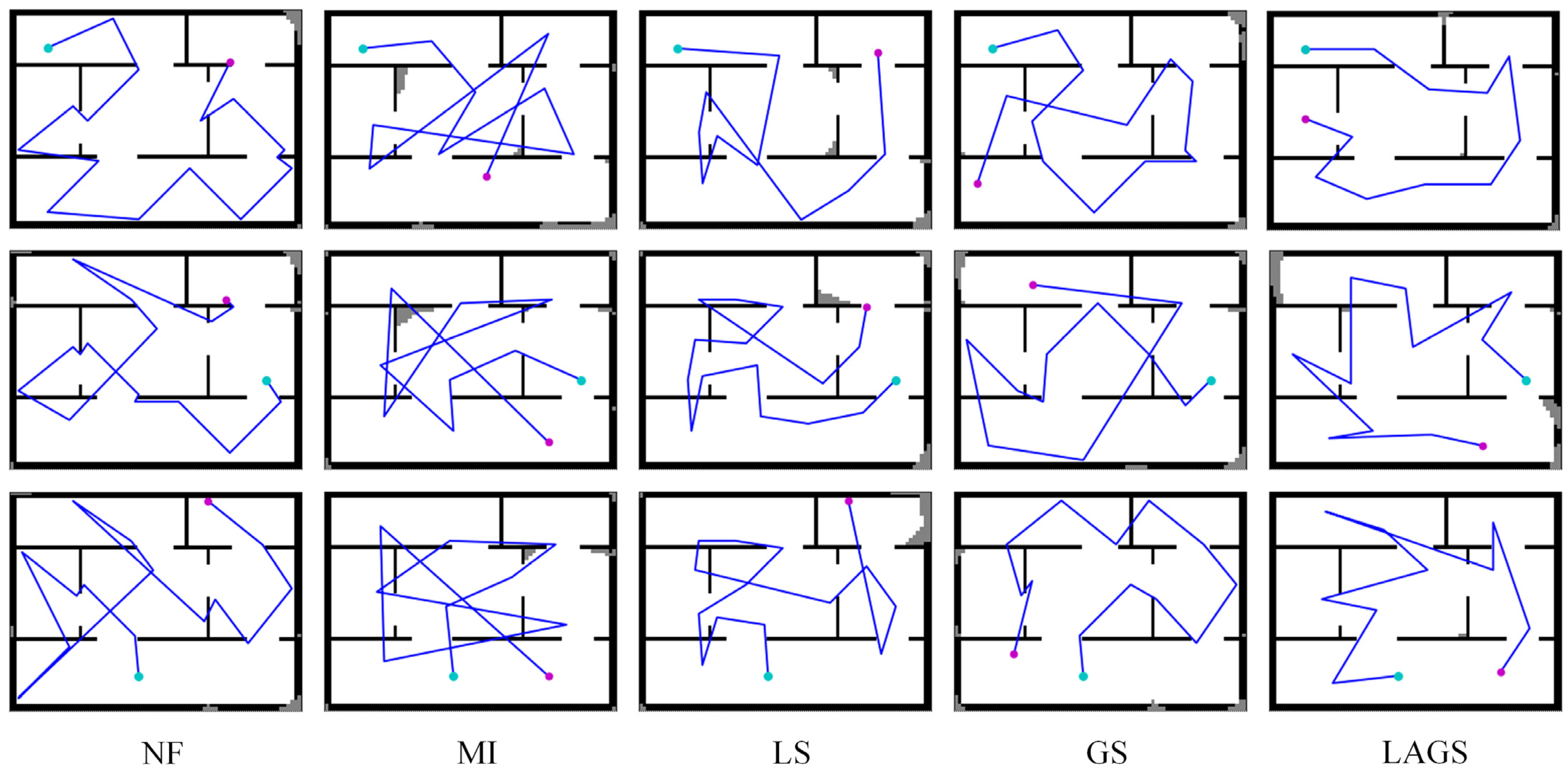

6.4. Algorithm Comparison

- NF (nearest frontier). This method explores by selecting the frontier point nearest to the robot as the target point.

- MI (maximum mutual information). This method calculates the MI gain for each action point and explores by selecting the action point with the maximum MI gain.

- LS (local exploration strategy). We modified the source code provided by Chen et al. [13] and applied it to our environment. The action points of this method consist of 40 Sobol sampling points in the vicinity of the robot, and the goal is to find and execute the action point with the highest gain in the local area. When LS reaches a “dead end”, it is guided to the nearest frontier point using the FR strategy.

- GS (global exploration strategy). We replicated the method proposed by Niroui et al. [28] in our setting. The method uses the frontier points of the current occupancy map as action points, with the goal of maximizing the total information gained along the robot’s exploration path.
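The NF baseline is simple enough to sketch directly. The example below assumes a 2D occupancy grid encoded as 0 (free), 1 (occupied), and -1 (unknown), treats a frontier as a free cell with at least one unknown 4-connected neighbor, and measures "nearest" by straight-line distance; a path-length distance would be an equally plausible choice. The encoding and the function names are illustrative assumptions.

```python
import math

FREE, OCCUPIED, UNKNOWN = 0, 1, -1

def frontier_cells(grid):
    """Return free cells that touch at least one unknown 4-neighbor."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            neighbors = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
            if any(0 <= nr < rows and 0 <= nc < cols
                   and grid[nr][nc] == UNKNOWN
                   for nr, nc in neighbors):
                frontiers.append((r, c))
    return frontiers

def nearest_frontier(grid, robot):
    """Pick the frontier cell closest to the robot, or None if fully explored."""
    frontiers = frontier_cells(grid)
    if not frontiers:
        return None
    return min(frontiers, key=lambda cell: math.dist(cell, robot))

grid = [
    [FREE, FREE,     UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE,     FREE],
]
target = nearest_frontier(grid, robot=(2, 0))
```

The MI baseline would replace the distance key with an MI gain estimate per action point and take the maximum instead of the minimum.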

6.5. Summary Analysis

- LAGS has stronger exploration performance. From different initial positions, LAGS resolves the regional legacy issues and plans reasonable exploration paths during exploration. In addition, LAGS achieves a higher exploration ratio than the other algorithms for the same average path length.

- LAGS has good robustness and environmental adaptability. In tests in environments of various sizes and layouts, LAGS achieves shorter exploration paths and higher exploration efficiency than the other algorithms.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Krzysiak, R.; Butail, S. Information-Based Control of Robots in Search-and-Rescue Missions With Human Prior Knowledge. IEEE Trans. Hum. Mach. Syst. 2021, 52, 52–63.

- Zhai, G.; Zhang, W.; Hu, W.; Ji, Z. Coal mine rescue robots based on binocular vision: A review of the state of the art. IEEE Access 2020, 8, 130561–130575.

- Zhang, J. Localization, Mapping and Navigation for Autonomous Sweeper Robots. In Proceedings of the 2022 International Conference on Machine Learning and Intelligent Systems Engineering (MLISE), Guangzhou, China, 5–7 August 2022; pp. 195–200.

- Luo, B.; Huang, Y.; Deng, F.; Li, W.; Yan, Y. Complete coverage path planning for intelligent sweeping robot. In Proceedings of the 2021 IEEE Asia-Pacific Conference on Image Processing, Electronics and Computers (IPEC), Dalian, China, 14–16 April 2021; pp. 316–321.

- Seenu, N.; Manohar, L.; Stephen, N.M.; Ramanathan, K.C.; Ramya, M. Autonomous Cost-Effective Robotic Exploration and Mapping for Disaster Reconnaissance. In Proceedings of the 2022 10th International Conference on Emerging Trends in Engineering and Technology-Signal and Information Processing (ICETET-SIP-22), Nagpur, India, 29–30 April 2022; pp. 1–6.

- Narayan, S.; Aquif, M.; Kalim, A.R.; Chagarlamudi, D.; Harshith Vignesh, M. Search and Reconnaissance Robot for Disaster Management. In Machines, Mechanism and Robotics; Springer: Berlin/Heidelberg, Germany, 2022; pp. 187–201.

- Perkasa, D.A.; Santoso, J. Improved Frontier Exploration Strategy for Active Mapping with Mobile Robot. In Proceedings of the 2020 7th International Conference on Advance Informatics: Concepts, Theory and Applications (ICAICTA), Tokoname, Japan, 8–9 September 2020; pp. 1–6.

- Zagradjanin, N.; Pamucar, D.; Jovanovic, K.; Knezevic, N.; Pavkovic, B. Autonomous Exploration Based on Multi-Criteria Decision-Making and Using D* Lite Algorithm. Intell. Autom. Soft Comput. 2022, 32, 1369–1386.

- Liu, J.; Lv, Y.; Yuan, Y.; Chi, W.; Chen, G.; Sun, L. A prior information heuristic based robot exploration method in indoor environment. In Proceedings of the 2021 IEEE International Conference on Real-time Computing and Robotics (RCAR), Xining, China, 15–19 July 2021; pp. 129–134.

- Zhong, P.; Chen, B.; Lu, S.; Meng, X.; Liang, Y. Information-Driven Fast Marching Autonomous Exploration With Aerial Robots. IEEE Robot. Autom. Lett. 2021, 7, 810–817.

- Li, H.; Zhang, Q.; Zhao, D. Deep reinforcement learning-based automatic exploration for navigation in unknown environment. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 2064–2076.

- Zhu, D.; Li, T.; Ho, D.; Wang, C.; Meng, M.Q.-H. Deep reinforcement learning supervised autonomous exploration in office environments. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 7548–7555.

- Chen, F.; Bai, S.; Shan, T.; Englot, B. Self-learning exploration and mapping for mobile robots via deep reinforcement learning. In Proceedings of the AIAA Scitech 2019 Forum, San Diego, CA, USA, 7–11 January 2019; p. 0396.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Ramezani Dooraki, A.; Lee, D.-J. An end-to-end deep reinforcement learning-based intelligent agent capable of autonomous exploration in unknown environments. Sensors 2018, 18, 3575.

- Ramakrishnan, S.K.; Al-Halah, Z.; Grauman, K. Occupancy anticipation for efficient exploration and navigation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 400–418.

- Chaplot, D.S.; Gandhi, D.; Gupta, S.; Gupta, A.; Salakhutdinov, R. Learning to explore using active neural slam. arXiv 2020, arXiv:2004.05155.

- Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F. Voronoi-based multi-robot autonomous exploration in unknown environments via deep reinforcement learning. IEEE Trans. Veh. Technol. 2020, 69, 14413–14423.

- Surmann, H.; Jestel, C.; Marchel, R.; Musberg, F.; Elhadj, H.; Ardani, M. Deep reinforcement learning for real autonomous mobile robot navigation in indoor environments. arXiv 2020, arXiv:2005.13857.

- Zhang, J.; Tai, L.; Liu, M.; Boedecker, J.; Burgard, W. Neural slam: Learning to explore with external memory. arXiv 2017, arXiv:1706.09520.

- Peake, A.; McCalmon, J.; Zhang, Y.; Myers, D.; Alqahtani, S.; Pauca, P. Deep Reinforcement Learning for Adaptive Exploration of Unknown Environments. In Proceedings of the 2021 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 15–18 June 2021; pp. 265–274.

- Wu, Z.; Yao, Z.; Lu, M. Deep-Reinforcement-Learning-Based Autonomous Establishment of Local Positioning Systems in Unknown Indoor Environments. IEEE Internet Things J. 2022, 9, 13626–13637.

- Chen, Z.; Subagdja, B.; Tan, A.-H. End-to-end deep reinforcement learning for multi-agent collaborative exploration. In Proceedings of the 2019 IEEE International Conference on Agents (ICA), Jinan, China, 18–21 October 2019; pp. 99–102.

- Cimurs, R.; Suh, I.H.; Lee, J.H. Goal-Driven Autonomous Exploration Through Deep Reinforcement Learning. IEEE Robot. Autom. Lett. 2021, 7, 730–737.

- Lee, W.-C.; Lim, M.C.; Choi, H.-L. Extendable Navigation Network based Reinforcement Learning for Indoor Robot Exploration. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 11508–11514.

- Song, Y.; Hu, Y.; Zeng, J.; Hu, C.; Qin, L.; Yin, Q. Towards Efficient Exploration in Unknown Spaces: A Novel Hierarchical Approach Based on Intrinsic Rewards. In Proceedings of the 2021 6th International Conference on Automation, Control and Robotics Engineering (CACRE), Dalian, China, 15–17 July 2021; pp. 414–422.

- Bai, S.; Chen, F.; Englot, B. Toward autonomous mapping and exploration for mobile robots through deep supervised learning. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 2379–2384.

- Niroui, F.; Zhang, K.; Kashino, Z.; Nejat, G. Deep reinforcement learning robot for search and rescue applications: Exploration in unknown cluttered environments. IEEE Robot. Autom. Lett. 2019, 4, 610–617.

- Gkouletsos, D.; Iannelli, A.; de Badyn, M.H.; Lygeros, J. Decentralized Trajectory Optimization for Multi-Agent Ergodic Exploration. IEEE Robot. Autom. Lett. 2021, 6, 6329–6336.

- Garaffa, L.C.; Basso, M.; Konzen, A.A.; de Freitas, E.P. Reinforcement learning for mobile robotics exploration: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–15.

- Yamauchi, B. A frontier-based approach for autonomous exploration. In Proceedings of the 1997 IEEE International Symposium on Computational Intelligence in Robotics and Automation (CIRA’97), ‘Towards New Computational Principles for Robotics and Automation’, Monterey, CA, USA, 10–11 July 1997; pp. 146–151.

- Wu, C.-Y.; Lin, H.-Y. Autonomous mobile robot exploration in unknown indoor environments based on rapidly-exploring random tree. In Proceedings of the 2019 IEEE International Conference on Industrial Technology (ICIT), Melbourne, VIC, Australia, 13–15 February 2019; pp. 1345–1350.

- Dang, T.; Khattak, S.; Mascarich, F.; Alexis, K. Explore locally, plan globally: A path planning framework for autonomous robotic exploration in subterranean environments. In Proceedings of the 2019 19th International Conference on Advanced Robotics (ICAR), Belo Horizonte, Brazil, 2–6 December 2019; pp. 9–16.

- Da Silva Lubanco, D.L.; Pichler-Scheder, M.; Schlechter, T.; Scherhäufl, M.; Kastl, C. A review of utility and cost functions used in frontier-based exploration algorithms. In Proceedings of the 2020 5th International Conference on Robotics and Automation Engineering (ICRAE), Singapore, 20–22 November 2020; pp. 187–191.

- Selin, M.; Tiger, M.; Duberg, D.; Heintz, F.; Jensfelt, P. Efficient autonomous exploration planning of large-scale 3-d environments. IEEE Robot. Autom. Lett. 2019, 4, 1699–1706.

- Bourgault, F.; Makarenko, A.A.; Williams, S.B.; Grocholsky, B.; Durrant-Whyte, H.F. Information based adaptive robotic exploration. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 30 September–4 October 2002; pp. 540–545.

- Ila, V.; Porta, J.M.; Andrade-Cetto, J. Information-based compact pose SLAM. IEEE Trans. Robot. 2009, 26, 78–93.

- Julian, B.J.; Karaman, S.; Rus, D. On mutual information-based control of range sensing robots for mapping applications. Int. J. Robot. Res. 2014, 33, 1375–1392.

- Bai, S.; Wang, J.; Chen, F.; Englot, B. Information-theoretic exploration with Bayesian optimization. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 1816–1822.

- Bai, S.; Wang, J.; Doherty, K.; Englot, B. Inference-enabled information-theoretic exploration of continuous action spaces. In Robotics Research; Springer: Berlin/Heidelberg, Germany, 2018; pp. 419–433.

- Wang, H.; Cheng, Y.; Liu, N.; Zhao, Y.; Cheung-Wai Chan, J.; Li, Z. An Illumination-Invariant Shadow-Based Scene Matching Navigation Approach in Low-Altitude Flight. Remote Sens. 2022, 14, 3869.

- Cai, C.; Chen, J.; Yan, Q.; Liu, F. A Multi-Robot Coverage Path Planning Method for Maritime Search and Rescue Using Multiple AUVs. Remote Sens. 2022, 15, 93.

- Shao, K.; Tang, Z.; Zhu, Y.; Li, N.; Zhao, D. A survey of deep reinforcement learning in video games. arXiv 2019, arXiv:1912.10944.

- Juliá, M.; Gil, A.; Reinoso, O. A comparison of path planning strategies for autonomous exploration and mapping of unknown environments. Auton. Robots 2012, 33, 427–444.

- Tai, L.; Liu, M. Mobile robots exploration through cnn-based reinforcement learning. Robot. Biomim. 2016, 3, 24.

- Dooraki, A.R.; Lee, D.J. Memory-based reinforcement learning algorithm for autonomous exploration in unknown environment. Int. J. Adv. Robot. Syst. 2018, 15, 1729881418775849.

- Gardner, J.R.; Kusner, M.J.; Xu, Z.E.; Weinberger, K.Q.; Cunningham, J.P. Bayesian optimization with inequality constraints. In Proceedings of the ICML, Beijing, China, 21–26 June 2014; pp. 937–945.

- Feng, J.; Zhang, Y.; Gao, S.; Wang, Z.; Wang, X.; Chen, B.; Liu, Y.; Zhou, C.; Zhao, Z. Statistical Analysis of SF Occurrence in Middle and Low Latitudes Using Bayesian Network Automatic Identification. Remote Sens. 2023, 15, 1108.

- Renardy, M.; Joslyn, L.R.; Millar, J.A.; Kirschner, D.E. To Sobol or not to Sobol? The effects of sampling schemes in systems biology applications. Math. Biosci. 2021, 337, 108593.

- Schulz, E.; Speekenbrink, M.; Krause, A. A tutorial on Gaussian process regression: Modelling, exploring, and exploiting functions. J. Math. Psychol. 2018, 85, 1–16.

- Croci, M.; Impollonia, G.; Meroni, M.; Amaducci, S. Dynamic Maize Yield Predictions Using Machine Learning on Multi-Source Data. Remote Sens. 2022, 15, 100.

- Deng, D.; Duan, R.; Liu, J.; Sheng, K.; Shimada, K. Robotic exploration of unknown 2d environment using a frontier-based automatic-differentiable information gain measure. In Proceedings of the 2020 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Boston, MA, USA, 6–9 July 2020; pp. 1497–1503.

- Shrestha, R.; Tian, F.-P.; Feng, W.; Tan, P.; Vaughan, R. Learned map prediction for enhanced mobile robot exploration. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 1197–1204.

- Zhelo, O.; Zhang, J.; Tai, L.; Liu, M.; Burgard, W. Curiosity-driven exploration for mapless navigation with deep reinforcement learning. arXiv 2018, arXiv:1804.00456.

- Shi, H.; Shi, L.; Xu, M.; Hwang, K.-S. End-to-end navigation strategy with deep reinforcement learning for mobile robots. IEEE Trans. Ind. Inform. 2019, 16, 2393–2402.

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1928–1937.

- Li, T.; Ho, D.; Li, C.; Zhu, D.; Wang, C.; Meng, M.Q.-H. Houseexpo: A large-scale 2d indoor layout dataset for learning-based algorithms on mobile robots. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5839–5846.

| Map Name | Resolution (pixels) |
|---|---|
| Train map 1 | 60 × 80 |
| Train map 2 | 60 × 80 |
| Train map 3 | 60 × 80 |
| Test map 1 | 60 × 80 |
| Test map 2 | 77 × 93 |
| Test map 3 | 95 × 95 |

| Hyperparameter | Value |
|---|---|
| Number of parallel environments | 3 |
| Number of minibatches | 30 |
| Number of episodes | 100,000 |
| Learning rate | 0.0001 |
| Learning rate decay policy | Polynomial decay |
| Optimization algorithm | Adam |
| Value loss coefficient | 0.5 |
| Entropy coefficient | 0.01 |
| Discount factor | 0.99 |
| Exploration region rate | 0.95 |
| Score proportion factor | 0.75 |
| Covariance smoothness coefficient | 2.5 |
| BO tradeoff coefficient | 5.0 |
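To show how the loss-related values in the table above typically interact, the following sketch combines n-step discounted returns with the usual actor-critic objective from Mnih et al. (policy loss plus a weighted value loss minus a weighted entropy bonus). It uses plain Python floats instead of a deep learning framework, and the function names and the averaging over rollout steps are illustrative assumptions rather than the authors' implementation.

```python
GAMMA = 0.99         # discount factor from the table
VALUE_COEF = 0.5     # value loss coefficient from the table
ENTROPY_COEF = 0.01  # entropy coefficient from the table

def discounted_returns(rewards, bootstrap_value, gamma=GAMMA):
    """n-step returns, bootstrapped from the critic's value of the last state."""
    returns = []
    running = bootstrap_value
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns

def a3c_loss(log_probs, values, entropies, rewards, bootstrap_value):
    """Scalar actor-critic loss averaged over the rollout steps.

    In a real implementation the advantage is treated as a constant
    (no gradient) inside the policy term.
    """
    returns = discounted_returns(rewards, bootstrap_value)
    policy_loss = 0.0
    value_loss = 0.0
    for log_prob, value, ret in zip(log_probs, values, returns):
        advantage = ret - value
        policy_loss += -log_prob * advantage
        value_loss += advantage ** 2
    total = policy_loss + VALUE_COEF * value_loss - ENTROPY_COEF * sum(entropies)
    return total / len(rewards)

# Hypothetical two-step rollout.
loss = a3c_loss(log_probs=[-1.2, -0.7], values=[0.5, 0.4],
                entropies=[1.0, 0.9], rewards=[1.0, 0.0],
                bootstrap_value=0.3)
```

The entropy term is subtracted so that minimizing the loss keeps the policy from collapsing onto a single action too early.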

| Layer | Hyperparameters |
|---|---|
| Conv1 | Output = 16, Kernel = 8, Stride = 4, Padding = Valid |
| Conv2 | Output = 32, Kernel = 4, Stride = 2, Padding = Valid |
| Conv3 | Output = 32, Kernel = 3, Stride = 2, Padding = Same |
| Conv4 | Output = 32, Kernel = 3, Stride = 2, Padding = Same |
| FC | Output size = 256 |
| LSTM | Output size = 256 |
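As a worked example of how the convolutional stack above shrinks its input, the sketch below traces the spatial size through the four layers. It assumes the common conventions that "Valid" padding gives floor((size - kernel) / stride) + 1 and "Same" padding gives ceil(size / stride), and it assumes a 60 × 80 input purely for illustration; the actual network input size is not stated in this text.

```python
import math

# (name, kernel, stride, padding) copied from the layer table.
LAYERS = [
    ("Conv1", 8, 4, "valid"),
    ("Conv2", 4, 2, "valid"),
    ("Conv3", 3, 2, "same"),
    ("Conv4", 3, 2, "same"),
]

def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of one convolution along one dimension."""
    if padding == "same":
        return math.ceil(size / stride)
    return (size - kernel) // stride + 1

def feature_map_shapes(height, width):
    """Trace (layer, height, width) through the convolutional stack."""
    shapes = []
    for name, kernel, stride, padding in LAYERS:
        height = conv_output_size(height, kernel, stride, padding)
        width = conv_output_size(width, kernel, stride, padding)
        shapes.append((name, height, width))
    return shapes

for name, h, w in feature_map_shapes(60, 80):
    print(f"{name}: {h} x {w}")
```

Under these assumptions the last feature map is 2 × 2 with 32 channels, so the fully connected layer would receive 128 flattened features.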

| Method | Average Path Length (Test Map 1) | Average Path Length (Test Map 2) | Average Path Length (Test Map 3) | Explored Region Rate (Test Map 1) | Explored Region Rate (Test Map 2) | Explored Region Rate (Test Map 3) | Exploration Efficiency (Test Map 1) | Exploration Efficiency (Test Map 2) | Exploration Efficiency (Test Map 3) |
|---|---|---|---|---|---|---|---|---|---|
| NF | 242.19 | 448.4 | 670.79 | 0.994 | 0.995 | 0.993 | 19.7 | 15.89 | 13.36 |
| MI | 344.86 | 633.07 | 809.82 | 0.98 | 0.976 | 0.97 | 13.64 | 11.04 | 10.81 |
| LS | 253.69 | 488.45 | 554.03 | 0.991 | 0.972 | 0.981 | 18.75 | 14.25 | 15.98 |
| GS | 216.61 | 381.47 | 595.76 | 0.963 | 0.961 | 0.975 | 21.34 | 18.04 | 14.77 |
| LAGS | 199.57 | 335.79 | 501.96 | 0.97 | 0.973 | 0.965 | 23.33 | 20.75 | 17.35 |
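The path-length figures in the results table above can be turned into relative improvements with a few lines of arithmetic. The sketch below, with a hypothetical helper name, finds the best baseline on each test map and computes how much shorter the LAGS path is relative to it; the numbers are copied from the table.

```python
# Average path lengths copied from the results table (Test Maps 1 to 3).
PATH_LENGTH = {
    "NF": [242.19, 448.40, 670.79],
    "MI": [344.86, 633.07, 809.82],
    "LS": [253.69, 488.45, 554.03],
    "GS": [216.61, 381.47, 595.76],
    "LAGS": [199.57, 335.79, 501.96],
}

def reduction_vs_best_baseline(table, method="LAGS"):
    """For each map, return (best baseline, relative path-length reduction)."""
    results = []
    for i in range(len(table[method])):
        baselines = {name: vals[i] for name, vals in table.items()
                     if name != method}
        best = min(baselines, key=baselines.get)
        gain = (baselines[best] - table[method][i]) / baselines[best]
        results.append((best, gain))
    return results

for map_id, (best, gain) in enumerate(
        reduction_vs_best_baseline(PATH_LENGTH), start=1):
    print(f"Test Map {map_id}: {gain:.1%} shorter than {best}")
```

On these numbers the reduction against the strongest baseline is roughly 8%, 12%, and 9% on Test Maps 1 to 3, with GS the best baseline on the first two maps and LS on the third.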


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Feng, A.; Xie, Y.; Sun, Y.; Wang, X.; Jiang, B.; Xiao, J. Efficient Autonomous Exploration and Mapping in Unknown Environments. Sensors 2023, 23, 4766. https://doi.org/10.3390/s23104766
