Anomaly Detection and Inter-Sensor Transfer Learning on Smart Manufacturing Datasets

Abstract

1. Introduction

- Anomaly Detection: We adapt two classes of time series prediction models for temporal anomaly detection in a smart manufacturing system. The goal is to detect anomalous readings collected from the deployed sensors. We test our models on four real-world datasets collected from manufacturing sensors (e.g., vibration data). We observe that the ML-based models outperform the classical models in the anomaly detection task.

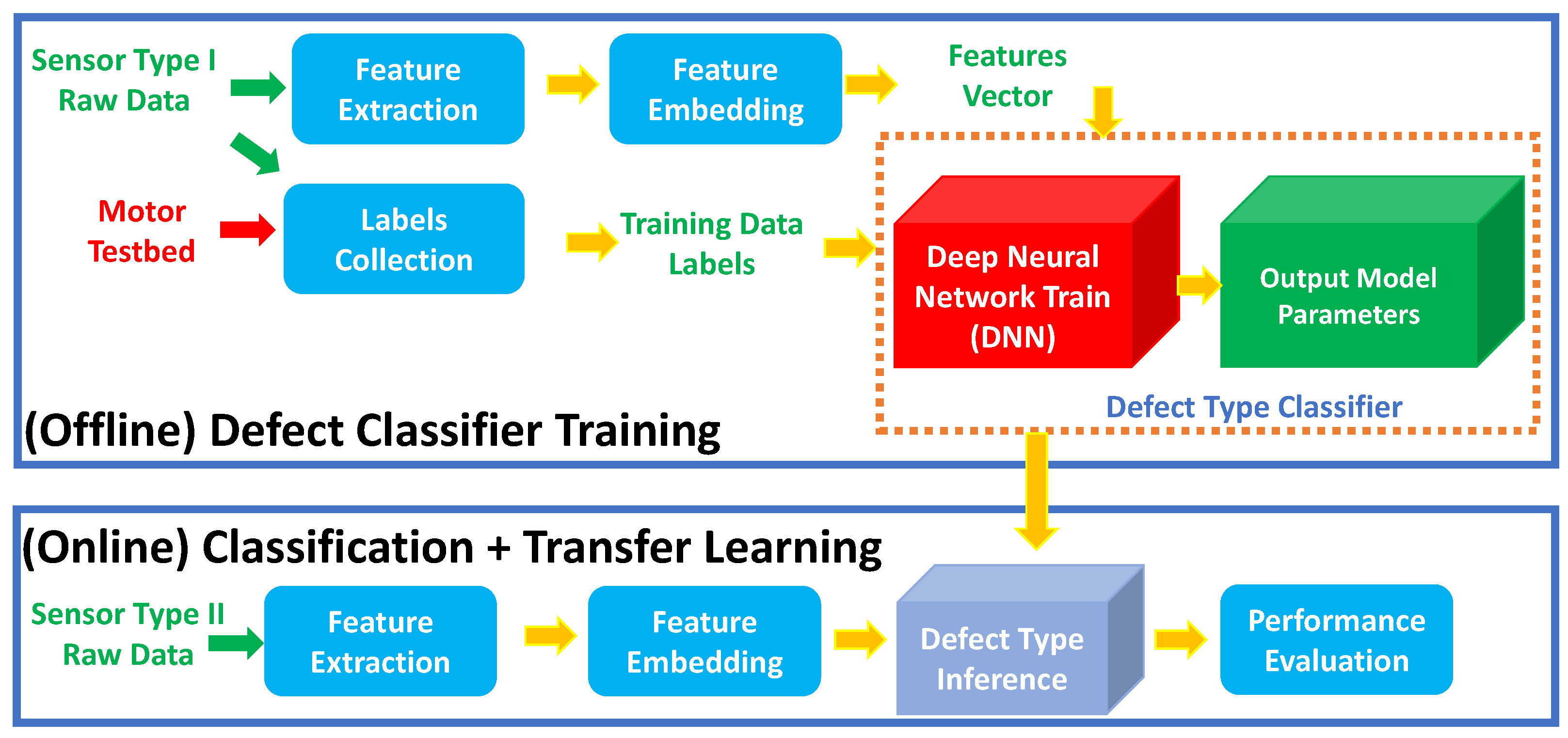

- Defect Type Classification: We detect the level of defect (i.e., normal operation, near-failure, failure) for the data at each RPM using deep learning (i.e., a deep neural network multi-class classifier), and we transfer the learned model across different instances of manufacturing sensors. We analyze the parameters that affect the performance of the prediction and classification models, such as the number of epochs, network size, prediction model, failure level, and sensor type.

- RPM Selection and Aggregation: We show that training at specific RPMs and testing under a variety of operating conditions gives better defect prediction accuracy. The takeaway is that carefully selecting training data by aggregating multiple predictive RPM values is beneficial.

- Benchmark Data: We release our database corpus (4 datasets) and code so that the community can use them for anomaly detection and defect type classification and build on them with new datasets and models. The database and code are available at https://drive.google.com/drive/u/2/folders/1QX3chnSTKO3PsEhi5kBdf9WwMBmOriJ8 (accessed on 12 December 2022). The dataset details are provided in Appendix A, the dataset collection process is described in Appendix B, the dataset usage is described in Appendix C, and the main code is presented in Appendix E. The corpus includes real failures from a pharmaceutical packaging manufacturer.

2. Related Work

2.1. Failure Detection Models

2.2. Learning Transfer

2.3. Datasets and Benchmarks for Anomaly Detection in Smart Manufacturing

3. Materials and Methods

3.1. Temporal Anomaly Detection

- Classical forecasting models: In this category, we included the Autoregressive Integrated Moving Average (ARIMA) model [24], Seasonal Naive [25] (in which each forecast equals the last observed value from the same season), Random Forest (RF) [26] (a tree ensemble that combines the predictions of many decision trees into a single model), and Auto-regression [45].

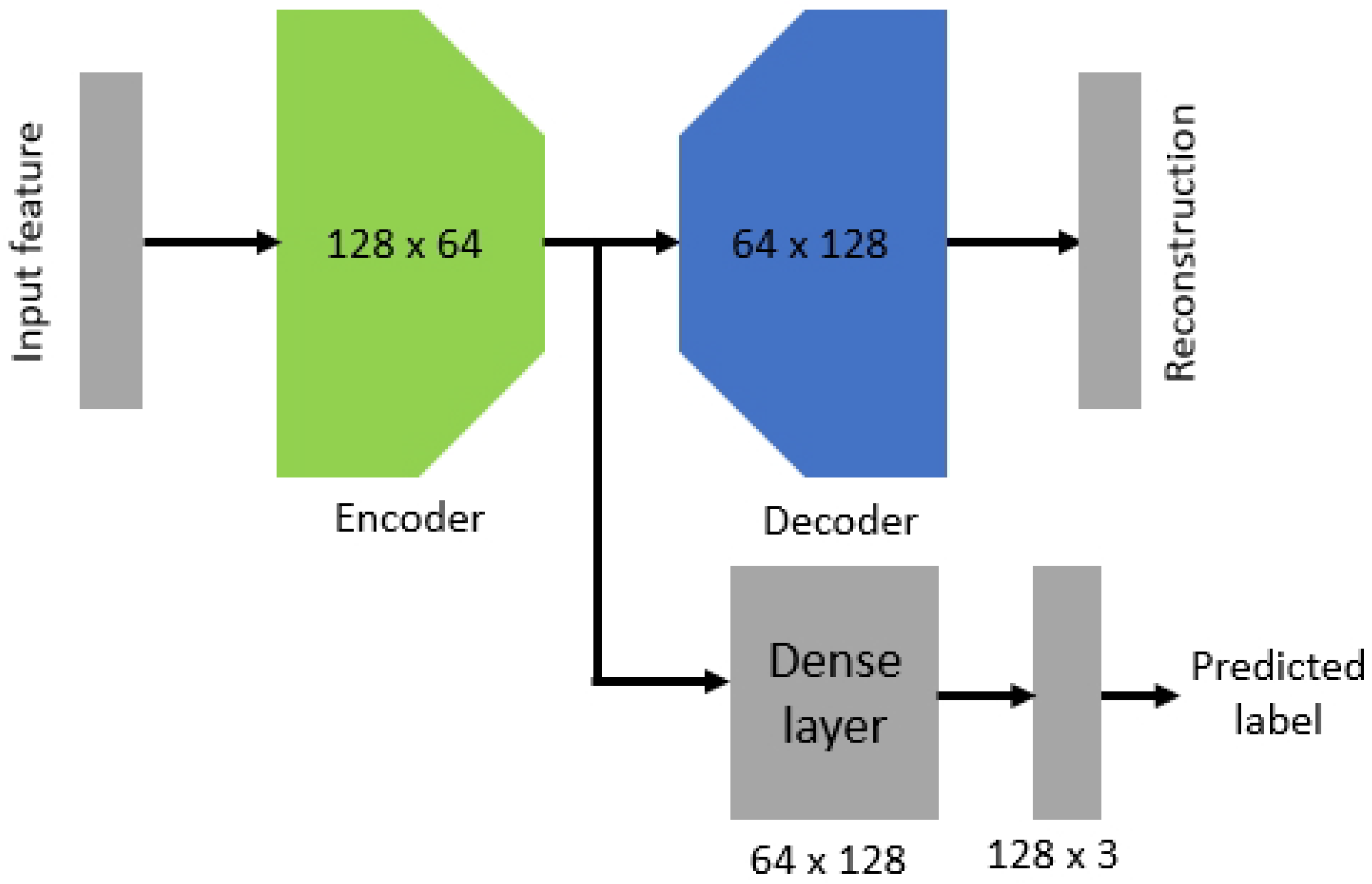

- ML-based forecasting models: We selected six popular time series forecasting models: Recurrent Neural Network (RNN) [46], LSTM [47] (which captures time dependencies better than RNN and has been used in different applications [48]), Deep Neural Network (DNN) [49], AutoEncoder [50], and the more recent DeepAR [29] and DeepFactors [51].
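To make the forecasting-based approach concrete, the sketch below implements the Seasonal Naive rule described above (each forecast equals the value from the same position in the previous season) and flags points whose forecast error is unusually large. The thresholding rule (mean plus `k` standard deviations of the absolute error), the function names, and the synthetic signal are illustrative assumptions; the text here does not specify how forecasts are turned into anomaly decisions.

```python
from statistics import mean, stdev


def seasonal_naive_forecast(series, season_length):
    """Forecast each point as the value observed one season earlier."""
    return [series[t - season_length] for t in range(season_length, len(series))]


def flag_anomalies(series, season_length, k=2.0):
    """Return indices whose absolute forecast error exceeds mean + k * std.

    The threshold rule is an illustrative assumption, not the paper's rule.
    """
    forecasts = seasonal_naive_forecast(series, season_length)
    indices = range(season_length, len(series))
    errors = [abs(series[t] - f) for t, f in zip(indices, forecasts)]
    threshold = mean(errors) + k * stdev(errors)
    return [t for t, e in zip(indices, errors) if e > threshold]


# Synthetic signal with period 4 and one injected spike at index 13.
signal = [1, 2, 3, 2] * 6
signal[13] = 10
print(flag_anomalies(signal, season_length=4))
```

On this toy signal the spike at index 13 is flagged, and so is index 17: the Seasonal Naive forecast for index 17 reuses the corrupted value from one season earlier, so a single outlier produces an echo. A learned forecaster would typically not be affected by a single outlier in quite this way.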

3.2. Transfer Learning across Sensor Types

4. Results

4.1. Anomaly Detection with Manufacturing Sensors

4.1.1. Deployment Details and Datasets Explanation

4.1.2. Results and Insights

4.2. Transfer Learning across Vibration Sensors

- Can we detect the operational state effectively (i.e., with high accuracy)?

- Can we transfer the learned model across the two different types of sensors?

4.2.1. DNN Model Results

4.2.2. Data-Augmentation Model Results

4.2.3. Effect of Variation of RPMs Results

4.2.4. Relaxation of the Classification Problem

4.3. Autoencoder for Anomaly Detection

5. Discussion

5.1. Comparative Analysis with Prior Related Work

5.2. Ethical Concerns

5.3. Transfer Learning under Different Features

5.4. Reproducibility

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Explaining Datasets: Highlights, and How Can It Be Read

Appendix A.1. Dataset Categories

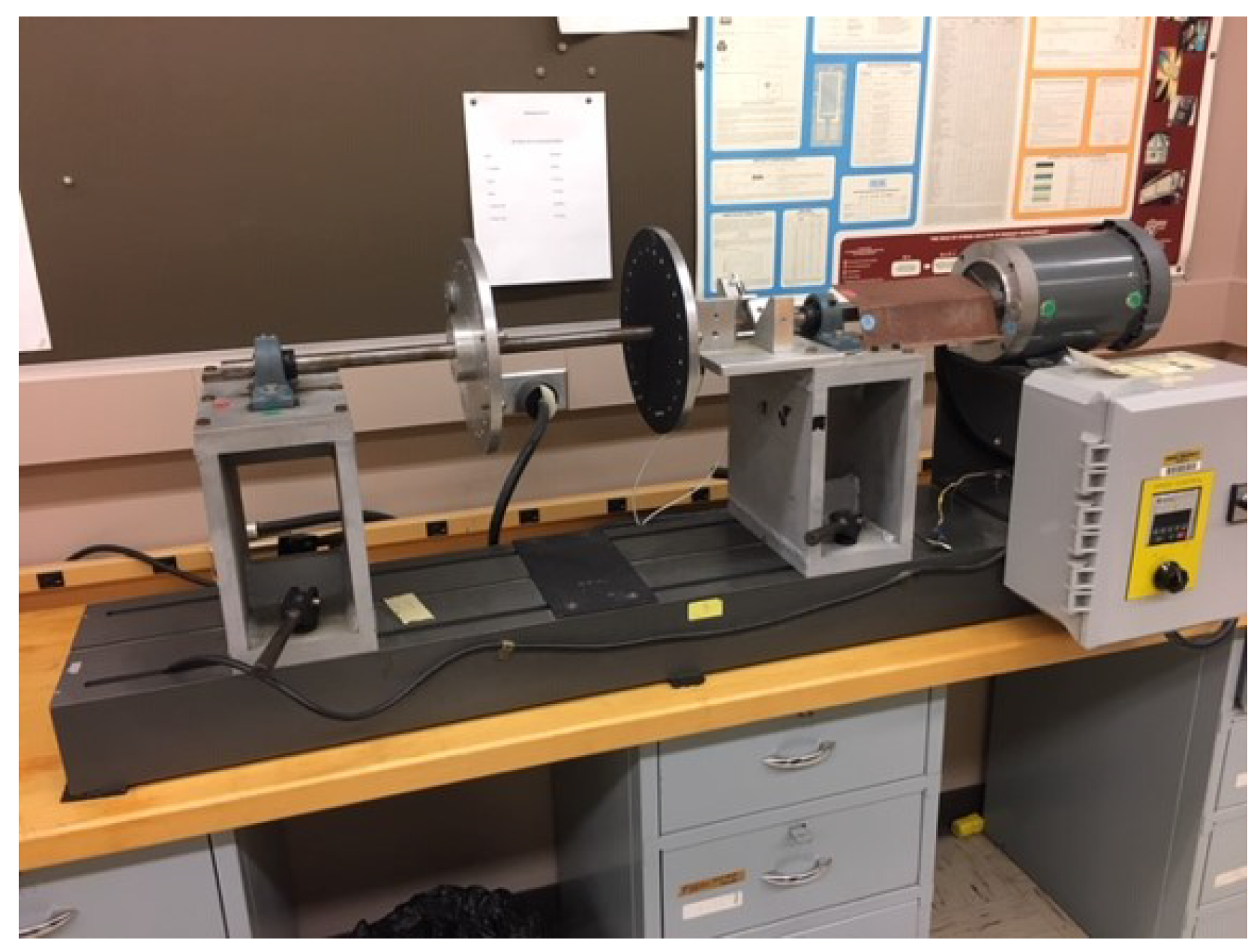

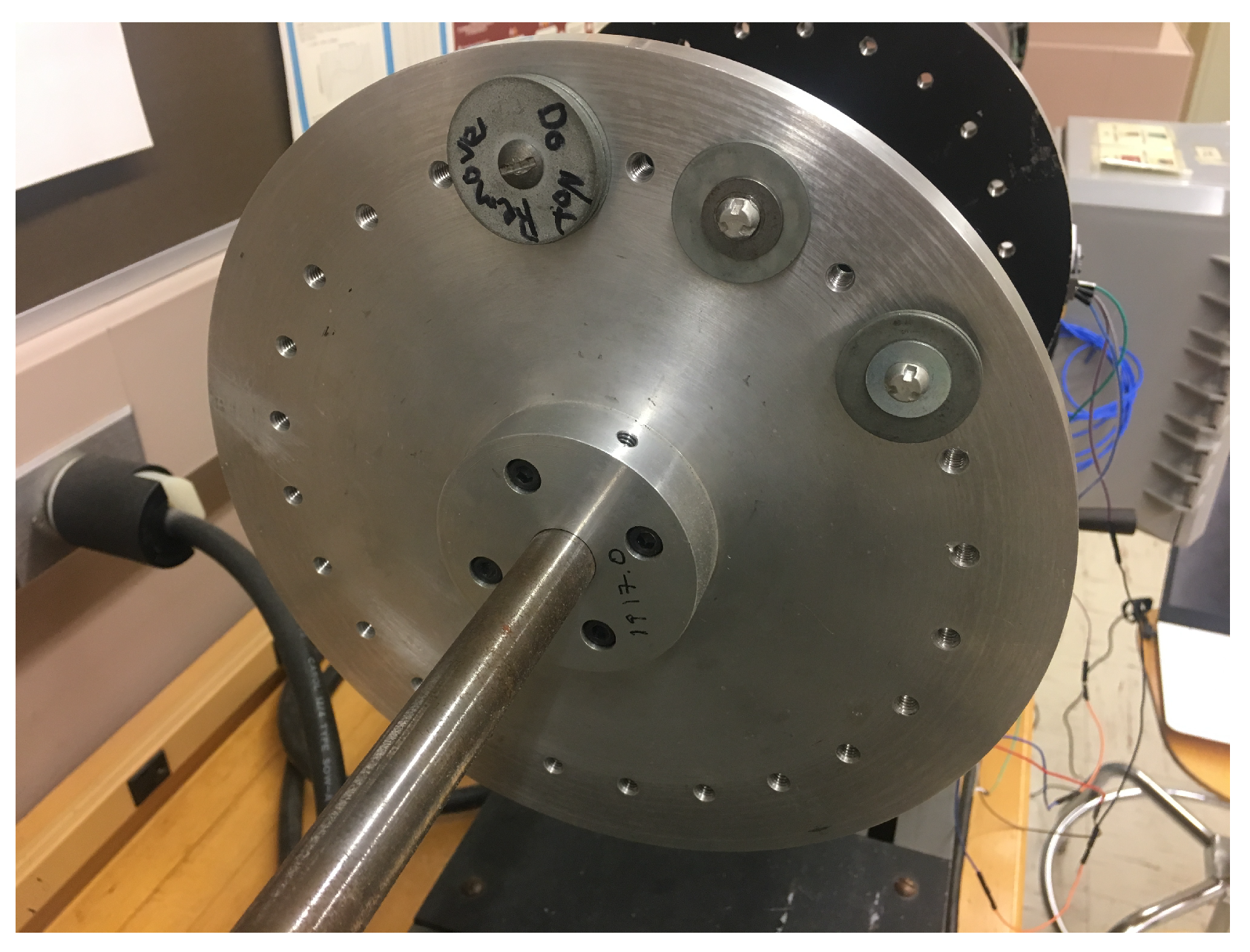

Appendix A.2. MEMS and Piezoelectric Datasets

Appendix A.3. Process Data

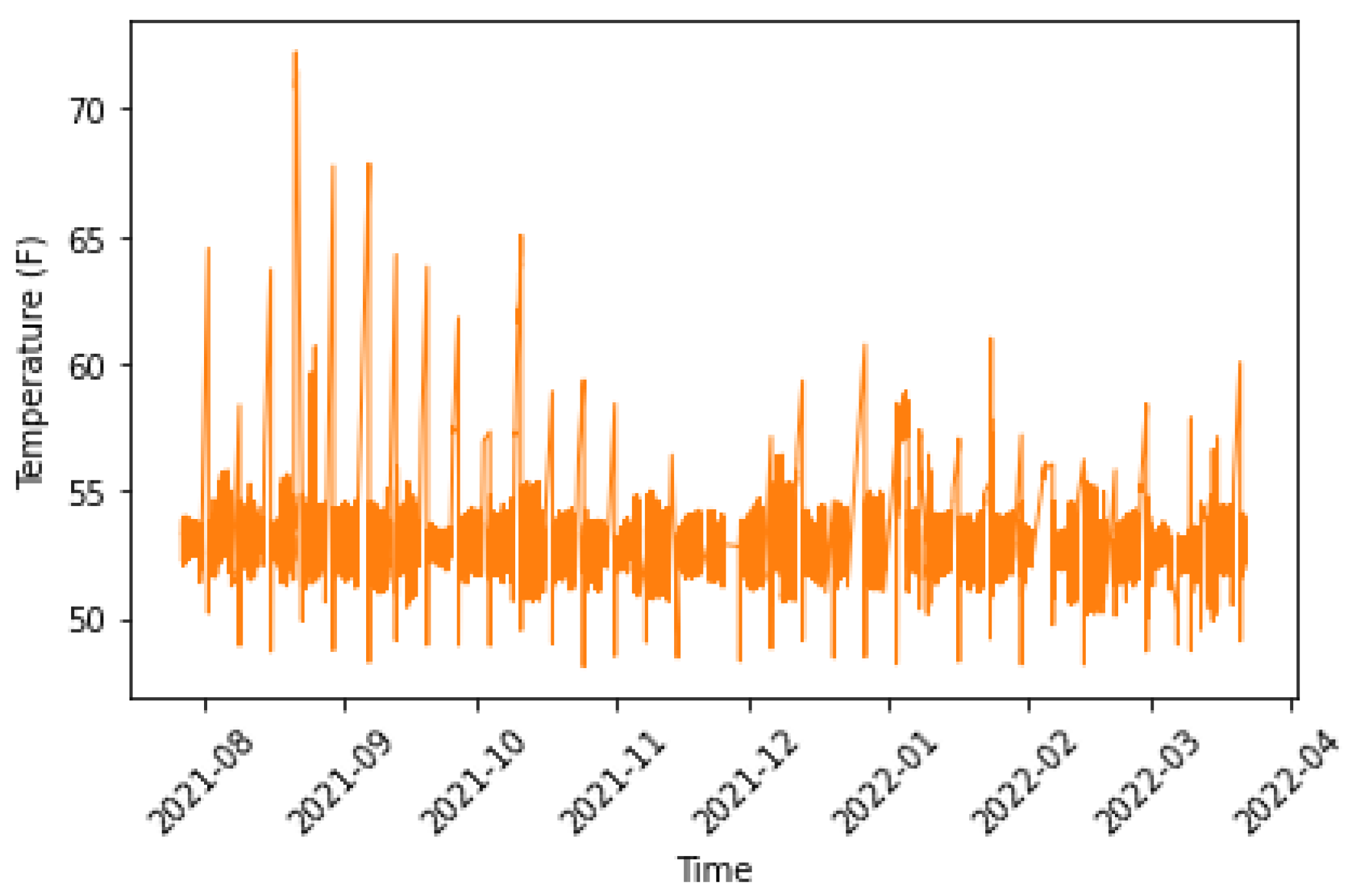

- Start date: 27 July 2021

- End date: 1 May 2022

- Measurement Columns: Air Pressure 1, Air Pressure 2, Chiller 1 Supply Tmp, Chiller 2 Supply Tmp, Outside Air Temp, Outside Humidity, and Outside Dewpoint.

- Measurement interval: 5 min (1 data point per 5 min)

- Description: We were also able to collect process-related data that can potentially indicate the condition of machine operation. We call this process data; it was collected with the Statistical Process Control (SPC) system. The measurements ran from August 2021 until May 2022.

Appendix A.4. Pharmaceutical Packaging

- Start date: 13 November 2021, some data loss between December and January.

- End date: May 2022

- Measurement location: Air Compressor, Chiller 1, Chiller 2, and Jomar moulding machine

- Sample rate for each axis: 3.2 kHz

- Measurement interval: 30 min

- Measurement duration: 1 s

- 1st line: date and time that the measurement started

- 2nd line: x-axis vibration data (3200 data points)

- 3rd line: y-axis vibration data (3200 data points)

- 4th line: z-axis vibration data (3200 data points)

- 5th line: time difference between each data point
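The five-line record layout above can be read with a few lines of code. The sketch below is a minimal parser under stated assumptions: it assumes each axis line is a comma-separated list of numbers and that a record is passed in as a list of five strings. The delimiter, the function name `parse_record`, and the returned dictionary keys are hypothetical, since the text does not specify the on-disk encoding. The expected count of 3200 points per axis follows from the 3.2 kHz sample rate and the 1 s measurement duration.

```python
SAMPLE_RATE_HZ = 3200   # 3.2 kHz per axis, as listed above
DURATION_S = 1          # 1 s measurement duration
EXPECTED_POINTS = SAMPLE_RATE_HZ * DURATION_S


def parse_record(lines, delimiter=","):
    """Parse one five-line vibration record into a dictionary.

    Assumes (hypothetically) delimiter-separated numeric values per axis line.
    """
    if len(lines) != 5:
        raise ValueError(f"expected 5 lines per record, got {len(lines)}")

    record = {"timestamp": lines[0].strip()}
    for axis, line in zip(("x", "y", "z"), lines[1:4]):
        values = [float(v) for v in line.strip().split(delimiter) if v.strip()]
        if len(values) != EXPECTED_POINTS:
            raise ValueError(
                f"{axis}-axis has {len(values)} points, expected {EXPECTED_POINTS}"
            )
        record[axis] = values

    # The 5th line (time difference between data points) is kept as raw text,
    # because its exact format is not described here.
    record["dt_raw"] = lines[4].strip()
    return record
```

A caller would split a file into groups of five lines and pass each group to `parse_record`; records with a wrong number of samples raise an error, which may help surface the data-loss period mentioned above.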

Appendix A.5. Instances Nature

Appendix B. Dataset Collection

Appendix B.1. Hardware Cost

Appendix B.2. Required Software Resources

Appendix B.3. Required Computational Resources

Appendix C. Uses of Datasets

Appendix C.1. Main Dataset Usage

Appendix C.2. Other Data Usage

Appendix C.3. Hosting and Maintenance

Appendix C.4. Motivation for Data Release

Appendix D. Extended Evaluation

Appendix D.1. Anomaly Detection Using Autoencoder Classification

Appendix D.2. Experimental Setup and Results

Appendix E. Benchmarks: Models, Hyper-Parameter Selection, and Code Details

Appendix E.1. Models and Hyper-Parameter Selection

Appendix E.2. Code Details and Prerequisites

Appendix E.2.1. Anomaly Detection Code

- Arima.py: Training and testing ARIMA forecasting model

- LSTM.py: Training and testing LSTM forecasting model

- AutoEncoder.py: Training and testing AutoEncoder forecasting model

- DNN.py: Training and testing DNN forecasting model

- RNN.py: Training and testing RNN forecasting model

- GluonTModels.py: Contains the remaining forecasting models along with the required functions and hyper-parameters.

- Autoencoder_classifier.py: Training and testing a classifier-based model for anomaly detection (see Appendix D.1).

Appendix E.2.2. Transfer Learning Code

- Transfer_Learning_Pre_processing.py: This code prepares the CSV files used for training defect type classifiers and transfer learning.

- Transfer_Learning_Train.py: This code trains and tests the defect type classifier, including feature encoding, defect classifier building, testing, and performance reporting.

Appendix E.2.3. Running the Codes

Appendix E.2.4. Prerequisites (Libraries and Modules)

- numpy, scipy, pandas, keras, statsmodels, and sklearn

- GluonTS, matplotlib, simplejson, re, random, and csv
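Before running the scripts, it can help to check which of the listed third-party packages are importable in the current environment. The sketch below is one way to do that using only the standard library; the helper name `missing_modules` is hypothetical, and the import names (for example `gluonts` for GluonTS and `sklearn` for scikit-learn) are the usual ones but should be checked against the installed versions. The modules `re`, `random`, and `csv` ship with Python and are not checked.

```python
import importlib.util

# Third-party prerequisites listed above, by their usual import names.
REQUIRED_MODULES = [
    "numpy", "scipy", "pandas", "keras", "statsmodels",
    "sklearn", "gluonts", "matplotlib", "simplejson",
]


def missing_modules(names):
    """Return the subset of top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All listed prerequisites are importable.")
```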

References

- Thomas, T.E.; Koo, J.; Chaterji, S.; Bagchi, S. Minerva: A reinforcement learning-based technique for optimal scheduling and bottleneck detection in distributed factory operations. In Proceedings of the 2018 10th International Conference on Communication Systems & Networks (COMSNETS), Bengaluru, India, 3–7 January 2018; pp. 129–136.

- Scime, L.; Beuth, J. Anomaly detection and classification in a laser powder bed additive manufacturing process using a trained computer vision algorithm. Addit. Manuf. 2018, 19, 114–126.

- Wang, J.; Ma, Y.; Zhang, L.; Gao, R.X.; Wu, D. Deep learning for smart manufacturing: Methods and applications. J. Manuf. Syst. 2018, 48, 144–156.

- Ukil, A.; Bandyopadhyay, S.; Puri, C.; Pal, A. IoT Healthcare Analytics: The Importance of Anomaly Detection. In Proceedings of the 2016 IEEE 30th International Conference on Advanced Information Networking and Applications (AINA), Crans-Montana, Switzerland, 23–25 March 2016; pp. 994–997.

- Shahzad, G.; Yang, H.; Ahmad, A.W.; Lee, C. Energy-efficient intelligent street lighting system using traffic-adaptive control. IEEE Sens. J. 2016, 16, 5397–5405.

- Mitchell, R.; Chen, I.R. A survey of intrusion detection techniques for cyber-physical systems. ACM Comput. Surv. (CSUR) 2014, 46, 55.

- Chatterjee, B.; Seo, D.H.; Chakraborty, S.; Avlani, S.; Jiang, X.; Zhang, H.; Abdallah, M.; Raghunathan, N.; Mousoulis, C.; Shakouri, A.; et al. Context-Aware Collaborative Intelligence with Spatio-Temporal In-Sensor-Analytics for Efficient Communication in a Large-Area IoT Testbed. IEEE Internet Things J. 2020.

- Chandola, V.; Banerjee, A.; Kumar, V. Anomaly detection: A survey. ACM Comput. Surv. (CSUR) 2009, 41, 15.

- Sabahi, F.; Movaghar, A. Intrusion detection: A survey. In Proceedings of the 2008 Third International Conference on Systems and Networks Communications, Sliema, Malta, 26–31 October 2008; pp. 23–26.

- Bowler, A.L.; Bakalis, S.; Watson, N.J. Monitoring Mixing Processes Using Ultrasonic Sensors and Machine Learning. Sensors 2020, 20, 1813.

- Lopez, F.; Saez, M.; Shao, Y.; Balta, E.C.; Moyne, J.; Mao, Z.M.; Barton, K.; Tilbury, D. Categorization of anomalies in smart manufacturing systems to support the selection of detection mechanisms. IEEE Robot. Autom. Lett. 2017, 2, 1885–1892.

- Susto, G.A.; Terzi, M.; Beghi, A. Anomaly detection approaches for semiconductor manufacturing. Procedia Manuf. 2017, 11, 2018–2024.

- Leahy, K.; Hu, R.L.; Konstantakopoulos, I.C.; Spanos, C.J.; Agogino, A.M. Diagnosing wind turbine faults using machine learning techniques applied to operational data. In Proceedings of the 2016 IEEE International Conference on Prognostics and Health Management (ICPHM), Ottawa, ON, Canada, 20–22 June 2016; pp. 1–8.

- Francis, J.; Bian, L. Deep Learning for Distortion Prediction in Laser-Based Additive Manufacturing using Big Data. Manuf. Lett. 2019, 20, 10–14.

- Lee, W.J.; Mendis, G.P.; Sutherland, J.W. Development of an Intelligent Tool Condition Monitoring System to Identify Manufacturing Tradeoffs and Optimal Machining Conditions. In Proceedings of the 16th Global Conference on Sustainable Manufacturing. Procedia Manufacturing, Buenos Aires, Argentina, 4–6 December 2019; Volume 33, pp. 256–263.

- Garcia, M.C.; Sanz-Bobi, M.A.; Del Pico, J. SIMAP: Intelligent System for Predictive Maintenance: Application to the health condition monitoring of a windturbine gearbox. Comput. Ind. 2006, 57, 552–568.

- Kroll, B.; Schaffranek, D.; Schriegel, S.; Niggemann, O. System modeling based on machine learning for anomaly detection and predictive maintenance in industrial plants. In Proceedings of the 2014 IEEE Emerging Technology and Factory Automation (ETFA), Barcelona, Spain, 16–19 September 2014; pp. 1–7.

- De Benedetti, M.; Leonardi, F.; Messina, F.; Santoro, C.; Vasilakos, A. Anomaly detection and predictive maintenance for photovoltaic systems. Neurocomputing 2018, 310, 59–68.

- Kusiak, A. Smart manufacturing. Int. J. Prod. Res. 2018, 56, 508–517.

- Lee, W.J.; Mendis, G.P.; Triebe, M.J.; Sutherland, J.W. Monitoring of a machining process using kernel principal component analysis and kernel density estimation. J. Intell. Manuf. 2019.

- Alfeo, A.L.; Cimino, M.G.; Manco, G.; Ritacco, E.; Vaglini, G. Using an autoencoder in the design of an anomaly detector for smart manufacturing. Pattern Recognit. Lett. 2020, 136, 272–278.

- Marjani, M.; Nasaruddin, F.; Gani, A.; Karim, A.; Hashem, I.A.T.; Siddiqa, A.; Yaqoob, I. Big IoT data analytics: Architecture, opportunities, and open research challenges. IEEE Access 2017, 5, 5247–5261.

- He, J.; Wei, J.; Chen, K.; Tang, Z.; Zhou, Y.; Zhang, Y. Multitier fog computing with large-scale iot data analytics for smart cities. IEEE Internet Things J. 2017, 5, 677–686.

- Contreras, J.; Espinola, R.; Nogales, F.J.; Conejo, A.J. ARIMA models to predict next-day electricity prices. IEEE Trans. Power Syst. 2003, 18, 1014–1020.

- Montero-Manso, P.; Athanasopoulos, G.; Hyndman, R.J.; Talagala, T.S. FFORMA: Feature-based forecast model averaging. Int. J. Forecast. 2020, 36, 86–92.

- Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Ballard, D.H. Modular learning in neural networks. AAAI 1987, 647, 279–284.

- Salinas, D.; Flunkert, V.; Gasthaus, J.; Januschowski, T. DeepAR: Probabilistic forecasting with autoregressive recurrent networks. Int. J. Forecast. 2020, 36, 1181–1191.

- Jeff, R. Considerations For Accelerometer Selection When Monitoring Complex Machinery Vibration. Available online: http://www.vibration.org/Presentation/IMI%20Sensors%20Accel%20Presentation%200116.pdf (accessed on 30 September 2019).

- Albarbar, A.; Mekid, S.; Starr, A.; Pietruszkiewicz, R. Suitability of MEMS Accelerometers for Condition Monitoring: An experimental study. Sensors 2008, 8, 784–799.

- Teng, S.H.G.; Ho, S.Y.M. Failure mode and effects analysis. Int. J. Qual. Reliab. Manag. 1996, 13, 8–26.

- Lee, W.J.; Wu, H.; Huang, A.; Sutherland, J.W. Learning via acceleration spectrograms of a DC motor system with application to condition monitoring. Int. J. Adv. Manuf. Technol. 2019.

- Xiang, E.W.; Cao, B.; Hu, D.H.; Yang, Q. Bridging domains using world wide knowledge for transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 770–783.

- Qiu, J.; Wu, Q.; Ding, G.; Xu, Y.; Feng, S. A survey of machine learning for big data processing. EURASIP J. Adv. Signal Process. 2016, 2016, 67.

- Torrey, L.; Shavlik, J. Transfer learning. In Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques; IGI Global: Hershey, PA, USA, 2010; pp. 242–264.

- Abdallah, M.; Rossi, R.; Mahadik, K.; Kim, S.; Zhao, H.; Bagchi, S. AutoForecast: Automatic Time-Series Forecasting Model Selection. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management (CIKM ’22), Atlanta, GA, USA, 17–21 October 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 5–14.

- Ling, X.; Dai, W.; Xue, G.R.; Yang, Q.; Yu, Y. Spectral domain-transfer learning. In Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Las Vegas, NV, USA, 24–27 August 2008; ACM: New York, NY, USA, 2008; pp. 488–496.

- Chen, T.; Goodfellow, I.; Shlens, J. Net2net: Accelerating learning via knowledge transfer. arXiv 2015, arXiv:1511.05641.

- Wang, S.; Xi, N. Calibration of Haptic Sensors Using Transfer Learning. IEEE Sens. J. 2021, 21, 2003–2012.

- Udmale, S.S.; Singh, S.K.; Singh, R.; Sangaiah, A.K. Multi-Fault Bearing Classification Using Sensors and ConvNet-Based Transfer Learning Approach. IEEE Sens. J. 2020, 20, 1433–1444.

- Koizumi, Y.; Kawaguchi, Y.; Imoto, K.; Nakamura, T.; Nikaido, Y.; Tanabe, R.; Purohit, H.; Suefusa, K.; Endo, T.; Yasuda, M.; et al. Description and discussion on DCASE2020 challenge task2: Unsupervised anomalous sound detection for machine condition monitoring. arXiv 2020, arXiv:2006.05822.

- Hsieh, R.J.; Chou, J.; Ho, C.H. Unsupervised Online Anomaly Detection on Multivariate Sensing Time Series Data for Smart Manufacturing. In Proceedings of the 2019 IEEE 12th Conference on Service-Oriented Computing and Applications (SOCA), Kaohsiung, Taiwan, 18–21 November 2019; pp. 90–97.

- Fathy, Y.; Jaber, M.; Brintrup, A. Learning With Imbalanced Data in Smart Manufacturing: A Comparative Analysis. IEEE Access 2021, 9, 2734–2757.

- Lewis, R.; Reinsel, G.C. Prediction of multivariate time series by autoregressive model fitting. J. Multivar. Anal. 1985, 16, 393–411.

- Tokgöz, A.; Ünal, G. A RNN based time series approach for forecasting turkish electricity load. In Proceedings of the 2018 26th Signal Processing and Communications Applications Conference (SIU), Izmir, Turkey, 2–5 May 2018; pp. 1–4.

- Gers, F.; Schmidhuber, J.; Cummins, F. Learning to forget: Continual prediction with LSTM. In Proceedings of the 1999 Ninth International Conference on Artificial Neural Networks ICANN 99, (Conf. Publ. No. 470), Edinburgh, UK, 7–10 September 1999; Volume 2, pp. 850–855.

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610.

- Sen, R.; Yu, H.F.; Dhillon, I.S. Think globally, act locally: A deep neural network approach to high-dimensional time series forecasting. Adv. Neural Inf. Process. Syst. 2019, 32, 1–10.

- Chollet, F. Building autoencoders in keras. Keras Blog 2016, 14. Available online: https://blog.keras.io/building-autoencoders-in-keras.html (accessed on 30 September 2019).

- Wang, Y.; Smola, A.; Maddix, D.; Gasthaus, J.; Foster, D.; Januschowski, T. Deep factors for forecasting. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 10–15 June 2019; pp. 6607–6617.

- Yuan, Y.; Shi, Y.; Li, C.; Kim, J.; Cai, W.; Han, Z.; Feng, D.D. DeepGene: An advanced cancer type classifier based on deep learning and somatic point mutations. BMC Bioinform. 2016, 17, 476.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Al-Marri, M.; Raafat, H.; Abdallah, M.; Abdou, S.; Rashwan, M. Computer Aided Qur’an Pronunciation using DNN. J. Intell. Fuzzy Syst. 2018, 34, 3257–3271.

- Elaraby, M.S.; Abdallah, M.; Abdou, S.; Rashwan, M. A Deep Neural Networks (DNN) Based Models for a Computer Aided Pronunciation Learning System. In Proceedings of the International Conference on Speech and Computer, Budapest, Hungary, 23–27 August 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 51–58.

- Campos, G.O.; Zimek, A.; Sander, J.; Campello, R.J.; Micenková, B.; Schubert, E.; Assent, I.; Houle, M.E. On the evaluation of unsupervised outlier detection: Measures, datasets, and an empirical study. Data Min. Knowl. Discov. 2016, 30, 891–927.

- Gulli, A.; Pal, S. Deep Learning with Keras; Packt Publishing: Birmingham, UK, 2017.

- Wang, K.I.K.; Zhou, X.; Liang, W.; Yan, Z.; She, J. Federated Transfer Learning Based Cross-Domain Prediction for Smart Manufacturing. IEEE Trans. Ind. Inform. 2022, 18, 4088–4096.

- Abdallah, M.; Lee, W.J.; Raghunathan, N.; Mousoulis, C.; Sutherland, J.W.; Bagchi, S. Anomaly detection through transfer learning in agriculture and manufacturing IoT systems. arXiv 2021, arXiv:2102.05814.

| Dataset | Seasonal Naive | DeepAR | Deep Factors | Random Forest | AutoEncoder | Auto-Regression | ARIMA | LSTM | RNN | DNN |
|---|---|---|---|---|---|---|---|---|---|---|
| Piezoelectric | | | | | | | | | | |
| MEMS | | | | | | | | | | |
| Process Data | | | | | | | | | | |
| Pharmac. Packaging | | | | | | | | | | |

| Metric | Seasonal Naive | DeepAR | Deep Factors | Random Forest | AutoEncoder | Auto-Regression | ARIMA | LSTM | RNN | DNN |
|---|---|---|---|---|---|---|---|---|---|---|
| Precision | | | | | | | | | | |
| Recall | | | | | | | | | | |
| F-1 Score | | | | | | | | | | |
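For reference, the three metrics in the table above can be computed from binary anomaly labels as follows. This is a minimal sketch using the standard definitions (precision = TP / (TP + FP), recall = TP / (TP + FN), F-1 = harmonic mean of the two); the function name and the toy labels are illustrative assumptions and are not taken from the released code or results.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F-1 for binary labels (1 = anomaly)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    # Guard against division by zero when a class is never predicted/present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1


# Toy example: 3 true positives, 1 false positive, 1 false negative.
y_true = [0, 0, 1, 1, 1, 0, 1, 0]
y_pred = [0, 1, 1, 1, 0, 0, 1, 0]
print(precision_recall_f1(y_true, y_pred))  # (0.75, 0.75, 0.75)
```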

| Model Type | Sensor Tested | Accuracy (%) |
|---|---|---|
| DNN-R | MEMS | 64.23% |
| DNN-TL | MEMS | 71.71% |
| DNN-R | Piezoelectric | 80.01% |

| Tuning Factor | Accuracy | Tuning Factor | Accuracy |
|---|---|---|---|
| None | 58.00% | Feature Selection | 64.08% |
| Feature-normalization | 68.66% | Neurons per layer (50–80–100) | 69.41% |
| Number of Hidden layers (2–3) | 70.32% | Number of Epochs (50–100) | 70.75% |
| Batch Size (50–100) | 71.71% | | |

| | (a) Data Augmentation | | | (b) No Data Augmentation | | |
|---|---|---|---|---|---|---|
| | Normal | Near-Failure | Failure | Normal | Near-Failure | Failure |
| Normal | | | | | | |
| Near-failure | | | | | | |
| Failure | | | | | | |

| Trained RPM | RPM-100 | RPM-200 | RPM-300 | RPM-400 | RPM-500 | RPM-600 | Average (%) |
|---|---|---|---|---|---|---|---|
| RPM-100 | 68.80% | 66.64% | 65.83% | 73.61% | 67.29% | 42.90% | 64.18% |
| RPM-200 | 63.54% | 73.71% | 58.11% | 74.67% | 67.68% | 45.93% | 63.94% |
| RPM-300 | 57.99% | 55.00% | 95.20% | 66.09% | 71.32% | 45.14% | 65.12% |
| RPM-400 | 66.37% | 69.68% | 54.79% | 87.38% | 69.52% | 32.62% | 63.39% |
| RPM-500 | 65.37% | 64.94% | 80.12% | 80.20% | 75.61% | 42.59% | 68.06% |
| RPM-600 | 49.16% | 51.12% | 63.44% | 44.23% | 55.02% | 75.16% | 56.35% |
| Augmented-data model | 67.94% | 71.31% | 62.61% | 80.06% | 69.06% | 65.88% | 69.48% |
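The comparison in the table above can be reproduced with a short script. The sketch below copies the per-RPM test accuracies from the table, recomputes each row's mean, and also reports each row's worst-case accuracy, which is one way to see why aggregating RPMs helps. The variable and function names are illustrative, and the worst-case view is an added perspective rather than a metric reported in the text.

```python
# Test accuracy (%) per training configuration; columns are test RPM 100..600.
accuracy = {
    "RPM-100": [68.80, 66.64, 65.83, 73.61, 67.29, 42.90],
    "RPM-200": [63.54, 73.71, 58.11, 74.67, 67.68, 45.93],
    "RPM-300": [57.99, 55.00, 95.20, 66.09, 71.32, 45.14],
    "RPM-400": [66.37, 69.68, 54.79, 87.38, 69.52, 32.62],
    "RPM-500": [65.37, 64.94, 80.12, 80.20, 75.61, 42.59],
    "RPM-600": [49.16, 51.12, 63.44, 44.23, 55.02, 75.16],
    "Augmented-data model": [67.94, 71.31, 62.61, 80.06, 69.06, 65.88],
}


def row_mean(values):
    """Arithmetic mean of one row of test accuracies."""
    return sum(values) / len(values)


averages = {name: row_mean(row) for name, row in accuracy.items()}
worst_case = {name: min(row) for name, row in accuracy.items()}
best_on_average = max(averages, key=averages.get)

for name in accuracy:
    print(f"{name:22s} mean={averages[name]:.2f}  worst={worst_case[name]:.2f}")
print("Best on average:", best_on_average)  # Augmented-data model
```

Recomputing the means from the listed cells matches the Average column to within rounding for most rows; for RPM-500 the listed cells average to about 68.14, slightly above the tabulated 68.06. The augmented-data model has both the highest mean and by far the highest worst-case accuracy (62.61%, against 32.62% to 45.93% for the single-RPM models).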

| Trained RPM | RPM-300 | RPM-320 | RPM-340 | RPM-360 | RPM-380 | Average (%) |
|---|---|---|---|---|---|---|
| RPM-300 | 100% | 65.17% | 58.17% | 51.50% | 50.63% | 65.09% |
| RPM-320 | 99.75% | 100% | 97.58% | 78.63% | 68.17% | 88.82% |
| RPM-340 | 96.60% | 99.27% | 100% | 97.33% | 82.43% | 95.12% |
| RPM-360 | 96.60% | 99.27% | 97.33% | 99.67% | 84.43% | 95.46% |
| RPM-380 | 61.05% | 87.75% | 96.93% | 99.83% | 99.77% | 89.07% |

| Framework | Sensor Failure Detection | Transfer Learning Support | Benchmarking ML Models | Defect Type Classification | Different RPM Aggregation | Dataset Release (Opensource) |
|---|---|---|---|---|---|---|
| Alfeo [21] | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ |
| Lee [20] | ✓ | ✗ | ✓ | ✗ | ✗ | ✗ |
| Teng [32] | ✓ | ✗ | ✗ | ✓ | ✗ | ✗ |
| Wang [40] | ✗ | ✓ | ✓ | ✗ | ✗ | ✗ |
| Udmale [41] | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ |
| Fathi [42] | ✓ | ✗ | ✓ | ✗ | ✗ | ✓ |
| Lewis [43] | ✓ | ✗ | ✗ | ✗ | ✗ | ✓ |
| Kevin [58] | ✗ | ✓ | ✗ | ✗ | ✗ | ✓ |
| Ours | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abdallah, M.; Joung, B.-G.; Lee, W.J.; Mousoulis, C.; Raghunathan, N.; Shakouri, A.; Sutherland, J.W.; Bagchi, S. Anomaly Detection and Inter-Sensor Transfer Learning on Smart Manufacturing Datasets. Sensors 2023, 23, 486. https://doi.org/10.3390/s23010486
