A Speech Enhancement Algorithm for Speech Reconstruction Based on Laser Speckle Images

Abstract

1. Introduction

- The concept of a frequency response in long-distance speech reconstruction based on laser speckle images is proposed.

- We design a speech enhancement algorithm that reduces the influence of the frequency response and thereby improves the accuracy of the reconstructed speech signals.

2. Methodology

2.1. Digital Image Correlation Method

2.2. Speech Enhancement Algorithm

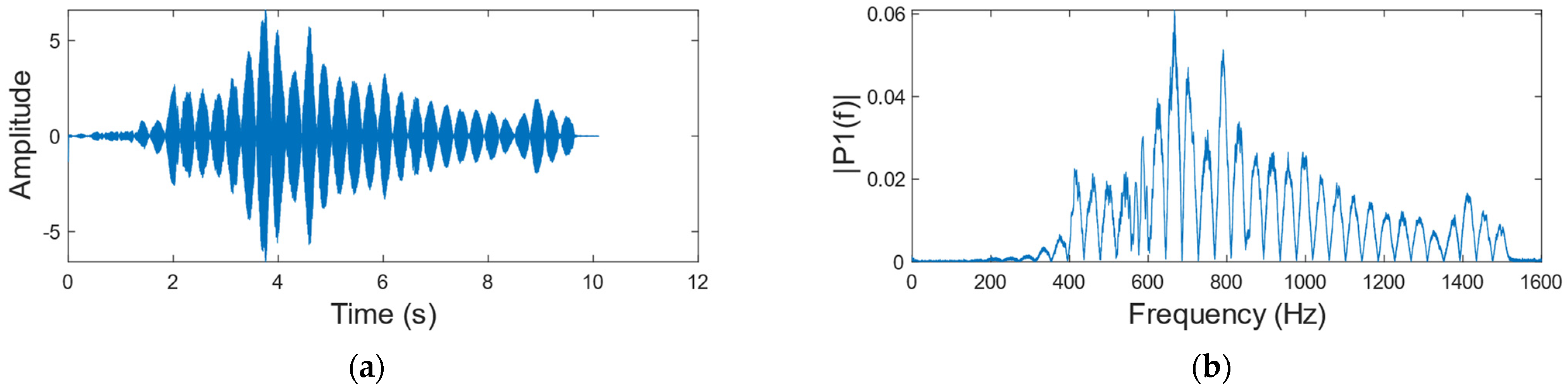

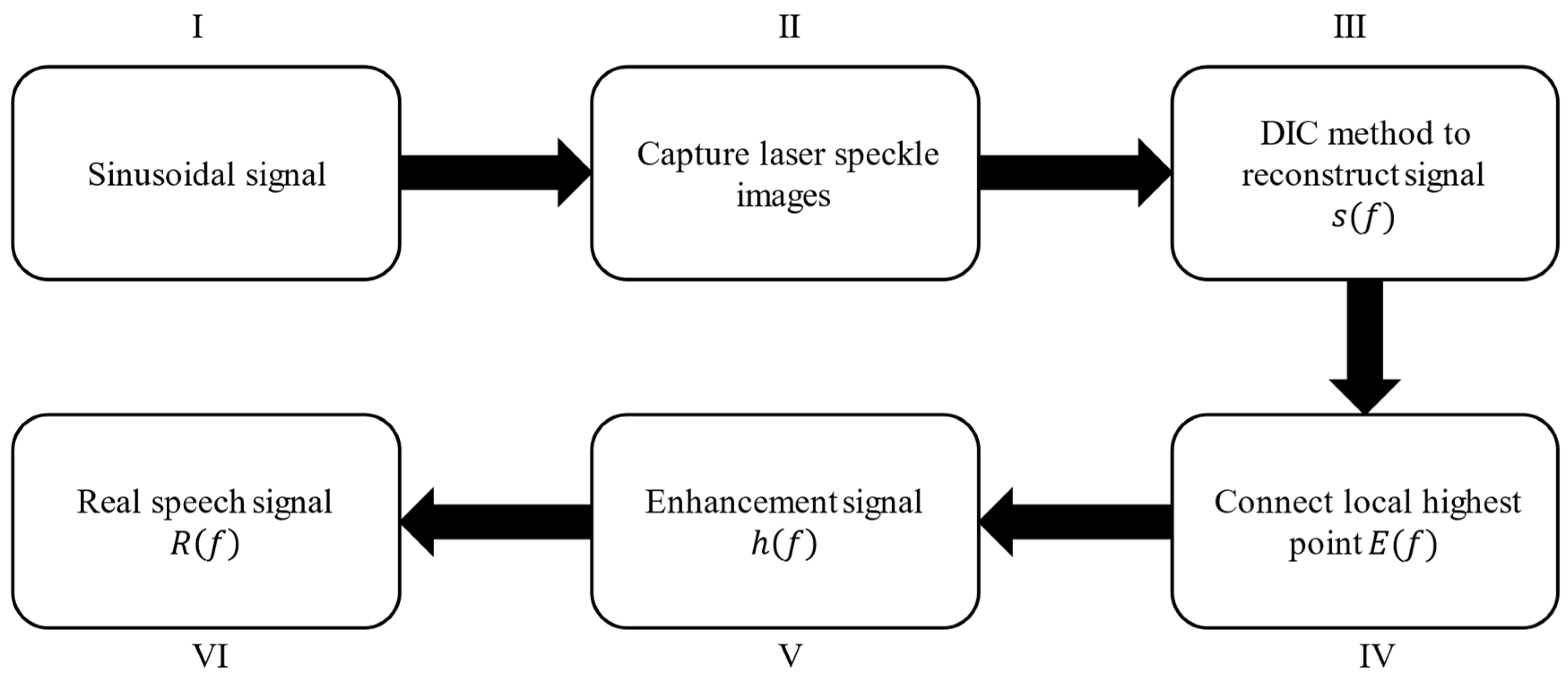

- Sinusoidal signal: Table 1 shows the common frequency range of speech. Accordingly, the source signal is a sinusoid whose frequency varies from 80 to 1600 Hz while its amplitude remains constant at 1.

- Capture laser speckle images: Use the high-speed camera to collect the corresponding laser speckle images.

- DIC method: Speech signals are reconstructed from the speckle images using the DIC method described in Section 2.1. This yields the discrete frequency-domain sequence of the reconstructed sinusoidal signal.

- Connect local highest points: Connect the local highest points of the amplitude signal in the reconstructed speech spectrum to obtain its envelope signal.

- Enhancement signal: Equation (8) gives the formula for the enhancement signal. Note that at some frequencies the envelope signal is very small, which makes the enhancement signal very large. When the enhancement signal is greater than 1000, set it to 1.

- Real speech domain: Multiply the discrete frequency-domain sequence of the reconstructed real speech by the enhancement signal to obtain the discrete frequency-domain sequence of the enhanced speech. In accordance with the frequency range of speech, we process the speech in the 80–1400 Hz band.
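
The steps above can be sketched in Python as follows. This is an illustrative sketch only, not the authors' implementation. Equation (8) is not reproduced in this section, so the reciprocal form of the enhancement signal is an assumption, as are the normalization of the envelope to a peak of 1, the linear interpolation between local maxima, the choice to leave bins outside 80–1400 Hz unchanged, and all function names.

```python
import numpy as np

def spectrum_envelope(magnitude):
    """Connect the local maxima of a magnitude spectrum by linear interpolation."""
    mag = np.asarray(magnitude, dtype=float)
    interior = np.arange(1, mag.size - 1)
    # A bin is a local maximum if it exceeds its left neighbour
    # and is at least as large as its right neighbour.
    is_peak = (mag[1:-1] > mag[:-2]) & (mag[1:-1] >= mag[2:])
    # Include both end bins so the envelope is defined on the whole axis.
    knots = np.concatenate(([0], interior[is_peak], [mag.size - 1]))
    return np.interp(np.arange(mag.size), knots, mag[knots])

def enhancement_signal(envelope, limit=1000.0):
    """ASSUMED form: reciprocal of the envelope, reset to 1 where it exceeds `limit`."""
    env = np.asarray(envelope, dtype=float)
    with np.errstate(divide="ignore"):
        gain = 1.0 / env
    gain[gain > limit] = 1.0  # rule from the text: values above 1000 are set to 1
    return gain

def enhance_speech(speech, sweep_reconstructed, fs_hz, band_hz=(80.0, 1400.0)):
    """Apply the sweep-derived enhancement signal to reconstructed speech."""
    n = len(speech)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs_hz)
    sweep_freqs = np.fft.rfftfreq(len(sweep_reconstructed), d=1.0 / fs_hz)

    # Envelope of the reconstructed sweep spectrum (ASSUMPTION: normalized to peak 1).
    env = spectrum_envelope(np.abs(np.fft.rfft(sweep_reconstructed)))
    env = env / env.max()

    # Resample the envelope onto the frequency grid of the speech signal.
    gain = enhancement_signal(np.interp(freqs, sweep_freqs, env))

    # ASSUMPTION: bins outside the processed band are left unchanged.
    in_band = (freqs >= band_hz[0]) & (freqs <= band_hz[1])
    gain = np.where(in_band, gain, 1.0)

    return np.fft.irfft(np.fft.rfft(speech) * gain, n=n)
```

With a perfectly flat measured response the enhancement signal is 1 everywhere and the speech passes through unchanged; dips in the envelope are boosted in proportion to their depth, up to the cutoff of 1000.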

3. Experimental Setup

- A high-speed camera (MVCAM AI-030U815M) with a maximum frame rate of 3200 frames per second (fps);

- A He-Ne laser (detailed parameters are shown in Table 2);

- A fiber laser (detailed parameters are shown in Table 3);

- A machine vision experiment frame with a fine-tuning camera clip and a universal clip;

- A personal computer (PC) with a Universal Serial Bus 3.0 (USB 3.0) interface.
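
As a quick sanity check on the camera specification, the sketch below computes the Nyquist limit implied by the 3200 fps maximum frame rate, and the average frame rate implied by a few of the image counts and acquisition times reported in the dataset tables. The function names are hypothetical, and because the tabulated acquisition times are rounded to 0.1 s, the resulting rates are only approximate.

```python
CAMERA_MAX_FPS = 3200  # maximum frame rate stated for the high-speed camera

def nyquist_hz(frame_rate_fps):
    """Highest vibration frequency representable at a given frame rate."""
    return frame_rate_fps / 2.0

def effective_fps(num_images, acquisition_time_s):
    """Average frame rate implied by an image count and a recording time."""
    return num_images / acquisition_time_s

# (label, number of speckle images, acquisition time in s), taken from the dataset tables
RECORDINGS = [
    ("He-Ne laser", 16213, 5.1),
    ("Fiber laser", 18975, 5.9),
    ("Carton", 32344, 10.1),
    ("A4 paper", 35952, 11.2),
]

if __name__ == "__main__":
    print(f"Nyquist limit at {CAMERA_MAX_FPS} fps: {nyquist_hz(CAMERA_MAX_FPS):.0f} Hz")
    for label, n_images, seconds in RECORDINGS:
        print(f"{label}: about {effective_fps(n_images, seconds):.0f} fps")
```

The 1600 Hz Nyquist limit at 3200 fps coincides with the upper end of the 80 to 1600 Hz sinusoidal sweep used as the benchmark signal.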

4. Experiment Datasets and Evaluation Metrics

4.1. Data Collection

4.1.1. Sinusoidal Datasets

4.1.2. Two Laser Datasets

4.1.3. Five Vibration Object Datasets

4.2. Evaluation Metrics

5. Results

5.1. Performance on Sinusoidal Datasets

5.2. Performance on Two Laser Datasets

5.3. Performance on Five Vibration Object Datasets

5.4. Discussions

- (1) Loudspeaker performance: To provide a benchmark for the frequency response, we use as the source audio a sinusoidal signal whose frequency varies from 80 to 1600 Hz and whose amplitude remains constant at 1. However, the sinusoidal signal actually played by the loudspeaker contains errors. These errors are smaller when the loudspeaker performs better.

- (2) Environmental noise and platform vibration: In the experiment, we find that environmental noise and vibration of the experimental platform seriously interfere with the sinusoidal signal reconstruction and thereby affect the frequency spectrum correlation coefficient between the original sinusoidal signal and the reconstructed signal. The reconstructed sinusoidal signal contains environmental noise and platform vibration, whereas the original signal is clean and free of such interference, so the correlation coefficient between the two is low. Therefore, a quiet environment and a stable experimental platform are conducive to verifying the experimental results.
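
The effect described in point (2) can be illustrated with a small simulation. The sketch below assumes that the frequency spectrum correlation coefficient is the Pearson correlation between the magnitude spectra of the two signals, and that the benchmark sinusoid is a linear sweep. Neither definition is given in this section, and the sampling rate, noise levels, and function names are hypothetical.

```python
import numpy as np

def linear_sweep(f_start_hz, f_end_hz, duration_s, fs_hz):
    """Unit-amplitude sinusoid whose frequency rises linearly over time."""
    t = np.arange(int(round(duration_s * fs_hz))) / fs_hz
    sweep_rate = (f_end_hz - f_start_hz) / duration_s
    # The phase is the integral of the instantaneous frequency.
    phase = 2.0 * np.pi * (f_start_hz * t + 0.5 * sweep_rate * t**2)
    return np.sin(phase)

def spectrum_correlation(original, reconstructed):
    """ASSUMED metric: Pearson correlation of the two magnitude spectra.

    Both signals must have the same length.
    """
    a = np.abs(np.fft.rfft(original))
    b = np.abs(np.fft.rfft(reconstructed))
    return float(np.corrcoef(a, b)[0, 1])

if __name__ == "__main__":
    fs = 8000  # hypothetical audio sampling rate, above twice 1600 Hz
    clean = linear_sweep(80.0, 1600.0, 1.0, fs)
    rng = np.random.default_rng(0)
    for noise_std in (0.0, 0.5, 2.0):
        # Additive white noise stands in for environmental noise and vibration.
        noisy = clean + noise_std * rng.standard_normal(clean.size)
        print(noise_std, round(spectrum_correlation(clean, noisy), 4))
```

In this toy setting the coefficient equals 1 for the clean sweep and falls once broadband noise is added, which is consistent with the observation that a quiet environment and a stable platform help verification.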

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Table 1. Common frequency range of speech.

| Sound Area | Male (Hz) | Female (Hz) |
|---|---|---|
| Bass | 82–392 | 82–392 |
| Midrange | 123–493 | 123–493 |
| Treble | 164–698 | 220–1100 |
| Fundamental frequency range | 64–523 | 160–1200 |

Table 2. Parameters of the He-Ne laser.

| Parameter | Value | Unit |
|---|---|---|
| Wavelength | 632.8 | nm |
| Operating current | 4–6 | mA |
| Rated voltage | 220 ± 22 | V |
| Rated frequency | 50 | Hz |
| Rated input power | <20 | W |

Table 3. Parameters of the fiber laser.

| Parameter | Value | Unit |
|---|---|---|
| Center wavelength | 635 | nm |
| Continuous output power | 60 | mW |
| Operating voltage | 2.55 | V |
| Threshold current | 70 | mA |
| Operating current | 190 | mA |

| Vibration Object | Number of Speckle Images | Acquisition Time (s) |
|---|---|---|
| Carton | 32,344 | 10.1 |
| A4 paper | 35,952 | 11.2 |
| Plastic cup | 31,941 | 10.0 |
| Paper cup | 32,970 | 10.3 |
| Leaf | 33,427 | 10.4 |

| Laser | Number of Speckle Images | Acquisition Time (s) |
|---|---|---|
| He-Ne laser | 16,213 | 5.1 |
| Fiber laser | 18,975 | 5.9 |

| Vibration Object | Number of Speckle Images | Acquisition Time (s) |
|---|---|---|
| Carton | 18,140 | 5.7 |
| A4 paper | 16,785 | 5.2 |
| Plastic cup | 19,443 | 6.1 |
| Paper cup | 19,247 | 6.0 |
| Leaf | 18,647 | 5.8 |

| Vibration Object | DIC | DIC + Speech Enhancement | Improvement |
|---|---|---|---|
| Carton | 0.2968 | 0.4548 | 53.23% |
| A4 paper | 0.3348 | 0.4561 | 36.23% |
| Plastic cup | 0.2593 | 0.4731 | 82.45% |
| Paper cup | 0.2844 | 0.4409 | 55.03% |
| Leaf | 0.2460 | 0.3963 | 61.10% |

| Laser | DIC | DIC + Speech Enhancement | Improvement |
|---|---|---|---|
| He-Ne laser | 0.3624 | 0.5668 | 56.40% |
| Fiber laser | 0.4155 | 0.5849 | 40.77% |

| Vibration Object | DIC | DIC + Speech Enhancement | Improvement |
|---|---|---|---|
| Carton | 0.4107 | 0.6234 | 51.79% |
| A4 paper | 0.6280 | 0.6478 | 3.15% |
| Plastic cup | 0.4244 | 0.4786 | 12.77% |
| Paper cup | 0.3883 | 0.5862 | 50.97% |
| Leaf | 0.4416 | 0.4735 | 7.22% |
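
The last column of the three result tables above appears to be the relative gain of the enhanced score over the DIC-only score, that is, (enhanced − DIC) / DIC. The short sketch below recomputes it from the tabulated values. The function name and the grouping of the rows are illustrative, and the formula is inferred from the numbers rather than stated in this section.

```python
def relative_improvement_percent(dic_score, enhanced_score):
    """Relative gain of the enhanced score over the DIC-only score, in percent."""
    return (enhanced_score - dic_score) / dic_score * 100.0

# (label, DIC, DIC + speech enhancement, reported improvement in %),
# copied from the three result tables in their original order.
RESULTS = [
    ("Carton", 0.2968, 0.4548, 53.23),
    ("A4 paper", 0.3348, 0.4561, 36.23),
    ("Plastic cup", 0.2593, 0.4731, 82.45),
    ("Paper cup", 0.2844, 0.4409, 55.03),
    ("Leaf", 0.2460, 0.3963, 61.10),
    ("He-Ne laser", 0.3624, 0.5668, 56.40),
    ("Fiber laser", 0.4155, 0.5849, 40.77),
    ("Carton", 0.4107, 0.6234, 51.79),
    ("A4 paper", 0.6280, 0.6478, 3.15),
    ("Plastic cup", 0.4244, 0.4786, 12.77),
    ("Paper cup", 0.3883, 0.5862, 50.97),
    ("Leaf", 0.4416, 0.4735, 7.22),
]

if __name__ == "__main__":
    for label, dic, enhanced, reported in RESULTS:
        computed = relative_improvement_percent(dic, enhanced)
        print(f"{label}: computed {computed:.2f}%, reported {reported:.2f}%")
```

Under this reading, the recomputed values agree with every reported percentage to two decimal places.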

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hao, X.; Zhu, D.; Wang, X.; Yang, L.; Zeng, H. A Speech Enhancement Algorithm for Speech Reconstruction Based on Laser Speckle Images. Sensors 2023, 23, 330. https://doi.org/10.3390/s23010330
