1. Introduction

The monitoring of rotating systems through sensors mounted at the bearings is part of the implementation of predictive maintenance strategies. The deployment of such approaches is motivated by the resulting benefits for industrial rotors in terms of cost reduction and increased production [1]. A primary concern of predictive maintenance and condition monitoring is the fault diagnosis of bearings, for two main reasons. First, durability assessments of rolling bearings are affected by significant uncertainties [2], given the complex interaction between a variety of parts. Second, it is well established that bearings are key nodes for retrieving information on the whole mechanical system [3]. In this context, the analysis of vibration signals represents one of the most informative tools for the assessment of machine conditions [4].

The past thirty years have seen increasingly rapid advances in this field thanks to the development of numerous signal processing techniques for fault identification. For instance, the literature on envelope analysis has developed considerably [2,4,5,6,7,8,9,10,11]; the technique has shown its effectiveness in benchmark cases [12] and is being implemented in industry for condition monitoring purposes. The outcomes of this kind of signal processing tool have the benefit of being highly interpretable, since the models’ assumptions are clearly identifiable. On the other hand, the outcomes may be user dependent: the extraction of diagnostic information from vibration signals is often affected by the assumptions of the identification models and by the user’s experience. For instance, the choice of an optimal demodulation band [13,14,15,16,17] involves an inherent degree of arbitrariness.

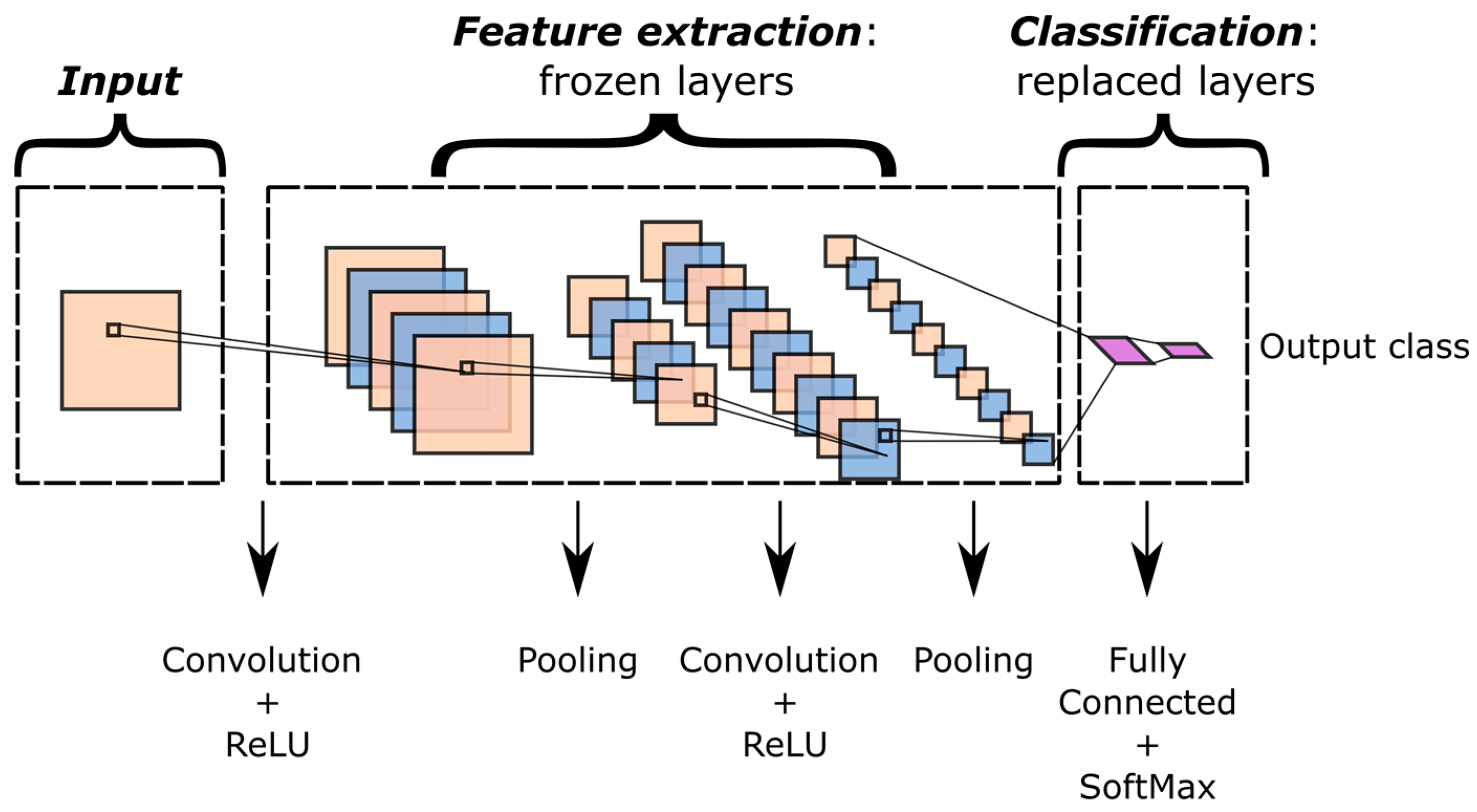

Conversely, data-driven models rely on Artificial Intelligence (AI) algorithms to automatically learn fault detection abilities from training data [18,19,20,21]. Although these structures can fulfil highly complex tasks, it is fairly challenging to figure out the rationale behind the models’ decisions [22,23]. The choice and the extraction of fault features can either be manual, as in the case of the Support Vector Machine (SVM) algorithm [24,25,26,27], or automated, as in the case of the application of deep learning to several disciplines [28,29,30]. In manual feature extraction, the features are selected by the user prior to training. Most of the literature concerning deep learning involves Convolutional Neural Networks (CNNs). For instance, Guo et al. trained a CNN using wavelet time-frequency images extracted from vibration signals [31], Wen et al. [32] developed a signal-to-image conversion method for training CNNs, and Islam et al. [33] fed a CNN with acoustic emission (AE) data.

One of the major drawbacks of neural networks is the amount of data needed for training, because the number of parameters to be trained is much higher than that of traditional machine learning algorithms. Some research areas benefit from million-sample datasets to accomplish challenging tasks, as in the case of ImageNet [34,35] for image recognition and Audio Set [36,37] for audio classification. At present, the datasets involving machine vibrations [12,38,39,40] do not contain such a large number of samples, and industrial environments rarely have a wide range of fault data. Additionally, vibration signals are tightly connected to a specific machine and its operating conditions. Therefore, the employment of large and high-potential networks could produce diagnosis models that overfit the training data, losing the ability to generalize diagnostic patterns to real test conditions. Moreover, the existing literature emphasizes issues in some benchmark datasets, such as the CWRU dataset [12,41], which may also be unsuitable for investigating industrial-size bearings.

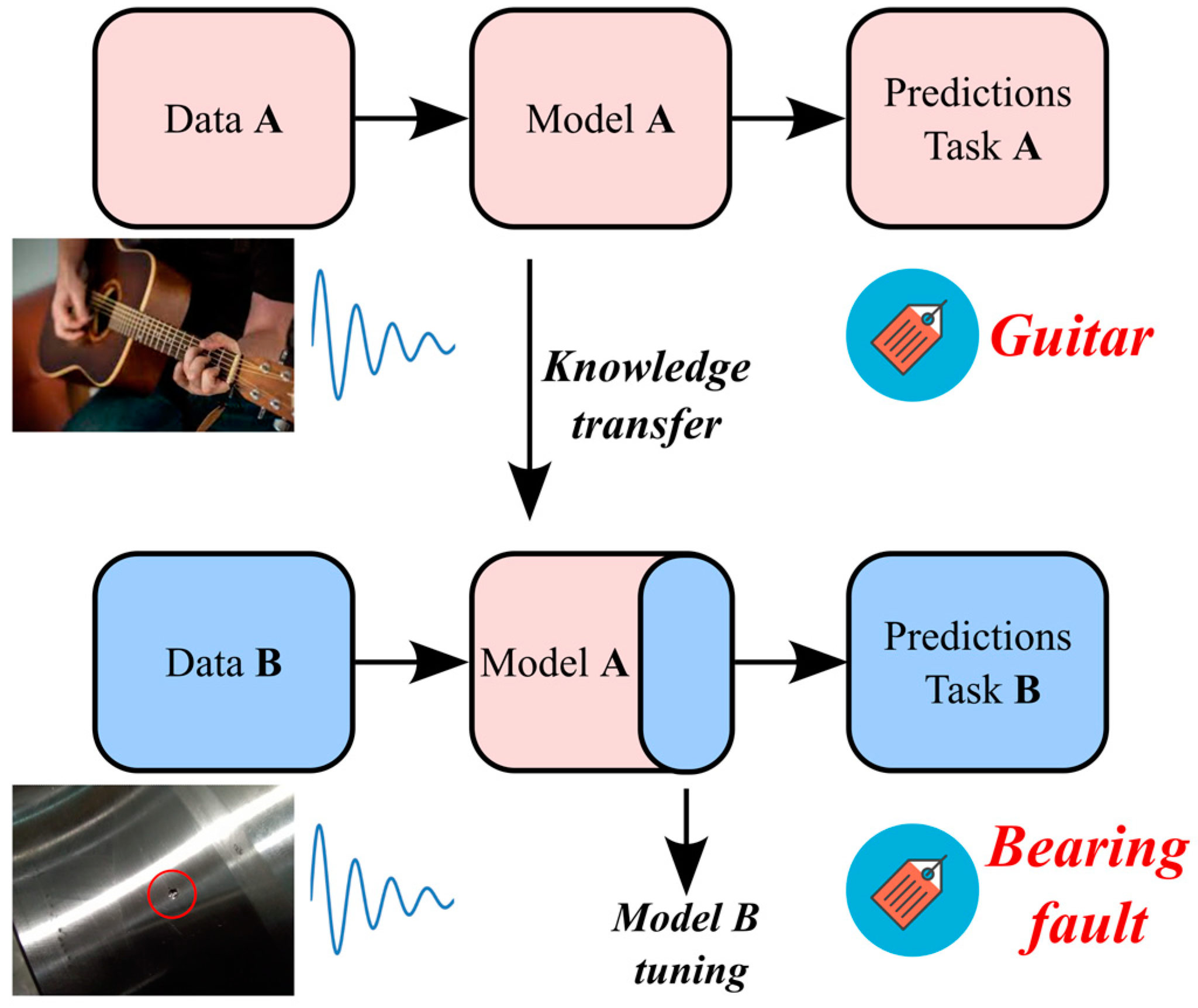

Recent evidence suggests the applicability of transfer learning (TL) [20,42,43] to tackle these issues in the field of machine fault diagnosis. TL aims to reduce data collection by transferring the classification knowledge of pre-trained models to new domains or new tasks. Zhang et al. [44] and Cao et al. [45] showed that knowledge transfer can be realized within the same machine whenever the user wishes to apply a trained model to new operating conditions. Compound faults were analyzed by Hasan et al. [46], whereas Wang et al. investigated remaining useful life (RUL) estimations [47]. Guo et al. [48] instead transferred a convolutional diagnosis model across different machines, whereas Chao et al. performed online domain adaptation [49]. Similarly, improved transfer learning with hybrid feature extraction was proposed by Yang et al. [50], and Han et al. [51] employed joint distribution adaptation. The Generative Adversarial Network (GAN) approach was investigated by Li et al. [52], Shao et al. [53] and Wang et al. [54]. Moreover, recent works have shown that fault diagnosis tasks can be fulfilled on benchmark datasets by employing AI frameworks originally designed for completely different tasks, such as image recognition [55] and audio classification [56]. However, to the best of the author’s knowledge, few studies have investigated the performance of the latter algorithms on industrial cases characterized by medium-sized bearings.

The first purpose of this investigation is to analyze a new dataset for bearing fault detection, specifically conceived for medium-sized bearings of industrial interest. Indeed, the well-known CWRU dataset presents several issues, which were discussed by Smith and Randall in 2015 [12] and by Hendriks et al. in 2022 [57]. The findings of [12,57] suggest that CWRU data may not be representative of bearing faults in general and, even more so, of the industrial case analyzed in this paper. Additionally, this study is motivated by the fact that, although CNNs and TL have been analyzed in depth in the literature on bearing fault diagnosis, the capabilities of CNNs pre-trained for audio classification have been investigated very little. Indeed, the literature has mostly focused on transferring knowledge from CNNs pre-trained for image recognition [55]. In the author’s view, CNNs for audio classification deserve further exploration since, unlike image recognition networks, these frameworks already contain highly specific knowledge for extracting spectrogram features.

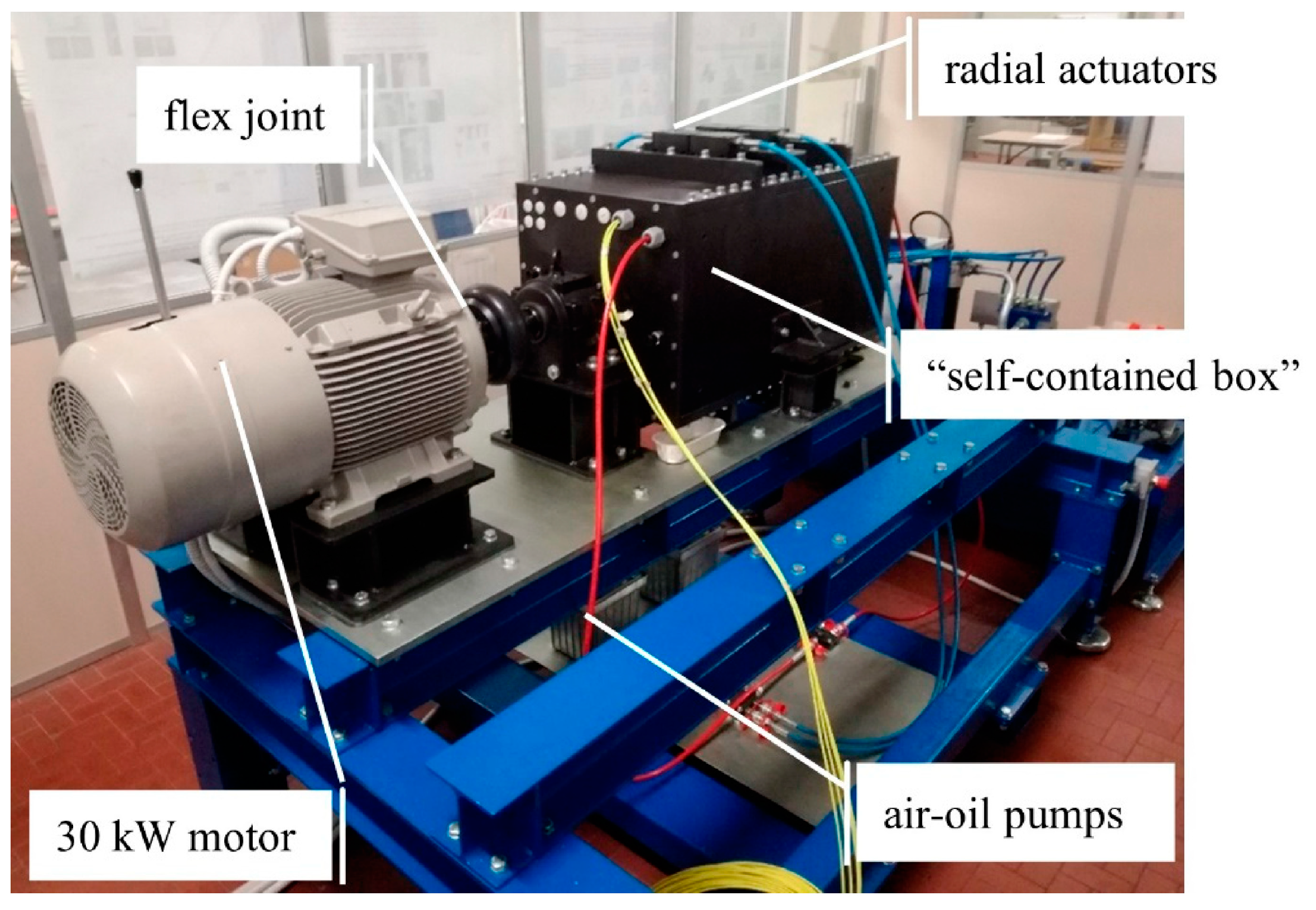

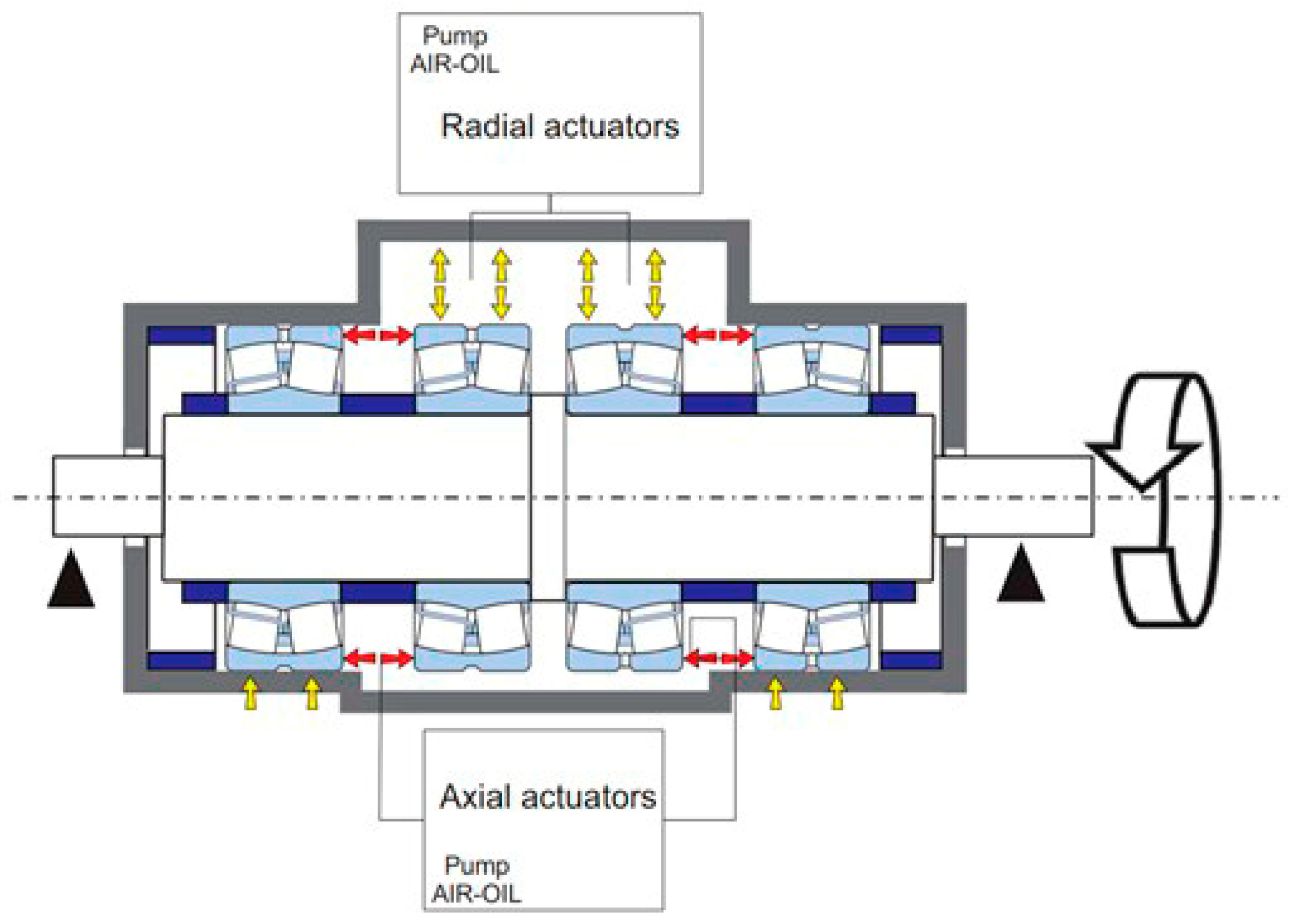

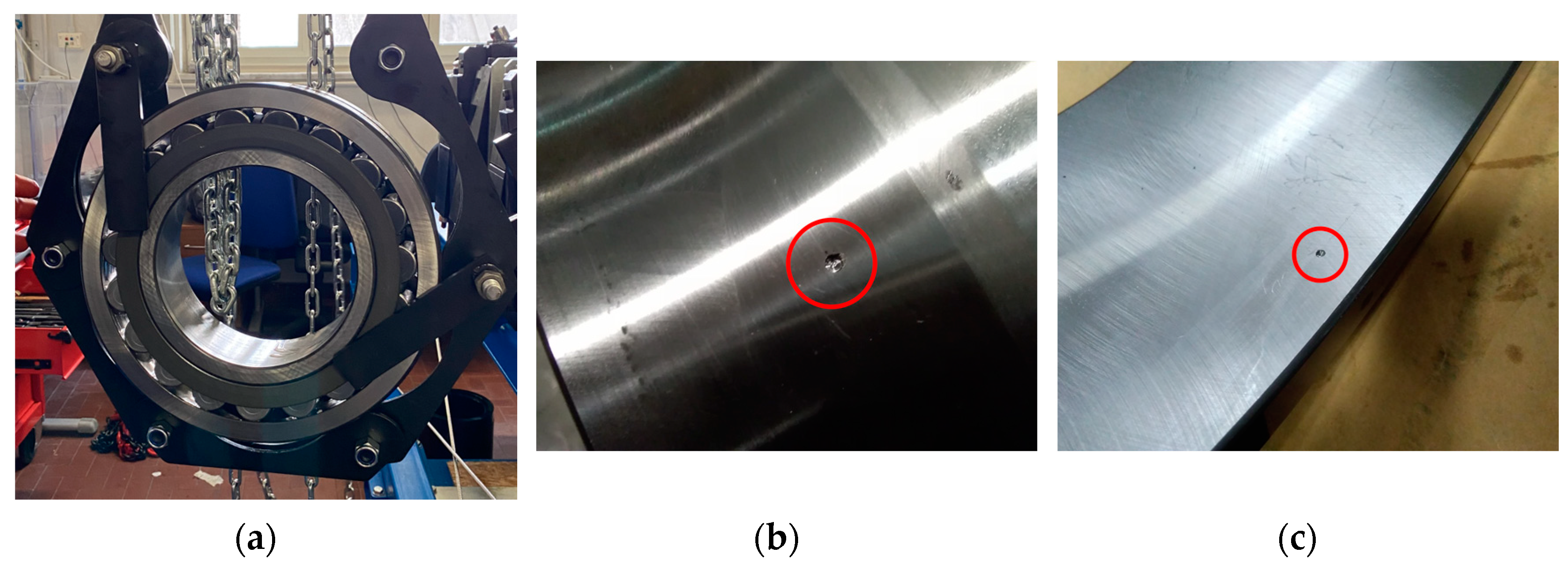

This paper discusses the application of a transfer learning methodology to the test rig available at Politecnico di Torino [58], which was designed to accommodate medium-sized bearings of industrial interest. To the best of the author’s knowledge, this is the first work including experiments conducted on medium-sized industrial bearings with localized faults. Additionally, this paper aims to explore the fault diagnosis capabilities of CNNs pre-trained for audio classification. Namely, the VGGish convolutional network [37,59,60] is employed to perform bearing fault diagnosis. The VGGish network was originally trained for large-scale audio classification using millions of audio samples extracted from YouTube® videos [37]. In this work, the pre-trained model is fine-tuned with a few thousand vibration records acquired under different working conditions of the machine. Such a knowledge transfer is inspired by the idea that the search for distinctive fault features in vibration spectrograms is conceptually similar to the identification of sound spectrograms [56]. The results corroborate this hypothesis and show that the feature extraction capabilities of the pre-trained VGGish network can be effectively transferred to fault diagnosis scenarios. Thanks to the use of TL, a large-scale and high-potential classification model can be reused for the purpose of machine diagnosis by fine-tuning with a very small dataset. Furthermore, it is shown that the pre-trained VGGish model outperforms the VGGish framework trained from scratch on a thousand-sample dataset. Additionally, it is found that, for the case under analysis, the VGGish performs better than models pre-trained for image recognition.

This paper is organized into five sections. This introduction presents the topic of intelligent fault diagnosis of industrial bearings and the motivations for this investigation with respect to the existing literature. The second section gives an insight into the AI methodologies involved in this study, presenting CNNs, transfer learning and the VGGish model. A description of the test rig for industrial bearings and the vibration dataset is provided in the third section, whereas the fourth section includes results, discussion and implications. Finally, the fifth section provides the concluding remarks.

4. Results and Discussion

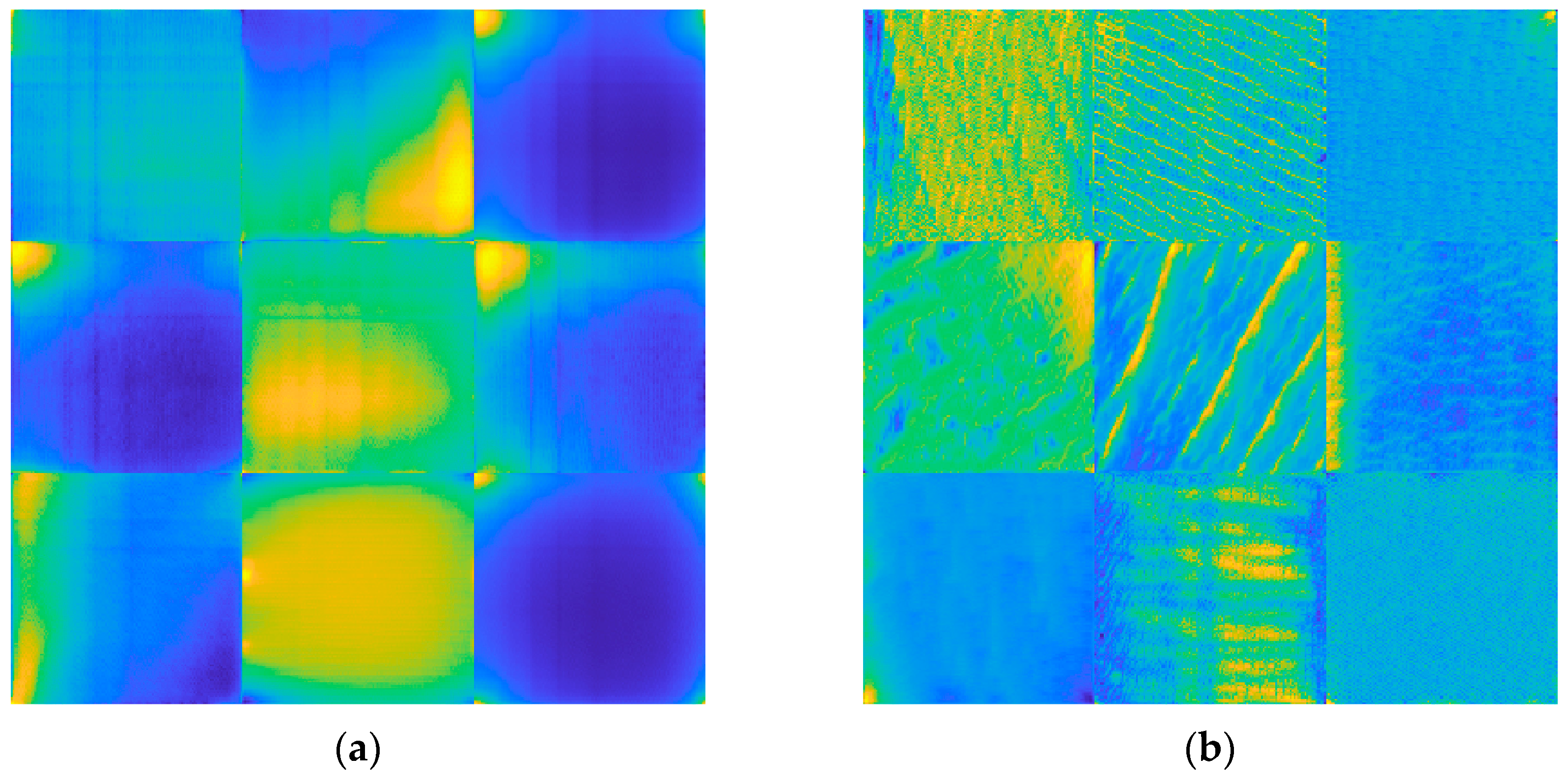

This paper investigates the capabilities of CNNs pre-trained for audio classification to perform bearing fault diagnosis. It is argued that these networks are endowed with highly specific knowledge for extracting spectrogram features. For this purpose, a vibration dataset including damaged medium-sized industrial bearings was produced through experiments conducted on a purpose-built test rig. A detailed description of the hardware is provided in reference [58]. As anticipated in Section 2.3, the VGGish convolutional architecture can act as a spectrogram feature extractor, as long as proper preprocessing is carried out.

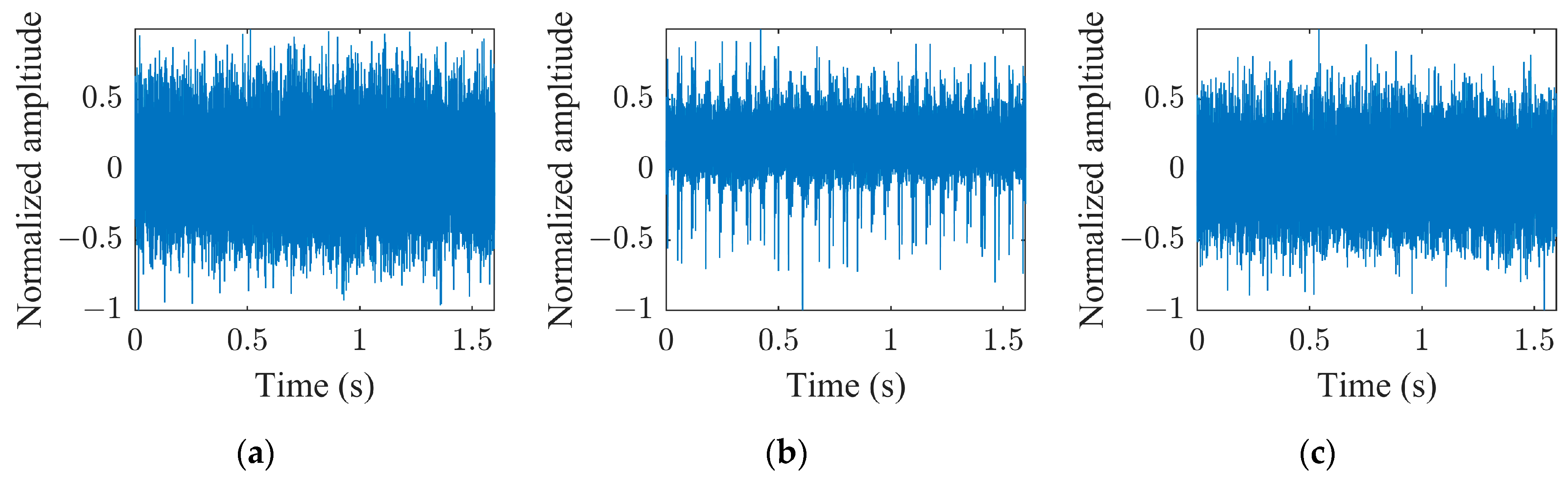

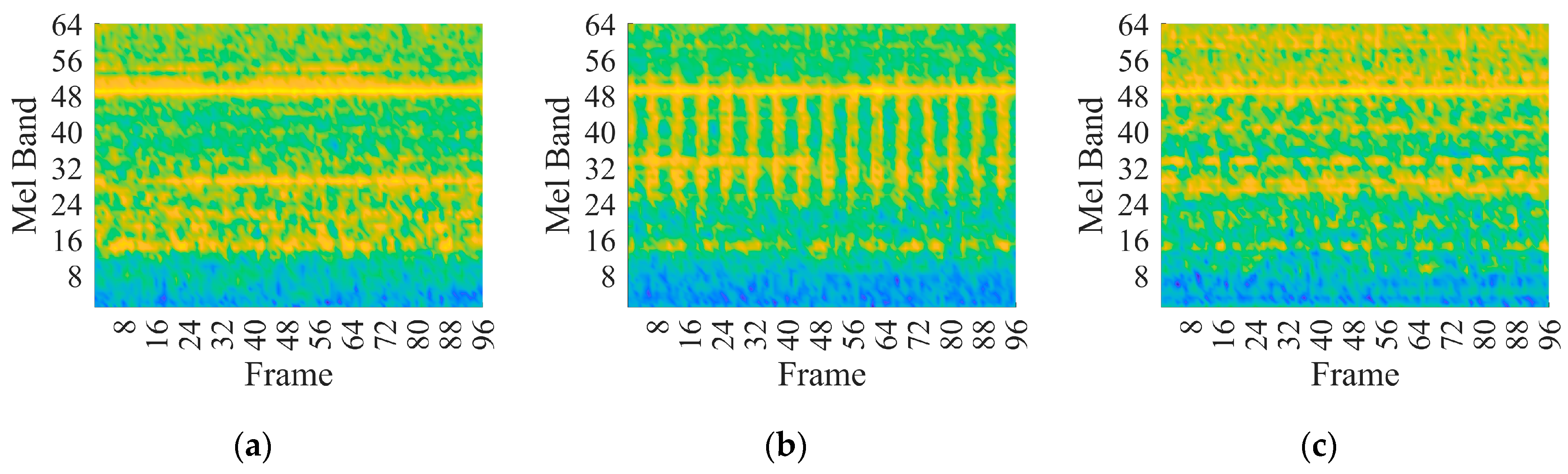

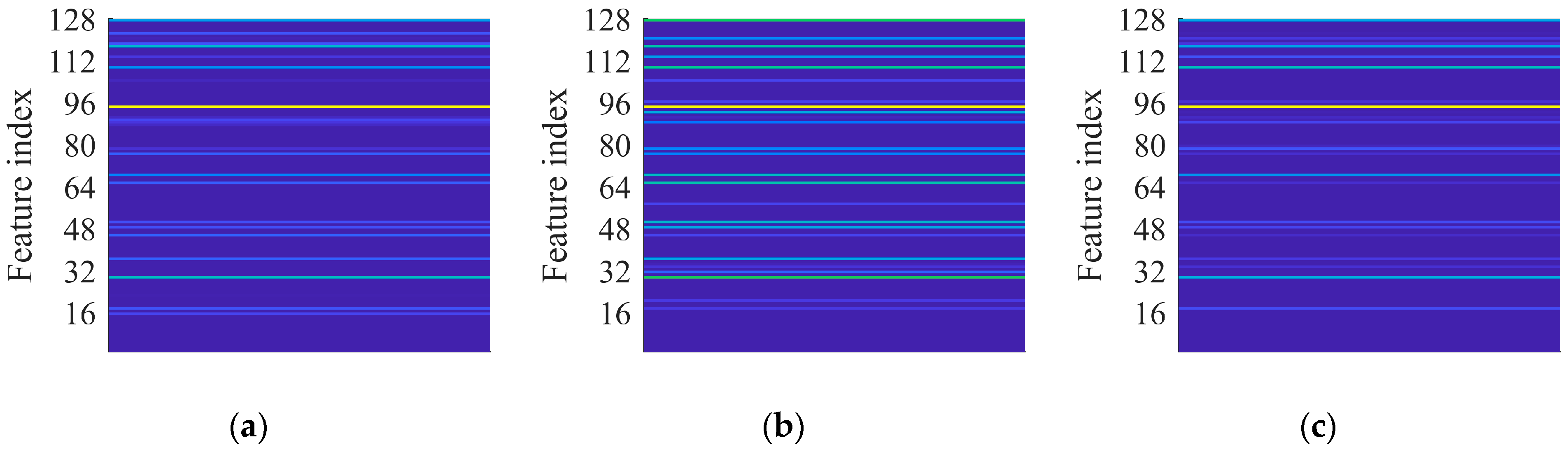

Figure 7a–c shows examples of normalized vibration signals for the normal state, IR damage and OR damage, respectively. Figure 8a–c shows the corresponding mel spectrograms obtained through the preprocessing. Finally, Figure 9a–c shows the corresponding 128-dimensional feature embeddings output by the pre-trained VGGish feature extractor. Essentially, the information spread across the mel spectrograms is condensed into a low-dimensional feature space via feature embedding, which in this particular case is a vector containing 128 elements. The classifier can discern classes by learning the differences between feature embeddings.
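As a rough illustration of how a vibration record can be turned into a mel spectrogram, the following Python sketch computes a log-mel spectrogram with NumPy. The parameter values (16 kHz sampling rate, 25 ms window, 10 ms hop, 64 mel bands between 125 Hz and 7500 Hz, log offset 0.01) are those commonly associated with the public VGGish front end and are assumed here rather than taken from this paper; the function names are hypothetical, and the preprocessing actually used in this work was carried out in the Matlab® environment.

```python
import numpy as np

def hz_to_mel(f_hz):
    # HTK-style mel scale (one common convention, assumed here).
    return 2595.0 * np.log10(1.0 + np.asarray(f_hz, dtype=float) / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)

def mel_filterbank(n_mels, n_fft, fs, f_min, f_max):
    """Triangular mel filters, shape (n_mels, n_fft // 2 + 1)."""
    fft_freqs = np.linspace(0.0, fs / 2.0, n_fft // 2 + 1)
    edges_hz = mel_to_hz(np.linspace(hz_to_mel(f_min), hz_to_mel(f_max), n_mels + 2))
    fb = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        lo, ctr, hi = edges_hz[i], edges_hz[i + 1], edges_hz[i + 2]
        rising = (fft_freqs - lo) / (ctr - lo)
        falling = (hi - fft_freqs) / (hi - ctr)
        fb[i] = np.clip(np.minimum(rising, falling), 0.0, None)
    return fb

def log_mel_spectrogram(x, fs=16000, win_s=0.025, hop_s=0.010,
                        n_mels=64, f_min=125.0, f_max=7500.0, log_offset=0.01):
    """Return a log-mel spectrogram of shape (n_frames, n_mels)."""
    x = np.asarray(x, dtype=float)
    win = int(round(win_s * fs))
    hop = int(round(hop_s * fs))
    n_fft = 1 << (win - 1).bit_length()          # next power of two >= win
    n_frames = 1 + (len(x) - win) // hop
    # Index matrix that slices the signal into overlapping frames.
    idx = np.arange(win)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = x[idx] * np.hanning(win)
    mag = np.abs(np.fft.rfft(frames, n=n_fft, axis=1))
    mel = mag @ mel_filterbank(n_mels, n_fft, fs, f_min, f_max).T
    return np.log(mel + log_offset)

# Synthetic one-second test tone standing in for a vibration record.
fs = 16000
t = np.arange(fs) / fs
spec = log_mel_spectrogram(np.sin(2 * np.pi * 1000.0 * t), fs=fs)
```

With these assumed settings, a one-second record yields an array of 98 frames by 64 mel bands. A pre-trained feature extractor such as VGGish then maps fixed-size patches of this representation to the 128-element embedding discussed above.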

The model was fine-tuned using the hyperparameters reported in Table 2. The training time was 936 s on a standard laptop without GPU acceleration (Intel® Core i7-10510U CPU @ 1.80 GHz). The model was implemented in the Matlab® environment by means of the machine learning, deep learning and audio toolbox libraries. It is worth noting that the original VGGish structure was trained on multiple GPUs for 184 hours [37].

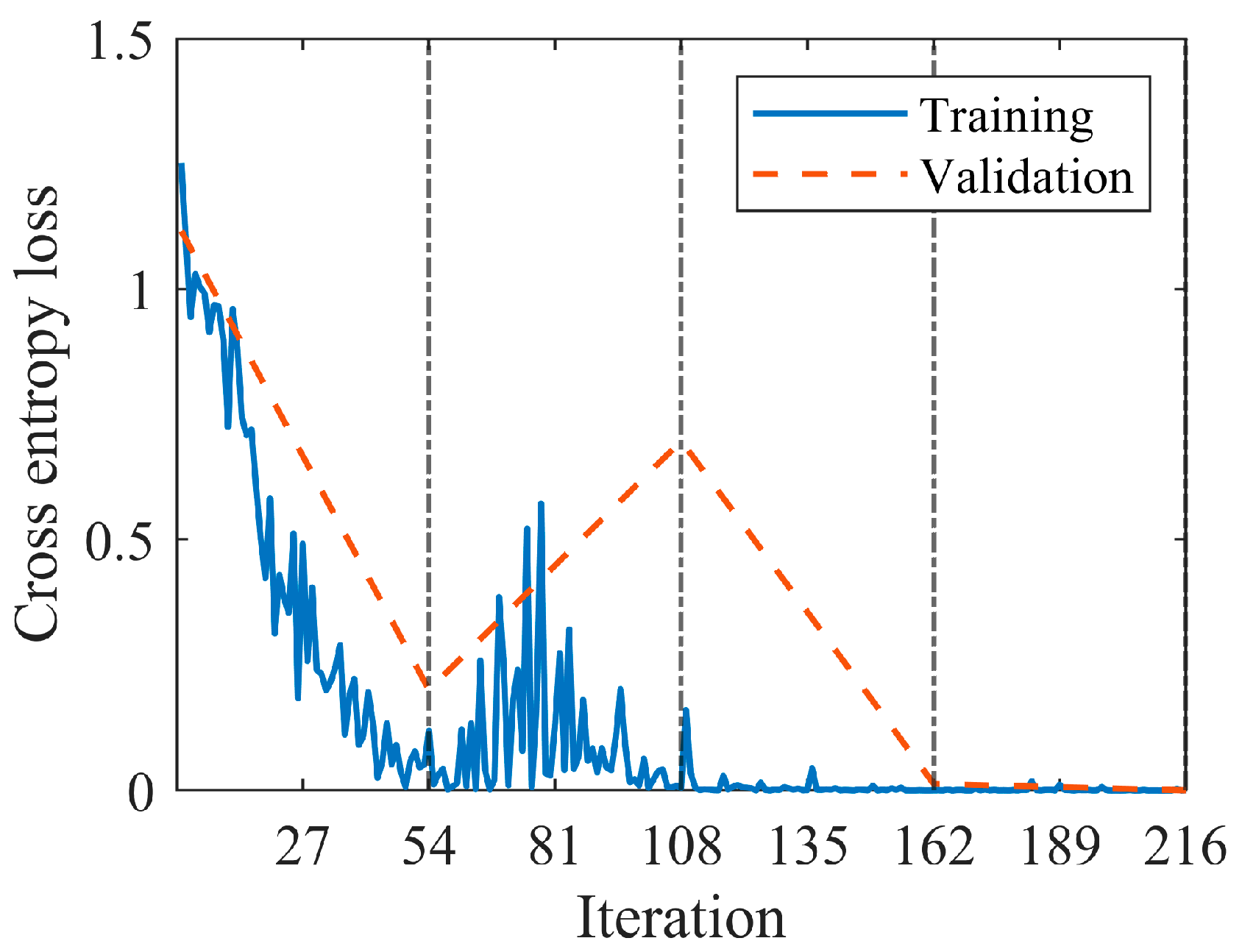

Figure 10 shows the behavior of the loss functions during the training conducted according to the parameters in

Table 2. In particular, the validation set served to monitor potential overfitting by analyzing the trend in the validation loss. The number of maximum epochs was set to four (216 iterations), since it was observed that the training process stabilized at this point and overfitting did not occur, though it was detectable during the first two epochs. The accuracies reported in

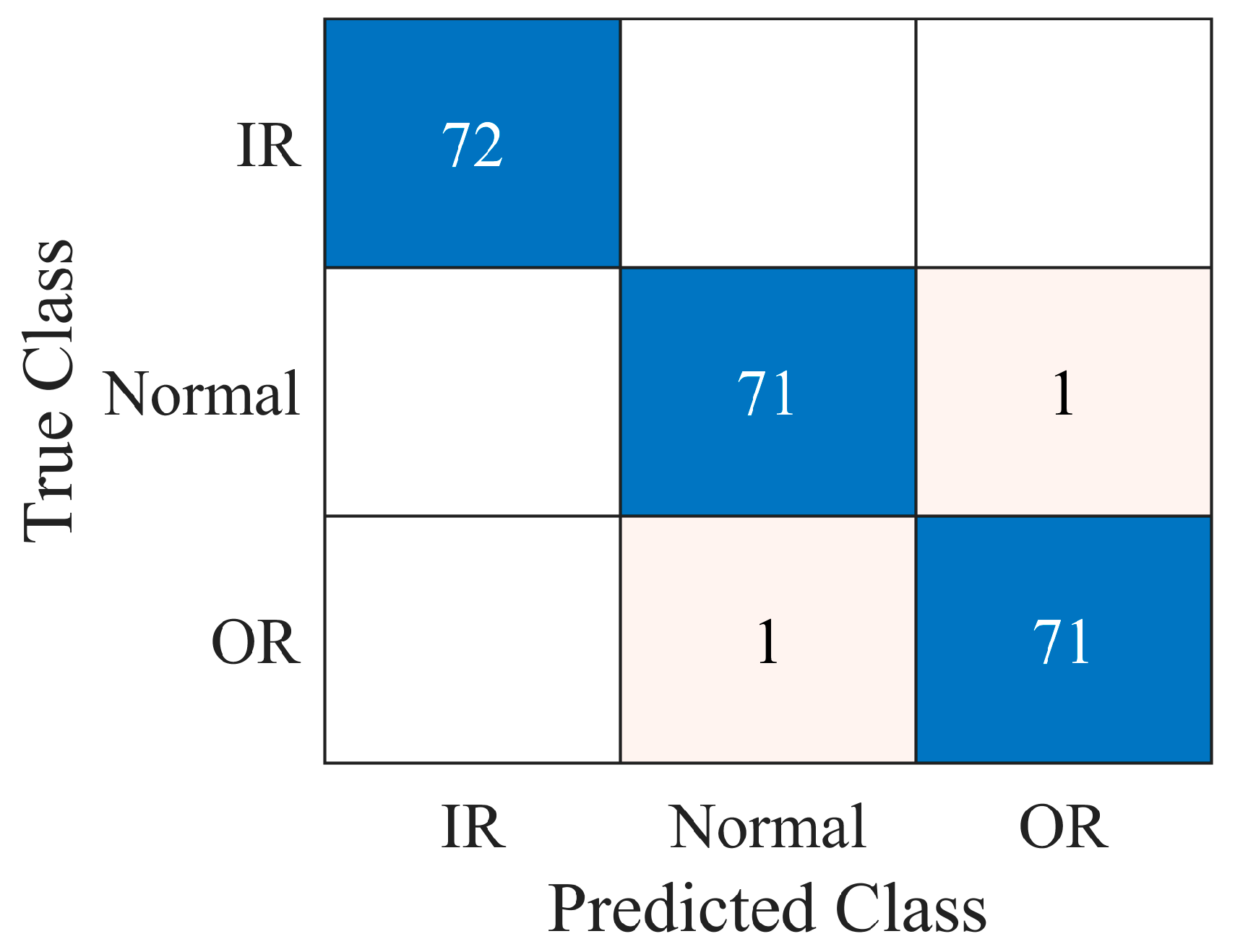

Table 7 reveal the applicability of the diagnosis model to new test data. The complete confusion matrix resulting from the test data is shown in

Figure 11. A single normal sample is predicted as OR damaged and a single OR sample is predicted as normal. Therefore, the classifier showed high precision and recall as reported in

Table 8.
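Per-class precision and recall follow directly from a confusion matrix, as the short Python sketch below illustrates. The counts are hypothetical: only the two off-diagonal errors (one normal sample predicted as OR, one OR sample predicted as normal) mirror the text, whereas the class sizes of 100 samples each are placeholders and not the actual test-set sizes of this study.

```python
import numpy as np

def precision_recall(cm):
    """cm[i, j] = number of samples of true class i predicted as class j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    precision = tp / cm.sum(axis=0)   # column sums: samples predicted as each class
    recall = tp / cm.sum(axis=1)      # row sums: samples truly belonging to each class
    return precision, recall

# Hypothetical counts, classes ordered as (normal, IR, OR).
cm = np.array([[99,   0,  1],
               [ 0, 100,  0],
               [ 1,   0, 99]])

precision, recall = precision_recall(cm)
accuracy = np.trace(cm) / cm.sum()
```

In this placeholder example, the normal and OR classes each reach a precision and recall of 0.99, while the IR class is classified without errors.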

Furthermore, the proposed model was compared with the VGGish model trained from scratch, the YAMNet model [56] and the VGG16 model pre-trained on ImageNet [34,35] proposed by Shao et al. [55]. Table 7 shows the accuracies obtained for the different models, whereas Table 8 reports the precision and the recall for the different classes. The VGGish trained from scratch reaches poor diagnosis accuracies and exhibits consistent overfitting. This is due to the fact that the original VGGish architecture was trained on millions of samples. Therefore, the structure is inherently unsuitable for correctly learning hierarchical features from a few thousand training samples. Given the limited amount of training data available, a network with millions of weights is extremely prone to overfitting the training set. For this reason, TL proved to be the most effective strategy in this case. The YAMNet model [56] showed promising accuracies and reduced training times, but some overfitting was detectable. Finally, the VGG16 model [55] was trained by employing wavelet time-frequency images. The training of the model under the conditions reported in [55] required GPUs and was computationally expensive. The resulting metrics show that the VGG16 framework pre-trained on ImageNet is not suitable for the analyzed case. In the author’s view, this is due to the fact that several convolutional layers of the model must be retrained [55]. Consequently, more training data are required. By contrast, few layers of the pre-trained VGGish and YAMNet need fine-tuning, since audio classification models are already capable of extracting distinctive spectrogram features, whereas the knowledge contained in networks pre-trained on the ImageNet dataset cannot be considered highly specific for spectrogram recognition.

The encouraging results indicate that the TL methodology is a valuable approach for the fault diagnosis of bearings. Remarkably, the knowledge contained in a network pre-trained for sound recognition can be reused for condition monitoring tasks. Moreover, the amount of training data is considerably lower than that required to train the network from scratch. The original VGGish network was trained using 70 million audio samples, whereas fewer than 2000 samples were needed for performing fault diagnosis. Therefore, deep learning frameworks endowed with high knowledge content could be exploited without the need for millions of data samples. This remarkable implication follows from the fact that the features extracted by the pre-trained VGGish network are already capable of identifying typical spectrogram features. Hence, only slight adjustments are needed to adapt the model to the classification of vibration spectrograms. The feature embedding into which the sound spectrograms are translated is therefore suitable for vibration spectrograms as well.
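The idea that only a small part of the model needs to be adapted can be illustrated by training a lightweight classification head on top of fixed 128-dimensional embeddings. The Python sketch below is a simplified stand-in under stated assumptions: the embeddings are synthetic random vectors rather than VGGish outputs, the head is a plain softmax regression trained by gradient descent, and all names and hyperparameters are hypothetical. It does not reproduce the fine-tuning configuration of this work, which is reported in Table 2.

```python
import numpy as np

def train_softmax_head(emb, labels, n_classes, lr=0.01, epochs=200):
    """Fit a linear softmax classifier on fixed embeddings by gradient descent."""
    n, d = emb.shape
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        logits = emb @ W + b
        logits -= logits.max(axis=1, keepdims=True)   # numerical stability
        p = np.exp(logits)
        p /= p.sum(axis=1, keepdims=True)
        grad = (p - onehot) / n                        # gradient of mean cross-entropy w.r.t. logits
        W -= lr * emb.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

def predict(emb, W, b):
    return np.argmax(emb @ W + b, axis=1)

# Synthetic stand-in for 128-D embeddings of three classes (normal, IR, OR).
rng = np.random.default_rng(0)
class_centers = rng.normal(size=(3, 128))
labels = np.repeat(np.arange(3), 200)
emb_train = class_centers[labels] + 0.5 * rng.normal(size=(600, 128))
emb_test = class_centers[labels] + 0.5 * rng.normal(size=(600, 128))

# Only the head parameters (W, b) are trained; the "extractor" is untouched.
W, b = train_softmax_head(emb_train, labels, n_classes=3)
test_acc = np.mean(predict(emb_test, W, b) == labels)
```

Because the head has only 128 × 3 weights plus 3 biases, it can be fitted with a few hundred samples, which is the practical appeal of reusing a pre-trained feature extractor.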

However, this poses an issue for the interpretation of the diagnosis outcomes. Indeed, the 128 features which flow through the classifier have no clear physical interpretation. In this case, acoustically relevant features were able to classify vibrations. In contrast to traditional signal processing tools, where some parameters (e.g., kurtosis, crest factor and ball pass frequencies) have a physical meaning, in data-driven fault diagnosis the user does not know what the features actually represent, although they may work perfectly. Therefore, it is quite challenging to estimate the variability of the features with respect to changes in the input signals. Additionally, the development of proper interpretability tools is of paramount importance for the correct visualization of domain alignment in transfer learning.
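For contrast, the physically interpretable indicators mentioned above can be computed in a few lines. The Python sketch below evaluates kurtosis, crest factor and the standard kinematic ball pass frequencies of the outer and inner ring (BPFO and BPFI), assuming pure rolling without slip and a stationary outer ring. The function names and the bearing geometry in the example are hypothetical and do not correspond to the bearings mounted on the test rig.

```python
import numpy as np

def kurtosis(x):
    """Fourth standardized moment (equals 3 for a Gaussian signal)."""
    x = np.asarray(x, dtype=float)
    xc = x - x.mean()
    return np.mean(xc ** 4) / np.mean(xc ** 2) ** 2

def crest_factor(x):
    """Peak absolute value divided by the RMS value."""
    x = np.asarray(x, dtype=float)
    return np.max(np.abs(x)) / np.sqrt(np.mean(x ** 2))

def ball_pass_frequencies(shaft_hz, n_rollers, d_roller, d_pitch, contact_angle_rad=0.0):
    """Kinematic BPFO and BPFI in Hz (no slip, stationary outer ring)."""
    ratio = (d_roller / d_pitch) * np.cos(contact_angle_rad)
    bpfo = 0.5 * n_rollers * shaft_hz * (1.0 - ratio)
    bpfi = 0.5 * n_rollers * shaft_hz * (1.0 + ratio)
    return bpfo, bpfi

# A pure sine wave sampled over an integer number of periods.
t = np.arange(1000) / 1000.0
sine = np.sin(2 * np.pi * 10.0 * t)
k = kurtosis(sine)        # 1.5 for a sine wave
cf = crest_factor(sine)   # sqrt(2) for a sine wave

# Hypothetical geometry: 10 rollers, 10 mm roller diameter, 50 mm pitch diameter, 20 Hz shaft speed.
bpfo, bpfi = ball_pass_frequencies(20.0, 10, 10.0, 50.0)   # 80 Hz and 120 Hz
```

Each of these quantities maps to a recognizable physical property of the signal or the bearing: impulsiveness for kurtosis and crest factor, and the rate at which rolling elements pass a defect for BPFO and BPFI. No such mapping is available for the individual elements of a learned embedding.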