Improved Spatiotemporal Framework for Human Activity Recognition in Smart Environment

Abstract

1. Introduction

2. Related Work

3. Methods

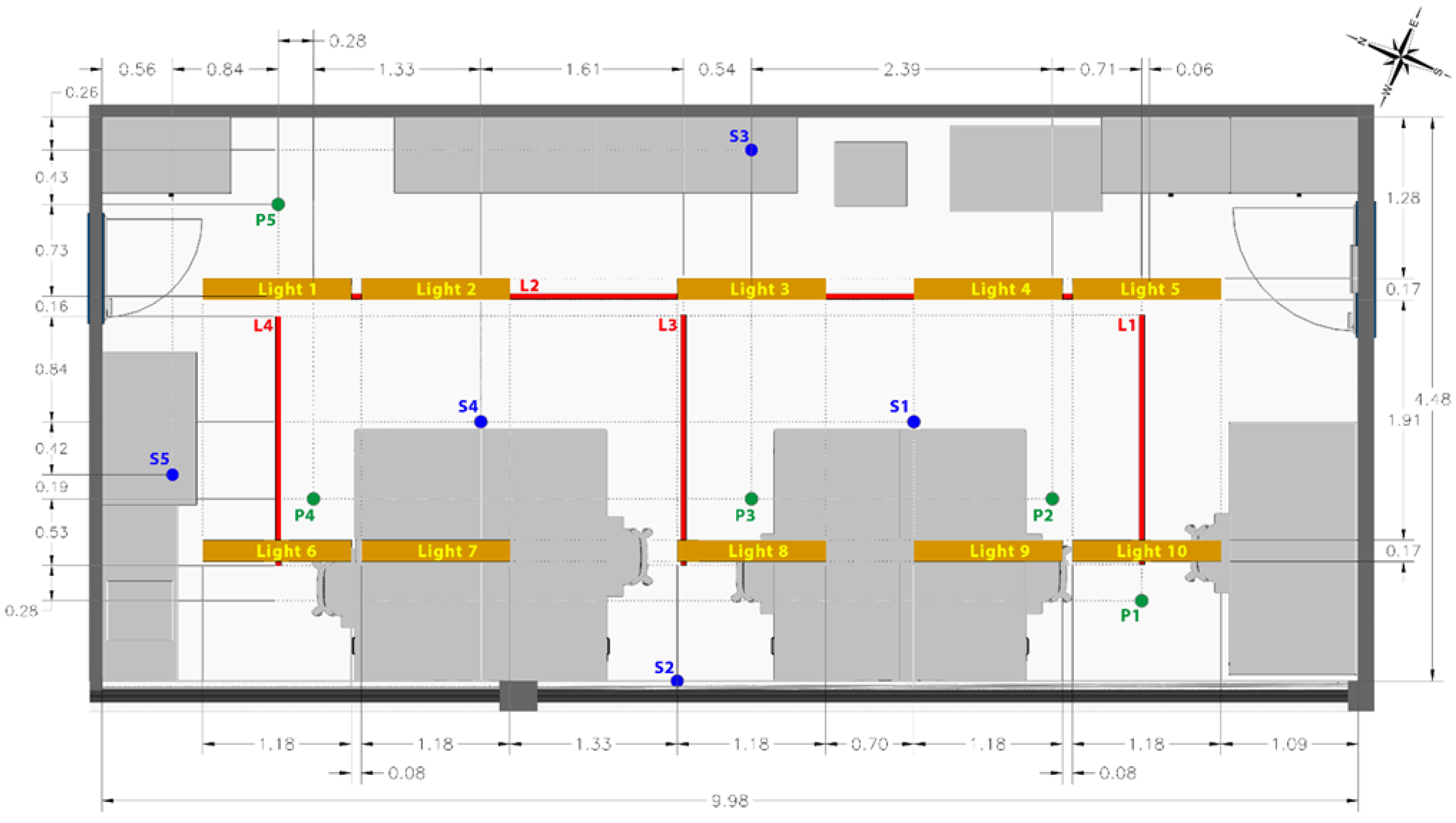

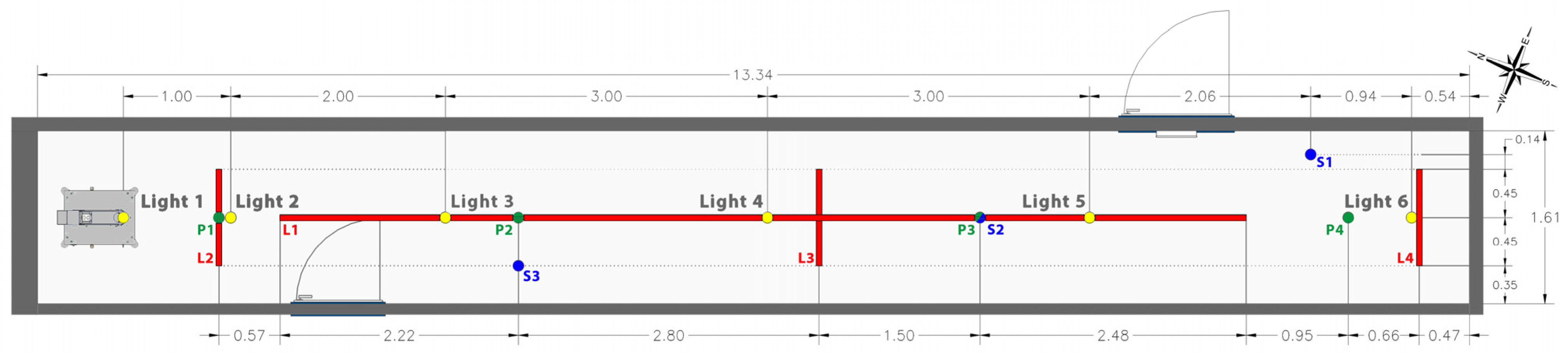

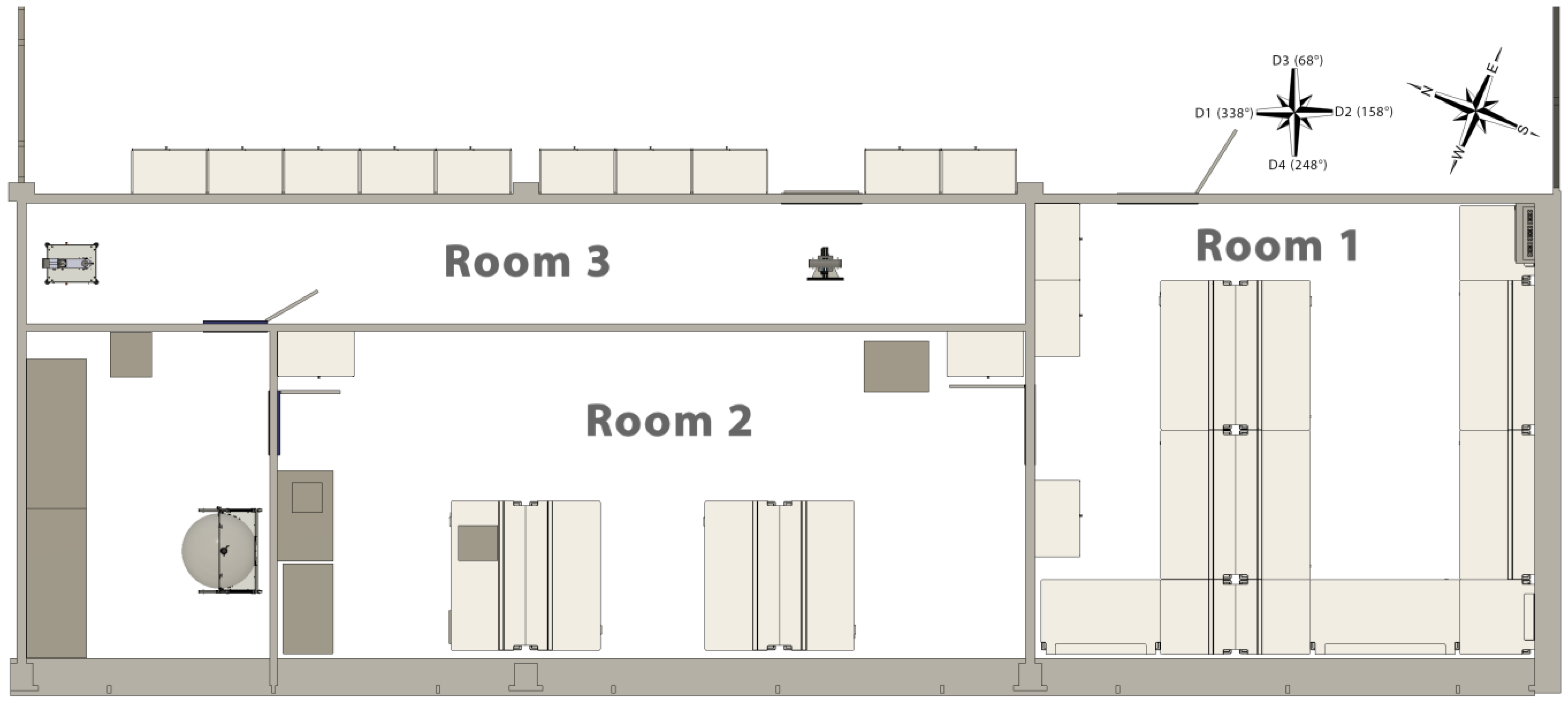

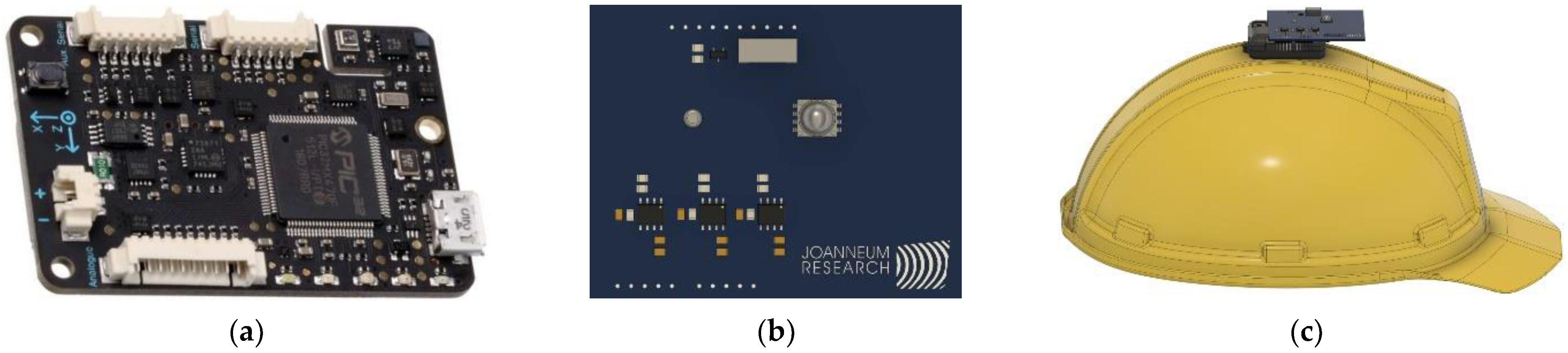

3.1. System Description

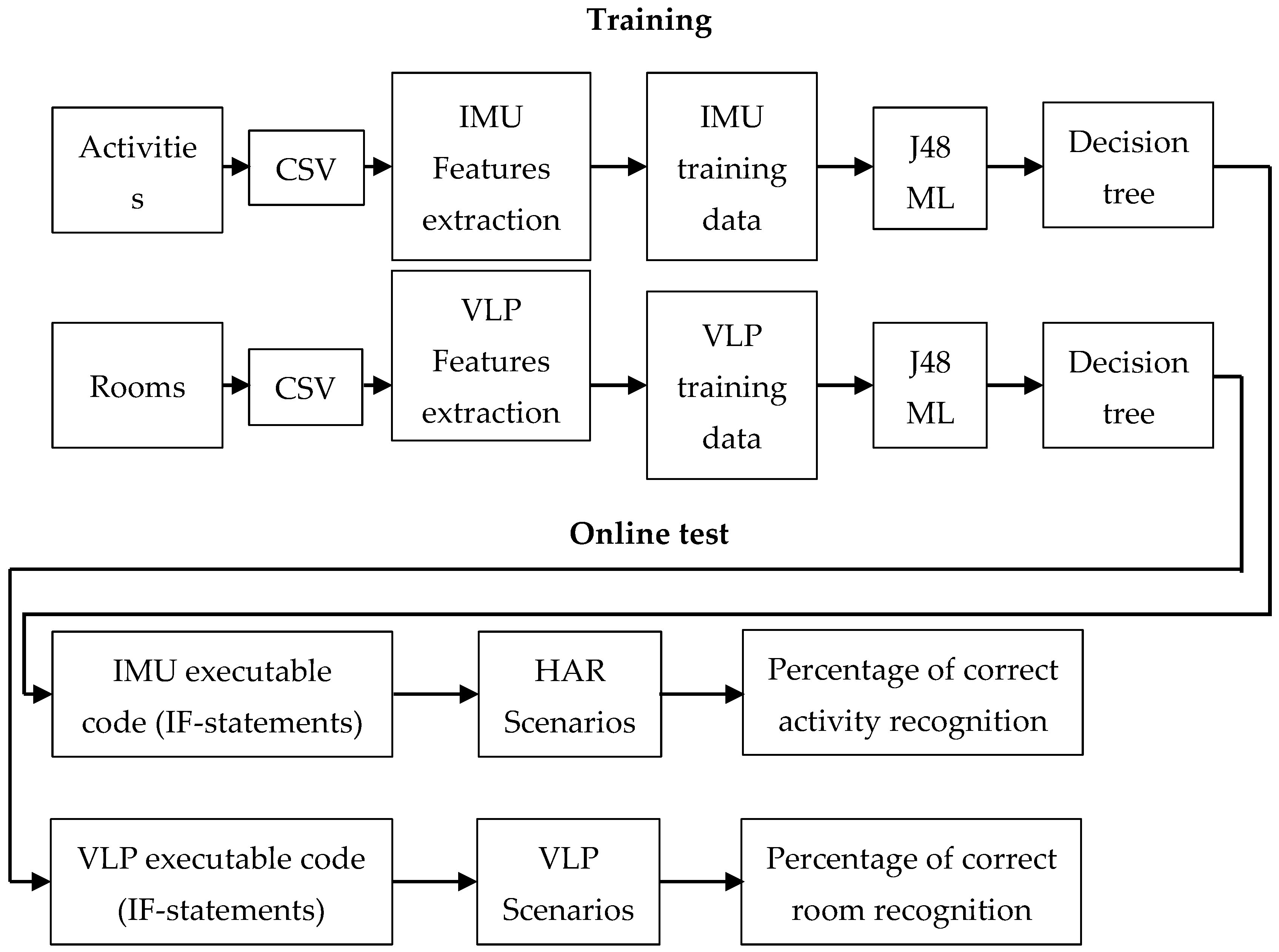

3.2. Training and Online Test Procedures

3.3. Experimental Conditions

3.4. Data Extraction

3.4.1. IMU Data

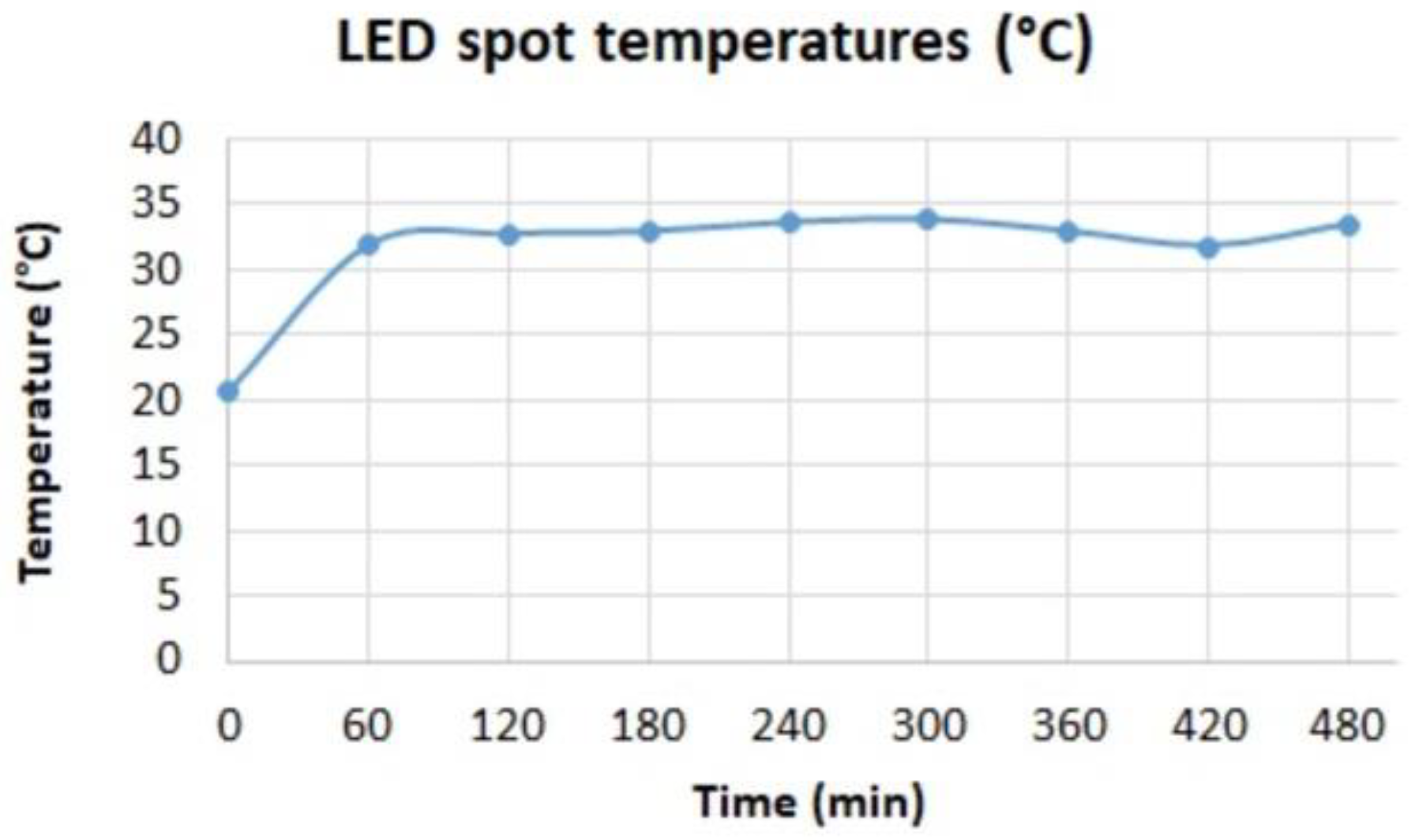

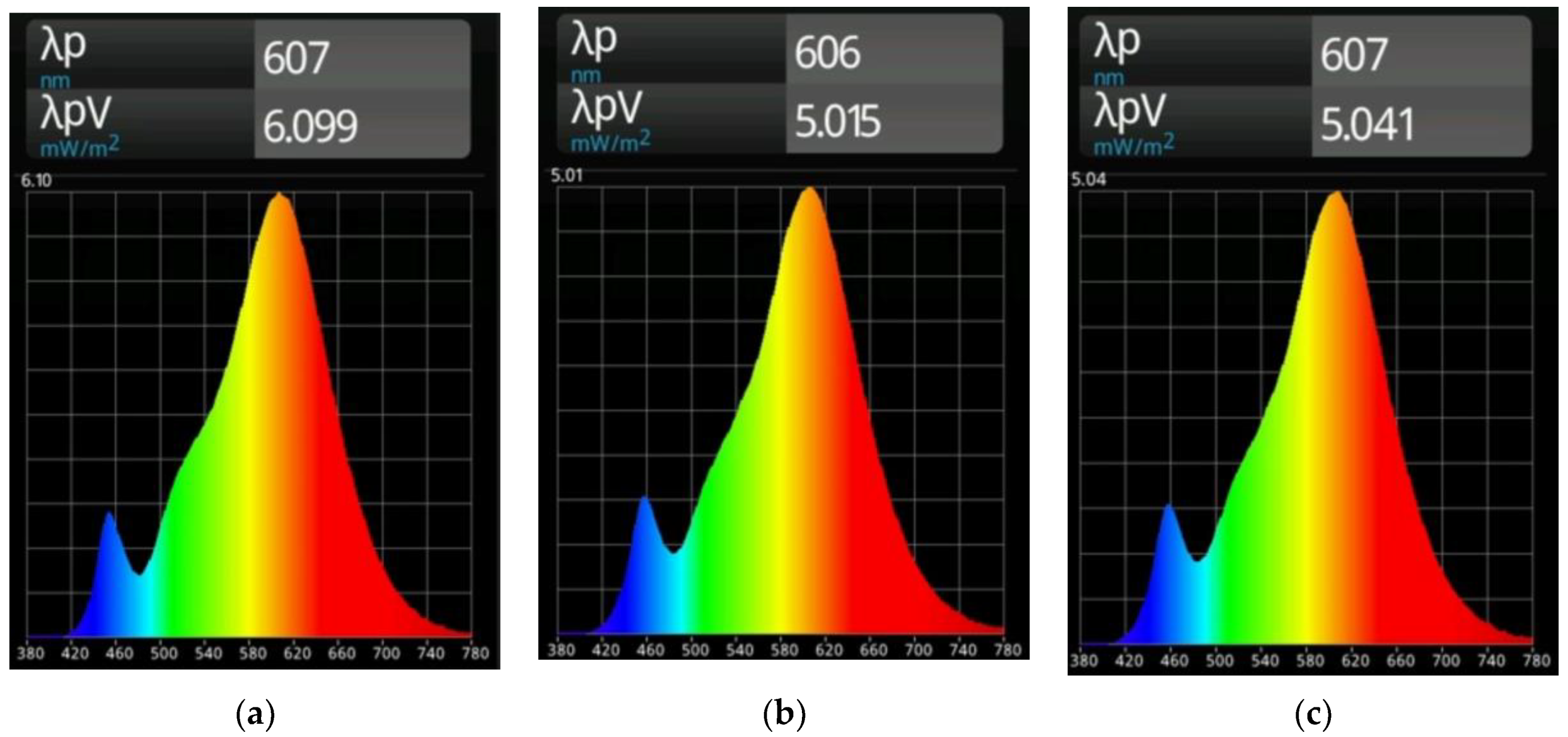

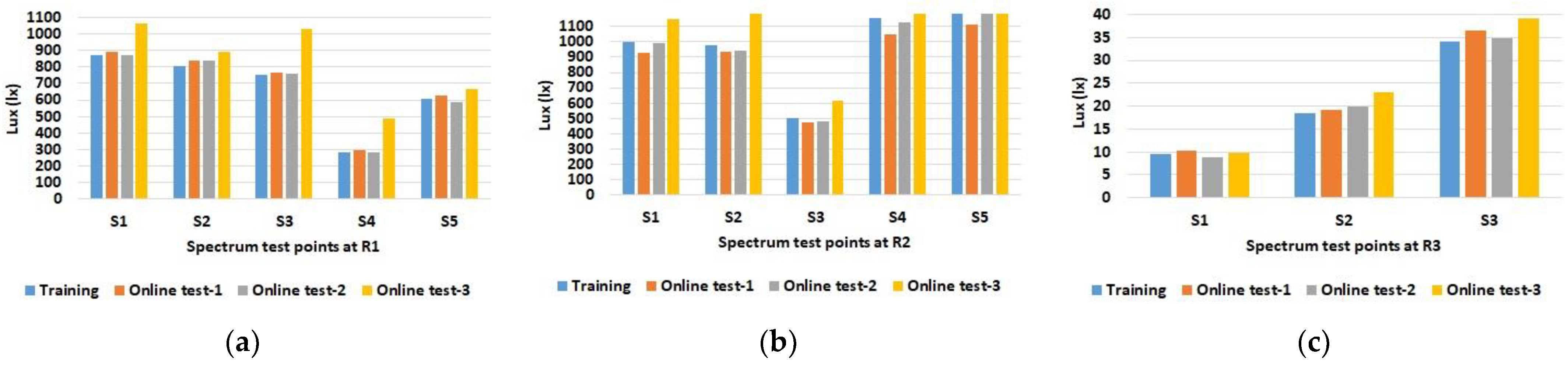

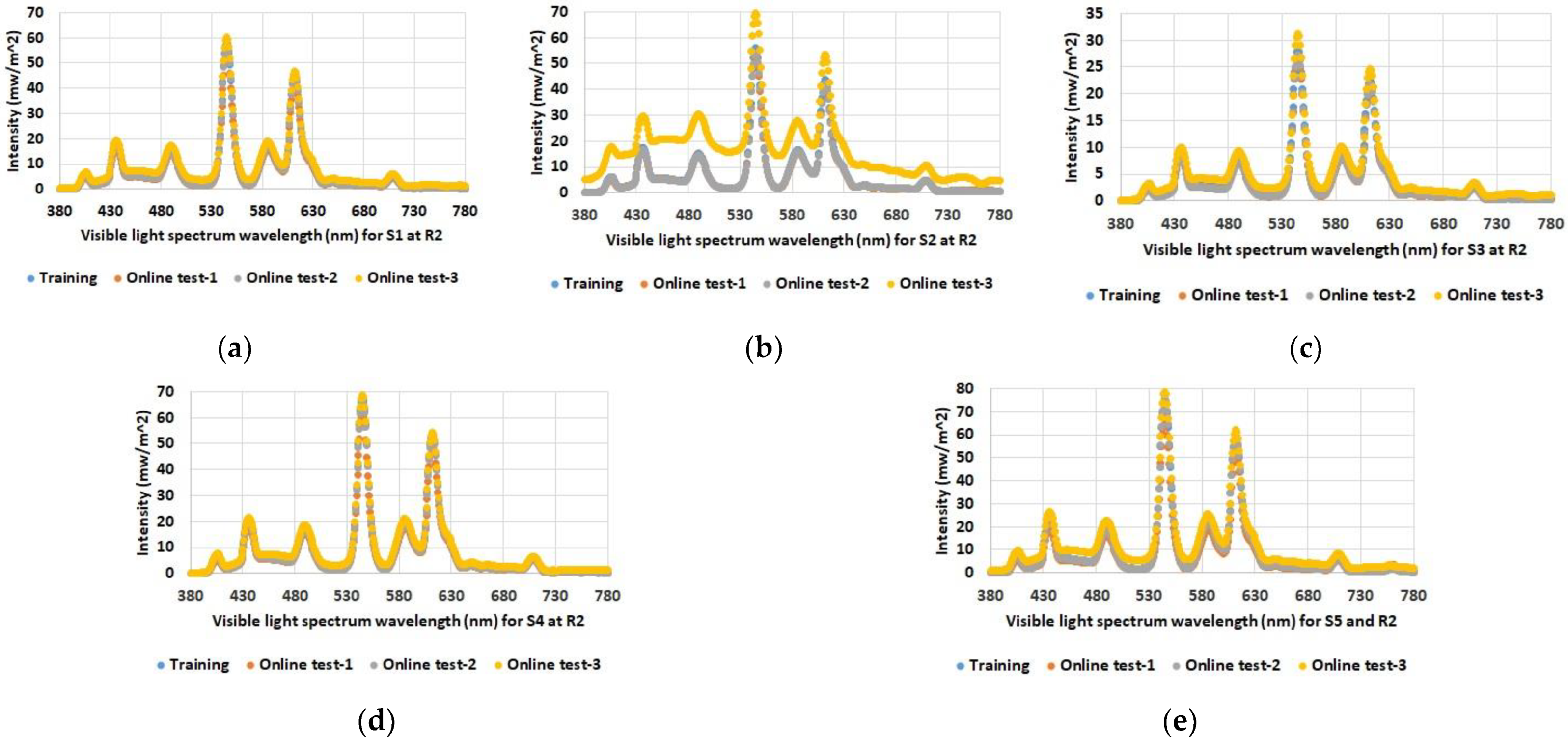

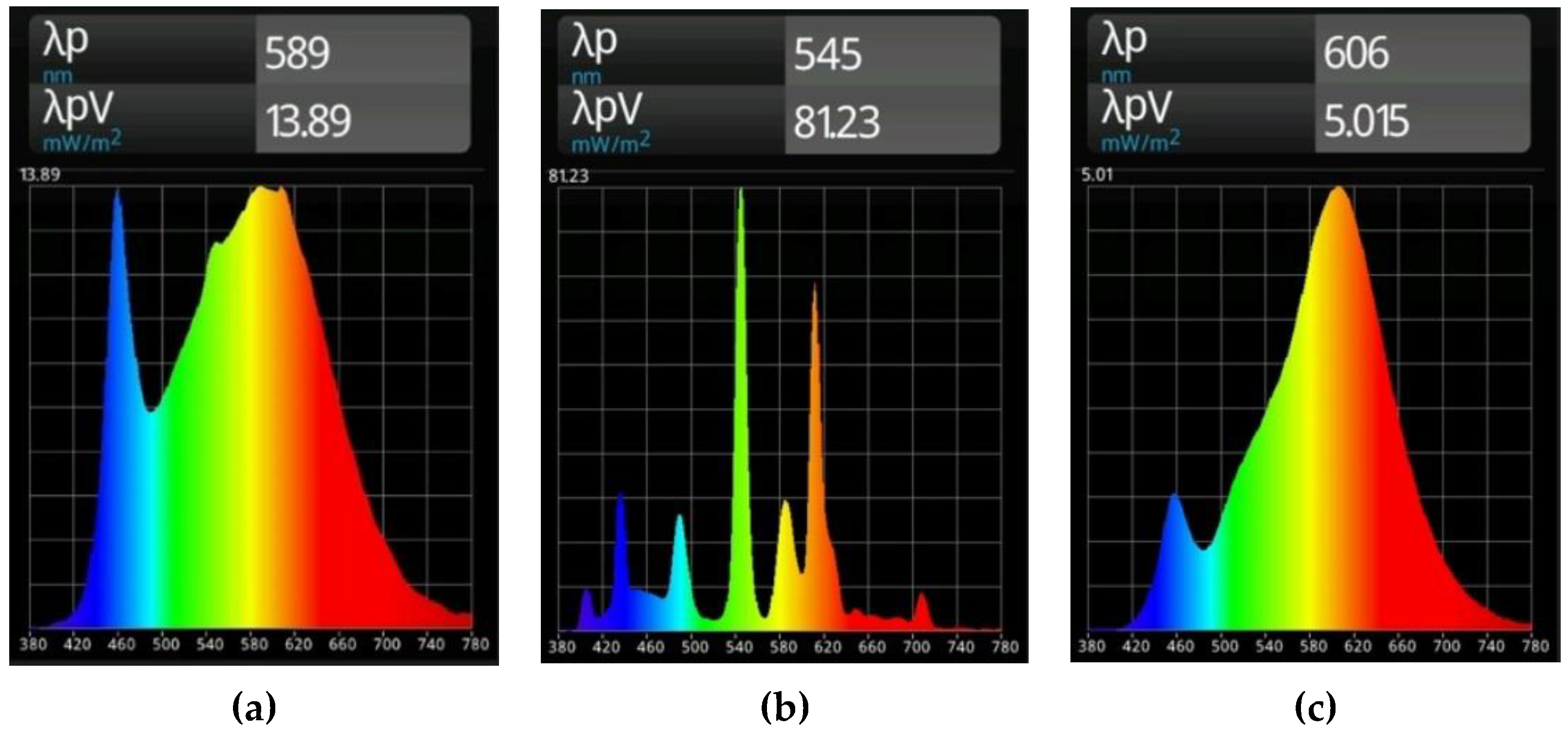

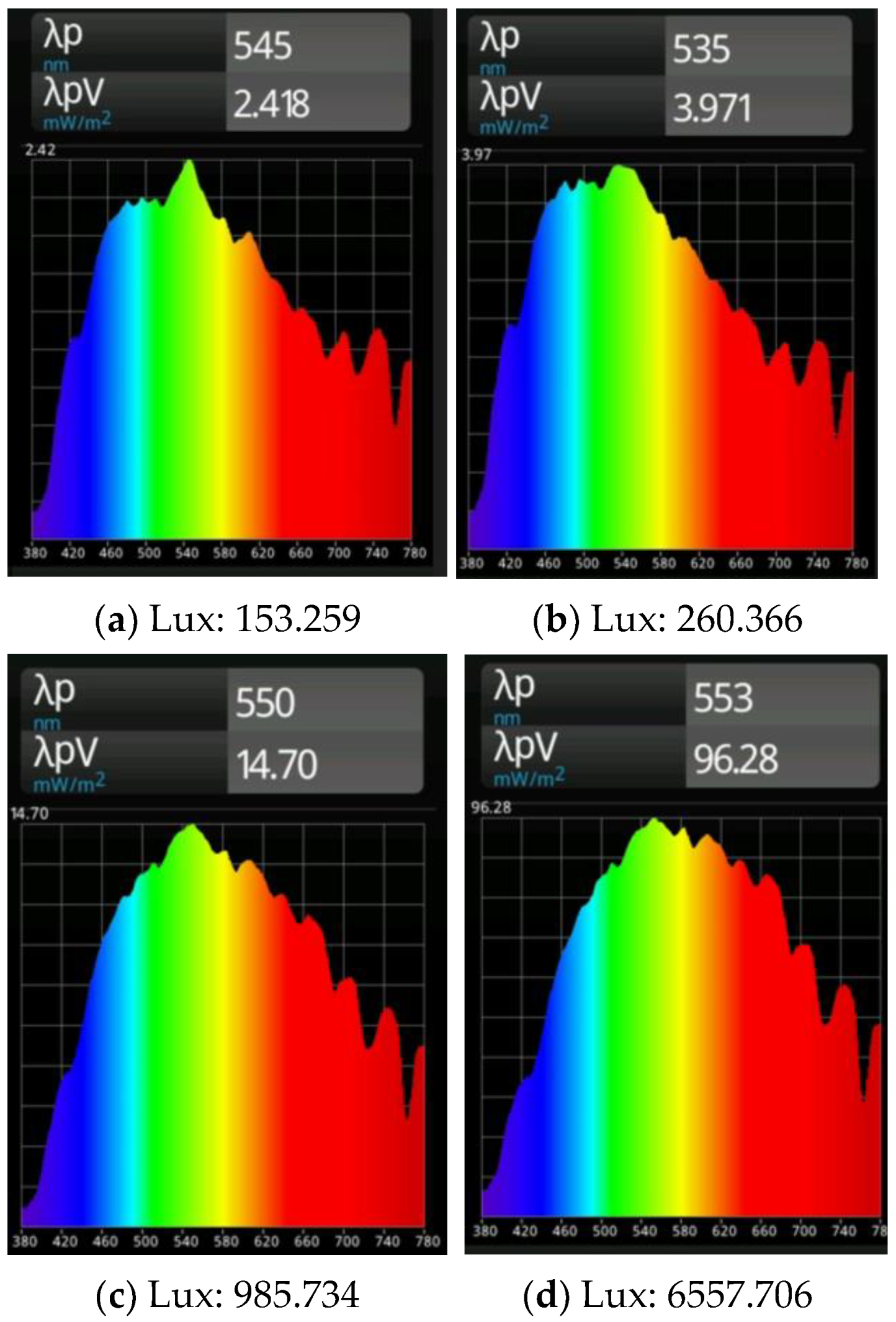

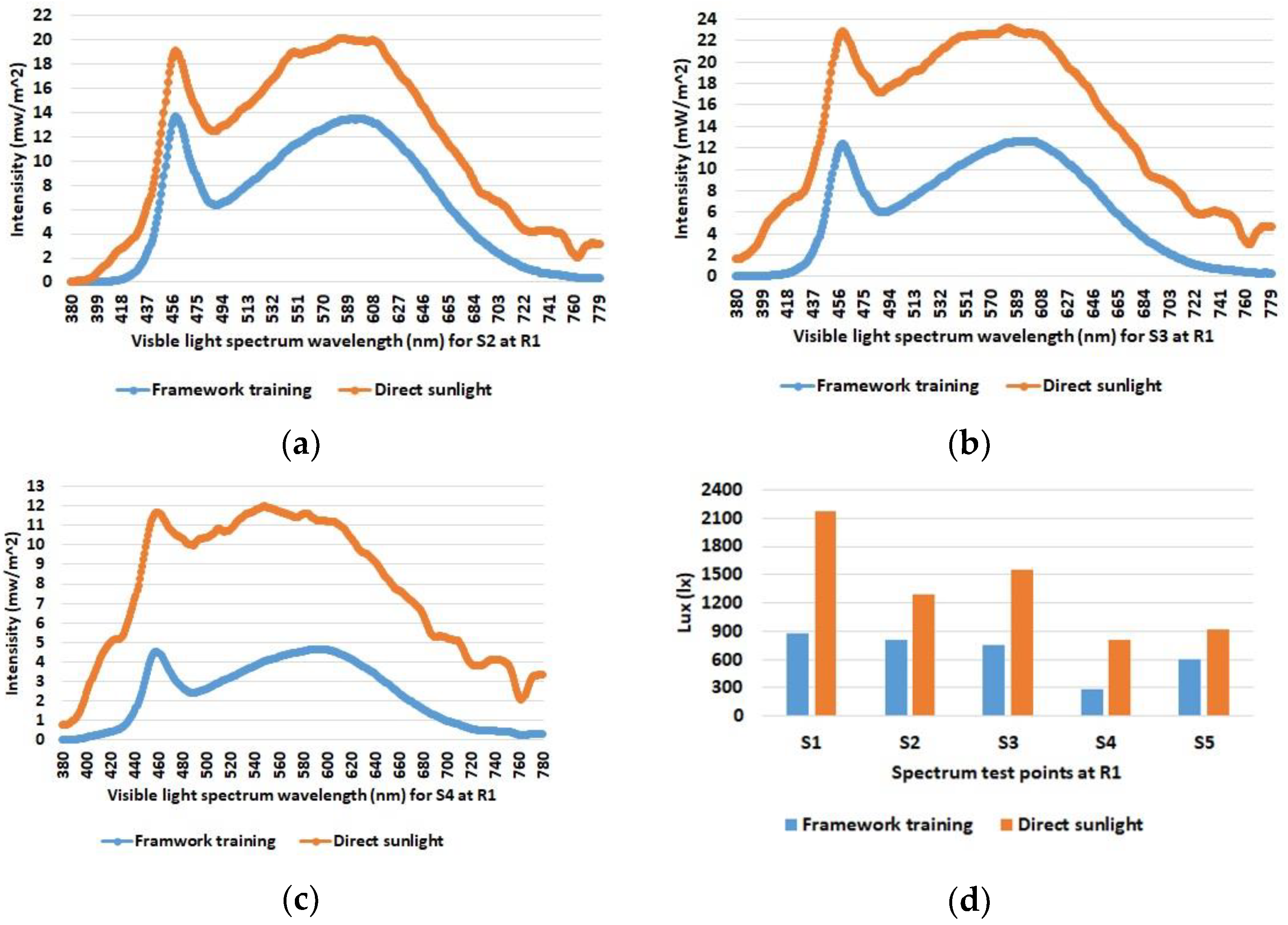

3.4.2. Light Data

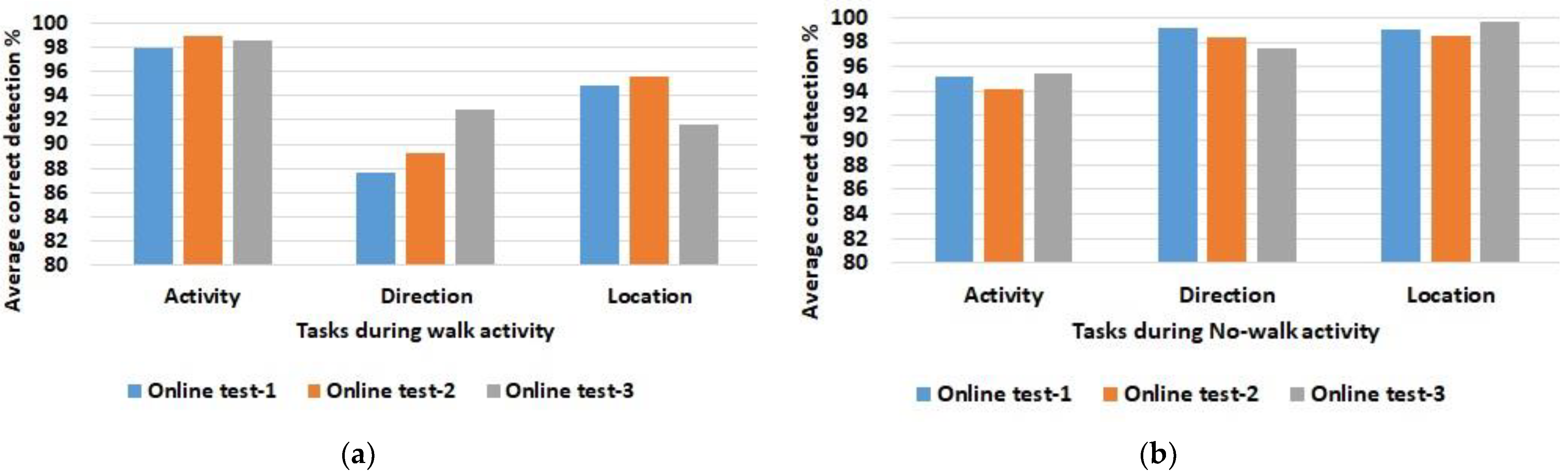

4. Results and Discussions

4.1. Activity Recognition under Changed Ambient Light Conditions

- Experiment-1: the evaluation includes the 100 iterations measured on day 3 at location 2; i.e., it refers to data recorded on a single day at a single location.

- Experiment-2: the evaluation includes all 140 iterations (merged data) measured on three days at two locations; it therefore covers variations in both recording time and site.

4.2. Discussion of Different Ambient Light Conditions

5. Conclusions and Outlook for Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Height (cm)

| Room | S1 | S2 | S3 | S4 | S5 |
|---|---|---|---|---|---|
| R1 | 118 | 180 | 40 | 180 | 118 |
| R2 | 180 | 142 | 193 | 180 | 140 |
| R3 | 185 | 180 | 140 | N/A | N/A |

Appendix B

References

1. Otto, T.; Kurth, S.; Voigt, S.; Morschhauser, A.; Meinig, M.; Hiller, K.; Moebius, M.; Vogel, M. Integrated microsystems for smart applications. Sens. Mater. 2018, 30, 767–778.
2. Nandy, A.; Saha, J.; Chowdhury, C. Novel features for intensive human activity recognition based on wearable and smartphone sensors. Microsyst. Technol. 2020, 26, 1889–1903.
3. Ghonim, A.M.; Salama, W.M.; Khalaf, A.A.; Shalaby, H.M. Indoor localization based on visible light communication and machine learning algorithms. Opto-Electron. Rev. 2022, 30, e140858.
4. Toro, U.S.; Wu, K.; Leung, V.C. Backscatter wireless communications and sensing in green Internet of Things. IEEE Trans. Green Commun. Netw. 2022, 6, 37–55.
5. Weiss, A.P.; Wenzl, F.P. Identification and Speed Estimation of a Moving Object in an Indoor Application Based on Visible Light Sensing of Retroreflective Foils. Micromachines 2021, 12, 439.
6. Zhang, S.; Wei, Z.; Nie, J.; Huang, L.; Wang, S.; Li, Z. A review on human activity recognition using vision-based method. J. Healthc. Eng. 2017, 2017, 3090343.
7. Marin, J.; Blanco, T.; Marin, J.J. Octopus: A design methodology for motion capture wearables. Sensors 2017, 17, 1875.
8. Ahmed, N.; Rafiq, J.I.; Islam, M.R. Enhanced human activity recognition based on smartphone sensor data using hybrid feature selection model. Sensors 2020, 20, 317.
9. Ranasinghe, S.; Al Machot, F.; Mayr, H.C. A review on applications of activity recognition systems with regard to performance and evaluation. Int. J. Distrib. Sens. Netw. 2016, 12, 22.
10. Acampora, G.; Minopoli, G.; Musella, F.; Staffa, M. Classification of Transition Human Activities in IoT Environments via Memory-Based Neural Networks. Electronics 2020, 9, 409.
11. Salem, Z.; Weiss, A.P.; Wenzl, F.P. A spatiotemporal framework for human indoor activity monitoring. In Proceedings of the SPIE 11525, SPIE Future Sensing Technologies, Online, 8 November 2020; p. 115251L.
12. Salem, Z.; Weiss, A.P.; Wenzl, F.P. A low-complexity approach for visible light positioning and space-resolved human activity recognition. In Proceedings of the SPIE 11785, Multimodal Sensing and Artificial Intelligence: Technologies and Applications II, Online, 20 June 2021; p. 117850H.
13. Kok, M.; Hol, J.D.; Schön, T.B. Using inertial sensors for position and orientation estimation. Found. Trends Signal Process. 2017, 11, 1–153.
14. Rosati, S.; Balestra, G.; Knaflitz, M. Comparison of different sets of features for human activity recognition by wearable sensors. Sensors 2018, 18, 4189.
15. Schuldhaus, D. Human Activity Recognition in Daily Life and Sports Using Inertial Sensors. Ph.D. Thesis, Technical Faculty of Friedrich-Alexander University Erlangen-Nuremberg, Erlangen, Germany, FAU University Press, Boca Raton, FL, USA, 26 November 2019.
16. Pires, I.M.; Garcia, N.M.; Pombo, N.; Flórez-Revuelta, F.; Spinsante, S. Data Fusion on Motion and Magnetic Sensors embedded on Mobile Devices for the Identification of Activities of Daily Living. arXiv 2017, arXiv:1711.07328. Available online: https://arxiv.org/abs/1711.07328 (accessed on 18 August 2022).
17. Dargie, W. Analysis of time and frequency domain features of accelerometer measurements. In Proceedings of the 18th IEEE International Conference on Computer Communications and Networks, San Francisco, CA, USA, 3–6 August 2009; pp. 1–6.
18. Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J. Fusion of smartphone motion sensors for physical activity recognition. Sensors 2014, 14, 10146.
19. Nossier, S.A.; Wall, J.; Moniri, M.; Glackin, C.; Cannings, N. A Comparative Study of Time and Frequency Domain Approaches to Deep Learning based Speech Enhancement. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.
20. Stuchi, J.A.; Angeloni, M.A.; Pereira, R.F.; Boccato, L.; Folego, G.; Prado, P.V.; Attux, R.R. Improving image classification with frequency domain layers for feature extraction. In Proceedings of the IEEE 27th International Workshop on Machine Learning for Signal Processing (MLSP), Tokyo, Japan, 25–29 September 2017; pp. 1–6.
21. Huynh, Q.T.; Tran, B.Q. Time-Frequency Analysis of Daily Activities for Fall Detection. Signals 2021, 2, 1.
22. Zhao, G.; Zheng, H.; Li, Y.; Zhu, K.; Li, J. A Frequency Domain Direct Localization Method Based on Distributed Antenna Sensing. Wirel. Commun. Mob. Comput. 2021, 2021, 6616729.
23. Grunin, A.P.; Kalinov, G.A.; Bolokhovtsev, A.V.; Sai, S.V. Method to improve accuracy of positioning object by eLoran system with applying standard Kalman filter. J. Phys. Conf. Ser. 2018, 1015, 032050.
24. Ali, R.; Hakro, D.N.; He, Y.; Fu, W.; Cao, Z. An Improved Positioning Accuracy Method of a Robot Based on Particle Filter. In Proceedings of the International Conference on Advanced Computing Applications, Online, 27–28 March 2021; Advances in Intelligent Systems and Computing; Mandal, J.K., Buyya, R., De, D., Eds.; Springer: Singapore, 2022; Volume 1406, pp. 667–678.
25. Zu, H.; Chen, X.; Chen, Z.; Wang, Z.; Zhang, X. Positioning accuracy improvement method of industrial robot based on laser tracking measurement. Meas. Sens. 2021, 18, 100235.
26. Yan, X.; Guo, H.; Yu, M.; Xu, Y.; Cheng, L.; Jiang, P. Light detection and ranging/inertial measurement unit-integrated navigation positioning for indoor mobile robots. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420919940.
27. Ibrahim, M.; Nguyen, V.; Rupavatharam, S.; Jawahar, M.; Gruteser, M.; Howard, R. Visible light based activity sensing using ceiling photosensors. In Proceedings of the 3rd Workshop on Visible Light Communication Systems, New York City, NY, USA, 3–7 October 2016; pp. 43–48.
28. Xu, Q.; Zheng, R.; Hranilovic, S. IDyLL: Indoor localization using inertial and light sensors on smartphones. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Osaka, Japan, 7–11 September 2015; pp. 307–318.
29. Liang, Q.; Lin, J.; Liu, M. Towards robust visible light positioning under LED shortage by visual-inertial fusion. In Proceedings of the IEEE International Conference on Indoor Positioning and Indoor Navigation (IPIN), Pisa, Italy, 30 September–3 October 2019; pp. 1–8.
30. Hao, J.; Chen, J.; Wang, R. Visible light positioning using a single LED luminaire. IEEE Photonics J. 2019, 11, 7905113.
31. Liang, Q.; Liu, M. A tightly coupled VLC-inertial localization system by EKF. IEEE Robot. Autom. Lett. 2020, 5, 3129–3136.
32. Hwang, I.; Cha, G.; Oh, S. Multi-modal human action recognition using deep neural networks fusing image and inertial sensor data. In Proceedings of the IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Daegu, Republic of Korea, 16–18 November 2017; pp. 278–283.
33. Poulose, A.; Han, D.S. Hybrid indoor localization using IMU sensors and smartphone camera. Sensors 2019, 19, 5084.
34. Tian, Y.; Chen, W. MEMS-based human activity recognition using smartphone. In Proceedings of the IEEE 35th Chinese Control Conference, Chengdu, China, 27–29 July 2016; pp. 3984–3989.
35. Shen, C.; Chen, Y.; Yang, G. On motion-sensor behavior analysis for human-activity recognition via smartphones. In Proceedings of the IEEE International Conference on Identity, Security and Behavior Analysis (ISBA), Sendai, Japan, 29 February–2 March 2016; pp. 1–6.
36. Vallabh, P.; Malekian, R.; Ye, N.; Bogatinoska, D.C. Fall detection using machine learning algorithms. In Proceedings of the IEEE 24th International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 22–24 September 2016; pp. 1–9.
37. Tang, C.; Phoha, V.V. An empirical evaluation of activities and classifiers for user identification on smartphones. In Proceedings of the IEEE 8th International Conference on Biometrics Theory, Applications and Systems (BTAS), Niagara Falls, NY, USA, 6–9 September 2016; pp. 1–8.
38. Bulling, A.; Blanke, U.; Schiele, B. A tutorial on human activity recognition using body-worn inertial sensors. ACM Comput. Surv. (CSUR) 2014, 46, 33.
39. Hassan, N.U.; Naeem, A.; Pasha, M.A.; Jadoon, T.; Yuen, C. Indoor positioning using visible LED lights: A survey. ACM Comput. Surv. (CSUR) 2015, 48, 20.
40. Carrera, V.J.L.; Zhao, Z.; Braun, T. Room recognition using discriminative ensemble learning with hidden Markov models for smartphones. In Proceedings of the IEEE 29th Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Bologna, Italy, 9–12 September 2018; pp. 1–7.
41. Wojek, C.; Nickel, K.; Stiefelhagen, R. Activity recognition and room-level tracking in an office environment. In Proceedings of the IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems, Heidelberg, Germany, 3–6 September 2006; pp. 25–30.
42. Next Generation IMU (NGIMU). Available online: http://x-io.co.uk/ngimu (accessed on 5 September 2022).
43. Lee, J.; Kim, J. Energy-efficient real-time human activity recognition on smart mobile devices. Mob. Inf. Syst. 2016, 2016, 2316757.
44. Wisiol, K. Human Activity Recognition. Master’s Thesis, Geomatics Science, Graz University of Technology, Graz, Austria, November 2014.
45. Bieber, G.; Koldrack, P.; Sablowski, C.; Peter, C.; Urban, B. Mobile physical activity recognition of stand-up and sit-down transitions for user behavior analysis. In Proceedings of the 3rd International Conference on Pervasive Technologies Related to Assistive Environments, Samos, Greece, 23–25 June 2010; pp. 1–5.
46. Niswander, W.; Wang, W.; Kontson, K. Optimization of IMU Sensor Placement for the Measurement of Lower Limb Joint Kinematics. Sensors 2020, 20, 5993.
47. Frank, E.; Hall, M.A.; Witten, I.H. The WEKA Workbench. Online Appendix for Data Mining: Practical Machine Learning Tools and Techniques, 4th ed.; Morgan Kaufmann: Burlington, MA, USA, 2016.
48. Dillon, C.B.; Fitzgerald, A.P.; Kearney, P.M.; Perry, I.J.; Rennie, K.L.; Kozarski, R.; Phillips, C.M. Number of days required to estimate habitual activity using wrist-worn GENEActiv accelerometer: A cross-sectional study. PLoS ONE 2016, 11, e0109913.
49. Preto, S.; Gomes, C.C. Lighting in the workplace: Recommended illuminance (LUX) at workplace environs. In Advances in Design for Inclusion; Di Bucchianico, G., Ed.; AHFE 2018; Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2018; p. 776.
50. Uprtek. Available online: https://www.uprtek.com (accessed on 5 September 2022).
51. Fridriksdottir, E.; Bonomi, A.G. Accelerometer-Based Human Activity Recognition for Patient Monitoring Using a Deep Neural Network. Sensors 2020, 20, 6424.
52. Jordao, A.; Torres, L.A.B.; Schwartz, W.R. Novel approaches to human activity recognition based on accelerometer data. Signal Image Video Process. 2018, 12, 1387–1394.
53. Bersch, S.D.; Azzi, D.; Khusainov, R.; Achumba, I.E.; Ries, J. Sensor data acquisition and processing parameters for human activity classification. Sensors 2014, 14, 4239–4270.
54. Telgarsky, R. Dominant frequency extraction. arXiv 2013, arXiv:1306.0103. Available online: https://arxiv.org/abs/1306.0103 (accessed on 5 September 2022).
55. Sukor, A.A.; Zakaria, A.; Rahim, N.A. Activity recognition using accelerometer sensor and machine learning classifiers. In Proceedings of the IEEE 14th International Colloquium on Signal Processing & Its Applications (CSPA), Penang, Malaysia, 9–10 March 2018; pp. 233–238.
56. Erdaş, Ç.B.; Atasoy, I.; Açıcı, K.; Oğul, H. Integrating features for accelerometer-based activity recognition. Procedia Comput. Sci. 2016, 98, 522–527.
57. Gupta, S.; Kumar, A. Human Activity Recognition through Smartphone’s Tri-Axial Accelerometer using Time Domain Wave Analysis and Machine Learning. Int. J. Comp. Appl. 2015, 127, 22–26.
58. Zhu, J.; San-Segundo, R.; Pardo, J.M. Feature extraction for robust physical activity recognition. Hum.-Cent. Comput. Inf. Sci. 2017, 7, 16.
59. UT TF-K Temperature Sensor, Type K. Available online: https://www.reichelt.com/be/de/temperaturfuehler-typ-k-universal-ut-tf-k-p134706.html?CCOUNTRY=661&LANGUAGE=de&&r=1 (accessed on 5 September 2022).

| Experiments | Indoor Lighting | Blinds | Start Time of Experiment | Weather Conditions |
|---|---|---|---|---|
| Training | ON | Closed | Day 1, 10:00 | Cloudy |
| Online test-1 | ON | Closed | Day 1, 13:00 | Cloudy |
| Online test-2 | ON | Opened | Day 1, 16:00 | Cloudy |
| Online test-3 | ON | Opened | Day 2, 09:00 | Sunny, but no direct sunlight |

| Experiments | Iterations | Total No. of Iterations | No. of Measurements per Activity | Total No. of Measurements | Training vs. Test Samples |
|---|---|---|---|---|---|
| Day 1, Location#1 | 10 | 50 | Sit: 2723; Si-st: 1716; Stand: 2628; St-si: 2021; Walk: 2689 | 11,777 | n.a. |
| Day 2, Location#1 | 30 | 150 | Sit: 11,410; Si-st: 7114; Stand: 11,648; St-si: 7654; Walk: 9847 | 47,673 | n.a. |
| Day 3, Location#2 | 100 | 500 | Sit: 38,580; Si-st: 24,199; Stand: 32,954; St-si: 21,645; Walk: 33,670 | 151,048 | 350 vs. 150 |
| Merged data from three days and two locations | 140 | 700 | Sit: 52,713; Si-st: 33,029; Stand: 47,230; St-si: 31,320; Walk: 46,205 | 210,497 | 490 vs. 210 |

| Time (s) | Acce-X (g) | Acce-Y (g) | Acce-Z (g) | ADL |
|---|---|---|---|---|
| 0.1 | 0.161799952 | 0.017999846 | 0.981487095 | sit |
| 0.2 | 0.164758027 | 0.023350080 | 0.977752686 | sit |
| 0.3 | 0.170689359 | 0.034476221 | 0.982251704 | sit |
| 0.4 | 0.112286702 | 0.039446317 | 1.005190372 | si-st |
| 0.5 | 0.123579949 | 0.051039595 | 1.009228349 | si-st |
| 0.6 | 0.128493294 | 0.057355978 | 1.005034924 | si-st |
| 0.7 | 0.331447363 | 0.039430257 | 0.934785128 | stand |
| 0.8 | 0.331414938 | 0.035566427 | 0.930415988 | stand |
| 0.9 | 0.332439810 | 0.041852437 | 0.935305059 | stand |
| 1.0 | 0.170633137 | 0.026200492 | 0.985943556 | st-si |
| 1.1 | 0.169641167 | 0.023775911 | 0.985902011 | st-si |
| 1.2 | 0.168163225 | 0.021850912 | 0.983475626 | st-si |
| 1.3 | 0.293405503 | −0.028735712 | 0.900109053 | walk |
| 1.4 | 0.302678615 | −0.026843587 | 0.903027654 | walk |
| 1.5 | 0.310985833 | −0.023983495 | 0.907395661 | walk |
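
The raw IMU stream above is labelled at every 0.1 s sample. One plausible preprocessing step before feature extraction is to cut the stream into contiguous runs that share a label, so that each run corresponds to one activity instance. The text does not spell out the exact windowing, so this is an assumption. The sketch below (hypothetical helper names) does that with `itertools.groupby`. It also shows that every sample has a magnitude close to 1, which is what a resting accelerometer reports when acceleration is expressed in units of g (about 9.81 m/s²).

```python
import math
from itertools import groupby

# (time in s, acc-x, acc-y, acc-z, label): rows copied from the table above.
ROWS = [
    (0.1, 0.161799952, 0.017999846, 0.981487095, "sit"),
    (0.2, 0.164758027, 0.023350080, 0.977752686, "sit"),
    (0.3, 0.170689359, 0.034476221, 0.982251704, "sit"),
    (0.4, 0.112286702, 0.039446317, 1.005190372, "si-st"),
    (0.5, 0.123579949, 0.051039595, 1.009228349, "si-st"),
    (0.6, 0.128493294, 0.057355978, 1.005034924, "si-st"),
    (0.7, 0.331447363, 0.039430257, 0.934785128, "stand"),
    (0.8, 0.331414938, 0.035566427, 0.930415988, "stand"),
    (0.9, 0.332439810, 0.041852437, 0.935305059, "stand"),
    (1.0, 0.170633137, 0.026200492, 0.985943556, "st-si"),
    (1.1, 0.169641167, 0.023775911, 0.985902011, "st-si"),
    (1.2, 0.168163225, 0.021850912, 0.983475626, "st-si"),
    (1.3, 0.293405503, -0.028735712, 0.900109053, "walk"),
    (1.4, 0.302678615, -0.026843587, 0.903027654, "walk"),
    (1.5, 0.310985833, -0.023983495, 0.907395661, "walk"),
]


def segment_by_label(rows):
    """Group consecutive samples that share a label into (label, samples) runs."""
    return [(label, list(run)) for label, run in groupby(rows, key=lambda r: r[4])]


def magnitude(row):
    """Euclidean norm of the three acceleration components of one sample."""
    _, ax, ay, az, _ = row
    return math.sqrt(ax * ax + ay * ay + az * az)


for label, samples in segment_by_label(ROWS):
    mean_mag = sum(magnitude(s) for s in samples) / len(samples)
    print(f"{label:6s} {len(samples)} samples, mean |a| = {mean_mag:.3f}")
```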

| Domain | Features | Description |
|---|---|---|
| Time | Mean | Sum of the values divided by the number of values |
| | Median | Middle value of the sorted values |
| | SD | Standard deviation: typical deviation of the values from their mean |
| | IQR | Interquartile range: difference between the third and first quartiles; a measure of the spread of the data |
| | Min | Lowest value |
| | Max | Highest value |
| | SVM | Signal vector magnitude: distinguishes between periods of activity and inactivity, i.e., identifies whether a person is performing an activity [45] |
| | SMA | Signal magnitude area: quantifies the intensity of movement, which is important for detecting a fall [53] |
| Frequency | PSD | Power spectral density: distribution of the signal’s power over frequency; it shows at which frequencies the variation is strong or weak |
| | DF | Dominant frequency: frequency of the spectral component with the highest magnitude [54] |
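
The feature table above defines the features only verbally. Below is a minimal, standard-library-only sketch of how they can be computed for one analysis window. It relies on several assumptions that do not come from the paper:

- common textbook definitions for SVM (mean Euclidean norm of the three axes) and SMA (mean of |x| + |y| + |z|);
- a naive DFT of the mean-removed window;
- a scalar PSD summary taken as the mean non-DC periodogram value.

The function names are hypothetical. For real data, `numpy.fft` or `scipy.signal.welch` would be the usual choice.

```python
import cmath
import math
import statistics


def periodogram(x):
    """One-sided power |X_k|^2 / N of the mean-removed signal for k = 0..N//2.

    A naive O(N^2) DFT keeps the sketch dependency-free.
    """
    n = len(x)
    mu = statistics.fmean(x)
    centered = [v - mu for v in x]
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(c * cmath.exp(-2j * math.pi * k * t / n) for t, c in enumerate(centered))
        spectrum.append(abs(coeff) ** 2 / n)
    return spectrum


def axis_features(x, fs):
    """Time- and frequency-domain features of one acceleration axis sampled at fs Hz."""
    q1, _, q3 = statistics.quantiles(x, n=4)
    power = periodogram(x)
    k_dom = max(range(1, len(power)), key=power.__getitem__)  # skip the DC bin
    return {
        "mean": statistics.fmean(x),
        "median": statistics.median(x),
        "sd": statistics.stdev(x),
        "iqr": q3 - q1,
        "min": min(x),
        "max": max(x),
        "df": k_dom * fs / len(x),           # dominant frequency in Hz
        "psd": statistics.fmean(power[1:]),  # assumed scalar summary of the spectrum
    }


def triaxial_features(ax, ay, az):
    """SVM and SMA, which combine all three axes of each sample."""
    n = len(ax)
    svm = sum(math.sqrt(x * x + y * y + z * z) for x, y, z in zip(ax, ay, az)) / n
    sma = sum(abs(x) + abs(y) + abs(z) for x, y, z in zip(ax, ay, az)) / n
    return {"svm": svm, "sma": sma}


# Usage: a 2 Hz sine sampled at 10 Hz for 2 s has its dominant frequency at 2 Hz.
fs = 10.0
signal = [math.sin(2 * math.pi * 2.0 * t / fs) for t in range(20)]
print(axis_features(signal, fs)["df"])  # → 2.0
```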

| ADL | Mean | Median | SD | IQR | Min | Max | SMA | DF | PSD |
|---|---|---|---|---|---|---|---|---|---|
| si-st | 0.0270 | 0.0112 | 0.2635 | 0.4180 | −0.418 | 0.5393 | 0.0270 | 4.0957 | 0.4166 |
| si-st | 0.1006 | 0.0410 | 0.2693 | 0.4717 | −0.350 | 0.6080 | 0.1006 | 4.0260 | 0.5076 |
| si-st | 0.0657 | −0.0195 | 0.2679 | 0.4451 | −0.336 | 0.6621 | 0.0657 | 3.9124 | 0.4950 |
| st-si | 0.1259 | 0.16115 | 0.2816 | 0.5474 | −0.348 | 0.5705 | 0.1259 | 4.0132 | 0.5882 |
| st-si | 0.0460 | 0.05314 | 0.2990 | 0.5293 | −0.450 | 0.5425 | 0.0460 | 4.3323 | 0.4854 |
| st-si | 0.0280 | 0.04247 | 0.1315 | 0.5870 | −0.484 | 0.4529 | 0.0280 | 4.3826 | 0.5209 |
| sit | −0.1633 | −0.1622 | 0.0142 | 0.0208 | −0.194 | −0.133 | −0.163 | 2.6134 | 0 |
| sit | −0.1503 | −0.2150 | 0.0096 | 0.1013 | −0.175 | −0.127 | −0.150 | 2.6521 | 0 |
| sit | −0.1326 | −0.1320 | 0.0116 | 0.0161 | −0.155 | −0.098 | −0.132 | 2.2441 | 0 |
| stand | −0.3144 | −0.3153 | 0.0139 | 0.0217 | −0.346 | −0.271 | −0.314 | 4.7428 | 0 |
| stand | −0.2801 | −0.2817 | 0.0179 | 0.0246 | −0.313 | −0.222 | −0.280 | 4.5632 | 0 |
| stand | −0.3228 | −0.3226 | 0.0121 | 0.0148 | −0.367 | −0.294 | −0.322 | 5.2891 | 0 |
| walk | −0.2271 | −0.2524 | 0.1219 | 0.1396 | −0.471 | 0.1130 | −0.227 | 4.0336 | 0 |
| walk | −0.2835 | −0.2963 | 0.1268 | 0.1864 | −0.546 | 0.1038 | −0.283 | 4.0899 | 0 |
| walk | −0.3027 | −0.3289 | 0.1239 | 0.1752 | −0.555 | 0.0192 | −0.302 | 5.2423 | 0 |

| Time | B | G | R | B2G | B2R | G2R | B-G | B-R | G-R | Class |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.1 | 161.66 | 145.61 | 152.88 | 111.01 | 105.74 | 95.247 | 16.044 | 8.7792 | −7.265 | R1 |
| 0.2 | 176.49 | 164.68 | 167.10 | 107.16 | 105.61 | 98.550 | 11.80 | 9.3847 | −2.418 | R1 |
| 0.3 | 177.40 | 155.90 | 157.11 | 113.78 | 112.90 | 99.229 | 21.494 | 20.283 | −1.210 | R1 |
| 0.6 | 139.56 | 104.14 | 107.77 | 134.01 | 129.49 | 96.629 | 35.419 | 31.797 | −3.632 | R2 |
| 0.7 | 141.98 | 103.23 | 103.83 | 137.53 | 136.73 | 99.416 | 38.749 | 38.144 | −0.605 | R2 |
| 0.8 | 145.31 | 98.691 | 102.02 | 147.23 | 142.43 | 96.735 | 46.621 | 43.291 | −3.330 | R2 |
| 1.1 | 209.18 | 207.37 | 205.55 | 100.87 | 101.76 | 100.88 | 1.8163 | 3.6328 | 1.8164 | R3 |
| 1.2 | 209.18 | 207.07 | 205.85 | 101.02 | 101.61 | 100.58 | 2.1191 | 3.3300 | 1.2109 | R3 |
| 1.3 | 209.18 | 207.37 | 205.85 | 100.85 | 101.61 | 100.73 | 1.8163 | 3.3300 | 1.5136 | R3 |
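
In the light-data table above, the derived columns are consistent with pairwise ratios of the B, G and R readings scaled by 100 (e.g., 100 × 161.66/145.61 ≈ 111.0 in the first row) and with pairwise differences (161.66 − 145.61 ≈ 16.04). This relationship is inferred from the printed values rather than stated in the text. The sketch below reproduces the columns with a hypothetical helper; small deviations come from the rounded B, G and R values in the table.

```python
def light_features(b, g, r):
    """Derived colour features: pairwise ratios (in %) and pairwise differences."""
    return {
        "B2G": 100.0 * b / g,
        "B2R": 100.0 * b / r,
        "G2R": 100.0 * g / r,
        "B-G": b - g,
        "B-R": b - r,
        "G-R": g - r,
    }


# One sample per room, with (time in s, B, G, R, room) copied from the table above.
SAMPLES = [
    (0.1, 161.66, 145.61, 152.88, "R1"),
    (0.6, 139.56, 104.14, 107.77, "R2"),
    (1.1, 209.18, 207.37, 205.55, "R3"),
]

for time_s, b, g, r, room in SAMPLES:
    feats = light_features(b, g, r)
    print(time_s, room, {name: round(value, 2) for name, value in feats.items()})
```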

Correct Detection Average during Walk Activity (%)

| Experiments | Activity | Direction | Location |
|---|---|---|---|
| Online test-1 | 97.90 | 87.61 | 94.79 |
| Online test-2 | 98.91 | 89.29 | 95.59 |
| Online test-3 | 98.54 | 92.83 | 91.62 |

Correct Detection Average during No-Walk Activity (%)

| Experiments | Activity | Direction | Location |
|---|---|---|---|
| Online test-1 | 95.25 | 99.20 | 98.99 |
| Online test-2 | 94.12 | 98.43 | 98.58 |
| Online test-3 | 95.49 | 97.47 | 99.61 |

Average Detection Difference between the Online Tests for Walk Activity (%)

| Between | Activity | Direction | Location |
|---|---|---|---|
| Online test-1 and 2 | 1.01 | 1.68 | 0.80 |
| Online test-1 and 3 | 0.64 | 5.22 | −3.16 |
| Online test-2 and 3 | −0.37 | 3.54 | −3.96 |

Average Detection Difference between the Online Tests for No-Walk Activity (%)

| Between | Activity | Direction | Location |
|---|---|---|---|
| Online test-1 and 2 | −1.12 | −0.76 | −0.41 |
| Online test-1 and 3 | 0.24 | −1.73 | 0.62 |
| Online test-2 and 3 | 1.37 | −0.97 | 1.03 |
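
Each entry in the two difference tables above is the later test's average minus the earlier test's average. For example, 98.91 − 97.90 = 1.01 for the walk activity between online tests 1 and 2. The sketch below recomputes all pairwise differences from the two tables of correct-detection averages. The helper name is hypothetical, and results derived from the rounded averages can differ from the printed differences by about ±0.01.

```python
# Correct-detection averages (%) copied from the tables above.
WALK = {
    "Online test-1": {"Activity": 97.90, "Direction": 87.61, "Location": 94.79},
    "Online test-2": {"Activity": 98.91, "Direction": 89.29, "Location": 95.59},
    "Online test-3": {"Activity": 98.54, "Direction": 92.83, "Location": 91.62},
}
NO_WALK = {
    "Online test-1": {"Activity": 95.25, "Direction": 99.20, "Location": 98.99},
    "Online test-2": {"Activity": 94.12, "Direction": 98.43, "Location": 98.58},
    "Online test-3": {"Activity": 95.49, "Direction": 97.47, "Location": 99.61},
}


def pairwise_differences(table):
    """Later test minus earlier test for every pair of online tests, rounded to 0.01."""
    tests = list(table)
    diffs = {}
    for i, earlier in enumerate(tests):
        for later in tests[i + 1:]:
            diffs[(earlier, later)] = {
                metric: round(table[later][metric] - table[earlier][metric], 2)
                for metric in table[earlier]
            }
    return diffs


for name, table in (("Walk", WALK), ("No-walk", NO_WALK)):
    for pair, d in pairwise_differences(table).items():
        print(name, pair, d)
```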

| Domain | Exp-1 | Exp-2 | Study-1 [55] | Study-2 [16] | Study-3 [56] | Study-4 [57] | Study-5 [58] |
|---|---|---|---|---|---|---|---|
| Time & frequency | 96.67 | 91.43 | - | 90.89 | - | - | - |
| Time | 96.67 | 91.43 | 93.52 | - | 87.00 | 96.75 | 95.00 |
| Frequency | 62.67 | 94.35 | 94.34 | - | 94.00 | - | 92.70 |

Exp.-1

| | Si-st | St-si | Walk | Sit | Stand |
|---|---|---|---|---|---|
| Si-st | 30 | 0 | 0 | 0 | 0 |
| St-si | 1 | 29 | 0 | 0 | 0 |
| Walk | 1 | 0 | 29 | 0 | 0 |
| Sit | 0 | 0 | 0 | 28 | 0 |
| Stand | 0 | 0 | 0 | 1 | 29 |

Exp.-2

| | Si-st | St-si | Walk | Sit | Stand |
|---|---|---|---|---|---|
| Si-st | 35 | 7 | 0 | 0 | 0 |
| St-si | 6 | 36 | 0 | 0 | 0 |
| Walk | 0 | 0 | 42 | 0 | 0 |
| Sit | 0 | 0 | 5 | 37 | 0 |
| Stand | 0 | 0 | 0 | 0 | 42 |
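
The overall accuracy of a confusion matrix is the sum of its diagonal divided by the total number of test instances. For the Exp.-2 matrix above this gives 192/210 ≈ 91.43 %, which agrees with the Exp-2 value in the comparison table. The sketch below computes this accuracy and a row-normalised diagonal. It assumes that rows are the true classes, which the table does not state, although each Exp.-2 row sums to 42 = 210/5. Under that assumption, the row-normalised diagonal is the per-class recall. Helper names are hypothetical.

```python
LABELS = ["Si-st", "St-si", "Walk", "Sit", "Stand"]

# Exp.-2 confusion matrix copied from the table above (row and column order = LABELS).
EXP2 = [
    [35, 7, 0, 0, 0],
    [6, 36, 0, 0, 0],
    [0, 0, 42, 0, 0],
    [0, 0, 5, 37, 0],
    [0, 0, 0, 0, 42],
]


def accuracy(matrix):
    """Overall accuracy in %: diagonal (correct) entries over all entries."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return 100.0 * correct / total


def per_class_rate(matrix, labels):
    """Diagonal entry divided by its row sum, in %, for each class."""
    return {label: 100.0 * matrix[i][i] / sum(matrix[i]) for i, label in enumerate(labels)}


print(round(accuracy(EXP2), 2))  # 192 of 210 correct → 91.43
print({k: round(v, 1) for k, v in per_class_rate(EXP2, LABELS).items()})
```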

| Time | R1 | R3 | D3 | D4 | Walk | No-walk | Rooms | ADL |
|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 100 | 0 | 0 | 100 | 0 | R1 | Walk |
| 2 | 0 | 100 | 100 | 0 | 66.66 | 33.33 | R1 | Walk |
| 3 | 0 | 100 | 100 | 0 | 85.71 | 14.29 | R1 | Walk |
| 4 | 0 | 100 | 100 | 0 | 100 | 0 | R1 | Walk |
| 5 | 85.71 | 14.29 | 100 | 0 | 83.33 | 16.67 | R1 | Walk |
| 6 | 100 | 0 | 100 | 0 | 100 | 0 | R1 | Walk |
| 7 | 100 | 0 | 100 | 0 | 100 | 0 | R1 | Walk |
| 8 | 100 | 0 | 100 | 0 | 100 | 0 | R1 | Walk |
| 9 | 100 | 0 | 100 | 0 | 100 | 0 | R1 | Walk |
| Ave. | 53.97 | 46.03 | 98.14 | 0 | 92.86 | 7.14 | - | - |
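
For the R1, R3, Walk and No-walk columns of the table above, the "Ave." row is the arithmetic mean of the nine per-second values. For example, (4 × 0 + 85.71 + 4 × 100)/9 ≈ 53.97 for R1. The sketch below recomputes those four averages. It treats each cell as a percentage share, which is an interpretation rather than something stated in the text, and the helper name is hypothetical.

```python
# Per-second values (%) copied from the table above, one list per column.
COLUMNS = {
    "R1": [0, 0, 0, 0, 85.71, 100, 100, 100, 100],
    "R3": [100, 100, 100, 100, 14.29, 0, 0, 0, 0],
    "Walk": [100, 66.66, 85.71, 100, 83.33, 100, 100, 100, 100],
    "No-walk": [0, 33.33, 14.29, 0, 16.67, 0, 0, 0, 0],
}


def column_means(columns):
    """Arithmetic mean of each column, rounded to two decimals like the table."""
    return {name: round(sum(values) / len(values), 2) for name, values in columns.items()}


print(column_means(COLUMNS))
```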


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Salem, Z.; Weiss, A.P. Improved Spatiotemporal Framework for Human Activity Recognition in Smart Environment. Sensors 2023, 23, 132. https://doi.org/10.3390/s23010132
