Formation Control of Automated Guided Vehicles in the Presence of Packet Loss

Abstract

1. Introduction

- Study of decentralized leader–follower formation control for non-holonomic automated guided vehicles (AGVs) using a linear quadratic regulator (LQR). LQR is a simple yet popular control approach that can be easily implemented and that has not yet been used for formation control.

- Analysis of the impact of packet loss on the formation control of AGVs.

- Improving the performance of the LQR controller with machine learning, here long short-term memory (LSTM) networks, to cope with packet loss, rather than using a highly non-linear and complicated controller such as a sliding mode controller.

- Development of an LSTM-based mechanism to compensate for packet loss, and its application to the formation control of non-holonomic differential drive robots, which are more sensitive to network uncertainties because of their non-holonomic constraints.

- Comparison of LSTM with a gated recurrent unit (GRU) network and MCP for compensating 30% and 50% packet loss, through simulation in MATLAB/Simulink.
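To make the claim that LQR is easy to implement concrete, the following is a minimal sketch of computing an LQR gain for one follower. It is an illustration under stated assumptions, not the paper's controller: the tracking-error model is the standard linearization of unicycle kinematics about a reference moving with linear speed `v_r` and angular speed `w_r`, discretized with a forward-Euler step `dt`, and the numeric values of `v_r`, `w_r`, `dt`, `Q`, and `R` are hypothetical. The gain is obtained by iterating the discrete-time Riccati equation until it converges.

```python
import numpy as np


def unicycle_error_model(v_r, w_r, dt):
    """Assumed linearized tracking-error model of a unicycle (differential drive) robot.

    State e = [e_x, e_y, e_theta]: leader/reference pose error in the follower's body frame.
    Input u = [u_v, u_w] with u_v = v_r*cos(e_theta) - v and u_w = w_r - w.
    Returns the forward-Euler discretization (Ad, Bd).
    """
    A = np.array([[0.0, w_r, 0.0],
                  [-w_r, 0.0, v_r],
                  [0.0, 0.0, 0.0]])
    B = np.array([[1.0, 0.0],
                  [0.0, 0.0],
                  [0.0, 1.0]])
    return np.eye(3) + dt * A, dt * B


def dlqr(A, B, Q, R, max_iters=10_000, tol=1e-10):
    """Discrete-time LQR gain K for u = -K e, via Riccati value iteration."""
    P = Q.copy()
    for _ in range(max_iters):
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        # P <- Q + A^T P A - A^T P B (R + B^T P B)^{-1} B^T P A
        P_next = Q + A.T @ P @ (A - B @ K)
        converged = np.max(np.abs(P_next - P)) < tol
        P = P_next
        if converged:
            break
    K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
    return K, P


# Hypothetical operating point and weights (not taken from the paper).
Ad, Bd = unicycle_error_model(v_r=0.5, w_r=0.25, dt=0.05)
K, P = dlqr(Ad, Bd, Q=np.diag([10.0, 10.0, 1.0]), R=np.eye(2))
# At run time: u = -K @ e, then v = v_r*cos(e_theta) - u[0] and w = w_r - u[1].
closed_loop = Ad - Bd @ K
print(K.shape, np.max(np.abs(np.linalg.eigvals(closed_loop))) < 1.0)  # (2, 3) True
```

The only online cost is a 2×3 matrix-vector product per control step, which is what makes LQR attractive compared with heavier non-linear controllers; the gain itself is computed offline.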

2. Related Work

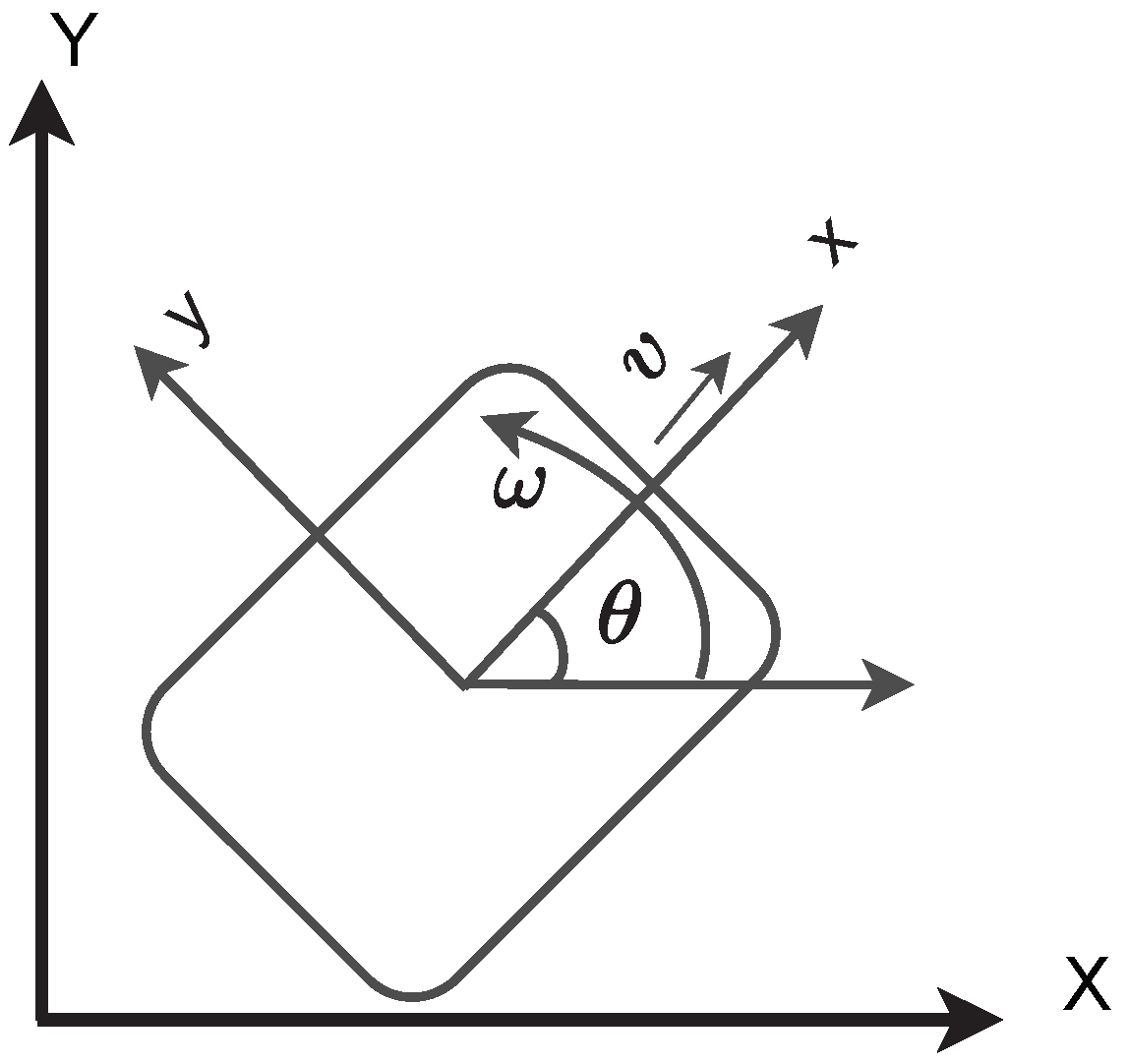

3. Mathematical System Model

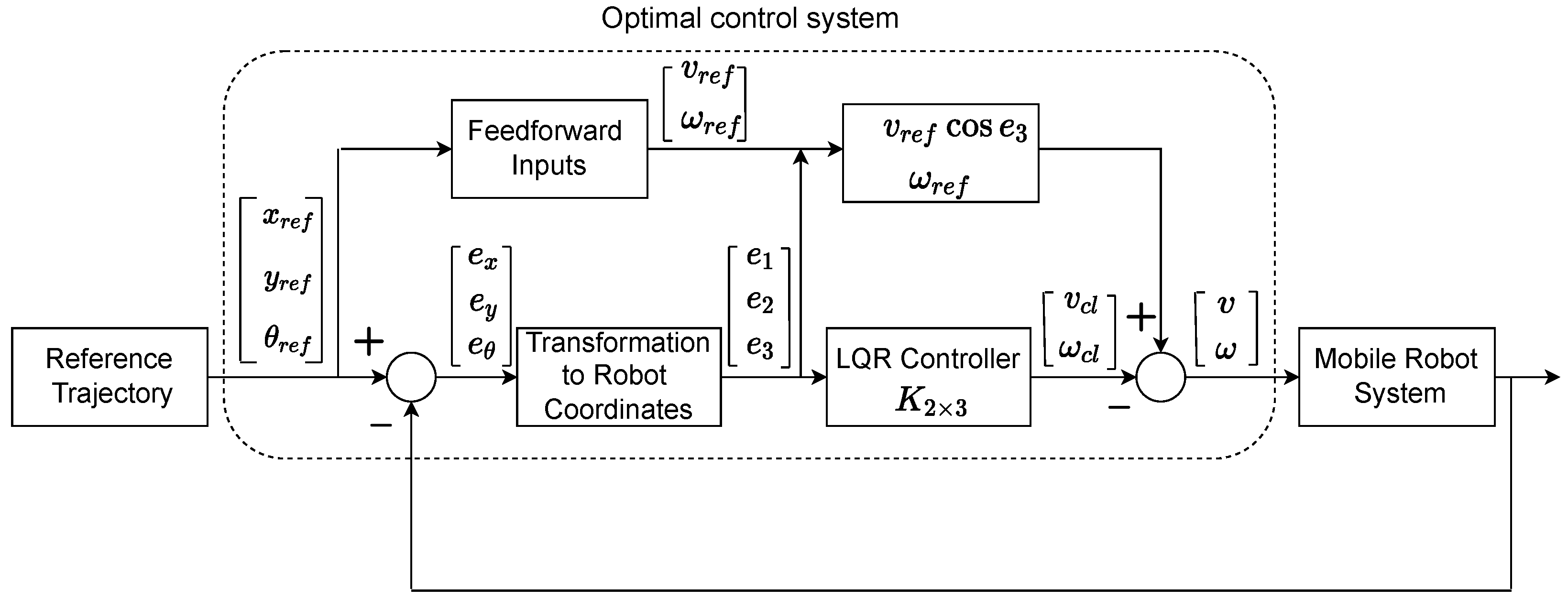

4. Controller Design

5. Formation Control under Packet Loss

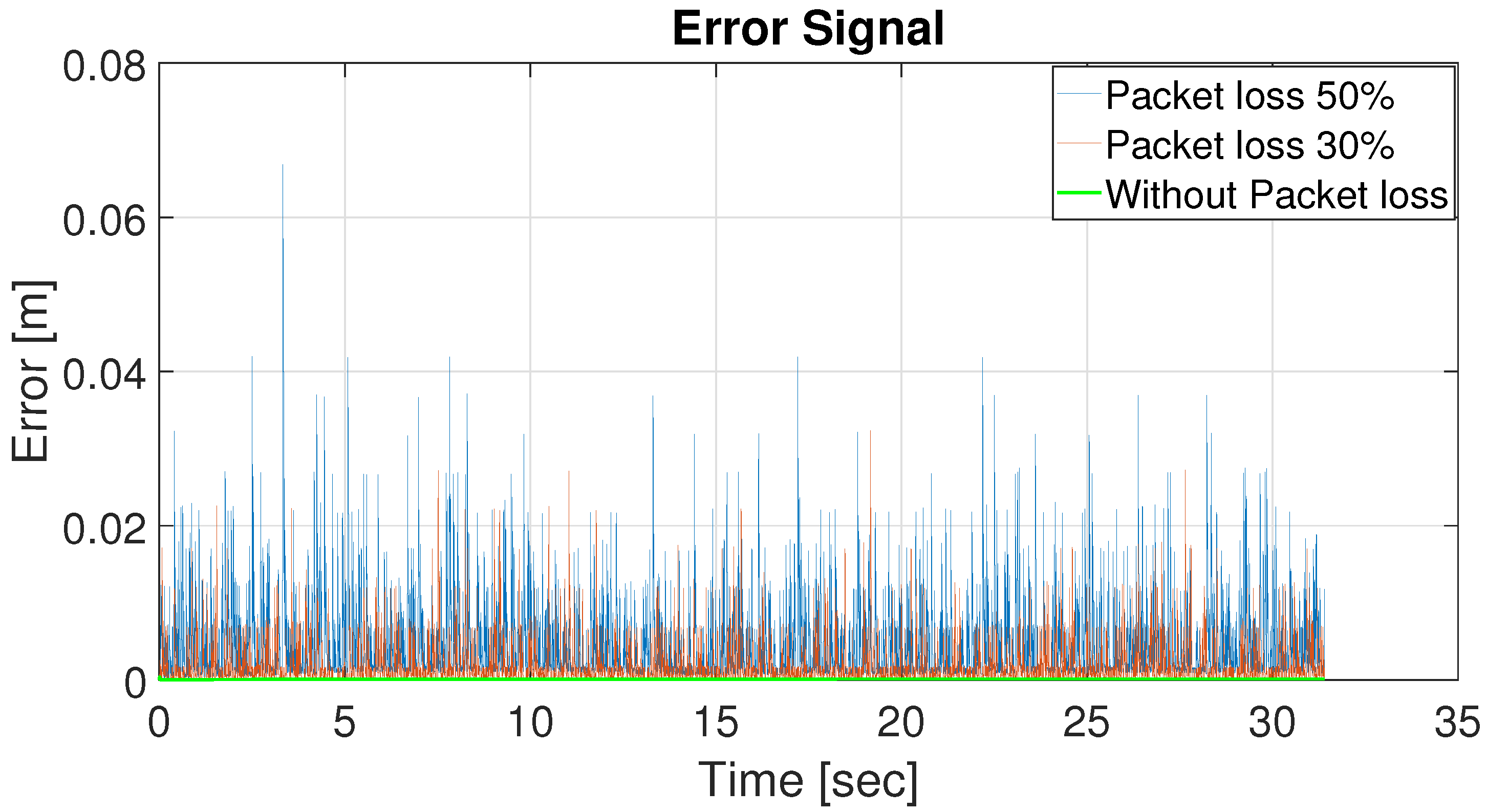

5.1. Impact of Packet Loss on Formation Control

5.2. Long Short-Term Memory to Cope with Packet Loss

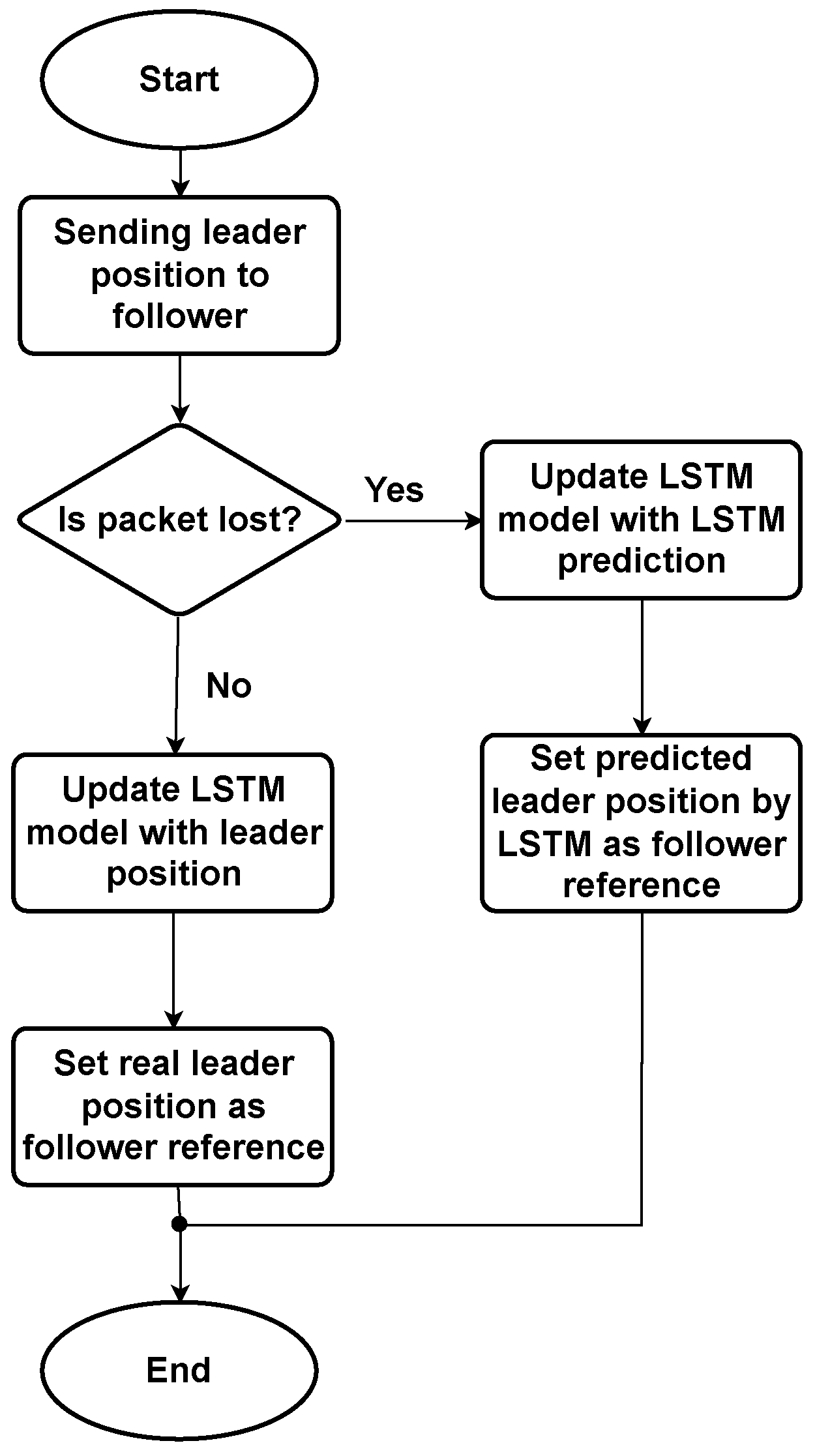

5.2.1. Architecture of LSTM Prediction and Control

- Forget gate: The forget gate ($f_t$), given by Equation (18), decides whether the information from the previous cell state should be discarded or not:

  $$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \tag{18}$$

  where $f_t$ is the forget gate, $\sigma$ is the sigmoid function, $W_f$ is the weight matrix, $b_f$ is the bias term, $h_{t-1}$ is the previous hidden layer output, and $x_t$ is the new input.

- Input gate: This gate determines which information is stored in the cell state. It consists of the two parts given in Equation (19): the first part, which uses $\sigma$, identifies which values are to be updated ($i_t$), and the second part, which uses $\tanh$, generates the new candidate values ($\tilde{C}_t$):

  $$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right), \qquad \tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right) \tag{19}$$

  where $i_t$ is the input gate, $\tilde{C}_t$ is the candidate state of the input, $\sigma$ and $\tanh$ are the sigmoid and hyperbolic tangent functions, respectively, $W_i$ and $W_C$ are the weight matrices, $b_i$ and $b_C$ are the bias terms, $h_{t-1}$ is the previous hidden layer output, and $x_t$ is the new input.

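- Updating cell state: The cell state is updated by combining the new candidate memory with the long-term memory, as given by Equation (20):

  $$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{20}$$

  where $C_t$ and $C_{t-1}$ are the current and previous memory states, $f_t$ is the forget gate, $i_t$ is the input gate, $\tilde{C}_t$ is the input candidate state, and $\odot$ denotes element-wise multiplication.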

- Output gate: This gate determines the output of the LSTM, given by Equation (21):

  $$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right), \qquad h_t = o_t \odot \tanh(C_t) \tag{21}$$

  where $o_t$ is the output gate, $W_o$ and $b_o$ are the weight matrix and bias term, respectively, $h_{t-1}$ and $h_t$ are the previous and current hidden layer outputs, $x_t$ is the new input, and $C_t$ is the current state of the memory block. The first part of Equation (21), which includes $\sigma$, determines which part of the cell state will be output ($o_t$), and the second part passes the cell state through $\tanh$ and multiplies the result element-wise by the output of the sigmoid layer.
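The four gate equations can be checked against a direct implementation. The sketch below is a single LSTM time step in NumPy written from Equations (18) to (21); the dictionary layout of the weights (`W`, `b` keyed by gate), the helper names, and the random untrained weights in the usage example are illustrative assumptions rather than the authors' MATLAB network.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step following Eqs. (18)-(21).

    W[g] has shape (n_hidden, n_hidden + n_input) and multiplies [h_{t-1}, x_t];
    b[g] has shape (n_hidden,). Gate keys: 'f' forget, 'i' input,
    'c' candidate, 'o' output.
    """
    z = np.concatenate([h_prev, x_t])           # [h_{t-1}, x_t]
    f_t = sigmoid(W["f"] @ z + b["f"])          # Eq. (18)
    i_t = sigmoid(W["i"] @ z + b["i"])          # Eq. (19), gate part
    c_tilde = np.tanh(W["c"] @ z + b["c"])      # Eq. (19), candidate part
    c_t = f_t * c_prev + i_t * c_tilde          # Eq. (20)
    o_t = sigmoid(W["o"] @ z + b["o"])          # Eq. (21), gate part
    h_t = o_t * np.tanh(c_t)                    # Eq. (21), hidden output
    return h_t, c_t


# Usage with random (untrained) weights on leader samples from a unit circle.
rng = np.random.default_rng(0)
n_in, n_hid = 3, 10
W = {g: 0.1 * rng.standard_normal((n_hid, n_hid + n_in)) for g in "fico"}
b = {g: np.zeros(n_hid) for g in "fico"}
h, c = np.zeros(n_hid), np.zeros(n_hid)
for k in range(20):
    theta = 0.1 * k
    # Leader state [x, y, heading] on a counter-clockwise unit circle.
    x = np.array([np.cos(theta), np.sin(theta), theta + np.pi / 2])
    h, c = lstm_step(x, h, c, W, b)
print(h.shape)  # (10,)
```

For prediction, a trained network would add an output (fully connected) layer that maps $h_t$ to the predicted leader state; that layer is omitted here.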

5.2.2. Application of LSTM for Leader Position Prediction
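The compensation idea stated in the contributions (a follower substitutes a predicted leader state whenever a leader packet is lost) can be sketched in a few lines. This sketch assumes independent (Bernoulli) packet drops with probability `loss_prob`. Because the trained LSTM is not reproduced here, a hypothetical constant-velocity extrapolator, `constant_velocity`, stands in as the predictor, while `hold_last` represents no compensation (the follower reuses the last known state). The circular leader path, its radius, and the sampling are illustrative, and the printed errors are not the paper's results.

```python
import numpy as np


def simulate_received_leader(true_states, loss_prob, predictor, rng):
    """Leader states a follower would use under i.i.d. packet loss (assumed model).

    true_states: array (T, 3) of leader [x, y, heading] samples.
    predictor:   callable(history) -> next state, used only when a packet is lost.
    """
    used = np.array(true_states, dtype=float)  # copy; the first packet is assumed received
    for k in range(1, len(used)):
        if rng.random() < loss_prob:
            used[k] = predictor(used[:k])      # lost: substitute a prediction
    return used


def hold_last(history):
    """No compensation: reuse the last state the follower knows."""
    return history[-1]


def constant_velocity(history):
    """Hypothetical stand-in predictor: linear extrapolation from the last two states."""
    if len(history) < 2:
        return history[-1]
    return 2.0 * history[-1] - history[-2]


t = np.linspace(0.0, 2.0 * np.pi, 400)
leader = np.column_stack([2.0 * np.cos(t), 2.0 * np.sin(t), t + np.pi / 2])
for p in (0.3, 0.5):
    for name, predictor in (("hold last", hold_last), ("extrapolate", constant_velocity)):
        # Same seed for both predictors, so both see the same loss pattern.
        used = simulate_received_leader(leader, p, predictor, np.random.default_rng(1))
        rmse = np.sqrt(np.mean((used - leader) ** 2, axis=0))
        print(f"{p:.0%} loss, {name}: RMSE x={rmse[0]:.2e}, y={rmse[1]:.2e}, heading={rmse[2]:.2e}")
```

Swapping `constant_velocity` for a function that runs a trained sequence model on the recent history is the point where an LSTM, GRU, or other predictor would plug in.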

6. Performance Evaluation

6.1. Prediction Accuracy of LSTM, GRU, and MCP

6.2. Simulation Results

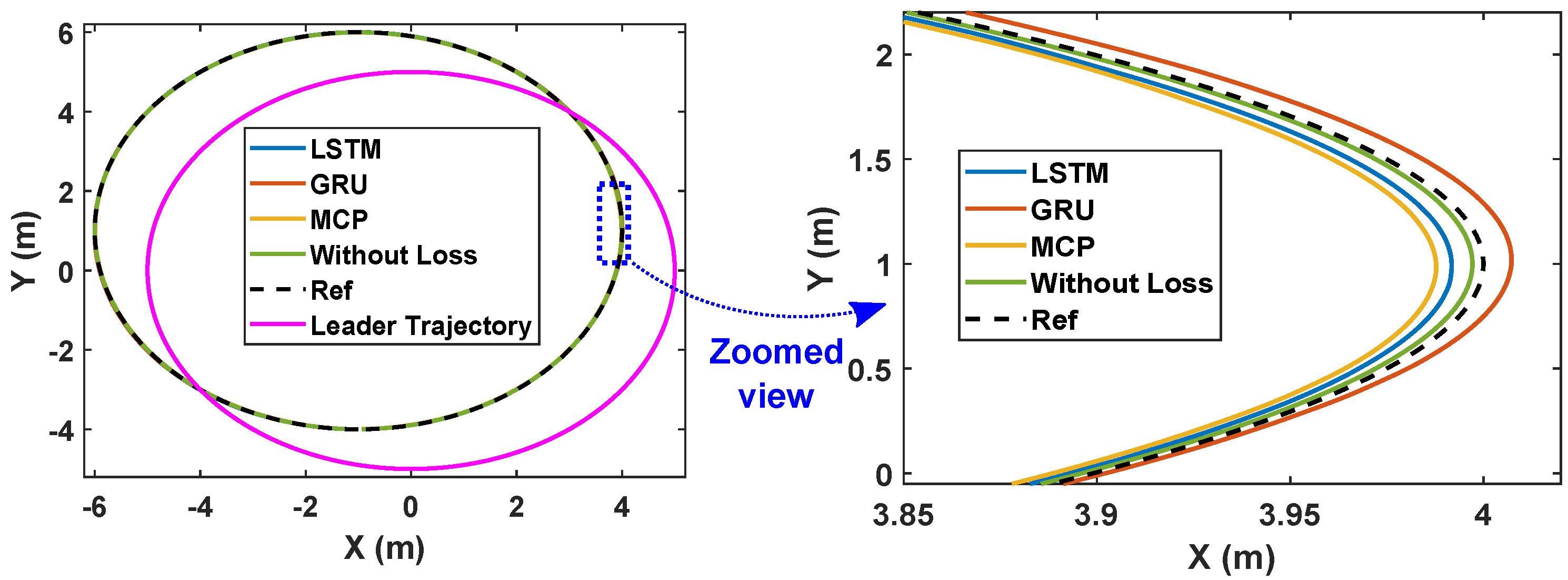

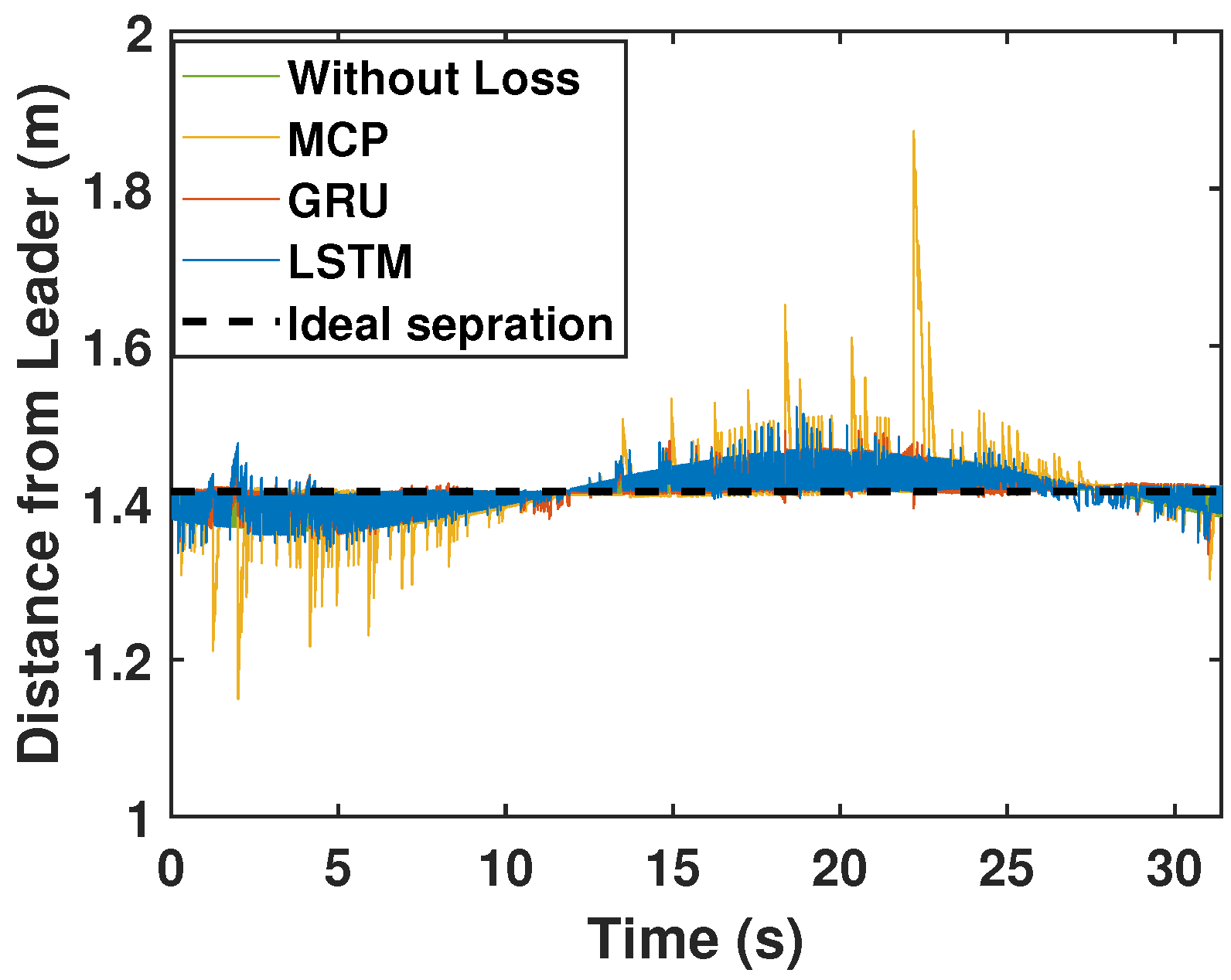

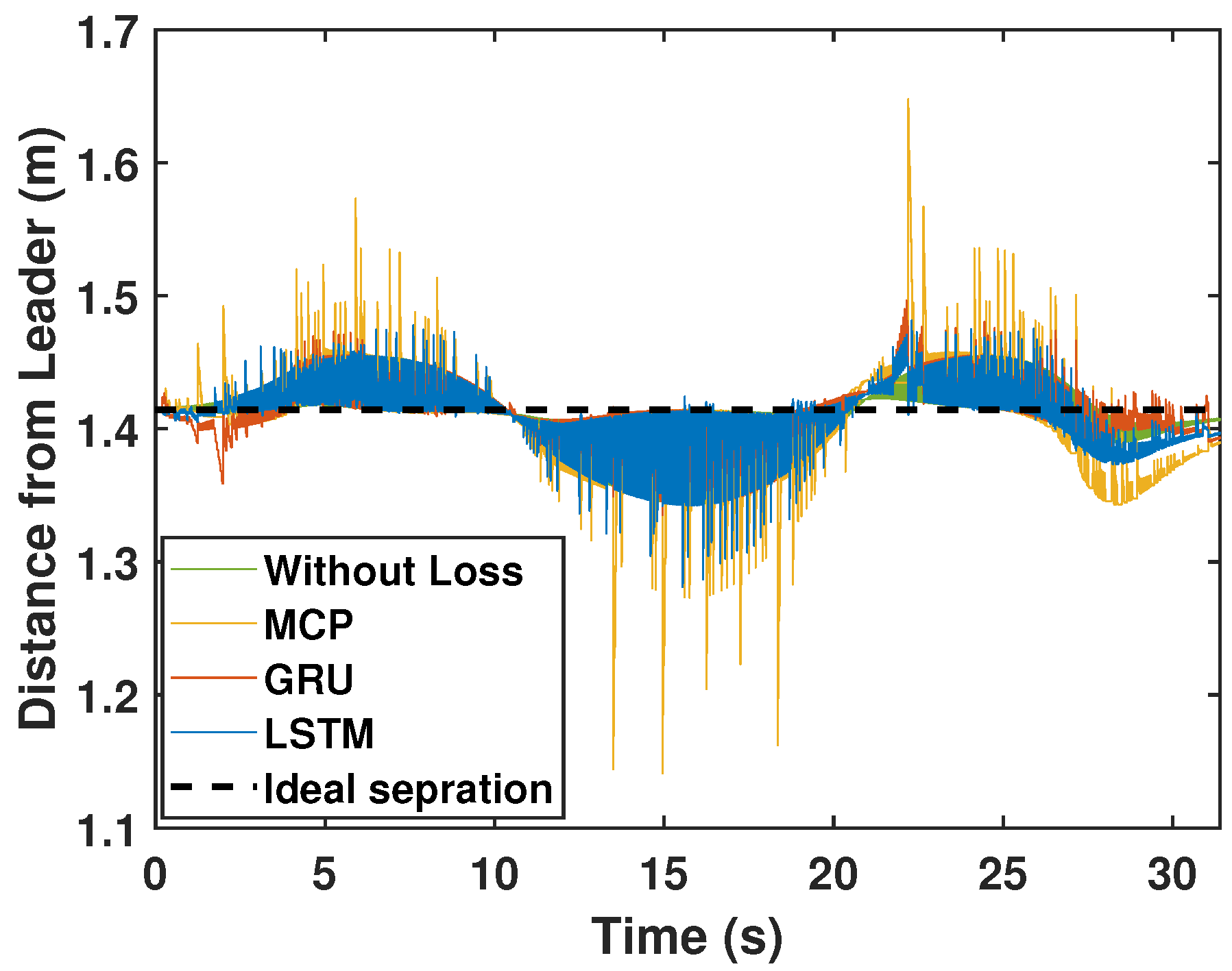

6.2.1. Circular Path

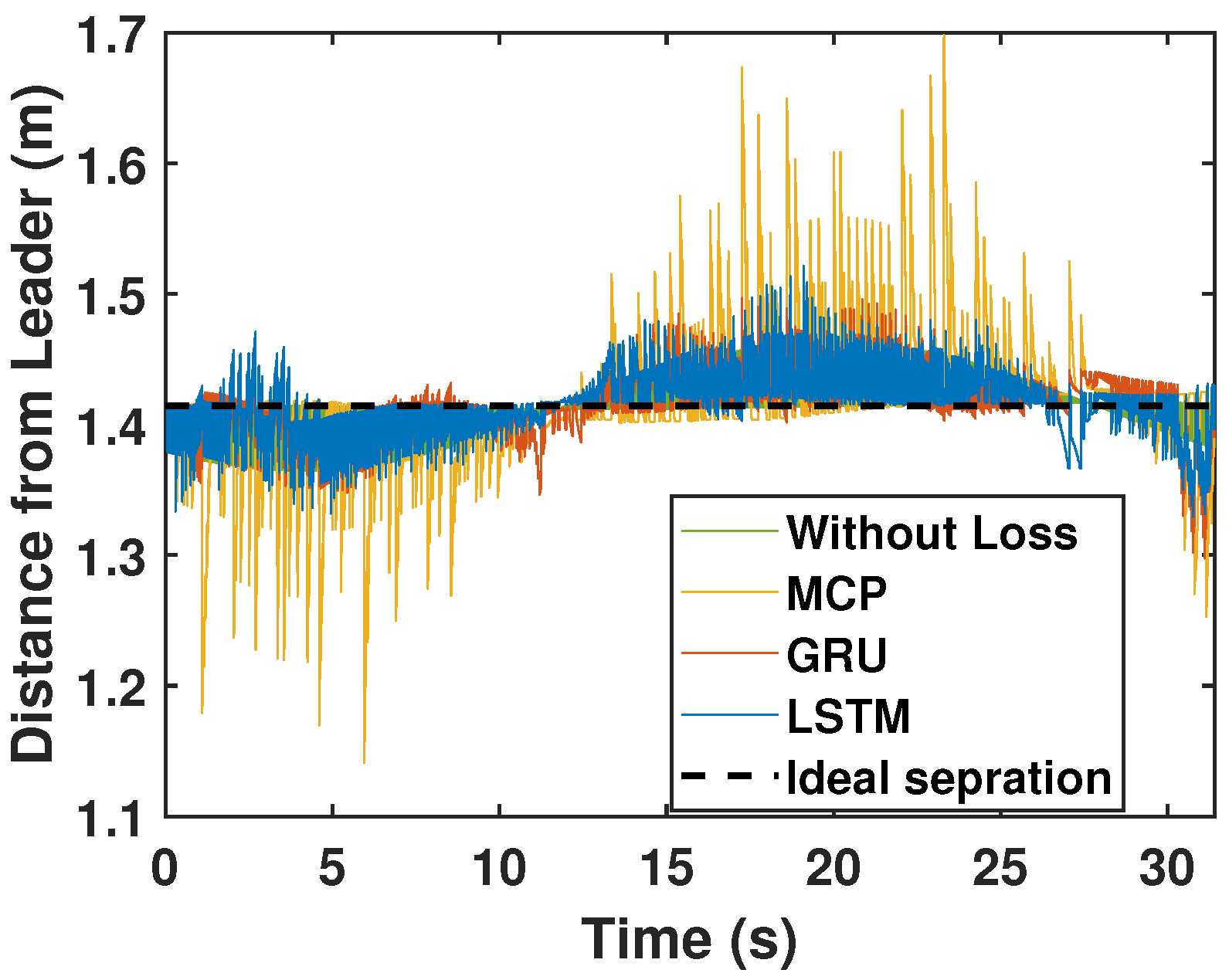

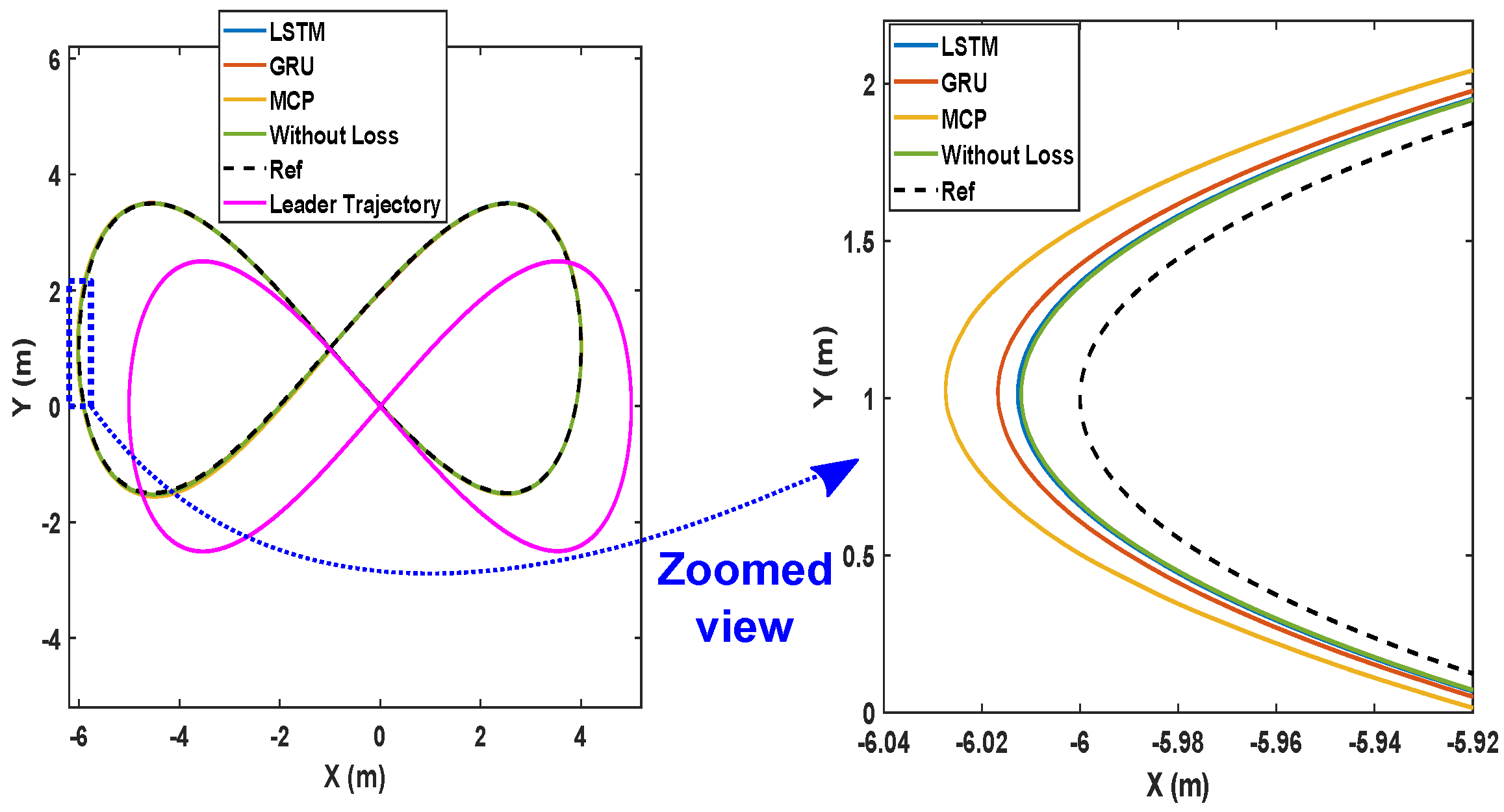

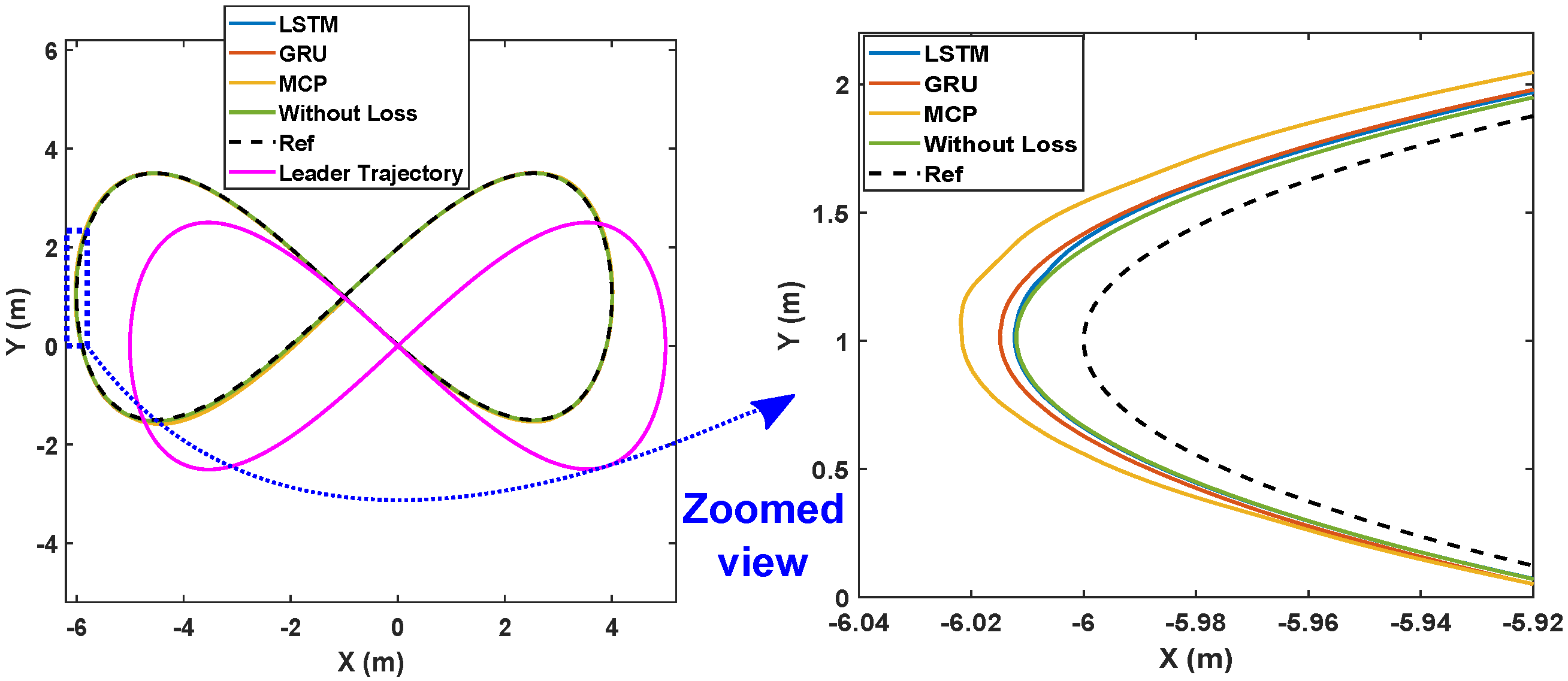

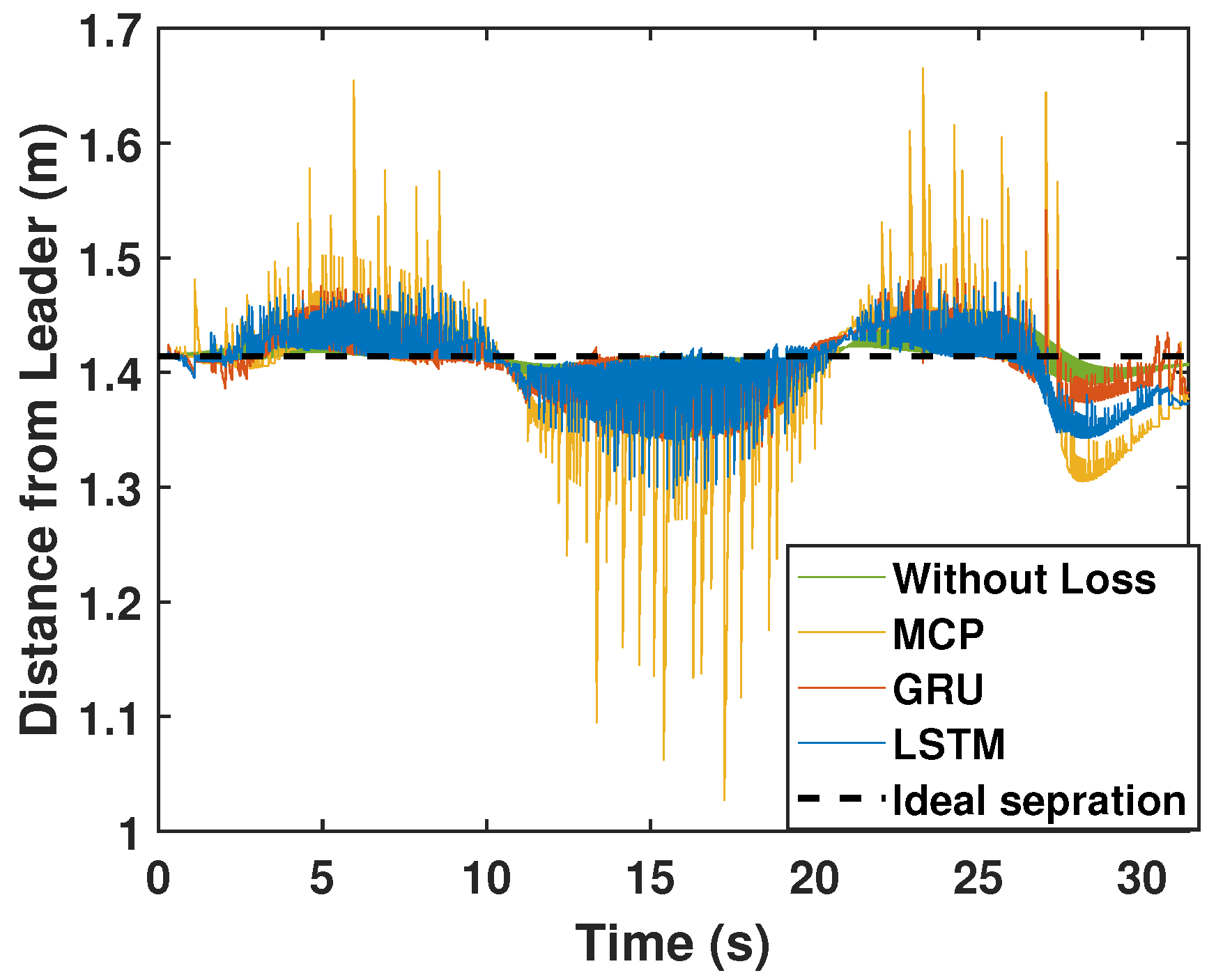

6.2.2. Eight-Shaped Trajectory

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Method | RMSE-X (30% loss) | RMSE-Y (30% loss) | RMSE-Heading Angle (30% loss) | RMSE-X (50% loss) | RMSE-Y (50% loss) | RMSE-Heading Angle (50% loss) |
|---|---|---|---|---|---|---|
| LSTM | 3.19 × 10 | 3.46 × 10 | 9.8 × 10 | 3 × 10 | 3.71 × 10 | 1.01 × 10 |
| GRU | 3.35 × 10 | 3.52 × 10 | 1.17 × 10 | 3.41 × 10 | 3.72 × 10 | 1.43 × 10 |
| MCP | 3.47 × 10 | 4.28 × 10 | 1.10 × 10 | 5.53 × 10 | 5.70 × 10 | 1.59 × 10 |

| LSTM Hyperparameter | X | Y | Heading Angle |
|---|---|---|---|
| Epochs | 400 | 400 | 400 |
| Hidden nodes | 10 | 10 | 7 |
| Batch size | 128 | 128 | 128 |
| Learning rate | 0.005 | 0.01 | 0.1 |
| Learning rate drop factor | 0.2 | 0.2 | 0.2 |
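The learning rate drop factor in the table above scales the learning rate down at fixed intervals during training. Assuming a step-decay ("piecewise") schedule of the kind configured through MATLAB's `trainingOptions` (`LearnRateDropFactor`, `LearnRateDropPeriod`), the rate in force after a given number of completed epochs can be computed as below. The drop period is not listed in the table, so the value of 125 epochs used here is hypothetical.

```python
def piecewise_learning_rate(epoch: int, initial_lr: float, drop_factor: float, drop_period: int) -> float:
    """Step-decay schedule: multiply the rate by drop_factor every drop_period epochs.

    `epoch` counts completed epochs, starting at 0.
    """
    return initial_lr * drop_factor ** (epoch // drop_period)


# Initial rates and drop factor from the table; the drop period of 125 is an assumption.
for channel, lr0 in (("X", 0.005), ("Y", 0.01), ("Heading Angle", 0.1)):
    schedule = [piecewise_learning_rate(e, lr0, 0.2, 125) for e in (0, 125, 250, 375)]
    print(channel, [f"{lr:.1e}" for lr in schedule])
```

With a factor of 0.2, each drop reduces the rate fivefold, so over 400 epochs and a 125-epoch period the final rate would be 0.2³ = 0.008 times the initial one.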

| Packet Loss | Prediction Method | RMSE-X | RMSE-Y | RMSE-Heading Angle |
|---|---|---|---|---|
| 0% | - | 2.76 × 10 | 2.77 × 10 | 7.8 × 10 |
| 30% | LSTM | 2.45 × 10 | 2.81 × 10 | 8.3 × 10 |
| 30% | GRU | 2.55 × 10 | 3.07 × 10 | 9.7 × 10 |
| 30% | MCP | 6.23 × 10 | 7.20 × 10 | 1.94 × 10 |
| 50% | LSTM | 2.39 × 10 | 3.04 × 10 | 9.1 × 10 |
| 50% | GRU | 2.60 × 10 | 3.49 × 10 | 1.28 × 10 |
| 50% | MCP | 8.94 × 10 | 9.15 × 10 | 2.68 × 10 |

| Packet Loss | Prediction Method | RMSE-X | RMSE-Y | RMSE-Heading Angle |
|---|---|---|---|---|
| 0% | - | 2.39 × 10 | 2.74 × 10 | 1.25 × 10 |
| 30% | LSTM | 2.08 × 10 | 2.63 × 10 | 1.18 × 10 |
| 30% | GRU | 2.18 × 10 | 2.58 × 10 | 1.33 × 10 |
| 30% | MCP | 5.35 × 10 | 6.67 × 10 | 3.04 × 10 |
| 50% | LSTM | 2.09 × 10 | 2.77 × 10 | 1.45 × 10 |
| 50% | GRU | 2.57 × 10 | 2.67 × 10 | 1.80 × 10 |
| 50% | MCP | 7.94 × 10 | 9.36 × 10 | 4.84 × 10 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sedghi, L.; John, J.; Noor-A-Rahim, M.; Pesch, D. Formation Control of Automated Guided Vehicles in the Presence of Packet Loss. Sensors 2022, 22, 3552. https://doi.org/10.3390/s22093552
