Pedestrian and Animal Recognition Using Doppler Radar Signature and Deep Learning

Abstract

1. Introduction

- Classification based on target radar cross-section (RCS) estimates.

- Classification based on target RCS ratios.

- Classification based on target RCS distributions.

- Classification based on target modulation signatures.

- Classification based on the target polarization scattering matrix.

- Classification based on other scattering mechanisms.

- Classification based on target kinematics.
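Of the categories above, classification based on modulation signatures is the family that a Doppler-signature approach belongs to. As a minimal sketch, assuming a segment is stored as a complex I/Q matrix with pulses (slow time) along the rows and range cells along the columns, a Doppler map can be obtained with a windowed FFT across pulses. The function name `doppler_spectrogram`, the Hann window, and the dB scaling are illustrative assumptions, not the paper's documented preprocessing.

```python
import numpy as np


def doppler_spectrogram(iq: np.ndarray) -> np.ndarray:
    """Convert a (pulses x range cells) complex I/Q matrix into a log-magnitude Doppler map.

    Assumption: rows are slow-time pulses and columns are range cells.
    """
    # Hann window along slow time (one weight per pulse) to reduce spectral leakage.
    window = np.hanning(iq.shape[0])[:, None]
    # FFT across pulses produces the Doppler (radial velocity) axis.
    spectrum = np.fft.fft(iq * window, axis=0)
    # Move zero Doppler to the centre row so approaching and receding targets are symmetric.
    spectrum = np.fft.fftshift(spectrum, axes=0)
    # Convert magnitude to dB; the small offset avoids log10(0).
    return 20.0 * np.log10(np.abs(spectrum) + 1e-12)


if __name__ == "__main__":
    # Synthetic target with constant radial velocity: a tone at Doppler bin 5 out of 32.
    n = np.arange(32)
    iq = np.tile(np.exp(2j * np.pi * 5 * n / 32)[:, None], (1, 8))
    spec = doppler_spectrogram(iq)
    print(spec.shape, int(np.argmax(spec[:, 0])))
```

A constant-velocity target appears as a single bright Doppler row (bin 5 maps to row 21 after `fftshift`); the limb motion of pedestrians and animals spreads additional micro-Doppler energy around that row, which is the kind of structure a classifier can learn from.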

2. Related Work

3. Methods

3.1. Problem Definition

3.2. MAFAT Radar Challenge

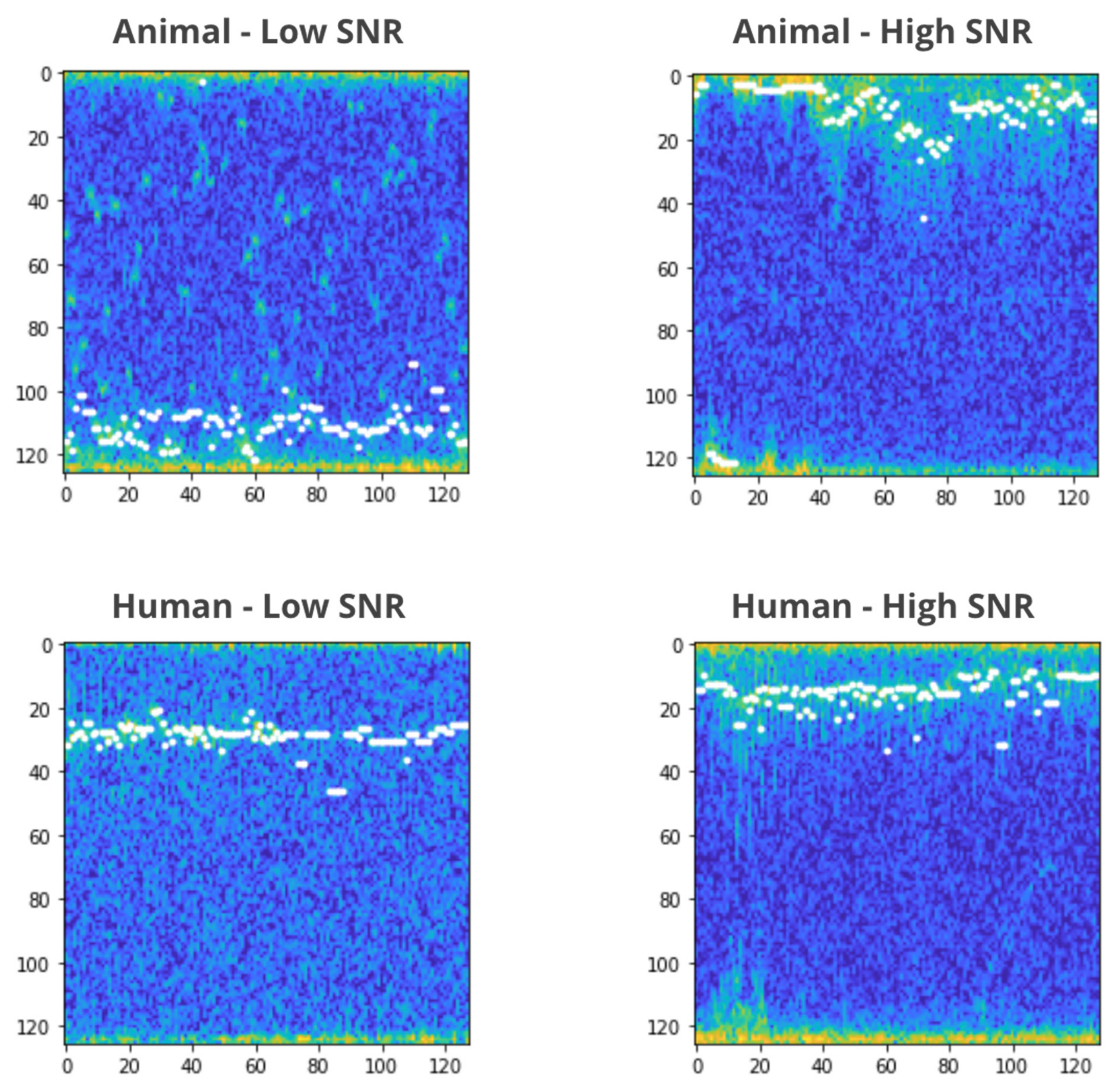

3.2.1. Data

3.2.2. Generalization Considerations

- A total of 1510 tracks in the training set;

- A total of 106 segments in the public test set and 6656 segments in the training set;

- In total, there are 566 high SNR tracks and 1144 low SNR tracks in the training set; 200 tracks are high SNR in one part and low SNR in the other, so they are counted in both groups (566 + 1144 − 200 = 1510);

- In total, there are 2465 high SNR segments and 4191 low SNR segments in the training set;

- Segments are taken from multiple locations. A given location is not guaranteed to appear in only one dataset; however, because the goal is to train models that generalize well to new, unseen locations, several locations appear only in the training set or only in the test set;

- The training and test data do not necessarily come from the same distribution. Participants are therefore encouraged to split the training set into training and validation sets (via cross-validation or other methods) so that the validation set resembles the test set.
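One way to follow the advice above is to split by group rather than by segment, so that every segment from a given location (or track) lands entirely in either the training or the validation fold, mimicking a test set made of unseen locations. The sketch below assumes each segment carries a hypothetical group key such as a location or track identifier; it is a plain greedy group k-fold, not necessarily the procedure the authors or the challenge organizers used.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def group_kfold(groups: Sequence[str], k: int) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, val_indices) pairs where no group spans two folds.

    `groups[i]` is a hypothetical group key (e.g. location or track id) for sample i.
    """
    if k < 2:
        raise ValueError("k must be at least 2")
    # Collect sample indices per group.
    by_group: Dict[str, List[int]] = defaultdict(list)
    for idx, g in enumerate(groups):
        by_group[g].append(idx)
    # Greedy balancing: place the largest groups first, each into the currently smallest fold.
    folds: List[List[int]] = [[] for _ in range(k)]
    for g in sorted(by_group, key=lambda key: len(by_group[key]), reverse=True):
        smallest = min(range(k), key=lambda f: len(folds[f]))
        folds[smallest].extend(by_group[g])
    # Each fold serves once as validation; all other folds form the training set.
    splits = []
    for f in range(k):
        val = sorted(folds[f])
        train = sorted(i for j in range(k) if j != f for i in folds[j])
        splits.append((train, val))
    return splits


if __name__ == "__main__":
    groups = ["loc_a"] * 4 + ["loc_b"] * 3 + ["loc_c"] * 2 + ["loc_d"]
    for train, val in group_kfold(groups, 2):
        print("val:", val, "locations:", sorted({groups[i] for i in val}))
```

Grouping by location rather than by track is the stricter choice: it prevents segments recorded at the same site from appearing on both sides of the split, so validation scores are less likely to overstate performance on new locations.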

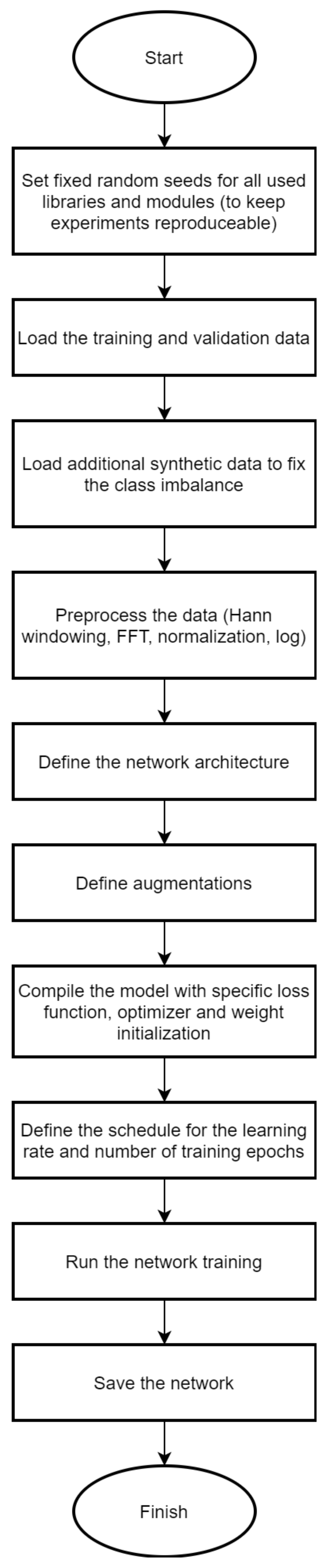

3.3. Data Pipeline

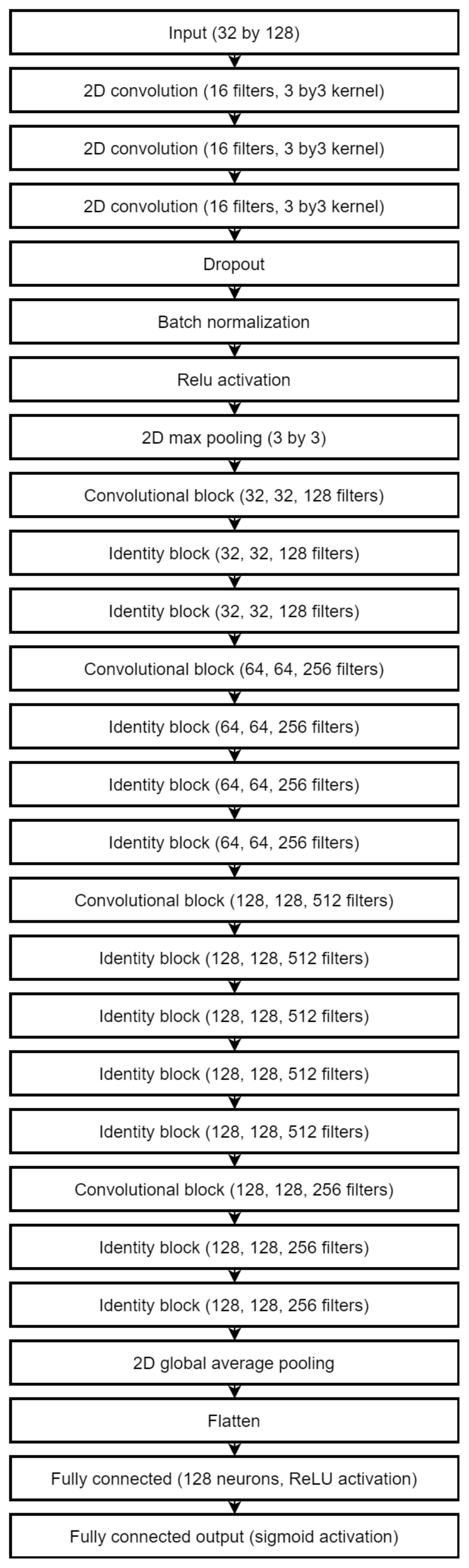

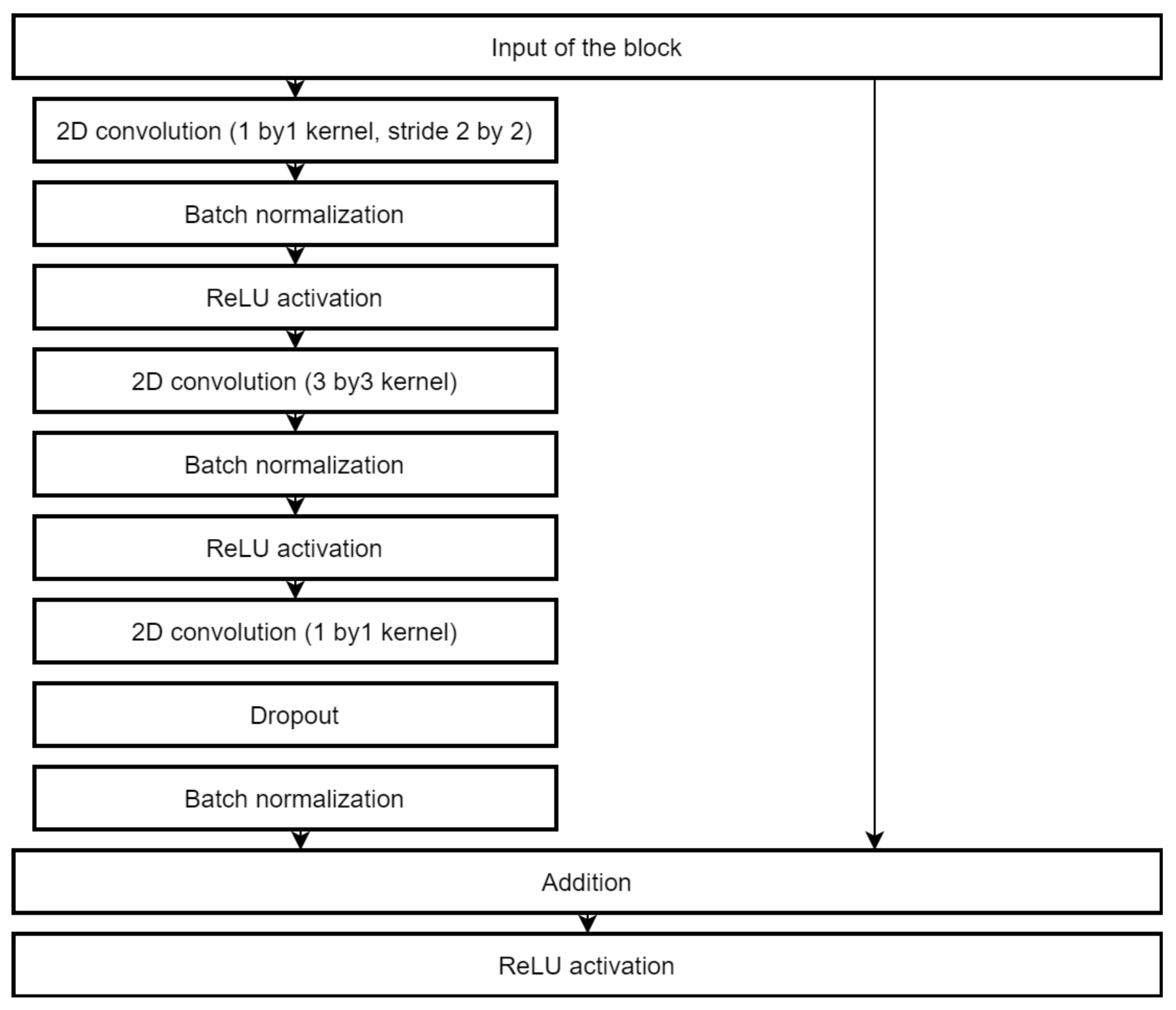

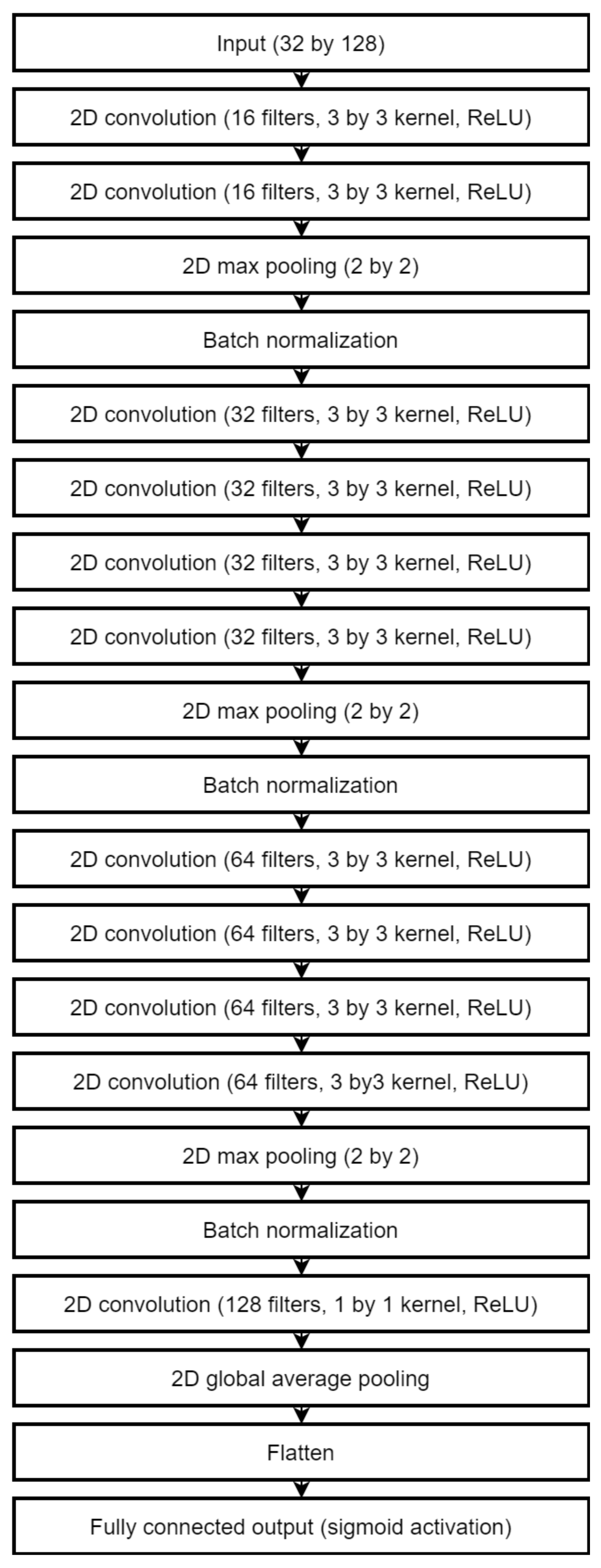

3.4. Neural Network Design

4. Experiments and Results

4.1. Data Set

4.2. Data Preprocessing

4.3. Image Augmentation

- Cyclic width shift of 0.25;

- Height shift of 0.05;

- Vertical and horizontal flips of the data.
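The three augmentations listed above can be sketched with NumPy. This assumes a 2-D spectrogram with Doppler along the rows (height) and time along the columns (width), and interprets 0.25 and 0.05 as maximum shift fractions of the width and height. The axis layout, the zero fill used for the height shift, the 50% flip probability, and the function name `augment` are all assumptions for illustration.

```python
import numpy as np


def augment(spec: np.ndarray, rng: np.random.Generator,
            width_frac: float = 0.25, height_frac: float = 0.05) -> np.ndarray:
    """Randomly shift and flip a (Doppler x time) spectrogram; the output keeps the input shape."""
    h, w = spec.shape
    # Cyclic width shift: roll along the time axis so samples leaving one edge re-enter at the other.
    max_dx = int(width_frac * w)
    dx = int(rng.integers(-max_dx, max_dx + 1))
    out = np.roll(spec, dx, axis=1)
    # Height shift: move along the Doppler axis without wrapping; vacated rows are zero-filled (assumption).
    max_dy = int(height_frac * h)
    dy = int(rng.integers(-max_dy, max_dy + 1))
    if dy != 0:
        shifted = np.zeros_like(out)
        if dy > 0:
            shifted[dy:] = out[:-dy]
        else:
            shifted[:dy] = out[-dy:]
        out = shifted
    # Vertical flip (Doppler axis) and horizontal flip (time axis), each with probability 0.5.
    if rng.random() < 0.5:
        out = out[::-1, :]
    if rng.random() < 0.5:
        out = out[:, ::-1]
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = np.arange(40 * 16, dtype=float).reshape(40, 16)
    print(augment(x, rng).shape)
```

Rolling along time keeps all of the signal inside the frame, while the non-cyclic height shift presumably avoids wrapping strong positive Doppler into the negative-Doppler rows.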

4.4. Model Hyperparameters

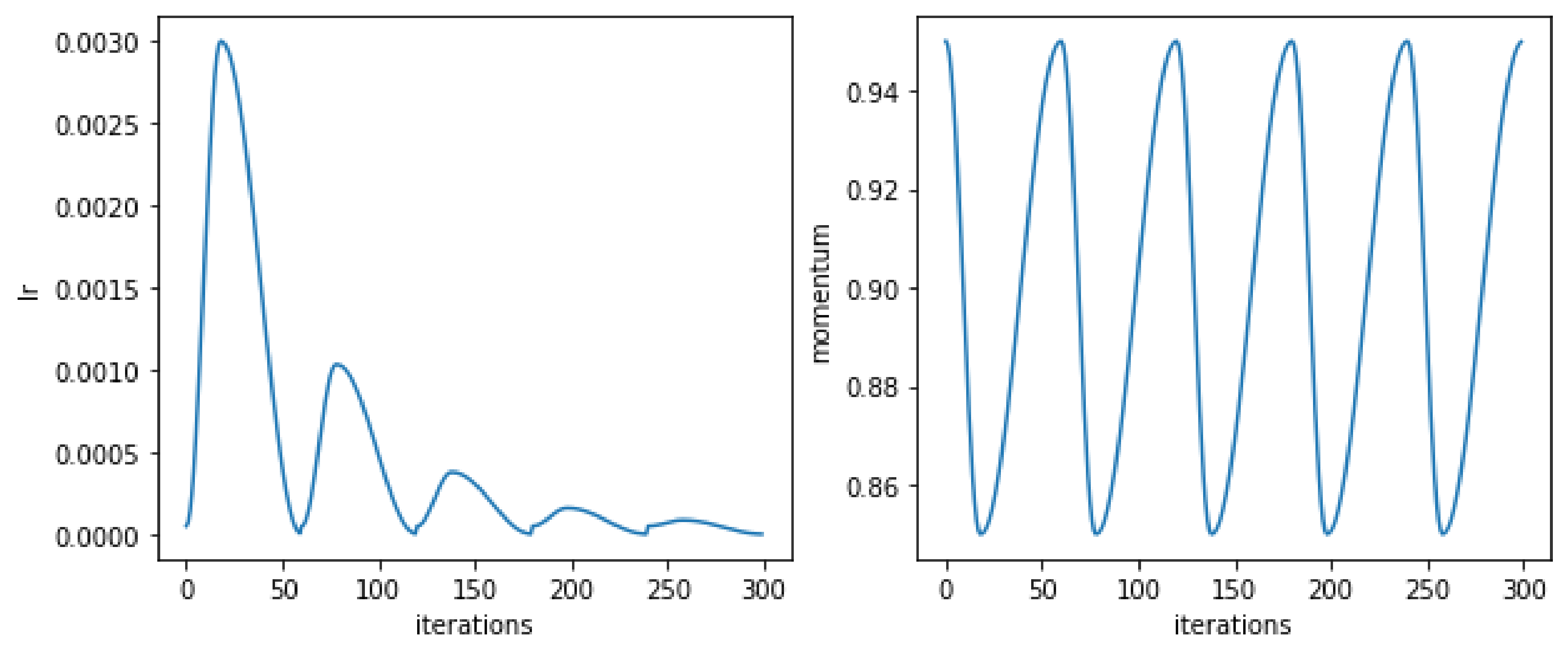

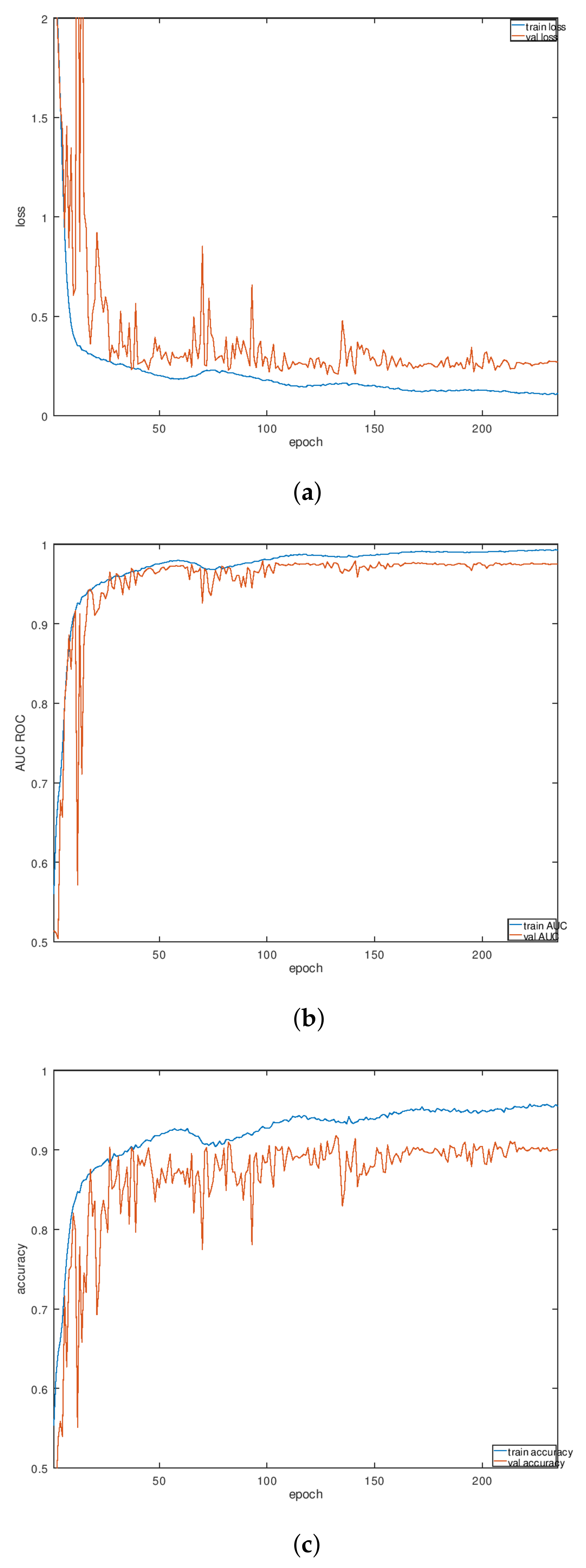

4.5. Neural Network Training

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


K-Fold experiment results

| Number of folds (K) | 7 | 5 | 10 |
|---|---|---|---|
| Accuracy (%) | 94.035 (±0.5777) | 89.708 (±0.5129) | 91.855 (±3.0449) |
| ROC AUC (%) | 98.347 (±0.2502) | 97.181 (±0.1869) | 98.510 (±0.6938) |
| Loss | 0.1776 | 0.3074 | 0.2828 |
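Per-fold metrics of the kind reported in the K-fold table can be computed and summarized along the following lines. This sketch assumes the ± values are sample standard deviations across folds and that each prediction is a score for the positive class (labelled 1); `roc_auc` uses the rank interpretation of the area under the ROC curve (the probability that a random positive outscores a random negative), and both function names are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via pairwise ranking; ties count as half a win."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    # O(P * N) pairwise comparison: fine for a sketch, slow for very large folds.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def summarize(fold_values: Sequence[float]) -> str:
    """Format per-fold values as 'mean (±sample std)'; needs at least two folds."""
    return f"{mean(fold_values):.3f} (±{stdev(fold_values):.4f})"


if __name__ == "__main__":
    print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
    print(summarize([1.0, 2.0, 3.0]))
```

Multiplying each fold's AUC or accuracy by 100 before calling `summarize` gives numbers on the same percentage scale as the table.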

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Buchman, D.; Drozdov, M.; Krilavičius, T.; Maskeliūnas, R.; Damaševičius, R. Pedestrian and Animal Recognition Using Doppler Radar Signature and Deep Learning. Sensors 2022, 22, 3456. https://doi.org/10.3390/s22093456