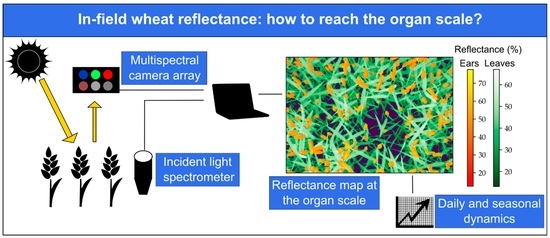

In-Field Wheat Reflectance: How to Reach the Organ Scale?

Abstract

1. Introduction

2. Materials and Methods

2.1. Material

2.2. Data Acquisition

2.2.1. Multi-Sensor System Set-Up

2.2.2. All-Day-Long Acquisitions

2.2.3. Acquisitions in Fertilization Trials

2.3. Reflectance Computation

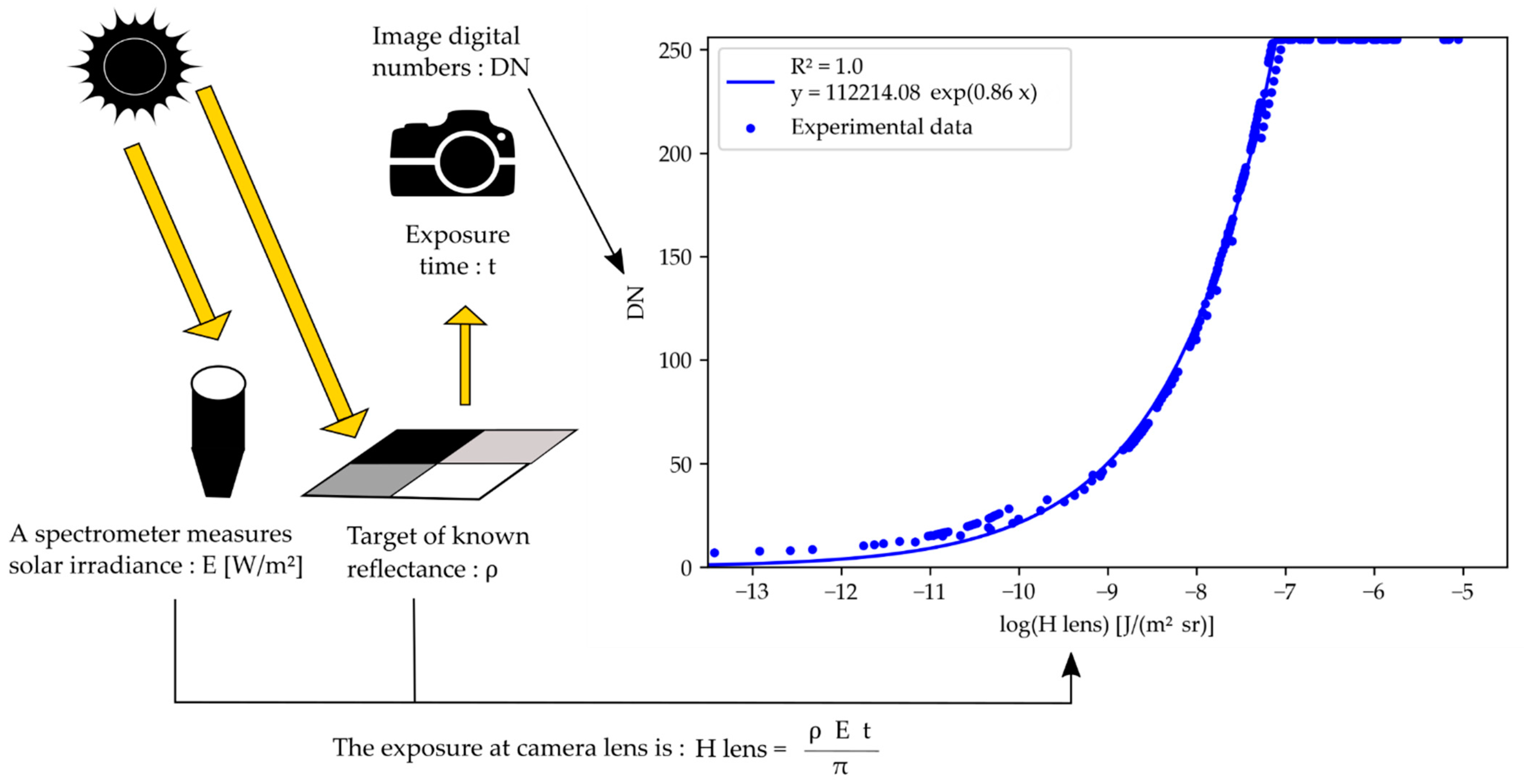

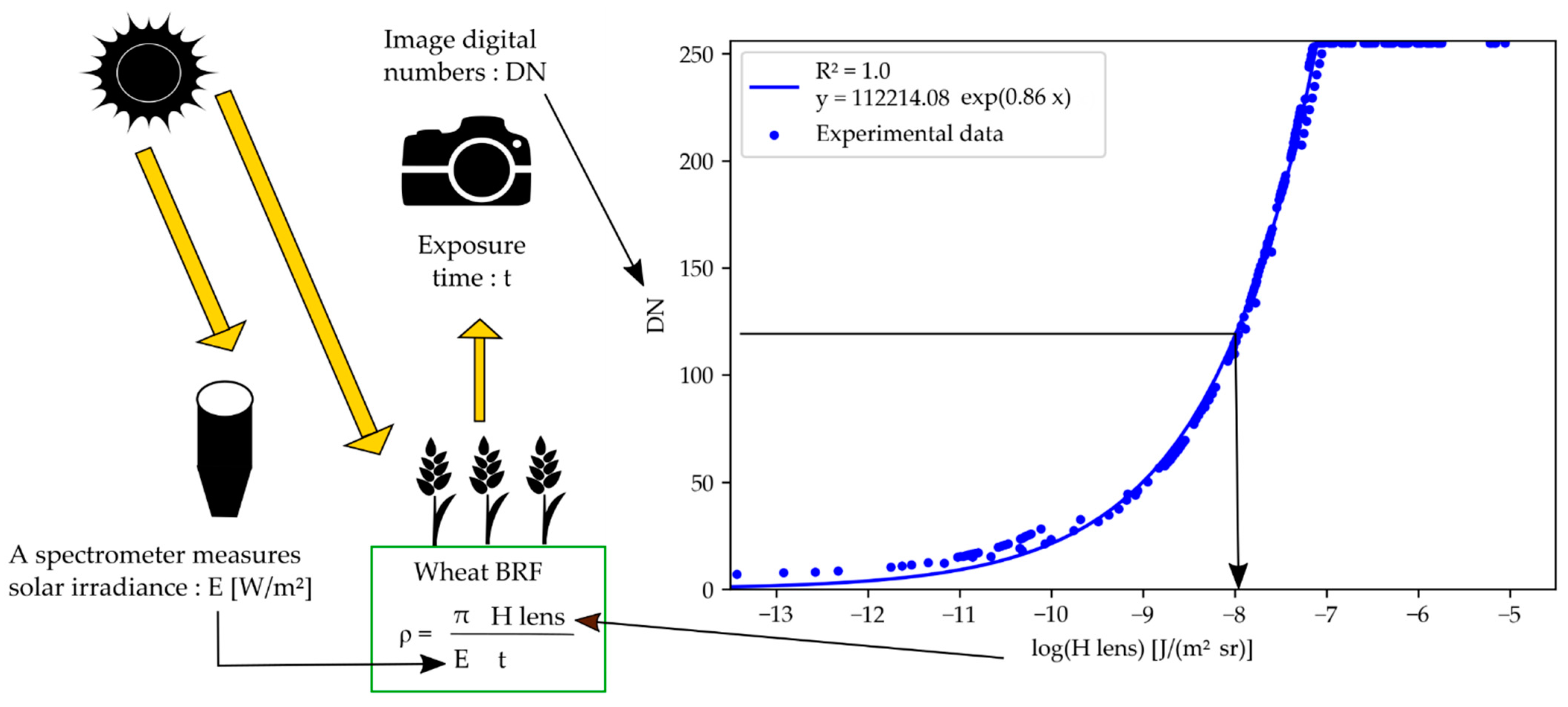

2.3.1. Theoretical Basis

2.3.2. Camera Response Curves

2.3.3. Wheat Organ Bi-Directional Reflectance Factor (BRF)

2.4. Images Registration

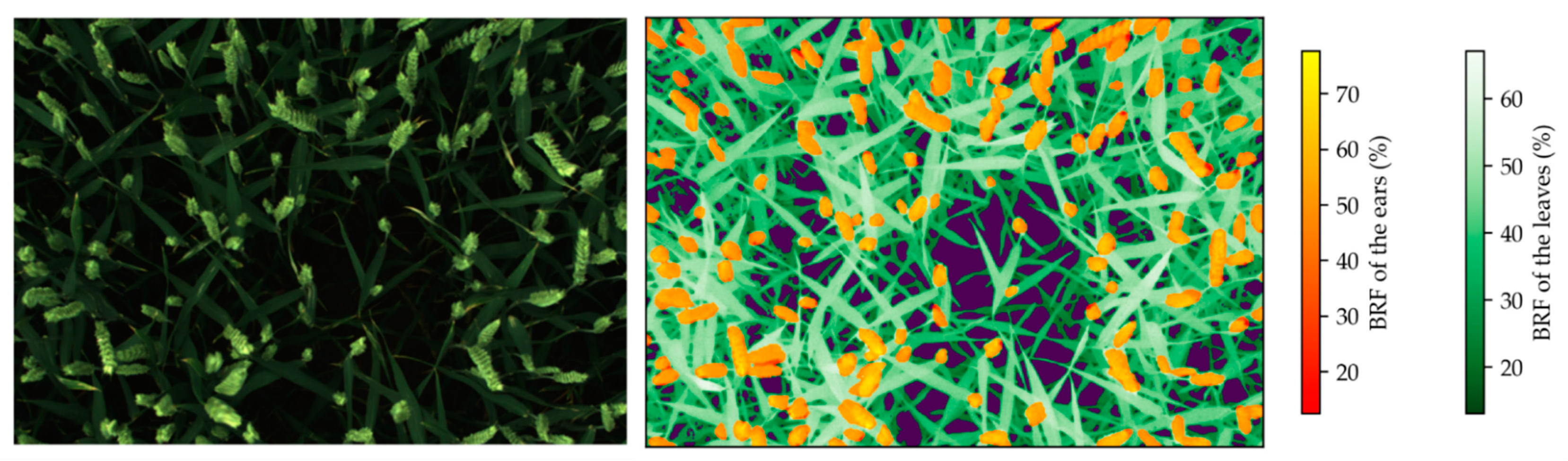

2.5. Image Segmentation at the Organ Scale

3. Results and Discussion

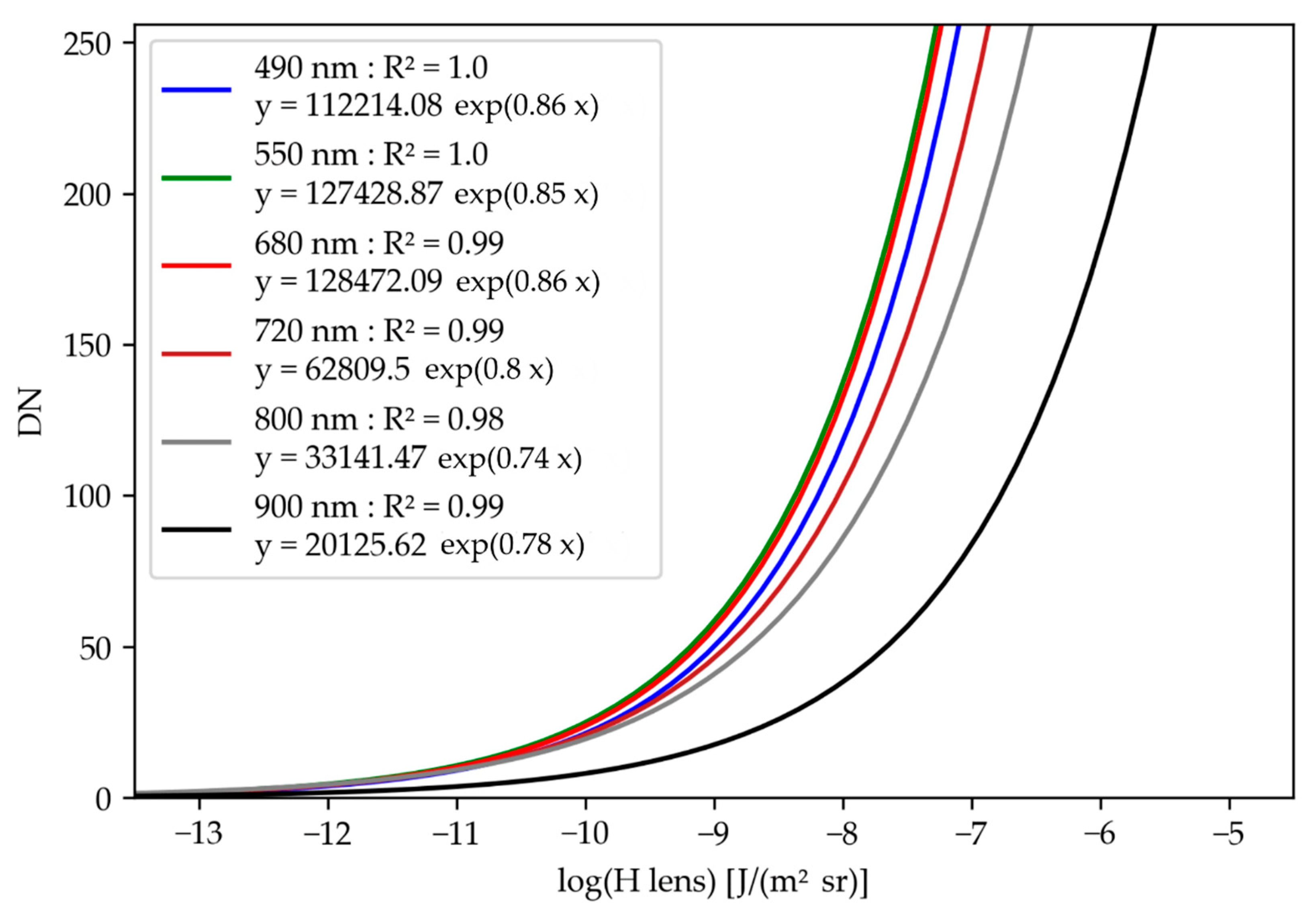

3.1. Camera Response Curves

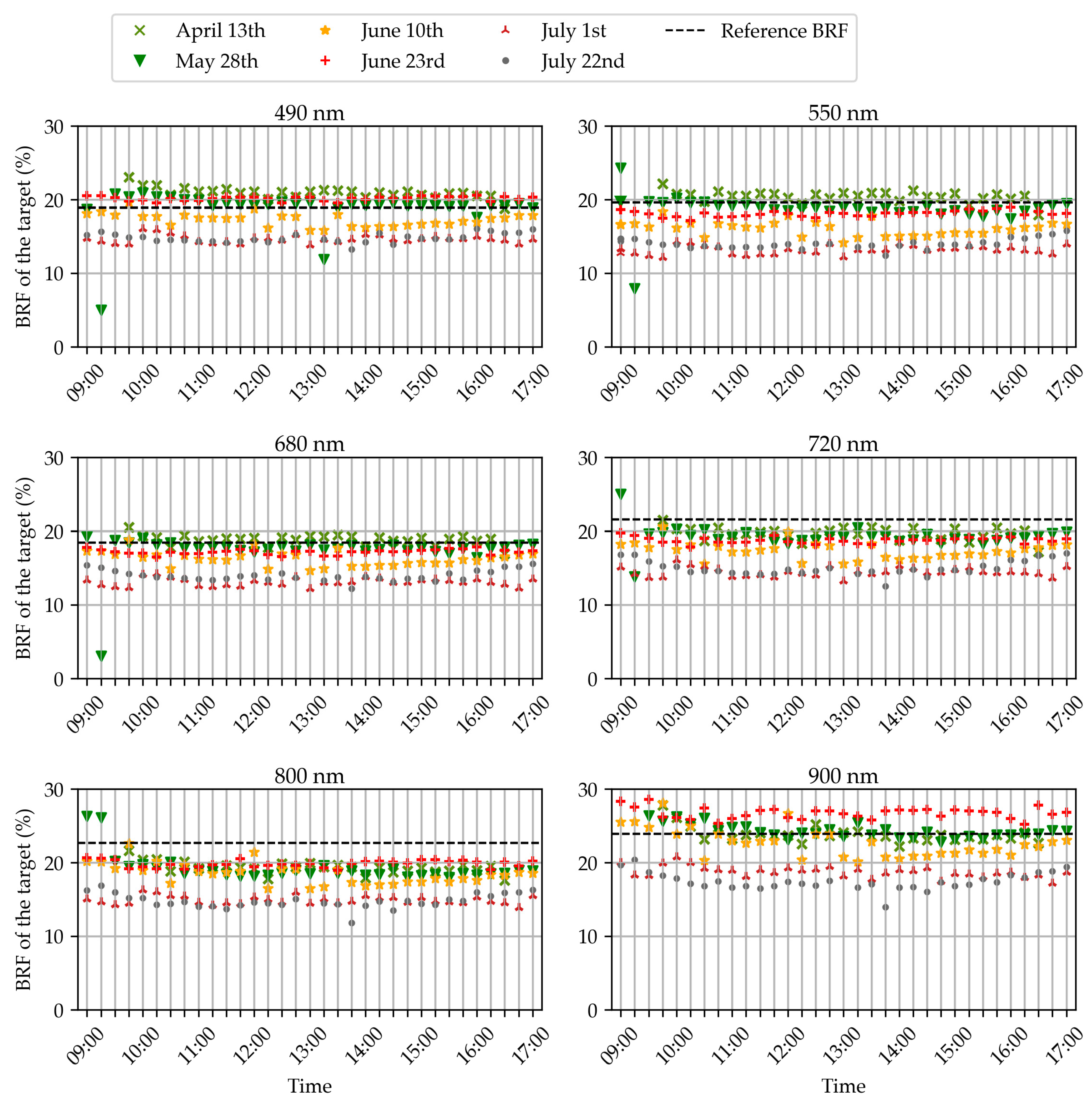

3.2. Bi-Directional Reflectance Factor of the Reference Panel

3.3. Wheat Organ Bi-Directional Reflectance Factor (BRF) throughout the Day

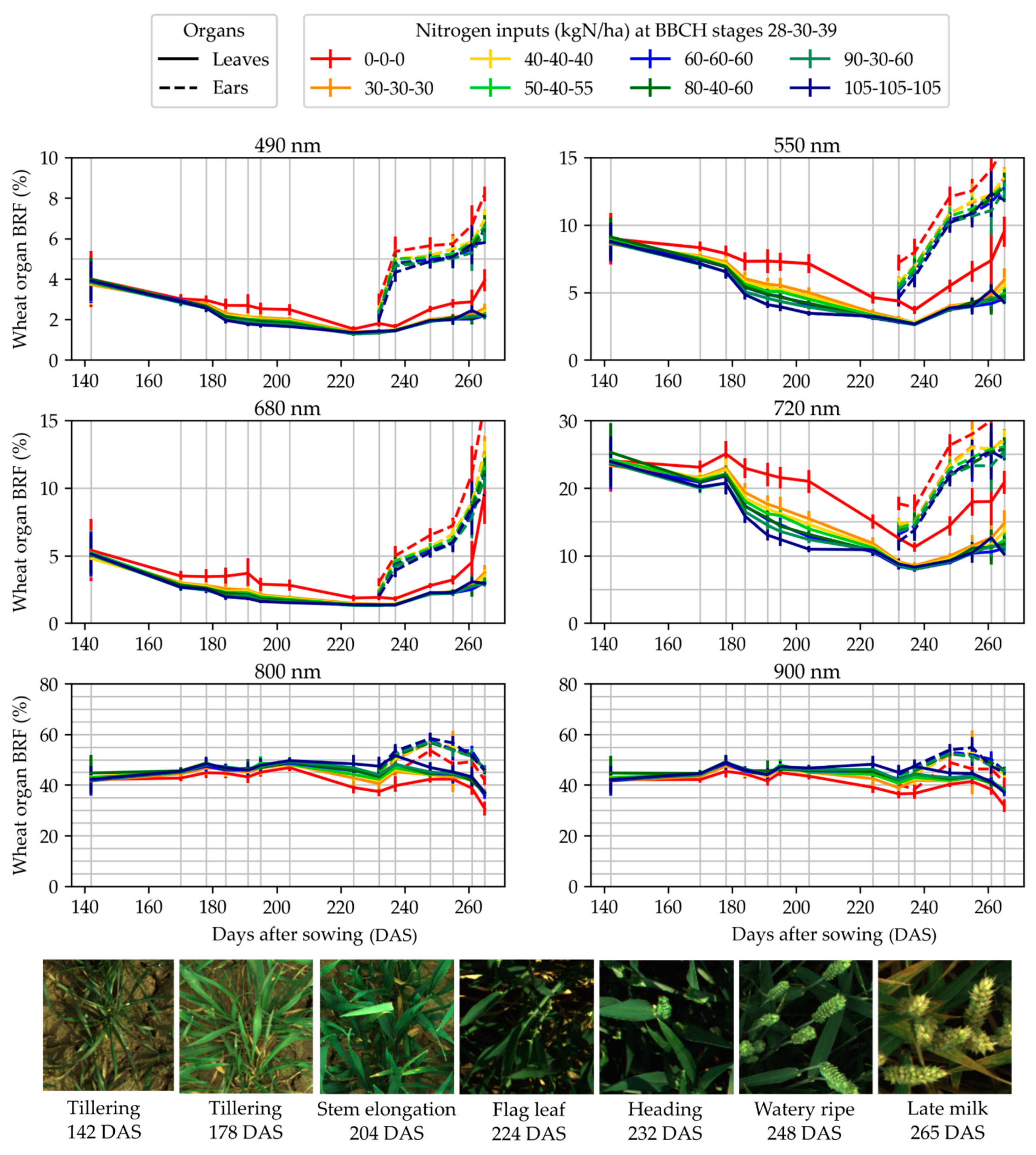

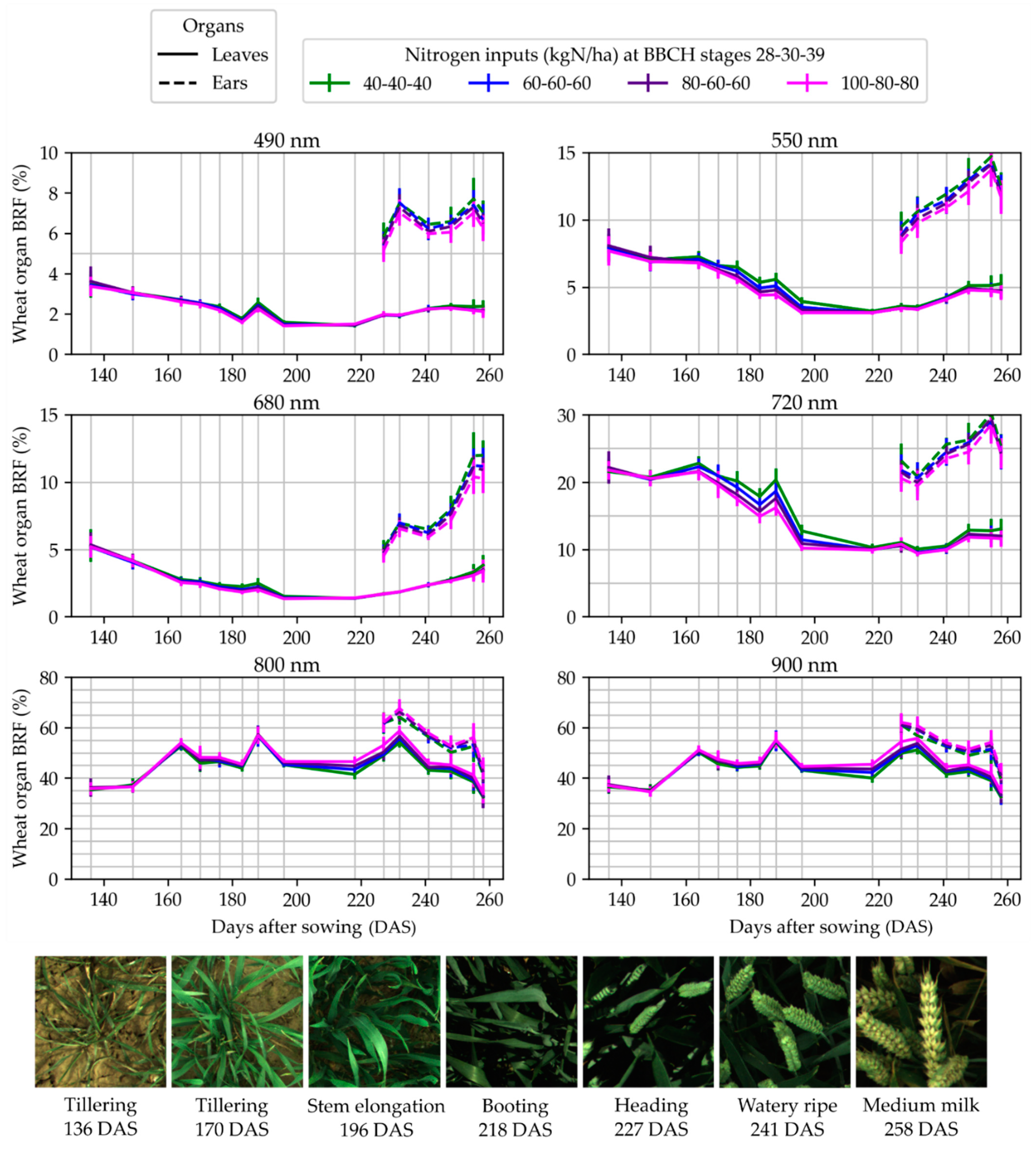

3.4. Wheat Organ Bi-Directional Reflectance Factor (BRF) in Fertilization Trials

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Relative exposures in the six wavebands of the multispectral array, by BBCH growth stage.

| BBCH | 490 nm | 550 nm | 680 nm | 720 nm | 800 nm | 900 nm |
|---|---|---|---|---|---|---|
| 32 to 75 | 400 | 250 | 400 | 200 | 80 | 220 |
| 77 | 250 | 100 | 250 | 150 | 80 | 200 |
| 83 to 87 | 160 | 100 | 100 | 100 | 80 | 140 |
| 89 | 110 | 70 | 60 | 100 | 80 | 140 |

| Date | Days after Sowing | BBCH Growth Stage | Sky Conditions (Morning) | Sky Conditions (Afternoon) |
|---|---|---|---|---|
| 13 April | 157 | 29: Tillering | Sunny | Cloudy |
| 28 May | 202 | 39: Flag leaf | Sunny | Sunny |
| 10 June | 215 | 65: Flowering | Sunny | Sunny |
| 23 June | 228 | 69: End of flowering | Heavy clouds | Heavy clouds |
| 1 July | 236 | 77: Late milk | Heavy clouds | Sunny |
| 22 July | 257 | 87: Hard dough | Sunny | Sunny |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dandrifosse, S.; Carlier, A.; Dumont, B.; Mercatoris, B. In-Field Wheat Reflectance: How to Reach the Organ Scale? Sensors 2022, 22, 3342. https://doi.org/10.3390/s22093342
