Abstract

Impulse response functions (IRFs) are useful for characterizing systems’ dynamic behavior and gaining insight into their underlying processes, based on sensor data streams of their inputs and outputs. However, current IRF estimation methods typically require restrictive assumptions that are rarely met in practice, including that the underlying system is homogeneous, linear, and stationary, and that any noise is well behaved. Here, I present data-driven, model-independent, nonparametric IRF estimation methods that relax these assumptions, and thus expand the applicability of IRFs in real-world systems. These methods can accurately and efficiently deconvolve IRFs from signals that are substantially contaminated by autoregressive moving average (ARMA) noise or nonstationary ARIMA noise. They can also simultaneously deconvolve and demix the impulse responses of individual components of heterogeneous systems, based on their combined output (without needing to know the outputs of the individual components). This deconvolution–demixing approach can be extended to characterize nonstationary coupling between inputs and outputs, even if the system’s impulse response changes so rapidly that different impulse responses overlap one another. These techniques can also be extended to estimate IRFs for nonlinear systems in which different input intensities yield impulse responses with different shapes and amplitudes, which are then overprinted on one another in the output. I further show how one can efficiently quantify multiscale impulse responses using piecewise linear IRFs defined at unevenly spaced lags. All of these methods are implemented in an R script that can efficiently estimate IRFs over hundreds of lags, from noisy time series of thousands or even millions of time steps.

1. Introduction

Many scientific challenges require understanding how systems respond to fluctuations in their inputs, as documented by sensor data streams or other time series data. Three examples from environmental science are (a) understanding how streamflow time series reflect landscape-scale responses to precipitation inputs, (b) quantifying source–receiver relationships for airborne or waterborne contaminants and pathogens, and (c) estimating how ecosystems’ rates of photosynthesis, respiration, and transpiration fluctuate in response to external forcings, such as solar radiation, precipitation, and nutrient concentrations. Many other examples can be found in fields ranging from chemistry [1], engineering [2], and economics [3,4] to systems biology [5], neuroscience [6,7], physiology [8], and epidemiology [9,10,11].

Questions such as these are both scientifically challenging and practically important. They can sometimes be investigated using controlled experiments, but it is often infeasible to conduct such experiments at realistic scale on systems of realistic complexity. Instead, we must often try to understand these processes by observing how systems respond, over time, to natural fluctuations in their external forcing and natural variations in their internal conditions. These efforts have been aided in recent decades by an ever-expanding range of observational time series from an increasingly diverse array of sensors and remote sensing platforms.

The question remains how to most usefully extract information from these signals. One widely employed approach is to postulate a model based on a set of assumed mechanisms, calibrate this model to the observational data, and then perform numerical experiments to explore the behavior of the model, under the assumption that the model is a realistic analogue to the real-world system. The strength of this approach is that results from these numerical experiments can be directly interpreted in terms of the postulated model processes. The corresponding weakness is that everything depends on the assumption that the postulated processes are the correct ones. Many models are sufficiently complex that even if they give the right answers (in the sense of matching their calibration data, for example), it can be difficult to tell whether they are doing so for the right reasons, which is essential to drawing valid inferences from their results.

An alternative approach is to make minimal assumptions about the processes of interest and instead to empirically model the input–output behavior of the system directly from observational data. The obvious drawback of such “black box” approaches is that any mechanistic inferences derived from them will necessarily be tentative and indirect (although in ways that will often be obvious to users). The corresponding advantage is that it is not necessary to assume a mechanistic model whose realism may be difficult to verify.

This model-independent, data-driven approach, commonly termed system identification, has evolved primarily within the field of industrial process control (e.g., [12,13,14]). Industrial process control problems typically enjoy several advantages over analyses of other types of systems. In such problems, one often has strong a priori information about the structure of the systems under study (since they are typically engineered), and one can frequently measure their response to controlled inputs (steps, pulses, sine waves, etc.). In analyses of many other real-world systems, by contrast, the system’s structure is often unknown (which is often why it is being studied in the first place), and we must work with whatever patterns of inputs and outputs nature gives us. Furthermore, the input and output time series are frequently much noisier than in typical industrial systems.

Here I present new methods for system identification, with a specific focus on impulse response functions and how they can be adapted to handle several challenges that often arise in real-world systems. Section 2.1 briefly introduces impulse response functions and their estimation from time series via least squares methods. Section 2.2 through Section 2.6 show how this approach can be adapted to account for the autoregressive moving average (ARMA) noise that often arises in real-world data. Section 2.5 and Section 2.7 present benchmark tests demonstrating that this approach accurately estimates impulse response functions and their uncertainties, even when confronted with time series that are badly contaminated by nonstationary ARIMA (AutoRegressive Integrated Moving Average) noise.

Many real-world systems exhibit substantial heterogeneity, such that inputs to different system compartments are processed differently, with the resulting signals being mixed together in the system output. Section 3 presents a demixing approach to estimating the impulse response functions of these multiple overlapping (and correlated) inputs. Section 3 also presents benchmark tests that demonstrate the potential of this demixing approach.

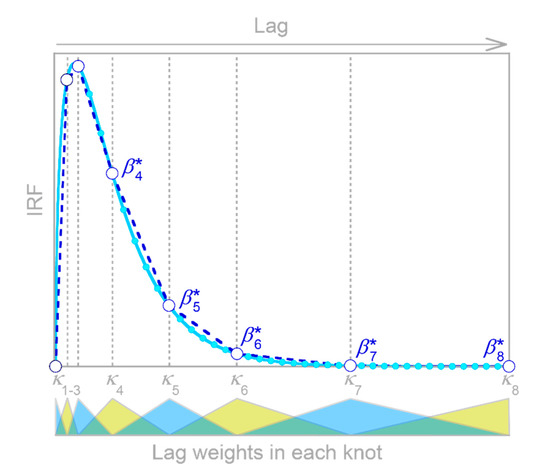

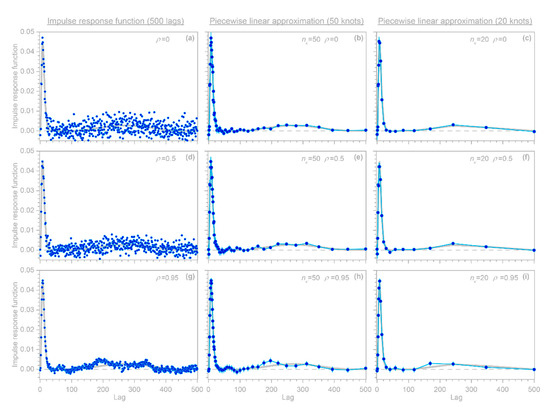

Conventional impulse response functions assume that the system’s response is both linear (that is, proportional to the input) and stationary (that is, independent of time). Real-world systems, by contrast, are often nonlinear and nonstationary. Therefore, Section 4 shows how the demixing approach of Section 3 can be adapted to characterize systems’ nonstationary behavior, and Section 5 shows how this approach can be further extended to create piecewise linear maps of systems’ nonlinear dependence on their inputs. Section 6 further shows how IRFs can be approximated by piecewise linear functions that are evaluated at a few unevenly spaced knots, rather than many evenly spaced lags. This permits the accurate estimation of multiscale IRFs that combine brief, sharp impulse responses and more persistent, delayed impulse responses.

The techniques presented here are implemented in an R script, IRFnnhs.R (for “Impulse Response Functions for nonlinear nonstationary and heterogeneous systems in R”), which is available along with scripts for each of the benchmark tests presented in the following sections (see data availability statement). The benchmark tests presented here are intentionally generic, without applications to specific fields. A subsequent paper will present a proof-of-concept application within my own field of hydrology, but the presentation here is generic to avoid the misconception that these techniques are restricted to hydrological applications.

2. Estimating Impulse Response Functions from Time Series Contaminated by Autoregressive and Nonstationary Noise

2.1. Impulse Response Functions

Many systems can be represented (at least approximately) as convolutions, in which the output $y(t)$ depends on an input $x(t)$ over a (potentially infinite) range of past lag times $\tau$, weighted by a lag function $h(\tau)$ that expresses the relative influence of the input at each lag:

$$y(t) = \int_{0}^{\infty} h(\tau)\,x(t-\tau)\,d\tau \qquad (1)$$

The lag function $h(\tau)$ is sometimes called a convolution kernel, transfer function, or Green’s function; it is also called an impulse response function (IRF) because it shows how the system output would respond to an input consisting of a single Dirac delta function pulse (i.e., an infinitely high, infinitely narrow pulse that integrates to 1). A system’s impulse response can be defined more generally as the change in the time evolution of its output when a single Dirac pulse is added to its input at any given time $t$, compared to the system’s behavior without the Dirac pulse:

$$h(\tau;\,t) = \big[\,y(t+\tau) \mid x(t') + \delta(t'-t)\,\big] - \big[\,y(t+\tau) \mid x(t')\,\big] \qquad (2)$$

The input $x(t)$ can itself be considered as a continuous series of appropriately scaled Dirac pulses, so if the impulse response is independent of the impulse time $t$ (that is, if the impulse response is stationary), integrating Equation (2) over all $t$ will lead directly to the convolution shown in Equation (1).

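In most practical cases, continuous functions such as those in Equation (1) are not directly observable, and instead are approximated by discrete time series of measurements. In such cases, Equation (1) is typically approximated by its discrete counterpart,

$$y_j = \sum_{k=0}^{m} \beta_k\,x_{j-k} \qquad (3)$$

where $\beta_k$ is the discrete impulse response at lag $k$ and $m$ is the longest lag considered.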

The discrete impulse response function in Equation (3) is sometimes termed a finite impulse response (FIR) model, because it quantifies the finite-duration system response to a finite-duration input pulse, in contrast to Equation (1), which quantifies the potentially infinite-duration system response to an infinitesimally short input pulse.

The impulse response function $h(\tau)$ or $\beta_k$ is useful in characterizing the system; indeed, it is a complete description of linear time-invariant systems such as Equations (1) and (3). A linear system responds proportionally to the input $x$, such that its response to the sum of two inputs $x_1$ and $x_2$ equals the sum of its responses to the two inputs individually; this is known as the principle of linear superposition. A time-invariant (or stationary) system responds identically to the same inputs occurring at different times (except, of course, that its response is time-shifted by the same amount as the time difference between the inputs).
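The two defining properties of a linear time-invariant system can be checked numerically on the discrete convolution of Equation (3). The following minimal Python sketch is illustrative only: the function name `convolve` and the example kernel and inputs are hypothetical, and inputs before the start of the record are assumed to be zero.

```python
def convolve(beta, x):
    """Discrete convolution of Equation (3): y_j = sum_k beta_k * x_{j-k}.

    Hypothetical helper; inputs before the start of the record are treated as zero."""
    return [sum(beta[k] * x[j - k] for k in range(len(beta)) if j - k >= 0)
            for j in range(len(x))]


beta = [0.5, 0.3, 0.2]  # a short, made-up impulse response
x1 = [1.0, 0.0, 2.0, 0.0, 0.0, 1.0]
x2 = [0.0, 3.0, 0.0, 1.0, 0.0, 0.0]

# Superposition: the response to x1 + x2 equals the sum of the separate responses.
both = convolve(beta, [a + b for a, b in zip(x1, x2)])
separate = [a + b for a, b in zip(convolve(beta, x1), convolve(beta, x2))]

# A unit pulse returns the impulse response itself; delaying the pulse delays the output.
pulse = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
delayed = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
print(convolve(beta, pulse))    # -> [0.5, 0.3, 0.2, 0.0, 0.0, 0.0]
print(convolve(beta, delayed))  # -> [0.0, 0.0, 0.5, 0.3, 0.2, 0.0]
```

A nonlinear or nonstationary system would fail one or both of these checks, which is why a single $\beta_k$ series is then only an average description of its behavior.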

In contrast to such an idealized linear time-invariant system, many real-world systems are nonlinear, nonstationary, or both. In such cases, an impulse response function will not be a complete description of the system’s response but may still be a useful indicator of its average behavior. Precisely how the IRF averages such a system’s behavior will depend on the system characteristics and on how the IRF is estimated; this topic is explored further in Section 3.3, Section 3.4, Section 3.5 and Section 4.3 below. Furthermore, as described in Section 4 and Section 5 below, the simple linear time-invariant model in Equation (3) can be generalized to estimate how the IRF varies for different input intensities (thus quantitatively characterizing the nonlinearity of the system) and to estimate how the IRF varies for inputs occurring at different times (thus quantifying the system’s nonstationarity).

Convolutions such as Equations (1) and (3) scramble the input time series $x$ and the impulse response function $h$ together to generate the output time series $y$. Deconvolution methods seek to invert this process, un-scrambling $y$ to yield estimates of $x$ (given $h$) or estimates of $h$ (given $x$). The term deconvolution is often applied specifically to the inversion of Equation (1) or (3) to solve for the input time series $x$ given the output time series $y$ and the impulse response function $h$ (a deconvolution of $y$ by $h$). Solving instead for the impulse response function $h$ given the input and output time series $x$ and $y$ is also, mathematically speaking, a deconvolution (in this case, a deconvolution of $y$ by $x$), but is also often termed system identification [13], since $h$ characterizes the behavior of the system linking the inputs and outputs. The classical approach to either type of deconvolution relied on Fourier transform methods, because convolution and deconvolution become simply multiplication and division in Fourier space. However, Fourier methods often yield unreliable results unless the underlying system is linear and time-invariant, and thus conforms closely to Equation (1) or (3), and unless the two “knowns” ($x$ and $y$ for system identification, or $y$ and $h$ for deconvolution of the input time series $x$) are virtually noise-free. These requirements are often violated by real-world systems. Instead, the system identification problem is frequently approached by considering Equation (3) as a multiple linear regression equation,

$$y_j = \sum_{k=0}^{m} \beta_k\,x_{j-k} + \varepsilon_j \quad\Longleftrightarrow\quad \mathbf{y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\varepsilon} \qquad (4)$$

where the $k$th column of the matrix $\mathbf{X}$ contains the input $x$ lagged by $k$ time steps. Equation (4) can be straightforwardly solved for the IRF coefficients $\beta_k$ using conventional least squares methods if the residual errors $\varepsilon_j$ are uncorrelated white noise.
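For the white-noise case just described, the regression in Equation (4) amounts to building the lagged matrix $\mathbf{X}$ and solving the normal equations. The minimal Python sketch below uses only the standard library, so it carries its own small Gaussian-elimination solver; the function names (`lagged_design`, `solve`, `fit_irf`) and the synthetic kernel are hypothetical and are not taken from IRFnnhs.R.

```python
import random


def lagged_design(x, m):
    """Rows j = m..n-1 of X in Equation (4); column k holds x[j - k]."""
    return [[x[j - k] for k in range(m + 1)] for j in range(m, len(x))]


def solve(A, rhs):
    """Solve A z = rhs by Gaussian elimination with partial pivoting."""
    p = len(A)
    M = [list(row) + [rhs[i]] for i, row in enumerate(A)]
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, p):
            f = M[r][c] / M[c][c]
            for k in range(c, p + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * p
    for r in range(p - 1, -1, -1):
        z[r] = (M[r][p] - sum(M[r][k] * z[k] for k in range(r + 1, p))) / M[r][r]
    return z


def fit_irf(x, y, m):
    """Ordinary least-squares estimates of beta_0..beta_m via (X^T X) beta = X^T y."""
    X, yy = lagged_design(x, m), y[m:]
    p = m + 1
    xtx = [[sum(row[a] * row[c] for row in X) for c in range(p)] for a in range(p)]
    xty = [sum(row[a] * yj for row, yj in zip(X, yy)) for a in range(p)]
    return solve(xtx, xty)


# Usage on noise-free synthetic data (made-up kernel), so the kernel should be recovered
# to within rounding error.
rng = random.Random(0)
beta_true = [0.1, 0.4, 0.3, 0.15, 0.05]
x = [rng.gauss(0.0, 1.0) for _ in range(500)]
y = [sum(beta_true[k] * x[j - k] for k in range(len(beta_true)) if j >= k)
     for j in range(len(x))]
beta_hat = fit_irf(x, y, m=4)
```

With serially correlated residuals the same estimator still runs, but its coefficient and uncertainty estimates inherit the problems discussed in Section 2.2.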

2.2. Estimating Impulse Response Functions in the Presence of ARMA Noise

Direct application of simple approaches such as Equation (4) to many real-world systems will be complicated by the fact that the residuals $\varepsilon_j$ often violate the white noise assumptions underlying conventional linear regression. Instead, the residuals are often serially correlated, sometimes quite strongly, over a range of time scales, leading to biased estimates of the coefficients $\beta_k$ and their uncertainties. The serial correlation in $\varepsilon$ can arise from many sources. Measurements of the output variable $y$ may be subject to serially correlated, or even nonstationary, errors. The input variable $x$ may also be subject to error (the so-called “error in variables” problem). Even if those input errors are not themselves serially correlated, they will nonetheless be reflected in serially correlated residuals, because any excess or missing $x$ will appear to be smoothed and lagged by the same convolution process that smooths and lags the (unknown) true inputs. Equation (4) may also be a stationary approximation to a nonstationary real-world system, or may have other structural problems, such as missing variables or incorrect functional relationships, that would be reflected in serially correlated variations in the residuals $\varepsilon$. Efficiently handling these serially correlated errors requires novel statistical methods. Although several approaches have been widely used to perform regression in the presence of serially correlated errors (e.g., [15,16,17]; see also Section 9.5 of [12]), deconvolution in the presence of such errors is potentially a more complex problem, because the output $y$ will contain serially correlated signals from both the errors and the real-world convolution process, which must somehow be distinguished from one another.

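Whatever the origin of the serial correlation in the residuals, it can be simply and flexibly represented as an Autoregressive Moving Average (ARMA) process,

$$\varepsilon_j = \sum_{i=1}^{p} \phi_i\,\varepsilon_{j-i} + \nu_j + \sum_{i=1}^{q} \theta_i\,\nu_{j-i} \qquad (5)$$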

where the autoregressive coefficients $\phi_i$ express how the residual $\varepsilon_j$ depends on its own previous values, and the moving average coefficients $\theta_i$ express how the residual depends on the previous values of a white noise process $\nu$. In many real-world cases, serially correlated errors can be summarized using just a few autoregressive coefficients $\phi_i$ and moving average coefficients $\theta_i$. Moving-average processes that are invertible (as all real-world moving average processes should be) can be equivalently expressed as autoregressive processes (the duality principle: see Section 3.3.5 of [12]), meaning that any real-world ARMA process can be re-expressed as a purely autoregressive (AR) process of higher order,

$$\varepsilon_j = \sum_{i=1}^{h} \phi_i\,\varepsilon_{j-i} + \nu_j \qquad (6)$$

In theory, the autoregressive order $h$ corresponding to a moving average process can be infinite, but in practice Equation (6) will often entail only a few more AR coefficients than the corresponding ARMA process in Equation (5), with the higher-order terms dying away to practically zero.
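The claim that higher-order terms die away can be made concrete by expanding an ARMA model from Equation (5) into the pure AR form of Equation (6). The Python sketch below does this with the standard power-series inversion of the MA polynomial; the function name `arma_to_ar` is hypothetical and the example coefficients are made up.

```python
def arma_to_ar(phi, theta, order):
    """Return AR coefficients a_1..a_order with eps_j ~= sum_k a_k eps_{j-k} + nu_j (Equation (6)).

    Hypothetical helper: expands (1 - sum phi_i B^i) / (1 + sum theta_i B^i) as a power
    series in the backshift operator B. The series converges only when the MA part is
    invertible (all roots of 1 + sum theta_i z^i lie outside the unit circle)."""
    c = [1.0]  # coefficients of the expanded series, starting with c_0 = 1
    for k in range(1, order + 1):
        ck = -phi[k - 1] if k <= len(phi) else 0.0
        ck -= sum(theta[i - 1] * c[k - i] for i in range(1, min(k, len(theta)) + 1))
        c.append(ck)
    # c(B) eps_j = nu_j  implies  eps_j = -c_1 eps_{j-1} - c_2 eps_{j-2} - ... + nu_j
    return [-ck for ck in c[1:]]


# MA(1) noise with theta_1 = 0.5: the equivalent AR weights alternate in sign and halve each lag.
print(arma_to_ar([], [0.5], 4))  # -> [0.5, -0.25, 0.125, -0.0625]
```

For this example the fourth AR coefficient is already about 6% of the first, which illustrates why a modest AR order $h$ is often enough in practice.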

A conventional approach to solving regression problems such as Equation (4) with autoregressive errors such as those in Equation (6) proceeds as follows. Equation (6) is first rearranged to express the uncorrelated white noise error $\nu_j$ in terms of the AR coefficients $\phi_i$ and the lagged values of the correlated errors $\varepsilon$:

$$\nu_j = \phi_0\,\varepsilon_j - \sum_{i=1}^{h} \phi_i\,\varepsilon_{j-i} \qquad (7)$$

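where $\phi_0$, which has a value of 1, has been included in the first term on the right-hand side to make the following equations more systematic. Writing lagged copies of the original regression equation (Equation (4)) for lags 0 through $h$ and multiplying by the corresponding AR coefficients yields the following stack of equations:

$$\begin{aligned}
\phi_0\,y_j &= \phi_0 \sum_{k=0}^{m} \beta_k\,x_{j-k} + \phi_0\,\varepsilon_j \\
-\phi_1\,y_{j-1} &= -\phi_1 \sum_{k=0}^{m} \beta_k\,x_{j-1-k} - \phi_1\,\varepsilon_{j-1} \\
&\;\;\vdots \\
-\phi_h\,y_{j-h} &= -\phi_h \sum_{k=0}^{m} \beta_k\,x_{j-h-k} - \phi_h\,\varepsilon_{j-h}
\end{aligned} \qquad (8)$$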

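Readers will note that the error terms in each line of Equation (8) sum up to the right-hand side of Equation (7), so if all of these lines are added together, the combined error terms will equal the uncorrelated error $\nu_j$:

$$\begin{aligned}
& y_j - \sum_{i=1}^{h} \phi_i\,y_{j-i} \\
&\quad = \sum_{k=0}^{m} \beta_k\,x_{j-k} - \sum_{i=1}^{h} \phi_i \sum_{k=0}^{m} \beta_k\,x_{j-i-k} \\
&\qquad + \varepsilon_j - \sum_{i=1}^{h} \phi_i\,\varepsilon_{j-i}
\end{aligned} \qquad (9)$$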

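where the last line equals the uncorrelated error $\nu_j$. This conventional approach then rewrites Equation (9) by transforming each of the variables to subtract the values that they inherit from previous time steps,

$$y^{*}_{j} = y_j - \sum_{i=1}^{h} \phi_i\,y_{j-i}, \qquad x^{*}_{j} = x_j - \sum_{i=1}^{h} \phi_i\,x_{j-i} \qquad (10)$$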

yielding

$$y^{*}_{j} = \sum_{k=0}^{m} \beta_k\,x^{*}_{j-k} + \nu_j \qquad (11)$$

Equation (11) is in the form of a linear regression equation like Equation (4), but in place of the autocorrelated error term $\varepsilon_j$ it instead has the white-noise error term $\nu_j$, and thus conforms to the assumptions underlying regression analysis. As written, however, Equation (11) is nonlinear in its parameters and thus cannot be solved by linear regression (because the AR coefficients $\phi_i$ hidden within the $x^{*}$ are multiplied by the regression coefficients $\beta_k$, as shown in the second line of Equation (9)). Historically, problems of this type were solved using the Cochrane–Orcutt procedure [15], which alternately estimates the AR coefficients $\phi_i$ and the regression coefficients $\beta_k$, iterating these two steps until convergence, or the Hildreth–Lu procedure [16], which solves jointly for the AR coefficients $\phi_i$ and the regression coefficients $\beta_k$ using nonlinear search techniques [17]. More recent approaches iteratively estimate the $\beta_k$’s using Generalized Least Squares and the $\phi_i$’s using maximum likelihood or Restricted Maximum Likelihood (REML) methods (see Section 9.5 of [12]). Pre-programmed routines are also available, such as the R language’s function, which estimates the $\beta_k$’s and $\phi_i$’s using nonlinear optimization methods. However, these approaches can become slow and memory-intensive for large problems, such as those that arise when long time series are used to estimate convolution kernels over many lags. The order of difficulty of the matrix operations required to solve Equation (4) or (11) scales as roughly $O(nm^2)$ or $O(m^3)$, depending on the relative sizes of $n$ and $m$; this is further magnified when these operations are repeatedly iterated to search for optimal values of the autoregressive coefficients $\phi_i$.
In addition to this computational issue, the differencing procedure in Equation (10) may amplify any errors in the input variables $x$ relative to the true input values (particularly if the true inputs are less time-varying than their errors, and thus are attenuated more by Equation (10) than their errors are), thereby magnifying the “errors in variables” problem [18,19].
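The quasi-differencing transformation of Equation (10), and the amplification effect just described, can be illustrated with a few lines of Python. The helper name `quasi_difference` is hypothetical and the example series are made up; this is a sketch of the transformation only, not of the full iterative Cochrane–Orcutt procedure.

```python
def quasi_difference(series, phi):
    """Equation (10): s*_j = s_j - sum_{i=1}^{h} phi_i * s_{j-i}, for j = h..n-1.

    Hypothetical helper; the first h values are dropped because their lags are missing."""
    h = len(phi)
    return [series[j] - sum(phi[i - 1] * series[j - i] for i in range(1, h + 1))
            for j in range(h, len(series))]


# With phi_1 = 0.9, a constant (slowly varying) signal shrinks to about 0.1 of its size,
# whereas a rapidly alternating error of the same size grows to about 1.9.
smooth = quasi_difference([1.0, 1.0, 1.0, 1.0], [0.9])
jagged = quasi_difference([1.0, -1.0, 1.0, -1.0], [0.9])
print(smooth, jagged)
```

If the true inputs resemble `smooth` and their measurement errors resemble `jagged`, the transformed inputs are dominated by error, which is the “errors in variables” amplification mentioned above.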

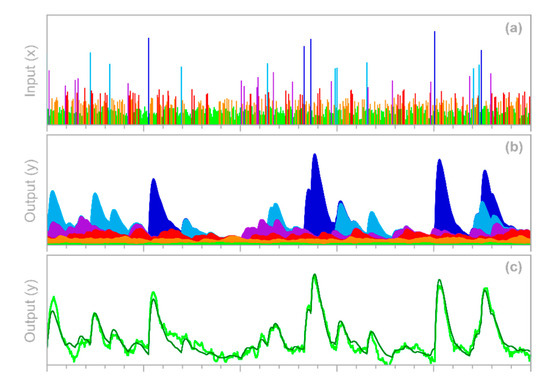

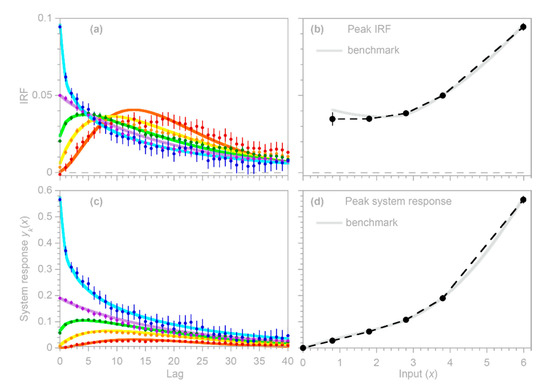

A further potentially serious concern is that the regression coefficients $\beta_k$ estimated from Equations (9)–(11) or from R’s function will artifactually converge toward 0 at lags approaching the largest modeled lag $m$, even if the real-world process linking $x$ and $y$ extends to lags well beyond $m$. Even worse, the uncertainty estimates for these $\beta_k$ will also be artifactually driven toward 0 at lags approaching $m$, potentially misleading users into placing exaggerated confidence in these spurious results. The benchmark tests in Figure 1 show that these artifacts can occur even when the correct AR coefficients are known exactly (which of course will not be true in real-world cases).

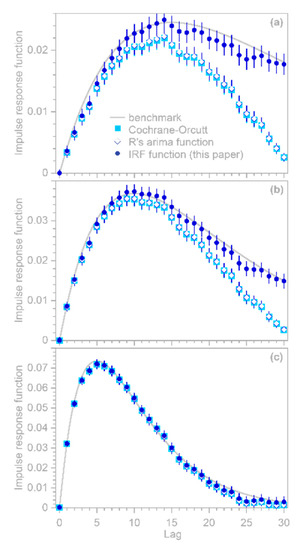

Figure 1.

Impulse response functions estimated by the Cochrane–Orcutt procedure (Equations (9)–(11), light blue squares), R’s function (open diamonds), and the function presented here (Equations (12)–(26), dark blue circles). Gray lines show benchmark convolution kernels (gamma distributions with a shape factor of 2 and means of 30, 20, and 10 lag units in panels (a), (b), and (c), respectively), which were convolved with a Gaussian white noise time series of length $n$ = 2000 to simulate the system output. First-order autoregressive noise with $\phi_1$ = 0.9 was added to the system output at a signal-to-noise ratio of 4. The autoregressive coefficient $\phi_1$ = 0.9 was supplied as a known parameter to the Cochrane–Orcutt procedure and R’s function. Impulse response functions estimated by the Cochrane–Orcutt procedure and R’s function (light blue squares and open diamonds, respectively) converge to nearly zero within the range of analyzed lags, even if the true convolution kernel does not (panels (a,b)). If the true convolution kernel converges to nearly zero within the range of analyzed lags (which will not be known in practice), all three methods yield nearly identical results (panel (c)).

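Here I present a somewhat different approach that can efficiently handle large system identification problems in the presence of ARMA noise, and that is not vulnerable to the artifactual behavior shown in Figure 1. This approach is based on the observation that because Equation (9) is a convolution, it can be transformed into a conventional multiple linear regression problem that can be solved for coefficients that combine both the $\beta_k$’s and the $\phi_i$’s, and these coefficients can then be back-transformed to extract the desired $\beta_k$ values. The key is to recognize that the terms in the second line of Equation (9) can be aligned as follows (showing the first three rows as an example):

$$\begin{array}{ccccccccc}
\phi_0\beta_0\,x_j & + & \phi_0\beta_1\,x_{j-1} & + & \phi_0\beta_2\,x_{j-2} & + & \phi_0\beta_3\,x_{j-3} & + & \cdots \\
 & - & \phi_1\beta_0\,x_{j-1} & - & \phi_1\beta_1\,x_{j-2} & - & \phi_1\beta_2\,x_{j-3} & - & \cdots \\
 & & & - & \phi_2\beta_0\,x_{j-2} & - & \phi_2\beta_1\,x_{j-3} & - & \cdots
\end{array} \qquad (12)$$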

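where, readers will recall, $\phi_0 = 1$ and thus can be included or excluded without loss of generality. The vertically aligned columns in Equation (12) show that collecting terms with the same lag in $x$ will convert Equation (9) to

$$y_j - \sum_{i=1}^{h} \phi_i\,y_{j-i} = \sum_{k=0}^{m+h} \Big( \beta_k - \sum_{i=1}^{\min(k,h)} \phi_i\,\beta_{k-i} \Big)\,x_{j-k} + \nu_j, \qquad \beta_k \equiv 0 \ \text{for}\ k > m \qquad (13)$$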

which can be rearranged to create a conventional linear regression equation,

$$y_j = \sum_{k=0}^{m+h} b_k\,x_{j-k} + \sum_{i=1}^{h} \phi_i\,y_{j-i} + \nu_j \qquad (14)$$

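where the error term $\nu_j$ is white noise, and the coefficients $b_k$ and $\phi_i$ can be jointly estimated by least-squares regression, with the matrix form (here using $m$ = 5 and $h$ = 2 as a simple example):

$$\begin{bmatrix} y_8 \\ y_9 \\ \vdots \\ y_n \end{bmatrix} =
\begin{bmatrix}
x_8 & x_7 & x_6 & x_5 & x_4 & x_3 & x_2 & x_1 & y_7 & y_6 \\
x_9 & x_8 & x_7 & x_6 & x_5 & x_4 & x_3 & x_2 & y_8 & y_7 \\
\vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \vdots \\
x_n & x_{n-1} & x_{n-2} & x_{n-3} & x_{n-4} & x_{n-5} & x_{n-6} & x_{n-7} & y_{n-1} & y_{n-2}
\end{bmatrix}
\begin{bmatrix} b_0 \\ b_1 \\ \vdots \\ b_7 \\ \phi_1 \\ \phi_2 \end{bmatrix} +
\begin{bmatrix} \nu_8 \\ \nu_9 \\ \vdots \\ \nu_n \end{bmatrix} \qquad (15)$$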

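or equivalently

$$\mathbf{y} = \mathbf{X}\boldsymbol{\gamma} + \boldsymbol{\nu}, \qquad \boldsymbol{\gamma} = [\,b_0, \ldots, b_{m+h}, \phi_1, \ldots, \phi_h\,]^{T} \qquad (16)$$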

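where the matrix $\mathbf{X}$ includes $m+h+1$ columns with lagged values of $x$ and a further $h$ columns with lagged values of $y$, and the parameter vector $\boldsymbol{\gamma}$ includes both the $b_k$ and $\phi_i$ coefficients. (In practice the first $m+h$ rows of the matrix $\mathbf{X}$ must be omitted because they have missing values, along with the corresponding rows of $\mathbf{y}$; any other rows with missing values in either $\mathbf{X}$ or $\mathbf{y}$ are similarly omitted.) The least-squares estimate of the parameter vector $\boldsymbol{\gamma}$ can be computed via the conventional matrix form of the “Normal Equation” of linear regression,

$$\hat{\boldsymbol{\gamma}} = \left(\mathbf{X}^{T}\mathbf{X}\right)^{-1}\mathbf{X}^{T}\mathbf{y} \qquad (17)$$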

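where the superscript $T$ indicates the matrix transpose. If individual rows of Equation (15) are given different weights $w_j$ (for example, to exclude or down-weight uncertain or irrelevant observations), Equation (17) becomes

$$\hat{\boldsymbol{\gamma}} = \left(\mathbf{X}^{T}\mathbf{W}\mathbf{X}\right)^{-1}\mathbf{X}^{T}\mathbf{W}\mathbf{y} \qquad (18)$$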

where $\mathbf{W}$ is a diagonal matrix containing the weights $w_j$. The effective sample size, accounting for the uneven weights $w_j$, can be calculated straightforwardly as $n_{\mathrm{eff}} = \left(\sum_j w_j\right)^2 / \sum_j w_j^2$; this equals $n$ when all rows have the same weight. The function in IRFnnhs.R computes $\hat{\boldsymbol{\gamma}}$ using R’s function, which in turn calls LAPACK solver routines based on LU decomposition. This is more efficient than inverting the cross-product matrix $\mathbf{X}^{T}\mathbf{X}$ or $\mathbf{X}^{T}\mathbf{W}\mathbf{X}$ and then multiplying the result by $\mathbf{X}^{T}\mathbf{y}$ or $\mathbf{X}^{T}\mathbf{W}\mathbf{y}$. For most problems where $n$ is much larger than $m$, the function’s runtime is approximately linear in $n$ and $m$, because the most time-consuming step is the construction of the $\mathbf{X}$ matrix. For larger $m$, runtime becomes linear in $n$ and quadratic in $m$, because the most time-consuming step is the computation of the cross-product $\mathbf{X}^{T}\mathbf{X}$. For very large $m$, the order of difficulty may approach $O(m^3)$ if the most time-consuming step becomes solving the linear system. Solution times on a 2019-vintage 1.8 GHz Intel i7-8550 CPU with four cores and 16 GB of RAM are roughly 50 ms for an $\mathbf{X}$ matrix with 10,000 rows and 100 columns, roughly 5 s for an $\mathbf{X}$ matrix with 100,000 rows and 1000 columns, and roughly 27 min for an $\mathbf{X}$ matrix with 1,000,000 rows and 10,000 columns. For comparison, R’s built-in function takes roughly 2000 times longer to solve the smallest of these problems, and for larger problems the discrepancy is even greater.
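Equations (14)–(18) can be sketched end to end in plain Python. The example below is a minimal illustration under stated assumptions, not the IRFnnhs.R code: all function names are hypothetical, Gaussian elimination stands in for the LAPACK LU routines, and the test case uses AR(1) noise whose innovations are set to zero so that Equation (14) holds exactly and the fitted $b_k$ and $\phi_1$ can be checked against their known values.

```python
import random


def augmented_design(x, y, m, h):
    """Rows of Equation (15): x at lags 0..m+h, then y at lags 1..h (hypothetical helper).
    Rows j < m + h are dropped because some of their lagged values are missing."""
    X = [[x[j - k] for k in range(m + h + 1)] + [y[j - i] for i in range(1, h + 1)]
         for j in range(m + h, len(y))]
    return X, y[m + h:]


def solve(A, rhs):
    """Solve A z = rhs by Gaussian elimination with partial pivoting."""
    p = len(A)
    M = [list(row) + [rhs[i]] for i, row in enumerate(A)]
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, p):
            f = M[r][c] / M[c][c]
            for k in range(c, p + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * p
    for r in range(p - 1, -1, -1):
        z[r] = (M[r][p] - sum(M[r][k] * z[k] for k in range(r + 1, p))) / M[r][r]
    return z


def weighted_normal_equations(X, y, w=None):
    """Equation (18) without forming W; with w = None (all weights 1) it reduces to Equation (17)."""
    w = [1.0] * len(y) if w is None else w
    p = len(X[0])
    xtwx = [[sum(wi * row[a] * row[c] for row, wi in zip(X, w)) for c in range(p)]
            for a in range(p)]
    xtwy = [sum(wi * row[a] * yj for row, yj, wi in zip(X, y, w)) for a in range(p)]
    return solve(xtwx, xtwy)


def effective_sample_size(w):
    """n_eff = (sum of weights)^2 / (sum of squared weights); equals n for equal weights."""
    total = sum(w)
    return total * total / sum(wi * wi for wi in w)


# Hypothetical test case: m = 5, h = 1, AR(1) noise with phi_1 = 0.9 and zero innovations.
rng = random.Random(42)
n, m, h, phi1 = 400, 5, 1, 0.9
beta = [0.0, 0.4, 0.3, 0.2, 0.1, 0.05]
x = [rng.gauss(0.0, 1.0) for _ in range(n)]
eps = [5.0 * phi1 ** j for j in range(n)]
y = [sum(beta[k] * x[j - k] for k in range(m + 1) if j >= k) + eps[j] for j in range(n)]

X, yy = augmented_design(x, y, m, h)
gamma = weighted_normal_equations(X, yy)
b_hat, phi_hat = gamma[: m + h + 1], gamma[m + h + 1:]
```

Here `b_hat` should match $b_k = \beta_k - \phi_1\beta_{k-1}$ for $k = 0, \ldots, m+h$, and `phi_hat` should match $\phi_1$. Giving half the rows zero weight leaves this exact fit unchanged while halving $n_{\mathrm{eff}}$.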

Because the $b_k$ coefficients estimated as part of $\hat{\boldsymbol{\gamma}}$ in Equation (17) or (18) could be noisy if the underlying time series are short or particularly noisy, the function provides an option for Tikhonov–Phillips regularization, as described in Equations (46), (49) and (50) of [20]. This regularization routine minimizes the mean square of the second derivatives of the $b_k$, thus penalizing $b_k$ values that deviate greatly from a line connecting their adjacent neighbors. This smoothness criterion has the advantage that it does not create a downward bias in the $b_k$ values, as conventional Tikhonov “ridge regression” would (see Section 4.3 of [20] for details). The degree of regularization is controlled by a dimensionless parameter that ranges between 0 and 1 and expresses the fractional weight given to the regularization criterion, relative to the least-squares criterion, in determining the best-fit values of $\hat{\boldsymbol{\gamma}}$. The default value of 0 (no regularization) is used for all of the analyses presented here.
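The reason a second-derivative penalty avoids the downward bias of ridge regression can be seen from the penalty itself. The Python sketch below builds a generic second-difference operator $\mathbf{D}$ and the matrix $\mathbf{H} = \mathbf{D}^T\mathbf{D}$. It is an assumption-laden illustration, not the exact formulation of Equations (46), (49) and (50) of [20]: the function names are hypothetical, and the mapping from the 0-to-1 regularization parameter to a penalty weight is not shown.

```python
def second_difference_matrix(p):
    """(p-2) x p operator D whose rows are [..., 1, -2, 1, ...], so (D b)_k = b_k - 2 b_{k+1} + b_{k+2}."""
    return [[1.0 if c in (r, r + 2) else (-2.0 if c == r + 1 else 0.0) for c in range(p)]
            for r in range(p - 2)]


def roughness_penalty(b):
    """Mean squared second difference of the coefficients (hypothetical helper)."""
    d2 = [b[k] - 2 * b[k + 1] + b[k + 2] for k in range(len(b) - 2)]
    return sum(v * v for v in d2) / len(d2)


def penalty_matrix(p):
    """H = D^T D. In a regularized fit, a weighted copy of H would be added to the b_k block
    of X^T X; the weighting used by IRFnnhs.R is not reproduced here."""
    D = second_difference_matrix(p)
    return [[sum(D[r][a] * D[r][c] for r in range(len(D))) for c in range(p)]
            for a in range(p)]


# Any straight line, including a constant, costs nothing, so coefficients are not pulled toward
# zero; a ridge penalty sum(b_k^2) would instead charge 75 for the constant [5, 5, 5].
print(roughness_penalty([5.0, 5.0, 5.0]), roughness_penalty([0.0, 1.0, 0.0]))
```

Only curvature is penalized, so a spike that sticks out from its neighbors is smoothed, while a smooth kernel of any amplitude passes through unchanged.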

As with any least-squares multiple regression problem, Equations (17) and (18) are potentially vulnerable to outliers in the underlying input and output time series. Therefore, the IRFnnhs.R script includes the option for robust solution of Equations (17) and (18) via Iteratively Reweighted Least Squares (IRLS). This robust estimation method can be invoked by calling the function with the option set to TRUE. Because it is an iterative algorithm, IRLS will increase the solution time, but usually only by small multiples. A potentially greater concern is that, as with any robust estimation method, there is always a risk of excluding valid data that just happen to have unusually large influence. Therefore, it is worthwhile to investigate further, whenever robust and non-robust methods yield substantially different results. In the analyses presented here, the option is kept at its default value of FALSE, because the synthetic benchmark data sets contain no outliers (although they do contain significant noise).
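The general idea of Iteratively Reweighted Least Squares can be sketched for a single regression coefficient. The paragraph above does not state which weighting function IRFnnhs.R uses, so the Huber weights and the scale estimate based on the median absolute deviation (MAD) below are assumptions chosen for illustration. The function names and the data are also hypothetical.

```python
import statistics


def huber_weights(resid, c=1.345):
    """Huber weights: 1 for small residuals, c*s/|r| for large ones (assumed weighting).

    The scale s is the median absolute deviation rescaled for Gaussian data."""
    med = statistics.median(resid)
    mad = statistics.median([abs(r - med) for r in resid]) / 0.6745
    s = max(mad, 1e-12)  # guard against a zero scale estimate
    return [1.0 if abs(r) <= c * s else c * s / abs(r) for r in resid]


def irls_slope(x, y, max_iter=50, tol=1e-10):
    """Robust slope of y = b*x (no intercept) by iteratively reweighted least squares."""
    w = [1.0] * len(x)
    b = None
    for _ in range(max_iter):
        b_new = (sum(wi * xi * yi for wi, xi, yi in zip(w, x, y))
                 / sum(wi * xi * xi for wi, xi in zip(w, x)))
        if b is not None and abs(b_new - b) < tol:
            return b_new
        b = b_new
        w = huber_weights([yi - b * xi for xi, yi in zip(x, y)])  # reweight, then refit
    return b


x = [float(i) for i in range(1, 11)]
y = [2.0 * xi for xi in x]
y[-1] = 100.0  # one gross outlier in otherwise exact data
ols = sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)
robust = irls_slope(x, y)
```

Ordinary least squares is pulled to a slope of about 4.08 by the single outlier, while the reweighted fit returns to a slope very close to 2. Because each iteration is a weighted least-squares solve, the extra cost is a small multiple of one solve, consistent with the text.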

The form of Equation (14) is similar to a conventional SISO (Single Input, Single Output) ARX (Autoregressive with eXogenous variables) model (e.g., [13,14]), but there are three essential differences. The first difference is that in ARX models, the focus is usually on the autoregressive part, and the objective is usually to be able to make one-step-ahead forecasts of the next value of $y$, based mostly on $y$’s relationship to its own prior values. By contrast, in the analysis presented here, the focus is on the exogenous variable $x$ and its lags, and on estimating their structural relationship to $y$.

The second difference is that in ARX models, the autoregressive terms $\phi_1 y_{j-1}$, $\phi_2 y_{j-2}$, etc., describe autoregressive behavior in the system itself (an “equation error model”), rather than correcting for autoregressive noise in the error term (an “output error model”). (Although these two model types can be combined in so-called CARARMA models, for which iterative and hierarchical estimation algorithms have been proposed (e.g., [21]), such complex models need not concern us here, because in the present analysis only the noise is assumed to be autoregressive.) Because it attributes autoregressive behavior to $y$ rather than to $\varepsilon$, an ARX model evaluates the $b_k$ coefficients only for lags from 0 to $m$ rather than from 0 to $m+h$ as shown in Equation (14). This distinction is important because without the extra coefficients $b_{m+1}, \ldots, b_{m+h}$, Equation (14) would not be the same as Equation (9), and thus a solution for Equation (14) would not be a solution for the original problem as specified by Equation (4) combined with Equation (6).

The third crucial difference is that in an ARX model, the $b_k$ coefficients would directly measure the effects of the (lagged) external forcing $x$. Here, by contrast, these effects are measured by the $\beta_k$ coefficients, which must be deconvolved from the $b_k$ coefficients as described in the next section.

2.3. Deconvolving the Impulse Response from the Fitted Coefficients

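The impulse response coefficients $\beta_k$ are not estimated by the $b_k$ coefficients themselves, but rather by functions that combine the $b_k$ coefficients and the AR coefficients $\phi_i$. One can translate between the regression coefficients $b_k$ and the impulse response coefficients $\beta_k$ by recognizing from inspection of Equation (12) that the $b_k$ coefficients are linear combinations of the impulse response coefficients $\beta_k$, weighted by the AR coefficients $\phi_i$,

$$b_k = \beta_k - \sum_{i=1}^{\min(k,h)} \phi_i\,\beta_{k-i}, \qquad k = 0, 1, \ldots, m+h, \quad \beta_k \equiv 0 \ \text{for}\ k > m \qquad (19)$$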
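The forward mapping of Equation (19), from impulse response coefficients to regression coefficients, takes only a few lines. This Python sketch uses a hypothetical function name and made-up coefficients; its main purpose is to show that $\mathbf{b}$ has $m+h+1$ entries, so that the trailing $h$ entries are generally nonzero even though $\beta_k = 0$ beyond lag $m$.

```python
def b_from_beta(beta, phi):
    """Equation (19): b_k = beta_k - sum_{i=1}^{min(k,h)} phi_i * beta_{k-i}, for k = 0..m+h.

    Hypothetical helper. beta_k is treated as 0 for k > m, so the result has m + h + 1 entries."""
    m, h = len(beta) - 1, len(phi)

    def beta_at(k):
        return beta[k] if 0 <= k <= m else 0.0

    return [beta_at(k) - sum(phi[i - 1] * beta_at(k - i) for i in range(1, min(k, h) + 1))
            for k in range(m + h + 1)]


# Three IRF lags with AR(1) noise (phi_1 = 0.5) give four regression coefficients.
print(b_from_beta([1.0, 0.5, 0.25], [0.5]))  # -> [1.0, 0.0, 0.0, -0.125]
```

The last entry, $-0.125$, is exactly the kind of term that an ARX-style model truncated at lag $m$ would omit.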

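These relationships can be represented in matrix form as (again using $m$ = 5 and $h$ = 2 as a simple example)

$$\begin{bmatrix} b_0 \\ b_1 \\ b_2 \\ b_3 \\ b_4 \\ b_5 \\ b_6 \\ b_7 \end{bmatrix} =
\begin{bmatrix}
1 & 0 & 0 & 0 & 0 & 0 \\
-\phi_1 & 1 & 0 & 0 & 0 & 0 \\
-\phi_2 & -\phi_1 & 1 & 0 & 0 & 0 \\
0 & -\phi_2 & -\phi_1 & 1 & 0 & 0 \\
0 & 0 & -\phi_2 & -\phi_1 & 1 & 0 \\
0 & 0 & 0 & -\phi_2 & -\phi_1 & 1 \\
0 & 0 & 0 & 0 & -\phi_2 & -\phi_1 \\
0 & 0 & 0 & 0 & 0 & -\phi_2
\end{bmatrix}
\begin{bmatrix} \beta_0 \\ \beta_1 \\ \beta_2 \\ \beta_3 \\ \beta_4 \\ \beta_5 \end{bmatrix} \qquad (20)$$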

or more compactly as

$$\mathbf{b} = \boldsymbol{\Phi}\,\boldsymbol{\beta} \qquad (21)$$

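where $\boldsymbol{\Phi}$ is a unit lower triangular Toeplitz matrix whose off-diagonals are the negatives of $\phi_i$. The mapping between $\boldsymbol{\beta}$ and $\mathbf{b}$ is invertible, so the impulse response coefficients $\beta_k$ can be retrieved from regression estimates of $b_k$ and $\phi_i$ by inverting the system of linear equations in Equation (19). This inversion can be written as a series of simple recurrence relationships (remembering that the main diagonal of the $\boldsymbol{\Phi}$ matrix is 1):

$$\begin{aligned}
\beta_0 &= b_0 \\
\beta_1 &= b_1 + \phi_1\,\beta_0 \\
\beta_2 &= b_2 + \phi_1\,\beta_1 + \phi_2\,\beta_0 \\
&\;\;\vdots \\
\beta_k &= b_k + \sum_{i=1}^{\min(k,h)} \phi_i\,\beta_{k-i}
\end{aligned} \qquad (22)$$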

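In matrix notation, this inversion can be expressed as (here again using $m$ = 5 as an example)

$$\begin{bmatrix} \beta_0 \\ \beta_1 \\ \beta_2 \\ \beta_3 \\ \beta_4 \\ \beta_5 \end{bmatrix} =
\begin{bmatrix}
1 & 0 & 0 & 0 & 0 & 0 \\
\psi_1 & 1 & 0 & 0 & 0 & 0 \\
\psi_2 & \psi_1 & 1 & 0 & 0 & 0 \\
\psi_3 & \psi_2 & \psi_1 & 1 & 0 & 0 \\
\psi_4 & \psi_3 & \psi_2 & \psi_1 & 1 & 0 \\
\psi_5 & \psi_4 & \psi_3 & \psi_2 & \psi_1 & 1
\end{bmatrix}
\begin{bmatrix} b_0 \\ b_1 \\ b_2 \\ b_3 \\ b_4 \\ b_5 \end{bmatrix} \qquad (23)$$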

or more compactly as

$$\boldsymbol{\beta} = \boldsymbol{\Psi}\,\mathbf{b} \qquad (24)$$

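where $\boldsymbol{\Psi}$ is the inverse of the matrix $\boldsymbol{\Phi}$, and both $\boldsymbol{\Psi}$ and $\mathbf{b}$ are truncated at lag $m$. Readers may recognize Equation (20) as a convolution, and Equation (23) as the corresponding deconvolution. The $\boldsymbol{\Psi}$ matrix, like the $\boldsymbol{\Phi}$ matrix, is a unit lower triangular Toeplitz matrix, and the individual $\psi_k$ values can be calculated by recurrence relationships analogous to those in Equation (22) above (noting that the terms of $\boldsymbol{\Phi}$ are $-\phi_i$, not $\phi_i$, and that $\boldsymbol{\Phi}$ and $\boldsymbol{\Psi}$ both have 1s along the main diagonal):

$$\begin{aligned}
\psi_0 &= 1 \\
\psi_1 &= \phi_1 \\
\psi_2 &= \phi_1\,\psi_1 + \phi_2 \\
&\;\;\vdots \\
\psi_k &= \sum_{i=1}^{\min(k,h)} \phi_i\,\psi_{k-i}
\end{aligned} \qquad (25)$$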

The last $h$ elements of $\mathbf{b}$ have no effect on the calculated $\beta_k$ values (each of which depends only on the values $b_0, \ldots, b_k$; see Equation (22)), but they nonetheless must be present when Equation (14) is fitted by least squares, because otherwise the estimates of $b_0$ through $b_m$ can be distorted by correlations that should be absorbed by the terms $b_{m+1}$ through $b_{m+h}$. Put differently, if Equation (14) is missing the last $h$ elements of $\mathbf{b}$, it will not be equivalent to Equation (9), leading to biased estimates of the resulting $\beta_k$ (unless, of course, the last $h$ elements of $\mathbf{b}$ are all zero).
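The back-transformation from $b_k$ and $\phi_i$ to $\beta_k$ can be computed in two equivalent ways: by the direct recurrence of Equation (22), or by building the $\psi_k$ weights of Equation (25) and applying $\boldsymbol{\beta} = \boldsymbol{\Psi}\mathbf{b}$ as in Equation (24). The Python sketch below implements both. The function names are hypothetical and the numbers are a made-up example. It also confirms that the trailing $h$ entries of $\mathbf{b}$ do not enter the result.

```python
def beta_from_b(b, phi, m):
    """Equation (22): beta_k = b_k + sum_{i=1}^{min(k,h)} phi_i * beta_{k-i}, for k = 0..m."""
    beta = []
    for k in range(m + 1):
        beta.append(b[k] + sum(phi[i - 1] * beta[k - i] for i in range(1, min(k, len(phi)) + 1)))
    return beta


def psi_weights(phi, m):
    """Equation (25): psi_0 = 1, psi_k = sum_{i=1}^{min(k,h)} phi_i * psi_{k-i}."""
    psi = [1.0]
    for k in range(1, m + 1):
        psi.append(sum(phi[i - 1] * psi[k - i] for i in range(1, min(k, len(phi)) + 1)))
    return psi


def beta_via_psi(b, phi, m):
    """Equation (24): beta = Psi b, where Psi is lower triangular Toeplitz in the psi_k."""
    psi = psi_weights(phi, m)
    return [sum(psi[i] * b[k - i] for i in range(k + 1)) for k in range(m + 1)]


b = [1.0, 0.0, 0.0, -0.125]  # regression coefficients for m = 2, h = 1 (example values)
print(beta_from_b(b, [0.5], 2))   # -> [1.0, 0.5, 0.25]
print(beta_via_psi(b, [0.5], 2))  # -> [1.0, 0.5, 0.25]
```

Changing the last entry of `b` leaves both results unchanged, as stated above; that entry matters only during the least-squares fit of Equation (14).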

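The $\boldsymbol{\Psi}$ matrix is also the Jacobian of the system of equations that translates $\mathbf{b}$ into $\boldsymbol{\beta}$ (Equation (22)), and thus it can also be used to convert the covariance matrix of $\mathbf{b}$ to the covariance matrix of the impulse response vector $\boldsymbol{\beta}$, using the matrix form of the conventional first-order, second-moment error propagation equation,

$$\mathrm{Cov}(\boldsymbol{\beta}) = \boldsymbol{\Psi}\,\mathrm{Cov}(\mathbf{b})\,\boldsymbol{\Psi}^{T} \qquad (26)$$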

The square root of the diagonal of the covariance matrix $\mathrm{Cov}(\boldsymbol{\beta})$ will then yield the standard errors of the impulse response coefficients $\beta_k$. These uncertainty estimates will be somewhat inflated if Equation (26) is applied directly to the covariance matrix obtained from Equation (14), due to interactions between the $b_k$ and $\phi_i$ coefficients. Statistically speaking, the $\phi_i$ coefficients are “nuisance parameters” in the sense that the goal is to determine the values of the $b_k$’s, but as part of this process the $\phi_i$’s must also be estimated in order to account for serial correlation in the residuals. Benchmark tests show that the standard errors of the $b_k$’s can be more accurately estimated if the $\phi_i$’s are treated as being fixed at their estimated values, rather than as uncertain parameters. This can be efficiently accomplished by removing the rows and columns that correspond to the lagged values of $y$ (and thus correspond to the $\phi_i$ coefficients) from the cross-product matrix $\mathbf{X}^{T}\mathbf{X}$ after it has been used to solve Equation (14). This modified cross-product matrix $\mathbf{C}$ is then used to calculate the covariances of the $b_k$ via the standard formula $\mathrm{Cov}(\mathbf{b}) = s^2\,\mathbf{C}^{-1}$, where $s^2$ is the variance of the residuals of Equation (14). The $b_k$ and their covariances are then translated into estimates of the $\beta_k$ and their covariances using Equations (24) and (26).
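The two bookkeeping steps above, dropping the nuisance rows and columns and propagating covariances with Equation (26), are sketched below in Python. This is a minimal illustration with hypothetical function names. It assumes that the $h$ lagged-$y$ columns are the last columns of $\mathbf{X}$, as in Equation (15), and it takes an already-computed $\mathrm{Cov}(\mathbf{b})$ for lags $0 \ldots m$ as input rather than inverting $\mathbf{C}$.

```python
import math


def psi_matrix(phi, m):
    """(m+1) x (m+1) lower triangular Toeplitz matrix Psi of Equation (23), via Equation (25)."""
    psi = [1.0]
    for k in range(1, m + 1):
        psi.append(sum(phi[i - 1] * psi[k - i] for i in range(1, min(k, len(phi)) + 1)))
    return [[psi[r - c] if r >= c else 0.0 for c in range(m + 1)] for r in range(m + 1)]


def matmul(A, B):
    return [[sum(A[r][k] * B[k][c] for k in range(len(B))) for c in range(len(B[0]))]
            for r in range(len(A))]


def transpose(A):
    return [list(col) for col in zip(*A)]


def drop_nuisance(xtx, h):
    """Remove the last h rows and columns (the lagged-y / phi terms) from X^T X."""
    p = len(xtx) - h
    return [row[:p] for row in xtx[:p]]


def cov_beta(cov_b, phi):
    """Equation (26): Cov(beta) = Psi Cov(b) Psi^T, with cov_b covering b_0..b_m only."""
    P = psi_matrix(phi, len(cov_b) - 1)
    return matmul(matmul(P, cov_b), transpose(P))


def standard_errors(cov):
    return [math.sqrt(cov[i][i]) for i in range(len(cov))]


# Unit, uncorrelated errors on b_0..b_2 with phi_1 = 0.5 (example values).
C = cov_beta([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], [0.5])
print([C[i][i] for i in range(3)])  # -> [1.0, 1.25, 1.3125]
```

Even with independent $b_k$ errors, the deconvolution makes the $\beta_k$ errors grow with lag and become correlated (here $\mathrm{Cov}(\beta_0, \beta_1) = 0.5$), because each $\beta_k$ accumulates the earlier $b$ values.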

2.4. Choosing the Number of Autoregressive Correction Terms

A practical question will inevitably arise: how should users choose the order h of the AR correction terms? As noted in Section 2.2 above, these terms should be numerous enough to capture the effects of both autoregressive and moving average noise in the measured output. One approach is to select the order of AR correction manually by trial and error, setting h directly and inspecting how different values of h affect the impulse response estimates and the correlations in the residuals. Benchmark tests suggest that as long as h is much smaller than m, making h too big will only slightly alter the impulse response estimates or their uncertainties, since the extra autoregressive coefficients will typically be very small and thus will barely affect the solution of Equation (14). (Equations (5) and (6) are models of the noise, not AR or ARMA models of the underlying processes generating the system output. Thus, any additional uncertainty in the autoregressive coefficients due to overfitting is unproblematic, because the goal is to estimate the impulse response coefficients, not the coefficients describing the noise.)

The order of autoregressive correction can also be determined automatically. One might assume that the Akaike Information Criterion (AIC) or Bayesian Information Criterion (BIC) could be used to determine an optimal value of h, but AIC and BIC can only compare models that predict the same set of output values, and changing h alters the number of rows of Equation (15) with missing lagged values of the output or input (and therefore the number of rows that must be excluded). A more fundamental problem is that even if AIC and BIC could be used, they would select a value of h that makes Equation (14) a good predictor of the output, rather than a good estimator of the regression coefficients and thus of the impulse response, which is the objective of this analysis. In this analysis, the autoregressive coefficients are not themselves of interest; they are needed only to whiten the residuals, that is, to convert serially correlated residuals into nearly uncorrelated residuals. With that objective in mind, by default the algorithm automatically finds the smallest value of h that sufficiently whitens the residuals, using both a practical significance test and a statistical significance test. The practical significance test examines whether the absolute values of the autocorrelation and partial autocorrelation function (ACF and PACF) coefficients of the residuals, for lags from 1 to log10 of the number of time steps, are all less than a user-specified threshold. The default threshold is 0.2, which corresponds to a maximum contribution of 0.2² = 4% to the variance of the residuals due to serial correlation at any individual lag. The statistical significance test examines whether the absolute values of the ACF and PACF coefficients exceed a significance threshold of 1.96/√n, where n is the number of residuals (corresponding to a two-tailed significance level of p < 0.05), more often than would be expected by chance according to binomial statistics, where the threshold for “by chance” is a user-specified probability (default value 0.05). The algorithm selects the smallest value of h that passes either of these tests.
The practical significance test is needed because in large samples, even trivially small ACF and PACF coefficients may still be statistically significant, triggering a pointless effort to make them smaller than they need to be. Conversely, the statistical significance test is needed because in small samples, even true white-noise processes may yield ACF and PACF coefficients that do not meet the practical significance threshold (if that threshold is smaller than 1.96/√n, the statistical threshold for n residuals), triggering a pointless effort to further whiten residuals that are already white.
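The two whitening tests can be sketched in plain Python. The function names are hypothetical; the maximum lag is left to the caller (the text ties it to log10 of the series length), the PACF is computed from the ACF with the Durbin–Levinson recursion, and 1.96/√n is used as the approximate two-tailed 5% threshold for n residuals. These choices are assumptions made for illustration.

```python
import math


def acf(r, max_lag):
    """Sample autocorrelations at lags 1..max_lag."""
    n = len(r)
    mean = sum(r) / n
    d = [v - mean for v in r]
    c0 = sum(v * v for v in d)
    return [sum(d[t] * d[t - k] for t in range(k, n)) / c0
            for k in range(1, max_lag + 1)]


def pacf_from_acf(rho):
    """Partial autocorrelations at lags 1..len(rho) via the Durbin-Levinson recursion."""
    pacf, phi = [], []
    for k in range(1, len(rho) + 1):
        num = rho[k - 1] - sum(phi[j] * rho[k - 2 - j] for j in range(k - 1))
        den = 1.0 - sum(phi[j] * rho[j] for j in range(k - 1))
        a = num / den
        phi = [phi[j] - a * phi[k - 2 - j] for j in range(k - 1)] + [a]
        pacf.append(a)
    return pacf


def binom_upper_tail(count, trials, p):
    """P(X >= count) for X ~ Binomial(trials, p)."""
    return sum(math.comb(trials, k) * p**k * (1 - p) ** (trials - k)
               for k in range(count, trials + 1))


def residuals_are_white(resid, max_lag, lim=0.2, prob=0.05):
    """True if the residuals pass the practical OR the statistical test."""
    rho = acf(resid, max_lag)
    coefs = rho + pacf_from_acf(rho)
    practical = all(abs(c) < lim for c in coefs)
    crit = 1.96 / math.sqrt(len(resid))            # two-tailed p < 0.05
    exceed = sum(abs(c) > crit for c in coefs)
    statistical = binom_upper_tail(exceed, len(coefs), 0.05) >= prob
    return practical or statistical
```

In an automatic search, one would refit Equation (14) for h = 0, 1, 2, and so on, stopping at the first h whose residuals pass `residuals_are_white`.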

A further technical detail is that if (but only if) the true impulse response function converges to zero well before the maximum lag m, one can obtain more accurate impulse response estimates at lags approaching m, with correspondingly smaller uncertainties, by solving Equations (10) and (11) using the autoregressive coefficients obtained from Equation (14), rather than using the impulse response and uncertainty estimates derived from Equation (14) itself. Users can choose between these two alternatives with a logical option in the IRF estimation function. If this option is set to TRUE, the impulse response coefficients and their uncertainties are recalculated via Equations (10) and (11) using the autoregressive coefficients obtained from Equation (14). This is functionally equivalent to the Cochrane–Orcutt procedure, but is much faster because it does not require iteratively solving Equations (10) and (11) to determine the autoregressive coefficients. Users should keep in mind, however, that if the true impulse response function does not converge to zero well before the maximum lag m, setting this option to TRUE can lead to artifacts similar to those shown in Figure 1; thus, it is set to FALSE by default.

2.5. Benchmark Tests

A simple benchmark test of the approach outlined above can be performed by generating a synthetic random input time series and convolving it with a known convolution kernel to yield the hypothetical system’s true output. A random ARMA error time series, generated in R, is then added to the true output to yield the hypothetical system’s measured output. This measured output and the input series are then supplied to the IRF estimation function, which estimates the impulse response coefficients and their standard errors. One can then calculate the estimation error as the difference between the estimated coefficients and the known convolution kernel, and take the root-mean-square average of these estimation errors at each lag (RMSE) over many random iterations of the benchmark test. If this RMSE approximately equals the pooled (root-mean-square) average of the estimated standard errors over the same iterations, then the standard errors are reasonable estimates of the uncertainties in the impulse response coefficients. One can similarly calculate the mean estimation bias as the arithmetic average of the estimation errors at each lag. If this average estimation error is of roughly the same magnitude as the pooled standard error divided by the square root of the number of random iterations, then the estimates are unbiased (that is, no more biased than one would expect by chance) and the standard error estimates are realistic.
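The summary statistics of this benchmark rubric reduce to a few averages over iterations. The sketch below (plain Python, hypothetical function name) takes, for each random iteration, the estimated coefficients and their standard errors at each lag, and returns the per-lag RMSE, pooled standard error, mean bias, and the standard error expected for that mean bias.

```python
import math


def benchmark_summary(estimates, std_errors, truth):
    """Per-lag benchmark statistics over N random iterations.

    estimates[i][k] and std_errors[i][k] hold the estimated coefficient and its
    standard error at lag k in iteration i; truth[k] is the known kernel."""
    N, lags = len(estimates), range(len(truth))
    rmse = [math.sqrt(sum((est[k] - truth[k]) ** 2 for est in estimates) / N) for k in lags]
    pooled_se = [math.sqrt(sum(se[k] ** 2 for se in std_errors) / N) for k in lags]
    mean_bias = [sum(est[k] - truth[k] for est in estimates) / N for k in lags]
    bias_se = [s / math.sqrt(N) for s in pooled_se]   # expected spread of mean_bias
    return rmse, pooled_se, mean_bias, bias_se
```

Realistic standard errors show up as RMSE close to the pooled standard error at each lag; unbiased estimates show mean biases that mostly fall within about two expected-bias standard errors of zero.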

This simple rubric for benchmark testing encompasses many different possibilities, depending on the chosen shape of the “true” convolution kernel, the time series length, the maximum lag m, the distribution and correlation structure of the input signal, and the amplitude and ARMA parameters of the error time series. Figure 2 shows only a few illustrative examples. In all of these cases, the time series length is 10,000, m is 60, the input is synthetic Gaussian noise with a lag-1 serial correlation of 0.5, and the error time series is ARMA(1,2) noise with an AR coefficient of 0.95 and MA coefficients of −0.2 and +0.2. The error time series is rescaled so that its variance equals the variance of the true output; thus, the signal-to-noise ratio is 1, a much higher noise level than is commonly used in such benchmark tests.
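The Figure 2 setup can be approximated with the plain-Python sketch below. Several details are assumptions, because the text does not specify them: the gamma kernel's scale parameter (shown here as 5 time steps), the burn-in lengths, evaluation of the kernel at integer lags, and the sign convention for the MA terms (added, following the convention documented for R's `arima`). The function names are hypothetical, and the resulting series are illustrative rather than a reproduction of the paper's runs.

```python
import math
import random


def gamma_kernel(m, shape, scale):
    """Gamma density evaluated at lags 0..m (shape >= 1; scale is an assumption)."""
    norm = math.gamma(shape) * scale ** shape
    return [k ** (shape - 1) * math.exp(-k / scale) / norm for k in range(m + 1)]


def ar1(n, rho, rng, burn=200):
    """Unit-variance Gaussian AR(1) series with lag-1 autocorrelation rho."""
    x, out, sd = 0.0, [], math.sqrt(1 - rho * rho)
    for t in range(n + burn):
        x = rho * x + sd * rng.gauss(0, 1)
        if t >= burn:
            out.append(x)
    return out


def arma12(n, ar, ma1, ma2, rng, burn=500):
    """ARMA(1,2): e[t] = ar*e[t-1] + z[t] + ma1*z[t-1] + ma2*z[t-2]."""
    e, z1, z2, out = 0.0, 0.0, 0.0, []
    for t in range(n + burn):
        z = rng.gauss(0, 1)
        e = ar * e + z + ma1 * z1 + ma2 * z2
        z2, z1 = z1, z
        if t >= burn:
            out.append(e)
    return out


def variance(v):
    mu = sum(v) / len(v)
    return sum((a - mu) ** 2 for a in v) / len(v)


def make_benchmark(n=10_000, m=60, shape=2, scale=5.0, seed=0):
    rng = random.Random(seed)
    x = ar1(n, 0.5, rng)
    kern = gamma_kernel(m, shape, scale)
    y_true = [sum(kern[k] * x[t - k] for k in range(min(m, t) + 1)) for t in range(n)]
    noise = arma12(n, 0.95, -0.2, 0.2, rng)
    c = math.sqrt(variance(y_true) / variance(noise))   # signal-to-noise ratio of 1
    y_obs = [a + c * b for a, b in zip(y_true, noise)]
    return x, kern, y_true, y_obs
```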

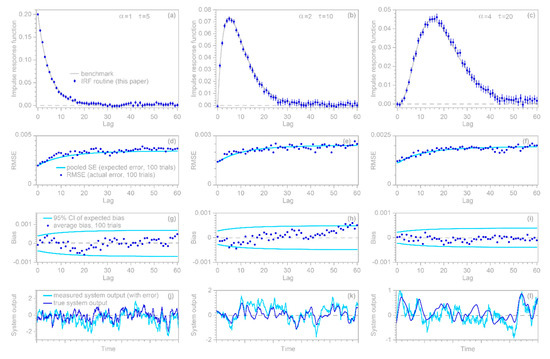

Figure 2.

Benchmark tests of impulse response function (IRF) calculations. Top row (a–c): IRFs calculated from noisy time series (dark symbols), compared to the true convolution kernels (gray lines) used as benchmarks. Error bars indicate one standard error. Benchmarks are gamma distributions with shape factors of 1, 2, and 4 in the left, center, and right columns, respectively. Second row (d–f): test of standard error estimates, obtained by repeating the analysis in the top row for 100 different randomizations. The estimates of expected deviations from the benchmark (the pooled standard errors at each lag, shown by light blue lines) agree with the actual deviations from the benchmark (root mean square average of deviations from the benchmark at each lag, shown by dark blue symbols). Third row (g–i): dark blue points show the average deviation from the benchmark in 100 different randomizations of the analysis in the top row, and the light blue lines show the 95% confidence interval of average deviations from the benchmark. The dark points generally lie between the blue lines, indicating that the IRFs are unbiased. Bottom row (j–l): true convolution output (dark blue lines) and the ARMA noise-corrupted output used as input to the IRF calculations (light blue lines). Five hundred time steps, equaling five percent of each time series, are shown.

The three columns of Figure 2 show benchmark test results for gamma-distributed convolution kernels with shape factors of 1, 2, and 4. The top row of panels shows that IRFs estimated from time series corrupted with ARMA noise (dark blue points) correspond closely to the benchmarks (gray lines). The second row of panels shows that the RMS average deviations from the benchmarks in 100 random realizations of each benchmark test (dark blue points) agree with the expected error (as quantified by the pooled standard error, shown by the light blue lines). The third row of panels shows that the IRFs are unbiased; the average deviations from the benchmarks in 100 random realizations of each benchmark test (dark blue points) lie within their 95% confidence intervals, indicating that they are no larger than would be expected by chance in unbiased IRFs. The bottom row of panels shows 5% of each convolved output time series, with and without noise (light and dark blue lines, respectively), to give a visual impression of how much the added noise distorts the source data of the IRF calculations.

2.6. Estimating Impulse Response Functions in the Presence of Nonstationary ARIMA Noise

The error in Equation (4) may not only be serially correlated; it may also be nonstationary, such that its true mean changes over time. Such a random error is known as ARIMA (Autoregressive Integrated Moving Average) noise, rather than ARMA noise. The standard approach to handling such cases is differencing: one subtracts the previous value from each element of both the output and the input time series, yielding their “first differences”. Equation (4) is then conventionally recast in terms of these first differences:

where the intercept is conventionally assumed to be zero, although this will often not be strictly true, precisely because the noise is nonstationary, so over a finite sample its mean may differ from the mean of its lagged values. The conventional approach of Equation (27) leads to impulse response estimates that artifactually converge toward zero as the lag approaches m, similar to the artifact generated by Equation (11), as shown in Figure 1 above. The approach of Equation (27) would also greatly complicate the analysis presented in Sections 3–5 below. Therefore, a different approach is followed here, in which first differencing is applied only to the left-hand side of Equation (4) and, similar to Equation (12) above, terms with the same lags of the input are combined. This has the effect of transforming the coefficients rather than the input time series, yielding

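where each transformed coefficient at lag k equals the impulse response coefficient at lag k minus the impulse response coefficient at lag k − 1, except at k = 0 (where it equals the lag-0 impulse response coefficient) and at k = m + 1 (where it equals the negative of the lag-m impulse response coefficient). These transformations can be expressed in matrix form as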

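One advantage of this approach is that it can use the analysis already outlined in Equations (14)–(26), with the only modifications being the use of the first-differenced output in place of the output, and of the transformed coefficients in place of the impulse response coefficients. The results of that analysis will be in terms of the transformed coefficients, which can be transformed back to the impulse response coefficients by inverting Equation (29), yielding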

One can straightforwardly combine Equations (14)–(30) to eliminate the need to use the transformed coefficients as an interim step between the regression coefficients and the impulse response coefficients. The resulting procedure can be summarized as follows: first, solve Equation (14), using the first-differenced output in place of the output (with the other substitutions described above), to obtain the regression coefficients and their covariance matrix. Next, transform these to the IRF coefficients and their covariance matrix using

and

where the matrix is the one defined in Equation (30), the inverse of the first difference matrix in Equation (29). In principle, this procedure can also be adapted to differencing of any order d, by differencing the output d times, using the resulting d-th differences in place of the first differences, and raising the matrix of Equation (30) to the power d. In practice, however, differencing beyond first order is likely to be counterproductive, because even first-differencing magnifies the high-frequency noise in the time series, and higher-order differencing amplifies this noise further. In the IRF estimation routine, setting the first-differencing parameter (default = FALSE) to TRUE invokes the differencing procedure outlined in Equations (28)–(32) above.
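Because the first-difference coefficient transformation described above involves only neighboring lags, both directions are short in NumPy. The sketch assumes the coefficient ordering from lag 0 to lag m + 1; the back-transformation uses a lower-triangular matrix of ones (a cumulative sum, which inverts first differencing) and its d-th power for d-th order differencing. Function names are hypothetical.

```python
import numpy as np


def fd_transform(beta):
    """IRF coefficients for lags 0..m -> transformed coefficients for lags 0..m+1:
    beta[k] - beta[k-1], with beta[0] at lag 0 and -beta[m] at lag m+1."""
    padded = np.concatenate(([0.0], np.asarray(beta, dtype=float), [0.0]))
    return np.diff(padded)


def fd_inverse(coef, cov, m, order=1):
    """Back-transform the first m+1 coefficients (and their covariance) with the
    order-th power of a lower-triangular matrix of ones (repeated cumulative sums)."""
    T = np.linalg.matrix_power(np.tril(np.ones((m + 1, m + 1))), order)
    c = np.asarray(coef, dtype=float)[: m + 1]
    C = np.asarray(cov, dtype=float)[: m + 1, : m + 1]
    return T @ c, T @ C @ T.T
```

One simple way to see the noise-amplification point: differencing white noise doubles its variance, because each difference combines two independent draws.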

2.7. Benchmark Tests with Nonstationary ARIMA Noise

The IRF estimation approach for handling nonstationary ARIMA noise, outlined in Section 2.6 above, can be tested using benchmark tests similar to those that were used in Section 2.5 to test IRF estimates with stationary ARMA noise. As before, a synthetic random input time series is convolved with a known convolution kernel to yield the hypothetical system’s true output. A random ARIMA noise series, generated in R with the order parameters set for first-order integration, is then added to the true output to yield the hypothetical system’s measured output. This measured output and the input series are then supplied to the IRF estimation routine.

In the illustrative examples shown in Figure 3, the time series length is 10,000, m is 60, the input is synthetic Gaussian noise with a lag-1 serial correlation of 0.5, and the error time series is ARIMA(1,1,2) noise with an AR coefficient of 0.7 and MA coefficients of −0.2 and +0.2. The error time series is rescaled so that its standard deviation equals 10 times the standard deviation of the true output. Thus, the signal-to-noise ratio (as a variance ratio) is 0.01, implying that noise dominates the measured signal. Like those of Figure 2, the three columns of Figure 3 show benchmark test results for gamma-distributed convolution kernels with shape factors of 1, 2, and 4, and the four rows of panels are interpreted in the same way. The top row of panels shows that IRFs estimated from time series that are corrupted with ARIMA noise (dark blue points) correspond closely to the benchmarks (gray lines). The second row shows that the calculated standard error accurately estimates the deviations from the benchmark, the third row shows that the IRFs are unbiased, and the bottom row presents excerpts from the time series to show how much the added noise distorts the source data of the IRF calculations.

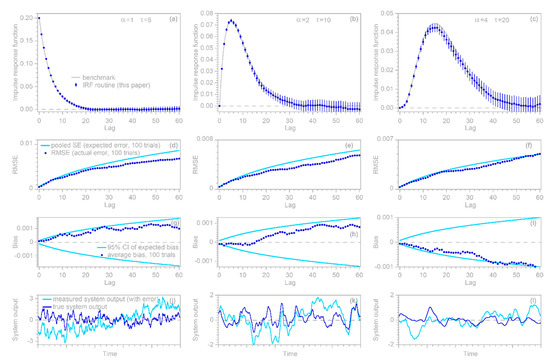

Figure 3.

Benchmark tests of impulse response function (IRF) calculations with nonstationary ARIMA noise. Top row (a–c): IRFs calculated from noisy time series (dark symbols), compared to the true convolution kernels (gray lines) used as benchmarks; error bars indicate one standard error. Benchmarks are gamma distributions with shape factors of 1, 2, and 4 in the left, center, and right columns, respectively. Second row (d–f): test of standard error estimates, obtained by repeating the analysis in the top row for 100 different randomizations. The estimates of expected deviations from the benchmark (the pooled standard errors at each lag, shown by light blue lines) roughly agree with the actual deviations from the benchmark (root mean square average of deviations from the benchmark at each lag, shown by dark blue symbols). Third row (g–i): dark blue points show the average deviation from the benchmark in 100 different randomizations of the analysis in the top row, and the light blue lines show the 95% confidence interval of average deviations from the benchmark. The dark points generally lie between the blue lines, indicating that the IRFs are unbiased. Bottom row (j–l): true convolution output (dark blue lines) compared to the ARIMA noise-corrupted output used as input to IRF calculations (light blue lines). Five hundred time steps, equaling five percent of each time series, are shown. Because the noise is nonstationary, the noise-corrupted time series (light blue lines) eventually wander far away from the true output (dark blue lines). The bottom panels show time intervals where they nearly overlap, so that they can be visualized together.

3. Using Demixing Techniques to Quantify System Response to Heterogeneous Inputs

3.1. Demixing Multiple Impulse Response Functions

The methods outlined in Section 2 can be used to estimate impulse response functions connecting single inputs to single outputs. However, many real-world systems combine signals from multiple sources, which may themselves be correlated, and which may have overlapping effects on the output. For example, precipitation falling in different parts of a drainage basin may result in different streamflow responses (due to differences in catchment geometry or subsurface hydrological properties), which may then be lagged and dispersed differently through the channel network. As another example, one may want to infer source/receptor relationships for air pollution sources located at different distances and directions from a given receiver. In situations such as these, estimating the system’s impulse response to each individual input is both a deconvolution problem and a demixing problem; one needs to not only un-scramble each input’s temporally overlapping effects, but also to un-mix the different inputs’ effects from one another.

The methods outlined in Section 2 can be straightforwardly extended to perform this combination of deconvolution and demixing. Consider the simple case of a system that combines two inputs (which may be correlated with each other, as well as serially correlated with themselves). These two inputs are applied to corresponding fractions of the system, and are translated into the system output over a range of lag times by their corresponding impulse response functions:

where the constant term and the error term represent any bias or error in the measured system output. This simple two-input case can be straightforwardly extended to include more inputs.

ARMA noise in the error term can be handled analogously to Equations (5), (6) and (12)–(26) for the single-input case. Subtracting lagged copies of Equation (33), analogously to Equations (12)–(14), yields

where the coefficients for each input estimate the product of that input’s impulse response function and the fraction of the system that it represents (these two factors in principle cannot be individually determined without additional assumptions). As in Equation (14), the autoregressive coefficients also subsume the effects of moving-average noise. Equation (34) can be expressed in matrix form as follows (here showing the simple case of two sources, with m = 5 and h = 2):

which can be solved by the methods outlined in Equations (17)–(26). This approach can be straightforwardly extended to any number of sources, although the design matrix in Equation (35) may become rather large: it has one row per time step, and its number of columns grows with the number of input time series, the maximum lag m, and the order h of AR correction. However, the IRF estimation routine is designed to handle large problems efficiently (see Section 2.2); it can also conserve memory by building large design matrices sequentially in chunks rather than all at once, so that the largest matrix that must be stored is much smaller than the full design matrix.
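A compact NumPy sketch of the multi-input regression of Equation (34) follows. It assumes AR(h) noise, places an intercept, h lagged outputs, and then each input at lags 0 to m + h in the design matrix, solves by ordinary least squares with `numpy.linalg.lstsq`, and back-transforms each input’s first m + 1 coefficients using the same AR relation as in the single-input case. The layout and the name `demix_irfs` are assumptions, and unlike the chunked construction described above, this sketch builds the whole design matrix in memory. Each returned curve corresponds to an input’s impulse response multiplied by its system fraction.

```python
import numpy as np


def demix_irfs(y, xs, m, h):
    """Least-squares fit of
        y[t] = a + sum_i phi_i y[t-i] + sum_j sum_{k=0..m+h} b_jk x_j[t-k] + noise,
    then back-transform each input's first m+1 coefficients to an IRF."""
    y = np.asarray(y, dtype=float)
    n, start, width = len(y), m + h, m + h + 1
    cols = [np.ones(n - start)]
    cols += [y[start - i:n - i] for i in range(1, h + 1)]       # lagged outputs
    for x in xs:
        x = np.asarray(x, dtype=float)
        cols += [x[start - k:n - k] for k in range(width)]       # lagged inputs
    X = np.column_stack(cols)
    coef, *_ = np.linalg.lstsq(X, y[start:], rcond=None)

    # Unit lower-triangular map from IRF to regression coefficients (AR(h) noise).
    A = np.eye(m + 1)
    for i, phi_i in enumerate(coef[1:h + 1], start=1):
        A -= phi_i * np.eye(m + 1, k=-i)

    irfs = []
    for j in range(len(xs)):
        first = 1 + h + j * width                                # first column of input j
        irfs.append(np.linalg.solve(A, coef[first:first + m + 1]))
    return irfs
```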

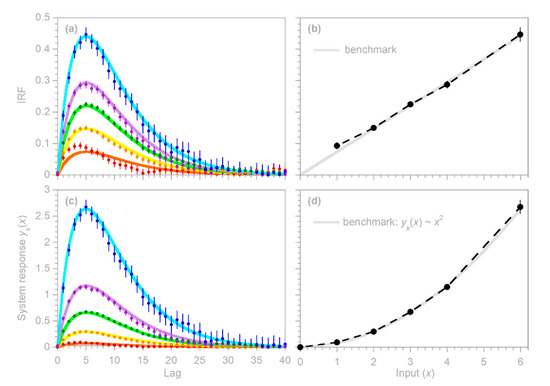

3.2. Benchmark Test

To test the approach outlined in Section 3.1 above, I generated three Gaussian random time series and convolved them with three different gamma-distributed convolution kernels, then combined the convolved signals to yield the hypothetical system’s true output. This true output was then corrupted by random ARMA noise, and the error-corrupted system output was supplied to the IRF estimation routine, along with the three input time series.

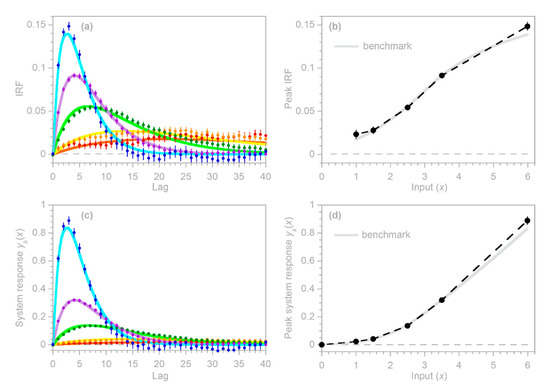

Two illustrative examples are shown in Figure 4: the left column was generated with three convolution kernels having roughly similar dispersion but different lag times, whereas the right column was generated with kernels having different degrees of dispersion. In both columns, the time series length is 10,000, m = 40, the three input signals are Gaussian random noise (synthesized with a correlation of 0.8 between each pair of time series), and the error time series is ARMA(1,2) noise, with an AR coefficient of 0.9 and MA coefficients of −0.2 and +0.2. The error time series is rescaled so that its standard deviation equals half the standard deviation of the true output; thus, the signal-to-noise ratio (as a variance ratio) is 4.
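Equicorrelated inputs like those in Figure 4 can be synthesized from one shared Gaussian factor plus independent parts, and the noise can then be scaled to a target signal-to-noise ratio. The plain-Python sketch below uses that common construction as an assumption; the text does not say how the paper’s inputs were generated, and the function names are hypothetical.

```python
import math
import random


def correlated_inputs(n, k, r, seed=0):
    """k unit-variance Gaussian white-noise series with pairwise correlation r
    (0 <= r <= 1): a shared factor scaled by sqrt(r) plus an independent part
    scaled by sqrt(1 - r)."""
    rng = random.Random(seed)
    a, b = math.sqrt(r), math.sqrt(1.0 - r)
    shared = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return [[a * s + b * rng.gauss(0.0, 1.0) for s in shared] for _ in range(k)]


def sd(v):
    mu = sum(v) / len(v)
    return math.sqrt(sum((a - mu) ** 2 for a in v) / len(v))


def rescale_noise(noise, signal, sd_ratio):
    """Scale noise so that sd(noise) = sd_ratio * sd(signal);
    the variance signal-to-noise ratio is then 1 / sd_ratio**2."""
    c = sd_ratio * sd(signal) / sd(noise)
    return [c * e for e in noise]
```

With r = 0.8, each series draws 80% of its variance from the shared factor, giving a pairwise correlation of 0.8; a noise standard deviation half that of the signal gives a variance ratio of (1/0.5)² = 4.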

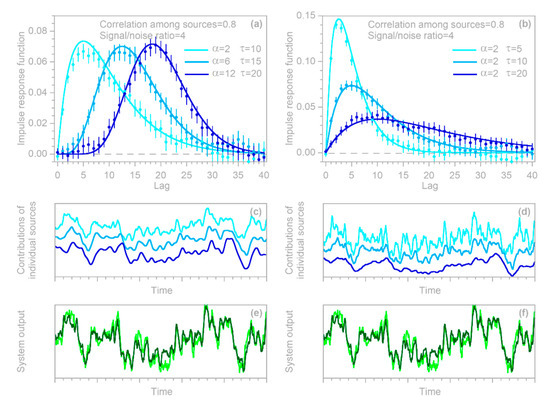

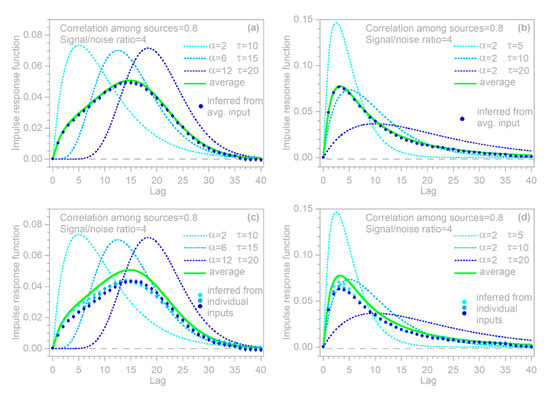

Figure 4.

Benchmark test with demixing of impulse response functions from three correlated sources that are mixed together in a system output that is corrupted by ARMA noise. The left column (a,c,e) shows three sources convolved with kernels exhibiting roughly similar dispersion but different lag times, and the right column (b,d,f) shows three sources convolved with kernels exhibiting different degrees of dispersion. The top panels (a,b) show the three sources’ benchmark convolution kernels (lines) and their impulse response functions calculated from the individual inputs and their combined output (points). Error bars indicate 1 standard error. The middle panels (c,d) show each of the three convolved sources’ contributions to the system output, with colors matching the corresponding convolution kernels and IRFs in the top panels, and with each line shifted vertically for clearer visualization. The bottom panels (e,f) compare the combined system output (the sum of the three curves shown in the middle panels, in dark green) with the ARMA noise-corrupted output used as input to the IRF calculations (in light green). The parameter values given in (a,b) are those of the gamma distributions used for the benchmark impulse responses. The middle and bottom panels show 500 time steps, equaling five percent of each time series.

The top row of panels shows that IRFs estimated from the error-corrupted time series (colored symbols) correspond closely to the benchmarks (colored lines), and that the IRFs for the three different inputs can be clearly distinguished from one another. This demixing exercise succeeds despite the clear correlations between the output signals generated from the three inputs (middle panels) and despite the distortion of the system output by ARMA noise (bottom panels).

3.3. Whole-System Impulse Response Inferred from Average of Heterogeneous Inputs

The benchmark test in Figure 4 immediately raises two questions. First, in heterogeneous systems such as those shown in Figure 4, what is the whole-system impulse response (the average of the individual IRFs) to the whole-system input (the average of the individual inputs)? Second, can we correctly infer this whole-system impulse response using the methods developed in Sections 2.1–2.6, if we only know the whole-system input and not the individual inputs to the different compartments of the system, with their individual impulse responses?

From Equation (33) above, one can see that if the same instantaneous impulse were applied to both inputs simultaneously, the expected impulse response of the system would be the sum of the individual impulse response functions, each weighted by the fraction of the system to which its input is applied. To some extent, this whole-system impulse response is inherently hypothetical, since it assumes that the two inputs are perfectly correlated, whereas estimating their individual impulse responses in the first place requires that the inputs are not perfectly correlated. Nonetheless, in many real-world cases, the individual inputs may be sufficiently uncorrelated that their individual impulse responses can be accurately estimated, but also sufficiently correlated that this fraction-weighted sum will reasonably approximate how the whole system would respond if an impulse were applied to both of the inputs simultaneously.

In many real-world cases, however, we may not know the inputs to individual components of the system, but only their average or their sum. In such cases, the true situation might be described by expressions such as Equation (33), with several inputs, but we would interpret it as if it were described by expressions such as Equation (4), with a single input instead. This naturally leads to the question of how the impulse response function that we would estimate from Equation (4) will depend on the individual IRFs and their weighting factors (the fractions of the system to which each input is applied), if a system is governed by Equation (33) but is interpreted as if it were governed by Equation (4) instead. The answer can be found by applying the standard rules for first-order, second-moment propagation of covariances to the terms of the “Normal Equation” (Equation (17)). Under the simplifying assumption that the inputs, although possibly correlated with one another, are not correlated with their own lags or each other’s lags, this approach yields the approximation,

where the summations are taken over all possible pairs of inputs, each input having its own standard deviation and each pair its own correlation coefficient. From Equation (36), one can see that in the simple case where all of the inputs are perfectly correlated (a correlation of 1 for every pair) and have the same variance (meaning that all of the inputs are identical), the impulse response function inferred from Equation (4) will be the average of the individual response functions weighted by the fractions of the system to which they apply, and thus will equal the theoretically expected impulse response of the system derived in the previous paragraph. The inferred impulse response function will also be close to this theoretically expected value if the inputs are only partially correlated (correlations between 0 and 1) or even uncorrelated with one another (correlations of 0), as long as they all have the same variance. Equation (36) shows that if the inputs have different variances, the inferred impulse response function will be approximately a weighted average of the individual response functions, with weights that depend on the inputs’ fractions and standard deviations and that differ between the limiting cases of perfectly correlated and completely uncorrelated inputs. If the inputs are partially correlated, the inferred impulse response function will fall between these two limiting cases.

3.4. Whole-System Impulse Response Inferred from Individual Inputs

The analysis in the preceding section leads naturally to the question of whether (and under what conditions) we can correctly infer the average response of a heterogeneous system if we have measured only one of the heterogeneous inputs, but treat this time series as if it were the average input to the entire system. If we again assume that the inputs may be correlated with one another, but not with their own lags or each other’s lags (i.e., that the inputs are white noise), propagation of covariances leads to the following approximation for the whole-system impulse response that we would infer from a single input time series:

where the summation is taken over all inputs, including the measured input itself. In the trivial case that all of the inputs are perfectly correlated and have the same variance (i.e., they are all the same), Equation (37) predicts, unsurprisingly, that each of them will yield unbiased estimates of the whole system’s average impulse response. If the individual inputs have the same variance but are not perfectly correlated, they will underestimate the system’s average impulse response, with greater underestimation bias for inputs that account for small fractions of the total and that are weakly correlated with the other inputs. If the individual inputs have different variances and are substantially (but imperfectly) correlated, they can either under- or over-estimate the system’s average impulse response, depending on how their standard deviations, impulse responses, and input fractions compare with those of the other inputs.

3.5. Benchmark Test

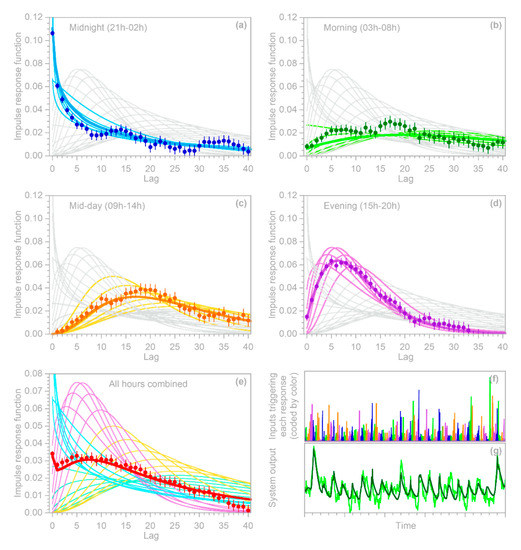

To test the inferences derived in Sections 3.3 and 3.4, I repeated the benchmark test shown in Figure 4, and then used the IRF estimation routine to calculate the impulse response functions from the error-corrupted system output and the average of the three heterogeneous inputs (top panels in Figure 5), as well as from each of the three inputs individually (bottom panels in Figure 5). Thus, the benchmark test in Figure 5 illustrates the IRFs that would be inferred from this heterogeneous system if only the average input were known (top panels), or if only one of the three inputs were known (bottom panels). In all cases, the inferred IRFs correspond closely to the average impulse responses of the whole system (thick green lines in Figure 5). These results indicate that if the routine is applied to systems that are heterogeneous but are not recognized as such (or not treated as such), it will yield good approximations of their average impulse responses, as long as it is supplied with the average input to the system, or with an input that is strongly correlated with that average.

Figure 5.

Benchmark test examining whether the average impulse response of the heterogeneous system shown in Figure 4 can be accurately inferred from the average of its three inputs (a,b), or from each input individually (c,d). As in Figure 4, the left column (a,c) shows three sources convolved with kernels exhibiting roughly similar dispersion but different lag times, and the right column (b,d) shows three sources convolved with kernels exhibiting different degrees of dispersion. The impulse responses of the three heterogeneous inputs are shown by the thin dotted lines, and their averages (representing the whole-system impulse response) are shown by the thick green lines. The dots show the IRFs computed from the whole-system output and averaged input (top panels), and from the whole-system output and the individual inputs (bottom panels, with dot colors keyed to match the impulse responses of the corresponding individual inputs). Error bars are shown if they are larger than the plotting symbols. The parameter values given in (a,b) are those of the gamma distributions used for the benchmark impulse responses.

4. Quantifying Nonstationary Impulse Response

Many real-world systems are nonstationary, in the sense that identical inputs to the system will yield different responses at different times, due to differences in the internal state of the system or changes in external forcings. To give just a few examples from environmental science: (1) The same precipitation falling on a given landscape will typically generate a sharper runoff peak if that landscape is wet than if it is dry. (2) The runoff yield from a given volume of precipitation may also shift over much longer timescales if the landscape’s vegetation cover changes, thus altering rates of rainfall interception and evapotranspiration and thereby also altering soil moisture. (3) The coupling between atmospheric pollution sources and receptors will depend on atmospheric stability, and thus on the time of day and the prevailing weather patterns. (4) Ecosystem responses to short-term perturbations may change over time, as ambient conditions shift due to climate change.

Systems that are stationary (in the sense that their internal processes are time-invariant) can also generate nonstationary outputs if they are subjected to nonstationary forcing, or if their outputs are contaminated by nonstationary noise. The impulse response of such systems (i.e., the coupling between their inputs and outputs) nonetheless remains stationary, and can be estimated using the methods described in Section 2 and Section 3 above. This section, by contrast, focuses on systems in which the coupling between inputs and outputs is itself nonstationary. The central question is: can the time-varying coupling between inputs and outputs in a nonstationary system be quantified from the input and output time series themselves, without direct knowledge of the system’s internal workings?

Here, it is essential to distinguish two different cases. In the first case, the system’s impulse response changes gradually, on time scales much longer than the impulse response itself. For example, shifts in the stand density of a forest over decades or centuries may alter how the landscape responds, over timescales of hours to months, to inputs of precipitation or nutrients. Such long-term shifts in a system’s impulse response can be quantified by breaking the input and output time series into separate time intervals (in this example, perhaps separate decades) and analyzing them separately. In the methods developed in Sections 2 and 3, this is equivalent to breaking the output vector and the design matrix in Equations (15) and (16) into horizontal blocks (that is, blocks of consecutive rows). This approach requires that each block be much longer than the impulse response timescale, thus minimizing the overlapping effects of inputs at the end of one block on outputs at the beginning of the next block. As long as this condition is met, this approach can be straightforwardly applied, and will not be analyzed further here.

In the second (and decidedly less trivial) case, the system’s impulse response changes on time scales that are similar to, or shorter than, the impulse response itself. For example, a landscape’s response to precipitation will depend on its antecedent wetness, and thus on its previous precipitation inputs; in some climates, it may also depend on short-term variations in temperature and thus in the fractions of precipitation that fall as rain versus snow. In such cases, the different impulse responses of the system (to wet vs. dry conditions, for example, or snow vs. rain) are overprinted on one another. Estimating these impulse responses thus requires both deconvolution, to un-scramble the lagged effects of each input over time, and demixing, to separate the effects of the different inputs (e.g., snow vs. rain) or the effects of different system states (e.g., wet vs. dry).

4.1. Deconvolving and Demixing Nonstationary Impulse Responses

Consider the simplest case, in which there are only two types of inputs (e.g., snow vs. rain) or two states of the system (e.g., wet vs. dry) that govern the system’s impulse response. This simple case can be represented in matrix form as

where the inputs of one type (or one set of system states), corresponding to input times 3, 4, 6, 7, 8, and 11, are shown in bold (black), and the inputs of the other type are shown in gray. In this simple example, the maximum lag is 3 and the AR correction order is 2, but the principles it illustrates apply to systems of any degree of complexity. The core of the problem is this: how can the impulse response functions of the black and the gray inputs be separated from one another, given that both black and gray inputs can appear in any row of Equation (38)?

The solution to this problem is to define two different input time series, one containing the input values corresponding to the “black” time steps (and zero otherwise), and the other containing the input values corresponding to the “gray” time steps (and zero otherwise). Because one or the other of these time series is zero for every time step, their sum will exactly equal the original input time series. However, because the overlapping gray and black time series are now separated into two different variables, one can solve for their IRFs using the methods developed in Section 3 for heterogeneous systems. The resulting matrix form of the problem is

which is analogous to the matrix form of the heterogeneity problem shown in Equation (35), except that there is now only one input time series, split between the “black” and “gray” categories, each with its own set of lag columns. Like Equation (35), Equation (39) can be solved by the methods outlined in Equations (17)–(26), and it can be straightforwardly extended to any number of different types of inputs or system states, although potentially at the cost of a rather large design matrix, depending on the number of lags and the number of time steps.
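The splitting-and-demixing step can be sketched in Python/NumPy as follows. This is a simplified illustration, not the author's R implementation: it builds one set of lag columns per input category and solves them jointly by ordinary least squares, but it omits the AR noise correction used in the paper's method, so it is only exact for noise-free output. The function names `split_input`, `demix_irfs`, and `simulate` are hypothetical.

```python
import numpy as np

def split_input(p, categories, n_categories):
    """Row c holds p where categories == c and 0 elsewhere, so the rows sum back to p."""
    p = np.asarray(p, dtype=float)
    categories = np.asarray(categories)
    return np.stack([np.where(categories == c, p, 0.0) for c in range(n_categories)])

def demix_irfs(p_split, y, m):
    """Jointly estimate one IRF (lags 0..m) per input category by least squares.

    Rows t < m are dropped so that every lagged column is fully defined.
    No AR noise correction is applied in this sketch.
    """
    n_cat, n = p_split.shape
    # Columns are ordered category by category, lag 0..m within each category.
    cols = [p_split[c, m - k : n - k] for c in range(n_cat) for k in range(m + 1)]
    beta, *_ = np.linalg.lstsq(np.column_stack(cols), y[m:], rcond=None)
    return beta.reshape(n_cat, m + 1)  # row c = IRF of category c

def simulate(p, categories, irfs):
    """Noise-free output: each input is spread over later steps by its own category's IRF."""
    n, m = len(p), irfs.shape[1] - 1
    y = np.zeros(n)
    for k in range(m + 1):
        y[k:] += irfs[categories[: n - k], k] * p[: n - k]
    return y
```

Because the split series sum exactly to the original input, `simulate(p, cats, irfs)` equals the sum of each category's own convolution, and `demix_irfs(split_input(p, cats, 2), y, m)` can then recover both IRFs even though their responses overprint one another in `y`.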

4.2. Benchmark Tests

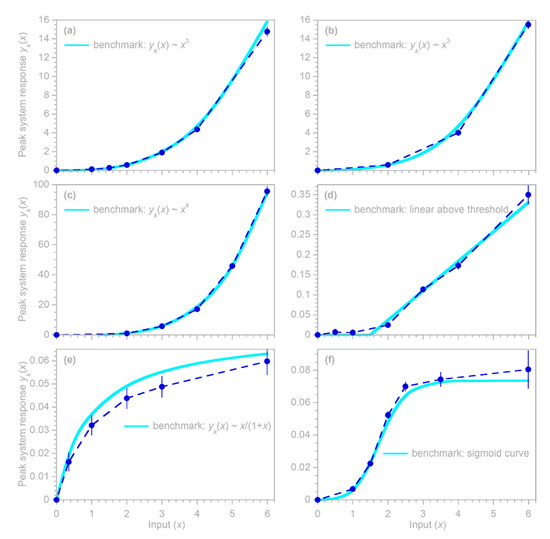

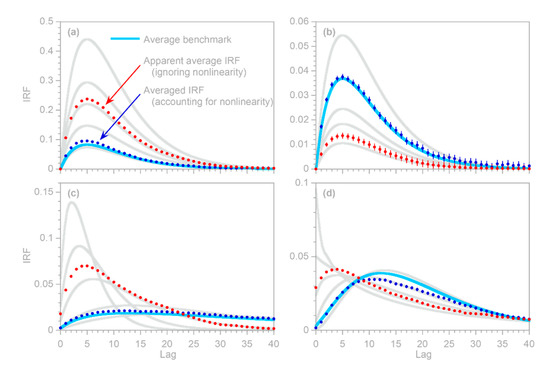

I conducted two benchmark tests to examine how well the deconvolution and demixing approach outlined in Section 4.1 can infer the time-varying impulse response functions of hypothetical nonstationary systems. In the first benchmark system, a random Poisson process irregularly switches among three markedly distinct impulse responses (Figure 6a), remaining with each for a random interval that averages five time steps. These impulse responses, when driven by the correspondingly colored random input time series shown in Figure 6c, generate the three components of the system output shown in Figure 6d (which would not be individually observable in real-world cases). These three components overprint one another to yield the combined system output shown by the dark green line in Figure 6e. This, in turn, when combined with ARMA noise at a signal-to-noise ratio of 1, yields the observable system output shown by the light green line in Figure 6e. The routine, when presented with this ARMA-noise-corrupted system output and the input time series (Figure 6c), yields the estimated IRFs shown by the solid dots in Figure 6a, which generally conform to the original benchmark impulse response functions.

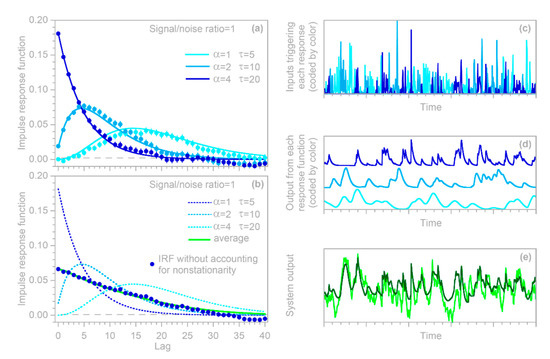

Figure 6.

Benchmark test with a nonstationary system that randomly shifts between three different impulse response functions. The three impulse responses are shown by the light, medium, and dark blue curves in (a,b), and are triggered by the correspondingly colored inputs in (c), yielding the output components in (d). These output components are not individually identifiable, but instead combine to form the system output in (e), shown with (light green) and without (dark green) added ARMA noise. The true system output (dark green) in (e) and its individual components in (d) are unobservable in practice. The estimated impulse response functions shown by the solid circles in (a) are inferred from the input in (c) and the ARMA-noise-corrupted output signal (light green) in (e) using the routine. If one treats the system as if it is stationary (by ignoring the differences among the colored input times in (c)), the routine yields the solid circles shown in (b), which correspond closely to the ensemble average (light green curve) of the three impulse responses (dashed curves). Values of and in (a,b) are parameters of the gamma distributions used for the benchmark impulse responses. Noise in (e) is ARMA(1,2), with an AR coefficient of 0.9 and MA coefficients of −0.2 and +0.2, added to the true system output at a signal-to-noise ratio of 1. Error bars in (a,b) indicate 1 standard error, and lines in (d) are shifted vertically for clearer visualization. Panels (c–e) show 500 time steps, equaling five percent of each time series.

The routine is not provided with any information about the individual IRFs or their resulting time series shown in Figure 6d; it also has no information about the noiseless dark green curve shown in Figure 6e, or about the nature of the added noise. Thus, this can be considered a stringent benchmark test. One could nonetheless question whether it is too simple, because it employs three benchmark IRFs that are themselves time-invariant. In the real world, nonstationary systems are likely to exhibit continuously varying impulse responses, under the influence of continuously varying drivers (e.g., temperature, solar flux, nutrient levels, etc.).

To approximate such a case, I constructed a benchmark test in which the parameters of the benchmark impulse response vary cyclically with the hour of the day (Figure 7). Readers will notice that the system output (Figure 7g) shows a strong daily cycle. This cycle does not arise from a cycle in the input (which is purely random), but instead arises because the impulse response of the benchmark system has a stronger peak during night-time. The night-time impulse response is not larger overall—the area under each impulse response curve is the same—but it is more focused, such that more of the output comes out promptly, and less is distributed across all hours of the day.

Figure 7.

Benchmark test with a nonstationary system whose impulse response varies with time of day. Impulse responses for each hour are shown by the thin lines. These are grouped into four six-hour periods, as shown by the colored lines in (a–d), with the average impulse responses for each six-hour period shown by the thicker and slightly darker colored lines. The inputs to the benchmark system are random (f), but lead to an irregular daily cycle in the output (g), owing to the sharper impulse response during night-time (a). Using only the input shown in (f) and the ARMA-noise-corrupted output shown in light green in (g), the routine yields the solid circles shown in (a–d), which exhibit broadly similar patterns to the average impulse responses for the corresponding times of day (thicker colored lines). Alternatively, if one treats the system as if it is stationary (by ignoring the differences among the colored input times in (f)), the routine yields the solid circles shown in (e), which correspond closely to the ensemble average (thick red curve) of the hourly impulse responses (thin lines). Error bars in (a–e) indicate 1 standard error. Noise in (g) is ARMA(1,2), with an AR coefficient of 0.9 and MA coefficients of −0.2 and +0.2, added to the true system output at a signal-to-noise ratio of 1. Panels (f,g) show 500 time steps, equaling five percent of each time series.

Figure 7a–d shows the hourly impulse responses, grouped into four six-hour periods each day. The individual hourly impulse response functions, and their six-hour averages shown by the wide colored lines in Figure 7a–d, would be unobservable in real-world cases: the only observables would be the input time series shown in Figure 7f and the noise-contaminated output time series shown by the light green line in Figure 7g. For the benchmark test shown in Figure 7, I divided each day of the input time series into four six-hour periods corresponding to the colors in Figure 7f (and the corresponding panels in Figure 7a–d), and solved for their impulse response functions using the methods of Section 4.1. The resulting estimates of the individual impulse response functions, shown by the solid dots in Figure 7a–d, are generally consistent with the benchmarks (the wide colored lines in Figure 7a–d). The deviations from the benchmark are somewhat larger in the morning hours (Figure 7b), because the impulse response at those hours is more damped and lagged than at other times of day, so its signal is relatively weaker.
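The time-of-day splitting can be sketched in Python/NumPy as below, assuming hourly time steps with index 0 falling at midnight. The names `six_hour_period` and `demix_by_period` are hypothetical, and, as in the earlier sketches, no AR noise correction is applied. Unlike the Figure 7 benchmark, where the impulse response changes every hour, this check holds each period's IRF constant, so that noise-free recovery is exact.

```python
import numpy as np

def six_hour_period(t, steps_per_day=24):
    """Map time index t to its six-hour period 0-3, assuming index 0 falls at midnight."""
    hour = np.asarray(t) % steps_per_day
    return hour // (steps_per_day // 4)

def demix_by_period(p, y, m, steps_per_day=24):
    """Fit one IRF (lags 0..m) per six-hour period by splitting p into four masked inputs."""
    n = len(p)
    period = six_hour_period(np.arange(n), steps_per_day)
    p_split = np.stack([np.where(period == c, p, 0.0) for c in range(4)])
    # One block of m+1 lag columns per period; rows t < m are dropped.
    cols = [p_split[c, m - k : n - k] for c in range(4) for k in range(m + 1)]
    beta, *_ = np.linalg.lstsq(np.column_stack(cols), y[m:], rcond=None)
    return beta.reshape(4, m + 1)  # row c = IRF for period c
```

Because the period labels depend only on the clock and not on the input values, each masked input series remains an independent random input, which is what makes the four sets of lag columns separable.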

4.3. Average Impulse Response of Nonstationary Systems

Many nonstationary systems may not be recognized as such, and may instead be analyzed as if they were stationary. It is therefore worth asking what such analyses are likely to yield. What will the apparent stationary IRF of the system be, and will it resemble, or differ from, the average of the system’s nonstationary IRFs?

The two nonstationary benchmark tests shown in Figure 6 and Figure 7 provide an opportunity to explore these questions. The solid dots in Figure 6b and Figure 7e show the ensemble IRFs that are obtained if the benchmark systems are analyzed as if they were stationary, ignoring the nonstationarity that is known to be present. In other words, the solid dots are obtained by supplying the routine with a single composite input time series, rather than one that has been split into multiple inputs for the individual nonstationary categories represented by the different colors in Figure 6c and Figure 7f. Figure 6b and Figure 7e show that the resulting IRFs, shown by the solid dots, closely approximate the time-averaged benchmark impulse responses shown by the solid lines.
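The following Python/NumPy sketch illustrates this result under simplifying assumptions: noise-free output, random inputs that are independent of the system's state, and three hypothetical regimes with made-up IRFs (not the gamma-shaped benchmarks of Figure 6). Regime switches follow a per-step switching probability of 1/5, a discrete-time stand-in for the Poisson switching described above, so regimes last five steps on average. A single-input least-squares fit that ignores the regimes is then compared with the frequency-weighted average of the regime IRFs. All function names are hypothetical.

```python
import numpy as np

def switching_regimes(n, n_regimes, mean_duration, rng):
    """Regime label per step; with probability 1/mean_duration per step the system jumps
    to a different regime, so regime durations are geometric with mean mean_duration."""
    switch = rng.random(n) < 1.0 / mean_duration
    jumps = np.where(switch, rng.integers(1, n_regimes, n), 0)  # nonzero jump = new regime
    return (int(rng.integers(n_regimes)) + np.cumsum(jumps)) % n_regimes

def simulate(p, regimes, irfs):
    """Noise-free output; each input is spread over later steps by the IRF of its own regime."""
    n, m = len(p), irfs.shape[1] - 1
    y = np.zeros(n)
    for k in range(m + 1):
        y[k:] += irfs[regimes[: n - k], k] * p[: n - k]
    return y

def stationary_irf(p, y, m):
    """Single-input least-squares IRF (lags 0..m) that ignores the regimes entirely."""
    n = len(p)
    X = np.column_stack([p[m - k : n - k] for k in range(m + 1)])
    beta, *_ = np.linalg.lstsq(X, y[m:], rcond=None)
    return beta

rng = np.random.default_rng(3)
irfs = np.array([[0.6, 0.3, 0.1, 0.0],   # hypothetical fast response
                 [0.1, 0.3, 0.4, 0.2],   # hypothetical intermediate response
                 [0.0, 0.1, 0.3, 0.6]])  # hypothetical slow response
p = rng.exponential(1.0, 200_000)          # random, nonnegative inputs
regimes = switching_regimes(len(p), 3, 5.0, rng)
y = simulate(p, regimes, irfs)
fractions = np.bincount(regimes, minlength=3) / len(regimes)
est = stationary_irf(p, y, m=3)
avg = fractions @ irfs                     # frequency-weighted average IRF
```

In this setup `est` should agree with `avg` to within sampling error. Note also that `avg` is much flatter than any of the three regime IRFs, echoing the caution below that an averaged IRF need not resemble any individual response.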

Two further points are worth mentioning here. First, even if the average impulse response can be reliably quantified, it will typically be a poor predictor of the system’s time-series behavior, except on time scales that are long enough that the system’s nonstationary fluctuations average out. For example, the average IRF in Figure 7e cannot capture the daily cycles shown in Figure 7g (because they originate from the differences between the daytime and night-time IRFs), but can capture most of the variation in the daily average output. Second, as Figure 7e shows, the time-averaged impulse response function can have a different shape from any of the individual impulse responses that make up that average. Thus, any inferred “characteristic” impulse response functions, and any inferences about their possible underlying mechanisms, should be treated with caution in systems that may exhibit nonstationarity. However, the methods outlined in this section allow such nonstationarity to be detected and quantified, even where it is obscured by overprinting of the individual impulse responses.

5. Nonlinear Deconvolution

Real-world systems often exhibit nonlinearities and thresholds, in which successive additions to the input generate more-than-proportional (or, sometimes, less-than-proportional) increases in the output. In most hydrologic systems, for example, high-intensity storms generate disproportionately more runoff than low-intensity storms do. Rates of evapotranspiration, by contrast, increase roughly linearly with rates of moisture supply when moisture is limiting, but reach an upper bound when energy becomes limiting instead. Similarly, in nutrient-limited ecosystems, nutrient uptake typically increases roughly linearly with nutrient loading, but then reaches an upper limit as the ecosystem reaches nutrient saturation. As a result, nutrient exports in streams and groundwaters exhibit threshold behavior as nutrient loading crosses the saturation threshold.

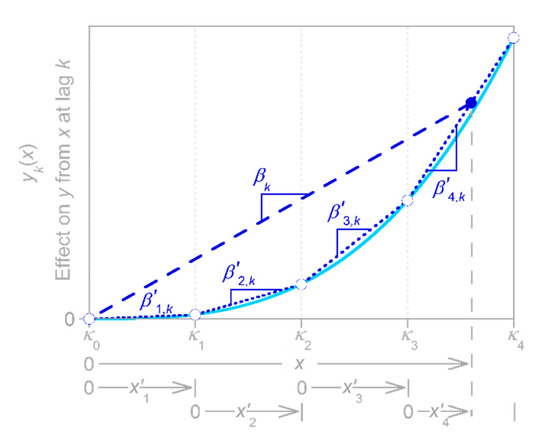

Here it is important to distinguish two different cases. In the first case, the system’s behavior can be approximated by a succession of steady states, because the inputs vary more slowly than the impulse response of the system itself. Nonlinearities in such systems can be quantified straightforwardly by plotting their outputs as functions of their inputs. Such systems will not be considered further here.