1. Introduction

The marine industry is an essential element of economic activity. It is estimated that, every day, thousands of cargo vessels travel the seas to ship goods. However, outer ship hulls deteriorate over time because of their operating conditions. For example, maritime environments favor the emergence of corrosion patches in metallic ship structures and the formation of biofouling on the surface. In other industrial sectors such as the petrochemical industry, large structures such as storage tanks are also necessary and deteriorate over time due to fatigue, corrosion, creep, and other factors. Hence, the inspection and maintenance of large-scale structures are critical to ensuring their healthy state, so that the risk of catastrophic failures can be mitigated.

Standard inspection and maintenance methods are time-consuming. Indeed, outer-hull service, inspection, and maintenance are mostly conducted at a dry dock, either manually or with a remote automated system. Under these conditions, complete hull thickness measurements are obtained by discrete sampling, which takes 5–8 days of work. Measurements may also be needed in areas that are difficult to access or that present risks when inspected by a human operator. Overall, these methods are time-inefficient and usually have a serious financial impact on the owners.

In this work, the integration of ultrasonic guided waves (UGWs) is presented. On metal plates, UGWs are generated by applying piezoelectric transducers in contact with the surface. These waves propagate radially around the emitter through the plate material and potentially over large distances. When encountering structural features (such as plate edges, stiffeners, weld joints), these waves are reflected, and the transducer collects ultrasonic echoes. In this setup, the resulting acoustic data carry essential information on source position and structure geometry. Hence, their integration into a mobile robotic system may lead to accurate robot positioning on the structure in combination with other sensors. Furthermore, as these waves are sensitive to thickness-altering flaws (such as corrosion patches), they can be used for inspection purposes to enable the long-term objective of long-range defect detection, which has not yet been established for a mobile system.

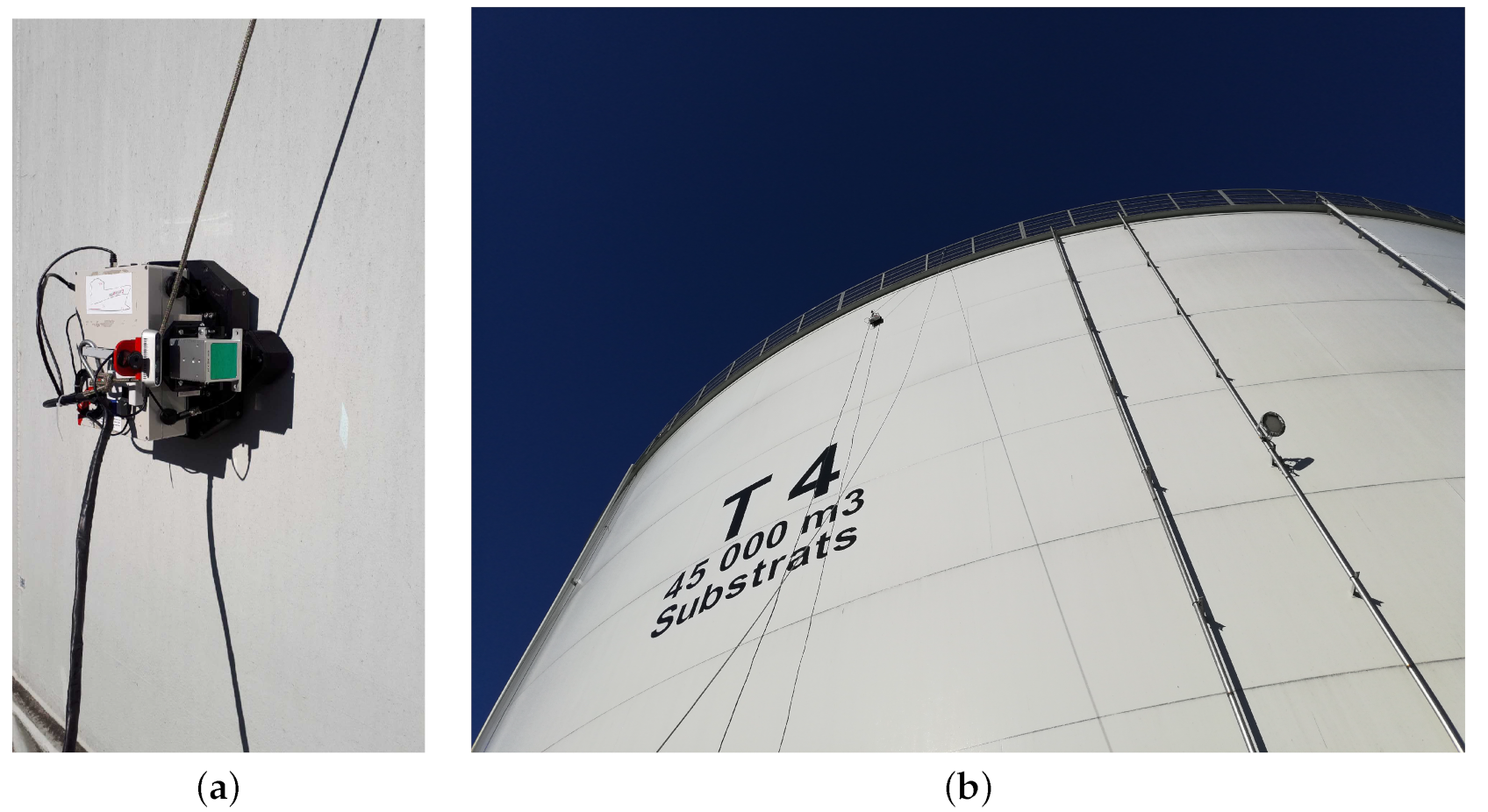

2. Experimental Platform: Altiscan Magnetic Crawler

The Altiscan magnetic crawler is a differentially driven robot equipped with magnetic wheels. The robot, manufactured by ROBOPLANET, is capable of driving on vertical surfaces, such as storage tanks and ship hulls. The crawler is also equipped with the following sensors:

off-the-shelf 6-DOF IMU;

off-the-shelf UWB receiver;

off-the-shelf RGB camera;

3D Lidar: Livox Mid-70;

embedded computer: Axiomtek CAPA310;

rotary wheel optical encoders;

contact V103-RM U8403008 piezoelectric transducer.

Power, water, and data channels are bundled into a single cord connected to the crawler and the respective inputs. Water supply is essential to ensure adequate surface contact between the piezoelectric transducer and the metal surface. The crawler can be operated in manual and autonomous modes. Lastly, a picture of the magnetic crawler is shown in Figure 1.

3. Data Analysis and Interpretation

In this section, we present the scientific methodology adopted by the project towards the autonomous inspection of large structures. To that end, this section is divided into three parts: pose estimation, mapping and obstacle detection, and ultrasonic data processing.

3.1. Pose Estimation

A probabilistic algorithm for pose estimation is often necessary to achieve accurate localization. Currently, probabilistic algorithms such as the extended Kalman filter (EKF), unscented Kalman filter (UKF), and particle filter (PF) provide the best means of performing pose estimation for nonlinear systems with noisy measurements [16]. More specifically, the PF provides high accuracy with a nonlinear system while also providing a multimodal pose distribution capable of handling non-Gaussian sensor noise [17,18,19].

3.1.1. Particle Filter

In our case, the particle filter is well suited to localization given the dynamics of the crawler and the sensor package used. As mentioned above, the crawler uses a differential drive, whose kinematic motion model is nonlinear. Because the PF handles nonlinear motion models without requiring linearization, it is a good choice for this type of motion. In terms of sensors, the crawler can use only encoder readings (odometry), an IMU, and UWB measurements for localization. Range measurements such as UWB can lead to a multimodal distribution when there are too few range measurements or high levels of noise, and they often exhibit non-Gaussian noise due to time-of-flight measurements [17,19]. As the PF, unlike the EKF or UKF, makes no Gaussian assumptions regarding the noise or the probability density function of the position, it is the filter that best matches the data provided by the sensors.
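To make the argument concrete, the following minimal Python sketch shows one predict, update, and resample cycle of a particle filter with a nonlinear differential-drive (unicycle) motion model and a single UWB range measurement. It is planar for brevity and is not the filter run on the crawler: the function names, noise levels, and anchor position are hypothetical, and the Gaussian range likelihood is only a placeholder, since the PF accepts any likelihood function.

```python
import numpy as np

def predict(particles, v, omega, dt, rng, sigma_v=0.02, sigma_w=0.01):
    """Propagate (x, y, yaw) particles through a noisy unicycle model.

    No linearization is needed: each particle is simply pushed through
    the nonlinear motion equations with its own noise sample.
    """
    n = len(particles)
    v_noisy = v + rng.normal(0.0, sigma_v, n)
    w_noisy = omega + rng.normal(0.0, sigma_w, n)
    out = particles.copy()
    out[:, 0] += v_noisy * dt * np.cos(particles[:, 2])
    out[:, 1] += v_noisy * dt * np.sin(particles[:, 2])
    out[:, 2] += w_noisy * dt
    return out

def update(particles, weights, measured_range, anchor, sigma_r=0.1):
    """Reweight particles with one range to a UWB anchor (assumed position)."""
    expected = np.linalg.norm(particles[:, :2] - anchor, axis=1)
    # Placeholder Gaussian likelihood; a heavy-tailed model could be used instead.
    likelihood = np.exp(-0.5 * ((measured_range - expected) / sigma_r) ** 2)
    weights = weights * likelihood + 1e-300  # guard against an all-zero weight vector
    return weights / weights.sum()

def resample(particles, weights, rng):
    """Systematic resampling: draw n particles proportionally to their weights."""
    n = len(weights)
    positions = (rng.random() + np.arange(n)) / n
    cumulative = np.cumsum(weights)
    cumulative[-1] = 1.0  # avoid round-off past the last bin
    idx = np.searchsorted(cumulative, positions)
    return particles[idx], np.full(n, 1.0 / n)
```

A single range measurement constrains the particles to a ring around the anchor, which is exactly the kind of multimodal belief that a Gaussian filter cannot represent.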

3.1.2. Particle-Filter Mesh Projection

The major drawback of the PF for real-time use is that it tends to be computationally intractable in high dimensions, owing to bottlenecks in the algorithm coupled with the high particle density required. In the case of wheeled robots, one solution is to only provide a pose estimate in SE(2) with 3 DoF [20,21,22]. Indeed, this solution works reasonably well for wheeled robots operating on a flat surface such as a road or hallway. However, once the robot starts to move on less planar surfaces, the mapping between the estimated pose in SE(2) with 3 DoF and the real pose of the robot within the world is not possible without introducing a high degree of uncertainty or error. Thus, for robots such as the crawler, which generally drives on complex three-dimensional structures such as ships or storage containers, this method would not be optimal.

One means of solving the PF tractability problem is to reduce the number of required particles while maintaining a pose estimate in SE(3) with 6 DoF. Normally, this is not possible because, with 6 DoF, the set of possible poses after the robot moves is so large that a very large sample is needed to obtain an accurate estimate. However, if the set of possible poses after motion is applied (i.e., within the PF transition function) is reduced, lowering the particle count becomes possible without reducing the accuracy of the estimate. The crawler’s motion is constrained in exactly this way because it must move along a surface. This constraint reduces the effective workspace of the crawler: the set of possible poses in the world after motion is applied is limited by the fact that the crawler moves on a known surface. This can be seen in Figure 2, where the mesh-constrained path stays attached to the surface, in contrast to the nonmesh path.

For the surface, a manifold approximation in the form of a mesh is used during initialization and in the transition function to reduce the workspace. To create this mesh, the surface on which the crawler moves is scanned to create a dense point cloud. On the basis of this point cloud, a mesh data structure is constructed that serves as an approximation of the smooth manifold on which the crawler moves. During initialization and in the transition function, particles that are not on the surface of the manifold are projected onto it, ensuring that all particle positions are constrained to the mesh surface.

Once the position of the particles is constrained to the mesh surface, the orientation is further constrained so that it is consistent with the crawler moving on a surface. Here, the crawler (and by extension the particle) is attached to the surface of the manifold. To this end, the x axis in the robot frame is retained to preserve the equivalent of the yaw orientation of the crawler. The z axis of the crawler is then aligned with the normal vector of the manifold at the point where the crawler is located. The current orientation is then further constrained by aligning the y axis of the crawler to be perpendicular with the normal vector. In a sense, this can be seen as constraining the pitch and roll of the crawler to the mesh on a local plane while retaining the yaw.
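The two constraints described above (position snapped to the mesh, orientation aligned with the local normal while keeping the heading) can be sketched as follows. This is a simplified illustration under stated assumptions, not the implementation used on the crawler: the triangle is selected by nearest centroid and the particle is projected onto that triangle's plane, which only approximates a true closest-point query, and the names `triangle_normals` and `constrain_to_mesh` are hypothetical.

```python
import numpy as np

def triangle_normals(vertices, faces):
    """Unit normal of every triangle (orientation given by vertex order)."""
    a, b, c = (vertices[faces[:, k]] for k in range(3))
    n = np.cross(b - a, c - a)
    return n / np.linalg.norm(n, axis=1, keepdims=True)

def constrain_to_mesh(position, rotation, vertices, faces):
    """Snap one particle onto the mesh and align its attitude with the surface.

    position : (3,) particle position in the world frame
    rotation : (3, 3) matrix whose columns are the body x, y, z axes in the world frame
    """
    centroids = vertices[faces].mean(axis=1)
    normals = triangle_normals(vertices, faces)
    # Simplification: pick the triangle with the nearest centroid.
    k = np.argmin(np.linalg.norm(centroids - position, axis=1))
    n = normals[k]
    # Orthogonal projection of the position onto the plane of that triangle.
    projected = position - np.dot(position - centroids[k], n) * n
    # Retain the heading: remove the normal component from the body x axis.
    # (Degenerate if the x axis is parallel to the normal.)
    x = rotation[:, 0] - np.dot(rotation[:, 0], n) * n
    x = x / np.linalg.norm(x)
    # The y axis is perpendicular to the normal and completes a right-handed frame.
    y = np.cross(n, x)
    return projected, np.column_stack([x, y, n])
```

Because pitch and roll are fully determined by the local normal, each particle effectively keeps only three free parameters (two surface coordinates and a heading), which is what makes a low particle count workable.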

By reducing the possible positions and orientations of the robot, it becomes possible to run the particle filter with only 200–500 particles while still maintaining a high level of accuracy (see Table 1 and Figure 3, both reporting the root mean square error (RMSE)).
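For reference, the RMSE of a position estimate is conventionally written as follows. The symbols are assumptions introduced here for illustration: $\hat{\mathbf{p}}_k$ is the estimated position at sample $k$, $\mathbf{p}_k$ is the corresponding ground-truth position, and $N$ is the number of samples.

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{k=1}^{N} \left\lVert \hat{\mathbf{p}}_k - \mathbf{p}_k \right\rVert^{2}}
```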

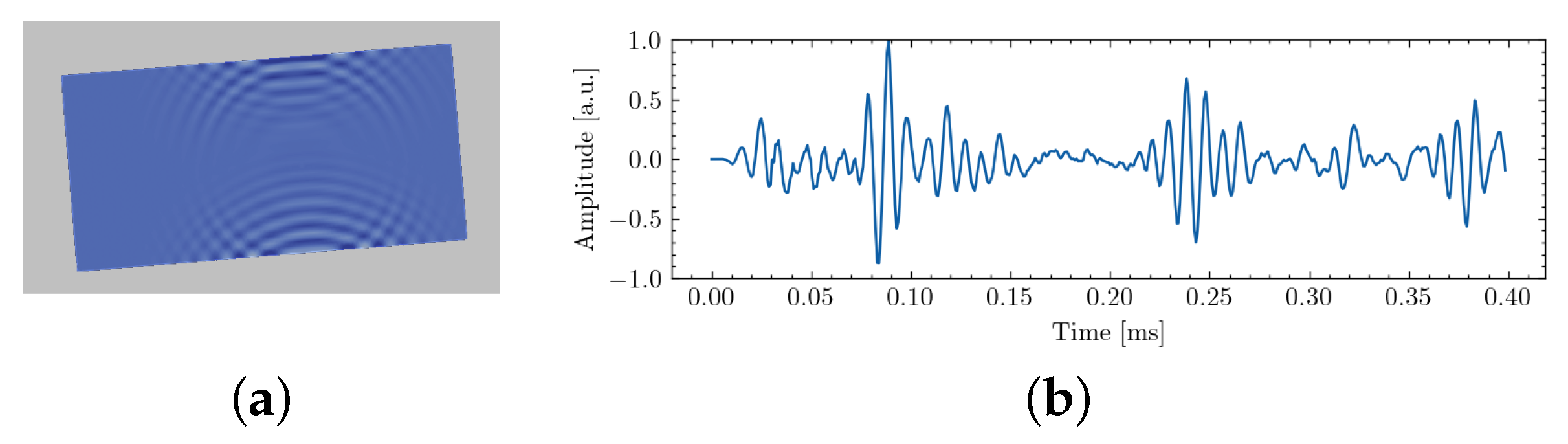

3.2. Ultrasonic Guided Waves

Autonomous robotic inspection on a large structure may be enabled by the use of ultrasonic measurements. Indeed, these waves may be leveraged to improve both localization and mapping capabilities by relying on acoustic echoes from structural features (individual metal panel boundaries, stiffeners, etc.), as illustrated by Figure 4. Furthermore, they can be used to enable long-range inspection, which has not yet been established for current robotic systems. In the following, we describe how UGWs are integrated in the current magnetic crawler and how they can be leveraged to achieve ultrasonic mapping.

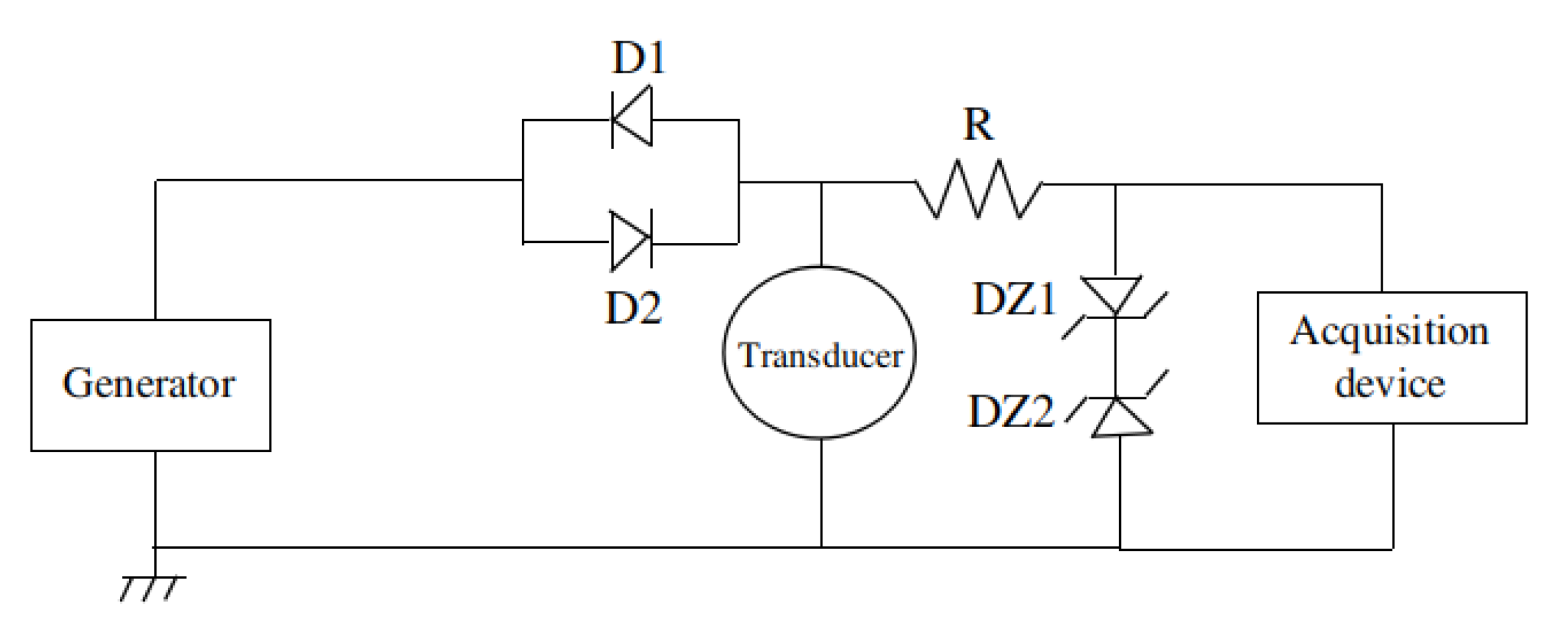

3.2.1. Integration of UGWs

In this part, we describe how UGWs were integrated within our magnetic crawler system. The robot is equipped with a single piezoelectric contact transducer placed in its head. The transducer is in contact with the surface to both generate and receive UGWs propagating in the material. We used an electrical circuit, shown in Figure 5, to emit and receive UGWs using a single transducer in true pulse-echo mode. The circuit protects the acquisition device during high-amplitude excitation, which could otherwise damage it. This was achieved with Zener diodes that clamp the voltage across their terminals while inducing only little distortion on the low-amplitude signals that contain the acoustic echoes. The robot was also equipped with a tether carrying a tube that, by means of an electrical water pump, continuously brings water to the interface between sensor and surface.

The sensor used for our prototype system was a contact V103-RM U8403008 piezoelectric transducer. This sensor was used as it is a common industrial sensor that was easily available. Although it can generate and receive UGWs, it is not an optimal choice, as it should be used for 1 MHz signals; for our application, lower frequencies are typically used.

3.2.2. Ultrasonic Mapping via Beamforming

The core of our methodology to achieve acoustic localization and mapping was beamforming [23]. This spatial filtering approach can focus, in the localization space, the energy contained in the measurements acquired over the robot trajectory. Hence, acoustic reflectors (such as individual metal plate boundaries) can be localized by retrieving local maxima in the beamforming results. The principle of this strategy was successfully demonstrated in [14] to achieve acoustic SLAM. An example of plate geometry reconstruction using our magnetic crawler system is provided in Section 4.
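As an illustration of the principle only, the sketch below implements a basic delay-and-sum beamformer over candidate reflecting lines. It is not the beamformer of [14] or [29]: it assumes a single non-dispersive wave velocity, pulse-echo envelopes that have already been extracted, and a line parametrization $(r, \theta)$ with normal $(\cos\theta, \sin\theta)$; the function name `das_line_map` and all parameters are hypothetical.

```python
import numpy as np

def das_line_map(positions, envelopes, fs, velocity, radii, angles):
    """Delay-and-sum score for candidate reflecting lines.

    positions : (M, 2) sensor positions along the robot trajectory [m]
    envelopes : (M, T) pulse-echo signal envelopes, one row per position
    fs        : sampling frequency [Hz]
    velocity  : assumed (non-dispersive) wave velocity [m/s]
    radii, angles : candidate line parameters; a line is the set of
                    points x satisfying x . (cos a, sin a) = r
    """
    positions = np.asarray(positions, dtype=float)
    envelopes = np.asarray(envelopes, dtype=float)
    n_samples = envelopes.shape[1]
    score = np.zeros((len(radii), len(angles)))
    for j, angle in enumerate(angles):
        normal = np.array([np.cos(angle), np.sin(angle)])
        along = positions @ normal                # signed offset of each sensor position
        for i, r in enumerate(radii):
            distance = np.abs(r - along)          # sensor-to-line distance
            # Round-trip time of flight converted to a sample index.
            idx = np.rint(2.0 * distance / velocity * fs).astype(int)
            rows = np.nonzero(idx < n_samples)[0]
            # Sum the energy each measurement holds at the predicted echo delay.
            score[i, j] = envelopes[rows, idx[rows]].sum()
    return score
```

A candidate line that matches a real plate edge receives a contribution from every measurement position, whereas a wrong candidate only collects energy by coincidence, so local maxima of `score` indicate likely reflectors.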

4. Field Experiment

4.1. Mapping and Obstacle Detection

In this section, we present our approach to the mapping problem while taking into account contextual constraints such as obstacles and the need to detect free space.

Maps are important to robots since they can be used for obstacle avoidance, path planning, and to constrain the attitude of the robotic system. Maps are also important to human operators looking for visual feedback from the robot’s perspective.

As previously discussed, the robot was equipped with an RGB camera, a 3D lidar, and an IMU. In addition, optical encoders capture wheel odometry. Further, as discussed in Section 3.1, an extended Kalman filter (EKF) [24] was used to fuse IMU and wheel odometry data. For the remainder of this article, the resulting pose is referred to as the “EKF pose”.

To compensate for uncertainties such as drift, pose correction is performed using a constrained version of Iterative Closest Point (ICP). This approach is further discussed in Section 4.1.3.

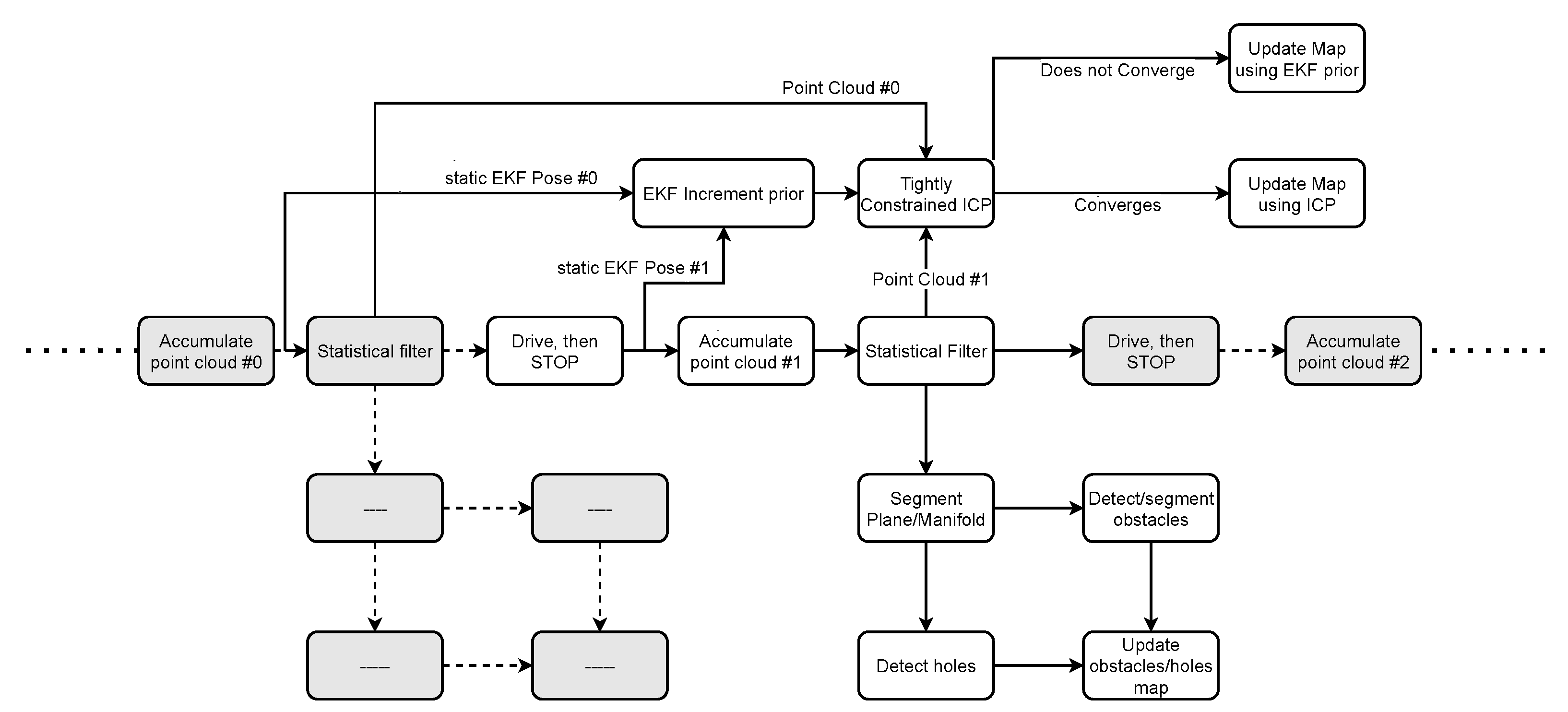

4.1.1. Stop and Map

The majority of filtering techniques, such as the Kalman filter [25], introduce time delays between filter estimates and actual observations. Despite this delay, the generated estimate satisfied the control requirements for autonomous driving. Mapping, however, was more challenging. To solve this problem, a stop-and-map approach was implemented in the autonomous planner, i.e., the robot task manager.

As shown in Figure 6, the proposed mapper is idle while the robot is moving and only captures data when the robot stops. Once static, the point cloud is accumulated, and the pose is captured.

This approach thus does not suffer from data delays. The EKF pose is captured around half a second after the robot stops. The minimal stop time was set to 3 s to allow the on-board lidar to accumulate sufficient points for data fitting. This is especially useful with 3D lidars equipped with a scanning unit, such as the Livox Mid-70. A sample accumulated cloud can be seen in Figure 7.

4.1.2. Obstacle Detection

The accumulated point cloud was then voxelized and processed through RANSAC [26] by fitting a second-degree manifold [27]. The choice of a second-degree manifold is rooted in the application: ship hulls and storage tanks inspected by autonomous robots are often large. As a result, nonflat surfaces have a large radius of curvature. The curvature is thus locally negligible, i.e., the surface around the current position of the robot can be represented as a plane. Nevertheless, a second-degree manifold better captures surface geometry at the few places with pronounced curvature, such as at the tip of the ship structure.

Lastly, RANSAC inliers denote free observable space that belongs to the detected manifold, and outliers denote positive obstacles such as protruding objects and negative obstacles such as holes.
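The inlier and outlier split can be illustrated with a short RANSAC sketch. It is a simplification of the approach in [27], not a reimplementation: the surface is modeled as a height field $z = q(x, y)$ in the sensor frame rather than a general implicit quadric, residuals are measured along $z$ rather than orthogonally, and the threshold, iteration count, and function names are hypothetical.

```python
import numpy as np

def design_matrix(xy):
    """Monomials of a second-degree polynomial in (x, y)."""
    x, y = xy[:, 0], xy[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * x, x * y, y * y])

def ransac_quadric(points, threshold=0.02, iterations=200, seed=0):
    """Fit z = q(x, y) with RANSAC; return coefficients and an inlier mask.

    Inliers approximate free space on the surface; outliers are candidate
    obstacles (protrusions above the surface or holes below it).
    """
    rng = np.random.default_rng(seed)
    A = design_matrix(points[:, :2])
    z = points[:, 2]
    best = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        # Six points are the minimal sample for six polynomial coefficients.
        sample = rng.choice(len(points), size=6, replace=False)
        coeffs, *_ = np.linalg.lstsq(A[sample], z[sample], rcond=None)
        inliers = np.abs(A @ coeffs - z) < threshold
        if inliers.sum() > best.sum():
            best = inliers
    # Refine the model on the largest consensus set, then recompute the mask.
    coeffs, *_ = np.linalg.lstsq(A[best], z[best], rcond=None)
    return coeffs, np.abs(A @ coeffs - z) < threshold
```

The sign of the residual of an outlier could additionally be used to separate positive obstacles from negative ones, provided the orientation of the surface normal is known.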

4.1.3. Pose Correction and ICP

ICP is an algorithm used to stitch overlapping point clouds. It works by iteratively finding the transformation that best aligns a pair of point clouds. A prior on the transformation to be found improves the chances of converging to a valid solution [28].

The odometric EKF still suffers from translational drift. To reduce it, ICP is applied between the point clouds accumulated at successive stops. Nevertheless, ICP does not always converge properly on featureless surfaces. To overcome this issue, a constrained version of ICP was implemented. The purpose of these constraints is to prevent ICP from degrading the estimated EKF pose when it does not converge properly. The list of constraints can be found in Table 2.
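One way to picture these constraints is as a gate on the transformation returned by ICP. The sketch below uses the maximal rotation and translation norms listed in Table 2 and discards any correction that exceeds them, so that the EKF pose is left untouched. The function names and the choice of representing the correction as a 4 × 4 homogeneous matrix are assumptions made for illustration.

```python
import numpy as np

MAX_ROTATION = 0.05      # rad, maximal rotation norm (Table 2)
MAX_TRANSLATION = 0.35   # m, maximal translation norm (Table 2)

def planar_transform(yaw, tx, ty):
    """Helper for the example: 4x4 transform with a rotation about z."""
    T = np.eye(4)
    c, s = np.cos(yaw), np.sin(yaw)
    T[:2, :2] = [[c, -s], [s, c]]
    T[:2, 3] = [tx, ty]
    return T

def rotation_angle(T):
    """Rotation angle of a homogeneous transform, from the trace of its rotation part."""
    cos_angle = (np.trace(T[:3, :3]) - 1.0) / 2.0
    return float(np.arccos(np.clip(cos_angle, -1.0, 1.0)))

def gate_icp_correction(T):
    """Return the ICP correction if it is plausibly small, else the identity."""
    small_rotation = rotation_angle(T) <= MAX_ROTATION
    small_translation = np.linalg.norm(T[:3, 3]) <= MAX_TRANSLATION
    return T if (small_rotation and small_translation) else np.eye(4)
```

For example, `gate_icp_correction(planar_transform(0.01, 0.1, 0.0))` keeps the correction, whereas a 1 m jump is replaced by the identity.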

After running a few ICP iterations, and because the point clouds overlap, the density of points should be standardized for both the newly accumulated point cloud and the previous ICP map. To that end, a density filter is applied to both inputs. Although the filter value depends on point cloud density, its true purpose is to give both inputs the same density. The full list of ICP parameters is given in Table 3.

Lastly, the map pose is corrected according to Equation (1), where C is the ICP correction inferred by matching the current accumulated cloud to the previously accumulated point cloud, P is the current pose in the reference frame of the map, and the two remaining terms are the previously captured EKF pose, taken when the robot was still static, and the most recently captured pose, also taken with the robot static.
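The following sketch shows one plausible way to combine these quantities. It is an assumption, not a transcription of Equation (1): poses are taken to be 4 × 4 homogeneous transforms, the odometric increment between the two static EKF poses is applied to the previous map pose, and the ICP correction is applied on the left. All names are hypothetical.

```python
import numpy as np

def planar_transform(yaw, tx, ty):
    """Helper for the example: 4x4 transform with a rotation about z."""
    T = np.eye(4)
    c, s = np.cos(yaw), np.sin(yaw)
    T[:2, :2] = [[c, -s], [s, c]]
    T[:2, 3] = [tx, ty]
    return T

def correct_map_pose(map_pose_prev, ekf_prev, ekf_curr, icp_correction):
    """Propagate the previous map pose with EKF odometry, then apply the ICP correction.

    map_pose_prev  : pose in the map frame at the previous stop
    ekf_prev       : EKF pose captured at the previous stop (robot static)
    ekf_curr       : EKF pose captured at the current stop (robot static)
    icp_correction : transform aligning the current cloud with the previous one
    """
    # Relative motion between the two stops, as seen by the EKF.
    odometry_increment = np.linalg.inv(ekf_prev) @ ekf_curr
    predicted = map_pose_prev @ odometry_increment
    # Assumed convention: the correction is expressed in the map frame.
    return icp_correction @ predicted
```

With an identity correction, the function reduces to pure dead reckoning between stops, which matches the fallback behavior when ICP does not converge.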

4.1.4. From Point Clouds to Texture Maps

Up to this point, the proposed framework still lacked a high-level visual component to be used by the system operator for visual feedback, manual driving, and debugging a possible snag. So far, point clouds have proven to be versatile data containers, and they are the precursors to creating maps. Nevertheless, there is a need for a representation that is finite in space and intelligible for people who are not point cloud experts. To that end, a multilayer texture map was conceived.

The generated texture is a projection of the RGB image onto the surface supporting the robot. In this context, we assumed that the ground was flat. Ground pixels are projected onto the camera frame for color extraction. For this, we used the pinhole model $s\,m' = A\,[R|t]\,M'$, where $M'$ is a 3D ground point, $[R|t]$ is the extrinsic matrix that provides the geometric connection between lidar and camera frames, $A$ is the camera intrinsic matrix obtained by checkerboard calibration, and $s$ is a scale factor. Lastly, the colors of ground points are inferred by copying the colors of the nearest pixel after projection, i.e., those of $m'$.
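A minimal sketch of this color lookup is given below, assuming an undistorted image, ground points expressed in the lidar frame, and extrinsics given as a rotation `R` and translation `t` from lidar to camera. The function name and return convention are hypothetical.

```python
import numpy as np

def colorize_ground_points(points, image, A, R, t):
    """Assign to each 3D ground point the color of its nearest image pixel.

    points : (N, 3) ground points in the lidar frame
    image  : (H, W, 3) RGB image
    A      : (3, 3) camera intrinsic matrix
    R, t   : lidar-to-camera rotation (3, 3) and translation (3,)
    Returns the (N, 3) colors and a mask of points that fall inside the image.
    """
    points = np.asarray(points, dtype=float)
    cam = points @ R.T + t                         # lidar frame -> camera frame
    colors = np.zeros((len(points), 3), dtype=image.dtype)
    seen = np.zeros(len(points), dtype=bool)
    front = np.nonzero(cam[:, 2] > 1e-6)[0]        # keep points in front of the camera
    uvw = cam[front] @ A.T                         # pinhole projection (homogeneous)
    u = np.rint(uvw[:, 0] / uvw[:, 2]).astype(int) # nearest pixel column
    v = np.rint(uvw[:, 1] / uvw[:, 2]).astype(int) # nearest pixel row
    height, width = image.shape[:2]
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    hit = front[inside]
    colors[hit] = image[v[inside], u[inside]]
    seen[hit] = True
    return colors, seen
```

Points behind the camera or outside the field of view keep a black color and are flagged as unseen, so they can be handled separately when the texture is assembled.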

We projected the RGB image onto the ground surrounding the robot. What followed was the fusion of relevant semantic information, such as free spaces and obstacles, extracted from point cloud data. As such, pixels not seen by the lidar, i.e., unobservable space, are marked in black; pixels belonging to obstacles are marked in red; and free space keeps the original RGB colors. The texture map comprises 3 layers:

A bottom layer consisting of a dynamically updated projection of the ground portion of the image drawn at the estimated pose.

A middle layer that overwrites the bottom layer using a clean representation, updated every time the robot stops.

A top layer consisting of metadata such as grid resolution.
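The color coding and the layering can be sketched as simple array operations. This is an illustrative assumption about how the layers might be combined: the boolean masks `observed` and `obstacle` are presumed to come from the lidar processing, and the function names are hypothetical.

```python
import numpy as np

def semantic_layer(rgb, observed, obstacle):
    """Color a projected ground image with lidar semantics.

    rgb      : (H, W, 3) projected ground image
    observed : (H, W) True where the lidar observed the cell
    obstacle : (H, W) True where the cell belongs to an obstacle
    """
    out = rgb.copy()
    out[~observed] = (0, 0, 0)              # unobservable space in black
    out[observed & obstacle] = (255, 0, 0)  # obstacles in red
    return out                              # free space keeps its RGB colors

def compose(bottom, middle, middle_valid):
    """Overwrite the live bottom layer with the clean middle layer where available."""
    out = bottom.copy()
    out[middle_valid] = middle[middle_valid]
    return out
```

Keeping the layers separate means that the live projection can be refreshed at camera rate while the clean layer only changes when the robot stops.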

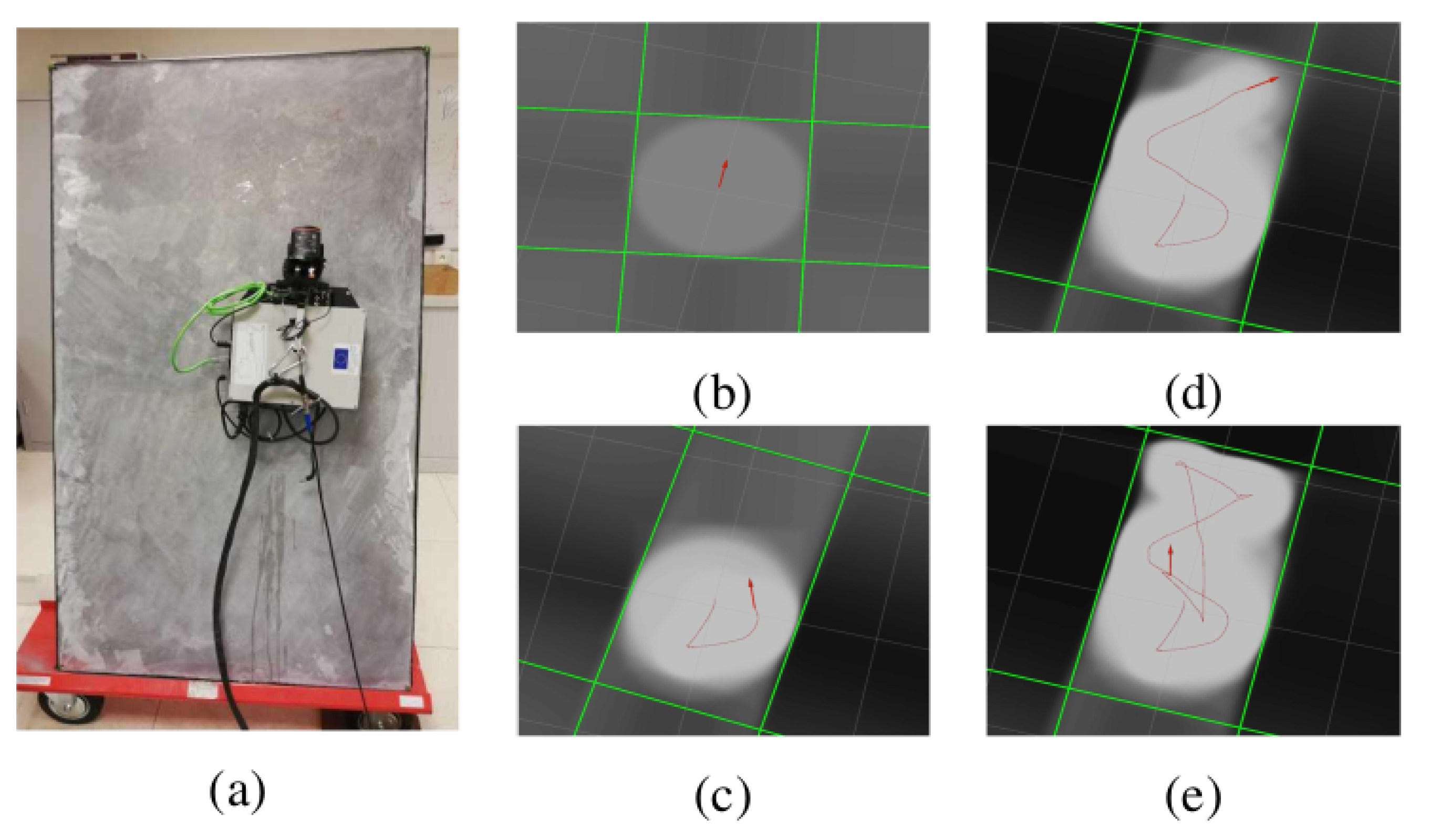

4.2. Ultrasonic Mapping

At the time of writing, our prototype setup for the emission and reception of UGWs was not yet ready for operation on a large structure such as a storage tank. Still, we obtained experimental results with our system in a laboratory environment, mapping only the boundaries and the inner surface of an isolated 1700 × 1000 × 6 mm steel plate that was placed nearly vertically. A picture of the experimental setup is provided in Figure 8a. In the experiment, IMU readings were integrated into the robot trajectory estimation for accurate robot heading. However, UGW measurements were not used for robot localization.

The results obtained with the beamforming method presented in [29] are provided in Figure 8b–e at different steps of the robot transect, which resulted from manual driving. They show that acoustic mapping using UGWs and our magnetic crawler system is feasible, as the plate dimensions and plate orientation with respect to the robot are recovered with sufficient precision. Detailed results are available in [29]. In future work, the combination of UGW measurements with other sensors and their deployment in more realistic environments should be investigated to achieve acoustic SLAM and eventually long-range defect detection.

4.3. Autonomous Navigation

The autonomous navigation system on the crawler consists of a mission system and a set of commands or tasks that can be used by the mission system. The mission system allows the user to plan and create a mission in advance, consisting of a set of commands or tasks affecting the crawler’s behavior. For example, the crawler can be scheduled to continually monitor for objects while simultaneously performing a vertical transect for a set distance or until it reaches a set height.

In addition to more basic commands, such as performing vertical and horizontal transects and rotations, the crawler can also utilize a mesh for control. In this case, the crawler is given a position on or near the mesh, generates a path to this position, and follows the trajectory to the position.

5. Infield Intervention

In this section, we show how the proposed system performed during infield interventions. To validate our system, a Leica MS-60 total station was used to track the real-time position of the crawler robot. Using NTP and PTP protocols, time synchronization was performed among on-board sensors, the on-board computer on the crawler, the total station, and the operator’s computer.

The experimental environment consisted of the large metallic storage tanks shown in Figure 1b. During the experiments, the robot was given the task of autonomously driving on the structure. Further, the on-board lidar was used to detect nearby obstacles. During the entire process, the robot captured ultrasonic measurements while mapping the structure in real time.

5.1. Pose and Autonomy Evaluation

For the mission on the metallic tank, the crawler was given the goal of performing a vertical transect to the top of the tank, returning to the bottom, rotating, and then performing a horizontal transect (see Figure 2). The particle filter ran in real time on the crawler’s embedded computer with 200 particles. Even with only 200 particles, the accuracy of the particle filter was high enough for the crawler to use this localization for control (see Table 1).

In addition to the standard controls, we were also able to test the mesh-based control. In this case, the crawler was able to successfully create a path on the mesh data structure and use that path to navigate to the given location on the real surface.

5.2. Mapping and Texture Generation

As shown in Figure 9, the mapping algorithm provided semantic information that could be interpreted by both humans and computers. As expected, ICP did not always converge, but it improved the result when it did. When ICP did not converge, the fused pose was used instead.

6. Conclusions

This paper showed the potential afforded by autonomous inspection vehicle systems. By using the state of the art in localization, mapping, and the processing of ultrasonic guided waves, we showed how to create an autonomous system capable of navigating on its own while providing qualitative visual and quantitative feedback through the analysis of map and ultrasonic data. The development of such techniques is crucial to lowering the costs associated with storage tank and ship inspections, and to decreasing the already significant human risk. In this work, we showed how to generate and improve pose estimates, for instance, by using the mesh of the structure to constrain the pose. Semantic texture maps were also generated for navigation and obstacle avoidance. Lastly, we integrated ultrasonic measurements in our system to localize the boundaries of a metal panel using ultrasonic echoes.

Future work consists of improving the robot autonomy, for instance, by integrating data reported from unmanned aerial vehicles (UAVs), autonomous underwater vehicles (AUVs), and the above-water crawler. This integration is needed to achieve a comprehensive understanding of structural integrity and for the precise localization of defects.

Author Contributions

Localization, P.S. and C.P.; mapping, G.C. and C.P.; ultrasonic Data, O.-L.O. and C.P.; validation, G.C., O.-L.O., P.S. and C.P.; writing—original draft preparation, G.C., O.-L.O. and P.S.; writing—review and editing, G.C., O.-L.O., P.S. and C.P.; supervision, C.P.; project administration, C.P.; funding acquisition, C.P. All authors have read and agreed to the published version of the manuscript.

Funding

This project received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement no. 871260.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| EKF | Extended Kalman filter |
| UGW | Ultrasonic guided wave |
| PF | Particle filter |
| ICP | Iterative closest point |
| SLAM | Simultaneous localization and mapping |
| GPS | Global positioning system |
| IMU | Inertial measurement unit |
| DoF | Degrees of freedom |
| SLERP | Spherical linear interpolation |
| RMS | Root mean square |
| CAD | Computer-aided design |
| UWB | Ultra-wideband |
| MDPI | Multidisciplinary Digital Publishing Institute |

References

1. Gallegos Garrido, G. Towards Safe Inspection of Long Weld Lines on Ship Hulls Using an Autonomous Robot; Universidad Tecnológica de Panamá: Panama City, Panama, 2018.

2. Enjikalayil Abdulkader, R.; Veerajagadheswar, P.; Htet Lin, N.; Kumaran, S.; Vishaal, S.R.; Mohan, R.E. Sparrow: A Magnetic Climbing Robot for Autonomous Thickness Measurement in Ship Hull Maintenance. J. Mar. Sci. Eng. 2020, 8, 469.

3. Negahdaripour, S.; Firoozfam, P. An ROV Stereovision System for Ship-Hull Inspection. IEEE J. Ocean. Eng. 2006, 31, 551–564.

4. Hover, F.S.; Eustice, R.M.; Kim, A.; Englot, B.; Johannsson, H.; Kaess, M.; Leonard, J.J. Advanced perception, navigation and planning for autonomous in-water ship hull inspection. Int. J. Robot. Res. 2012, 31, 1445–1464.

5. Jacobi, M. Autonomous inspection of underwater structures. Robot. Auton. Syst. 2015, 67, 80–86.

6. Ortiz, A. BUGWRIGHT2—Autonomous Robotic Inspection and Maintenance on Ship Hulls and Storage Tanks. Available online: https://alberto-ortiz.github.io/bugwright2.html (accessed on 7 March 2022).

7. Su, Z.; Ye, L. Identification of Damage Using Lamb Waves: From Fundamentals to Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2009; Volume 48.

8. Hall, J.S.; McKeon, P.; Satyanarayan, L.; Michaels, J.E.; Declercq, N.F.; Berthelot, Y.H. Minimum variance guided wave imaging in a quasi-isotropic composite plate. Smart Mater. Struct. 2011, 20, 025013.

9. Quaegebeur, N.; Masson, P.; Langlois-Demers, D.; Micheau, P. Dispersion-based imaging for structural health monitoring using sparse and compact arrays. Smart Mater. Struct. 2011, 20, 025005.

10. Tabatabaeipour, M.; Trushkevych, O.; Dobie, G.; Edwards, R.S.; Dixon, S.; MacLeod, C.; Gachagan, A.; Pierce, S.G. A feasibility study on guided wave-based robotic mapping. In Proceedings of the 2019 IEEE International Ultrasonics Symposium (IUS), Scotland, UK, 6–9 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1567–1570.

11. Tabatabaeipour, M.; Trushkevych, O.; Dobie, G.; Edwards, R.S.; McMillan, R.; Macleod, C.; O’Leary, R.; Dixon, S.; Gachagan, A.; Pierce, S.G. Application of ultrasonic guided waves to robotic occupancy grid mapping. Mech. Syst. Signal Process. 2022, 163, 108151.

12. Dobie, G.; Pierce, S.G.; Hayward, G. The feasibility of synthetic aperture guided wave imaging to a mobile sensor platform. NDT & E Int. 2013, 58, 10–17.

13. Pradalier, C.; Ouabi, O.L.; Pomarede, P.; Steckel, J. On-plate localization and mapping for an inspection robot using ultrasonic guided waves: A proof of concept. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 5045–5050.

14. Ouabi, O.L.; Pomarede, P.; Geist, M.; Declercq, N.F.; Pradalier, C. A fastslam approach integrating beamforming maps for ultrasound-based robotic inspection of metal structures. IEEE Robot. Autom. Lett. 2021, 6, 2908–2913.

15. Montemerlo, M.; Thrun, S.; Koller, D.; Wegbreit, B. FastSLAM 2.0: An improved particle filtering algorithm for simultaneous localization and mapping that provably converges. IJCAI 2003, 3, 1151–1156.

16. Wang, Y.; Zhang, W.; Li, F.; Shi, Y.; Nie, F.; Huang, Q. UAPF: A UWB Aided Particle Filter Localization For Scenarios with Few Features. Sensors 2020, 20, 6814.

17. González, J.; Blanco, J.L.; Galindo, C.; Ortiz-de Galisteo, A.; Fernández-Madrigal, J.A.; Moreno, F.A.; Martínez, J.L. Mobile robot localization based on Ultra-Wide-Band ranging: A particle filter approach. Robot. Auton. Syst. 2009, 57, 496–507.

18. Vernaza, P.; Lee, D.D. Rao-blackwellized particle filtering for 6-DOF estimation of attitude and position via GPS and inertial sensors. In Proceedings of the IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 1571–1578.

19. Knobloch, D. Practical challenges of particle filter based UWB localization in vehicular environments. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation, IPIN 2017, Sapporo, Japan, 18–21 September 2017; pp. 1–5.

20. Blok, P.M.; van Boheemen, K.; van Evert, F.K.; IJsselmuiden, J.; Kim, G.H. Robot navigation in orchards with localization based on Particle filter and Kalman filter. Comput. Electron. Agric. 2019, 157, 261–269.

21. Chen, X.; Vizzo, I.; Labe, T.; Behley, J.; Stachniss, C. Range Image-Based LiDAR Localization for Autonomous Vehicles. arXiv 2021, arXiv:2105.12121.

22. Barfoot, T.D. State Estimation for Robotics; Cambridge University Press: Cambridge, UK, 2017.

23. Van Veen, B.D.; Buckley, K.M. Beamforming: A versatile approach to spatial filtering. IEEE ASSP Mag. 1988, 5, 4–24.

24. Kalman, R.E.; Bucy, R.S. New Results in Linear Filtering and Prediction Theory. J. Basic Eng. 1961, 83, 95.

25. Kalman, R.E. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45.

26. Fischler, M.; Bolles, R. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

27. Luchowski, L. Adapting the RANSAC algorithm to detect 2nd-degree manifolds in 2D and 3D. Theor. Appl. Inform. 2012, 24, 151.

28. Chahine, G.; Vaidis, M.; Pomerleau, F.; Pradalier, C. Mapping in unstructured natural environment: A sensor fusion framework for wearable sensor suites. SN Appl. Sci. 2021, 3, 571.

29. Ouabi, O.L.; Pomarede, P.; Zeghidour, N.; Geist, M.; Declercq, N.; Pradalier, C. Combined Grid and Feature-based Mapping of Metal Structures with Ultrasonic Guided Waves. In Proceedings of the International Conference on Robotics and Automation (to appear), Philadelphia, PA, USA, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022.

Figure 1.

First prototype of magnetic crawler robot during a testing session near Bazancourt in France. (a) Close-up of magnetic crawler; (b) magnetic crawler on top of 20 m high storage tank.

Figure 2.

3D path comparison of 200 particle MCPF with a 20,000 SPF and ground truth (front and side views).

Figure 3.

RMS translation error for 200 particles against ground truth obtained from a Leica MS60 Total Station. Shaded areas represent standard error deviation. The inclusion of mesh constraints dramatically improves position estimates and the standard deviation of the error.

Figure 4.

(a) Ultrasonic guided waves reflecting on the edges of a metal panel in a simulated environment. (b) Example of ultrasonic measurement acquired on an isolated metal panel in pseudo pulse-echo mode (i.e., with two nearly collocated transducers).

Figure 5.

Schematic of designed electrical circuit to use a true pulse-echo setup. D1 and D2 are commutation diodes, DZ1 and DZ2 are Zener diodes, and R is a resistor.

Figure 6.

Flowchart of proposed mapper: grayed-out rectangles denote repeated behavior.

Figure 7.

RGB vs. intensity map showing metal plates used to test the magnetic crawler robot in Metz. (a) Accumulated intensity point cloud taken from the Livox Mid-70; (b) RGB camera feed.

Figure 8.

Plate geometry mapping with our robotic platform relying on UGWs. (a) Experimental setup. (b–e) Mapping results at different steps along robot trajectory. Red arrow, robot pose; red line, trajectory.

Figure 9.

Accumulated point cloud with obstacle detected. Texture map shows free space in real world colors, the same obstacle detected in the point cloud (red), and previously observed free space (green).

Table 1.

Average RMSE comparison.

| Particles | Mesh | NoMesh |
|---|---|---|
| 200 | 0.0856 | 0.3694 |
| 500 | 0.0780 | 0.3389 |
| 20,000 | - | 0.2532 |

Table 2.

List of ICP constraints.

| Constraint Type | Value |
|---|---|
| 2D Constraint | |
| Maximal rotation norm | 0.05 rad |
| Maximal translation norm | 0.35 m |
| Minimal differential rotation error | 0.01 rad |
| Minimal differential translation error | 0.01 m |

Table 3.

List of ICP parameters used for pose correction.

| Parameter Name | Mapper |
|---|---|
| Matcher | KD tree matcher |
| Matcher KNN size | 15 |
| Error minimizer | Point to plane |
| Max iterations | 25 |
| Octree grid filter | 0.01 |
| Maximal input point density | 400,000 |
| Maximal ICP map point density | 400,000 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).