Abstract

This paper describes mobile robot tactics for recovering a wheeled vehicle that has overturned. If such a vehicle tips over backward off its wheels and cannot recover by itself, especially in areas that are difficult for humans to enter and work in, overall work efficiency can decline significantly. This is not only because the vehicle can no longer perform its job, but also because it becomes an obstacle to other work. Herein, the authors propose a robot-based method for recovering such overturned vehicles and evaluate its effectiveness. The recovery robot uses a mounted manipulator and hand to recover the overturned vehicle, and it is also equipped with a camera and a personal computer (PC). The ARToolKit software package installed on the PC detects AR markers attached to the overturned vehicle, and the robot uses the information they provide to orient itself and perform the recovery operations. A statics analysis indicates the feasibility of the proposed method. To carry out these operations, the robot must also know the distance between its hand and the target grasping position on the vehicle. Therefore, a theoretical analysis of this distance is conducted, and a control system based on the results is implemented. The experimental results obtained in this study demonstrate the effectiveness of the proposed system.

1. Introduction

A number of developed countries are grappling with the twin problems of declining birthrates and aging populations, both of which reduce the total available workforce. In response, it has been suggested that the situation could be improved if robots were employed in dangerous areas and/or performed jobs that most people do not want to do because the work is mentally or physically distressing. Since many such jobs require robots to move independently, various locomotion mechanisms, such as wheeled and tracked vehicles [1], special wheels [2], and hybrids of different mechanisms [3,4], have been widely researched. However, even though a great deal of effort has been directed at improving the movement capabilities of mobile robots, no method investigated thus far is perfect: each has its merits and demerits, and every movement mechanism has its limitations.

Wheel-based mechanisms are currently the most widely researched mechanisms for mobile robots. However, their primary problem is a propensity to fall over or to suffer drive wheel slippage when navigating rough terrain or running over obstacles, or when colliding with people or other robots. Overturning is especially problematic for wheeled robotic vehicles because it can severely damage the robot body. Hence, when a robotic vehicle has overturned, it must either right itself or be recovered in some other way. Otherwise, not only will the vehicle be unable to perform its assigned duties, but it will also become an obstacle that prevents the free movement of humans or other robots. If such accidents occur in areas where access limitations make it difficult for people or other robots to enter and work, overall workspace efficiency will decline significantly. Thus, in areas where multiple robots work together, work efficiency would improve considerably if the robots were designed to assist each other when mishaps such as overturning occur.

Several studies have already investigated obstacle avoidance during falls and the recovery of overturned humanoid robots [5,6,7]. Other studies have examined ways to minimize damage when humanoid robots overturn [8], and a method of returning an overturned robot to a standing position has also been studied [9]. In addition, Tam and Kottege studied a fall avoidance and recovery method for a humanoid robot equipped with walking sticks [10].

In the present paper, the authors describe the design and construction of a robot system that can recover an overturned robotic vehicle and place it back onto its wheels. To accomplish this, the recovery robot must be able to raise the overturned vehicle while continuously controlling its inclination. However, when the mass of the overturned vehicle is greater than that of the assisting robot, such control is difficult to achieve. Related problems were examined by Murooka et al. [11] and Yoshida et al. [12], who studied pivoting-based transportation methods using humanoid robots. In a separate study, Asama et al. examined a transportation method in which multiple robots raise an object [13].

In previous studies, our research group investigated step-climbing methods in which a robotic vehicle [14] or a handcart [15] is inclined so that it can ascend a step.

The remainder of this paper is organized as follows. Section 2 describes our newly designed robot recovery system, and Section 3 describes the process of recovering a robotic vehicle that has fallen over backward. Section 4 presents a statics analysis of vehicle recovery, Section 5 presents a theoretical analysis of the distance between the robot hand and the overturned vehicle, and Section 6 describes the experimental results. Finally, Section 7 presents the conclusions.

2. Robot-Based Recovery System

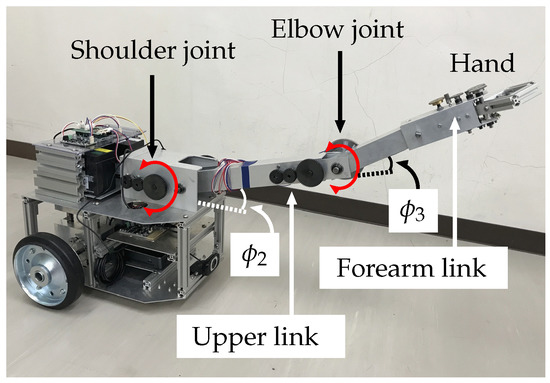

The wheeled recovery robot developed by our research group, referred to as the “robot” hereafter, is shown in Figure 1, and its specifications are listed in Table A1 in Appendix A. Figure 2 shows a model of the robot and an overturned robotic vehicle. The robot has a manipulator with two degrees of freedom (2DOF) and an attached hand with 1DOF, for a total of 3DOF; the manipulator is mounted at the center of the robot’s body. Encoders are mounted on each joint axle of the manipulator and on the hand mechanism. The upper arm link (from the shoulder joint to the elbow joint) is 330 mm long, and the forearm link (from the elbow joint to the hand) is 370 mm long (Table A1). The shoulder and elbow joint angles have upper limits of +30° and +90°, respectively.

Figure 1.

Recovery robot.

Figure 2.

Model of robot and overturned robotic vehicle.

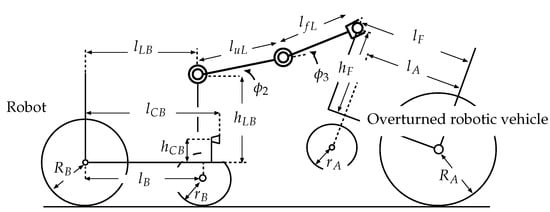

The robot hand has two fingers, and the angle between them can be varied from 0° to +50° (Figure 3a). The hand is equipped with an ultrasonic sensor (Digilent Pmod MAXSONAR) and an infrared sensor (Pololu VL6180X) for distance measurement, as shown in Figure 3b. A switch sensor (OMRON D2F-L) installed inside the hand mechanism detects contact when the fingers are closed.

Figure 3.

Robot hand mechanism: (a) fingers, (b) hand-mounted sensors.

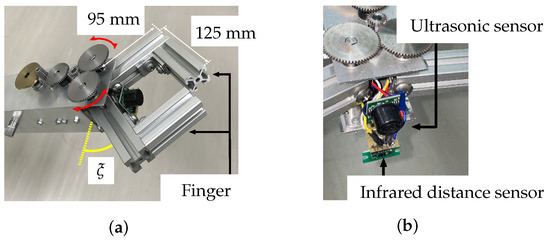

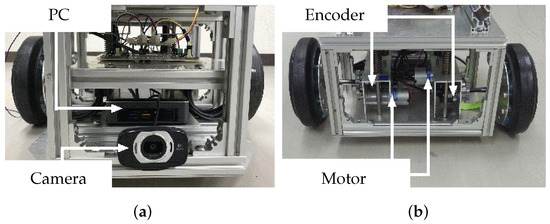

This robot has two pairs of left and right wheels mounted in tandem. The front pair are casters, and the rear pair are individually mounted driving wheels. The robot has motors and encoders on both driving wheel mechanisms that control its movements, as shown in Figure 4.

Figure 4.

Views of (a) front and (b) rear of robot.

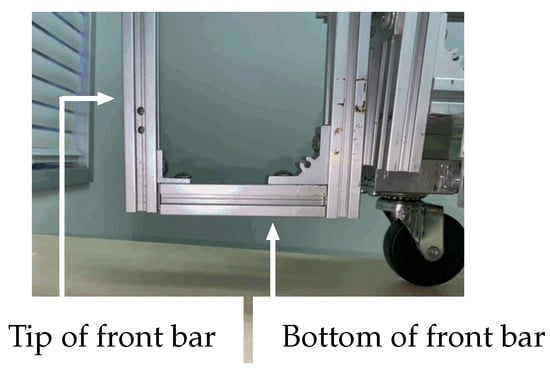

When the robot approaches the overturned robotic vehicle, the manipulator grasps the front bar of the vehicle (Figure 5) with its hand mechanism (Figure 2 and Figure 3a), and the robot then works to restore the vehicle to its normal working position. The overturned robotic vehicle, referred to as the “vehicle” hereafter, is shown in Figure 6, and its specifications are listed in Table A2 in Appendix A. Like the recovery robot, the vehicle has a wheeled mechanism consisting of front and rear wheel pairs: the front pair are casters, and the rear pair are individually driven wheels.

Figure 5.

Front bar of vehicle.

Figure 6.

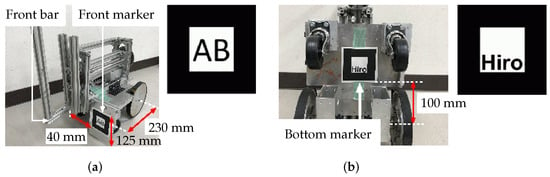

AR markers mounted on robotic vehicle: (a) front and (b) bottom.

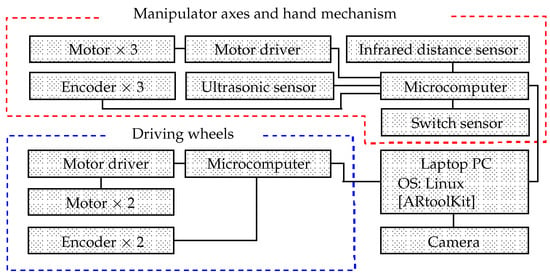

Figure 7 shows the system configuration. The robot PC has an Intel Core i3-7100U (2.40 GHz) central processing unit (CPU) and 8 GB of random-access memory (RAM), and it runs the Linux (Lubuntu 18.04 LTS) operating system (OS). A Logicool HD Webcam C615 (angle of view: 74°) is mounted on the front of the robot and connected to the PC, on which the ARToolKit software package is installed.

Figure 7.

System configuration.

ARToolKit is a software package that can estimate the pose (position and orientation) of an AR marker relative to a camera, and hence the distance between them [16,17,18]. ARToolKit users are free to make and use their own AR markers, provided that the markers follow its design rules. However, before ARToolKit can be used, the AR markers used in a system must be registered with it.
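As a rough illustration of how a marker pose yields the distance and direction the robot uses, the sketch below assumes that the pose is available as a 3×4 marker-to-camera transformation matrix [R | t], with the translation in the last column, x pointing to the camera's right, and z along its optical axis. The function name and these axis conventions are assumptions for illustration, not the authors' code.

```python
import math

def marker_range_and_bearing(trans):
    """Return (distance, bearing in degrees) of a marker seen by the camera.

    Assumes `trans` is a 3x4 marker-to-camera transform [R | t] given as nested
    lists, with t = (tx, ty, tz) in the last column, x pointing to the camera's
    right and z along the optical axis. The distance has the units of t (e.g. mm).
    """
    tx, ty, tz = trans[0][3], trans[1][3], trans[2][3]
    distance = math.sqrt(tx * tx + ty * ty + tz * tz)
    # Horizontal bearing: 0 when the marker is straight ahead,
    # positive when it lies to the right of the optical axis.
    bearing_deg = math.degrees(math.atan2(tx, tz))
    return distance, bearing_deg


# Example: a marker 800 mm straight ahead of the camera.
pose = [[1.0, 0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 800.0]]
print(marker_range_and_bearing(pose))  # (800.0, 0.0)
```

The bearing is the quantity driven to approximately zero by the pivot turns described in Section 3; the distance decides when the approach stops.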

In this study, AR markers are mounted on the front (Figure 6a, Size: 70 mm × 70 mm) and bottom (Figure 6b, Size: 80 mm × 80 mm) of the overturned vehicle. The robot is able to obtain posture and distance information from the overturned vehicle based on data from these AR markers.

The robot is equipped with microcomputers (Arduino Holding, Arduino Leonardo) connected to the PC via Universal Serial Bus (USB). Three motor driver circuits (Cytron Co., Ltd., MD10C) are connected to each microcomputer to drive the motors. Electric motors (driving wheels: Tsukasa Electric Co., Ltd., TG-85E-SU-114-KA; shoulder and elbow axles: Tsukasa Electric Co., Ltd., TG-85E-KU-113-KA; hand: HG16-240-AB-00) are connected to the motor driver circuits, and rotary encoders measure the rotation angles of these motors (driving wheels: Autonics, E30S4-100-3-N-5; shoulder axle, elbow axle, and hand mechanism: Taiwan Alpha Electronic, RE640F-40E3-20A-24P).

3. Overturned Vehicle Recovery Process

Initially, to recover a vehicle that has fallen off its wheels, the robot approaches the overturned vehicle and grasps it using the hand mechanism attached to its manipulator arm. In this paper, it is assumed that the camera on the robot is able to observe the AR markers on the overturned vehicle and that both the robot and vehicle are on a flat surface.

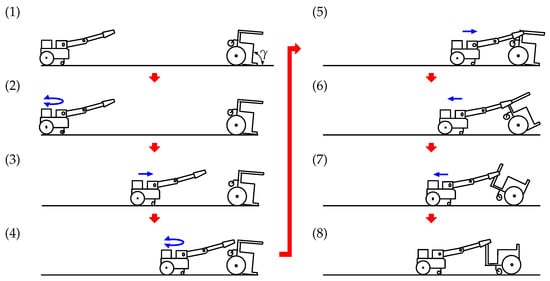

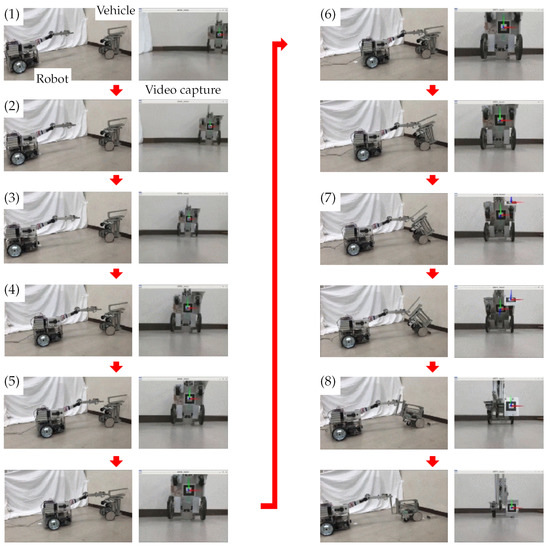

The recovery process is described in detail below, and each stage of the process is shown in Figure 8. The states shown in the figure correspond to (1)–(8) below:

Figure 8.

Overturned vehicle recovery process.

- (1)

- At this point, the vehicle has fallen over backward and lies at its initial inclination angle. The camera on the robot captures an image of the overturned vehicle, and ARToolKit identifies the AR marker mounted on the bottom of the vehicle body (Figure 6b) and calculates the distance between the robot and the vehicle.

- (2)

- The robot drives its left and right wheels to pivot about its center position until it is oriented toward the vehicle. Once the angle between the robot-mounted camera and the vehicle has been reduced to approximately zero, ARToolKit calculates the distance between the robot and the vehicle.

- (3)

- The robot moves toward the vehicle and stops after traveling half of the distance between it and the vehicle.

- (4)

- The robot again pivots on its left and right rear wheels until the angle between its front-mounted camera and the vehicle is approximately zero, after which ARToolKit again determines the distance between the robot and the vehicle. These processes (AR marker detection, pivot turning, and forward motion) are repeated until the distance between the robot and the vehicle has been reduced to 0.3 m.

- (5)

- The ultrasonic sensor on the hand then searches for and detects the front bar of the vehicle. Next, the infrared distance sensor on the hand detects the front bar, and the robot makes minor position adjustments until the hand can grasp it (Figure 3). Once the bar has been grasped, the hand stops.

- (6)

- The robot moves backward, causing the inclination angle of the vehicle to increase, and the connecting position between the robot and the vehicle begins moving up along the front bar. During this process, the robot uses ARToolKit and the AR marker mounted on the vehicle bottom (Figure 6b) to monitor the inclination of the vehicle.

- (7)

- The robot continues to move backward. When the inclination angle exceeds 120°, the robot observes the other AR marker, mounted on the front of the vehicle (Figure 6a).

- (8)

- The robot continues to move backward until the inclination angle reaches 180°, and then stops. At that point, the vehicle has been recovered from the overturned state.
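Steps (2)–(4) form a simple loop. The sketch below simulates it in the floor plane under idealized assumptions: the marker range and bearing are measured perfectly, and pivots and straight moves are executed exactly. As an added safeguard not stated in the paper, each forward move is clipped so that the robot never ends closer than the 0.3 m stopping distance. The function name `approach` and its parameters are hypothetical.

```python
import math

def approach(robot_xy, marker_xy, stop_distance=0.3, tol=1e-6, max_steps=20):
    """Idealized simulation of recovery steps (2)-(4) in the floor plane.

    Each iteration: pivot until the marker is straight ahead, measure the
    distance, and stop if within stop_distance; otherwise drive forward half
    the distance (clipped so the robot never ends closer than stop_distance).
    Returns the measured distances and the final pose (x, y, heading).
    """
    x, y = robot_xy
    heading = 0.0
    distances = []
    for _ in range(max_steps):
        dx, dy = marker_xy[0] - x, marker_xy[1] - y
        heading = math.atan2(dy, dx)          # pivot turn: relative angle -> 0
        d = math.hypot(dx, dy)                # range from the marker pose
        distances.append(d)
        if d <= stop_distance + tol:
            break
        step = min(d / 2.0, d - stop_distance)
        x += step * math.cos(heading)
        y += step * math.sin(heading)
    return distances, (x, y, heading)


dists, final_pose = approach((0.0, 0.0), (1.2, 0.4))
print([round(d, 3) for d in dists])  # [1.265, 0.632, 0.316, 0.3]
```

Without the clipping, the literal "travel half the distance" rule would overshoot the 0.3 m threshold on the last step. On a real robot, odometry errors are what make repeated marker detection necessary.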

4. Requirements and Statics Analysis during Recovery of Overturned Vehicle

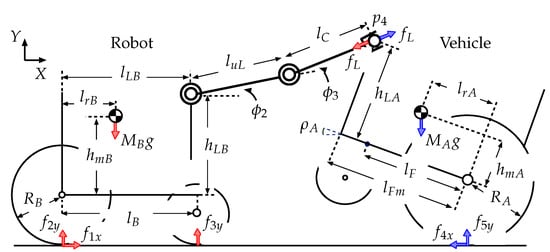

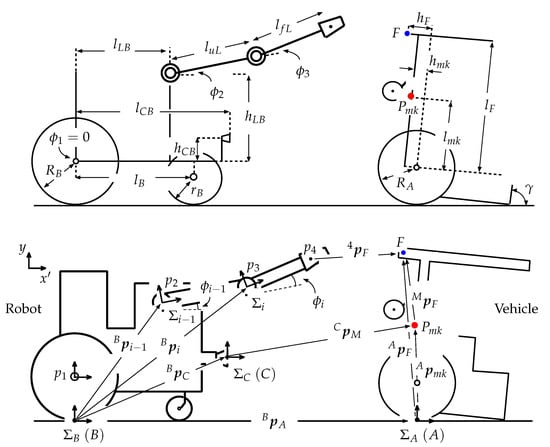

In this section, the authors perform a statics analysis to evaluate the feasibility of recovering an overturned vehicle. The manipulator joints are locked once the robot grasps the vehicle. Because the robot moves slowly and maintains its balance during the recovery process, the process can be analyzed statically. Figure 9 shows the robot recovering the overturned vehicle. The quantities in the figure are as follows:

- the driving force of the robot rear wheels, and the normal reactions acting on the rear and front wheels of the robot;
- the driving force of the vehicle rear wheels, and the normal reaction acting on them;
- the reaction force from the connected vehicle (or robot);
- for the robot: the height of its center of gravity above the rear wheel axle, the distance between its center of gravity and the rear wheel axle, its wheelbase, the radius of its rear wheels, and its mass;
- for the manipulator: the distance between the shoulder axle and the rear axle, the height of the shoulder axle above the rear axle, the length of the upper arm link (from the shoulder joint to the elbow joint), the length from the elbow joint to the connecting position between the robot and the vehicle, and the shoulder and elbow link angles;
- the connecting position between the robot and the vehicle, and the inclination angle of the vehicle;
- for the vehicle: the height of its center of gravity above the rear wheel axle, the distance between its center of gravity and the rear wheel axle, its wheelbase, the radius of its rear wheels, and its mass;
- the distance between the robot hand’s grasping position and the rear axle (from about 0.28 m up to its maximum value during the recovery process), the maximum value of this distance, and the height of the grasping position;
- g, the gravitational acceleration.

The dimensions of these parameters are listed in Table A1 and Table A2 in Appendix A.

Figure 9.

Model of robot and overturned vehicle during recovery process.

4.1. Static Requirements to Achieve Recovery of an Overturned Vehicle

The system needs to satisfy conditions Req. 1–5 below in order to recover the vehicle. Here, μ is the coefficient of friction between the driving wheels and the road surface.

- Req. 1:

- No part of the robot manipulator other than the robot hand contacts the overturned vehicle.

- Req. 2:

- The connecting position between the robot and the vehicle is within the range reachable by the robot hand.

- Req. 3:

- The driving wheels of the robot do not slip (the driving force of the robot rear wheels does not exceed μ times the normal reaction on them).

- Req. 4:

- The driving wheels of the robotic vehicle do not slip (the driving force of the vehicle rear wheels does not exceed μ times the normal reaction on them).

- Req. 5:

- The robot does not tip over backward as a result of the force exerted by the tilting vehicle (the normal reaction on the robot front wheels remains non-negative).

Req. 1 and Req. 2 are geometric requirements. The vehicle can be inclined from −20° to 100° without interference with the road surface or the robot hand (Req. 1). Considering the position of its center of gravity, the vehicle will not tip over for inclinations from 0° to 100°, and over this range the robot hand is able to grasp the vehicle (Req. 2). Thus, the conditions for achieving recovery, Req. 3 to Req. 5, are analyzed in the range from 0° to 100°.
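Req. 2 can be screened with a standard necessary condition for a planar two-link arm: a target point is reachable only if its distance from the shoulder lies between the difference and the sum of the two link lengths. The sketch below uses the link lengths in Table A1 (330 mm and 370 mm) as defaults. It ignores the joint-angle limits and the collision check of Req. 1, so passing it does not by itself guarantee a feasible grasp. The function name is hypothetical.

```python
import math

def within_reach(shoulder, target, l1=0.33, l2=0.37):
    """Necessary (not sufficient) reachability test for a planar 2-link arm.

    shoulder, target: (x, y) points in metres in the same vertical plane.
    l1, l2: upper-arm and forearm lengths; defaults from Table A1.
    Joint-angle limits and collisions are not checked.
    """
    r = math.hypot(target[0] - shoulder[0], target[1] - shoulder[1])
    return abs(l1 - l2) <= r <= l1 + l2


print(within_reach((0.0, 0.0), (0.5, 0.0)))   # True
print(within_reach((0.0, 0.0), (0.8, 0.0)))   # False: beyond 0.70 m
```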

4.2. Analysis of Statics to Achieve Vehicle Rescue

When moving statically, the force equilibrium for the robot along the X and Y axes is given by the following Equation (Figure 9)

where

From (1),

From (2),

The following equation is obtained from the equilibrium of the moments about the point of contact between the robot rear wheels and the ground.

where ,

From (5),

The force equilibrium for the robotic vehicle along the X and Y axes is given by the following equations.

From (9),

From (10),

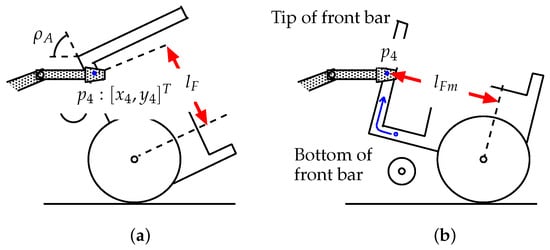

When the hand position is lower than the tip of the front bar (i.e., for inclinations from 49.74° to 100°; Figure 10a), the robot hand grasps the lower part of the front bar of the overturned vehicle (Figure 5). In this case, the moment equilibrium of the vehicle about the point of contact between its rear wheels and the ground is given below.

where the grasping position varies with the inclination of the vehicle. In this case, the reaction force exerted by the connected vehicle (or robot) is expressed by the following Equation (13).

where the inclination ranges from 49.74° to 100°.

Figure 10.

Connecting position and inclination of the vehicle: (a) situation where the hand is lower than the tip of the front bar; (b) situation where the hand is higher than the tip of the front bar.

The height of the hand is expressed by the following equation (Figure 9).

When the hand position becomes higher than the vehicle’s front bar (i.e., for inclinations from 0° to 49.74°; Figure 10b), the hand grasps the tip of the front bar. The moment equilibrium of the vehicle about the point of contact between its rear wheels and the ground is then given below.

For inclinations from 0° to 49.74°, the grasping position is constant, because the hand holds the tip of the front bar.

The following equation is obtained geometrically (Figure 9).

From (19),

In this case, the reaction force exerted by the connected vehicle (or robot) is expressed by the following Equation (17).

where

The relation required by Req. 3 can be evaluated from (8)–(18), (14), and (21), and the relation required by Req. 4 can be evaluated from (11)–(14) and (21).
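The force and moment balances used for the robot can be illustrated with a generic planar sketch. It uses a hypothetical sign convention, not the paper's: origin at the rear-wheel ground contact, x forward, y up, the vehicle's reaction force (fx, fy) applied at the hand position (x_h, y_h), the robot's center of gravity assumed to lie ahead of the rear contact, and front casters that transmit only a normal force. It solves for the rear driving force and the two normal reactions, then tests the no-slip condition (Req. 3) and the no-tip condition (Req. 5). The robot mass, center-of-gravity distance, and wheelbase come from Table A1; the hand load values are illustrative.

```python
def robot_statics(m, x_g, wheelbase, fx, fy, x_h, y_h, g=9.81):
    """Quasi-static force and moment balance of the robot in its plane of motion.

    Hypothetical convention: origin at the rear-wheel ground contact, x forward,
    y up. (fx, fy) is the force exerted on the hand by the vehicle at (x_h, y_h).
    x_g is the horizontal distance of the robot's centre of gravity ahead of
    the rear contact. Front casters transmit only a normal force.
    Returns (rear driving force F_r, rear normal N_r, front normal N_f).
    """
    weight = m * g
    # Moments about the rear contact (counterclockwise positive):
    #   -weight * x_g + N_f * wheelbase + (x_h * fy - y_h * fx) = 0
    n_f = (weight * x_g - x_h * fy + y_h * fx) / wheelbase
    # Vertical balance: N_r + N_f - weight + fy = 0
    n_r = weight - fy - n_f
    # Horizontal balance: F_r + fx = 0
    f_r = -fx
    return f_r, n_r, n_f


def requirements_ok(f_r, n_r, n_f, mu):
    """Req. 3 (no slip: |F_r| <= mu * N_r) and Req. 5 (no tip: N_f >= 0)."""
    return abs(f_r) <= mu * n_r, n_f >= 0.0


# Robot data from Table A1 (17.0 kg, 195 mm, 225 mm); hand load is illustrative.
forces = robot_statics(17.0, 0.195, 0.225, fx=0.0, fy=0.0, x_h=0.5, y_h=0.3)
print(requirements_ok(*forces, mu=0.5))  # (True, True)
```

The same |F| ≤ μN test, applied to the vehicle's rear driving force and normal reaction, expresses Req. 4.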

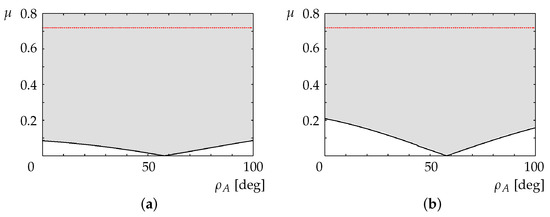

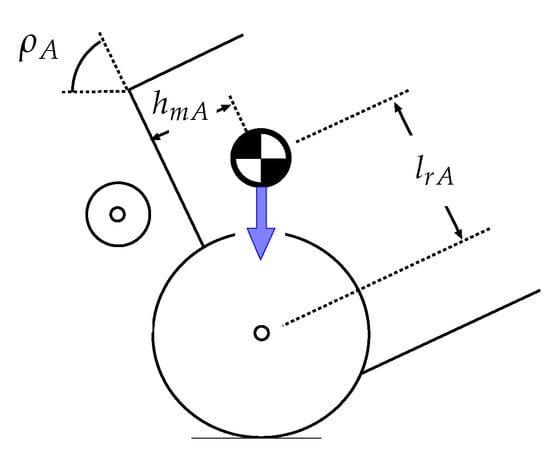

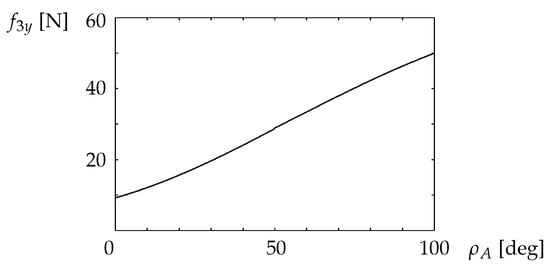

The shaded area in Figure 11a shows the relationship between the inclination of the overturned vehicle and the coefficient of friction between the robot rear wheels and the road surface required to avoid slippage of the robot driving wheels (Req. 3). Similarly, the shaded area in Figure 11b shows the relationship between the inclination of the vehicle and the coefficient of friction that ensures no slippage of the vehicle wheels (Req. 4). The lowest points in Figure 11a,b occur at the inclination at which the horizontal position of the vehicle’s center of gravity lies directly above the contact position of the rear wheels (Figure 12).

Figure 11.

Inclination of overturned vehicle and friction coefficient for avoiding slippage of (a) robot driving wheels and (b) vehicle driving wheels.

Figure 12.

Situation in which the horizontal position of the center of gravity of the vehicle is above the contact position of the rear wheels.

Experiments were conducted on the floor surface described in Section 6, whose coefficient of friction allowed the driving wheels of both the robot and the vehicle to generate sufficient driving force without slipping.

The relation required by Req. 5 is evaluated using (8), (14), and (21). Figure 13 shows the relationship between the inclination of the vehicle and the normal force on the robot front wheels. Over the analyzed range of inclinations, the front wheels of the robot are not lifted, so the robot does not overturn due to the force exerted by the vehicle.

Figure 13.

Relationship between the coefficient of friction and the inclination of the overturned vehicle that prevents the robot from tipping over backward.

In theory, therefore, if the geometric requirements Req. 1 and Req. 2 are satisfied, the robot can successfully perform the recovery operation, because Req. 3 to Req. 5 are then also satisfied.

5. Theoretical Analysis of the Distance between the Robot Hand and the Overturned Vehicle

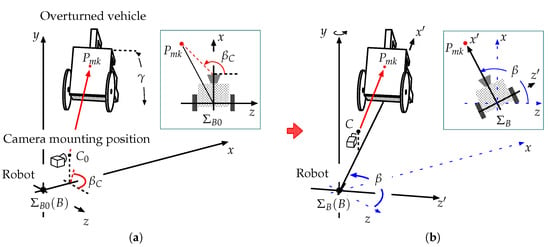

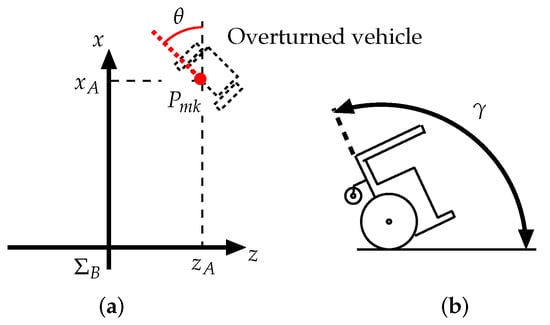

Figure 14a shows the initial state of the robot and the overturned vehicle. The origin of the robot base coordinate system is point B, the midpoint between the floor contact points of the left and right rear wheels. In this figure, the x axis is the roll axis, pointing in the robot’s initial direction; the y axis is the yaw axis; and the z axis is the pitch axis. The camera mounting position on the robot is expressed in this base coordinate system.

Figure 14.

Model showing robot and overturned vehicle: (a) relative angle and (b) robot oriented towards vehicle.

One angle is formed by the x axis and the line from point B to point M (the AR marker position) in the horizontal x–z plane. Similarly, the relative angle is formed by the x axis and the line from the camera position to M in the same plane.

When the robot turns toward the vehicle, the base coordinate system and its x and z axes rotate with it (Figure 14b), and the camera position on the robot moves to point C.

Figure 15 shows the situation after a pivot turn, when the robot is oriented toward the vehicle. The local coordinate system of the robot-mounted camera is always parallel to the robot base coordinate system, and the position vector of the camera is expressed in the base coordinate system.
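Because the camera is offset from the pivot point B, the angle seen from the camera differs from the angle through which the robot must turn about B. The sketch below computes both from a camera measurement. It assumes that the camera lies on the robot's centerline a fixed distance ahead of B (300 mm, taken from the camera position in Table A1 and assumed to be measured from B) and ignores the camera height. Once the robot has turned by the angle seen from B, the marker lies on the x axis, so the angle seen from the camera is also zero; this is why pivoting until the camera angle is approximately zero orients the robot toward the vehicle. Function and parameter names are hypothetical.

```python
import math

def pivot_angles(x_c, z_c, camera_offset=0.30):
    """Return (angle seen from B, angle seen from the camera) in degrees.

    x_c: lateral offset of marker M from the optical axis (m, right positive).
    z_c: distance of M along the optical axis (m).
    camera_offset: forward distance of the camera from B along the centerline.
    """
    from_base = math.degrees(math.atan2(x_c, camera_offset + z_c))
    from_camera = math.degrees(math.atan2(x_c, z_c))
    return from_base, from_camera


print(pivot_angles(0.5, 0.2))  # angle from B is 45 deg; camera sees about 68.2 deg
```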

Figure 15.

Model showing overturned vehicle recovery.

The camera position is given by (Figure 2):

where the angle of the camera’s local coordinate system appears; in this system, both the inclination of the robot and this angle are zero. The position vectors of the manipulator joints are expressed in the base coordinate system, and the robot hand position (Figure 2) is given by:

where .
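For reference, planar forward kinematics of a two-link arm gives the hand position from the shoulder position, the link lengths, and the joint angles. The sketch below assumes that both joint angles are measured counterclockwise in the vertical x–y plane (the shoulder angle from the x axis, the elbow angle relative to the upper arm), and it places the shoulder 150 mm ahead of and 190 mm above the rear axle, whose height equals the 90 mm rear-wheel radius (Table A1). These conventions are assumptions and may differ from the paper's equation.

```python
import math

# Hypothetical base-frame shoulder position (x forward, y up, origin B on the floor):
# 150 mm ahead of the rear axle and 190 mm above it, the axle being 90 mm above B.
SHOULDER = (0.150, 0.090 + 0.190)

def hand_position(theta1_deg, theta2_deg, shoulder=SHOULDER, l1=0.33, l2=0.37):
    """Planar forward kinematics of the 2DOF arm; returns the hand (x, y) in metres."""
    t1 = math.radians(theta1_deg)
    t12 = t1 + math.radians(theta2_deg)   # forearm angle relative to the x axis
    x = shoulder[0] + l1 * math.cos(t1) + l2 * math.cos(t12)
    y = shoulder[1] + l1 * math.sin(t1) + l2 * math.sin(t12)
    return x, y


print(hand_position(0.0, 90.0))  # approximately (0.48, 0.65)
```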

As shown in Figure 15, the overturned vehicle is at an inclination angle, and its local coordinate system has its origin at point A. Here, point A is the midpoint between the floor contact points of the left and right rear wheels, point M is the position of the AR marker mounted on the vehicle bottom, and point F is the position where the robot grasps the vehicle. The position vector of the AR marker in the vehicle’s local coordinate system is given by:

The position vector of point F in the vehicle’s local coordinate system is expressed as:

The position vector of the AR marker in the robot base coordinate system is given by

The marker position relative to the camera is obtained using ARToolKit.

When the robot is oriented toward the vehicle, the relative angle is zero. In this case,

Using ARToolKit, the robot performs a pivot turn until the relative angle (Figure 14a) becomes approximately zero, so that it is oriented toward the vehicle. In this case, the position vector of point A in the base coordinate system is expressed as (Figure 15):

The vector from the robot hand to the grasping position F is:

When the robot has identified the grasping position on the vehicle, it can set the hand at the same height as point F. In this case, the vertical component of the vector in Equation (32) is zero, and the distance between the hand and point F is expressed as

The robot then moves to reduce this distance to zero using its sensors and ARToolKit, at which point it can grasp the overturned vehicle.
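The chain of vectors in this section can be sketched in the vertical plane as follows: recover point A from the measured marker position M and the marker's known offset in the vehicle frame, locate the grasping point F from A, and subtract the hand position. The sketch assumes that the marker position has already been expressed in the robot base frame (since the camera frame is parallel to it, this is the camera position plus the ARToolKit measurement), that the vehicle frame at A is rotated from the base frame by the vehicle's inclination angle, and that points are (horizontal, vertical) pairs. Names and the rotation convention are hypothetical.

```python
import math

def rotate(v, angle_rad):
    """Rotate a 2-D vector counterclockwise by angle_rad."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

def hand_to_grasp(marker_b, marker_v, grasp_v, incl_deg, hand_b):
    """Vector and distance from the hand to grasp point F in the robot base frame.

    marker_b: marker M in the base frame (camera position + ARToolKit reading).
    marker_v, grasp_v: M and F in the vehicle frame with origin A.
    incl_deg: vehicle inclination, used as the frame rotation (assumed convention).
    hand_b: hand position in the base frame.
    """
    phi = math.radians(incl_deg)
    m_rot = rotate(marker_v, phi)
    a = (marker_b[0] - m_rot[0], marker_b[1] - m_rot[1])   # point A
    f_rot = rotate(grasp_v, phi)
    f = (a[0] + f_rot[0], a[1] + f_rot[1])                 # point F
    d = (f[0] - hand_b[0], f[1] - hand_b[1])
    return d, math.hypot(d[0], d[1])


d, dist = hand_to_grasp((1.0, 0.2), (0.1, 0.2), (0.3, 0.1), 0.0, (1.0, 0.1))
print(round(dist, 3))  # 0.2
```

When the hand is already at the height of F, the vertical component of `d` is zero and the distance reduces to the horizontal gap, which the robot then closes.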

6. Experiment

6.1. Conditions under Which the Robot Can Rescue the Vehicle

An experiment was carried out in an environment with a surface friction coefficient of . The robot and overturned vehicle were placed on the same floor within the National Institute of Technology, Toyama College. The maximum movement speed of the robot was set at 3.6 km/h.

The authors began by conducting experiments to confirm that the AR markers could be detected by the robot-mounted camera. These showed that ARToolKit sometimes produced incorrect detections when the camera captured furniture or posters with lattice-like patterns, and in those situations the robot could not be properly controlled. Accordingly, the experiments were conducted in front of a white wall, and the area was further partitioned from the rest of the room by a piece of white cloth suspended between the robot and any potentially distracting objects.

The authors then determined that the camera could detect the AR markers mounted on the overturned vehicle at distances of up to 1.5 m, and that, within this distance, the camera could capture AR marker positions between −0.4 m and 0.4 m, as shown in Figure 16. Furthermore, the camera could detect the AR marker when the relative angle between the marker and the camera was between −35° and 35°, and the body angle of the overturned vehicle could be detected between 78° and 136°.
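The detection limits reported above can be collected into a simple predicate, for example to decide whether the robot should reposition before relying on ARToolKit. The sketch treats all bounds as inclusive and assumes that the ±0.4 m bound applies to the lateral offset of the marker; both are assumptions, and the function name is hypothetical.

```python
def marker_detectable(distance_m, lateral_m, rel_angle_deg, body_angle_deg):
    """Empirical detection envelope from Section 6.1 (inclusive bounds assumed)."""
    return (distance_m <= 1.5
            and -0.4 <= lateral_m <= 0.4
            and -35.0 <= rel_angle_deg <= 35.0
            and 78.0 <= body_angle_deg <= 136.0)


print(marker_detectable(1.0, 0.1, 10.0, 100.0))  # True
print(marker_detectable(1.0, 0.1, 40.0, 100.0))  # False: relative angle too large
```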

Figure 16.

Position and angle of overturned vehicle: (a) position and (b) angle.

The recovery experiments were started from the overturned position shown in Figure 16, which also indicates the initial position of the vehicle, the relative angle between the AR marker and the camera, and the initial inclination angle of the vehicle. The robot detected the AR marker mounted on the vehicle bottom (Figure 6b) from the initial inclination up to 120°, and when the inclination angle of the vehicle exceeded 120°, it detected the AR marker mounted on the vehicle front (Figure 6a).

We assumed that the robot had already determined the correct grasping position on the vehicle for successful recovery. We also assumed that the driving wheels of the robot and the vehicle would not slip, and that the robot could generate sufficient force to pull and support the overturned vehicle during the recovery process.
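The marker switching used during the pulling phase, steps (6)–(8), reduces to a threshold on the vehicle's inclination angle. A minimal sketch follows, assuming that the bottom marker is used up to and including 120° and that reaching 180° ends the recovery; the function name is hypothetical.

```python
from typing import Optional

def active_marker(inclination_deg: float, switch_deg: float = 120.0,
                  done_deg: float = 180.0) -> Optional[str]:
    """Return the marker tracked while pulling, or None once recovery is complete."""
    if inclination_deg >= done_deg:
        return None                     # step (8): the robot stops
    return "bottom" if inclination_deg <= switch_deg else "front"


for angle in (100.0, 120.0, 150.0, 180.0):
    print(angle, active_marker(angle))
```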

6.2. Experimental Test of Recovery of Overturned Wheeled Vehicle

The states shown in Figure 17 correspond to (1)–(8) described earlier. The left panels show the movements of the robot and the vehicle, and the right panels show video images captured by the camera mounted on the robot. The arrows on the right side indicate that ARToolKit was able to detect the AR markers.

Figure 17.

Experiment. The states shown in Figure 17 correspond to (1)–(8) described earlier.

The camera on the robot captured video images with the vehicle at the center of the screen. Based on its detection of the AR marker mounted on the vehicle bottom, the robot repeated its pivot turn to correct its orientation relative to the vehicle. It then traveled half of the distance to the vehicle, stopped, and repeated the AR marker detection, pivot turn, and forward motion processes until the robot hand was able to grasp the front bar of the vehicle.

Once the front bar was grasped, the robot began moving backward, exerting a pulling force on the overturned vehicle and causing its inclination to increase. Up to that point, the robot’s actions were based on its detection of the AR marker mounted on the vehicle bottom. However, once the inclination exceeded 120°, the robot detected the vehicle’s front-mounted AR marker instead. The robot continued moving backward until the inclination reached 180°, at which point it stopped. The vehicle was then back on its wheels, and the recovery process was complete.

As described above, the environment in which the robot and vehicle operated during the experiment had to be modified to make it suitable for ARToolKit, which required removing or hiding objects with lattice-like patterns. Once this was done, the robot worked stably and successfully recovered the overturned vehicle.

7. Conclusions and Future Work

This paper described potential recovery tactics to use when employing a mobile robot to recover a vehicle that had fallen over backward and showed how such a robot system could be constructed. A theoretical analysis clarified the relationship between the vehicle’s angle and the coefficient of friction required to recover an overturned vehicle. Determining the distance between the robot and the overturned vehicle made it possible for the robot to grasp the vehicle and return it to an upright position.

Because ARToolKit sometimes reacted incorrectly when the robot’s camera captured furniture or posters with lattice-like patterns, such items were removed or covered, and the experiments in this study were conducted in an environment that allowed ARToolKit to correctly perceive the overturned vehicle. Despite this restriction, the robot worked stably and recovered the overturned vehicle, confirming the effectiveness of the proposed method. The authors therefore expect that further development of this system could lead to a new technology that allows vehicles to be recovered easily when an accident occurs, which would be particularly useful when vehicles become incapacitated in areas that are inaccessible to humans. In the future, the authors will conduct experiments in various other environments and validate sensor outputs during the recovery process in order to improve the robot’s perception capabilities.

The authors will also construct a judgment system for determining the most suitable grasping positions when recovering an overturned vehicle and will study other recovery methods to be used when a vehicle overturns or becomes stuck due to driving wheel slippage when traversing rough terrain. In addition, the authors will construct a manipulator with a hand mechanism that is suitable for rescuing an overturned vehicle. The authors will study appropriate rescue methods for various vehicle tipping conditions, such as rolling.

Author Contributions

Conceptualization, H.I.; methodology, H.I. and S.A.; software, S.A., M.A. and K.S.; validation, S.A., M.A., S.T., T.K. and K.F.; formal analysis, H.I.; investigation, H.I.; resources, H.I.; writing—original draft preparation, H.I.; writing—review and editing, H.I.; visualization, H.I. and S.A.; supervision, H.I.; project administration, H.I.; funding acquisition, H.I. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Artificial Intelligence Research Promotion Foundation (28AI 043-1), Japan.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The funder had no role in the design of the study.

Appendix A. Robot and Vehicle Specifications

Table A1.

Robot specifications.


| Item | Value |
|---|---|
| Overall length | 490 mm |
| Overall height | 250 mm |
| Body width | 430 mm |
| Radius of front wheels | 12.5 mm |
| Radius of rear wheels | 90 mm |
| Wheelbase | 225 mm |
| Distance between the shoulder axle and the rear axle | 150 mm |
| Height of the shoulder axle above the rear axle | 190 mm |
| Camera position | 300 mm |
| Camera height | −28 mm |
| Length of upper arm link (between the shoulder joint and elbow joint) | 330 mm |
| Length of forearm link (between the elbow joint and hand grasping position) | 370 mm |
| Height of center of gravity (above the rear axle) | 122 mm |
| Distance of center of gravity (from the rear axle) | 195 mm |
| Mass | 17.0 kg |
| Driving mechanism | Individually driven wheels |

Table A2.

Overturned vehicle specifications.


| Item | Value |
|---|---|
| Overall length | 450 mm |
| Overall height | 355 mm |
| Body width | 250 mm |
| Radius of front wheels | 35 mm |
| Radius of rear wheels | 80 mm |
| Wheelbase | 190 mm |
| Position of center of gravity (from the rear axle) | 78 mm |
| Height of center of gravity (above the rear axle) | 49 mm |
| Target position for connection from the rear axle | 285–410 mm |
| Position of front bar (maximum value of the connection position) | 410 mm |
| Target height for connection from the rear axle | 20 mm |
| Mass | 6.5 kg |
| Driving mechanism | Individually driven wheels |

References

1. Okada, Y.; Nagatani, K.; Yoshida, K. Semi-autonomous operation of tracked vehicles on rough terrain using autonomous control of active flippers. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 2815–2820.

2. Taguchi, K. Enhanced wheel system for step climbing. Adv. Robot. 1995, 9, 137–147.

3. Nakajima, S.; Nakano, E.; Takahashi, T. Free gait algorithm with two returning legs of a leg-wheel robot. J. Robot. Mechatronics 2008, 20, 661–668.

4. Kumar, V.; Krovi, V. Optimal Traction Control in a Wheelchair with Legs and Wheels. In Proceedings of the 4th National Applied Mechanisms and Robotics Conference, Cincinnati, OH, USA, 10–13 December 1995.

5. Yun, S.; Goswami, A.; Sakagami, Y. Safe fall: Humanoid robot fall direction change through intelligent stepping and inertia shaping. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 781–787.

6. Goswami, A.; Yun, S.; Nagarajan, U. Direction-changing fall control of humanoid robots: Theory and experiments. Auton. Robot. 2014, 36, 199–223.

7. Nagarajan, U.; Goswami, A. Generalized direction changing fall control of humanoid robots among multiple objects. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 3316–3322.

8. Fujiwara, K.; Kanehiro, F.; Kajita, S.; Kaneko, K.; Yokoi, K.; Hirukawa, H. UKEMI: Falling motion control to minimize damage to biped humanoid robot. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 30 September–4 October 2002; pp. 2521–2526.

9. Fujiwara, K.; Kanehiro, F.; Saito, H.; Kajita, S.; Harada, K.; Hirukawa, H. Falling motion control of a humanoid robot trained by virtual supplementary tests. In Proceedings of the IEEE International Conference on Robotics and Automation, New Orleans, LA, USA, 26 April–1 May 2004; pp. 1077–1082.

10. Tam, B.; Kottege, N. Fall avoidance and recovery for bipedal robots using walking sticks. In Proceedings of the Australasian Conference on Robotics and Automation 2016, Brisbane, Australia, 5–7 December 2016; pp. 225–233.

11. Murooka, M.; Noda, S.; Nozawa, S.; Kakiuchi, Y.; Okada, K.; Inaba, M. Achievement of Pivoting Large and Heavy Objects by Life-sized Humanoid Robot based on Online Estimation Control Method of Object State and Manipulation Force. J. Robot. Soc. Jpn. 2014, 32, 595–602. (In Japanese)

12. Yoshida, E.; Poirier, M.; Laumond, J.P.; Kanoun, O.; Lamiraux, F.; Alami, R.; Yokoi, K. Pivoting based manipulation by a humanoid robot. Auton. Robot. 2010, 28, 77–88.

13. Yamashita, A.; Arai, T.; Ota, J.; Asama, H. Motion planning of multiple mobile robots for cooperative manipulation and transportation. IEEE Trans. Robot. Autom. 2003, 19, 223–237.

14. Ikeda, H.; Toyama, T.; Maki, D.; Sato, K.; Nakano, E. Cooperative step-climbing strategy using an autonomous wheelchair and a care robot. Robot. Auton. Syst. 2021, 135, 103670.

15. Ikeda, H.; Kawabe, T.; Wada, R.; Sato, K. Step-Climbing Tactics Using a Mobile Robot Pushing a Hand Cart. Appl. Sci. 2018, 8, 2114.

16. Kato, H.; Ishida, M.; Billinghurst, M. Marker Tracking and HMD Calibration for a Video-based Augmented Reality Conferencing System. In Proceedings of the 2nd IEEE and ACM International Workshop on Augmented Reality (IWAR '99), San Francisco, CA, USA, 20–21 October 1999; pp. 85–94.

17. Kato, H.; Tachibana, K.; Tanabe, M.; Nakajima, T.; Fukuda, Y. MagicCup: A tangible interface for virtual objects manipulation in table-top augmented reality. In Proceedings of the 2003 IEEE International Augmented Reality Toolkit Workshop, Tokyo, Japan, 7 October 2003; pp. 75–76.

18. Piekarski, W.; Thomas, B.H. Using ARToolKit for 3D hand position tracking in mobile outdoor environments. In Proceedings of the First IEEE International Workshop on Augmented Reality Toolkit, Darmstadt, Germany, 29 September 2002.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).