1. Introduction

This work is an extended version of [1], where this theoretical foundation was detailed and validated in simulation. Autonomous navigation of complex and dynamic indoor environments has remained the subject of extensive research over the last few years. When conditions deteriorate, common sensors such as laser scanners (LiDAR) and cameras may fail. Concretely, the light on which optical sensors rely can be distorted by smoke, dust, airborne particles, mist, dirt, or differences in lighting that depend on the sun and cloud conditions. When these types of sensors are used as input for autonomous navigation, object recognition, localization, or mapping systems under such conditions, they can become a weak point that degrades the performance of such systems [2]. In contrast, other types of sensors, such as radar or sonar, with their electromagnetic and acoustic sensor modalities, respectively, continue to achieve precise and accurate results, even in rough conditions.

Therefore, in-air acoustic sensing with sonar microphone arrays has become an active research topic in the last decade [3,4,5,6,7]. This sensing modality can perform well in indoor industrial environments with rough conditions, generate spatial information from that environment, and be used for autonomous activities such as navigation or simultaneous localisation and mapping (SLAM). This type of sonar has a wide field of view (FOV) thanks to the microphone array structure. It can cope with simultaneously arriving echoes and transform the recorded microphone signals containing the reflections into full 3D spatial images or point-clouds of the environment. Such biologically inspired [8] sensors have been developed by Jan Steckel et al. [6,9] and were implemented for several use-cases, such as autonomous local navigation [10] and SLAM [11,12].

Not only is the sensor modality inspired by nature, but so is the signal processing. Research on insects that use optical flow cues [13,14] and bats that use acoustic flow cues [15,16,17,18] shows that they use these cues to extract the motion of the agent through the environment, also called the ego-motion, and the spatial 3D structure of said environment through that motion. Jan Steckel et al. [10] used acoustic flow cues, found in the images of a sonar sensor, in a 2D navigation controller for a mobile platform with motion behaviors such as obstacle avoidance, collision avoidance, and corridor following in indoor environments. However, its theoretical foundation had the constraint that only a single sensor could be used, which had to be placed at the center of rotation of the mobile platform. From a practical point of view, this is not ideal, as mobile platforms in real-world industrial applications often cannot satisfy the constraint of mounting a sensor in that exact position while keeping its FOV unobstructed.

Another critical limitation is the limited spatial resolution that a single sonar sensor can yield relative to the entire environment surrounding it. The main reasons for this limitation are the leakage of the point-spread function (PSF), which may cause reflections to go undetected, and the fact that the sensors have an FOV of 180° in their frontal hemisphere, which leaves much of the area around the sides of a mobile platform undetected. The most straightforward solution to these problems is to use several sonar sensors (multi-sonar) simultaneously and obtain a spatial resolution that is greater than what the sampling resolution of a single sonar can yield [19].

However, digital signal processing of the in-air microphone array data into a 3D spatial image or point-cloud of the environment is a computationally expensive operation. To deal with this limitation and to enable a system such as a mobile platform to use multiple in-air 3D sonar imaging sensors, recent work was published that allows for the synchronization and simultaneous real-time signal processing of a set of sonar sensors on a system with a GPU [20]. That software framework generates a data stream of all the synchronized acoustic (3D) spatial images of the connected sonar sensors. Compared to using only a single sonar sensor, this both enables novel applications and improves existing ones. Specifically on mobile platforms with limited computational resources, this provides new opportunities, as will become clear in this paper.

In this work, we exploit this gain in spatial resolution and FOV by using a multi-sonar setup in real time. The theoretical foundation created in [10] is expanded to remove the constraint of using a single sonar sensor and to allow a modular design of the mobile platform and placement of sensors. The navigation controller is expanded to let the operator create adaptive zoning around the mobile platform where certain motion behaviors should be executed. We expand upon this with a real-time implementation of the navigation controller and additional experiments in real-world scenarios with sonar sensors. In these experiments, a real mobile platform navigates an office environment by executing the primitive motion behaviors of the controller.

We describe the 3D sonar sensor in Section 2. Subsequently, we detail the acoustic flow paradigm in Section 3 and its implementation in the 2D navigation controller in Section 4. Afterwards, we validate and discuss the layered controller with simulation and real-world experiments in Section 5. Finally, we conclude in Section 6 and briefly look at the future of this research.

2. Real-Time 3D Imaging Sonar

First, we provide a synopsis of the 3D imaging sonar sensor used, the embedded real-time imaging sonar (eRTIS) [9]. A much more detailed description can be found in [6,12]. The sensor, drawn in Figure 1a and photographed in Figure 1b, contains a single emitter and uses a known, irregularly positioned array of 32 digital micro-electromechanical systems (MEMS) microphones. A broadband FM-sweep is sent out by the ultrasonic emitter. When this FM-sweep is reflected by objects in the environment, the resulting acoustic reflections are captured by the microphone array. Using these specific chirps in the FM-sweep, we can increase the spatial resolution [21] of a single sonar sensor. The spatial images are then created by digital signal processing of the microphone signals recorded by the sensors. Such a spatial image, which we also call an energyscape, uses a spherical coordinate system with ( ). This coordinate system is shown in Figure 1a, while an example of an energyscape is shown in Figure 1c. A brief overview of the processing pipeline is shown in Figure 2. The current eRTIS sensors have an FOV of 180° both vertically and horizontally.

When multiple eRTIS sensors are used simultaneously with overlapping FOVs, their emitters are synchronized up to 400 ns [12] to avoid the unwanted artifacts that arise from picking up the FM-sweep of another eRTIS sensor that was sent out at another position in space and time. Furthermore, artifacts caused by multi-path reflections of other sensors do not cause issues in the results of this paper, as these types of reflections only cause an inaccuracy in the resulting range and not in the angle. Because the sensor fusion model detailed further in this paper lets only the closest reflection for each angle actuate a motion behavior, such reflections do not cause any unwanted behavior.

Recently, a software framework was created that can handle multiple eRTIS sensors and perform the signal processing in real time using GPU-acceleration [20]. Furthermore, the latest generation of eRTIS sensors can incorporate an NVIDIA Jetson module embedded into the casing such that the signal processing can be performed on board. A mobile platform equipped with multiple eRTIS sensors with embedded NVIDIA Jetson TX2 NX modules is able to process their sonar images in real time. A measurement frequency of 10 Hz is used in the experiments of this paper.

3. Acoustic Flow Model

Energyscapes of an eRTIS device contain the coordinates ( ) of a reflection in the observed environment. The theoretical acoustic flow model described here consists of a differential equation that relates the spatial 3D location of the object represented by this reflection to the rotational and linear ego-motion of the mobile platform. In other words, if the mobile platform moves, the model describes how a static reflection will move within the energyscape.

This model is based on the work in [10]. However, that model only works for a single sonar sensor that faces forward. Therefore, the model is adjusted in this paper to allow multi-sonar, where several sensors can be placed on the mobile platform with the only constraint being that they must all be positioned on the same horizontal plane. Figure 3 shows a schematic of a multi-sonar configuration. The model described here is the same as in the original paper [1], but is detailed here once more to provide all necessary context to the reader.

3.1. 2D-Velocity Field

A vector

is used for defining the location of a reflector in the sonar sensor reference frame, as shown in

Figure 1a. The same spherical coordinate system is used within the energyscapes of the eRTIS devices. This spherical coordinate system is related to the standard right-handed Cartesian coordinates by

Expressed in the reference frame of the sensor, the time derivatives of the stationary reflector are given by

with symbols

and

representing the sensor’s linear and rotational velocity vectors, respectively, in the reference frame of the sensor.
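For reference, the time derivative of a stationary point expressed in a frame that translates and rotates is a standard rigid-body result. The symbols $\vec{r}$, $\vec{v}$ and $\vec{\omega}$ below are assumed names for the reflector position and for the sensor's linear and rotational velocity vectors; the notation of the original equation may differ.

```latex
\frac{d\vec{r}}{dt} = -\vec{v} - \vec{\omega} \times \vec{r}
```

Both terms carry a minus sign because the reflector is static in the world: it appears to move opposite to the motion of the sensor.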

The x-axis of the mobile platform’s frame, as shown in

Figure 3, is defined as the direction of the linear velocity vector. Furthermore, the mobile platform can only perform rotations about its z-axis. With these constraints, the earlier-defined velocity vectors of the sensor are

with

V and

representing the magnitudes of the linear and angular velocities of the mobile platform, respectively, which are constant during a single measurement. To describe the location of a sensor from the reference frame of the mobile platform, the parameters (

) are used.

describes the rotation around the z-axis of the mobile-platform relative to the x-axis, and

defines the rotation of the sensor around its own center point. Finally, l describes the distance between the sensor and the center of rotation of the mobile platform. These parameters are also shown in Figure 3. Taking the derivative of Equation (1), we obtain

Afterwards, Equations (1), (3) and (5) are substituted into Equation (2). Furthermore, as all sensors lie on the same horizontal plane and only 2D planar navigation is performed, the reflectors are restricted to lie in the horizontal plane, i.e.,

. With this simplification and applying trigonometric sum and difference identities, the 2D-velocity field can be expressed as
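The same planar field can also be evaluated numerically from rigid-body kinematics. The following is a minimal sketch and not the paper's closed-form expression: the function name, the parameter names (`l`, `alpha`, `beta`), and in particular the sign and angle conventions listed in the docstring are assumptions made for illustration.

```python
import math


def acoustic_flow_2d(r, theta, V, omega, l=0.0, alpha=0.0, beta=0.0):
    """Return (dr/dt, dtheta/dt) of a static reflector seen by one sensor.

    Assumed conventions (not taken from the paper):
      - platform frame: x forward, y left, omega about z, counter-clockwise positive
      - the sensor sits at distance l from the center of rotation, at angle alpha
      - the sensor heading in the platform frame is alpha + beta
      - theta is the azimuth in the sensor frame, positive to the left
    """
    # Velocity of the sensor origin in the platform frame: v + omega x p.
    vx = V - omega * l * math.sin(alpha)
    vy = omega * l * math.cos(alpha)

    # Express that velocity in the sensor frame (rotate by -heading).
    psi = alpha + beta
    vsx = math.cos(psi) * vx + math.sin(psi) * vy
    vsy = -math.sin(psi) * vx + math.cos(psi) * vy

    # Static reflector: dq/dt = -v_s - omega x q, projected onto the
    # radial and tangential directions of the reflector.
    r_dot = -(vsx * math.cos(theta) + vsy * math.sin(theta))
    theta_dot = (vsx * math.sin(theta) - vsy * math.cos(theta)) / r - omega
    return r_dot, theta_dot
```

For a centered, forward-facing sensor driving straight at 0.5 m/s, a reflector straight ahead closes in at 0.5 m/s, while a reflector abeam at 2 m keeps its range and sweeps backwards in azimuth.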

3.2. Only Linear Motion

If the mobile platform is limited to purely linear motion, i.e., its rotational velocity is 0 rad/s, the vehicle can only move forward or backwards. In this case, Equation (6) reduces to the time derivatives of the reflector coordinates, which form a 2D acoustic flow model for linear motion:

The solution to these differential equations has to comply with

with

describing the angle between the eRTIS sensor and the first sighting of the reflector, which must not be equal to 0. Subsequently, integrating both sides of Equation (8), we can find a constant C, defined as

This defines the solutions of the differential Equation (7) for chosen starting conditions

. These are the different flow-lines for all chosen ranges, as shown in Figure 4b.
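As a worked special case, assume a sensor whose axis is aligned with the driving direction, with $r$ the range and $\theta$ the azimuth of the reflector (assumed symbol names and an assumed sign convention). The flow-line invariant then follows from separating variables:

```latex
\dot{r} = -V\cos\theta, \qquad \dot{\theta} = \frac{V\sin\theta}{r}
\;\Rightarrow\;
\frac{dr}{r} = -\frac{\cos\theta}{\sin\theta}\,d\theta
\;\Rightarrow\;
\ln r = -\ln\lvert\sin\theta\rvert + \text{const}
\;\Rightarrow\;
r\,\sin\theta = r_0\,\sin\theta_0
```

In this special case the invariant is the lateral distance between the reflector and the straight driving path, which is why a starting angle of 0 (a reflector exactly on the path) has to be excluded when dividing by $\sin\theta$. The constant C of the general multi-sonar model may be defined differently for a rotated or offset sensor.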

3.3. Only Rotation Motion

If we limit the mobile platform to a pure rotation, i.e., V = 0 m/s, the time derivatives of the coordinates of a reflection in Equation (6) reduce to

These flow-lines are shown in Figure 5. A closed-form expression, similar to that for linear motion, could not be found for the case of rotational motion.
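Flow-lines for rotation can still be traced numerically. The sketch below integrates a pure-rotation field with classic fourth-order Runge-Kutta steps. The field itself is an assumption: it follows from rigid-body kinematics under a hypothetical mounting convention (sensor at distance `l` from the center of rotation, heading offset `beta` from the radial direction, counter-clockwise rotation positive) and is not copied from the paper's equations.

```python
import math


def rotation_flow(r, theta, omega, l, beta):
    """Pure-rotation flow (V = 0) under the assumed mounting convention."""
    r_dot = -omega * l * math.sin(theta + beta)
    theta_dot = -omega * l * math.cos(theta + beta) / r - omega
    return r_dot, theta_dot


def trace_rotation_flow_line(r0, theta0, omega, l, beta, dt=0.001, steps=1000):
    """Integrate the flow from (r0, theta0) and return the visited points."""
    r, theta = r0, theta0
    line = [(r, theta)]
    for _ in range(steps):
        k1 = rotation_flow(r, theta, omega, l, beta)
        k2 = rotation_flow(r + 0.5 * dt * k1[0], theta + 0.5 * dt * k1[1],
                           omega, l, beta)
        k3 = rotation_flow(r + 0.5 * dt * k2[0], theta + 0.5 * dt * k2[1],
                           omega, l, beta)
        k4 = rotation_flow(r + dt * k3[0], theta + dt * k3[1], omega, l, beta)
        r += dt * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0]) / 6.0
        theta += dt * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1]) / 6.0
        line.append((r, theta))
    return line
```

A useful sanity check on any traced line is that the distance between the reflector and the center of rotation should stay constant, since the reflector is static and the platform only rotates.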

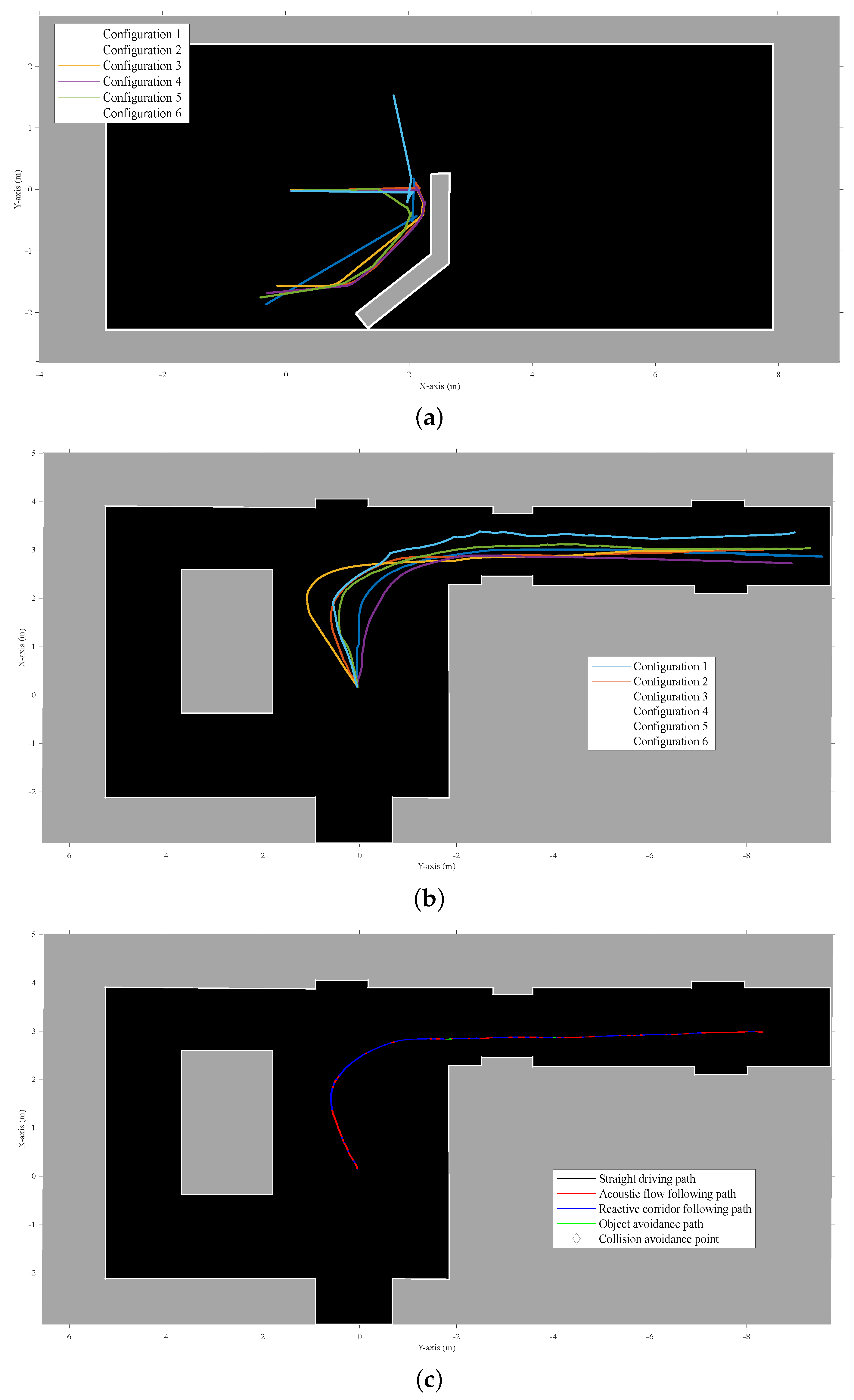

4. Layered Navigation Controller

With the acoustic flow model formulated in Section 3, we know a priori how a reflection will move through the energyscape based on the linear and rotational velocity of the mobile platform, and can subsequently execute motion commands based on this a priori knowledge. We suggest using acoustic regional zoning around the mobile platform such that, when the reflection of an object or obstacle is perceived in one of these predefined zones, certain primitive motion behaviors are activated. A layered control system designed as a subsumption architecture, first introduced by R. A. Brooks [22], is used. A diagram of the layered navigation controller, together with its input and output variables, is shown in Figure 6. The inputs are the linear and rotational velocities

. These can originate from other systems, for example a human operator or a global path planner. The output velocities

of the layered controller are these input velocities as modulated by the behavior law of one of the layers. These layers themselves also require input, which comes in the form of the sonar energyscapes of each eRTIS sensor, as well as the mask for that sensor and layer, which will be explained further in this section. The individual control behaviors are based on [10] but have been adapted in this work to be stable and configurable for multi-sonar setups and to support the new acoustic regional zoning system.
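The arbitration between layers can be sketched as follows. This is a minimal illustration of a subsumption-style priority scheme, not the authors' implementation: the type aliases, the function name, and the choice to pass the input velocities through unchanged when no layer triggers are all assumptions.

```python
from typing import Callable, List, Optional, Tuple

Velocity = Tuple[float, float]  # (linear, rotational)
# A layer returns output velocities when triggered, or None when it stays silent.
Layer = Callable[[Velocity], Optional[Velocity]]


def subsumption_step(layers: List[Layer], v_in: Velocity) -> Velocity:
    """Return the output of the highest-priority layer that triggers.

    `layers` is ordered from highest to lowest priority. If no layer
    triggers, the input velocities are passed through (an assumption).
    """
    for layer in layers:
        v_out = layer(v_in)
        if v_out is not None:
            return v_out
    return v_in
```

With the layers of this section, the list would be ordered as collision avoidance, obstacle avoidance, acoustic flow following, and reactive corridor following, so that a triggered higher-priority layer always overrides the ones below it.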

4.1. Acoustic Control Regions and Masks

A zone is defined around the mobile platform for each layer. An example of the zone for each layer is shown in Figure 7. Such a zone can be defined by basic contours such as straight lines, rectangles, circles, or trapezoids. These zones are constrained to these primitive shapes according to the time-differential equations of Section 3. The flow-lines these equations provide are used as the outer edges of these contours within the area that can be perceived by the sensor in its 2D energyscape. A mask

with c the behavior layer and j the index of the sonar sensor can be made for each layer and sensor pairing. We created this mask as a matrix with the same resolution as the energyscape. Figure 8 illustrates some examples of such acoustic masks based on the acoustic flow model.

If we cover an energyscape with a mask, and a certain amount of reflection energy is located in the remaining active area, i.e., not nullified by the mask, a behavior layer can be triggered if its control law and constraints are satisfied, such as the energy being above a certain threshold. These constraints are described when each layer is discussed separately. A second feature of the masking system is that it allows the controller to indicate whether a reflector is on the left or right side of the mobile platform. This lets a behavior layer decide on the correct steering direction for the output velocity commands. We use ternary masks, in which a voxel is 0 when it is not within the active area of the mask, 1 if it is on the left side, and −1 if it is on the right side of the mobile platform.
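A ternary mask of this kind can be sketched in a few lines. The example below assumes a 2D energyscape stored as a list of rows (one row per range bin, one column per azimuth bin), a platform frame with x forward and y to the left, and a region given as a predicate on platform coordinates; the function names and the sensor pose format are hypothetical.

```python
import math


def ternary_mask(ranges, azimuths, in_region, sensor_pose=(0.0, 0.0, 0.0)):
    """Build a ternary mask matching the energyscape resolution.

    in_region(x, y) tests platform-frame coordinates (x forward, y left).
    sensor_pose = (x, y, heading) of the sensor in the platform frame.
    Entries: 0 outside the region, +1 inside on the left, -1 inside on the right.
    """
    sx, sy, psi = sensor_pose
    mask = []
    for r in ranges:
        row = []
        for theta in azimuths:
            # Voxel center expressed in the platform frame.
            x = sx + r * math.cos(psi + theta)
            y = sy + r * math.sin(psi + theta)
            if not in_region(x, y):
                row.append(0)
            else:
                # Voxels exactly on the center line count as left (arbitrary choice).
                row.append(1 if y >= 0.0 else -1)
        mask.append(row)
    return mask


def masked_energy(energyscape, mask):
    """Signed sum of the reflection energy that survives the mask."""
    return sum(e * m
               for e_row, m_row in zip(energyscape, mask)
               for e, m in zip(e_row, m_row))
```

A half-circle region in front of the platform could be passed as `lambda x, y: x >= 0.0 and math.hypot(x, y) <= 0.5`; the sign of `masked_energy` then indicates on which side most of the energy lies.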

The design of the navigation controller described here is the same as in the original paper [1] but is detailed here once more to provide all necessary context to the reader.

4.2. Collision Avoidance

The lowest layer is collision avoidance (CA), which has the highest priority due to the subsumption architecture. This behavior layer is triggered if a certain amount of reflection energy above the threshold

is perceived within the CA region. This region, of which an example is illustrated in Figure 7, is used to generate a mask for each eRTIS sensor

, as shown in Figure 8. We use a half-circle near the front of the mobile platform so that collisions can be avoided when steering along a path.

The collision avoidance control law

is

This results in a motion away from the nearest reflection. This motion is the rotational output velocity and is set as a constant, for which we used / in our experiments. To determine the sign of the output rotational velocity, the ternary masking described earlier is used.

The linear output velocity for the mobile platform is always 0 m/s until this layer is triggered four times or more in succession. Once this occurs, a small reverse motion is started; in our experiments, this linear velocity was set to /. These output velocities are sustained as long as there is reflection energy in the region of the CA layer above the threshold .
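The behavior described above can be sketched as a small stateful class. This is an illustrative reading of the prose, not the paper's control law: the class and parameter names are hypothetical, the numeric defaults are placeholders rather than the values used in the experiments, and a positive rotational velocity is assumed to mean turning left.

```python
class CollisionAvoidance:
    """Sketch of the CA behavior; all numeric defaults are placeholders."""

    def __init__(self, threshold, turn_rate=0.5, reverse_speed=-0.1,
                 reverse_after=4):
        self.threshold = threshold
        self.turn_rate = turn_rate          # magnitude of the constant rotation
        self.reverse_speed = reverse_speed  # small negative linear velocity
        self.reverse_after = reverse_after  # consecutive triggers before reversing
        self.consecutive = 0

    def step(self, energy, nearest_side):
        """Return (linear, rotational) velocities, or None if not triggered.

        energy: reflection energy inside the CA region.
        nearest_side: +1 if the nearest reflection is on the left, -1 if right.
        """
        if energy <= self.threshold:
            self.consecutive = 0
            return None
        self.consecutive += 1
        # Rotate away from the side of the nearest reflection.
        omega = -nearest_side * self.turn_rate
        # Stand still at first; reverse once triggered often enough in a row.
        reversing = self.consecutive >= self.reverse_after
        return (self.reverse_speed if reversing else 0.0, omega)
```

The counter resets as soon as the region is clear, so the reverse motion only starts when the layer stays triggered over consecutive measurements.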

4.3. Obstacle Avoidance

Obstacle avoidance (OA) is the next layer in terms of priority. Its behavior is triggered when the total amount of reflection energy in the product of the mask

and the energyscape

is above the threshold

. An example of this region is shown in Figure 7. It is designed such that, if a forward linear velocity V is sustained without a rotational component (

), a collision is likely to occur. Therefore, the control law

is designed to circumvent this imminent collision. It is defined as

The outputs are

and

. In practice, this results in the mobile platform moving away from the side with the largest total reflection within the masked energyscape summation. This is possible thanks to the ternary masks. As can be observed in Equation (12), the masked energyscapes are divided by the square of the range of the active voxels containing reflection energy, so the further away this reflection energy is, the smaller the resulting avoidance motion. Consequently, the avoidance behavior ramps up gradually as the platform approaches the obstacle, which avoids unwanted drastic movements. Additionally, the linear output velocity is lowered based on the range of the total energy in order to slow down the mobile platform as the obstacle comes closer. Gain factors to further tweak the output velocities

and

were both set to 1 in simulation and 0.1 for the real experiments, but can be modified.
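The steering part of this behavior can be illustrated with a short sketch. It is not Equation (12) itself: the function name and arguments are hypothetical, the trigger is assumed to use the unsigned masked energy, and the range-dependent reduction of the linear velocity is left out because its exact form is not recoverable from the text.

```python
def obstacle_avoidance_turn(energyscape, mask, ranges, threshold, gain=1.0):
    """Rotational velocity from a range-weighted, signed sum of masked energy.

    energyscape and mask are lists of rows, one row per entry of `ranges`.
    The mask is ternary: +1 left, -1 right, 0 outside the OA region.
    Returns None when the layer is not triggered.
    """
    total = 0.0     # unsigned masked energy, used for the trigger (assumption)
    steering = 0.0  # signed energy weighted by 1 / r^2
    for r, e_row, m_row in zip(ranges, energyscape, mask):
        for e, m in zip(e_row, m_row):
            total += e * abs(m)
            steering += m * e / (r * r)
    if total <= threshold:
        return None
    # Positive steering means more weighted energy on the left: turn right.
    return -gain * steering
```

Because of the division by the squared range, energy at 2 m contributes only a quarter of what the same energy at 1 m would, which is what makes the avoidance motion ramp up gradually.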

4.4. Reactive Corridor Following

We now discuss the last layer of the subsumption architecture shown in Figure 6, reactive corridor following (RCF), as it quickly establishes the initial alignment with a detected corridor. Because this layer has the lowest priority, acoustic flow following (AFF) is higher in priority and activates once this initial alignment is achieved. This alignment is created by balancing the reflection energy in the summation of the masked energyscapes of the left and right peripheral regions of the mobile platform. Simply put, the control law causes the platform to move away from the side with the most energy. These peripheral regions are shown in the example illustrated in Figure 7. The masks generated from this region are shown in Figure 8. This layer is triggered when the total reflection energy is larger than the threshold

. The law

is expressed as

The output motion of this layer consists of the output velocities, which depend on a gain factor. This gain factor is set to 1 in simulation and 0.1 for the real experiments.

4.5. Acoustic Flow Following

If the RCF layer has achieved alignment with a corridor, or when the mobile platform arrives near one or two parallel walls, the AFF layer is triggered. In such an event, the detected reflections on the wall(s) can be found on the equivalent flow-line for the distance to that wall, as described in Section 3 and in Figure 4b. This alignment for a sensor j can be measured with

In principle, this formula integrates all voxels on a flow-line at the lateral distance d from the path of the mobile platform. Several lateral distances are shown in Figure 7, with the associated flow-lines visualized in Figure 8. In Equation (14),

represents the flow-line at the lateral distance d, and

denotes its length, i.e., the number of voxels that lie on that flow-line. For every combination of sensor j and distance d,

is generated. Fusing of all the

values gives the total alignment for that lateral distance

. When one such value peaks and is greater than the threshold

, this layer becomes actuated. If only one peak is detected, motion behavior will be started to continue to follow the wall at lateral distance

associated with that peak. This behavior is defined as

where

represents the preceding distance at which a single peak was perceived in an earlier time-step of the navigation controller. Hence, only when the layer is activated consecutively will the single wall AFF behavior cause an output rotational velocity to align the mobile platform with this wall.

If two peaks are detected, full-corridor-following behavior is triggered. These peaks at lateral distances

and

, respectively, need to be larger than the threshold

and should be on opposite sides of

. The full-corridor-following behavior is calculated as

The gain factor is the same for the single-wall and full-corridor-following behaviors. It is set to 1 in simulation and 0.1 for the real experiments.
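The alignment measure and the peak detection can be sketched as follows. This is a simplified illustration rather than Equation (14): it assumes a 2D energyscape stored as rows per range bin, a sensor axis aligned with the driving direction so that a flow-line at lateral distance `d` satisfies r·sin(theta) = d, and a hypothetical tolerance `tol` to decide which voxels lie on the line. The fusion across sensors is not covered.

```python
import math


def flow_line_alignment(energyscape, ranges, azimuths, d, tol):
    """Mean energy over the voxels lying on the flow-line at lateral distance d.

    A voxel (r, theta) counts as on the line when |r*sin(theta) - d| <= tol.
    Returns 0.0 when no voxel falls on the line.
    """
    total, count = 0.0, 0
    for r, row in zip(ranges, energyscape):
        for theta, e in zip(azimuths, row):
            if abs(r * math.sin(theta) - d) <= tol:
                total += e
                count += 1
    return total / count if count else 0.0


def find_peaks(alignment, threshold):
    """Indices of local maxima of the alignment profile above the threshold."""
    peaks = []
    for i, a in enumerate(alignment):
        left = alignment[i - 1] if i > 0 else float("-inf")
        right = alignment[i + 1] if i + 1 < len(alignment) else float("-inf")
        # Strictly greater than the left neighbor so a plateau yields one peak.
        if a > threshold and a > left and a >= right:
            peaks.append(i)
    return peaks
```

Evaluating `flow_line_alignment` over a list of candidate lateral distances gives a profile; one index returned by `find_peaks` would correspond to the single-wall case and two indices on opposite sides to the full-corridor case.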

6. Conclusions and Future Work

The various experiments in both simulation and real-world scenarios validate the layered navigation controller for an autonomous mobile platform for safe 2D navigation in spatially diverse environments featuring dynamic objects. The controller achieves this while allowing several sensors to be placed where possible or required. During the experiments, no collisions were observed, nor any short periods in which the mobile platform was stuck. A significant benefit of this work is that spatial navigation is accomplished without the need for explicit spatial segmentation. Furthermore, a user of this controller can choose their acoustic control regions to achieve specific behavior. The implemented primitive behavior layers are sufficient for safe motion between the waypoints in simulation or for free-roam navigation, as shown in the real-world experiments. However, one could insert new layers for more specific motion behavior, for example, to always keep the mobile platform on the right side when moving through a corridor, or to perform specific actions when predefined objects such as doors, charging stations, intersections, or elevators are detected. Most of these suggestions would require some form of semantic knowledge about the environment to be known a priori or generated online. Nevertheless, the simplicity of the layered subsumption architecture allows more layers to be added with simple and complex navigation behaviors.

The real-time implementation of both the signal processing and the behavior controller on a real experimental platform shows that this system can run on relatively low-end hardware with minimal computing power, and that it can already be implemented in practice for real industrial use-cases without additional changes.

In the future, a primary extension to the current implementation would be a comprehensive system for path planning and global navigation, for which the work presented here could provide the local navigation. However, to accomplish more global navigation, more spatial knowledge of the environment is necessary. This knowledge can be generated by SLAM [11,26] or landmark beacons [16].