EmbeddedPigCount: Pig Counting with Video Object Detection and Tracking on an Embedded Board

Abstract

1. Introduction

- For intelligent pig monitoring applications with low-cost embedded boards, such as the NVIDIA Jetson Nano [39], light-weight object detection and tracking algorithms are proposed. By reducing the computational cost in TinyYOLOv4 [40] and DeepSORT [41], we can detect and track pigs in real time on an embedded board, without losing accuracy.

- An accurate and real-time pig-counting algorithm is proposed. Although the accuracies of light-weight object detection and tracking algorithms are not perfect, we can obtain a counting accuracy of 99.44%, even when some pigs pass through the counting zone back and forth. Furthermore, all counting steps can be executed at 30 frames per second (FPS) on an embedded board. To the best of our knowledge, the trade-off between execution time and accuracy in pig counting on an embedded board has not been reported.

2. Background

3. Proposed Method

3.1. Pig Detection Module

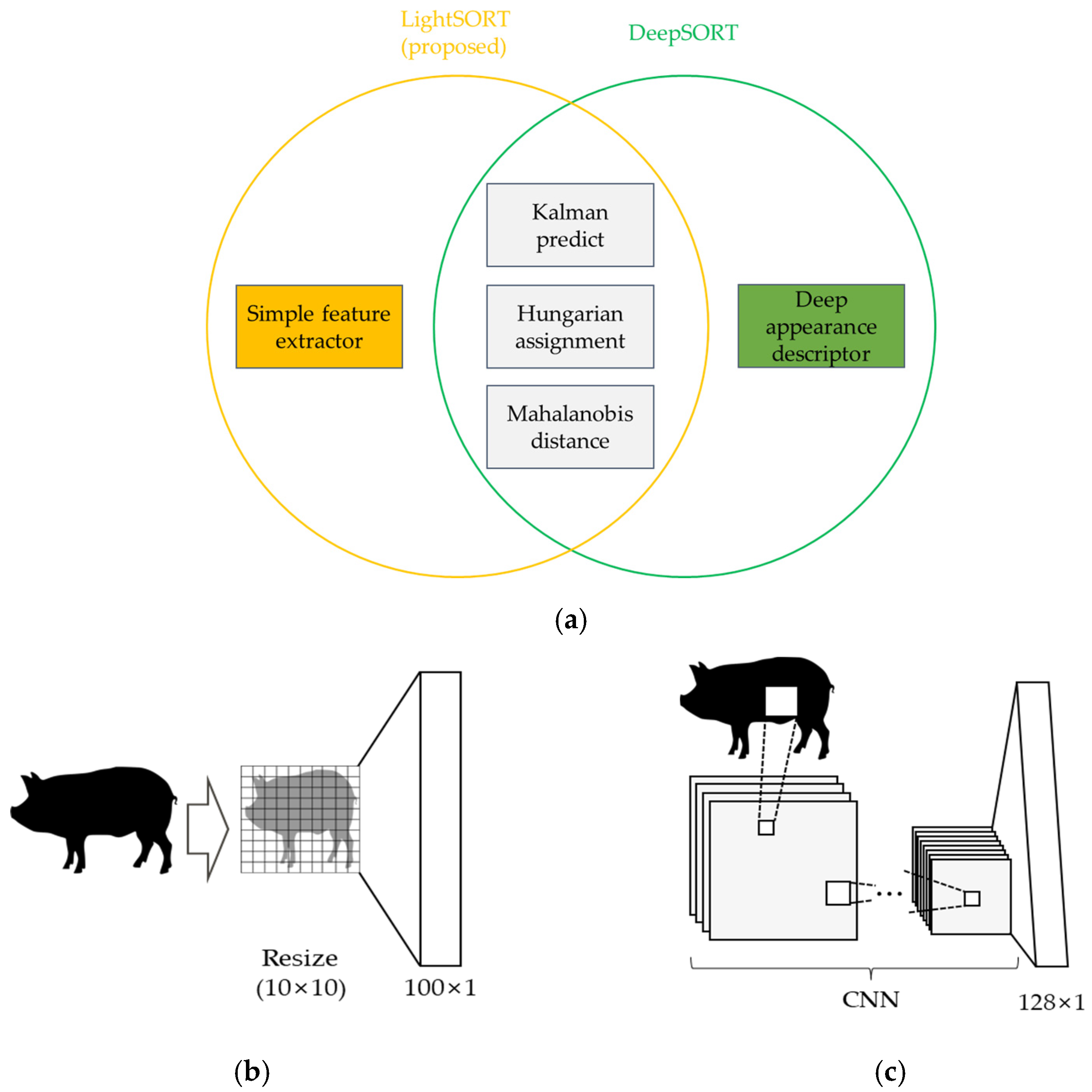

3.2. Pig Tracking Module

3.3. Pig-Counting Module

| Algorithm 1. EmbeddedPigCount |
|---|
| Input: Video stream from a surveillance camera |
| Output: Pig counting result |
| Detect individual pigs using LightYOLOv4 |
| for (all detected objects): |
| if object class = person: |
| continue |
| if new object: |
| Add new track to track_list |
| Save start.status and end.status according to location of the pig |
| else: |
| Find a track with prediction results in track_list and connect |
| Save end.status based on location |
| Update new location and visualization feature |
| counting_result = 0 |
| for (all existing tracks): |
| if start.status = exit area and end.status = entrance area: |
| counting_result -= 1 |
| if start.status = entrance area and end.status = exit area: |
| counting_result += 1 |
| return counting_result |
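The counting stage of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Track` class, the `zone_of` and `track_from_path` helpers, and the single horizontal line `boundary_y` that separates the entrance area from the exit area are hypothetical names introduced here, and detection and track association are replaced by hand-written centre-point paths.

```python
from dataclasses import dataclass
from typing import Iterable, List

ENTRANCE = "entrance"
EXIT = "exit"


@dataclass
class Track:
    """Zone where a tracked pig was first and last seen (illustrative fields)."""
    start_status: str
    end_status: str


def zone_of(center_y: float, boundary_y: float) -> str:
    """Assumption: one horizontal line splits the hallway into two areas."""
    return ENTRANCE if center_y < boundary_y else EXIT


def track_from_path(center_ys: List[float], boundary_y: float) -> Track:
    """Build a track from the y coordinates of one pig over successive frames."""
    first_zone = zone_of(center_ys[0], boundary_y)
    track = Track(start_status=first_zone, end_status=first_zone)
    for center_y in center_ys[1:]:
        # Only the most recent zone is kept, as in "Save end.status".
        track.end_status = zone_of(center_y, boundary_y)
    return track


def count_pigs(tracks: Iterable[Track]) -> int:
    """Net count: +1 for entrance-to-exit tracks, -1 for exit-to-entrance tracks."""
    counting_result = 0
    for track in tracks:
        if track.start_status == EXIT and track.end_status == ENTRANCE:
            counting_result -= 1
        elif track.start_status == ENTRANCE and track.end_status == EXIT:
            counting_result += 1
    return counting_result


if __name__ == "__main__":
    paths = [
        [10, 60, 120],   # walks forward: +1
        [150, 40],       # walks backward: -1
        [10, 120, 30],   # crosses and returns: 0
        [20, 130],       # walks forward: +1
    ]
    tracks = [track_from_path(path, boundary_y=100) for path in paths]
    print(count_pigs(tracks))  # prints 1
```

Because only the first and last zones of a track are compared, a pig that crosses the boundary and then returns contributes nothing to the count, which is the behaviour the algorithm needs when pigs move back and forth.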

4. Experimental Results

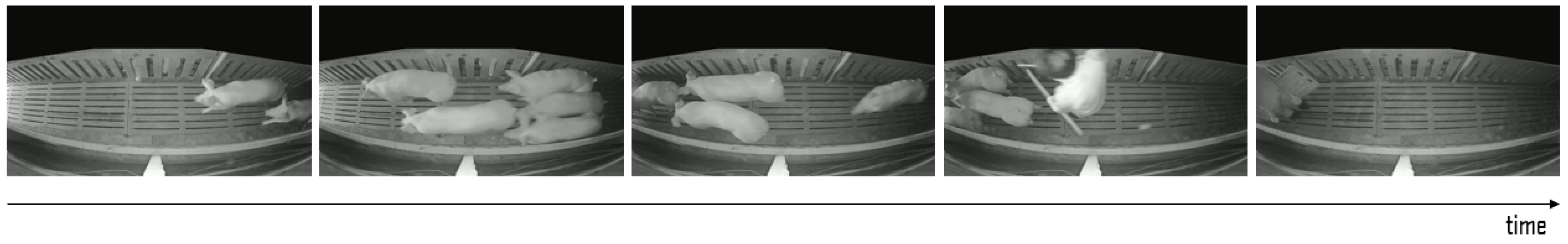

4.1. Experimental Environment and Dataset

4.2. Results with Pig Detection Module

4.3. Results with Pig Tracking Module

4.4. Results with Pig-Counting Module

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- OECD. Meat Consumption (Indicator). 2021. Available online: https://www.oecd-ilibrary.org/agriculture-and-food/meat-consumption/indicator/english_fa290fd0-en (accessed on 3 January 2022).

- Banhazi, T.; Lehr, H.; Black, J.; Crabtree, H.; Schofield, P.; Tscharke, M.; Berckmans, D. Precision Livestock Farming: An International Review of Scientific and Commercial Aspects. Int. J. Agric. Biol. 2012, 5, 1–9.

- Neethirajan, S. Recent Advances in Wearable Sensors for Animal Health Management. Sens. Bio-Sens. Res. 2017, 12, 15–29.

- Tullo, E.; Fontana, I.; Guarino, M. Precision Livestock Farming: An Overview of Image and Sound Labelling. In Proceedings of the 6th European Conference on Precision Livestock Farming, ECPLF 2013, Leuven, Belgium, 12 September 2013; pp. 30–38.

- Tscharke, M.; Banhazi, T. A Brief Review of the Application of Machine Vision in Livestock Behaviour Analysis. J. Agric. Inform. 2016, 7, 23–42.

- Oliveira, D.; Pereira, L.; Bresolin, T.; Ferreira, R.; Dorea, J. A Review of Deep Learning Algorithms for Computer Vision Systems in Livestock. Livest. Sci. 2021, 253, 104700.

- Matthews, S.; Miller, A.; Clapp, J.; Plötz, T.; Kyriazakis, I. Early Detection of Health and Welfare Compromises through Automated Detection of Behavioural Changes in Pigs. Vet. J. 2016, 217, 43–51.

- Han, S.; Zhang, J.; Zhu, M.; Wu, J.; Kong, F. Review of Automatic Detection of Pig Behaviours by using Image Analysis. IOP Conf. Ser. Earth Environ. Sci. 2017, 69, 012096.

- Chung, Y.; Kim, H.; Lee, H.; Park, D.; Jeon, T.; Chang, H. A Cost-Effective Pigsty Monitoring System based on a Video Sensor. KSII Trans. Internet Inf. Sys. 2014, 8, 1481–1498.

- Wongsriworaphon, A.; Arnonkijpanich, B.; Pathumnakul, S. An Approach based on Digital Image Analysis to Estimate the Live Weights of Pigs in Farm Environments. Comput. Electron. Agric. 2015, 115, 26–33.

- Tu, G.; Karstoft, H.; Pedersen, L.; Jorgensen, E. Foreground Detection using Loopy Belief Propagation. Biosyst. Eng. 2013, 116, 88–96.

- Kashiha, M.; Bahr, C.; Ott, S.; Moons, C.; Niewold, T.; Tuyttens, F.; Berckmans, D. Automatic Monitoring of Pig Locomotion using Image Analysis. Livest. Sci. 2014, 159, 141–148.

- Zhu, Q.; Ren, J.; Barclay, D.; McCormack, S.; Thomson, W. Automatic Animal Detection from Kinect Sensed Images for Livestock Monitoring and Assessment. In Proceedings of the 2015 IEEE International Conference on Computer and Information Technology, Liverpool, UK, 26–28 October 2015; pp. 1154–1157.

- Tu, G.; Karstoft, H.; Pedersen, L.; Jorgensen, E. Illumination and Reflectance Estimation with its Application in Foreground. Sensors 2015, 15, 21407–21426.

- Lu, M.; Xiong, Y.; Li, K.; Liu, L.; Yan, L.; Ding, Y.; Lin, X.; Yang, X.; Shen, M. An Automatic Splitting Method for the Adhesive Piglets Gray Scale Image based on the Ellipse Shape Feature. Comput. Electron. Agric. 2016, 120, 53–62.

- Kim, J.; Chung, Y.; Choi, Y.; Sa, J.; Kim, H.; Chung, Y.; Park, D.; Kim, H. Depth-based Detection of Standing-Pigs in Moving Noise Environments. Sensors 2017, 17, 2757.

- Brünger, J.; Traulsen, I.; Koch, R. Model-based Detection of Pigs in Images under Sub-Optimal Conditions. Comput. Electron. Agric. 2018, 152, 59–63.

- Kang, F.; Wang, C.; Li, J.; Zong, Z. A Multiobjective Piglet Image Segmentation Method based on an Improved Noninteractive GrabCut Algorithm. Adv. Multimed. 2018, 2018, 108876.

- Li, B.; Liu, L.; Shen, M.; Sun, Y.; Lu, M. Group-Housed Pig Detection in Video Surveillance of Overhead Views using Multi-Feature Template Matching. Biosyst. Eng. 2019, 181, 28–39.

- Nasirahmadi, A.; Sturm, B.; Edwards, S.; Jeppsson, K.; Olsson, A.; Müller, S.; Hensel, O. Deep Learning and Machine Vision Approaches for Posture Detection of Individual Pigs. Sensors 2019, 19, 3738.

- Psota, E.; Mittek, M.; Perez, L.; Schmidt, T.; Mote, B. Multi-Pig Part Detection and Association with a Fully-Convolutional Network. Sensors 2019, 19, 852.

- Sun, L.; Liu, Y.; Chen, S.; Luo, B.; Li, Y.; Liu, C. Pig Detection Algorithm based on Sliding Windows and PCA Convolution. IEEE Access 2019, 7, 44229–44238.

- Seo, J.; Ahn, H.; Kim, D.; Lee, S.; Chung, Y.; Park, D. EmbeddedPigDet—Fast and Accurate Pig Detection for Embedded Board Implementations. Appl. Sci. 2020, 10, 2878.

- Riekert, M.; Klein, A.; Adrion, F.; Hoffmann, C.; Gallmann, E. Automatically Detecting Pig Position and Posture by 2D Camera Imaging and Deep Learning. Comput. Electron. Agric. 2020, 174, 105391.

- Brünger, J.; Gentz, M.; Traulsen, I.; Koch, R. Panoptic Segmentation of Individual Pigs for Posture Recognition. Sensors 2020, 20, 3710.

- Ahn, H.; Son, S.; Kim, H.; Lee, S.; Chung, Y.; Park, D. EnsemblePigDet: Ensemble Deep Learning for Accurate Pig Detection. Appl. Sci. 2021, 11, 5577.

- Zuo, S.; Jin, L.; Chung, Y.; Park, D. An Index Algorithm for Tracking Pigs in Pigsty. In Proceedings of the International Conference on Industrial Electronics and Engineering, Pune, India, 1–4 June 2014; pp. 797–803.

- Lao, F.; Brown-Brandl, T.; Stinn, J.; Liu, K.; Teng, G.; Xin, H. Automatic Recognition of Lactating Sow Behaviors through Depth Image Processing. Comput. Electron. Agric. 2016, 125, 56–62.

- Nasirahmadi, A.; Hensel, O.; Edwards, S.; Sturm, B. Automatic Detection of Mounting Behaviours among Pigs using Image Analysis. Comput. Electron. Agric. 2016, 124, 295–302.

- Nasirahmadi, A.; Hensel, O.; Edwards, S.; Sturm, B. A New Approach for Categorizing Pig Lying Behaviour based on a Delaunay Triangulation Method. Animal 2017, 11, 131–139.

- Matthews, S.; Miller, A.; Plötz, T.; Kyriazakis, I. Automated Tracking to Measure Behavioural Changes in Pigs for Health and Welfare Monitoring. Sci. Rep. 2017, 7, 17582.

- Yang, Q.; Xiao, D.; Lin, S. Feeding Behavior Recognition for Group-Housed Pigs with the Faster R-CNN. Comput. Electron. Agric. 2018, 155, 453–460.

- Cowton, J.; Kyriazakis, I.; Bacardit, J. Automated Individual Pig Localisation, Tracking and Behaviour Metric Extraction using Deep Learning. IEEE Access 2019, 7, 108049–108060.

- Nilsson, M.; Herlin, A.; Ardo, H.; Guzhva, O.; Astrom, K.; Bergsten, C. Development of Automatic Surveillance of Animal Behaviour and Welfare using Image Analysis and Machine Learned Segmentation Techniques. Animal 2015, 9, 1859–1865.

- Oczak, M.; Maschat, K.; Berckmans, D.; Vranken, E.; Baumgartner, J. Automatic Estimation of Number of Piglets in a Pen during Farrowing, using Image Analysis. Biosyst. Eng. 2016, 151, 81–89.

- Tian, M.; Guo, H.; Chen, H.; Wang, Q.; Long, C.; Ma, Y. Automated Pig Counting using Deep Learning. Comput. Electron. Agric. 2019, 163, 104840.

- Chen, G.; Shen, S.; Wen, L.; Luo, S.; Bo, L. Efficient Pig Counting in Crowds with Keypoints Tracking and Spatial-Aware Temporal Response Filtering. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 10052–10058.

- Jensen, D.; Pedersen, L. Automatic Counting and Positioning of Slaughter Pigs within the Pen using a Convolutional Neural Network and Video Images. Comput. Electron. Agric. 2021, 188, 106296.

- Jetson Nano Developer Kit. Available online: https://developer.nvidia.com/embedded/jetson-nano-developer-kit (accessed on 28 November 2021).

- Bochkovskiy, A.; Wang, C.; Liao, H. Yolov4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. arXiv 2014, arXiv:1311.2524.

- Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A. Ssd: Single shot multibox detector. arXiv 2016, arXiv:1512.02325.

- Lucas, B.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the 7th IJCAI, Vancouver, BC, Canada, 24–28 August 1981; pp. 674–679.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. arXiv 2016, arXiv:1602.00763.

- NVIDIA TensorRT. Available online: https://developer.nvidia.com/tensorrt (accessed on 28 November 2021).

- Li, H.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H. Pruning filters for efficient convnets. arXiv 2016, arXiv:1608.08710.

- Bochinski, E.; Eiselein, V.; Sikora, T. High-speed tracking-by-detection without using image information. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

- Kuhn, H. The hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

- McLachlan, G. Mahalanobis distance. Resonance 1999, 4, 20–26.

- Wojke, N.; Bewley, A. Deep cosine metric learning for person re-identification. arXiv 2018, arXiv:1812.00442.

- Hanwha Surveillance Camera. Available online: https://www.hanwhasecurity.com/product/qno-6012r/ (accessed on 28 November 2021).

- Top-View Person Detection Open Datasets. Available online: https://github.com/ucuapps/top-view-multi-person-tracking (accessed on 10 December 2021).

- Schulzrinne, H.; Rao, A.; Lanphier, R. Real-Time Streaming Protocol (RTSP). Available online: https://www.hjp.at/doc/rfc/rfc2326.html (accessed on 28 November 2021).

- Bernardin, K.; Elbs, K.; Stiefelhagen, R. Multiple object tracking performance metrics and evaluation in a smart room environment. In Proceedings of the IEEE International Workshop on Visual Surveillance, in conjunction with ECCV. Citeseer 2006, 90, 91.

| Application | Data Type | Algorithm | No. of Pigs in Each Image/Video | Execution Time per Image (ms) | Target Platform | Reference |
|---|---|---|---|---|---|---|
| Pig Detection | Image | Image Processing | 9 | Not Specified | PC | [11] |
| Pig Detection | Image | Image Processing | 7~13 | Not Specified | PC | [12] |
| Pig Detection | Image | Image Processing | 1 | Not Specified | PC | [13] |
| Pig Detection | Image | Image Processing | Not Specified | 500 | PC | [14] |
| Pig Detection | Image | Image Processing | Not Specified | Not Specified | PC | [15] |
| Pig Detection | Image | Image Processing | 13 | 2 | PC | [16] |
| Pig Detection | Image | Image Processing | Not Specified | 1000 | PC | [17] |
| Pig Detection | Image | Deep Learning | 1 | Not Specified | PC | [18] |
| Pig Detection | Image | Deep Learning | Not Specified | 500 | PC | [19] |
| Pig Detection | Image | Deep Learning | ~32 | 142 | PC | [20] |
| Pig Detection | Image | Image Processing | 4 | 921 | PC | [21] |
| Pig Detection | Image | Deep Learning | 6 | 500 | PC | [22] |
| Pig Detection | Image | Image Processing + Deep Learning | 9 | 29 | Embedded Board | [23] |
| Pig Detection | Image | Deep Learning | ~79 | Not Specified | PC | [24] |
| Pig Detection | Image | Deep Learning | 13 | 41~2000 | PC | [25] |
| Pig Detection | Image | Image Processing + Deep Learning | 9 | ~190 | Embedded Board | [26] |
| Pig Detection | Video | Image Processing | 22 | Not Specified | PC | [27] |
| Pig Detection | Video | Image Processing | 1 | Not Specified | PC | [28] |
| Pig Detection | Video | Image Processing | 22 | Not Specified | PC | [29] |
| Pig Detection | Video | Image Processing | 17~20 | Not Specified | PC | [30] |
| Pig Detection | Video | Deep Learning | 1 | 50 | PC | [31] |
| Pig Detection | Video | Deep Learning | 4 | Not Specified | PC | [32] |
| Pig Detection | Video | Deep Learning | 20 | 250 | PC | [33] |
| Pig Counting | Image | Image Processing | 8 | Not Specified | Not Specified | [34] |
| Pig Counting | Image | Image Processing | 9 | Not Specified | Not Specified | [35] |
| Pig Counting | Image | Deep Learning | ~40 | 42 | PC | [36] |
| Pig Counting | Video | Deep Learning | ~250 | 313 | Embedded Board | [37] |
| Pig Counting | Video | Deep Learning | ~18 | Not Specified | Not Specified | [38] |
| Pig Counting | Video | Deep Learning | ~34 | 32 | Embedded Board | Proposed |

| # | Layer | Filters | Size/Stride | Input | Output |
|---|---|---|---|---|---|
| 0 | Convolutional | 27 | 3 × 3/2 | 320 × 320 × 1 | 160 × 160 × 27 |
| 1 | Convolutional | 49 | 3 × 3/2 | 160 × 160 × 27 | 80 × 80 × 49 |
| 2 | Convolutional | 45 | 3 × 3/1 | 80 × 80 × 49 | 80 × 80 × 45 |
| 3 | Route 2 | | | | |
| 4 | Convolutional | 31 | 3 × 3/1 | 80 × 80 × 22 | 80 × 80 × 31 |
| 5 | Convolutional | 28 | 3 × 3/1 | 80 × 80 × 31 | 80 × 80 × 28 |
| 6 | Route 4, 5 | | | | |
| 7 | Convolutional | 64 | 1 × 1/1 | 80 × 80 × 59 | 80 × 80 × 64 |
| 8 | Route 2, 7 | | | | |
| 9 | Maxpool | | 2 × 2/2 | 80 × 80 × 109 | 40 × 40 × 109 |
| 10 | Convolutional | 86 | 3 × 3/1 | 40 × 40 × 109 | 40 × 40 × 86 |
| 11 | Route 10 | | | | |
| 12 | Convolutional | 56 | 3 × 3/1 | 40 × 40 × 43 | 40 × 40 × 56 |
| 13 | Convolutional | 47 | 3 × 3/1 | 40 × 40 × 56 | 40 × 40 × 47 |
| 14 | Route 12, 13 | | | | |
| 15 | Convolutional | 128 | 1 × 1/1 | 40 × 40 × 128 | 40 × 40 × 128 |
| 16 | Route 10, 15 | | | | |
| 17 | Maxpool | | 2 × 2/2 | 40 × 40 × 214 | 20 × 20 × 214 |
| 18 | Convolutional | 164 | 3 × 3/1 | 20 × 20 × 214 | 20 × 20 × 164 |
| 19 | Route 18 | | | | |
| 20 | Convolutional | 83 | 3 × 3/1 | 20 × 20 × 82 | 20 × 20 × 83 |
| 21 | Convolutional | 83 | 3 × 3/1 | 20 × 20 × 83 | 20 × 20 × 83 |
| 22 | Route 20, 21 | | | | |
| 23 | Convolutional | 256 | 1 × 1/1 | 20 × 20 × 166 | 20 × 20 × 256 |
| 24 | Route 18, 23 | | | | |
| 25 | Maxpool | | 2 × 2/2 | 20 × 20 × 420 | 10 × 10 × 420 |
| 26 | Convolutional | 189 | 3 × 3/1 | 10 × 10 × 420 | 10 × 10 × 189 |
| 27 | Convolutional | 256 | 1 × 1/1 | 10 × 10 × 189 | 10 × 10 × 256 |
| 28 | Convolutional | 174 | 3 × 3/1 | 10 × 10 × 256 | 10 × 10 × 174 |
| 29 | Convolutional | 18 | 1 × 1/1 | 10 × 10 × 174 | 10 × 10 × 18 |
| 30 | YOLO output | | | | |
| 31 | Route 27 | | | | |
| 32 | Convolutional | 128 | 1 × 1/1 | 10 × 10 × 256 | 10 × 10 × 128 |
| 33 | Upsample | | /2 | 10 × 10 × 128 | 20 × 20 × 128 |
| 34 | Route 23, 33 | | | | |
| 35 | Convolutional | 120 | 3 × 3/1 | 20 × 20 × 384 | 20 × 20 × 120 |
| 36 | Convolutional | 18 | 1 × 1/1 | 20 × 20 × 256 | 20 × 20 × 18 |
| 37 | YOLO output | | | | |
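The shapes in the layer table can be cross-checked with a few lines of Python. The sketch below is only an illustration: the helper names `downsample` and `route` are hypothetical, it assumes padding that makes the spatial size equal to the input size divided by the stride, and it assumes that a route over several layers concatenates their outputs along the channel axis, as Darknet route layers do. The single-layer routes (layers 3, 11 and 19), which appear to pass on roughly half of the channels, are not modelled.

```python
from typing import Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def downsample(shape: Shape, stride: int, out_channels: int) -> Shape:
    """Output shape of a convolution or max-pool with the given stride.

    Assumes padding such that height and width are simply divided by the stride.
    """
    height, width, _ = shape
    return (height // stride, width // stride, out_channels)


def route(*shapes: Shape) -> Shape:
    """Concatenate feature maps along the channel axis (multi-layer route)."""
    height, width, _ = shapes[0]
    if any(shape[:2] != (height, width) for shape in shapes):
        raise ValueError("routed feature maps must share height and width")
    return (height, width, sum(shape[2] for shape in shapes))


# Output shapes copied from rows 2, 4, 5 and 7 of the table.
layer2 = (80, 80, 45)
layer4 = (80, 80, 31)
layer5 = (80, 80, 28)
layer7 = (80, 80, 64)

layer6 = route(layer4, layer5)  # Route 4, 5 -> input of layer 7
layer8 = route(layer2, layer7)  # Route 2, 7 -> input of layer 9
layer9 = downsample(layer8, stride=2, out_channels=layer8[2])  # Maxpool 2 x 2/2

print(layer6, layer8, layer9)
```

Under these assumptions the computed shapes match the inputs listed for layers 7 and 9 and the output listed for layer 9.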

| Annotation | Dataset | Class | Train Set (Images) | Test Set (Images) |
|---|---|---|---|---|
| Manually annotated | Hallway | Pig + Human | 1396 | 306 |
| Manually annotated | Pig pen | Pig | 873 | - |
| Open dataset | People in top view [57] | Human | 100 | - |

| | Only Hallway Data | Hallway + Pig Pen + Open |
|---|---|---|
| Person (AP) | 94.96% | 98.50% |
| Pig (AP) | 94.94% | 95.39% |
| mAP | 94.95% | 96.95% |
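The mAP row is consistent with the unweighted mean of the two per-class AP values. A minimal check, assuming that definition (the function name is illustrative, not taken from the paper):

```python
from typing import Dict


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """Unweighted mean of per-class average precision, in percent."""
    if not ap_per_class:
        raise ValueError("at least one class is required")
    return sum(ap_per_class.values()) / len(ap_per_class)


# Per-class AP values copied from the table above.
hallway_only = {"person": 94.96, "pig": 94.94}
all_datasets = {"person": 98.50, "pig": 95.39}

print(f"{mean_average_precision(hallway_only):.3f}")
print(f"{mean_average_precision(all_datasets):.3f}")
```

The second mean is 96.945, which agrees with the reported 96.95% up to rounding in the last digit.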

| | MOTA↑ | IDsw↓ |
|---|---|---|
| DeepSORT [41] | 89.88% | 25 |
| LightSORT | 89.85% | 22 |
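For readers unfamiliar with the metric, MOTA in the CLEAR MOT framework of Bernardin et al. is one minus the ratio of all tracking errors (misses, false positives and identity switches) to the number of ground-truth objects, each summed over all frames. The sketch below shows that definition only; the error counts in the example are hypothetical, since the per-error breakdown is not given here.

```python
def mota(false_negatives: int, false_positives: int,
         id_switches: int, ground_truth_objects: int) -> float:
    """Multiple Object Tracking Accuracy: 1 - (FN + FP + IDsw) / GT.

    All four counts are totals accumulated over every frame of the sequence.
    """
    if ground_truth_objects <= 0:
        raise ValueError("ground_truth_objects must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / ground_truth_objects


# Hypothetical counts, chosen only to illustrate the formula.
print(f"{100 * mota(50, 30, 20, 1000):.2f}%")  # 90.00%
```

Because identity switches enter the numerator together with the usually much larger miss and false-positive counts, two trackers can differ in IDsw while reporting almost the same MOTA.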

| Clip | Number of Pigs (N) | Counting Result: Ground Truth | Counting Result: EmbeddedPigCount | Number of Pigs Correctly Counted (Nc) |
|---|---|---|---|---|
| Clip01~06 | 1 | −1 | −1 | 1 |
| Clip07~08 | 1 | 0 | 0 | 1 |
| Clip09~15 | 1 | 1 | 1 | 1 |
| Clip16 | 2 | −2 | −2 | 2 |
| Clip17 | 2 | 0 | 0 | 2 |
| Clip18~25 | 2 | 2 | 2 | 2 |
| Clip26 | 3 | 2 | 2 | 3 |
| Clip27~37 | 3 | 3 | 3 | 3 |
| Clip38 | 4 | 3 | 3 | 4 |
| Clip39~59 | 4 | 4 | 4 | 4 |
| Clip60 | 5 | −5 | −5 | 5 |
| Clip61 | 5 | 1 | 1 | 5 |
| Clip62 | 5 | 3 | 3 | 5 |
| Clip63 | 5 | 4 | 4 | 5 |
| Clip64~84 | 5 | 5 | 5 | 5 |
| Clip85~86 | 6 | −2 | −2 | 6 |
| Clip87 | 6 | 0 | 0 | 6 |
| Clip88~91 | 6 | 5 | 5 | 6 |
| Clip92~110 | 6 | 6 | 6 | 6 |
| Clip111~114 | 7 | 7 | 7 | 7 |
| Clip115 | 8 | 5 | 5 | 8 |
| Clip116 | 8 | 6 | 6 | 8 |
| Clip117~118 | 8 | 8 | 8 | 8 |
| Clip119 | 9 | −8 | −8 | 9 |
| Clip120 | 9 | −1 | −1 | 9 |
| Clip121 | 9 | 7 | 7 | 9 |
| Clip122 | 10 | −1 | −1 | 10 |
| Clip123 | 12 | 0 | 0 | 12 |
| Clip124 | 14 | 4 | 4 | 14 |
| Clip125~126 | 21 | 0 | 0 | 21 |
| Clip127 | 23 | 0 | 1 | 22 |
| Clip128 | 23 | 0 | 0 | 23 |
| Clip129 | 30 | 0 | 0 | 30 |
| Clip130 | 34 | 0 | −3 | 31 |
| Total | 715 | - | - | 711 |

| | Accuracy | FPS (Avg.) |
|---|---|---|
| Proposed EmbeddedPigCount | 99.44% | 30.6 |
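The reported accuracy is consistent with the ratio of correctly counted pigs to all pigs over the 130 clips, using the totals from the per-clip results (Nc = 711, N = 715). A minimal check, assuming that is how the figure was derived (the function name is illustrative):

```python
def counting_accuracy(correctly_counted: int, total_pigs: int) -> float:
    """Counting accuracy in percent: 100 * Nc / N."""
    if total_pigs <= 0:
        raise ValueError("total_pigs must be positive")
    return 100.0 * correctly_counted / total_pigs


# Totals taken from the per-clip counting results.
print(f"{counting_accuracy(711, 715):.2f}%")  # 99.44%
```

The four missed pigs come from two crowded clips: one pig in Clip127 and three in Clip130.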

| | Execution Time (Milliseconds) | Proportion (%) |
|---|---|---|
| Detection module | 25.8 | 78.9 |
| Tracking module | 4.1 | 12.5 |
| Etc. | 2.7 | 8.3 |
| Total | 32.7 | 100 |
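The timing figures can be related to the frame rate with simple arithmetic: a total of 32.7 ms per frame corresponds to about 30.6 frames per second, and each proportion is the stage time divided by that total. The sketch below reproduces these numbers from the table; the variable names are illustrative.

```python
# Per-frame execution times in milliseconds, copied from the table above.
stage_ms = {"detection": 25.8, "tracking": 4.1, "etc": 2.7}
total_ms = 32.7  # reported total; the rounded stage times sum to 32.6

# Frames per second is the reciprocal of the per-frame time.
fps = 1000.0 / total_ms

# Share of each stage in the reported total, in percent.
proportions = {name: 100.0 * ms / total_ms for name, ms in stage_ms.items()}

print(f"FPS: {fps:.1f}")  # FPS: 30.6
for name, share in proportions.items():
    print(f"{name}: {share:.1f}%")
```

Detection accounts for nearly four fifths of the per-frame time, so any further speed-up would have to come mainly from the detector.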


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, J.; Suh, Y.; Lee, J.; Chae, H.; Ahn, H.; Chung, Y.; Park, D. EmbeddedPigCount: Pig Counting with Video Object Detection and Tracking on an Embedded Board. Sensors 2022, 22, 2689. https://doi.org/10.3390/s22072689