Abstract

This work determines whether hyperspectral imaging is suitable for discriminating ore from waste at the point of excavation. A prototype scanning system was developed for this study. This system combined hyperspectral cameras and a three-dimensional LiDAR, mounted on a pan-tilt head, with a positioning system that determined the spatial location of the resultant hyperspectral data cube. This system was used to obtain scans both in the laboratory and at a gold mine in Western Australia. Samples from this mine site were assayed to determine their gold concentration and were scanned using the hyperspectral apparatus in the laboratory to create a library of labelled reference spectra. This library was used as (i) the reference set for spectral angle mapper classification and (ii) a training set for a convolutional neural network classifier. Both classification approaches were found to classify ore and waste on the scanned face with good accuracy when compared to the mine geological model. Finer discrimination of ore grade was limited by the quality and quantity of the training data. The work provides evidence that an excavator-mounted hyperspectral system could be used to guide a human or autonomous excavator operator to selectively dig ore and minimise dilution.

1. Introduction

Metal mining operations benefit from precise knowledge of ore grade at the point of excavation to minimise waste dilution and maximise ore recovery. Presently, mine models are constructed from drill core sampling and analysis, which requires significant expertise and provides information only at discrete sample points. Precise information about ore grade between drill locations, or after blasting has disturbed the material, is not available until the ore has been processed. If grade information were known in advance, ore could be recovered more efficiently. This paper explores the application of hyperspectral imaging to provide precise grade information at the time of excavation.

Hyperspectral imaging is the acquisition of contiguous spectral information for each pixel in a 2D image [1]. Early work by Hunt [2] described the electronic and vibrational processes that create absorption features at certain wavelengths in the reflectance or emittance spectra of minerals. This information acts as a spectral signature; minerals at the surface of the scanned objects can be identified by the position, depth, width, and asymmetry of spectral absorption features [3]. Many methods have been developed for the characterisation of minerals from their spectral signatures, summarised by Asadzadeh and de Souza Filho [4]. They describe two broad categories of spectral processing methods: (i) knowledge-based, i.e., using knowledge of the characteristic absorption features to identify minerals directly from their spectra; or (ii) data-based, i.e., using reference data to classify target data, such as by direct comparison of spectral similarity to a reference library of labelled spectra. This is a useful distinction of the approaches to spectral analysis; the development of robust data-based methods reduces the requirement for specialised knowledge of the spectral absorption features relevant to the mineral characterisation of an individual mine site.

Much of the interest in hyperspectral imaging for geological applications has been in the field of remote sensing, as summarised by van der Meer et al. [5] and, more recently, Peyghambari and Zhang [6]. Dedicated hyperspectral sensing platforms and facilities such as airborne sensors (e.g., AVIRIS [7]) and spaceborne sensors (e.g., Hyperion [8] and EnMAP [9]) provide a wealth of data for researchers. However, greater availability of hyperspectral sensors has led to ground-based applications, which enables the use of hyperspectral imaging within mining operations. Kurz et al. [10] demonstrated the use of a terrestrial short-wavelength infrared (SWIR) sensor and LiDAR to classify and identify minerals in aboveground and subsurface applications. This is an early example of LiDAR and hyperspectral data fusion applied to face mapping. Okyay et al. [11] demonstrated end-member-based classification on a ground-based scan of a road cut, using both laboratory spectra (from samples obtained in the field during scanning) and spectra in the field image.

A number of authors have considered fusion of hyperspectral and LiDAR data to address various problems that include registering hyperspectral data to LiDAR data to improve geological interpretation and accuracy [12,13,14,15,16,17,18,19], illumination correction [20,21], assessing slope stability [22], and to localise data in airborne applications [23,24]. In this paper, LiDAR in combination with GNSS is used to spatially locate hyperspectral pixels within the surveyed scene.

The use of two hyperspectral cameras to cover both visible-near-infrared (VNIR) and SWIR spectra brings the challenge of image fusion in panoramic push-broom operation. Okyay and Khan [25] co-registered images from VNIR and SWIR cameras using the scale-invariant feature transform algorithm, also developing a correction for VNIR spectral information that removes discontinuities between the spectra of each camera. Krupnik and Khan [26], in addition to a review of terrestrial applications, presented three case studies of VNIR and SWIR imaging in mine environments, with spectral feature mapping used to identify minerals. Barton et al. [27] presented a case study of field mapping and classification using spectral angle mapper (SAM) classification with both ground-based and unmanned aerial vehicle (UAV) sensing. In particular, they explored the potential to integrate hyperspectral imaging into existing mining operations and to interface with common geological software.

Many of these field applications have focused on mineral identification. Additional benefit can be achieved by relating spectral features to ore grade. Dalm et al. [28] used principal component analysis to discriminate sub-economic-grade copper in SWIR images of samples from a copper mine. Dalm et al. [29] analysed near-infrared and SWIR drill core samples of an epithermal gold–silver deposit and attempted to segment the set into ore and waste, succeeding in segmenting a population of waste samples. Both of these studies successfully identified a subset of the waste or sub-economic grade samples, but failed to determine a metric that correctly classified both ore and waste. Lypaczewski et al. [30] analysed core samples from a gold deposit and identified spectral features correlating with gold content. These spectral analysis methods are knowledge-based methods, requiring expertise in spectral feature analysis and a good understanding of the regional geology.

Recent developments in machine learning for hyperspectral imaging classification (summarised in [31]) present an opportunity to apply data-based techniques for ore/waste discrimination, without the manual work of identifying spectral features. A promising candidate method is based on convolutional neural networks (CNNs), and there is a history of using CNNs to classify hyperspectral data in remote sensing applications [32,33,34,35,36,37,38,39]. This approach is generally recognised to perform well, but there have been few applications in ground-based hyperspectral sensing or geological applications. Notably, Windrim et al. [40] applied transfer learning to pretrain a CNN on large labelled datasets, then fine-tuned the classifier on a domain-specific dataset, demonstrating classification of a ground-based hyperspectral scan obtained at a mine site. This acknowledges and addresses some of the difficulties of obtaining adequate well-labelled datasets and ground truth information for supervised classification problems.

Job et al. [41] presented a desktop study of a shovel-mounted hyperspectral imaging system for coal or ore sensing. The intent was to leverage the motion of the machine to conduct a spectral survey of the working face during operation. Herein, we take the first steps towards proving this capability in a field deployment within a production gold mine. We evaluate the application of a convolutional neural network, trained on samples obtained from the mine, to discriminate ore and waste at the point of excavation. The performance of the neural network is compared with results from the spectral angle mapper as a competing approach and with the mine site geological model. The results suggest that ore grade discrimination is possible and that an instrument mounted on the excavator could provide real-time information on ore–waste boundaries at the point of excavation. This information could be provided to the excavator operator, or to the planning algorithms of an automation system, to reduce dilution and increase ore recovery.

2. Materials and Methods

2.1. Hardware

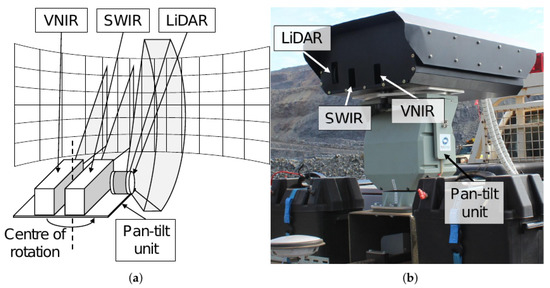

The apparatus to collect data for the study comprised VNIR and SWIR hyperspectral cameras and a three-dimensional light detection and ranging (LiDAR) sensor mounted on a pan-tilt head. Real-time kinematic global navigation satellite system (RTK GNSS) receivers and joint encoders from the pan-tilt head provided information to determine the six-degree-of-freedom (DOF) pose of the camera–LiDAR assembly. The apparatus was mounted to a utility vehicle, allowing transportation around the mine site and a platform from which to perform surveys (see Figure 1).

Figure 1.

(a) Schematic of sensing apparatus showing visible-near-infrared (VNIR) and short-wavelength infrared (SWIR) hyperspectral cameras, LiDAR, and pan-tilt unit. (b) Sensing apparatus mounted on a utility vehicle. The hyperspectral cameras and LiDAR are protected by a sun-shading enclosure, shown sitting atop the pan-tilt unit.

The survey process consisted of three steps: (1) a LiDAR survey of the working face to establish a 3D terrain model; (2) projection of spectral information onto the terrain model as the cameras were swept across the scene; and (3) geolocating the survey relative to the mine map using the platform pose provided by RTK GNSS receivers. The result of this process is a hyperspectral cube with two spatial dimensions and a third spectral dimension. In this study, the spatial dimensions are defined by spherical coordinates, which provide a convenient mapping with the movement of the sensors.

Terrain data were acquired with a Velodyne VLP-16 [42], a 16-beam rotating LiDAR that provides a 360° range in the rotating axis and a 30° field of view (FOV) in the azimuth axis. The LiDAR was mounted sideways on the pan-tilt platform so that the beams rotated in the vertical plane. Three RTK GNSS receivers were used to obtain a six-DOF pose solution that oriented the system with respect to the mine map, so that the final terrain model and the hyperspectral data or classified solution could be integrated with existing mine models.

Hyperspectral data were acquired with a HySpex VNIR-1800 [43], covering 400–1000 nm with 186 bands (3.26 nm spectral resolution), and a HySpex SWIR-384 [44], covering 950–2500 nm with 288 bands (5.45 nm spectral resolution), both from Norsk Elektro Optikk. The VNIR-1800 has a 17° FOV, with a spatial resolution of 0.16 mrad across track and 0.32 mrad along track. The SWIR-384 has a 16° FOV, with a spatial resolution of 0.73 mrad both across and along track. Both cameras are line scanners and operate in a push-broom fashion to obtain a complete two-dimensional scan. The cameras were mounted in stereo configuration on the same rotating platform as the LiDAR. Each line scan was an average of 3–5 sequential exposures. Exposure was adjusted so that no pixels in the scene were saturated. The pan speed was adjusted to ensure a contiguous image at the resolution of the cell grid used to store the hyperspectral data.

Two Labsphere Spectralon® targets with 99% and 50% reflectance were placed in the scanned scene to allow the reflectance values to be computed for materials in the scene.

Each line scan was registered to the terrain as it was acquired. Given the known location of the cameras and LiDAR units on the platform, and the known pan and tilt angles of the rotating platform, the position and orientation of the focal point of the cameras were known relative to the origin of the sensing platform frame of reference. Camera calibration information provided the precise field of view of each spatial camera pixel. Hence, each pixel in the line scan was treated as a vector emanating from the focal point of the camera, and was ray cast to find the intersection with the terrain. The hyperspectral data were stored in a spherical cell grid representation which matched the resolution of the SWIR camera. Data from the VNIR camera were downsampled by computing the mean of spectra that were ray cast to the same cell.
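
A minimal sketch of the cell-grid step is given below. It assumes the ray casting against the LiDAR terrain has already produced a 3D intersection point for every camera pixel, expressed in the sensing-platform frame; the azimuth/elevation parameterisation, the function names and the cell size argument are illustrative assumptions rather than the authors' implementation.

```python
import numpy as np


def spherical_cell_index(points, cell_rad):
    """Map 3D points (N, 3) in the sensor-platform frame to integer
    (azimuth, elevation) cell indices on a grid of cell_rad radians."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    azimuth = np.arctan2(y, x)                 # angle in the horizontal plane
    elevation = np.arctan2(z, np.hypot(x, y))  # angle above the horizontal plane
    az_idx = np.floor(azimuth / cell_rad).astype(int)
    el_idx = np.floor(elevation / cell_rad).astype(int)
    return az_idx, el_idx


def mean_spectra_per_cell(az_idx, el_idx, spectra):
    """Average all spectra (N, B) that were ray cast to the same cell.

    Returns the occupied cells (M, 2) and their mean spectra (M, B)."""
    cells = np.stack([az_idx, el_idx], axis=1)
    unique_cells, inverse = np.unique(cells, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)              # flat index into unique_cells
    sums = np.zeros((len(unique_cells), spectra.shape[1]))
    np.add.at(sums, inverse, spectra)          # unbuffered per-cell accumulation
    counts = np.bincount(inverse, minlength=len(unique_cells))
    return unique_cells, sums / counts[:, None]


# Example: two nearby VNIR pixels share a cell, a third lands elsewhere.
pts = np.array([[25.0, 0.0, 0.0], [25.0, 0.001, 0.0], [25.0, 1.0, 0.0]])
spectra = np.array([[1.0, 2.0], [3.0, 4.0], [10.0, 10.0]])
az, el = spherical_cell_index(pts, cell_rad=0.73e-3)
cells, means = mean_spectra_per_cell(az, el, spectra)
```

Choosing a cell size close to the SWIR angular resolution (about 0.73 mrad) mirrors the choice of matching the grid to the SWIR camera, so that several of the finer VNIR pixels fall into each cell and are averaged.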

2.2. Fieldwork

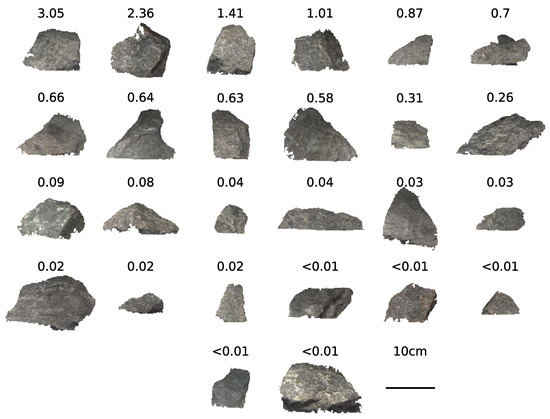

Fieldwork was undertaken in May 2019 at a gold mine in the Goldfields–Esperance region of Western Australia. Prior to the fieldwork, the mine provided a set of ore samples intended to cover a range of ore grades (see Figure 2). These were scanned in a laboratory under broad-spectrum halogen illumination. Scans were obtained at a sample distance of 1 m, using the same system as the field scans except for shorter focal-length lenses. These samples were sent to a laboratory for fire assay to determine gold concentration. The hyperspectral scans of these samples, and the associated concentration data, form the spectral libraries and training sets used for classification, as described in Section 2.4.

Figure 2.

Approximate true colour image of gold ore samples scanned in the laboratory, labelled by assayed gold concentration (ppm). Red, green, and blue channels are the 643 nm, 550 nm, and 464 nm bands from the visible-near-infrared (VNIR) camera.

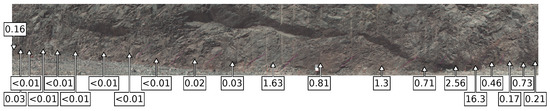

Figure 3 shows an image of a scanned mine face obtained during fieldwork. The hyperspectral survey was obtained with the equipment positioned 25 m from the face, with a 120° pan angle from start to finish. This scan took 5 min to obtain. The resulting scan has 349 pixels across track and 2577 pixels along track, with a resolution of 0.8 mrad. The maximum distance to the scanned face is 60 m at the extents of the scan, and the height of the face captured by the survey varies from 7 to 13 m across the scan. The spatial resolution of each pixel varies from 2 to 5 cm depending on rotation and distance from the scanned face. The root mean square error of registration between the VNIR and SWIR images, determined from 70 manually selected tie points spanning the scene, is approximately 2/3 of a pixel.
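
As a rough consistency check on the quoted pixel sizes, the footprint s of a pixel with angular resolution θ at range R is, under a small-angle approximation and ignoring the obliquity of the face (which enlarges the footprint further):

$$
s \approx R\,\theta, \qquad 25\ \mathrm{m} \times 0.8\ \mathrm{mrad} = 2.0\ \mathrm{cm}, \qquad 60\ \mathrm{m} \times 0.8\ \mathrm{mrad} = 4.8\ \mathrm{cm} \approx 5\ \mathrm{cm}
$$

which is consistent with the stated range of 2 to 5 cm.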

Figure 3.

False-colour (three-channel) image of the scanned site, with approximate sample locations and gold concentration (ppm) obtained from fire assay. Gold content data provided by AngloGold Ashanti. The red, green, and blue channels display the 1000 nm, 550 nm, and 464 nm bands from the data cube, respectively. The 1000 nm channel from the short-wavelength infrared (SWIR) camera was selected to create an image that fuses information from both cameras.

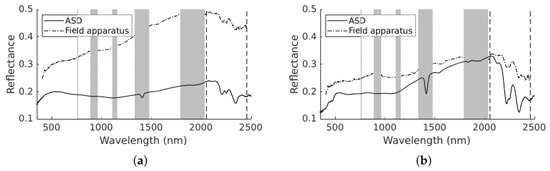

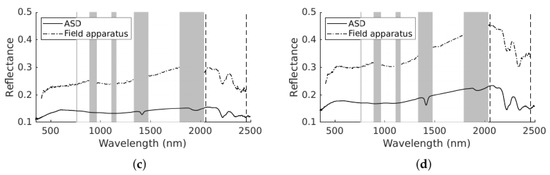

Additional ore samples were obtained from this face after the hyperspectral survey. These samples were assayed to determine gold concentration. The approximate locations and gold concentrations of these samples are also shown in Figure 3. Point spectrometry of the field samples was obtained with an Analytical Spectral Devices, Inc. (ASD) FieldSpec FR in a laboratory on site (see Figure 4 for a comparison of the point spectrometer samples and the field equipment spectra from the scanned face). These ore samples provide reference data to evaluate the classification of the scanned face.

Figure 4.

Sample spectra scanned with the Analytical Spectral Devices, Inc. (ASD) TerraSpec FR and the spectral pixel from the scene scanned by the field apparatus corresponding to the approximate location from which the sample was taken. The grey bands represent regions where there is significant atmospheric absorption and data have been removed. The dashed lines at 2050 and 2460 nm show the bounds of the spectral region that was used for classification. (a) <0.01 ppm. (b) 0.17 ppm. (c) 0.71 ppm. (d) 1.63 ppm.

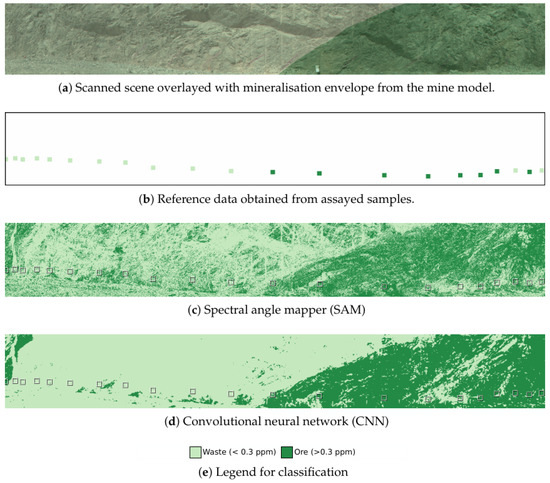

The mine provided a geological model which described the mineralization envelope relative to the current state of the mine. The boundary of the mineralization envelope on the scanned face is shown in Figure 5a. This provides another source of reference data to evaluate the classification performance.

Figure 5.

Reference data and results for the binary classification problem with the waste vs. ore boundary at 0.3 ppm. Reference data show the mine mineralisation envelope boundary and the classes of the assayed samples obtained from the face. Results of the classification for both SAM and the CNN are shown. Classification results are coloured by one of the two grade classifications, as shown in the legend, with a bounding box around the regions labelled in the reference model.

2.3. Data Preprocessing

Each hyperspectral survey was preceded by a dark current estimation, computed as the average of 200 line scans collected with the shutter closed. The camera software subtracted this estimate of background noise from each line scan. The camera calibration files include a non-uniformity matrix that corrects for sensor non-uniformity; this correction was also applied in real time by the camera software. Additional background noise in the SWIR camera, caused by sensor temperature variation, was estimated from the band most affected by atmospheric noise. The across-track brightness gradient in this band was estimated with a first-order polynomial, and the along-track gradient with a second-order polynomial. This estimate of the background noise variation was subtracted from every spectral band of the SWIR camera. This correction did not address illumination variation in the scene due to varying cloud cover or shadowing effects.
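
The SWIR background correction can be sketched as follows. The text does not state how the across-track and along-track fits are combined, so this sketch assumes they are additive (their sum, minus the overall band mean so that the offset is not counted twice); the function names and the (along-track, across-track, bands) array layout are also assumptions.

```python
import numpy as np


def estimate_background(noise_band):
    """Smooth additive background for one (along, across) band image.

    Assumption: a linear across-track trend and a quadratic along-track
    trend, combined additively around the band mean."""
    n_along, n_across = noise_band.shape
    across = np.arange(n_across)
    along = np.arange(n_along)
    across_fit = np.polyval(np.polyfit(across, noise_band.mean(axis=0), 1), across)
    along_fit = np.polyval(np.polyfit(along, noise_band.mean(axis=1), 2), along)
    # Both fitted profiles contain the band mean, so subtract it once.
    return along_fit[:, None] + across_fit[None, :] - noise_band.mean()


def subtract_background(cube, noise_band_index):
    """Subtract the background estimated from one band from every band
    of an (along, across, bands) SWIR cube."""
    background = estimate_background(cube[:, :, noise_band_index])
    return cube - background[:, :, None]
```

If the true background is exactly a linear across-track plus quadratic along-track surface, this estimate recovers it exactly; real sensor drift will only be approximated.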

The empirical line method [45] was used to convert the image to relative reflectance. For each spectral band, a gain and offset were computed by fitting a first-order polynomial to the at-sensor radiance and the known reflectance of the spectral targets. In the case that the 99% target was saturated, only the 50% target was used, with the assumption that the fit passes through the origin. Data within the atmospheric absorption bands 760–770 nm, 890–965 nm, 1110–1160 nm, 1355–1480 nm, and 1790–2035 nm, were removed. Bands at wavelengths longer than 2460 nm were removed due to decreased illumination and sensor efficiency. No further spectral or spatial smoothing or denoising was applied.
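
A per-band empirical line fit, including the single-panel fallback through the origin, might look like the sketch below. The function name and argument layout are hypothetical; panel radiances are assumed to be averaged over each Spectralon target, and the caller is assumed to drop a saturated panel before calling.

```python
import numpy as np


def empirical_line(radiance, panel_radiance, panel_reflectance):
    """Convert at-sensor values to relative reflectance, band by band.

    radiance:          (..., B) values to convert
    panel_radiance:    (K, B) mean at-sensor value over each usable panel
    panel_reflectance: (K,) known reflectance, e.g. [0.99, 0.50]
    K >= 2: least-squares gain and offset per band.
    K == 1: line forced through the origin (offset = 0)."""
    L = np.atleast_2d(np.asarray(panel_radiance, dtype=float))  # (K, B)
    rho = np.asarray(panel_reflectance, dtype=float)            # (K,)
    if rho.size == 1:
        gain = rho[0] / L[0]
        offset = np.zeros_like(gain)
    else:
        x_mean = L.mean(axis=0)
        y_mean = rho.mean()
        dx = L - x_mean
        # Ordinary least-squares slope per band: cov(x, y) / var(x).
        gain = (dx * (rho - y_mean)[:, None]).sum(axis=0) / (dx ** 2).sum(axis=0)
        offset = y_mean - gain * x_mean
    return np.asarray(radiance, dtype=float) * gain + offset
```

With two panels the fitted line passes exactly through both panel points; with only the 50% panel, the gain is simply 0.5 divided by the panel value in each band.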

The cameras provided a strong signal in the region of interest, as shown by the signal-to-noise ratio (SNR) of 195:1 for the field image and 185:1 for the laboratory scanned samples. The SNR of the images was established by dividing the mean by the standard deviation of the reflectance in a spatially uniform part of the scan [11]. This was performed using the 50% reflectance panel, and averaged over the bands used for classification, 2050–2460 nm. This method does not address uncertainty arising from the background noise correction, nor does it account for illumination variation over the scene, so it does not capture the full uncertainty of the derived reflectance spectra.
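
The SNR estimate described above reduces to a few lines. This sketch assumes the panel region is given as row and column slices, uses the sample standard deviation (ddof=1), and averages the per-band ratios; none of these details is specified in the text, and the function name is hypothetical.

```python
import numpy as np


def panel_snr(cube, wavelengths, rows, cols, band_range=(2050.0, 2460.0)):
    """Mean / standard deviation of reflectance over a uniform region
    (e.g. the 50% panel), averaged over bands inside band_range (nm).

    cube: (rows, cols, bands); rows, cols: slices selecting the panel."""
    region = cube[rows, cols, :].reshape(-1, cube.shape[-1])
    in_range = (wavelengths >= band_range[0]) & (wavelengths <= band_range[1])
    mean = region[:, in_range].mean(axis=0)
    std = region[:, in_range].std(axis=0, ddof=1)
    return float(np.mean(mean / std))
```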

2.4. Classification

Two classification methods are compared: the spectral angle mapper (SAM), and a convolutional neural network (CNN). The laboratory scans of the ore samples (as shown in Figure 2) were used as reference and training data for both methods, with each pixel of the 2D image labelled with a class determined by the gold concentration of the ore sample. As the number of ore samples is small and the samples do not contiguously span the full range of possible gold concentrations, the labels were grouped into classes defined by ranges of gold concentration. For the first problem, binary classification, each input was labelled ore or waste, depending on whether the gold concentration was above or below 0.3 ppm, the threshold used by the geological model. A second classification attempt was performed to test the sensitivity of the classifiers, with the data split into four classes: less than 0.01 ppm, 0.01–0.3 ppm, 0.3–1.0 ppm, and greater than 1.0 ppm.
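
The grouping of assay values into classes can be sketched with `np.digitize`. Treating a value exactly at a threshold as belonging to the higher class is an assumption, as is representing a below-detection assay as 0.0; the constant and function names are hypothetical.

```python
import numpy as np

# Class boundaries in ppm Au, taken from the text.
BINARY_EDGES = [0.3]                 # 0 = waste, 1 = ore
FOUR_CLASS_EDGES = [0.01, 0.3, 1.0]  # 0: <0.01, 1: 0.01-0.3, 2: 0.3-1.0, 3: >1.0


def grade_class(au_ppm, edges):
    """Class index for each assay value; a value equal to an edge
    falls in the higher class (np.digitize with right=False)."""
    return np.digitize(np.asarray(au_ppm, dtype=float), edges)


def pixel_labels(n_pixels, sample_au_ppm, edges):
    """Every pixel of one laboratory sample inherits the sample's class."""
    return np.full(n_pixels, grade_class(sample_au_ppm, edges), dtype=int)
```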

SAM [46] is a supervised classification method that treats each spectral signature as an n-dimensional vector, and computes the angle between each pixel in a scene and a library of reference spectra:

$$
\alpha = \cos^{-1}\left(\frac{\sum_{i=1}^{n} t_i r_i}{\left(\sum_{i=1}^{n} t_i^{2}\right)^{1/2}\left(\sum_{i=1}^{n} r_i^{2}\right)^{1/2}}\right)
$$

where α is the spectral angle, t is a spectral pixel in the image, r is a reference pixel from the library, t_i and r_i are their values in band i, and n is the number of spectral bands. The smaller the value of α, the more similar the spectra. To classify a pixel, the label of the closest reference spectrum is selected. Barton et al. [27] found that classification performance was improved when using samples obtained from the site, rather than from generic spectral mineral libraries. There are a combined 170,000 spectral pixels from the two-dimensional laboratory scans of the 26 ore samples, which is an unwieldy number of reference spectra against which to compare each spectral pixel. As a result, the scans were sub-sampled to generate the library of reference spectra: 50 pixels were randomly selected from each ore sample, and each was labelled with the corresponding gold concentration class and added to the spectral library.
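
A vectorised sketch of SAM classification with a sub-sampled library follows. It computes the spectral angle between each pixel and each reference as the arccosine of their normalised dot product; the function names, the fixed random seed and the clipping guard are illustrative assumptions, not the authors' code.

```python
import numpy as np


def spectral_angle(t, r):
    """Angles (radians) between pixels t (N, B) and references r (M, B): (N, M)."""
    t_unit = t / np.linalg.norm(t, axis=1, keepdims=True)
    r_unit = r / np.linalg.norm(r, axis=1, keepdims=True)
    cos = np.clip(t_unit @ r_unit.T, -1.0, 1.0)  # guard against rounding past +/-1
    return np.arccos(cos)


def build_library(sample_spectra, sample_labels, per_sample=50, seed=0):
    """Randomly draw up to per_sample pixels (without replacement) per sample."""
    rng = np.random.default_rng(seed)
    refs, labels = [], []
    for spectra, label in zip(sample_spectra, sample_labels):
        k = min(per_sample, len(spectra))
        idx = rng.choice(len(spectra), size=k, replace=False)
        refs.append(spectra[idx])
        labels.extend([label] * k)
    return np.vstack(refs), np.asarray(labels)


def sam_classify(pixels, library, library_labels):
    """Assign each pixel the label of the reference with the smallest angle."""
    return library_labels[np.argmin(spectral_angle(pixels, library), axis=1)]
```

Because the angle depends only on the direction of each spectrum, scaling a pixel by a constant brightness factor leaves its classification unchanged, which is one reason SAM is often used under uneven illumination.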

Convolutional neural networks are a series of learned filters which are convolved over the spectral and potentially spatial dimensions of the data to perform classification. Joint spatiospectral models, such as the architecture developed by Ben Hamida et al. [36], operate on a small spatial neighbourhood of each pixel to classify the central pixel. This approach applies 3D convolution filters that gradually reduce the 3D input to a 1D vector. The output of the CNN is a vector containing the probability of each class, and the class with the highest probability is chosen as the label. The six-layer network architecture from [36] was used with a 5 × 5 spatial input patch, where the output class was used to label the central pixel of each patch. This assumes that nearby pixels have similar ore grade and that there is value in preserving local spatial information. The stochastic gradient descent (SGD) solver was used for training, with an initial learning rate of 0.002 and momentum of 0.9. All other hyperparameters were set as in [36]. The model was implemented and trained in Python with TensorFlow 2.5 [47].

One ore sample in each classification band was set aside for the validation set. The remaining ore samples were used to generate the training set. Each hyperspectral image was decomposed into overlapping 5 × 5 spatial patches (containing the full spectral data for that spatial region) as the input to the network, and the corresponding gold concentration class used as the output label. The CNN was trained with a batch size of 512 over 800 epochs. To avoid overfitting, the solution with the highest validation accuracy was used to classify the field scan.
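
The validation hold-out and the decomposition into overlapping 5 × 5 patches can be sketched with NumPy as follows. The random seed, the function names, and the choice to skip border pixels that lack a full 5 × 5 neighbourhood (rather than padding) are assumptions; the text does not say how image borders were handled.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def holdout_one_per_class(sample_classes, seed):
    """Pick one sample index per class for validation; the rest train."""
    rng = np.random.default_rng(seed)
    sample_classes = np.asarray(sample_classes)
    val = [int(rng.choice(np.flatnonzero(sample_classes == c)))
           for c in np.unique(sample_classes)]
    train = [i for i in range(len(sample_classes)) if i not in val]
    return train, val


def extract_patches(cube, size=5):
    """All overlapping size x size patches of a (rows, cols, bands) cube.

    Returns (n_patches, size, size, bands). With w = cols - size + 1,
    patch k is centred on pixel (k // w + size // 2, k % w + size // 2)."""
    windows = sliding_window_view(cube, (size, size), axis=(0, 1))
    # windows: (rows - size + 1, cols - size + 1, bands, size, size)
    windows = np.moveaxis(windows, 2, -1)  # -> (..., size, size, bands)
    return windows.reshape(-1, size, size, cube.shape[2])
```

Each patch extracted from a laboratory sample would then be paired with that sample's class label as the training target.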

Both classification methods were performed on the spectral data in the 2050–2460 nm wavelength range, as this band contains the spectral absorption features that were not obscured by atmospheric absorption bands, as seen in Figure 4.
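
The spectral subsetting can be expressed as wavelength masks. This sketch uses the absorption windows listed in Section 2.3 and the 2050–2460 nm classification range; treating the window limits as inclusive is an assumption, and the names are hypothetical.

```python
import numpy as np

# Atmospheric absorption windows removed during preprocessing (nm).
ATMOSPHERIC_BANDS_NM = [(760, 770), (890, 965), (1110, 1160), (1355, 1480), (1790, 2035)]
LONG_WAVELENGTH_CUTOFF_NM = 2460      # longer wavelengths were discarded
CLASSIFICATION_RANGE_NM = (2050, 2460)


def keep_mask(wavelengths):
    """True for bands that survive preprocessing."""
    w = np.asarray(wavelengths, dtype=float)
    keep = w <= LONG_WAVELENGTH_CUTOFF_NM
    for lo, hi in ATMOSPHERIC_BANDS_NM:
        keep &= ~((w >= lo) & (w <= hi))
    return keep


def classification_mask(wavelengths):
    """True for retained bands inside the classification range."""
    w = np.asarray(wavelengths, dtype=float)
    lo, hi = CLASSIFICATION_RANGE_NM
    return keep_mask(w) & (w >= lo) & (w <= hi)
```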

3. Results

The ability to discriminate ore from waste was tested by performing a binary classification of the scanned face. All training samples with a gold concentration below 0.3 ppm were labelled as waste, and anything above this as ore. This is the same boundary as the mineralisation envelope used by the geological model. The terrain model of the scanned scene was converted to the mine map coordinates, allowing the mineralisation envelope to be overlaid on the scan, as seen in Figure 5a. Figure 5b shows the reference data constructed from the assayed samples taken from the face. The approximate location of the sample and a window of ten pixels in each direction were labelled as ore or waste by the same 0.3 ppm cut-off. Due to the uncertainty in the exact location and the uniformity of the gold concentration in the local area of each sample, this is better referred to as reference data than as “ground truth”. There are discrepancies between the two sources of reference data. Two samples from the assayed reference data at the right of the scene are classified as waste, despite having been obtained from the mineralised region of the geological model. This likely represents actual variation in gold content not captured by the geological model.
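
The reference image built from the assayed face samples could be constructed as below, marking a square of ten pixels in each direction around each approximate sample location. The pixel coordinates, the UNLABELLED sentinel and the rule that a later sample overwrites an earlier one where windows overlap are hypothetical choices.

```python
import numpy as np

UNLABELLED = -1


def reference_label_image(shape, sample_pixels, sample_ppm, cutoff=0.3, half_width=10):
    """Label a (2 * half_width + 1)-pixel square around each sample as
    ore (1) or waste (0); all other pixels stay UNLABELLED."""
    ref = np.full(shape, UNLABELLED, dtype=int)
    for (r, c), ppm in zip(sample_pixels, sample_ppm):
        r0, r1 = max(r - half_width, 0), min(r + half_width + 1, shape[0])
        c0, c1 = max(c - half_width, 0), min(c + half_width + 1, shape[1])
        ref[r0:r1, c0:c1] = int(ppm >= cutoff)  # later samples overwrite overlaps
    return ref
```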

Both SAM and CNN classification results (see Figure 5c,d) have a diagonal ore/waste boundary in the centre of the scanned scene. This is near the boundary that is present in the geological model. The significant difference between the SAM and CNN classified images is the spatial consistency. In the CNN classification, nearby pixels often have the same class. This is the expected outcome of classifying each pixel based on an overlapping patch of pixels, as the patches of adjacent pixels contain overlapping information. The per-class and overall accuracy relative to the reference assay data are given in Table 1. Both methods misclassify some pixels, labelling ore within the waste region and waste within the ore region in disagreement with the reference data. The CNN outperforms SAM for both classes and in overall accuracy, which is expected given the reduced noise in the classification.
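
Per-class and overall accuracy against sparse reference data can be computed only over the labelled pixels. The sketch below assumes per-class accuracy means the fraction of reference pixels of a class that were predicted as that class (producer's accuracy); the text does not define it explicitly, and the function name and -1 sentinel are assumptions.

```python
import numpy as np


def accuracy_vs_reference(pred, ref, n_classes, unlabelled=-1):
    """Per-class accuracy and overall accuracy (OA) over labelled pixels only.

    Per-class accuracy for class k: fraction of reference pixels of class k
    that were predicted as k (NaN if the class is absent from the reference)."""
    labelled = ref != unlabelled
    p, r = pred[labelled], ref[labelled]
    per_class = np.array([np.mean(p[r == k] == k) if np.any(r == k) else np.nan
                          for k in range(n_classes)])
    overall = float(np.mean(p == r))
    return per_class, overall
```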

Table 1.

Per-class accuracy and overall accuracy (OA) of classification relative to reference data for the binary classification problem.

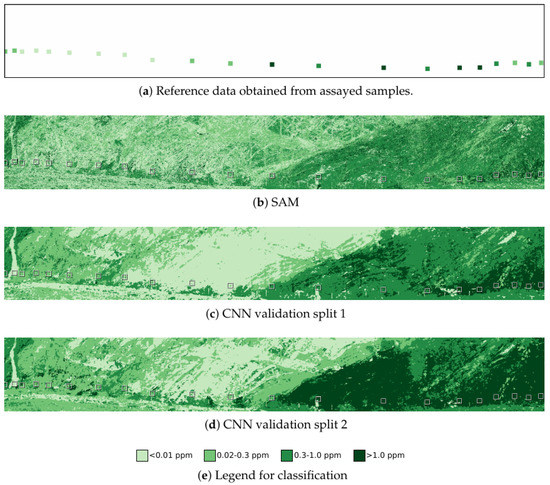

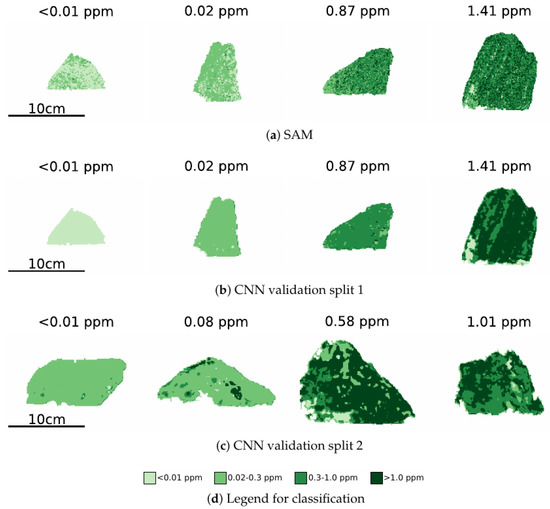

Classification into four ore grade bands was used to test the sensitivity to lower and higher grades of ore. One laboratory sample from each grade band was randomly selected as a validation sample, and two different training/validation splits of the laboratory dataset were evaluated. In addition to the 0.3 ppm boundary of the mineralisation model, this scheme further divided the waste category at 0.01 ppm, the detection limit of gold in the assay results, and the ore category at 1.0 ppm, separating low-grade from high-grade ore. These are arbitrary selections which could be varied in response to, for example, cut-offs for economically viable excavation. Figure 6 shows the reference data of the assayed samples, now discretised into these four classes, along with the SAM and CNN classification results. Table 2 shows the per-class and overall accuracy relative to the reference data. Accuracy relative to the assayed samples is low, although the feature corresponding to the mineralisation envelope boundary is still visible. Furthermore, the high-grade and low-grade classifications are sensitive to how the laboratory samples are split into training and validation sets, as seen in Figure 6c,d and in the per-class accuracy. In the second training split, significantly more of the scanned scene is classified as high grade.

Figure 6.

Reference data and results for the four grade classification problem. Reference data show the classes of the assayed samples obtained from the face. Results of the classification for both SAM and the CNN are shown. Classification results are coloured by one of the four grade classifications, as shown in the legend, with a bounding box around the regions labelled in the reference model.

Table 2.

Per-class accuracy and overall accuracy (OA) of classification relative to reference data for the four grade classification problem. CNN 1 and CNN 2 refer to the two validation splits.

Similar sensitivity to the training/validation split is seen in the classification performance on the validation datasets (see Figure 7). For the first data split (see Figure 7b), each sample is predominantly classified with the correct label. However, for the second data split (see Figure 7c), the samples from the two waste classes are largely assigned the same class, and a larger proportion of the low-grade ore sample than of the high-grade sample is classified as high-grade ore. This suggests a large amount of intra-class variability, and also reflects the small number of samples collected prior to fieldwork.

Figure 7.

Four grade classification on validation sets. Classification results are coloured by one of the four grade classifications, as shown in the legend.

4. Discussion

Using hyperspectral imaging to identify ore grade relies on several assumptions: (i) there is a relationship between ore grade and the spectral features of the ore; (ii) these features are detectable by the equipment and have been captured in the data; and (iii) the training data and labels are a representative sample of both the possible variation and the scene being classified.

Relationships between spectral features and gold mineralisation vary from deposit to deposit. Gold itself is not visible at the concentrations present in the samples or the scanned face, so the spectral features of the mineralised rock are being observed. A link between the ore grade and the geological characteristics of the rock is assumed based on the geology of the mine site deposit.

The point spectrometer scans shown in Figure 4 show that the majority of spectral features are in the SWIR region. While the features in the 1400 nm and 1900 nm region are in the atmospheric absorption bands, the features above 2000 nm can be seen in the field spectra, though at lower spectral resolution than the point spectrometry obtained in the laboratory. Above 2400 nm, the features in the field spectra are degraded by noise. This could be addressed with better integration time settings to favour high dynamic range in the >2000 nm region, at the expense of saturation in the more sensitive and better-illuminated bands. Nevertheless, the deployed system under natural solar illumination is able to capture similar features to the point spectrometer under laboratory conditions.

There are two factors to consider in assessing whether the training data provide sufficient coverage of the test space: first, whether the mineralogical content of the samples reflects that in the field; and second, whether the imaging conditions in the laboratory are sufficiently similar to field conditions. There were 26 discrete samples obtained and scanned for the training set, ranging from below the detectable gold concentration to 3.05 ppm. Only four samples were over 1 ppm (labelled as high grade for the four grade classification task), while field samples were found to have a gold concentration of up to 16 ppm. High-grade ore is therefore under-represented in the training samples.

Comparing the conditions of the laboratory and field scans, the samples in the laboratory were clean, dry samples, scanned under broad-spectrum artificial illumination. In the field, the mine face had areas of moisture, and post-blast excavation areas are likely to be dusty. Shadowing in rocky terrain and varying illumination due to cloud cover were confounding effects that were not corrected. Despite these impacts, the CNN classification algorithm was not obviously sensitive to orientation or shadowing in the field scan. Some misclassification of ore as waste occurred in pixels that are darker relative to their surrounds. It was observed in the field that the mine face was wet in this region. Adding samples to the training set that were washed prior to imaging could make the classifier more robust to the effect of moisture in the field.

The four grade classification problem had some predictive power on the validation dataset, although this was sensitive to the validation data split. The classification of the field scan could not reliably identify the finer split of ore grade. This suggests that while a mineralogical relationship may exist that reflects ore grade, it is not adequately captured in the training dataset. Fire assay has a lower resolution than hyperspectral imaging; while the cameras were able to obtain spectra at the millimetre scale, the assay process returned a single gold concentration for a rock sample. Labelling each spectral pixel in the sample with the same uniform gold concentration may be missing real variation in gold concentration or mineralisation. Mineralisation characteristics, and the relationship between mineralogy and ore grade, may also vary throughout the site, either with depth or location. Assembling a training set that is geolocated would allow separate classifiers to be trained on specific regions in order to detect local variations in mineralisation.

5. Conclusions

Both classification algorithms tested distinguished ore from waste to the same level as the mine model, without the need for spectral feature extraction, operating directly on the reflectance spectra themselves. This is a novel result for a field application, as prior attempts to discriminate ore from waste in gold samples have largely used core scans or samples imaged under laboratory conditions. Attempts to discriminate higher and lower ore grades at finer resolution showed low accuracy relative to the reference gold concentrations of samples collected from the scanned mine face. A major limitation of this study is the small number of ore samples available as training data. A larger dataset would better establish the limits of ore-grade discrimination with this apparatus and scanning method. The results nevertheless suggest that hyperspectral data can be used to discriminate ore from waste at the point of excavation. While further investigation is warranted, on the evidence presented, the answer to the question that titles this paper is affirmative for gold, with a high prospect of extending, mutatis mutandis, to other minerals.

Author Contributions

Conceptualization, A.T.J. and P.R.M.; methodology, A.T.J., M.L.E. and P.R.M.; software, K.A.C., K.J.A. and M.L.E.; validation, K.A.C.; formal analysis, K.A.C. and K.J.A.; investigation, M.L.E., K.A.C. and A.T.J.; resources, A.T.J.; data curation, K.A.C.; writing—original draft preparation, K.A.C.; writing—review and editing, M.L.E., K.J.A., A.T.J. and P.R.M.; visualization, K.A.C.; supervision, A.T.J., P.R.M. and K.J.A.; project administration, A.T.J.; funding acquisition, A.T.J. and P.R.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Mineral Research Institute of Western Australia (MRIWA) grant M0518.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data generated in this study are openly available in UQ eSpace at https://doi.org/10.48610/42d4d33 (accessed on 24 February 2022).

Conflicts of Interest

The authors A.T.J. and M.L.E. are employees of Plotlogic Pty Ltd. The authors K.A.C., K.J.A. and P.R.M. declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CNN | Convolutional neural network |
| DOF | Degree of freedom |
| FOV | Field of view |
| LiDAR | Light detection and ranging |
| OA | Overall accuracy |
| RTK GNSS | Real-time kinematic global navigation satellite system |
| SAM | Spectral angle mapper |
| SWIR | Short-wavelength infrared |
| VNIR | Visible-near-infrared |

References

- Goetz, A.F. Three decades of hyperspectral remote sensing of the Earth: A personal view. Remote Sens. Environ. 2009, 113 (Suppl. 1), S5–S16.

- Hunt, G.R. Spectral Signature of Particulate Minerals in the Visible and Near Infrared. Geophysics 1977, 42, 501–513.

- Hecker, C.; van Ruitenbeek, F.J.A.; van der Werff, H.M.A.; Bakker, W.H.; Hewson, R.D.; van der Meer, F.D. Spectral Absorption Feature Analysis for Finding Ore. IEEE Geosci. Remote Sens. Mag. 2019, 7, 51–71.

- Asadzadeh, S.; de Souza Filho, C.R. A review on spectral processing methods for geological remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2016, 47, 69–90.

- Van der Meer, F.D.; van der Werff, H.M.; van Ruitenbeek, F.J.; Hecker, C.A.; Bakker, W.H.; Noomen, M.F.; van der Meijde, M.; Carranza, E.J.M.; de Smeth, J.B.; Woldai, T. Multi- and hyperspectral geologic remote sensing: A review. Int. J. Appl. Earth Obs. Geoinf. 2012, 14, 112–128.

- Peyghambari, S.; Zhang, Y. Hyperspectral remote sensing in lithological mapping, mineral exploration, and environmental geology: An updated review. J. Appl. Remote Sens. 2021, 15, 1–25.

- Vane, G.; Green, R.O.; Chrien, T.G.; Enmark, H.T.; Hansen, E.G.; Porter, W.M. The airborne visible/infrared imaging spectrometer (AVIRIS). Remote Sens. Environ. 1993, 44, 127–143.

- Pearlman, J.S.; Barry, P.S.; Segal, C.C.; Shepanski, J.; Beiso, D.; Carman, S.L. Hyperion, a Space-Based Imaging Spectrometer. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1160–1173.

- Guanter, L.; Kaufmann, H.; Segl, K.; Foerster, S.; Rogass, C.; Chabrillat, S.; Kuester, T.; Hollstein, A.; Rossner, G.; Chlebek, C.; et al. The EnMAP spaceborne imaging spectroscopy mission for earth observation. Remote Sens. 2015, 7, 8830–8857.

- Kurz, T.H.; Buckley, S.J.; Howell, J.A. Close Range Hyperspectral Imaging Integrated With Terrestrial LiDAR Scanning Applied To Rock Characterisation At Centimetre Scale. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B5, 417–422.

- Okyay, Ü.; Khan, S.D.; Lakshmikantha, M.R.; Sarmiento, S. Ground-based hyperspectral image analysis of the lower Mississippian (Osagean) reeds spring formation rocks in southwestern Missouri. Remote Sens. 2016, 8, 1018.

- Kurz, T.H.; Buckley, S.J.; Howell, J.A.; Schneider, D. Integration of panoramic hyperspectral imaging with terrestrial lidar data. Photogramm. Rec. 2011, 26, 212–228.

- Buckley, S.J.; Kurz, T.H.; Howell, J.A.; Schneider, D. Terrestrial lidar and hyperspectral data fusion products for geological outcrop analysis. Comput. Geosci. 2013, 54, 249–258.

- Kurz, T.H.; Buckley, S.J.; Howell, J.A. Close-range hyperspectral imaging for geological field studies: Workflow and methods. Int. J. Remote Sens. 2013, 34, 1798–1822.

- Murphy, R.J.; Taylor, Z.; Schneider, S.; Nieto, J. Mapping clay minerals in an open-pit mine using hyperspectral and LiDAR data. Eur. J. Remote Sens. 2015, 48, 511–526.

- Denk, M.; Gläßer, C.; Kurz, T.H.; Buckley, S.J.; Drissen, P. Mapping of iron and steelwork by-products using close range hyperspectral imaging: A case study in Thuringia, Germany. Eur. J. Remote Sens. 2015, 48, 489–509.

- Krupnik, D.; Khan, S.; Okyay, U.; Hartzell, P.; Zhou, H.W. Study of Upper Albian rudist buildups in the Edwards Formation using ground-based hyperspectral imaging and terrestrial laser scanning. Sediment. Geol. 2016, 345, 154–167.

- Kirsch, M.; Lorenz, S.; Zimmermann, R.; Tusa, L.; Möckel, R.; Hödl, P.; Booysen, R.; Khodadadzadeh, M.; Gloaguen, R. Integration of terrestrial and drone-borne hyperspectral and photogrammetric sensing methods for exploration mapping and mining monitoring. Remote Sens. 2018, 10, 1366.

- Thiele, S.T.; Lorenz, S.; Kirsch, M.; Cecilia Contreras Acosta, I.; Tusa, L.; Herrmann, E.; Möckel, R.; Gloaguen, R. Multi-scale, multi-sensor data integration for automated 3-D geological mapping. Ore Geol. Rev. 2021, 136, 104252.

- Hartzell, P.; Glennie, C.; Khan, S. Terrestrial hyperspectral image shadow restoration through lidar fusion. Remote Sens. 2017, 9, 421.

- Brell, M.; Segl, K.; Guanter, L.; Bookhagen, B. Hyperspectral and Lidar Intensity Data Fusion: A Framework for the Rigorous Correction of Illumination, Anisotropic Effects, and Cross Calibration. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2799–2810.

- He, J.; Barton, I. Hyperspectral remote sensing for detecting geotechnical problems at Ray mine. Eng. Geol. 2021, 292, 106261.

- Brell, M.; Rogass, C.; Segl, K.; Bookhagen, B.; Guanter, L. Improving Sensor Fusion: A Parametric Method for the Geometric Coalignment of Airborne Hyperspectral and Lidar Data. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3460–3474.

- Brell, M.; Segl, K.; Guanter, L.; Bookhagen, B. 3D hyperspectral point cloud generation: Fusing airborne laser scanning and hyperspectral imaging sensors for improved object-based information extraction. ISPRS J. Photogramm. Remote Sens. 2019, 149, 200–214.

- Okyay, U.; Khan, S.D. Spatial co-registration and spectral concatenation of panoramic ground-based hyperspectral images. Photogramm. Eng. Remote Sens. 2018, 84, 781–790.

- Krupnik, D.; Khan, S. Close-range, ground-based hyperspectral imaging for mining applications at various scales: Review and case studies. Earth-Sci. Rev. 2019, 198, 102952.

- Barton, I.F.; Gabriel, M.J.; Lyons-Baral, J.; Barton, M.D.; Duplessis, L.; Roberts, C. Extending geometallurgy to the mine scale with hyperspectral imaging: A pilot study using drone- and ground-based scanning. Min. Metall. Explor. 2021, 38, 799–818.

- Dalm, M.; Buxton, M.W.; van Ruitenbeek, F.J. Discriminating ore and waste in a porphyry copper deposit using short-wavelength infrared (SWIR) hyperspectral imagery. Min. Eng. 2017, 105, 10–18.

- Dalm, M.; Buxton, M.W.; van Ruitenbeek, F.J. Ore–Waste Discrimination in Epithermal Deposits Using Near-Infrared to Short-Wavelength Infrared (NIR-SWIR) Hyperspectral Imagery. Math. Geosci. 2019, 51, 849–875.

- Lypaczewski, P.; Rivard, B.; Gaillard, N.; Perrouty, S.; Piette-Lauzière, N.; Bérubé, C.L.; Linnen, R.L. Using hyperspectral imaging to vector towards mineralization at the Canadian Malartic gold deposit, Québec, Canada. Ore Geol. Rev. 2019, 111, 102945.

- Paoletti, M.E.; Haut, J.M.; Plaza, J.; Plaza, A. Deep learning classifiers for hyperspectral imaging: A review. ISPRS J. Photogramm. Remote Sens. 2019, 158, 279–317.

- Hu, W.; Huang, Y.; Wei, L.; Zhang, F.; Li, H. Deep convolutional neural networks for hyperspectral image classification. J. Sens. 2015, 2015, 258619.

- Yue, J.; Zhao, W.; Mao, S.; Liu, H. Spectral-spatial classification of hyperspectral images using deep convolutional neural networks. Remote Sens. Lett. 2015, 6, 468–477.

- Yu, S.; Jia, S.; Xu, C. Convolutional neural networks for hyperspectral image classification. Neurocomputing 2017, 219, 88–98.

- Li, Y.; Zhang, H.; Shen, Q. Spectral-spatial classification of hyperspectral imagery with 3D convolutional neural network. Remote Sens. 2017, 9, 67.

- Ben Hamida, A.; Benoit, A.; Lambert, P.; Ben Amar, C. 3-D deep learning approach for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4420–4434.

- Fricker, G.A.; Ventura, J.D.; Wolf, J.A.; North, M.P.; Davis, F.W.; Franklin, J. A convolutional neural network classifier identifies tree species in mixed-conifer forest from hyperspectral imagery. Remote Sens. 2019, 11, 2326.

- Hang, R.; Li, Z.; Ghamisi, P.; Hong, D.; Xia, G.; Liu, Q. Classification of hyperspectral and LiDAR data using coupled CNNs. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4939–4950.

- Zhang, L.; Wang, J.; An, Z. Classification method of CO2 hyperspectral remote sensing data based on neural network. Comput. Commun. 2020, 156, 124–130.

- Windrim, L.; Melkumyan, A.; Murphy, R.J.; Chlingaryan, A.; Ramakrishnan, R. Pretraining for Hyperspectral Convolutional Neural Network Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2798–2810.

- Job, A.T.; Edgar, M.L.; McAree, P.R. Real-time shovel-mounted coal or ore sensing. In Proceedings of the AusIMM Iron Ore Conference 2017, Perth, Australia, 24–26 July 2017; pp. 397–406.

- Velodyne Lidar. Puck Datasheets. 2019. Available online: https://velodynelidar.com/downloads/ (accessed on 22 October 2021).

- Norsk Elektro Optikk. HySpex VNIR-1800. 2021. Available online: https://www.hyspex.com/hyspex-products/hyspex-classic/hyspex-vnir-1800/ (accessed on 22 October 2021).

- Norsk Elektro Optikk. HySpex SWIR-384. 2021. Available online: https://www.hyspex.com/hyspex-products/hyspex-classic/hyspex-swir-384/ (accessed on 22 October 2021).

- Smith, G.M.; Milton, E.J. The use of the empirical line method to calibrate remotely sensed data to reflectance. Int. J. Remote Sens. 1999, 20, 2653–2662.

- Kruse, F.A.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.T.; Barloon, P.J.; Goetz, A.F. The spectral image processing system (SIPS)-interactive visualization and analysis of imaging spectrometer data. Remote Sens. Environ. 1993, 44, 145–163.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. Available online: https://www.tensorflow.org/ (accessed on 15 February 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).