An Automated, Clip-Type, Small Internet of Things Camera-Based Tomato Flower and Fruit Monitoring and Harvest Prediction System

Abstract

1. Introduction

2. Materials and Methods

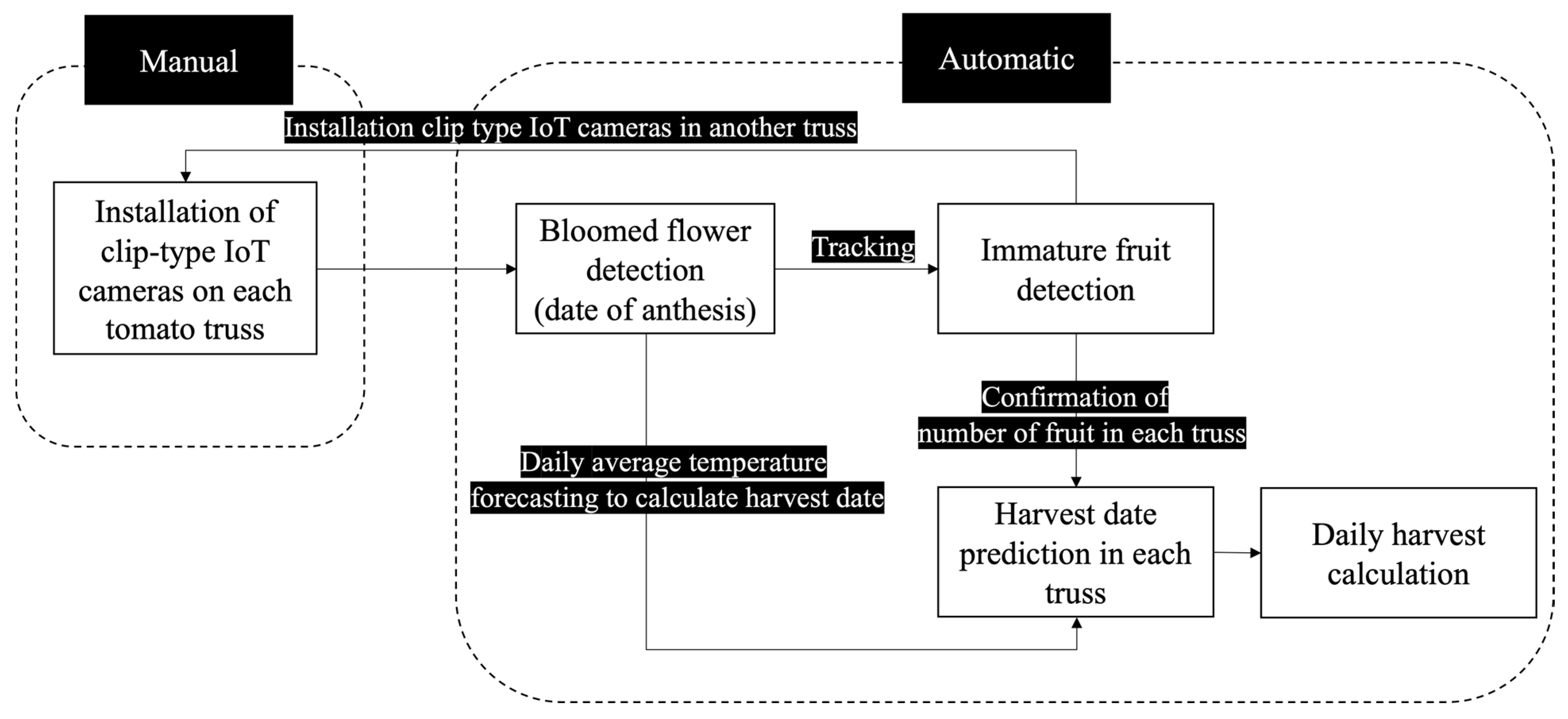

2.1. System Overview

2.1.1. System Pipeline

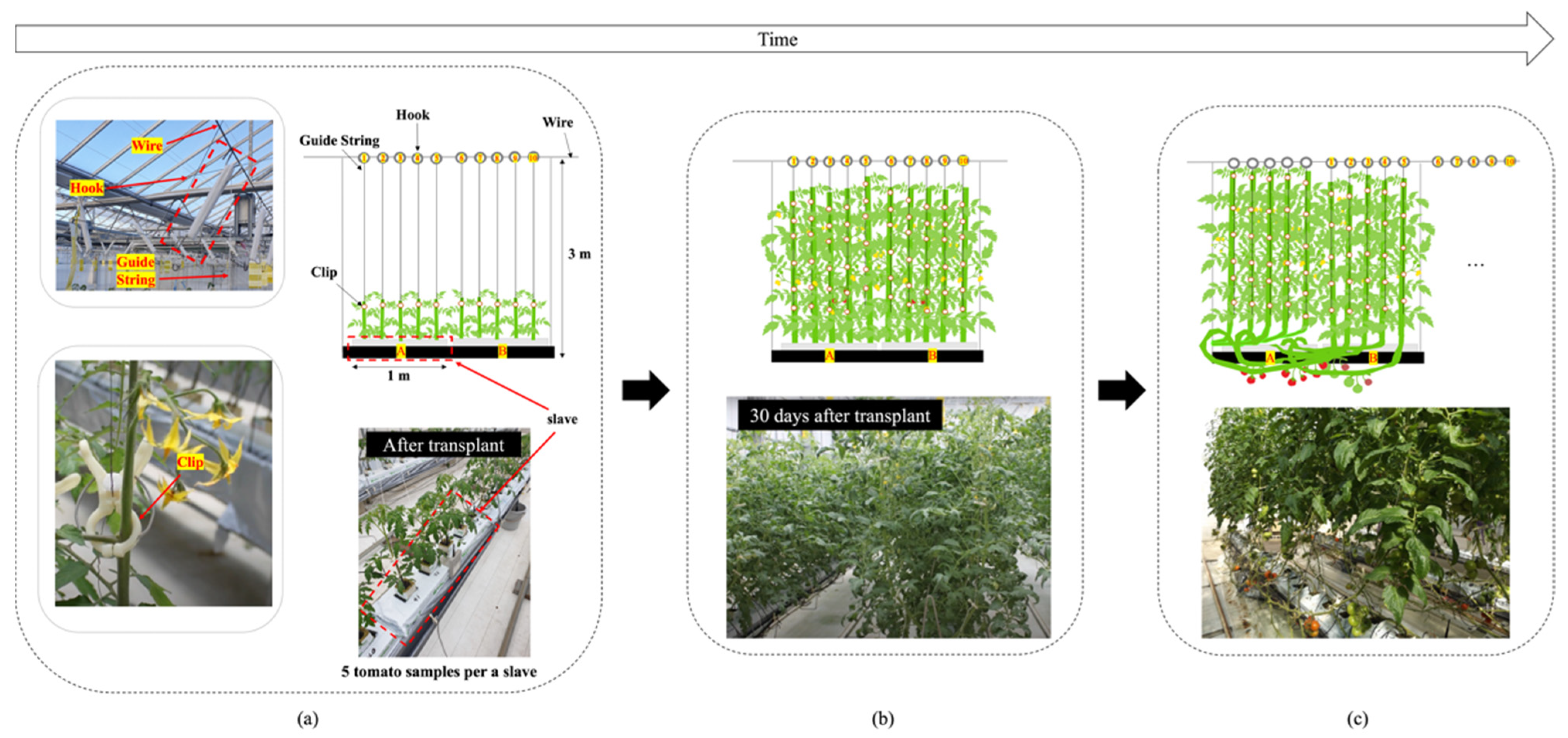

2.1.2. System Configuration

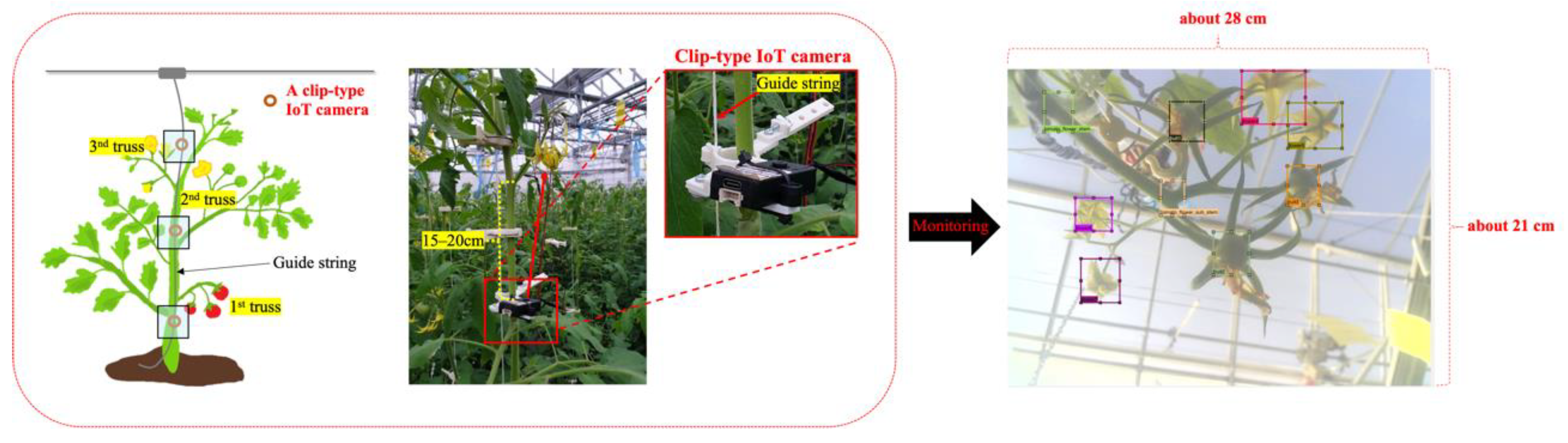

2.1.3. Clip-Type IoT Camera Design

2.1.4. Data Acquisition

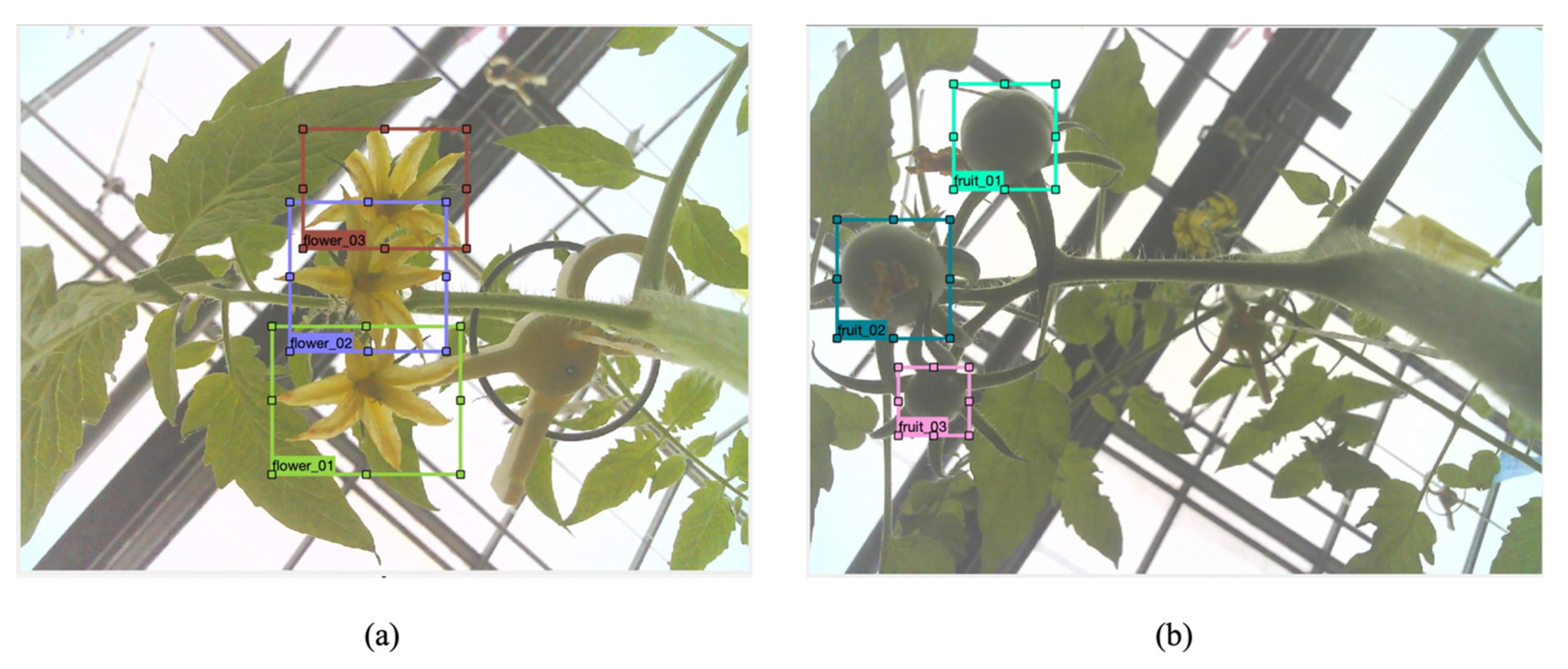

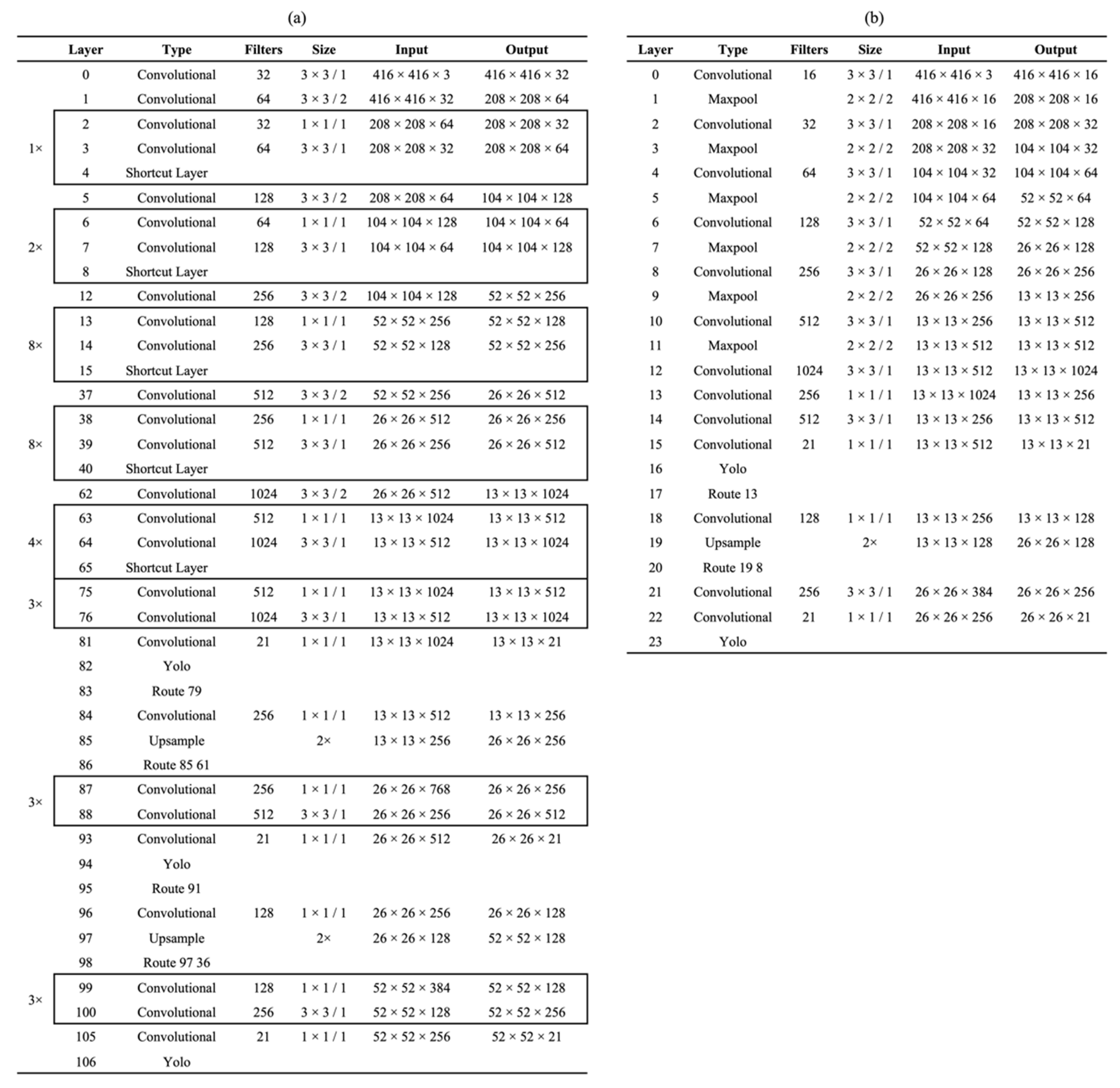

2.2. Deep Learning-Based Flower and Fruit Monitoring

Detection

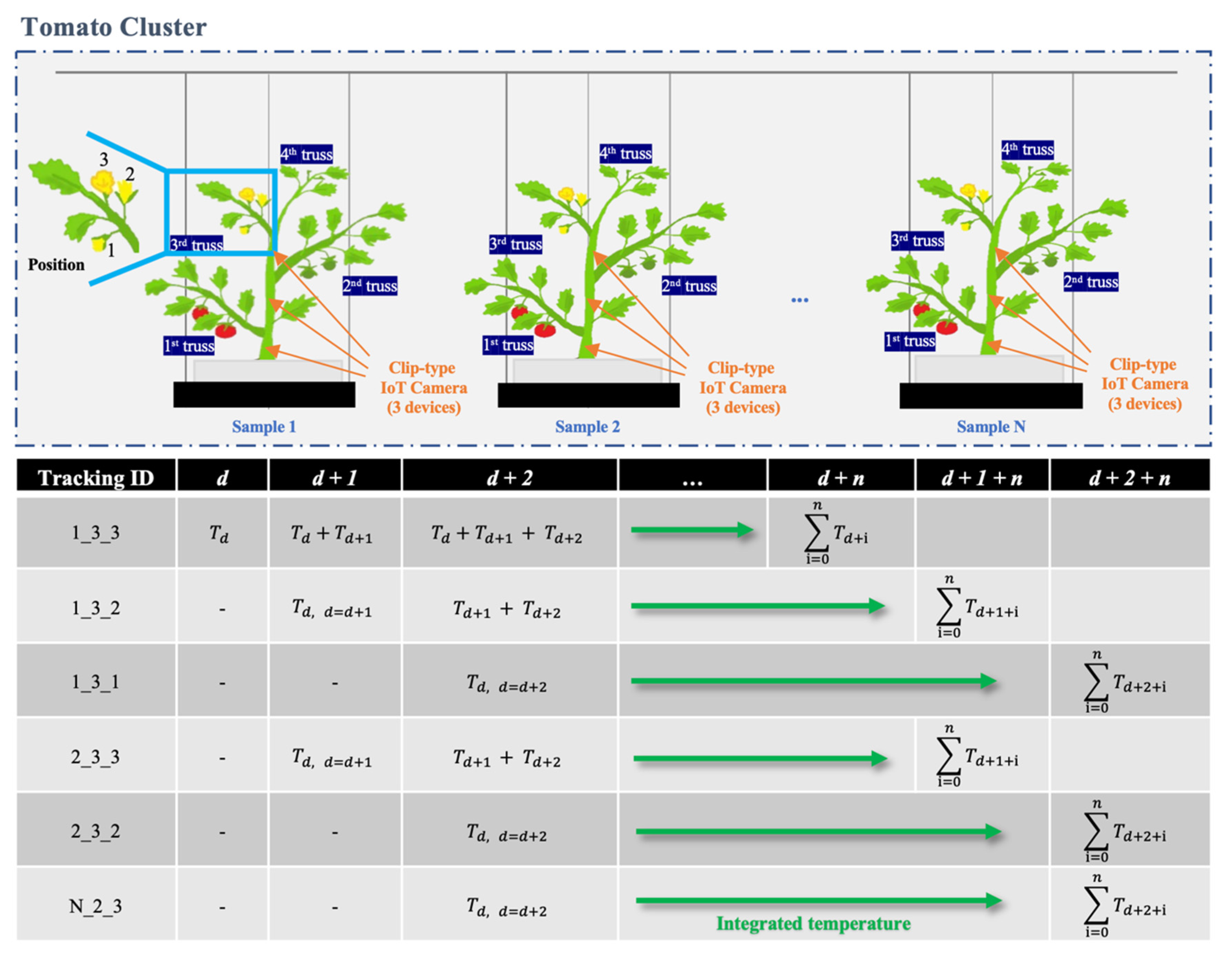

2.3. Tracking

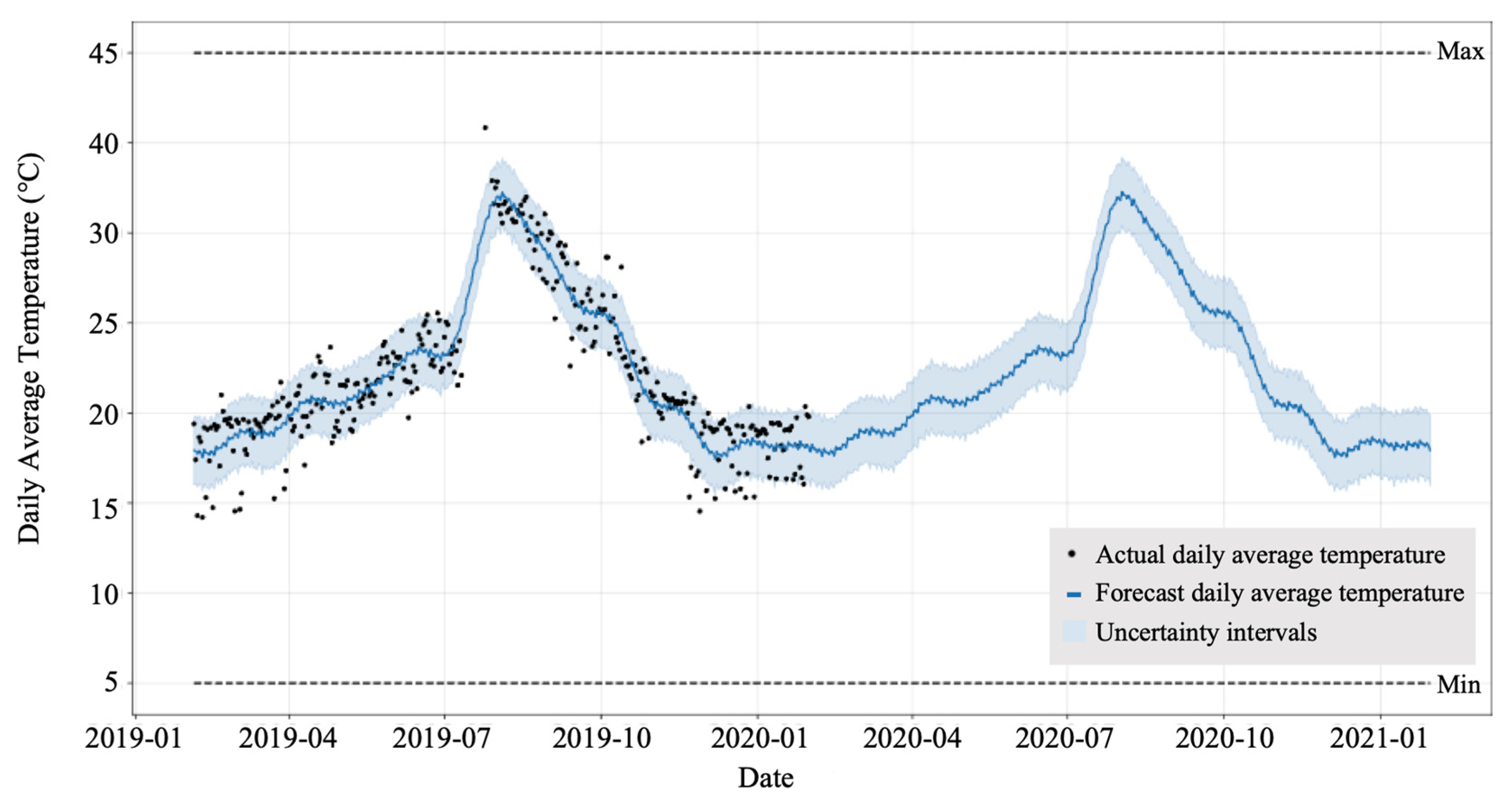

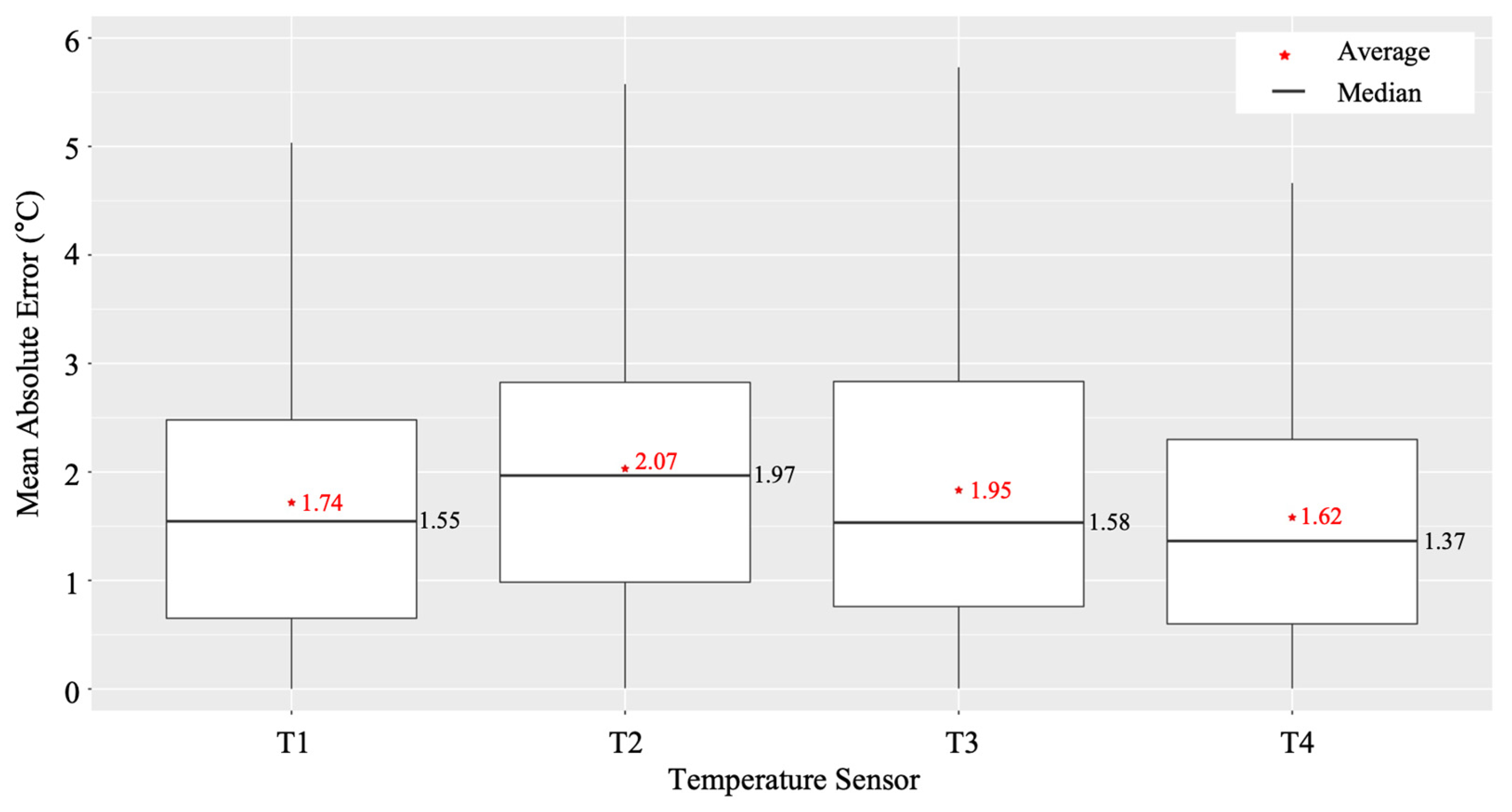

2.3.1. Temperature Forecasting

2.3.2. Harvest Prediction

3. Results

3.1. Object Detection Using a Clip-Type IoT Camera

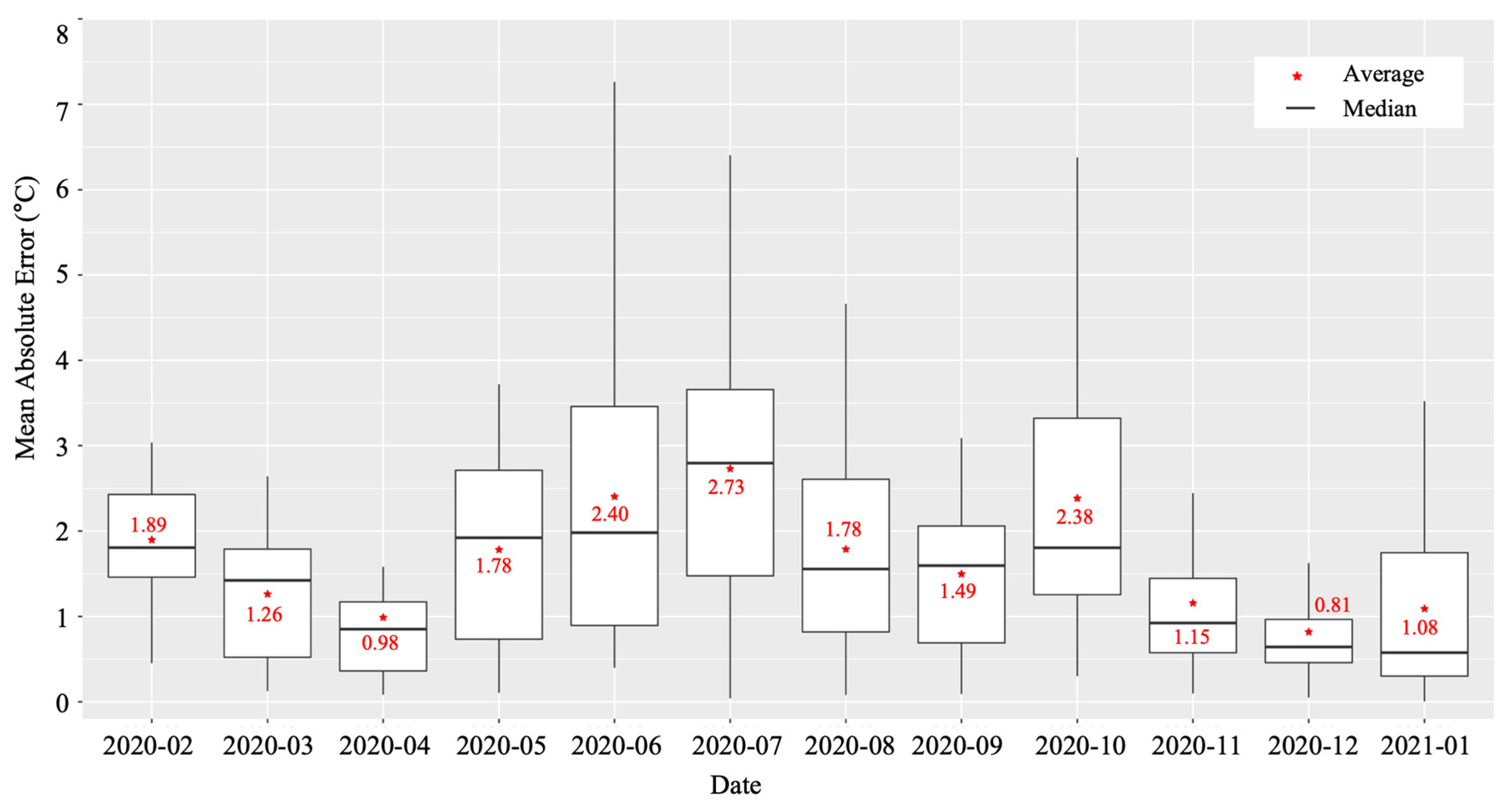

3.2. Temperature Forecasting

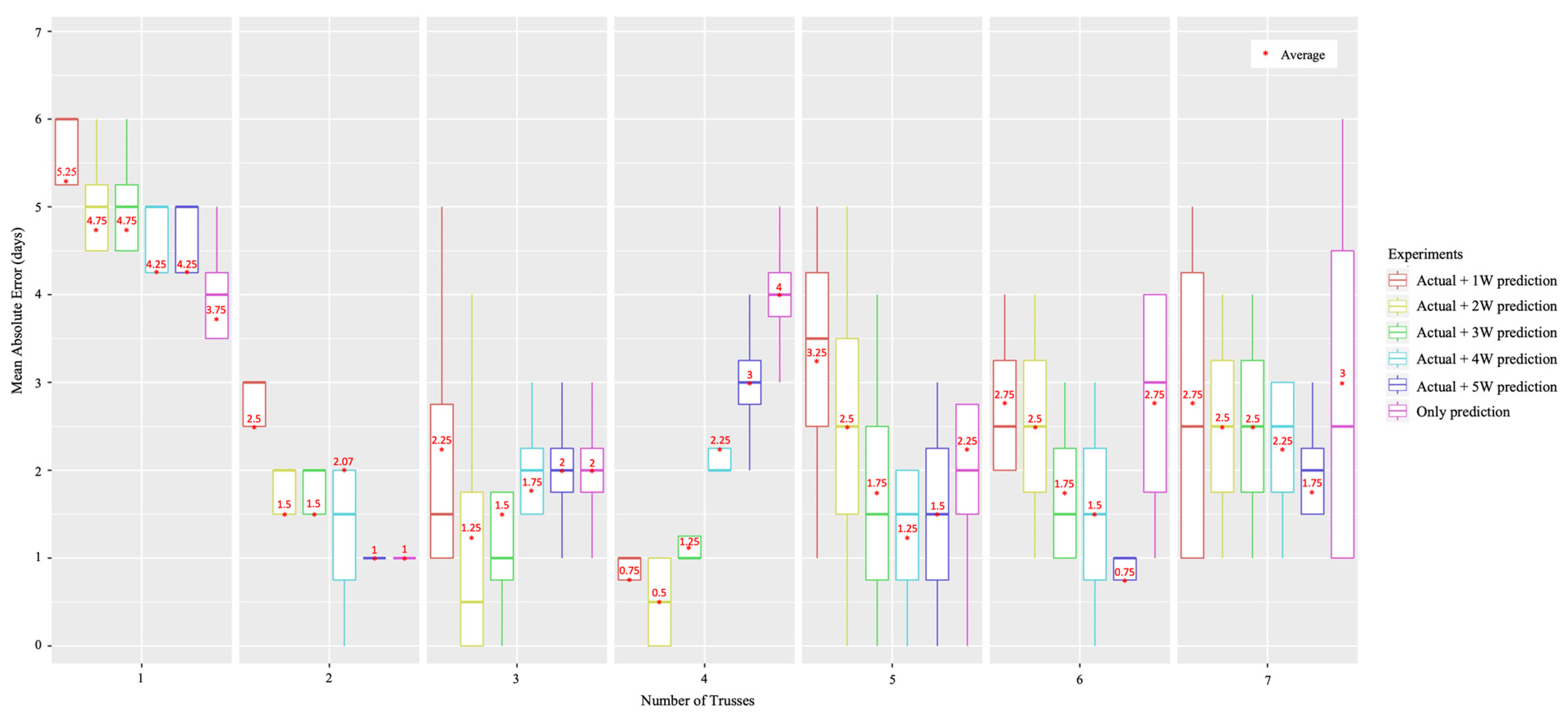

3.3. Harvest Prediction

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

| YOLO architecture | Computational cost (BFLOPS, billions of floating-point operations) |
|---|---|
| YOLO v3 | 115.938 |
| YOLO v2 | 52.083 |
| YOLO v3-Tiny | 9.673 |
| YOLO v2-Tiny | 12.329 |
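The BFLOPS column is easier to compare as ratios. The short Python sketch below uses only the four values from the YOLO table; the helper names `relative_cost`, `speedup` and `rank_by_cost` are illustrative and do not come from the paper.

```python
# BFLOPS values copied from the YOLO architecture table.
BFLOPS = {
    "YOLO v3": 115.938,
    "YOLO v2": 52.083,
    "YOLO v3-Tiny": 9.673,
    "YOLO v2-Tiny": 12.329,
}


def relative_cost(model: str, baseline: str = "YOLO v3") -> float:
    """Computation of `model` as a fraction of `baseline` (e.g. 0.08 = 8%)."""
    return BFLOPS[model] / BFLOPS[baseline]


def speedup(model: str, baseline: str = "YOLO v3") -> float:
    """How many times fewer operations `model` needs than `baseline`."""
    return BFLOPS[baseline] / BFLOPS[model]


def rank_by_cost() -> list[str]:
    """Model names ordered from cheapest to most expensive."""
    return sorted(BFLOPS, key=BFLOPS.get)


if __name__ == "__main__":
    for name in rank_by_cost():
        print(f"{name:13s} {BFLOPS[name]:8.3f} BFLOPS "
              f"{relative_cost(name):6.1%} of YOLO v3, "
              f"{speedup(name):5.1f}x fewer operations")
```

Run as a script, it lists the models from cheapest to most expensive. By these figures, YOLO v3-Tiny needs about 8.3% of the operations of YOLO v3 (roughly 12 times fewer) and slightly fewer than YOLO v2-Tiny. Operation counts are only a proxy: actual inference time on a given device also depends on memory bandwidth and the implementation.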

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, U.; Islam, M.P.; Kochi, N.; Tokuda, K.; Nakano, Y.; Naito, H.; Kawasaki, Y.; Ota, T.; Sugiyama, T.; Ahn, D.-H. An Automated, Clip-Type, Small Internet of Things Camera-Based Tomato Flower and Fruit Monitoring and Harvest Prediction System. Sensors 2022, 22, 2456. https://doi.org/10.3390/s22072456

Chicago/Turabian style: Lee, Unseok, Md Parvez Islam, Nobuo Kochi, Kenichi Tokuda, Yuka Nakano, Hiroki Naito, Yasushi Kawasaki, Tomohiko Ota, Tomomi Sugiyama, and Dong-Hyuk Ahn. 2022. "An Automated, Clip-Type, Small Internet of Things Camera-Based Tomato Flower and Fruit Monitoring and Harvest Prediction System" Sensors 22, no. 7: 2456. https://doi.org/10.3390/s22072456

APA StyleLee, U., Islam, M. P., Kochi, N., Tokuda, K., Nakano, Y., Naito, H., Kawasaki, Y., Ota, T., Sugiyama, T., & Ahn, D.-H. (2022). An Automated, Clip-Type, Small Internet of Things Camera-Based Tomato Flower and Fruit Monitoring and Harvest Prediction System. Sensors, 22(7), 2456. https://doi.org/10.3390/s22072456