Customizable FPGA-Based Hardware Accelerator for Standard Convolution Processes Empowered with Quantization Applied to LiDAR Data

Abstract

:1. Introduction

2. State of The Art

2.1. Deep Learning for 3D Point Cloud

2.2. Convolution Implementations in FPGAs

2.2.1. Sliding Window Dataflow

2.2.2. Rescheduled Dataflow Optimized for Energy Efficiency

2.3. Optimization Methods

3. Convolution Hardware-Based Block

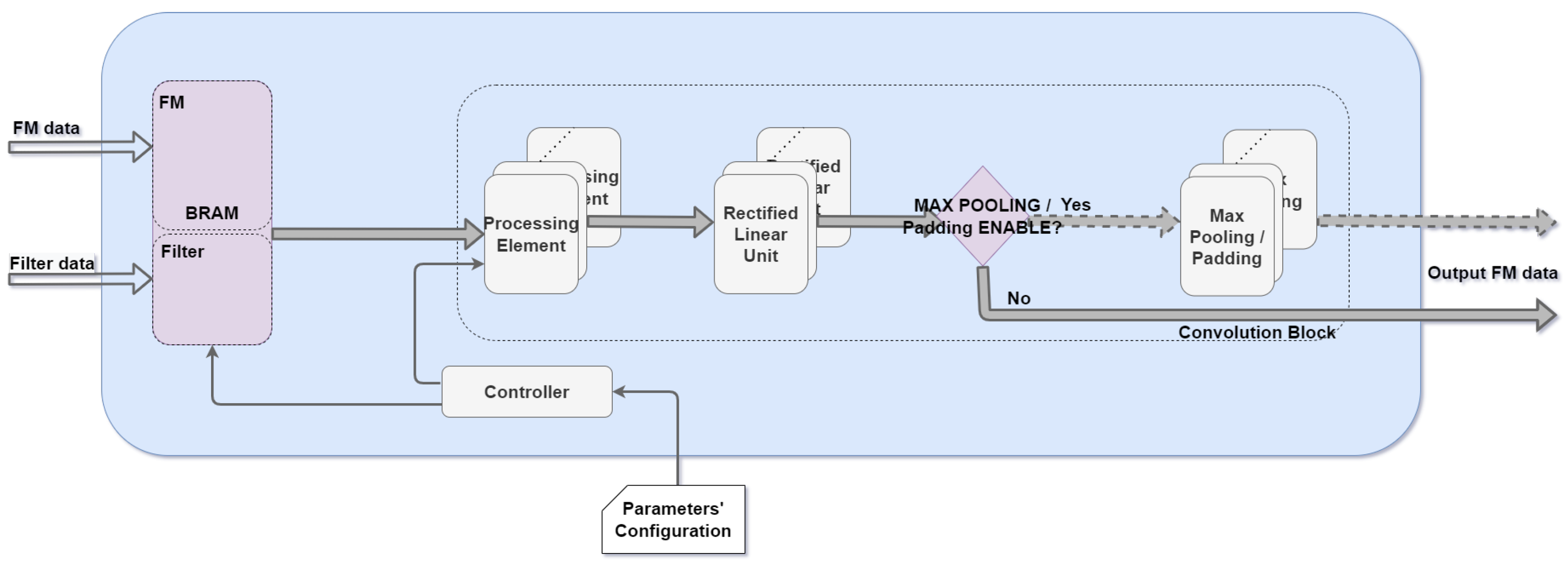

3.1. Block Architecture

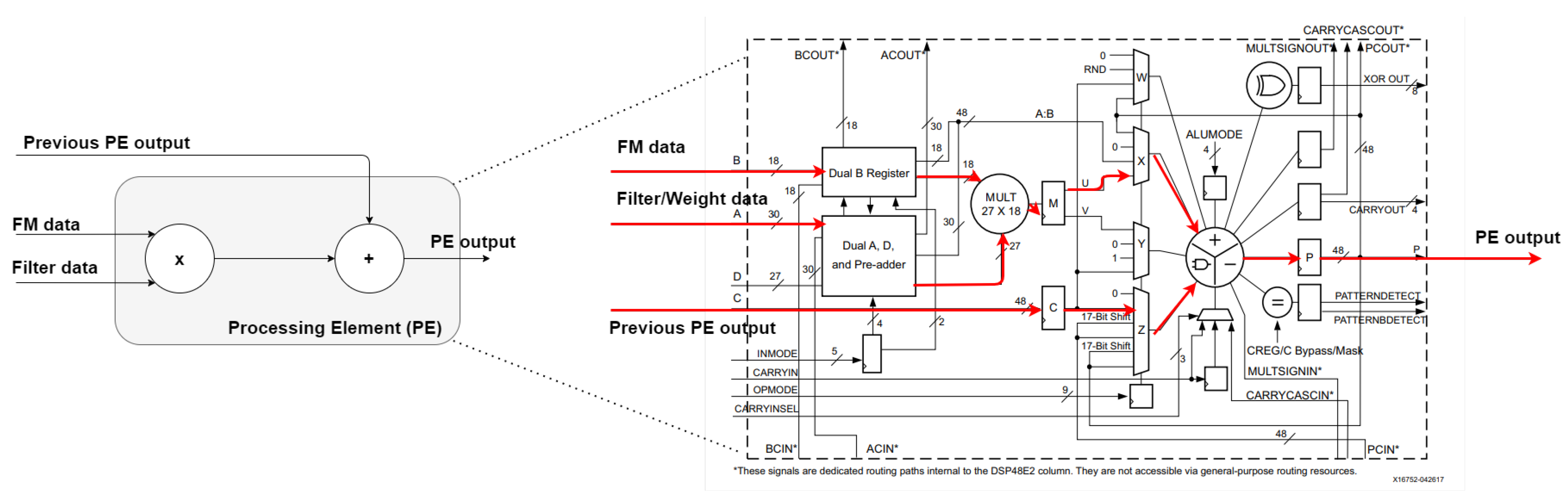

3.2. Processing Element

3.3. Memory Access and Dataflow

3.4. Board Resources-Driven Architecture

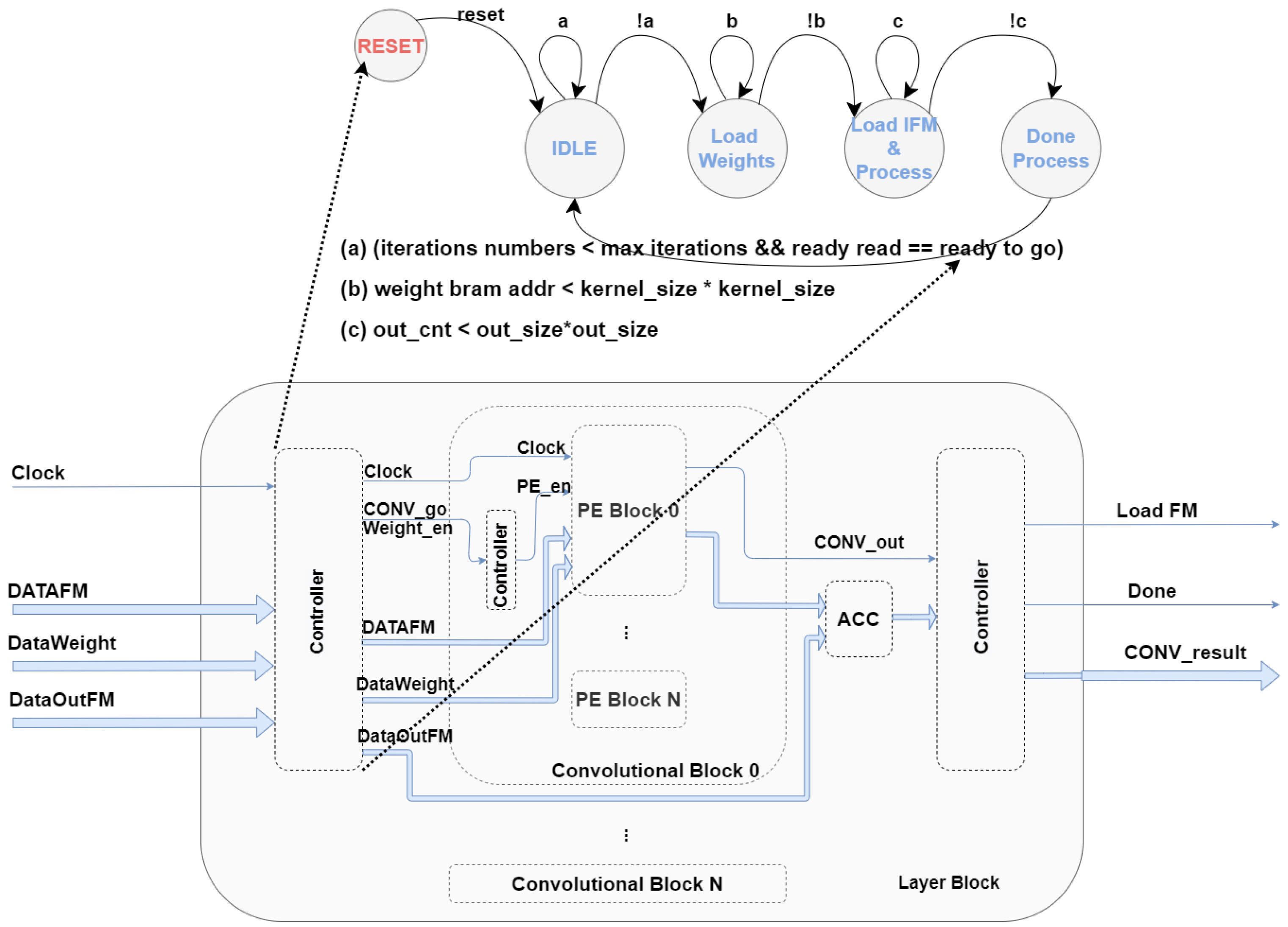

3.5. Filters Iteration—Control Module

3.6. Optimization Methods

Architecture Reconfiguration with 8 Bit Quantization

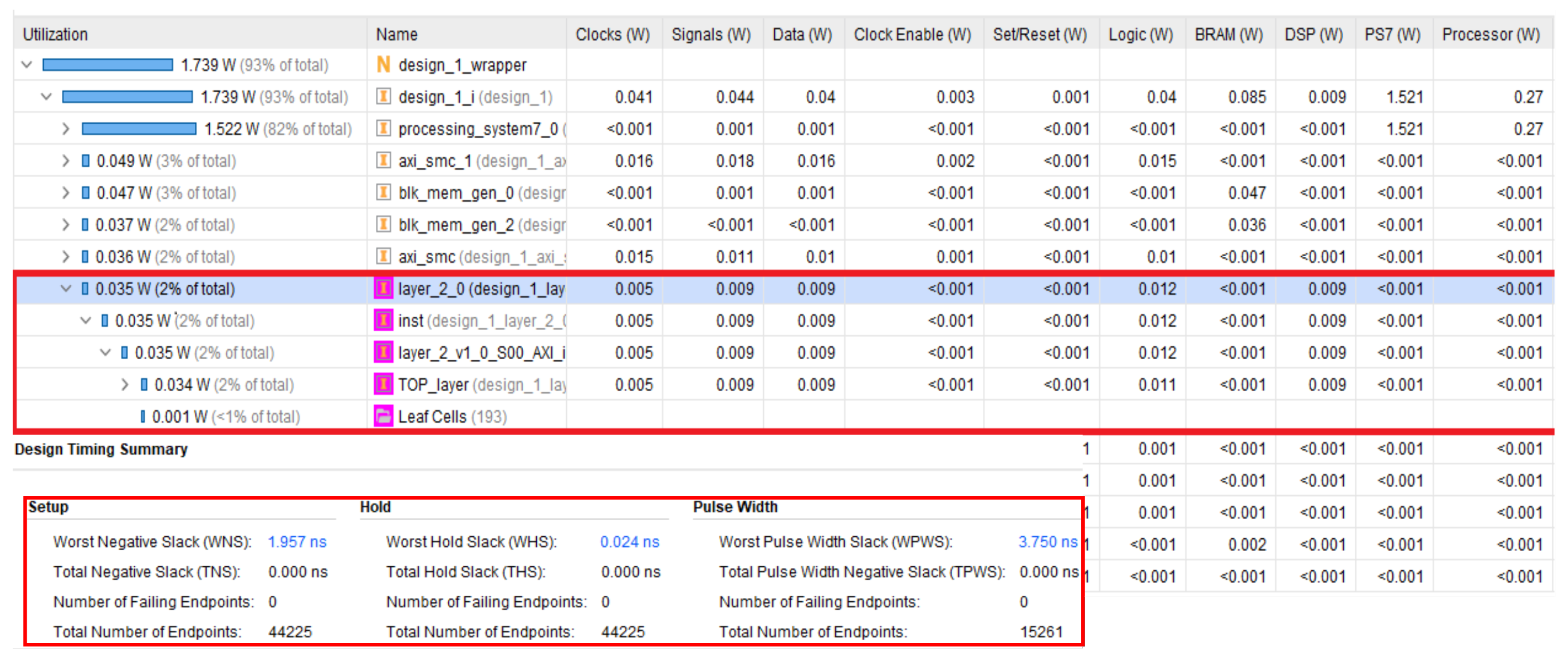

4. Implementation

5. Results

5.1. Generic Convolution

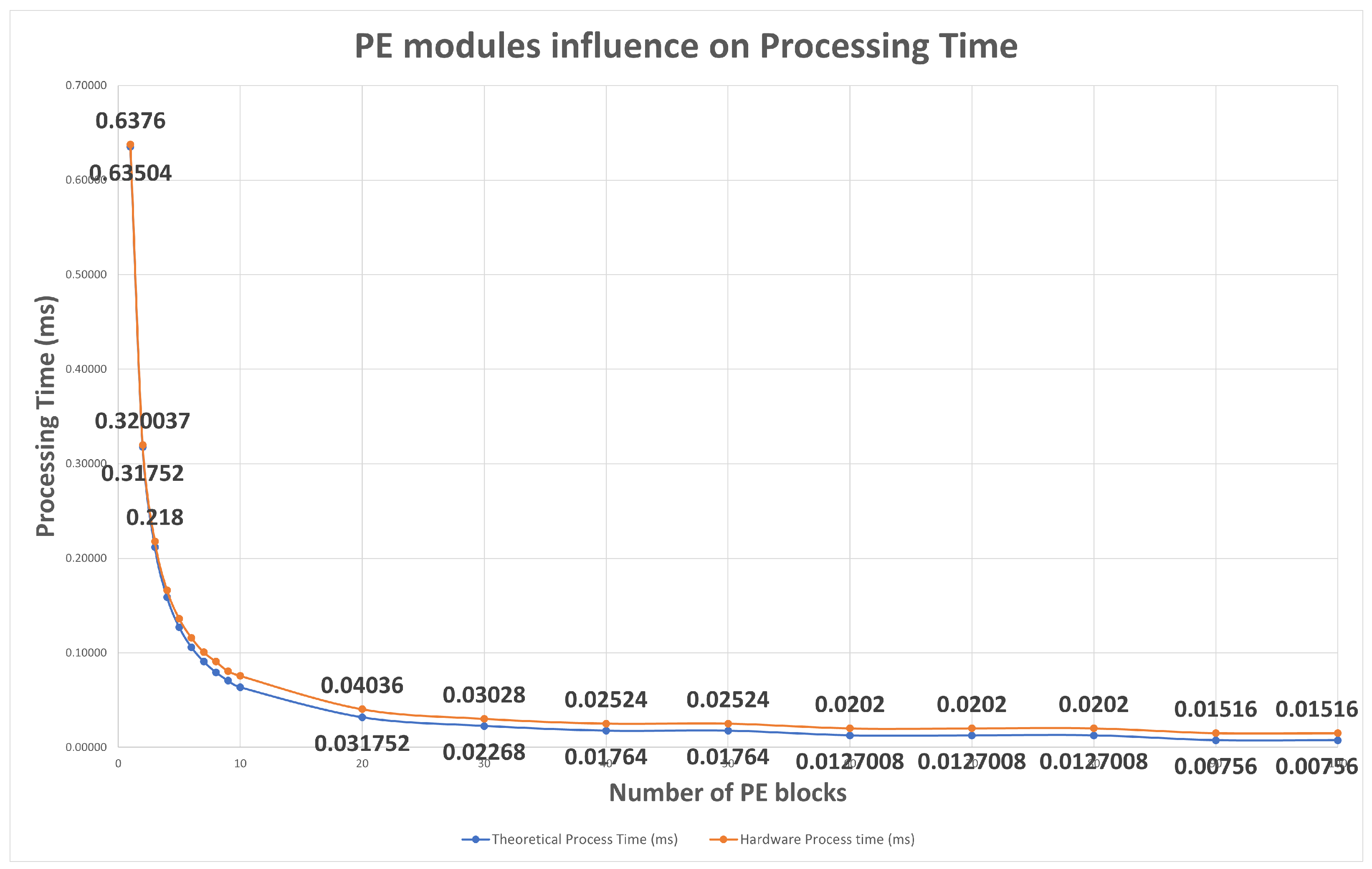

5.1.1. Parallelism Influence on Processing Time

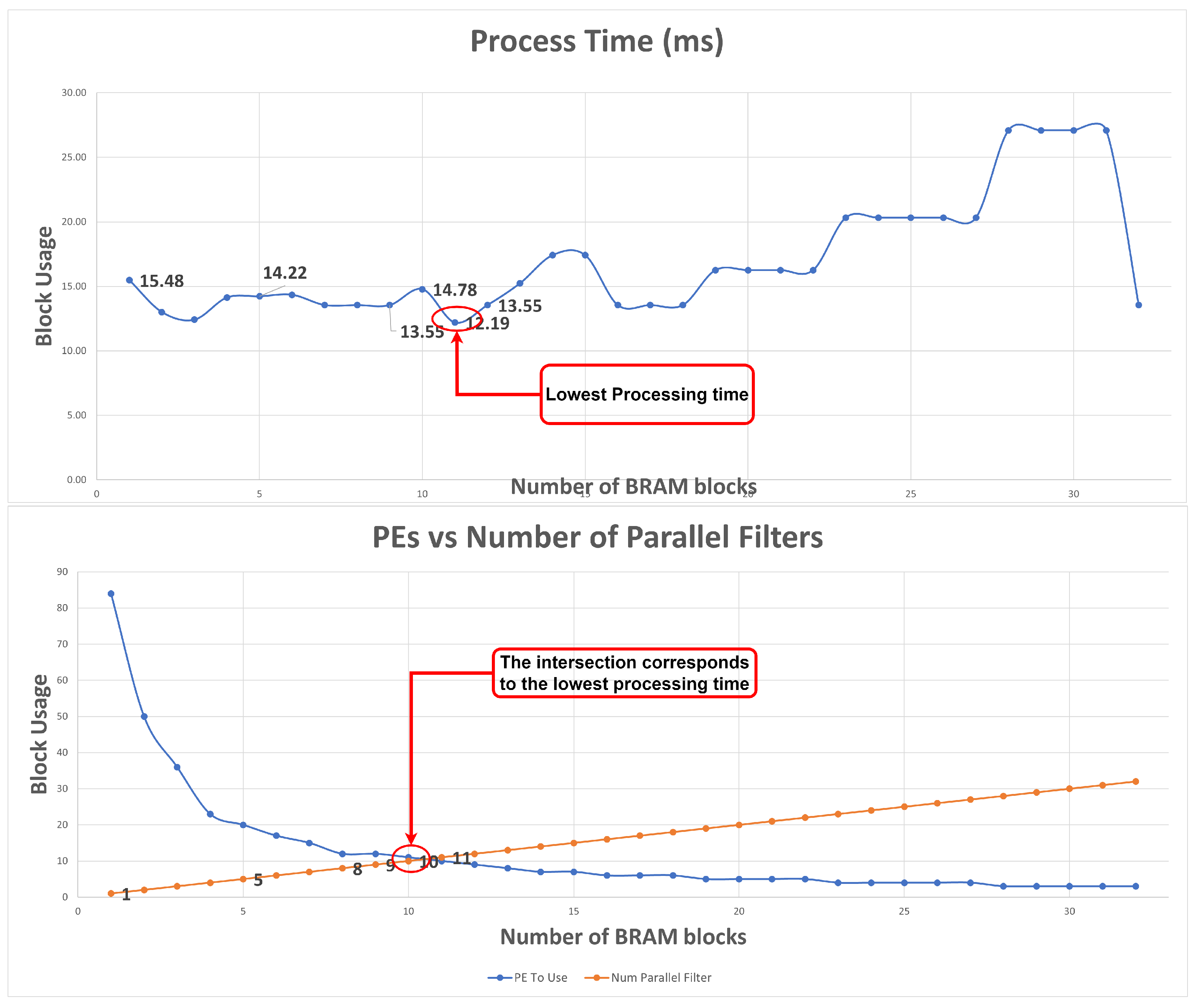

5.1.2. Block RAM Influence on PEs/Number of Parallel Filter/Processing Time

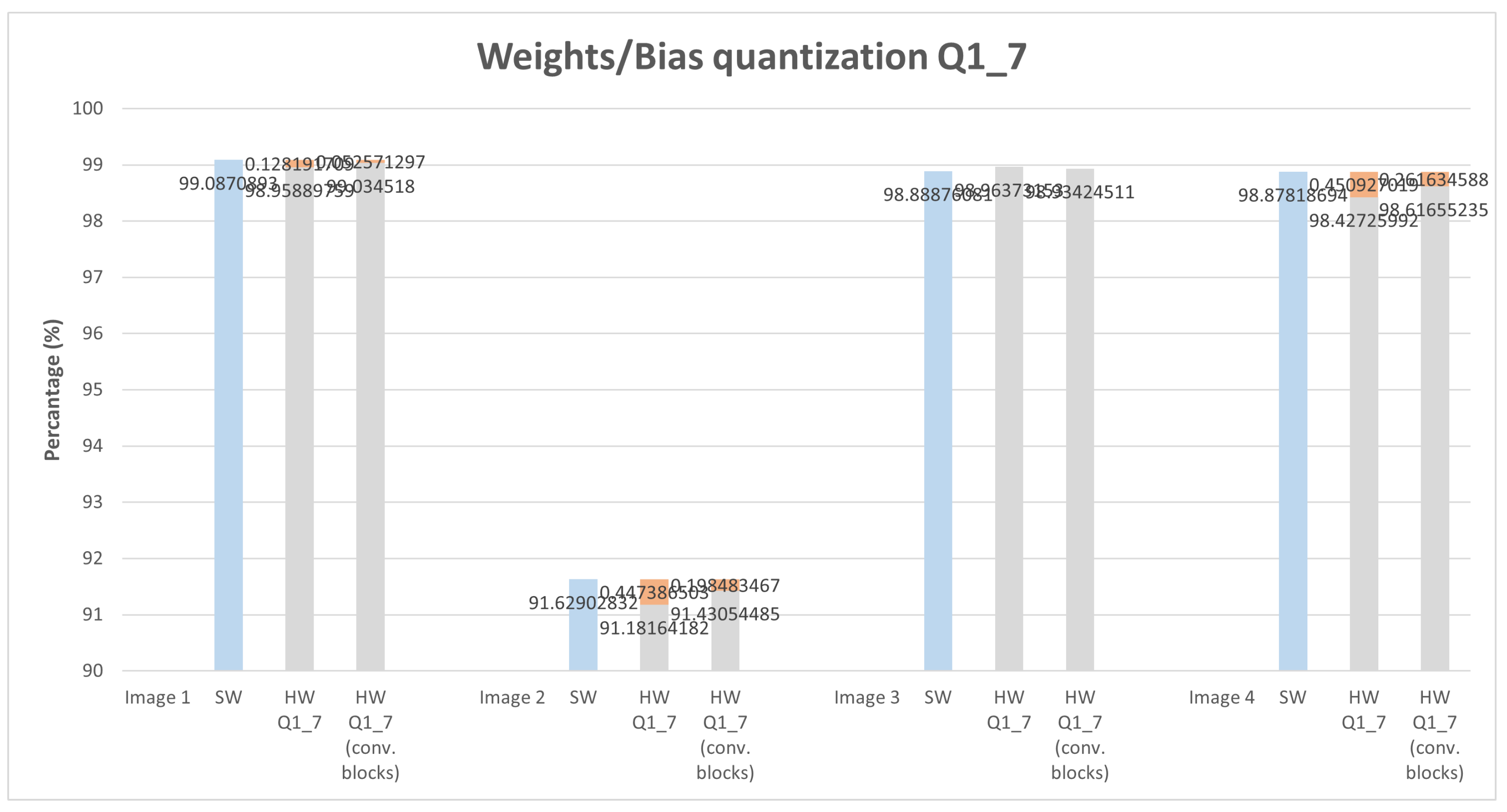

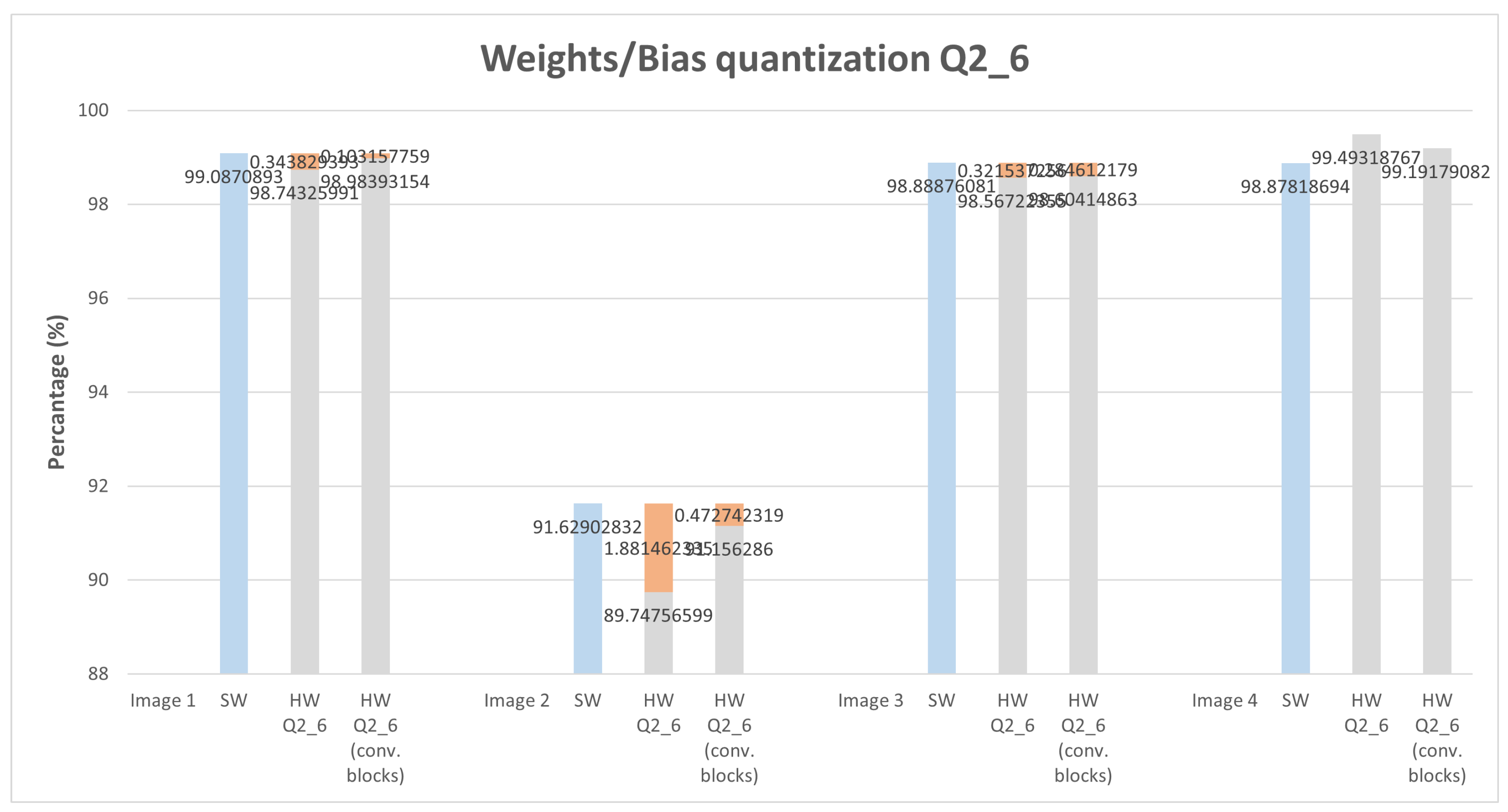

5.2. Quantization Influence Study

5.2.1. MNIST Dataset Model

Convolution Weights and Bias Quantized

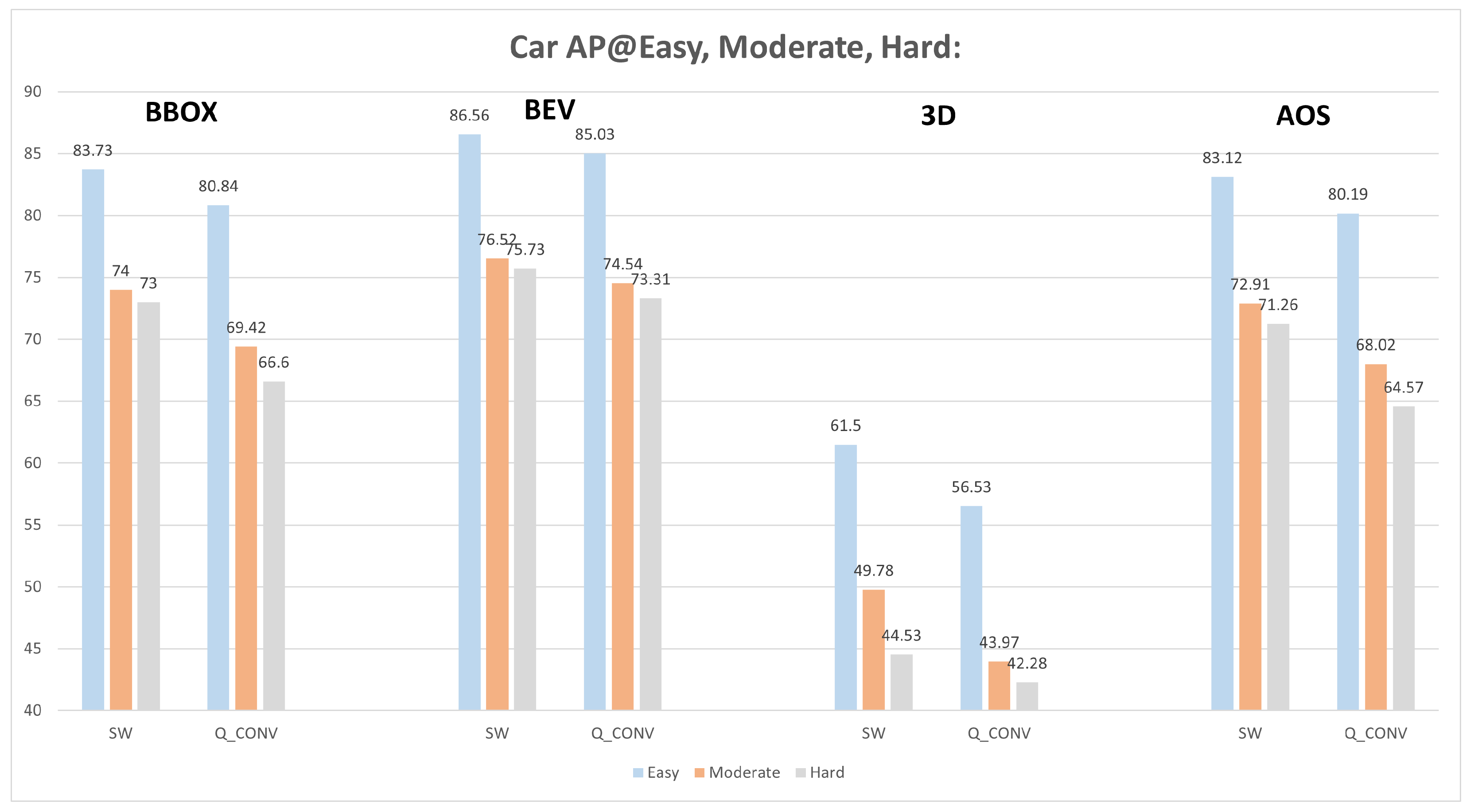

5.2.2. PointPillars Model

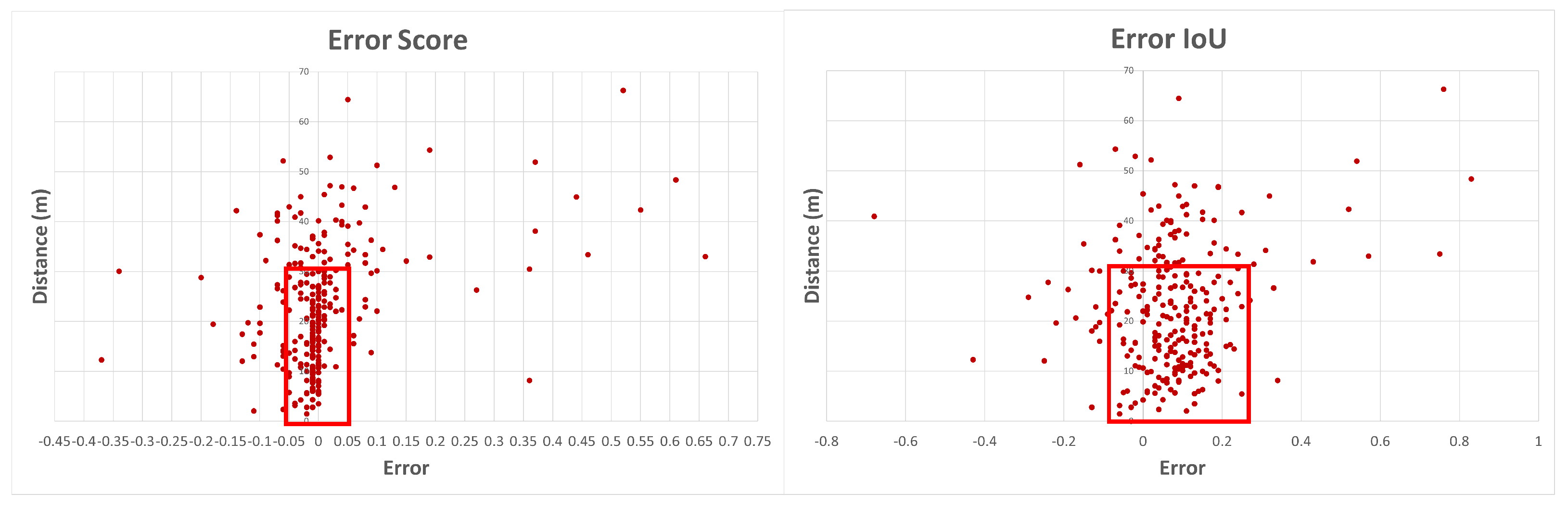

Quantized Convolution Weights

Convolution Layer Hardware Replacement

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Kato, S.; Takeuchi, E.; Ishiguro, Y.; Ninomiya, Y.; Takeda, K.; Hamada, T. An open approach to autonomous vehicles. IEEE Micro 2015, 35, 60–68. [Google Scholar] [CrossRef]

- Cui, J.; Liew, L.S.; Sabaliauskaite, G.; Zhou, F. A review on safety failures, security attacks, and available countermeasures for autonomous vehicles. Ad Hoc Netw. 2019, 90, 101823. [Google Scholar] [CrossRef]

- Li, Y.; Ma, L.; Zhong, Z.; Liu, F.; Cao, D.; Li, J.; Chapman, M.A. Deep learning for lidar point clouds in autonomous driving: A review. arXiv 2020, arXiv:2005.09830. [Google Scholar] [CrossRef] [PubMed]

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multiview 3d object detection network for autonomous driving. arXiv 2016, arXiv:1611.07759. [Google Scholar]

- Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3d proposal generation and object detection from view aggregation. arXiv 2017, arXiv:1712.02294. [Google Scholar]

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. arXiv 2018, arXiv:1812.05784. [Google Scholar]

- Zhou, Y.; Tuzel, O. Voxelnet: End-to-end learning for point cloud based 3d object detection. arXiv 2017, arXiv:1711.06396. [Google Scholar]

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3d object detection from rgb-d data. arXiv 2017, arXiv:1711.08488. [Google Scholar]

- Wang, B.; An, J.; Cao, J. Voxel-fpn: Multi-scale voxel feature aggregation in 3d object detection from point clouds. arXiv 2019, arXiv:1907.05286. [Google Scholar]

- Wang, Z.; Jia, K. Frustum convnet: Sliding frustums to aggregate local point-wise features for amodal 3d object detection. arXiv 2019, arXiv:1903.01864. [Google Scholar]

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. arXiv 2016, arXiv:1612.00593. [Google Scholar]

- Yang, B.; Luo, W.; Urtasun, R. PIXOR: Real-time 3d object detection from point clouds. arXiv 2019, arXiv:1902.06326. [Google Scholar]

- Chen, Y.-H.; Member, S.; Yang, T.-J.; Emer, J.; Sze, V.; Member, S. Eyeriss v2: A flexible accelerator for emerging deep neural networks on mobile devices. arXiv 2019, arXiv:1807.07928. [Google Scholar]

- Desoli, G.; Chawla, N.; Boesch, T.; Singh, S.; Guidetti, E.; de Ambroggi, F.; Majo, T.; Zambotti, P.; Ayodhyawasi, M.; Singh, H.; et al. 14.1 a 2.9tops/w deep convolutional neural network soc in fd-soi 28 nm for intelligent embedded systems. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017; pp. 238–239. [Google Scholar]

- Zhang, C.; Li, P.; Sun, G.; Guan, Y.; Xiao, B.; Cong, J. Optimizing Fpga-Based Accelerator Design for Deep Convolutional Neural Networks; Association for Computing Machinery, Inc.: New York, NY, USA, 2015; pp. 161–170. [Google Scholar]

- Jahanshahi, A. Tinycnn: A tiny modular CNN accelerator for embedded FPGA. arXiv 2019, arXiv:1911.06777. [Google Scholar]

- Shen, Y.; Ferdman, M.; Milder, P. Maximizing CNN accelerator efficiency through resource partitioning. arXiv 2017, arXiv:1607.00064. [Google Scholar]

- Jo, J.; Kim, S.; Park, I.C. Energy-efficient convolution architecture based on rescheduled dataflow. IEEE Trans. Circuits Syst. I Regul. Pap. 2018, 65, 4196–4207. [Google Scholar] [CrossRef]

- O’Shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458. [Google Scholar]

- LeCun, Y. Lenet-5, Convolutional Neural Networks. Available online: Http://yann.lecun.com/exdb/lenet (accessed on 9 March 2021).

- Zhang, X.; Zou, J.; He, K.; Sun, J. Accelerating very deep convolutional networks for classification and detection. arXiv 2015, arXiv:1505.06798. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Targ, S.; Almeida, D.; Lyman, K. Resnet in resnet: Generalizing residual architectures. arXiv 2016, arXiv:1603.08029. [Google Scholar]

- Chen, Y.H.; Krishna, T.; Emer, J.S.; Sze, V. Eyeriss: An energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE J. Solid-State Circuits 2017, 52, 127–138. [Google Scholar] [CrossRef] [Green Version]

- Lo, C.Y.; Sham, C.-W. Energy Efficient Fixed-point Inference System of Convolutional Neural Network. In Proceedings of the 2020 IEEE 63rd International Midwest Symposium on Circuits and Systems (MWSCAS), Springfield, MA, USA, 9–12 August 2020. [Google Scholar]

- Ansari, A.; Ogunfunmi, T. Empirical analysis of fixed point precision quantization of CNNs. In Proceedings of the 2019 IEEE 62nd International Midwest Symposium on Circuits and Systems (MWSCAS), Dallas, TX, USA, 4–7 August 2019; pp. 243–246. [Google Scholar]

- Lo, C.Y.; Lau, F.C.-M.; Sham, C.-W. FixedPoint Implementation of Convolutional Neural Networks for Image Classification. In Proceedings of the 2018 International Conference on Advanced Technologies for Communications (ATC), Ho Chi Minh City, Vietnam, 18–20 October 2018. [Google Scholar]

- Libano, F.; Wilson, B.; Wirthlin, M.; Rech, P.; Brunhaver, J. Understanding the impact of quantization, accuracy, and radiation on the reliability of convolutional neural networks on fpgas. IEEE Trans. Nucl. Sci. 2020, 67, 1478–1484. [Google Scholar] [CrossRef]

- Xilinx Inc. Convolutional Neural Network with INT4 Optimization on Xilinx Devices 2 Convolutional Neural Network with INT4 Optimization on Xilinx Devices; Xilinx Inc.: San Jose, CA, USA, 2020. [Google Scholar]

- Fu, Y.; Wu, E.; Sirasao, A. 8-Bit Dot-Product Acceleration White Paper (wp487); Xilinx Inc.: San Jose, CA, USA, 2017. [Google Scholar]

- Vestias, M.P.; Duarte, R.P.; de Sousa, J.T.; Neto, H. Hybrid dot-product calculation for convolutional neural networks in FPGA. In Proceedings of the 2019 29th International Conference on Field Programmable Logic and Applications (FPL), Barcelona, Spain, 8–12 September 2019; pp. 350–353. [Google Scholar]

- Xilinx Inc. Ultrascale Architecture DSP Slice User Guide; Xilinx Inc.: San Jose, CA, USA, 2021. [Google Scholar]

- Diligent Inc. Zybo Z7 Board Reference Manual; Diligent Inc.: New York, NY, USA, 2018. [Google Scholar]

| Clock Source | Convolution of a 252 × 252 FM with 3 × 3 Filter | |||

|---|---|---|---|---|

| 100 MHz | LUT | FF | DSP | BRAM |

| Convolution IP | 2044 | 993 | 9 | 0 |

| All Design | 10,832 | 11,425 | 9 | 32.5 blocks |

| SW-Only | Score (0…1) | Location x, y, z (m) | BBOX (Left, Top, Right, Bottom) | Rotation_y | Alpha |

|---|---|---|---|---|---|

| Car 1 | 0.90 | 2.88, 1.74, 6.42 | 770.46, 201.58, 1219.04, 370 | 4.68 | 4.27 |

| Car 2 | 0.95 | 2.96, 1.54, 13.24 | 702.26, 182.34, 843.80, 277.94 | 4.69 | 4.47 |

| Car 3 | 0.86 | 2.85, 1.55, 19.36 | 677.58, 181.34, 745.63, 243.11 | 4.78 | 4.63 |

| Car 4 | 0.84 | −6.13, 1.88, 23.85 | 373.33, 191.57, 463.90, 241.74 | 1.67 | 1.92 |

| Car 5 | 0.72 | −6.55, 1.69, 46.78 | 489.81, 182.45, 518.89, 207.38 | 1.49 | 1.62 |

| Car 6 | 0.73 | 2.70, 1.03, 50.08 | 630.30, 173.84, 655.06, 195.71 | 4.71 | 4.66 |

| SW-HW | Score (0…1) | Location x, y, z (m) | BBOX (Left, Top, Right, Bottom) | Rotation_y | Alpha |

|---|---|---|---|---|---|

| Car 1 | 0.77 | 2.52, 1.51, 6.55 | 753.33, 188.01, 1102.09, 370 | 4.74 | 4.39 |

| Car 2 | 0.83 | 2.55, 1.39, 12.97 | 691.09, 177.49, 813.00, 269.46 | 4.74 | 4.54 |

| Car 3 | 0.83 | 2.58, 1.31, 19.16 | 670.47, 175.11, 735.29, 234.51 | 4.79 | 4.66 |

| Car 4 | 0.85 | −5.81, 1.69, 23.56 | 379.53, 186.77, 472.51, 236.24 | 4.89 | 5.13 |

| Car 5 | 0.82 | −6.45, 1.52, 46.16 | 487.63, 180.95, 521.33, 204.80 | 4.74 | 4.88 |

| Car 6 | - | - | - | - | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Silva, J.; Pereira, P.; Machado, R.; Névoa, R.; Melo-Pinto, P.; Fernandes, D. Customizable FPGA-Based Hardware Accelerator for Standard Convolution Processes Empowered with Quantization Applied to LiDAR Data. Sensors 2022, 22, 2184. https://doi.org/10.3390/s22062184

Silva J, Pereira P, Machado R, Névoa R, Melo-Pinto P, Fernandes D. Customizable FPGA-Based Hardware Accelerator for Standard Convolution Processes Empowered with Quantization Applied to LiDAR Data. Sensors. 2022; 22(6):2184. https://doi.org/10.3390/s22062184

Chicago/Turabian StyleSilva, João, Pedro Pereira, Rui Machado, Rafael Névoa, Pedro Melo-Pinto, and Duarte Fernandes. 2022. "Customizable FPGA-Based Hardware Accelerator for Standard Convolution Processes Empowered with Quantization Applied to LiDAR Data" Sensors 22, no. 6: 2184. https://doi.org/10.3390/s22062184

APA StyleSilva, J., Pereira, P., Machado, R., Névoa, R., Melo-Pinto, P., & Fernandes, D. (2022). Customizable FPGA-Based Hardware Accelerator for Standard Convolution Processes Empowered with Quantization Applied to LiDAR Data. Sensors, 22(6), 2184. https://doi.org/10.3390/s22062184