A New Method for Absolute Pose Estimation with Unknown Focal Length and Radial Distortion

Abstract

1. Introduction

2. Problem and Method Statement

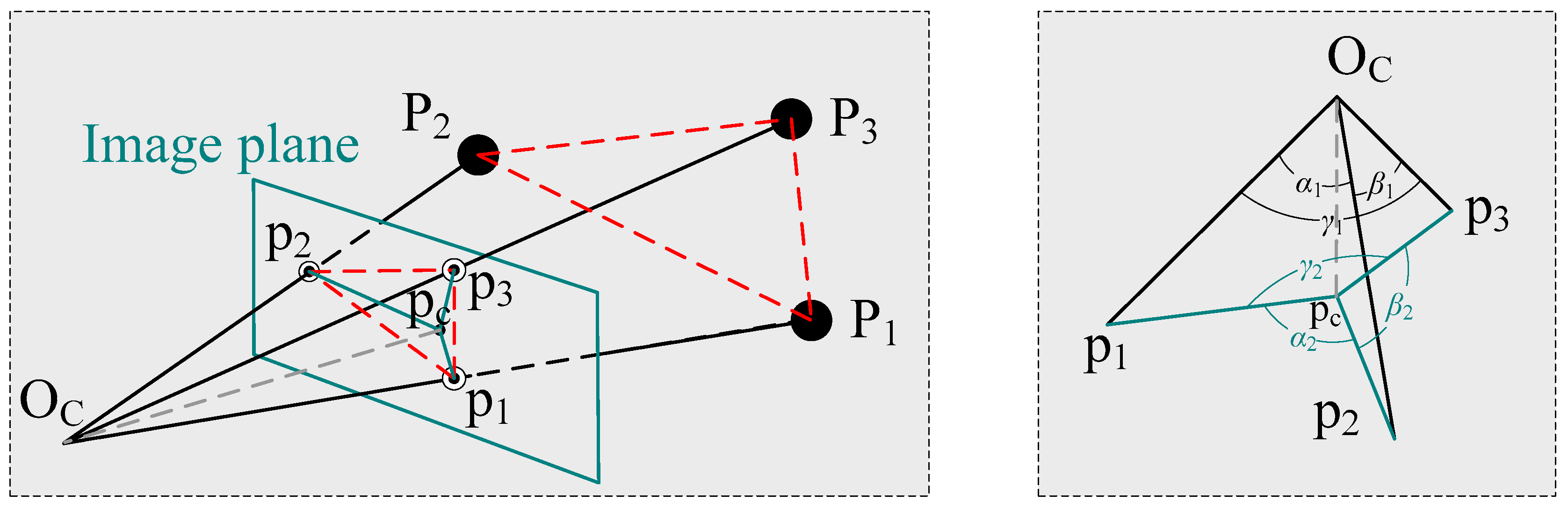

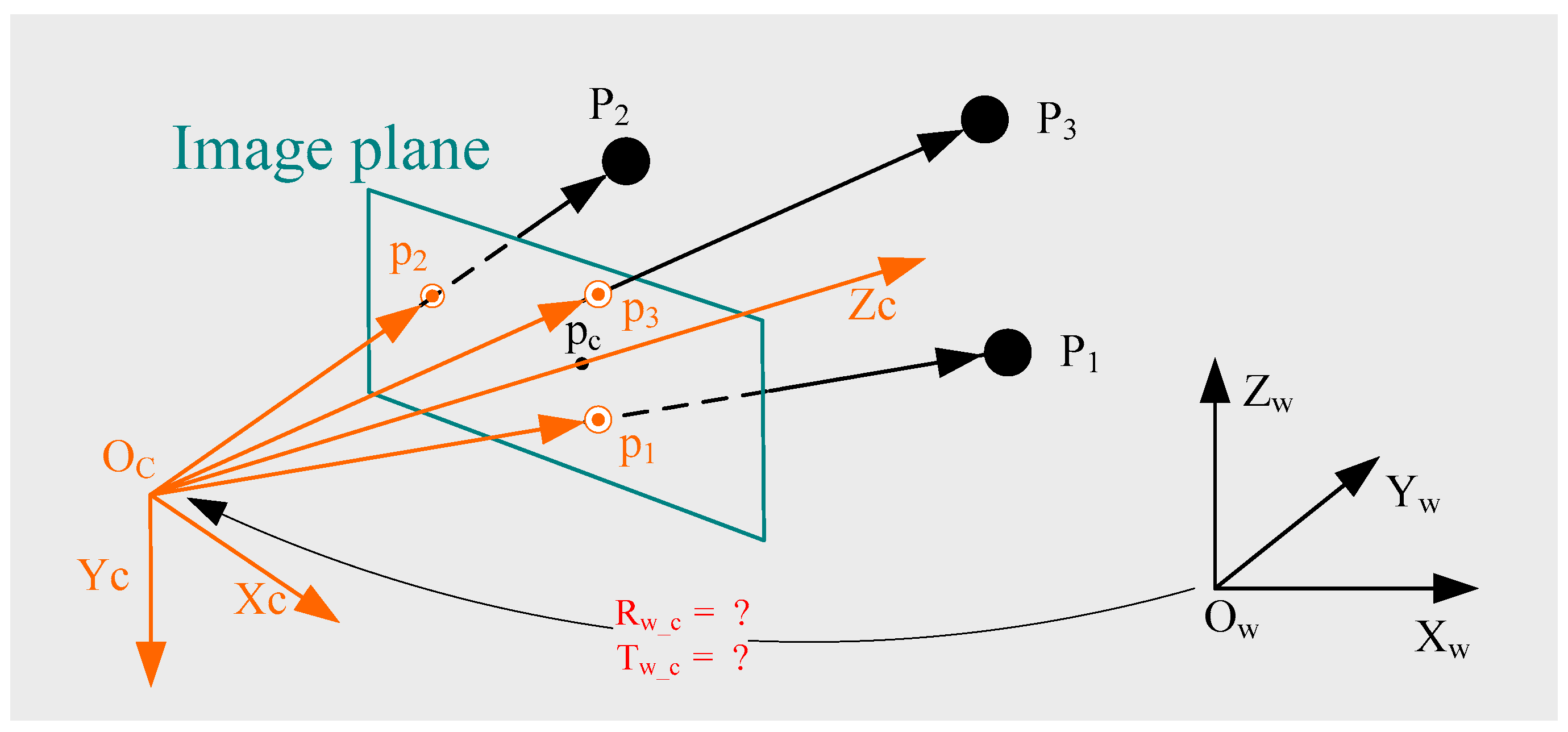

2.1. Problem Statement

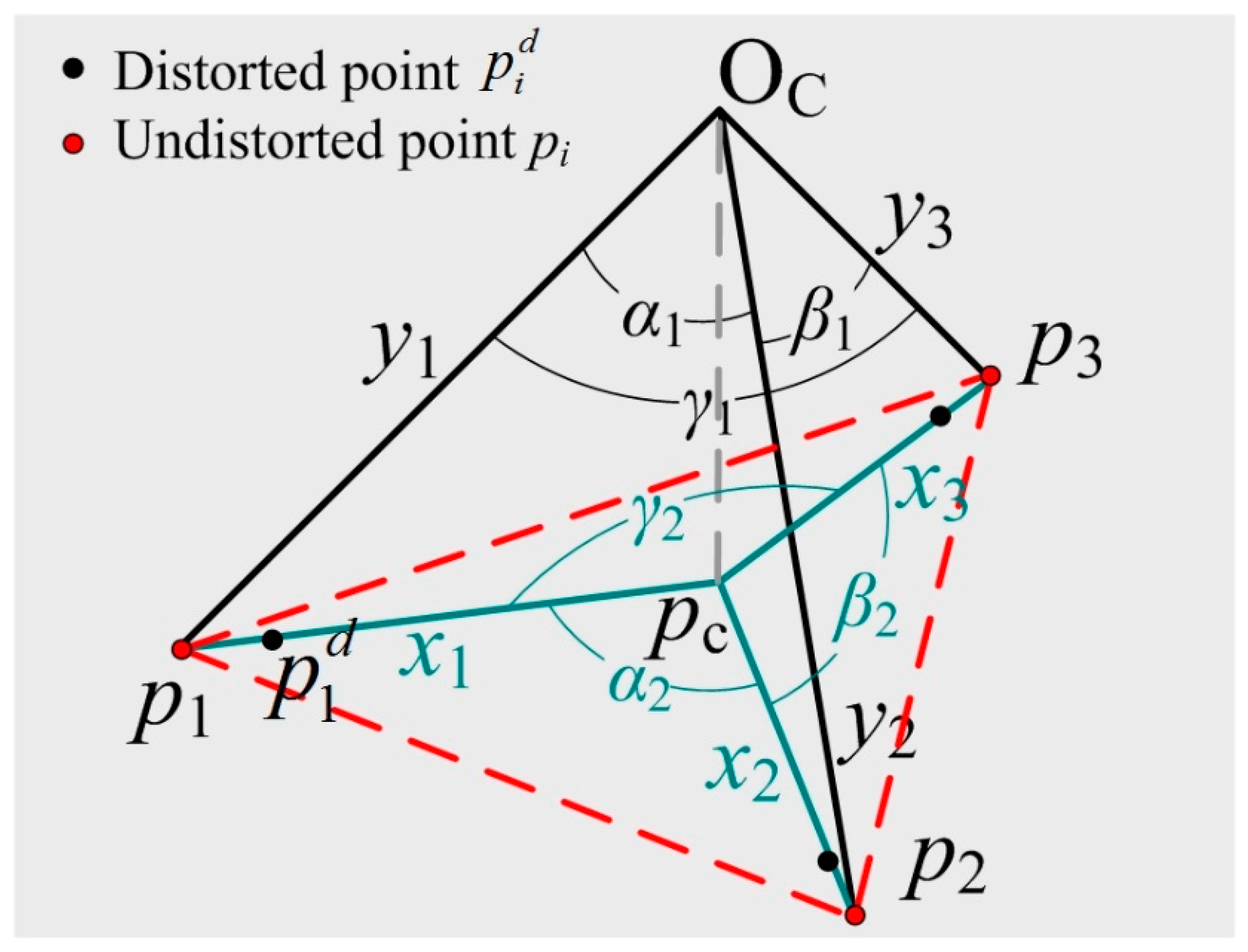

2.2. Radial Distortion and Focal Length Estimation

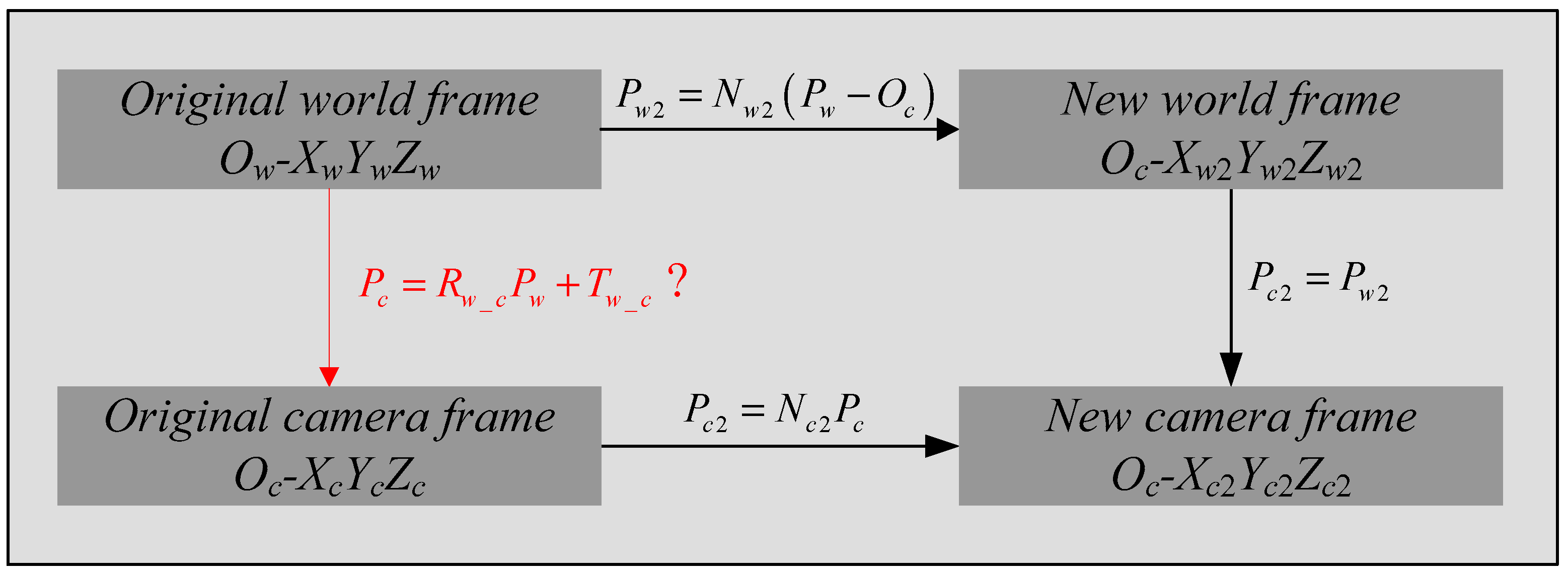

2.3. Camera Pose Estimation

3. Experiments and Results

3.1. Synthetic Data

3.1.1. Numerical Stability

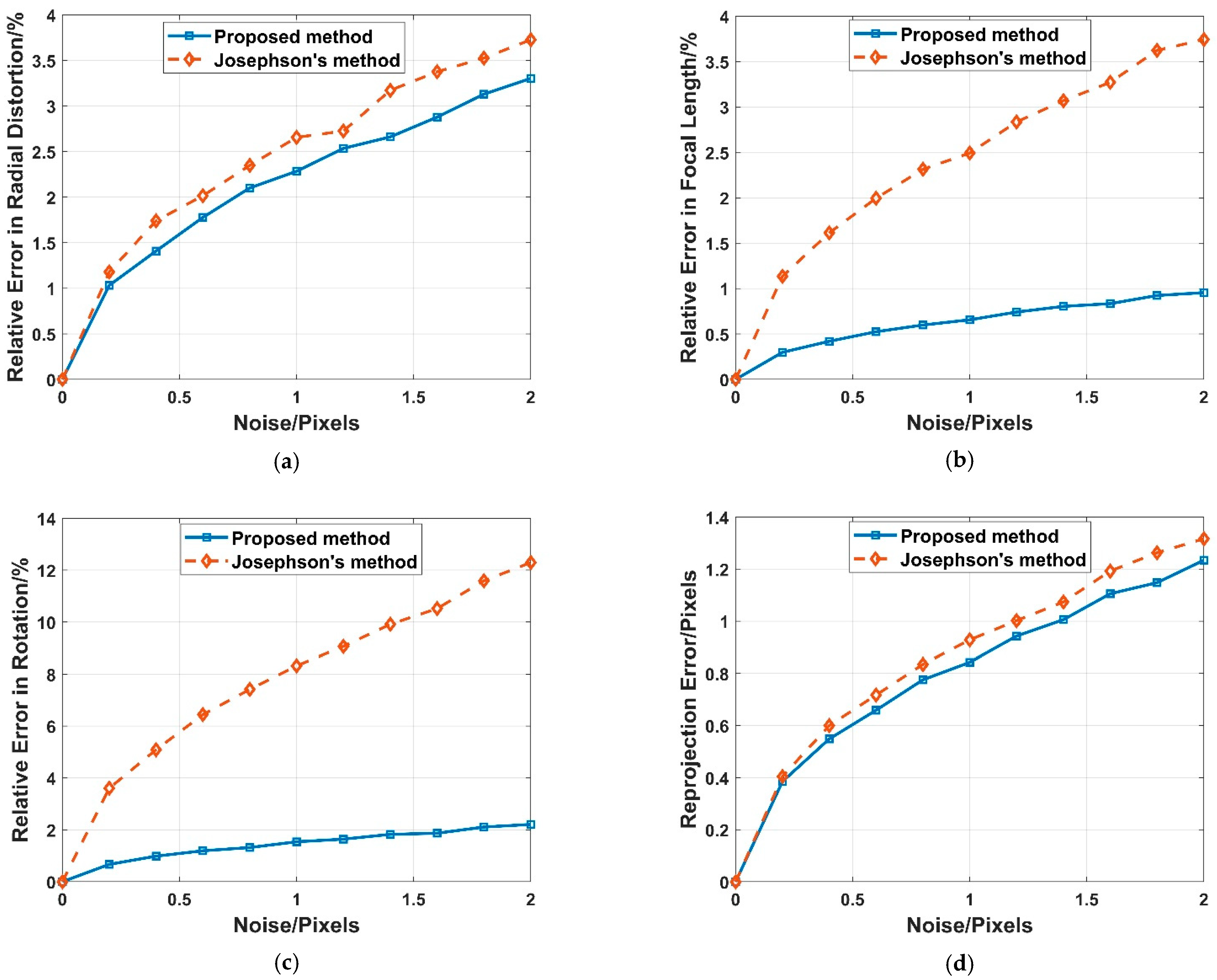

3.1.2. Noise Sensitivity

3.1.3. Computational Speed

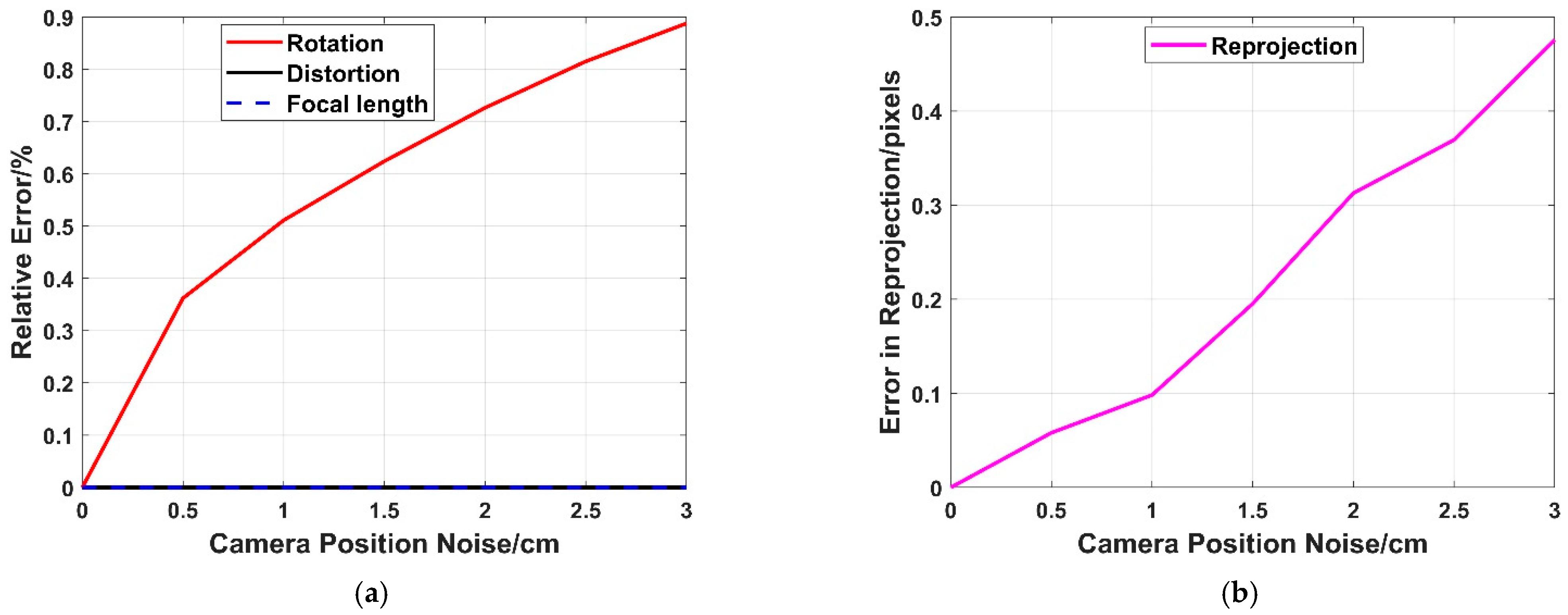

3.1.4. Robustness to Camera Position Noise

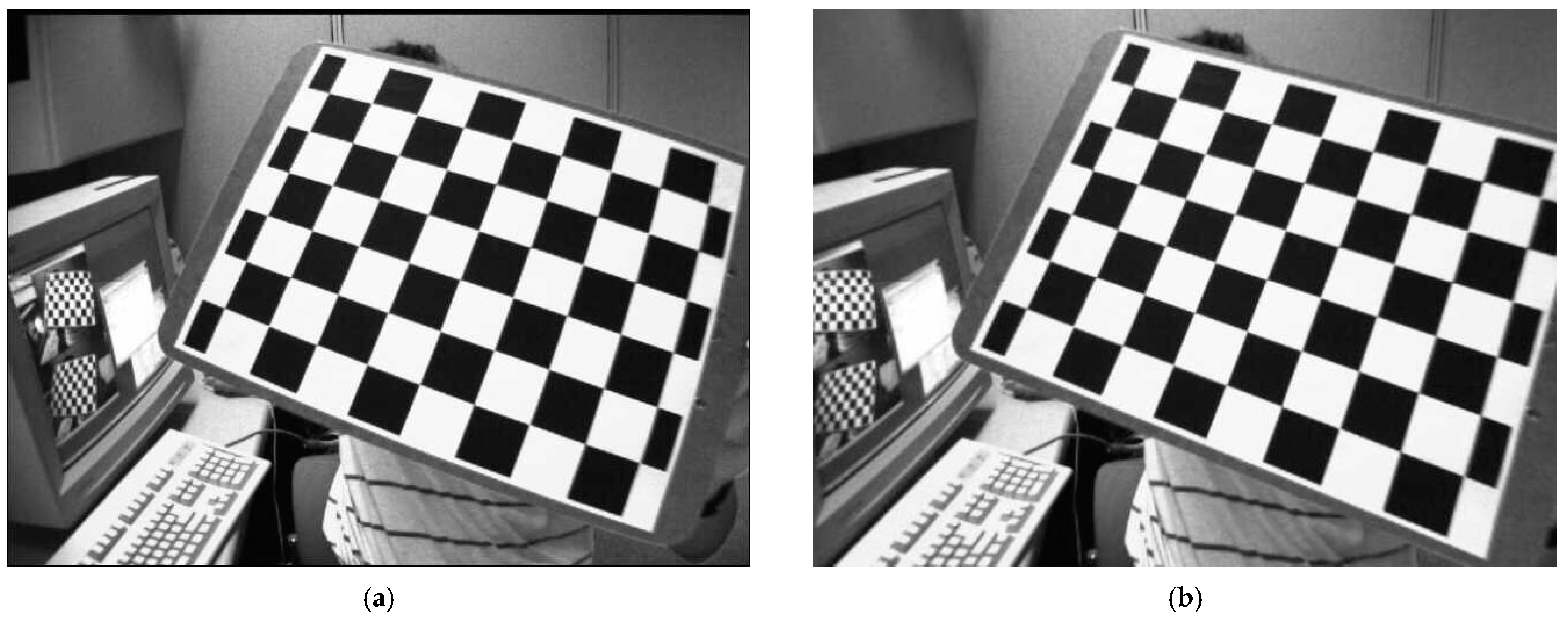

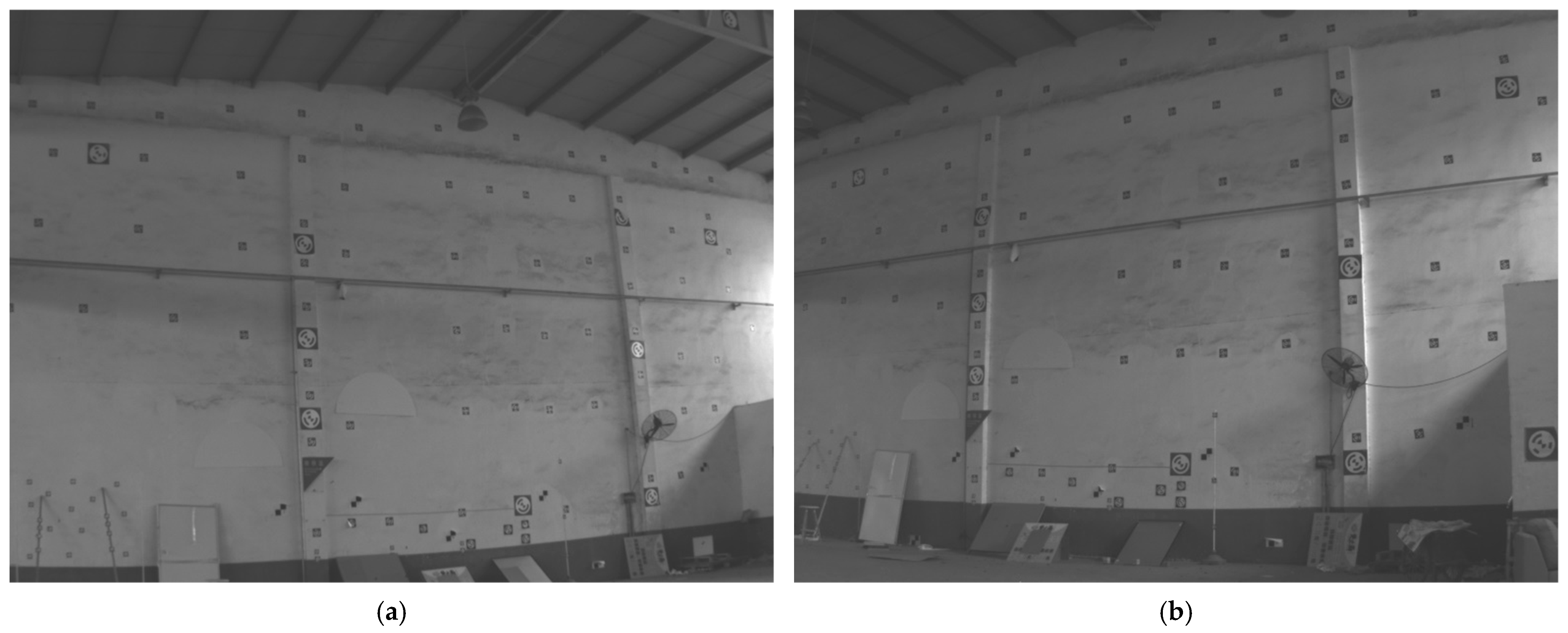

3.2. Real Images

4. Discussion

4.1. Difference and Advantage

4.2. Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, K.; Ye, H.; Chen, H.; Gao, X. A New Method for Absolute Pose Estimation with Unknown Focal Length and Radial Distortion. Sensors 2022, 22, 1841. https://doi.org/10.3390/s22051841
