Accuracy and Speed Improvement of Event Camera Motion Estimation Using a Bird’s-Eye View Transformation

Abstract

1. Introduction

1.1. Background

1.2. Motivations

1.3. Objectives

1.4. Related Works

1.5. Contributions

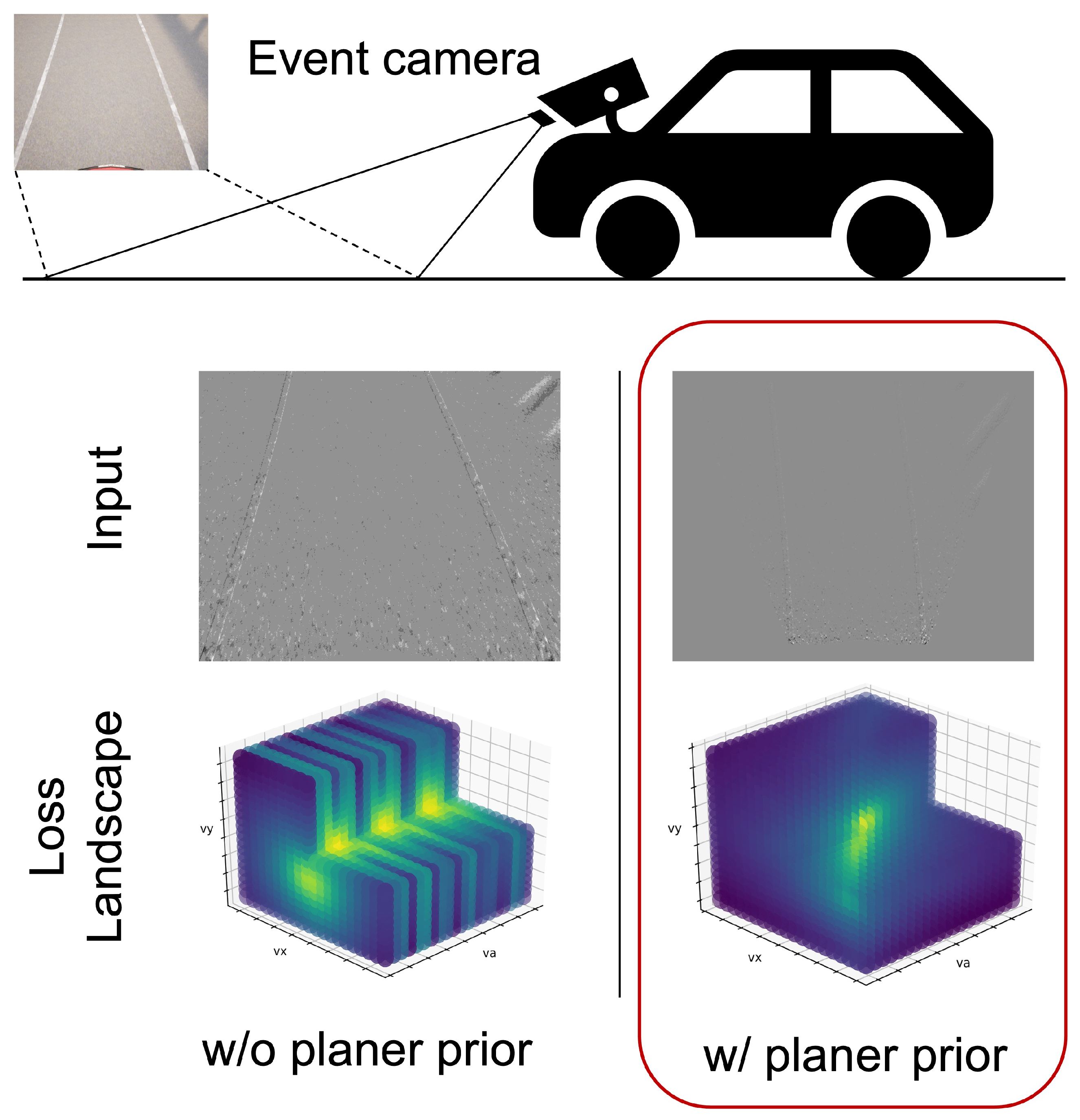

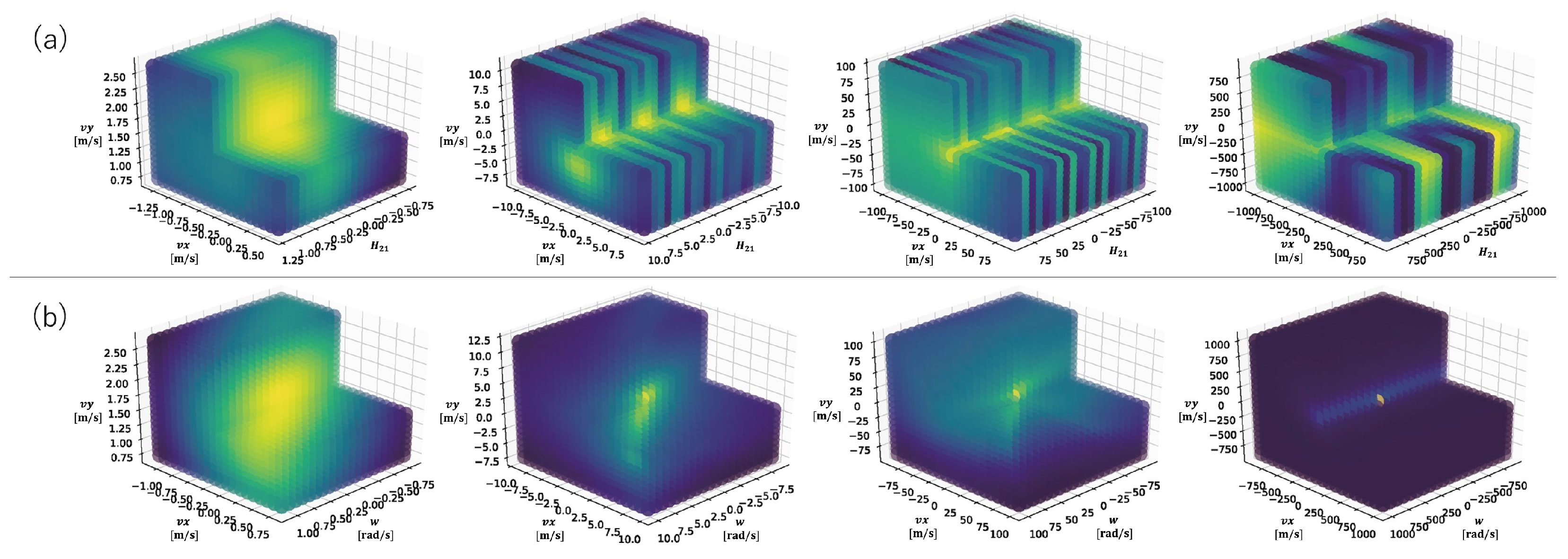

- We empirically found that applying a bird’s-eye view transformation to the event data makes the contrast maximization loss function convex around the true value. As a result:

    - The initial value of the estimation can be chosen from a wide range;

    - The global extremum corresponds to the true value.

- The effectiveness of the proposed method was demonstrated using both synthetic and real data.
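To make the first contribution more concrete, the following Python sketch shows contrast maximization on events that are assumed to be already expressed in bird’s-eye-view (BEV) coordinates. It is not the authors’ implementation. It assumes a purely translational, constant-velocity motion model in the BEV image (a real ground vehicle would also rotate), scores each candidate velocity by the variance of the image of warped events (IWE), and replaces an iterative optimizer with an exhaustive grid search. The homography `H` passed to `to_birds_eye`, all function names, and the synthetic data generator are hypothetical.

```python
import numpy as np


def to_birds_eye(xs, ys, H):
    """Map pixel coordinates (xs, ys) to bird's-eye-view coordinates.

    H is an assumed 3x3 image-to-ground-plane homography (hypothetical input;
    deriving it from intrinsics, camera height, and tilt is not shown here).
    """
    pts = np.stack([xs, ys, np.ones_like(xs)]).astype(float)  # shape (3, N)
    g = H @ pts
    return g[0] / g[2], g[1] / g[2]


def iwe_variance(xs, ys, ts, v, shape, t_ref=0.0):
    """Variance of the image of warped events (IWE) for a 2D velocity v."""
    # Warp each event back to t_ref assuming constant velocity v = (vx, vy).
    wx = xs - v[0] * (ts - t_ref)
    wy = ys - v[1] * (ts - t_ref)
    # Nearest-pixel voting; events warped outside the image are dropped.
    ix = np.round(wx).astype(int)
    iy = np.round(wy).astype(int)
    inside = (ix >= 0) & (ix < shape[1]) & (iy >= 0) & (iy < shape[0])
    iwe = np.zeros(shape)
    np.add.at(iwe, (iy[inside], ix[inside]), 1.0)
    return iwe.var()


def estimate_velocity(xs, ys, ts, shape, candidates):
    """Return the candidate velocity with the highest IWE contrast."""
    scores = [iwe_variance(xs, ys, ts, v, shape) for v in candidates]
    return candidates[int(np.argmax(scores))]


def make_synthetic_events(true_v, shape=(64, 64), n_points=40, n_per_point=25, seed=0):
    """Hypothetical test data: ground points translating at true_v in the BEV image."""
    rng = np.random.default_rng(seed)
    px = rng.uniform(10, shape[1] - 10, n_points)
    py = rng.uniform(10, shape[0] - 10, n_points)
    ts = rng.uniform(0.0, 0.5, (n_points, n_per_point))
    xs = px[:, None] + true_v[0] * ts
    ys = py[:, None] + true_v[1] * ts
    return xs.ravel(), ys.ravel(), ts.ravel()


if __name__ == "__main__":
    true_v = (20.0, -10.0)  # pixels per second in BEV coordinates
    xs, ys, ts = make_synthetic_events(true_v)
    grid = np.arange(-40.0, 41.0, 5.0)
    candidates = [(float(vx), float(vy)) for vx in grid for vy in grid]
    print(estimate_velocity(xs, ys, ts, (64, 64), candidates))
```

Under this assumption every ground point shares one image velocity in the BEV view, so a single candidate can align all events at once; in a perspective view, the image motion of the ground instead varies with depth.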

2. Materials and Methods

2.1. Event Data Representation

2.2. Contrast Maximization

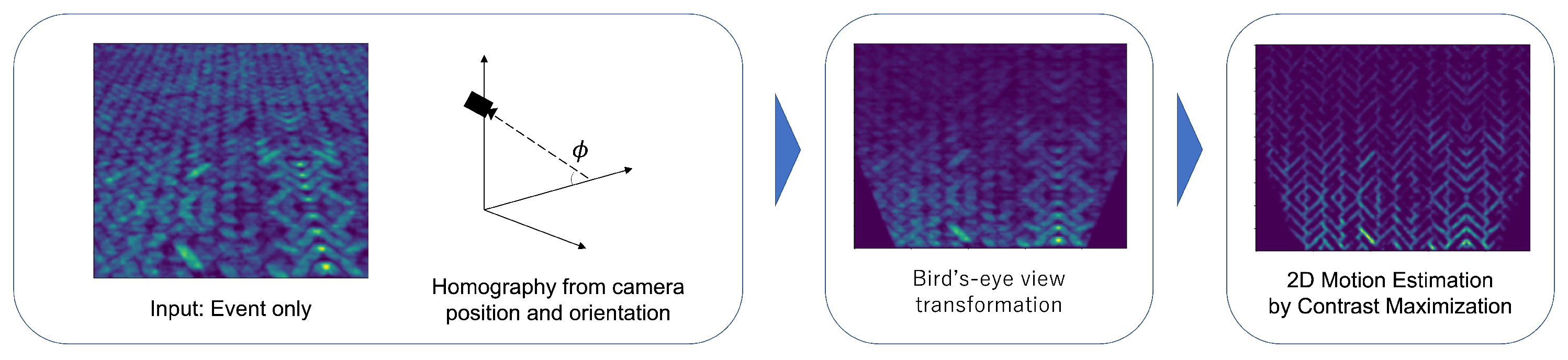

2.3. Bird’s-Eye View Transformation

3. Results

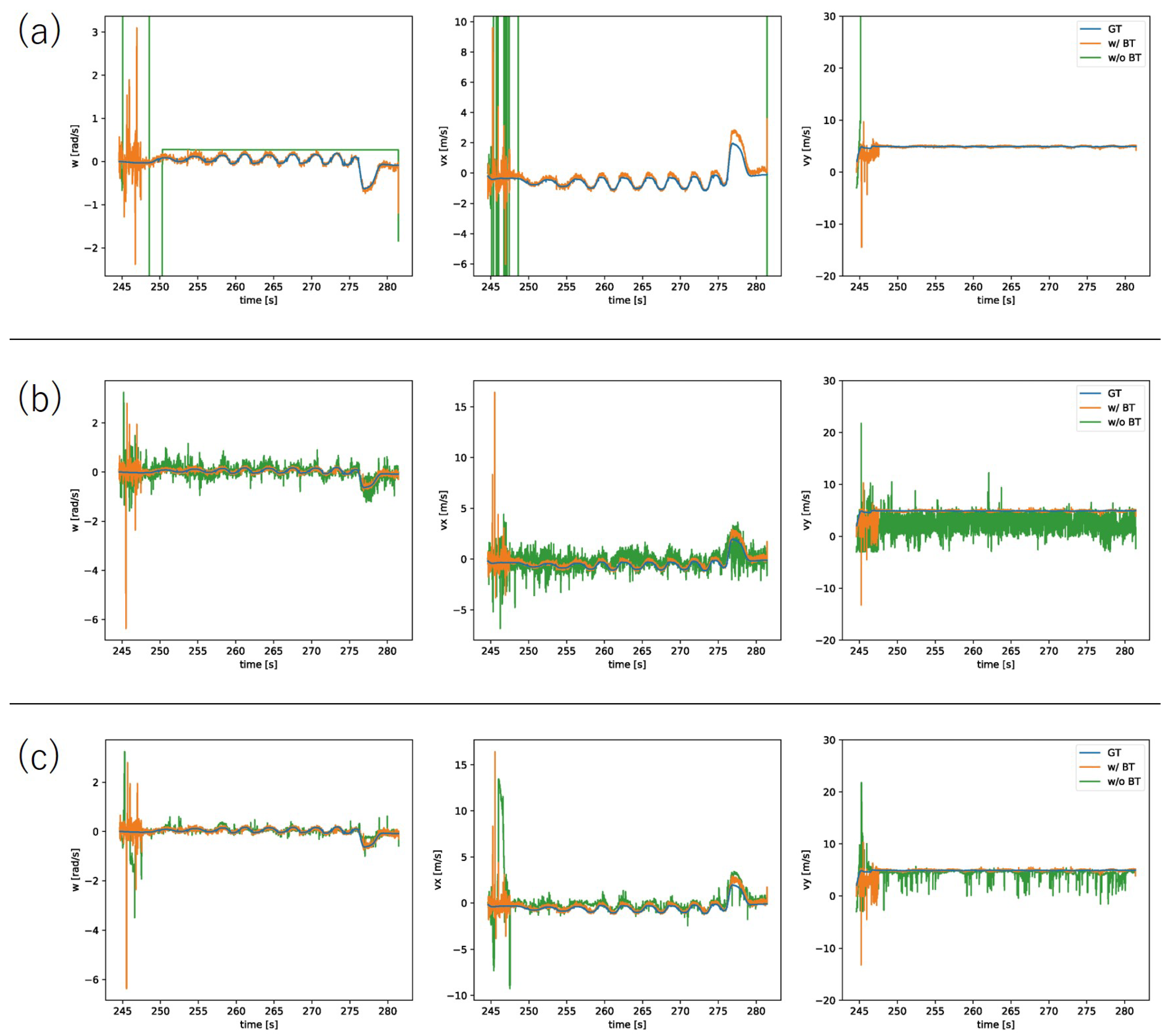

3.1. Accuracy and Speed Evaluation

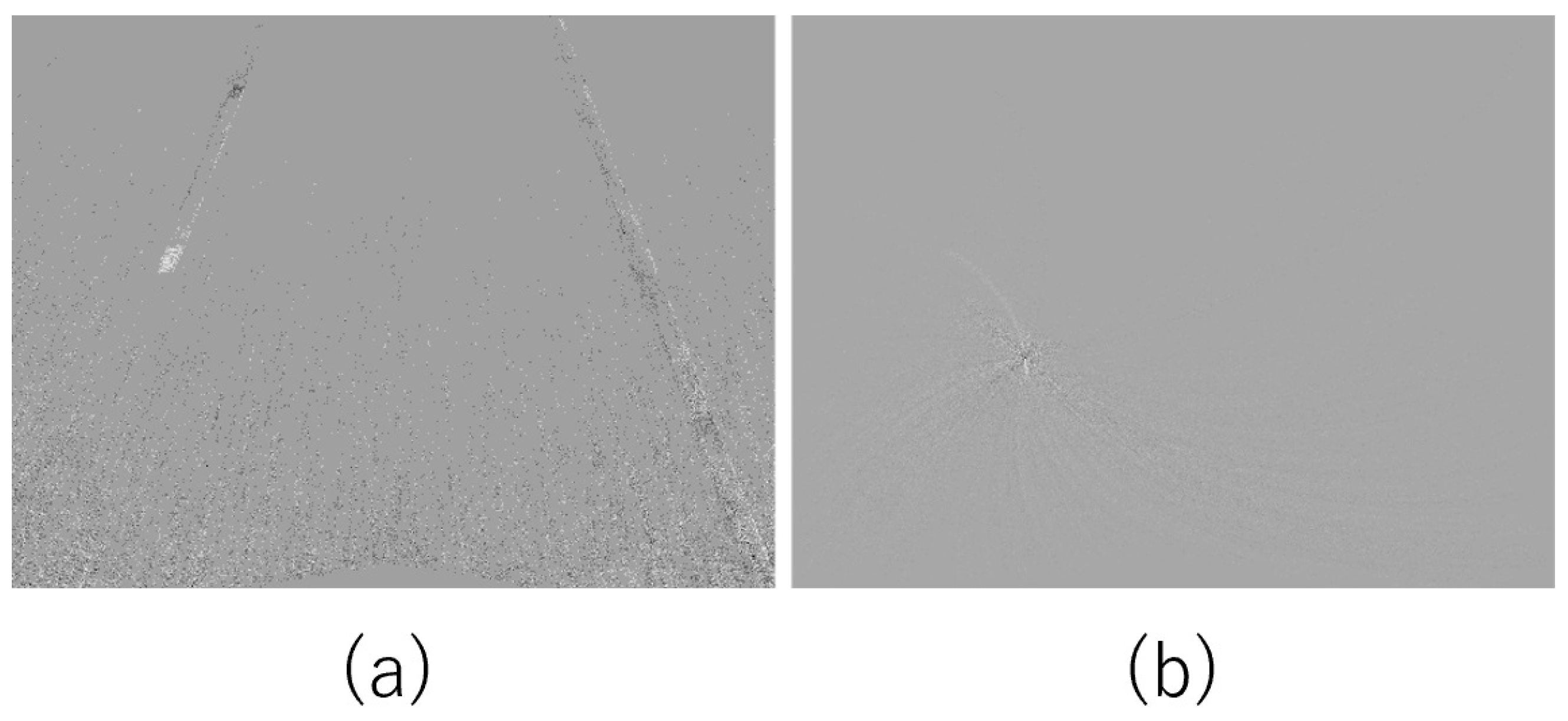

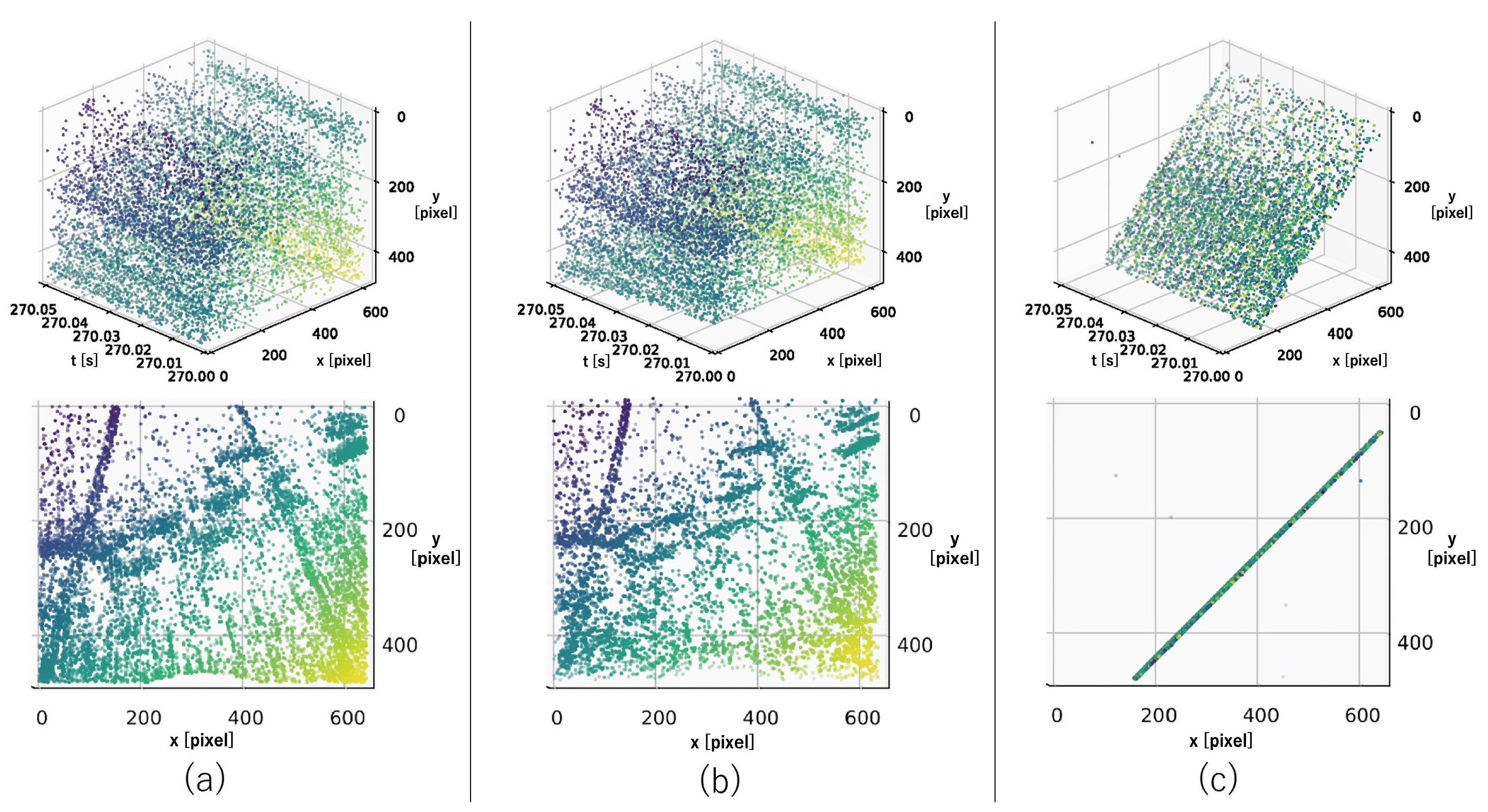

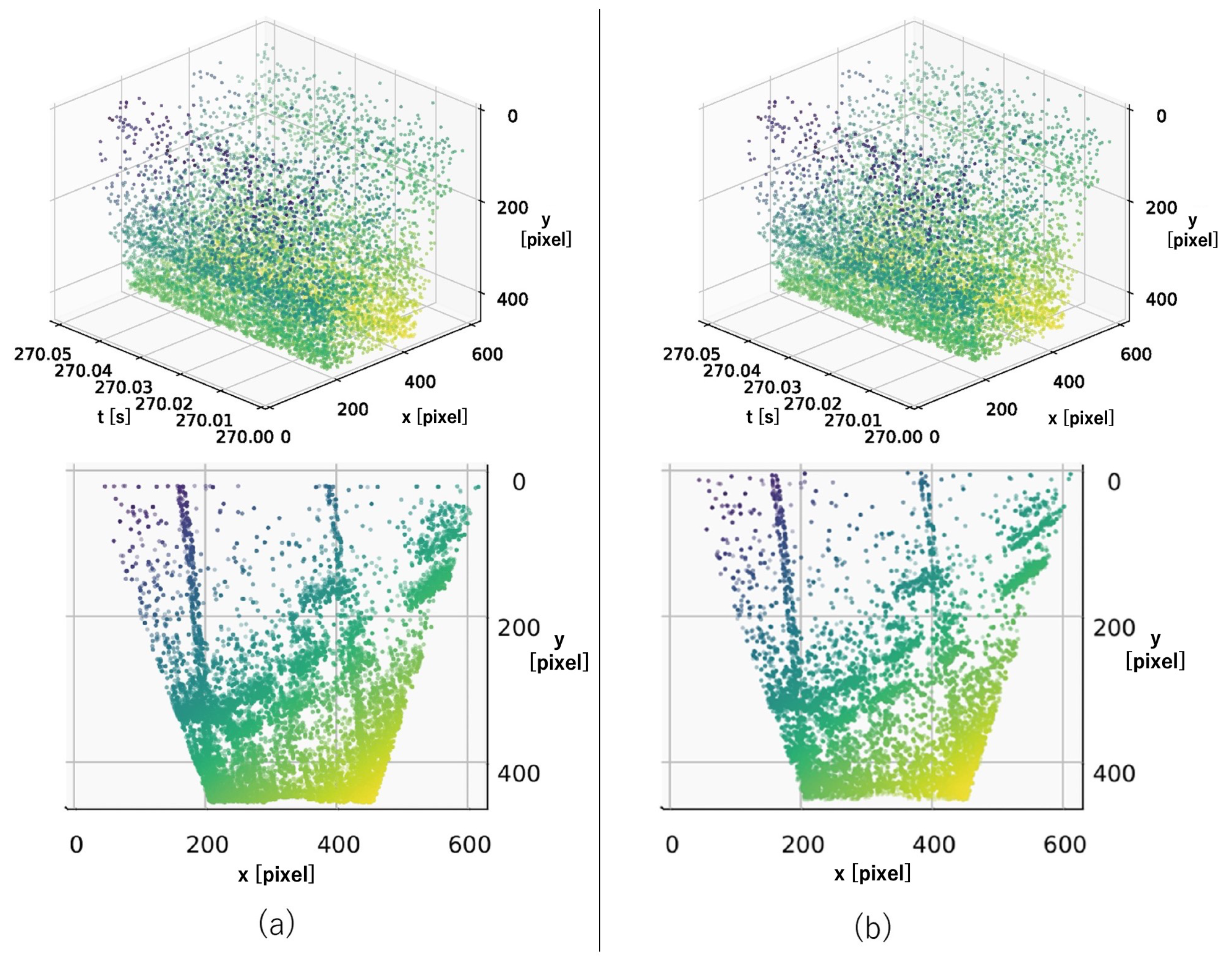

3.2. Loss Landscape

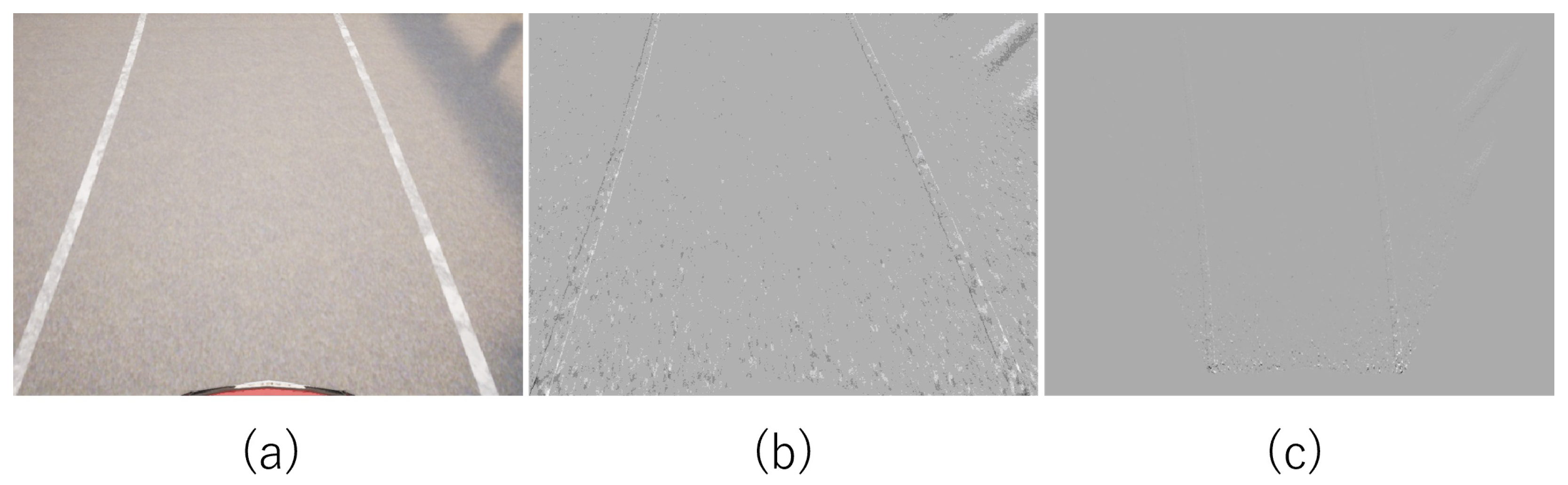

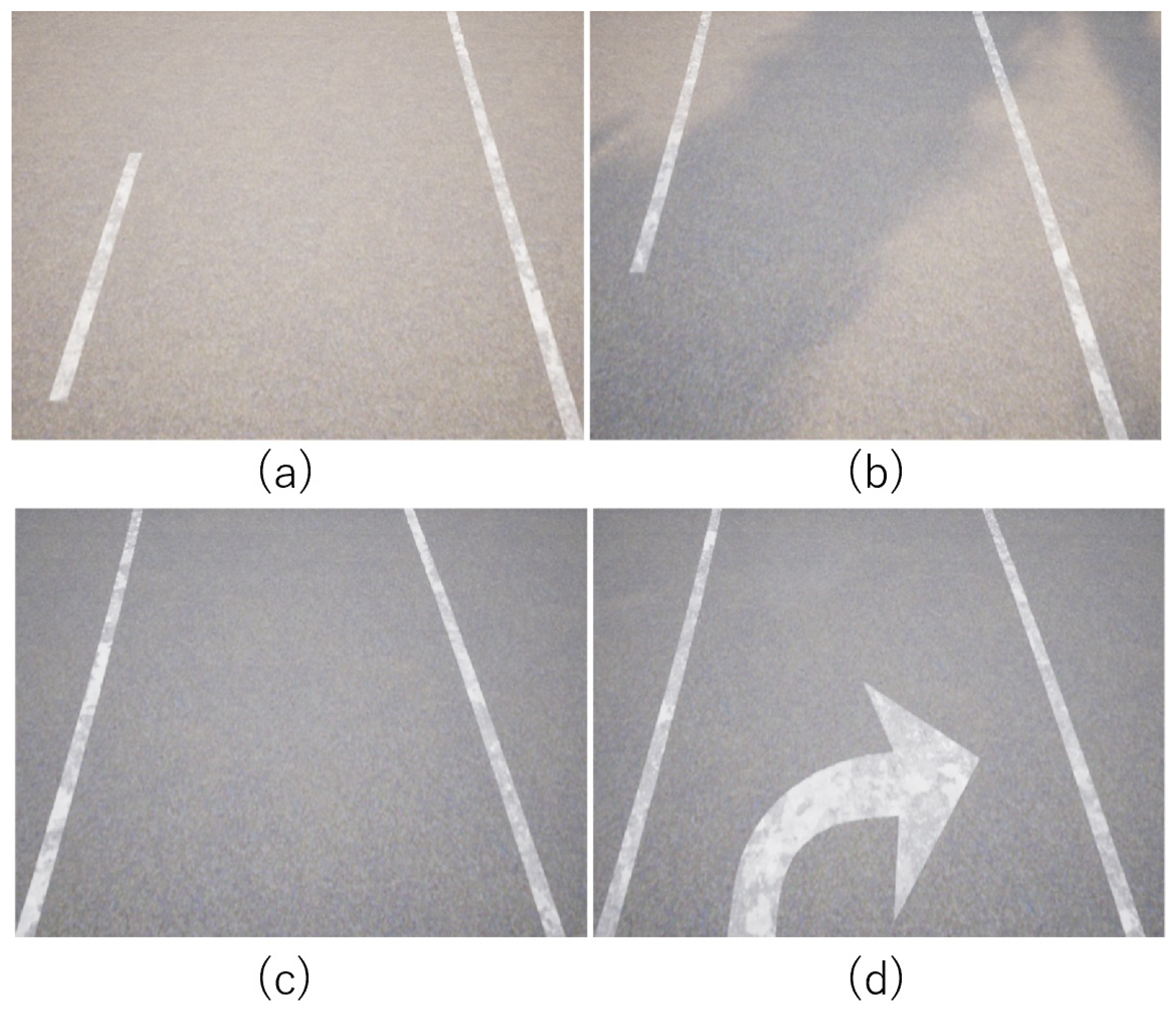

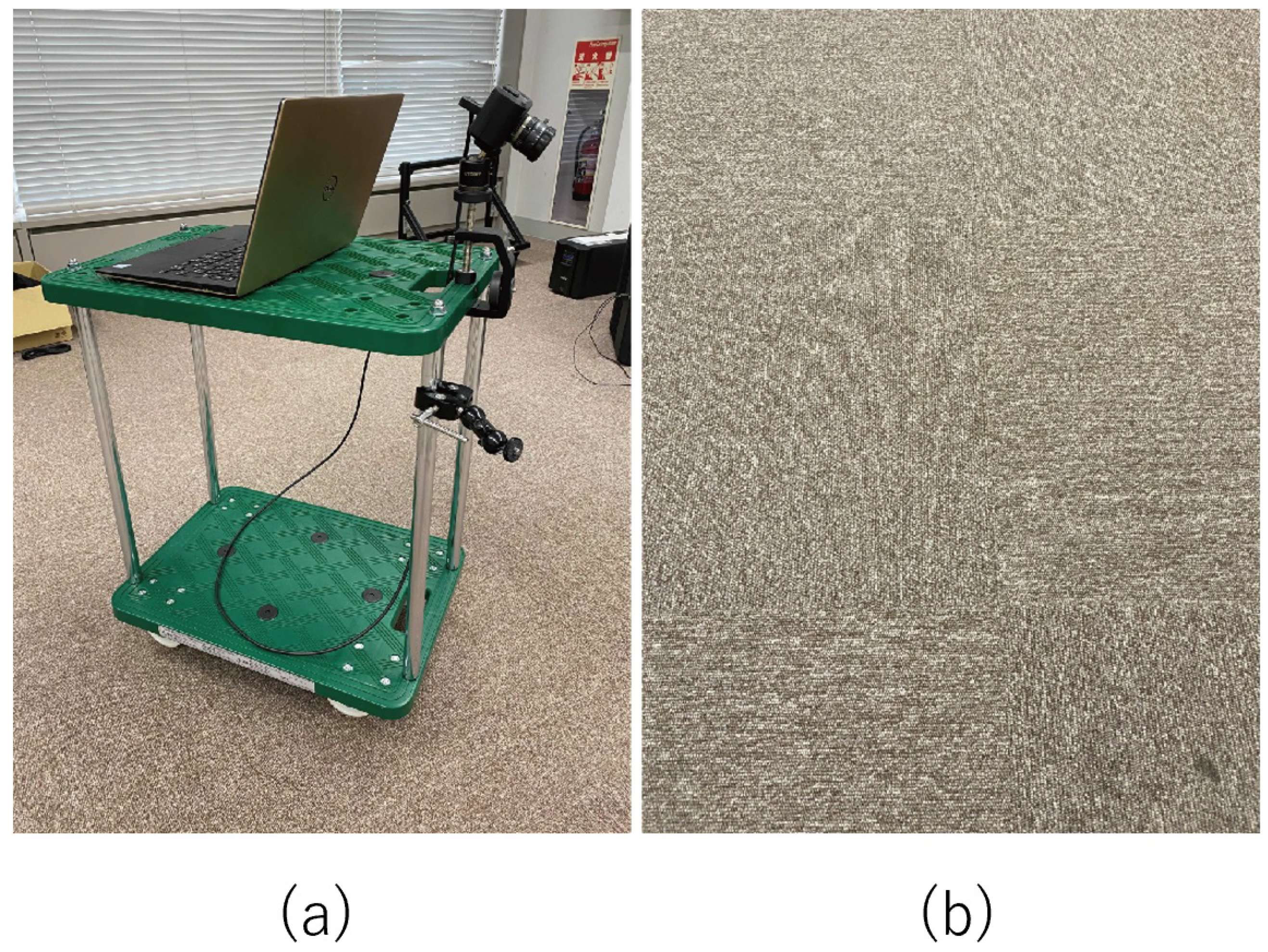

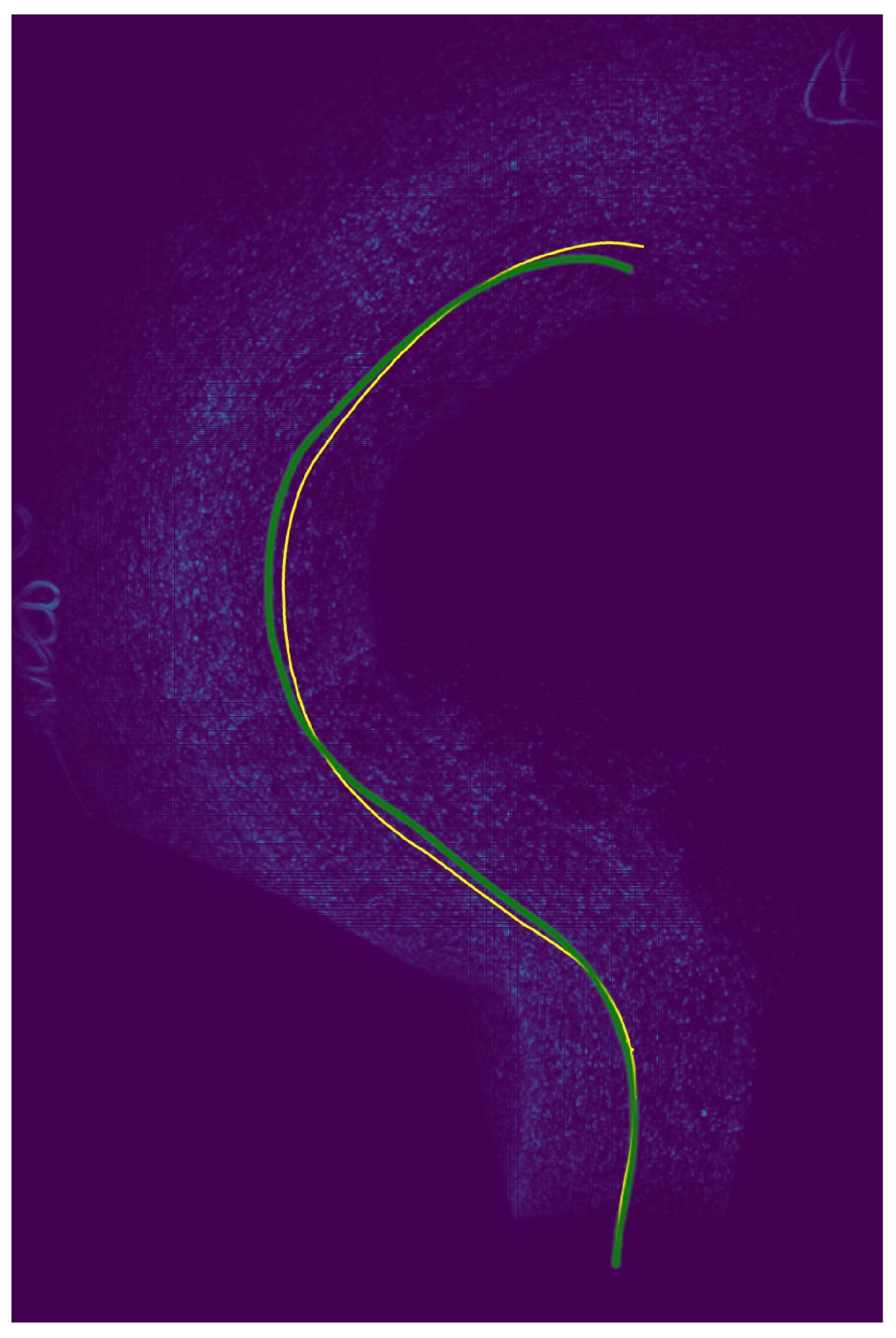

3.3. Evaluation with Real Data

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Metric | Scene | w/o BT (without bird’s-eye transformation) | Ours |
|---|---|---|---|
| Iterations | Scene 1 | 200 | 19 |
| | Scene 2 | 124 | 19 |
| | Scene 3 | 118 | 11 |
| | Scene 4 | 107 | 29 |
| | Ave. | 137.2 | 19.5 |
| Total time (s) | Scene 1 | 3.98 | 0.57 |
| | Scene 2 | 12.39 | 3.98 |
| | Scene 3 | 15.94 | 3.41 |
| | Scene 4 | 21.63 | 8.61 |
| | Ave. | 13.49 | 4.14 |
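The averages and relative gains in the table can be recomputed from the per-scene values. The short script below does so, with values copied from the table and illustrative helper names. The iteration averages evaluate to 137.25 (listed as 137.2) and 19.5, i.e. about 7 times fewer iterations, and the average total time drops from about 13.49 s to 4.14 s, a ratio of roughly 3.3.

```python
# Per-scene values copied from the table (Scenes 1-4).
iters = {"w/o BT": [200, 124, 118, 107], "Ours": [19, 19, 11, 29]}
times_s = {"w/o BT": [3.98, 12.39, 15.94, 21.63], "Ours": [0.57, 3.98, 3.41, 8.61]}


def mean(values):
    """Arithmetic mean over the four scenes."""
    return sum(values) / len(values)


def speedups(baseline, ours):
    """Per-scene ratio baseline / ours (values above 1 favour ours)."""
    return [b / o for b, o in zip(baseline, ours)]


if __name__ == "__main__":
    for name in ("w/o BT", "Ours"):
        print(name, mean(iters[name]), round(mean(times_s[name]), 2))
    per_scene = speedups(times_s["w/o BT"], times_s["Ours"])
    print("time ratio per scene:", [round(s, 1) for s in per_scene])
    print("average-time ratio:", round(mean(times_s["w/o BT"]) / mean(times_s["Ours"]), 2))
```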

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ozawa, T.; Sekikawa, Y.; Saito, H. Accuracy and Speed Improvement of Event Camera Motion Estimation Using a Bird’s-Eye View Transformation. Sensors 2022, 22, 773. https://doi.org/10.3390/s22030773
