Improving Network Training on Resource-Constrained Devices via Habituation Normalization

Abstract

1. Introduction

- We propose a new normalization method, Habituation Normalization (HN), based on the idea of habituation. HN can accelerate convergence and improve the accuracy of networks across a wide range of batch sizes.

- HN helps maintain the model’s stability. It avoids the accuracy degradation that occurs when the batch size is small and the performance saturation that occurs when the batch size is large.

- Experiments on LeNet-5, VGG16, and ResNet-50 show that HN adapts well to different network architectures.

- Applying HN to a deep learning-based EEG signal application system shows that HN is suitable for deep neural networks running on resource-constrained devices.

2. Related Works

2.1. Normalization

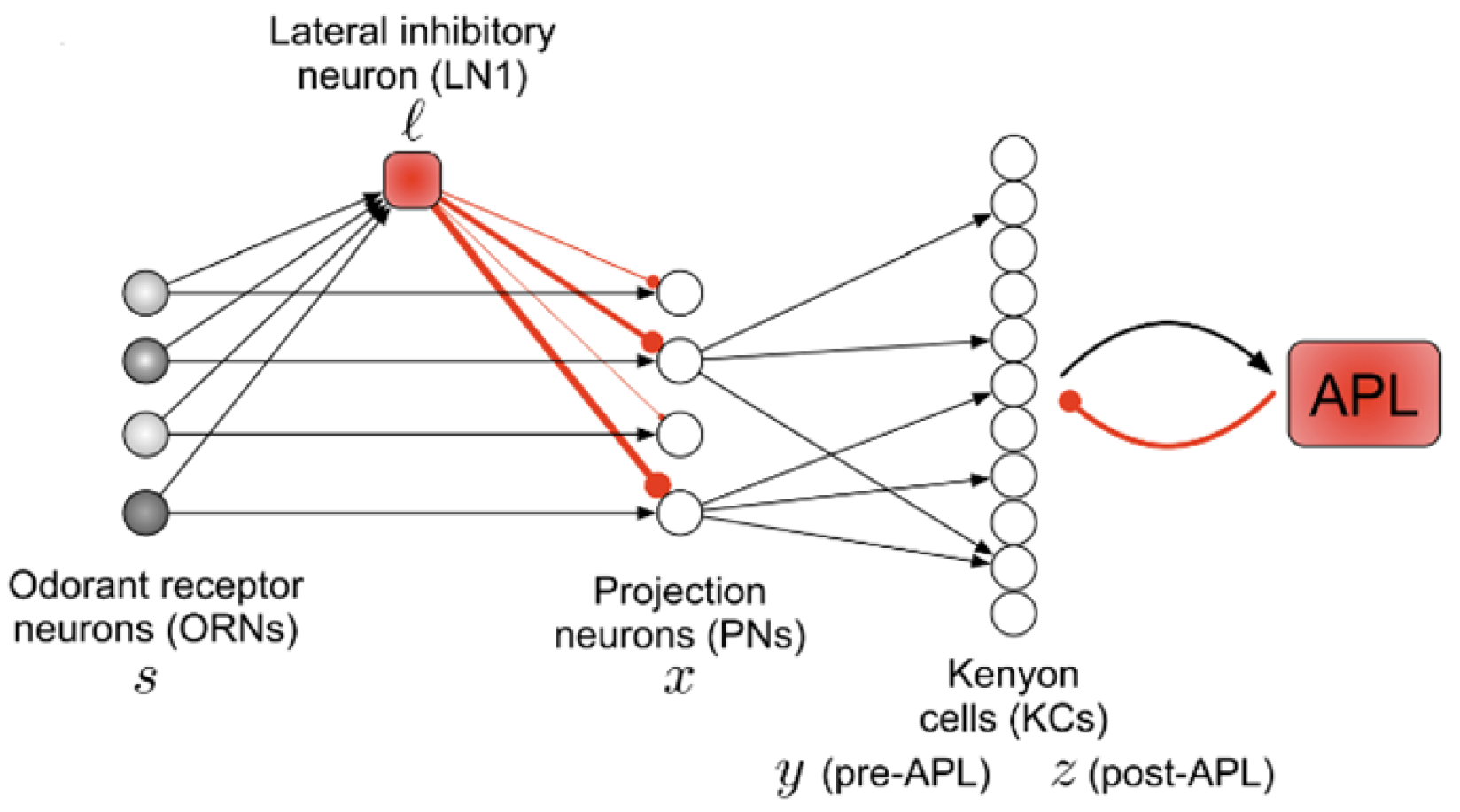

2.2. Biological Habituation and Applications

3. Method

3.1. The Theory of Existing Normalization
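
For orientation, the existing methods compared later in this paper (BN, LN, GN) can be read as the same standardization step applied over different axes of the activation tensor. The sketch below is a minimal NumPy illustration of that standard formulation, assuming an `(N, C, H, W)` layout and omitting the learnable scale and shift. The function names are hypothetical, and this is not the HN method of this paper.

```python
import numpy as np

def standardize(x, axes, eps=1e-5):
    """Subtract the mean and divide by the standard deviation over `axes`."""
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def batch_norm(x):
    # Statistics per channel, shared across the whole batch (N, H, W).
    return standardize(x, (0, 2, 3))

def layer_norm(x):
    # Statistics per sample, over all of its channels and positions (C, H, W).
    return standardize(x, (1, 2, 3))

def group_norm(x, groups):
    # Statistics per sample and per group of channels.
    n, c, h, w = x.shape
    grouped = x.reshape(n, groups, c // groups, h, w)
    return standardize(grouped, (2, 3, 4)).reshape(n, c, h, w)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8, 3, 3))   # a batch of 4 samples with 8 channels

# LN and GN treat each sample on its own, so shrinking the batch changes nothing.
ln_same = np.allclose(layer_norm(x)[0], layer_norm(x[:1])[0])
gn_same = np.allclose(group_norm(x, 2)[0], group_norm(x[:1], 2)[0])

# BN statistics depend on which samples share the batch.
bn_same = np.allclose(batch_norm(x)[:2], batch_norm(x[:2]))
```

In this sketch `ln_same` and `gn_same` are true while `bn_same` is false, which is the usual explanation for why BN is sensitive to small batch sizes and LN and GN are not.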

3.2. Habituation Normalization

- Stimulus adaptation (reduced responsiveness to neutral stimuli with no learned or innate value).

- Stimulus specificity (habituation to one stimulus does not reduce responsiveness to another stimulus).

- Reversibility (de-habituation to context when it becomes relevant).
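
The three properties above can be illustrated with a toy model. The sketch below is an assumption-laden illustration, not the HN layer of this paper: it keeps one exponentially updated trace per input channel and responds only to the part of the input that exceeds the trace. The class name `ToyHabituation` and the `rate` parameter are hypothetical.

```python
class ToyHabituation:
    """Toy habituating filter with one adaptive trace per input channel."""

    def __init__(self, n_channels, rate=0.5):
        self.rate = rate                  # how quickly a trace follows its input
        self.trace = [0.0] * n_channels   # running estimate of the "usual" input

    def step(self, stimulus):
        """Return the response to `stimulus`, then update the traces."""
        response = [max(x - t, 0.0) for x, t in zip(stimulus, self.trace)]
        self.trace = [t + self.rate * (x - t)
                      for x, t in zip(stimulus, self.trace)]
        return response


A, B, NOTHING = [1.0, 0.0], [0.0, 1.0], [0.0, 0.0]
h = ToyHabituation(n_channels=2)

first = h.step(A)            # a new stimulus gets a full response
for _ in range(9):
    h.step(A)
habituated = h.step(A)       # stimulus adaptation: the response to A has faded
novel = h.step(B)            # stimulus specificity: B still gets a full response
for _ in range(10):
    h.step(NOTHING)          # A is absent, so its trace decays
recovered = h.step(A)        # reversibility: the response to A returns
```

Because each channel has its own trace, habituating to `A` leaves the response to `B` untouched, and the same update rule that builds a trace also lets it decay once the stimulus disappears.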

3.3. Implementation

4. Experiment

4.1. Experimental Setup

- FASHION-MNIST [29]: FASHION-MNIST copies the external characteristics of the MNIST dataset: 60,000 training images with labels, 10,000 test images with labels, 10 categories, and a resolution of 28 × 28 per image. The difference is that FASHION-MNIST images are no longer abstract symbols but concrete everyday items, namely 10 types of clothing.

- CIFAR10 [30]: this dataset consists of 60,000 color images of 32 × 32 pixels, split into 50,000 training images and 10,000 test images and divided into 10 categories.

4.2. Comparisons on Convolutional Neural Networks

4.2.1. LeNet-5

4.2.2. VGG16

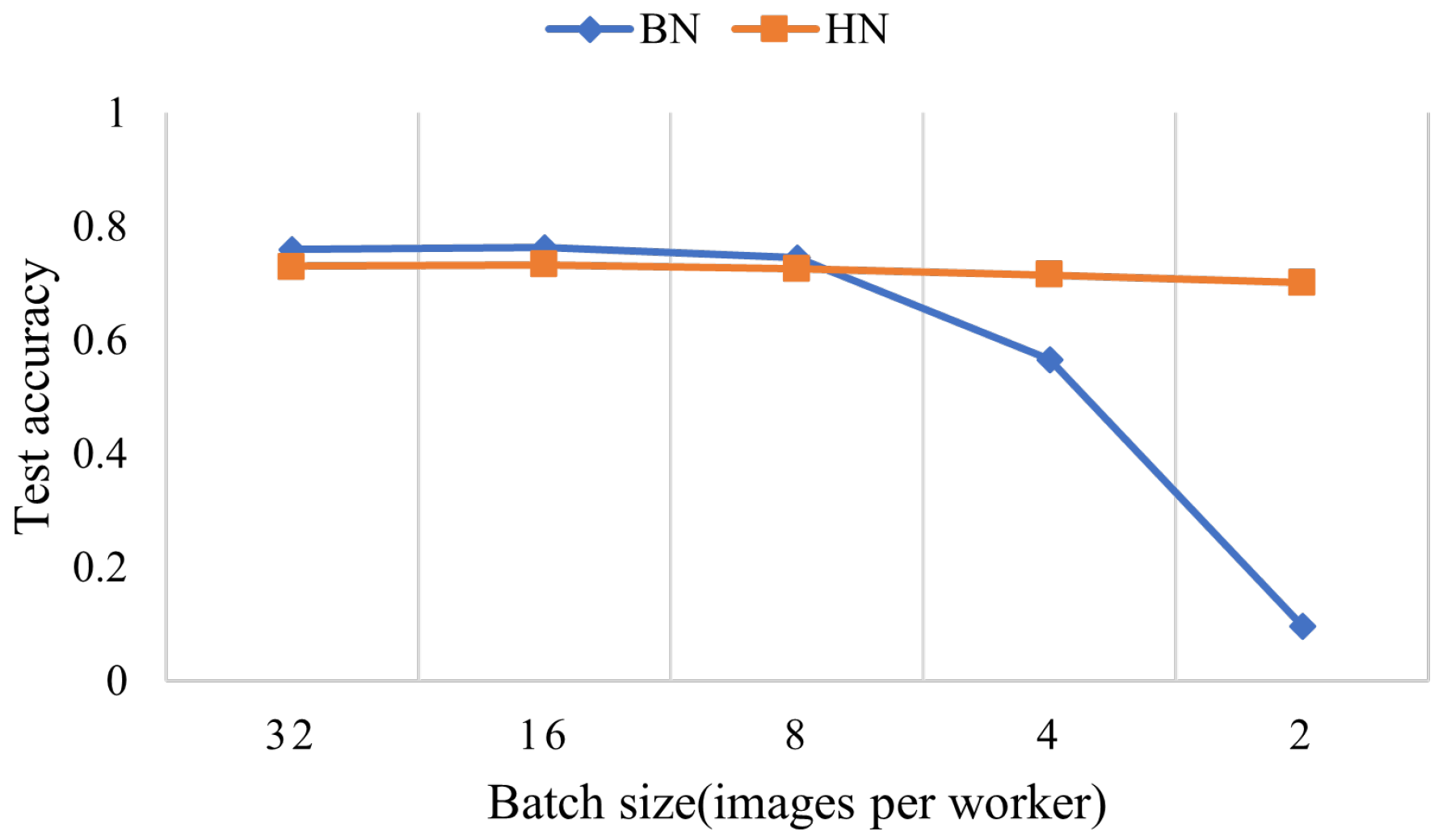

4.2.3. Comparisons on Residual Networks

4.3. Memory Requirement Analysis

5. Case Study

- Training: in a laboratory setting, collect enough EEG trials to train a deep neural network for pattern recognition.

- Deploying: deploy the pre-trained deep neural network model to the embedded device.

- Fine-tuning: fine-tune the deep neural network model while acquiring EEG trials.

- Applying: apply the fine-tuned and stabilized deep neural network model to control the embedded device.
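
The four stages above can be sketched end to end on toy data. The example below is only an illustration under loud assumptions: a logistic-regression model stands in for the deep neural network, synthetic Gaussian features stand in for EEG trials, and a constant `shift` stands in for the difference between laboratory and on-device recordings. All function names and hyperparameters are hypothetical.

```python
import numpy as np

def predict(w, b, X):
    """Return 0/1 predictions of a logistic-regression model."""
    return (X @ w + b > 0).astype(int)

def sgd(w, b, X, y, lr, batch_size, epochs):
    """Mini-batch gradient descent on the logistic loss; returns new (w, b)."""
    w, b = w.copy(), float(b)
    for _ in range(epochs):
        for i in range(0, len(X), batch_size):
            xb, yb = X[i:i + batch_size], y[i:i + batch_size]
            p = 1.0 / (1.0 + np.exp(-(xb @ w + b)))
            w -= lr * xb.T @ (p - yb) / len(xb)
            b -= lr * float(np.mean(p - yb))
    return w, b

def make_trials(rng, n, shift):
    """Synthetic two-class 'trials'; `shift` mimics a change between sessions."""
    y = rng.integers(0, 2, size=n)
    X = rng.normal(size=(n, 4)) + np.outer(2 * y - 1, np.ones(4)) + shift
    return X, y

rng = np.random.default_rng(0)

# 1. Training: plenty of laboratory trials, comfortable batch size.
X_lab, y_lab = make_trials(rng, 200, shift=0.0)
w, b = sgd(np.zeros(4), 0.0, X_lab, y_lab, lr=0.1, batch_size=32, epochs=20)

# 2. Deploying: the device receives a copy of the pre-trained parameters.
deployed = (w.copy(), b)

# 3. Fine-tuning: few on-device trials, so the batch size is very small.
X_dev, y_dev = make_trials(rng, 40, shift=1.5)
w_ft, b_ft = sgd(*deployed, X_dev, y_dev, lr=0.05, batch_size=2, epochs=5)

# 4. Applying: use the fine-tuned model on new on-device trials.
X_new, y_new = make_trials(rng, 100, shift=1.5)
acc_before = np.mean(predict(*deployed, X_new) == y_new)
acc_after = np.mean(predict(w_ft, b_ft, X_new) == y_new)
```

The point of the sketch is the shape of the workflow: the fine-tuning stage runs on the device with a batch size of 2, which is the regime where the choice of normalization method matters most.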

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. Proc. Int. Conf. Mach. Learn. 2015, 37, 448–456.

- Murad, N.; Pan, M.C.; Hsu, Y.F. Reconstruction and Localization of Tumors in Breast Optical Imaging via Convolution Neural Network Based on Batch Normalization Layers. IEEE Access 2022, 10, 57850–57864.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Salimans, T.; Kingma, D.P. Weight normalization: A simple reparameterization to accelerate training of deep neural networks. Adv. Neural Inf. Process. Syst. 2016, 29.

- Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Instance normalization: The missing ingredient for fast stylization. arXiv 2016, arXiv:1607.08022.

- Wu, Y.; He, K. Group normalization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Chen, Y.; Tang, X.; Qi, X.; Li, C.G.; Xiao, R. Learning graph normalization for graph neural networks. Neurocomputing 2022, 493, 613–625.

- Wilson, D.A.; Linster, C. Neurobiology of a simple memory. J. Neurophysiol. 2008, 100, 2–7.

- Shen, Y.; Dasgupta, S.; Navlakha, S. Habituation as a neural algorithm for online odor discrimination. Proc. Natl. Acad. Sci. USA 2020, 117, 12402–12410.

- Marsland, S.; Nehmzow, U.; Shapiro, J. Novelty detection on a mobile robot using habituation. arXiv 2000, arXiv:cs/0006007.

- Kim, C.; Lee, J.; Han, T.; Kim, Y.M. A hybrid framework combining background subtraction and deep neural networks for rapid person detection. J. Big Data 2018, 5, 1–24.

- Markou, M.; Singh, S. Novelty detection: A review—part 2: Neural network based approaches. Signal Process. 2003, 83, 2499–2521.

- Ioffe, S. Batch renormalization: Towards reducing minibatch dependence in batch-normalized models. Adv. Neural Inf. Process. Syst. 2017, 30.

- Daneshmand, H.; Joudaki, A.; Bach, F.R. Batch Normalization Orthogonalizes Representations in Deep Random Networks. In Proceedings of the Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, NeurIPS 2021, Virtual, 6–14 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y.N., Liang, P., Vaughan, J.W., Eds.; 2021; pp. 4896–4906. Available online: https://proceedings.neurips.cc/paper/2021 (accessed on 10 October 2022).

- Lobacheva, E.; Kodryan, M.; Chirkova, N.; Malinin, A.; Vetrov, D.P. On the Periodic Behavior of Neural Network Training with Batch Normalization and Weight Decay. In Proceedings of the Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, NeurIPS 2021, Virtual, 6–14 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y.N., Liang, P., Vaughan, J.W., Eds.; 2021; pp. 21545–21556. Available online: https://proceedings.neurips.cc/paper/2021 (accessed on 10 October 2022).

- Bailey, C.H.; Chen, M. Morphological basis of long-term habituation and sensitization in Aplysia. Science 1983, 220, 91–93.

- Greenberg, S.M.; Castellucci, V.F.; Bayley, H.; Schwartz, J.H. A molecular mechanism for long-term sensitization in Aplysia. Nature 1987, 329, 62–65.

- O’Keefe, J.; Nadel, L. The Hippocampus as a Cognitive Map; Oxford University Press: Oxford, UK, 1978.

- Ewert, J.P.; Kehl, W. Configurational prey-selection by individual experience in the toad Bufo bufo. J. Comp. Physiol. 1978, 126, 105–114.

- Wang, D.; Arbib, M.A. Modeling the dishabituation hierarchy: The role of the primordial hippocampus. Biol. Cybern. 1992, 67, 535–544.

- Thompson, R.F. The neurobiology of learning and memory. Science 1986, 233, 941–947.

- Dasgupta, S.; Stevens, C.F.; Navlakha, S. A neural algorithm for a fundamental computing problem. Science 2017, 358, 793–796.

- Groves, P.M.; Thompson, R.F. Habituation: A dual-process theory. Psychol. Rev. 1970, 77, 419.

- Stanley, J.C. Computer simulation of a model of habituation. Nature 1976, 261, 146–148.

- Wang, D.; Hsu, C. SLONN: A simulation language for modeling of neural networks. Simulation 1990, 55, 69–83.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-mnist: A novel image dataset for benchmarking machine learning algorithms. arXiv 2017, arXiv:1708.07747.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Technical Report; University of Toronto: Toronto, ON, Canada, 2009. Available online: https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf (accessed on 1 December 2022).

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 2017, 38, 5391–5420.

| Batch Size | 2 | 4 | 8 | 16 |
|---|---|---|---|---|
| Vanilla | 0.5408 | 0.5496 | 0.5702 | 0.5796 |
| BN | 0.2986 | 0.673 | 0.6854 | 0.6564 |
| GN | 0.592 | 0.618 | 0.6056 | 0.6096 |
| LN | 0.5826 | 0.5894 | 0.6178 | 0.598 |
| BRN | 0.3046 | 0.6328 | 0.6476 | 0.6498 |
| HN | 0.601 | 0.6114 | 0.6232 | 0.6278 |

| Batch Size | 2 | 4 | 8 | 16 |
|---|---|---|---|---|
| Vanilla | 0.100 | 0.100 | 0.100 | 0.100 |
| BN | 0.784 | 0.865 | 0.923 | 0.929 |
| HN | 0.884 | 0.924 | 0.924 | 0.923 |

| Batch Size | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|
| BN | 0.109 | 0.7606 | 0.8618 | 0.9124 | 0.9176 |
| BRN | 0.479 | 0.904 | 0.9132 | 0.9108 | 0.9138 |
| GN@G = 32 | 0.9062 | 0.9064 | 0.9038 | 0.9066 | 0.9046 |
| HN | 0.9024 | 0.906 | 0.9018 | 0.9056 | 0.9074 |

| Batch Size | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|
| BN | 0.0954 | 0.5658 | 0.7354 | 0.7344 | 0.7504 |
| BRN | 0.2052 | 0.7356 | 0.7370 | 0.7626 | 0.7552 |
| GN@G = 32 | 0.1 | 0.702 | 0.7016 | 0.7138 | 0.714 |
| GN@G = 2 | 0.7018 | 0.701 | 0.7186 | 0.721 | 0.7224 |
| HN | 0.7226 | 0.7254 | 0.7326 | 0.7218 | 0.7264 |

| Accuracy | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| BN | 0.840 | 0.483 | 0.882 | 0.740 | 0.288 | 0.535 | 0.924 | 0.778 | 0.764 | 0.693 |
| HN | 0.865 | 0.472 | 0.896 | 0.719 | 0.563 | 0.569 | 0.910 | 0.799 | 0.788 | 0.731 |
| Δ | 0.025 | −0.011 | 0.014 | −0.021 | 0.275 | 0.034 | −0.014 | 0.021 | 0.024 | 0.038 |
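
The Δ row in the table above is HN minus BN, column by column. As a quick arithmetic check, the snippet below recomputes it from the BN and HN rows of that table. The variable names are illustrative only.

```python
# Values copied from the BN and HN rows (columns 1 to 9) of the table above.
bn = [0.840, 0.483, 0.882, 0.740, 0.288, 0.535, 0.924, 0.778, 0.764]
hn = [0.865, 0.472, 0.896, 0.719, 0.563, 0.569, 0.910, 0.799, 0.788]

# Per-column difference, rounded to the three decimals used in the table.
delta = [round(h - b, 3) for h, b in zip(hn, bn)]

# Row averages, rounded the same way.
bn_avg = round(sum(bn) / len(bn), 3)   # 0.693
hn_avg = round(sum(hn) / len(hn), 3)   # 0.731

# Two ways to get an "average Δ": they differ in the last digit here.
delta_of_avgs = round(hn_avg - bn_avg, 3)                               # 0.038
avg_of_deltas = round(sum(h - b for h, b in zip(hn, bn)) / len(bn), 3)  # 0.039
```

The tabulated average Δ of 0.038 matches the difference of the two rounded averages. Averaging the unrounded per-column differences gives 0.0386, which rounds to 0.039.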

| Accuracy | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| BN | 0.792 | 0.431 | 0.837 | 0.642 | 0.368 | 0.517 | 0.927 | 0.740 | 0.736 | 0.666 |
| HN | 0.840 | 0.465 | 0.865 | 0.708 | 0.566 | 0.535 | 0.906 | 0.785 | 0.806 | 0.720 |
| Δ | 0.048 | 0.034 | 0.028 | 0.066 | 0.198 | 0.018 | −0.021 | 0.045 | 0.070 | 0.046 |

| Accuracy | 2→1 | 4→3 | 6→5 | 8→7 | 1→9 | Average |
|---|---|---|---|---|---|---|
| BN | 0.507 | 0.628 | 0.288 | 0.566 | 0.587 | 0.515 |
| HN | 0.594 | 0.635 | 0.340 | 0.597 | 0.628 | 0.559 |
| Δ | 0.087 | 0.007 | 0.052 | 0.031 | 0.041 | 0.044 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lai, H.; Zhang, L.; Zhang, S. Improving Network Training on Resource-Constrained Devices via Habituation Normalization. Sensors 2022, 22, 9940. https://doi.org/10.3390/s22249940
