Abstract

Radar is widely employed in many applications, especially autonomous driving. At present, radars are designed only as simple data collectors, and as environmental complexity increases, they cannot meet new requirements for real-time, intelligent information processing. Smart radar systems must therefore be developed to address these challenges, and digital twins in cyber-physical systems (CPS) have proven to be effective tools in many respects. However, humans are closely involved in radar technology and play an important role in the operation and management of radars; because they give inadequate consideration to human factors, digital-twin radars in CPS are insufficient for realizing smart radar systems. In this paper, ACP-based parallel intelligence in cyber-physical-social systems (CPSS) is used to construct a novel framework for smart radars, called Parallel Radars. A Parallel Radar consists of three main parts: a Descriptive Radar for constructing artificial radar systems in cyberspace, a Predictive Radar for conducting computational experiments with the artificial systems, and a Prescriptive Radar for providing prescriptive control to both physical and artificial radars to complete parallel execution. To connect data silos and protect data privacy, federated radars are proposed. Additionally, taking mines as an example, the application of Parallel Radars in autonomous driving is discussed in detail, and various experiments are conducted to demonstrate the effectiveness of Parallel Radars.

1. Introduction

A radar is an active sensor that plays an increasingly important role in many fields, such as national defense [1,2], traditional industry [3,4,5,6,7,8,9,10], and autonomous driving [11,12]. Current radars collect data using electromagnetic waves to acquire three-dimensional information about their surroundings. However, as the environment grows more complex, traditional radars cannot satisfy new functional requirements, and smart radar systems must be built that adapt to the changing environment in real time. With the rapid development of artificial intelligence and computer science, digital twins in cyber-physical systems (CPS) [13,14,15], which are regarded as key to the next industrial revolution, are being used to construct digital radars in cyberspace to achieve intelligence. Radar models in CPS [16,17,18,19,20,21,22,23,24,25] have been extensively researched and have proven effective in solving many problems, including generating virtual data for various downstream tasks [26,27,28,29,30,31,32,33,34] and closed-loop testing.

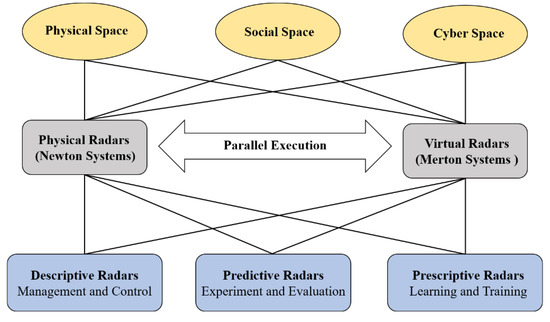

In real applications, human involvement exerts a tremendous influence on the operation and maintenance of radar systems; thus, radar systems should be regarded as Morton systems with self-actualization rather than Newton systems [35]. Because they do not fully consider human factors, digital-twin radars in CPS are insufficient for constructing smart radar systems. ACP-based parallel intelligence, proposed by Prof. Wang, has demonstrated clear advantages in realizing intelligence in cyber-physical-social systems (CPSS) [36,37,38]. The ACP method comprises artificial societies, computational experiments, and parallel execution, and it has been widely used in many applications, such as control and management [39,40,41], driving [42,43,44], scenario engineering [45,46], and light fields [47,48,49]. Based on parallel theory, this paper proposes Parallel Radars, a new framework that consists of Descriptive Radars, Predictive Radars, and Prescriptive Radars. It is a paradigm for future smart radars that can not only overcome radar hardware limitations through cloud computing but also make intelligent adjustments in real time. The main contributions of this paper can be summarized as follows:

- To overcome the hardware limitations of radars, we propose the novel framework Parallel Radars, a virtual-real interactive radar system in CPSS. It constructs a complete closed loop between physical space and cyberspace to achieve digital intelligence.

- In order to utilize Parallel Radars’ data more efficiently, federated radars are put forward to connect data silos and protect data privacy.

This paper is organized as follows. Section 2 briefly introduces the principles and applications of commonly used radars. Section 3 presents the Parallel Radars framework in detail, and Section 4 discusses federated radars, which focus on data security. Section 5 describes and analyzes experiments on the application of Parallel Radars to autonomous driving in mines and also discusses applications in traditional industry and military use. Section 6 presents the conclusions and outlines prospects for future work on Parallel Radars.

2. Radar Systems

Radar is a broad concept; there are various types of radars for different applications, such as millimeter wave (mm-wave) radars, LiDARs, and synthetic aperture radars (SAR). In this section, we only focus on mm-wave radars and LiDARs, which are widely applied in autonomous driving.

2.1. Principles

This subsection clarifies the working principles of mm-wave radars and LiDARs in detail. Because their working principles differ, each has distinct characteristics and is deployed for different tasks.

2.1.1. Mm-Wave Radars

Mm-wave radars use electromagnetic waves to measure the range, Doppler velocity, and azimuth angle of a target. The two most frequently used frequencies in automotive mm-wave radars are 24 GHz and 77 GHz, and 77 GHz is generally preferred because it offers higher resolution and allows smaller antennas [50].
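The preference for 77 GHz can be made concrete with two textbook relations: the free-space wavelength λ = c/f, which sets antenna dimensions, and the FMCW range resolution c/(2B), which depends on the sweep bandwidth B. The Python sketch below compares the two bands; the bandwidths it uses (250 MHz for the 24 GHz ISM band and 4 GHz for the 77–81 GHz band) are assumed typical values, not figures taken from this paper.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)


def wavelength(carrier_hz: float) -> float:
    """Free-space wavelength: lambda = c / f."""
    return C / carrier_hz


def range_resolution(bandwidth_hz: float) -> float:
    """Textbook FMCW range resolution: delta_r = c / (2B)."""
    return C / (2.0 * bandwidth_hz)


# Assumed, illustrative sweep bandwidths per band: (carrier, bandwidth) in Hz.
bands = {"24 GHz": (24e9, 250e6), "77 GHz": (77e9, 4e9)}

for name, (fc, bw) in bands.items():
    print(f"{name}: wavelength = {wavelength(fc) * 1e3:.2f} mm, "
          f"range resolution = {range_resolution(bw) * 100:.2f} cm")
```

Under these assumptions the wavelength shrinks from about 12.5 mm to about 3.9 mm, and the range resolution improves from about 60 cm to under 4 cm; the resolution actually achieved depends on the chirp configuration of a given radar.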

The waveform modulation mode plays an important role in radar systems. Among all modulation modes, the frequency modulated continuous waveform (FMCW) is the most commonly used. The transmitted and received FMCW signals, which are also called chirps, can be formulated as (1) and (2) in terms of the frequency f and the phase of the signal.

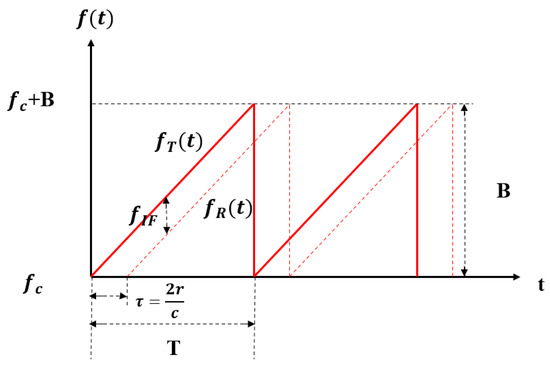

As shown in Figure 1, the frequency of an FMCW signal varies linearly within each period; its important parameters include the carrier frequency, the bandwidth B, and the signal cycle T. The frequency mixer produces an intermediate frequency (IF) signal, given in (3), and the beat frequency is calculated from the frequencies of the transmitted and received signals, as given in (4). From Figure 1, the relationship between the beat frequency and the distance r follows, as shown in (5).

Figure 1.

The chirps of FMCW mm-wave radars.
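The range relation can be illustrated numerically. The sketch below assumes the common textbook model in which the chirp slope is S = B/T (taking the signal cycle T as the ramp duration), so a target at range r produces a beat frequency f_b = 2rS/c and, inversely, r = c·f_b·T/(2B); the notation may differ from the paper's Equations (3)–(5). It simulates an ideal, noiseless complex IF tone and recovers the range from the FFT peak. The bandwidth, chirp duration, and sampling rate are illustrative assumptions.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)


def beat_frequency(r: float, bandwidth: float, chirp_time: float) -> float:
    """Beat frequency f_b = S * tau, with slope S = B / T and delay tau = 2r / c."""
    slope = bandwidth / chirp_time
    return slope * 2.0 * r / C


def range_from_beat(f_b: float, bandwidth: float, chirp_time: float) -> float:
    """Invert the relation above: r = c * f_b * T / (2B)."""
    return C * f_b * chirp_time / (2.0 * bandwidth)


def estimate_range_fft(r_true: float, bandwidth: float = 1e9,
                       chirp_time: float = 50e-6, fs: float = 10e6) -> float:
    """Simulate one noiseless complex IF chirp for a single target and
    estimate its range from the strongest FFT bin."""
    n = int(round(fs * chirp_time))           # samples per chirp
    t = np.arange(n) / fs
    f_b = beat_frequency(r_true, bandwidth, chirp_time)
    if_signal = np.exp(2j * np.pi * f_b * t)  # ideal mixer output
    spectrum = np.abs(np.fft.fft(if_signal))
    freqs = np.fft.fftfreq(n, d=1.0 / fs)
    f_peak = abs(freqs[np.argmax(spectrum)])
    return range_from_beat(f_peak, bandwidth, chirp_time)


print(f"estimated range: {estimate_range_fft(30.0):.2f} m")
```

Because the FFT quantizes frequency into bins of width fs/n = 1/T, this estimate is accurate only to about one range bin, c/(2B), which is 15 cm for the assumed 1 GHz sweep. With the assumed 10 MHz sampling rate, this simple estimator also handles only beat frequencies below 5 MHz (ranges up to 37.5 m here).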

Measuring Doppler velocity requires multiple adjacent IF signals and relies on their phase information. Given the phase shift between adjacent IF signals, the wavelength of the chirps, and the speed of light c, the Doppler velocity of a specific target can be calculated using (6) and (7).
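A common textbook form of this relation is Δφ = 4πvT_c/λ, where Δφ is the phase shift of the target's IF signal between consecutive chirps and T_c is the chirp repetition interval, so v = λΔφ/(4πT_c); velocities are unambiguous only while |Δφ| < π, that is, |v| < λ/(4T_c). The sketch below assumes this form (the paper's Equations (6) and (7) may be written differently, and the sign convention is also an assumption) and estimates Δφ from complex samples taken at the target's range bin, one per chirp, using the phase of the summed products of consecutive samples. The 77 GHz carrier and 50 µs interval are illustrative values.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)


def doppler_velocity(delta_phi: float, carrier_hz: float, chirp_interval: float) -> float:
    """v = lambda * delta_phi / (4 * pi * T_c)."""
    lam = C / carrier_hz
    return lam * delta_phi / (4.0 * np.pi * chirp_interval)


def max_unambiguous_velocity(carrier_hz: float, chirp_interval: float) -> float:
    """|v| must stay below lambda / (4 * T_c) so that |delta_phi| < pi."""
    return (C / carrier_hz) / (4.0 * chirp_interval)


def estimate_velocity(range_bin_samples, carrier_hz: float, chirp_interval: float) -> float:
    """Estimate the mean chirp-to-chirp phase shift from complex samples of one
    range bin (one sample per chirp) and convert it to radial velocity."""
    z = np.asarray(range_bin_samples, dtype=complex)
    delta_phi = np.angle(np.sum(z[1:] * np.conj(z[:-1])))
    return doppler_velocity(delta_phi, carrier_hz, chirp_interval)


# Synthetic check: a target at 5 m/s observed over 64 chirps.
fc, tc, v_true = 77e9, 50e-6, 5.0
phase_step = 4.0 * np.pi * v_true * tc / (C / fc)
samples = np.exp(1j * (0.3 + phase_step * np.arange(64)))
print(f"estimated v = {estimate_velocity(samples, fc, tc):.3f} m/s, "
      f"v_max = {max_unambiguous_velocity(fc, tc):.2f} m/s")
```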

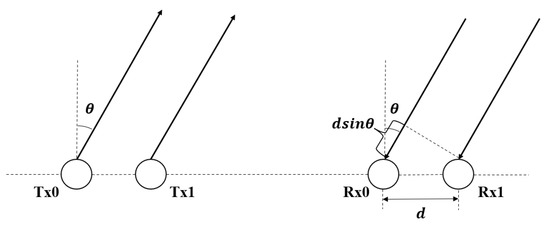

In addition, the azimuth angle of the target is calculated based on Multiple Input Multiple Output (MIMO) principles [51], as illustrated in Figure 2. Adjacent receivers are separated by a distance d, and their IF signals exhibit a phase shift relative to one another. From this information, the azimuth angle of the target can be obtained by Equations (8)–(10).

Figure 2.

Linear distributed transmitters and receivers in MIMO radars.
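For a uniform linear array with element spacing d, a far-field target at azimuth θ produces a phase shift Δφ = 2πd·sinθ/λ between adjacent receivers, so θ = arcsin(λΔφ/(2πd)). The sketch below assumes this standard relation (which may differ in notation from Equations (8)–(10)) and the usual half-wavelength spacing d = λ/2, for which the unambiguous field of view spans ±90°. It averages the phase step across one snapshot of complex samples from an assumed array of 8 virtual receivers.

```python
import numpy as np


def angle_from_phase(delta_phi: float, spacing: float, wavelength: float) -> float:
    """theta = arcsin(lambda * delta_phi / (2 * pi * d)), returned in degrees."""
    s = wavelength * delta_phi / (2.0 * np.pi * spacing)
    if abs(s) > 1.0:
        raise ValueError("phase shift is inconsistent with the array spacing")
    return float(np.degrees(np.arcsin(s)))


def estimate_azimuth(rx_samples, spacing: float, wavelength: float) -> float:
    """Average the phase step across adjacent receivers of one snapshot."""
    z = np.asarray(rx_samples, dtype=complex)
    delta_phi = np.angle(np.sum(z[1:] * np.conj(z[:-1])))
    return angle_from_phase(delta_phi, spacing, wavelength)


# Synthetic check: 8 virtual receivers, half-wavelength spacing, target at 20 degrees.
lam = 299_792_458.0 / 77e9
d = lam / 2.0
theta_true = np.radians(20.0)
step = 2.0 * np.pi * d * np.sin(theta_true) / lam
snapshot = np.exp(1j * step * np.arange(8))
print(f"estimated azimuth = {estimate_azimuth(snapshot, d, lam):.2f} deg")
```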

2.1.2. LiDARs

LiDARs, which are also called laser radars, play an important role in autonomous driving. Instead of emitting millimeter waves as mm-wave radars do, LiDARs use laser beams for detection and ranging. The most frequently used wavelengths in automotive LiDARs are 905 nm and 1550 nm. Owing to the high collimation of laser beams, LiDARs offer high angular and distance resolution, as well as strong anti-interference ability compared with traditional radars. However, LiDAR performance degrades severely in adverse weather [52,53].

According to the waveform modulation mode, LiDARs can be divided into two categories: FMCW and time of flight (ToF). FMCW LiDARs use frequency modulated continuous waves to measure distance and velocity, as FMCW mm-wave radars do, while ToF LiDARs, which dominate autonomous driving, calculate the range d directly from the flight time of pulsed lasers, as in (11).
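The ToF relation can be sketched in a few lines, assuming (11) takes the standard round-trip form d = c·t/2, where the factor 1/2 accounts for the pulse travelling to the target and back. The helper that converts a desired range resolution into the required timing precision is an illustrative addition, not part of the paper.

```python
C = 299_792_458.0  # speed of light (m/s)


def tof_range(round_trip_time_s: float) -> float:
    """Range from the round-trip flight time of a laser pulse: d = c * t / 2."""
    if round_trip_time_s < 0:
        raise ValueError("flight time must be non-negative")
    return C * round_trip_time_s / 2.0


def timing_resolution_for(range_resolution_m: float) -> float:
    """Timing precision needed for a given range resolution: dt = 2 * dr / c."""
    return 2.0 * range_resolution_m / C


print(f"1 us round trip -> {tof_range(1e-6):.2f} m")
print(f"2 cm resolution needs ~{timing_resolution_for(0.02) * 1e12:.0f} ps timing")
```

The second line shows why ToF receivers need picosecond-scale timing: resolving 2 cm in range corresponds to roughly 133 ps of round-trip time.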

2.2. Applications

At present, radars are widely applied in various scenarios, especially autonomous driving. This subsection introduces the applications of mm-wave radars and LiDARs in detail.

2.2.1. Mm-Wave Radars

Mm-wave radars are used to detect a variety of targets, including objects in industry and human beings in biomedical applications [54]. Mm-wave radars can not only monitor and measure products in traditional industries but also perceive obstacles in autonomous driving. In biomedical applications, MIMO mm-wave radars enable real-time monitoring of vital signs and human activity, including fall detection [55], sleep monitoring [56], and hand gesture recognition [57]. Beyond such monitoring, mm-wave radars can also serve as an aid for special populations. For example, Refs. [58,59] introduce a cane equipped with mm-wave radars that helps blind people perceive obstacles, which can effectively make their daily lives easier.

Because mm-wave signals penetrate rain and snow well, automotive mm-wave radars are able to work in all weather conditions. According to their detection ranges, current automotive mm-wave radars can be divided into three categories, long-range radars (LRR), middle-range radars (MRR), and short-range radars (SRR), as shown in Table 1. In real applications, LRR are mainly applied to adaptive cruise control and forward collision warning, MRR to blind spot detection and lane change assistance, and SRR to parking assistance [50]. With the rapid development of radar technologies, emerging 4D mm-wave radars may potentially replace automotive LiDARs in the future.

Table 1.

Comparison of different automotive mm-wave radars [50].

2.2.2. LiDARs

LiDARs can provide dense point clouds of their surroundings. Owing to their high accuracy, LiDARs are suitable for various high-precision tasks, and they can be classified into three categories according to their platforms, as shown in Table 2. Spaceborne LiDARs are mainly used for space rendezvous, docking, and aircraft navigation [60]. Airborne LiDARs are designed for tasks such as terrain mapping and underwater detection [61], while vehicle-borne LiDARs play an important role in autonomous driving.

Table 2.

Comparison of LiDARs on different platforms.

2.3. Future of the Radar Industry: From CPS to CPSS

With the development of computer science, digital-twin radar systems in CPS have received extensive attention and have shown great cost advantages. However, they not only ignore the interaction between the physical and virtual worlds but also oversimplify various important factors in physical scenarios. Additionally, current digital-twin radar systems neglect human factors in social space, which are closely related to every part of a radar system. Human dynamics introduce predictive uncertainty into radar systems, which poses a great challenge to the digital-twin radar framework. To solve these problems and achieve digital intelligence, Parallel Radars in CPSS, which are tightly coupled with physical space, cyberspace, and social space, are proposed as a novel methodological framework. A comparison of digital-twin radars and Parallel Radars is shown in Table 3. Parallel Radars construct complete artificial systems in cyberspace, provide a mechanism to realize knowledge automation through computational experiments, and conduct intelligent interactions between physical and digital radars.

Table 3.

Comparison between digital twins’ radars and Parallel Radars.

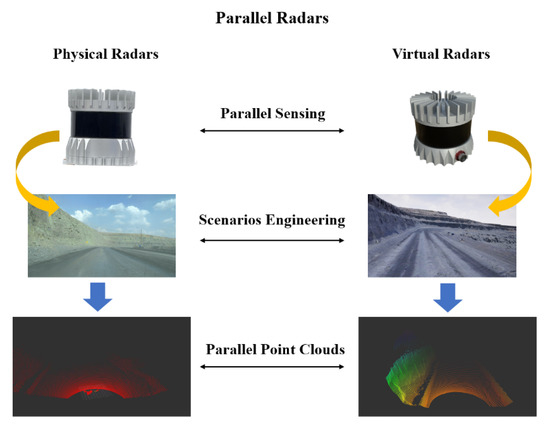

3. Parallel Radars

On the basis of parallel intelligence and the ACP method [36], we propose Parallel Radars, a new technical framework for the future development of radar systems, as shown in Figure 3. Parallel Radars integrate traditional radar knowledge with artificial intelligence, advanced cloud computing, and 5G communication technologies. They provide efficient, convergent solutions for achieving smart radar systems in CPSS through data-driven Descriptive Radars, experiment-driven Predictive Radars, and Prescriptive Radars that handle the interaction between physical and virtual radars.

Figure 3.

The framework of Parallel Radars.

Descriptive Radars construct artificial systems that describe radars in cyberspace and can be used to collect large amounts of virtual data. Predictive Radars conduct various computational experiments with the artificial systems and generate deep knowledge, while Prescriptive Radars exert feedback control over both physical and artificial systems and complete parallel execution. In the following subsections, we take autonomous driving as an example to introduce the framework of Parallel Radars in detail.

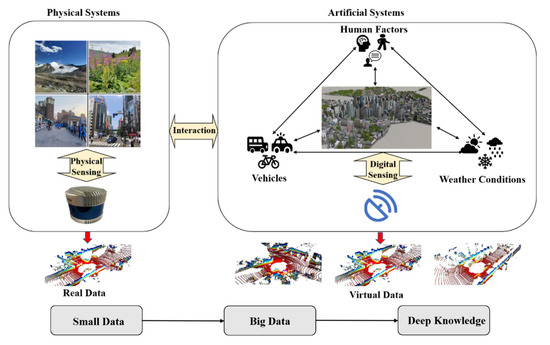

3.1. Descriptive Radars

Traditional radar sensors only collect data and cannot adjust their operating modes in real time. As a result, useless redundant data are collected and can only be processed by local computing devices, which seriously degrades radar performance. Descriptive Radars, which correspond to the artificial radar systems of the ACP method, are proposed for better operation and management of radar systems, as shown in Figure 4. Each Descriptive Radar can be viewed as the mirror image of a physical radar in the artificial systems. Apart from high-fidelity radar models, Descriptive Radars are closely related to scenario engineering, and they pioneer taking the social environment into consideration. The physical environment in artificial scenarios includes common buildings, moving cars, and different weather conditions, while the social environment focuses on human behaviors and knowledge. Owing to the involvement of social space, Descriptive Radars can generate massive amounts of synthetic data that are more realistic than those of current digital-twin radars in CPS [16,17,18,19,20,21,22,23,24,25].

Figure 4.

Descriptive Radars: the framework and process.

Descriptive Radars should first build complete artificial systems in the cloud. For sensor modeling, advanced ray tracing technology [62,63] can imitate the process of data collection, and AutoCAD [64] can precisely model the radars' physical structures. The working conditions of Descriptive Radars should be consistent with those of physical radars to ensure the fidelity of virtual data. Mature game engines such as Unreal Engine [65] and the industrial platform Omniverse [66] can be applied to build artificial scenarios, while the novel 3D reconstruction technique NeRF [67] is also a suitable method for environment modeling. Synthetic data can be collected by moving the sensor models through artificial traffic scenes. Compared with end-to-end generative models, such as GANs [68] and diffusion models [69], Descriptive Radars offer better interpretability and obtain labels directly. In real applications, Descriptive Radars are responsible for updating incremental information, which reduces redundant data processing, and they break the physical limits of hardware through cloud computing. They can also generate big virtual data at low cost and keep radar systems safe when physical radars fail. Specifically, virtual data are mainly used to augment real training sets and enhance model performance: we pre-train models on a large amount of virtual data and fine-tune them on a small amount of real data. Generated virtual data have already proven effective for object detection [26,27], segmentation [28,29,30,31], and mapping [32,33,34]. Additionally, Descriptive Radars can be used to extract the hidden features of different traffic scenes so that models generalize better.

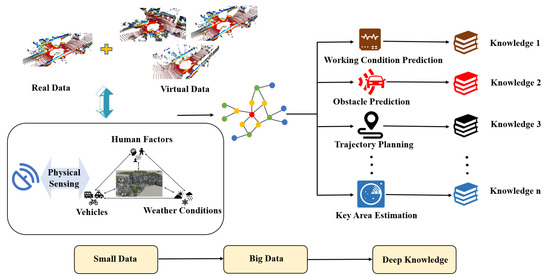

3.2. Predictive Radars

Although Descriptive Radars construct artificial systems in cyberspace, automotive radars face an obvious problem in practice: they operate in dynamic environments. Because of human involvement, the static scenes established by Descriptive Radars cannot cover all possible scenes of the physical world, which makes it challenging to find the optimal scheme and assist physical radars. Predictive Radars, shown in Figure 5, are proposed to solve this problem through experiments and evaluation with the artificial systems. They resemble imagining the consequences of a decision before making it. Predictive Radars conduct various computational experiments with the artificial systems, which is also the transition from small data to big data to deep knowledge. Data, including real and virtual data, are used to derive deep knowledge that humans can clearly understand.

Figure 5.

Predictive Radars: the framework and process.

Predictive Radars first take small data of the current scene as input and perform general perception tasks, using, for example, PointPillars [70] for object detection and PointNet++ [71] for segmentation. Emerging cooperative perception [72,73,74,75] can greatly increase each vehicle's perception range and deserves more attention in the future. Based on the perception results, Predictive Radars predict different future scenes, which is the transition from small data to big data. Trajectory planning technology [76,77,78,79,80] is essential for these predictions, and network structures such as Transformer [81,82] and N-Bests [83] have shown great advantages and promise wide application. After acquiring data for future scenarios, we should aggregate features along the time axis, evaluate different situations, and identify the optimal strategy, thereby developing deep knowledge from big data. Predictive Radars can be applied to many specific problems, including working condition prediction, obstacle prediction, and key area estimation. This increases the utilization efficiency of hardware resources and avoids redundant data processing.
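The step of aggregating predicted scenes along the time axis to estimate key areas can be sketched as follows. This is a hypothetical illustration, not the paper's method: it assumes each predicted scenario is a list of time steps, each holding the predicted (x, y) positions of objects in the ego frame; it splits an assumed 120° field of view into 12 azimuth sectors and scores each sector by the time- and scenario-averaged inverse distance of the objects it contains, so that frequent, nearby objects make a sector more critical. The function names, sector layout, and scoring rule are all assumptions.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x forward, y left) in metres, ego frame


def sector_of(x: float, y: float, n_sectors: int = 12, fov_deg: float = 120.0) -> Optional[int]:
    """Map a point to an azimuth sector index, or None if it lies outside the FOV."""
    az = math.degrees(math.atan2(y, x))
    half = fov_deg / 2.0
    if not -half <= az < half:
        return None
    return int((az + half) // (fov_deg / n_sectors))


def key_area_scores(scenarios: Sequence[Sequence[Sequence[Point]]],
                    n_sectors: int = 12, fov_deg: float = 120.0) -> List[float]:
    """Average per-sector criticality over all predicted scenarios and time steps.
    Each object adds 1 / max(distance, 1 m) to the sector it falls in."""
    scores = [0.0] * n_sectors
    steps = 0
    for scenario in scenarios:
        for frame in scenario:
            steps += 1
            for x, y in frame:
                s = sector_of(x, y, n_sectors, fov_deg)
                if s is not None:
                    scores[s] += 1.0 / max(math.hypot(x, y), 1.0)
    return [v / steps for v in scores] if steps else scores


# Two hypothetical predicted futures, three time steps each.
futures = [
    [[(50.0, 0.0), (20.0, 20.0)], [(48.0, 0.0), (18.0, 19.0)], [(46.0, 0.0), (16.0, 18.0)]],
    [[(50.0, 0.0)], [(49.0, 1.0)], [(48.0, 2.0)]],
]
scores = key_area_scores(futures)
key_sector = max(range(len(scores)), key=scores.__getitem__)
print(f"most critical sector: {key_sector}")
```

Here the sector straight ahead wins because an object appears in it in every predicted future; a real system would likely also weigh object class, predicted motion, and uncertainty.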

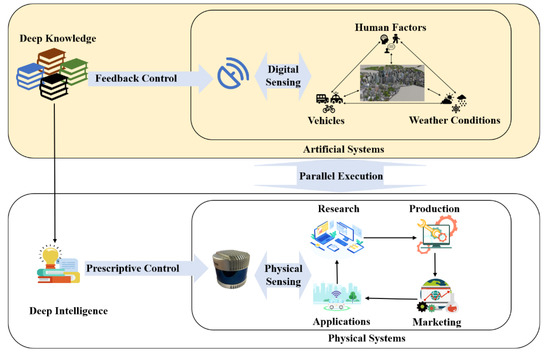

3.3. Prescriptive Radars

At present, radars collect data only in a fixed operating mode, and there is no interaction between physical radar hardware and the deep knowledge generated through computational experiments. However, automotive radars face a dynamic and complex environment in CPSS with human involvement. Smart radar systems are essential for meeting new requirements for real-time intelligent information processing. With the assistance of Descriptive and Predictive Radars, deep knowledge is obtained through various computational experiments, and Prescriptive Radars, shown in Figure 6, are proposed to exert prescriptive control over physical radars and complete parallel execution. Prescriptive Radars use software to redefine radar systems as interactive systems in which physical and virtual radars are tightly coupled, instead of treating them as two isolated systems.

Figure 6.

Prescriptive Radars: the framework and process.

Based on the deep knowledge obtained in cyberspace, Prescriptive Radars provide feedback to physical radars and adjust their operating modes in real time with the assistance of digital technologies [84]. Novel modulation methods such as OFDM [85] and PMCW [86] generate waveforms digitally and allow prompt adjustment; they have shown great flexibility and intelligence in radar systems. Prescriptive Radars can change the waveform type and the sparsity of beams to focus on key areas, which helps radar systems adapt to different weather conditions and emergencies. In real applications, Prescriptive Radars keep virtual radars consistent with physical radars at all times to conduct parallel execution, forming a complete closed loop.
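Changing beam sparsity to focus on key areas can be illustrated with a simple budget-allocation rule. In this hypothetical sketch (the function name and rule are assumptions, not the paper's implementation), a radar has a fixed number of beams or scan lines per frame; every azimuth sector keeps a minimum density so that no area goes unobserved, and the remaining budget is split in proportion to per-sector criticality scores, for instance scores estimated from predicted scenes, using largest-remainder rounding so that the total matches the budget exactly.

```python
from typing import List, Sequence


def allocate_beams(scores: Sequence[float], total_beams: int, min_per_sector: int = 1) -> List[int]:
    """Split a fixed beam budget across sectors: a uniform floor plus a share of
    the remainder proportional to each sector's criticality score."""
    n = len(scores)
    remaining = total_beams - min_per_sector * n
    if remaining < 0:
        raise ValueError("budget is too small for the per-sector minimum")
    if any(s < 0 for s in scores):
        raise ValueError("scores must be non-negative")
    total = float(sum(scores))
    weights = [s / total for s in scores] if total > 0 else [1.0 / n] * n
    raw = [w * remaining for w in weights]
    extra = [int(r) for r in raw]  # floor, since r >= 0
    # Hand out the beams lost to flooring, largest fractional part first.
    leftover = remaining - sum(extra)
    by_fraction = sorted(range(n), key=lambda i: raw[i] - extra[i], reverse=True)
    for i in by_fraction[:leftover]:
        extra[i] += 1
    return [min_per_sector + e for e in extra]


# Normal mode: no critical area, so the scan stays uniform.
print(allocate_beams([0.0, 0.0, 0.0, 0.0], 16))
# A hypothetical critical area raises the score of sector 2.
print(allocate_beams([0.0, 0.0, 3.0, 1.0], 16))
```

The per-sector floor is a design choice: it trades some density in the key area for continued coverage elsewhere, which matters because the criticality scores are themselves predictions.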

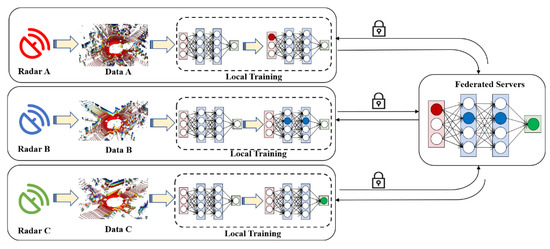

4. Federated Radars

Parallel Radars can realize data fusion through a network of multiple radars, which not only benefits perception tasks but also lowers communication bandwidth requirements and saves cost.

The improvement of algorithms has accelerated the development of big data and hardware computing power. However, the existing centralized training mode makes deploying Parallel Radars difficult, mainly because the data collected by each radar system are highly private. These sensitive data must not be uploaded without authorization, so the data of different clients cannot be shared. Yet the effectiveness of deep learning models depends on the quantity and quality of data. Limited by data fragmentation, models are fed only local data for single-point modeling, which seriously degrades model performance. In addition, because device performance differs, data synchronization is inconsistent across data sources, and the current scene cannot be responded to in real time. Non-uniform data distribution, quality, and size also seriously degrade model performance.

Data privacy threats, including data theft and data leakage after data leave the local area, need to be solved urgently [87], especially for military use. As a method to connect data silos, federated learning [88] enables participants to build models without sharing data, achieving swarm intelligence [89]. Under this framework, federated radars (Figure 7) use common data to train a model for multiple radars [90], while a federation of radars enables interactive and cooperative model learning between different radars [91]. In a federation of radars, data are aggregated at each client for local training. After local training, the models are deployed locally and uploaded to cloud servers. When the global model in the cloud outperforms the original local models, it can be deployed to other clients with permission. Federated learning can work across different data structures and institutions, and it offers lossless model quality and data security without restricting the choice of algorithm [92]. Each radar system based on federated learning can collect and process data independently and is entitled to initiate federated learning, which speeds up the deployment of new models for radars. Because the volume of model parameters transmitted during uploading is much smaller than that of the raw data, network bandwidth is saved effectively [93].

Figure 7.

Federated Radars: The Framework and Process.
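The federated workflow described above (local training, uploading models rather than data, and aggregation in the cloud) can be sketched with federated averaging (FedAvg), one widely used aggregation rule; the paper does not state which rule federated radars use, so FedAvg is an assumption here. In this toy NumPy example, three hypothetical radar clients hold private linear-regression data of different sizes; in each round, clients train locally starting from the current global model, and the server averages their parameters weighted by local sample counts. Only parameter vectors leave the clients, which also illustrates the bandwidth argument: the uploaded parameters are far smaller than the raw data.

```python
import numpy as np


def local_train(w, X, y, lr=0.1, epochs=20):
    """Full-batch gradient descent on mean squared error, using only this client's data."""
    w = w.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w


def fed_avg(client_models, client_sizes):
    """FedAvg aggregation: average parameters weighted by local sample counts."""
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack(client_models)  # shape (n_clients, n_params)
    return (sizes[:, None] * stacked).sum(axis=0) / sizes.sum()


# Hypothetical private datasets held by three radar clients (never uploaded).
rng = np.random.default_rng(0)
w_true = np.array([2.0, -1.0, 0.5])
clients = []
for n in (200, 50, 120):
    X = rng.normal(size=(n, 3))
    y = X @ w_true + 0.01 * rng.normal(size=n)
    clients.append((X, y))

w_global = np.zeros(3)
for _ in range(10):  # communication rounds
    local_models = [local_train(w_global, X, y) for X, y in clients]
    w_global = fed_avg(local_models, [len(y) for _, y in clients])

param_bytes = w_global.nbytes * len(clients)  # one upload per client per round
data_bytes = sum(X.nbytes + y.nbytes for X, y in clients)
print("global model:", np.round(w_global, 3))
print(f"per-round upload: {param_bytes} bytes vs raw data: {data_bytes} bytes")
```

Real radar perception models have millions of parameters rather than three, but so do the point-cloud datasets they are trained on grow much larger, so the ratio between uploaded parameters and raw data typically remains favorable.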

5. Applications of Parallel Radars

Parallel Radars in CPSS realize digital intelligence with virtual–real interactions and can be widely applied in many fields. In this section, we focus on three main application cases of Parallel Radars, including burgeoning autonomous driving, traditional industry, and military use. In autonomous driving, we take mines as an example to introduce the technical implementation of Parallel Radars and conduct various experiments.

5.1. Autonomous Driving

Parallel Radars play an important role in autonomous driving, where the high cost of data collection and the long-tail problem are serious issues. With complete artificial systems in cyberspace, Parallel Radars can generate sufficient virtual data to train new models for different downstream tasks, such as object detection [26,27], segmentation [28,29,30,31], and cooperative perception [72,73,74,75], which effectively addresses these problems. Specific tasks such as the validation of new radars [94], super-resolution [95,96,97], and the analysis of radar placement [98,99] can also be addressed. Additionally, because local computing resources are limited, many predictive experiments cannot be conducted locally. Through computational experiments in the cloud, Parallel Radars can effectively solve prediction problems in the time domain, such as trajectory planning, working condition prediction, and critical area estimation. They can provide instructions for the radars' next movements and avoid redundant data processing. Parallel Radars use software to redefine radar systems and can exert indicative control over radar hardware in real time based on the deep knowledge obtained. Parallel execution can be conducted with growing digital technologies such as digital beamforming and digital waveform generation [85,86]. Beyond operation, Parallel Radars can also exert prescriptive control over each step of the radar industry, including research, manufacturing, and marketing.

Because of the complex environment, autonomous driving in urban areas is difficult to realize in the short term, whereas specific scenes such as mines and ports are promising places to deploy autonomous driving first. On the basis of parallel intelligence, we apply Parallel Radars to mines and carry out dozens of experiments.

First, as shown in Figure 8, we build artificial radar systems, including sensor models and mining-area scenarios, based on Unreal Engine 4 to generate virtual data in cyberspace [100]. In our artificial systems, virtual radars are consistent with real radars in appearance and internal physical parameters. The artificial systems can not only collect large amounts of data in artificial mines, which would be costly to collect in physical space, but also maintain safe operation when physical radars fail.

Figure 8.

Application of Parallel Radars in mines.

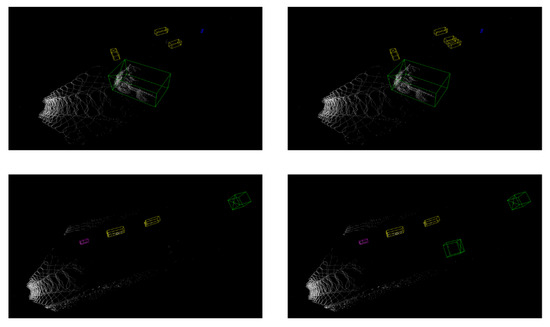

Second, we conduct computational experiments on object detection to verify the effectiveness of the collected virtual data. We train a PointPillars model only on real mining data as the baseline, and we train a new model on mixed data that include both real and synthetic data. Both models are tested on the real test set for qualitative analysis. As shown in Figure 9, the additional virtual training data effectively improve model performance: distant vehicles with only a few points are missed by the baseline model but are successfully identified and localized by the new model.

Figure 9.

Comparative experiments between the baseline model and new model in object detection. The left column is the baseline model trained only on real data and the right column is the new model trained on both real data and virtual data.

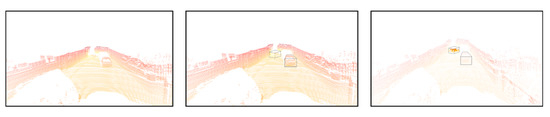

Finally, we conduct experiments to realize the prescriptive control of Parallel Radars. The left picture in Figure 10 is a frame of point cloud data collected in the normal operating mode, and the middle one highlights the detected bounding boxes. A distant vehicle with only a few points is changing lanes; it is important for subsequent decisions and requires more attention. Based on the perception results, we allocate more hardware resources to the estimated critical areas to obtain locally dense point clouds, as shown in the right picture of Figure 10. In this way, Parallel Radars obtain more detailed information about key areas. This realizes feedback from the deep knowledge obtained through computational experiments in cyberspace to the physical radars and builds a complete closed loop.

Figure 10.

Critical area prediction and scene rescan based on Parallel Radars.

Taking mines as an example, we have introduced the working process of Parallel Radars in autonomous driving and provided concrete methods at the technical level. In the future, we will apply Parallel Radars to predict landslides, a common but dangerous phenomenon in mines.

5.2. Traditional Industry

Parallel Radars can also be widely applied in traditional industries, such as architectural design [3], observation [4,5,6,7], industrial robots [8], and heating, ventilation, and air conditioning (HVAC) control [9,10]. In architectural design, Parallel Radars can assist the 3D modeling and analysis of buildings while considering human factors, and they can realistically predict, at short notice, how a building evolves after its construction. For observation tasks, Parallel Radars can be employed to observe the burden surface inside blast furnaces [4], the concentration of air pollutants [5], and the structural failure of wind turbines [6,7]. They can predict potential problems in advance and direct physical radars to focus on critical areas, ensuring equipment safety while using resources efficiently. Parallel Radars also play an important role in industrial robotics, enabling precise, low-cost positioning of industrial robots. Additionally, Parallel Radars can realize intelligent control in HVAC systems, effectively reducing energy consumption. With full consideration of human factors, Parallel Radars allow accurate monitoring and prediction of human behaviors.

5.3. Military Use

Parallel Radars also play an important role in military use, which is the original application scenario of radars [84,101]. Several radar systems are used in the military, such as early warning radars for sensing distant targets and tracking radars for target tracking. Parallel Radars can provide predictive information through computational experiments with artificial systems. This not only helps radar systems discover potential targets earlier but also supports robustness testing of tracking algorithms. Additionally, because they must survey large regions, military radar systems consume a great deal of energy. Parallel Radars can intelligently adjust operating modes according to the environment to reduce energy consumption.

6. Conclusions

Traditional radars must evolve into smart radar systems to adapt to dynamic and complex environments. Because they neglect human involvement and virtual–real interactions, digital-twin radars in CPS are insufficient to achieve true intelligence. In contrast, parallel intelligence in CPSS can be applied to construct a novel methodological framework for radars. Based on parallel theory and the ACP method, we propose Parallel Radars, a new paradigm of future radars for digital intelligence. Parallel Radars follow the principle of “from small data to big data to deep intelligence” and achieve knowledge automation. Taking autonomous driving in mines as a case, we clarify the application process of Parallel Radars and conduct various experiments for validation. The experiments show that Parallel Radars effectively improve the performance of physical radars and have great potential for solving more problems. To connect data silos and protect data privacy, the concepts of federated radars and the federation of radars are discussed. The proposed Parallel Radars offer an effective solution for building smart radar systems, and we are convinced that they will be widely applied in the future.

Author Contributions

Conceptualization, F.-Y.W.; methodology, F.-Y.W. and Y.L.; software, not applicable; validation, Y.L., Y.S., L.F. and Y.T.; formal analysis, Y.L., Y.S., L.F. and Y.T.; investigation, Y.L., Y.S. and L.F.; resources, F.-Y.W.; data curation, not applicable; writing—original draft preparation, Y.L., Y.S. and L.F.; writing—review and editing, Y.L., Y.S., L.F., Y.T., Y.A., B.T., Z.L. and F.-Y.W.; visualization, Y.L., L.F. and Y.T.; supervision, F.-Y.W.; project administration, F.-Y.W.; funding acquisition, not applicable. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data not available due to commercial restrictions.

Acknowledgments

The authors wish to express their gratitude to every reviewer of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| CPSS | Cyber-Physical-Social Systems |
| CPS | Cyber-Physical Systems |
| mm-wave radar | millimeter-wave radar |
| LiDAR | Light Detection and Ranging |
| SAR | Synthetic Aperture Radars |
| FMCW | Frequency-Modulated Continuous Wave |
| IF | Intermediate Frequency |
| MIMO | Multiple Input Multiple Output |
| ToF | Time of Flight |
| LRR | Long-Range Radars |
| MRR | Middle-Range Radars |
| SRR | Short-Range Radars |
| HVAC | Heating, Ventilation, and Air Conditioning |

References

1. Skolnik, M. Role of radar in microwaves. IEEE Trans. Microw. Theory Tech. 2002, 50, 625–632.

2. Wellig, P.; Speirs, P.; Schuepbach, C.; Oechslin, R.; Renker, M.; Boeniger, U.; Pratisto, H. Radar systems and challenges for C-UAV. In Proceedings of the International Radar Symposium, Bonn, Germany, 20–22 June 2018; pp. 1–8.

3. Noichl, F.; Braun, A.; Borrmann, A. “BIM-to-Scan” for Scan-to-BIM: Generating Realistic Synthetic Ground Truth Point Clouds based on Industrial 3D Models. In Proceedings of the European Conference on Computing in Construction, Online, 26–28 July 2021.

4. Chen, X.; Liu, F.; Hou, Q.; Lu, Y. Industrial high-temperature radar and imaging technology in blast furnace burden distribution monitoring process. In Proceedings of the International Conference on Electronic Measurement and Instruments, Beijing, China, 16–19 August 2009; pp. 1-599–1-603.

5. Tong, Y.; Liu, W.; Zhang, T.; Dong, Y.; Zhao, X. The method of monitoring for particle transport flux from industrial source by lidar. Opt. Tech. 2010, 36, 29–32.

6. Muñoz-Ferreras, J.; Peng, Z.; Tang, Y.; Gómez-García, R.; Liang, D.; Li, C. Short-range Doppler-radar signatures from industrial wind turbines: Theory, simulations, and measurements. IEEE Trans. Instrum. Meas. 2016, 65, 2108–2119.

7. Zhao, H.; Chen, G.; Hong, H.; Zhu, X. Remote structural health monitoring for industrial wind turbines using short-range Doppler radar. IEEE Trans. Instrum. Meas. 2021, 70, 1–9.

8. van Delden, M.; Guzy, C.; Musch, T. Investigation on a System for Positioning of Industrial Robots Based on Ultra-Broadband Millimeter Wave FMCW Radar. In Proceedings of the IEEE Asia-Pacific Microwave Conference, Singapore, 10–13 December 2019; pp. 744–746.

9. Cardillo, E.; Li, C.; Caddemi, A. Heating, Ventilation, and Air Conditioning Control by Range-Doppler and Micro-Doppler Radar Sensor. In Proceedings of the European Radar Conference, London, UK, 5–7 April 2021; pp. 21–24.

10. Santra, A.; Ulaganathan, R.V.; Finke, T. Short-Range Millimetric-Wave Radar System for Occupancy Sensing Application. IEEE Sens. Lett. 2018, 2, 1–4.

11. Hakobyan, G.; Yang, B. High-performance automotive radar: A review of signal processing algorithms and modulation schemes. IEEE Signal Process. Mag. 2019, 36, 32–44.

12. Roriz, R.; Cabral, J.; Gomes, T. Automotive LiDAR technology: A survey. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6282–6297.

13. Lee, E.A. CPS foundations. In Proceedings of the Design Automation Conference, Anaheim, CA, USA, 13–18 June 2010; pp. 737–742.

14. Lee, E.A. Cyber physical systems: Design challenges. In Proceedings of the IEEE International Symposium on Object and Component-Oriented Real-Time Distributed Computing, Orlando, FL, USA, 5–7 May 2008; pp. 363–369.

15. Tao, F.; Zhang, H.; Liu, A.; Nee, A.Y. Digital twin in industry: State-of-the-art. IEEE Trans. Ind. Inform. 2018, 15, 2405–2415.

16. Holder, M.; Rosenberger, P.; Winner, H.; D’hondt, T.; Makkapati, V.P.; Maier, M.; Schreiber, H.; Magosi, Z.; Slavik, Z.; Bringmann, O.; et al. Measurements revealing challenges in radar sensor modeling for virtual validation of autonomous driving. In Proceedings of the IEEE International Conference on Intelligent Transportation Systems, Maui, HI, USA, 4–7 November 2018; pp. 2616–2622.

17. Hanke, T.; Schaermann, A.; Geiger, M.; Weiler, K.; Hirsenkorn, N.; Rauch, A.; Schneider, S.A.; Biebl, E. Generation and validation of virtual point cloud data for automated driving systems. In Proceedings of the IEEE International Conference on Intelligent Transportation Systems, Yokohama, Japan, 16–19 October 2017; pp. 1–6.

18. Ngo, A.; Bauer, M.P.; Resch, M. A multi-layered approach for measuring the simulation-to-reality gap of radar perception for autonomous driving. In Proceedings of the IEEE International Intelligent Transportation Systems Conference, Indianapolis, IN, USA, 19–22 September 2021; pp. 4008–4014.

19. Schöffmann, C.; Ubezio, B.; Böhm, C.; Mühlbacher-Karrer, S.; Zangl, H. Virtual radar: Real-time millimeter-wave radar sensor simulation for perception-driven robotics. IEEE Robot. Autom. Lett. 2021, 6, 4704–4711.

20. Thieling, J.; Frese, S. Scalable and physical radar sensor simulation for interacting digital twins. IEEE Sens. J. 2020, 21, 3184–3192.

21. Muckenhuber, S.; Museljic, E.; Stettinger, G. Performance evaluation of a state-of-the-art automotive radar and corresponding modeling approaches based on a large labeled dataset. J. Intell. Transp. Syst. 2021, 26, 655–674.

22. Weston, R.; Jones, O.P.; Posner, I. There and back again: Learning to simulate radar data for real-world applications. In Proceedings of the IEEE International Conference on Robotics and Automation, Xi’an, China, 30 May–5 June 2021; pp. 12809–12816.

23. Weston, R.; Cen, S.; Newman, P.; Posner, I. Probably unknown: Deep inverse sensor modelling radar. In Proceedings of the IEEE International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 5446–5452.

24. Vacek, P.; Jašek, O.; Zimmermann, K.; Svoboda, T. Learning to predict lidar intensities. IEEE Trans. Intell. Transp. Syst. 2021, 23, 3556–3564.

25. Kumar, P.; Sahoo, S.; Shah, V.; Kondameedi, V.; Jain, A.; Verma, A.; Bhattacharyya, C.; Vishwanath, V. DSLR: Dynamic to Static LiDAR Scan Reconstruction Using Adversarially Trained Autoencoder. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; pp. 1836–1844.

26. Yue, X.; Wu, B.; Seshia, S.A.; Keutzer, K.; Sangiovanni-Vincentelli, A.L. A lidar point cloud generator: From a virtual world to autonomous driving. In Proceedings of the International Conference on Multimedia Retrieval, Yokohama, Japan, 11–14 June 2018; pp. 458–464.

27. Fang, J.; Zhou, D.; Yan, F.; Zhao, T.; Zhang, F.; Ma, Y.; Wang, L.; Yang, R. Augmented lidar simulator for autonomous driving. IEEE Robot. Autom. Lett. 2020, 5, 1931–1938.

28. Wu, B.; Wan, A.; Yue, X.; Keutzer, K. SqueezeSeg: Convolutional Neural Nets with Recurrent CRF for Real-Time Road-Object Segmentation from 3D LiDAR Point Cloud. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, QLD, Australia, 21–25 May 2018; pp. 1887–1893.

29. Wu, B.; Zhou, X.; Zhao, S.; Yue, X.; Keutzer, K. SqueezeSegV2: Improved model structure and unsupervised domain adaptation for road-object segmentation from a lidar point cloud. In Proceedings of the IEEE International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 4376–4382.

30. Wang, F.; Zhuang, Y.; Gu, H.; Hu, H. Automatic generation of synthetic LiDAR point clouds for 3-D data analysis. IEEE Trans. Instrum. Meas. 2019, 68, 2671–2673.

31. Li, W.; Pan, C.; Zhang, R.; Ren, J.; Ma, Y.; Fang, J.; Yan, F.; Geng, Q.; Huang, X.; Gong, H.; et al. AADS: Augmented autonomous driving simulation using data-driven algorithms. Sci. Robot. 2019, 4, eaaw0863.

32. Chen, X.; Vizzo, I.; Läbe, T.; Behley, J.; Stachniss, C. Range image-based LiDAR localization for autonomous vehicles. In Proceedings of the IEEE International Conference on Robotics and Automation, Xi’an, China, 30 May–5 June 2021; pp. 5802–5808.

33. Deschaud, J.E.; Duque, D.; Richa, J.P.; Velasco-Forero, S.; Marcotegui, B.; Goulette, F. Paris-CARLA-3D: A real and synthetic outdoor point cloud dataset for challenging tasks in 3D mapping. Remote Sens. 2021, 13, 4713.

34. Gao, P.; Zhang, S.; Wang, W.; Lu, C.X. DC-Loc: Accurate Automotive Radar Based Metric Localization with Explicit Doppler Compensation. In Proceedings of the IEEE International Conference on Robotics and Automation, Philadelphia, PA, USA, 23–27 May 2022; pp. 4128–4134.

35. Li, L.; Lin, Y.; Cao, D.; Zheng, N.; Wang, F.-Y. Parallel learning—A new framework for machine learning. Acta Autom. Sin. 2017, 43, 1–8.

36. Wang, F.-Y. Parallel system methods for management and control of complex systems. Control. Decis. 2004, 19, 485–489.

37. Wang, F.-Y. The emergence of intelligent enterprises: From CPS to CPSS. IEEE Intell. Syst. 2010, 25, 85–88.

38. Wang, F.-Y.; Wang, X.; Li, L.; Li, L. Steps toward parallel intelligence. IEEE/CAA J. Autom. Sin. 2016, 3, 345–348.

39. Wang, F.-Y. Parallel control and management for intelligent transportation systems: Concepts, architectures, and applications. IEEE Trans. Intell. Transp. Syst. 2010, 11, 630–638.

40. Wei, Q.; Li, H.; Wang, F.-Y. Parallel control for continuous-time linear systems: A case study. IEEE/CAA J. Autom. Sin. 2020, 7, 919–928.

41. Lu, J.; Wei, Q.; Wang, F.-Y. Parallel control for optimal tracking via adaptive dynamic programming. IEEE/CAA J. Autom. Sin. 2020, 7, 1662–1674.

42. Wang, F.-Y.; Zheng, N.; Cao, D.; Martinez, C.M.; Li, L.; Liu, T. Parallel driving in CPSS: A unified approach for transport automation and vehicle intelligence. IEEE/CAA J. Autom. Sin. 2017, 4, 577–587.

43. Liu, T.; Xing, Y.; Tang, X.; Wang, H.; Yu, H.; Wang, F.-Y. Cyber-Physical-Social System for Parallel Driving: From Concept to Application. IEEE Intell. Transp. Syst. Mag. 2020, 13, 59–69.

44. Tan, J.; Xu, C.; Li, L.; Wang, F.-Y.; Cao, D.; Li, L. Guidance control for parallel parking tasks. IEEE/CAA J. Autom. Sin. 2020, 7, 301–306.

45. Wang, F.-Y. The engineering of intelligence: DAO to I&I, C&C, and V&V for intelligent systems. Int. J. Intell. Control. Syst. 2021, 1, 1–5.

46. Li, X.; Ye, P.; Li, J.; Liu, Z.; Cao, L.; Wang, F.-Y. From features engineering to scenarios engineering for trustworthy AI: I&I, C&C, and V&V. IEEE Intell. Syst. 2022, 37, 21–29.

47. Wang, F.-Y.; Meng, X.; Du, S.; Geng, Z. Parallel light field: The framework and processes. Chin. J. Intell. Sci. Technol. 2021, 3, 110–122.

48. Wang, F.-Y.; Shen, Y. Parallel Light Field: A Perspective and a Framework. IEEE/CAA J. Autom. Sin. Lett. 2022, 9, 1871–1873.

49. Wang, F.-Y. Parallel light field and parallel optics, from optical computing experiments to prescriptive intelligence. 2018.

50. Patole, S.M.; Torlak, M.; Wang, D.; Ali, M. Automotive radars: A review of signal processing techniques. IEEE Signal Process. Mag. 2017, 34, 22–35.

51. Friedlander, B. Waveform design for MIMO radars. IEEE Trans. Aerosp. Electron. Syst. 2007, 43, 1227–1238.

52. Rasshofer, R.H.; Spies, M.; Spies, H. Influences of weather phenomena on automotive laser radar systems. Adv. Radio Sci. 2011, 9, 49–60.

53. Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Autonomous Driving in Adverse Weather Conditions: A Survey. arXiv 2021, arXiv:2112.08936.

54. Van Berlo, B.; Elkelany, A.; Ozcelebi, T.; Meratnia, N. Millimeter wave sensing: A review of application pipelines and building blocks. IEEE Sens. J. 2021, 21, 10332–10368.

55. Bhattacharya, A.; Vaughan, R. Deep Learning Radar Design for Breathing and Fall Detection. IEEE Sens. J. 2020, 20, 5072–5085.

56. Wang, P.; Luo, Y.; Shi, G.; Huang, S.; Miao, M.; Qi, Y.; Ma, J. Research Progress in Millimeter Wave Radar-Based non-contact Sleep Monitoring—A Review. In Proceedings of the International Symposium on Antennas, Zhuhai, China, 1–4 December 2021; pp. 1–3.

57. Hazra, S.; Santra, A. Robust gesture recognition using millimetric-wave radar system. IEEE Sens. Lett. 2018, 2, 1–4.

58. Cardillo, E.; Li, C.; Caddemi, A. Millimeter-wave radar cane: A blind people aid with moving human recognition capabilities. IEEE J. Electromagn. Microw. RF Med. Biol. 2021, 6, 204–211.

59. Cardillo, E.; Li, C.; Caddemi, A. Empowering Blind People Mobility: A Millimeter-Wave Radar Cane. In Proceedings of the IEEE International Workshop on Metrology for Industry 4.0 & IoT, Rome, Italy, 3–5 June 2020; pp. 213–217.

60. Li, R.; Wang, C.; Su, G.; Zhang, K.; Tang, L.; Li, C. Development and Applications of Spaceborne LiDAR. Sci. Technol. Rev. 2007, 25, 58–63.

61. Bo, L.; Yang, Y.; Shuo, J. Review of advances in LiDAR detection and 3D imaging. Opto-Electron. Eng. 2019, 46, 190167.

62. Liang, G.; Bertoni, H.L. A new approach to 3-D ray tracing for propagation prediction in cities. IEEE Trans. Antennas Propag. 1998, 46, 853–863.

63. Li, T.; Aittala, M.; Durand, F.; Lehtinen, J. Differentiable Monte Carlo ray tracing through edge sampling. ACM Trans. Graph. 2018, 37, 1–11.

64. Li, W.; Grossman, T.; Fitzmaurice, G. GamiCAD: A gamified tutorial system for first time AutoCAD users. In Proceedings of the 25th Annual ACM Symposium on User Interface Software and Technology, Cambridge, MA, USA, 7–10 October 2012; pp. 103–112.

65. Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An open urban driving simulator. In Proceedings of the Conference on Robot Learning, Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.

66. Nvidia Omniverse. Available online: https://docs.omniverse.nvidia.com/ (accessed on 17 October 2022).

67. Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

68. Caccia, L.; Van Hoof, H.; Courville, A.; Pineau, J. Deep generative modeling of lidar data. In Proceedings of the International Conference on Intelligent Robots and Systems, Macau, China, 4–8 November 2019; pp. 5034–5040.

69. Zyrianov, V.; Zhu, X.; Wang, S. Learning to Generate Realistic LiDAR Point Clouds. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

70. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 12697–12705.

71. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

72. Wang, T.H.; Manivasagam, S.; Liang, M.; Yang, B.; Zeng, W.; Urtasun, R. V2VNet: Vehicle-to-vehicle communication for joint perception and prediction. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 605–621.

73. Xu, R.; Xiang, H.; Xia, X.; Han, X.; Li, J.; Ma, J. OPV2V: An open benchmark dataset and fusion pipeline for perception with vehicle-to-vehicle communication. In Proceedings of the IEEE International Conference on Robotics and Automation, Philadelphia, PA, USA, 23–27 May 2022; pp. 2583–2589.

74. Xu, R.; Xiang, H.; Tu, Z.; Xia, X.; Yang, M.H.; Ma, J. V2X-ViT: Vehicle-to-everything cooperative perception with vision transformer. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

75. Bai, Z.; Wu, G.; Barth, M.J.; Liu, Y.; Sisbot, A.; Oguchi, K. PillarGrid: Deep Learning-based Cooperative Perception for 3D Object Detection from Onboard-Roadside LiDAR. In Proceedings of the IEEE International Conference on Intelligent Transportation Systems, Macau, China, 8–12 October 2022.

76. Tang, C.; Salakhutdinov, R.R. Multiple futures prediction. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

77. Rhinehart, N.; McAllister, R.; Levine, S. Deep Imitative Models for Flexible Inference, Planning, and Control. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

78. Roh, J.; Mavrogiannis, C.; Madan, R.; Fox, D.; Srinivasa, S. Multimodal Trajectory Prediction via Topological Invariance for Navigation at Uncontrolled Intersections. In Proceedings of the Conference on Robot Learning, Online, 26–30 April 2020; pp. 2216–2227.

79. Tian, F.; Zhou, R.; Li, Z.; Li, L.; Gao, Y.; Cao, D.; Chen, L. Trajectory Planning for Autonomous Mining Trucks Considering Terrain Constraints. IEEE Trans. Intell. Veh. 2021, 6, 772–786.

80. Zu, C.; Yang, C.; Wang, J.; Gao, W.; Cao, D.; Wang, F.-Y. Simulation and field testing of multiple vehicles collision avoidance algorithms. IEEE/CAA J. Autom. Sin. 2020, 7, 1045–1063.

81. Lim, B.; Arık, S.Ö.; Loeff, N.; Pfister, T. Temporal Fusion Transformers for interpretable multi-horizon time series forecasting. Int. J. Forecast. 2021, 37, 1748–1764.

82. Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond efficient transformer for long sequence time-series forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; pp. 11106–11115.

83. Oreshkin, B.N.; Carpov, D.; Chapados, N.; Bengio, Y. N-BEATS: Neural basis expansion analysis for interpretable time series forecasting. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

84. Klein, M.; Carpentier, T.; Jeanclaude, E.; Kassab, R.; Varelas, K.; de Bruijn, N.; Barbaresco, F.; Briheche, Y.; Semet, Y.; Aligne, F. AI-augmented multi function radar engineering with digital twin: Towards proactivity. In Proceedings of the IEEE Radar Conference, Florence, Italy, 21–25 September 2020; pp. 1–6.

85. Sturm, C.; Wiesbeck, W. Waveform design and signal processing aspects for fusion of wireless communications and radar sensing. Proc. IEEE 2011, 99, 1236–1259.

86. Bourdoux, A.; Ahmad, U.; Guermandi, D.; Brebels, S.; Dewilde, A.; Van Thillo, W. PMCW waveform and MIMO technique for a 79 GHz CMOS automotive radar. In Proceedings of the IEEE Radar Conference, Philadelphia, PA, USA, 2–6 May 2016; pp. 1–5.

87. Yuan, Y.; Wang, F.-Y. Blockchain and cryptocurrencies: Model, techniques, and applications. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 1421–1428.

88. Wang, F.-Y.; Qin, R.; Chen, Y.; Tian, Y.; Wang, X.; Hu, B. Federated ecology: Steps toward confederated intelligence. IEEE Trans. Comput. Soc. Syst. 2021, 8, 271–278.

89. Li, L.; Fan, Y.; Tse, M.; Lin, K.Y. A review of applications in federated learning. Comput. Ind. Eng. 2020, 149, 106854.

90. Fong, L.W.; Lou, P.C.; Lu, L.; Cai, P. Radar Sensor Fusion via Federated Unscented Kalman Filter. In Proceedings of the International Conference on Measurement, Information and Control, Harbin, China, 23–25 August 2019; pp. 51–56.

91. Tian, Y.; Wang, J.; Wang, Y.; Zhao, C.; Yao, F.; Wang, X. Federated Vehicular Transformers and Their Federations: Privacy-Preserving Computing and Cooperation for Autonomous Driving. IEEE Trans. Intell. Veh. 2022, 7, 456–465.

92. Driss, M.; Almomani, I.; Ahmad, J. A federated learning framework for cyberattack detection in vehicular sensor networks. Complex Intell. Syst. 2022, 8, 1–15.

93. Zhao, Y.; Barnaghi, P.; Haddadi, H. Multimodal Federated Learning on IoT Data. In Proceedings of the IEEE/ACM Seventh International Conference on Internet-of-Things Design and Implementation, Milano, Italy, 4–6 May 2022; pp. 43–54.

94. Berens, F.; Elser, S.; Reischl, M. Generation of synthetic Point Clouds for MEMS LiDAR Sensor. TechRxiv Preprint 2022.

95. Sallab, A.E.; Sobh, I.; Zahran, M.; Essam, N. LiDAR Sensor modeling and Data augmentation with GANs for Autonomous driving. In Proceedings of the International Conference on Machine Learning Workshop on AI for Autonomous Driving, Long Beach, CA, USA, 10–15 June 2019.

96. Shan, T.; Wang, J.; Chen, F.; Szenher, P.; Englot, B. Simulation-based lidar super-resolution for ground vehicles. Robot. Auton. Syst. 2020, 134, 103647.

97. Kwon, Y.; Sung, M.; Yoon, S.E. Implicit LiDAR Network: LiDAR Super-Resolution via Interpolation Weight Prediction. In Proceedings of the International Conference on Robotics and Automation, Philadelphia, PA, USA, 23–27 May 2022; pp. 8424–8430.

98. Berens, F.; Elser, S.; Reischl, M. Genetic Algorithm for the Optimal LiDAR Sensor Configuration on a Vehicle. IEEE Sens. J. 2021, 22, 2735–2743.

99. Hu, H.; Liu, Z.; Chitlangia, S.; Agnihotri, A.; Zhao, D. Investigating the Impact of Multi-LiDAR Placement on Object Detection for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–23 June 2022; pp. 2550–2559.

100. Tian, Y.; Wang, X.; Shen, Y.; Guo, Z.; Wang, Z.; Wang, F.-Y. Parallel Point Clouds: Hybrid Point Cloud Generation and 3D Model Enhancement via Virtual–Real Integration. Remote Sens. 2021, 13, 2868.

101. Rouffet, T.; Poisson, J.B.; Hottier, V.; Kemkemian, S. Digital twin: A full virtual radar system with the operational processing. In Proceedings of the International Radar Conference, Toulon, France, 23–27 September 2019; pp. 1–5.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).