A Reinforcement Learning-Based Strategy of Path Following for Snake Robots with an Onboard Camera

Abstract

1. Introduction

- A novel hierarchical control method that combines the RL algorithm with the gait equation is developed for the path following of snake robots, which ensures efficient training and satisfactory following accuracy.

- A visual localization stabilization term is introduced into the reward function to suppress excessive head swings; this keeps the pan-tilt compensation effective and thereby improves the accuracy of visual localization.

- To verify the effectiveness of the algorithm, real-world experiments are conducted on a physical snake robot, and the experimental results demonstrate the promising path-following performance of the proposed method.
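The first two contributions describe a two-layer structure: an RL layer that chooses gait parameters and a gait layer that turns them into joint commands, with a reward that also penalizes head swings. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation. It assumes a serpenoid (lateral undulation) gait equation whose joint offset is the only quantity chosen by the policy, tracking terms on cross-track and heading error, and a quadratic penalty on the head yaw rate as the stabilization term. All names, weights, and gait parameters (`SerpenoidGait`, `path_following_reward`, `control_step`, `w_stab`, and so on) are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SerpenoidGait:
    """Hypothetical gait executive layer: serpenoid lateral undulation.

    Joint i follows phi_i(t) = alpha * sin(omega * t + i * delta) + offset,
    where the offset comes from the RL layer and steers the robot.
    All default values below are assumptions, not the paper's settings.
    """
    n_joints: int = 8
    alpha: float = math.radians(30.0)        # amplitude [rad]
    omega: float = 2.0 * math.pi * 0.5       # temporal frequency [rad/s]
    delta: float = math.radians(45.0)        # phase lag between joints [rad]
    max_offset: float = math.radians(20.0)   # clip for the steering offset [rad]

    def joint_angles(self, t: float, offset: float) -> List[float]:
        # Clip the high-level command so the low-level gait stays well-formed.
        offset = max(-self.max_offset, min(self.max_offset, offset))
        return [self.alpha * math.sin(self.omega * t + i * self.delta) + offset
                for i in range(self.n_joints)]


def path_following_reward(cross_track_error: float,
                          heading_error: float,
                          head_yaw_rate: float,
                          w_dist: float = 1.0,
                          w_head: float = 0.5,
                          w_stab: float = 0.1) -> float:
    """Hypothetical reward: tracking terms plus a stabilization penalty
    that discourages fast head (camera) swings."""
    tracking = -w_dist * abs(cross_track_error) - w_head * abs(heading_error)
    stabilization = -w_stab * head_yaw_rate ** 2
    return tracking + stabilization


def control_step(policy: Callable[[List[float]], float],
                 gait: SerpenoidGait,
                 observation: List[float],
                 t: float) -> List[float]:
    """One tick of the hierarchy: the RL layer picks a gait parameter
    (here only the offset), and the gait layer produces joint references."""
    offset = policy(observation)
    return gait.joint_angles(t, offset)


# Usage: a proportional rule stands in for the trained policy network.
toy_policy = lambda obs: -0.5 * obs[0]   # obs[0]: assumed cross-track error [m]
angles = control_step(toy_policy, SerpenoidGait(), [0.2, 0.0], t=0.0)
```

Keeping the gait equation fixed and letting the policy adjust only a low-dimensional parameter is what keeps the action space small; the stabilization weight trades tracking aggressiveness against camera steadiness.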

2. Materials and Methods

2.1. Problem Statement

2.2. Hierarchical Path Following Control

2.2.1. Visual Localization

2.2.2. RL Policy Training Layer

2.2.3. Gait Executive Layer

3. Results

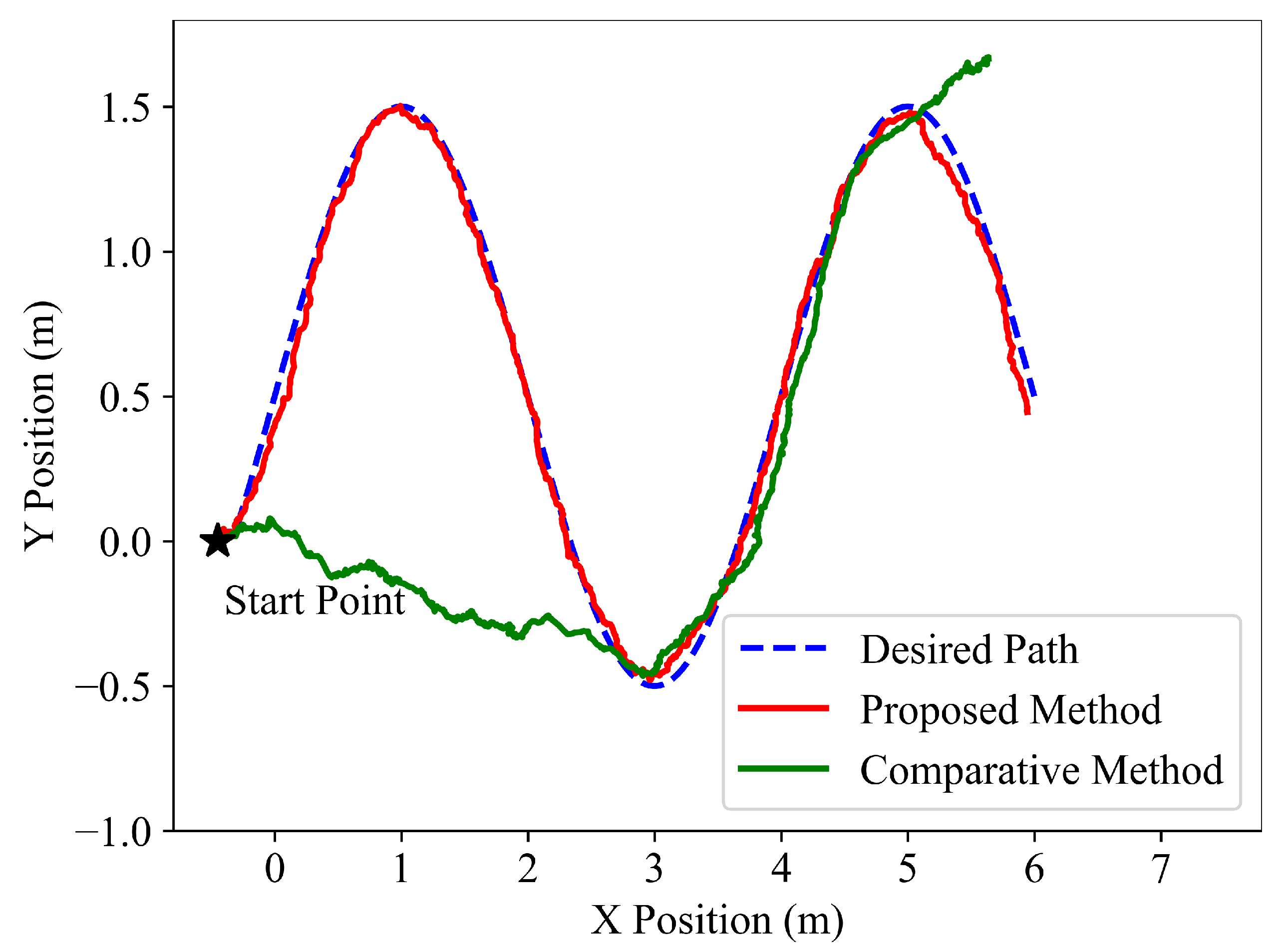

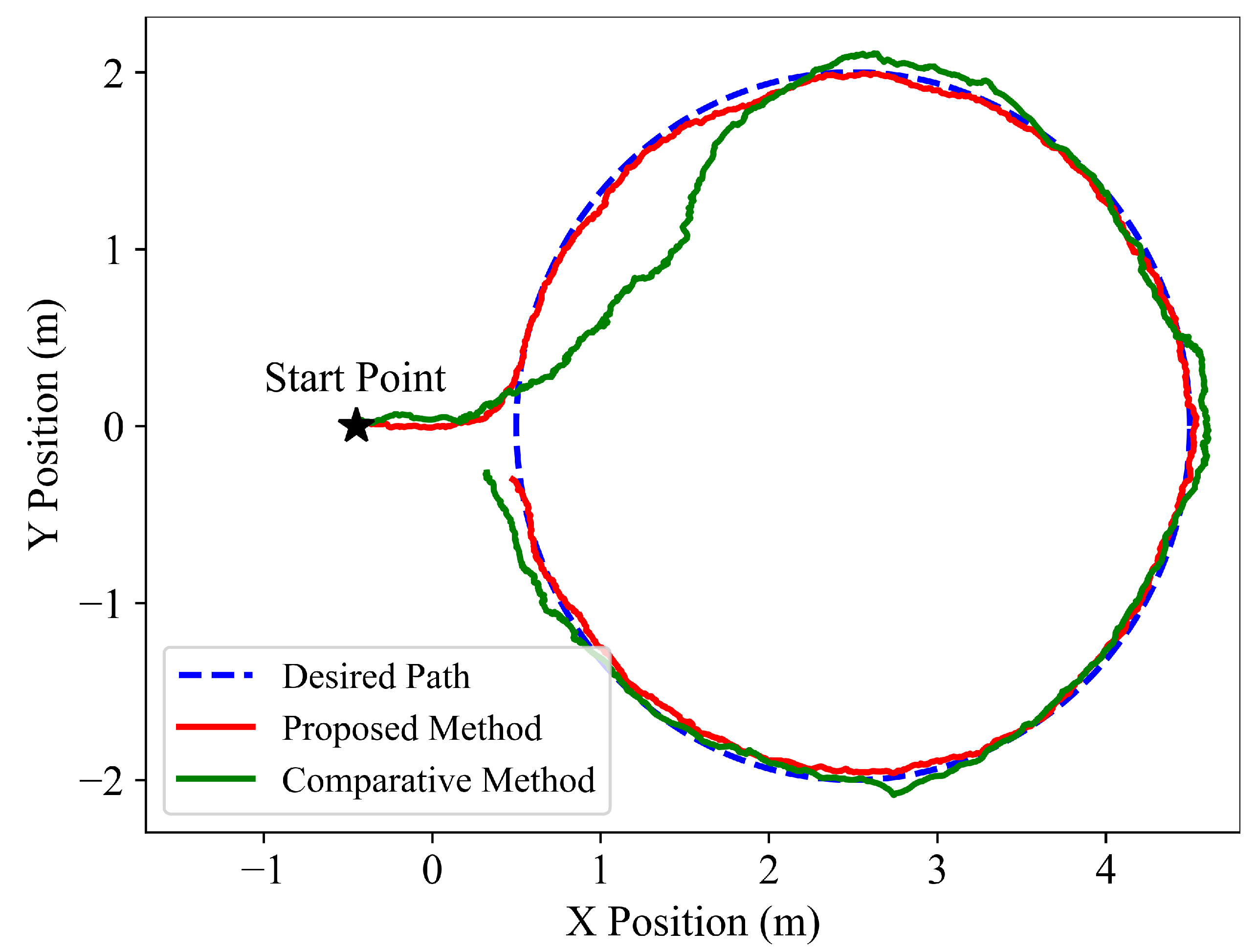

3.1. Simulations

3.2. Experiments

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, L.; Guo, X.; Fang, Y. A Reinforcement Learning-Based Strategy of Path Following for Snake Robots with an Onboard Camera. Sensors 2022, 22, 9867. https://doi.org/10.3390/s22249867

Chicago/Turabian Style: Liu, Lixing, Xian Guo, and Yongchun Fang. 2022. "A Reinforcement Learning-Based Strategy of Path Following for Snake Robots with an Onboard Camera." Sensors 22, no. 24: 9867. https://doi.org/10.3390/s22249867