Hand Gesture Recognition Using EMG-IMU Signals and Deep Q-Networks

Abstract

1. Introduction

- We use our large dataset of 85 users, which covers 11 different hand gestures (5 static and 6 dynamic) recorded with both EMG and IMU signals. The data were acquired with two different armband sensors: the Myo armband and the G-force sensor.

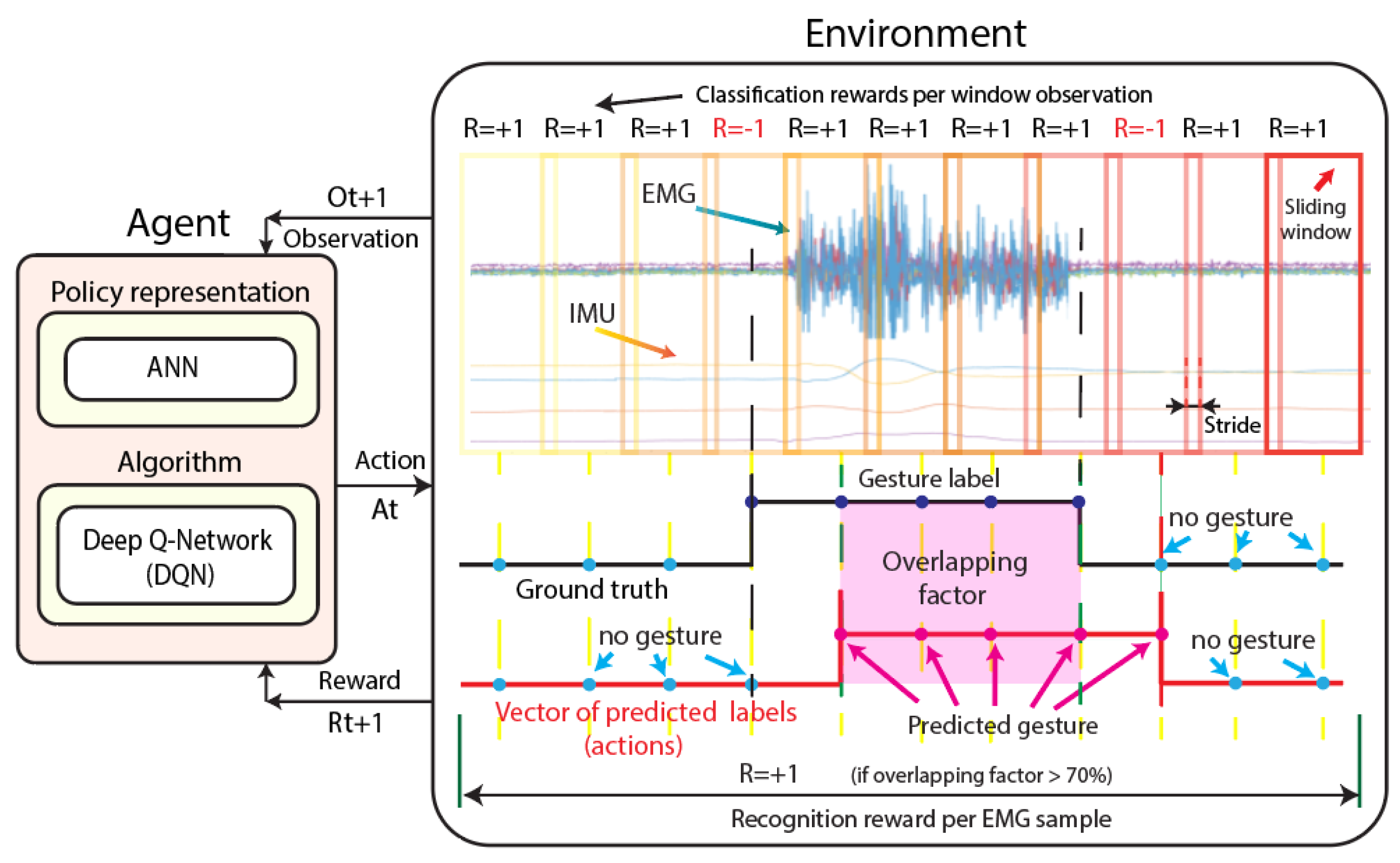

- We successfully combine the EMG-IMU signals with the deep Q-network (DQN) reinforcement learning algorithm. We propose an agent policy representation based on artificial neural networks (ANNs).

- We compare the results of the proposed method using both sensors, the Myo armband and G-force sensors. We also compare the results found in the present work, which uses EMG and IMU signals, with those of a method previously developed on a dataset that used only EMG signals and the Q-learning algorithm.

2. Hand Gesture Recognition Method

2.1. Data Acquisition

2.2. Pre-Processing

2.3. Feature Extraction

2.4. Classification of EMGs

2.4.1. Q-Learning

2.4.2. Deep Q-Networks (DQN)

Algorithm 1. DQN with Experience Replay
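As a point of reference, the building blocks of a generic DQN with experience replay can be sketched as below. This follows the standard formulation (Mnih et al., 2015, listed in the References) and is not the authors' exact procedure. The names `ReplayBuffer`, `epsilon_greedy`, and `td_targets` are hypothetical; the discount factor of 0.99 is taken from the hyperparameter table.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity):
        # A bounded deque drops the oldest transition once capacity is reached.
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # Uniform sampling reduces the correlation between consecutive observations.
        return rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, otherwise the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def td_targets(batch, target_q, gamma=0.99):
    """Compute y = r for terminal transitions, else y = r + gamma * max_a Q_target(s', a).

    target_q is an assumed callable mapping a state to a list of Q-values.
    """
    targets = []
    for _state, _action, reward, next_state, done in batch:
        if done:
            targets.append(reward)
        else:
            targets.append(reward + gamma * max(target_q(next_state)))
    return targets
```

In a full training loop, the online network would be fitted to these targets on each sampled mini-batch, and the target network would be updated slowly towards the online network.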

2.4.3. DQN for EMG-IMU Classification

2.5. Post-Processing

3. Results

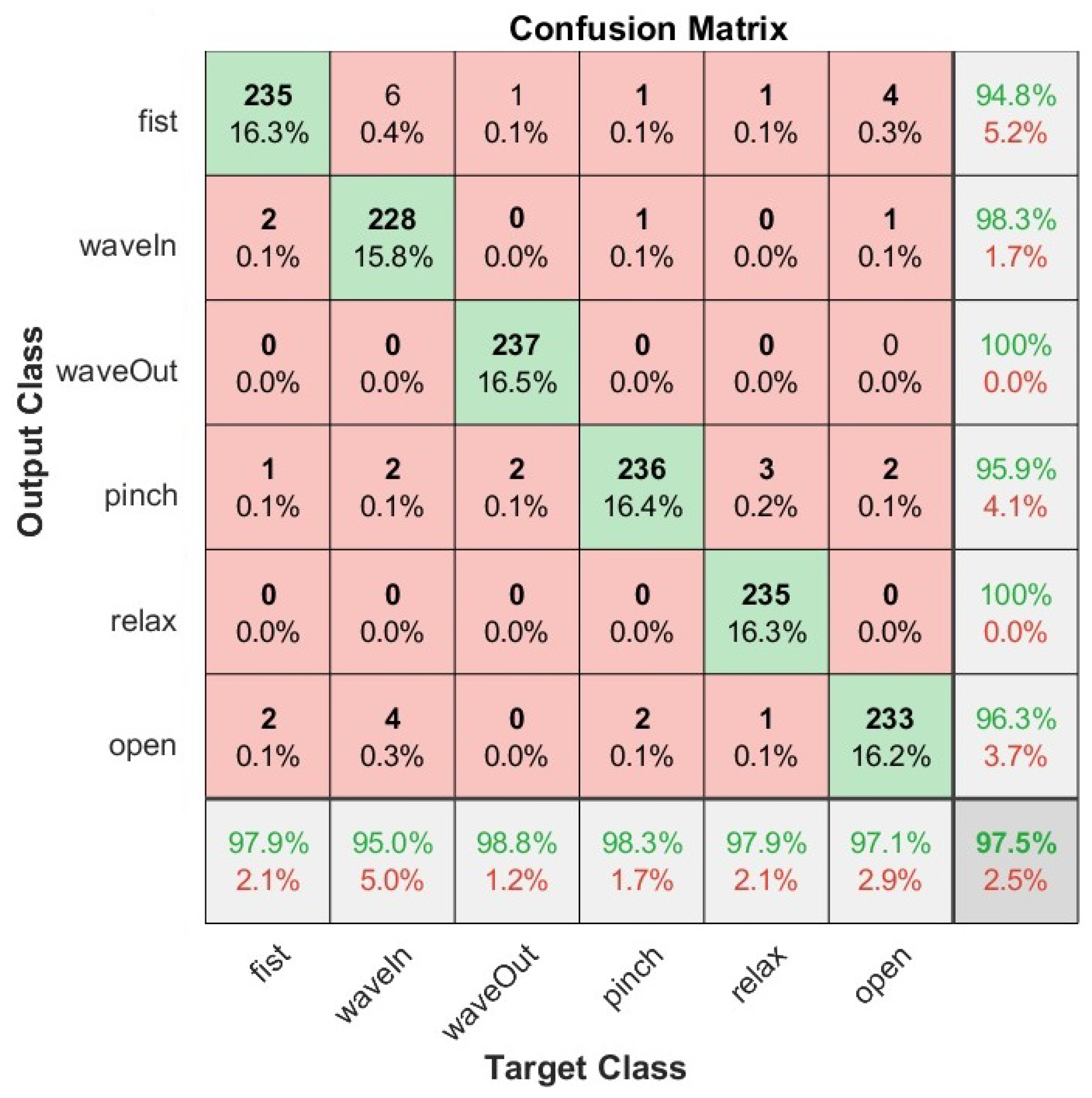

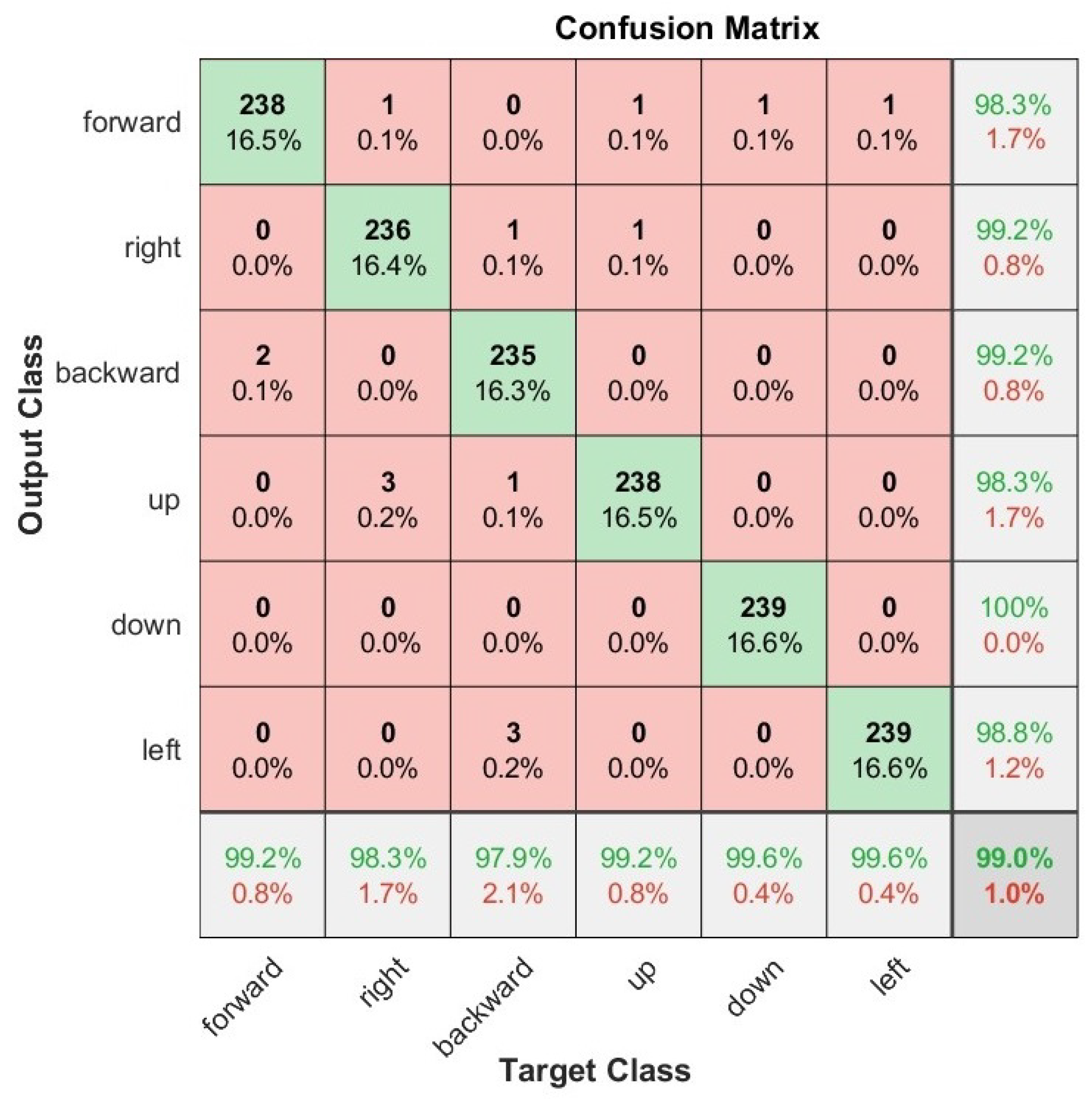

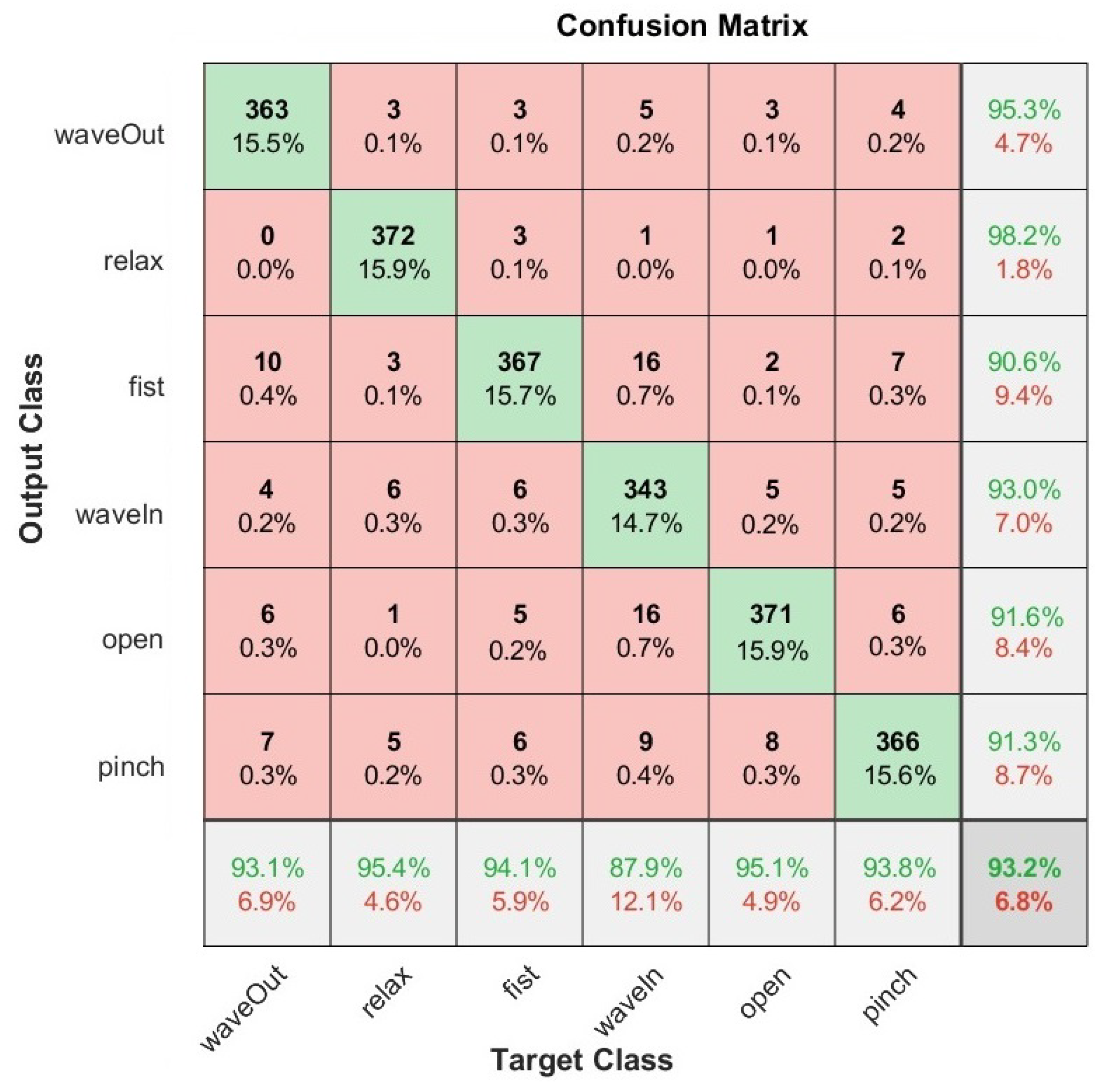

3.1. Validation Results

3.2. Testing Results

3.3. Comparison with Other Methods

4. Discussion

- According to the test results, the best classification accuracies were obtained for static gestures using the Myo armband sensor and were and for the classification and recognition, respectively. On the other hand, for dynamic gestures using the Myo armband sensor, the accuracies were and for the classification and recognition, respectively. The accuracies of the test results for static gestures using the G-force sensor were and for the classification and recognition, respectively. On the other hand, for dynamic gestures using the G-force sensor, the accuracies were and for the classification and recognition, respectively. This indicates that the method based on a DQN for the Myo armband sensor obtained slightly better results than the method based on a DQN for the G-force sensor.

- We compared the proposed method, which uses EMG and IMU signals, with similar works in which the same sensor was used with only EMG signals for static gestures. We obtained accuracies of and for the classification and recognition, respectively, using both EMG and IMU signals versus accuracies of and for the classification and recognition, respectively, using only EMG signals. This represents a 7% and 1% improvement in the classification and recognition, respectively, as well as a substantial reduction of more than 10% in the standard deviation of these metrics when using EMG-IMU signals instead of EMG signals alone, which indicates the benefit of combining the two signal types. To our knowledge, this is the first study to use RL with EMG-IMU signals, and it obtained better results than RL with EMG signals alone. Our results also outperformed those obtained with methods that use EMG or EMG-IMU signals with supervised learning.

- In general, the difference between the validation and testing results for the classification and recognition was less than 5%. Because this difference is small, the proposed method appears to be robust and does not show signs of overfitting for the proposed dataset distribution.

- The processing time of each window observation was, on average, 33 ms for both sensors. Since this is below the 300 ms threshold, both models can be considered to work in real time for the proposed application.

- Although the results are encouraging, future work will examine the convenience and comfort that users experience when performing static or dynamic gestures, since user preference data can influence the development of HGR architectures.
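The real-time criterion discussed above (average processing time per window below 300 ms) can be checked with a small timing helper. This is a minimal sketch: `mean_latency_ms`, `is_real_time`, and the `process_window` callable are hypothetical names, and the 300 ms limit is the threshold quoted in the text.

```python
import time

REAL_TIME_LIMIT_MS = 300.0  # real-time threshold quoted in the discussion


def mean_latency_ms(process_window, windows, clock=time.perf_counter):
    """Average wall-clock time, in milliseconds, spent processing one window."""
    if not windows:
        raise ValueError("need at least one window")
    start = clock()
    for window in windows:
        process_window(window)
    return (clock() - start) * 1000.0 / len(windows)


def is_real_time(latency_ms, limit_ms=REAL_TIME_LIMIT_MS):
    """True when the average per-window latency is strictly below the limit."""
    return latency_ms < limit_ms
```

The `clock` argument is injectable so that the measurement can be reproduced deterministically in a unit test.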

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HGR | hand gesture recognition systems |
| EMG | electromyography |
| EMGs | electromyography signals |
| IMU | inertial measurement unit |
| IMUs | inertial measurement unit signals |
| ML | machine learning |
| RL | reinforcement learning |
| CNN | convolutional neural network |
| ANN | artificial neural network |
| DQN | deep Q-network |

Appendix A

| Alpha | Classification Accuracy | Recognition Accuracy |
|---|---|---|
| 0.07 | | |
| 0.05 | | |
| 0.03 | | |
| 0.01 | | |
| 0.007 | | |
| 0.005 | | |
| 0.003 | | |
| 0.001 | | |
| 0.0007 | | |
| 0.0005 | | |
| 0.0003 | | |
| 0.0001 | | |
| 0.00007 | | |
| 0.00005 | | |
| 0.00003 | | |
| 0.00001 | | |
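A sweep over the alpha values listed in this table can be sketched as follows. The selection rule (keep the alpha with the highest validation score) and the names `select_learning_rate` and `validation_accuracy` are assumptions for illustration; `validation_accuracy` stands in for whatever routine trains a model with a given alpha and returns its validation accuracy.

```python
# Alpha (learning rate) values listed in the Appendix A table.
ALPHAS = [0.07, 0.05, 0.03, 0.01, 0.007, 0.005, 0.003, 0.001,
          0.0007, 0.0005, 0.0003, 0.0001, 0.00007, 0.00005, 0.00003, 0.00001]


def select_learning_rate(alphas, validation_accuracy):
    """Return (best_alpha, best_score) for a callable validation_accuracy(alpha) -> float."""
    scores = {alpha: validation_accuracy(alpha) for alpha in alphas}
    best_alpha = max(scores, key=scores.get)
    return best_alpha, scores[best_alpha]
```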

References

1. Jaramillo-Yánez, A.; Benalcázar, M.E.; Mena-Maldonado, E. Real-Time Hand Gesture Recognition Using Surface Electromyography and Machine Learning: A Systematic Literature Review. Sensors 2020, 20, 2467.

2. Kim, J.; Yang, S.; Koo, B.; Lee, S.; Park, S.; Kim, S.; Cho, K.H.; Kim, Y. sEMG-Based Hand Posture Recognition and Visual Feedback Training for the Forearm Amputee. Sensors 2022, 22, 7984.

3. Lin, W.; Li, C.; Zhang, Y. Interactive Application of Data Glove Based on Emotion Recognition and Judgment System. Sensors 2022, 22, 6327.

4. Chico, A.; Cruz, P.J.; Vásconez, J.P.; Benalcázar, M.E.; Álvarez, R.; Barona, L.; Valdivieso, Á.L. Hand Gesture Recognition and Tracking Control for a Virtual UR5 Robot Manipulator. In Proceedings of the 2021 IEEE Fifth Ecuador Technical Chapters Meeting (ETCM), Cuenca, Ecuador, 12–15 October 2021; pp. 1–6.

5. Romero, R.; Cruz, P.J.; Vásconez, J.P.; Benalcázar, M.; Álvarez, R.; Barona, L.; Valdivieso, Á.L. Hand Gesture and Arm Movement Recognition for Multimodal Control of a 3-DOF Helicopter. In International Conference on Robot Intelligence Technology and Applications; Springer: Cham, Switzerland, 2022; pp. 363–377.

6. Benalcázar, M.E.; Jaramillo, A.G.; Zea, A.; Páez, A.; Andaluz, V.H. Hand gesture recognition using machine learning and the Myo armband. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 1040–1044.

7. Nuzzi, C.; Pasinetti, S.; Lancini, M.; Docchio, F.; Sansoni, G. Deep learning based machine vision: First steps towards a hand gesture recognition set up for collaborative robots. In Proceedings of the 2018 Workshop on Metrology for Industry 4.0 and IoT, Brescia, Italy, 16–18 April 2018; pp. 28–33.

8. Yang, L.; Chen, J.; Zhu, W. Dynamic hand gesture recognition based on a leap motion controller and two-layer bidirectional recurrent neural network. Sensors 2020, 20, 2106.

9. Kim, M.; Cho, J.; Lee, S.; Jung, Y. IMU sensor-based hand gesture recognition for human-machine interfaces. Sensors 2019, 19, 3827.

10. Wen, F.; Sun, Z.; He, T.; Shi, Q.; Zhu, M.; Zhang, Z.; Li, L.; Zhang, T.; Lee, C. Machine learning glove using self-powered conductive superhydrophobic triboelectric textile for gesture recognition in VR/AR applications. Adv. Sci. 2020, 7, 2000261.

11. Kundu, A.S.; Mazumder, O.; Lenka, P.K.; Bhaumik, S. Hand gesture recognition based omnidirectional wheelchair control using IMU and EMG sensors. J. Intell. Robot. Syst. 2018, 91, 529–541.

12. Zhang, X.; Yang, Z.; Chen, T.; Chen, D.; Huang, M.C. Cooperative sensing and wearable computing for sequential hand gesture recognition. IEEE Sens. J. 2019, 19, 5775–5783.

13. Jiang, S.; Lv, B.; Guo, W.; Zhang, C.; Wang, H.; Sheng, X.; Shull, P.B. Feasibility of wrist-worn, real-time hand, and surface gesture recognition via sEMG and IMU sensing. IEEE Trans. Ind. Inform. 2017, 14, 3376–3385.

14. Benalcázar, M.E.; Motoche, C.; Zea, J.A.; Jaramillo, A.G.; Anchundia, C.E.; Zambrano, P.; Segura, M.; Palacios, F.B.; Pérez, M. Real-time hand gesture recognition using the Myo armband and muscle activity detection. In Proceedings of the 2017 IEEE Second Ecuador Technical Chapters Meeting (ETCM), Salinas, Ecuador, 16–20 October 2017; pp. 1–6.

15. Englehart, K.; Hudgins, B. A robust, real-time control scheme for multifunction myoelectric control. IEEE Trans. Biomed. Eng. 2003, 50, 848–854.

16. Vásconez, J.P.; López, L.I.B.; Caraguay, Á.L.V.; Cruz, P.J.; Álvarez, R.; Benalcázar, M.E. A Hand Gesture Recognition System Using EMG and Reinforcement Learning: A Q-Learning Approach. In International Conference on Artificial Neural Networks; Springer: Cham, Switzerland, 2021; pp. 580–591.

17. Zhang, C.; Wang, Z.; An, Q.; Li, S.; Hoorfar, A.; Kou, C. Clustering-Driven DGS-Based Micro-Doppler Feature Extraction for Automatic Dynamic Hand Gesture Recognition. Sensors 2022, 22, 8535.

18. Jiang, Y.; Song, L.; Zhang, J.; Song, Y.; Yan, M. Multi-Category Gesture Recognition Modeling Based on sEMG and IMU Signals. Sensors 2022, 22, 5855.

19. Pan, T.Y.; Tsai, W.L.; Chang, C.Y.; Yeh, C.W.; Hu, M.C. A hierarchical hand gesture recognition framework for sports referee training-based EMG and accelerometer sensors. IEEE Trans. Cybern. 2022, 52, 3172–3183.

20. Colli Alfaro, J.G.; Trejos, A.L. User-Independent Hand Gesture Recognition Classification Models Using Sensor Fusion. Sensors 2022, 22, 1321.

21. Seok, W.; Kim, Y.; Park, C. Pattern recognition of human arm movement using deep reinforcement learning. In Proceedings of the 2018 International Conference on Information Networking (ICOIN), Chiang Mai, Thailand, 10–12 January 2018; pp. 917–919.

22. Song, C.; Chen, C.; Li, Y.; Wu, X. Deep Reinforcement Learning Apply in Electromyography Data Classification. In Proceedings of the 2018 IEEE International Conference on Cyborg and Bionic Systems (CBS), Shenzhen, China, 25–27 October 2018; pp. 505–510.

23. Sharma, R.; Kukker, A. Neural Reinforcement Learning based Identifier for Typing Keys using Forearm EMG Signals. In Proceedings of the 9th International Conference on Signal Processing Systems, Auckland, New Zealand, 27–30 November 2017; pp. 225–229.

24. Kukker, A.; Sharma, R. Neural reinforcement learning classifier for elbow, finger and hand movements. J. Intell. Fuzzy Syst. 2018, 35, 5111–5121.

25. Barona López, L.I.; Valdivieso Caraguay, Á.L.; Vimos, V.H.; Zea, J.A.; Vásconez, J.P.; Álvarez, M.; Benalcázar, M.E. An Energy-Based Method for Orientation Correction of EMG Bracelet Sensors in Hand Gesture Recognition Systems. Sensors 2020, 20, 6327.

26. Van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

27. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

28. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

29. Kapturowski, S.; Ostrovski, G.; Quan, J.; Munos, R.; Dabney, W. Recurrent experience replay in distributed reinforcement learning. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

User-Specific Model (One Model for Each of the 85 Users)

| Set | Number of Models | Training | Validation | Test |
|---|---|---|---|---|
| Training set | 43 models trained (to find the best hyperparameters) | 180 samples per user | 180 samples per user | - |
| Testing set | 42 models trained (to use the best of the found hyperparameters) | 180 samples per user | - | 180 samples per user |
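The user-level split in this table (43 users for hyperparameter search, 42 users for testing) can be sketched as below. How users were actually assigned to each group is not stated here, so the seeded random shuffle and the name `split_users` are assumptions for illustration only.

```python
import random


def split_users(user_ids, n_tuning=43, seed=0):
    """Shuffle users and split them into a tuning group and a testing group.

    The first n_tuning users are used to search for hyperparameters; the
    remaining users are used to evaluate the best configuration found.
    """
    ids = list(user_ids)
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    return ids[:n_tuning], ids[n_tuning:]


tuning_users, testing_users = split_users(range(1, 86))  # 85 users in total
```

Because each user gets an individual model, each model is trained on that user's 180 training samples and evaluated on the same user's 180 validation or test samples.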

| Hyperparameter Name | Hyperparameter Values |
|---|---|
| Activation function between layers | ReLU |
| Target smooth factor | 5 × 10 |
| Experience buffer length | 1 × 10 |
| Learn rate (α) | 0.3 × 10 |
| Epsilon initial value | 1 |
| Epsilon-greedy epsilon decay | 1 × 10 |
| Discount factor | 0.99 |
| Training set replay per user | 15 times |
| Sliding window size | 300 points |
| Stride size | 40 points |
| Mini-batch size | 64 |
| Optimizer | Adam |
| Gradient decay factor | 0.9 |
| L2 regularization factor | 0.0001 |
| Number of neurons per layer | 60, 50, 50, 7 for the input layer, hidden layer 1, hidden layer 2, and output layer, respectively |
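To make the window and network settings in this table concrete, the sketch below segments a signal with a 300-point window and a 40-point stride, then runs a forward pass through a fully connected network with ReLU between layers. It is a minimal illustration, not the authors' implementation: the 60-unit input layer is read here as a 60-element feature vector, the weights are random rather than trained, and `sliding_windows`, `init_network`, and `q_values` are hypothetical names.

```python
import random

LAYER_SIZES = [60, 50, 50, 7]  # input, hidden 1, hidden 2, output (from the table)
WINDOW, STRIDE = 300, 40       # sliding window size and stride, in points


def sliding_windows(signal, window=WINDOW, stride=STRIDE):
    """Return every full window of `window` points, advancing `stride` points each time."""
    return [signal[i:i + window] for i in range(0, len(signal) - window + 1, stride)]


def init_network(sizes=LAYER_SIZES, seed=0):
    """Create one (weights, biases) pair per layer transition with small random weights."""
    rng = random.Random(seed)
    return [([[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)],
             [0.0] * n_out)
            for n_in, n_out in zip(sizes, sizes[1:])]


def q_values(network, features):
    """Forward pass: ReLU after each hidden layer, linear output (one value per action)."""
    x = features
    for k, (weights, biases) in enumerate(network):
        x = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        if k < len(network) - 1:
            x = [max(0.0, v) for v in x]
    return x


windows = sliding_windows(list(range(1000)))   # a 1000-point signal yields 18 windows
q = q_values(init_network(), [0.5] * 60)       # 7 outputs, one per action
```

Feature extraction, which would map each 300-point window to the network input, is omitted because its details are not given in this section.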

| Sensor | Classification Accuracy | Recognition Accuracy |
|---|---|---|
| Myo armband (Static gestures) | | |
| Myo armband (Dynamic gestures) | | |
| G-force (Static gestures) | | |
| G-force (Dynamic gestures) | | |

| Sensor | Classification Accuracy | Recognition Accuracy |
|---|---|---|
| Myo armband (Static gestures) | | |
| Myo armband (Dynamic gestures) | | |
| G-force (Static gestures) | | |
| G-force (Dynamic gestures) | | |

| Learning Method | Type of Signal | Classification | Recognition |
|---|---|---|---|
| Reinforcement learning (this work) | EMG + IMU | | |
| Reinforcement learning [16] | EMG | | |
| Supervised learning (KNN classifier) | EMG + IMU | | |
| Supervised learning (CNN classifier) | EMG + IMU | | |
| Supervised learning [25] | EMG | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Vásconez, J.P.; Barona López, L.I.; Valdivieso Caraguay, Á.L.; Benalcázar, M.E. Hand Gesture Recognition Using EMG-IMU Signals and Deep Q-Networks. Sensors 2022, 22, 9613. https://doi.org/10.3390/s22249613
