Abstract

The recognition of hand signs is essential for several applications. Due to the variety of possible signals and the complexity of sensor-based systems for hand gesture recognition, a new artificial neural network algorithm that provides high accuracy with a reduced architecture and automatic feature selection is needed. In this paper, a novel classification method based on an extreme learning machine (ELM), supported by an improved grasshopper optimization algorithm (GOA) as the core of a weight-pruning process, is proposed. The k-tournament grasshopper optimization algorithm was implemented to select and prune the ELM weights, resulting in the proposed k-tournament grasshopper extreme learner (KTGEL) classifier. Myographic methods, such as force myography (FMG), deliver informative signals that can form the basis for hand sign recognition. FMG was investigated with the aim of limiting the number of sensors to suitable positions and providing adequate signal processing algorithms for prospective implementation in wearable embedded systems. Based on the proposed KTGEL, the number of sensors and the effect of the number of subjects were investigated in the first stage. It was shown that, as the number of subjects participating in the data collection increased, eight was the minimal number of sensors needed for acceptable sign recognition performance. Moreover, with 3000 hidden nodes and after a feature selection wrapper, the ELM reached both a microaverage precision and a microaverage sensitivity of 97% for the recognition of a set of gestures with a middle ambiguity level. The KTGEL reduced the hidden nodes to only 1000 and reached the same total sensitivity, with a total precision reduced by only 1%, without needing an additional feature selection method.

1. Introduction

Hand gestures are behavioral attributes that are authentic (they emphasize or help to express a thought or feeling), distinguishable (they carry a known meaning that depends on culture, language, and use case), and physiologically unique (the physiological signals and phenomena resulting from various hand gestures present varying unique properties). Hand gesture recognition is essential in several applications, such as sign language, mobile security systems, smart homes, and other IoT-based applications. It also involves several challenges concerning the sensors and machine learning algorithms, including a system design that must fit different persons and the influence of the subject's physiological state on the quality of the collected signal. Hand grasp recognition and hand sign recognition are the main subtopics of hand gesture recognition. The former is dedicated to identifying the nature of grasps and object-handling tasks, while sign recognition is dedicated mainly to communication between persons or between persons and intelligent agents. Hand sign recognition is valuable, e.g., for communication over long distances, in noisy environments, and with people with disabilities. Identifying hand signs with camera-based systems is challenging in such environments because of limited resolution, large distances, and varying light conditions. Myographic measurement methods and sensors, which directly collect information on the muscle state during the gesture performance, can be of great importance in overcoming these limitations. Techniques such as surface electromyography, force myography, and surface electrical impedance myography show promising performance for gesture detection, even though only a few investigations of sign language recognition based on myographic signals exist so far. Another challenge for hand sign classification lies at the level of algorithms and features. 
The classification algorithm needs a suitable feature subset to achieve high classification accuracy. In addition, the quality of the classification varies with the number of features. Hence, controlling the number of features is essential: too few features may cause the classes to overlap, so that the classification is insufficiently grounded, whereas too many features increase the dimension of the problem and require more complex classification algorithms. Thus, the goal of feature selection is to define the best subset of features by removing irrelevant and redundant features from the data, improving the classification performance and stability. Moreover, reduced resource consumption is required to make the classification algorithm suitable for wearable hand gesture recognition systems. Most investigations adopt feature selection based on metaheuristic optimization methods in binary format. The classification accuracy depends on many factors, including the types and number of gestures, the measurement accuracy of the myographic signals, and the choice of the classifier itself. Furthermore, the classification method should be suitable for solving multiclass problems with minimal computation. This property is reported to be ensured by the extreme learning machine (ELM), a single-layer feed-forward network (SLFN) with randomly generated input weights and biases, whose output layer weights are calculated via linear algebra, allowing fast training in only one iteration, even for multiclass problems. However, the ELM suffers from the uncertainty caused by this random weight generation. Many optimization methods have been suggested in the literature to solve this problem, including controlling the randomization and pruning the hidden nodes. However, weight pruning has not been sufficiently investigated for optimizing the ELM architecture. 
This work proposes a new approach for ELM network optimization based on a coupled weight and feature selection that allows not only the elimination of irrelevant weights in the network but also an integrated feature selection and hidden node number reduction.

The paper is structured as follows: Section 2 describes related works, providing information on the state of the art of ELM pruning and FMG-based gesture recognition. Section 3 details the ELM weight selection concept, the k-tournament grasshopper optimization algorithm, and the proposed k-tournament grasshopper extreme learner (KTGEL). Section 4 presents the study of the number of FMG sensors needed for an efficient hand sign recognition system and of the influence of the number of subjects on the KTGEL performance. This section also provides the experimental comparison of the KTGEL with the state of the art and its evaluation under varying ambiguity levels in the data set. The paper closes with the conclusion.

2. Related Work

In the first part of this section, we present an overview of methods applied to prune an extreme learning machine, reducing its model architecture while preserving its good performance, and we expose the gap in the approaches used to fulfill this aim. In the second part, an overview of hand gesture recognition based on FMG sensors is presented, focusing on the number of sensors, the features, the number of subjects, and American Sign Language recognition as an application.

2.1. Pruning of Extreme Learning Machine

An extreme learning machine (ELM) is a single-layer feed-forward network (SLFN) where the fundamental concept is that the weights and biases of the hidden layer are randomly generated. Moreover, the output layer weights are calculated using a least-squares solution defined by the outputs of the hidden layer and the target [1]. Thus, the weights that connect the hidden nodes to the outputs can be trained very fast in one iteration according to the pseudocode presented in Algorithm 1.

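Algorithm 1: Pseudocode of an extreme learning machine [2].

1. Given a training set $\aleph = \{(x_i, t_i) \mid x_i \in \mathbb{R}^n, t_i \in \mathbb{R}^m, i = 1, \dots, N\}$, activation function $g(x)$, and number of hidden nodes $\tilde{N}$;
2. Assign random input weights $w_j$ and biases $b_j$, for $j = 1, \dots, \tilde{N}$;
3. Calculate the hidden layer output matrix $H = [g(w_j \cdot x_i + b_j)]_{N \times \tilde{N}}$;
4. Calculate the output weight matrix $\beta = H^{\dagger} T$,

where $H^{\dagger}$ is the Moore–Penrose generalized inverse of matrix $H$ and $T = [t_1, \dots, t_N]^T$. Output: the output weight matrix $\beta$.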
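The four steps of Algorithm 1 can be sketched in plain Python as below. This is a minimal illustration, not the authors' implementation: the sigmoid activation, the uniform weight range [-1, 1], and the function names (`elm_train`, `elm_predict`, `hidden_output`, `solve_multi`) are assumptions. In step 4, the Moore–Penrose pseudoinverse is replaced by a ridge-regularized normal-equation solve, $\beta = (H^T H + \lambda I)^{-1} H^T T$, which tends to $H^{\dagger} T$ as $\lambda \to 0$.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic activation g(z) (assumed activation)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def hidden_output(X, W, b):
    """Step 3: H[i][j] = g(w_j . x_i + b_j) for every sample i and hidden node j."""
    return [[sigmoid(sum(w * x for w, x in zip(w_j, x_i)) + b_j)
             for w_j, b_j in zip(W, b)] for x_i in X]


def solve_multi(A, B):
    """Solve A X = B (A square) by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [A[i][:] + B[i][:] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[col])]
    return [row[n:] for row in M]


def elm_train(X, T, n_hidden, ridge=1e-3, seed=0):
    """Steps 1-4 of Algorithm 1; T holds one-hot targets (N x m)."""
    rng = random.Random(seed)
    n_features = len(X[0])
    # Step 2: random input weights and biases, never tuned afterwards.
    W = [[rng.uniform(-1.0, 1.0) for _ in range(n_features)] for _ in range(n_hidden)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    # Step 3: hidden layer output matrix H (N x n_hidden).
    H = hidden_output(X, W, b)
    # Step 4 (ridge stand-in for the pseudoinverse): (H^T H + ridge*I) beta = H^T T.
    N, m = len(H), len(T[0])
    HtH = [[sum(H[k][i] * H[k][j] for k in range(N)) + (ridge if i == j else 0.0)
            for j in range(n_hidden)] for i in range(n_hidden)]
    HtT = [[sum(H[k][i] * T[k][c] for k in range(N)) for c in range(m)]
           for i in range(n_hidden)]
    beta = solve_multi(HtH, HtT)
    return W, b, beta


def elm_predict(X, W, b, beta):
    """Class index with the largest output score (H beta) for each sample."""
    preds = []
    for h in hidden_output(X, W, b):
        scores = [sum(h_j * beta[j][c] for j, h_j in enumerate(h)) for c in range(len(beta[0]))]
        preds.append(max(range(len(scores)), key=scores.__getitem__))
    return preds


# Usage: two well-separated toy classes.
X = [[0.0, 0.1], [0.1, 0.0], [0.2, 0.1], [0.9, 1.0], [1.0, 0.9], [0.8, 0.9]]
labels = [0, 0, 0, 1, 1, 1]
T = [[1.0 if c == y else 0.0 for c in range(2)] for y in labels]
W, b, beta = elm_train(X, T, n_hidden=10)
print(elm_predict(X, W, b, beta))
```

Only step 4 involves solving a linear system; the input weights and biases drawn in step 2 are never updated, which is what makes single-pass training possible.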

Since its introduction, the ELM has been a subject of optimization, as it represents a promising option for embedded systems and online real-time classification tasks. However, it also presents some limitations, especially regarding its number of hidden nodes and its weight randomization method. Because the ELM randomly generates the input weights and the biases of the hidden nodes, it has the following consequences: first, a slow learning speed caused by hidden nodes whose output weights are so small that they play only a minor role in the network's output; second, a slow error reduction during training caused by these invalid hidden layer neurons, which increase the network complexity [3]. To solve this, most of the proposed algorithms focus on simplifying the computation process, finding the optimal depth of the SLFNs, or extending the generalization of the method via multiple layers or a complex domain. However, for random weight optimization, the proposed solutions tend to replace the completely random input weights and biases with fully controllable metrics, which turns the ELM into a controlled method and reduces the benefits of weight randomness in the ELM results. The optimally pruned ELM (OP-ELM) was proposed by Miche et al. [4]; it extends the ELM algorithm with kernel selection and neuron pruning, leading to more efficient algorithms and mitigating the problems the ELM experiences with irrelevant or correlated data [4,5]. Compared to the ELM, the OP-ELM enhanced the robustness and accuracy of the network. However, it required a higher computational time, which affected its accuracy and training time [6,7]. Genetic algorithms for pruned ELM (GPA-ELM) were proposed by Alencar et al. [8] to prune the hidden layer neurons based on multiobjective GAs. This approach combined the advantages of ELMs and GAs to optimize the performance of the ELM classifiers while pruning the maximum possible number of hidden neurons. 
In [9], the authors proposed the PSO-ELM, which optimizes the input feature subset and the number of hidden nodes to enhance the classification performance of the ELM for power system disturbance classification. The experimental results showed that the proposed PSO-ELM was faster and more accurate than the original ELM algorithm. However, the PSO used to perform these optimizations has been reported to be outperformed by newer swarm intelligence optimization methods, including the GOA [10,11,12]. In the literature, the main difference between the various pruned ELM versions lies in the optimization methods implemented to modify the ELM architecture and prune the hidden nodes. However, no approach has been proposed so far for weight selection, i.e., connection pruning, that does not control the random initialization, although this random initialization is an integral part of the extreme learning machine in data classification. Hence, this work optimizes the ELM through a weight selection performed by an improved version of the GOA after the initial random initialization, as a methodology for connection pruning in ELMs.

2.2. Sensors for FMG

Since FMG can be performed with either pressure or strain sensors, a wide choice of sensors is available. In 2006, Amft et al. [13] compared the force-sensitive resistor (FSR), a pressure sensor, with a fabric stretch sensor (FSS), a strain sensor, and with surface electromyography (sEMG) for monitoring muscle contraction during grasping, upper-hand activities, and object lifting. That study confirmed the feasibility of muscle activity detection by strain and pressure sensors as alternatives to sEMG. Moreover, the experimental results showed that the pressure sensors were more suitable as a future alternative to sEMG for gesture recognition applications, as they were able to monitor the contraction of more muscle groups than the strain sensor. Hence, the FSR pressure sensor was chosen for FMG measurement in this study. Moreover, as commercial sensors were more suitable for this work's aims, a survey of the FSR sensor market and publications was conducted. The costs of the various FSR sensors were almost the same, and the FSR sensor by Interlink Electronics and the FlexiForce™ by Tekscan Inc. were the most popular commercial sensors, used in 55% of publications on FMG applications until 2019 [14]; the sensor choice was therefore limited to these two sensors. Their characteristics, extracted from their data sheets, are shown in Table 1.

Table 1.

FSR Interlink and Flexiforce properties from data sheets [14].

Vecchi et al. compared these two sensors experimentally on several points, such as repeatability, time drift, and dynamic force measurement. The results showed that the FlexiForce sensors performed better in terms of linearity, repeatability, time drift, and dynamic accuracy, whereas Interlink's FSR was more robust [15]. Another study, conducted in 2006, compared the same sensors with the LuSense PS3 (Standard 151) sensor and concluded that the FlexiForce had not only the highest precision but also the highest noise, the slowest response time, and the largest drop in resistance from the nominal value during subsequent tests [16]. Hence, each sensor has its pros and cons. The choice was based on the response time, since a fast, real-time system is the goal in this study's outlook. Thus, Interlink's FSR, which has the shortest response time both in the data sheet (see Table 1) and in experiments [16], was chosen to perform the FMG data collection in this work. A typical Interlink Electronics FSR sensor consists of a top carbon-based ink layer and a bottom conductive substrate layer, with a spacer adhesive between the two layers [17]. During FMG collection, as the hand exerts a force, the corresponding muscles on the arm deform the skin's surface. These deformations apply pressure to the top layer of the FSR, changing its resistance. A voltage divider translates these resistance changes into voltage changes, producing distinct FMG patterns that can be used for hand gesture recognition; the best sensitivity is obtained with a reference resistance of 100 kΩ in the voltage divider [17,18].
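The resistance-to-voltage conversion can be illustrated with a short sketch. It assumes a common wiring in which the FSR sits between the supply and the measurement node and the 100 kΩ reference resistor connects that node to ground, so that $V_{out} = V_{cc} R_{ref} / (R_{ref} + R_{FSR})$ rises as pressure lowers $R_{FSR}$. The 3.3 V supply and the function names are illustrative assumptions, not values taken from this work.

```python
import math

R_REF_OHM = 100e3   # reference resistor reported above for best sensitivity
V_SUPPLY = 3.3      # assumed supply voltage (illustrative only)


def divider_output(r_fsr_ohm, r_ref_ohm=R_REF_OHM, v_supply=V_SUPPLY):
    """Voltage at the node between the FSR (high side) and R_ref (low side)."""
    return v_supply * r_ref_ohm / (r_ref_ohm + r_fsr_ohm)


def fsr_resistance(v_out, r_ref_ohm=R_REF_OHM, v_supply=V_SUPPLY):
    """Invert the divider to recover the FSR resistance from a measured voltage."""
    if v_out <= 0.0:
        return math.inf  # no measurable current: FSR effectively unloaded
    return r_ref_ohm * (v_supply - v_out) / v_out


# Pressing harder lowers the FSR resistance and raises the output voltage.
for r in (1e6, 100e3, 10e3):
    print(f"R_FSR = {r:>9.0f} ohm -> V_out = {divider_output(r):.3f} V")
```

With this wiring, the output equals half the supply voltage when the FSR resistance matches the reference resistor, which is where the divider's voltage response is steepest for a given relative change in resistance.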

2.3. Hand Gesture Recognition Based on FMG Sensors

FSRs have often been used for hand gesture recognition in recent years, sometimes alone [19], sometimes in combination with sEMG [20] or other sensors [21]. In these studies, the sensors were mostly worn on the forearm or the wrist [14]; in some rare cases, they were attached to a glove [20]. Moreover, FSR-based hand gesture recognition studies have mainly focused on grasping [22], upper-arm activities such as pinching or rotations [21], and robotic hand [23] or prosthesis control [24]. There have also been studies comparing grasp vs. nongrasp gestures [19]. However, sign language recognition has rarely been investigated with FMG signals and has been the focus of only a few published works [18,25,26], which studied the feasibility of sign language recognition with FMG-based systems, the measurement system, and the recognition with classic classification methods. Many studies have shown the advantages of FMG over EMG signals [19,27]. For example, FMG does not require much skin preparation and is less affected by skin impedance or sweat. Furthermore, FMG is stable and robust to external electrical noise, and it does not require as much signal processing and feature extraction as EMG [19]. Together, these factors make the implementation of FMG in wearable devices more favorable in terms of cost and equipment. The earliest research discussing FMG features dates from 2017 [28], while most research has used raw FMG signals for gesture recognition. The FMG features discussed so far are primarily used in grasp detection, robot hand control, and gait analysis [28,29,30,31,32]. Many researchers have achieved hand gesture recognition based on various machine learning methods. 
In addition, the term hand gesture covers a massive number of gestures with different levels of force and acceleration, ranging from sign language alphabets, which generally consist of postures and some slight motions, to grasping and upper-arm activities, which involve interaction with objects and a high level of muscle contraction force. For the myographic measurement techniques considered in this work, a higher force level ensures a stronger representation of the gesture in the signal. Most hand gesture recognition studies in the literature have focused on grasping and upper-arm activities. In contrast, sign language recognition is still an application where more investigation of features and classification methods is needed. Hence, the experimental part of this work focuses on sign language recognition and, more specifically, American Sign Language (ASL) recognition. An overview of publications on ASL recognition based on FMG as a standalone system or on combined FMG and sEMG is given in Table 2.

Table 2.

State of the art for FMG-sensor-based ASL recognition.

In the state-of-the-art FMG-based hand gesture recognition studies, the number of sensors is relatively high for portable and user-friendly systems. Moreover, the use of raw FMG signals in most of these studies limits the exploitation of the signals and the performance of the machine learning methods. In addition, FMG applications mainly focus on grasping and robotic hand or prosthesis control, where a significant muscle contraction force is involved, and have rarely been investigated for sign language recognition. As Table 2 shows, only one publication presented sign language recognition by FMG as a standalone system based on commercial sensors [19]; however, that study used raw FMG only. In our previous studies [18,25,35], the feasibility of sign language recognition with a number of FMG sensors reduced to as few as four, and various features extracted from an FMG-based bracelet, were investigated with classic classification methods. In [25], it was shown that, for a gesture set with a low ambiguity level, the ELM could recognize the signs with an accuracy of 89.65% based on six standard features extracted from signals collected by four commercial sensors. In [35], the ELM accuracy for sign recognition, based on the same features as in [25] extracted from FMG signals collected by six sensors, was 91.11%. In this work, an optimized classifier is proposed, and the minimal number of sensors it needs to recognize various sets of signs with different levels of ambiguity is investigated.

3. Proposed k-Tournament Grasshopper Extreme Learner

The ELM has been reported in the literature to outperform other algorithms in terms of accuracy, speed, and model size, which makes it well suited to embedded systems. However, the random initialization of the weights remains a source of uncertainty regarding the optimal result this algorithm can reach. Researchers have proposed many ELM optimizations with different approaches to reduce this effect. However, the optimization methods used were relatively old algorithms in the field, whereas newer optimization methods with good performance in various applications have since been proposed and could give better results. Moreover, none of the proposed methods investigated selecting among the randomly generated weights to optimize the ELM architecture without controlling its randomization process.

3.1. ELM Weights Selection

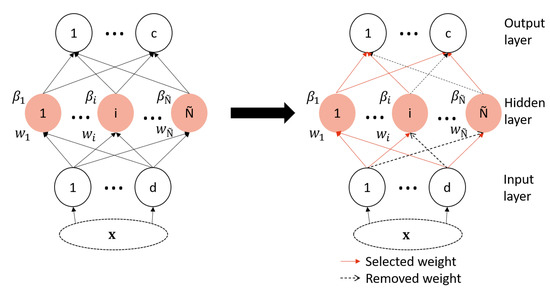

Weight selection, also called connection pruning, is one type of neural network pruning, in which the number of connections in the network is reduced. Another type is node pruning, in which the number of hidden nodes is reduced by keeping only the most significant ones [36]. For ELM pruning, researchers have proposed several node pruning methods [8,37,38,39,40,41,42], but the weight pruning problem has not yet been studied. To fill this gap in ELM architecture optimization strategies, a weight selection for the ELM is proposed in this paper, as shown in Figure 1.

Figure 1.

Proposed ELM architecture optimization strategy.

The selection among the initially generated weights proposed in this work aims to keep only the best subset of weights, which follows the same idea as feature selection methods. In feature selection, the goal is generally to define the best subset of features to improve the performance of the classification stage, because the quality of the classification varies with the number of features. Controlling this number is important: when it is too small, the classes may overlap, so the features are not sufficient for classification; when it is too large, the dimension of the problem increases and more complex classification algorithms are needed. Similarly, the number of weights in the ELM affects overfitting, model size, and complexity. This is the inspiration for using a feature selection approach as a strategy for ELM weight selection.
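To make the analogy concrete, a candidate weight subset can be encoded exactly like a feature subset: as a binary vector with one bit per input weight. The sketch below assumes a row-major layout (one row of the input weight matrix per hidden node) and hypothetical helper names; deselected weights are set to zero.

```python
def apply_weight_mask(W, bits):
    """Zero every input weight whose bit is 0.

    W    -- input weights, one row per hidden node (n_hidden x n_features)
    bits -- flat 0/1 list of length n_hidden * n_features, row-major (assumed layout)
    """
    n_hidden, n_features = len(W), len(W[0])
    if len(bits) != n_hidden * n_features:
        raise ValueError("mask length must equal the number of input weights")
    return [[W[j][i] if bits[j * n_features + i] else 0.0
             for i in range(n_features)] for j in range(n_hidden)]


def connection_count(W):
    """Number of input-to-hidden connections that survive pruning."""
    return sum(1 for row in W for w in row if w != 0.0)


W = [[0.5, -0.2, 0.3],
     [0.1, 0.7, -0.4]]
bits = [1, 0, 1,
        0, 1, 0]
W_pruned = apply_weight_mask(W, bits)
print(W_pruned, connection_count(W_pruned))
```

Under this encoding, the search space grows with the network: an ELM with 3000 hidden nodes and 48 input features would need 144,000 bits per candidate, so an efficient search strategy matters.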

3.2. k-Tournament Grasshopper Extreme Learning Machine for Selection Problems

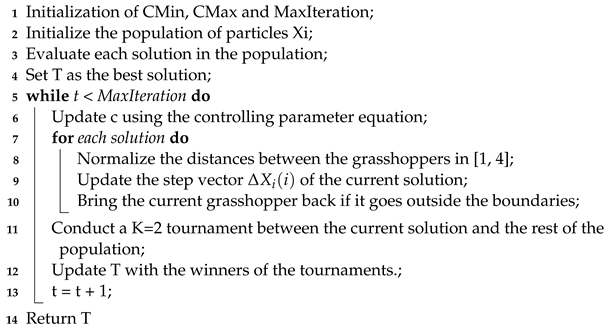

First, a k-tournament process is incorporated into the grasshopper repositioning step to control the evaluation of the best population, as shown in the pseudocode in Algorithm 2.

Algorithm 2: k-Tournament grasshopper optimization algorithm.

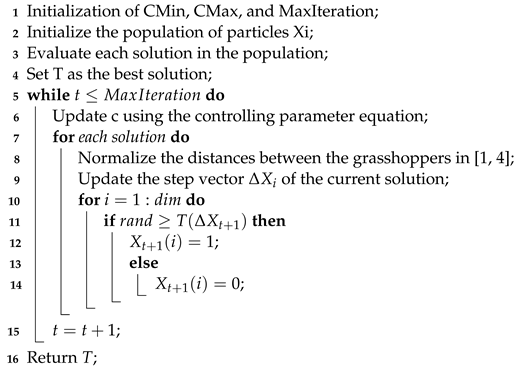
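The exact integration is given in Algorithm 2. What follows is only a generic k-tournament operator of the kind the name refers to: k agents are drawn at random, and the one with the best (here, lowest) fitness wins. How the winner enters the grasshopper repositioning, for example as the attraction target in place of the single global best, is an assumption for illustration. `k_tournament` is a hypothetical name.

```python
import random


def k_tournament(fitness, k, rng=random):
    """Return the index of the winner of a k-tournament (minimisation).

    fitness -- fitness value of every search agent (lower is better)
    k       -- tournament size, 1 <= k <= number of agents
    """
    if not 1 <= k <= len(fitness):
        raise ValueError("k must lie between 1 and the population size")
    contestants = rng.sample(range(len(fitness)), k)
    return min(contestants, key=lambda i: fitness[i])


fitness = [0.31, 0.12, 0.27, 0.45, 0.19]
rng = random.Random(42)
# Small k keeps selection pressure low (more diversity);
# k = len(fitness) always returns the current global best.
print(k_tournament(fitness, k=2, rng=rng), k_tournament(fitness, k=5, rng=rng))
```

The tournament size k is the knob that trades exploitation against exploration. Larger tournaments behave increasingly like always following the best agent.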

Furthermore, to adapt this algorithm to selection problems, the S-shaped transfer function is applied to the velocity of the search agents, as in the binary grasshopper optimization algorithm (BGOA) proposed in [43] and presented in Algorithm 3. The resulting algorithm is then combined with the extreme learning machine of Algorithm 1, which serves as the wrapper's evaluation classifier.
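In the binary variant, each continuous step component is mapped to a probability by an S-shaped transfer function and then turned into a bit. The sketch below uses the sigmoid form commonly employed for this purpose, $T(\Delta x) = 1 / (1 + e^{-\Delta x})$, and sets a bit to 1 when a uniform random number falls below $T$. It is an illustration under that assumption and does not claim to reproduce every detail of the BGOA update in [43].

```python
import math
import random


def s_shaped(x):
    """S-shaped (sigmoid) transfer function, written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def binarize_step(step, rng=random):
    """Map each component of a continuous step vector to a 0/1 decision."""
    return [1 if rng.random() < s_shaped(s) else 0 for s in step]


rng = random.Random(0)
step = [4.0, -4.0, 0.0, 0.5]
print([round(s_shaped(s), 3) for s in step], binarize_step(step, rng))
```

Large positive steps almost surely select an element, large negative steps almost surely discard it, and a zero step leaves the decision to chance.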

The ELM was chosen as the evaluation method for the selected subsets in this wrapper because it outperformed other classifiers customarily used to build wrappers, such as KNN and SVM, in terms of accuracy, speed, and computational complexity [44,45,46,47,48]. Moreover, searching for the best feature subset is a challenging problem, especially in wrapper-based methods, because each candidate subset must be evaluated by the learning algorithm (e.g., a classifier) at every optimization step. Hence, each evaluation must be inexpensive, which is ensured by the ELM's ability to solve multiclass problems in a single training iteration [49].
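A wrapper needs a fitness value for every candidate subset. A form widely used with binary metaheuristics weighs the classifier error against the fraction of selected elements. The weighting $\alpha = 0.99$, the empty-subset penalty, and the `error_fn` callback (which would train and validate an ELM on the subset) are illustrative assumptions rather than the fitness used in this work.

```python
import math


def wrapper_fitness(mask, error_fn, alpha=0.99):
    """Fitness of a binary subset (lower is better).

    mask     -- 0/1 list, one bit per candidate feature or weight
    error_fn -- callback returning the validation error of a classifier
                trained on the selected subset (hypothetical; e.g. an ELM)
    alpha    -- trade-off between error and subset size (assumed value)
    """
    n_selected = sum(mask)
    if n_selected == 0:
        return math.inf  # an empty subset cannot be classified
    error = error_fn(mask)
    return alpha * error + (1.0 - alpha) * n_selected / len(mask)


# 24 of 48 features kept, with a stand-in validation error of 10 %.
mask = [1, 0] * 24
print(wrapper_fitness(mask, error_fn=lambda m: 0.10))
```

Because `error_fn` is called once per agent per iteration, its cost dominates the search. A classifier that trains in a single pass keeps that cost manageable.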

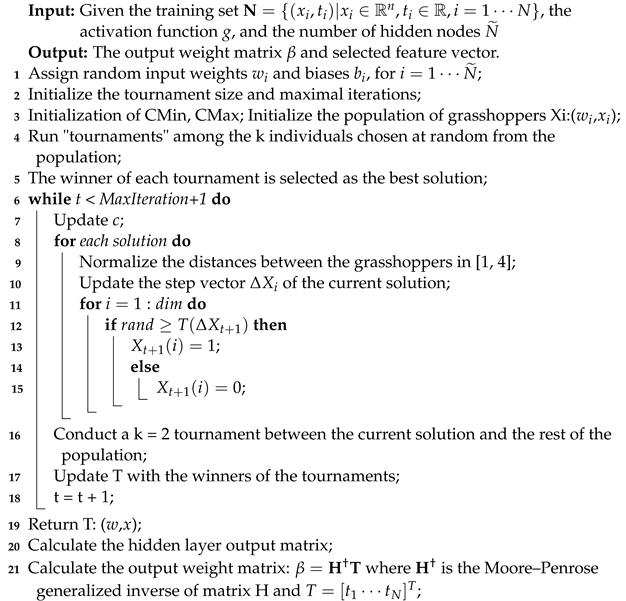

Algorithm 3: Binary grasshopper optimization algorithm (BGOA) [43].

3.3. k-Tournament Grasshopper Extreme Learner

The final proposed KTGEL is shown in Algorithm 4. It optimizes the extreme learning machine by selecting the most significant weights among those randomly generated during initialization, with the weight selection integrated into the ELM training process. Because the KTGEL inherits the ELM training procedure, including the coupling between the input data and the input weights, it also performs feature selection within its training phase. Each weight is coupled to one feature, but each feature is coupled to many weights, so a feature is eliminated only if all of its related weights are eliminated. Hence, the proposed k-tournament grasshopper extreme learner is expected to provide better classification accuracy than the original ELM classifier on different biosignal databases for hand gesture recognition, with a smaller model size, since nonselected weights are set to zero and no further computation is devoted to them.
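The coupling rule above, under which a feature disappears only when every weight attached to it has been pruned, and the saving from zeroed weights can be sketched as follows. The helper names and the sparse (index, weight) storage are illustrative assumptions rather than the KTGEL implementation.

```python
def eliminated_features(W):
    """Indices of input features whose weights are zero for every hidden node."""
    n_features = len(W[0])
    return [i for i in range(n_features) if all(row[i] == 0.0 for row in W)]


def to_sparse(W):
    """Keep only surviving connections as (feature index, weight) pairs per hidden node."""
    return [[(i, w) for i, w in enumerate(row) if w != 0.0] for row in W]


def sparse_preactivation(x, W_sparse, b):
    """w_j . x + b_j using only surviving weights: one multiplication per connection."""
    return [sum(w * x[i] for i, w in row) + b_j for row, b_j in zip(W_sparse, b)]


W_pruned = [[0.5, 0.0, 0.0, 0.3],
            [0.0, 0.0, 0.2, 0.0],
            [0.4, 0.0, 0.0, 0.0]]
print(eliminated_features(W_pruned))  # feature 1 has no remaining weights
print(sparse_preactivation([1.0, 2.0, 3.0, 4.0], to_sparse(W_pruned), [0.0, 0.1, 0.0]))
```

An eliminated feature no longer needs to be computed at all. This is how weight selection yields feature selection without a separate wrapper stage.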

Algorithm 4: Pseudocode of the proposed k-tournament grasshopper extreme learner.

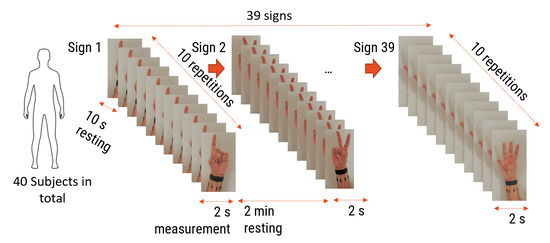

4. Experimental Investigations

In this section, three aspects of performance were investigated. The first was the accuracy compared with other state-of-the-art works, reproducing the same set of gestures performed by the same number of subjects under conditions as similar as possible. The second was the influence of the number of sensors, in relation to the number of subjects, on the classification accuracy. The third was the influence of the ambiguity level of the gesture set, compared with an ELM preceded by a feature selection step. The ELM and the KTGEL were initialized with 3000 hidden nodes and compared on data collected from eight FSR sensors, with a total of 48 initial features, in terms of accuracy, the final network architecture after weight selection by the KTGEL, the average sensitivity, and the average precision. To investigate the effect of the ambiguity level between gestures on their classification with the eight-sensor FMG band, 40 participants of both genders, aged between 20 and 32 years, took part in collecting the 27 letters, the ASL numbers from 0 to 10, and the expression “I love you.” Each subject collected only nine or ten signs, with ten repetitions each. In total, the collected data included 39 ASL signs with 100 observations each. From this database, two sets of gestures were used in this paper to investigate the influence of the ambiguity level on the KTGEL performance. In addition to accuracy, the micro precision $P_{\mathrm{micro}} = \sum_j TP_j / \sum_j (TP_j + FP_j)$ and the micro recall $R_{\mathrm{micro}} = \sum_j TP_j / \sum_j (TP_j + FN_j)$ are conventionally used for multiclass assessment to show the overall precision and sensitivity of a classifier [50]. Here, $TP_j$, $FP_j$, and $FN_j$ are, respectively, the numbers of true positives, false positives, and false negatives of class $j$.
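Micro-averaged precision and recall pool the per-class counts of true positives, false positives, and false negatives over all classes before dividing. The computation from true and predicted labels can be sketched as follows; the function name is hypothetical.

```python
def micro_precision_recall(y_true, y_pred):
    """Micro-averaged precision and recall over all classes present in the labels."""
    classes = set(y_true) | set(y_pred)
    tp = fp = fn = 0
    for c in classes:
        tp += sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp += sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn += sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


y_true = ["A", "A", "B", "B", "C"]
y_pred = ["A", "B", "B", "B", "C"]
print(micro_precision_recall(y_true, y_pred))
```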

4.1. Comparison with the State of the Art of FMG-Based Gesture Recognition

Table 3 compares this work with two state-of-the-art studies, keeping the number of sensors, observations, and subjects the same, in order to compare the algorithms' performance. In [33], the FMG signals were collected with eight self-produced colocated sEMG-FMG sensors placed on the subjects' forearm, whereas in this work, eight commercial FSR sensors were integrated into a wristband. In [34], carbon-nanotube-based FMG sensors were customized to be more sensitive to signs than commercial FSR sensors.

Table 3.

Performance of the proposed classifiers vs. the state of the art for FMG-based recognition of ASL numbers.

For FMG, the comparison with [33] showed that the proposed wrist-worn FMG bracelet with commercial sensors could provide better accuracy for ASL number classification. Moreover, the comparison with [34] was made on exactly the same gestures and showed that the KTGEL outperformed the ELM in terms of accuracy, even when implemented on data collected with commercial sensors, whereas the data in [34] were collected with optimized sensors that had been shown to outperform commercial FSR sensors when the same signal processing was applied to data from both.

4.2. Investigation of the Sensors and Subject Number Influence

Force myography is rarely used for sign language recognition, and when it is, only with raw signals, so it has not been sufficiently investigated in the literature. Tests were therefore necessary, and both the number of sensors and suitable features had to be examined in this part. The idea is to find the optimal number of sensors for a wrist sensor band, since a previous study [19] confirmed that a wrist-positioned band was more sensitive to ASL than forearm bands. To minimize the number of sensors and thereby ensure user comfort, two bands with six and eight commercial pressure sensors were designed, built, and tested to find the band that led to the best ASL gesture recognition accuracy. In the first band, eight sensors were placed around the wrist with a gap of 2 cm, while the second band had six sensors with a 2.25 cm gap between sensors. In both bands, Teensy boards with synchronized ADCs were used as acquisition boards with a sampling frequency of 100 Hz. Both bands were used to collect data during the performance of ASL signs according to the measurement protocol in Figure 2.

Figure 2.

FMG signal collection protocol.

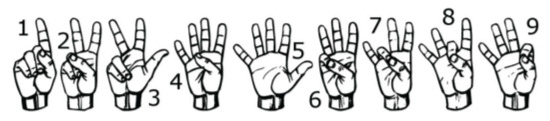

The first investigation aimed to test the feasibility of finger sign detection with the wrist FSR bands, which contain fewer sensors than in the state of the art, where previous sign language studies used 16 commercial sensors [19] or 8 customized sensors [33,34]. Hence, only one person was asked to wear each of the two bands in turn and to perform the nine ASL numbers from one to nine shown in Figure 3, with twenty trials each.

Figure 3.

Performed ASL numbers from 1 to 9.

The gestures were performed with a two-minute rest between consecutive gestures to avoid muscle fatigue. The collected signals appeared stationary for the different gestures, so it was assumed that, although features improve algorithm performance compared with raw data, complicated features were not needed. Hence, six basic features (min, max, RMS, variance, standard deviation, and mean) were extracted and normalized with the min–max method, and the KTGEL was used to classify the gestures. The classification accuracy served here as the criterion for deciding the number of sensors needed for further data collection.

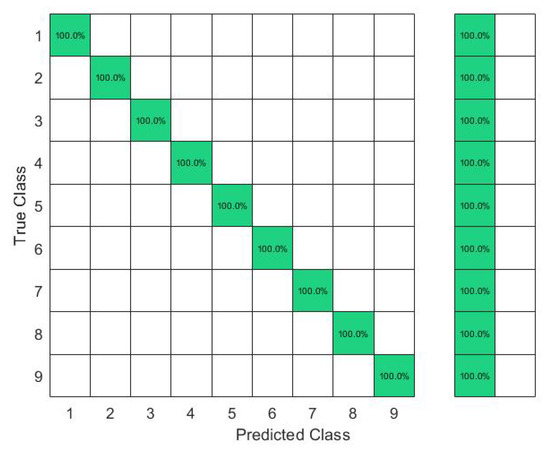

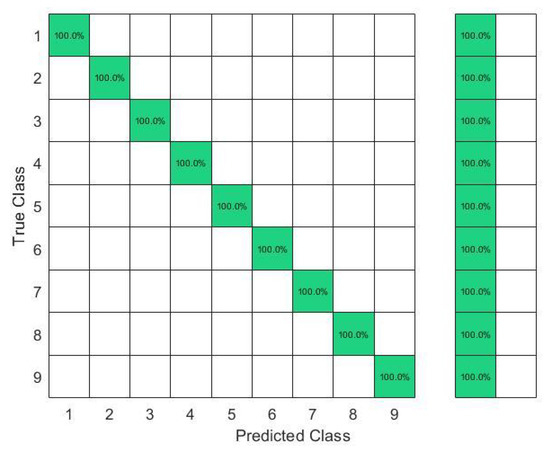

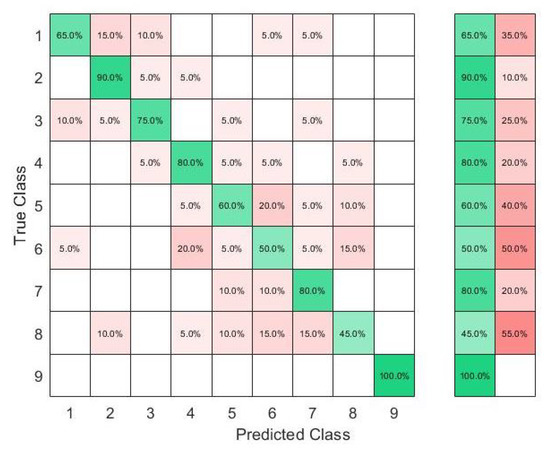

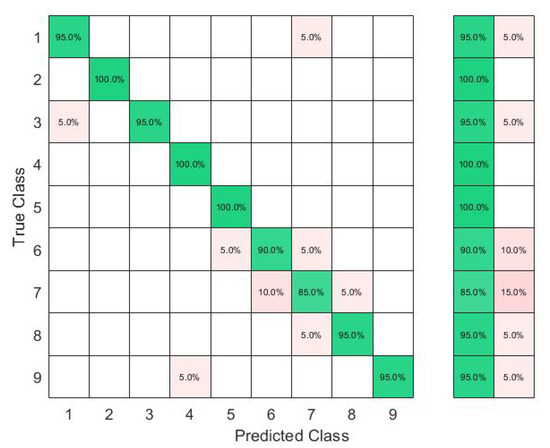

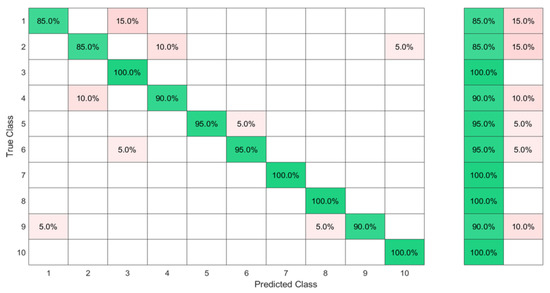
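The six basic features and the min–max scaling can be sketched as below. Treating one observation as a window of samples per sensor channel, using the population variance, and scaling each feature column to [0, 1] are assumptions for illustration. With eight channels, this layout yields the 48 initial features used in the experiments.

```python
import math
import statistics

FEATURE_NAMES = ["min", "max", "rms", "var", "std", "mean"]


def window_features(signal):
    """Six basic features of one sensor channel over one observation window."""
    mean = statistics.fmean(signal)
    var = statistics.pvariance(signal, mu=mean)  # population variance (assumption)
    rms = math.sqrt(sum(v * v for v in signal) / len(signal))
    return [min(signal), max(signal), rms, var, math.sqrt(var), mean]


def observation_features(channels):
    """Concatenate the six features of every channel (8 channels -> 48 features)."""
    return [f for ch in channels for f in window_features(ch)]


def min_max_normalize(rows):
    """Scale each feature column to [0, 1]; constant columns map to 0."""
    cols = list(zip(*rows))
    lo, hi = [min(c) for c in cols], [max(c) for c in cols]
    return [[(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(row, lo, hi)]
            for row in rows]


print(dict(zip(FEATURE_NAMES, window_features([1.0, 2.0, 3.0, 4.0]))))
```

In a deployed pipeline, the per-column minima and maxima would typically be computed on the training data only and reused for test data, so that no test information leaks into the scaling.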

In this set of gestures, the ambiguity level between signs can be described as low. No dynamic gestures were included, and, among all possible pairs of the nine gestures, similarity in how they are performed was limited to the numbers six, seven, eight, and nine. The data collected from one person amounted to 180 observations. In this investigation, 80% of the observations were used to train and validate the KTGEL with fivefold cross-validation, while a random 20% of each gesture's data was reserved for testing only. The confusion matrices in Figures 4 and 5 confirm that, for a single subject performing the gestures, both bracelets allowed the classifier to predict the nine tested gestures correctly. This investigation thus showed that six sensors were sufficient to recognize gestures with a low ambiguity level collected from only one subject.
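The evaluation protocol (a random 20% of each gesture held out for testing, and fivefold cross-validation on the remaining 80%) can be sketched with the standard library. The seeds, the rounding of per-class test counts, and the helper names are assumptions. With nine gestures of twenty trials each, the sketch holds out four trials per gesture.

```python
import random
from collections import defaultdict


def stratified_holdout(labels, test_fraction=0.2, seed=0):
    """Split indices so each class contributes test_fraction of its samples to the test set."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    train, test = [], []
    for y in sorted(by_class):
        idxs = by_class[y][:]
        rng.shuffle(idxs)
        n_test = round(test_fraction * len(idxs))
        test.extend(idxs[:n_test])
        train.extend(idxs[n_test:])
    return train, test


def k_folds(indices, k=5, seed=0):
    """Shuffle the training indices and deal them into k folds for cross-validation."""
    rng = random.Random(seed)
    idxs = indices[:]
    rng.shuffle(idxs)
    return [idxs[f::k] for f in range(k)]


labels = [g for g in range(1, 10) for _ in range(20)]  # nine gestures x twenty trials
train, test = stratified_holdout(labels)
folds = k_folds(train)
print(len(train), len(test), [len(f) for f in folds])
```

Each fold serves once as the validation set while the other four train the model. The held-out test indices are used only for the final evaluation.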

Figure 4.

Confusion matrix of the KTGEL for one person for ASL numbers from 1 to 9: 6 sensors.

Figure 5.

Confusion matrix of the KTGEL for one person for ASL numbers from 1 to 9: 8 sensors.

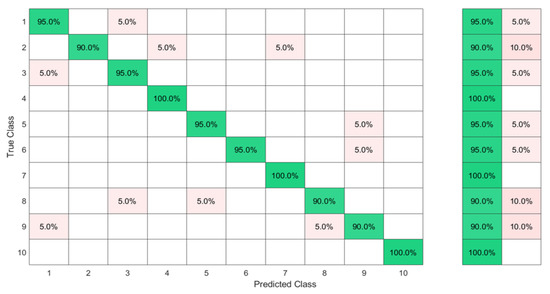

The second investigation evaluated the system's stability and accuracy for the same gesture recognition task while increasing the number of subjects to 10. In this investigation, however, each person was asked to perform each gesture only ten times. In total, 1000 observations were used in this implementation of the KTGEL, with 80% of the observations used to train and validate the model with fivefold cross-validation, while a random 20% of each gesture's observations were reserved for testing only.

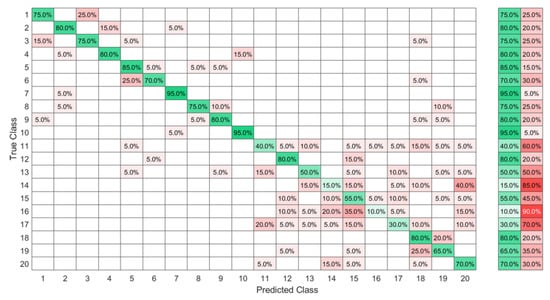

The results in Figure 6 show that, with six sensors, the KTGEL reached a test accuracy of 71% on the nine gestures. Figure 7 shows that, with the eight-sensor band, the American Sign Language numbers were recognized with an accuracy of 95%. These results confirm that six sensors were not sufficient for FMG-based gesture recognition across several subjects. The physiological differences between subjects introduce additional signal complexity, which six sensors alone could not compensate for. Figure 6 also shows that the confusions between gestures could not be fully explained by the nature of the gestures, which suggested that the collected data were not sufficient to differentiate them. In Figure 7, by contrast, the confusions were limited, and the most relevant ones occurred between gestures six, seven, and eight. Hence, this investigation showed that eight FSR sensors were the minimal number needed for acceptable gesture recognition accuracy on data collected from 10 subjects. In addition, to assess user comfort with this number of sensors, subjects were asked to evaluate the band. None of the subjects complained about the sensor placement, but they reported that the material of the current band was not soft enough. Hence, the eight-sensor band was kept for further data collection as a possible standalone system for future sign recognition investigations with more features. A modification of the bracelet material will be considered in future system design.
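Both the test accuracies and the most relevant confusions discussed above can be read from a confusion matrix. The sketch below assumes that rows are true classes and columns are predicted classes. The function names are hypothetical, and the toy matrix in the usage note is invented for illustration, not taken from Figure 6 or Figure 7.

```python
import numpy as np

def accuracy_from_confusion(cm):
    """Overall accuracy: correctly classified (diagonal) over all observations."""
    cm = np.asarray(cm, dtype=float)
    return np.trace(cm) / cm.sum()

def top_confusions(cm, class_names, n=3):
    """Return the n largest pairwise confusions as (name_a, name_b, count).

    Errors a->b and b->a are summed, since the discussion concerns
    confusion between gesture pairs rather than its direction.
    """
    cm = np.asarray(cm)
    sym = cm + cm.T
    pairs = []
    k = len(class_names)
    for i in range(k):
        for j in range(i + 1, k):  # upper triangle only: each pair once
            if sym[i, j] > 0:
                pairs.append((class_names[i], class_names[j], int(sym[i, j])))
    pairs.sort(key=lambda p: p[2], reverse=True)
    return pairs[:n]
```

Consider the matrix `[[8, 2, 0], [1, 9, 0], [0, 0, 10]]` with classes six, seven, and eight. Its accuracy is 27/30 = 0.9, and the only confused pair is six and seven, with three errors.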

Figure 6.

Confusion matrix of the KTGEL for ten persons and nine numbers: 6 sensors.

Figure 7.

Confusion matrix of the KTGEL for ten persons and nine numbers: 8 sensors.

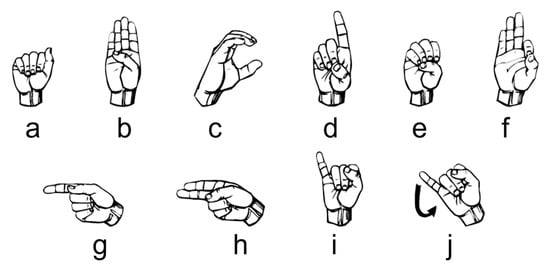

4.3. Recognition of ASL Signs with a Middle Ambiguity Level

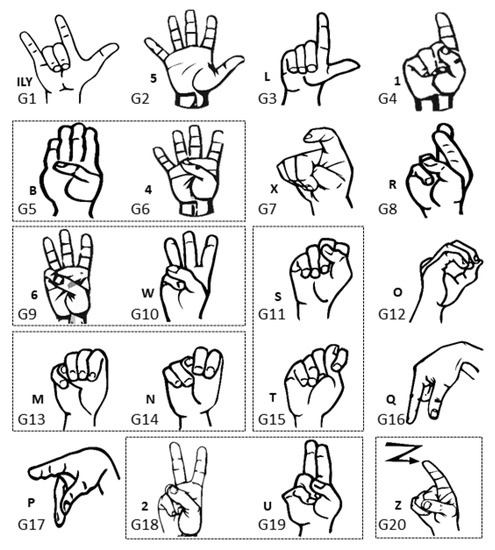

To investigate the influence of ambiguity on the KTGEL performance for American Sign Language recognition, the first ten letters of the alphabet, A to J, were collected from 10 healthy subjects. During data collection, subjects followed an instructional ASL video. All gestures were collected as static postures except for the letter J. J is a dynamic gesture that includes a rotation of the wrist, as indicated by the arrow in Figure 8. This set of gestures was considered to have a middle ambiguity level for two reasons. It included a dynamic gesture, and it contained postural similarities among the signs A, C, and E and between the signs G and H.

Figure 8.

Ten ASL letters, A–J.

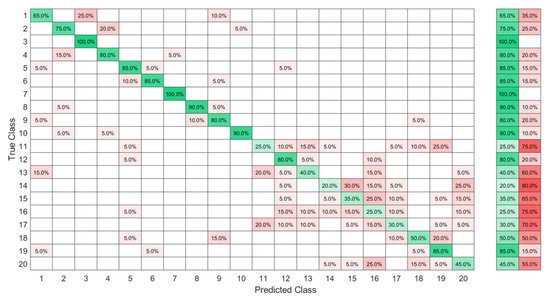

The ELM was implemented with 3000 hidden nodes after feature selection by the KTGELM and trained with only 13 of the original 48 features. It reached both a microaveraged precision and a microaveraged sensitivity of 97%, as detailed in the comparison in Table 4. The KTGEL was initialized with 3000 hidden nodes and given all 48 features as inputs, and it produced a trained model with only 1000 hidden nodes. It reached the same total sensitivity as the ELM preceded by a separate feature selection stage, with a total precision only 1% lower.
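In a standard ELM, the input weights and biases are drawn randomly and never trained. Only the output weights are computed in closed form as β = H⁺T, where H is the hidden-layer output matrix and H⁺ its Moore–Penrose pseudoinverse. The minimal sketch below expresses pruning as two boolean masks, one over hidden nodes and one over input features. This lets, for example, a 3000-node model with 1000 kept nodes and all 48 features be evaluated. Several choices here are simplifying assumptions, not the authors' implementation: representing the KTGEL's weight selection as node and feature masks, the sigmoid activation, and the uniform initialization range. In the KTGEL the masks would be chosen by the KTGOA, which is not reproduced here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_elm(n_inputs, n_hidden, seed=0):
    """Draw random input weights W and biases b; an ELM never trains these."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, size=(n_inputs, n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    return W, b

def hidden_output(X, W, b, hidden_mask, feature_mask):
    """Hidden-layer matrix H using only the kept input features and hidden nodes."""
    Ws = W[np.ix_(feature_mask, hidden_mask)]  # boolean masks select rows/columns
    return sigmoid(X[:, feature_mask] @ Ws + b[hidden_mask])

def fit_output_weights(X, T, W, b, hidden_mask, feature_mask):
    """Least-squares output weights beta = pinv(H) @ T (T is one-hot targets)."""
    H = hidden_output(X, W, b, hidden_mask, feature_mask)
    return np.linalg.pinv(H) @ T

def predict(X, W, b, beta, hidden_mask, feature_mask):
    """Class index with the largest ELM output for each row of X."""
    H = hidden_output(X, W, b, hidden_mask, feature_mask)
    return np.argmax(H @ beta, axis=1)
```

Pruned nodes and features are excluded before H is formed. As a result, changing their random weights leaves the fitted output weights unchanged, and the stored model only needs the kept part of `W`, `b`, and `beta`.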

Table 4.

Comparison between the ELM and KTGEL in recognition of ASL signs with a middle ambiguity level.

The confusion matrices in Figure 9 and Figure 10 show that, even though J was a dynamic gesture, it was recognized with 100% accuracy using the FSR wristband. This can be explained by the muscle deformation caused by the wrist rotation. That deformation produced a stronger signal than the other signs, for which muscle movements at the wrist level were not visible.

Figure 9.

Ten ASL letters, A–J, detected with FMG and classified by the ELM after feature selection by the KTGELM.

Figure 10.

Ten ASL letters, A–J, detected with FMG and classified by the KTGEL.

4.4. Recognition of ASL Signs with a High Ambiguity Level

The data set used in this part included the 20 ASL signs shown in Figure 11. Ten of these signs form strongly similar pairs: “B” and “4”, “M” and “N”, “U” and “2”, “6” and “W”, and “S” and “T”. The set also includes the dynamic sign “Z”. The expected accuracy for this data set could therefore be as low as 50%.

Figure 11.

Data set of 20 ASL signs with expected high ambiguity, namely between “B” and “4”, “M” and “N”, “U” and “2”, “6” and “W”, “S” and “T”, and a dynamic sign “Z”.

The same sign set was collected from 20 new subjects wearing the eight-sensor band, and 100 FMG observations of each sign were collected. The data collected by the FMG sensors at the wrist level carried information not only about muscle contraction but also about the state of the tendons. As the sensors were distributed around the wrist, the FMG band could cover all the superficial muscles. Hence, more confusion between signs was observed. Two effects contributed: force transmission through the muscle fibers during contraction, and the influence of the deep muscles on the superficial ones. Consequently, different signs could produce the same FMG response at one or more sensors when they shared an initial hand shape or used the same fingers. For the ELM after KTGELM feature selection, shown in Figure 12, the signs “T” and “S”, labeled as classes 15 and 11, were a source of confusion for the other signs. Most of their own observations were misclassified, and many observations of other classes were also predicted as “T” or “S”. On the FMG data set, the ELM after feature selection by the KTGELM achieved a microaveraged precision of 78% and a microaveraged sensitivity of 80% over the 20 signs.
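The diagnosis for “T” and “S” combines two views of the confusion matrix. Low per-class sensitivity means most of a class's own observations land elsewhere. A large count of observations from other classes predicted as that class means low per-class precision. The minimal sketch below again assumes that rows are true classes and columns are predicted classes, and the function names are hypothetical. It computes only these per-class quantities and does not attempt to reproduce the averaging behind the reported figures.

```python
import numpy as np

def per_class_metrics(cm):
    """Per-class precision and sensitivity from a confusion matrix.

    Rows are true classes, columns are predicted classes (assumed).
    Classes never predicted (or never present) get 0 instead of NaN.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    actual = cm.sum(axis=1)     # row sums: how often each class truly occurred
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    sensitivity = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    return precision, sensitivity

def false_positive_attractors(cm, top=2):
    """Classes that absorb the most observations belonging to other classes.

    Returns the indices of the `top` such classes and the per-class
    false-positive counts (column sum minus diagonal).
    """
    cm = np.asarray(cm)
    fp = cm.sum(axis=0) - np.diag(cm)
    order = np.argsort(fp)[::-1]
    return [int(i) for i in order[:top]], fp
```

Applied to a matrix such as Figure 12, the classes returned by `false_positive_attractors` would be the ones absorbing other signs. Their entries in the sensitivity vector show how many of their own observations were lost.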

Figure 12.

High-ambiguity data set classified by the ELM after feature selection by the KTGELM.

Using the same database, the KTGEL produced a trained model with 1000 hidden nodes. Its results on the original data, without feature selection, are presented in the confusion matrix in Figure 13. They are similar to those of the ELM with feature selection: a micro-p of 77% and a micro-r of 80%, with a hidden layer three times smaller. In terms of overall classification accuracy, the ELM after the KTGELM and the KTGEL performed the same in most cases. The KTGEL is the simpler of the two, as it had only 1000 hidden nodes and performed feature selection and classification in the same process.

Figure 13.

High-ambiguity data set classified by the KTGEL without a previous feature selection.

5. Conclusions

This work focused on recognizing American Sign Language using commercial FMG sensors. We proposed optimizing the ELM architecture with a weight-pruning method that preserves the randomness of the initial weights. Pruning reduced the size of the ELM network by removing the weights that contributed least to the classification result. We proposed using the k-tournament grasshopper optimization algorithm (KTGOA) as the core of the ELM weight-pruning process because of its fast convergence in multidimensional optimization spaces. The KTGOA was implemented to select the ELM weights. The resulting k-tournament grasshopper extreme learner (KTGEL) is a classifier with a reduced architecture, high performance, and internal feature selection. The influence of the number of FMG sensors and the number of subjects on the performance of the KTGEL was investigated first. This paper showed that a six-sensor bracelet was sufficient when data were collected from only one subject. However, as the number of subjects increased, eight sensors were the minimal number needed to recognize the ASL numbers accurately. We then compared the KTGEL with the ELM on gesture sets of different ambiguity levels, and both had similar accuracy for middle and high ambiguity levels. However, the ELM was trained with fewer features, as it was preceded by a feature selection wrapper, while the KTGEL was trained with all the features. Moreover, in both tested cases, the KTGEL reduced the initial number of hidden nodes in the trained model by two-thirds. It still achieved sensitivity and precision values similar to those of the ELM trained with selected features. The proposed KTGEL relies on the KTGOA, which optimizes the selection of the best solution. However, the KTGOA inherits the linear behavior of the exploration–exploitation balancing coefficient from the original GOA. As in the GOA, this linearity could trap the search in a local optimum when coupled features and weights are selected during weight pruning in the KTGEL. Hence, future work will investigate a nonlinear exploration–exploitation coefficient for the weight selection process.
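The two GOA ingredients mentioned above can be sketched briefly. In the original GOA by Saremi et al., the coefficient c balances exploration and exploitation. It decreases linearly with the iteration l as c = c_max − l(c_max − c_min)/L, where L is the maximum number of iterations. The defaults c_max = 1 and c_min = 0.00001 below are those commonly cited for the original algorithm and should be treated as assumptions. The tournament routine is a generic k-tournament selection over candidate fitness values. How exactly the KTGOA uses it to choose the target solution is not reproduced here, so the function names and the minimization convention are illustrative.

```python
import numpy as np

def goa_coefficient(l, max_iter, c_max=1.0, c_min=1e-5):
    """Linearly decreasing exploration-exploitation coefficient of the original GOA.

    c_max and c_min are assumed defaults; l runs from 0 to max_iter.
    """
    return c_max - l * (c_max - c_min) / max_iter

def k_tournament(fitness, k, rng):
    """Generic k-tournament selection.

    Draws k distinct candidates uniformly at random and returns the index
    of the fittest one, assuming lower fitness is better (e.g., error rate).
    """
    fitness = np.asarray(fitness)
    contestants = rng.choice(len(fitness), size=k, replace=False)
    return int(contestants[np.argmin(fitness[contestants])])
```

With k equal to the population size, the tournament always returns the global best, as plain best-solution selection would. Smaller k gives weaker candidates a chance to be chosen, which adds selection diversity. A nonlinear schedule, as planned for future work, would replace only `goa_coefficient` and leave the tournament step unchanged.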

Author Contributions

Conceptualization, R.B. and O.K.; methodology, R.B.; software, R.B.; validation, R.B. and O.K.; formal analysis, R.B.; investigation, R.B.; resources, O.K.; data curation, R.B.; writing—original draft preparation, R.B.; writing—review and editing, R.B.; visualization, R.B.; supervision, O.K.; project administration, O.K.; funding acquisition, O.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation)–Project-ID 416228727–SFB 1410, subproject A03.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Ethics Committee of Technische Universität Chemnitz (reference: V-331-15-GJSensor-13052019).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare no conflict of interest, and the funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).