Decentralized Policy Coordination in Mobile Sensing with Consensual Communication

Abstract

1. Introduction

1.1. Contributions

- We model the mobile sensing problem as a decentralized sequential optimization problem, where the vehicles navigate to maximize the spatial-temporal coverage of the events in the environment.

- A communication framework is proposed for cooperative navigation. In particular, the communication protocol is learned by model-free reinforcement learning methods.

- We explicitly correlate the vehicles' moving policies with the communication messages to promote coordination. The regularized algorithm can be proved to converge to equilibrium points under certain mild assumptions.

- Extensive experiments are conducted to show the effectiveness of our approach.

1.2. Organization

2. Related Work

2.1. Reinforcement Learning

2.2. Mobile Sensing

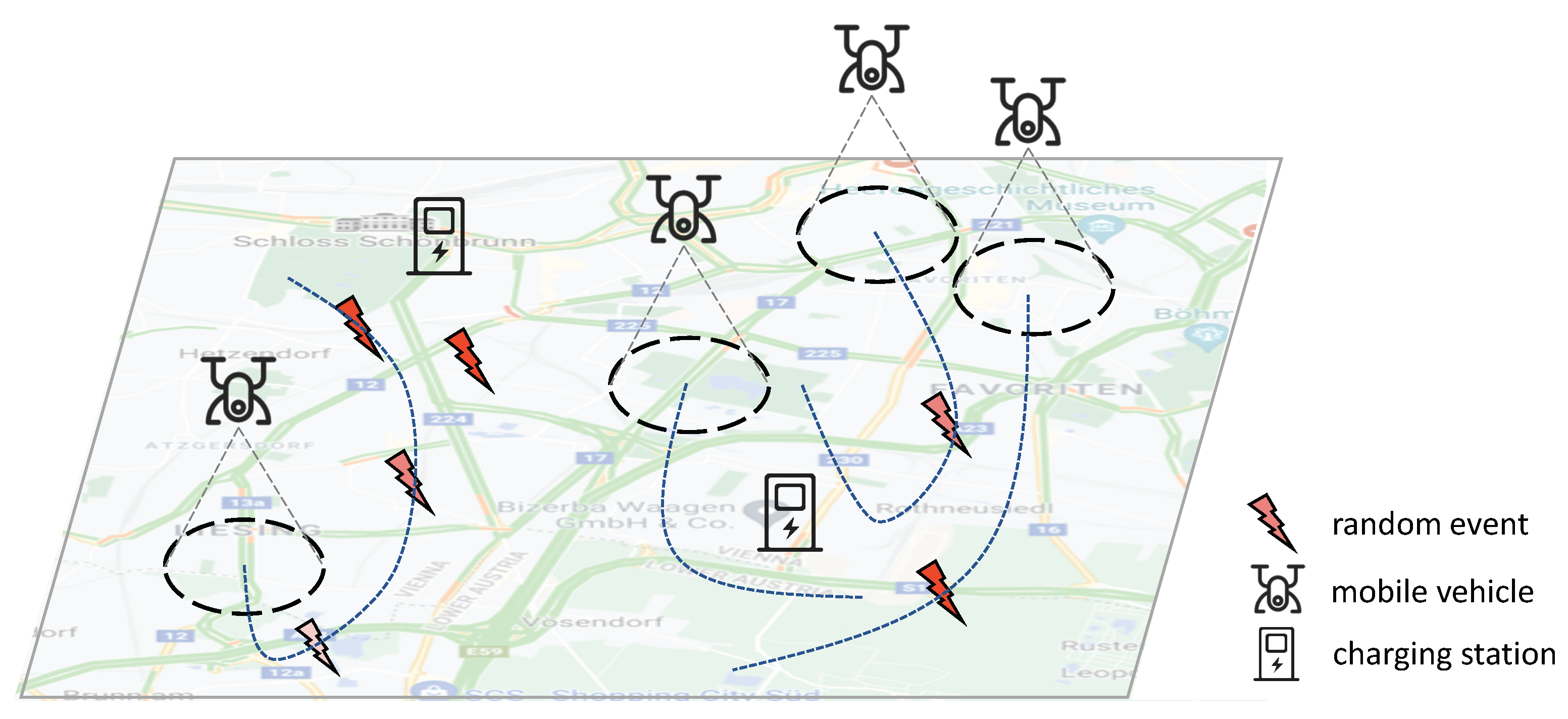

3. System Model

4. Learning to Communicate

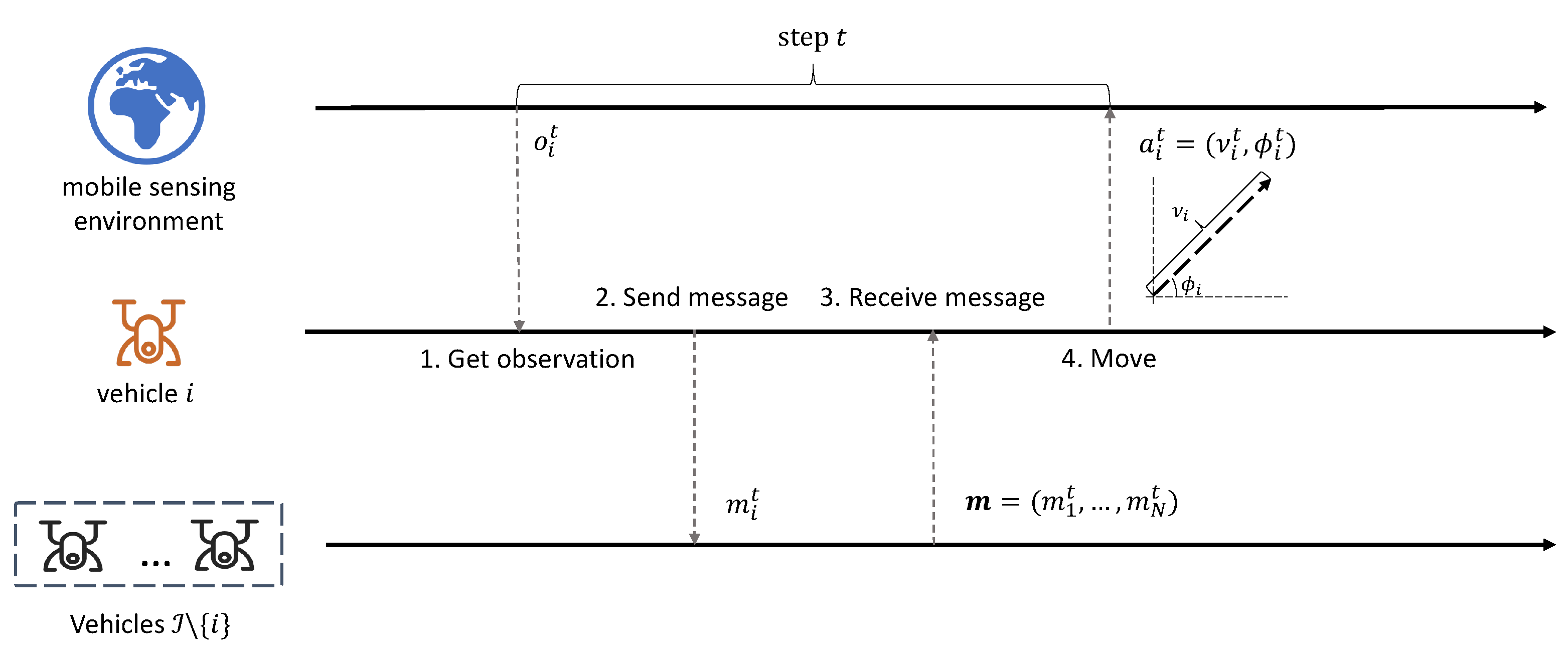

4.1. Mobile Sensing as a Markov Game

- State: In the mobile sensing problem, at each interval t, the system state includes the global information of the environment.

- Observation: Each vehicle i can only partially observe the state, so its observation is a subset of the environment state. We assume that each vehicle can observe the environment information within its sensing radius, including its own position, last moving action, remaining battery capacity, and sensed events.

- Action: The action of mobile vehicle i is a continuous tuple consisting of the moving speed and the moving angle. At each interval, every vehicle takes a moving action, and together these actions form the joint action.

- Transition: Given the joint action of the vehicles, the environment transitions to the next state according to the transition function. Note that this function is not known to the vehicles and can only be inferred through repeated interaction with the environment.

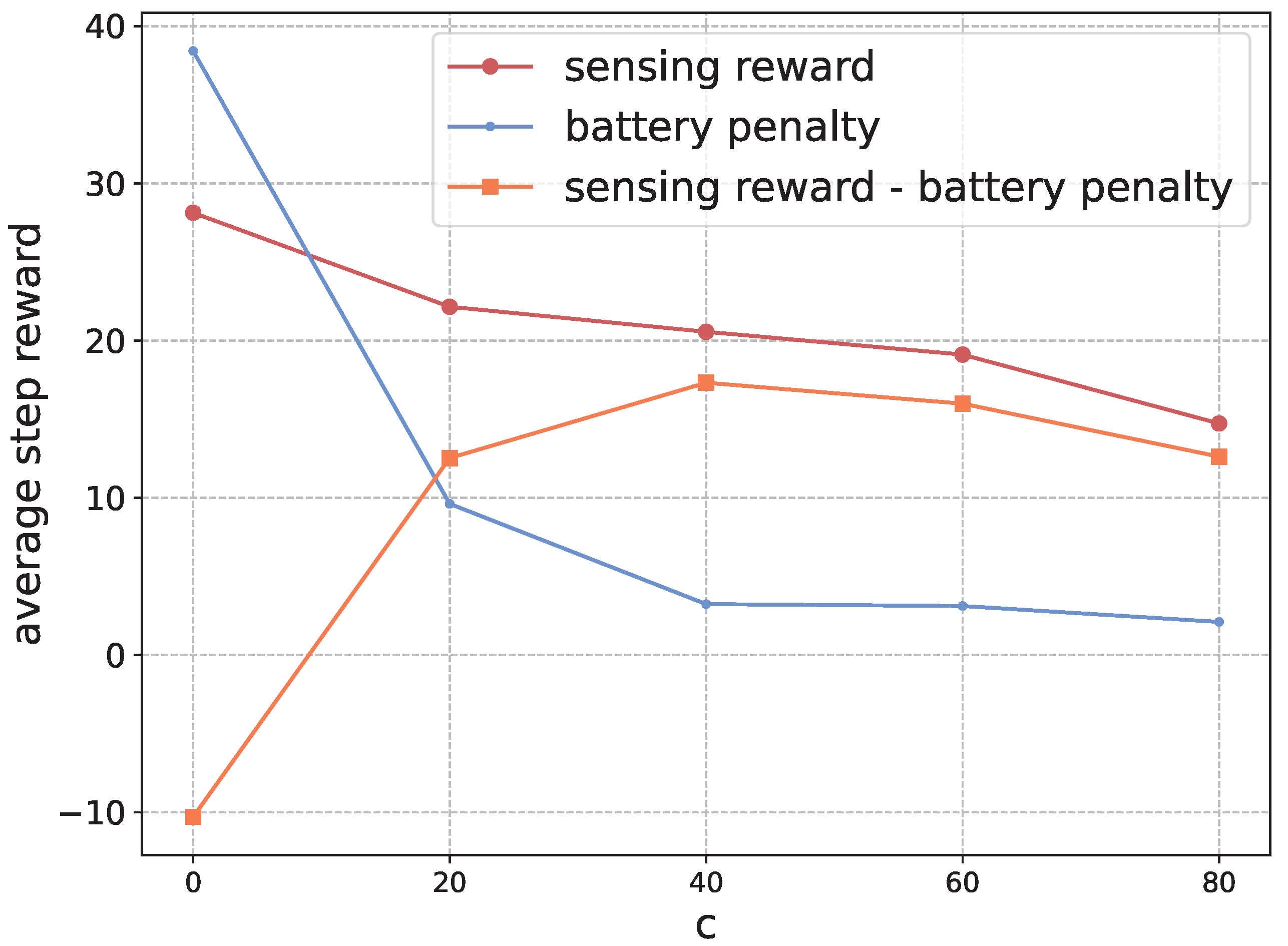

- Reward: As the mobile vehicles cooperate to maximize the spatial–temporal coverage of the environment, we define the global reward as the total intensity of the sensed events. However, it is difficult for each vehicle to infer its own contribution to the global reward. Therefore, we decompose the reward function and define an individual reward for each vehicle i, in which the reward of sensing event e is divided equally among the vehicles that cover e at this step. It follows that the individual rewards sum to the global reward. To take the battery capacity into account, we relax the constraint in Equation (4) with an additional penalty term c that applies when the vehicle runs out of battery power: the vehicle receives this penalty when its battery capacity is below zero and receives no penalty otherwise. The value of c balances the preference between the sensing reward and the penalty for battery loss. The relaxed reward combines the individual sensing reward with this battery penalty.
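The reward decomposition described in this list can be illustrated with a minimal sketch. It is not the authors' implementation. The function name `individual_rewards`, the 2D Euclidean coverage test, and the choice to subtract a non-negative penalty `penalty_c` from the sensing reward are all assumptions made for illustration.

```python
import math


def individual_rewards(positions, sensing_radii, events, battery, penalty_c):
    """Split each event's intensity equally among the vehicles covering it.

    positions:     list of (x, y) vehicle positions (assumed 2D)
    sensing_radii: list of per-vehicle sensing radii
    events:        list of (x, y, intensity) tuples
    battery:       list of remaining battery capacities
    penalty_c:     assumed non-negative penalty applied when battery < 0
    """
    sensing = [0.0] * len(positions)
    for ex, ey, intensity in events:
        # Vehicles whose sensing disk contains the event.
        covering = [
            i
            for i, (vx, vy) in enumerate(positions)
            if math.hypot(vx - ex, vy - ey) <= sensing_radii[i]
        ]
        # An uncovered event contributes nothing to any vehicle.
        for i in covering:
            sensing[i] += intensity / len(covering)
    # Relaxed reward: subtract the penalty only when the battery is below zero.
    # The sign convention is an assumption of this sketch.
    relaxed = [r - penalty_c if b < 0 else r for r, b in zip(sensing, battery)]
    return sensing, relaxed


positions = [(0.0, 0.0), (1.0, 0.0), (10.0, 10.0)]
radii = [2.0, 2.0, 2.0]
events = [(0.5, 0.0, 4.0), (10.0, 10.5, 3.0), (50.0, 50.0, 9.0)]
battery = [5.0, -1.0, 2.0]

sensing, relaxed = individual_rewards(positions, radii, events, battery, penalty_c=1.0)
print(sensing)  # per-vehicle sensing rewards
print(relaxed)  # rewards after the battery penalty
```

In this example the first event is shared by two vehicles, the second is sensed by one vehicle, and the third is sensed by none, so the per-vehicle sensing rewards add up to the total intensity of the sensed events.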

4.2. The Communication Framework

4.3. Policy Optimization

5. Consensual Communication

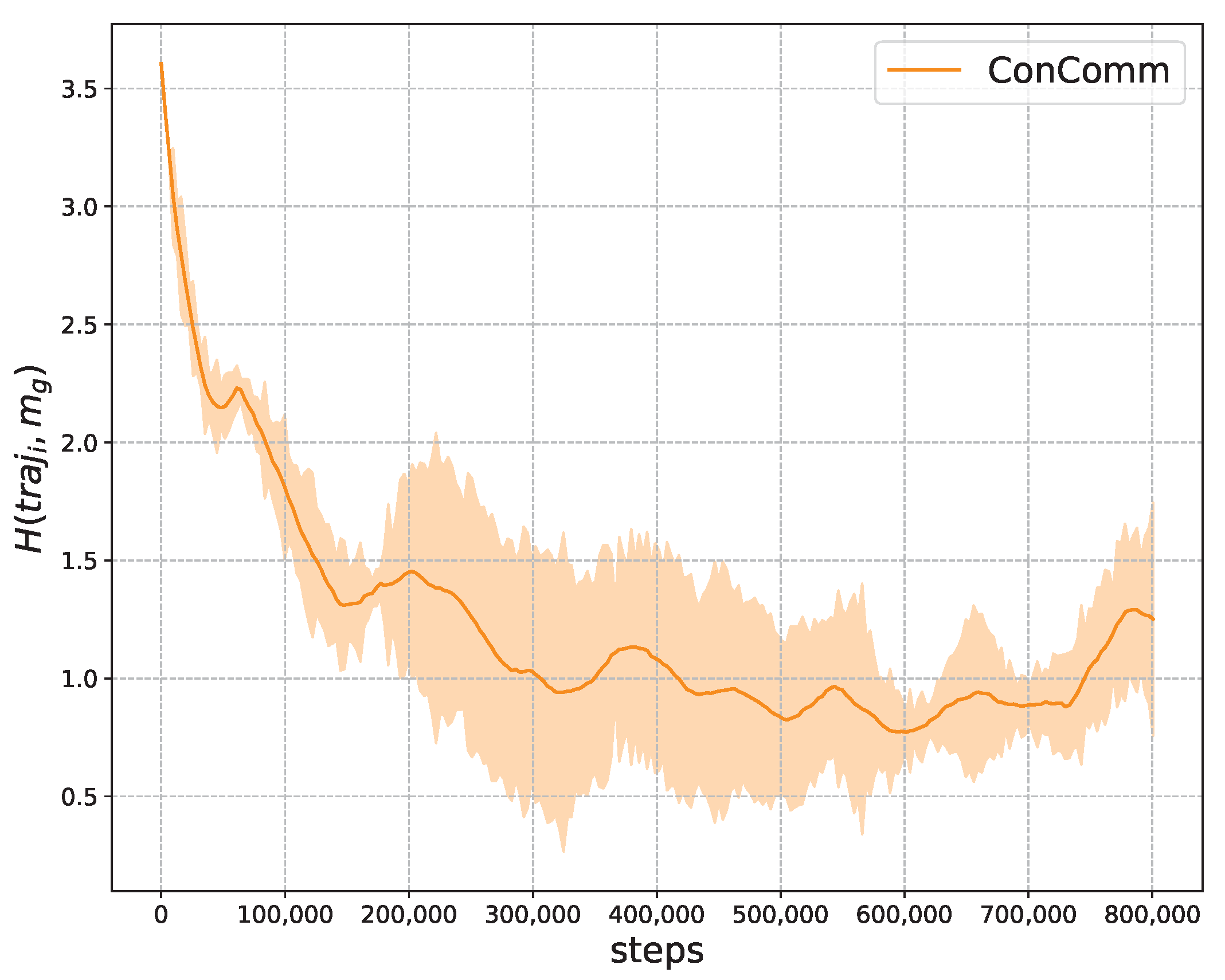

5.1. Mutual Information for Consensual Communication

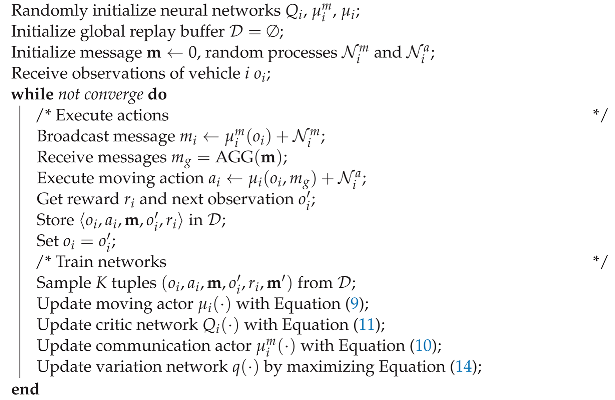

Algorithm 1: Policy optimization for ego vehicle i

5.2. Convergence Analysis

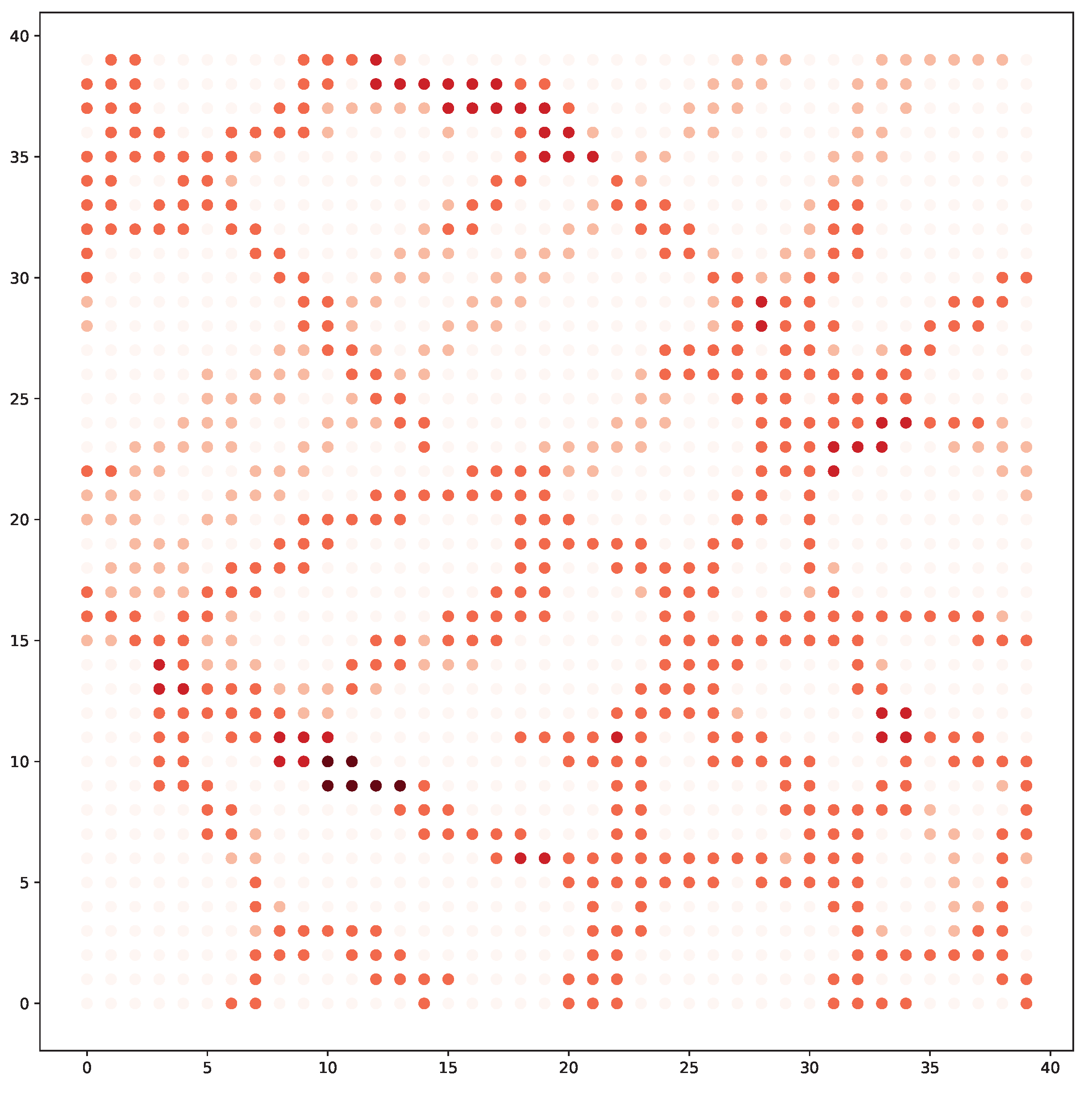

6. Evaluation

6.1. Experiment Setup

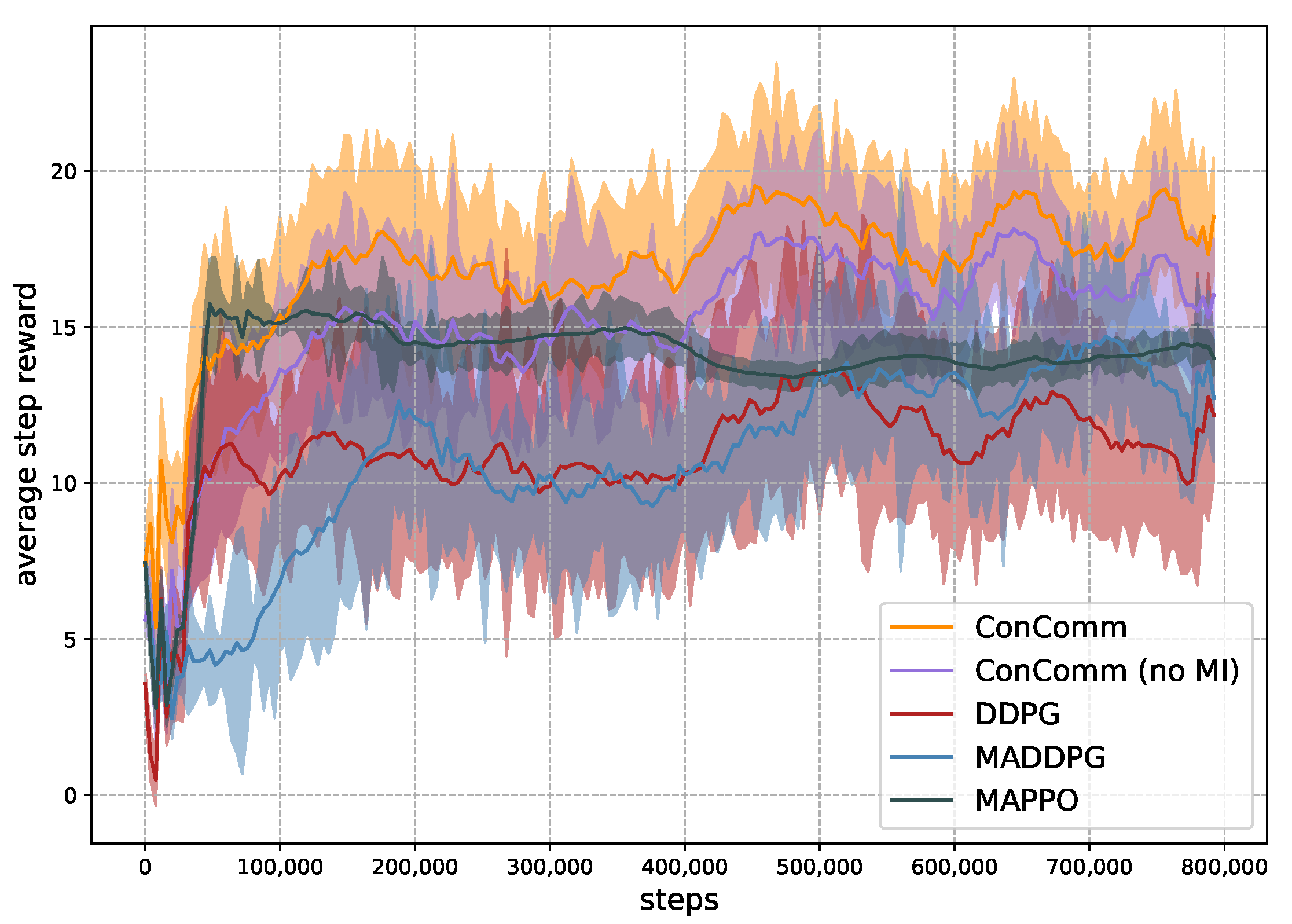

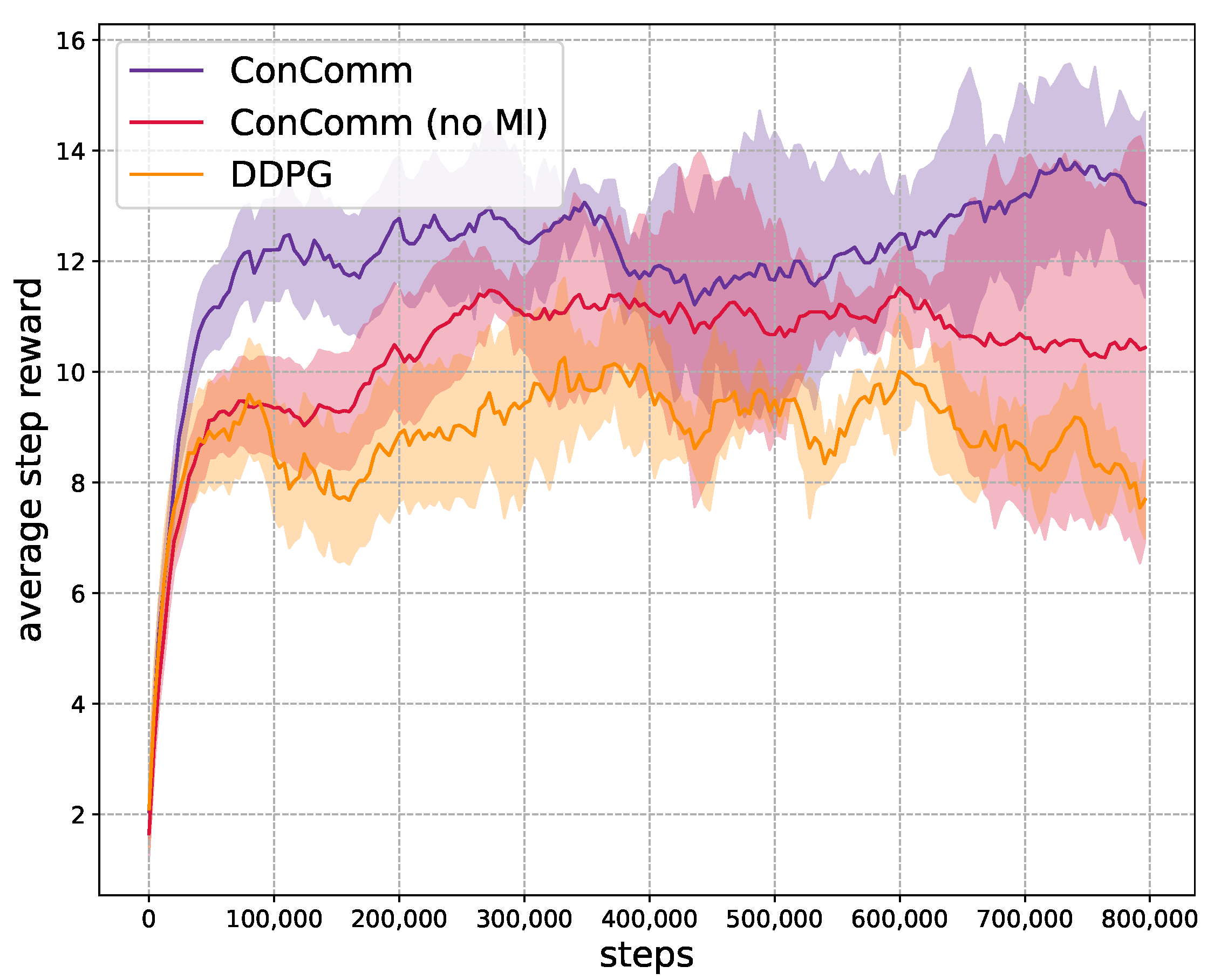

- ConComm (no MI): This variant implements the ConComm algorithm without the mutual information term. The comparison demonstrates the contribution of the mutual information term.

- DDPG [15]: Each mobile vehicle independently learns a policy to schedule its sensing path. The main drawback is that, from the viewpoint of a single vehicle, the multi-agent environment does not satisfy the Markov property because the other vehicles' policies change during learning, which may cause this algorithm to fail.

- MADDPG [9]: MADDPG uses the centralized training with decentralized execution (CTDE) framework, in which a global critic function has access to the historical samples from all mobile vehicles. However, the policies of the vehicles are not explicitly coordinated during execution.

- MAPPO [42]: This algorithm is a multi-agent version of proximal policy optimization (PPO). It has been reported to achieve strong performance in many cooperative multi-agent scenarios.

6.2. Performance Analysis

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Gao, Y.; Dong, W.; Guo, K.; Liu, X.; Chen, Y.; Liu, X.; Bu, J.; Chen, C. Mosaic: A low-cost mobile sensing system for urban air quality monitoring. In Proceedings of the 35th Annual IEEE International Conference on Computer Communications, San Francisco, CA, USA, 10–14 April 2016; pp. 1–9.

2. Carnelli, P.; Yeh, J.; Sooriyabandara, M.; Khan, A. Parkus: A Novel Vehicle Parking Detection System. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31, pp. 4650–4656.

3. Laport, F.; Serrano, E.; Bajo, J. A Multi-Agent Architecture for Mobile Sensing Systems. J. Ambient. Intell. Humaniz. Comput. 2020, 11, 4439–4451.

4. Ranieri, A.; Caputo, D.; Verderame, L.; Merlo, A.; Caviglione, L. Deep Adversarial Learning on Google Home Devices. J. Internet Serv. Inf. Secur. 2021, 11, 33–43.

5. Liu, C.H.; Ma, X.; Gao, X.; Tang, J. Distributed energy-efficient multi-UAV navigation for long-term communication coverage by deep reinforcement learning. IEEE Trans. Mob. Comput. 2019, 19, 1274–1285.

6. Wei, Y.; Zheng, R. Multi-Robot Path Planning for Mobile Sensing through Deep Reinforcement Learning. In Proceedings of the IEEE Conference on Computer Communications, Vancouver, BC, Canada, 10–13 May 2021; pp. 1–10.

7. Rashid, T.; Samvelyan, M.; Schroeder, C.; Farquhar, G.; Foerster, J.; Whiteson, S. QMIX: Monotonic Value Function Factorisation for Deep Multi-Agent Reinforcement Learning. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 4292–4301.

8. Foerster, J.; Farquhar, G.; Afouras, T.; Nardelli, N.; Whiteson, S. Counterfactual Multi-Agent Policy Gradients. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

9. Lowe, R.; Wu, Y.; Tamar, A.; Harb, J.; Abbeel, O.P.; Mordatch, I. Multi-Agent Actor-Critic for Mixed Cooperative-Competitive Environments. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30, pp. 6379–6390.

10. Foerster, J.; Assael, I.A.; de Freitas, N.; Whiteson, S. Learning to Communicate with Deep Multi Agent Reinforcement Learning. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29, pp. 2137–2145.

11. Cao, K.; Lazaridou, A.; Lanctot, M.; Leibo, J.Z.; Tuyls, K.; Clark, S. Emergent Communication through Negotiation. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

12. Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

13. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

14. Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic policy gradient algorithms. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 387–395.

15. Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

16. Lowe, R.; Foerster, J.; Boureau, Y.L.; Pineau, J.; Dauphin, Y. On the pitfalls of measuring emergent communication. arXiv 2019, arXiv:1903.05168.

17. Sukhbaatar, S.; Szlam, A.; Fergus, R. Learning multiagent communication with backpropagation. arXiv 2016, arXiv:1605.07736.

18. Das, A.; Gervet, T.; Romoff, J.; Batra, D.; Parikh, D.; Rabbat, M.; Pineau, J. Tarmac: Targeted multi-agent communication. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 1538–1546.

19. Jaques, N.; Lazaridou, A.; Hughes, E.; Gulcehre, C.; Ortega, P.A.; Strouse, D.; Leibo, J.Z.; de Freitas, N. Intrinsic social motivation via causal influence in multi-agent RL. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

20. Karaliopoulos, M.; Telelis, O.; Koutsopoulos, I. User recruitment for mobile crowdsensing over opportunistic networks. In Proceedings of the 2015 IEEE Conference on Computer Communications, Hong Kong, China, 26 April–1 May 2015; pp. 2254–2262.

21. Hu, Q.; Wang, S.; Cheng, X.; Zhang, J.; Lv, W. Cost-efficient mobile crowdsensing with spatial-temporal awareness. IEEE Trans. Mob. Comput. 2019, 20, 928–938.

22. Rahili, S.; Lu, J.; Ren, W.; Al-Saggaf, U.M. Distributed coverage control of mobile sensor networks in unknown environment using game theory: Algorithms and experiments. IEEE Trans. Mob. Comput. 2017, 17, 1303–1313.

23. Esch, R.R.; Protti, F.; Barbosa, V.C. Adaptive event sensing in networks of autonomous mobile agents. J. Netw. Comput. Appl. 2016, 71, 118–129.

24. Li, F.; Wang, Y.; Gao, Y.; Tong, X.; Jiang, N.; Cai, Z. Three-Party Evolutionary Game Model of Stakeholders in Mobile Crowdsourcing. IEEE Trans. Comput. Soc. Syst. 2021, 9, 974–985.

25. Zhang, C.; Zhao, M.; Zhu, L.; Wu, T.; Liu, X. Enabling Efficient and Strong Privacy-Preserving Truth Discovery in Mobile Crowdsensing. IEEE Trans. Inf. Forensics Secur. 2022, 17, 3569–3581.

26. Zhao, B.; Liu, X.; Chen, W.N.; Deng, R. CrowdFL: Privacy-Preserving Mobile Crowdsensing System via Federated Learning. IEEE Trans. Mob. Comput. 2022, 1.

27. You, X.; Liu, X.; Jiang, N.; Cai, J.; Ying, Z. Reschedule Gradients: Temporal Non-IID Resilient Federated Learning. IEEE Internet Things J. 2022, 1.

28. Nasiraee, H.; Ashouri-Talouki, M.; Liu, X. Optimal Black-Box Traceability in Decentralized Attribute-Based Encryption. IEEE Trans. Cloud Comput. 2022, 1–14.

29. Wang, J.; Li, P.; Huang, W.; Chen, Z.; Nie, L. Task Priority Aware Incentive Mechanism with Reward Privacy-Preservation in Mobile Crowdsensing. In Proceedings of the 2022 IEEE 25th International Conference on Computer Supported Cooperative Work in Design, Hangzhou, China, 4–6 May 2022; pp. 998–1003.

30. Komisarek, M.; Pawlicki, M.; Kozik, R.; Choras, M. Machine Learning Based Approach to Anomaly and Cyberattack Detection in Streamed Network Traffic Data. J. Wirel. Mob. Netw. Ubiquitous Comput. Dependable Appl. 2021, 12, 3–19.

31. Nowakowski, P.; Zórawski, P.; Cabaj, K.; Mazurczyk, W. Detecting Network Covert Channels using Machine Learning, Data Mining and Hierarchical Organisation of Frequent Sets. J. Wirel. Mob. Netw. Ubiquitous Comput. Dependable Appl. 2021, 12, 20–43.

32. Johnson, C.; Khadka, B.; Ruiz, E.; Halladay, J.; Doleck, T.; Basnet, R.B. Application of deep learning on the characterization of tor traffic using time based features. J. Internet Serv. Inf. Secur. 2021, 11, 44–63.

33. Bithas, P.S.; Michailidis, E.T.; Nomikos, N.; Vouyioukas, D.; Kanatas, A.G. A survey on machine-learning techniques for UAV-based communications. Sensors 2019, 19, 5170.

34. An, N.; Wang, R.; Luan, Z.; Qian, D.; Cai, J.; Zhang, H. Adaptive assignment for quality-aware mobile sensing network with strategic users. In Proceedings of the 2015 IEEE 17th International Conference on High Performance Computing and Communications, 2015 IEEE 7th International Symposium on Cyberspace Safety and Security, and 2015 IEEE 12th International Conference on Embedded Software and Systems, New York, NY, USA, 24–26 August 2015; pp. 541–546.

35. Zhang, W.; Song, K.; Rong, X.; Li, Y. Coarse-to-fine uav target tracking with deep reinforcement learning. IEEE Trans. Autom. Sci. Eng. 2018, 16, 1522–1530.

36. Liu, C.H.; Dai, Z.; Zhao, Y.; Crowcroft, J.; Wu, D.; Leung, K.K. Distributed and energy-efficient mobile crowdsensing with charging stations by deep reinforcement learning. IEEE Trans. Mob. Comput. 2019, 20, 130–146.

37. Liu, C.H.; Chen, Z.; Zhan, Y. Energy-efficient distributed mobile crowd sensing: A deep learning approach. IEEE J. Sel. Areas Commun. 2019, 37, 1262–1276.

38. Zeng, F.; Hu, Z.; Xiao, Z.; Jiang, H.; Zhou, S.; Liu, W.; Liu, D. Resource allocation and trajectory optimization for QoE provisioning in energy-efficient UAV-enabled wireless networks. IEEE Trans. Veh. Technol. 2020, 69, 7634–7647.

39. Samir, M.; Ebrahimi, D.; Assi, C.; Sharafeddine, S.; Ghrayeb, A. Leveraging UAVs for coverage in cell-free vehicular networks: A deep reinforcement learning approach. IEEE Trans. Mob. Comput. 2020, 20, 2835–2847.

40. Szepesvári, C.; Littman, M.L. A unified analysis of value-function-based reinforcement-learning algorithms. Neural Comput. 1999, 11, 2017–2060.

41. Hu, J.; Wellman, M.P. Nash Q-learning for general-sum stochastic games. J. Mach. Learn. Res. 2003, 4, 1039–1069.

42. Yu, C.; Velu, A.; Vinitsky, E.; Wang, Y.; Bayen, A.; Wu, Y. The Surprising Effectiveness of PPO in Cooperative, Multi-Agent Games. arXiv 2021, arXiv:2103.01955.

| Notation | Definition |
|---|---|
| | the set of mobile vehicles |
| | the set of events |
| t | the time step |
| | the position of vehicle i at step t |
| | moving speed and angle of vehicle i at step t |
| | battery capacity of vehicle i at step t |
| | battery consumption rate of vehicle i at step t |
| | battery charging rate at the charging station |
| | the sensing radius of vehicle i |
| | the intensity of event e at step t |
| c | the penalty for running out of battery |
| | the reward of vehicle i at step t |
| | the moving policy function of vehicle i |
| | the communication policy function of vehicle i |
| | the action–value function of vehicle i |
| | the state value function of vehicle i |
| | proxy for the posterior function |
| | mutual information function |
| | the weight of the MI reward |

| | Sensing Reward | Battery Penalty | Average Step Reward |
|---|---|---|---|
| 0 | | | |
| 0.25 | | | |
| 0.5 | | | |
| 0.25 | | | |
| 1 | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, B.; Wu, L.; You, I. Decentralized Policy Coordination in Mobile Sensing with Consensual Communication. Sensors 2022, 22, 9584. https://doi.org/10.3390/s22249584
