CamNuvem: A Robbery Dataset for Video Anomaly Detection

Abstract

1. Introduction

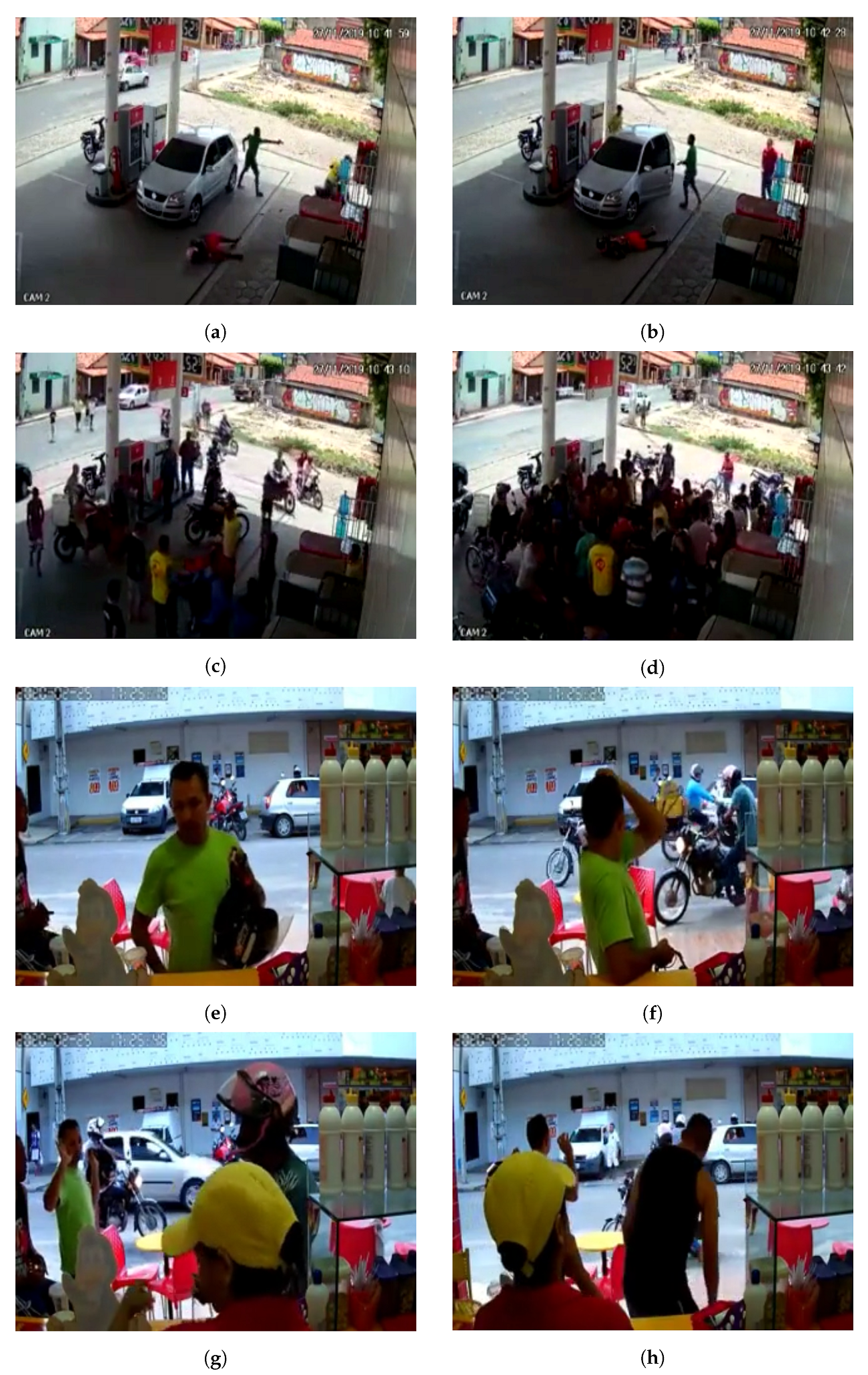

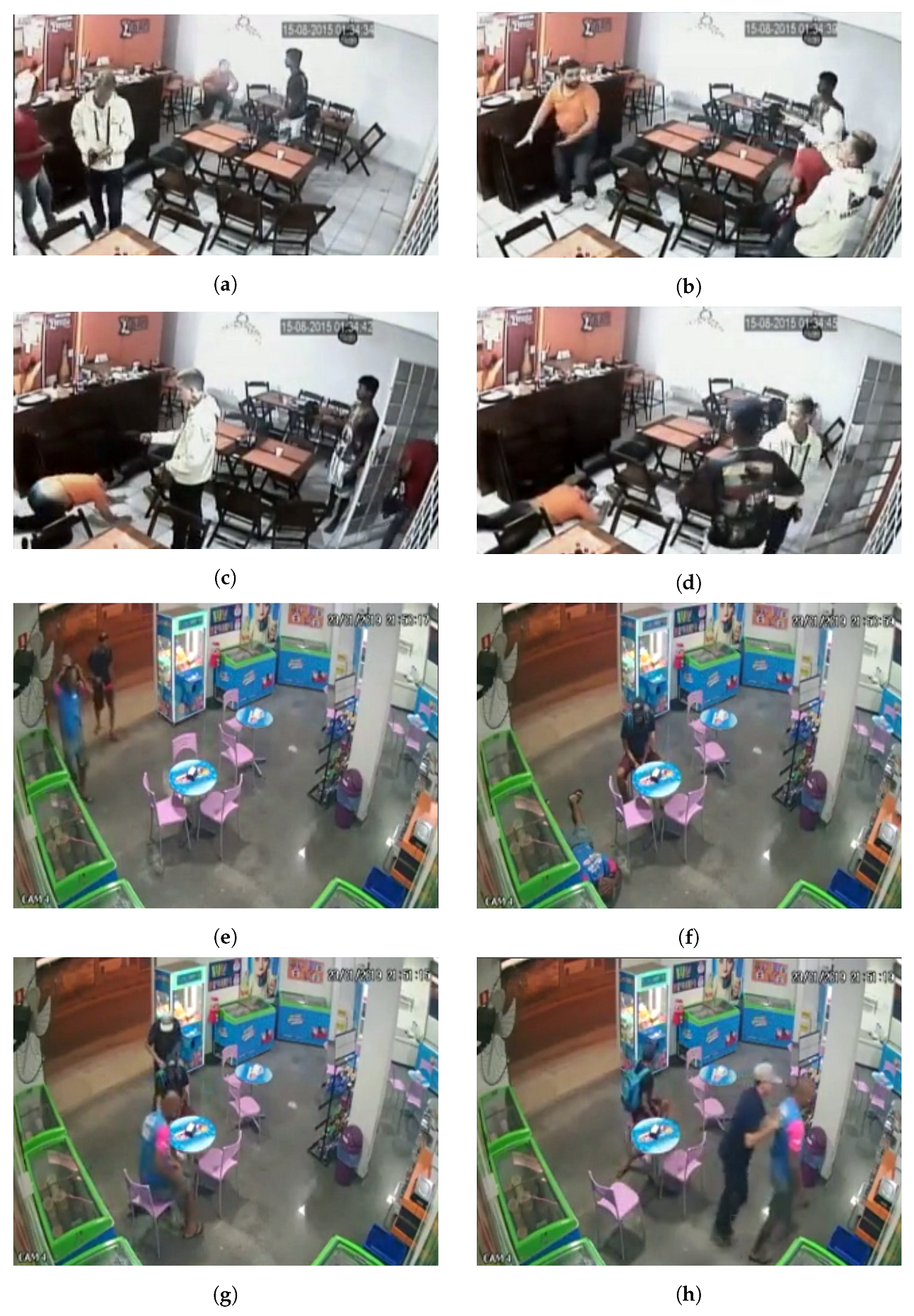

- A dataset of 486 new real–world surveillance video clips showing robberies, acquired from public sources such as social media, is presented.

- A benchmark is created by applying three state–of–the–art video surveillance methods that were originally evaluated on the UCF–Crime dataset. These methods were standardised to allow a fair comparison, a step that the original UCF–Crime comparison did not include.

2. Related Work

- Classical Methods: This category covers the non–deep–learning–based methods, such as that proposed in [17], which focused on capturing pedestrian movement information using a histogram of oriented gradients (HOG) [18], object positions, and colour histograms. Khan et al. [19] used the Lucas–Kanade method [20] to calculate the flow direction from a pixel’s spatial vicinity (in the feature extraction step) and used k–means to determine an abnormality score for a video segment. This work targeted low–power devices and presented interesting results given the constraints of such devices. Amraee et al. [21] used the histogram of optical flow (HOF) [22] to represent the fixed part of an image (background) and the HOG [18] and local binary pattern (LBP) [23] to extract the features of moving objects from images (foreground).

- Hybrid Methods: These methods combine classical models with deep learning, with each handling part of the anomaly detection task. Rezaei and Yazdi [24] used a convolutional neural network (CNN) [25] and non–negative matrix factorisation to extract features from pixels. Then, they used a support vector machine (SVM) [26] to classify the features according to whether they came from a normal or an abnormal segment. The method proposed in [7] used the Canny edge detector [27] to extract the features from the flow of a frame set. These features and the original images were combined and used as the input of a deep autoencoder, which was trained in an unsupervised manner. Wang et al. [28] used the Horn–Schunck [29] optical flow descriptor to extract the frame information and a deep autoencoder to calculate the reconstruction error.

- Deep Learning Methods: These approaches are based only on deep learning. Singh and Pankajakshan [11] used a CNN to extract local visual features (within a single frame) and long short–term memory (LSTM) [30] to aggregate features from several frames. Then, deconvolution [31] was used to reconstruct a future frame, which, in the training phase, was compared with the real future frame. In the test phase, an abnormality is detected if a frame has a reconstruction error greater than a predetermined threshold. Wang et al. [32] replaced the deconvolution part with a generative adversarial network (GAN) [33] that was used to predict a future frame given a set of the previous frames. They used PSNR as an error measure and the optical loss, intensity loss, and gradient loss to capture specific characteristics of the images. Following this GAN scheme, Doshi and Yilmaz [12] proposed a statistical approach to automatically configure the reconstruction error threshold that is used to detect abnormal video segments, since this is an important design choice.
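The thresholding step shared by the prediction–based methods above can be illustrated with a minimal Python sketch. It is not the implementation of any cited work: frames are assumed to be flat lists of 8–bit pixel values, the predicted frames are assumed to come from some upstream model, and the function names and the 30 dB threshold are hypothetical choices made only for illustration. Because PSNR grows as the prediction improves, a frame is flagged when its PSNR falls below the threshold, which is equivalent to its error exceeding one.

```python
import math


def mse(predicted, actual):
    """Mean squared error between two equally sized frames (flat pixel lists)."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("frames must be non-empty and of the same size")
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


def psnr(predicted, actual, max_value=255.0):
    """Peak signal-to-noise ratio in dB; higher means a closer prediction."""
    error = mse(predicted, actual)
    if error == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(max_value ** 2 / error)


def flag_anomalies(predicted_frames, actual_frames, psnr_threshold=30.0):
    """Return one boolean per frame: True when PSNR is below the threshold.

    The threshold value is an illustrative assumption, not a recommended setting.
    """
    return [
        psnr(p, a) < psnr_threshold
        for p, a in zip(predicted_frames, actual_frames)
    ]


# Toy example: the first prediction is close, the second is far off.
actual = [[100, 100, 100, 100], [100, 100, 100, 100]]
predicted = [[101, 99, 100, 100], [10, 200, 100, 30]]
flags = flag_anomalies(predicted, actual)
print(flags)
```

In this toy example only the second frame is flagged. A fixed threshold is the simplest option; choosing it automatically is the design question addressed in [12].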

3. Materials and Methods

3.1. Multiple Instance Learning (MIL) Approach

3.2. CamNuvem Dataset Statistics

3.3. Feature Extractor

3.4. Robust Temporal Feature Magnitude Learning (RTFM)

3.5. Weakly Supervised Anomaly Localisation (WSAL)

3.6. RADS

3.7. Experiments

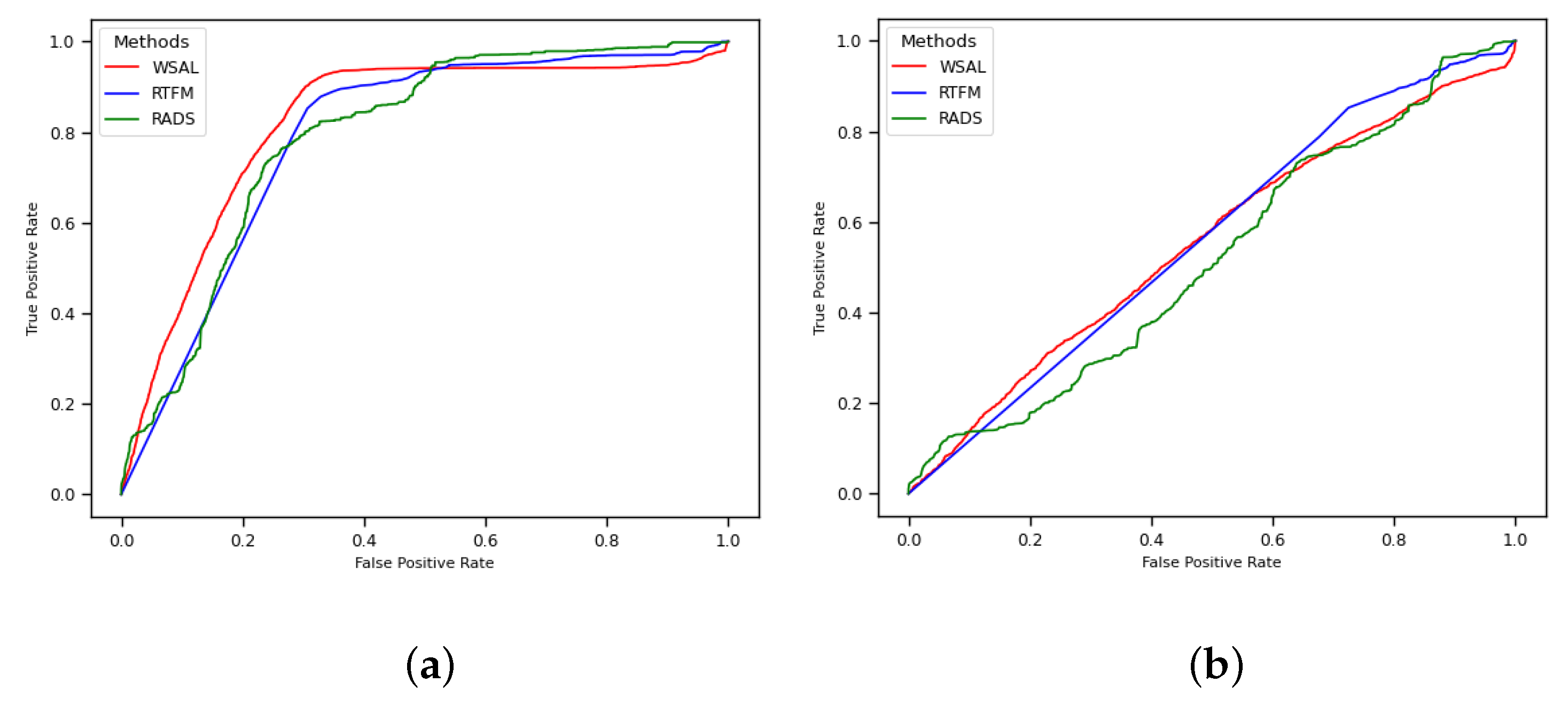

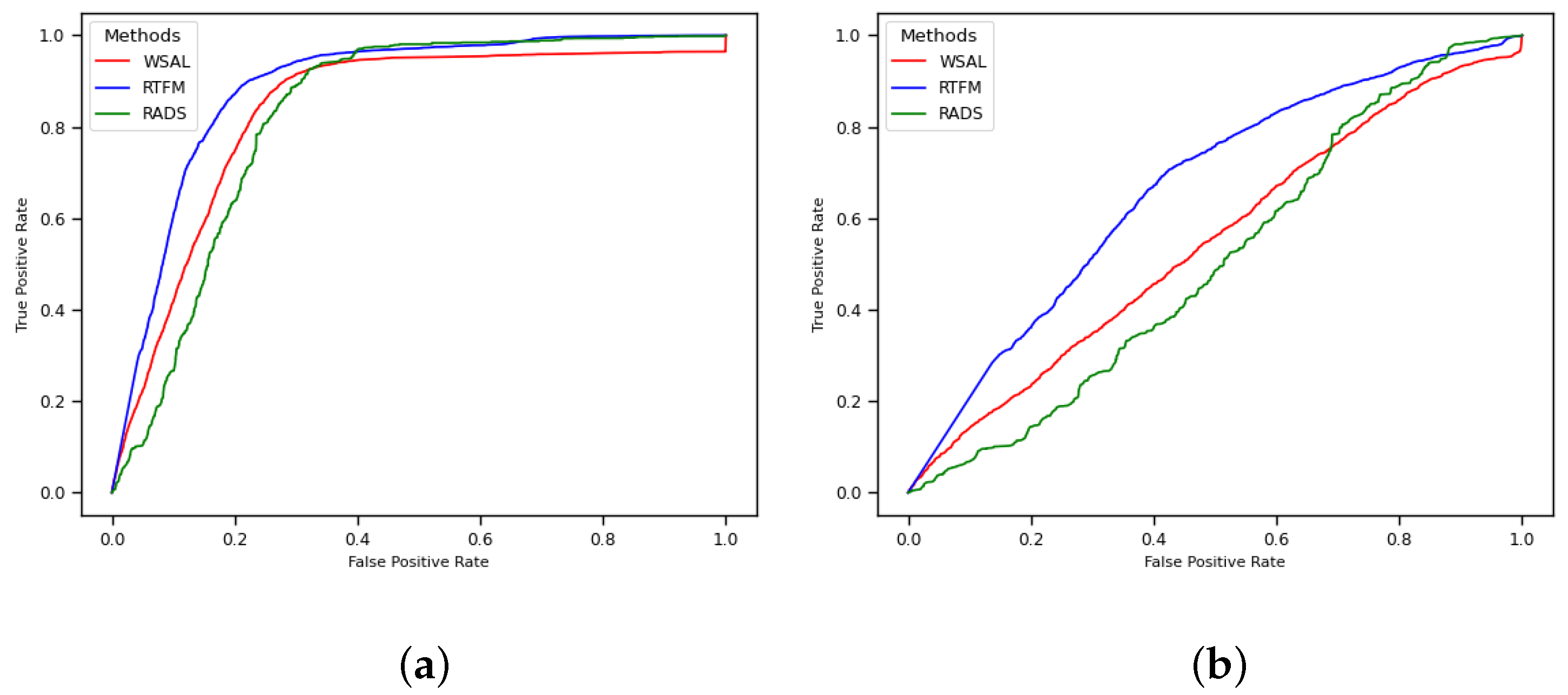

4. Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DNN | Deep Neural Network |
| GAN | Generative Adversarial Network |
| CNN | Convolutional Neural Network |
| RNN | Recurrent Neural Network |
| LSTM | Long Short–Term Memory |
| HOG | Histogram of Oriented Gradients |
| AUC | Area Under Curve |
| ROC | Receiver Operating Characteristic |
| RTFM | Robust Temporal Feature Magnitude Learning |
| WSAL | Weakly Supervised Anomaly Localisation |
| MIL | Multiple Instance Learning |

References

1. Sultani, W.; Chen, C.; Shah, M. Real-world anomaly detection in surveillance videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6479–6488.

2. Tian, Y.; Pang, G.; Chen, Y.; Singh, R.; Verjans, J.W.; Carneiro, G. Weakly-supervised video anomaly detection with robust temporal feature magnitude learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 4975–4986.

3. Lv, H.; Zhou, C.; Cui, Z.; Xu, C.; Li, Y.; Yang, J. Localizing anomalies from weakly-labeled videos. IEEE Trans. Image Process. 2021, 30, 4505–4515.

4. Shin, W.; Bu, S.J.; Cho, S.B. 3D-convolutional neural network with generative adversarial network and autoencoder for robust anomaly detection in video surveillance. Int. J. Neural Syst. 2020, 30, 2050034.

5. Chen, D.; Wang, P.; Yue, L.; Zhang, Y.; Jia, T. Anomaly detection in surveillance video based on bidirectional prediction. Image Vis. Comput. 2020, 98, 103915.

6. Yang, Z.; Liu, J.; Wu, P. Bidirectional retrospective generation adversarial network for anomaly detection in videos. IEEE Access 2021, 9, 107842–107857.

7. Ramchandran, A.; Sangaiah, A.K. Unsupervised deep learning system for local anomaly event detection in crowded scenes. Multimed. Tools Appl. 2020, 79, 35275–35295.

8. Fan, Z.; Yin, J.; Song, Y.; Liu, Z. Real-time and accurate abnormal behavior detection in videos. Mach. Vis. Appl. 2020, 31, 72.

9. Zhang, B.; Wang, L.; Wang, Z.; Qiao, Y.; Wang, H. Real-time action recognition with enhanced motion vector CNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2718–2726.

10. Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks: Towards good practices for deep action recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 20–36.

11. Singh, P.; Pankajakshan, V. A deep learning based technique for anomaly detection in surveillance videos. In Proceedings of the 2018 Twenty Fourth National Conference on Communications (NCC), Hyderabad, India, 25–28 February 2018; pp. 1–6.

12. Doshi, K.; Yilmaz, Y. Online anomaly detection in surveillance videos with asymptotic bound on false alarm rate. Pattern Recognit. 2021, 114, 107865.

13. Wang, T.; Miao, Z.; Chen, Y.; Zhou, Y.; Shan, G.; Snoussi, H. AED-Net: An abnormal event detection network. Engineering 2019, 5, 930–939.

14. Jiang, F.; Yuan, J.; Tsaftaris, S.A.; Katsaggelos, A.K. Anomalous video event detection using spatiotemporal context. Comput. Vis. Image Underst. 2011, 115, 323–333.

15. Sabokrou, M.; Fayyaz, M.; Fathy, M.; Moayed, Z.; Klette, R. Deep-anomaly: Fully convolutional neural network for fast anomaly detection in crowded scenes. Comput. Vis. Image Underst. 2018, 172, 88–97.

16. Doshi, K.; Yilmaz, Y. Continual learning for anomaly detection in surveillance videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 254–255.

17. Ma, D.; Wang, Q.; Yuan, Y. Anomaly detection in crowd scene via online learning. In Proceedings of the International Conference on Internet Multimedia Computing and Service, Xiamen, China, 10–12 July 2014; pp. 158–162.

18. Wang, X.; Han, T.X.; Yan, S. An HOG-LBP human detector with partial occlusion handling. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 32–39.

19. Khan, M.U.K.; Park, H.S.; Kyung, C.M. Rejecting motion outliers for efficient crowd anomaly detection. IEEE Trans. Inf. Forensics Secur. 2018, 14, 541–556.

20. Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence (IJCAI’81), Vancouver, BC, Canada, 24–28 August 1981; Volume 81.

21. Amraee, S.; Vafaei, A.; Jamshidi, K.; Adibi, P. Abnormal event detection in crowded scenes using one-class SVM. Signal Image Video Process. 2018, 12, 1115–1123.

22. Dalal, N.; Triggs, B.; Schmid, C. Human detection using oriented histograms of flow and appearance. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 428–441.

23. Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

24. Rezaei, F.; Yazdi, M. A new semantic and statistical distance-based anomaly detection in crowd video surveillance. Wirel. Commun. Mob. Comput. 2021, 2021, 5513582.

25. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

26. Hearst, M.; Dumais, S.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 1998, 13, 18–28.

27. Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

28. Wang, T.; Qiao, M.; Zhu, A.; Shan, G.; Snoussi, H. Abnormal event detection via the analysis of multi-frame optical flow information. Front. Comput. Sci. 2020, 14, 304–313.

29. Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

30. Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270.

31. Dumoulin, V.; Visin, F. A guide to convolution arithmetic for deep learning. arXiv 2016, arXiv:1603.07285.

32. Wang, C.; Yao, Y.; Yao, H. Video anomaly detection method based on future frame prediction and attention mechanism. In Proceedings of the 2021 IEEE 11th Annual Computing and Communication Workshop and Conference (CCWC), Virtual, 27–30 January 2021; pp. 0405–0407.

33. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27.

34. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

35. Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

36. Li, W.; Mahadevan, V.; Vasconcelos, N. Anomaly detection and localization in crowded scenes. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 18–32.

37. Lu, C.; Shi, J.; Jia, J. Abnormal event detection at 150 fps in Matlab. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2720–2727.

38. Velastin, S.A.; Gómez-Lira, D.A. People detection and pose classification inside a moving train using computer vision. In Proceedings of the International Visual Informatics Conference, Bangi, Malaysia, 28–30 November 2017; Springer: Cham, Switzerland, 2017; pp. 319–330.

39. Adam, A.; Rivlin, E.; Shimshoni, I.; Reinitz, D. Robust real-time unusual event detection using multiple fixed-location monitors. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 555–560.

40. Raghavendra, R.; Bue, A.; Cristani, M. Unusual Crowd Activity Dataset of University of Minnesota. 2006. Available online: http://mha.cs.umn.edu/proj_events.shtml#crowd (accessed on 19 October 2022).

41. Öztürk, H.İ.; Can, A.B. ADNet: Temporal anomaly detection in surveillance videos. In Proceedings of the International Conference on Pattern Recognition, Virtual, 10–15 January 2021; Springer: Cham, Switzerland, 2021; pp. 88–101.

42. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

43. Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the 2005 IEEE Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; Volume 2, pp. 60–65.

44. Carreira, J.; Noland, E.; Hillier, C.; Zisserman, A. A short note on the Kinetics-700 Human Action Dataset. arXiv 2019, arXiv:1907.06987.

| Dataset | Number of Videos | Average Number of Frames | Dataset Length | Anomaly Examples |
|---|---|---|---|---|
| Avenue [37] | 37 | 839 | 30 min | Bikers, small carts, walking across walkways |
| BOSS [38] | 12 | 4052 | 27 min | Bikers, small carts, walking across walkways |
| Subway Entrance [39] | 1 | 121,749 | 1.5 h | Wrong direction, no payment |
| Subway Exit [39] | 1 | 64,901 | 1.5 h | Wrong direction, no payment |
| UCSD Ped1 [36] | 70 | 201 | 5 min | Run, throw, new object |
| UCSD Ped2 [36] | 28 | 163 | 5 min | Run |
| UMN [40] | 5 | 1290 | 5 min | Harass, disease, panic |
| UCF–Crime [1] | 1900 | 7247 | 128 h | Abuse, arrest, arson, assault, accident, burglary, fighting, robbery |
| UCF–Crime Extension [41] | 240 | 3060 | 7.5 h | Protest, violence using Molotov cocktails, fighting |
| Ours | 972 (486) * | 6329 (2303) * | 57 (10.37) * h | Robbery |

| Test subset | RTFM (no 10–crop) | RTFM (10–crop) | WSAL (no 10–crop) | WSAL (10–crop) | RADS (no 10–crop) | RADS (10–crop) |
|---|---|---|---|---|---|---|
| Entire test set | 78.76% | 88.75% | 82.01% | 83.45% | 79.08% | 82.48% |
| Only the abnormal videos in the test set | 56.03% | 66.35% | 55.22% | 54.59% | 51.68% | 50.51% |
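The effect of 10–crop on each method can be read off the results table above by subtracting the score without 10–crop from the score with it. The short Python sketch below only performs that arithmetic; the numbers are copied from the table, while the dictionary layout and the function name `ten_crop_gain` are illustrative assumptions.

```python
# Scores (in %) copied from the results table: (no 10-crop, 10-crop).
results = {
    "entire_test_set": {
        "RTFM": (78.76, 88.75),
        "WSAL": (82.01, 83.45),
        "RADS": (79.08, 82.48),
    },
    "abnormal_videos_only": {
        "RTFM": (56.03, 66.35),
        "WSAL": (55.22, 54.59),
        "RADS": (51.68, 50.51),
    },
}


def ten_crop_gain(table):
    """Difference (10-crop minus no 10-crop) in percentage points."""
    return {
        subset: {
            method: round(with_crop - without_crop, 2)
            for method, (without_crop, with_crop) in scores.items()
        }
        for subset, scores in table.items()
    }


gains = ten_crop_gain(results)
for subset, per_method in gains.items():
    print(subset, per_method)
```

On the entire test set the differences are +9.99 (RTFM), +1.44 (WSAL), and +3.40 (RADS) percentage points. On the abnormal videos only, they are +10.32 (RTFM), −0.63 (WSAL), and −1.17 (RADS).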


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


de Paula, D.D.; Salvadeo, D.H.P.; de Araujo, D.M.N. CamNuvem: A Robbery Dataset for Video Anomaly Detection. Sensors 2022, 22, 10016. https://doi.org/10.3390/s222410016
