Extended Reality (XR) for Condition Assessment of Civil Engineering Structures: A Literature Review

Abstract

1. Introduction

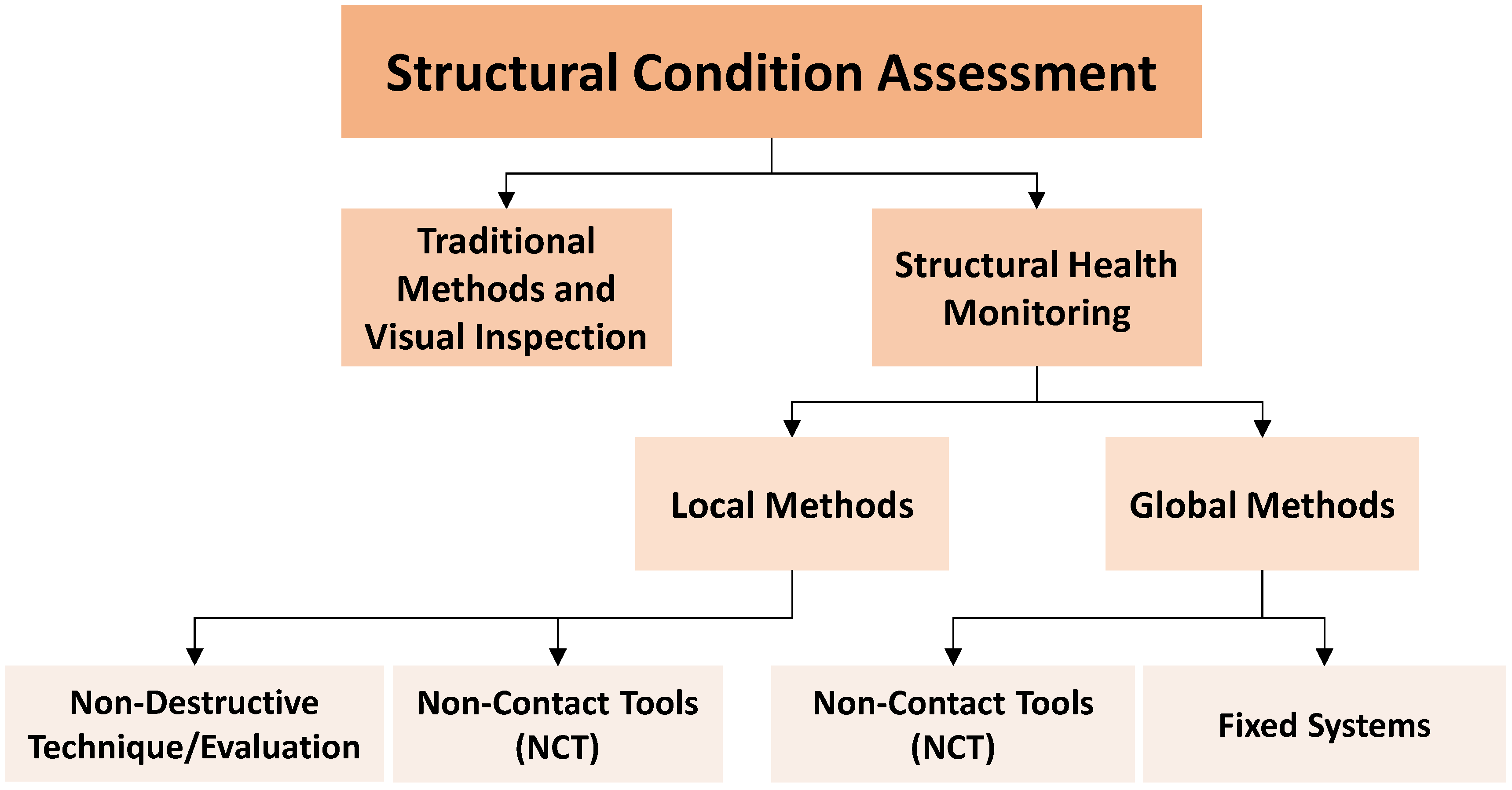

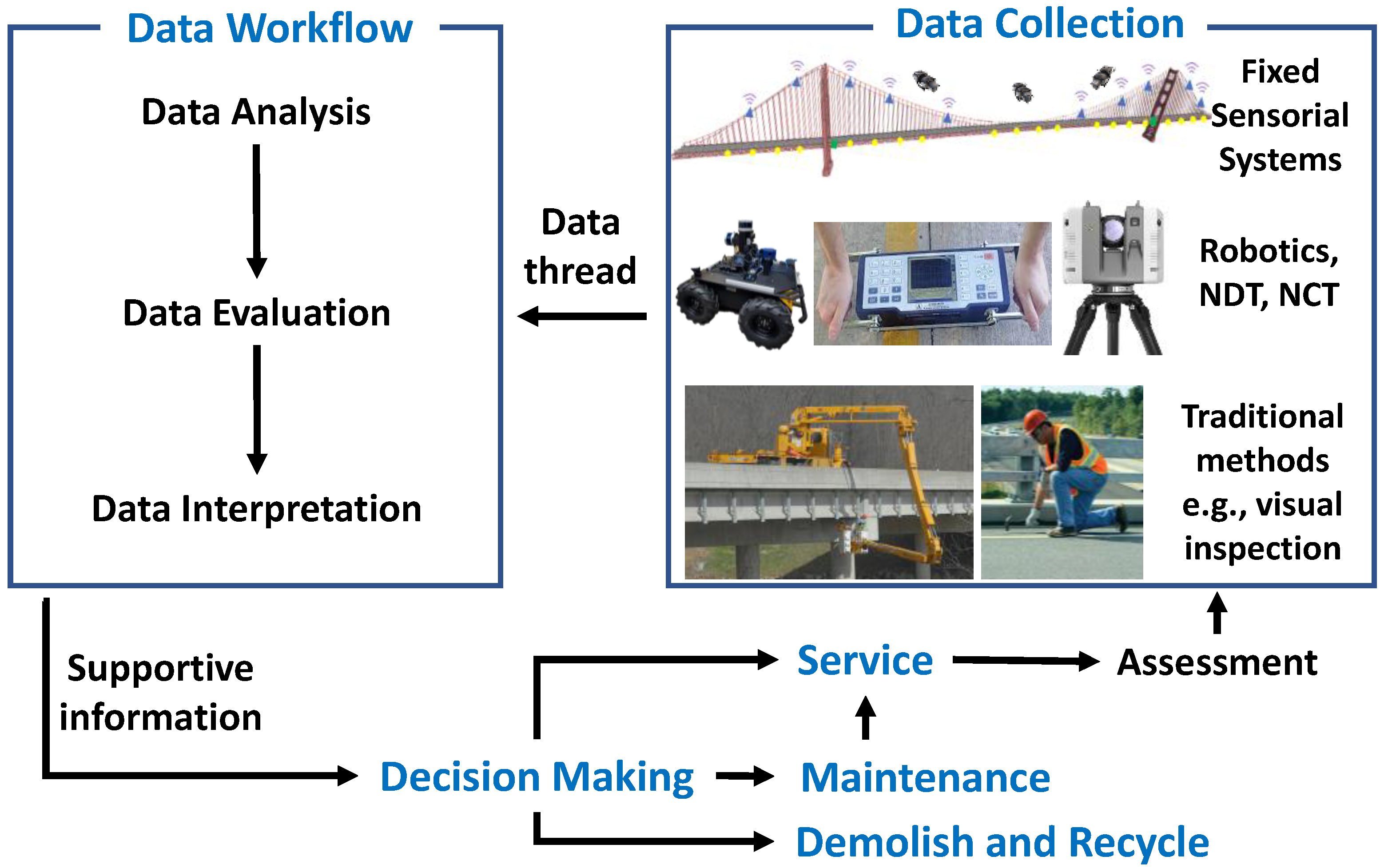

1.1. Civil Structural Health Monitoring

1.2. Motivation, Objective and Scope

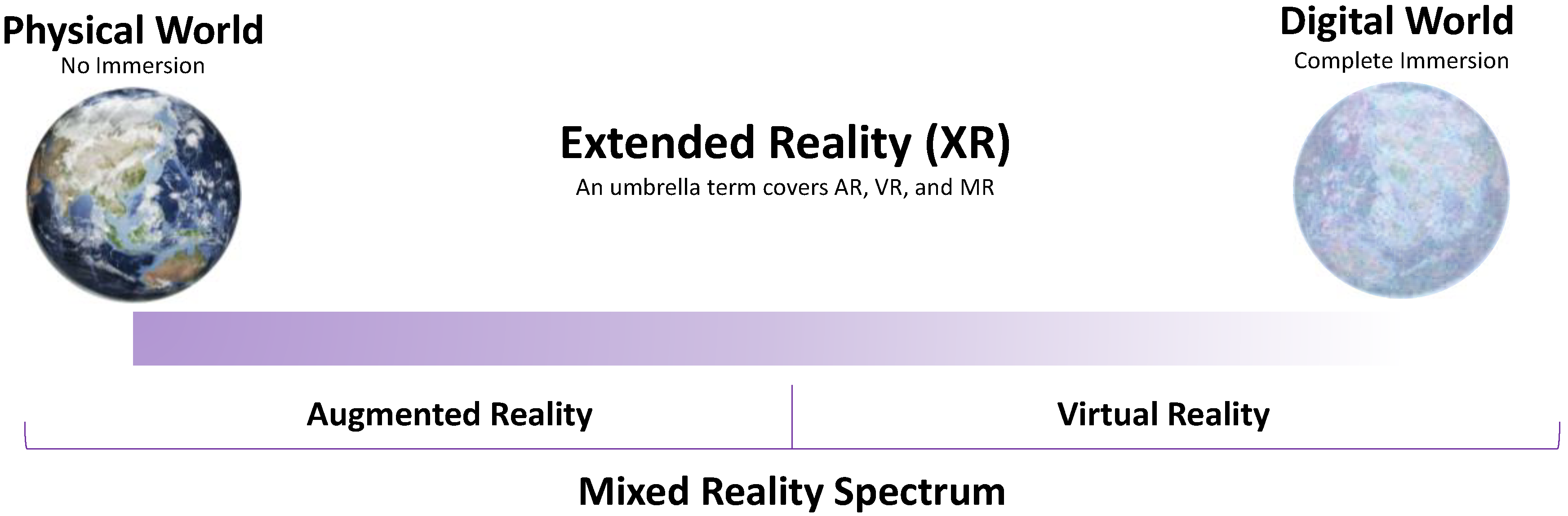

2. Virtual Reality, Augmented Reality, and Mixed Reality

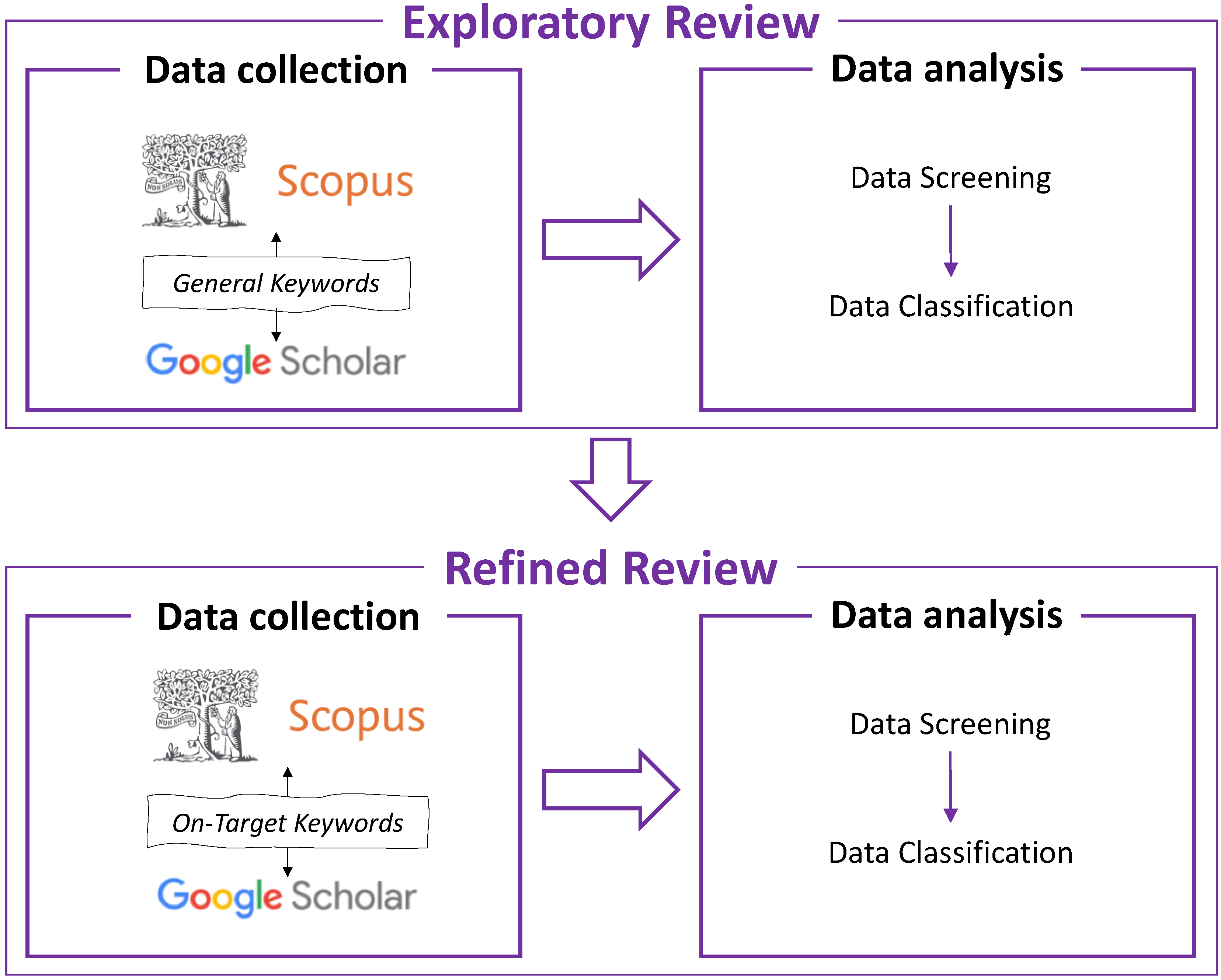

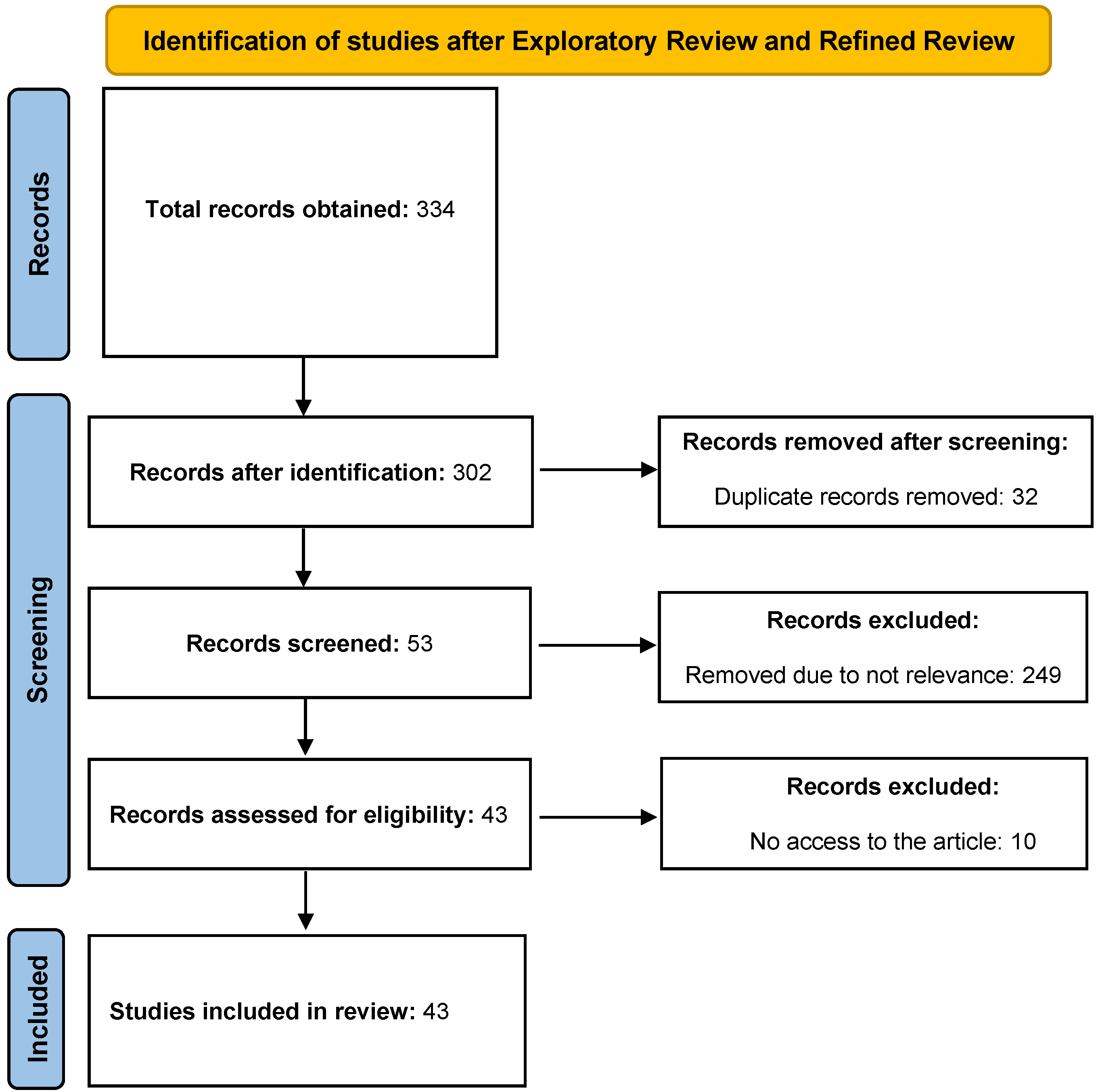

3. Research Methodology

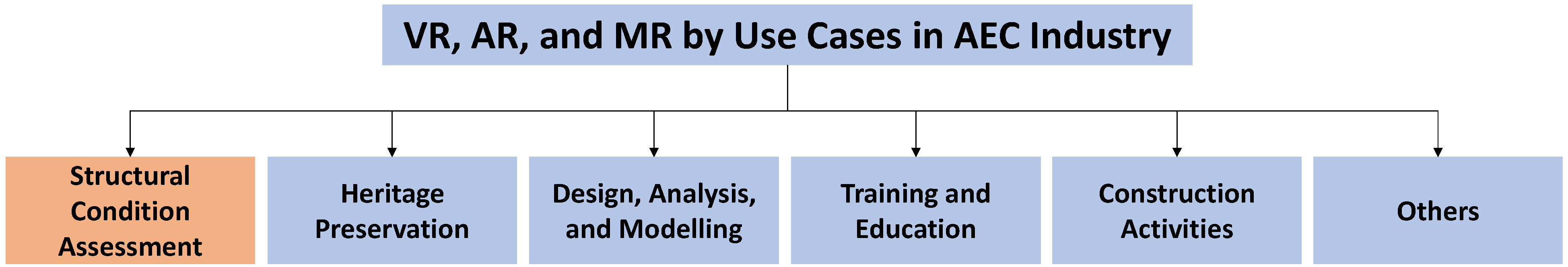

4. Extended Reality for Structural Condition Assessment of Civil Structures

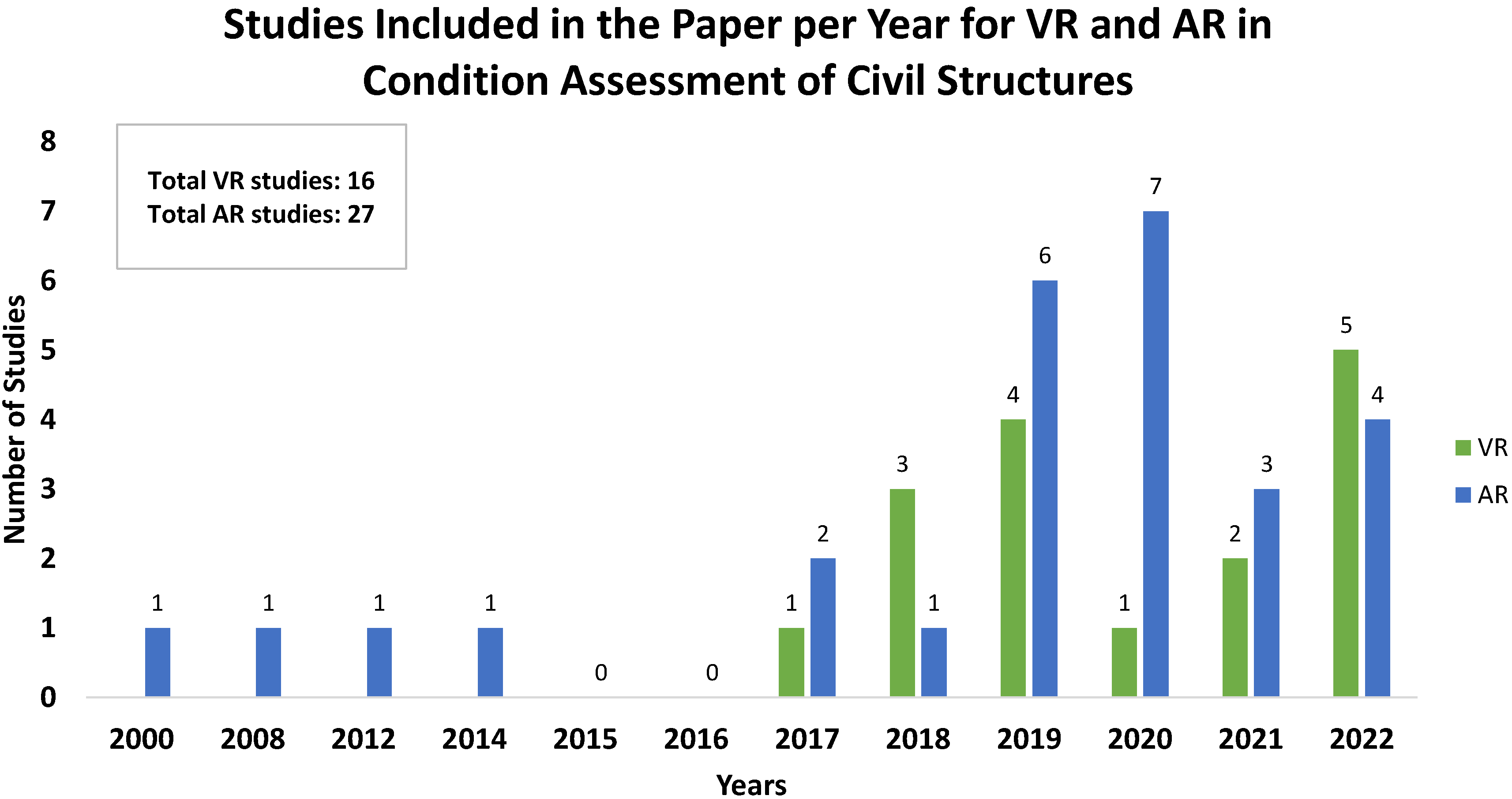

4.1. Virtual Reality for Structural Condition Assessment

4.1.1. 2017 (One Paper)

4.1.2. 2018 (Three Papers)

4.1.3. 2019 (Four Papers)

4.1.4. 2020 (One Paper)

4.1.5. 2021 (Two Papers)

4.1.6. 2022 (Five Papers)

4.2. Augmented Reality for Structural Condition Assessment

4.2.1. 2000 (One Paper)

4.2.2. 2008 (One Paper)

4.2.3. 2012 (One Paper)

4.2.4. 2014 (One Paper)

4.2.5. 2017 (Two Papers)

4.2.6. 2018 (One Paper)

4.2.7. 2019 (Six Papers)

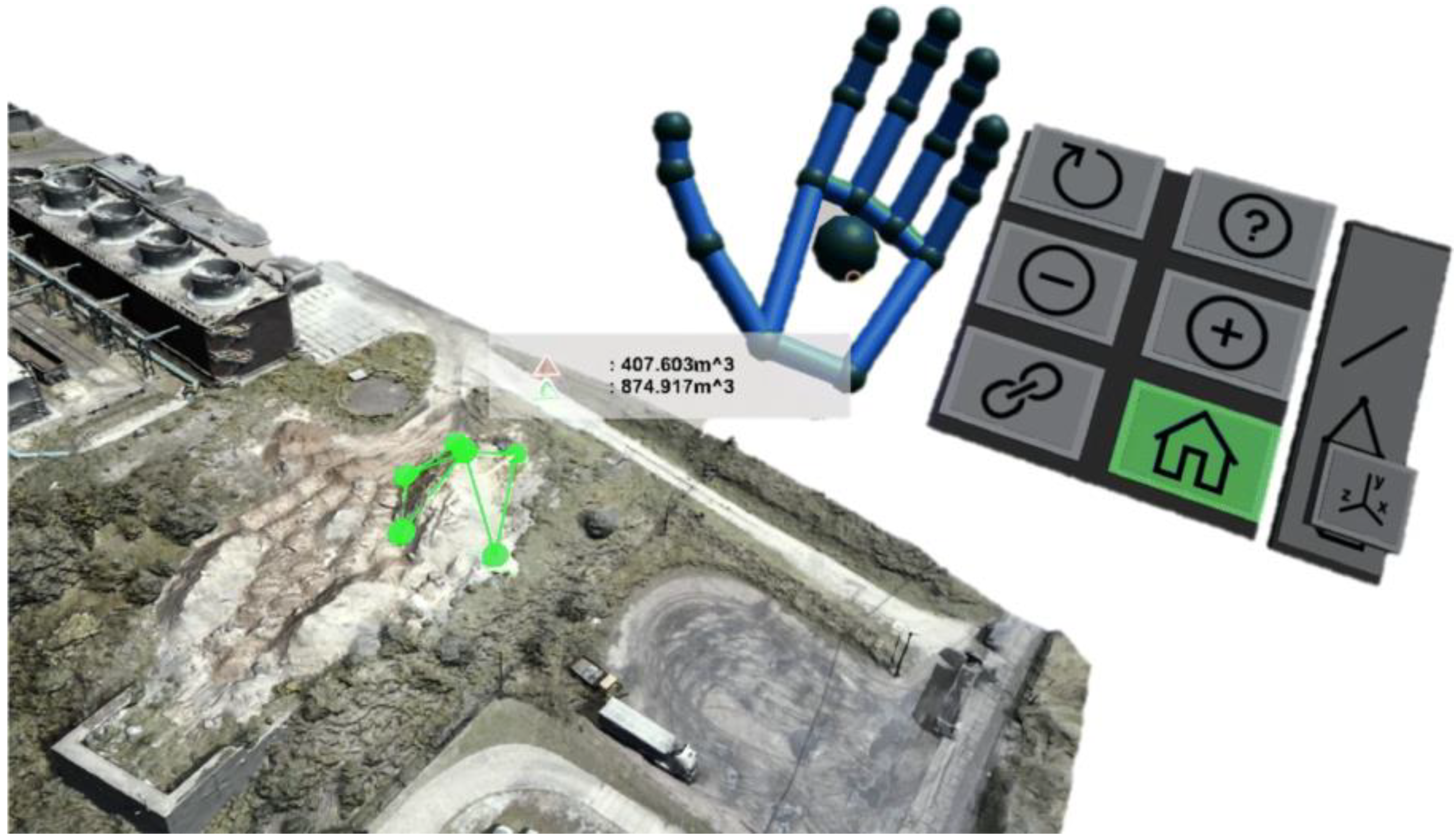

4.2.8. 2020 (Seven Papers)

4.2.9. 2021 (Three Papers)

4.2.10. 2022 (Four Papers)

4.3. Discussion, Recommendations, and Current and Future Trends

5. Summary and Conclusions

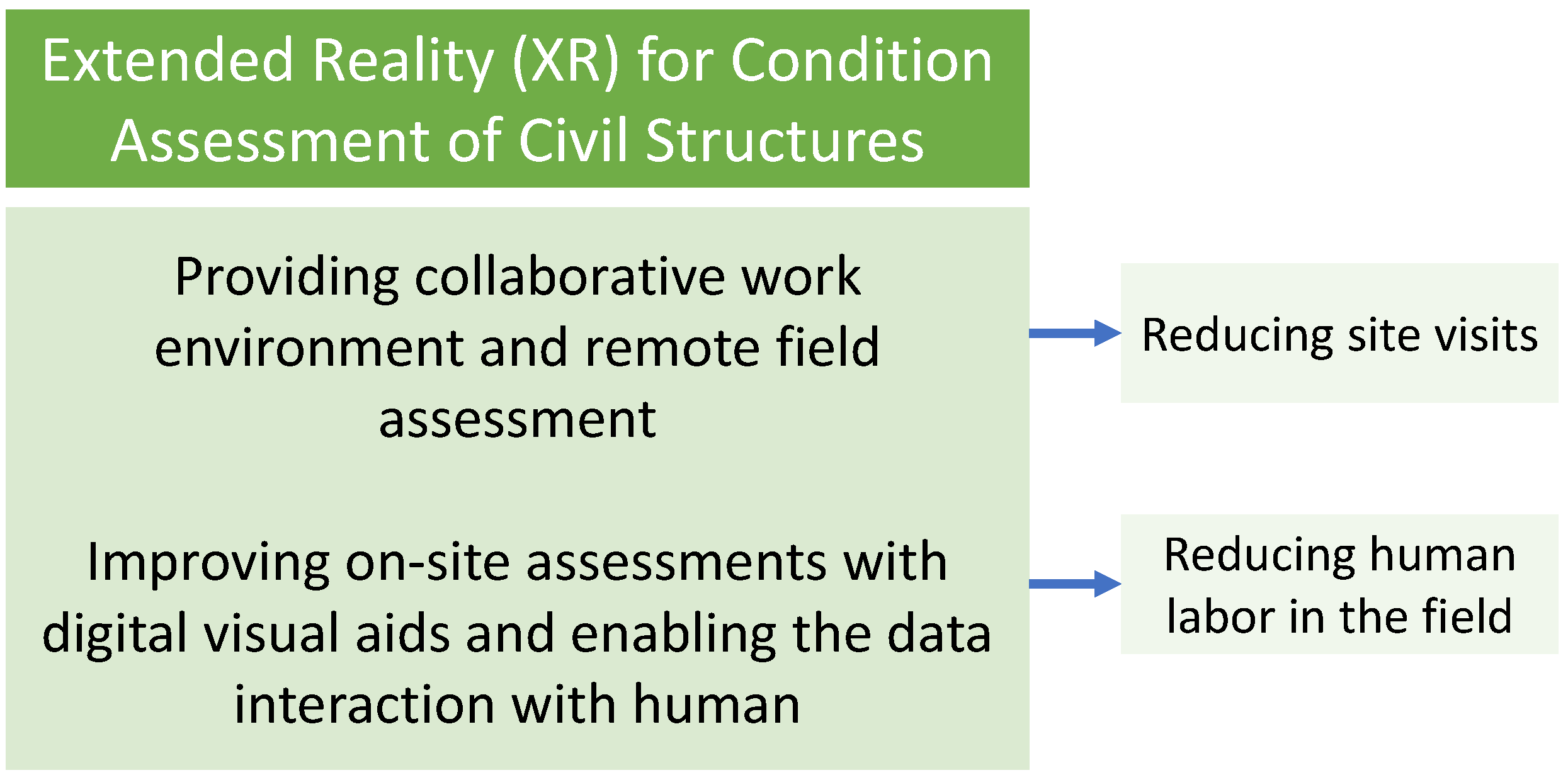

- The reviewed studies generally use XR to conduct field assessments remotely while providing a collaborative work environment for engineers, inspectors, and other third parties. Other studies use XR to reduce human labor in the field and to support inspection by giving inspectors digital visual aids that can interact with the data observed in the real world.

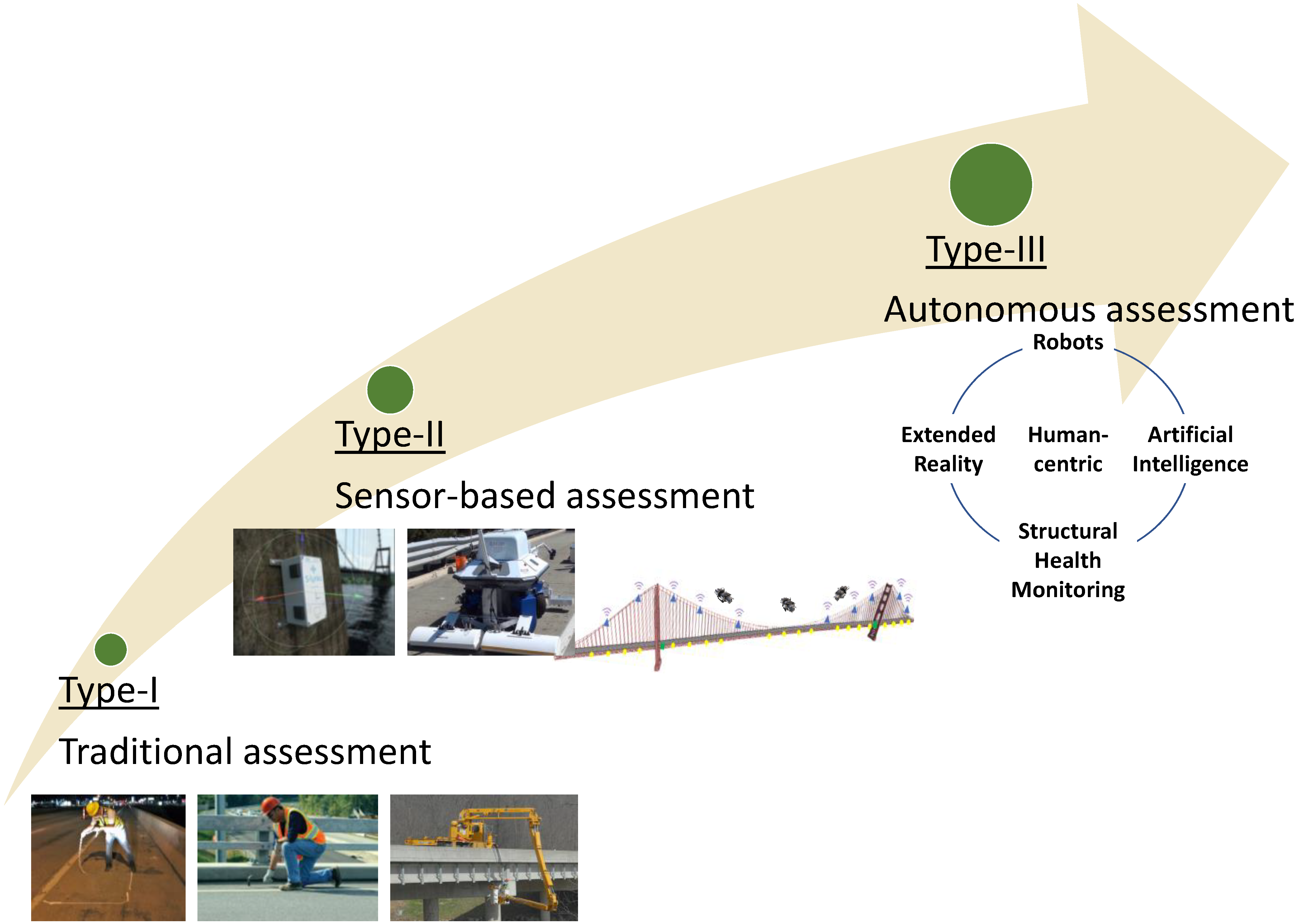

- The first study to use AR for assessment was published in 2000. The first VR studies, by contrast, appeared in 2017. Since 2017, the overall number of studies per year has gradually increased. As XR technologies become more accessible, affordable, and mainstream, more research and development on their use for the condition assessment of civil structures is expected.

- Understanding the benefits of XR over conventional assessment techniques is vital to its use in the field. Comparative studies are critical because they show how XR performs against conventional techniques, which could expedite the adoption of XR in practice. Such studies should therefore employ quantification indices so that the comparison is made on a common basis. These indices should account for, e.g., accuracy, time, and the technique’s practicality.

- Technological advances are expected to play a larger role in condition assessment procedures in the near future. Progress in hardware and software will enable AI, robots, XR, and SHM to work in collaboration with a central unit (a human) toward a fully autonomous approach to the condition assessment of civil structures.

- Future studies could compare VR/AR/MR tools, such as different head-mounted displays (HMDs), for the condition assessment of civil structures. Each HMD could then be listed according to how efficiently it serves various purposes.

Author Contributions

Funding

Conflicts of Interest

References

- Catbas, F.N. Investigation of Global Condition Assessment and Structural Damage Identification of Bridges with Dynamic Testing and Modal Analysis. Ph.D. Dissertation, University of Cincinnati, Cincinnati, OH, USA, 1997. [Google Scholar]

- Zaurin, R.; Catbas, F.N. Computer Vision Oriented Framework for Structural Health Monitoring of Bridges. Conference Proceedings of the Society for Experimental Mechanics Series; Scopus Export 2000s. 5959. 2007. Available online: https://stars.library.ucf.edu/scopus2000/5959 (accessed on 2 November 2022).

- Basharat, A.; Catbas, N.; Shah, M. A Framework for Intelligent Sensor Network with Video Camera for Structural Health Monitoring of Bridges. In Proceedings of the Third IEEE International Conference on Pervasive Computing and Communications Workshops, Kauai, HI, USA, 8–12 March 2005; IEEE: New York, NY, USA, 2005; pp. 385–389. [Google Scholar]

- Catbas, F.N.; Khuc, T. Computer Vision-Based Displacement and Vibration Monitoring without Using Physical Target on Structures. In Bridge Design, Assessment and Monitoring; Taylor Francis: Abingdon, UK, 2018. [Google Scholar]

- Dong, C.-Z.; Catbas, F.N. A Review of Computer Vision–Based Structural Health Monitoring at Local and Global Levels. Struct Health Monit. 2021, 20, 692–743. [Google Scholar] [CrossRef]

- Catbas, F.N.; Grimmelsman, K.A.; Aktan, A.E. Structural Identification of Commodore Barry Bridge. In Nondestructive Evaluation of Highways, Utilities, and Pipelines IV.; Aktan, A.E., Gosselin, S.R., Eds.; SPIE: Bellingham, WA, USA, 2000; pp. 84–97. [Google Scholar]

- Aktan, A.E.; Catbas, F.N.; Grimmelsman, K.A.; Pervizpour, M. Development of a Model Health Monitoring Guide for Major Bridges; Report for Federal Highway Administration: Washington, DC, USA, 2002. [Google Scholar]

- Gul, M.; Catbas, F.N. Damage Assessment with Ambient Vibration Data Using a Novel Time Series Analysis Methodology. J. Struct. Eng. 2011, 137, 1518–1526. [Google Scholar] [CrossRef]

- Gul, M.; Necati Catbas, F. Statistical Pattern Recognition for Structural Health Monitoring Using Time Series Modeling: Theory and Experimental Verifications. Mech. Syst. Signal. Process. 2009, 23, 2192–2204. [Google Scholar] [CrossRef]

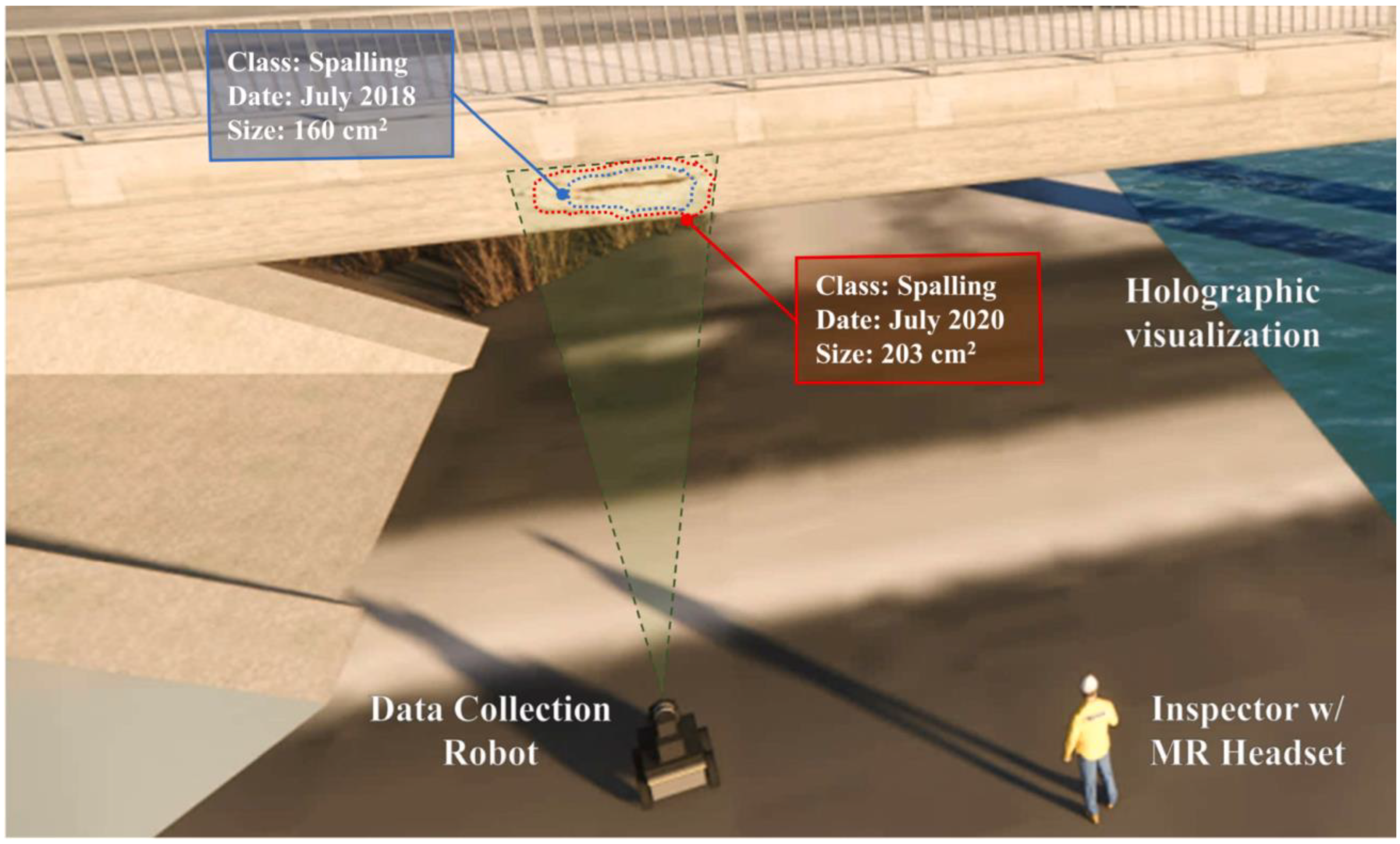

- Karaaslan, E.; Zakaria, M.; Catbas, F.N. Mixed Reality-Assisted Smart Bridge Inspection for Future Smart Cities. In The Rise of Smart Cities; Elsevier: Amsterdam, The Netherlands, 2022; pp. 261–280. [Google Scholar]

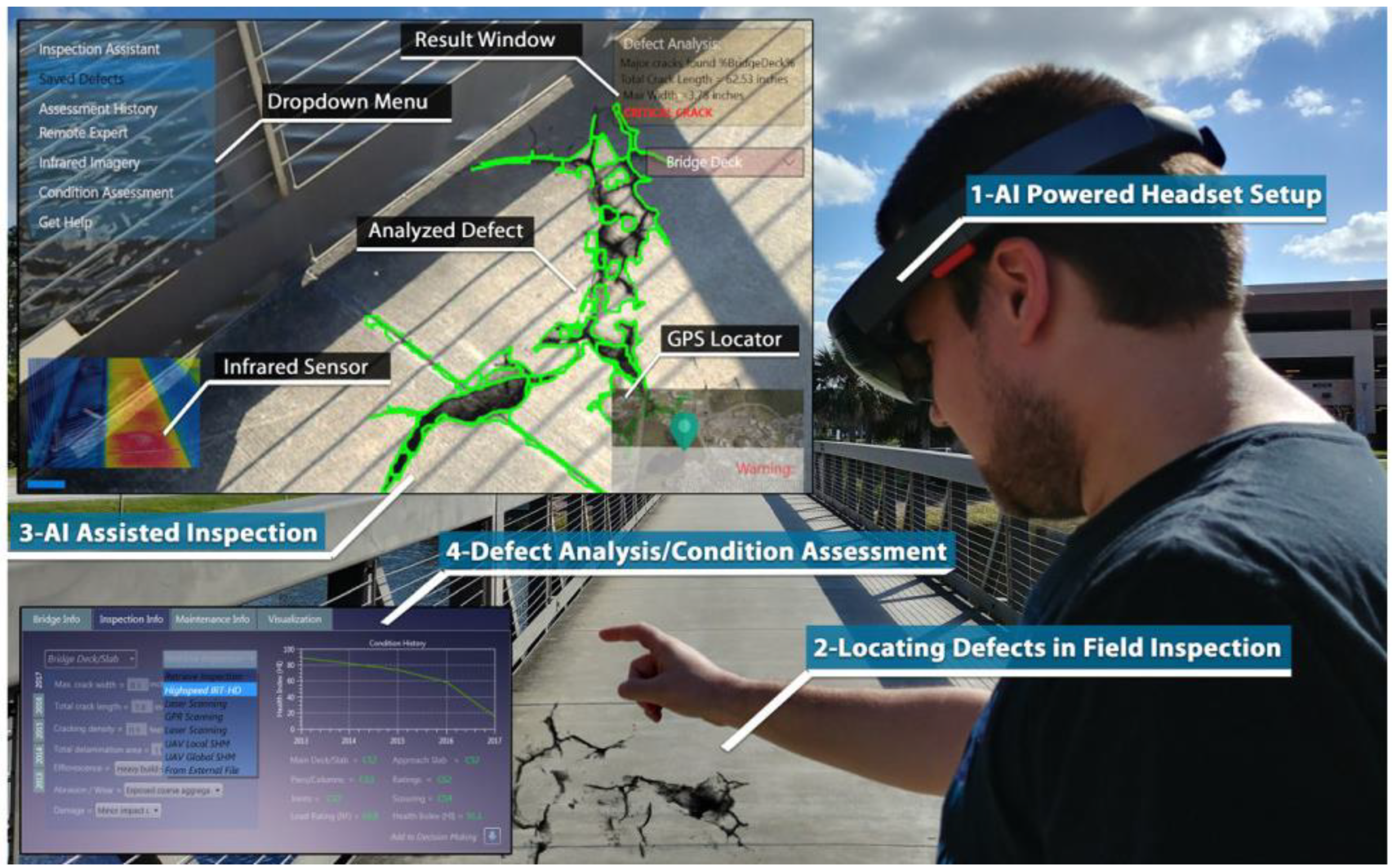

- Karaaslan, E.; Bagci, U.; Catbas, F.N. Artificial Intelligence Assisted Infrastructure Assessment Using Mixed Reality Systems. Transp. Res. Rec. J. Transp. Res. Board 2019, 2673, 413–424. [Google Scholar] [CrossRef]

- Luleci, F.; Li, L.; Chi, J.; Reiners, D.; Cruz-Neira, C.; Catbas, F.N. Structural Health Monitoring of a Foot Bridge in Virtual Reality Environment. Procedia Struct. Integr. 2022, 37, 65–72. [Google Scholar] [CrossRef]

- Catbas, N.; Avci, O. A Review of Latest Trends in Bridge Health Monitoring. In Proceedings of the Institution of Civil Engineers-Bridge Engineering; Thomas Telford Ltd.: London, UK, 2022; pp. 1–16. [Google Scholar] [CrossRef]

- Luleci, F.; Catbas, F.N.; Avci, O. A Literature Review: Generative Adversarial Networks for Civil Structural Health Monitoring. Front. Built Environ. Struct. Sens. Control Asset Manag. 2022, 8, 1027379. [Google Scholar] [CrossRef]

- Luleci, F.; Catbas, F.N.; Avci, O. Generative Adversarial Networks for Labeled Acceleration Data Augmentation for Structural Damage Detection. J. Civ. Struct. Health Monit. 2022. [Google Scholar] [CrossRef]

- Luleci, F.; Catbas, F.N.; Avci, O. Generative Adversarial Networks for Data Generation in Structural Health Monitoring. Front Built Env. 2022, 8, 6644. [Google Scholar] [CrossRef]

- Luleci, F.; Catbas, F.N.; Avci, O. Generative Adversarial Networks for Labelled Vibration Data Generation. In Special Topics in Structural Dynamics & Experimental Techniques; Conference Proceedings of the Society for Experimental Mechanics Series; Springer: Berlin/Heidelberg, Germany, 2023; Volume 5, pp. 41–50. [Google Scholar]

- Luleci, F.; Catbas, N.; Avci, O. Improved Undamaged-to-Damaged Acceleration Response Translation for Structural Health Monitoring. Eng. Appl. Artif. Intell. 2022. [Google Scholar]

- Luleci, F.; Catbas, F.N.; Avci, O. CycleGAN for Undamaged-to-Damaged Domain Translation for Structural Health Monitoring and Damage Detection. arXiv 2022, arXiv:2202.07831. [Google Scholar]

- Sun, L.; Shang, Z.; Xia, Y.; Bhowmick, S.; Nagarajaiah, S. Review of Bridge Structural Health Monitoring Aided by Big Data and Artificial Intelligence: From Condition Assessment to Damage Detection. J. Struct. Eng. 2020, 146, 04020073. [Google Scholar] [CrossRef]

- Aktan, A.E.; Farhey, D.N.; Brown, D.L.; Dalal, V.; Helmicki, A.J.; Hunt, V.J.; Shelley, S.J. Condition Assessment for Bridge Management. J. Infrastruct. Syst. 1996, 2, 108–117. [Google Scholar] [CrossRef]

- Housner, G.W.; Bergman, L.A.; Caughey, T.K.; Chassiakos, A.G.; Claus, R.O.; Masri, S.F.; Skelton, R.E.; Soong, T.T.; Spencer, B.F.; Yao, J.T.P. Structural Control: Past, Present, and Future. J. Eng. Mech. 1997, 123, 897–971. [Google Scholar] [CrossRef]

- Farrar, C.R.; Worden, K. An Introduction to Structural Health Monitoring. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2007, 365, 303–315. [Google Scholar] [CrossRef] [PubMed]

- Li, H.-N.; Ren, L.; Jia, Z.-G.; Yi, T.-H.; Li, D.-S. State-of-the-Art in Structural Health Monitoring of Large and Complex Civil Infrastructures. J. Civ. Struct. Health Monit. 2016, 6, 3–16. [Google Scholar] [CrossRef]

- Avci, O.; Abdeljaber, O.; Kiranyaz, S.; Hussein, M.; Gabbouj, M.; Inman, D.J. A Review of Vibration-Based Damage Detection in Civil Structures: From Traditional Methods to Machine Learning and Deep Learning Applications. Mech. Syst. Signal. Process. 2021, 147, 107077. [Google Scholar] [CrossRef]

- Luleci, F.; AlGadi, A.; Debees, M.; Dong, C.Z.; Necati Catbas, F. Investigation of Comparative Analysis of a Multi-Span Prestressed Concrete Highway Bridge. In Bridge Safety, Maintenance, Management, Life-Cycle, Resilience and Sustainability; CRC Press: London, UK, 2022; pp. 1433–1437. [Google Scholar]

- Balayssac, J.-P.; Garnier, V. Non-Destructive Testing and Evaluation of Civil Engineering Structures; Elsevier: Amsterdam, The Netherlands, 2018. [Google Scholar]

- Schabowicz, K. Non-Destructive Testing of Materials in Civil Engineering. Materials 2019, 12, 3237. [Google Scholar] [CrossRef]

- Gucunski, N.; Romero, F.; Kruschwitz, S.; Feldmann, R.; Abu-Hawash, A.; Dunn, M. Multiple Complementary Nondestructive Evaluation Technologies for Condition Assessment of Concrete Bridge Decks. Transp. Res. Rec. J. Transp. Res. Board 2010, 2201, 34–44. [Google Scholar] [CrossRef]

- Scott, M.; Rezaizadeh, A.; Delahaza, A.; Santos, C.G.; Moore, M.; Graybeal, B.; Washer, G. A Comparison of Nondestructive Evaluation Methods for Bridge Deck Assessment. NDT E Int. 2003, 36, 245–255. [Google Scholar] [CrossRef]

- Stanley, R.K.; Moore, P.O.; McIntire, P. Nondestructive Testing Handbook: Special Nondestructive Testing Methods. In Nondestructive Testing Handbook, 2nd ed.; 1995; Available online: file:///C:/Users/MDPI/Downloads/%D9%87%D9%86%D8%AF%D8%A8%D9%88%DA%A9-%D8%AA%D8%B3%D8%AA-%D8%BA%DB%8C%D8%B1%D9%85%D8%AE%D8%B1%D8%A8.pdf (accessed on 2 November 2022).

- Feng, D.; Feng, M.Q. Computer Vision for SHM of Civil Infrastructure: From Dynamic Response Measurement to Damage Detection—A Review. Eng. Struct. 2018, 156, 105–117. [Google Scholar] [CrossRef]

- Kaartinen, E.; Dunphy, K.; Sadhu, A. LiDAR-Based Structural Health Monitoring: Applications in Civil Infrastructure Systems. Sensors 2022, 22, 4610. [Google Scholar] [CrossRef] [PubMed]

- van Nguyen, L.; Gibb, S.; Pham, H.X.; La, H.M. A Mobile Robot for Automated Civil Infrastructure Inspection and Evaluation. In Proceedings of the 2018 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Philadelphia, PA, USA, 6–8 August 2018; IEEE: New York, NY, USA, 2018; pp. 1–6. [Google Scholar]

- Zhu, D.; Guo, J.; Cho, C.; Wang, Y.; Lee, K.-M. Wireless Mobile Sensor Network for the System Identification of a Space Frame Bridge. IEEE ASME Trans. Mechatron. 2012, 17, 499–507. [Google Scholar] [CrossRef]

- McLaughlin, E.; Charron, N.; Narasimhan, S. Automated Defect Quantification in Concrete Bridges Using Robotics and Deep Learning. J. Comput. Civ. Eng. 2020, 34, 04020029. [Google Scholar] [CrossRef]

- Ribeiro, D.; Santos, R.; Cabral, R.; Saramago, G.; Montenegro, P.; Carvalho, H.; Correia, J.; Calçada, R. Non-Contact Structural Displacement Measurement Using Unmanned Aerial Vehicles and Video-Based Systems. Mech. Syst. Signal. Process. 2021, 160, 107869. [Google Scholar] [CrossRef]

- Stalker, R.; Smith, I. Augmented Reality Applications to Structural Monitoring. In Artificial Intelligence in Structural Engineering; Springer: Berlin/Heidelberg, Germany, 1998; pp. 479–483. [Google Scholar]

- Mi, K.; Xiangyu, W.; Peter, L.; Heng, L.; Shih--Chung, K. Virtual Reality for the Built Environment: A Critical Review of Recent Advances. J. Inf. Technol. Constr. 2013, 18, 279–305. [Google Scholar]

- Rankohi, S.; Waugh, L. Review and Analysis of Augmented Reality Literature for Construction Industry. Vis. Eng. 2013, 1, 9. [Google Scholar] [CrossRef]

- Chi, H.-L.; Kang, S.-C.; Wang, X. Research Trends and Opportunities of Augmented Reality Applications in Architecture, Engineering, and Construction. Autom. Constr. 2013, 33, 116–122. [Google Scholar] [CrossRef]

- Agarwal, S. Review on Application of Augmented Reality in Civil Engineering. In Proceedings of the Review on Application of Augmented Reality in Civil Engineering, New Delhi, India, February 2016; pp. 68–71. [Google Scholar]

- Wang, P.; Wu, P.; Wang, J.; Chi, H.-L.; Wang, X. A Critical Review of the Use of Virtual Reality in Construction Engineering Education and Training. Int. J. Env. Res. Public Health 2018, 15, 1204. [Google Scholar] [CrossRef]

- Zhang, Y.; Liu, H.; Kang, S.-C.; Al-Hussein, M. Virtual Reality Applications for the Built Environment: Research Trends and Opportunities. Autom. Constr. 2020, 118, 103311. [Google Scholar] [CrossRef]

- Gębczyńska-Janowicz, A. Virtual Reality Technology in Architectural Education. World Trans. Eng. Technol. Educ. 2020, 18, 24–28. [Google Scholar]

- Wen, J.; Gheisari, M. Using Virtual Reality to Facilitate Communication in the AEC Domain: A Systematic Review. Constr. Innov. 2020, 20, 509–542. [Google Scholar] [CrossRef]

- Safikhani, S.; Keller, S.; Schweiger, G.; Pirker, J. Immersive Virtual Reality for Extending the Potential of Building Information Modeling in Architecture, Engineering, and Construction Sector: Systematic Review. Int. J. Digit. Earth 2022, 15, 503–526. [Google Scholar] [CrossRef]

- Sidani, A.; Dinis, F.M.; Sanhudo, L.; Duarte, J.; Santos Baptista, J.; Poças Martins, J.; Soeiro, A. Recent Tools and Techniques of BIM-Based Virtual Reality: A Systematic Review. Arch. Comput. Methods Eng. 2021, 28, 449–462. [Google Scholar] [CrossRef]

- Sidani, A.; Matoseiro Dinis, F.; Duarte, J.; Sanhudo, L.; Calvetti, D.; Santos Baptista, J.; Poças Martins, J.; Soeiro, A. Recent Tools and Techniques of BIM-Based Augmented Reality: A Systematic Review. J. Build. Eng. 2021, 42, 102500. [Google Scholar] [CrossRef]

- Xu, J.; Moreu, F. A Review of Augmented Reality Applications in Civil Infrastructure During the 4th Industrial Revolution. Front. Built Env. 2021, 7, 732. [Google Scholar] [CrossRef]

- Hajirasouli, A.; Banihashemi, S.; Drogemuller, R.; Fazeli, A.; Mohandes, S.R. Augmented Reality in Design and Construction: Thematic Analysis and Conceptual Frameworks. Constr. Innov. 2022, 22, 412–443. [Google Scholar] [CrossRef]

- Nassereddine, H.; Hanna, A.S.; Veeramani, D.; Lotfallah, W. Augmented Reality in the Construction Industry: Use-Cases, Benefits, Obstacles, and Future Trends. Front Built Env. 2022, 8, 730094. [Google Scholar] [CrossRef]

- Behzadi, A. Using Augmented and Virtual Reality Technology in the Construction Industry. Am. J. Eng. Res. AJER 2016, 5, 350–353. [Google Scholar]

- Li, X.; Yi, W.; Chi, H.-L.; Wang, X.; Chan, A.P.C. A Critical Review of Virtual and Augmented Reality (VR/AR) Applications in Construction Safety. Autom. Constr. 2018, 86, 150–162. [Google Scholar] [CrossRef]

- Ahmed, S. A Review on Using Opportunities of Augmented Reality and Virtual Reality in Construction Project Management. Organ. Technol. Manag. Constr. Int. J. 2019, 11, 1839–1852. [Google Scholar] [CrossRef]

- Davila Delgado, J.M.; Oyedele, L.; Demian, P.; Beach, T. A Research Agenda for Augmented and Virtual Reality in Architecture, Engineering and Construction. Adv. Eng. Inform. 2020, 45, 101122. [Google Scholar] [CrossRef]

- Noghabaei, M.; Heydarian, A.; Balali, V.; Han, K. Trend Analysis on Adoption of Virtual and Augmented Reality in the Architecture, Engineering, and Construction Industry. Data 2020, 5, 26. [Google Scholar] [CrossRef]

- Alaa, M.; Wefki, H. Augmented Reality and Virtual Reality in Construction Industry. In Proceedings of the 5th IUGRC International Undergraduate Research Conference, Cairo, Egypt, 9–12 August 2021. [Google Scholar]

- Albahbah, M.; Kıvrak, S.; Arslan, G. Application Areas of Augmented Reality and Virtual Reality in Construction Project Management: A Scoping Review. J. Constr. Eng. Manag. Innov. 2021, 4, 151–172. [Google Scholar] [CrossRef]

- Zhu, Y.; Li, N. Virtual and Augmented Reality Technologies for Emergency Management in the Built Environments: A State-of-the-Art Review. J. Saf. Sci. Resil. 2021, 2, 1–10. [Google Scholar] [CrossRef]

- Wu, S.; Hou, L.; Zhang, G. Integrated Application of BIM and EXtended Reality Technology: A Review, Classification and Outlook. In Part of the Lecture Notes in Civil Engineering; Springer: Berlin/Heidelberg, Germany, 2021; Volume 98, pp. 1227–1236. [Google Scholar]

- Alizadehsalehi, S.; Hadavi, A.; Huang, J.C. From BIM to Extended Reality in AEC Industry. Autom. Constr. 2020, 116, 103254. [Google Scholar] [CrossRef]

- Milgram, P.; Takemura, H.; Utsumi, A.; Kishino, F. Augmented Reality: A Class of Displays on the Reality-Virtuality Continuum. In Telemanipulator and Telepresence Technologies; Das, H., Ed.; SPIE: Bellingham, WA, USA, 1995; pp. 282–292. [Google Scholar]

- Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329. [Google Scholar]

- Cruz-Neira, C.; Sandin, D.J.; DeFanti, T.A.; Kenyon, R.v.; Hart, J.C. The CAVE: Audio Visual Experience Automatic Virtual Environment. Commun. ACM 1992, 35, 64–72. [Google Scholar] [CrossRef]

- Brigham, T.J. Reality Check: Basics of Augmented, Virtual, and Mixed Reality. Med. Ref. Serv. Q 2017, 36, 171–178. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. Syst. Rev. 2021, 10, 89. [Google Scholar] [CrossRef]

- Bekele, M.K.; Pierdicca, R.; Frontoni, E.; Malinverni, E.S.; Gain, J. A Survey of Augmented, Virtual, and Mixed Reality for Cultural Heritage. J. Comput. Cult. Herit. 2018, 11, 1–36. [Google Scholar] [CrossRef]

- Amakawa, J.; Westin, J. New Philadelphia: Using Augmented Reality to Interpret Slavery and Reconstruction Era Historical Sites. Int. J. Herit. Stud. 2018, 24, 315–331. [Google Scholar] [CrossRef]

- Debailleux, L.; Hismans, G.; Duroisin, N. Exploring Cultural Heritage Using Virtual Reality. In Digital Cultural Heritage; Springer: Cham, Switzerland, 2018; pp. 289–303. [Google Scholar]

- Anoffo, Y.M.; Aymerich, E.; Medda, D. Virtual Reality Experience for Interior Design Engineering Applications. In Proceedings of the 2018 26th Telecommunications Forum (TELFOR), Belgrade, Serbia, 20–21 November 2018; IEEE: New York, NY, USA, 2018; pp. 1–4. [Google Scholar]

- Berg, L.P.; Vance, J.M. An Industry Case Study: Investigating Early Design Decision Making in Virtual Reality. J. Comput. Inf. Sci. Eng. 2017, 17, 011001. [Google Scholar] [CrossRef]

- Faas, D.; Vance, J.M. Interactive Deformation Through Mesh-Free Stress Analysis in Virtual Reality. In Proceedings of the 28th Computers and Information in Engineering Conference, Parts A and B., ASMEDC, Sarajevo, Bosnia and Herzegovina, 1 January 2008; Volume 3, pp. 1605–1611. [Google Scholar]

- Wolfartsberger, J. Analyzing the Potential of Virtual Reality for Engineering Design Review. Autom. Constr. 2019, 104, 27–37. [Google Scholar] [CrossRef]

- Li, Y.; Karim, M.M.; Qin, R. A Virtual-Reality-Based Training and Assessment System for Bridge Inspectors With an Assistant Drone. IEEE Trans. Hum. Mach. Syst. 2022, 52, 591–601. [Google Scholar] [CrossRef]

- Wu, W.; Tesei, A.; Ayer, S.; London, J.; Luo, Y.; Gunji, V. Closing the Skills Gap: Construction and Engineering Education Using Mixed Reality—A Case Study. In Proceedings of the 2018 IEEE Frontiers in Education Conference (FIE), San Jose, CA, USA, 3–6 October 2018; IEEE: New York, NY, USA, 2018; pp. 1–5. [Google Scholar]

- Luo, X.; Mojica Cabico, C.D. Development and Evaluation of an Augmented Reality Learning Tool for Construction Engineering Education. In Proceedings of the Construction Research Congress 2018, New Orleans, LO, USA, 2–4 April 2018; American Society of Civil Engineers: Reston, VA, USA, 2018; pp. 149–159. [Google Scholar]

- Zhao, X.; Li, M.; Sun, Z.; Zhao, Y.; Gai, Y.; Wang, J.; Huang, C.; Yu, L.; Wang, S.; Zhang, M.; et al. Intelligent Construction and Management of Landscapes through Building Information Modeling and Mixed Reality. Appl. Sci. 2022, 12, 7118. [Google Scholar] [CrossRef]

- DaValle, A.; Azhar, S. An Investigation of Mixed Reality Technology for Onsite Construction Assembly. MATEC Web Conf. 2020, 312, 06001. [Google Scholar] [CrossRef]

- Mutis, I.; Ambekar, A. Challenges and Enablers of Augmented Reality Technology for in Situ Walkthrough Applications. J. Inf. Technol. Constr. 2020, 25, 55–71. [Google Scholar] [CrossRef]

- Davidson, J.; Fowler, J.; Pantazis, C.; Sannino, M.; Walker, J.; Sheikhkhoshkar, M.; Rahimian, F.P. Integration of VR with BIM to Facilitate Real-Time Creation of Bill of Quantities during the Design Phase: A Proof of Concept Study. Front. Eng. Manag. 2020, 7, 396–403. [Google Scholar] [CrossRef]

- Klinker, G.; Stricker, D.; Reiners, D. Augmented Reality for Exterior Construction Applications. In Fundamentals of Wearable Computers and Augmented Reality; CRC Press: Boca Raton, FL, USA, 2000. [Google Scholar]

- Khanal, S.; Medasetti, U.S.; Mashal, M.; Savage, B.; Khadka, R. Virtual and Augmented Reality in the Disaster Management Technology: A Literature Review of the Past 11 Years. Front. Virtual Real. 2022, 3, 843195. [Google Scholar] [CrossRef]

- Chudikova, B.; Faltejsek, M. Advantages of Using Virtual Reality and Building Information Modelling When Assessing Suitability of Various Heat Sources, Including Renewable Energy Sources. IOP Conf. Ser. Mater. Sci. Eng. 2019, 542, 012022. [Google Scholar] [CrossRef]

- Al-Adhami, M.; Ma, L.; Wu, S. Exploring Virtual Reality in Construction, Visualization and Building Performance Analysis. In Proceedings of the 2018 Proceedings of the 35th ISARC, Berlin, Germany, 20–25 July 2018; pp. 960–967. [Google Scholar]

- Jauregui, D.V.; White, K.R. Bridge Inspection Using Virtual Realty and Photogrammetry. 2005. Available online: https://trid.trb.org/view/920024 (accessed on 13 August 2022).

- Baker, D.V.; CHEN, S.-E.; Leontopoulos, A. Visual Inspection Enhancement via Virtual Reality. In Proceedings of the Structural Materials Technology IV—An NDT Conference, Atlantic City, NJ, USA, 1 May 2000. [Google Scholar]

- Trizio, I.; Savini, F.; Ruggieri, A.; Fabbrocino, G. Digital Environment for Remote Visual Inspection and Condition Assessment of Architectural Heritage. In International Workshop on Civil Structural Health Monitoring; Springer: Berlin/Heidelberg, Germany, 2021; pp. 869–888. [Google Scholar]

- Insa-Iglesias, M.; Jenkins, M.D.; Morison, G. 3D Visual Inspection System Framework for Structural Condition Monitoring and Analysis. Autom. Constr. 2021, 128, 103755. [Google Scholar] [CrossRef]

- Fabbrocino, G.; Savini, F.; Marra, A.; Trizio, I. Virtual Investigation of Masonry Arch Bridges: Digital Procedures for Inspection, Diagnostics, and Data Management. In Proceedings of the International Conference of the European Association on Quality Control of Bridges and Structures, Padova, Italy, 29 August–1 September 2021; Springer: Berlin/Heidelberg, Germany, 2022; pp. 979–987. [Google Scholar]

- Jáuregui, D.v.; White, K.R. Implementation of Virtual Reality in Routine Bridge Inspection. Transp. Res. Rec. J. Transp. Res. Board 2003, 1827, 29–35. [Google Scholar] [CrossRef]

- Du, J.; Shi, Y.; Zou, Z.; Zhao, D. CoVR: Cloud-Based Multiuser Virtual Reality Headset System for Project Communication of Remote Users. J. Constr. Eng. Manag. 2018, 144, 04017109. [Google Scholar] [CrossRef]

- Shi, Y.; Du, J.; Tang, P.; Zhao, D. Characterizing the Role of Communications in Teams Carrying Out Building Inspection. In Proceedings of the Construction Research Congress 2018, New Orleans, LO, USA, 2–4 April 2018; American Society of Civil Engineers: Reston, VA, USA, 2018; pp. 554–564. [Google Scholar]

- Omer, M.; Hewitt, S.; Mosleh, M.H.; Margetts, L.; Parwaiz, M. Performance Evaluation of Bridges Using Virtual Reality. In Proceedings of the 6th European Conference on Computational Mechanics (ECCM 6), Glasgow, UK, 11–15 June 2018. [Google Scholar]

- Attard, L.; Debono, C.J.; Valentino, G.; di Castro, M.; Osborne, J.A.; Scibile, L.; Ferre, M. A Comprehensive Virtual Reality System for Tunnel Surface Documentation and Structural Health Monitoring. In Proceedings of the 2018 IEEE International Conference on Imaging Systems and Techniques (IST), Glasgow, UK, 16–18 October 2018; IEEE: New York, NY, USA, 2018; pp. 1–6. [Google Scholar]

- Omer, M.; Margetts, L.; Hadi Mosleh, M.; Hewitt, S.; Parwaiz, M. Use of Gaming Technology to Bring Bridge Inspection to the Office. Struct. Infrastruct. Eng. 2019, 15, 1292–1307. [Google Scholar] [CrossRef]

- Burgos, M.; Castaneda, B.; Aguilar, R. Virtual Reality for the Enhancement of Structural Health Monitoring Experiences in Historical Constructions. In Structural Analysis of Historical Constructions; Springer: Cham, Switzerland, 2019; pp. 429–436. [Google Scholar]

- Lee, J.; Kim, J.; Ahn, J.; Woo, W. Remote Diagnosis of Architectural Heritage Based on 5W1H Model-Based Metadata in Virtual Reality. ISPRS Int. J. Geoinf. 2019, 8, 339. [Google Scholar] [CrossRef]

- Tadeja, S.K.; Rydlewicz, W.; Lu, Y.; Kristensson, P.O.; Bubas, T.; Rydlewicz, M. PhotoTwinVR: An Immersive System for Manipulation, Inspection and Dimension Measurements of the 3D Photogrammetric Models of Real-Life Structures in Virtual Reality. arXiv 2019, arXiv:1911.09958. [Google Scholar]

- Bacco, M.; Barsocchi, P.; Cassara, P.; Germanese, D.; Gotta, A.; Leone, G.R.; Moroni, D.; Pascali, M.A.; Tampucci, M. Monitoring Ancient Buildings: Real Deployment of an IoT System Enhanced by UAVs and Virtual Reality. IEEE Access 2020, 8, 50131–50148. [Google Scholar] [CrossRef]

- Tadeja, S.K.; Lu, Y.; Rydlewicz, M.; Rydlewicz, W.; Bubas, T.; Kristensson, P.O. Exploring Gestural Input for Engineering Surveys of Real-Life Structures in Virtual Reality Using Photogrammetric 3D Models. Multimed. Tools Appl. 2021, 80, 31039–31058. [Google Scholar] [CrossRef]

- Omer, M.; Margetts, L.; Mosleh, M.H.; Cunningham, L.S. Inspection of Concrete Bridge Structures: Case Study Comparing Conventional Techniques with a Virtual Reality Approach. J. Bridge Eng. 2021, 26, 05021010. [Google Scholar] [CrossRef]

- Xia, P.; Xu, F.; Zhu, Q.; Du, J. Human Robot Comparison in Rapid Structural Inspection. In Proceedings of the Construction Research Congress 2022, Arlington, VA, USA, 9–12 March 2022; American Society of Civil Engineers: Reston, VA, USA, 2022; pp. 570–580. [Google Scholar]

- Halder, S.; Afsari, K. Real-Time Construction Inspection in an Immersive Environment with an Inspector Assistant Robot. EPiC Ser. Built Environ. 2022, 3, 379–389. [Google Scholar]

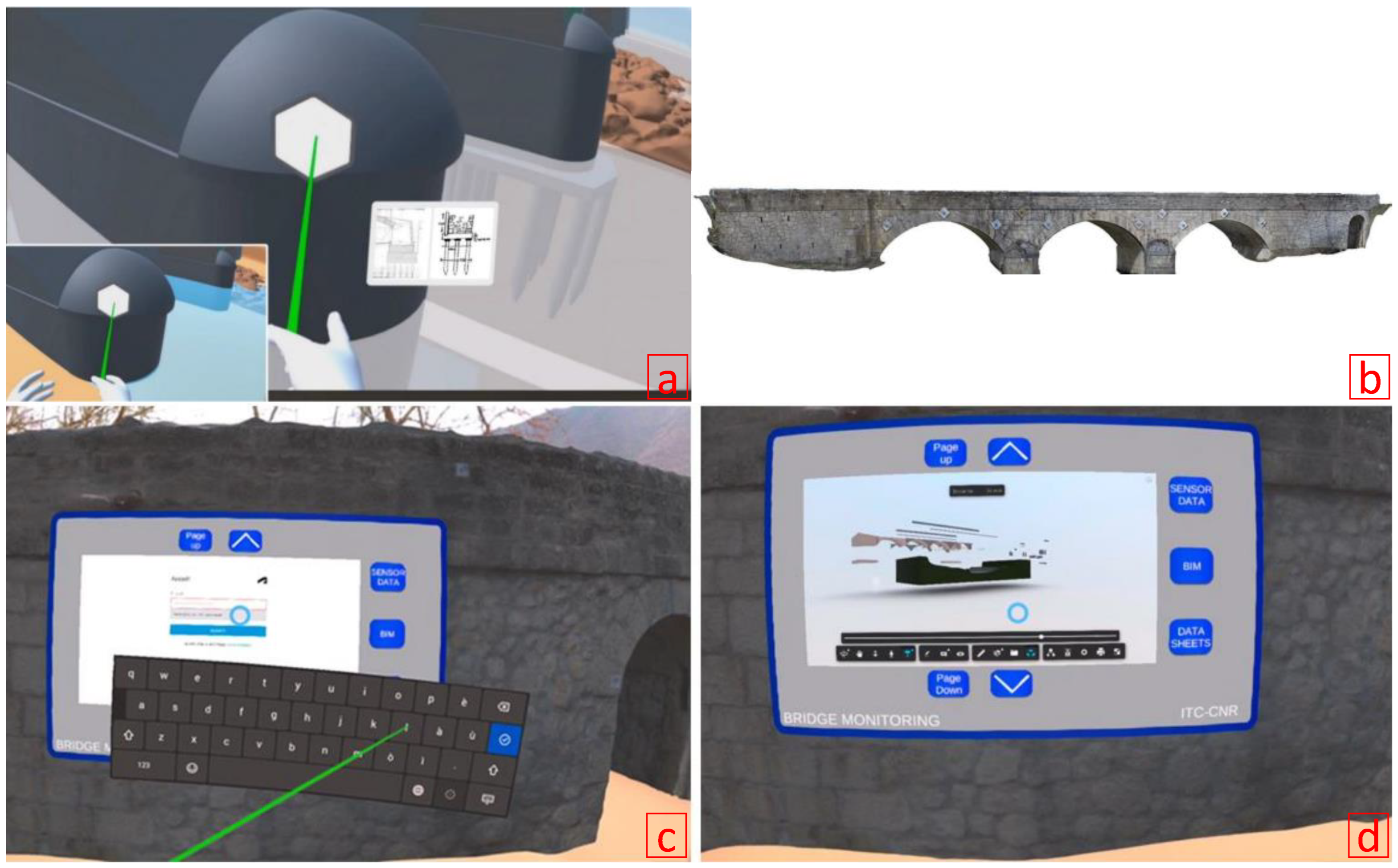

- Savini, F.; Marra, A.; Cordisco, A.; Giallonardo, M.; Fabbrocino, G.; Trizio, I. A Complex Virtual Reality System for the Management and Visualization of Bridge Data. SCIentific RESearch Inf. Technol. Ric. Sci. E Tecnol. Dell’informazione 2022, 12, 49–66. [Google Scholar] [CrossRef]

- Peng, X.; Su, G.; Chen, Z.; Sengupta, R. A Virtual Reality Environment for Developing and Testing Autonomous UAV-Based Structural Inspection. In European Workshop on Structural Health Monitoring; Springer: Cham, Switzerland, 2023; pp. 527–535. [Google Scholar]

- Webster, A.; Feiner, S.; MacIntyre, B.; Massie, W.; Krueger, T. Augmented Reality in Architectural Construction, Inspection, and Renovation. In Proceedings of the ASCE Third Congress on Computing in Civil Engineering, Stanford, CA, USA, 14–16 August 2000. [Google Scholar]

- Shin, D.H.; Dunston, P.S. Evaluation of Augmented Reality in Steel Column Inspection. Autom. Constr. 2009, 18, 118–129. [Google Scholar] [CrossRef]

- Dong, S.; Feng, C.; Kamat, V.R. Sensitivity Analysis of Augmented Reality-Assisted Building Damage Reconnaissance Using Virtual Prototyping. Autom. Constr. 2013, 33, 24–36. [Google Scholar] [CrossRef]

- Teixeira, J.M.; Ferreira, R.; Santos, M.; Teichrieb, V. Teleoperation Using Google Glass and AR, Drone for Structural Inspection. In Proceedings of the 2014 XVI Symposium on Virtual and Augmented Reality, Salvador, Brazil, 12–15 May 2014; IEEE: New York, NY, USA; pp. 28–36. [Google Scholar]

- Zhou, Y.; Luo, H.; Yang, Y. Implementation of Augmented Reality for Segment Displacement Inspection during Tunneling Construction. Autom. Constr. 2017, 82, 112–121. [Google Scholar] [CrossRef]

- Fonnet, A.; Alves, N.; Sousa, N.; Guevara, M.; Magalhaes, L. Heritage BIM Integration with Mixed Reality for Building Preventive Maintenance. In Proceedings of the 2017 24o Encontro Português de Computação Gráfica e Interação (EPCGI), Guimaraes, Portugal, 12–13 October 2017; IEEE: New York, NY, USA, 2017; pp. 1–7. [Google Scholar]

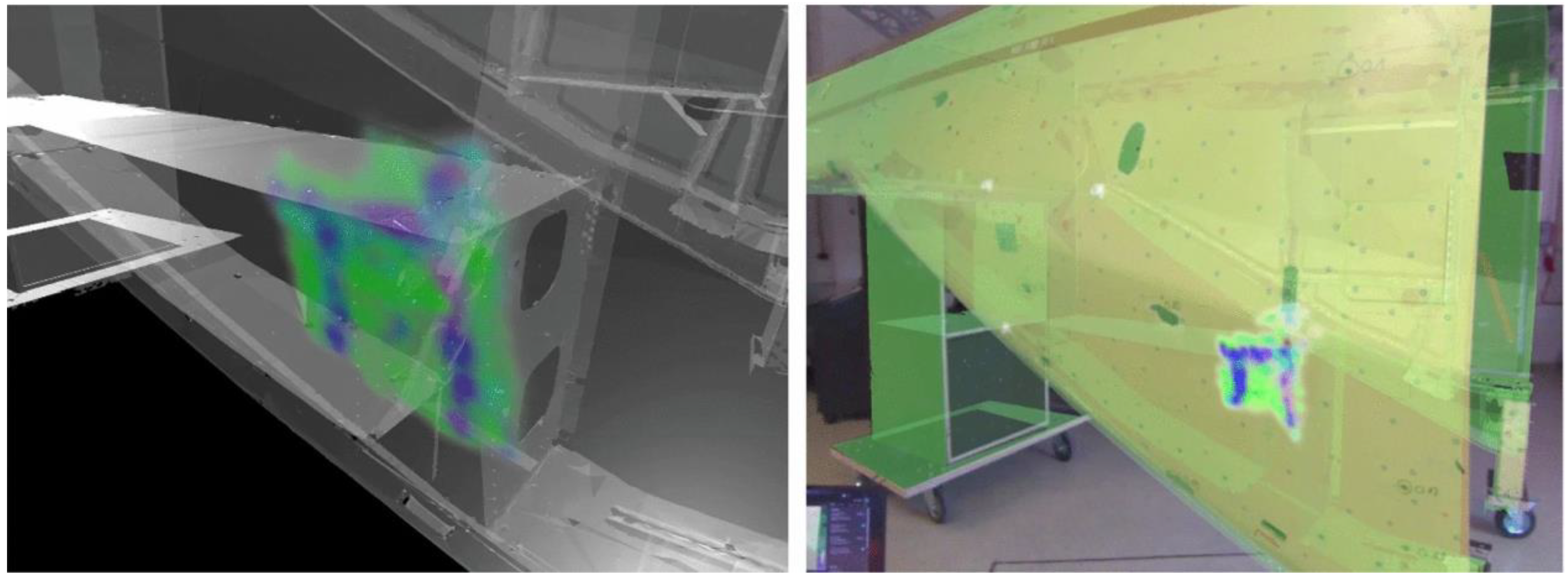

- Schickert, M.; Koch, C.; Bonitz, F. Prospects for Integrating Augmented Reality Visualization of Nondestructive Testing Results into Model-Based Infrastructure Inspection. In Proceedings of the NDE/NDT for Highways & Bridges: SMT 2018, New Brunswick, NJ, USA, 27 August 2018. [Google Scholar]

- Napolitano, R.; Liu, Z.; Sun, C.; Glisic, B. Virtual Tours, Augmented Reality, and Informational Modeling for Visual Inspection and Structural Health Monitoring (Conference Presentation). In Proceedings of the Sensors and Smart Structures Technologies for Civil, Mechanical, and Aerospace Systems 2019, Denver, CO, USA, 4–7 March 2019; Wang, K.-W., Sohn, H., Huang, H., Lynch, J.P., Eds.; SPIE: Bellingham, WA, USA, 2019; p. 6. [Google Scholar]

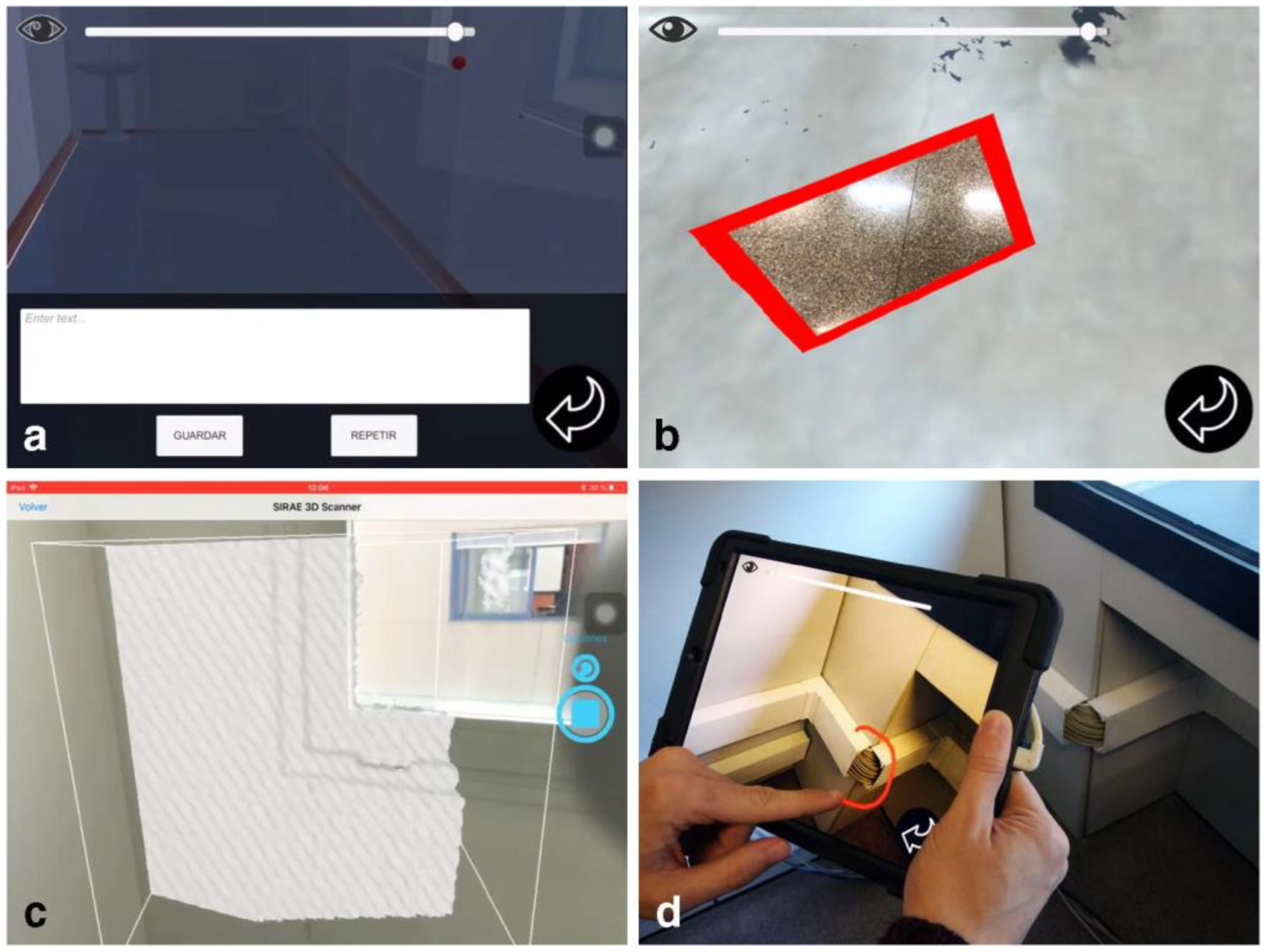

- García-Pereira, I.; Portalés, C.; Gimeno, J.; Casas, S. A Collaborative Augmented Reality Annotation Tool for the Inspection of Prefabricated Buildings. Multimed. Tools Appl. 2020, 79, 6483–6501. [Google Scholar] [CrossRef]

- Brito, C.; Alves, N.; Magalhães, L.; Guevara, M. Bim Mixed Reality Tool for the Inspection of Heritage Buildings. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, IV-2/W6, 25–29. [Google Scholar] [CrossRef]

- Yamaguchi, T.; Shibuya, T.; Kanda, M.; Yasojima, A. Crack Inspection Support System for Concrete Structures Using Head Mounted Display in Mixed Reality Space. In Proceedings of the 2019 58th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Hiroshima, Japan, 10–13 September 2019; IEEE: New York, NY, USA, 2019; pp. 791–796. [Google Scholar]

- Dang, N.; Shim, C. BIM-Based Innovative Bridge Maintenance System Using Augmented Reality Technology. In Proceedings of the CIGOS 2019, Innovation for Sustainable Infrastructure, Hanoi, Vietnam, 31 October–1 November 2019; Springer: Singapore, 2020; pp. 1217–1222. [Google Scholar]

- Van Dam, J.; Krasne, A.; Gabbard, J.L. Drone-Based Augmented Reality Platform for Bridge Inspection: Effect of AR Cue Design on Visual Search Tasks. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; IEEE: New York, NY, USA, 2020; pp. 201–204. [Google Scholar]

- Kilic, G.; Caner, A. Augmented Reality for Bridge Condition Assessment Using Advanced Non-Destructive Techniques. Struct. Infrastruct. Eng. 2021, 17, 977–989. [Google Scholar] [CrossRef]

- Wang, S.; Zargar, S.A.; Yuan, F.-G. Augmented Reality for Enhanced Visual Inspection through Knowledge-Based Deep Learning. Struct. Health Monit. 2021, 20, 426–442.

- Liu, D.; Xia, X.; Chen, J.; Li, S. Integrating Building Information Model and Augmented Reality for Drone-Based Building Inspection. J. Comput. Civ. Eng. 2021, 35, 04020073.

- Rehbein, J.; Lorenz, S.-J.; Holtmannspötter, J.; Valeske, B. 3D-Visualization of Ultrasonic NDT Data Using Mixed Reality. J. Nondestr. Eval. 2022, 41, 26.

- Mascareñas, D.D.; Ballor, J.P.; McClain, O.L.; Mellor, M.A.; Shen, C.-Y.; Bleck, B.; Morales, J.; Yeong, L.-M.R.; Narushof, B.; Shelton, P.; et al. Augmented Reality for Next Generation Infrastructure Inspections. Struct. Health Monit. 2021, 20, 1957–1979.

- Maharjan, D.; Agüero, M.; Mascarenas, D.; Fierro, R.; Moreu, F. Enabling Human–Infrastructure Interfaces for Inspection Using Augmented Reality. Struct. Health Monit. 2021, 20, 1980–1996.

- Moreu, F.; Kaveh, M. Bridge Cracks Monitoring: Detection, Measurement, and Comparison Using Augmented Reality. 2021. Available online: https://digitalcommons.lsu.edu/transet_pubs/125/ (accessed on 2 November 2022).

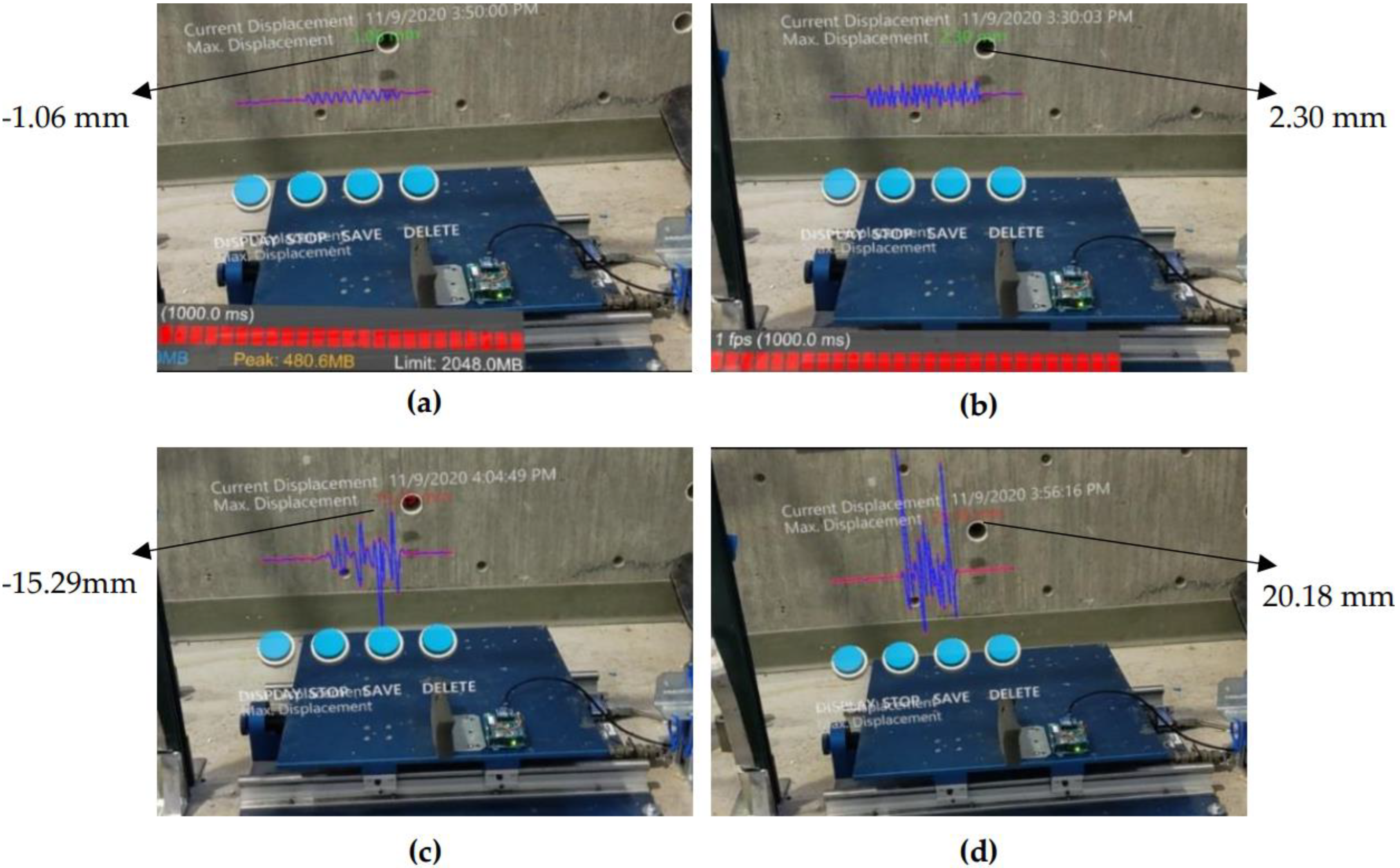

- Aguero, M.; Doyle, D.; Mascarenas, D.; Moreu, F. Visualization of Real-Time Displacement Time History Superimposed with Dynamic Experiments Using Wireless Smart Sensors (WSS) and Augmented Reality (AR). arXiv 2021, arXiv:2110.08700.

- Xu, J.; Wyckoff, E.; Hanson, J.-W.; Moreu, F.; Doyle, D. Implementing Augmented Reality Technology to Measure Structural Changes across Time. arXiv 2021, arXiv:2111.02555.

- John Samuel, I.; Salem, O.; He, S. Defect-Oriented Supportive Bridge Inspection System Featuring Building Information Modeling and Augmented Reality. Innov. Infrastruct. Solut. 2022, 7, 247.

- Nguyen, D.-C.; Nguyen, T.-Q.; Jin, R.; Jeon, C.-H.; Shim, C.-S. BIM-Based Mixed-Reality Application for Bridge Inspection and Maintenance. Constr. Innov. 2022, 22, 487–503.

- Al-Sabbag, Z.A.; Yeum, C.M.; Narasimhan, S. Enabling Human–Machine Collaboration in Infrastructure Inspections through Mixed Reality. Adv. Eng. Inform. 2022, 53, 101709.

- Mondal, T.G.; Chen, G. Artificial Intelligence in Civil Infrastructure Health Monitoring—Historical Perspectives, Current Trends, and Future Visions. Front. Built Env. 2022, 8, 1007886.

| General Keywords (X: link the words with “AND”) | Civil Engineering | Civil Structures | AEC | Virtual Reality | Augmented Reality | Mixed Reality | Extended Reality |
|---|---|---|---|---|---|---|---|
| Civil Engineering | | | | X | X | X | X |
| Civil Structures | | | | X | X | X | X |
| AEC | | | | X | X | X | X |
| Virtual Reality | X | X | X | | | | |
| Augmented Reality | X | X | X | | | | |
| Mixed Reality | X | X | X | | | | |
| Extended Reality | X | X | X | | | | |

| On-Target Keywords (X: link the words with “AND”) | Condition Assessment | Inspection | Structural Health Monitoring | Non-Destructive Technique/Evaluation | Virtual Reality | Augmented Reality | Mixed Reality | Extended Reality |
|---|---|---|---|---|---|---|---|---|
| Condition Assessment | | | | | X | X | X | X |
| Inspection | | | | | X | X | X | X |
| Structural Health Monitoring | | | | | X | X | X | X |
| Non-Destructive Technique/Evaluation | | | | | X | X | X | X |
| Virtual Reality | X | X | X | X | | | | |
| Augmented Reality | X | X | X | X | | | | |
| Mixed Reality | X | X | X | X | | | | |
| Extended Reality | X | X | X | X | | | | |
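The keyword pairings in the two tables above can be enumerated programmatically. The following is a minimal sketch, assuming that each X marks a pairing between a domain or task term and an XR term; the function name `build_queries` and the quoted-phrase query syntax are illustrative assumptions and are not taken from the reviewed search procedure.

```python
from itertools import product

# XR terms that appear in both keyword tables.
XR_TERMS = [
    "Virtual Reality",
    "Augmented Reality",
    "Mixed Reality",
    "Extended Reality",
]

# Domain terms from the general-keywords table.
GENERAL_TERMS = ["Civil Engineering", "Civil Structures", "AEC"]

# Task terms from the on-target-keywords table.
ON_TARGET_TERMS = [
    "Condition Assessment",
    "Inspection",
    "Structural Health Monitoring",
    "Non-Destructive Technique/Evaluation",
]


def build_queries(topic_terms, xr_terms=XR_TERMS):
    """Pair every topic term with every XR term and link them with AND.

    The quoted-phrase form is an assumed query syntax; adapt it to the
    search engine or database actually used.
    """
    return [f'"{topic}" AND "{xr}"' for topic, xr in product(topic_terms, xr_terms)]


general_queries = build_queries(GENERAL_TERMS)
on_target_queries = build_queries(ON_TARGET_TERMS)

for query in general_queries + on_target_queries:
    print(query)
```

Under this reading, the general table yields 12 distinct pairings and the on-target table yields 16, which matches the number of X marks in either half of each table.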

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Catbas, F.N.; Luleci, F.; Zakaria, M.; Bagci, U.; LaViola, J.J., Jr.; Cruz-Neira, C.; Reiners, D. Extended Reality (XR) for Condition Assessment of Civil Engineering Structures: A Literature Review. Sensors 2022, 22, 9560. https://doi.org/10.3390/s22239560
