Ensemble of RNN Classifiers for Activity Detection Using a Smartphone and Supporting Nodes

Abstract

1. Introduction

- A new deep-learning-based ensemble algorithm with event-type-driven suppression for activity recognition,

- Determination of the optimal device position for activity recognition,

- An extended set of recognized human activities, including non-standard ones,

- Analysis of various configurations of phone and sensors’ positions.

2. Related Works

2.1. Data Acquisition and Pre-Processing

2.2. Feature Extraction and Classification

2.3. Transmission Using Additional Nodes

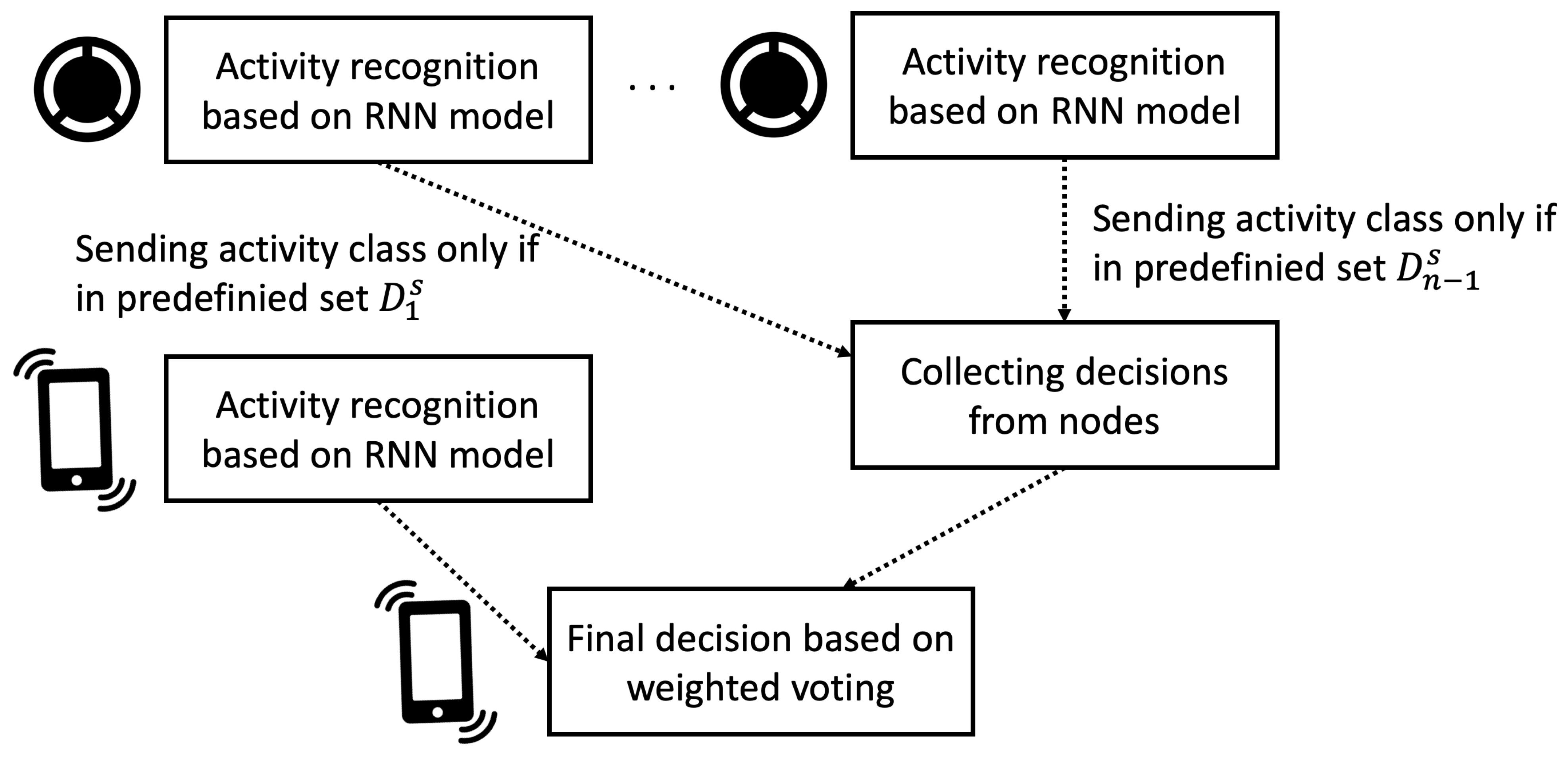

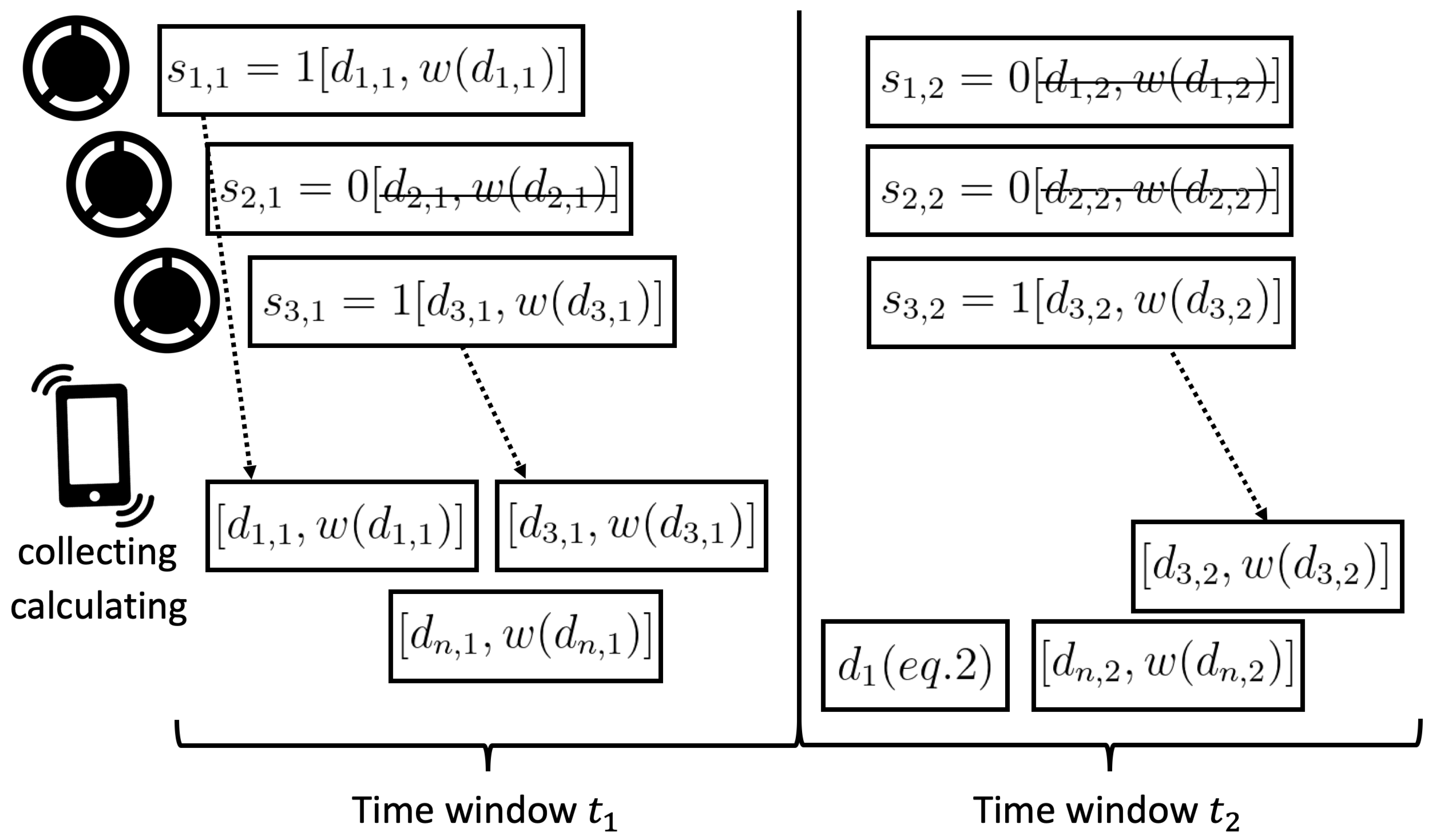

3. Proposed Method

- Receive results of local classification and from supporting sensor nodes.

- If , calculate the final recognition result according to Equation (2).

- Determine based on its own sensor readings.
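The steps above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that a supporting node transmits its local result only when the recognized activity belongs to its send set, and that the main node fuses the available results by weighted voting. The names `should_send`, `fuse`, and the per-node, per-activity `weights` table are hypothetical, and the exact form of Equation (2) is not reproduced here.

```python
from collections import defaultdict
from typing import Dict, Set


def should_send(activity: int, send_set: Set[int]) -> bool:
    """Suppression rule (assumed): transmit only activities in the node's send set."""
    return activity in send_set


def fuse(main_activity: int,
         received: Dict[str, int],
         weights: Dict[str, Dict[int, float]],
         main_id: str = "main") -> int:
    """Weighted vote over the main node's result and any received results.

    `weights[node][activity]` is a hypothetical weight, e.g. derived from the
    node's accuracy for that activity. Ties are resolved in favor of the
    main node's own result.
    """
    if not received:
        # Nothing arrived from supporting nodes: keep the local result.
        return main_activity

    scores: Dict[int, float] = defaultdict(float)
    scores[main_activity] += weights[main_id].get(main_activity, 0.0)
    for node, activity in received.items():
        scores[activity] += weights[node].get(activity, 0.0)

    return max(scores, key=lambda a: (scores[a], a == main_activity))


# Hypothetical weights for a main node and one supporting node.
example_weights = {
    "main": {1: 0.80, 2: 0.90},
    "wrist": {1: 0.95, 2: 0.60},
}
```

With these example weights, a supporting node that recognizes activity 1 with weight 0.95 overrides a main-node result of activity 2 with weight 0.90, while a suppressed (missing) message leaves the main node's result unchanged.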

3.1. Optimal Activity Set Finding for a Supporting Sensor Node

Algorithm 1. Optimization strategy for reducing the set of transmitted data.

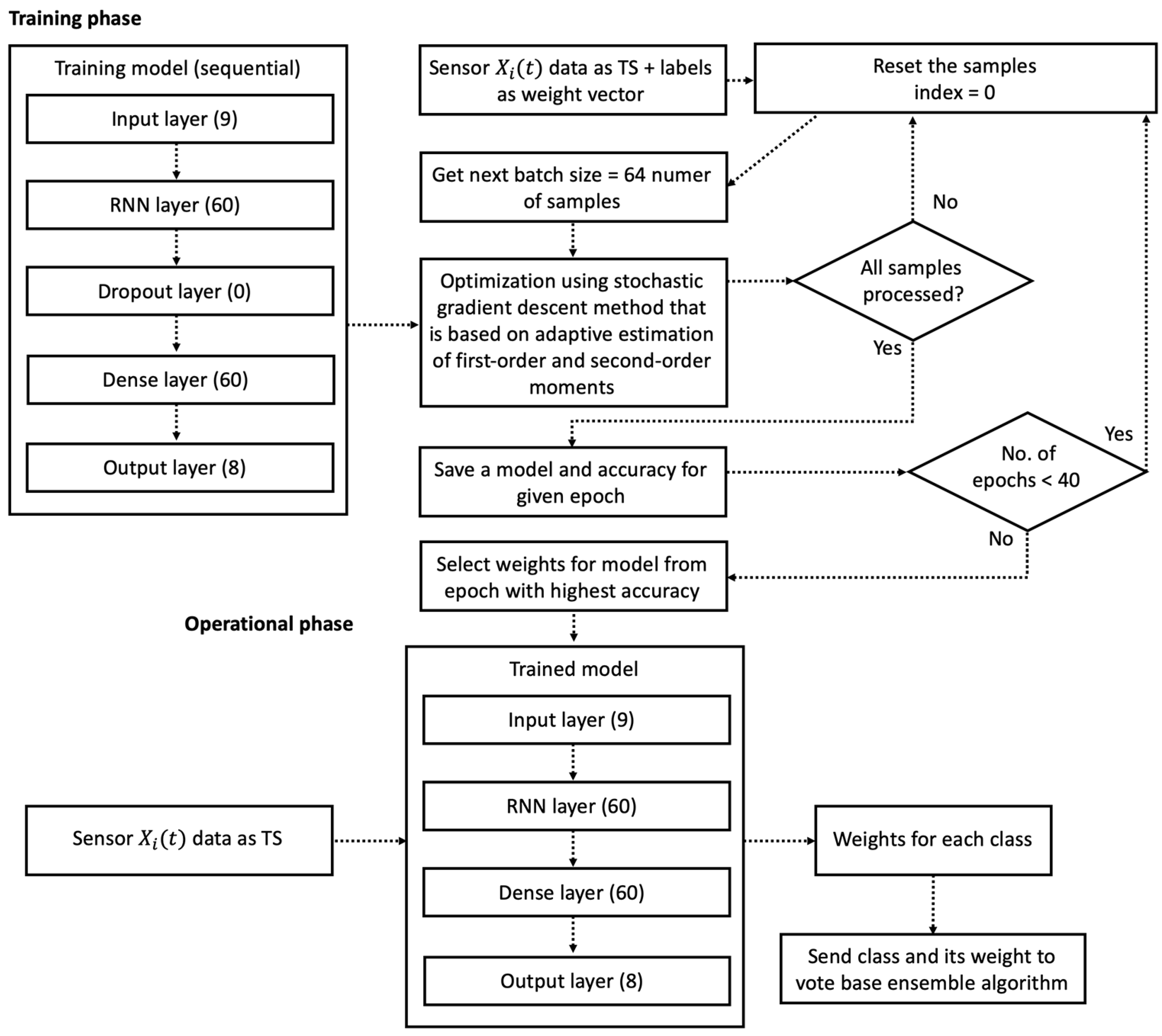

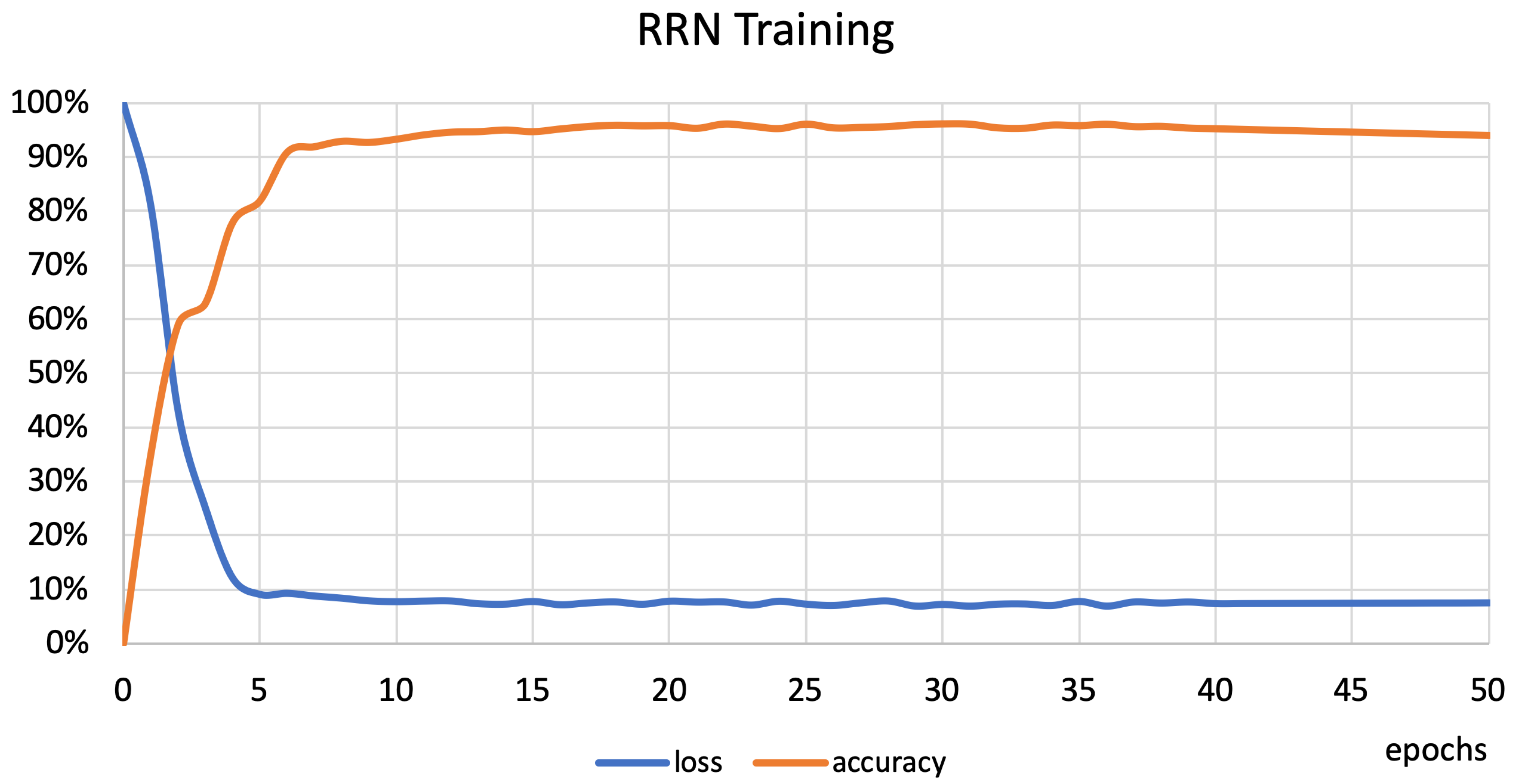

3.2. RNN Model Construction

Listing 1. TensorFlow Keras script for RNN implementation.

4. Experiments and Discussion

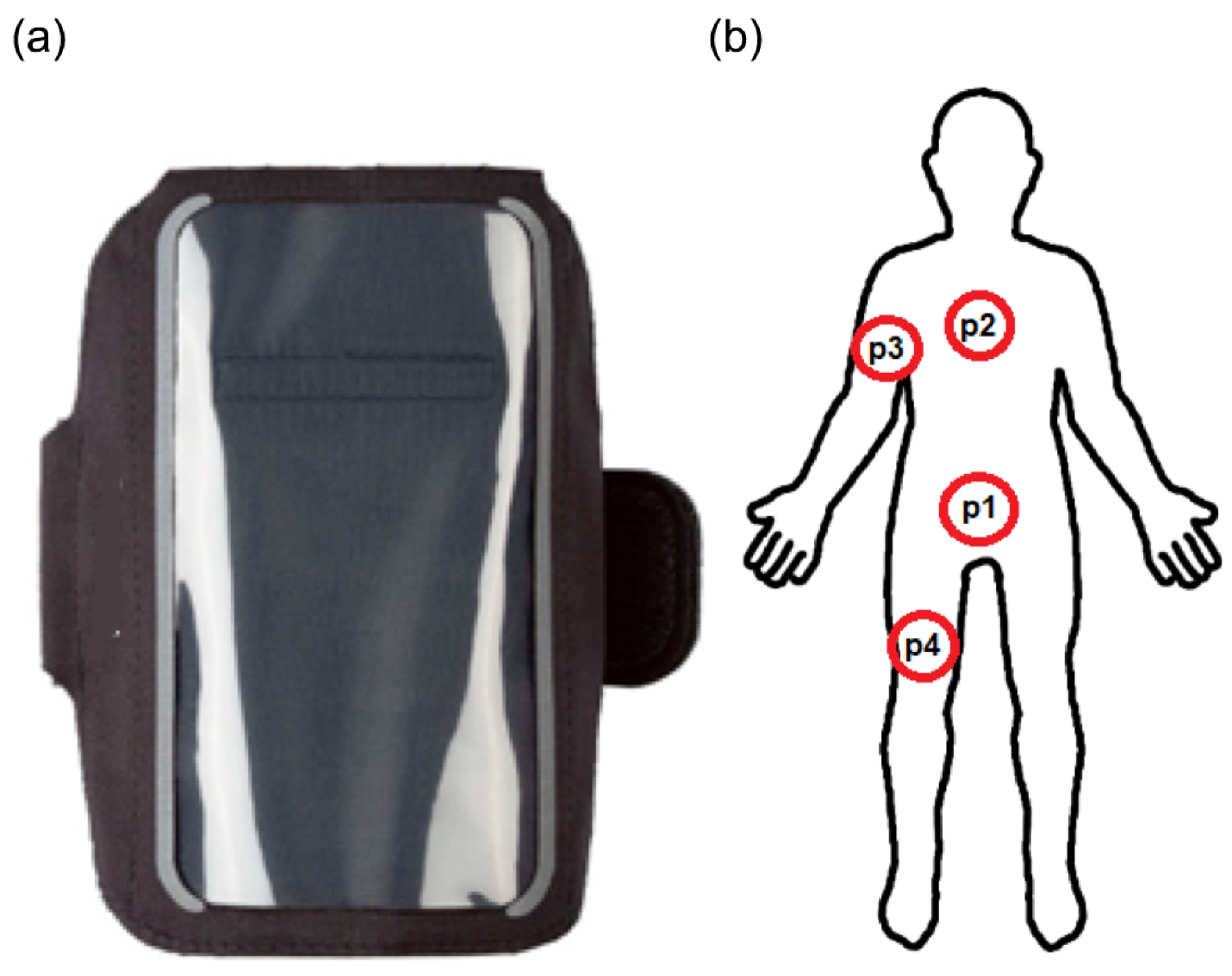

4.1. Experimental Testbed

- walking at a normal pace, where legs and arms are moving without any luggage,

- jogging at a moderate pace, where limb movement is faster and the body is moving more dynamically,

- squats, where a person is performing squats in place with arms maintaining balance,

- jumping, where a person jumps in place as a part of aerobic exercise,

- lying, where a person is lying on their back, and the main movement corresponds to chest breathing,

- arms swing, where the arms are moving during the exercise or housework,

- sitting, where the person sits at a desk and performs simple writing (office) activities,

- standing in place, where only natural body balance can be registered.

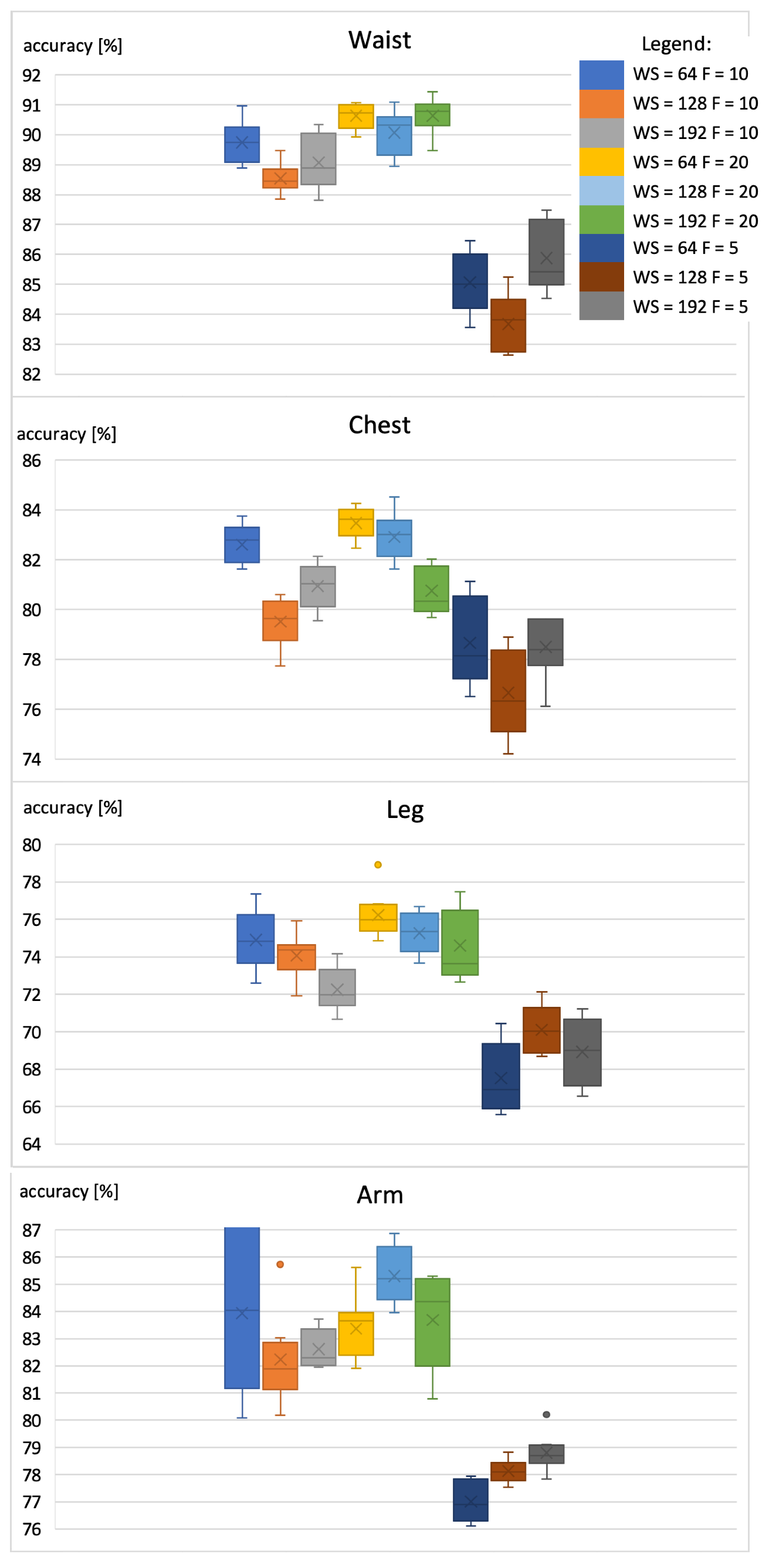

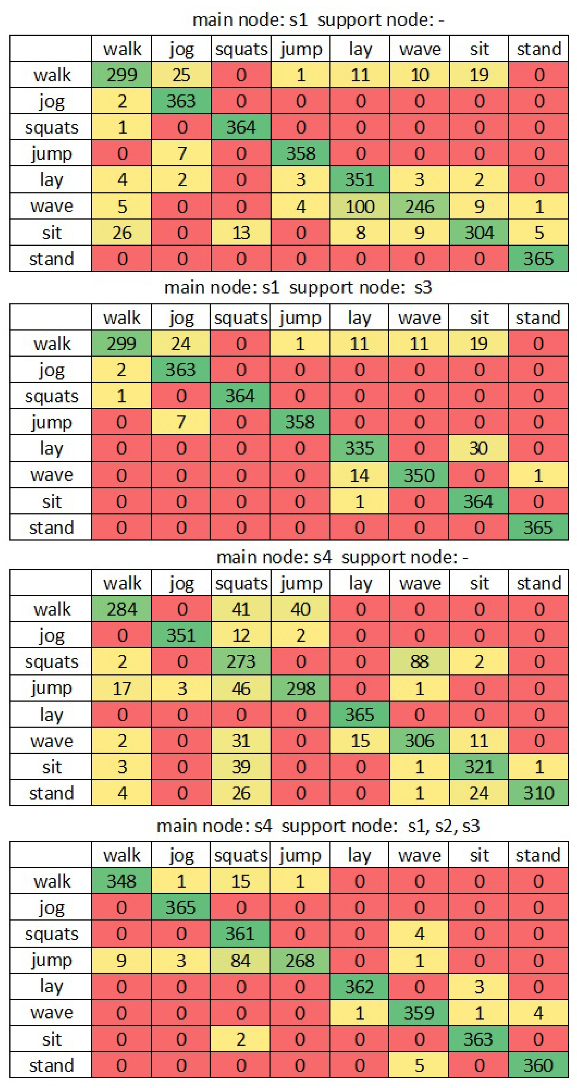

4.2. Results and Discussion

5. Conclusions

- The development of recognition methods that enable movement detection of single and multiple body parts to categorize them and divide them into activity groups. The optimal set of sensors (with their position) will be proposed based on such methods for each activity group and their combinations.

- The combination of different sensor modalities, aimed at resolving the issue of noisy data from wearable sensors. Such research could lay the groundwork for a broader range of recognized activities, e.g., using vision sensors.

- The development of more advanced data suppression methods to further reduce the transmitted data from supporting sensor nodes and to increase their lifetime. Additionally, other suppression methods, as presented in [8], could be adopted.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Symbol | Description |
|---|---|
| t | time step |
| | set of activities for which i-th sensor node should send to the main node |
| D | set of all recognized activities (activities are denoted by natural numbers, ) |
| | activity recognized by sensor node i at time step t |
| | activity recognized by the main node at time step t based on the voting procedure |
| | weight of the recognized activity () |
| | binary variable, which describes the necessity of sending to the main node |
| | machine learning model used by i-th sensor node |
| | time series of sensor readings collected by node i at time step t |
| a | accuracy of activity recognition |
| | tuning parameter, which helps to neglect the influence of outliers |

Appendix A. Verification of a Model

| Activity | True Positives | False Positives | True Negatives | False Negatives | Recall | Precision | Sensitivity | Specificity | F-measure | Accuracy | Cohen’s Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 299 | 38 | 2517 | 66 | 82% | 89% | 82% | 99% | 85% | | |
| 2 | 363 | 34 | 2521 | 2 | 99% | 91% | 99% | 99% | 95% | | |
| 3 | 364 | 13 | 2542 | 1 | 100% | 97% | 100% | 99% | 98% | | |
| 4 | 358 | 8 | 2547 | 7 | 98% | 98% | 98% | 100% | 98% | | |
| 5 | 351 | 119 | 2436 | 14 | 96% | 75% | 96% | 95% | 84% | | |
| 6 | 246 | 22 | 2533 | 119 | 67% | 92% | 67% | 99% | 78% | | |
| 7 | 304 | 30 | 2525 | 61 | 83% | 91% | 83% | 99% | 87% | | |
| 8 | 365 | 6 | 2549 | 0 | 100% | 98% | 100% | 100% | 99% | | |
| Total | | | | | | | | | | 91% | 89% |

| Activity | True Positives | False Positives | True Negatives | False Negatives | Recall | Precision | Sensitivity | Specificity | F-measure | Accuracy | Cohen’s Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 299 | 3 | 2552 | 66 | 82% | 99% | 82% | 100% | 90% | | |
| 2 | 363 | 31 | 2524 | 2 | 99% | 92% | 99% | 99% | 96% | | |
| 3 | 364 | 0 | 2555 | 1 | 100% | 100% | 100% | 100% | 100% | | |
| 4 | 358 | 1 | 2554 | 7 | 98% | 100% | 98% | 100% | 99% | | |
| 5 | 335 | 26 | 2529 | 30 | 92% | 93% | 92% | 99% | 92% | | |
| 6 | 350 | 11 | 2544 | 15 | 96% | 97% | 96% | 100% | 96% | | |
| 7 | 364 | 49 | 2506 | 1 | 100% | 88% | 100% | 98% | 94% | | |
| 8 | 365 | 1 | 2554 | 0 | 100% | 100% | 100% | 100% | 100% | | |
| Total | | | | | | | | | | 96% | 95% |

| Activity | True Positives | False Positives | True Negatives | False Negatives | Recall | Precision | Sensitivity | Specificity | F-measure | Accuracy | Cohen’s Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 284 | 28 | 2527 | 81 | 78% | 91% | 78% | 99% | 84% | | |
| 2 | 351 | 3 | 2552 | 14 | 96% | 99% | 96% | 100% | 98% | | |
| 3 | 273 | 195 | 2360 | 92 | 75% | 58% | 75% | 92% | 66% | | |
| 4 | 298 | 42 | 2513 | 67 | 82% | 88% | 82% | 98% | 85% | | |
| 5 | 365 | 15 | 2540 | 0 | 100% | 96% | 100% | 99% | 98% | | |
| 6 | 306 | 91 | 2464 | 59 | 84% | 77% | 84% | 96% | 80% | | |
| 7 | 321 | 37 | 2518 | 44 | 88% | 90% | 88% | 99% | 89% | | |
| 8 | 310 | 1 | 2554 | 55 | 85% | 100% | 85% | 100% | 92% | | |
| Total | | | | | | | | | | 86% | 84% |

| Activity | True Positives | False Positives | True Negatives | False Negatives | Recall | Precision | Sensitivity | Specificity | F-measure | Accuracy | Cohen’s Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 348 | 9 | 2546 | 17 | 95% | 97% | 95% | 100% | 96% | | |
| 2 | 365 | 4 | 2551 | 0 | 100% | 99% | 100% | 100% | 99% | | |
| 3 | 361 | 101 | 2454 | 4 | 99% | 78% | 99% | 96% | 87% | | |
| 4 | 268 | 1 | 2554 | 97 | 73% | 100% | 73% | 100% | 85% | | |
| 5 | 362 | 1 | 2554 | 3 | 99% | 100% | 99% | 100% | 99% | | |
| 6 | 359 | 10 | 2545 | 6 | 98% | 97% | 98% | 100% | 98% | | |
| 7 | 363 | 4 | 2551 | 2 | 99% | 99% | 99% | 100% | 99% | | |
| 8 | 360 | 4 | 2551 | 5 | 99% | 99% | 99% | 100% | 99% | | |
| Total | | | | | | | | | | 95% | 95% |

References

- Cooper, A.; Page, A.; Fox, K.; Misson, J. Physical activity patterns in normal, overweight and obese individuals using minute-by-minute accelerometry. Eur. J. Clin. Nutr. 2000, 54, 887–894.

- Ekelund, U.; Brage, S.; Griffin, S.; Wareham, N. Objectively measured moderate- and vigorous-intensity physical activity but not sedentary time predicts insulin resistance in high-risk individuals. Diabetes Care 2009, 32, 1081–1086.

- Legge, A.; Blanchard, C.; Hanly, J. Physical activity, sedentary behaviour and their associations with cardiovascular risk in systemic lupus erythematosus. Rheumatology 2020, 59, 1128–1136.

- Loprinzi, P. Objectively measured light and moderate-to-vigorous physical activity is associated with lower depression levels among older US adults. Aging Mental Health 2013, 17, 801–805.

- Smirnova, E.; Leroux, A.; Cao, Q.; Tabacu, L.; Zipunnikov, V.; Crainiceanu, C.; Urbanek, J. The predictive performance of objective measures of physical activity derived from accelerometry data for 5-year all-cause mortality in older adults: National Health and Nutritional Examination Survey 2003–2006. J. Gerontol. Ser. A 2020, 75, 1779–1785.

- Straczkiewicz, M.; James, P.; Onnela, J. A systematic review of smartphone-based human activity recognition methods for health research. NPJ Digit. Med. 2021, 4, 1–15.

- Yadav, S.; Tiwari, K.; Pandey, H.M.; Akbar, S. A review of multimodal human activity recognition with special emphasis on classification, applications, challenges and future directions. Knowl. Based Syst. 2021, 223, 106970.

- Lewandowski, M.; Płaczek, B.; Bernas, M. Classifier-Based Data Transmission Reduction in Wearable Sensor Network for Human Activity Monitoring. Sensors 2020, 21, 85.

- Giannini, P.; Bassani, G.; Avizzano, C.; Filippeschi, A. Wearable sensor network for biomechanical overload assessment in manual material handling. Sensors 2020, 20, 3877.

- Xu, Z.; Zhao, J.; Yu, Y.; Zeng, H. Improved 1D-CNNs for behavior recognition using wearable sensor network. Comput. Commun. 2020, 151, 165–171.

- Jarwan, A.; Sabbah, A.; Ibnkahla, M. Data transmission reduction schemes in WSNs for efficient IoT systems. IEEE J. Sel. Areas Commun. 2019, 37, 1307–1324.

- Lewandowski, M.; Bernas, M.; Loska, P.; Szymała, P.; Płaczek, B. Extending Lifetime of Wireless Sensor Network in Application to Road Traffic Monitoring. In International Conference on Computer Networks; Springer: Berlin/Heidelberg, Germany, 2019; pp. 112–126.

- Wu, W.; Dasgupta, S.; Ramirez, E.; Peterson, C.; Norman, G. Classification accuracies of physical activities using smartphone motion sensors. J. Med. Internet Res. 2012, 14, e2208.

- Guvensan, M.; Dusun, B.; Can, B.; Turkmen, H. A novel segment-based approach for improving classification performance of transport mode detection. Sensors 2017, 18, 87.

- Della Mea, V.; Quattrin, O.; Parpinel, M. A feasibility study on smartphone accelerometer-based recognition of household activities and influence of smartphone position. Informatics Health Soc. Care 2017, 42, 321–334.

- Klein, I. Smartphone location recognition: A deep learning-based approach. Sensors 2019, 20, 214.

- Sanhudo, L.; Calvetti, D.; Martins, J.; Ramos, N.; Mêda, P.; Gonçalves, M.; Sousa, H. Activity classification using accelerometers and machine learning for complex construction worker activities. J. Build. Eng. 2021, 35, 102001.

- Ferrari, A.; Micucci, D.; Mobilio, M.; Napoletano, P. On the personalization of classification models for human activity recognition. IEEE Access 2020, 8, 32066–32079.

- Vanini, S.; Faraci, F.; Ferrari, A.; Giordano, S. Using barometric pressure data to recognize vertical displacement activities on smartphones. Comput. Commun. 2016, 87, 37–48.

- Miao, F.; He, Y.; Liu, J.; Li, Y.; Ayoola, I. Identifying typical physical activity on smartphone with varying positions and orientations. Biomed. Eng. Online 2015, 14, 1–15.

- Wannenburg, J.; Malekian, R. Physical activity recognition from smartphone accelerometer data for user context awareness sensing. IEEE Trans. Syst. Man Cybern. Syst. 2016, 47, 3142–3149.

- Yurur, O.; Labrador, M.; Moreno, W. Adaptive and energy efficient context representation framework in mobile sensing. IEEE Trans. Mob. Comput. 2013, 13, 1681–1693.

- Chen, Y.; Shen, C. Performance analysis of smartphone-sensor behavior for human activity recognition. IEEE Access 2017, 5, 3095–3110.

- Javed, A.; Sarwar, M.; Khan, S.; Iwendi, C.; Mittal, M.; Kumar, N. Analyzing the effectiveness and contribution of each axis of tri-axial accelerometer sensor for accurate activity recognition. Sensors 2020, 20, 2216.

- Li, P.; Wang, Y.; Tian, Y.; Zhou, T.; Li, J. An automatic user-adapted physical activity classification method using smartphones. IEEE Trans. Biomed. Eng. 2016, 64, 706–714.

- Awan, M.; Guangbin, Z.; Kim, C.; Kim, S. Human activity recognition in WSN: A comparative study. Int. J. Networked Distrib. Comput. 2014, 2, 221–230.

- Yang, R.; Wang, B. PACP: A position-independent activity recognition method using smartphone sensors. Information 2016, 7, 72.

- Mukherjee, D.; Mondal, R.; Singh, P.; Sarkar, R.; Bhattacharjee, D. EnsemConvNet: A deep learning approach for human activity recognition using smartphone sensors for healthcare applications. Multimed. Tools Appl. 2020, 79, 31663–31690.

- Wang, G.; Li, Q.; Wang, L.; Wang, W.; Wu, M.; Liu, T. Impact of sliding window length in indoor human motion modes and pose pattern recognition based on smartphone sensors. Sensors 2018, 18, 1965.

- Bashir, S.; Doolan, D.; Petrovski, A. The effect of window length on accuracy of smartphone-based activity recognition. IAENG Int. J. Comput. Sci. 2016, 43, 126–136.

- Derawi, M.; Bours, P. Gait and activity recognition using commercial phones. Comput. Secur. 2013, 39, 137–144.

- Avilés-Cruz, C.; Ferreyra-Ramírez, A.; Zúñiga-López, A.; Villegas-Cortéz, J. Coarse-fine convolutional deep-learning strategy for human activity recognition. Sensors 2019, 19, 1556.

- Zhao, B.; Li, S.; Gao, Y.; Li, C.; Li, W. A framework of combining short-term spatial/frequency feature extraction and long-term IndRNN for activity recognition. Sensors 2020, 20, 6984.

- Arif, M.; Bilal, M.; Kattan, A.; Ahamed, S. Better physical activity classification using smartphone acceleration sensor. J. Med. Syst. 2014, 38, 1–10.

- Garcia-Gonzalez, D.; Rivero, D.; Fernandez-Blanco, E.; Luaces, M. A public domain dataset for real-life human activity recognition using smartphone sensors. Sensors 2020, 20, 2200.

- Saeedi, S.; Moussa, A.; El-Sheimy, N. Context-aware personal navigation using embedded sensor fusion in smartphones. Sensors 2014, 14, 5742–5767.

- Lu, D.; Nguyen, D.; Nguyen, T.; Nguyen, H. Vehicle mode and driving activity detection based on analyzing sensor data of smartphones. Sensors 2018, 18, 1036.

- Pires, I.; Marques, G.; Garcia, N.; Flórez-Revuelta, F.; Canavarro Teixeira, M.; Zdravevski, E.; Spinsante, S.; Coimbra, M. Pattern recognition techniques for the identification of activities of daily living using a mobile device accelerometer. Electronics 2020, 9, 509.

- Alo, U.; Nweke, H.; Teh, Y.; Murtaza, G. Smartphone motion sensor-based complex human activity identification using deep stacked autoencoder algorithm for enhanced smart healthcare system. Sensors 2020, 20, 6300.

- Murad, A.; Pyun, J. Deep recurrent neural networks for human activity recognition. Sensors 2017, 17, 2556.

- Ullah, M.; Ullah, H.; Khan, S.; Cheikh, F. Stacked LSTM network for human activity recognition using smartphone data. In Proceedings of the 2019 8th European Workshop on Visual Information Processing (EUVIP), Rome, Italy, 28–31 October 2019; pp. 175–180.

- Liciotti, D.; Bernardini, M.; Romeo, L.; Frontoni, E. A sequential deep learning application for recognising human activities in smart homes. Neurocomputing 2020, 396, 501–513.

- Xia, K.; Huang, J.; Wang, H. LSTM-CNN architecture for human activity recognition. IEEE Access 2020, 8, 56855–56866.

- Alawneh, L.; Alsarhan, T.; Al-Zinati, M.; Al-Ayyoub, M.; Jararweh, Y.; Lu, H. Enhancing human activity recognition using deep learning and time series augmented data. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 10565–10580.

- Liu, L.; He, J.; Ren, K.; Lungu, J.; Hou, Y.; Dong, R. An Information Gain-Based Model and an Attention-Based RNN for Wearable Human Activity Recognition. Entropy 2021, 23, 1635.

- Wu, B.; Ma, C.; Poslad, S.; Selviah, D.R. An Adaptive Human Activity-Aided Hand-Held Smartphone-Based Pedestrian Dead Reckoning Positioning System. Remote Sens. 2021, 13, 2137.

- Wu, C.; Tseng, Y. Data compression by temporal and spatial correlations in a body-area sensor network: A case study in pilates motion recognition. IEEE Trans. Mob. Comput. 2010, 10, 1459–1472.

- Miskowicz, M. Send-on-delta concept: An event-based data reporting strategy. Sensors 2006, 6, 49–63.

- Al-Janabi, S.; Salman, A. Sensitive integration of multilevel optimization model in human activity recognition for smartphone and smartwatch applications. Big Data Min. Anal. 2021, 4, 124–138.

- Ganjewar, P.; Barani, S.; Wagh, S. A hierarchical fractional LMS prediction method for data reduction in a wireless sensor network. Ad Hoc Netw. 2019, 87, 113–127.

- Putra, I.; Brusey, J.; Gaura, E.; Vesilo, R. An event-triggered machine learning approach for accelerometer-based fall detection. Sensors 2017, 18, 20.

- Chevalier, G. LSTMs for Human Activity Recognition. 2016. Available online: https://github.com/guillaume-chevalier/LSTM-Human-Activity-Recognition (accessed on 12 July 2022).

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-scale machine learning on heterogeneous systems. arXiv 2016, arXiv:1603.04467.

- Wang, H.; Zhou, J.; Wang, Y.; Wei, J.; Liu, W.; Yu, C.; Li, Z. Optimization algorithms of neural networks for traditional time-domain equalizer in optical communications. Appl. Sci. 2019, 9, 3907.

- Berthold, M.; Cebron, N.; Dill, F.; Gabriel, T.; Kötter, T.; Meinl, T.; Ohl, P.; Thiel, K.; Wiswedel, B. KNIME-the Konstanz information miner: Version 2.0 and beyond. ACM Sigkdd Explor. Newsl. 2009, 11, 26–31.

| Main Node | Support Node | Vectors | Accuracy Based on Training Set [%] |
|---|---|---|---|
| | | [1, 1, 0, 1, 1, 1, 1, 0] | 96.92 |
| | | [1, 0, 0, 0, 1, 1, 1, 0] | 97.12 |
| | | [1, 1, 0, 1, 1, 1, 1, 0] | 97.26 |
| | , | [1, 0, 0, 0, 0, 1, 0, 0], [1, 0, 0, 0, 1, 1, 1, 0] | 99.86 |
| | , | [1, 0, 0, 0, 0, 1, 1, 0], [1, 1, 0, 1, 1, 1, 1, 0] | 99.25 |
| | , | [1, 0, 0, 0, 0, 1, 1, 0], [1, 1, 0, 1, 1, 1, 1, 0] | 99.45 |
| | , , | [1, 0, 0, 0, 0, 0, 0, 0], [1, 0, 0, 0, 0, 1, 1, 0], [1, 1, 0, 1, 1, 1, 1, 0] | 100.00 |
| | | [1, 1, 0, 1, 1, 1, 0, 1] | 87.05 |
| | | [1, 0, 0, 1, 1, 1, 1, 1] | 94.93 |
| | | [1, 1, 0, 1, 1, 1, 1, 1] | 86.92 |
| | , | [1, 1, 0, 1, 1, 0, 0, 0], [1, 1, 0, 1, 1, 1, 1, 1] | 99.38 |
| | , | [0, 1, 0, 0, 0, 1, 0, 0], [1, 1, 0, 1, 1, 1, 1, 1] | 96.71 |
| | , | [1, 1, 0, 0, 0, 1, 0, 0], [1, 1, 0, 1, 1, 1, 1, 1] | 98.90 |
| | , , | [0, 1, 0, 0, 0, 0, 0, 0], [1, 0, 0, 0, 0, 1, 0, 0], [1, 1, 0, 1, 1, 1, 1, 1] | 99.86 |
| | | [1, 1, 0, 1, 1, 1, 0, 0] | 90.82 |
| | | [1, 1, 0, 1, 1, 1, 1, 0] | 93.63 |
| | | [1, 1, 0, 1, 1, 1, 0, 0] | 91.37 |
| | , | [0, 0, 0, 1, 1, 1, 0, 0], [1, 1, 0, 1, 1, 1, 1, 0] | 99.18 |
| | , | [1, 1, 0, 0, 0, 1, 0, 0], [1, 1, 0, 1, 1, 1, 0, 0] | 97.60 |
| | , | [1, 1, 0, 0, 0, 1, 0, 0], [1, 1, 0, 1, 1, 1, 0, 0] | 98.49 |
| | , , | [0, 0, 0, 0, 0, 0, 0, 0], [1, 1, 0, 0, 0, 1, 0, 0], [1, 1, 0, 1, 1, 1, 0, 0] | 99.93 |
| | | [1, 1, 1, 0, 0, 1, 1, 1] | 95.62 |
| | | [1, 1, 1, 0, 0, 1, 1, 1] | 96.92 |
| | | [1, 1, 1, 0, 0, 1, 1, 1] | 93.77 |
| | , | [1, 1, 0, 0, 0, 0, 0, 0], [1, 1, 1, 0, 0, 1, 1, 1] | 99.04 |
| | , | [1, 1, 0, 0, 0, 0, 0, 0], [1, 1, 1, 0, 0, 1, 1, 1] | 97.33 |
| | , | [1, 1, 0, 0, 0, 0, 0, 0], [1, 1, 1, 0, 0, 1, 1, 1] | 98.42 |
| | , , | [1, 0, 0, 0, 0, 0, 0, 0], [1, 1, 0, 0, 0, 0, 0, 0], [1, 1, 1, 0, 0, 1, 1, 1] | 99.93 |

| Main Node | Support Node | Accuracy [%] | Suppression [%] |
|---|---|---|---|
| | - | 90.75 | - |
| | - | 81.20 | - |
| | - | 75.34 | - |
| | - | 85.89 | - |
| | | 96.47 | 25 |
| | | 95.82 | 50 |
| | | 95.86 | 25 |
| | , | 98.53 | 63 |
| | , | 98.94 | 44 |
| | , | 98.25 | 44 |
| | , , | 99.59 | 58 |
| | | 85.96 | 25 |
| | | 93.15 | 25 |
| | | 86.68 | 13 |
| | , | 98.12 | 31 |
| | , | 86.88 | 44 |
| | , | 86.82 | 38 |
| | , , | 86.82 | 58 |
| | | 89.59 | 38 |
| | | 91.20 | 25 |
| | | 90.86 | 38 |
| | , | 96.95 | 44 |
| | , | 93.84 | 50 |
| | , , | 95.48 | 50 |
| | , , | 95.48 | 67 |
| | | 92.64 | 25 |
| | | 85.00 | 25 |
| | | 90.82 | 25 |
| | , | 85.68 | 50 |
| | , | 93.18 | 50 |
| | , | 94.66 | 50 |
| | , , | 95.41 | 63 |
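The suppression percentages in the table above appear consistent with the share of zero entries in the binary vectors listed in the preceding table. The sketch below illustrates that reading. It assumes that a 1 marks an activity whose result the supporting node transmits, that a 0 marks a suppressed activity, and that all eight activities occur equally often; the function name `suppression_percent` is illustrative and not taken from the paper.

```python
from typing import Sequence


def suppression_percent(vectors: Sequence[Sequence[int]]) -> float:
    """Percentage of (node, activity) pairs whose transmission is suppressed.

    Each vector holds one binary flag per activity for one supporting node:
    1 = send the recognized activity to the main node, 0 = suppress it
    (assumed interpretation).
    """
    total = sum(len(v) for v in vectors)
    zeros = sum(1 for v in vectors for flag in v if flag == 0)
    return 100.0 * zeros / total


# Vectors copied from the first block of the vector table.
one_node = [[1, 1, 0, 1, 1, 1, 1, 0]]
two_nodes = [[1, 0, 0, 0, 0, 1, 0, 0], [1, 0, 0, 0, 1, 1, 1, 0]]
three_nodes = [
    [1, 0, 0, 0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 1, 1, 0],
    [1, 1, 0, 1, 1, 1, 1, 0],
]
```

Under these assumptions the three configurations give 25.0%, 62.5%, and about 58.3%, which correspond to the reported values of 25, 63, and 58.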

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bernaś, M.; Płaczek, B.; Lewandowski, M. Ensemble of RNN Classifiers for Activity Detection Using a Smartphone and Supporting Nodes. Sensors 2022, 22, 9451. https://doi.org/10.3390/s22239451
