Abstract

In this paper, we propose a method that enhances three-dimensional (3D) image visualization by reconstructing 3D images from elemental images captured on different pickup planes. Conventional 3D visualization uses synthetic aperture integral imaging (SAII) and volumetric computational reconstruction (VCR). However, due to the lack of image information and the limited number of shifting pixels, it may be difficult to obtain good lateral and longitudinal resolutions of 3D images. Thus, we propose a new elemental image acquisition and computational reconstruction method that improves both the lateral and longitudinal resolutions of 3D objects. To show the feasibility of our proposed method, we present performance metrics such as mean squared error (MSE), peak signal-to-noise ratio (PSNR), structural similarity (SSIM), and peak-to-sidelobe ratio (PSR). The results show that our method improves both the lateral and longitudinal resolutions of 3D objects compared with the conventional technique.

1. Introduction

Three-dimensional (3D) image visualization is important for many applications, such as unmanned autonomous vehicles, media content, and defense [,,,,,,,]. Various methods can be used to visualize 3D images, such as time of flight, stereoscopic imaging, holography, and integral imaging [,,,]. Integral imaging [,,,,] can be implemented with incoherent light and a general camera. It is a passive multi-perspective imaging technique that obtains depth information from 2D images called elemental images. Unlike stereoscopic imaging techniques such as anaglyphs, shutter glasses, and film-patterned retarders, integral imaging can visualize 3D images in full color, with full parallax and continuous viewing points, without special viewing devices. However, since integral imaging uses a lenslet array, the resolution of each elemental image is limited by the number of lenses and the resolution of the sensor. Thus, lenslet-based integral imaging may visualize 3D images at low resolution with a shallow depth of focus.

To improve the lateral and longitudinal resolutions of 3D images in integral imaging, synthetic aperture integral imaging (SAII) [,,,,] was reported. SAII obtains elemental images with different perspectives by moving the image sensor across a pickup plane placed at a fixed distance from the scene, so each elemental image has the full resolution of the image sensor. After high-resolution elemental images are obtained by SAII, volumetric computational reconstruction (VCR) [,,,,,] can be used to generate 3D images: it shifts each elemental image by a depth-dependent number of pixels and superposes the shifted images. However, the 3D images may still have limited resolution because the distance between the scene and the pickup plane is fixed.

To solve this problem, elemental images captured at different pickup positions along the depth direction are required. Thus, in this paper, we merge SAII with axially distributed sensing (ADS) [,,,]. ADS is another 3D visualization method that records different perspectives by moving the image sensor along the optical axis, which makes it less subject to the resolution limitation caused by a fixed distance. In reconstruction, unlike SAII, ADS applies a relative magnification ratio to each elemental image recorded at a different distance. However, ADS may fail to reconstruct the central part of a 3D scene because of a lack of perspective information there. Our method combines the advantages of SAII and ADS. In image acquisition, we record elemental images by moving the sensor across pickup planes located at different depths. Both shifting pixels and relative magnification ratios are then used for 3D reconstruction. Therefore, our method can enhance the lateral and longitudinal resolutions of 3D images simultaneously.

This paper is organized as follows. Section 2 presents the basic concepts of integral imaging, SAII, ADS, and our proposed method. Section 3 presents simulation results that support the feasibility of the proposed method, evaluated with performance metrics such as peak signal-to-noise ratio (PSNR), structural similarity (SSIM), mean squared error (MSE), and peak-to-sidelobe ratio (PSR). Finally, Section 4 concludes the paper.

2. Three-Dimensional Integral Imaging with Multiple Pickup Positions

In this section, we present the basic concepts of integral imaging, synthetic aperture integral imaging, axially distributed sensing, and our method.

2.1. Integral Imaging

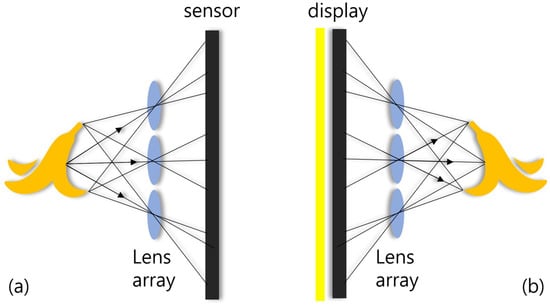

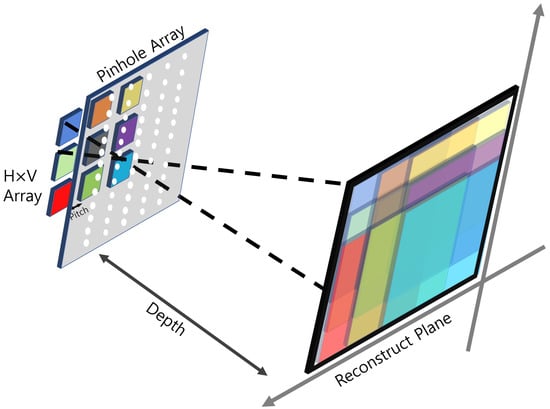

To visualize natural 3D scenes, integral imaging was proposed by G. Lippmann in 1908 []. Integral imaging provides full-color and full-parallax 3D images from multi-perspective elemental images. Figure 1 illustrates an overview of integral imaging. As shown in Figure 1, integral imaging generates 3D images in two stages: image acquisition and reconstruction. Image acquisition records multi-perspective elemental images through lenslets at different locations. Reconstruction can be performed optically (Figure 1b) or computationally (Figure 2). In the optical method, the 3D image is displayed by back-projecting the elemental images through the same lenslet array. In the computational method, as shown in Figure 2, nonuniform volumetric computational reconstruction (VCR) is used: each elemental image is shifted by a number of pixels that depends on the reconstruction depth, and the shifted images are superposed. It can be described as follows []:

$$\Delta x = \frac{N_x p_x f}{c_x z_r}, \quad \Delta y = \frac{N_y p_y f}{c_y z_r} \tag{1}$$

$$\Delta x_l = \lfloor l\,\Delta x \rceil, \quad \Delta y_k = \lfloor k\,\Delta y \rceil, \quad k = 0, \dots, K-1, \; l = 0, \dots, L-1 \tag{2}$$

$$O(x, y, z_r) = \sum_{k=0}^{K-1} \sum_{l=0}^{L-1} \mathbf{1}(x + \Delta x_l,\, y + \Delta y_k) \tag{3}$$

$$I(x, y, z_r) = \frac{1}{O(x, y, z_r)} \sum_{k=0}^{K-1} \sum_{l=0}^{L-1} E_{kl}(x + \Delta x_l,\, y + \Delta y_k) \tag{4}$$

where $\Delta x$ and $\Delta y$ are the actual shifting pixels (real numbers), $N_x$ and $N_y$ are the numbers of pixels of each elemental image in the x and y directions, $p_x$ and $p_y$ are the pitches between elemental images, $f$ is the focal length of the camera, $c_x$ and $c_y$ are the sensor sizes of the camera, $z_r$ is the reconstruction depth, $\Delta x_l$ and $\Delta y_k$ are the shifting pixels assigned to the elemental image in the $k$th row and $l$th column, $K$ and $L$ are the numbers of rows and columns of elemental images, $\lfloor \cdot \rceil$ is the round operator, $\mathbf{1}$ is a matrix of ones with the size of one elemental image, $O(x, y, z_r)$ is the overlapping matrix for nonuniform VCR, $E_{kl}$ is the elemental image in the $k$th row and $l$th column, and $I(x, y, z_r)$ is the 3D image reconstructed by nonuniform VCR. However, since the resolution of the elemental images is limited by the number of lenses and the resolution of the sensor, the resolution of the 3D images may be degraded. To solve this problem, high-resolution elemental images are required in integral imaging.
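The shift-and-superpose idea behind nonuniform VCR can be sketched in a few lines of NumPy. This is a minimal illustration under our own assumptions, not the authors' code: grayscale elemental images are stored in a `(K, L, Ny, Nx)` array, camera (k, l) is assumed to map to a positive offset on the output canvas (reverse the indices for the opposite camera ordering), and the function name `nonuniform_vcr` is hypothetical. The "nonuniform" part is that `k * dy` and `l * dx` are rounded per index rather than rounding `dy` and `dx` once.

```python
import numpy as np


def round_half_up(x):
    """Round operator for the shifting pixels (0.5 rounds up)."""
    return int(np.floor(x + 0.5))


def nonuniform_vcr(elemental, pitch, focal, sensor, z_r):
    """Hypothetical shift-and-superpose reconstruction of one depth slice.

    elemental: array of shape (K, L, Ny, Nx), one grayscale elemental image per camera.
    pitch, sensor: (vertical, horizontal) pairs in the same length unit as focal and z_r.
    Returns the reconstructed slice and the overlapping matrix.
    """
    K, L, ny, nx = elemental.shape
    # Actual (real-valued) shifting pixels between adjacent elemental images.
    dy = ny * pitch[0] * focal / (sensor[0] * z_r)
    dx = nx * pitch[1] * focal / (sensor[1] * z_r)
    # Nonuniform shifts: round k*dy and l*dx for each index separately.
    sy = [round_half_up(k * dy) for k in range(K)]
    sx = [round_half_up(l * dx) for l in range(L)]
    canvas = (ny + sy[-1], nx + sx[-1])
    acc = np.zeros(canvas)
    overlap = np.zeros(canvas)
    for k in range(K):
        for l in range(L):
            acc[sy[k]:sy[k] + ny, sx[l]:sx[l] + nx] += elemental[k, l]
            overlap[sy[k]:sy[k] + ny, sx[l]:sx[l] + nx] += 1.0
    # Normalize each pixel by the number of elemental images that cover it.
    return acc / np.maximum(overlap, 1.0), overlap


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    eis = rng.random((5, 5, 64, 64))  # toy 5x5 array of 64x64 elemental images
    slice_150, ov = nonuniform_vcr(eis, (2.0, 2.0), 50.0, (36.0, 36.0), 150.0)
    print(slice_150.shape, ov.max())
```

Objects lying at `z_r` line up after shifting and stay sharp, while objects at other depths are spread over several positions and blur; this is what makes each reconstructed slice depth-selective.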

Figure 1.

Overview of integral imaging; (a) is the acquisition technique and (b) is the reconstruction technique.

Figure 2.

Overview of nonuniform volumetric computational reconstruction (VCR).

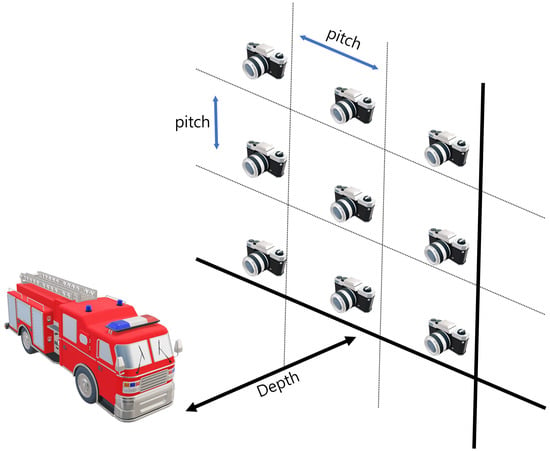

2.2. Synthetic Aperture Integral Imaging

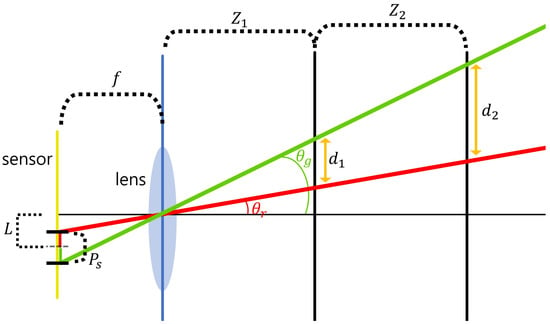

Synthetic aperture integral imaging (SAII) is an elemental image acquisition technique. Unlike lenslet array-based integral imaging, SAII uses the full sensor to capture each elemental image, so 3D images reconstructed from SAII can have high resolution. Figure 3 illustrates SAII. As shown in Figure 3, SAII fixes the distance between the 3D object and the pickup plane and records the elemental images by moving the image sensor across the pickup plane with a constant pitch. However, because all elemental images are captured from a single distance, 3D objects may not be visualized well owing to the limited resolution. Figure 4 illustrates this resolution problem. In Figure 4, $\Delta p$ is the pixel size, $f$ is the focal length, $z$ is the distance between the sensor and the 3D scene, $L$ is the distance between the center of the sensor and the pixel, $\theta_1$ and $\theta_2$ are the angles of the red and green rays, and $\Delta d$ is the distance over which the scene is recorded as a single pixel value. It can be described as follows:

$$\theta_1 = \tan^{-1}\left(\frac{L}{f}\right), \quad \theta_2 = \tan^{-1}\left(\frac{L + \Delta p}{f}\right) \tag{5}$$

$$\Delta d = z\,(\tan\theta_2 - \tan\theta_1) = \frac{z\,\Delta p}{f} \tag{6}$$

Figure 3.

Illustrations of synthetic aperture integral imaging (SAII).

Figure 4.

Illustration of the resolution problem.

As shown in Figure 4 and Equations (5) and (6), the distance between the sensor and the scene is the main factor that determines the distance recorded by a single pixel. In short, as the distance between the 3D object and the pickup plane decreases, the area of the scene recorded by each pixel becomes smaller, so more detailed information can be obtained.
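The per-pixel footprint in Figure 4 can be checked numerically. This sketch assumes an ideal pinhole camera with the scene plane parallel to the sensor (our assumption): the two rays through the edges of one pixel, at offsets `L` and `L + pixel_size` from the sensor center, enclose a patch of width `z * (tan(theta2) - tan(theta1))` at distance `z`. The pixel size of 0.036 mm is derived from the 36 mm, 1000-pixel sensor used in Section 3; the function name `pixel_footprint` is hypothetical.

```python
import math


def pixel_footprint(z, pixel_size, focal, L=0.0):
    """Width of the scene patch imaged by one pixel at distance z (ideal pinhole model)."""
    theta1 = math.atan(L / focal)                 # ray through one edge of the pixel
    theta2 = math.atan((L + pixel_size) / focal)  # ray through the other edge
    return z * (math.tan(theta2) - math.tan(theta1))


pixel = 36.0 / 1000  # 36 mm sensor width divided by 1000 pixels
for z in (150.0, 300.0):
    print(z, round(pixel_footprint(z, pixel, 50.0), 4))
```

Under this model the footprint reduces to `z * pixel_size / f`, so halving the object distance halves the scene width each pixel records, which is the resolution gain that capturing from a nearer pickup plane offers.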

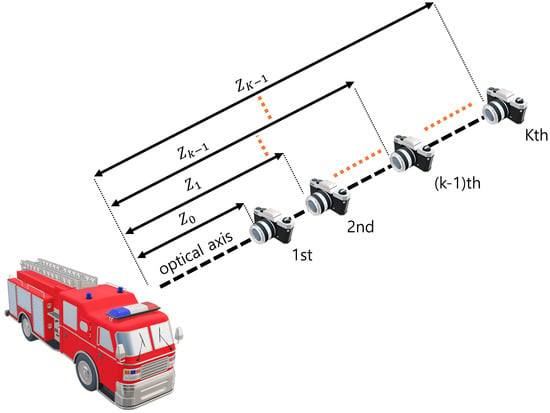

2.3. Axially Distributed Sensing

Axially distributed sensing (ADS) obtains different perspectives by changing the distance between the pickup and reconstruction planes, and it reconstructs the 3D image by applying a relative magnification ratio to each elemental image. Figure 5 illustrates image acquisition in ADS. As shown in Figure 5, the image sensor is moved along the optical axis to different depths. Because of these depth differences, ADS is less affected by the resolution limitation caused by a single distance between the 3D object and the pickup plane. Since the elemental images recorded by ADS cover different scene areas per pixel, a relative magnification ratio is used in ADS reconstruction. It can be described as follows [,,,]

where is the nearest distance between the pickup position and the reconstruction plane, is the distance between the kth pickup position and the reconstruction plane, is the relative magnification ratio between and , is the matrix, is the overlapping matrix for ADS, is the elemental image at the kth pickup position, and is the 3D image reconstructed by ADS. However, because points near the optical axis show almost no perspective change between axially distributed elemental images, ADS may not reconstruct the central part of a 3D scene.
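A minimal NumPy sketch of ADS reconstruction with relative magnification ratios is shown below. It assumes (our assumptions) that every pickup position lies on one optical axis through the image centers, that the ratio for the kth image is `z_k / z_1` so that farther images are enlarged to the scale of the nearest one, and that nearest-neighbour resampling is acceptable for illustration (bicubic interpolation would normally be preferred). The function names are hypothetical.

```python
import numpy as np


def magnify_nearest(img, ratio):
    """Nearest-neighbour magnification of a 2D image by `ratio` (illustrative only)."""
    h, w = img.shape
    H, W = int(np.floor(h * ratio + 0.5)), int(np.floor(w * ratio + 0.5))
    rows = np.minimum((np.arange(H) / ratio).astype(int), h - 1)
    cols = np.minimum((np.arange(W) / ratio).astype(int), w - 1)
    return img[np.ix_(rows, cols)]


def ads_reconstruct(images, distances):
    """Hypothetical ADS reconstruction at one reconstruction plane.

    images: list of 2D arrays, one per pickup position.
    distances: distance from each pickup position to the reconstruction plane.
    """
    z1 = min(distances)
    ratios = [z / z1 for z in distances]  # relative magnification ratios
    mags = [magnify_nearest(img, r) for img, r in zip(images, ratios)]
    H = max(m.shape[0] for m in mags)
    W = max(m.shape[1] for m in mags)
    acc = np.zeros((H, W))
    overlap = np.zeros((H, W))
    for m in mags:
        # Centre-align each magnified image on the common canvas (shared optical axis).
        top, left = (H - m.shape[0]) // 2, (W - m.shape[1]) // 2
        acc[top:top + m.shape[0], left:left + m.shape[1]] += m
        overlap[top:top + m.shape[0], left:left + m.shape[1]] += 1.0
    return acc / np.maximum(overlap, 1.0), overlap
```

The overlapping matrix is largest in the center, where all magnified images coincide; because those central pixels barely change between pickup positions, averaging them adds little depth information, which is the central-region weakness noted above.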

Figure 5.

Image acquisition in axially distributed sensing (ADS).

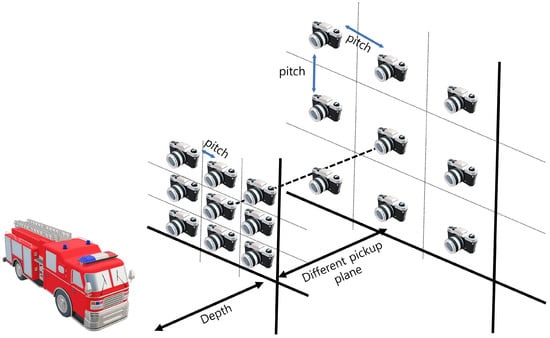

2.4. Our Method

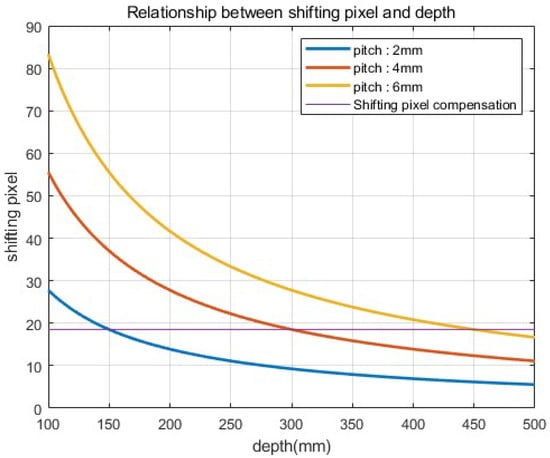

To visualize 3D images with enhanced lateral and longitudinal resolutions, we merge the SAII, ADS, and VCR techniques. For elemental image acquisition, we place camera arrays on several pickup planes at different depths, each providing different lateral perspectives. Figure 6 illustrates the alignment of the camera arrays in our method. As shown in Figure 6, elemental images with different perspectives are recorded on each pickup plane, and the pitch between cameras may differ from plane to plane. In Equation (1), the shifting pixels of each elemental image are determined by the number of pixels ($N_x$), the pitch ($p_x$), the focal length ($f$), the sensor size ($c_x$), and the depth between the pickup and reconstruction planes ($z_r$). However, the number of pixels, focal length, and sensor size are constants once the camera and lens are chosen. Thus, in this case, the shifting pixel can be described as follows:

$$\Delta x = k\,\frac{p_x}{z_r} \tag{10}$$

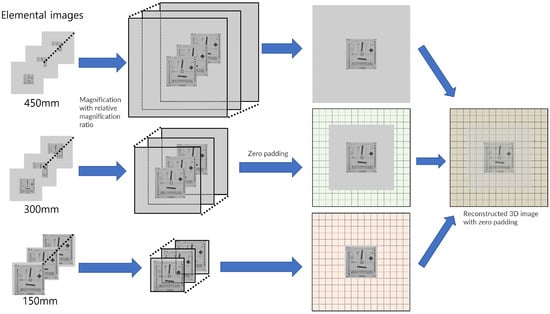

Here, $k = N_x f / c_x$ is a constant that merges the number of pixels, the sensor size, and the focal length (not to be confused with the row index $k$). In Equation (10), the shifting pixels ($\Delta x$) depend only on the pitch ($p_x$) and the depth ($z_r$). Figure 7 illustrates the relationship between the shifting pixel, pitch, and depth. As shown in Figure 7, the number of shifting pixels gradually decreases as the depth increases. However, this decrease can be compensated by using a larger pitch. Therefore, a larger pitch is used for the farthest pickup plane to compensate for the lack of shifting pixels. We then reconstruct the elemental images by considering the various shifting pixels and the relative magnification ratios, as follows:
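The trade-off in Equation (10) can be illustrated with the camera parameters of Section 3 (1000 pixels, f = 50 mm, 36 mm sensor). The pickup-to-reconstruction distances of 100, 200, and 300 mm below are hypothetical values chosen for illustration; the actual distances appear only in Figure 9. The function name `shifting_pixel` is also ours.

```python
def shifting_pixel(pitch_mm, depth_mm, n_pix=1000, focal_mm=50.0, sensor_mm=36.0):
    """Shifting pixels between adjacent cameras: k * p / z with k = N * f / c."""
    k_const = n_pix * focal_mm / sensor_mm  # merged constant k of Eq. (10)
    return k_const * pitch_mm / depth_mm


# Hypothetical plane distances; the pitches follow the simulation setup.
for pitch, depth in ((2.0, 100.0), (4.0, 200.0), (6.0, 300.0)):
    print(f"pitch {pitch} mm at {depth} mm -> {shifting_pixel(pitch, depth):.2f} px")
print(f"pitch 2.0 mm at 300.0 mm -> {shifting_pixel(2.0, 300.0):.2f} px")
```

With these assumed distances, scaling the pitch with the depth keeps the shift at about 27.8 pixels on every plane, whereas keeping a 2 mm pitch at 300 mm drops it to about 9.3 pixels, which is the loss that a larger pitch on the farthest plane compensates for.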

where is the nearest distance between the pickup and reconstruction plane, M is the number of pickup planes, and is the distance between the mth pickup and reconstruction plane. are the number of pixels for the elemental image located at the nearest distance between the pickup and the reconstruction plane, are the number of pixels for the elemental image located at the distance between the mth pickup and reconstruction plane, are the actual shifting pixels for the mth pickup plane, f is the focal length of the camera lens, is the pitch among cameras at the mth pickup plane, is the depth between the mth pickup and the reconstruction plane, are the shifting pixels for the kth row and lth column elemental image on the mth pickup plane, is the kth row, lth column, and mth pickup plane elemental image with pixels, is the magnification function that magnifies the elemental image by using the bicubic interpolation method with pixels, is the zero padding function, is the expanded elemental image for reconstruction by our method, is the one matrix with zero padding, is the overlapping matrix for the reconstruction by our method, and is the reconstructed 3D image by our method.

Figure 6.

Sensor alignment in our method.

Figure 7.

Relationship between shifting pixel and depth.

Figure 8 illustrates the procedure of our computational reconstruction. First, we calculate the relative magnification ratio for every elemental image and resize all elemental images accordingly. Then, we calculate the shifting pixels and assign them to every elemental image. For convenience, we apply zero padding to every elemental image during reconstruction. Finally, 3D images with enhanced lateral and longitudinal resolutions can be reconstructed.
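The procedure of Figure 8 can be sketched as follows. This is a simplified, hypothetical implementation under stated assumptions, not the authors' code: nearest-neighbour magnification stands in for the bicubic interpolation used in the method, the shifting pixels of plane m are computed from the magnified pixel counts, all camera arrays are assumed to share one optical axis, and the per-plane results are centre-aligned on one zero-padded canvas before normalizing by the overlapping matrix. The name `multi_plane_reconstruct` is ours.

```python
import numpy as np


def _round(x):
    return int(np.floor(x + 0.5))


def magnify_nearest(img, ratio):
    """Nearest-neighbour stand-in for bicubic magnification."""
    h, w = img.shape
    H, W = _round(h * ratio), _round(w * ratio)
    rows = np.minimum((np.arange(H) / ratio).astype(int), h - 1)
    cols = np.minimum((np.arange(W) / ratio).astype(int), w - 1)
    return img[np.ix_(rows, cols)]


def multi_plane_reconstruct(planes, focal, sensor):
    """planes: list of (elemental (K, L, h, w), pitch, z_m); sensor: (c_y, c_x)."""
    z1 = min(z for _, _, z in planes)
    blocks = []
    for ei, pitch, z in planes:
        K, L, h, w = ei.shape
        r = z / z1  # 1) relative magnification ratio of this pickup plane
        mags = [[magnify_nearest(ei[k, l], r) for l in range(L)] for k in range(K)]
        H, W = mags[0][0].shape
        # 2) shifting pixels from the magnified pixel counts, rounded per index
        dy = H * pitch * focal / (sensor[0] * z)
        dx = W * pitch * focal / (sensor[1] * z)
        sy = [_round(k * dy) for k in range(K)]
        sx = [_round(l * dx) for l in range(L)]
        acc = np.zeros((H + sy[-1], W + sx[-1]))
        ov = np.zeros_like(acc)
        for k in range(K):
            for l in range(L):
                acc[sy[k]:sy[k] + H, sx[l]:sx[l] + W] += mags[k][l]
                ov[sy[k]:sy[k] + H, sx[l]:sx[l] + W] += 1.0
        blocks.append((acc, ov))
    # 3) zero-pad every plane's result to a common, centre-aligned canvas
    CH = max(a.shape[0] for a, _ in blocks)
    CW = max(a.shape[1] for a, _ in blocks)
    total = np.zeros((CH, CW))
    overlap = np.zeros((CH, CW))
    for acc, ov in blocks:
        t, l0 = (CH - acc.shape[0]) // 2, (CW - acc.shape[1]) // 2
        total[t:t + acc.shape[0], l0:l0 + acc.shape[1]] += acc
        overlap[t:t + acc.shape[0], l0:l0 + acc.shape[1]] += ov
    # 4) normalize by the overlapping matrix
    return total / np.maximum(overlap, 1.0), overlap
```

Keeping each plane's shift-and-sum separate before padding mirrors the order of Figure 8 (magnify, shift, pad, normalize) and makes it easy to drop planes, for example to reproduce a single-plane baseline.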

Figure 8.

Procedure of our computational reconstruction.

3. Results of the Simulation

3.1. The First Simulation Setup

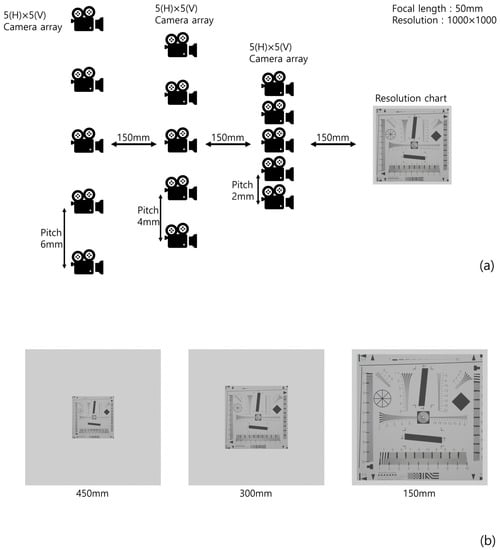

To show the feasibility of our method, we implemented a simulation in Blender. Figure 9 illustrates the simulation setup and the 3D scenes. A 5(H) × 5(V) camera array is used at each depth, the focal length is f = 50 mm, and the pitches of the camera arrays are 6, 4, and 2 mm, so that the larger pitch compensates for the smaller shifting pixels at the farther pickup planes (Figure 7). The sensor size is 36(H) × 36(V) mm, and each elemental image is recorded with 1000(H) × 1000(V) pixels. The conventional method uses only the elemental images at the farthest pickup plane to reconstruct the 3D images. We used a resolution chart [] as the object.
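The camera layout of this setup can be written down as a small configuration sketch. It assumes (our assumptions) that each 5 × 5 array is centred on the optical axis and that the 6 mm pitch belongs to the farthest plane, as Section 2.4 suggests; the distances of 300, 200, and 100 mm are hypothetical placeholders, since the actual values are given only in Figure 9. The function name `camera_positions` is ours.

```python
def camera_positions(grid=5, pitches_mm=(6.0, 4.0, 2.0), plane_dist_mm=(300.0, 200.0, 100.0)):
    """Return (x, y, z) in mm for every camera: one grid x grid array per pickup plane."""
    c = (grid - 1) / 2.0  # centre index, so each array is centred on the optical axis
    cams = []
    for pitch, z in zip(pitches_mm, plane_dist_mm):
        for row in range(grid):
            for col in range(grid):
                cams.append(((col - c) * pitch, (row - c) * pitch, z))
    return cams


cams = camera_positions()
print(len(cams), "cameras;", "widest array spans", max(x for x, _, _ in cams) * 2, "mm")
```

Such a list can drive camera placement in a scene script or be passed to a reconstruction routine as the per-plane pitch and distance.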

Figure 9.

(a) Simulation setup and (b) 3D scene for each depth.

3.2. The First Simulation Result

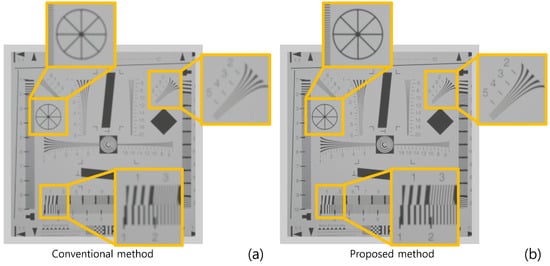

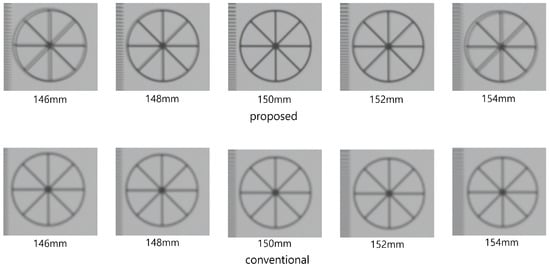

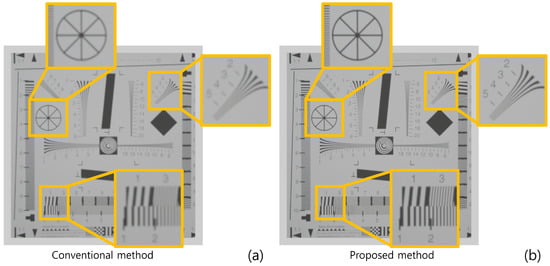

Figure 10 shows the simulation results reconstructed at 150 mm. As shown in Figure 10, both the conventional and proposed methods reconstruct the 3D images correctly, but our method yields better visual quality. To quantify this enhancement, we calculated the peak signal-to-noise ratio (PSNR), mean squared error (MSE), and structural similarity (SSIM) on the cropped regions in Figure 10. Table 1 shows the results. As shown in Table 1, the sample images reconstructed by our method have a lower MSE and higher SSIM and PSNR values than those of the conventional method. Moreover, when the 3D sample images are reconstructed at various depths, the proposed method shows better depth resolution than the conventional method. Figure 11 shows the 3D sample images reconstructed at various depths. As shown in Figure 11, it is difficult to identify the correct depth (150 mm) with the conventional method, whereas it can be found easily with the proposed method.
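For reference, the three image-quality metrics can be computed as in the following sketch. MSE and PSNR follow their standard definitions with an assumed 8-bit peak value of 255. The SSIM here is a simplified single-window (global) version using the usual constants K1 = 0.01 and K2 = 0.03; it is not the sliding Gaussian-window SSIM that standard toolkits report, so its values are not directly comparable with those in Table 1.

```python
import numpy as np


def mse(ref, test):
    ref = np.asarray(ref, dtype=float)
    test = np.asarray(test, dtype=float)
    return float(np.mean((ref - test) ** 2))


def psnr(ref, test, peak=255.0):
    m = mse(ref, test)
    return float("inf") if m == 0 else float(10.0 * np.log10(peak ** 2 / m))


def global_ssim(ref, test, peak=255.0):
    """Single-window SSIM over the whole crop (simplified; no sliding window)."""
    x = np.asarray(ref, dtype=float)
    y = np.asarray(test, dtype=float)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    mx, my = x.mean(), y.mean()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2)
    return float(num / den)
```

Lower MSE, higher PSNR, and SSIM closer to 1 all indicate a reconstruction closer to the reference crop.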

Figure 10.

Reconstructed 3D images at 150 mm by (a) the conventional method and (b) the proposed method.

Table 1.

Performance metrics of the first simulation results.

Figure 11.

3D sample images reconstructed at various depths.

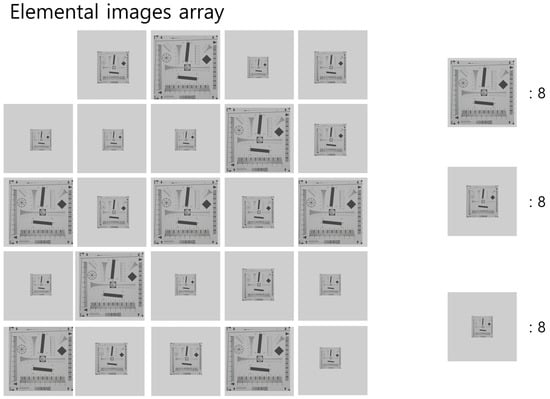

3.3. The Second Simulation Setup

However, the first comparison was unfair because the proposed method used three times as many elemental images as the conventional method. Thus, in the second simulation, the proposed method used fewer elemental images than the conventional method. Figure 12 shows the elemental images used in the second simulation. As shown in Figure 12, we extracted eight elemental images from each pickup plane, that is, 24 elemental images in total, compared with the 25 (5 × 5) used by the conventional method.

Figure 12.

Elemental images of the second simulation.

3.4. The Second Simulation Result

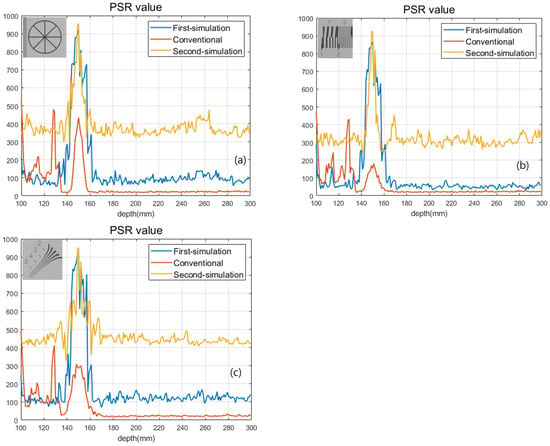

Figure 13 and Table 2 show the results of the second simulation. As shown in Figure 13 and Table 2, our proposed method again yields better visual quality than the conventional method. Finally, to show the enhancement of depth resolution, we calculated the peak-to-sidelobe ratio (PSR), as shown in Figure 14. Each plot uses a different sample region of the original image, taken at the same locations as in Figure 13. As shown in Figure 14, our method provides a higher peak at the correct depth than the conventional method.
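One common way to compute a PSR over depth is sketched below; the exact definition used for Figure 14 is not stated, so this is an assumption. A similarity score (here, zero-mean normalized cross-correlation between a reconstructed sample and the original sample) is evaluated at each reconstruction depth, and PSR = (peak − mean of sidelobe) / standard deviation of sidelobe, where the sidelobe excludes `exclude` samples on each side of the peak. The function names and the `exclude` parameter are hypothetical.

```python
import numpy as np


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equally sized images."""
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    a = a - a.mean()  # not in place, so the caller's arrays are untouched
    b = b - b.mean()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def psr(curve, exclude=1):
    """Peak-to-sidelobe ratio of a 1D score curve; returns (psr, peak index)."""
    c = np.asarray(curve, dtype=float)
    peak = int(np.argmax(c))
    mask = np.ones(c.size, dtype=bool)
    mask[max(0, peak - exclude):peak + exclude + 1] = False  # drop the main lobe
    side = c[mask]
    std = side.std()
    if std == 0:
        return float("inf"), peak
    return float((c[peak] - side.mean()) / std), peak
```

In use, one would build `curve = [ncc(reconstruct_at(z)[region], original[region]) for z in depths]` with a hypothetical `reconstruct_at` function; a method with better depth resolution produces a narrower, taller peak at the true depth and hence a larger PSR.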

Figure 13.

Reconstructed 3D images in the second simulation by (a) the conventional method and (b) the proposed method.

Table 2.

Performance metrics of the second simulation results.

Figure 14.

PSR values for (a) circle sample, (b) line sample, and (c) diagonal sample.

4. Conclusions

In this paper, we proposed a 3D visualization method with enhanced 3D resolution that merges SAII, VCR, and ADS. By acquiring elemental images from different depths, it can improve both the lateral and longitudinal resolutions of 3D images. Using PSNR, MSE, SSIM, and PSR as performance metrics, we showed in simulation that our method outperforms the conventional method. We believe that our method can be applied in many industries, such as unmanned autonomous vehicles, media content, and defense. However, it has some drawbacks. First, implementing it in real optical experiments requires considerable effort because of lens aberrations and alignment problems, so more research and more accurate optical components are needed. Second, its processing is slower than that of the conventional method because it requires additional steps to apply the relative magnification ratio. We will investigate solutions to these drawbacks in future work.

Author Contributions

Conceptualization, M.C. and J.L.; methodology, M.C. and J.L.; software, J.L.; validation, M.C. and J.L.; formal analysis, J.L.; investigation, J.L.; resources, J.L.; data curation, J.L.; writing—original draft preparation, J.L.; writing—review and editing, M.C.; visualization, J.L.; supervision, M.C.; project administration, M.C.; funding acquisition, M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF-2020R1F1A1068637).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cho, M.; Daneshpanah, M.; Moon, I.; Javidi, B. Three-Dimensional Optical Sensing and Visualization Using Integral Imaging. Proc. IEEE 2011, 4, 556–575.

- Cho, M.; Javidi, B. Three-Dimensional Photon Counting Imaging with Axially Distributed Sensing. Sensors 2016, 16, 1184.

- Jang, J.; Javidi, B. Three-dimensional integral imaging of micro-objects. Opt. Lett. 2004, 11, 1230–1232.

- Pérez-Cabré, E.; Cho, M.; Javidi, B. Information authentication using photon-counting double-random-phase encrypted images. Opt. Lett. 2011, 1, 22–24.

- Yeom, S.; Javidi, B.; Watson, E. Photon counting passive 3D image sensing for automatic target recognition. Opt. Express 2005, 23, 9310–9330.

- Hecht, E. Optics; Pearson: San Francisco, CA, USA, 2002.

- Javidi, B.; Moon, I.; Yeom, S. Three-dimensional identification of biological microorganism using integral imaging. Opt. Express 2006, 25, 12096–12108.

- Geng, J.; Xie, J. Review of 3-D Endoscopic Surface Imaging Techniques. IEEE Sens. J. 2014, 4, 945–960.

- Xiao, X.; Javidi, B.; Martinez-Corral, M.; Stern, A. Advances in three-dimensional integral imaging: Sensing, display, and applications. Appl. Opt. 2013, 4, 546–560.

- Kovalev, M.; Gritsenko, I.; Stsepuro, N.; Nosov, P.; Krasin, G.; Kudryashov, S. Reconstructing the Spatial Parameters of a Laser Beam Using the Transport-of-Intensity Equation. Sensors 2022, 5, 1765.

- Zuo, C.; Li, J.; Sun, J.; Fan, Y.; Zhang, J.; Lu, L.; Zhang, R.; Wang, B.; Huang, L.; Chen, Q. Transport of intensity equation: A tutorial. Opt. Lasers Eng. 2020, 135, 106187.

- Hong, S.; Jang, J.; Javidi, B. Three-dimensional volumetric object reconstruction using computational integral imaging. Opt. Express 2004, 3, 483–491.

- Martinez-Corral, M.; Javidi, B. Fundamentals of 3D imaging and displays: A tutorial on integral imaging, light-field, and plenoptic systems. Adv. Opt. Photonics 2018, 3, 512–566.

- Javidi, B.; Hong, S. Three-Dimensional Holographic Image Sensing and Integral Imaging Display. J. Disp. Technol. 2005, 2, 341–346.

- Jang, J.; Javidi, B. Three-dimensional synthetic aperture integral imaging. Opt. Lett. 2002, 13, 1144–1146.

- Cho, B.; Kopycki, P.; Martinez-Corral, M.; Cho, M. Computational volumetric reconstruction of integral imaging with improved depth resolution considering continuously non-uniform shifting pixels. Opt. Lasers Eng. 2018, 111, 114–121.

- Yun, H.; Llavador, A.; Saavedra, G.; Cho, M. Three-dimensional imaging system with both improved lateral resolution and depth of field considering non-uniform system parameters. Appl. Opt. 2018, 31, 9423–9431.

- Cho, M.; Javidi, B. Three-dimensional photon counting integral imaging using moving array lens technique. Opt. Lett. 2012, 9, 1487–1489.

- Inoue, K.; Cho, M. Fourier focusing in integral imaging with optimum visualization pixels. Opt. Lasers Eng. 2020, 127, 105952.

- Schulein, R.; DaneshPanah, M.; Javidi, B. 3D imaging with axially distributed sensing. Opt. Lett. 2009, 13, 2012–2014.

- Cho, M.; Shin, D. 3D Integral Imaging Display using Axially Recorded Multiple Images. J. Opt. Soc. Korea 2013, 5, 410–414.

- Cho, M.; Javidi, B. Three-Dimensional Photon Counting Axially Distributed Image Sensing. J. Disp. Technol. 2013, 1, 56–62.

- Lippmann, G. La photographie integrale. C. R. Acad. Sci. 1908, 146, 446–451.

- Westin, S.H. ISO 12233 Test Chart. 2010. Available online: https://www.graphics.cornell.edu/~westin/misc/res-chart.html (accessed on 30 October 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).